Strengthening Small Object Detection in Adapted RT-DETR Through Robust Enhancements

Abstract

1. Introduction

- Randomized layer replacement in the encoder, designed to improve robustness under weak supervision.

- Multi-scale feature extraction and fusion applied to the features already extracted by the encoder, enabling the model to retain fine spatial cues that are often lost during downsampling.

- Use of an adaptive focal loss function, which balances dense small object regions with sparse supervision to improve training stability.

2. Related Work

2.1. Small Object Detection in Industry

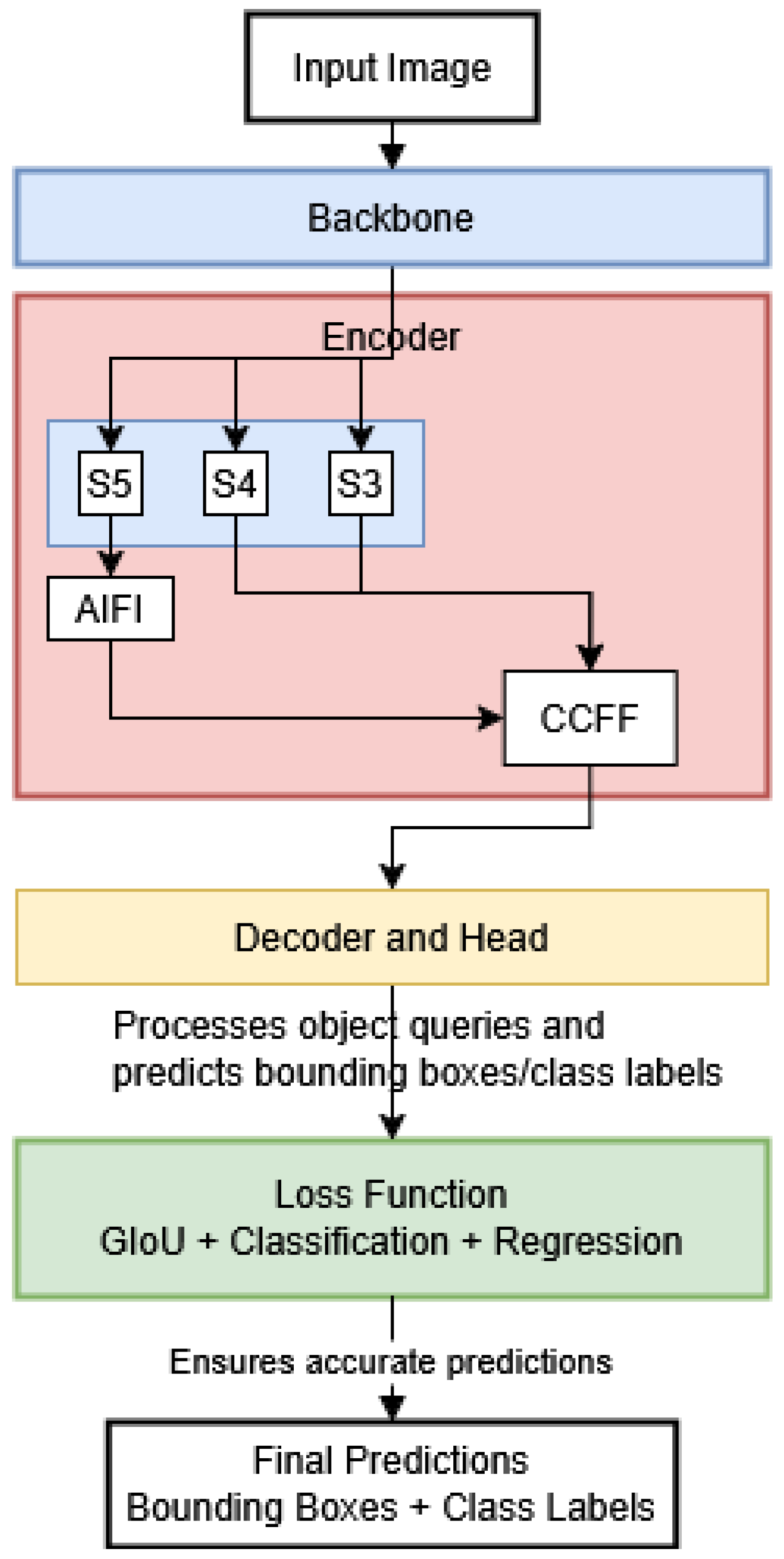

2.2. RT-DETR

- Query selection module: Uses object queries, which are learned embeddings that represent potential objects in the image.

- Attention mechanism: RT-DETR employs conventional multi-head self-attention within the encoder and cross-attention in the decoder that interacts with object queries and multi-scale image features. Certain variants also utilize deformable attention to enhance efficiency and accelerate inference.

- Hybrid encoder: Composed of two modules, CNN-based Cross-Scale Feature Fusion (CCFF) and Attention-based Intra-Scale Feature Interaction (AIFI). Together, CCFF and AIFI perform the feature fusion in the neck of the hybrid encoder.
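To make the decoder's cross-attention concrete, the following is a minimal sketch of single-head scaled dot-product attention, in which each object query attends over a set of image feature tokens. It is illustrative only: the learned query/key/value projections, multiple heads, and the deformable variant are omitted, and the names `softmax` and `cross_attention` are hypothetical rather than taken from the RT-DETR code base.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, features):
    """Each query attends over all feature tokens (keys = values = features).

    queries:  list of vectors, one per object query
    features: list of vectors, one per image feature token
    Returns one attended vector per query.
    """
    dim = len(features[0])
    outputs = []
    for q in queries:
        # Similarity of the query to every feature token, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in features]
        weights = softmax(scores)
        # Weighted sum of the feature tokens.
        outputs.append([sum(w * v[j] for w, v in zip(weights, features))
                        for j in range(dim)])
    return outputs
```

A query that aligns strongly with one feature token receives an output dominated by that token, which is how an object query gathers evidence from the relevant image region.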

| Authors and Domain | Primary Challenges | Backbone Improvements | Feature Fusion | Attention Mechanism | Additional Innovations | Small Object Focus |

|---|---|---|---|---|---|---|

| 1. Railway rutting defects [23] | Irregular defect distribution, varying sizes, and complex backgrounds | Faster CGLU module (combines PConv and gating mechanisms) | BiFPN with learnable weights (replaces CCFF) | H-AIFI with Hilo attention (high-/low-frequency paths) | Partial convolution | Yes |

| 2. Free-range chicken detection [24] | Small target detection, multi-scale targets, and clustering occlusions | SDTM (Space-to-Depth Transformer Module) | Standard | BiFormer (sparse and fine-grained attention) | contextual token (CoT) module, Space-to-Depth conversion | Yes |

| 3. Video object detection [25] | Motion blur, occlusions, and poor lighting in video frames | Standard | Decoupled Feature Aggregation Module | Separate self-attention | Two-step training strategy | Indirect |

| 4. Detection in drone aerial images [26] | Small object sizes, indistinct features, and motion blur | CF-Block with CGLU (Convolutional Gated Linear Unit) and FasterNet Block with PConv | SOEP (Small Object Enhance Pyramid) with SPDConv + Omni-Kernel Module | Channel and spatial attention in HS-FPN | W-ShapeIoU loss function | Yes |

| 5. Remote sensing object detection [27] | Infrared ship detection, small objects, and variable sizes | ResNet18 | DRB-CFFM (Dilated Reparam Block-Based Cross-Scale Feature Fusion Module) | CGA-IFI (Intra-Scale Feature Interaction), which uses cascaded group attention | EIoU loss function | Yes |

| 6. Drone object detection [28] | UAV aerial images, small objects, and diverse backgrounds | ESDNet backbone network with Fast-Residual and shallow feature enhancement module | Feature fusion using shallow SFEM layer | Enhanced Dual-Path Feature Fusion Attention Module | – | Yes |

| 7. Drone object detection [29] | UAV aerial images, small objects, and diverse backgrounds | ResNet18 | Re-calibration attention unit and re-parameterized module | Deformable attention mechanism called DAttention | Focaler-IoU loss function | Yes |

| 8. Pavement distress detection [30] | Complex road backgrounds, diverse shapes, and high computational resource requirements | Enhanced backbone made from ADown module and the uniquely crafted Layer Aggregation Diverse Branch Block | Proposed MEFF (Multi-enhanced feature fusion) with DySample and DBBFuse | Optimized Intra-Scale Feature Interaction with AICBAM (combining channel and spatial attention) | – | Indirect |

| 9. Drone object detection [31] | Complex background, drastic scale changes, and dense small targets | GSHA (Gated Single-Head Attention) block integrated into ResNet-18 | Proposed ESO-FPN, combining SPD Conv and LKDA-Fusion for fine-grained details | Enhanced AIFI with MMSA (Multi-Scale Multi-Head Self-Attention) | Introduced ESVF Loss, extending VariFocal loss with EMA and slide weighting | Yes |

| 10. Fire smoke detection [32] | Blurry smoke, high variability, and small objects | Enhanced with attention modules and 4D input | Redesigned with multi-scale fusion, 3D conv, and small object branch | Standard | New dataset and use of (EIoU) as the regression loss | Yes |

| 11. Wheat ears detection [33] | Occluded wheat ears, complex background, and dense objects | Introduced Space-to-Depth (SPD-Conv) with non-stride convolutional layer | Context-Guided Blocks (CGBlocks) to form CGFM (Context-Guided Cross-Scale Feature Fusion Module) | Standard | Focal Loss to handle class imbalance and optimized weights for losses | No |

| 12. Ground glass pulmonary nodule [34] | Small and ill-defined objects, and irregular shapes | Introduced FCGE (FasterNet + ConvGLU + ELA) blocks in ResNet18 | HiLo-AIFI instead of AIFI and DGAK (Dynamic Grouped Alterable Kernel) blocks for fusion in CCFF | HiLo module to replace Multi-Head Self-Attention | – | Yes |

| 13. Fruit ripeness detection [35] | High computation and multiple objects | PP-HGNet with Rep Block (with PConv) and Efficient Multi-Scale Attention (EMA) after the Stem block | Standard | Standard | Cross-space learning by reshaping channel dimensions into batch dimensions | No |

| 14. Human detection [36] | Limited resolution, lack of detail, and poor contrast | ConvNeXt | Feature Pyramid Network (FPN) is added between the backbone and the encoder | Standard | GIoU loss is replaced with CIoU loss | No |

| 15. Infrared electrical equip. detection [37] | Small targets and irregular target shapes | ResNet18 backbone is replaced with a custom-designed Multi-Path Aggregation Block (MAB) | The RepC3 module in the neck (CCFM) is replaced with the GConvC3 module | Multi-Scale Deformable Attention (MSDA) | BBox loss function is replaced with Focaler-EIoU | Yes |

| 16. Tomato ripeness detection [38] | Computational cost and diverse shapes, sizes, and ripeness stages | ResNet-18 with a custom PConv Block | Neck network with a slimneck-SSFF architecture combining GSConv, VoVGSCSP, and the SSFF module | Deformable attention in AIFI (encoder) | Integrated Inner-IoU loss with EIoU into a new Inner-EIoU loss | Yes |

| 17. Wafer surface defect detection [39] | Small particle defects and elongated linear scratches | ResNet50 with Dynamic Snake Convolution (DSConv) | CCFM module is replaced with a custom Residual Fusion Feature Pyramid Network (RFFPN) | AIFI module in the encoder is replaced with Deformable Attention Encoder (DAE) | – | Yes |

| 18. Jet engine blade surface defect detection [18] | Small particles, scratches, and cracks | ResNet18 that integrates partial convolution (PConv) and FasterNet | CCFM is replaced with HS-FPN (with channel attention (CA) and Selective Feature Fusion (SFF) module) | Standard | Introduced IoU-aware query selection mechanism and Inner-GIoU loss function | Yes |

| 19. Rail defect detection [40] | Small target detection and noise interference | ResNet-50 with the Bottle2Neck module from Res2Net | Adds the RepBi-PAN structure to enhance CCFF | Standard | Uses WIoU loss and a Hard Negative Sample Optimization Strategy | Yes |

| 20. Infrared ship detection [41] | Small low-contrast objects, scale disparity, and complex marine environments | CPPA backbone (Cross-Stage Partial with Parallelized Patch-Aware Attention) | HS-FPN (High-Level Screening FPN) with channel attention | MDST (Multi-Layer Dynamic Shuffle Transformer) | Multi-branch feature extraction (local, global, and convolution) | Yes |

| 21. Thermal infrared object detection [42] | Poor contrast and noise interference | Introduced partial convolution (PConv) and FasterStage to optimize ResNet18 | Replaced RepC3 with FMACSP (Feature Map Attention Cross-Stage Partial) | Efficient Multi-Scale Attention (EMA) in FMACSP (attention in fusion block) | – | No |

| 22. Remote sensing object detection [43] | Improving small object detection in remote sensing imagery | Standard | Made EBiFPN (enhanced BiFPN with DySample) | Cascaded group attention | Novel loss function called Focaler-GIoU | Yes |

| 23. Coal gangue detection [44] | Computational complexity, small targets, indistinct features, and complex background | FasterNet network with EMA attention to improve FasterBlock module | In CCFM block, RepC3 is upgraded to Dilated Re-param Block (DRB) | Enhances the AIFI module with improved learnable position encoding | Data augmentation with Stable Diffusion + LoRA | Yes |

| 24. UAV-based power line inspection [45] | Small object detection, low detection accuracy, and inefficient feature fusion | Replaced with GELAN (Generalized Efficient Layer Aggregation Network) for better feature extraction and efficiency | Instead of CCFF, handled within GELAN, improving multi-scale semantic fusion | Standard | New Reweighted L1 loss focusing on small objects | Yes |

| 25. Drone object detection [31] | Small object detection, lightweight model design, and efficient feature fusion | Introduces GSHA block and MMSA in ResNet18 | Proposes ESO-FPN, using large kernels + dual-domain attention to fuse features effectively for small objects | Standard | Introduces ESVF Loss (EMASlideVariFocal Loss) to dynamically focus on hard samples | Yes |

| 26. Tomato detection [46] | Small object detection, occlusion handling, low detection accuracy, and efficiency in complex environments | ResNet-50 replaced with Swin Transformer | Implicit fusion via Swin Transformer + BiFormer dual-level attention (no separate fusion module) | Standard | – | Yes |

| 27. RT-DETRv3 (general) object detection [47] | Sparse supervision, weak decoder training, and slow convergence | Retains ResNet but adds CNN-based auxiliary branch for dense supervision during training | Better encoder learning from auxiliary supervision | Introduces self-attention perturbation and shared-weight decoder branch for dense positive supervision (training only) | VariFocal Loss (VFL) and Distribution Focal Loss (DFL) | Indirect |

| 28. RTS-DETR (general) object detection [48] | Small object detection, positional encoding limitations, and feature fusion inefficiency | Standard | Improves CCFM with Local Feature Fusion Module (LFFM) using spatial and channel attention, and multi-scale alignment | Core attention unchanged; improved via LPE and attentional fusion | Introduces new loss using Normalized Wasserstein Distance (NWD) + Shape-IoU for better small object regression accuracy | Yes |

| 29. Aquarium object detection [49] | Small object real-time detection | HGNetv2 with ImageNet pre-training | Learnable weights for feature maps | Standard | Bottom-up paths preserving details | Yes |

| 30. Traffic sign detection [50] | Small, distant, and poorly defined traffic signs | FasterNet Inverted Residual Block | Enhanced CCFM + S2 shallow layer integration | ASPPDAT (ASPP + Deformable Attention Transformer) | ASPPDAT, Inner-GIoU Loss, S2 fusion, and FasterNet in RT-DETR | Yes |

| 31. Steel surface defect detection [51] | Resource constraints in industrial settings and need for edge deployment | MobileNetV3 (lightweight architecture) | DWConv and VoVGSCSP structure | Standard | MPDIoU loss function for improved bounding box prediction | No |

| 32. Open-set object detection [52] | Novel class detection | Standard | RepC3 is replaced in CCFM (Manhattan Self-Attention) | MaSA (Manhattan Self-Attention) | MPDIoU Loss, a new bounding box regression loss | No |

Learning from the Literature Review

3. Proposed New Child of RT-DETR

3.1. Increasing Representational Capacity of Encoder

- Hebbian Layer: Inspired by Hebbian theory, this layer updates weights based on the correlation between the input and output neurons. The learning rule strengthens connections where simultaneous activation occurs, but it lacks a mechanism to weaken connections, which can lead to unbounded growth of weights.

- Oja’s Layer: Oja’s rule extends Hebbian learning with a normalization term that prevents the weights from growing indefinitely. It introduces a forgetting factor that drives the sum of the squared weights toward a constant, which stabilizes the learning process.

- Randomized Layers: The concept of randomized layers involves using network components where weights are randomly initialized and then frozen, exempting them from training. In our implementation, we replace specific feed-forward (nn.Linear) layers in the transformer encoder with a custom two-part module. The first part is a hidden layer with fixed random weights, initialized uniformly between -1 and 1. The second part is a standard, trainable output layer.
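The three layer types above can be illustrated with a small, framework-free sketch. It assumes a single linear neuron for the Hebbian and Oja updates, a `tanh` activation in the randomized hidden part (the text does not name one), and a plain squared-error gradient step for the trainable output part. All function and class names are hypothetical and are not the authors' implementation.

```python
import math
import random


def hebbian_step(w, x, lr=0.1):
    """Plain Hebbian rule: dw = lr * y * x, with y = w . x (no decay term)."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + lr * y * xi for wi, xi in zip(w, x)]


def oja_step(w, x, lr=0.1):
    """Oja's rule: dw = lr * y * (x - y * w); the -y^2 * w term acts as forgetting."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]


def norm(w):
    """Euclidean norm of a weight vector."""
    return math.sqrt(sum(wi * wi for wi in w))


class RandomizedLayer:
    """Frozen random hidden part followed by a trainable linear output part."""

    def __init__(self, in_dim, hidden_dim, out_dim, seed=0):
        rng = random.Random(seed)
        # Hidden weights: uniform in [-1, 1], fixed after initialization.
        self.w_hidden = [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)]
                         for _ in range(hidden_dim)]
        # Output weights and biases: the only trainable parameters.
        self.w_out = [[0.0] * hidden_dim for _ in range(out_dim)]
        self.b_out = [0.0] * out_dim

    def hidden(self, x):
        # tanh is an assumed activation, chosen only for illustration.
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
                for row in self.w_hidden]

    def forward(self, x):
        h = self.hidden(x)
        return [sum(w * hj for w, hj in zip(row, h)) + b
                for row, b in zip(self.w_out, self.b_out)]

    def sgd_step(self, x, target, lr=0.05):
        """One squared-error gradient step that updates the output part only."""
        h = self.hidden(x)
        y = self.forward(x)
        for k, (yk, tk) in enumerate(zip(y, target)):
            err = yk - tk
            self.w_out[k] = [w - lr * err * hj
                             for w, hj in zip(self.w_out[k], h)]
            self.b_out[k] -= lr * err
        # Squared error measured before this update was applied.
        return sum((yk - tk) ** 2 for yk, tk in zip(y, target))
```

Repeating `hebbian_step` on a fixed input makes the weight norm grow without bound, while `oja_step` settles at unit norm, which matches the contrast drawn in the first two bullets. In a PyTorch implementation, the hidden part would typically be a linear layer whose parameters have `requires_grad` set to `False`, so that the optimizer only updates the output layer.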

3.1.1. Detailed Discussion for Randomized Layers

Practical Considerations

Motivation

Intuitive Explanation

3.2. Improving Extracted Features by Encoder

3.3. Better Classification Loss

- $p_t$ represents the predicted probability of the true class;

- $\alpha_t$ is a balancing factor to address class imbalance;

- $\gamma$ is a focusing parameter that reduces the loss contribution from easy-to-classify examples.

- $N$ is the batch size;

- $C$ is the number of classes;

- $p_{i,c}$ is the predicted probability of class $c$ for sample $i$.
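As a reference point for the quantities described above (predicted probability of the true class, balancing factor, and focusing parameter), the following is a minimal sketch of the standard focal loss, $-\alpha_t (1 - p_t)^{\gamma} \log p_t$, averaged over a batch. It is not the adaptive variant used in this work, whose weighting scheme is not reproduced here. The function names and the default values `alpha_t=0.25` and `gamma=2.0` are illustrative assumptions.

```python
import math


def focal_loss(p_t, alpha_t=0.25, gamma=2.0, eps=1e-12):
    """Standard focal loss for one sample.

    p_t:     predicted probability of the true class
    alpha_t: balancing factor for class imbalance
    gamma:   focusing parameter; larger values down-weight easy examples
    """
    p_t = min(max(p_t, eps), 1.0)  # guard against log(0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def mean_focal_loss(probs, labels, alpha_t=0.25, gamma=2.0):
    """Average focal loss over a batch.

    probs[i][c] is the predicted probability of class c for sample i;
    labels[i] is the index of the true class of sample i.
    """
    losses = [focal_loss(row[y], alpha_t, gamma)
              for row, y in zip(probs, labels)]
    return sum(losses) / len(losses)
```

With `gamma=0` and `alpha_t=1` the expression reduces to ordinary cross-entropy. With `gamma=2`, a confidently correct prediction (`p_t = 0.9`) contributes only 1% of its cross-entropy value, so training is dominated by hard examples such as small objects.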

3.4. Final Configuration

- The encoder’s representational capacity was enhanced by replacing the original fully connected layer block with the randomized two-part module described in Section 3.1 (a fixed random hidden layer followed by a trainable output layer).

- The features extracted by the hybrid encoder block were further refined using a combination of a Multi-Scale Feature Extractor (to capture features at varying spatial scales), Fuzzy Attention (to enhance multi-scale feature representation), and Multi-Resolution Fusion (to combine features from different resolutions—low, medium, and high—within the encoder).

- An adaptive focal loss was employed in place of the traditional classification loss. Unlike the Focaler-IoU proposed in [43] and the focal loss for classification in [33], this adapted loss function serves as a weighting mechanism in the classification part designed to better support the detection of smaller objects.

4. Description of Datasets Used for Model Evaluation

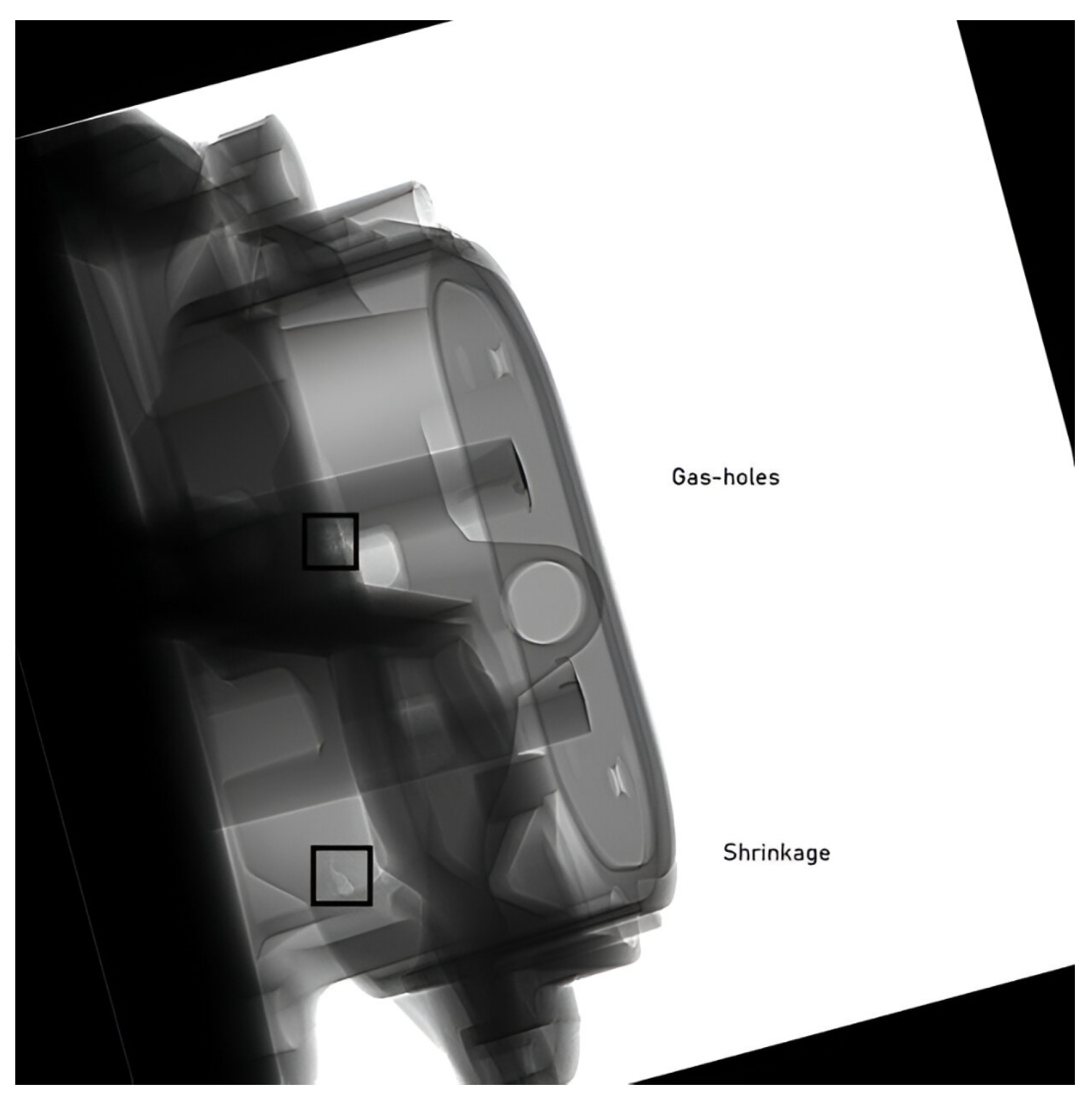

4.1. Al-Cast Detection

4.2. Synthetic

4.3. SerDes Receiver Pattern

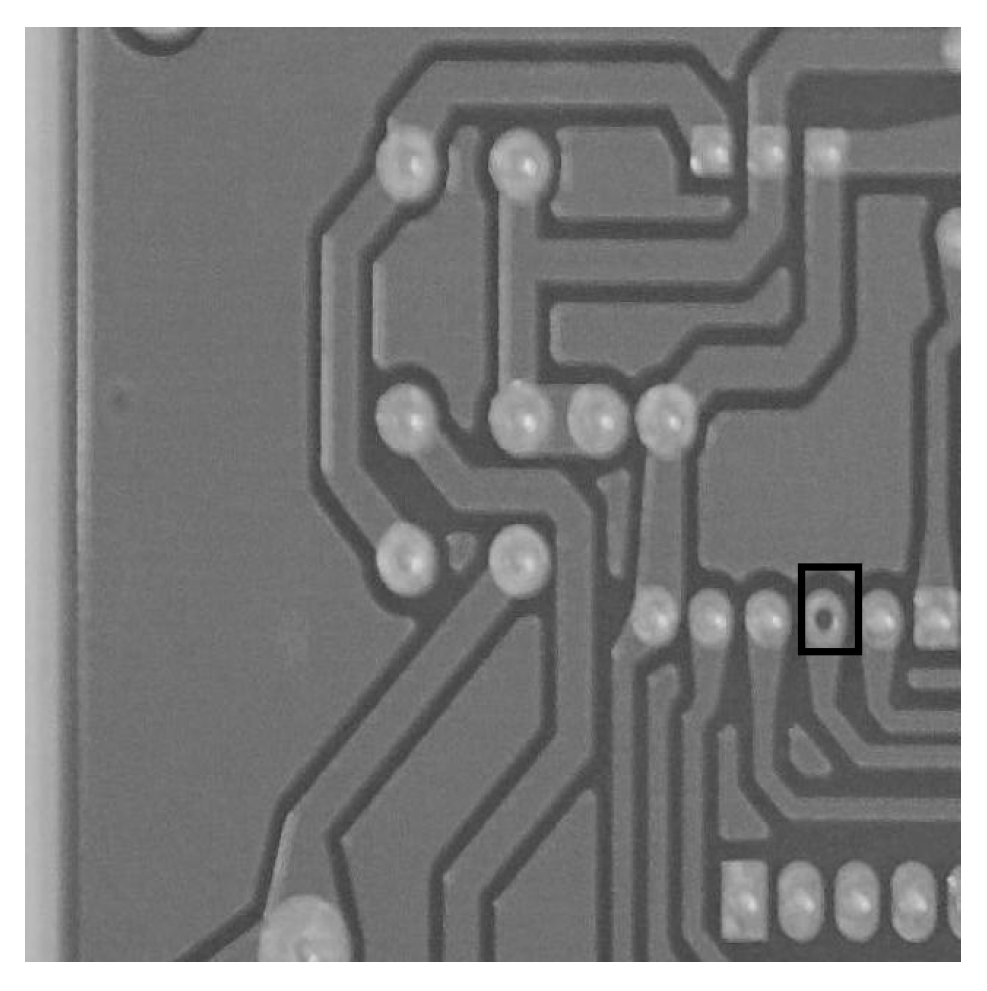

4.4. PCB Dataset

4.5. Blood Cell Object Detection Dataset

- Platelets: 76 instances (51 small, 24 medium, and 1 large)

- RBCs: 819 instances (813 medium and 6 large)

- WBCs: 72 instances (10 medium and 62 large)
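The size breakdown above can be reproduced from bounding-box dimensions with a short helper. The sketch below assumes the COCO area convention (small below $32^2$ pixels, large from $96^2$ pixels upward); the thresholds and the names `size_bucket`, `bccd_counts`, and `small_share` are assumptions for illustration. The counts themselves are copied from the list above.

```python
def size_bucket(width, height, small_max=32 ** 2, medium_max=96 ** 2):
    """Classify a box as small, medium, or large by its pixel area."""
    area = width * height
    if area < small_max:
        return "small"
    if area < medium_max:
        return "medium"
    return "large"


# Instance counts from the list above, as (small, medium, large).
bccd_counts = {
    "Platelets": (51, 24, 1),
    "RBCs": (0, 813, 6),
    "WBCs": (0, 10, 62),
}


def small_share(counts):
    """Fraction of all instances that fall into the small bucket."""
    total = sum(sum(c) for c in counts.values())
    small = sum(c[0] for c in counts.values())
    return small / total
```

With these counts, 51 of 967 instances (about 5%) are small, and all of them are platelets, so any small-object metric on this dataset is driven by a single class.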

5. Experiments and Results

5.1. Metrics

5.2. Al-Cast Dataset

5.2.1. Experiment 1: Comparison of Object Detection Models

- YOLO-NAS: A product of advanced Neural Architecture Search applied to object detection, designed to address the limitations of earlier YOLO models [60]. It was evaluated using pre-trained weights (COCO) and after fine-tuning for 20 epochs on the Al-Cast dataset.

- YOLO11s: One of the most recent models in Ultralytics’ YOLO series of real-time object detectors [61]. The YOLO11s variant was evaluated using pre-trained weights (COCO) and after fine-tuning for 20 epochs on the Al-Cast dataset.

- OWL-ViT: A foundation model based on a CLIP backbone [62], pre-trained on COCO and OpenImages (1.7M images). Fine-tuning for object detection was infeasible here because it requires both images and text descriptors for the bounding boxes, whereas the dataset provides only the image modality with bounding boxes.

- RT-DETR: Trained on the COCO 2017 dataset (200k images) and evaluated using both pre-trained weights and fine-tuning for 20 epochs on the Al-Cast dataset.

- mAP (small) (area < $32^2$ pixels): 0.389;

- mAP (medium) ($32^2$ < area < $96^2$ pixels): 0.589;

- mAP (large) (area > $96^2$ pixels): N/A.

5.2.2. Experiment 2: Comparison of Alternatives to FCNN

- Overall mAP@0.50: 0.619 (validation set).

- mAP (small) (area < $32^2$ pixels): 0.23;

- mAP (medium) ($32^2$ < area < $96^2$ pixels): 0.83;

- mAP (large) (area > $96^2$ pixels): N/A.

- Training set: Small—2823, medium—1271, and large—0;

- Validation set: Small—788, medium—339, and large—0;

- Gas Holes (primarily small objects): mAP = 0.233;

- Shrinkage (larger objects): mAP = 0.739.
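The mAP@0.50 values above count a detection as correct when its Intersection over Union (IoU) with a ground-truth box is at least 0.5. The sketch below illustrates that computation for a single image and a single class. It is a simplified stand-in for COCO-style evaluation, which additionally averages over classes and uses a fixed recall grid; the function names are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """AP for one class on one image.

    preds: list of (score, box); gts: list of ground-truth boxes.
    """
    if not gts:
        return 0.0
    matched, tp_flags = set(), []
    # Visit predictions from most to least confident.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground-truth box can be matched once
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_iou >= iou_thr:
            matched.add(best_j)
            tp_flags.append(1)
        else:
            tp_flags.append(0)
    # Running precision and recall after each prediction.
    precisions, recalls, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += flag
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Make precision non-increasing in recall (precision envelope).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

Small objects are penalized more heavily by this criterion: a shift of a few pixels changes the IoU of a small box far more than that of a large one, which is one reason the small-object mAP lags behind the medium-object value.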

5.2.3. Experiment 3: Randomized Layers with Enhanced Encoder Features

5.2.4. Experiment 4: Final Configuration with Combination of Adaptive Focal Loss and the Other Two Modifications

5.2.5. Experiment 5: Ablation Study for All the Modifications

5.3. Synthetic Dataset

5.4. Pattern Dataset

5.5. PCB Dataset

5.6. Blood Cell Object Detection Dataset

- mAP for RBC detection improved from 0.5126 to 0.5202.

- mAP for WBC detection saw the largest absolute increase, rising from 0.7770 to 0.870.
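For reference, the absolute and relative changes implied by the two pairs of values above work out as follows (the relative figures are derived here from the reported numbers and rounded to one decimal place):

```latex
\begin{aligned}
\Delta_{\mathrm{RBC}} &= 0.5202 - 0.5126 = 0.0076, &
\frac{\Delta_{\mathrm{RBC}}}{0.5126} &\approx 1.5\% \\
\Delta_{\mathrm{WBC}} &= 0.870 - 0.7770 = 0.093, &
\frac{\Delta_{\mathrm{WBC}}}{0.7770} &\approx 12.0\%
\end{aligned}
```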

6. Discussion

6.1. Experiment 2 (Modification 1: Randomized Layers)

6.2. Experiment 3 (Modifications 1 + 2: Randomized Layers with Enhanced Encoder Features)

6.3. Experiment 4 (Modifications 1 + 2 + 3: Final Configuration)

6.4. Synthetic and Pattern Datasets

6.5. PCB Dataset

6.6. BCCD Dataset

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AIFI | Attention-based Intra-Scale Feature Interaction |

| API | Application Programming Interface |

| Al-Cast | Aluminum die-cast dataset |

| AutoML | Automated machine learning |

| CCFF | CNN-based Cross-Scale Feature Fusion |

| DL | Deep learning |

| IoT | Internet of Things |

| KPI | Key Performance Indicator |

| mAP | Mean Average Precision |

| ML | Machine learning |

| QA/QC | Quality assessment/quality control |

| RT-DETR | Real-Time DEtection TRansformer |

References

1. Ullah, Z.; Qi, L.; Pires, E.; Reis, A.; Nunes, R.R. A Systematic Review of Computer Vision Techniques for Quality Control in End-of-Line Visual Inspection of Antenna Parts. Comput. Mater. Contin. 2024, 80, 2387–2421.

2. Bhanu Prasad, P.; Radhakrishnan, N.; Bharathi, S.S. Machine vision solutions in automotive industry. In Soft Computing Techniques in Engineering Applications; Springer: Cham, Switzerland, 2014; pp. 1–14.

3. Ren, Z.; Fang, F.; Yan, N.; Wu, Y. State of the art in defect detection based on machine vision. Int. J. Precis. Eng. Manuf.-Green Technol. 2022, 9, 661–691.

4. Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

5. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the opportunities and risks of foundation models. arXiv 2021, arXiv:2108.07258.

6. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

7. Janesick, J.R.; Elliott, T.; Collins, S.; Blouke, M.M.; Freeman, J. Scientific charge-coupled devices. Opt. Eng. 1987, 26, 692–714.

8. Steger, C.; Ulrich, M.; Wiedemann, C. Machine Vision Algorithms and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2018.

9. Chauhan, V.; Fernando, H.; Surgenor, B. Effect of illumination techniques on machine vision inspection for automated assembly machines. In Proceedings of the Canadian Society for Mechanical Engineering (CSME) International Congress, Toronto, ON, Canada, 1–4 June 2014; pp. 1–6.

10. Ashebir, D.A.; Hendlmeier, A.; Dunn, M.; Arablouei, R.; Lomov, S.V.; Di Pietro, A.; Nikzad, M. Detecting multi-scale defects in material extrusion additive manufacturing of fiber-reinforced thermoplastic composites: A review of challenges and advanced non-destructive testing techniques. Polymers 2024, 16, 2986.

11. Chalich, Y.; Mallick, A.; Gupta, B.; Deen, M.J. Development of a low-cost, user-customizable, high-speed camera. PLoS ONE 2020, 15, e0232788.

12. Czerwińska, K.; Pacana, A.; Ostasz, G. A Model for Sustainable Quality Control Improvement in the Foundry Industry Using Key Performance Indicators. Sustainability 2025, 17, 1418.

13. Huang, S.H.; Pan, Y.C. Automated visual inspection in the semiconductor industry: A survey. Comput. Ind. 2015, 66, 1–10.

14. Shao, R.; Zhou, M.; Li, M.; Han, D.; Li, G. TD-Net: Tiny defect detection network for industrial products. Complex Intell. Syst. 2024, 10, 3943–3954.

15. Giri, K.J. SO-YOLOv8: A novel deep learning-based approach for small object detection with YOLO beyond COCO. Expert Syst. Appl. 2025, 280, 127447.

16. Dai, M.; Liu, T.; Lin, Y.; Wang, Z.; Lin, Y.; Yang, C.; Chen, R. GLN-LRF: Global learning network based on large receptive fields for hyperspectral image classification. Front. Remote. Sens. 2025, 6, 1545983.

17. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

18. Wu, D.; Wu, R.; Wang, H.; Cheng, Z.; To, S. Real-time detection of blade surface defects based on the improved RT-DETR. J. Intell. Manuf. 2025.

19. Kim, B.; Shin, M.; Hwang, S. Design and Development of a Precision Defect Detection System Based on a Line Scan Camera Using Deep Learning. Appl. Sci. 2024, 14, 12054.

20. Liang, T.; Bao, H.; Pan, W.; Pan, F. Traffic sign detection via improved sparse R-CNN for autonomous vehicles. J. Adv. Transp. 2022, 2022, 3825532.

21. Du, S.; Pan, W.; Li, N.; Dai, S.; Xu, B.; Liu, H.; Xu, C.; Li, X. TSD-YOLO: Small traffic sign detection based on improved YOLO v8. IET Image Process. 2024, 18, 2884–2898.

22. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

23. Yu, C.; Chen, X. Railway rutting defects detection based on improved RT-DETR. J. Real-Time Image Process. 2024, 21, 146.

24. Li, X.; Cai, M.; Tan, X.; Yin, C.; Chen, W.; Liu, Z.; Wen, J.; Han, Y. An efficient transformer network for detecting multi-scale chicken in complex free-range farming environments via improved RT-DETR. Comput. Electron. Agric. 2024, 224, 109160.

25. Chen, H.; Huang, W.; Zhang, T. Optimized RT-DETR for accurate and efficient video object detection via decoupled feature aggregation. Int. J. Multimed. Inf. Retr. 2025, 14, 5.

26. Zheng, Z.; Jia, Y. An Improved RT-DETR Algorithm for Small Object Detection in Aerial Images. In Proceedings of the 2024 17th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 26–28 October 2024; pp. 1–6.

27. Wang, A.; Xu, Y.; Wang, H.; Wu, Z.; Wei, Z. CDE-DETR: A Real-Time End-To-End High-Resolution Remote Sensing Object Detection Method Based on RT-DETR. In Proceedings of the IGARSS 2024—2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 8090–8094.

28. Kong, Y.; Shang, X.; Jia, S. Drone-DETR: Efficient Small Object Detection for Remote Sensing Image Using Enhanced RT-DETR Model. Sensors 2024, 24, 5496.

29. Wei, X.; Yin, L.; Zhang, L.; Wu, F. DV-DETR: Improved UAV Aerial Small Target Detection Algorithm Based on RT-DETR. Sensors 2024, 24, 7376.

30. Han, T.; Hou, S.; Gao, C.; Xu, S.; Pang, J.; Gu, H.; Huang, Y. EF-RT-DETR: A efficient focused real-time DETR model for pavement distress detection. J. Real-Time Image Process. 2025, 22, 63.

31. Liu, Y.; He, M.; Hui, B. ESO-DETR: An Improved Real-Time Detection Transformer Model for Enhanced Small Object Detection in UAV Imagery. Drones 2025, 9, 143.

32. Zheng, Y.; Huang, Z.; Binbin, C.; Chao, W.; Zhang, Y. Improved Real-Time Smoke Detection Model Based on RT-DETR. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 403.

33. Pan, J.; Song, S.; Guan, Y.; Jia, W. Improved Wheat Detection Based on RT-DETR Model. IAENG Int. J. Comput. Sci. 2024, 52, 705.

34. Tang, S.; Bao, Q.; Ji, Q.; Wang, T.; Wang, N.; Yang, M.; Gu, Y.; Zhao, J.; Qu, Y.; Wang, S. Improvement of RT-DETR model for ground glass pulmonary nodule detection. PLoS ONE 2025, 20, e0317114.

35. Wu, M.; Qiu, Y.; Wang, W.; Su, X.; Cao, Y.; Bai, Y. Improved RT-DETR and its application to fruit ripeness detection. Front. Plant Sci. 2025, 16, 1423682.

36. Xie, L.; Ren, M. Infrared Image Human Detection Based on Improved RT-DETR Algorithm. In Proceedings of the 2024 4th International Conference on Electronic Information Engineering and Computer (EIECT), Shenzhen, China, 15–17 November 2024; pp. 310–314.

37. Li, S.; Long, L.; Fan, Q.; Zhu, T. Infrared Image Object Detection of Substation Electrical Equipment Based on Enhanced RT-DETR. In Proceedings of the 2024 4th International Conference on Intelligent Power and Systems (ICIPS), Yichang, China, 6–8 December 2024; pp. 321–329.

38. Wang, S.; Jiang, H.; Yang, J.; Ma, X.; Chen, J.; Li, Z.; Tang, X. Lightweight tomato ripeness detection algorithm based on the improved RT-DETR. Front. Plant Sci. 2024, 15, 1415297.

39. Xu, A.; Li, Y.; Xie, H.; Yang, R.; Li, J.; Wang, J. Optimization and Validation of Wafer Surface Defect Detection Algorithm Based on RT-DETR. IEEE Access 2025, 13, 39727–39737.

40. Liu, Y.; Cao, Y.; Sun, Y. Research on Rail Defect Recognition Method Based on Improved RT-DETR Model. In Proceedings of the 2024 5th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 12–14 April 2024; pp. 1464–1468.

41. Liu, C.; Zhang, Y.; Shen, J.; Liu, F. Improved RT-DETR for Infrared Ship Detection Based on Multi-Attention and Feature Fusion. J. Mar. Sci. Eng. 2024, 12, 2130.

42. Du, X.; Zhang, X.; Tan, P. RT-DETR based Lightweight Design and Optimization of Thermal Infrared Object Detection for Resource-Constrained Environments. In Proceedings of the 2024 43rd Chinese Control Conference (CCC), Kunming, China, 28–31 July 2024; pp. 7917–7922.

43. Zhang, H.; Ma, Z.; Li, X. RS-DETR: An Improved Remote Sensing Object Detection Model Based on RT-DETR. Appl. Sci. 2024, 14, 10331.

44. Yan, P.; Wen, Z.; Wu, Z.; Li, G.; Zhao, Y.; Wang, J.; Wang, W. Intelligent detection of coal gangue in mining Operations using multispectral imaging and enhanced RT-DETR algorithm for efficient sorting. Microchem. J. 2024, 207, 111789.

45. Ding, H.; Zhou, C.; Lian, C. An Improved RT-DETR Model for UAV-Based Power Line Inspection Under Various Weather Conditions. In Proceedings of the 2024 China Automation Congress (CAC), Qingdao, China, 1–3 November 2024; pp. 941–945.

46. Zhao, Z.; Chen, S.; Ge, Y.; Yang, P.; Wang, Y.; Song, Y. RT-DETR-Tomato: Tomato Target Detection Algorithm Based on Improved RT-DETR for Agricultural Safety Production. Appl. Sci. 2024, 14, 6287.

47. Wang, S.; Xia, C.; Lv, F.; Shi, Y. RT-DETRv3: Real-Time End-to-End Object Detection with Hierarchical Dense Positive Supervision. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 1628–1636.

48. Li, W.; Li, A.; Li, Z.; Kong, X.; Zhang, Y. RTS-DETR: Efficient Real-Time DETR for Small Object Detection. In Proceedings of the 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Kuching, Malaysia, 6–10 October 2024; pp. 1211–1216.

49. Huang, J.; Li, T. Small Object Detection by DETR via Information Augmentation and Adaptive Feature Fusion. In Proceedings of the 2024 ACM ICMR Workshop on Multimodal Video Retrieval (ICMR ’24), Phuket, Thailand, 10–14 June 2024; pp. 39–44.

50. Liang, N.; Liu, W. Small Target Detection Algorithm for Traffic Signs Based on Improved RT-DETR. Eng. Lett. 2025, 33, 140.

51. Mao, H.; Gong, Y. Steel surface defect detection based on the lightweight improved RT-DETR algorithm. J. Real-Time Image Process. 2025, 22, 28.

52. An, G.; Huang, Q.; Xiong, G.; Zhang, Y. VLP Based Open-set Object Detection with Improved RT-DETR. In Proceedings of the 2024 4th International Conference on Artificial Intelligence, Big Data and Algorithms (CAIBDA 2024), Zhengzhou, China, 21–23 June 2024; pp. 101–106.

53. Robinson, I.; Robicheaux, P.; Popov, M. RF-DETR. SOTA Real-Time Object Detection Model. 2025. Available online: https://github.com/roboflow/rf-detr (accessed on 1 September 2025).

54. Parlak, I.E.; Emel, E. Deep learning-based detection of aluminum casting defects and their types. Eng. Appl. Artif. Intell. 2023, 118, 105636.

55. Unakafov, A.; Unakafova, V.; Bouse, D.; Parthasarathy, R.; Schmitt, A.; Madan, M.; Reich, C. START: Self-Adapting Tool for Automated Receiver Testing—using receiver-specific stress symbol sequences for above-compliance testing. In Proceedings of the DesignCon, Santa Clara, CA, USA, 28–30 January 2025; pp. 876–900. Available online: https://www.designcon.com/ (accessed on 24 September 2025).

56. Roboflow. PCB Computer Vision Project. 2025. Available online: https://universe.roboflow.com/manav-madan/pcb-aibaz-gzujv (accessed on 18 June 2025).

57. cosmicad; akshaylambda. BCCD Dataset: Blood Cell Detection Dataset. 2018. Available online: https://public.roboflow.com/object-detection/bccd (accessed on 1 September 2025).

58. Ninja, D. Visualization Tools for BCCD Dataset. 2025. Available online: https://datasetninja.com/bccd (accessed on 16 September 2025).

59. Skalski, P. How to Train RT-DETR on a Custom Dataset with Transformers. 2024. Available online: https://blog.roboflow.com/train-rt-detr-custom-dataset-transformers/ (accessed on 11 April 2025).

60. Aharon, S.; Dupont, L.; oferbaratz; Masad, O.; Yurkova, K.; Fridman, L.; Lkdci; Khvedchenya, E.; Rubin, R.; Bagrov, N.; et al. Super-Gradients. GitHub repository, 2021. Available online: https://zenodo.org/records/7789328 (accessed on 24 September 2025).

61. Jocher, G.; Qiu, J. Ultralytics YOLO11. Available online: https://docs.ultralytics.com/models/yolo11/ (accessed on 24 September 2025).

62. Minderer, M.; Gritsenko, A.; Stone, A.; Neumann, M.; Weissenborn, D.; Dosovitskiy, A.; Mahendran, A.; Arnab, A.; Dehghani, M.; Shen, Z.; et al. Simple Open-Vocabulary Object Detection. In Computer Vision—ECCV 2022; Springer Nature: Cham, Switzerland, 2022; pp. 728–755.

| Category | Representative Papers (IDs) and Observations |

|---|---|

| Backbone innovations | All except four works (3, 22, 28, and 32) modify the backbone, making this the most common approach. Authors often replace ResNet with lighter designs (MobileNetV3 and FasterNet) or specialized designs (HGNetv2, GELAN, and ConvNeXt). This reflects the need for stronger low-level feature extraction for small objects. However, frequent reliance on ImageNet-pre-trained CNNs shows a trade-off between novelty and transferability. |

| Neck/feature fusion modules | All except two works (2 and 13) modify the feature fusion part of the neck. Multi-scale feature aggregation is nearly universal, with BiFPN/EBiFPN, SOEP, slim-neck SSFF, and custom fusion blocks. These methods improve small object recall but also add computational overhead. |

| Training strategies and supervision | Less common; seen in works 3, 13, 19, 23, and 29. Auxiliary branches, two-step training, synthetic augmentation, and diffusion-based data expansion are rarely used but strategically important. This reflects recognition of data scarcity and weak supervision for small targets. However, limited adoption indicates challenges in reproducibility and computational costs. |

| Model | Direct Inference | mAP@0.50 |
|---|---|---|
| YOLO-NAS | 0 | 0.7507 |
| YOLO11s | 0 | 0.7000 |
| OWL-ViT | 0 | – |
| RT-DETR | 0 | 0.7560 |

| Layer Type | Direct Inference | mAP@0.50:0.95 | mAP@0.50 | mAP@0.75 |
|---|---|---|---|---|
| Linear (original) | 0 | 0.39 | 0.75 | 0.34 |
| HebbianLayer | 0 | 0.43 | 0.82 | 0.40 |
| Oja’s Layer | 0 | 0.33 | 0.70 | 0.24 |
| Randomized Neural Network | 0 | 0.45 | 0.88 | 0.40 |
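The three metric columns above differ only in the IoU threshold at which a detection counts as correct. As a reading aid, and assuming the evaluation follows the widely used COCO convention (an assumption; the convention is not restated next to this table), mAP@0.50:0.95 is the mean of the mAP values at ten IoU thresholds from 0.50 to 0.95 in steps of 0.05:

$$
\text{mAP@0.50:0.95} = \frac{1}{10} \sum_{t \in \{0.50,\, 0.55,\, \ldots,\, 0.95\}} \text{mAP@}t
$$

Under this convention, mAP@0.50 and mAP@0.75 are two of the ten terms in the sum, so the averaged metric weights precise localization more heavily than mAP@0.50 alone.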

| Metric | Value |
|---|---|
| Overall mAP@0.5 | 0.914 |
| mAP@0.5 (Small Objects) | 0.412 |

| Metric | Value |
|---|---|
| Overall mAP@0.5 | 0.920 |
| mAP@0.5 (Small Objects) | 0.513 |

| Configuration | mAP@0.5 | mAP@0.50:0.95 | mAP@0.75 |
|---|---|---|---|
| Final Config (Modifications 1 + 2 + 3) | 0.900 | 0.750 | 0.630 |
| Original RT-DETR | 0.590 | 0.330 | 0.280 |
| YOLO11vS | 0.914 | 0.512 | N/A |
| Only Feature Extractor | 0.810 | 0.480 | 0.540 |
| Only Fuzzy Attention | 0.850 | 0.470 | 0.480 |
| Only Adaptive Focal Loss | 0.710 | 0.469 | 0.420 |

| Condition | Original | Final Configuration |
|---|---|---|
| No-Occ—Low Contrast | 0.800 | 0.800 |
| No-Occ—Med Contrast | 0.623 | 0.642 |
| Partial-Occ—Low Contrast | 0.785 | 0.812 |
| Partial-Occ—Med Contrast | 0.688 | 0.808 |

| Subset | Original | Final Configuration |
|---|---|---|
| PSS1 | 0.903 | 0.932 |
| PSS2 | 0.943 | 0.936 |
| PSS3 | 0.881 | 0.918 |

| Metric | Original | Final Configuration |
|---|---|---|
| mAP@0.5 | 0.898 | 0.890 |
| mAP@small | 0.252 | 0.337 |
| mAP@medium | 0.431 | 0.459 |

| Metric | Original | Final Configuration |
|---|---|---|
| mAP@0.5 (overall) | 0.872 | 0.881 |
| mAP@0.5 (platelets) | 0.449 | 0.547 |
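To make the comparison in the table above easier to read, the following minimal Python sketch computes the absolute and relative change between the Original and Final Configuration columns. The helper name `gains` and the `results` dictionary are illustrative and not part of the paper; the numbers are copied directly from the table.

```python
def gains(original: float, final: float) -> tuple[float, float]:
    """Return (absolute gain, relative gain in percent) between two mAP values."""
    absolute = final - original
    relative = 100.0 * absolute / original
    return absolute, relative


# Values copied from the table above: metric -> (Original, Final Configuration).
results = {
    "mAP@0.5 (overall)": (0.872, 0.881),
    "mAP@0.5 (platelets)": (0.449, 0.547),
}

for metric, (original, final) in results.items():
    absolute, relative = gains(original, final)
    print(f"{metric}: {absolute:+.3f} absolute, {relative:+.1f}% relative")
```

For the platelet class, this gives a gain of about 0.098 in absolute terms, or roughly 22% relative to the original model, while the overall mAP@0.5 changes by only about 0.009.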

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Madan, M.; Reich, C. Strengthening Small Object Detection in Adapted RT-DETR Through Robust Enhancements. Electronics 2025, 14, 3830. https://doi.org/10.3390/electronics14193830
