Abstract

Facial beauty prediction (FBP) is a cutting-edge topic in deep learning, aiming to endow computers with human-like esthetic judgment capabilities. Current facial beauty datasets are characterized by multi-class classification and imbalanced sample distributions. Most FBP methods focus on improving accuracy (ACC) as their primary goal, aiming to indirectly optimize other metrics. In contrast to ACC, which is well known to be a poor metric in cases of highly imbalanced datasets, the recall measures the proportion of correctly identified samples for each class, effectively evaluating classification performance across all classes without being affected by sample imbalances, thereby providing a fairer assessment of minority class performance. Therefore, targeting recall improvement facilitates balanced classification across all classes. The Macro Recall (MR), which averages the recall of all the classes, serves as a comprehensive metric for evaluating a model’s performance. Among numerous classic models, ConvNeXt, which integrates the designs of the Swin Transformer and ResNet, performs exceptionally well regarding its MR but still suffers from inter-class confusion in certain categories. To address this issue, this paper introduces contrastive learning (CL) to enhance the class separability by optimizing feature representations and reducing confusion. However, directly applying CL to all the classes may degrade the performance for high-recall categories. To this end, we propose using an ensemble strategy, ECL-ConvNeXt: First, ConvNeXt is used for multi-class prediction on the whole of dataset A to identify the most confused class pairs. Second, samples predicted to belong to these class pairs are extracted from the multi-class results to form dataset B. 
Third, true samples of these class pairs are extracted from dataset A to form dataset C, and CL is applied to improve their separability, training a dedicated auxiliary binary classifier (ConvNeXtCL-ABC) based on ConvNeXt. Subsequently, ConvNeXtCL-ABC is used to reclassify dataset B. Finally, the predictions of ConvNeXtCL-ABC replace the corresponding class predictions of ConvNeXt, while preserving the high recall performance for the other classes. The experimental results demonstrate that ECL-ConvNeXt significantly improves the classification performance for confused class pairs while maintaining strong performance for high-recall classes. On the LSAFBD dataset, it achieves 72.09% ACC and 75.43% MR; on the MEBeauty dataset, 73.23% ACC and 67.50% MR; on the HotOrNot dataset, 62.62% ACC and 49.29% MR. The approach is also generalizable to other multi-class imbalanced data scenarios.

1. Introduction

Facial beauty prediction (FBP) is a cutting-edge topic in computer vision and artificial intelligence, aiming to simulate humans’ perception of facial beauty using algorithms and explore the deep connections between machine learning and humans’ esthetic cognition. In recent years, advancements in deep learning have significantly propelled the development of FBP, with deep learning-based models [1] demonstrating outstanding performance in classification tasks. However, the current FBP datasets often exhibit multi-class distributions with imbalanced samples. For instance, in a five-class classification task, one class might account for over 60% of the total samples, while the remaining four classes collectively constitute less than 40%. Since every class should hold equal significance, such significant sample imbalance will cause improvements in overall metrics to stem primarily from enhancements in the majority classes that dominate the sample distribution, even at the expense of degrading metrics for other classes.

Previous studies have typically treated accuracy (ACC) as their primary evaluation metric, expecting that improving the ACC will indirectly optimize other metrics and raise the model’s overall classification performance. This focus assumes that a higher proportion of correct predictions inherently leads to better generalization across tasks, an assumption that may overlook other critical aspects of classification quality.

The ACC measures the proportion of correctly predicted samples relative to the total sample size but is heavily influenced by the sample distribution. In the aforementioned five-class scenario, if a model predicts all samples to belong to the majority class, which constitutes over 60% of the dataset, the ACC can still exceed 60%, despite the model having no capability to recognize the minority classes. Conversely, if a model accurately identifies both the majority and minority classes, the ACC can genuinely reflect its performance; however, if it only correctly identifies the minority classes while misclassifying the majority class, the ACC will significantly decrease due to the dominance of the majority class. Thus, while ACC provides a straightforward measure of predictive success, its sensitivity to class imbalance can mask a model’s true discriminative ability, particularly in datasets with uneven class distributions.

This paper focuses on improving the Macro Recall (MR) to enhance a model’s balanced recognition capability across all classes, thereby promoting a synergistic improvement in the ACC. The recall measures the proportion of correctly identified samples for a given class. In a five-class task, each class has its own recall rate. The MR, calculated as the average recall across all the classes, assigns equal weight to each class, making it unaffected by the class size. For example, in the five-class task mentioned above, if a model only predicts the majority class for all the samples, the recall for that class will be high, but the recall for the other four classes will be near zero, resulting in a low MR. If the model can identify both majority and minority classes in a balanced manner, the MR will accurately reflect its performance. Even if the model only correctly identifies minority classes while misclassifying the majority class, the MR will remain high due to the equal class weighting, effectively mitigating the impact of a sample imbalance on the evaluation. Thus, improving the MR under imbalanced sample distributions is a key challenge in FBP research.
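The contrast between ACC and MR described above can be made concrete with a small numeric sketch. The class counts are hypothetical (chosen so that one class holds 60% of the samples), and the helper names `accuracy` and `macro_recall` are illustrative, not taken from the paper's code.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_recall(y_true, y_pred, num_classes):
    """Unweighted mean of per-class recall (classes without samples are skipped)."""
    recalls = []
    for c in range(num_classes):
        support = sum(1 for t in y_true if t == c)
        if support == 0:
            continue
        hits = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        recalls.append(hits / support)
    return sum(recalls) / len(recalls)


# Hypothetical five-class distribution: class 2 holds 60% of the samples.
counts = {0: 5, 1: 15, 2: 60, 3: 15, 4: 5}
y_true = [c for c, n in counts.items() for _ in range(n)]

# Degenerate model A: always predicts the majority class.
y_majority = [2] * len(y_true)
# Degenerate model B: correct on every minority class, wrong on the majority class.
y_minority = [t if t != 2 else 1 for t in y_true]

acc_majority = accuracy(y_true, y_majority)
mr_majority = macro_recall(y_true, y_majority, num_classes=5)
acc_minority = accuracy(y_true, y_minority)
mr_minority = macro_recall(y_true, y_minority, num_classes=5)

print(f"majority-only: ACC={acc_majority:.2f}, MR={mr_majority:.2f}")
print(f"minority-only: ACC={acc_minority:.2f}, MR={mr_minority:.2f}")
```

In this toy setting the majority-only model reaches 60% ACC with only 20% MR, whereas the model that recognizes the four minority classes drops to 40% ACC while keeping 80% MR.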

ConvNeXt [2], a convolutional neural network architecture proposed in 2022, incorporates design principles from a Vision Transformer [3] (ViT), offering both efficiency and robust feature extraction capabilities. In multi-class tasks like FBP, ConvNeXt performs exceptionally well for most classes but still encounters confusion between certain highly similar classes, limiting further improvements in its MR.

Contrastive learning (CL), an emerging feature learning paradigm, enhances the similarity of positive sample pairs while increasing the dissimilarity of negative pairs, effectively improving a model’s ability to distinguish similar classes. This approach holds significant potential for addressing class confusion in multi-class tasks, providing a new technical pathway for optimizing FBP models. However, in multi-class classification tasks, directly applying CL will indiscriminately adjust the feature representations of all the categories, leading to a decline in the performance for categories with good classification abilities.

In a multi-class confusion matrix, the columns correspond to the predicted classes, and the values within each column indicate the number of samples from various true classes that were predicted to belong to the corresponding class. This means that the samples assigned to a given predicted class may originate from any true class. For instance, if samples from the columns for predicted classes A and B are selected to construct a dedicated binary classification dataset, the true classes of these samples can be any of the original classes, but the prediction targets are restricted to classes A and B. When an auxiliary binary classifier (ABC) model re-predicts these samples, it only affects the distribution of the values in columns A and B for the rows corresponding to the true classes in the confusion matrix. For example, a sample originally predicted to be class A may be reclassified as class B or remain as class A, while the values in the columns for other predicted classes will remain unchanged.

Specifically, for samples from true class C, the sum of the values in columns A and B of the corresponding row in the confusion matrix will remain constant after binary reclassification, but the distribution between these two columns may shift. This adjustment means that the theoretical upper limit of the recall for the targeted class pair is determined by the proportion of samples from true classes A and B in the original dataset. Notably, this optimization process targets only the selected class pair, leaving the confusion matrix rows unaffected outside columns A and B and thus ensuring performance stability for the non-targeted classes.
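The column property described above can be checked on a toy confusion matrix. The sketch below is only an illustration: the 4 × 4 matrix, the chosen column pair, and the helper `reassign_columns` are hypothetical and simulate what a binary re-prediction could do to the counts.

```python
def reassign_columns(matrix, a, b, moves):
    """Return a copy of `matrix` with counts shifted between columns a and b.

    `moves[i]` is the number of true-class-i samples moved from predicted
    class a to predicted class b (negative values move from b to a).
    """
    updated = [row[:] for row in matrix]
    for i, delta in moves.items():
        if not -updated[i][b] <= delta <= updated[i][a]:
            raise ValueError("cannot move more samples than the column holds")
        updated[i][a] -= delta
        updated[i][b] += delta
    return updated


# Hypothetical confusion matrix: rows are true classes, columns are predictions.
before = [
    [40, 8, 2, 0],
    [10, 30, 9, 1],
    [1, 12, 35, 2],
    [0, 2, 3, 45],
]
a, b = 1, 2  # the targeted (most confused) pair of predicted classes

# Simulated effect of a binary re-prediction on columns a and b only.
after = reassign_columns(before, a, b, {0: 1, 1: -5, 2: 7})

for i, (old, new) in enumerate(zip(before, after)):
    pair_sum_kept = old[a] + old[b] == new[a] + new[b]
    others_kept = all(old[j] == new[j] for j in range(len(old)) if j not in (a, b))
    print(f"true class {i}: pair sum kept={pair_sum_kept}, other columns kept={others_kept}")

# Recall of a targeted class can rise at most to (row sum over a and b) / row total.
recall_a_before = before[a][a] / sum(before[a])
recall_a_after = after[a][a] / sum(after[a])
recall_a_bound = (before[a][a] + before[a][b]) / sum(before[a])
print(recall_a_before, recall_a_after, recall_a_bound)
```

Every row keeps its combined count over the two targeted columns, and the recall of a targeted class stays below the bound fixed by how many of its samples the primary model already routed into that pair.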

Based on this property of the confusion matrix, this paper proposes an ensemble strategy that precisely optimizes the most confused class pairs in multi-class classification: ConvNeXt is used as the primary model for full-class classification, and an auxiliary binary classifier, ConvNeXtCL-ABC, based on CL and ConvNeXt, is introduced to specifically optimize the most confused class pairs identified by the primary ConvNeXt model. By leveraging CL to enhance the feature separability between the target classes, ConvNeXtCL-ABC effectively compensates for the deficiencies of the primary ConvNeXt model regarding specific classes while preserving its superior performance for others. This primary–auxiliary combined strategy achieves the precise optimization of weak classes, avoiding the high computational cost of complex ensemble models and striking an efficient balance between model complexity and performance improvement.

The main contributions of this paper are as follows:

- We show that, in multi-class imbalanced datasets, targeting per-class recall to raise the MR reflects improvements in overall classification ability more faithfully than improving the ACC alone, as validated by the experimental results;

- We propose an ensemble strategy combining the primary ConvNeXt multi-class model with ConvNeXtCL-ABC to precisely optimize confused class pairs in multi-class tasks;

- We conducted extensive experiments on three multi-class imbalanced datasets to validate the superiority and robustness of the proposed method in facial beauty prediction tasks.

The remainder of this paper is structured as follows: Section 2 reviews relevant studies; Section 3 details the proposed method; Section 4 describes the experimental process and compares the method with existing approaches; and Section 5 summarizes the work and discusses future research directions.

2. Related Work

2.1. Facial Beauty Prediction

With the rise of deep learning, researchers have been exploring its application in FBP [1,4,5,6]. The study in [1] proposed a novel personalized FBP method based on meta-learning, suitable for small-scale datasets. Furthermore, ref. [4] optimized an adaptive Transformer with global and local multi-head self-attention for FBP, achieving superior performance across datasets of varying sizes. The FBPFormer model was developed in [5], focusing on local and global facial beauty features, and a dynamic exponential loss function was proposed to adjust the model’s optimization objectives dynamically. In [6], a convolutional neural network’s ability to extract facial features was optimized, using generative adversarial networks to generate facial data. Although these studies improved the FBP performance in terms of the adaptability to small datasets, robustness to noise, and label utilization, most methods used ACC as their primary evaluation metric. In real-world FBP datasets with multi-class and imbalanced characteristics, the ACC struggles to objectively reflect the model’s discriminative ability across all the classes. Therefore, this paper adopts the MR metric as its core evaluation metric, exploring how to improve it while preserving the true data distribution.

2.2. ConvNeXt

ConvNeXt is a pure convolutional neural network architecture inspired by a ViT. Due to its depthwise separable convolutions, inverted bottleneck structures, 7 × 7 large-kernel convolutions, Gaussian Error Linear Unit (GELU) activation, and layer normalization (LN), it has optimized feature extraction capabilities, demonstrating exceptional performance in tasks such as image classification, segmentation, and detection, particularly when handling imbalanced datasets, low-resolution images, and complex scenes [7,8,9]. The study in [7] integrated ConvNeXt and an MiT model trained on multi-scale images with test-time augmentation, mitigating label noise and small-dataset limitations and significantly reducing the misclassifications in boundary regions. A further study, ref. [8], used ConvNeXt V2 as a feature extractor, combining asymmetric loss functions and view prediction aggregation to address long-tail distribution issues in the MIMIC-CXR dataset. Through multi-view prediction aggregation, the detection accuracy and overall classification performance were significantly improved. Finally, the study in [9] proposed PolypNextLSTM, a lightweight video segmentation network based on a streamlined ConvNeXt backbone, combined with bidirectional ConvLSTM modules and a UNet-style decoder. PolypNextLSTM addressed issues such as a lack of temporal context, low computational efficiency, fast motion, and scale variations, significantly improving the segmentation accuracy. This study adopted ConvNeXt as a baseline model, leveraging its robust feature extraction and multi-class prediction capabilities to achieve an optimal initial classification performance.

2.3. Contrastive Learning

CL is a machine learning technique through which robust representations are learned by pulling similar samples’ features closer together and pushing those of dissimilar samples apart. This effectively enhances the feature discriminability when handling imbalanced datasets and high-dimensional image data. Supervised contrastive learning [10] extends CL to supervised settings, using joint training with cross-entropy and contrastive losses to encourage feature clustering for similar samples and separation for dissimilar ones and addressing the insufficient robustness of class representations. Multi-view augmentation-based contrastive learning [11,12] generates more positive sample pairs by enhancing the multi-view diversity, increasing the intra-class compactness and representation robustness in long-tail recognition. The study [13] utilizes multi-visual modalities, further combines temporal-level feature learning, and enhances the separability of categories by improving feature robustness. Parameterized contrastive learning [14] introduces learnable parameterized class centers and logistic adjustment techniques to increase the distance between contrastive pairs, addressing deficiencies in representation learning and classification in long-tail recognition, while balanced contrastive learning [15] employs class weight embedding and class averaging strategies to balance the impact of positive and negative sample pairs, enhancing the feature expressiveness for tail classes. In addition, targeted supervised contrastive learning [16] aligns class features with regular simplex vertices to address deficiencies in the class feature compactness and discriminability in long-tail recognition. Subclass-balanced contrastive learning [17] strengthens the feature robustness for tail classes using subclass division and balanced contrastive loss, improving the balance of class representations. 
Finally, decoupled contrastive learning [18] optimizes feature learning for tail classes by separating contrastive learning from the rest of the process, enhancing the representation discriminability and stability.

Overall, this paper proposes an ensemble strategy that combines CL with ConvNeXt to construct a dedicated auxiliary binary classification module for the most confused class pairs in multi-class tasks. This approach significantly enhances the discriminability of the confused classes without compromising ConvNeXt’s classification performance for the dominant classes.

3. Methodology

3.1. Overall Framework

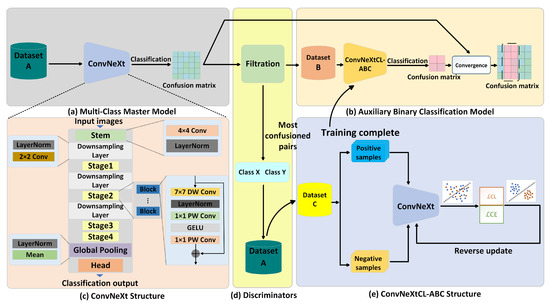

ECL-ConvNeXt is an ensemble strategy combining CL with ConvNeXt. The overall architecture, as shown in Figure 1, consists of a primary multi-class model, a discriminator, and an ABC model. First, the primary model, based on ConvNeXt, performs multi-class prediction. The discriminator quantifies and ranks the confusion matrix generated by the primary model using a validation set to identify the two most confused classes. Next, ConvNeXtCL-ABC is trained to optimize the discriminative ability for these two classes. Finally, during inference, ConvNeXtCL-ABC’s predictions replace the primary model’s original predictions for the corresponding two classes in the confusion matrix, thereby improving the overall MR. The process is detailed in Algorithm 1.

Figure 1.

Overall ECL-ConvNeXt framework. (a) Multi-Class Master Model: ConvNeXt model for multi-class facial beauty prediction. (b) Auxiliary Binary Classification Model: ConvNeXtCL-ABC for resolving confused class pairs. (c) ConvNeXt Structure: Architecture of the primary ConvNeXt model. (d) Discriminators: Modules for identifying confused class pairs to guide optimization. (e) ConvNeXtCL-ABC Structure: Architecture integrating contrastive learning for binary classification.

3.2. ConvNeXt

ConvNeXt builds upon the ResNet-50 framework [19]. Its overall architecture, illustrated in Figure 1c, consists of four components: an initial feature extraction layer (Stem), four progressive feature extraction stages (Stages), downsampling layers (Downsampling Layer), and a classification head (Head).

In the Stem stage, the model employs a single non-overlapping convolution operation, mimicking the patch embedding strategy of a ViT to reduce the spatial resolution while increasing the channel dimensions and achieving efficient feature compression. The Stages phase involves a differentiated design, constructing a hierarchical feature pyramid by dynamically adjusting the number of residual blocks (Blocks) and downsampling strategies across the Stages. The core innovation of each Block lies in the introduction of 7 × 7 large-kernel depthwise convolutions, which enhance spatial context modeling by expanding the receptive field—a design inspired by the local window attention mechanism of the Swin Transformer [20]. Additionally, in this model, the depthwise convolution operation is shifted to the middle layer of the inverted bottleneck structure, significantly improving the computational efficiency by optimizing the computation path.

Algorithm 1.

ECL-ConvNeXt ensemble strategy algorithm.

Regarding the activation function, in ConvNeXt, the traditional ReLU is replaced with GELU, introducing smooth non-linear characteristics to emulate Transformer activation behavior. Furthermore, the model’s activation strategy is streamlined by retaining only a single GELU layer per Block, balancing the model’s computational efficiency and feature representation capacity by reducing the number of activation function layers—a design also inspired by the sparse activation mechanisms of Transformers.

Regarding normalization, in ConvNeXt, BatchNorm is replaced with LN, which is located at the start of the inverted bottleneck. Each Block is equipped with a single LN layer, reducing the computational overhead of normalization while enhancing the training stability and convergence speed due to standardized feature distributions.

3.3. Discriminator

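The discriminator’s core function is to identify the most confused class pair in the ConvNeXt primary multi-class model, directing the optimization of the ConvNeXtCL-ABC model. Its architecture is shown in Figure 1d. First, the primary ConvNeXt model generates a confusion matrix $M \in \mathbb{N}^{C \times C}$ on the validation set $D_{val}$, where $M_{ij}$ represents the number of samples with true class i predicted as class j.

In the row-normalized confusion matrix, each element $P_{ij}$ represents the proportion of class i samples misclassified as class j, calculated as

$$P_{ij} = \frac{M_{ij}}{N_i}, \tag{1}$$

where $N_i = \sum_{j=1}^{C} M_{ij}$ is the total number of samples with true class i in the validation set $D_{val}$.

The average misclassification probability between classes i and j is

$$\bar{P}_{ij} = \frac{P_{ij} + P_{ji}}{2}, \tag{2}$$

where $P_{ij}$ and $P_{ji}$ are the mutual misclassification proportions between classes i and j.

The two most confused classes, denoted as $c_1$ and $c_2$, are selected by

$$(c_1, c_2) = \arg\max_{1 \le i < j \le C} \bar{P}_{ij}, \tag{3}$$

where C is the total number of classes.

Dataset $D_C$ is constructed as

$$D_C = \{(x, y) \in D_A : y \in \{c_1, c_2\}\}, \tag{4}$$

which is used to train the ConvNeXtCL-ABC model. Dataset $D_B$ is constructed as

$$D_B = \{x \in D_A : \hat{y}(x) \in \{c_1, c_2\}\}, \tag{5}$$

providing inputs for re-prediction by ConvNeXtCL-ABC. Here, $D_A$ is the full-class dataset, $y$ is the true label of sample $x$, and $\hat{y}(x)$ is the class predicted by the primary ConvNeXt model.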
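The discriminator steps above (row normalization, mutual confusion averaging, pair selection, and construction of the two subsets) can be sketched in plain Python. The validation confusion matrix, the sample tuples, and the function names below are hypothetical; the sketch only assumes that rows index true classes and columns index predicted classes.

```python
from itertools import combinations


def most_confused_pair(confusion):
    """Return (i, j, score) for the class pair with the highest mean of the
    two row-normalized mutual misclassification rates."""
    row_totals = [sum(row) for row in confusion]

    def rate(i, j):
        # Proportion of true-class-i samples predicted as class j.
        return confusion[i][j] / row_totals[i] if row_totals[i] else 0.0

    best = None
    for i, j in combinations(range(len(confusion)), 2):
        score = 0.5 * (rate(i, j) + rate(j, i))
        if best is None or score > best[2]:
            best = (i, j, score)
    return best


def build_subsets(samples, pair):
    """Split (sample_id, true_label, predicted_label) records into the subset
    of true pair members (for training the auxiliary classifier) and the subset
    predicted as pair members (for re-prediction)."""
    train_subset = [s for s in samples if s[1] in pair]
    repredict_subset = [s for s in samples if s[2] in pair]
    return train_subset, repredict_subset


# Hypothetical three-class validation confusion matrix.
val_confusion = [
    [50, 10, 0],
    [20, 70, 10],
    [2, 18, 40],
]
i, j, score = most_confused_pair(val_confusion)
pair = (i, j)
print(pair, round(score, 4))

# Hypothetical records: (sample_id, true_label, primary_prediction).
samples = [("a", 1, 1), ("b", 1, 2), ("c", 0, 1), ("d", 2, 0), ("e", 0, 0)]
train_subset, repredict_subset = build_subsets(samples, pair)
print([s[0] for s in train_subset], [s[0] for s in repredict_subset])
```

Note that the two subsets differ: sample "c" has a true label outside the pair but is still re-predicted, while sample "d" belongs to the pair but was routed elsewhere by the primary model and is therefore not re-predicted.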

3.4. Auxiliary Binary Classifier ConvNeXtCL-ABC Based on CL and ConvNeXt

CL allows useful feature representations to be learned by maximizing the similarity between similar samples and minimizing it between dissimilar ones, significantly enhancing the feature discriminability and reducing misclassifications. In binary classification experiments conducted in recent years, ConvNeXt has demonstrated superior performance compared to numerous classic models. Therefore, this study constructed an auxiliary binary classifier, ConvNeXtCL-ABC, based on ConvNeXt and CL, to reclassify the most confused class pairs identified by the ConvNeXt primary multi-class model. The overall architecture is depicted in Figure 1e.

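The ConvNeXtCL-ABC model is trained on a subset, $D_C$, of the full-class dataset $D_A = \{(x_k, y_k)\}_{k=1}^{N}$, where $D_A$ contains all images and their labels, $x_k$ is an image, $y_k \in \{1, \dots, C\}$ is a label, N is the total number of samples, and C is the number of classes. The subset $D_C$ includes samples with true labels of $c_1$ or $c_2$, where $c_1$ and $c_2$ are the two most confused classes in the multi-class classification task, as identified in the confusion matrix. The goal of CL is to optimize feature embeddings, bringing features of the same class closer together and pushing features of different classes apart. The contrastive loss function is defined as

$$L_{CL} = -\log \frac{\exp\left(\mathrm{sim}(z, z^{+})/\tau\right)}{\exp\left(\mathrm{sim}(z, z^{+})/\tau\right) + \sum_{z^{-} \in \mathcal{N}} \exp\left(\mathrm{sim}(z, z^{-})/\tau\right)}, \tag{6}$$

where $z = f_{\theta}(x)$ is the feature embedding, $\theta$ is the ConvNeXtCL-ABC model parameter, $z^{+}$ is the feature of a positive sample from the same class, $z^{-}$ is the feature of a negative sample from a different class, $\mathcal{N}$ is the set of negative samples, $\mathrm{sim}(\cdot, \cdot)$ is the cosine similarity, and $\tau$ is the temperature parameter.

As a binary classifier, to ensure classification accuracy, ConvNeXtCL-ABC uses the cross-entropy loss, defined as

$$L_{CE} = -\sum_{c \in \{c_1, c_2\}} \mathbb{1}[y = c] \log p_c, \qquad p_c = \mathrm{softmax}\left(g(z)\right)_c, \tag{7}$$

where $p_c$ is the predicted probability, $\mathbb{1}[\cdot]$ is the indicator function (1 if $y = c$, 0 otherwise), $c \in \{c_1, c_2\}$ are class indices, and $g(\cdot)$ is the classification head function.

The total loss combines contrastive learning and the classification task:

$$L_{total} = L_{CE} + \lambda L_{CL}, \tag{8}$$

where $\lambda$ is the balancing factor.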
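To illustrate how a contrastive term and a cross-entropy term can be combined, the following is a minimal single-sample sketch in plain Python. It assumes an InfoNCE-style contrastive loss with cosine similarity and a simple weighted sum of the two terms; the exact form used by the authors cannot be confirmed from the text, and the embeddings and logits are made-up values. The temperature of 0.07 and the 1:1 weighting follow the settings reported in Section 4.2.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(anchor, positive, negatives, temperature=0.07):
    """InfoNCE-style loss for one anchor, one positive and several negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


def cross_entropy(logits, target):
    """Softmax cross-entropy for a single sample (log-sum-exp for stability)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[target]


def total_loss(ce, cl, weight=1.0):
    """Weighted sum of the two terms; weight=1.0 gives a 1:1 ratio."""
    return ce + weight * cl


# Hypothetical 2-D embeddings for one anchor of the first confused class.
anchor, positive = [1.0, 0.0], [0.9, 0.1]
loss_separated = contrastive_loss(anchor, positive, negatives=[[0.0, 1.0]])
loss_confused = contrastive_loss(anchor, positive, negatives=[[0.95, 0.05]])

ce_uncertain = cross_entropy([0.0, 0.0], target=0)  # equals log(2)
combined = total_loss(ce_uncertain, loss_confused)
print(loss_separated, loss_confused, ce_uncertain, combined)
```

The contrastive term is close to zero when the negative is far from the anchor and grows when a negative sits nearer to the anchor than the positive does, which is the situation the auxiliary classifier is meant to correct for the confused pair.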

3.5. Ensemble Strategy

To further optimize the performance of the ConvNeXt multi-class model for the most confused class pair $(c_1, c_2)$, ConvNeXtCL-ABC is ensembled as an auxiliary binary classifier into the multi-class framework. In the confusion matrix, $M$, of the ConvNeXt primary multi-class model, $M_{ij}$ represents the number of samples with a true class of i predicted to belong to class j. For classes $c_1$ and $c_2$, the confusion matrix of the binary classification subset is defined as

$$M^{bin} = \begin{pmatrix} M_{c_1 c_1} & M_{c_1 c_2} \\ M_{c_2 c_1} & M_{c_2 c_2} \end{pmatrix}. \tag{9}$$

The binary MR is

$$MR^{bin} = \frac{1}{2}\left(\frac{M_{c_1 c_1}}{M_{c_1 c_1} + M_{c_1 c_2}} + \frac{M_{c_2 c_2}}{M_{c_2 c_1} + M_{c_2 c_2}}\right). \tag{10}$$

In Formula (10), the binary macro recall is given, which measures the average recall of classes $c_1$ and $c_2$ in the binary classification task, evaluating the performance of the ConvNeXtCL-ABC model in correctly identifying samples from these two classes.

During the prediction process using both the ConvNeXt primary multi-class model and ConvNeXtCL-ABC, let the initial confusion matrix of the ConvNeXt primary model be $M$ and the optimized confusion matrix be $M'$. When the ConvNeXt primary model predicts a sample, $x$, to be $\hat{y} \in \{c_1, c_2\}$, ConvNeXtCL-ABC re-predicts it to be $\hat{y}' \in \{c_1, c_2\}$, adjusting $M_{i c_1}$ and $M_{i c_2}$. Specifically, for a true class of i, define

$$S_i = M_{i c_1} + M_{i c_2}, \tag{11}$$

representing the total number of samples with a true class of i predicted to be $c_1$ or $c_2$ by the ConvNeXt primary model. After optimization,

$$M'_{i c_1} = \sum_{x:\, y = i,\ \hat{y} \in \{c_1, c_2\}} \mathbb{1}[\hat{y}' = c_1], \tag{12}$$

$$M'_{i c_2} = \sum_{x:\, y = i,\ \hat{y} \in \{c_1, c_2\}} \mathbb{1}[\hat{y}' = c_2], \tag{13}$$

where $\hat{y}'$ is the prediction result obtained by ConvNeXtCL-ABC, and

$$M'_{i c_1} + M'_{i c_2} = S_i. \tag{14}$$

In Formula (14), it is ensured that the total number of samples predicted as $c_1$ or $c_2$ for a true class i remains unchanged after optimization by ConvNeXtCL-ABC, with only their distribution between $c_1$ and $c_2$ being adjusted. For non-target classes, $j \notin \{c_1, c_2\}$,

$$M'_{ij} = M_{ij}. \tag{15}$$

In Formula (15), it is indicated that the confusion matrix entries for non-target classes ($j \notin \{c_1, c_2\}$) remain unchanged after optimization, ensuring that the ConvNeXtCL-ABC model only affects predictions for $c_1$ and $c_2$. The optimized recall is

$$Recall'_{c} = \frac{M'_{c c}}{\sum_{j=1}^{C} M_{c j}}, \qquad c \in \{c_1, c_2\}. \tag{16}$$

In Formula (16), the optimized recall for classes $c_1$ and $c_2$ is given, reflecting the proportion of correctly classified samples for these classes after re-prediction by ConvNeXtCL-ABC. The optimized MR is

$$MR' = \frac{1}{C}\left(Recall'_{c_1} + Recall'_{c_2} + \sum_{i \notin \{c_1, c_2\}} Recall_i\right), \tag{17}$$

where $Recall_i$ remains unchanged for $i \notin \{c_1, c_2\}$. In Formula (17), the optimized macro recall is given, averaging the recall of all classes, including the optimized recalls for $c_1$ and $c_2$ and the unchanged recalls for non-target classes, to evaluate the overall classification performance. The upper bound of the recall is

$$Recall'_{c} \le \frac{S_c}{\sum_{j=1}^{C} M_{c j}} = \frac{M_{c c_1} + M_{c c_2}}{\sum_{j=1}^{C} M_{c j}}, \qquad c \in \{c_1, c_2\}. \tag{18}$$

In Formula (18), the theoretical upper bounds for the optimized recalls of classes $c_1$ and $c_2$ are given, representing the maximum achievable recall if all samples predicted as $c_1$ or $c_2$ by the primary model are correctly reclassified by ConvNeXtCL-ABC.

By jointly optimizing $L_{CL}$ and $L_{CE}$, ConvNeXtCL-ABC significantly improves the discriminability of $c_1$ and $c_2$. This optimizes the prediction distribution of $c_1$ and $c_2$ in the multi-class confusion matrix while ensuring stable performance for the non-target classes, alleviating the issue of class confusion in multi-class tasks.
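At the level of individual samples, the replacement step and the recall upper bound can be sketched as follows. The labels, the primary predictions, and the auxiliary predictions are hypothetical, and the function names are illustrative; the sketch only assumes that the auxiliary classifier answers within the targeted pair.

```python
def ensemble_predict(primary_preds, auxiliary_preds, pair):
    """Replace primary predictions that fall in `pair` with auxiliary ones.

    primary_preds: list of class indices from the multi-class model.
    auxiliary_preds: dict {sample_index: class in pair} for re-predicted samples.
    """
    final = []
    for idx, pred in enumerate(primary_preds):
        if pred in pair:
            new_pred = auxiliary_preds[idx]
            if new_pred not in pair:
                raise ValueError("auxiliary classifier must answer within the pair")
            final.append(new_pred)
        else:
            final.append(pred)  # predictions outside the pair are kept as-is
    return final


def recall(y_true, preds, cls):
    """Recall of class `cls`."""
    support = sum(1 for t in y_true if t == cls)
    hits = sum(1 for t, p in zip(y_true, preds) if t == cls and p == cls)
    return hits / support


def recall_upper_bound(y_true, primary_preds, cls, pair):
    """Best recall `cls` can reach: its samples routed into the pair / its support."""
    support = sum(1 for t in y_true if t == cls)
    routed = sum(1 for t, p in zip(y_true, primary_preds) if t == cls and p in pair)
    return routed / support


# Hypothetical test labels and primary-model predictions for three classes.
y_true = [0, 0, 1, 1, 1, 1, 2, 2, 2, 2]
primary = [0, 1, 1, 2, 2, 0, 2, 1, 1, 2]
pair = (1, 2)
# Hypothetical auxiliary predictions for every sample the primary model put in the pair.
auxiliary = {1: 1, 2: 1, 3: 1, 4: 2, 6: 2, 7: 2, 8: 1, 9: 2}

final = ensemble_predict(primary, auxiliary, pair)
for cls in (0, 1, 2):
    print(cls, recall(y_true, primary, cls), recall(y_true, final, cls))
print(recall_upper_bound(y_true, primary, 1, pair), recall_upper_bound(y_true, primary, 2, pair))
```

In this example the recall of the non-target class is unchanged, while the recalls of the two targeted classes rise but stay below their bounds: a sample of class 1 that the primary model assigned to class 0 can never be recovered by the auxiliary classifier.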

4. Experimental Results and Discussion

4.1. Experimental Datasets

4.1.1. LSAFBD Dataset

The Large-Scale Asia Facial Beauty Database (LSAFBD) [21], established by our project team, contains 20,000 labeled facial images and 80,000 unlabeled facial images, with an image resolution of 144 × 144. The labeled images include 10,000 male facial images and 10,000 female facial images, including Asian faces of various ethnicities and age groups. The images encompass diverse lighting conditions, camera angles, and occlusion scenarios. The labeled images are categorized into five beauty levels: “0” (extremely unattractive), “1” (unattractive), “2” (average), “3” (attractive), and “4” (extremely attractive), based on the average scores assigned by 75 volunteers. The experiments conducted in this study used all the labeled female facial images from the LSAFBD mentioned above.

4.1.2. MEBeauty Dataset

The MEBeauty dataset [22] is a facial image dataset containing 2550 male and female facial images, including Black, Asian, Caucasian, Latin, Indian, and Middle Eastern ethnicities. The images were rated by approximately 300 individuals from diverse cultural and social backgrounds, with the ratings divided into three categories: “0” (unattractive), “1” (average), and “2” (attractive). The dataset includes facial images of various ages, genders, and unconstrained expressions and positions. The experiments in this study used 2334 facial images from the MEBeauty dataset.

4.1.3. HotOrNot Dataset

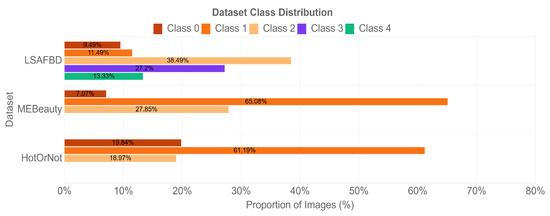

The HotOrNot dataset [23] comprises 2056 facial images collected from the internet, with a low resolution and cluttered backgrounds, pose variations, suboptimal lighting conditions, and unaligned faces, making it a challenging dataset for facial beauty prediction. The images were labeled by multiple raters as “0” (unattractive), “1” (average), or “2” (attractive). The facial beauty label distributions across the three datasets are illustrated in Figure 2.

Figure 2.

Facial beauty label distribution of LSAFBD, MEBeauty, and HotOrNot datasets.

4.2. Experimental Environment and Implementation Details

The LSAFBD, MEBeauty, and HotOrNot datasets were randomly split into training, validation, and test sets in a 6:2:2 ratio, using stratified sampling so that the class distribution of each subset was consistent with that of the overall dataset. The experimental environment configuration is summarized in Table 1. All the experiments were conducted on a single computational node of a high-performance computing cluster, equipped with two Intel Xeon Gold 5218 processors, 188 GB of memory, and four NVIDIA Tesla V100-PCIE-32GB GPUs. The experiments were run on the CentOS 7.6 operating system using Python 3.8.0, with all the algorithms implemented in the PyTorch 2.4.0 deep learning framework. An Adam optimizer was used with an initial learning rate of 0.0001, combined with a StepLR scheduler that reduced the learning rate every five epochs. Training ran for up to 80 epochs with a batch size of 32, and an early stopping mechanism monitored the validation loss with a patience value of 3. For the contrastive learning component, the temperature coefficient was set to 0.07, and the weight ratio of the contrastive loss to the cross-entropy loss was 1:1. A distributed data parallel strategy was employed to accelerate the training.
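A stratified 6:2:2 split of the kind described above could be sketched as follows. This is one possible implementation in plain Python, not the authors' code: the seed, the rounding rule, and the function name `stratified_split` are assumptions, and the label list is synthetic.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, ratios=(0.6, 0.2, 0.2), seed=0):
    """Return (train, val, test) index lists that preserve each class's share."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_train = round(ratios[0] * len(indices))
        n_val = round(ratios[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Synthetic imbalanced labels: 10% / 60% / 30% across three classes.
labels = [0] * 10 + [1] * 60 + [2] * 30
train, val, test = stratified_split(labels)

train_counts = Counter(labels[i] for i in train)
print(len(train), len(val), len(test), dict(train_counts))
```

Splitting within each class, rather than over the pooled data, is what keeps the minority classes represented in the validation and test sets at the same proportion as in the full dataset.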

Table 1.

Experimental environment configuration.

4.3. Evaluation Metrics

The assessment metrics adopted in this research included the ACC, recall, MR, Macro Precision (MPR), and Macro F1-score (MF1). The ACC represents the proportion of correctly classified samples in relation to the total number of samples, with higher values indicating a better overall classification performance. The recall measures the proportion of correctly classified samples for the ith class, with higher values indicating a stronger recognition ability for that class. The MR is the average of the recall values across all the classes, with higher values indicating a more balanced classification performance across all the classes. The MPR is the average of the precision values across all classes, where precision for the ith class is the proportion of samples classified as class i that are actually in class i, reflecting the accuracy of positive predictions for each class. The MF1 is the average of the F1-score values across all classes, where the F1-score for the ith class is the harmonic mean of precision and recall for that class, balancing both metrics to assess classification performance comprehensively. These metrics are defined as follows:

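$$ACC = \frac{\sum_{i=1}^{C} TP_i}{\sum_{i=1}^{C} (TP_i + FN_i)}$$

$$Recall_i = \frac{TP_i}{TP_i + FN_i}, \qquad MR = \frac{1}{C} \sum_{i=1}^{C} Recall_i$$

$$Precision_i = \frac{TP_i}{TP_i + FP_i}, \qquad MPR = \frac{1}{C} \sum_{i=1}^{C} Precision_i$$

$$F1_i = \frac{2 \cdot Precision_i \cdot Recall_i}{Precision_i + Recall_i}, \qquad MF1 = \frac{1}{C} \sum_{i=1}^{C} F1_i$$

where C is the number of classes, $TP_i$ represents the number of samples correctly classified as class i (True Positives), $FN_i$ represents the number of samples in class i incorrectly classified as non-i classes (False Negatives), and $FP_i$ represents the number of samples not in class i incorrectly classified as class i (False Positives).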
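The five metrics can be computed directly from a confusion matrix, as in the following sketch. The matrix is hypothetical, the function name is illustrative, and treating an undefined ratio (zero denominator) as 0.0 is an assumption rather than something the paper specifies.

```python
def classification_metrics(confusion):
    """Compute ACC, per-class recall, MR, MPR and MF1 from a confusion matrix
    whose rows are true classes and whose columns are predicted classes."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    recalls, precisions, f1s = [], [], []
    for i in range(n):
        tp = confusion[i][i]
        fn = sum(confusion[i]) - tp                         # class i predicted as another class
        fp = sum(confusion[r][i] for r in range(n)) - tp    # other classes predicted as i
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        recalls.append(recall)
        precisions.append(precision)
        f1s.append(f1)
    return {
        "ACC": sum(confusion[i][i] for i in range(n)) / total,
        "recall": recalls,
        "MR": sum(recalls) / n,
        "MPR": sum(precisions) / n,
        "MF1": sum(f1s) / n,
    }


# Hypothetical imbalanced three-class confusion matrix (supports 10 / 100 / 10).
confusion = [
    [8, 2, 0],
    [10, 80, 10],
    [0, 4, 6],
]
metrics = classification_metrics(confusion)
print({k: v for k, v in metrics.items() if k != "recall"})
print(metrics["recall"])
```

Here the ACC is dominated by the majority class, while the MR weights the weaker recall of the smallest class equally with the others.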

4.4. Comparative Experiments

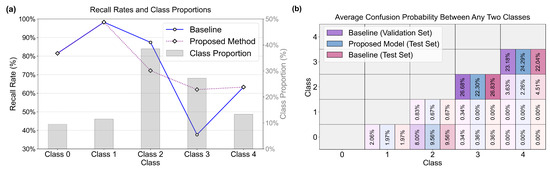

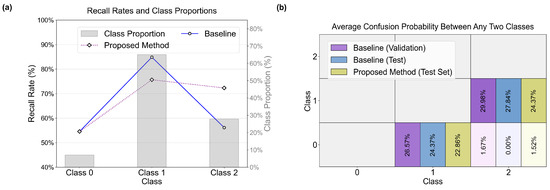

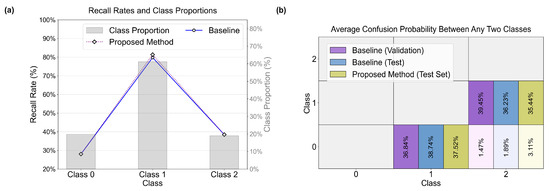

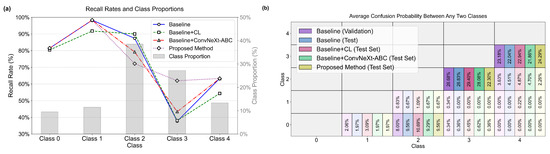

To validate the effectiveness of the proposed method, experiments were conducted on three imbalanced datasets—LSAFBD, MEBeauty, and HotOrNot—comparing its performance with that of classic models. The experimental results are presented in Table 2. Quantitative metrics are shown in Figure 3, Figure 4 and Figure 5 and analyzed to evaluate the optimization effects of ConvNeXt-XLarge and the proposed method, as well as the differences in their performance.

Table 2.

Comparative experimental results achieved on LSAFBD, MEBeauty, and HotOrNot datasets.

Figure 3.

Comparison between the proposed approach and the basic model with respect to the LSAFBD dataset. In (a), two line charts show the recall rates of different models across various categories, while the bar chart illustrates the proportion of each category in terms of quantity. In (b), the average misclassification probabilities between two different categories are presented. Each data point represents the average probability of mutual confusion between the corresponding two categories, with darker colors indicating more severe confusion.

Figure 4.

Comparison between the proposed approach and the basic model with respect to the MEBeauty dataset. In (a), two line charts show the recall rates of different models across various categories, while the bar chart illustrates the proportion of each category in terms of quantity. In (b), the average misclassification probabilities between two different categories are presented. Each data point represents the average probability of mutual confusion between the corresponding two categories, with darker colors indicating more severe confusion.

Figure 5.

Comparison between the proposed approach and the basic model with respect to the HotOrNot dataset. In (a), two line charts show the recall rates of different models across various categories, while the bar chart illustrates the proportion of each category in terms of quantity. In (b), the average misclassification probabilities between two different categories are presented. Each data point represents the average probability of mutual confusion between the corresponding two categories, with darker colors indicating more severe confusion.

4.4.1. Comparative Experiments Conducted on the LSAFBD Dataset

CS3Darknet-S [24] employs depthwise separable convolutions and a focusing module to optimize low-resource feature extraction, while DaViT-T [25] integrates channel and spatial attention to enhance representation in complex scenes. DeiT-T [26] improves small-scale model generalization through knowledge distillation and strong data augmentation. Furthermore, FastViT-T [27] balances efficiency and performance using a hybrid convolution–attention design, and FocalNet-T [28] introduces focal modulation to enhance local features, optimizing imbalanced classification. Hiera-T [29] improves complex image modeling through multi-scale feature fusion. Mixer-B [30] replaces self-attention with channel and spatial mixing for efficient extraction, while PiT-S [31] reduces the computational load using pooling operations, optimizing small-dataset classification. MobileNetV4-S [32] uses depthwise separable convolutions and bottleneck designs suitable for mobile devices, ViT-T [3] models global features using patch embedding and self-attention, and XCiT-S [33] enhances feature interaction using cross-channel attention. Swin [20] optimizes local modeling for high-resolution images using sliding window attention. CaiT-S [34] optimizes feature representation using class tokens and image patch interaction. The hybrid architecture of CoAt-M [35] improves its performance using multi-scale feature extraction. CoAtNet [36] optimizes classification by integrating deep convolutions and global attention, ConvIT-T [37] enhances Transformer spatial modeling using local convolutions, and ConvMixer [38] achieves efficient extraction using deep convolutions and channel mixing. Finally, the baseline model, ConvNeXt-XLarge [2], integrates ResNet and Transformer designs, leveraging large-kernel convolutions and layer normalization to optimize imbalanced dataset classification.

As listed in Table 2, the baseline model is the ConvNeXt-XLarge configuration of ConvNeXt. In the FBP task on the LSAFBD dataset, the proposed method outperformed all 18 comparative models in terms of ACC, MPR, MR, and MF1. Specifically, it achieved an ACC of 72.09%, an MPR of 75.24%, an MR of 75.43%, and an MF1 of 75.28%, which are improvements of 0.74%, 0.84%, 1.79%, and 2.03%, respectively, over the baseline’s corresponding metrics of 71.35%, 74.40%, 73.64%, and 73.25%. As shown in Figure 3, in the analysis of the average misclassification probability for any two classes, the baseline ConvNeXt-XLarge model exhibited the highest probability, 26.68%, for classes 2 and 3 on the validation set. The proposed method achieved recall rates of 72.17% and 61.95% for classes 2 and 3, respectively, the sum of which (134.12%) surpassed that of the baseline’s 87.39% and 37.68% (125.07%). Additionally, the proposed method reduced the average misclassification probability for this pair to 22.30%, a reduction of 4.53% compared to the baseline’s 26.83%.

4.4.2. Comparative Experiments on the MEBeauty Dataset

As listed in Table 2, in the FBP task on the MEBeauty dataset, the proposed method outperformed all comparative models in terms of MR and MF1 while showing decreases in ACC and MPR. Specifically, it attained an ACC of 73.23%, an MPR of 68.52%, an MR of 67.50%, and an MF1 of 67.61%. Compared to the baseline ConvNeXt-XLarge model, this represents an improvement of 1.87% in MR and 0.01% in MF1, while the ACC decreased by 1.56% and the MPR decreased by 4.03%. The decreases in ACC and MPR are closely related to the dataset’s class distribution and the model’s recall rate adjustments, as observed in Figure 4a. Figure 4a indicates that the MEBeauty dataset has a highly imbalanced class distribution: class 1 accounts for the largest proportion at 65.08%, followed by class 2 at 27.85% and class 0 at 7.07%. Class 1 therefore has a dominant impact on the ACC, because changes in its performance carry the greatest weight in the aggregate. From the recall rate perspective, the baseline model prioritized the dominant class 1 and achieved a high recall rate of 84.87% for this class. The proposed method, however, reduced the recall rate of class 1 to 75.66%. Although the proposed method improved the recall rate of class 2 from 56.15% to 72.31% and maintained the recall rate of class 0 at 54.55%, the loss in recall for class 1, given its 65.08% proportion, had a more pronounced impact. For ACC, the reduction in correctly classified samples from the large class 1 outweighed the increase in correctly classified samples from class 2, leading to a drop in overall accuracy. For MPR, which averages precision across all classes, the class 1 samples that are no longer recalled are assigned to other classes, where they count as false positives and lower the precision of those classes. Meanwhile, the smaller proportion of class 2 limits the contribution of its precision improvement. These two factors together resulted in the observed decrease in MPR.

Figure 4 shows that the baseline model exhibited the highest average misclassification probability of 29.98% for classes 1 and 2 on the validation set. The proposed method achieved recall rates of 75.66% and 72.31% for classes 1 and 2, respectively, demonstrating more balanced performance compared to the baseline’s 84.87% and 56.15%. Additionally, the average misclassification probability decreased to 24.37%, a reduction of 3.47% compared to the baseline’s 27.84%.
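The trade-off between ACC and MR described above can be reproduced with simple arithmetic. The sketch below assumes that the test split has the same class proportions as those reported in Figure 4a, so that ACC equals the proportion-weighted sum of the per-class recalls; under this assumption the results are approximations and need not match Table 2 exactly. All variable names are illustrative.

```python
# Class proportions from Figure 4a and per-class recalls quoted in the text.
proportions = {0: 0.0707, 1: 0.6508, 2: 0.2785}
baseline_recall = {0: 0.5455, 1: 0.8487, 2: 0.5615}
proposed_recall = {0: 0.5455, 1: 0.7566, 2: 0.7231}


def weighted_accuracy(recall, share):
    """ACC as the class-share-weighted sum of recalls (assumes share matches the test set)."""
    return sum(share[c] * recall[c] for c in share)


def macro_recall(recall):
    """MR as the unweighted mean of the per-class recalls."""
    return sum(recall.values()) / len(recall)


baseline_acc = weighted_accuracy(baseline_recall, proportions)
proposed_acc = weighted_accuracy(proposed_recall, proportions)
baseline_mr = macro_recall(baseline_recall)
proposed_mr = macro_recall(proposed_recall)

print(f"ACC: {baseline_acc:.4f} -> {proposed_acc:.4f}")
print(f"MR:  {baseline_mr:.4f} -> {proposed_mr:.4f}")
```

Because class 1 carries about 65% of the weight in ACC but only one third of the weight in MR, the recall lost on class 1 lowers ACC even though the larger recall gain on class 2 raises MR.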

4.4.3. Comparative Experiments on the HotOrNot Dataset

As listed in Table 2, in the FBP task on the HotOrNot dataset, the proposed method performed the best among 18 models. It achieved an ACC of 62.62%, an MPR of 56.39%, an MR of 49.29%, and an MF1 of 51.00%, which represents an improvement of 0.97% in ACC, 0.78% in MPR, 0.53% in MR, and 0.59% in MF1, respectively, over the baseline ConvNeXt-XLarge model’s corresponding metrics of 61.65% ACC, 55.61% MPR, 48.76% MR, and 50.41% MF1. According to Figure 5, the baseline ConvNeXt-XLarge model exhibited the highest average misclassification probability of 39.45% for classes 1 and 2 on the validation set. The proposed method achieved recall rates of 81.35% and 38.46% for classes 1 and 2, respectively, the sum of which was greater than that of the baseline’s 79.76% and 38.46%. Furthermore, the average misclassification probability was reduced to 35.44%, a decrease of 0.79% compared to the baseline’s 36.23%.

From the above, it is evident that the proposed method mitigated the class confusion issues by analyzing the baseline ConvNeXt-XLarge model’s average misclassification probabilities for any two classes in the validation sets of each dataset. This approach reduced the average misclassification probability for the most confused classes, improving the MR on all three datasets and the ACC on the LSAFBD and HotOrNot datasets.
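The selection of the most confused class pair can be sketched as follows. This is a minimal illustration, assuming that the average misclassification probability between classes i and j is the mean of the two row-normalized confusion rates, P(pred = j | true = i) and P(pred = i | true = j); this exact formula is an assumption, and the function names and toy matrix are hypothetical.

```python
from itertools import combinations


def pairwise_confusion(cm):
    """Average mutual confusion rate for every unordered class pair.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    row_totals = [sum(row) for row in cm]
    scores = {}
    for i, j in combinations(range(len(cm)), 2):
        p_ij = cm[i][j] / row_totals[i] if row_totals[i] else 0.0
        p_ji = cm[j][i] / row_totals[j] if row_totals[j] else 0.0
        scores[(i, j)] = (p_ij + p_ji) / 2
    return scores


def most_confused_pair(cm):
    """Return the class pair with the highest average mutual confusion."""
    scores = pairwise_confusion(cm)
    return max(scores, key=scores.get)


# Hypothetical validation-set confusion matrix (not experimental data).
val_cm = [
    [6, 4, 0],
    [5, 80, 15],
    [0, 10, 10],
]
pair_scores = pairwise_confusion(val_cm)
top_pair = most_confused_pair(val_cm)
print(top_pair, pair_scores[top_pair])
```

Row normalization is used so that a minority class with few samples can still be flagged as heavily confused, which raw counts would hide.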

4.5. Ablation Experiments

To investigate the contributions of the auxiliary binary classifier, contrastive learning, and the ensemble strategy to the performance, ablation experiments were performed on the LSAFBD, MEBeauty, and HotOrNot datasets. The experiments included the baseline model, the baseline model with contrastive learning (Baseline+CL), the baseline model with a ConvNeXt-based auxiliary binary classifier (Baseline+ConvNeXt-ABC), and the proposed method (the baseline model with both CL and a ConvNeXt-based auxiliary binary classifier). The baseline model is the ConvNeXt-XLarge configuration of ConvNeXt.

4.5.1. Ablation Experiments on the LSAFBD Dataset

The results of the ablation experiments on the LSAFBD dataset are shown in Table 3 and Figure 6. The experimental data indicate that, compared to the baseline model, applying contrastive learning or a ConvNeXt-based auxiliary binary classifier alone led to declines in ACC, MR, and MF1, with mixed changes in MPR. In contrast, the proposed method, by leveraging the strengths of both strategies, achieved improvements across all metrics.

Table 3.

Ablation experiments on the LSAFBD dataset.

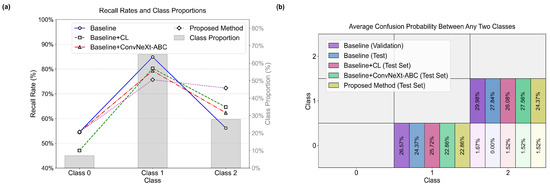

Figure 6.

Recall rates and average misclassification probabilities for each class pair in ablation experiments on LSAFBD dataset. In (a), two line charts show the recall rates of different models across various categories, while the bar chart illustrates the proportion of each category in terms of quantity. In (b), the average misclassification probabilities between two different categories are presented. Each data point represents the average probability of mutual confusion between the corresponding two categories, with darker colors indicating more severe confusion.

As shown in Figure 6b, the baseline model exhibited the highest mutual misclassification probability of 26.68% for classes 2 and 3 on the validation set. From Table 3, when contrastive learning alone was applied to the baseline model (Baseline+CL), the model focused more on the severely confused classes 2 and 3, leading to a 2.60% increase in recall for class 2 and a 0.37% increase for class 3. However, this also affected the classes with distinct features (classes 0, 1, and 4), whose recall rates declined by 1.06%, 6.52%, and 8.99%, respectively. Although the MPR increased by 0.96%, reflecting partial precision gains for the optimized confused classes, the overall performance degraded, as shown by a 2.72% decrease in MR, a 0.95% decrease in ACC, and a 1.15% decrease in MF1, because the improvements were imbalanced across classes. When only the ConvNeXt-based auxiliary binary classifier was introduced (Baseline+ConvNeXt-ABC), reducing the number of classes alone failed to separate the features of the confused class pair, and the model remained severely confused between class 2 and class 3. Specifically, while the recall rate for class 3 increased by 5.70%, the recall rate for class 2 dropped by 8.19%. This imbalance led to decreases of 0.50% in MR, 1.60% in ACC, 1.19% in MPR, and 0.30% in MF1.

In contrast, the proposed method adopts a targeted and balanced optimization strategy. The preceding experiments show that using contrastive learning to focus on specific classes can indeed improve the recall rates of the targeted classes. Thus, the proposed method uses the auxiliary binary classifier to stabilize the recall rates of the non-confused classes, avoiding the recall fluctuations that contrastive learning causes in non-target classes, while applying contrastive learning specifically to classes 2 and 3. This targeted application separates the features of classes 2 and 3, resulting in a 24.26% increase in recall for class 3 at the cost of a 15.21% decrease in recall for class 2. Ultimately, this coordinated adjustment drives overall improvements of 1.81% in MR, 0.75% in ACC, 0.88% in MPR, and 2.03% in MF1, demonstrating the effectiveness of the proposed method.
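The prediction-replacement step of the ensemble can be sketched as follows. This is a simplified illustration based on the description in the abstract: samples that the primary model assigns to the confused pair are re-classified by the auxiliary binary classifier, and all other predictions are kept. The function `ensemble_predict`, the stand-in classifier `toy_binary_predict`, and the toy inputs are hypothetical and are not the trained models.

```python
def ensemble_predict(samples, primary_preds, binary_predict, pair):
    """Replace primary predictions inside `pair` with the auxiliary classifier's output.

    samples:        inputs, aligned with primary_preds
    primary_preds:  multi-class labels predicted by the primary model
    binary_predict: callable returning one of the two labels in `pair`
    pair:           the most confused class pair, e.g. (2, 3)
    """
    final = []
    for x, pred in zip(samples, primary_preds):
        if pred in pair:
            final.append(binary_predict(x))  # re-classify only the confused subset
        else:
            final.append(pred)               # keep high-recall classes untouched
    return final


# Toy stand-in for the auxiliary binary classifier: thresholds a scalar feature.
def toy_binary_predict(x):
    return 2 if x < 0.5 else 3


toy_samples = [0.1, 0.2, 0.9, 0.3, 0.7]
toy_primary = [0, 2, 2, 3, 4]
final_preds = ensemble_predict(toy_samples, toy_primary, toy_binary_predict, (2, 3))
print(final_preds)
```

Only the samples predicted as class 2 or 3 are touched, which is how the strategy preserves the recall of the remaining classes.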

4.5.2. Ablation Experiments on the MEBeauty Dataset

The results of the ablation experiments on the MEBeauty dataset are shown in Table 4 and Figure 7. The analysis indicated that introducing contrastive learning or a ConvNeXt-based auxiliary binary classifier alone led to performance declines, whereas the proposed method enhanced MR and MF1 by combining contrastive learning and the auxiliary binary classifier. As shown in Figure 7b, the baseline model exhibited the highest misclassification probability of 29.98% for classes 1 and 2 on the validation set. From Table 4, it can be seen that when applying CL alone to the baseline model (Baseline+CL), the recall rate for class 2 increased by 8.46%, but those for classes 0 and 1 decreased by 6.06% and 4.61%, respectively, resulting in decreases of 0.73% in the MR, 1.07% in the ACC, 1.81% in the MPR, and 1.85% in the MF1. When only the ConvNeXt-based auxiliary binary classifier was used (Baseline+ConvNeXt-ABC), the recall rate for class 2 increased by 6.15%, but the rate for class 1 decreased by 5.59%; although the MR increased by 0.19%, the high proportion of class 1 in the sample led to decreases of 1.93% in the ACC, 2.37% in the MPR, and 1.13% in the MF1.

Table 4.

Results of the ablation experiment on the MEBeauty dataset.

Figure 7.

Recall rates and average misclassification probabilities for each class pair in ablation experiments on MEBeauty dataset. In (a), two line charts show the recall rates of different models across various categories, while the bar chart illustrates the proportion of each category in terms of quantity. In (b), the average misclassification probabilities between two different categories are presented. Each data point represents the average probability of mutual confusion between the corresponding two categories, with darker colors indicating more severe confusion.

In contrast, the proposed method adopts a targeted optimization strategy. The preceding experiments show that contrastive learning can improve the average recall rate of classes 1 and 2. On this premise, the proposed method first uses the auxiliary binary classifier to keep the recall rate of the non-confused class 0 unchanged and then applies contrastive learning to classes 1 and 2 to optimize their feature distance. This optimization leads to a 16.15% increase in the recall rate of class 2 and a 9.21% decrease in the recall rate of class 1, resulting in a 2.31% increase in the MR. Although the sample distribution caused a 1.50% decrease in the ACC and a 1.70% decrease in the MPR, the improvement in MR still led to a slight increase in MF1, and the MR and MF1 remained higher than those of the other configurations, underscoring the benefit of employing an ensemble strategy in imbalanced dataset tasks.

4.5.3. Ablation Experiments on the HotOrNot Dataset

The results of the ablation experiment on the HotOrNot dataset are presented in Table 5 and Figure 8. Specifically, in Figure 8b, the baseline model exhibited the highest misclassification probability of 39.45% for classes 1 and 2 on the validation set.

Table 5.

Results of the ablation experiment on the HotOrNot dataset.

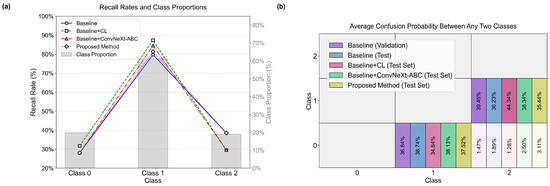

Figure 8.

Recall rates and average misclassification probabilities for each class pair in ablation experiments on HotOrNot dataset. In (a), two line charts show the recall rates of different models across various categories, while the bar chart illustrates the proportion of each category in terms of quantity. In (b), the average misclassification probabilities between two different categories are presented. Each data point represents the average probability of mutual confusion between the corresponding two categories, with darker colors indicating more severe confusion.

From Table 5, when contrastive learning alone was applied to the baseline model (Baseline+CL), the strategy enhanced recall for classes 0 and 1, with increases of 12.20% and 7.54%, respectively, but severely impaired class 2, with a 26.92% drop in recall. This imbalance resulted in a 2.40% decrease in MR, though the sample distribution contributed to gains of 1.94% in ACC, 6.07% in MPR, and 1.70% in MF1. When only the ConvNeXt-based auxiliary binary classifier was introduced (Baseline+ConvNeXt-ABC), the recall rate for class 1 increased by 4.76%, but class 2 saw an 8.97% decrease. This led to a 1.40% drop in MR, alongside a 1.21% increase in ACC and a 0.94% increase in MPR due to sample bias, while MF1 decreased by 1.08%.

In contrast, the proposed method first leverages the auxiliary binary classifier to maintain the recall rate of non-confusable class 0, then applies contrastive learning to optimize the confused classes 1 and 2, resulting in a 1.59% increase in recall for class 1 while keeping class 2’s recall stable, and leading to improvements in all key metrics: MR increases by 0.53%, ACC by 0.97%, MPR by 0.78%, and MF1 by 0.59%. This demonstrates significantly better overall performance compared to single-strategy approaches.

4.5.4. Ablation Experiment on Dataset Split Ratios

To investigate how different dataset split ratios (training set: validation set: test set) affect model performance, this paper conducted ablation experiments on the LSAFBD, MEBeauty, and HotOrNot datasets. The split ratios were set to 1:7:2, 2:6:2, 3:5:2, 4:4:2, 5:3:2, 6:2:2, and 7:1:2, respectively. Table 6 presents the detailed results, with performance metrics including ACC, MPR, MR, and MF1 employed for each dataset column.

Table 6.

Performance comparison under different dataset split ratios (training set: validation set: test set).

As listed in Table 6, model performance exhibits notable variations across different datasets when using distinct split ratios. Specifically, the LSAFBD dataset achieves optimal results with a 5:3:2 ratio, while both MEBeauty and HotOrNot perform well under the 6:2:2 split. This observation suggests that the optimal split ratio may be contingent on dataset-specific characteristics; however, a larger proportion of training data generally contributes to more robust and stable model performance. Notably, the 6:2:2 ratio features equal proportions of validation and test sets—this balance enables the validation set to more accurately mimic the test set’s distribution, thereby enhancing the reliability of performance evaluation. Consequently, the 6:2:2 ratio is selected as the experimental split parameter in this study.
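A split by ratio such as 6:2:2 can be sketched as below. This is a minimal illustration using a seeded shuffle; whether the original experiments used stratified sampling or a particular random seed is not stated, so the function name `split_by_ratio`, the seed, and the plain (non-stratified) shuffle are all assumptions.

```python
import random


def split_by_ratio(items, ratio=(6, 2, 2), seed=0):
    """Shuffle items and split them into train/validation/test parts by integer ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)   # seeded for reproducibility
    total = sum(ratio)
    n_train = len(items) * ratio[0] // total
    n_val = len(items) * ratio[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]       # remainder goes to the test part
    return train, val, test


# Hypothetical sample indices standing in for image files.
train_ids, val_ids, test_ids = split_by_ratio(range(100), ratio=(6, 2, 2))
print(len(train_ids), len(val_ids), len(test_ids))
```

For imbalanced data, applying the same split within each class separately (a stratified split) would keep the class proportions equal across the three parts.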

4.5.5. Ablation Experiment on Contrastive Loss Weight Ratios

To explore the influence of different contrastive loss weight ratios on model performance, this paper conducted ablation experiments on the three datasets LSAFBD, MEBeauty, and HotOrNot. The weight ratios were set to 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, and 0.9, respectively. Table 7 presents the detailed results.

Table 7.

Performance comparison under different contrastive loss weight ratios.

As listed in Table 7, the contrastive loss weight ratio significantly affects model performance across datasets. LSAFBD and MEBeauty both achieve their best results at a ratio of 0.6, and HotOrNot attains its best results at a ratio of 0.4. This suggests that an appropriate contrastive loss weight, typically between 0.4 and 0.6, can effectively enhance the model’s discriminative ability, while excessively high or low ratios may lead to suboptimal performance.
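The role of the weight ratio can be illustrated with a small sketch. Two assumptions are made here because this section does not give the exact formulation: the contrastive term is written as a supervised contrastive loss over L2-normalized embeddings, and the total loss is a convex combination, (1 - w) * CE + w * CL. The function names, the temperature, and the toy embeddings are hypothetical.

```python
import math


def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy for one sample."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def sup_con_loss(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss: pull same-label embeddings together."""
    z = [normalize(e) for e in embeddings]
    n = len(z)
    losses = []
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # anchors without a positive are skipped
        sims = {j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
                for j in range(n) if j != i}
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        losses.append(-sum(sims[j] - log_denom for j in positives) / len(positives))
    return sum(losses) / len(losses) if losses else 0.0


def total_loss(ce, cl, weight):
    """Assumed combination: weight is the contrastive loss weight ratio in [0, 1]."""
    return (1.0 - weight) * ce + weight * cl


# Toy 2-D embeddings: the first two and the last two point in similar directions.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
separated = sup_con_loss(toy_embeddings, [0, 0, 1, 1])
mixed = sup_con_loss(toy_embeddings, [0, 1, 0, 1])
print(separated, mixed, total_loss(cross_entropy([2.0, 0.5], 0), separated, 0.6))
```

The loss is small when same-class embeddings are already close and large when they are interleaved, so a larger weight pushes training harder toward separating the confused pair at the expense of the classification term.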

4.5.6. Ablation Experiment on Discriminator

To analyze the impact of the discriminator on model performance, this paper conducted ablation experiments on the three datasets LSAFBD, MEBeauty, and HotOrNot. For the experiments, all category pairs with an average confusion probability exceeding 10% were selected, rather than relying on the discriminator’s selection. Table 8 presents the detailed results, including the confusing category pairs, average confusion probability, and four performance metrics.

Table 8.

Performance comparison under different confusing categories in the discriminator.

As listed in Table 8, for the LSAFBD dataset, the pairing of class 2 and class 3 with a confusion probability of 26.68% achieves better performance than the pairing of class 3 and class 4 with a confusion probability of 23.18%. For the MEBeauty dataset, the pairing of class 1 and class 2 with a confusion probability of 29.98% outperforms the pairing of class 0 and class 1 with a confusion probability of 26.57%. For the HotOrNot dataset, the pairing of class 1 and class 2 with a confusion probability of 39.45% yields superior results compared to the pairing of class 0 and class 1 with a confusion probability of 36.84%. These results indicate that when optimizing on category pairs with higher average confusion probability, the corresponding model performance is generally better.

4.5.7. Ablation Experiment on Activation Function

This study takes the ConvNeXt model as the basic network architecture, and the ConvNeXt model itself claims that the GELU activation function exhibits better performance than ReLU in relevant tasks. To verify the applicability of this claim to the task of facial attribute analysis and to ensure the rationality of the activation function selection in the proposed model, ablation experiments comparing ReLU and GELU were conducted on the three datasets LSAFBD, MEBeauty, and HotOrNot. Detailed experimental results are presented in Table 9.

Table 9.

Performance comparison under different activation functions.

As shown in Table 9, the GELU activation function achieves better performance than ReLU across all three datasets and all four evaluation metrics, which is consistent with the performance claim of the ConvNeXt model. For the LSAFBD dataset, compared with ReLU (ACC of 69.98%, MPR of 73.09%, MR of 72.65%, and MF1 of 72.78%), GELU brings clear improvements: ACC increases by 2.11%, MPR by 2.15%, MR by 2.78%, and MF1 by 2.50%. On the MEBeauty dataset, GELU also shows clear advantages: ACC rises from 71.52% to 73.23% (an increase of 1.71%), MPR from 66.85% to 68.52% (1.67%), MR from 65.29% to 67.50% (2.21%), and MF1 from 65.61% to 67.61% (2.00%). Even for the HotOrNot dataset, where the overall model performance is relatively lower due to data distribution characteristics, GELU still yields consistent gains: ACC increases by 1.70%, MPR by 1.31%, MR by 0.36%, and MF1 by 0.57%.

These results indicate that, in the context of the ConvNeXt-based model used in this study, the GELU activation function, with its smooth non-linear transformation and its ability to mitigate the “dying ReLU” problem, can more effectively capture fine-grained feature information in facial data.
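The difference between the two activations can be seen directly from their definitions. The sketch below uses the exact GELU form, x * Φ(x), where Φ is the standard normal cumulative distribution function; the helper names are illustrative.

```python
import math


def relu(x):
    """ReLU: zero for all negative inputs."""
    return max(0.0, x)


def gelu(x):
    """Exact GELU: x times the standard normal CDF of x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


for value in (-3.0, -1.0, -0.1, 0.0, 0.1, 1.0, 3.0):
    print(f"x={value:+.1f}  relu={relu(value):+.4f}  gelu={gelu(value):+.4f}")
```

For negative inputs ReLU outputs exactly zero, so a unit that only receives negative pre-activations gets no gradient, whereas GELU returns a small negative value and keeps a non-zero gradient there.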

4.5.8. Ablation Experiment on Data Augmentation

Data augmentation is a widely adopted strategy to address the issue of sample imbalance in datasets. To validate the effectiveness of data augmentation in mitigating sample imbalance, this study conducted ablation experiments on different data augmentation ratios. A data augmentation ratio of 1 indicates no data augmentation, while a ratio of 10 indicates that the augmented data are 10 times the original data volume. The experiments were performed on three datasets (LSAFBD, MEBeauty, and HotOrNot), with detailed results presented in Table 10.

Table 10.

Performance comparison under different data augmentation ratios.

As shown in Table 10, the performance of the model varies under different data augmentation ratios across the three datasets. For the LSAFBD dataset, the no-augmentation setting (ratio 1) yields better results in MPR, MR, and MF1, with MPR reaching 75.24%, MR reaching 75.43%, and MF1 reaching 75.28%, which are 0.28%, 0.81%, and 0.52% higher than those of ratio 10, respectively. Only ACC shows a slight advantage at ratio 10, with a marginal increase of 0.03%.

On the MEBeauty dataset, ratio 10 performs better in ACC and MPR, with ACC at 73.45%, 0.22% higher than ratio 1, and MPR at 71.38%, 2.86% higher than ratio 1. However, the no-augmentation setting (ratio 1) leads in MR at 67.50%, 0.79% higher than ratio 10, whereas its MF1 of 67.61% is 0.45% lower than that of ratio 10.

For the HotOrNot dataset, the no-augmentation setting (ratio 1) outperforms ratio 10 in all metrics. ACC at ratio 1 is 62.62%, 0.24% higher than ratio 10; MPR is 56.39%, 1.93% higher; MR is 49.29%, 0.66% higher; and MF1 is 51.00%, 1.08% higher.

These results indicate that the impact of the data augmentation ratio on model performance is closely related to dataset characteristics. On HotOrNot and LSAFBD, the no-augmentation setting (ratio 1) is more conducive to improving the model’s overall performance, especially in terms of recall and F1-score, which are critical for addressing sample imbalance. Excessive data augmentation (ratio 10) may introduce noise or dilute the distribution characteristics of the original data, thereby limiting the model’s ability to learn effective features.

4.6. Statistical Significance Testing

To verify whether the performance improvement of the proposed method is statistically significant, this paper conducted independent t-tests based on the MR metric, comparing the proposed approach against multiple conventional methods on LSAFBD, MEBeauty, and HotOrNot. In an independent t-test, the p-value is the key indicator for evaluating the difference between two groups of data, and a p-value less than 0.05 indicates a statistically significant difference between the groups.

The p-values obtained from the MR-based independent t-tests are presented in Table 11. All p-values are below the 0.05 significance level, indicating that the MR advantage of the proposed method over the other methods on LSAFBD, MEBeauty, and HotOrNot is statistically significant.
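The test statistic behind such a comparison can be sketched as follows. This is a minimal illustration of the pooled-variance (Student) independent two-sample t-test; the MR values are hypothetical repeated-run results and are not taken from Table 11, and the function name `independent_t` is illustrative. In practice, a p-value can be obtained with `scipy.stats.ttest_ind`, which is not used here so that the sketch stays dependency-free.

```python
import math
from statistics import mean, variance


def independent_t(a, b):
    """Pooled-variance two-sample t statistic and its degrees of freedom."""
    na, nb = len(a), len(b)
    dof = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / dof
    std_err = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / std_err, dof


# Hypothetical MR values (%) from five repeated runs of two methods.
proposed_runs = [75.1, 75.4, 75.6, 75.3, 75.5]
baseline_runs = [73.4, 73.7, 73.5, 73.8, 73.6]

t_stat, dof = independent_t(proposed_runs, baseline_runs)
# For dof = 8, the two-sided critical value at the 0.05 level is about 2.306.
print(f"t = {t_stat:.2f}, dof = {dof}, significant = {abs(t_stat) > 2.306}")
```

The test needs several independent MR measurements per method (for example, repeated runs with different seeds); a single MR value per method is not enough to estimate the variances.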

Table 11.

The p-values based on MR for the proposed method compared with classical models on LSAFBD, MEBeauty, and HotOrNot datasets.

4.7. Comparison with Other Facial Beauty Prediction Methods

The proposed method enhances MR while indirectly affecting other evaluation metrics. To validate its performance, we carried out comparison experiments on the LSAFBD dataset against state-of-the-art methods. The results are listed in Table 12. TransBLS-H [4] is an enhanced adaptive Transformer model that uses global and local multi-head self-attention, significantly improving the FBP performance across datasets of varying sizes. The SDB-BLS models [39] address FBP’s limitation of single feature extraction by combining dual-branch feature fusion with broad learning systems. The multi-task learning model [40] uses an adaptive sharing policy and attention feature fusion to address the underutilization of labeled information. The MSCD-MAE method [41] combines a masked autoencoder based on a multi-scale convolution strategy with knowledge distillation for FBP; it addresses the shortage of labeled data by enhancing feature extraction with the self-supervised masked autoencoder and compresses the model through distillation, improving prediction accuracy.

Table 12.

Comparison of the proposed method with existing facial beauty prediction methods.

As listed in Table 12, the proposed method achieved an ACC of 72.09%, MPR of 75.24%, MR of 75.43%, and MF1 of 75.28% on the LSAFBD dataset, outperforming other methods. The experimental results demonstrate that the proposed method significantly enhanced the model’s overall classification ability while effectively improving multiple evaluation metrics, enabling it to excel in the facial beauty prediction task.

5. Conclusions

The experimental results demonstrate that the ACC is significantly affected by sample imbalances and does not always align with the MR. The MR, by averaging the recall rates across all the classes, is unaffected by the sample distribution, providing a fairer representation of the model’s classification ability for each class. Therefore, a strategy prioritizing MR improvement while synergistically enhancing the ACC is more scientifically sound. The proposed ECL-ConvNeXt method achieved a high MR in the facial beauty prediction task, fully validating the effectiveness of the ensemble strategy and contrastive learning. Specifically, on the LSAFBD dataset, it reaches 72.09% ACC, 75.24% MPR, 75.43% MR, and 75.28% MF1; on the MEBeauty dataset, 73.23% ACC, 68.52% MPR, 67.50% MR, and 67.61% MF1; on the HotOrNot dataset, 62.62% ACC, 56.39% MPR, 49.29% MR, and 51.00% MF1. By combining the ConvNeXt primary model with CL and a ConvNeXt-based auxiliary binary classifier, this method significantly enhanced the model’s performance on unbalanced multi-class datasets and shows strong applicability to other similar image categorization tasks. However, it is worth noting that when severe confusion occurs between multiple class pairs—especially when these pairs share overlapping classes—the proposed model faces limitations: the overlapping classes prevent the simultaneous deployment of multiple auxiliary binary classifiers, a phenomenon consistently observed across all such severely confused pairs during experiments. Additionally, the base model adopted in the current method only prioritizes classification performance, with no consideration given to model lightweighting, which may restrict its application in resource-constrained scenarios. Thus, future work will focus on addressing the aforementioned limitations, including resolving the conflict of overlapping classes in severely confused multi-class pairs and exploring lightweight optimization for the base model.

Author Contributions

Conceptualization, J.G. and W.X.; methodology, J.G. and W.X.; software, W.X., H.C. and Z.C.; validation, W.X., H.C., Z.C. and Z.Z.; formal analysis, J.G. and Z.C.; investigation, J.G., Z.Z. and H.L.; resources, J.G.; data curation, W.X. and H.C.; writing—original draft preparation, J.G., W.X. and Z.Z.; writing—review and editing, J.G., W.X., H.C., Z.C., Z.Z. and H.L.; visualization, H.C.; supervision, J.G.; project administration, W.X. and H.L.; funding acquisition, J.G. All authors have read and agreed to the published version of the manuscript.

Funding

The present research received support from the National Natural Science Foundation of China Grant No. 61771347.

Informed Consent Statement

Not applicable.

Data Availability Statement

MEBeauty: The dataset employed in this work is openly accessible: https://github.com/fbplab/MEBeauty-database (accessed on 3 September 2025). HotOrNot: The dataset utilized in this research is publicly available: https://www.researchgate.net/publication/261595808_Female_Facial_Beauty_Dataset_ECCV2010_v10 (accessed on 3 September 2025). LSAFBD: The data used in this research can be obtained upon request from the corresponding author. The data are not openly accessible owing to privacy concerns.

Acknowledgments

The numerical computations in this paper were supported by the Wuyi University High-Performance Computing Center.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| FBP | Facial beauty prediction |
| ACC | Accuracy |
| MR | Macro Recall |
| MPR | Macro Precision |
| MF1 | Macro F1-score |
| CL | Contrastive learning |
| ConvNeXtCL-ABC | A dedicated auxiliary binary classifier based on ConvNeXt |
| ViT | Vision Transformer |
| ABC | An auxiliary binary classifier model |
| GELU | Gaussian Error Linear Unit |
| LN | Layer normalization |
| Stem | An initial feature extraction layer |
| Stages | Feature extraction stages |
| DownsamplingLayer | Downsampling layers |
| Head | A classification head |
| Block | Residual block |
| LSAFBD | The Large-Scale Asia Facial Beauty Database |
| Confusion Probability | Average Confusion Probability Between Any Two Classes |
| TP | True Positives |
| FN | False Negatives |
| FP | False Positives |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).