1. Introduction

The Internet of Things (IoT) has woven a digital fabric into our daily lives, connecting billions of devices, from smart speakers and home appliances to wearables and in-car infotainment systems [1]. These platforms generate an unprecedented volume of user feedback, including product ratings, voice commands, and textual reviews, which is a goldmine for improving user experience and service quality [2]. However, the value of this data is critically undermined by a trust crisis fueled by the proliferation of deceptive content, particularly fake reviews [3,4]. Malicious actors exploit these channels to artificially boost or damage product reputations, eroding consumer trust and disrupting fair market dynamics [5].

Detecting fake reviews in the IoT ecosystem presents a unique and formidable set of challenges that transcend those of traditional web platforms [

6,

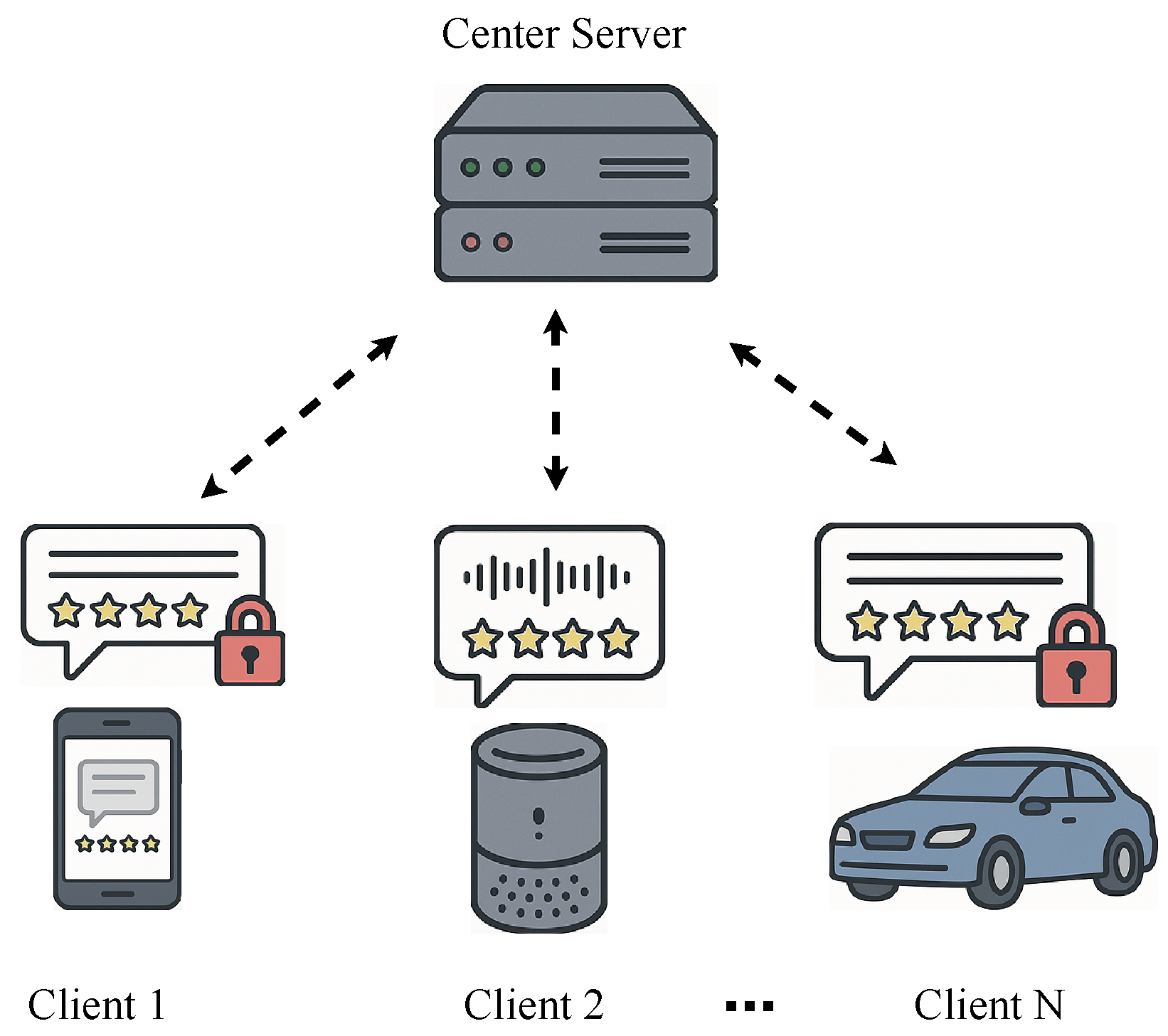

7]. As depicted in

Figure 1, review data is not centralized but is naturally siloed across a multitude of heterogeneous IoT platforms. A user might review a service via a voice command on a smart speaker, a text entry on a smart TV, or a rating on a companion mobile app. This leads to three primary challenges. First, data silos and privacy: User data is distributed and often contains sensitive personal information. Centralizing this data for analysis is often infeasible due to privacy regulations like GDPR and CCPA, as well as prohibitive communication costs. Second, data heterogeneity: The data is Non-IID (Non-Independent and Identically Distributed). Different devices generate data with varying statistical properties, modalities (text, audio, ratings), and, critically, varying quality. Some clients may be sources of high-fidelity data, while others might unknowingly or maliciously contribute low-quality or poisoned data. Third, system heterogeneity: The IoT devices themselves possess disparate computational capabilities, network connectivity, and power constraints. A robust solution must be efficient and adaptable to this diverse hardware landscape.

Federated learning (FL) has emerged as a promising paradigm to address the data silo and privacy challenge by enabling collaborative model training on decentralized data [8,9]. However, standard FL algorithms such as FedAvg are vulnerable to the “garbage-in, garbage-out” problem: they weight clients only by data quantity and treat all data as equally trustworthy, allowing low-quality or malicious data to significantly degrade the global model’s performance [10,11]. This is particularly detrimental in fake review detection, where adversarial actors may actively try to poison the training process [12,13].

Recent advances in federated learning have attempted to address these challenges through various approaches. Personalized federated learning methods [14,15] handle data heterogeneity by allowing clients to maintain personalized models while still benefiting from collaborative training. Robust aggregation techniques [16,17] have been developed to mitigate the impact of malicious clients. However, these approaches often lack the fine-grained data quality assessment needed for effective fake review detection in IoT environments [18,19].

To overcome these limitations, this paper introduces FedDQ: a Privacy-Preserving Federated Review Analytics framework with Data Quality Optimization. FedDQ is specifically designed for the complexities of the IoT environment. Instead of naively aggregating model updates, it empowers a central server to intelligently orchestrate the learning process based on a fine-grained understanding of each client’s data quality, without ever accessing the raw data itself.

The main contributions of this work are as follows:

A Data Quality-Aware FL Framework with Multi-modal Support: We design and implement FedDQ, an FL framework that integrates a comprehensive data quality assessment mechanism. The framework quantifies the reliability of each client’s data locally and uses this metric to perform a weighted global aggregation, significantly enhancing model accuracy and robustness. The framework is inherently multi-modal, capable of processing text, images, and metadata for a more holistic analysis.

Multi-faceted Data Quality Modeling: We propose a multi-faceted data quality score that captures label confidence, textual coherence, behavioral anomalies, and cross-modal consistency. This provides a more holistic assessment of data trustworthiness than text-only approaches.

Heterogeneity-Aware Design with Privacy Guarantees: FedDQ is tailored for heterogeneous IoT environments by considering device capabilities in its training protocol. We integrate differential privacy into the framework to provide formal privacy guarantees for both client data and the calculated quality scores, defending against inference attacks.

Extensive Empirical Validation: We conduct comprehensive experiments simulating various IoT scenarios, including Non-IID data distributions, system heterogeneity, and adversarial attacks. Our results demonstrate that FedDQ consistently and significantly outperforms state-of-the-art FL baselines in fake review detection.

2. Related Work

Our research builds upon three key areas: fake review detection, federated learning in IoT, and data quality management in distributed systems.

2.1. Fake Review Detection

Early research on fake review detection relied heavily on feature engineering, extracting linguistic features (e.g., n-grams, sentiment polarity) and behavioral features (e.g., review burstiness, reviewer history) to train classical machine learning models such as SVM or Logistic Regression [20]. While effective to a degree, these methods struggle with the subtlety and evolving nature of deceptive text.

The advent of deep learning revolutionized the field [21,22]. Models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) were applied to capture more complex textual patterns [23,24]. More recently, large pre-trained language models such as BERT have set new benchmarks by providing rich, contextualized text representations [25,26]. Transformer-based architectures have shown particular promise in detecting subtle linguistic cues that indicate deceptive content [27].

Advanced neural architectures have been developed specifically for fake review detection. Graph neural networks (GNNs) have been employed to model reviewer-product relationships and detect coordinated attacks [28]. Attention mechanisms have been integrated to focus on the most discriminative parts of review text [29]. Multi-task learning approaches have been proposed to jointly detect fake reviews and predict review helpfulness [30].

Some works have also explored multi-modal detection, incorporating metadata or user profile images alongside text to improve accuracy [31], a direction we also pursue. Recent studies have investigated stylometric features and writing style analysis for fake review detection [32,33]. However, nearly all of these approaches assume centralized access to a large, clean dataset, which is unrealistic in the modern IoT landscape.

2.2. Federated Learning in IoT

Federated learning was introduced to train models on data distributed across a large number of devices, such as mobile phones, without data centralization [34]. Its applications in IoT are growing rapidly, spanning areas such as healthcare, smart cities, and industrial IoT [35,36].

Several challenges unique to IoT have been addressed [37]. To handle statistical heterogeneity (Non-IID data), FedProx adds a proximal term to the local objective function that restricts local updates from deviating too far from the global model [38]. Clustered federated learning approaches have been developed to group clients with similar data distributions [39]. To address system heterogeneity, efforts have focused on asynchronous updates or client selection strategies that favor devices with better resources [40,41].
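To make the proximal term concrete, the following is a minimal sketch of a FedProx-style gradient step on the local objective $F_k(w) + \frac{\mu}{2}\lVert w - w^t \rVert^2$, using plain Python lists and a toy quadratic loss. The function names and the toy loss are illustrative assumptions, not taken from FedProx’s reference implementation.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def fedprox_local_step(
    w: Vector,
    w_global: Vector,
    grad_fn: Callable[[Vector], Vector],
    lr: float,
    mu: float,
) -> Vector:
    """One gradient step on F_k(w) + (mu / 2) * ||w - w_global||^2.

    The proximal term adds mu * (w - w_global) to the local gradient,
    pulling the local model back toward the current global model.
    """
    grad = grad_fn(w)
    return [wi - lr * (gi + mu * (wi - gwi)) for wi, gi, gwi in zip(w, grad, w_global)]


def toy_grad(w: Vector, target: Sequence[float] = (3.0, -1.0)) -> Vector:
    """Gradient of the toy local loss 0.5 * ||w - target||^2 (hypothetical)."""
    return [wi - ti for wi, ti in zip(w, target)]


w_global = [0.0, 0.0]
w_plain, w_prox = list(w_global), list(w_global)
for _ in range(200):
    w_plain = fedprox_local_step(w_plain, w_global, toy_grad, lr=0.1, mu=0.0)
    w_prox = fedprox_local_step(w_prox, w_global, toy_grad, lr=0.1, mu=1.0)
print(w_plain)  # close to the local optimum [3, -1]
print(w_prox)   # close to [1.5, -0.5]: with mu = 1 the drift from w_global is halved
```

With $\mu = 0$ the step reduces to the plain local SGD used by FedAvg; larger $\mu$ keeps local models closer to the global model.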

Recent advances in federated optimization have introduced adaptive learning rate schedules and momentum-based methods to improve convergence in heterogeneous environments [42]. Communication-efficient techniques, such as gradient compression and local updating strategies, have been developed to reduce the communication overhead on resource-constrained IoT devices [43]. Our work incorporates these considerations but argues that they are insufficient without also addressing the fundamental issue of data quality.

2.3. Data Quality and Robustness in Federated Learning

The performance of FL is highly sensitive to the quality of client data [11]. A few malicious or low-quality clients can poison the global model or slow down convergence [44]. This has spurred research into robust FL.

Some approaches focus on robust aggregation rules at the server, such as using the median or trimmed mean instead of a weighted average to filter out outlier updates [45,46]. Others measure client contributions or reputations to down-weight malicious participants [47,48]. For instance, [49] proposes a reputation-based mechanism in which clients that consistently submit updates disagreeing with the majority are penalized.
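For contrast with data-quality weighting, here is a minimal sketch of the two statistics-based robust aggregators mentioned above, coordinate-wise median and coordinate-wise trimmed mean. It assumes each client update is a flat list of floats of equal length; the function names are illustrative and not from a specific FL library.

```python
import statistics
from typing import List

Vector = List[float]


def coordinate_median(updates: List[Vector]) -> Vector:
    """Coordinate-wise median of client updates."""
    return [statistics.median(coords) for coords in zip(*updates)]


def trimmed_mean(updates: List[Vector], trim: int) -> Vector:
    """Coordinate-wise trimmed mean: drop the `trim` largest and `trim` smallest values."""
    if 2 * trim >= len(updates):
        raise ValueError("trim must be smaller than half the number of updates")
    result = []
    for coords in zip(*updates):
        kept = sorted(coords)[trim:len(coords) - trim]
        result.append(sum(kept) / len(kept))
    return result


# Three benign clients and one outlier.
updates = [[1.0, 2.0], [1.1, 2.1], [0.9, 1.9], [50.0, -40.0]]
plain_mean = [sum(c) / len(c) for c in zip(*updates)]
print(plain_mean)                     # dragged far off by the outlier
print(coordinate_median(updates))     # about [1.05, 1.95]
print(trimmed_mean(updates, trim=1))  # about [1.05, 1.95]
```

Both rules look only at the statistics of the updates themselves, which is the reactive behavior that FedDQ’s data-level quality score is meant to complement.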

Byzantine-robust federated learning has gained significant attention, with methods designed to handle arbitrary malicious behavior [50]. Secure aggregation protocols have been developed to protect against inference attacks while maintaining model utility [51,52]. Blockchain-based approaches have been proposed to ensure the integrity and traceability of federated learning processes [53]. Unlike these methods, which are often reactive or rely on statistical properties of the model updates, FedDQ takes a proactive approach by directly assessing the quality of the underlying data using a multi-faceted, interpretable metric.

Differential Privacy (DP) is a standard technique that provides formal privacy guarantees by injecting calibrated noise into model updates [54,55]. This can also incidentally improve robustness against some attacks but often comes at the cost of reduced model accuracy [56]. Recent work has focused on developing more sophisticated noise mechanisms that better preserve utility while maintaining privacy [57].

Our work, FedDQ, distinguishes itself from prior art by proposing a proactive and multi-faceted data quality metric that is computed locally and used to guide the entire learning process. Unlike reputation systems, which are reactive, our method assesses data quality directly. Unlike simple robust aggregators, our quality score is more fine-grained and interpretable. A comparative summary of existing federated analytics methods and the key advantages of FedDQ is provided in Table 1.

3. System Model and Problem Formulation

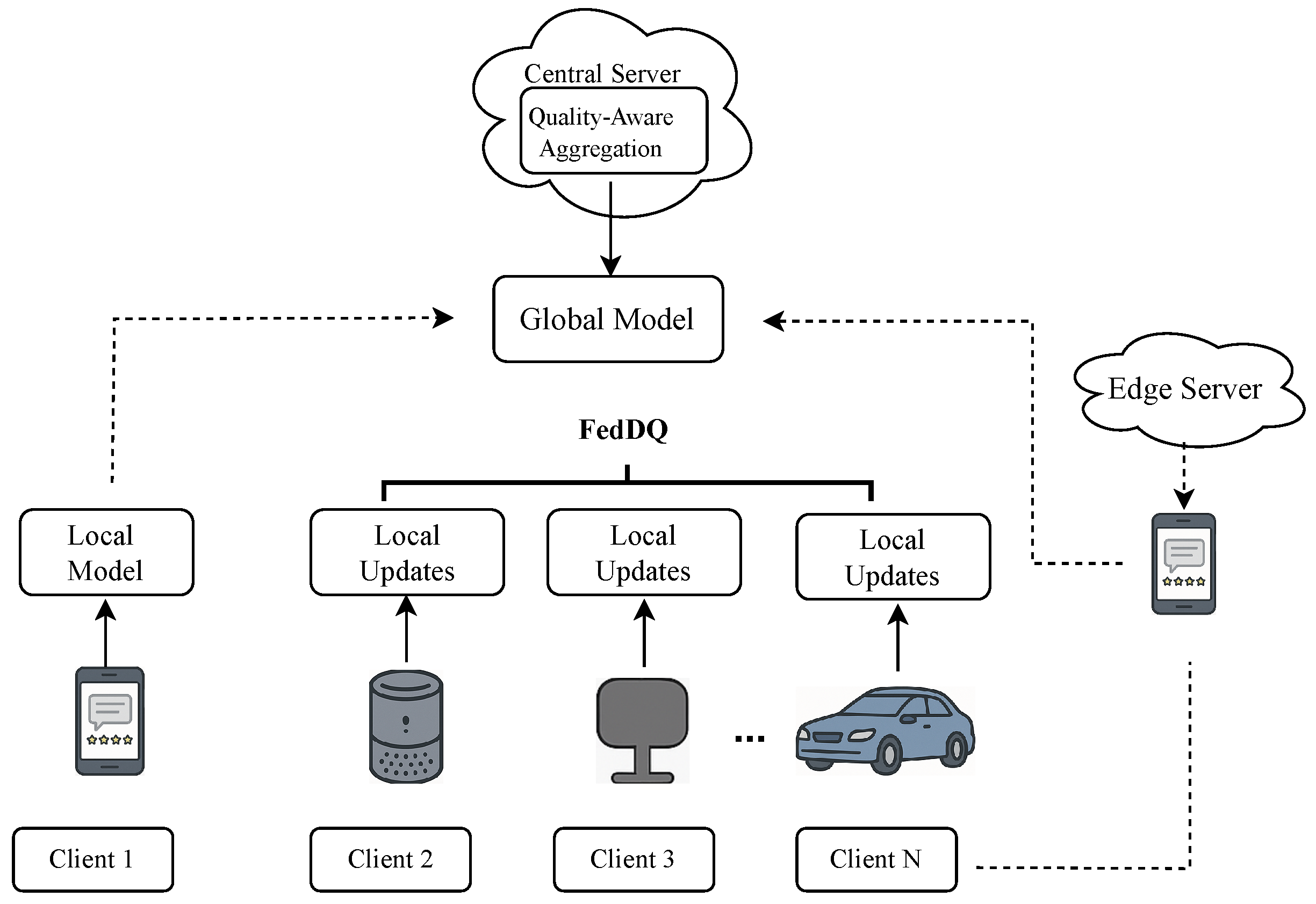

We consider a federated learning system for review analytics deployed across a heterogeneous IoT network, as illustrated in Figure 2.

3.1. System Architecture

The system consists of three main entities.

First, the central server orchestrates the FL process. It is trusted to execute the protocol faithfully (its privacy-relevant behavior is specified in the threat model in Section 3.2). It initializes the global model, aggregates client updates, and sends the updated model back to the clients. It does not have access to any raw client data.

Second, edge servers act as intermediaries in large-scale IoT deployments, managing clusters of clients such as those within a smart home or vehicle. They can perform partial model aggregation to reduce the communication load on the central server. While our primary model focuses on a two-tier client–server architecture, the framework is extensible to a hierarchical structure.

Third, the system includes a large set of heterogeneous clients, denoted by $\mathcal{K} = \{1, \dots, K\}$, where each client $k$ owns a local dataset $D_k$ of review data. These clients are heterogeneous in two key aspects. Regarding data heterogeneity, the local datasets are Non-IID: the dataset size $n_k = |D_k|$, the class distribution (fake vs. genuine reviews), and the data quality vary significantly across clients. Each data point in $D_k$ can be multi-modal, represented as $(x, m, y)$, where $x$ is the review text, $m$ is the associated metadata (e.g., image, timestamp), and $y$ is the label (fake or genuine). Regarding system heterogeneity, clients differ in hardware capabilities such as CPU and memory, power availability (e.g., battery-powered vs. plugged-in), and network connectivity (e.g., Wi-Fi vs. cellular).

3.2. Threat Model

We adopt a standard threat model in federated learning.

First, the central server is assumed to be honest but curious. It faithfully executes the aggregation protocol but may attempt to infer information about a client’s private data from the received model updates. Our goal is to protect against such inference attacks.

Second, a subset of clients may be malicious. Their goal is to disrupt the training process or degrade the final model’s performance. This can be achieved through data poisoning, by injecting mislabeled or fake data into their local dataset, or through model poisoning, by sending maliciously crafted model updates. A particularly insidious attack involves malicious clients fabricating a high quality score $Q_k$ to increase the impact of their poisoned updates. We assume that the proportion of malicious clients is bounded, consistent with prior work on robust FL [45,50].

3.3. Problem Formulation

The objective of the system is to collaboratively train a global model with parameters $w$ that can accurately classify reviews as fake or genuine. The global optimization problem is to minimize a loss function $F(w)$:

$$\min_{w} F(w) = \sum_{k=1}^{K} p_k F_k(w), \tag{1}$$

where $p_k \ge 0$ is the weight of client $k$, and $F_k(w)$ is the local loss function for client $k$ on its data $D_k$:

$$F_k(w) = \frac{1}{n_k} \sum_{(x, m, y) \in D_k} \ell\big(f(x, m; w), y\big). \tag{2}$$

Here, $f(x, m; w)$ is the prediction of the model with parameters $w$ for a multi-modal input $(x, m)$, and $\ell$ is a suitable loss function (e.g., cross-entropy).

Traditional FedAvg solves this problem by having clients run several local SGD steps and then averaging the resulting models with weights $p_k = n_k / \sum_j n_j$. Our work contends that the client weight should depend not only on data quantity $n_k$ but also on data quality. We reformulate the aggregation step to incorporate a data quality score, as detailed in the next section.

4. The FedDQ Framework Methodology

The core of our FedDQ framework lies in its ability to assess data quality locally and leverage this information for robust global model aggregation. This section details the four key components: multi-modal review encoding, the data quality score computation, the quality-aware aggregation strategy, and heterogeneity-aware local training.

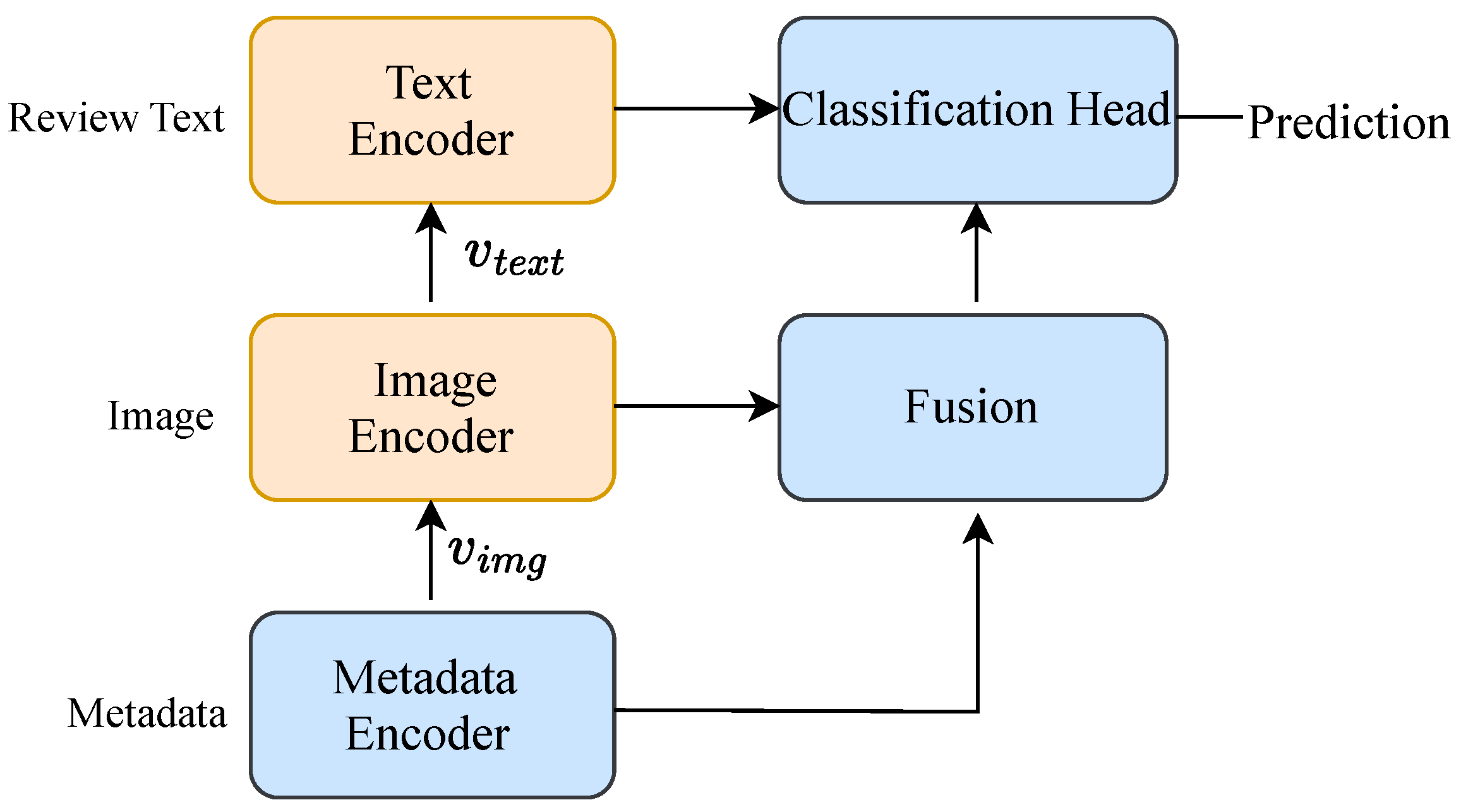

4.1. Multi-Modal Review Encoding

To capture the rich information in IoT reviews, we employ a multi-modal feature extraction model that processes text, images, and metadata. As shown in Figure 3, for a given review sample $(x, m)$, the text encoder first processes the review text $x$ using a pre-trained language model. Specifically, we adopt DistilBERT [64], a distilled version of BERT, to encode the text into a high-dimensional vector $h_x$, which captures deep contextual and semantic features indicative of deception.

If an image $v$ is associated with the review, the image encoder employs MobileNetV2 [65], a lightweight convolutional neural network suitable for resource-constrained devices, to extract an image feature vector $h_v$.

For other metadata (such as the review timestamp, rating, and reviewer’s tenure), we concatenate the features and feed them through a small multi-layer perceptron (MLP) to obtain a metadata feature vector $h_m$.

These feature vectors are then concatenated into $h = [h_x; h_v; h_m]$ and passed through a final classification head (another MLP) to produce the prediction. The parameters of this entire network constitute the model parameters $w$. For clients with missing modalities (e.g., no images), the corresponding feature vectors are filled with zeros, and the classification head is trained to be robust to these missing inputs.
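The zero-filling rule for missing modalities can be sketched as follows. This is a minimal illustration, assuming the text, image, and metadata vectors (called `h_text`, `h_image`, `h_meta` here) have already been produced by their encoders; the dimensions are toy values, and the real encoders and classification head are omitted.

```python
from typing import List, Optional

Vector = List[float]


def fuse_features(
    h_text: Vector,
    h_image: Optional[Vector],
    h_meta: Optional[Vector],
    image_dim: int,
    meta_dim: int,
) -> Vector:
    """Concatenate [h_text; h_image; h_meta], replacing a missing modality with zeros.

    The fixed output length lets one classification head serve clients
    with and without images or metadata.
    """
    if h_image is None:
        h_image = [0.0] * image_dim
    if h_meta is None:
        h_meta = [0.0] * meta_dim
    if len(h_image) != image_dim or len(h_meta) != meta_dim:
        raise ValueError("feature vector has unexpected dimension")
    return list(h_text) + list(h_image) + list(h_meta)


# Toy dimensions. With standard configurations, the DistilBERT hidden size is 768
# and MobileNetV2's pooled feature vector has 1280 dimensions.
fused = fuse_features([0.1, 0.2], None, [1.0, 0.0, 5.0], image_dim=2, meta_dim=3)
print(fused)  # [0.1, 0.2, 0.0, 0.0, 1.0, 0.0, 5.0]
```

Keeping the fused length fixed is what allows text-only and multi-modal clients to share a single set of model parameters in federated aggregation.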

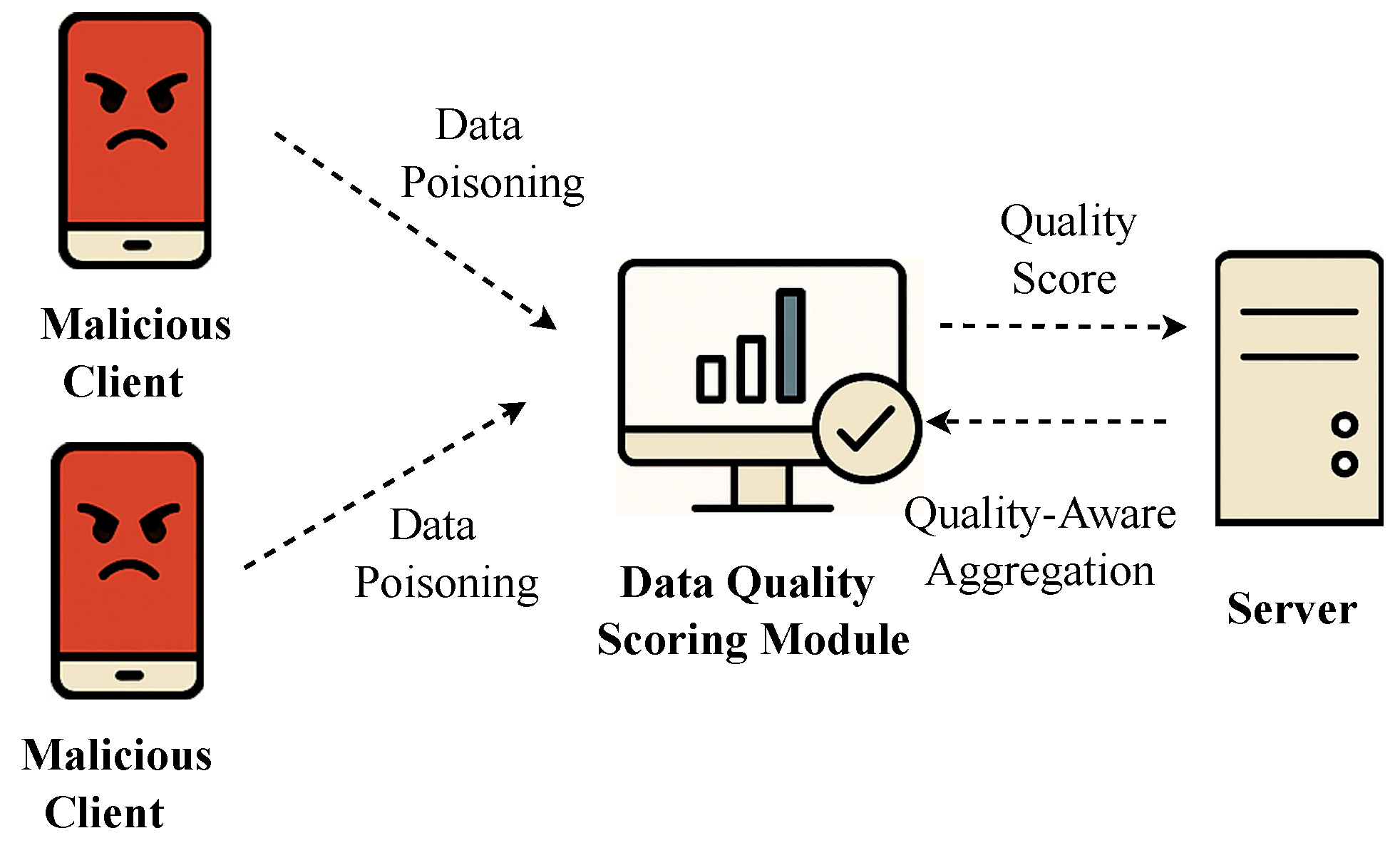

4.2. Data Quality Score Computation

The data quality score is the central innovation of FedDQ. After local training, but before sending its update, each client $k$ computes a data quality score $Q_k$ for its dataset $D_k$. This score is a weighted combination of multiple sub-metrics, designed to be computed locally without revealing private data (Figure 4):

$$Q_k = \alpha_1 Q_k^{\text{conf}} + \alpha_2 Q_k^{\text{text}} + \alpha_3 Q_k^{\text{behav}} + \alpha_4 Q_k^{\text{cross}}, \tag{3}$$

where $\alpha_1, \dots, \alpha_4 \ge 0$, with $\sum_i \alpha_i = 1$, are hyperparameters balancing the importance of each metric. In our experiments, we set the weights uniformly ($\alpha_i = 0.25$) to demonstrate the general applicability of the combined score without domain-specific tuning, though these can be optimized on a validation set if available.
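The weighted combination of sub-metrics can be sketched in a few lines. This assumes each sub-score has already been mapped to $[0, 1]$ with higher meaning better (so the behavioral term is a normality score rather than a raw anomaly score); the dictionary keys are hypothetical names chosen for this sketch.

```python
from typing import Dict, Optional

SUB_METRICS = ("conf", "text", "behav", "cross")


def combine_quality(sub_scores: Dict[str, float],
                    weights: Optional[Dict[str, float]] = None) -> float:
    """Q_k = sum_i alpha_i * Q_k^(i), with uniform alpha_i = 0.25 by default.

    Each sub-score is clipped to [0, 1]; because the weights sum to 1,
    the combined score also lies in [0, 1].
    """
    if weights is None:
        weights = {name: 1.0 / len(SUB_METRICS) for name in SUB_METRICS}
    if abs(sum(weights[name] for name in SUB_METRICS) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * min(1.0, max(0.0, sub_scores[name])) for name in SUB_METRICS)


q = combine_quality({"conf": 0.9, "text": 0.8, "behav": 0.7, "cross": 0.6})
print(round(q, 4))  # 0.75
```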

Label confidence ($Q_k^{\text{conf}}$) measures the consistency between the provided labels and the model’s own predictions on the local data. A high degree of disagreement may indicate mislabeled data. We can estimate this using the entropy of the model’s predictions on the local training set; lower entropy suggests higher confidence.
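The entropy-based estimate of label confidence can be sketched as one minus the mean normalized predictive entropy. This is a minimal sketch under that assumption; it implements only the entropy term described above, and combining it with the agreement rate between predicted and provided labels would be a natural extension that is not shown.

```python
import math
from typing import Sequence


def label_confidence(prob_rows: Sequence[Sequence[float]]) -> float:
    """1 - mean normalized predictive entropy on the local training set.

    Each row holds the model's class probabilities for one sample.
    Entropy is divided by log(C) so that it lies in [0, 1] for C classes.
    """
    if not prob_rows:
        raise ValueError("need at least one prediction")
    total = 0.0
    for probs in prob_rows:
        entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
        total += entropy / math.log(len(probs))
    return 1.0 - total / len(prob_rows)


confident = [[0.99, 0.01], [0.02, 0.98]]
uncertain = [[0.5, 0.5], [0.6, 0.4]]
print(label_confidence(confident) > label_confidence(uncertain))  # True
```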

Textual quality ($Q_k^{\text{text}}$) assesses the linguistic quality of the review texts. This can be a combination of metrics such as text length (very short or very long reviews are suspicious), perplexity from a small language model (high perplexity indicates gibberish), and repetition scores.
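Two of the cheap textual heuristics above, length and repetition, can be sketched as follows. The word-count bounds and the equal 0.5/0.5 weighting are hypothetical choices for illustration; the perplexity term would require a small language model and is omitted.

```python
from typing import Sequence


def textual_quality(texts: Sequence[str], min_words: int = 5, max_words: int = 400) -> float:
    """Average per-review text score in [0, 1] from two cheap heuristics.

    - length: 1 if the word count is within [min_words, max_words], else 0
    - uniqueness: distinct tokens / total tokens (low values mean heavy repetition)
    """
    if not texts:
        return 0.0
    scores = []
    for text in texts:
        tokens = text.lower().split()
        if not tokens:
            scores.append(0.0)
            continue
        length_ok = 1.0 if min_words <= len(tokens) <= max_words else 0.0
        uniqueness = len(set(tokens)) / len(tokens)
        scores.append(0.5 * length_ok + 0.5 * uniqueness)
    return sum(scores) / len(scores)


print(textual_quality(["The battery easily lasts two full days"]))  # 1.0
print(textual_quality(["great great great great great great"]))     # about 0.58
```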

Behavioral anomaly ($Q_k^{\text{behav}}$) captures suspicious reviewer patterns from metadata. Metrics include review burstiness (i.e., many reviews in a short time) and rating deviation (i.e., a reviewer’s ratings are consistently extreme compared with the average). This is calculated from the statistics of the local dataset $D_k$.
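A minimal sketch of the behavioral metric, assuming Unix-second timestamps, a 1 to 5 star rating scale, and hypothetical thresholds (`window`, `burst_size`, `reference_mean`). It returns one minus an anomaly score so that, like the other sub-metrics, higher values mean more trustworthy data.

```python
from typing import Sequence


def behavioral_quality(
    timestamps: Sequence[float],
    ratings: Sequence[float],
    reference_mean: float = 3.0,
    window: float = 3600.0,
    burst_size: int = 5,
) -> float:
    """Return 1 - anomaly, so higher means less suspicious behavior.

    burstiness: fraction of reviews inside some run of `burst_size` reviews
                posted within `window` seconds
    deviation:  mean |rating - reference_mean|, normalized to [0, 1] on a 1-5 scale
    """
    if not timestamps or not ratings:
        return 1.0  # no evidence of anomalous behavior
    ts = sorted(timestamps)
    in_burst = set()
    for i in range(len(ts) - burst_size + 1):
        if ts[i + burst_size - 1] - ts[i] < window:
            in_burst.update(range(i, i + burst_size))
    burstiness = len(in_burst) / len(ts)
    max_dev = max(reference_mean - 1.0, 5.0 - reference_mean)
    deviation = sum(abs(r - reference_mean) for r in ratings) / (len(ratings) * max_dev)
    return 1.0 - 0.5 * (burstiness + min(deviation, 1.0))


day = 86400.0
calm = behavioral_quality([i * day for i in range(5)], [3, 4, 3, 2, 3])
spam = behavioral_quality([0, 10, 20, 30, 40], [5, 5, 5, 5, 5])
print(calm, spam)  # spread-out, moderate ratings score high; a 5-star burst scores 0
```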

Cross-modal consistency ($Q_k^{\text{cross}}$) assesses the semantic alignment between the text and the image for multi-modal data. We compute the cosine similarity between the text and image embeddings, $h_x$ and $h_v$. To ensure this comparison is meaningful, a linear projection layer is added after each encoder. These layers are pre-trained on a public multi-modal dataset to align the embeddings into a shared latent space and are kept frozen during federated training. A low similarity score suggests that the image is irrelevant or misleading. For clients with text-only data, this score component is set to the average cross-modal score of the participating clients in the previous round (or a neutral 0.5 in the first round). This approach prevents unfairly boosting text-only clients while not penalizing them for missing a modality.
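The cross-modal score and its text-only fallback can be sketched as below. This assumes the text and image embeddings have already passed through the frozen projection layers into a shared space; mapping the mean cosine similarity from $[-1, 1]$ to $[0, 1]$ is an assumption of this sketch so that the score matches the range of the other sub-metrics.

```python
import math
from typing import Optional, Sequence, Tuple

Pair = Tuple[Sequence[float], Sequence[float]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0.0 else 0.0


def cross_modal_quality(pairs: Sequence[Pair], prev_round_avg: Optional[float] = None) -> float:
    """Mean text-image cosine similarity, mapped from [-1, 1] to [0, 1].

    `pairs` holds projected (text, image) embeddings for samples that have an image.
    Text-only clients fall back to the previous round's average score,
    or a neutral 0.5 in the first round.
    """
    if not pairs:
        return 0.5 if prev_round_avg is None else prev_round_avg
    mean_sim = sum(cosine_similarity(t, v) for t, v in pairs) / len(pairs)
    return (mean_sim + 1.0) / 2.0


print(cross_modal_quality([([0.6, 0.8], [0.8, 0.6])]))  # about 0.98: well aligned
print(cross_modal_quality([]))                          # 0.5: text-only client, first round
```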

4.3. Quality-Aware Aggregation (Q-FedAvg)

At the end of communication round $t$, each client $k$ sends its updated model parameters $w_k^{t+1}$ and its quality score $Q_k^t$ to the server. The server then performs Quality-Aware Federated Averaging (Q-FedAvg). Instead of weighting by the dataset size $n_k$ alone, we incorporate the quality score $Q_k^t$. To defend against malicious clients reporting a fraudulently high $Q_k^t$, the server performs a verification step. It maintains a small, clean validation dataset. Before the final aggregation, it provisionally tests each client’s update and penalizes clients whose updates degrade performance on this set, overriding their self-reported scores. The global model is then updated as shown in Figure 5:

$$w^{t+1} = \sum_{k \in S_t} \frac{n_k Q_k^t}{\sum_{j \in S_t} n_j Q_j^t} \, w_k^{t+1}, \tag{4}$$

where $S_t$ is the set of clients selected in round $t$ and $Q_k^t$ denotes the verified quality score.

This ensures that clients with larger, higher-quality datasets have greater influence on the global model, while contributions from clients with noisy, malicious, or low-quality data are down-weighted or filtered out.
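The verification step and the quality-weighted average in Equation (4) can be sketched together. This assumes the server has already evaluated the current global model and each provisional client model on its clean validation set; setting a penalized client’s score to zero is one possible penalty chosen for illustration, and all names are hypothetical.

```python
from typing import Dict, List

Vector = List[float]


def verify_scores(
    reported: Dict[str, float],
    base_val_loss: float,
    client_val_loss: Dict[str, float],
    tolerance: float = 0.0,
) -> Dict[str, float]:
    """Override the self-reported score with 0 for clients whose provisional
    update increases the loss on the server's clean validation set."""
    return {
        k: (0.0 if client_val_loss[k] > base_val_loss + tolerance else q)
        for k, q in reported.items()
    }


def q_fedavg(models: Dict[str, Vector], sizes: Dict[str, int], scores: Dict[str, float]) -> Vector:
    """w^{t+1} = sum_k (n_k Q_k / sum_j n_j Q_j) * w_k^{t+1}."""
    weights = {k: sizes[k] * scores[k] for k in models}
    total = sum(weights.values())
    if total <= 0.0:
        raise ValueError("all clients were down-weighted to zero")
    dim = len(next(iter(models.values())))
    return [sum(weights[k] * models[k][i] for k in models) / total for i in range(dim)]


models = {"a": [1.0, 1.0], "b": [3.0, 3.0], "mal": [100.0, -100.0]}
sizes = {"a": 100, "b": 100, "mal": 100}
reported = {"a": 0.9, "b": 0.9, "mal": 0.95}  # the attacker inflates its score
scores = verify_scores(reported, base_val_loss=0.50,
                       client_val_loss={"a": 0.45, "b": 0.48, "mal": 0.90})
print(q_fedavg(models, sizes, scores))  # about [2.0, 2.0]; the attacker gets weight 0
```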

4.4. Heterogeneity-Aware Local Training

To accommodate system heterogeneity, FedDQ allows for a dynamic adjustment of local computational effort. Clients with higher computational power and better network conditions can be assigned more local training epochs ($E_k$) per round. Furthermore, there is a synergy with data quality: we can assign fewer epochs to clients with low data quality scores ($Q_k$) to prevent them from overfitting on their noisy data. A simple policy could be as follows:

$$E_k = \left\lceil E_{\text{base}} \cdot c_k \cdot Q_k \right\rceil,$$

where $E_{\text{base}}$ is a base number of epochs, and $c_k \in [0, 1]$ is a normalized score representing client $k$’s computational capability. This adaptive approach improves overall efficiency and robustness.
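One simple instance of such a policy scales a base epoch count by both the normalized capability score and the quality score. The sketch below assumes that multiplicative form; the lower bound `e_min`, which keeps every selected client training for at least one epoch, is an added assumption and not part of the policy described above.

```python
import math


def local_epochs(e_base: int, capability: float, quality: float, e_min: int = 1) -> int:
    """E_k = ceil(E_base * c_k * Q_k), floored at e_min (the floor is an added assumption).

    capability (c_k) and quality (Q_k) are expected in [0, 1] and are clipped to it.
    """
    c = min(1.0, max(0.0, capability))
    q = min(1.0, max(0.0, quality))
    return max(e_min, math.ceil(e_base * c * q))


print(local_epochs(5, capability=1.0, quality=1.0))   # 5: strong device, clean data
print(local_epochs(10, capability=0.8, quality=0.9))  # 8
print(local_epochs(5, capability=0.5, quality=0.2))   # 1: weak device, noisy data
```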

The complete FedDQ algorithm is outlined in Algorithm 1.

| Algorithm 1 The FedDQ Algorithm |
|---|
| 1: Server executes: |
| 2: Initialize global model $w^0$ |
| 3: for each communication round $t = 0, 1, \dots, T-1$ do |
| 4: Select a subset of clients $S_t \subseteq \mathcal{K}$ |
| 5: for each client $k \in S_t$ in parallel do |
| 6: $(w_k^{t+1}, Q_k^t) \leftarrow \mathrm{ClientUpdate}(k, w^t)$ |
| 7: end for |
| 8: Verify client-reported scores using a server-side validation set; adjust scores for malicious clients. |
| 9: Aggregate updates using Q-FedAvg (Equation (4)): |
| 10: $w^{t+1} \leftarrow \sum_{k \in S_t} \frac{n_k Q_k^t}{\sum_{j \in S_t} n_j Q_j^t} w_k^{t+1}$ |
| 11: end for |
| 12: Return $w^T$ |
| 13: procedure ClientUpdate($k, w$) |
| 14: Download $w$ from server |
| 15: Determine local epochs $E_k$ based on resources and quality |
| 16: for each local epoch $e = 1, \dots, E_k$ do |
| 17: Train on local data $D_k$ to update model parameters $w_k$ |
| 18: end for |
| 19: Compute local data quality score $Q_k$ |
| 20: Apply privacy mechanism (e.g., DP) to $w_k$ and $Q_k$ |
| 21: Return updated $w_k$ and score $Q_k$ to server |
| 22: end procedure |

5. Security and Privacy Design

Ensuring security and privacy is paramount in FedDQ. We address this through two primary mechanisms: providing formal privacy guarantees using Differential Privacy and designing resilience against adversarial attacks.

5.1. Differential Privacy for Data and Quality Scores

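To protect against inference attacks by the honest-but-curious server, we apply $(\epsilon, \delta)$-differential privacy (DP). DP ensures that adding or removing a single unit of data has only a small, bounded effect (controlled by $\epsilon$ and $\delta$) on the distribution of the output; under the client-level DP adopted below, that unit is a client’s entire local dataset rather than a single data point.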

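For model updates, we adopt client-level DP. Before uploading its model update $\Delta_k = w_k^{t+1} - w^t$, client $k$ first clips the update’s L2 norm to a threshold $C$ and then adds Gaussian noise with standard deviation $\sigma C$:

$$\tilde{\Delta}_k = \frac{\Delta_k}{\max\left(1, \lVert \Delta_k \rVert_2 / C\right)} + \mathcal{N}\left(0, \sigma^2 C^2 \mathbf{I}\right).$$

The noise multiplier $\sigma$ is chosen to satisfy a predefined privacy budget $(\epsilon, \delta)$.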
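The clip-then-noise step for a single client update can be sketched as follows, treating the update as a flat list of floats. The clipping threshold and noise multiplier here are illustrative; calibrating the noise multiplier to a target $(\epsilon, \delta)$ over many rounds requires a privacy accountant (e.g., an RDP or moments accountant), which is not shown.

```python
import math
import random
from typing import List, Optional

Vector = List[float]


def clip_and_noise(update: Vector, clip_norm: float, noise_multiplier: float,
                   rng: Optional[random.Random] = None) -> Vector:
    """Clip the update to L2 norm <= clip_norm, then add
    N(0, (noise_multiplier * clip_norm)^2) noise to every coordinate."""
    rng = rng or random.Random()
    norm = math.sqrt(sum(u * u for u in update))
    scale = 1.0 / max(1.0, norm / clip_norm)  # leaves small updates unchanged
    sigma = noise_multiplier * clip_norm
    return [u * scale + rng.gauss(0.0, sigma) for u in update]


noisy = clip_and_noise([3.0, 4.0], clip_norm=1.0, noise_multiplier=1.1, rng=random.Random(0))
print(noisy)  # the [0.6, 0.8] clipped update plus Gaussian noise with std 1.1
```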

For quality scores, the score $Q_k$ itself could leak information about the client’s data distribution. Therefore, we also add noise to $Q_k$ before uploading it. Since $Q_k$ is a scalar in $[0, 1]$, we can use the Laplace mechanism or add bounded Gaussian noise to protect its value while preserving its general utility for weighting. To mitigate the impact of this noise on aggregation stability, we can apply min-max normalization or clipping to the noisy $\tilde{Q}_k$ values before they are used in Equation (4), ensuring that the weights remain stable and meaningful even under strong privacy (small $\epsilon$).
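A minimal sketch of the Laplace option for the quality score, assuming the score lies in $[0, 1]$ so its sensitivity is at most 1. Python’s standard library has no Laplace sampler, so the sketch uses the fact that the difference of two independent exponential draws with rate $\epsilon$ is Laplace-distributed with scale $1/\epsilon$. Clipping is post-processing and therefore does not weaken the DP guarantee; the server-side min-max normalization is an illustrative variant.

```python
import random
from typing import List, Optional


def privatize_score(q: float, epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Laplace mechanism (scale 1/epsilon, sensitivity 1) followed by clipping to [0, 1]."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)  # ~ Laplace(0, 1/epsilon)
    return min(1.0, max(0.0, q + noise))


def min_max_normalize(scores: List[float]) -> List[float]:
    """Server-side rescaling of noisy scores to [0, 1]; equal scores all map to 1.0."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [1.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


rng = random.Random(0)
print([round(privatize_score(0.7, epsilon=1.0, rng=rng), 3) for _ in range(3)])
```

Note that min-max normalization assigns zero weight to the lowest-scoring client in each round, whereas clipping alone does not; which behavior is preferable depends on how aggressively low-quality clients should be excluded.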

5.2. Resilience to Backdoor and Data Poisoning Attacks

Our data quality-aware design provides inherent resilience against certain attacks, as illustrated in Figure 6.

In the case of data poisoning, if a malicious client trains on a dataset with many mislabeled examples (e.g., labeling fake reviews as genuine), the label confidence metric $Q_k^{\text{conf}}$ will likely detect a high inconsistency between the labels and the model’s predictions, resulting in a low quality score. The Q-FedAvg mechanism will then naturally down-weight this client’s harmful contribution.

For backdoor attacks, where a malicious client attempts to embed a hidden trigger in the model, a common strategy is to inject a small number of poisoned samples. While this might not be caught by statistical metrics alone, the quality-aware aggregation acts as one line of defense: if the attack significantly perturbs the model update, it can be filtered. More importantly, the server-side verification mechanism introduced in Section 4.3 provides a more direct defense: if an update containing a backdoor degrades performance on the server’s clean validation set (which does not contain the trigger), the server can flag the malicious update and penalize the client. While FedDQ primarily mitigates backdoor attacks via local quality metrics and server-side validation, future extensions may integrate anomaly detection on client updates using cosine similarity checks or clustering-based detection to provide more systematic defenses.

6. Experiments and Results

We conducted a series of experiments to evaluate the performance of FedDQ against several baseline methods under various simulated IoT scenarios.

6.1. Experimental Setup

We used two public datasets. The first is the Amazon Review Dataset, a large-scale collection of product reviews with associated images and metadata. We used a pre-processed subset of 50,000 reviews. Since ground-truth labels for fake reviews are unavailable, we adopted a common methodology from the literature, using a combination of heuristic filters to create proxy labels. These included identifying reviews from users with only one review, detecting abnormal rating spikes for a product, and flagging high textual similarity to other reviews. This resulted in approximately 20% of the data being labeled as fake. This dataset was used to evaluate the full multi-modal capabilities of FedDQ. The second is the Yelp Dataset (text-centric), a well-known benchmark for fake review detection containing primarily text reviews. We used a balanced subset of 30,000 reviews (15,000 genuine, 15,000 fake) to focus on the textual and behavioral quality metrics. While not native IoT datasets, these platforms serve as widely used proxies for the kind of user-generated multi-modal (text, image, metadata) content common in modern IoT applications.

To simulate a heterogeneous IoT environment, we distributed the data among the clients. We simulated Non-IID data distributions by drawing each client’s class proportions from a Dirichlet distribution over the class labels. A low concentration parameter created a highly heterogeneous scenario in which each client’s data distribution was skewed, while a high concentration parameter created a more homogeneous (near-IID) scenario for comparison.
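The Dirichlet partitioning scheme can be sketched with the standard library alone, since a Dirichlet sample is a vector of independent Gamma draws normalized to sum to one. The client count and concentration values in the test are illustrative, not the settings used in our experiments.

```python
import random
from typing import Dict, List, Sequence


def dirichlet_partition(labels: Sequence[int], num_clients: int, concentration: float,
                        seed: int = 0) -> List[List[int]]:
    """Split sample indices across clients with per-class proportions ~ Dir(concentration).

    Small concentration values give highly skewed (Non-IID) clients;
    large values approach an IID split.
    """
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)

    clients: List[List[int]] = [[] for _ in range(num_clients)]
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # Dirichlet sample = independent Gamma(concentration, 1) draws, normalized.
        draws = [rng.gammavariate(concentration, 1.0) for _ in range(num_clients)]
        total = sum(draws) or 1.0
        start, acc = 0, 0.0
        for c, d in enumerate(draws):
            acc += d / total
            end = len(idxs) if c == num_clients - 1 else min(len(idxs), round(acc * len(idxs)))
            clients[c].extend(idxs[start:end])
            start = end
    return clients


labels = [0] * 50 + [1] * 50
skewed = dirichlet_partition(labels, num_clients=5, concentration=0.1)
print([sum(labels[i] for i in part) for part in skewed])  # per-client counts of class 1
```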

We compared FedDQ against several baselines. The centralized baseline is a model trained on all data pooled together; it serves as an upper bound on performance but is not privacy-preserving. FedAvg [59] is the standard federated averaging algorithm. FedProx [60] adds a proximal term, weighted by a coefficient $\mu$, to handle statistical heterogeneity. FedAvg+DP combines FedAvg with client-level differential privacy under privacy parameters $\epsilon$ and $\delta$.

We measured performance using accuracy, precision, recall, and F1-score on a held-out global test set. We also tracked convergence speed (the number of rounds to reach 90% of final accuracy) and communication overhead (total MB transmitted).
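The classification metrics and the convergence-speed measure can be computed as follows. This is a minimal sketch that assumes label 1 denotes a fake review (the positive class) and that `acc_history` holds one test accuracy per communication round.

```python
from typing import Optional, Sequence, Tuple


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int],
                        positive: int = 1) -> Tuple[float, float, float]:
    """Precision, recall, and F1 for the positive (fake) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def rounds_to_fraction(acc_history: Sequence[float], fraction: float = 0.9) -> Optional[int]:
    """1-based index of the first round whose accuracy reaches `fraction` of the final accuracy."""
    if not acc_history:
        return None
    target = fraction * acc_history[-1]
    for rnd, acc in enumerate(acc_history, start=1):
        if acc >= target:
            return rnd
    return None


print(precision_recall_f1([1, 1, 0, 0], [1, 0, 1, 0]))  # (0.5, 0.5, 0.5)
print(rounds_to_fraction([0.5, 0.7, 0.8, 0.85, 0.88]))   # 3
```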

The framework was simulated using Python 3.8 with PyTorch 1.12 and the Flower FL framework. The base model was a DistilBERT text encoder combined with a MobileNetV2 image encoder. The client hardware heterogeneity was simulated by assigning random computational delays (50–500 ms per local batch) and network delays (10–100 ms RTT).

We also simulated two adversarial settings. In the label-flip attack, 10% of randomly selected clients had their training labels flipped (fake ↔ genuine). In the Gaussian noise attack, 20% of clients added strong Gaussian noise to their image data to simulate low-quality sensors or deliberate corruption.
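Both attack settings can be simulated along the following lines. This sketch assumes binary labels (0 = genuine, 1 = fake), images stored as flattened pixel lists, and a hypothetical noise standard deviation, since the exact noise level is not restated here.

```python
import random
from typing import List, Set, Tuple

Images = List[List[float]]


def select_attackers(num_clients: int, fraction: float, rng: random.Random) -> Set[int]:
    return set(rng.sample(range(num_clients), round(fraction * num_clients)))


def label_flip_attack(client_labels: List[List[int]], fraction: float = 0.10,
                      seed: int = 0) -> Tuple[List[List[int]], Set[int]]:
    """Flip binary labels (fake <-> genuine) on a random `fraction` of clients."""
    rng = random.Random(seed)
    attackers = select_attackers(len(client_labels), fraction, rng)
    poisoned = [[1 - y for y in labels] if i in attackers else list(labels)
                for i, labels in enumerate(client_labels)]
    return poisoned, attackers


def gaussian_noise_attack(client_images: List[Images], fraction: float = 0.20,
                          std: float = 1.0, seed: int = 0) -> Tuple[List[Images], Set[int]]:
    """Add N(0, std^2) noise to every image of a random `fraction` of clients."""
    rng = random.Random(seed)
    attackers = select_attackers(len(client_images), fraction, rng)
    noisy = [[[px + rng.gauss(0.0, std) for px in img] for img in images] if i in attackers
             else [list(img) for img in images]
             for i, images in enumerate(client_images)]
    return noisy, attackers


poisoned, bad = label_flip_attack([[0, 1, 1]] * 20, fraction=0.10)
print(sorted(bad))  # indices of the two label-flipping clients
```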

6.2. Overall Performance Comparison

Table 2 presents the main results on the Amazon multi-modal dataset under a highly heterogeneous setting (low Dirichlet concentration) with 10% label-flip attackers. FedDQ consistently outperforms all federated baselines across all metrics, achieving an F1-score of 87.6%, a 13.8-percentage-point absolute improvement over standard FedAvg and only 5.8 points below the centralized upper bound. FedProx shows some robustness but is still considerably outperformed by FedDQ. While FedAvg+DP provides privacy, it suffers a noticeable drop in performance due to the added noise.

6.3. Impact of Data Heterogeneity

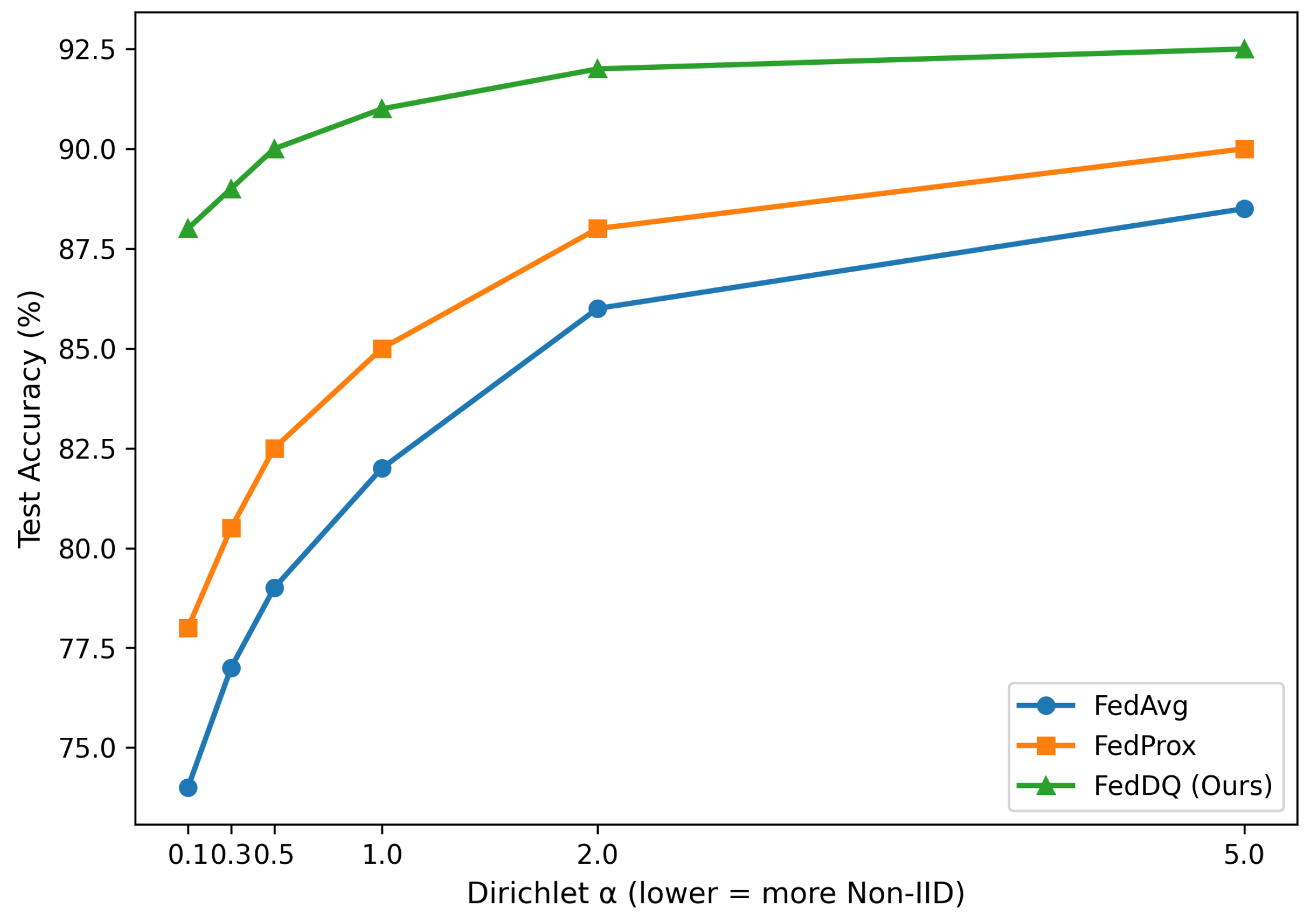

Figure 7 shows the test accuracy of different methods as the data heterogeneity (controlled by the Dirichlet concentration parameter) varies. As expected, all FL methods perform better under more homogeneous data distributions (larger concentration values). However, FedDQ maintains stable, high performance across all levels of heterogeneity, demonstrating its robustness. In contrast, the performance of FedAvg drops sharply as the data becomes more Non-IID (smaller concentration values). FedProx mitigates this drop but is still less effective than FedDQ, which actively identifies and leverages high-quality data partitions regardless of their distribution.

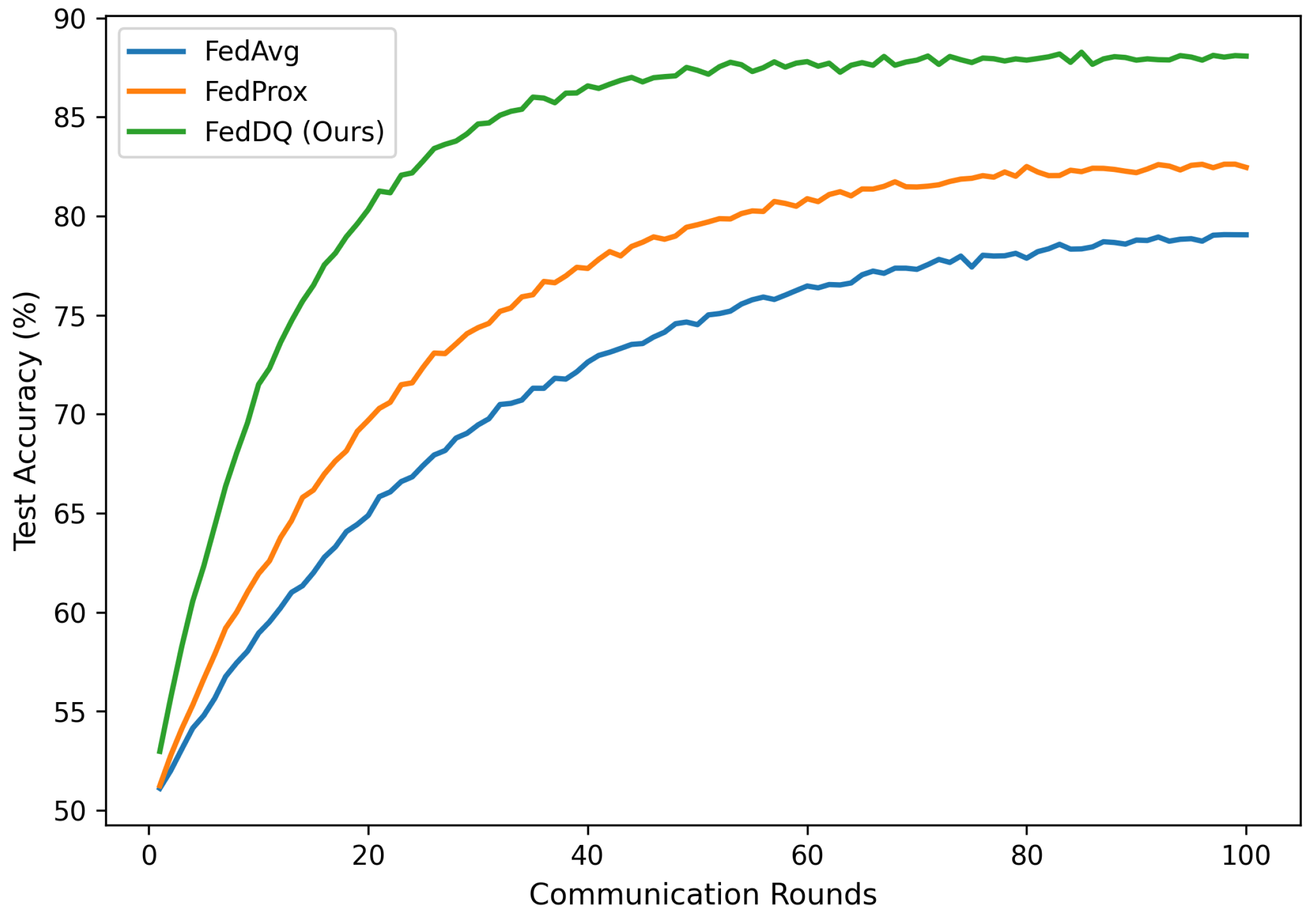

6.4. Convergence Analysis

Figure 8 illustrates the convergence behavior of the compared methods on the Yelp dataset under a noisy scenario (20% clients with Gaussian noise). FedDQ not only achieves a higher final accuracy but also converges significantly faster than other methods. It reaches 85% accuracy in nearly half the number of communication rounds required by FedAvg and FedProx. This is because the quality-aware aggregation prioritizes informative and reliable updates, steering the global model more efficiently towards the optimum and wasting fewer rounds on noisy or malicious contributions.

6.5. Robustness Against Attacks

We evaluated the robustness of FedDQ against the two attack scenarios. The results, shown in Table 3, highlight FedDQ’s strong defensive capabilities. Under the label-flip attack, FedDQ’s F1-score drops by only 1.9 percentage points (from 89.5% to 87.6%), whereas FedAvg’s performance drops by 1.7%. Similarly, in the Gaussian noise scenario, FedDQ’s quality assessment, particularly the cross-modal consistency metric $Q_k^{\text{cross}}$, effectively down-weights the contributions from clients with corrupted image data. Although FedDQ’s performance drops by 4.4 percentage points, this is far smaller than the 20.4% drop observed for FedAvg, highlighting the effectiveness of the quality assessment module in mitigating the impact of corrupted data.

6.6. Impact of Differential Privacy Budget

To quantify the trade-off between privacy and utility, we evaluated all methods under different client-level DP budgets $\epsilon$, while fixing $\delta$.

Table 4 summarizes the results on the Amazon dataset in a heterogeneous setting. As expected, stronger privacy guarantees (lower $\epsilon$) lead to a decrease in model performance. However, FedDQ consistently outperforms FedAvg + DP across all privacy levels. Even under the strictest privacy budget tested, FedDQ maintains an F1-score above 80%, while FedAvg + DP drops to 68.4%. With a moderate privacy budget, FedDQ achieves 82.3%, only a small reduction compared with its non-private setting. These results indicate that FedDQ provides strong privacy protection with graceful utility degradation.

6.7. Sensitivity to Adversary Concentration

We further studied robustness when varying the proportion of adversarial clients. Specifically, we simulated label-flip attackers at different concentrations (0–40%).

Table 5 reports the F1-scores on the Yelp dataset. At the baseline setting without adversaries (0%), FedAvg, FedProx, and FedAvg + DP achieve 79.0%, 82.5%, and 72.5% F1-scores, respectively, while FedDQ attains the highest performance of 89.1%. With 10% adversaries, the performance of FedAvg, FedProx, and FedAvg + DP declines moderately to 70.2%, 76.5%, and 67.6%, respectively, whereas FedDQ maintains a strong 86.8%. As the proportion of malicious clients further increases, FedAvg shows severe vulnerability, collapsing below 60% once adversaries reach 20%. FedProx demonstrates relatively better resilience, but still degrades substantially beyond 30% adversaries. FedAvg + DP offers limited protection, with performance dropping to nearly 50% at 40% adversaries. In contrast, FedDQ remains consistently robust across all levels of adversary concentration. Even under 40% malicious participation, FedDQ still achieves 80.1% F1-score. This highlights the effectiveness of the proposed quality-aware aggregation and score verification mechanisms in mitigating the impact of poisoned updates.

6.8. Ablation Study

To quantify the contribution of each component of our multi-faceted quality score, we conducted an ablation study on the Yelp dataset, creating variants of FedDQ with individual data quality metrics removed. Because the Yelp dataset is text-only, the cross-modal consistency metric does not apply to this analysis. The results in Table 6 show that each quality component contributes to overall performance. Removing the textual quality metric had the largest negative impact, followed by removing the label confidence metric. The full FedDQ model achieves the best performance, supporting our multi-faceted quality assessment design. We attribute the higher absolute F1-score on Yelp compared to Amazon to Yelp's balanced, text-only data, whereas Amazon contains noisy multi-modal data that presents a harder detection problem.
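Since the Discussion notes that the sub-scores are combined using weights, each ablation variant can be pictured as dropping terms from that combination. The sketch below assumes the composite score is a weighted mean of whichever sub-scores are available, with the weights renormalized when a component is ablated or not applicable (as cross-modal consistency is on Yelp). The component names and weight values are illustrative assumptions, not the paper's settings.

```python
from typing import Dict, Iterable


def composite_quality(sub_scores: Dict[str, float], weights: Dict[str, float],
                      drop: Iterable[str] = ()) -> float:
    """Weighted mean of the sub-scores that remain after ablation.

    A component is skipped if it is listed in `drop` (ablated) or missing from
    `sub_scores` (not applicable, e.g. cross-modal consistency on text-only data).
    The remaining weights are renormalized so the score stays in [0, 1].
    """
    dropped = set(drop)
    active = [k for k in weights if k not in dropped and k in sub_scores]
    total_w = sum(weights[k] for k in active)
    if total_w == 0:
        raise ValueError("no active quality components")
    return sum(weights[k] * sub_scores[k] for k in active) / total_w


# Illustrative weights and one text-only client's sub-scores (hypothetical values).
WEIGHTS = {"text_quality": 0.4, "label_confidence": 0.4, "cross_modal": 0.2}
yelp_client = {"text_quality": 0.8, "label_confidence": 0.6}
full_score = composite_quality(yelp_client, WEIGHTS)
no_text_score = composite_quality(yelp_client, WEIGHTS, drop=["text_quality"])
```

Renormalizing, rather than treating an ablated component as zero, keeps the ablated variants on the same scale as the full model, so differences in F1 reflect the lost signal rather than a uniformly shrunken score.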

6.9. Efficiency and Communication Cost

We analyzed the computational and communication overhead of FedDQ relative to FedAvg. As shown in Table 7, FedDQ increases local computation time per round by a modest 8.5%, owing to the calculation of the quality score. Table 8 breaks this cost down further, showing that each quality metric is individually lightweight, so the total overhead remains manageable for most IoT devices. The communication cost is nearly identical to that of FedAvg, because the only addition to each transmitted model update is a single scalar value, the client's quality score. We consider this small overhead justified by the gains in accuracy, convergence speed, and robustness shown in the previous experiments.
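Why the communication cost is nearly identical follows from simple arithmetic: if a model update holds P parameters and the quality score is sent as one extra value of the same width, the relative upload increase is 1/P. The sketch assumes uncompressed float32 transmission (4 bytes per value) and a hypothetical model size; neither is taken from the paper.

```python
def payload_bytes(num_params: int, extra_scalars: int = 0, bytes_per_value: int = 4) -> int:
    """Size of one uploaded update, assuming every value is sent as an uncompressed float32."""
    return (num_params + extra_scalars) * bytes_per_value


def relative_comm_overhead(num_params: int, extra_scalars: int = 1) -> float:
    """Fractional upload increase from appending `extra_scalars` values to the update."""
    base = payload_bytes(num_params)
    return (payload_bytes(num_params, extra_scalars) - base) / base


# Hypothetical model with one million parameters: the score adds 4 bytes to a ~4 MB upload.
overhead = relative_comm_overhead(1_000_000)
```

For a one-million-parameter model the increase is one part in a million; even a model a hundred times smaller would see only a 0.01% increase, which is why the scalar score is negligible next to the model weights.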

7. Discussion

Our experimental results validate the effectiveness of FedDQ. However, several practical considerations and limitations affect its deployment in real-world IoT ecosystems. The proposed data quality score is useful but not infallible: its effectiveness relies on the assumption that quality deficits (e.g., mislabeling, gibberish text) are detectable through the chosen metrics. Sophisticated adversaries could craft attacks that are harder to detect, for example by generating plausible-looking but subtly misleading reviews that still score well on every metric. Furthermore, our server-side score verification depends on the quality of the server's validation set; if this set is not representative of client data, verification may be less effective. Finally, the optimal weights for combining the sub-scores may vary across domains and datasets and may therefore require tuning. Future work could explore adaptive or learnable weighting schemes.

Scalability and deployment are also important considerations. Our simulations demonstrate the core principles of FedDQ, but real-world IoT deployments can involve millions of devices. Scaling to this level requires strategies such as client sampling and hierarchical coordination, in which edge servers aggregate updates from local clusters of devices. FedDQ is compatible with these approaches: the server would sample a subset of clients per round, and quality scores would inform both client selection and aggregation. The framework can also handle client volatility (devices dropping in and out), since the quality score provides a mechanism to re-evaluate clients that rejoin the network.

Although we designed FedDQ with IoT constraints in mind (e.g., lightweight models, dynamic epochs), the local computation of the quality score adds overhead. Table 7 shows that FedDQ incurs a slight increase in local computation time (for calculating the quality score) and a negligible increase in communication (for sending the scalar score). We argue this is a worthwhile trade-off for the substantial gains in accuracy and robustness. For extremely constrained devices (e.g., microcontrollers), a simplified version of the quality score may be necessary.
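The hierarchical coordination mentioned in the scalability discussion can be sketched as follows. Assuming each edge server forwards both its cluster's weighted average and the total weight behind it, the cloud's result equals a flat weighted average over all sampled clients, so any client-level weighting (for instance one derived from quality scores) survives the extra tier. Cluster names, the sampling rule, and the weights are hypothetical; this is not a description of a deployed FedDQ system.

```python
import random
from typing import Dict, List, Tuple

Update = List[float]
# (update vector, aggregation weight); under a quality-aware rule the weight might be
# n_k * q_k, which is an assumption of this sketch.
Contribution = Tuple[Update, float]


def aggregate(contribs: List[Contribution]) -> Contribution:
    """Weighted mean of the updates, returned with the total weight it represents."""
    total = sum(w for _, w in contribs)
    if total <= 0:
        raise ValueError("contributions must carry a positive total weight")
    dim = len(contribs[0][0])
    mean = [sum(w * u[i] for u, w in contribs) / total for i in range(dim)]
    return mean, total


def hierarchical_round(clusters: Dict[str, List[Contribution]],
                       sample_frac: float, seed: int = 0) -> Update:
    """One round: each edge server samples and aggregates its own cluster, then the
    cloud aggregates the edge results, weighting each edge by the total weight it carries."""
    rng = random.Random(seed)
    edge_results = []
    for members in clusters.values():
        k = max(1, round(sample_frac * len(members)))  # sample at least one client per cluster
        edge_results.append(aggregate(rng.sample(members, k)))
    global_update, _ = aggregate(edge_results)
    return global_update
```

Forwarding the total weight is the key design choice: if the cloud instead averaged edge results uniformly, a small cluster would count as much as a large one and distort the global update.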

The integration of differential privacy is crucial for providing formal privacy guarantees. However, there is an inherent trade-off between the strength of privacy, which is controlled by the privacy budget, and model utility. Our experiment in Section 6.6 quantifies this trade-off, showing that the balance must be struck according to each application's requirements. In scenarios where regulation mandates strong privacy, FedDQ can still deliver reasonable performance, but system designers must carefully calibrate the privacy budget to maintain acceptable utility.
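The calibration trade-off can be made concrete with a standard mechanism. The sketch below swaps in L2 clipping followed by the classical Gaussian mechanism (Dwork and Roth), whose noise standard deviation is sigma = sensitivity * sqrt(2 * ln(1.25 / delta)) / epsilon for 0 < epsilon < 1. This mechanism, the clipping norm, and delta are assumptions chosen for illustration; FedDQ's actual DP configuration and accounting may differ.

```python
import math
import random
from typing import List


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise std of the classical Gaussian mechanism; this bound holds for 0 < epsilon < 1."""
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("classical bound requires 0 < epsilon < 1 and 0 < delta < 1")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon


def clip_l2(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def privatize(update: List[float], clip_norm: float, epsilon: float,
              delta: float, seed: int = 0) -> List[float]:
    """Clip, then add Gaussian noise. Any two clipped updates differ by at most
    2 * clip_norm in L2 norm, which is used here as a conservative sensitivity."""
    rng = random.Random(seed)
    sigma = gaussian_sigma(epsilon, delta, 2 * clip_norm)
    return [x + rng.gauss(0.0, sigma) for x in clip_l2(update, clip_norm)]
```

Because sigma scales as 1/epsilon, halving the privacy budget doubles the noise added to every coordinate, which is the utility cost a designer must weigh. The sketch covers a single release only; accounting for privacy loss across many training rounds, which a real deployment needs, is outside its scope.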

Although developed for fake review detection, the core idea of FedDQ—locally assessing data quality to inform global aggregation—is highly generalizable. It could be applied to other federated analytics tasks in IoT, such as anomaly detection in sensor data, activity recognition from wearable devices, or medical diagnosis from distributed hospital data, where data quality is a pervasive concern. We expect future research to further adapt FedDQ’s principles to broader applications, making quality-aware federated learning a practical and reliable paradigm across domains.

8. Conclusions and Future Work

This paper introduced FedDQ, a federated learning framework for robust and privacy-preserving review analytics on the heterogeneous, distributed data generated by IoT devices. By integrating a multi-faceted, locally computed data quality score into the aggregation process, FedDQ mitigates the negative impact of low-quality and malicious data, which severely degrades standard federated learning algorithms. Combined with a design that accounts for system heterogeneity and provides formal differential privacy guarantees, this quality-aware approach offers a practical solution for building trustworthy AI systems in the IoT era.

Our extensive experiments demonstrated that FedDQ achieves superior accuracy, faster convergence, and greater robustness against adversarial attacks compared to existing methods. The results underscore the critical importance of moving beyond simple data quantity-based aggregation to more intelligent, quality-driven orchestration in federated learning.

For future work, we plan to explore several directions. First, we will investigate more advanced, self-supervised methods for data quality assessment to reduce reliance on heuristics. Second, we aim to extend FedDQ to the federated training of large language models (LLMs) in the IoT domain, which poses distinct communication and computation challenges. Third, we plan to validate our framework on native IoT datasets containing diverse modalities such as voice reviews and time-series sensor data. Finally, developing lightweight, hardware-aware versions of our framework will be crucial for deployment on the most resource-scarce IoT endpoints, further broadening the applicability of trustworthy federated intelligence.