A Machine Learning Approach to Investigating Key Performance Factors in 5G Standalone Networks

Abstract

1. Introduction

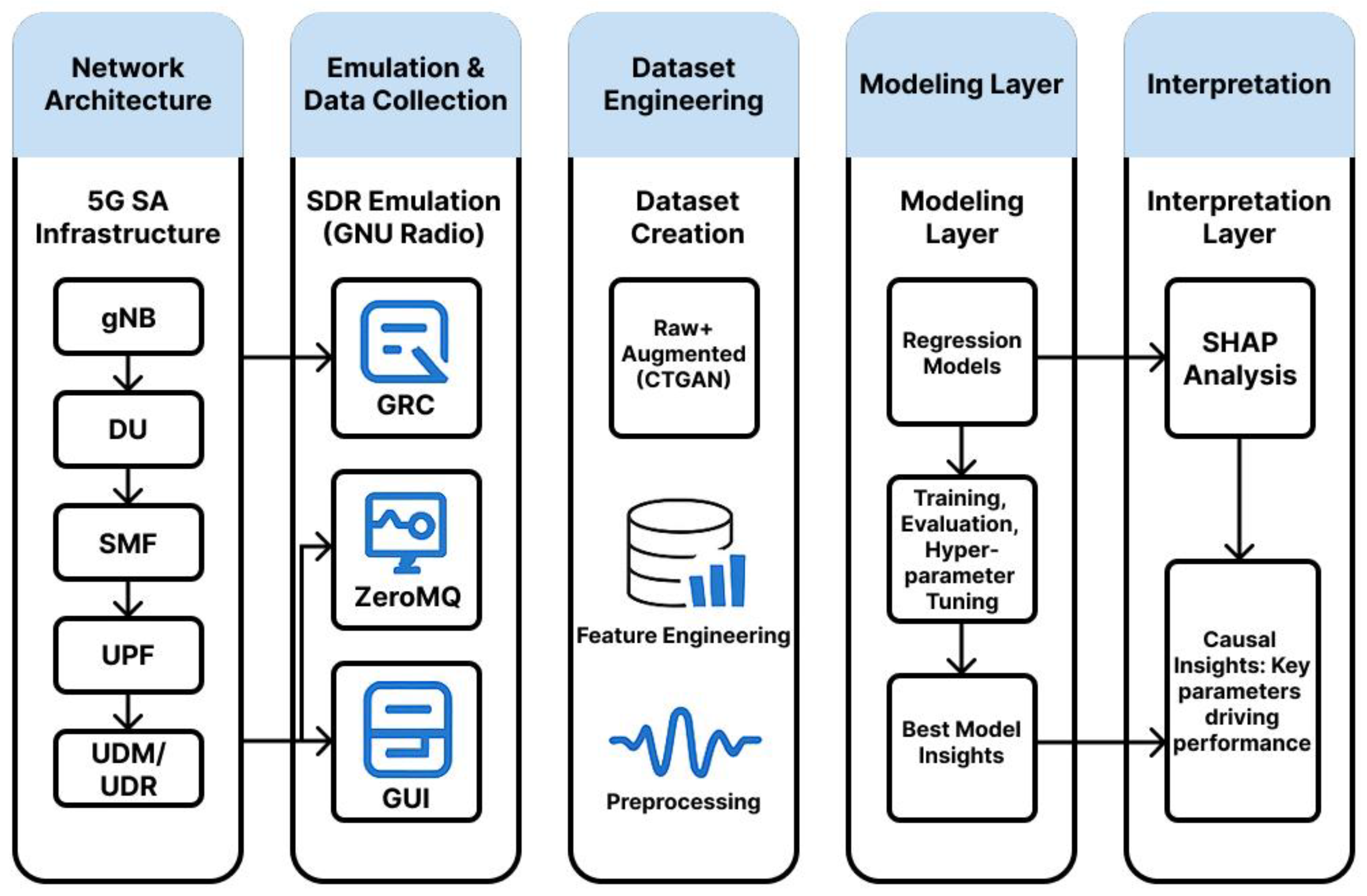

2. Materials and Methods

2.1. 5G Standalone System Architecture

2.1.1. Network Architecture Components

- AMF (Access and Mobility Management Function)—manages user registration, authentication and mobility procedures.

- SMF (Session Management Function)—manages PDU sessions and QoS policies.

- UPF (User Plane Function)—provides routing and forwarding of user traffic.

- NRF (Network Repository Function)—provides network function discovery and registration services.

- UDM/UDR (Unified Data Management/Repository)—manages subscription data and user profiles.

- gNB (Next Generation NodeB)—5G base station supporting NR PHY/MAC/RLC/PDCP protocols.

- CU (Central Unit)—centralized unit for processing higher-layer protocols.

- DU (Distributed Unit)—distributed unit for lower-layer (RLC, MAC, and physical layer) processing.

2.1.2. Protocols and Interfaces

- Physical layer (PHY)—OFDM with cyclic prefix (CP-OFDM), with DFT-s-OFDM (the SC-FDMA waveform) as an uplink option.

- Medium Access Control (MAC) layer—resource scheduling, HARQ, power management.

- Radio Link Control (RLC) layer—segmentation, ARQ, packet duplication.

- Packet Data Convergence Protocol (PDCP) layer—header compression, encryption, reordering.

2.2. Multi-User Scenarios (Multi-UE)

2.2.1. Multi-UE Concept

2.2.2. Model of a Multi-User System

- RSRP is the received reference signal power at time t [dBm].

- RSRQ—quality of the received reference signal [dB].

- CQI—channel quality indicator (0–15).

- MCS—modulation and coding scheme (0–28).

- BLER—block error rate (0–1).

- Throughput [Mbps].

- TA—timing advance [µs].
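The per-UE quantities listed above can be held in a small record type. The following is a minimal sketch, not the authors' data model: the class name, the field names, and the unit of the time stamp are illustrative assumptions, while the value ranges are the ones stated in the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiSample:
    """One per-UE measurement at time t (names are illustrative, not from the paper)."""

    t: float                 # sample time; seconds are an assumption
    rsrp_dbm: float          # received reference signal power [dBm]
    rsrq_db: float           # received reference signal quality [dB]
    cqi: int                 # channel quality indicator, 0..15
    mcs: int                 # modulation and coding scheme, 0..28
    bler: float              # block error rate, 0..1
    throughput_mbps: float   # throughput [Mbps]
    ta_us: float             # timing advance [microseconds]

    def __post_init__(self) -> None:
        # Reject samples that fall outside the ranges given in the list above.
        if not 0 <= self.cqi <= 15:
            raise ValueError(f"CQI out of range: {self.cqi}")
        if not 0 <= self.mcs <= 28:
            raise ValueError(f"MCS out of range: {self.mcs}")
        if not 0.0 <= self.bler <= 1.0:
            raise ValueError(f"BLER out of range: {self.bler}")
        if self.throughput_mbps < 0:
            raise ValueError(f"negative throughput: {self.throughput_mbps}")
```

Validating at construction time keeps malformed log rows out of later processing steps; whether such a check is needed depends on how the measurements are logged.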

2.2.3. Resource Scheduling Algorithm

- r_i(t)—instantaneous data transmission rate for UE i.

- R_i(t)—average data rate for UE i in the previous time window.
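The two quantities above are the inputs of the usual proportional fair rule, which serves the UE whose ratio of instantaneous rate to average rate is largest. The sketch below illustrates that rule under stated assumptions: the function names, the exponentially weighted average update, and the `window` length are illustrative choices and are not taken from the paper or from any particular scheduler implementation.

```python
def pf_select(inst_rates, avg_rates, eps=1e-9):
    """Return the index of the UE with the largest inst_rate / avg_rate metric."""
    # eps avoids division by zero for a UE that has not been served yet.
    metrics = [r / max(avg, eps) for r, avg in zip(inst_rates, avg_rates)]
    return max(range(len(metrics)), key=metrics.__getitem__)


def update_averages(avg_rates, inst_rates, scheduled, window=100.0):
    """Exponentially weighted update of each UE's average rate (assumed form).

    Only the scheduled UE receives data in this slot; all others contribute 0.
    """
    alpha = 1.0 / window
    return [
        (1.0 - alpha) * avg + alpha * (r if i == scheduled else 0.0)
        for i, (r, avg) in enumerate(zip(inst_rates, avg_rates))
    ]


# Three UEs: UE 1 has the lowest instantaneous rate but has been served least.
inst = [30.0, 10.0, 20.0]
avg = [20.0, 2.0, 10.0]
chosen = pf_select(inst, avg)          # metrics are 1.5, 5.0, 2.0
new_avg = update_averages(avg, inst, chosen)
```

In this example the metric favors the UE that is furthest below its own recent average, which is how the rule trades total throughput against fairness.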

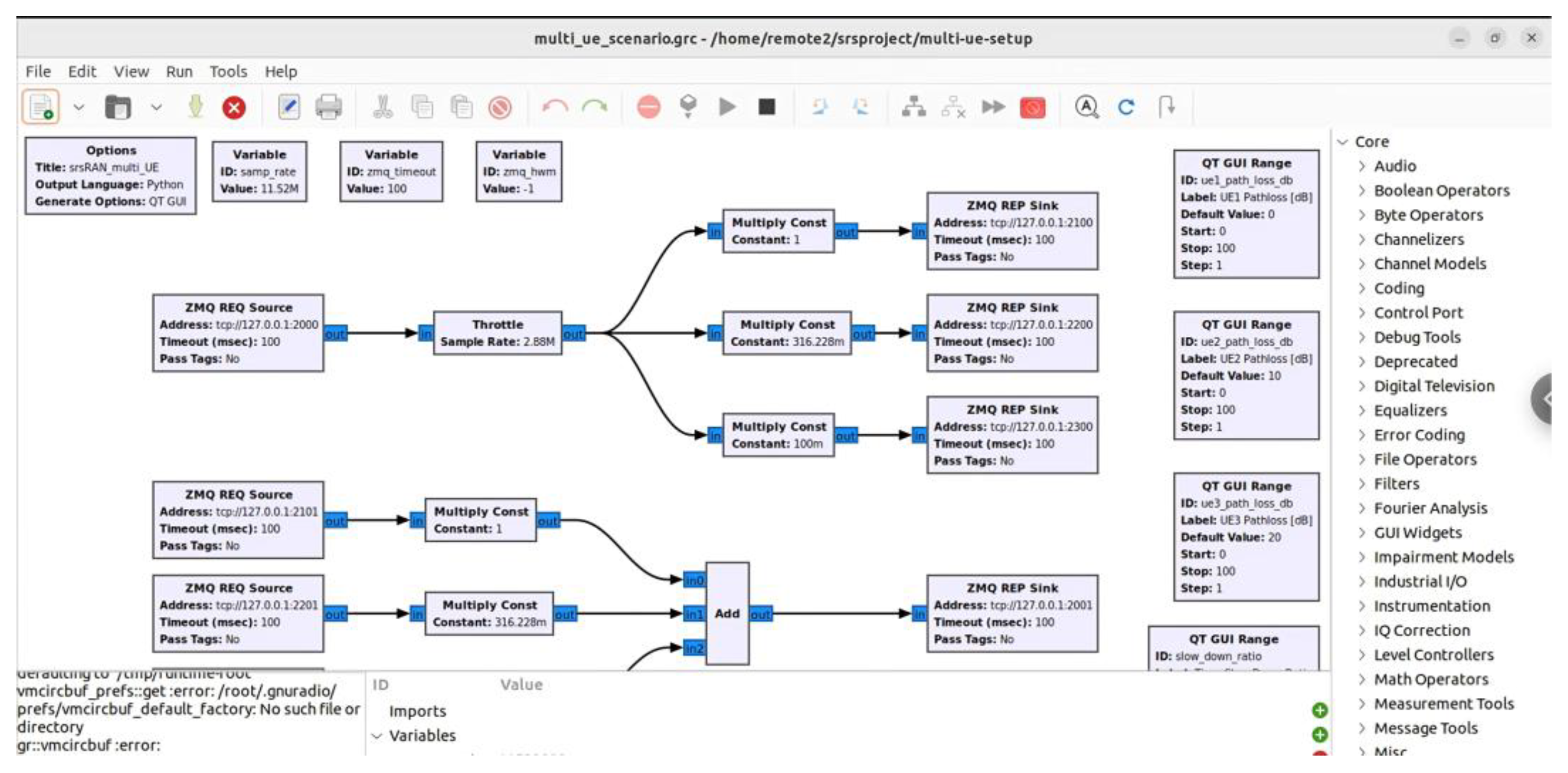

2.3. GNU Radio Architecture

- GNU Radio Companion (GRC)—a graphical environment for designing radio flowgraphs, used here to run the multi-UE (multi-user) communication scenario.

- Signal Processing Libraries—a set of blocks for modulation, demodulation, filtering, and other operations.

- USRP Hardware Driver (UHD)—drivers for working with hardware SDR devices.

- ZeroMQ blocks—interfaces for interprocess communication.

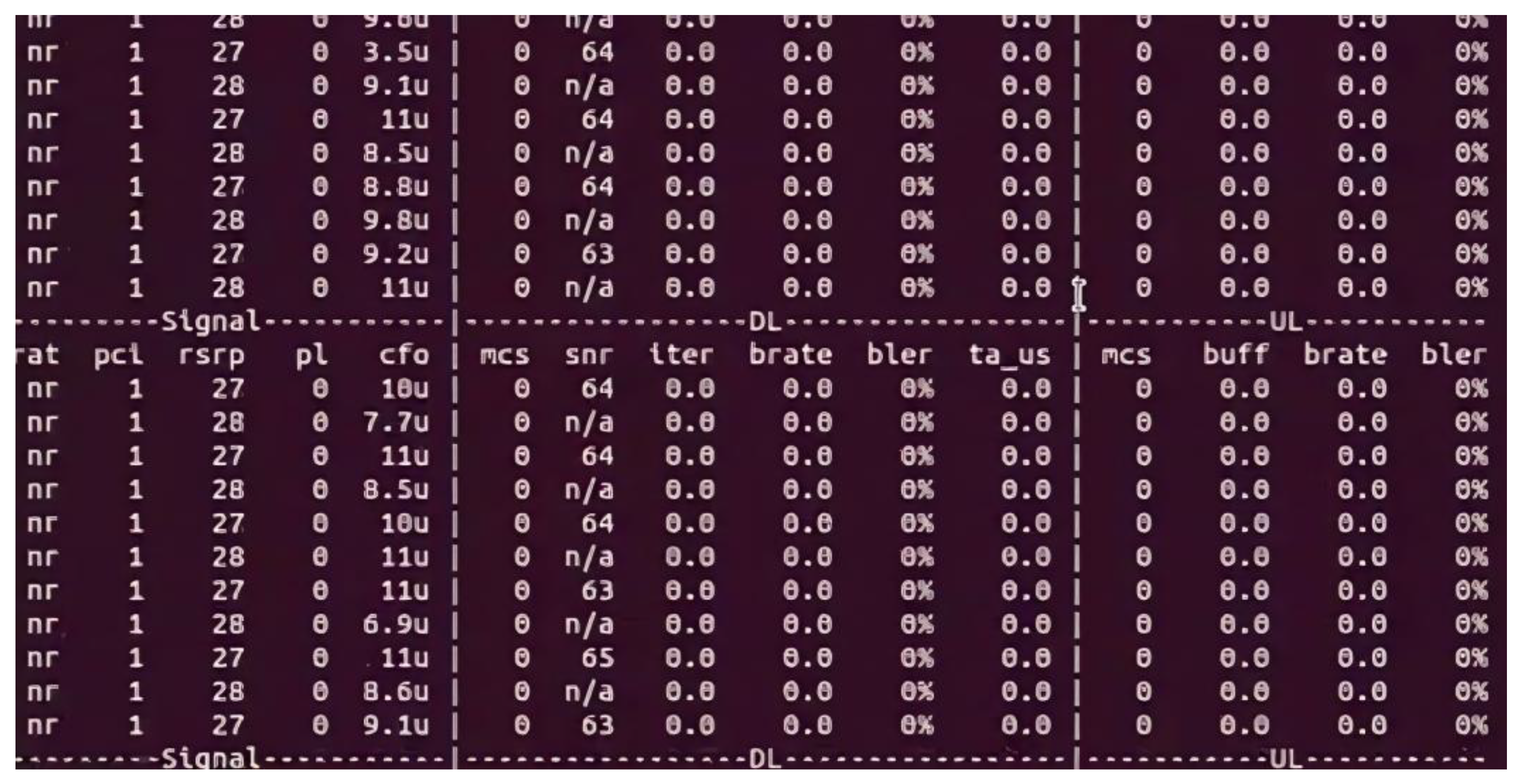

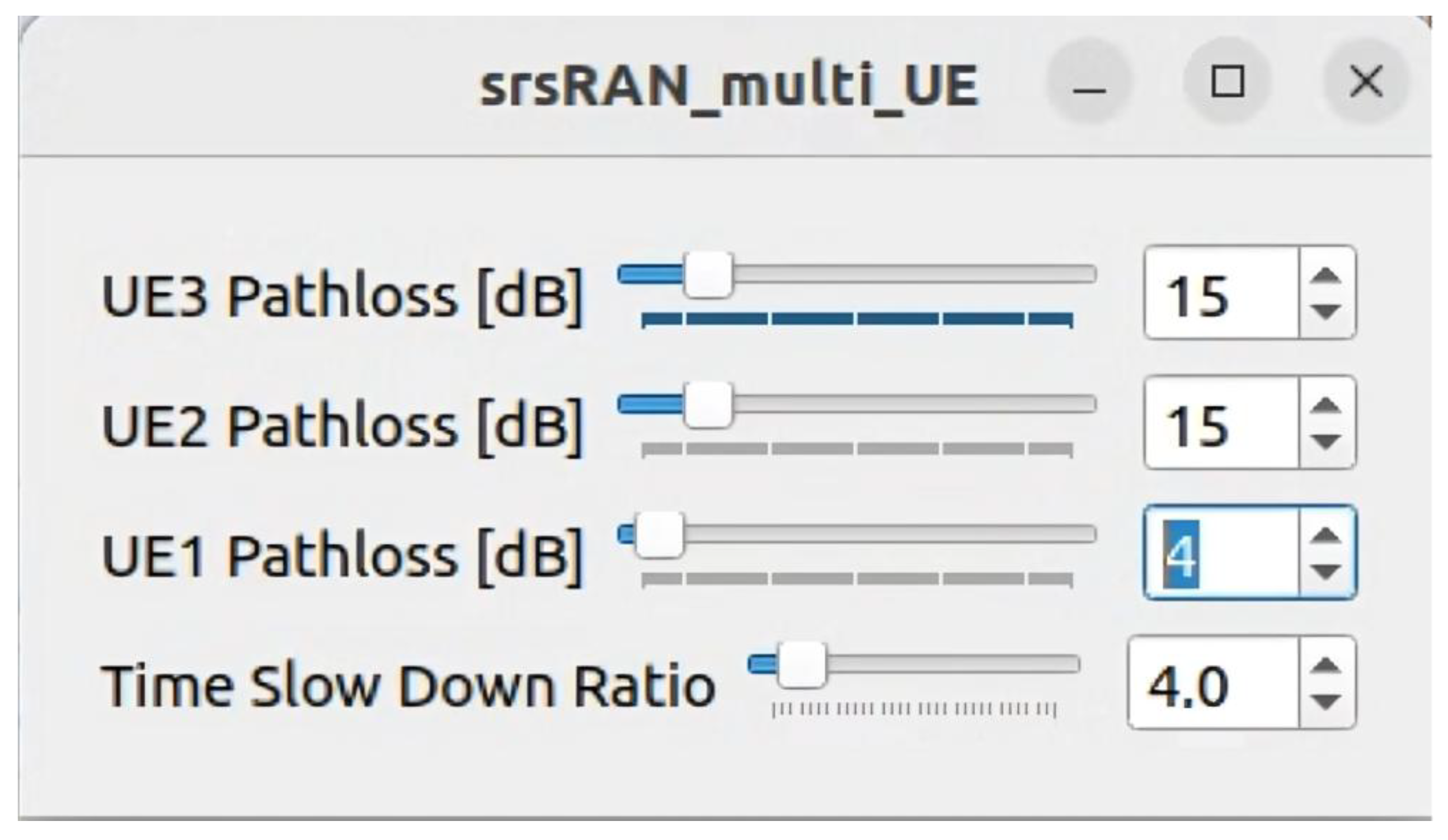

Experimental Control and Parameter Variation

- Independent Variable: The primary parameter varied during the experiments was the Pathloss [dB] for each of the three UEs. This was adjusted independently for each user via the GNU Radio GUI panel to simulate changes in signal attenuation and user location.

- Controlled Variables: To isolate the impact of pathloss, key system parameters were held constant throughout all experimental runs. These included the Proportional Fair scheduling algorithm, the number of active UEs (three), the carrier frequency, and the channel bandwidth.

- Data Collection: For each combination of pathloss settings, the system was run for a set duration, and the resulting Key Performance Indicators (KPIs), such as brate_dl and mcs_dl, were logged to form the initial dataset.
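The experimental design above amounts to a sweep over per-UE pathloss settings with everything else held fixed. A minimal sketch of enumerating such a sweep follows; the pathloss levels are hypothetical placeholders, since the values used in the experiments are not listed here.

```python
from itertools import product

NUM_UES = 3                          # three active UEs, as stated above
PATHLOSS_LEVELS_DB = (0, 10, 20)     # hypothetical levels, not from the paper


def pathloss_grid(levels=PATHLOSS_LEVELS_DB, num_ues=NUM_UES):
    """Enumerate every combination of per-UE pathloss settings."""
    return list(product(levels, repeat=num_ues))


grid = pathloss_grid()
# Each tuple is one experimental run: (pathloss UE1, pathloss UE2, pathloss UE3).
```

With three levels and three UEs this yields 27 runs; the count grows as the number of levels raised to the number of UEs.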

2.4. Dataset and Augmentation

Data Preprocessing and Feature Engineering

- Data Cleaning: Numerical columns containing text suffixes (e.g., ‘k’ for thousands and ‘n’ for nanoseconds) were converted to a standard numeric format.

- Removal of Irrelevant Features: The dataset was generated under controlled conditions to analyze how specific parameters influence download speeds in an ideal network environment. Because the experiment isolated a few key variables, other factors (such as connection quality, signal strength, and error rates) were intentionally held constant, which left numerous columns containing only a single value. These zero-variance features carry no information for pattern recognition, so they were removed during preprocessing. This step streamlines the dataset and focuses the analysis on the parameters that varied during the experiment.
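The two cleaning steps above can be sketched in a few lines. This is an illustration under assumptions rather than the authors' pipeline: the helper names are hypothetical, rows are represented as plain dictionaries, and the scale factors (`k` as 1e3, `n` as 1e-9 of the base unit) are one possible reading of "a standard numeric format".

```python
SUFFIX_FACTORS = {"k": 1e3, "n": 1e-9}   # assumed scale factors for the two suffixes


def parse_suffixed(value: str) -> float:
    """Convert strings such as '12k' or '520n' to plain floats."""
    text = value.strip()
    try:
        return float(text)               # already numeric (also covers 'nan', 'inf')
    except ValueError:
        if text and text[-1] in SUFFIX_FACTORS:
            return float(text[:-1]) * SUFFIX_FACTORS[text[-1]]
        raise


def drop_constant_columns(rows):
    """Remove keys whose value is identical in every row (zero variance)."""
    if not rows:
        return []
    varying = [key for key in rows[0] if len({row[key] for row in rows}) > 1]
    return [{key: row[key] for key in varying} for row in rows]


rows = [
    {"mcs_dl": 28, "bler": 0.0, "brate_dl": parse_suffixed("12k")},
    {"mcs_dl": 20, "bler": 0.0, "brate_dl": parse_suffixed("8k")},
]
cleaned = drop_constant_columns(rows)    # 'bler' is constant and is dropped
```

Trying the plain `float` conversion first keeps values without a suffix untouched and avoids misreading `nan` as a number with an `n` suffix.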

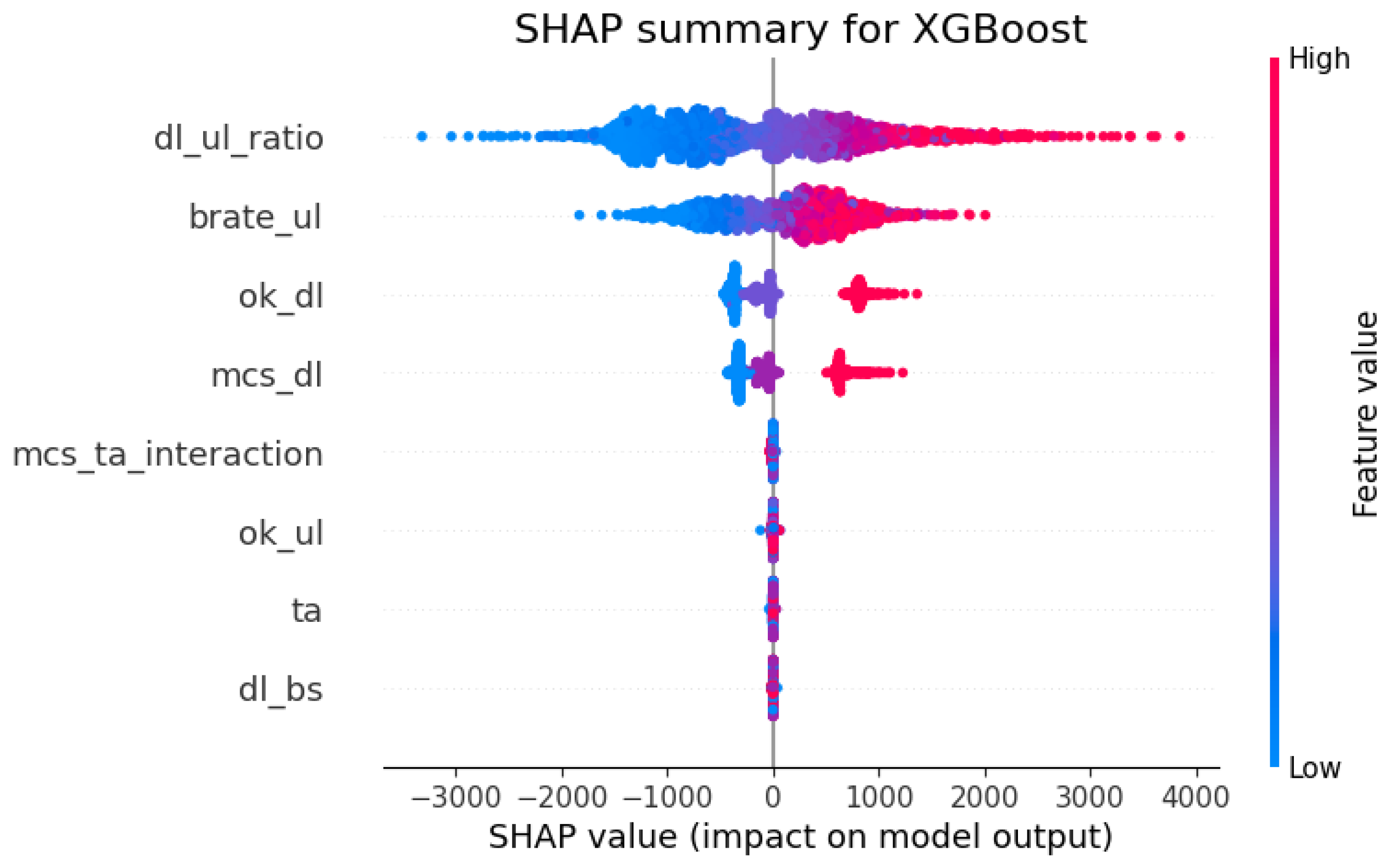

- dl_ul_ratio: The ratio of downlink to uplink bitrate (brate_dl/brate_ul), designed to capture traffic asymmetry.

- mcs_ta_interaction: The product of the Modulation and Coding Scheme and Timing Advance (mcs_dl * ta), intended to help the model capture non-linear relationships.
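The two engineered features defined above are simple element-wise computations. The sketch below uses the column names from the text; the function name and the small `eps` guard against a zero uplink bitrate are assumptions added for illustration.

```python
def add_engineered_features(row, eps=1e-9):
    """Return a copy of row with dl_ul_ratio and mcs_ta_interaction added."""
    out = dict(row)
    # Ratio of downlink to uplink bitrate; eps guards against division by zero.
    out["dl_ul_ratio"] = row["brate_dl"] / max(row["brate_ul"], eps)
    # Product of the downlink MCS index and the timing advance.
    out["mcs_ta_interaction"] = row["mcs_dl"] * row["ta"]
    return out


sample = {"brate_dl": 8000.0, "brate_ul": 2000.0, "mcs_dl": 28, "ta": 0.5}
enriched = add_engineered_features(sample)
```

Returning a copy leaves the original row unchanged, so the raw and enriched datasets can be compared side by side.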

2.5. Modeling and Evaluation

2.5.1. Machine Learning Models

- Linear Regression: A baseline model that assumes a linear relationship between features and the target [27].

- Decision Tree (max_depth = 7, min_samples_leaf = 1, min_samples_split = 2): A non-linear model that partitions the data based on feature values [28].

- Random Forest (max_depth = 7, min_samples_leaf = 1, n_estimators = 200): An ensemble method that builds multiple decision trees and merges their predictions to improve accuracy and control overfitting [29].

- Gradient Boosting (learning_rate = 0.1, max_depth = 5, n_estimators = 300): An ensemble technique that builds models sequentially, where each new model corrects the errors of the previous one [30].

- XGBoost (learning_rate = 0.05, max_depth = 7, n_estimators = 300, subsample = 0.7): A highly optimized and efficient implementation of the gradient boosting algorithm [31].
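The hyperparameters listed above map directly onto estimator constructor arguments. The sketch below assumes the regressor classes from scikit-learn and the `xgboost` package; the paper names the algorithms but not the exact classes, so the class choice is an assumption. The libraries are imported lazily so that the parameter table can be inspected without them.

```python
MODEL_PARAMS = {
    "Linear Regression": {},
    "Decision Tree": {"max_depth": 7, "min_samples_leaf": 1, "min_samples_split": 2},
    "Random Forest": {"max_depth": 7, "min_samples_leaf": 1, "n_estimators": 200},
    "Gradient Boosting": {"learning_rate": 0.1, "max_depth": 5, "n_estimators": 300},
    "XGBoost": {"learning_rate": 0.05, "max_depth": 7, "n_estimators": 300,
                "subsample": 0.7},
}


def build_models():
    """Instantiate the five regressors (requires scikit-learn and xgboost)."""
    from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
    from sklearn.linear_model import LinearRegression
    from sklearn.tree import DecisionTreeRegressor
    from xgboost import XGBRegressor

    classes = {
        "Linear Regression": LinearRegression,
        "Decision Tree": DecisionTreeRegressor,
        "Random Forest": RandomForestRegressor,
        "Gradient Boosting": GradientBoostingRegressor,
        "XGBoost": XGBRegressor,
    }
    return {name: cls(**MODEL_PARAMS[name]) for name, cls in classes.items()}
```

Calling `build_models()` returns a dictionary of unfitted estimators keyed by model name, which can then be fitted and scored in a single loop.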

2.5.2. Evaluation Metrics

- Mean Absolute Error (MAE): Measures the average magnitude of the errors in a set of predictions, without considering their direction.

- Root Mean Squared Error (RMSE): This is the square root of the average of squared errors. It gives more weight to larger errors and is useful because the final error score is in the same units as the target variable.

- R-squared (R²): The coefficient of determination, which represents the proportion of the variance in the dependent variable that is predictable from the independent variable(s).
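The three metrics above follow directly from their definitions. The sketch below implements them with the standard library only; the function names and the sample values are illustrative and are not results from the paper.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average magnitude of the errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when the target is constant")
    return 1.0 - ss_res / ss_tot


y_true = [100.0, 200.0, 300.0, 400.0]   # illustrative targets
y_pred = [110.0, 190.0, 310.0, 380.0]   # illustrative predictions
scores = (mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))
```

For these values the RMSE exceeds the MAE because the single 20-unit error is weighted more heavily once squared.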

2.5.3. Model Optimization and Interpretation

- Hyperparameter Tuning: The optimal hyperparameters for the tree-based models, as listed in Section 2.5.1, were identified using GridSearchCV. This method performs an exhaustive search over a specified parameter grid, using 3-fold cross-validation to find the parameter combination that yields the best R² score [32].

- Model Interpretation: To address the “black box” problem and understand the drivers of the model’s predictions, we employed SHAP (SHapley Additive exPlanations). Rooted in cooperative game theory, SHAP explains a model’s output by attributing each prediction to the contributions of the individual features. It was chosen for its theoretical soundness and its ability to aggregate these per-prediction attributions into clear, global insights into feature importance. SHAP not only ranks features but also shows the direction and magnitude of their impact, allowing for a deeper analysis of the model’s decision-making process and the key parameters driving network performance [33].
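The game-theoretic idea behind SHAP can be illustrated by computing exact Shapley values for a tiny model. This is a sketch of the underlying definition, not of the SHAP library or of the analysis in this paper: the toy `predict` function is hypothetical, and "absent" features are replaced by a single baseline point, which is a simplifying assumption. Exact enumeration is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x, relative to a baseline input."""
    n = len(x)

    def value(subset):
        # Features in `subset` take their value from x, the rest from baseline.
        z = [x[j] if j in subset else baseline[j] for j in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def predict(z):
    """Hypothetical toy model with an interaction term."""
    return 2.0 * z[0] + z[0] * z[1]


x, baseline = [3.0, 4.0], [0.0, 0.0]
phi = shapley_values(predict, x, baseline)
```

The contributions sum to the difference between the prediction at `x` and the prediction at the baseline, which is the additivity property that makes the attributions directly comparable across features.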

3. Results

3.1. GNU Radio GUI Panel—Simulation Controls

3.2. Dataset and Target Variable

3.3. Model Performance Comparison

3.3.1. Analysis of the Best Model: XGBoost

3.3.2. Model Interpretation with SHAP

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Saad, W.; Bennis, M.; Chen, M. A vision of 6G wireless systems: Applications, trends, technologies, and challenges. IEEE Netw. 2019, 34, 134–142.

2. Zhang, Z.; Xiao, Y.; Ma, Z.; Xiao, M.; Ding, Z.; Lei, X.; Karagiannidis, G.K.; Fan, P. 6G wireless networks: Vision, requirements, architecture, and key technologies. IEEE Veh. Technol. Mag. 2019, 14, 28–41.

3. Tariq, F.; Khandaker, M.R.A.; Wong, K.K.; Imran, M.A.; Bennis, M.; Debbah, M. A speculative study on 6G. IEEE Wirel. Commun. 2020, 27, 118–125.

4. Strinati, E.C.; Barbarossa, S.; Gonzalez-Jimenez, J.L.; Ktenas, D.; Cassiau, N.; Maret, L.; Dehos, C. 6G: The next frontier: From holographic messaging to artificial intelligence using subterahertz and visible light communication. IEEE Veh. Technol. Mag. 2019, 14, 42–50.

5. Shafin, R.; Liu, L.; Chandrasekhar, V.; Chen, H.; Reed, J.; Zhang, J.C. Artificial intelligence-enabled cellular networks: A critical path to beyond-5G and 6G. IEEE Wirel. Commun. 2020, 27, 212–217.

6. Kato, N.; Fadlullah, Z.M.; Tang, F.; Mao, B.; Tani, S.; Okamura, A.; Liu, J. Optimizing space-air-ground integrated networks by artificial intelligence. IEEE Wirel. Commun. 2019, 26, 140–147.

7. Morocho-Cayamcela, M.E.; Lim, W. Artificial intelligence in 5G technology: A survey. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 16–18 October 2019; IEEE: Washington, DC, USA; pp. 560–565.

8. Sun, Y.; Peng, M.; Mao, S. Deep reinforcement learning for intelligent resource management in 5G and beyond. IEEE Wirel. Commun. 2019, 26, 8–14.

9. Cheng, N.F.; Pamuklu, T.; Erol-Kantarci, M. Reinforcement learning based resource allocation for network slices in O-RAN midhaul. In Proceedings of the 2023 IEEE 20th Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 10–13 January 2025; IEEE: Washington, DC, USA; pp. 678–683.

10. Gao, Z. 5G traffic prediction based on deep learning. Comput. Intell. Neurosci. 2022, 2022, 3174530.

11. Sinha, A.; Agrawal, A.; Roy, S.; Uduthalapally, V.; Das, D.; Mahapatra, R.; Shetty, S. AnDet: ML-Based Anomaly Detection of UEs in a Multi-Cell B5G Mobile Network for Improved QoS. In Proceedings of the 2024 International Conference on Computing, Networking and Communications (ICNC), Big Island, HI, USA, 19–22 February 2024; pp. 500–505.

12. Bega, D.; Gramaglia, M.; Banchs, A.; Sciancalepore, V.; Costa-Perez, X. A deep learning approach to 5G network slicing resource management. IEEE Trans. Mob. Comput. 2020, 20, 3056–3069.

13. Khan, L.U.; Saad, W.; Han, Z.; Hossain, E.; Hong, C.S. Federated learning for internet of things: Recent advances, taxonomy, and open challenges. IEEE Commun. Surv. Tutor. 2021, 23, 1759–1799.

14. Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated learning: Challenges, methods, and future directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

15. Chen, T.; Yu, J.; Minakhmetov, A.; Gutterman, C.; Sherman, M.; Zhu, S.; Santaniello, S.; Biswas, A.; Seskar, I.; Zussman, G.; et al. A software-defined programmable testbed for beyond 5G optical-wireless experimentation at city-scale. IEEE Netw. 2022, 36, 108–115.

16. Nahum, C.V.; Pinto, L.D.N.M.; Tavares, V.B.; Batista, P.; Lins, S.; Linder, N.; Klautau, A. Testbed for 5G connected artificial intelligence on virtualized networks. IEEE Access 2020, 8, 223202–223213.

17. Alsamhi, S.H.; Ma, O.; Ansari, M.S.; Almalki, F.A. Survey on Collaborative Smart Drones and Internet of Things for Improving Smartness of Smart Cities. IEEE Access 2019, 7, 128125–128152.

18. Fourati, H.; Maaloul, R.; Chaari, L. A Survey of 5G Network Systems: Challenges and Machine Learning Approaches. Int. J. Mach. Learn. Cyber. 2020, 12, 385–431.

19. Zhang, C.; Patras, P.; Haddadi, H. Deep Learning in Mobile and Wireless Networking: A Survey. IEEE Commun. Surv. Tutor. 2019, 21, 2224–2287.

20. Usama, M.; Ahmad, R.; Qadir, J. Examining machine learning for 5G and beyond through an adversarial lens. IEEE Netw. 2021, 35, 188–195.

21. Polaganga, R.K.; Liang, Q. Extending Causal Discovery to Live 5G NR Network With Novel Proportional Fair Scheduler Enhancements. IEEE Internet Things J. 2024, 12, 288–296.

22. Pearl, J. The seven tools of causal inference, with reflections on machine learning. Commun. ACM 2019, 62, 54–60.

23. Glymour, C.; Zhang, K.; Spirtes, P. Review of causal discovery methods based on graphical models. Front. Genet. 2019, 10, 524.

24. Shah, R.D.; Peters, J. The hardness of conditional independence testing and the generalized covariance measure. Ann. Stat. 2020, 48, 1514–1538.

25. Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI—Explainable artificial intelligence. Sci. Robot. 2019, 4, eaay7120.

26. Arya, L.; Raju, E.S.; Santosh, M.V.S.; MPJ, S.K.; Rastogi, R.; Elloumi, M.; Arumugam, T.; Namasivayam, B. Explainable Artificial Intelligence (XAI) for Ethical and Trustworthy Decision-Making in 6G Networks. In 6G Networks and AI-Driven Cybersecurity; IGI Global Scientific Publishing: Palmdale, PA, USA, 2025; pp. 217–250.

27. Montgomery, D.C.; Peck, E.A.; Vining, G.G. Introduction to Linear Regression Analysis, 6th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2021.

28. Mienye, I.D.; Jere, N. A Survey of Decision Trees: Concepts, Algorithms, and Applications. IEEE Access 2024, 12, 86716–86727.

29. Schonlau, M.; Zou, R.Y. The Random Forest Algorithm for Statistical Learning. Stata J. Promot. Commun. Stat. Stata 2020, 20, 3–29.

30. Bentéjac, C.; Csörgő, A.; Martínez-Muñoz, G. A Comparative Analysis of Gradient Boosting Algorithms. Artif. Intell. Rev. 2020, 54, 1937–1967.

31. Ogunleye, A.; Wang, Q.-G. XGBoost Model for Chronic Kidney Disease Diagnosis. IEEE/ACM Trans. Comput. Biol. Bioinf. 2020, 17, 2131–2140.

32. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Müller, A.; Nothman, J.; Louppe, G.; et al. Scikit-Learn: Machine Learning in Python. arXiv 2012.

33. Lundberg, S.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems; NIPS: Cambridge, MA, USA, 2017.

| Column Name | Description |
|---|---|
| mcs_dl | Downlink Modulation and Coding Scheme (MCS) index. |
| brate_dl | Downlink bitrate (download speed from the network). |
| ok_dl | Count of successfully received download packets. |
| dl_bs | The volume of data queued for download. |
| brate_ul | Uplink bitrate (upload speed to the network). |
| ok_ul | Count of successfully transmitted upload packets. |
| ta | Timing advance, which synchronizes a device’s signal with the cell tower. |
| dl_ul_ratio | The ratio of downlink to uplink bitrate (brate_dl/brate_ul). |
| mcs_ta_interaction | The product of mcs_dl and ta (mcs_dl * ta). |

| Model | MAE | RMSE | R² | R² (3-Fold CV Mean) |
|---|---|---|---|---|
| XGBoost | 37.375 | 83.309 | 0.998 | 0.996 |
| Gradient Boosting | 45.166 | 91.437 | 0.997 | 0.995 |
| Random Forest | 150.927 | 236.735 | 0.981 | 0.978 |
| Decision Tree | 179.224 | 352.247 | 0.959 | 0.967 |
| Linear Regression | 417.181 | 626.936 | 0.869 | 0.880 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nurakhov, Y.; Mukhanbet, A.; Aibagarov, S.; Imankulov, T. A Machine Learning Approach to Investigating Key Performance Factors in 5G Standalone Networks. Electronics 2025, 14, 3817. https://doi.org/10.3390/electronics14193817