1. Introduction

Single-phase ground (SPG) faults are the most common type of failure in the operation and maintenance of distribution networks. This is particularly evident in the systems widely deployed in China, where the three-phase neutral point is either ungrounded or grounded through an arc suppression coil. The incidence rate of SPG faults can exceed 80% [1,2,3]. If these faults are not identified promptly and accurately, and if the faulty line is not isolated in time, they may escalate into more severe events such as phase-to-phase short circuits, equipment damage, or even large-scale blackouts, posing serious threats to power supply security and system stability [4].

The causes of SPG faults are complex and varied, involving both external and internal factors. External causes include lightning arrester breakdown, tree branch contact, line-to-crossarm discharge, and interference from small animals or vegetation [5]. Internal causes are mainly equipment failures and construction defects, such as cable insulation damage, insulator breakdown, and flaws in manufacturing or installation. These fault types differ in their manifestations, yet their waveforms may share overlapping characteristics. Under high-resistance grounding and system asymmetry, fault signals are often low in amplitude, short in duration, and spread over a wide frequency range, which makes faulty-line identification considerably more difficult. Traditional fault identification approaches rely mainly on features extracted from transient or steady-state electrical quantities, with decisions made by heuristic rules or machine learning models. In small-current grounding systems, however, fault transients are easily weakened by factors such as high transition resistance and small fault inception angles. This reduces the discriminative power of the extracted features and results in relatively low accuracy for traditional line selection algorithms [6].

To improve fault identification performance, various feature extraction and classification methods have been proposed. For instance, Li et al. introduced the improved Hilbert–Huang transform-random forest (IHHT-RF) method, which combines transient feature extraction with random forest classification. Although the method is robust and adaptable, its dependence on fixed feature engineering limits its scalability in complex scenarios [7]. Liang et al. developed a deep belief network (DBN) optimized using phasor measurement unit-based network parameter configuration, enabling accurate and efficient SPG fault identification [8]. Gao et al. proposed a semantic segmentation-based method for single-phase grounding fault detection and identification, which was experimentally shown to accurately determine both the fault occurrence time and the fault type [9]. Wang et al. developed a multi-criteria fusion-based fault location method using Dempster–Shafer evidence theory, which is unaffected by conductor sag, galloping, or data window length and remains effective under noise interference and high-impedance fault conditions [10]. Recent studies have further advanced fault detection strategies. Bayati et al. demonstrated that an SVM can effectively locate high-impedance faults in DC microgrid clusters under varying grounding resistances without relying on communication links [11]. Aiswarya et al. designed a two-level adaptive SVM scheme for microgrids with renewable integration and EV loads and verified its accuracy through hardware-in-the-loop experiments [12]. While these works confirm the feasibility of SVMs in various DC and microgrid contexts, their applicability remains limited by handcrafted features and scenario-specific assumptions. In contrast, this study targets 10 kV AC distribution networks with ungrounded or arc-suppression-coil grounding and explores an interpretable, feature-selection-driven modeling approach validated on both simulated and measured data, with the aim of achieving greater robustness and scalability than existing SVM-based methods. Gao et al. developed a high-impedance fault (HIF) detection method that integrates the empirical wavelet transform (EWT) with differential fault energy analysis. By using permutation entropy and permutation variance as discriminative features, the method effectively distinguishes HIFs from normal disturbances, and experimental results confirm its adaptability and robustness in microgrids and in systems with distributed energy resource integration [13]. Wan et al. proposed a line selection method based on transfer learning with the ResNet-50 architecture. By extracting features from zero-sequence voltage and current waveforms at the fault initiation moment, the method accurately identifies single-phase grounding faults, achieving a validation accuracy of 99.77% even under noisy conditions, which indicates its reliability in complex scenarios [14]. Yang et al. developed a fault direction identification method based on local voltage information that is suitable for small-current grounding systems and unaffected by distributed generation or V/V-connected potential transformers, thereby enhancing protection reliability [15]. Su et al. developed a single-phase earth fault identification model based on the AdaBoost algorithm and validated its effectiveness and adaptability in identifying such faults [16]. Zhang et al. combined improved variational mode decomposition with ConvNeXt to obtain a high-accuracy line selection method under noisy conditions [17]. Li et al. introduced a method based on median complementary ensemble empirical mode decomposition and multiscale permutation entropy (MCEEMD-MPE) normalization with k-means clustering; by extracting key zero-sequence current components across multiple scales, it achieved 100% line identification accuracy in field tests [18]. Luo et al. designed a high-resistance fault detection approach using an improved stacked denoising autoencoder, enhancing both feature representation and model robustness [19]. Beyond SVMs, hybrid and deep-learning approaches have achieved further progress. Jalli et al. combined adaptive variational mode decomposition with a deep randomized network to achieve accurate fault classification in DC microgrids [20]. Guo et al. introduced a Swin-Transformer that leverages synthesized zero-sequence signals to enable threshold-free SPG fault location in 10 kV distribution systems [21]. Zhao et al. integrated wavelet threshold filtering with graph neural networks, enhancing voltage transformer fault diagnosis under noisy operating conditions [22]. Collectively, these studies highlight the effectiveness of coupling deep models with advanced signal processing techniques to improve robustness and generalization in complex power system environments.

Hybrid models have also gained attention. Alhanaf et al. developed a convolutional neural network-long short-term memory (CNN-LSTM) framework that captures both spatial and temporal features, improving fault identification accuracy in complex systems [23]. Alaca et al. proposed a two-stage CNN architecture integrating time- and frequency-domain features, enabling accurate classification of various fault types, including SPG faults [24]. Gu et al. constructed a real-time grounding fault location method for auxiliary power supply systems by combining fault mechanism modeling with waveform-based statistical feature extraction and demonstrated its practical effectiveness on embedded platforms [25]. Wu et al. proposed a fault type identification method for AC/DC hybrid transmission lines based on a least-squares support vector machine optimized by a whale optimization algorithm with orthogonal experiment design (OED-WOALSSVM), and validated its feasibility and identification accuracy [26]. Guo et al. also presented a threshold-free line selection algorithm based on the Swin-Transformer, which synthesizes zero-sequence voltage and current signal images and uses the Fourier transform and image segmentation to achieve high-precision fault localization under diverse conditions [21]. Reinforcement learning, deep temporal convolutional networks, and dual-branch CNN frameworks have also been explored. Zhang et al. employed temporal convolutional networks (TCNs) for intelligent distribution system diagnosis; the model effectively captured temporal dependencies, but its limited interpretability restricts practical adoption [27]. Teimourzadeh et al. proposed a CNN-LSTM method for high-impedance SPG fault diagnosis in transmission lines that achieved high accuracy under varying resistances but requires large amounts of training data and lacks interpretability [28]. Fahim et al. proposed a capsule network with sparse filtering (CNSF) for transmission line fault detection, which is robust against noise and parameter variations but relies heavily on complex preprocessing and data transformation [29]. Gao et al. recently designed a Dual-Branch CNN with feature fusion for SPG fault section location in resonant networks, which yields high accuracy in both simulations and field tests but faces scalability challenges when applied to heterogeneous datasets [30]. Despite continuous improvements in the above methods, several common challenges remain: (1) strong reliance on handcrafted features and complex signal preprocessing, which makes it difficult for different network architectures to adapt consistently to diverse input formats; (2) poor model interpretability, which hinders a clear understanding of how key features influence fault line selection; and (3) the insufficient capacity of individual models to extract deep features, particularly under multi-source data fusion and complex fault scenarios, which often results in classification ambiguity. Cross-domain advances provide valuable insights for developing interpretable and adaptive fault detection methods. Yao et al. designed a parallel multiscale convolutional transfer network (PMCTNN) for bearing fault diagnosis that balances efficiency and accuracy through transfer learning [31]. Similarly, Chen et al. proposed a dual-scale complementary spatial–spectral joint model (DSCSM) for hyperspectral image classification, in which multi-scale truncated filtering and spatial probability optimization enhance robustness under small-sample conditions [32]. Beyond spatial–spectral fusion, Guo et al. introduced a multi-modal situation awareness framework for real-time air traffic control that integrates speech and trajectory data for intent understanding and prediction, and further developed a contextual knowledge-enhanced ASR model (CATCNet) that leverages domain-specific prior knowledge to improve named-entity recognition accuracy in noisy environments [33,34]. These studies collectively highlight the advantages of multi-scale fusion, attention mechanisms, and multi-modal context-aware designs, offering inspiration for SPG fault analysis, where diverse signals and prior knowledge can be exploited jointly.

In parallel, optimization-driven strategies have continued to evolve. Ran et al. proposed a fuzzy-system-based genetic algorithm that adaptively segments GPS trajectories, demonstrating how evolutionary operators can improve stability and continuity [35]. Deng et al. developed a multi-strategy quantum differential evolution framework with hybrid local search that effectively mitigates premature convergence in large-scale routing optimization [36]. Building on these advances, Li et al. introduced a cross-domain adaptation framework combining maximum classifier discrepancy with deep feature alignment (MCDDFA), which significantly improved diagnostic accuracy under variable operating conditions [37]. Together, these approaches underscore the importance of balancing global exploration with local refinement and of incorporating cross-domain and multi-modal information, paving the way for more adaptive and interpretable SPG fault detection models in AC distribution networks.

In response to these problems, this paper proposes an SPG fault line selection method for three-phase systems based on the Tabular Data Neural Network (TabNet) model. The proposed method demonstrates strong robustness and practicality across diverse fault types and grounding resistances, addressing the high complexity of TCNs [27], the poor interpretability of CNN-LSTM methods [28], the preprocessing dependency of CNSF [29], and the scalability bottleneck of Dual-Branch CNNs [30]. Compared with traditional neural networks or tree-based models, TabNet maintains training efficiency while offering stronger interpretability and robustness, making it particularly suitable for jointly modeling waveform features and physical parameters in three-phase power systems. The main contributions of this study are as follows: (1) a structured feature set is constructed that integrates zero-sequence components, voltage and current statistical features, and physical parameters such as impedance and conductor type; (2) TabNet is introduced as the fault line classifier and is combined with feature selection by an L2-norm-based linear model, in which the L2 norm [38,39,40] associated with each feature is calculated and ranked to further improve recognition accuracy and interpretability; (3) simulated and measured data are combined to build the model, which is compared with recently proposed advanced approaches, including TCN, CNN-LSTM, CNSF, and Dual-Branch CNN. The experimental results show that the proposed method is robust and practical under various fault types and grounding resistance levels, providing effective support for engineering applications.

2. Dataset Construction

2.1. Development and Configuration of the SPG Fault Simulation Model

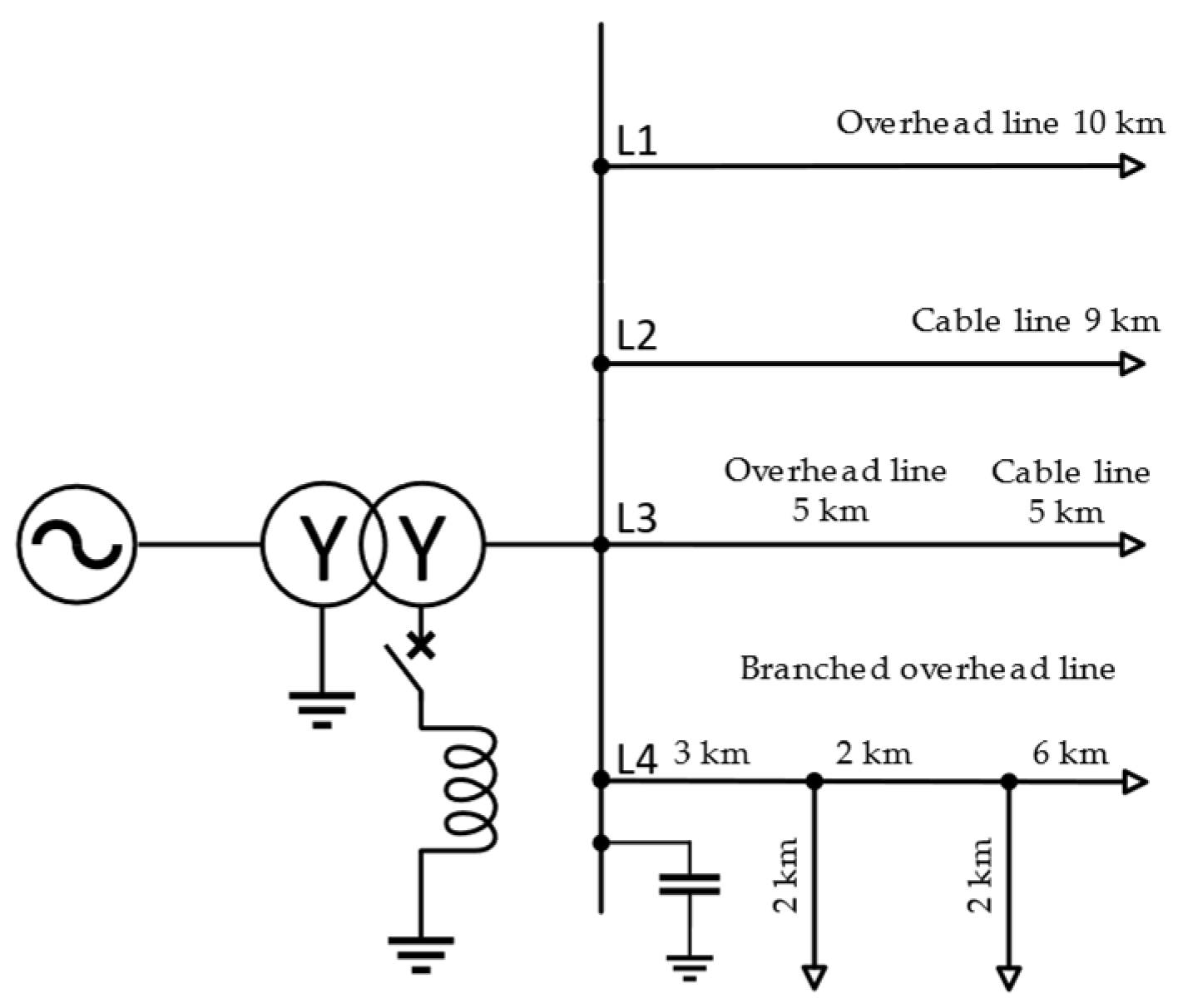

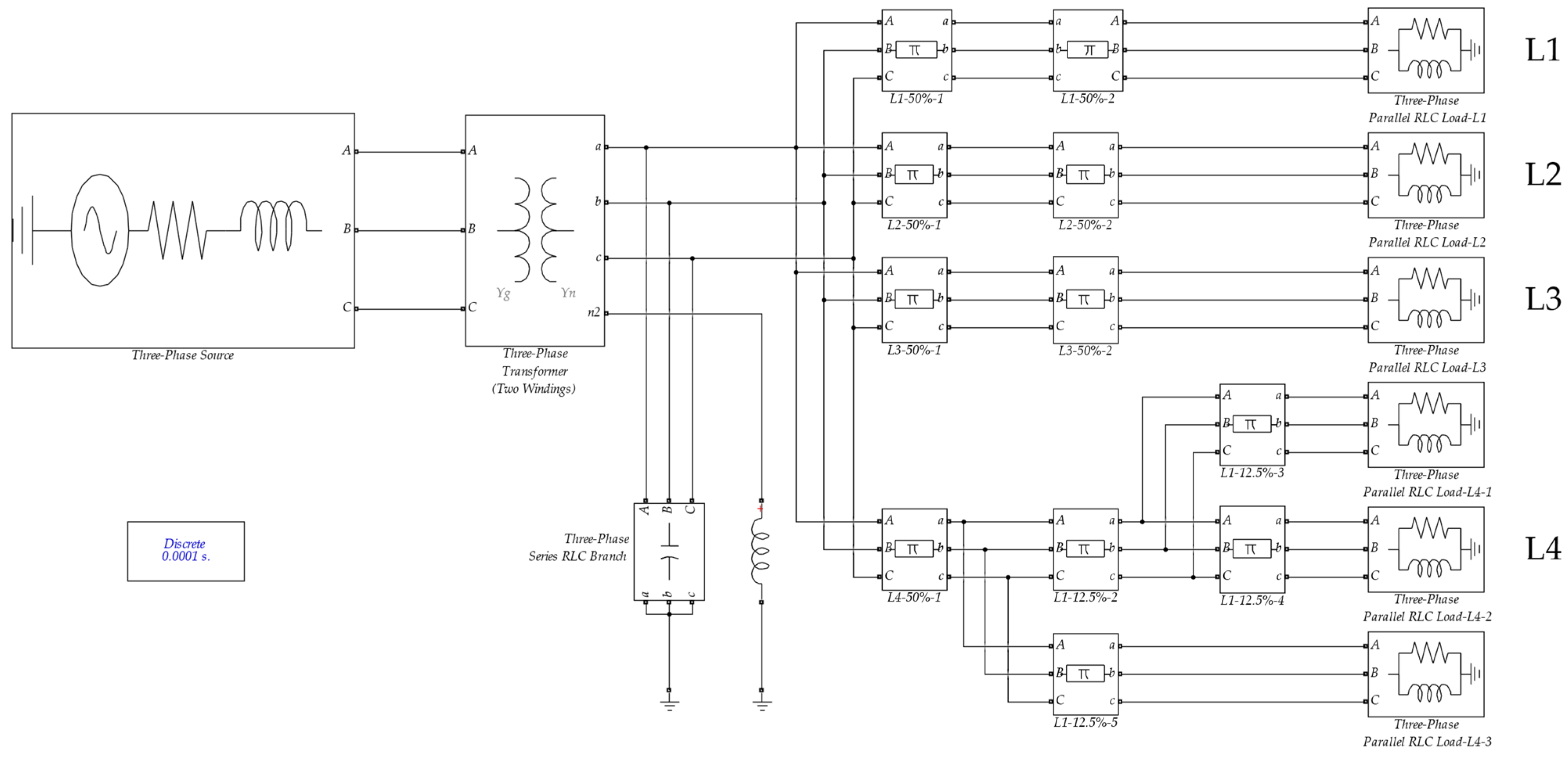

To investigate the transient characteristics of SPG faults in distribution networks, a 10 kV distribution system simulation model is developed in MATLAB/Simulink R2021b (The MathWorks, Natick, MA, USA). The model structure is inspired by EMTP-based configurations reported in the literature and comprises a 110/10.5 kV transformer, four feeder branches, and representative loads. For simulation and parameter control, three equivalent transmission lines with different positive- and zero-sequence impedances are implemented on the 10 kV bus side. Parallel RLC loads are connected at the terminal of each line to emulate the characteristics of typical industrial and commercial users. The schematic diagram of the SPG fault model is shown in Figure 1, which illustrates the feeder topology and grounding resistance configurations. In branch L1, the Simulink “Three-Phase Parallel RLC Load” block is used with a grounded wye (Y) connection. The rated phase-to-phase voltage is 10 kV, the active power is 249 kW, and the inductive reactive power is 15.7 kvar. The system operates at a nominal frequency of 50 Hz. These configurations are implemented in Figure 2, which presents the Simulink implementation of the system model. Line lengths and electrical parameters, such as inductive reactance and susceptance, are assigned based on models from previous studies.
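As a worked check of the branch L1 load settings, the equivalent per-phase elements of a balanced grounded-wye parallel RL load can be derived from its nominal ratings. The sketch below assumes that 10 kV is the phase-to-phase rating (as stated for branch L1 in Section 2.1.1) and that 249 kW and 15.7 kvar are three-phase totals; with per-phase voltage $V_{LL}/\sqrt{3}$ and per-phase power $P/3$, this gives $R = V_{LL}^2/P$ and $L = V_{LL}^2/(2\pi f Q_L)$. The function name is illustrative and not part of Simulink.

```python
import math


def wye_parallel_rl(v_ll: float, p_total: float, q_l_total: float, f: float):
    """Per-phase R (ohm) and L (H) of a balanced grounded-wye parallel RL load.

    Assumes v_ll is the line-to-line RMS voltage and the powers are three-phase totals.
    """
    v_phase = v_ll / math.sqrt(3)            # wye: phase voltage = line voltage / sqrt(3)
    r = v_phase ** 2 / (p_total / 3)         # per-phase resistance from per-phase P
    x_l = v_phase ** 2 / (q_l_total / 3)     # per-phase inductive reactance from per-phase Q
    l = x_l / (2 * math.pi * f)              # reactance -> inductance at frequency f
    return r, l


# Branch L1 ratings from the text: 10 kV, 249 kW, 15.7 kvar, 50 Hz
R, L = wye_parallel_rl(10e3, 249e3, 15.7e3, 50.0)
print(f"R = {R:.1f} ohm, L = {L:.2f} H")
```

Under these assumptions the load corresponds to roughly R ≈ 401.6 Ω and L ≈ 20.27 H per phase; the large inductance simply reflects the small reactive share of the load.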

2.1.1. Fault and Load Condition Configuration

To evaluate the model’s capability to capture transient characteristics, the simulation is configured with a fault initiation time of 0.005 s, a fault duration of 0.005 s, a total simulation time of 0.02 s, and a sampling frequency of 10 kHz. SPG faults are introduced at locations along feeder L1, including a point 5 km from the bus, under two grounding resistance levels (10 Ω and 50 Ω) to simulate different fault intensities. The impact of the load access ratio on the transient response is also considered, with representative load conditions of 20%, 50%, and 80% at the end of each feeder. The system’s zero-sequence current is monitored under each condition to analyze its transient behavior across the fault and loading scenarios. Symmetrical three-phase parallel RLC loads with a grounded Y connection are used at each feeder endpoint to emulate realistic industrial and commercial loads. For example, on branch L1 the phase-to-phase voltage is 10 kV and the system frequency is 50 Hz; the load has resistive and inductive components only, with no capacitive component. Simulink measurement blocks extract branch voltage and current signals to support feature extraction and transient response analysis. These settings reflect the steady-state power characteristics of real-world distribution networks, ensuring the engineering relevance and fidelity of the simulation.
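The timing settings above fix the size of each recorded window. A short sketch of the arithmetic: at 10 kHz, a 0.02 s run yields 200 samples per channel, of which samples 50 to 99 fall inside the 0.005 s fault interval, and crossing the two grounding resistances with the three load ratios gives six operating conditions. The variable and function names are illustrative only.

```python
from itertools import product

FS_HZ = 10_000              # sampling frequency (10 kHz)
T_TOTAL_S = 0.02            # total simulated time
T_FAULT_START_S = 0.005     # fault initiation time
T_FAULT_DURATION_S = 0.005  # fault duration


def to_sample(t_s: float, fs_hz: int = FS_HZ) -> int:
    """Convert a time in seconds to the nearest sample index."""
    return round(t_s * fs_hz)


n_samples = to_sample(T_TOTAL_S)                               # samples per channel
fault_start = to_sample(T_FAULT_START_S)                       # first faulted sample
fault_end = to_sample(T_FAULT_START_S + T_FAULT_DURATION_S)    # first post-fault sample

# Scenario grid from the text: two grounding resistances x three load access ratios
resistances_ohm = [10, 50]
load_ratios = [0.2, 0.5, 0.8]
scenarios = list(product(resistances_ohm, load_ratios))

print(n_samples, (fault_start, fault_end), len(scenarios))
```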

2.1.2. Data Preparation and Description

The dataset for model training and evaluation is generated with the simulation setup described above. Each sample contains labeled voltage and current signals collected under various fault scenarios, covering different grounding resistances (10 Ω and 50 Ω), fault locations, and load access ratios (20%, 50%, and 80%). The choice of 10 Ω and 50 Ω as the minimum and maximum grounding resistances reflects the contrast between low-impedance faults, which produce higher fault currents and stronger transients, and high-impedance faults, which produce weaker currents and are more difficult to detect. Likewise, varying the fault location enables analysis of how the electrical distance from the source influences signal attenuation and distortion, ensuring coverage of both near-bus and remote scenarios [41]. Feeder characteristics, including impedance variations and branch-specific loads, are also considered to capture a wide range of operating conditions. The collected signals are preprocessed by removing baseline drift, normalizing amplitudes, and segmenting them into uniform time windows. Each segment is labeled with its fault type and location, making the dataset suitable for supervised learning.

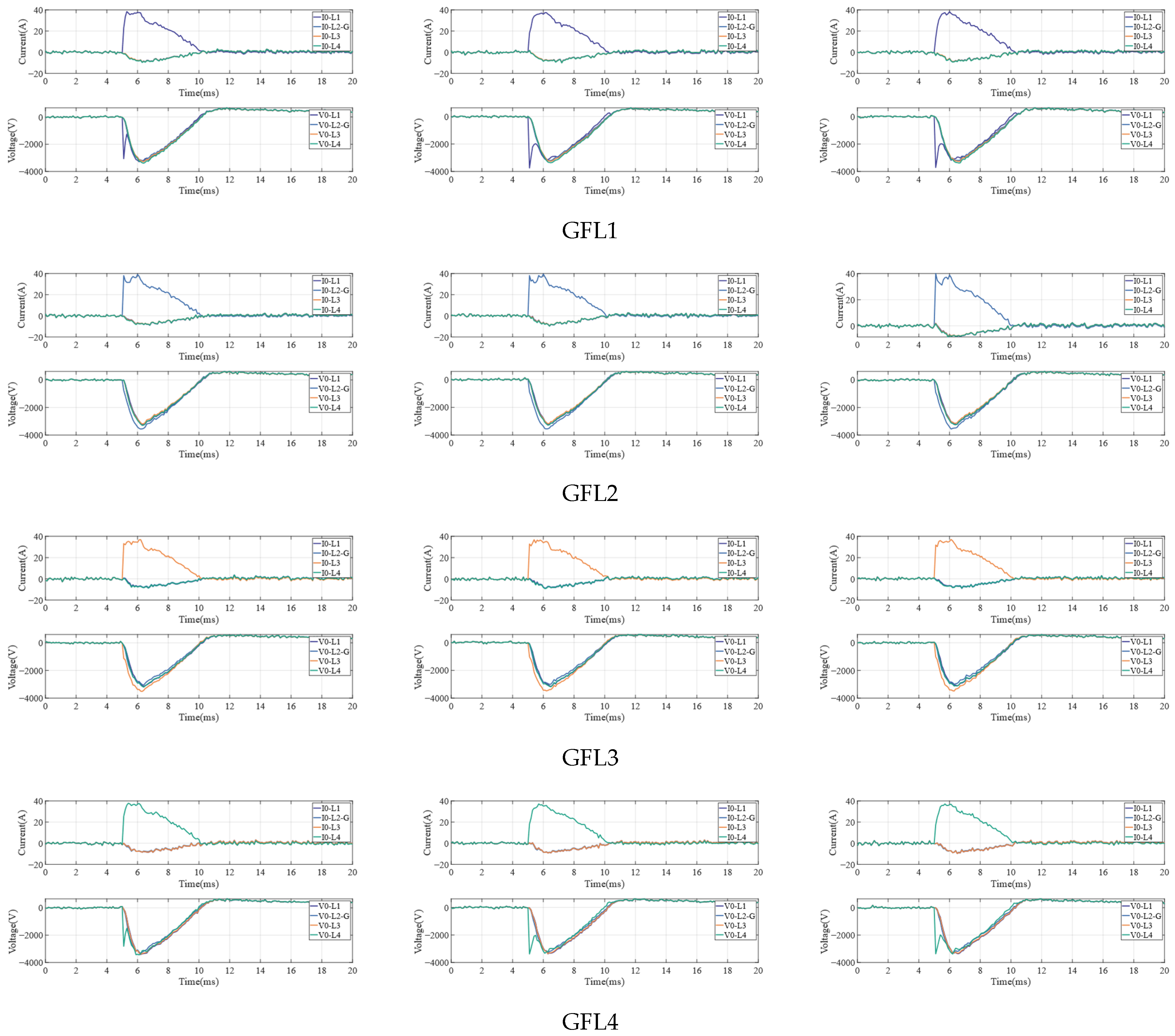

A representative sample from the dataset is shown in Figure 3, which illustrates typical zero-sequence current waveforms under various single-phase grounding fault conditions. Each waveform corresponds to a distinct simulation configuration, characterized by its fault location, grounding resistance, and load access ratio. Specifically, the samples labeled GFL1 to GFL4 correspond to scenarios in which ground faults are applied to different feeder lines (L1 to L4): the label “GFLx” denotes a Ground Fault on Line x, where the numeric suffix (1–4) identifies the line on which the fault is introduced. This subset demonstrates the diversity of faulted-line scenarios and the comprehensiveness of the simulation configurations. The dataset has been systematically constructed to support data-driven analysis of transient characteristics as well as fault identification, localization, and classification tasks.

2.2. Feature Engineering

In the detection of SPG faults in three-phase four-wire systems (phases A, B, and C plus the neutral point), accurate and efficient judgment relies on collecting, fusing, and analyzing multiple current and voltage features from each phase. When an SPG fault occurs, many current and voltage features change, including time-domain features such as the mean, standard deviation, maximum/minimum values, peak-to-peak value, skewness, and mean absolute deviation; frequency-domain features such as the spectral centroid, spectral entropy, spectral bandwidth, spectral skewness, and spectral peak value; wavelet features such as wavelet entropy, the energy of each wavelet decomposition level, and wavelet packet node energy; and waveform shape features such as main peak width, number of peaks, average peak amplitude, and maximum peak amplitude. For single-phase fault detection, voltage and current features must be extracted from each line of the three-phase four-wire system as the main basis for fault assessment, despite the high computational cost involved. In this study, the multi-dimensional features used to identify SPG faults in three-phase four-wire systems are weighted and fused into a feature table for clear data presentation. The TabNet model then processes the data in this feature table to determine the location and severity of the SPG fault.
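A minimal sketch of how several of the listed descriptors can be computed for a single channel with NumPy and SciPy. The exact definitions used in this study are not given, so the following are assumptions: kurtosis uses SciPy's Fisher (excess) definition, the spectral features are computed from the normalized one-sided power spectrum, spectral entropy is scaled to [0, 1], and a hand-written orthonormal Haar transform stands in for whatever wavelet family was actually used. All function names are hypothetical.

```python
import numpy as np
from scipy.stats import kurtosis, skew


def time_domain_features(x):
    """Statistical descriptors of one waveform channel."""
    x = np.asarray(x, dtype=float)
    rms = np.sqrt(np.mean(x ** 2))
    q75, q25 = np.percentile(x, [75, 25])
    return {
        "mean": float(np.mean(x)),
        "std": float(np.std(x)),
        "max": float(np.max(x)),
        "min": float(np.min(x)),
        "ptp": float(np.max(x) - np.min(x)),              # peak-to-peak value
        "mad": float(np.mean(np.abs(x - np.mean(x)))),     # mean absolute deviation
        "iqr": float(q75 - q25),                           # interquartile range
        "skewness": float(skew(x)),
        "kurtosis": float(kurtosis(x)),                    # Fisher (excess) kurtosis
        "crest_factor": float(np.max(np.abs(x)) / rms) if rms > 0 else 0.0,
    }


def spectral_features(x, fs):
    """Centroid, bandwidth, normalized entropy and peak of the one-sided power spectrum."""
    x = np.asarray(x, dtype=float)
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    p = power / power.sum()                                # spectrum as a probability mass
    centroid = np.sum(freqs * p)
    bandwidth = np.sqrt(np.sum((freqs - centroid) ** 2 * p))
    nz = p[p > 0]
    entropy = -np.sum(nz * np.log2(nz)) / np.log2(p.size)  # scaled to [0, 1]
    return {
        "spectral_centroid": float(centroid),
        "spectral_bandwidth": float(bandwidth),
        "spectral_entropy": float(entropy),
        "spectral_peak": float(np.max(power)),
    }


def haar_level_energies(x, levels=3):
    """Detail energy per level of an orthonormal Haar DWT, then the final approximation energy."""
    approx = np.asarray(x, dtype=float)
    energies = []
    for _ in range(levels):
        if approx.size % 2:                                # pad odd lengths (breaks exact energy balance)
            approx = np.append(approx, approx[-1])
        even, odd = approx[0::2], approx[1::2]
        energies.append(float(np.sum(((even - odd) / np.sqrt(2)) ** 2)))
        approx = (even + odd) / np.sqrt(2)
    energies.append(float(np.sum(approx ** 2)))
    return energies


# Usage on a 500 Hz tone sampled at 10 kHz for 0.02 s (200 samples, 10 full cycles)
fs = 10_000
t = np.arange(200) / fs
x = np.sin(2 * np.pi * 500 * t)
td = time_domain_features(x)
sp = spectral_features(x, fs)
we = haar_level_energies(x, levels=3)
print(round(td["crest_factor"], 4), round(sp["spectral_centroid"], 1))
```

For a pure tone the crest factor comes out at √2 (about 1.4142) and the spectral centroid at 500 Hz, which is a quick sanity check before applying the functions to fault waveforms.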

Step 1. Twenty-four types of waveform data from eight channels (G1–G4 current and G1–G4 voltage) under six different fault conditions (grounding resistance of 10 Ω/50 Ω; fault positions of 20%, 50%, and 80%) are preprocessed by de-meaning and normalization, smoothing (Savitzky–Golay filtering), and main peak alignment.

Step 2. The L2-norm is employed to evaluate and rank the importance of multi-domain features in the classification of SPG faults. The top 20 features with the highest L2-norm values are selected as representative inputs to construct the final feature dataset.

Step 3. To further reduce computation and improve system performance, multimodal feature fusion is performed on the feature data. Specifically, the feature data of the 24 waveform types from eight channels under six different fault states are fused into a single channel.

Step 4. The TabNet model is used to process the data samples after multimodal feature fusion. For comparison, the same data are processed by Support Vector Machine (SVM), CNN, Long Short-Term Memory (LSTM), and Transformer models. The superiority of the TabNet model is demonstrated through five evaluation measures: accuracy, Cohen’s kappa, macro-F1, the classification report, and the confusion matrix.
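The three scalar metrics and the two diagnostic summaries named in Step 4 are all available in scikit-learn. The helper below is a sketch under the assumption that scikit-learn is used (the text does not name a library); `y_true` and `y_pred` are integer class labels, such as the 24 fault categories, and the function name is hypothetical.

```python
from sklearn.metrics import (accuracy_score, classification_report,
                             cohen_kappa_score, confusion_matrix, f1_score)


def evaluate(y_true, y_pred):
    """Accuracy, Cohen's kappa, macro-F1, confusion matrix and per-class report."""
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "kappa": cohen_kappa_score(y_true, y_pred),
        "macro_f1": f1_score(y_true, y_pred, average="macro"),  # unweighted mean of per-class F1
        "confusion_matrix": confusion_matrix(y_true, y_pred),   # rows = true, columns = predicted
        "report": classification_report(y_true, y_pred, zero_division=0),
    }


# Toy example with three classes
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
results = evaluate(y_true, y_pred)
print(results["report"])
```

For these toy labels the accuracy is 5/6 while kappa is 0.75, illustrating how kappa discounts the agreement expected by chance.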

2.2.1. Data Preprocessing

In SPG fault detection, data preprocessing is needed to remove noise, correct distortion, and normalize feature scales. These steps ensure that multi-channel signals are properly aligned and that the extracted features are more discriminative, with the ultimate goal of providing clean and consistent inputs that improve the robustness and accuracy of the model. At its core, preprocessing denoises the current and voltage waveforms, corrects their distortion, unifies data formats, and improves the purity and analyzability of the fault signals. Specifically, it suppresses high-frequency noise (e.g., by wavelet threshold denoising) to eliminate white noise and impulse interference, and it imputes missing values and corrects outliers to ensure waveform continuity and rectify distortion.

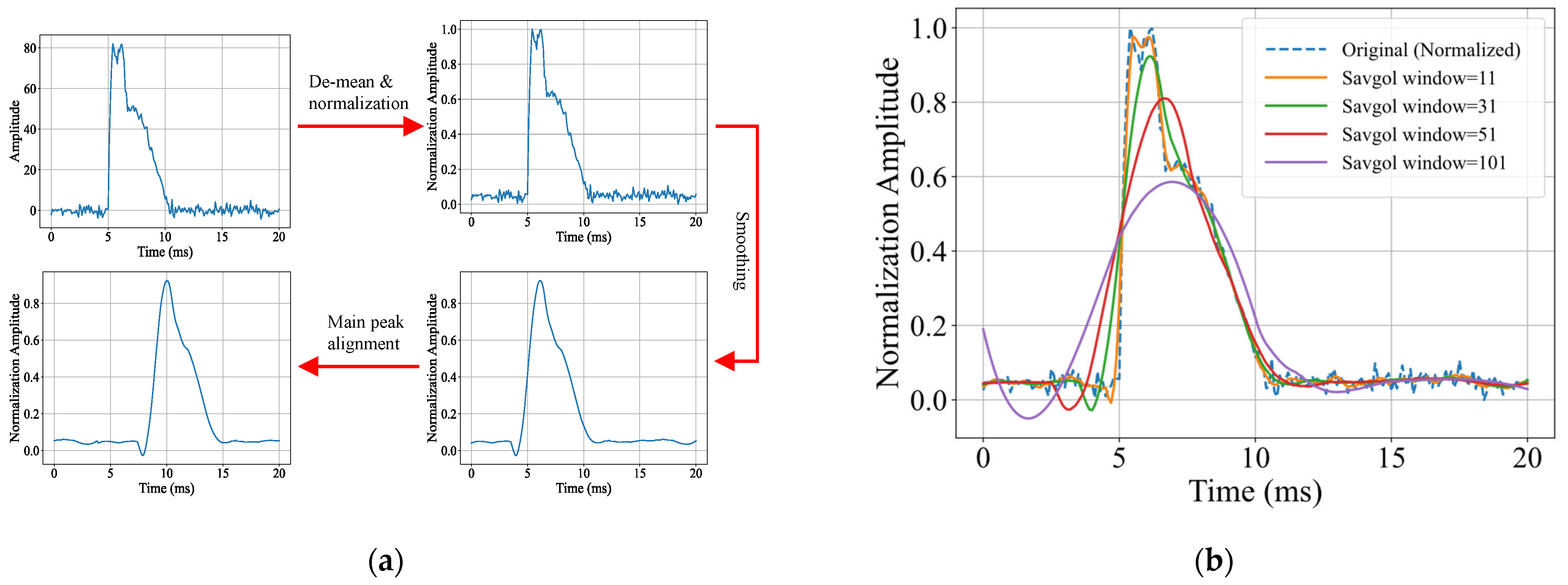

The original waveform signals are standardized and filtered, as shown in Figure 4a. De-meaning eliminates the DC offset of the signal, preventing baseline drift caused by CT/PT zero drift from distorting the feature values. Normalization, which is needed because fault currents in different lines vary significantly in magnitude, removes the influence of magnitude, unifies the amplitude scale, and improves model generalization. Smoothing is performed with a Savitzky–Golay filter. Compared with common methods such as moving-average filtering and wavelet denoising, it introduces no phase delay, causes minimal edge distortion, and is computationally efficient. It therefore preserves transient-pulse features (e.g., Max Peak, Impulse Factor), avoids distorting Peak Width, Bandwidth, and similar features through post-filtering time shifts, and effectively removes the random high-frequency oscillations (2–5 kHz ripple) that occur in arc grounding, as shown in Figure 4b. Main peak alignment unifies the fault initiation time, eliminating propagation delay differences (on the order of microseconds to milliseconds) among faults on different lines and ensuring that the feature extraction windows are not misaligned.
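The preprocessing chain described above (de-meaning, normalization, Savitzky–Golay smoothing, main peak alignment) can be sketched with NumPy and SciPy's `savgol_filter`. Several choices here are assumptions not given in the text: the window length (11 samples) and polynomial order (3), normalization by the peak absolute value, locating the main peak as the sample with the largest absolute amplitude, and zero-filling rather than wrapping samples that are shifted out of the window. The helper names are hypothetical.

```python
import numpy as np
from scipy.signal import savgol_filter


def preprocess(x, window_length=11, polyorder=3):
    """De-mean, peak-normalize, then Savitzky-Golay smooth one waveform."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                          # remove DC offset (e.g., CT/PT zero drift)
    peak = np.max(np.abs(x))
    if peak > 0:
        x = x / peak                          # unify amplitude scale to [-1, 1]
    return savgol_filter(x, window_length, polyorder)


def shift_with_zeros(x, k):
    """Shift a 1-D array by k samples (positive = later), zero-filling the gap."""
    out = np.zeros_like(x)
    if k > 0:
        out[k:] = x[:-k]
    elif k < 0:
        out[:k] = x[-k:]
    else:
        out[:] = x
    return out


def align_main_peak(channels, ref_index):
    """Shift each channel so that its largest |value| lands on ref_index."""
    aligned = [shift_with_zeros(x, ref_index - int(np.argmax(np.abs(x))))
               for x in channels]
    return np.vstack(aligned)


# Usage: two synthetic pulses whose main peaks differ by 20 samples
n = np.arange(200)
pulse_a = np.exp(-((n - 60) / 4.0) ** 2) + 0.3     # includes a DC offset of 0.3
pulse_b = 0.5 * np.exp(-((n - 80) / 4.0) ** 2)
clean = [preprocess(p) for p in (pulse_a, pulse_b)]
aligned = align_main_peak(clean, ref_index=100)
print(np.argmax(np.abs(aligned), axis=1))
```

Because the Savitzky–Golay filter is a symmetric polynomial fit, it leaves a symmetric peak in place, which is why alignment can safely follow smoothing in this ordering.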

2.2.2. Feature Extraction

In diagnosing SPG faults in three-phase four-wire systems, a fault event changes multiple multi-dimensional voltage and current features across the four lines. As summarized in Table 1, the extracted features span time-domain, frequency-domain, waveform, and wavelet perspectives, including the interquartile range (IQR), standard deviation (STD), mean absolute deviation (MAD), peak-to-peak value (PTP), wavelet packet node energy (WPN), and wavelet decomposition level energy (WE). These descriptors are closely aligned with SPG fault mechanisms: arc faults produce sharp transients that raise kurtosis and crest factor; unbalanced or capacitive grounding introduces waveform asymmetry reflected in skewness; the spectral centroid and entropy characterize the redistribution of spectral energy under high resistance; and wavelet-based measures emphasize localized transient responses. Collectively, these features capture both statistical properties and physical fault signatures, giving a comprehensive and interpretable representation of SPG fault characteristics. The multi-dimensional features are then fed into an L2-norm-based linear model, ranked by weight, and the 20 most important features for single-phase grounding fault identification are retained. This model quantifies feature importance through the L2 norm of its weight vector: the larger the absolute value of a feature’s weight, the greater that feature’s contribution to fault discrimination. The linear model is expressed as

$$y = \sum_{i=1}^{n} w_i x_i,$$

where $y$ is the fault state label (usually, $y = 0$ represents the normal state and $y = 1$ represents the fault state), $x_i$ is the $i$th feature (up to 20 features), and $w_i$ is the feature weight, which measures feature importance.

An L2-norm constraint is also imposed on the weights, i.e., L2 regularization (ridge regression):

$$\min_{\mathbf{w}} \sum_{k=1}^{N} \Big( y_k - \sum_{i=1}^{n} w_i x_{k,i} \Big)^2 + \lambda \lVert \mathbf{w} \rVert_2^2,$$

where $N$ is the number of training samples, $x_{k,i}$ is the value of the $i$th feature for the $k$th sample, $\mathbf{w} = [w_1, \dots, w_n]^{\top}$, and $\lambda$ is the regularization strength, which controls weight decay. The magnitude $|w_i|$ directly reflects the ability of the $i$th feature to discriminate faults. After the L2-norm-based linear model has ranked the features by importance, the top 20 features are selected, as shown in Figure 5. The fused dataset comprises 59 features extracted from time-domain, frequency-domain, wavelet-based, and statistical descriptors. To ensure a reliable feature representation, all features are ranked by their L2-norm values, which quantify each feature’s overall contribution across samples.
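A minimal sketch of this L2-norm-based ranking with scikit-learn’s `RidgeClassifier`, under two assumptions not stated in the text: features are standardized first so that weight magnitudes are comparable, and, because the task has many classes, a feature’s importance is taken as the L2 norm of its weights across the one-versus-rest class outputs (for a binary task this reduces to the absolute weight). The `alpha` argument plays the role of the regularization strength; its value is arbitrary here, and the synthetic data only illustrate the mechanics. The function name is hypothetical.

```python
import numpy as np
from sklearn.linear_model import RidgeClassifier
from sklearn.preprocessing import StandardScaler


def rank_features_l2(X, y, alpha=1.0):
    """Return feature indices sorted by the L2 norm of their ridge weights (descending)."""
    Xs = StandardScaler().fit_transform(X)          # put features on a common scale
    clf = RidgeClassifier(alpha=alpha).fit(Xs, y)   # alpha = regularization strength
    coef = np.atleast_2d(clf.coef_)                 # shape (n_classes or 1, n_features)
    importance = np.linalg.norm(coef, axis=0)       # per-feature L2 norm across classes
    order = np.argsort(importance)[::-1]
    return order, importance


# Synthetic example: 59 candidate features, only features 0 and 1 carry class information
rng = np.random.default_rng(0)
X = rng.normal(size=(600, 59))
y = np.digitize(X[:, 0] + X[:, 1], bins=[-0.7, 0.7])   # three classes
order, importance = rank_features_l2(X, y)
top20 = order[:20]
print(top20[:5])
```

Standardizing before fitting matters: without it, a feature measured in large units would receive a small weight regardless of its discriminative value.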

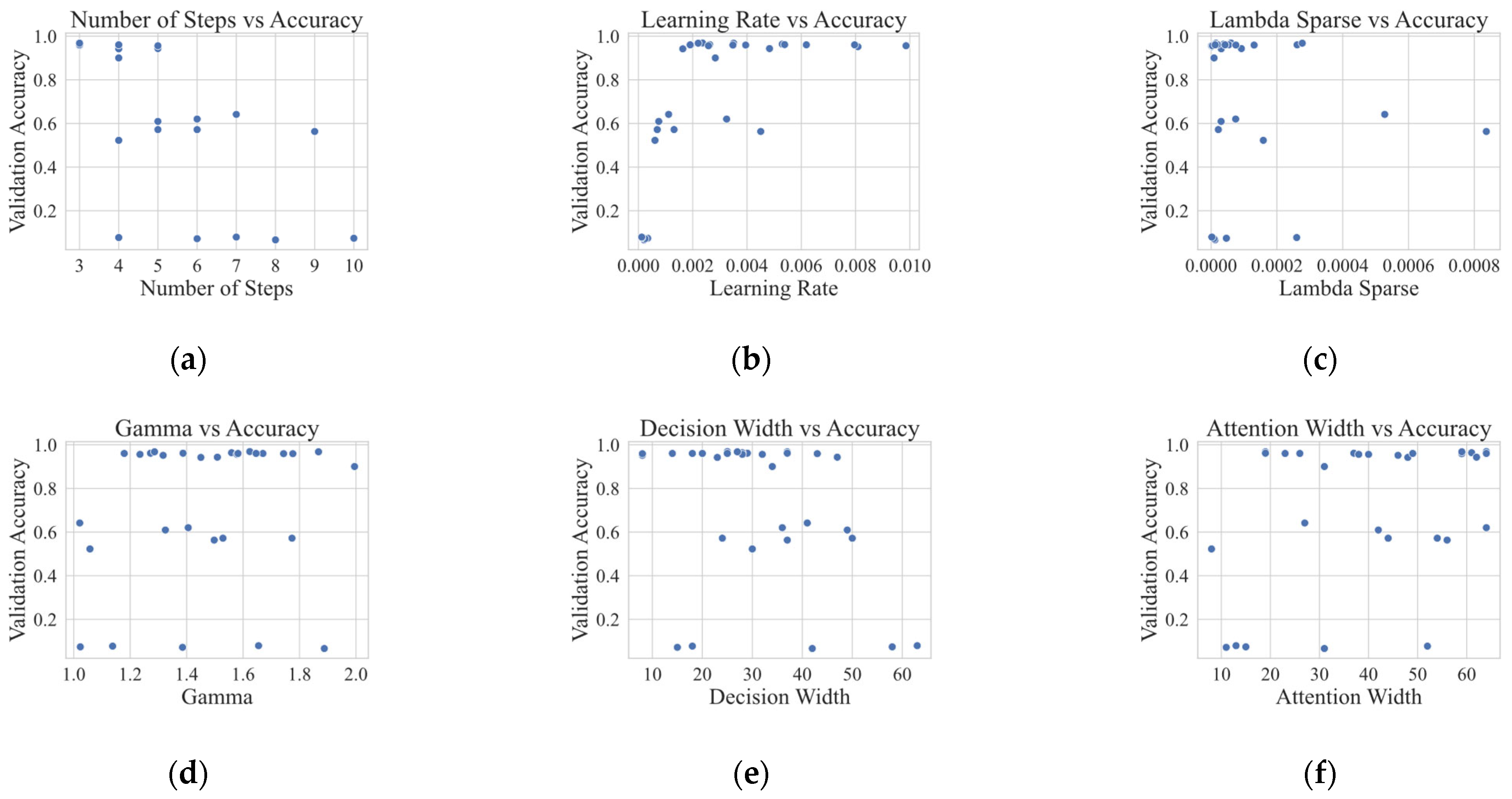

Figure 5a shows that model performance (accuracy, macro-F1, and kappa) improves steadily as the number of selected features increases but plateaus once 20–30 features are included. This indicates that features beyond this range contribute little new information and mainly introduce redundancy. The top 20 features are therefore selected, as they preserve sufficient discriminative information while keeping the feature set compact and interpretable.

The L2-norm values of the selected features are presented in Figure 5b; they measure the magnitude of each feature vector across all samples, and features with higher L2 norms are considered to carry more discriminative power for fault classification. The most important features include kurtosis, skewness, and IQR, highlighting their strong relevance in capturing signal shape and distribution. Several wavelet-based features, such as wavelet entropy, wavelet energy at different decomposition levels, and WPN, are also highly ranked, indicating their effectiveness in representing the time-frequency characteristics of fault signals. In addition, statistical indicators such as crest factor, MAD, and STD are selected for their ability to reflect signal amplitude and fluctuation. Together, the selected features capture diverse aspects of the signal, ensuring both relevance and complementarity in the fused feature space, and the stable, data-driven selection process preserves high-impact information for model training and improves classification accuracy.

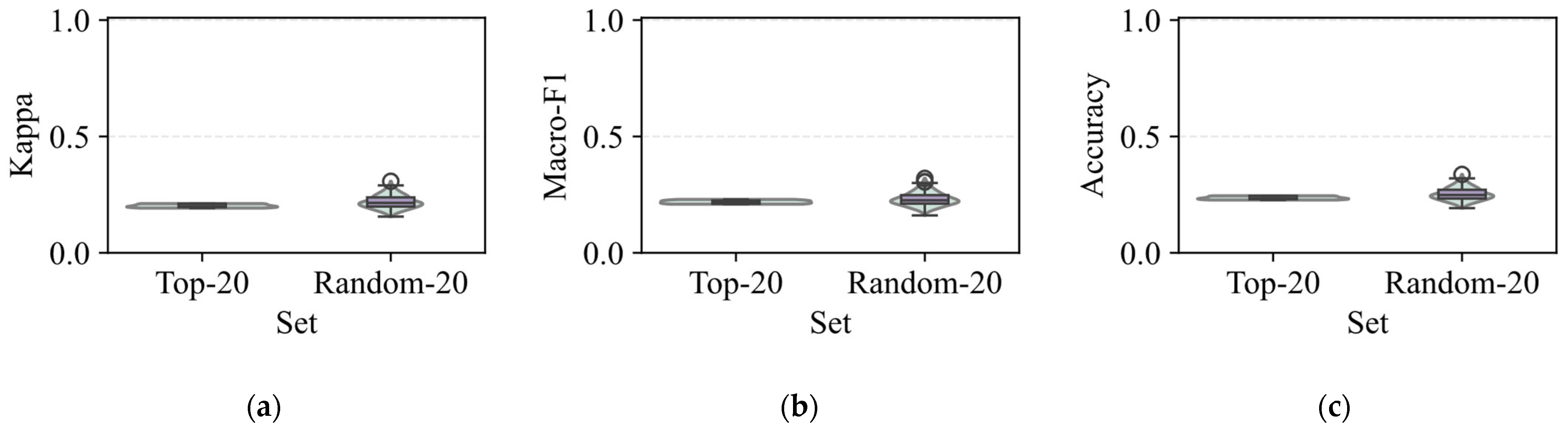

Figure 6 further validates the necessity of the proposed feature selection strategy by comparing the performance of the Top-20 features with that of randomly selected feature subsets of the same size. As shown in Figure 6a–c, the Top-20 features consistently achieve significantly higher kappa coefficients, macro-F1 scores, and accuracies, with lower variance across repeated trials. In contrast, the Random-20 subsets produce unstable and substantially weaker results, highlighting the risk of relying on arbitrary feature combinations. These findings confirm that the proposed L2-norm-based selection captures the most informative and complementary features while ensuring robustness and reproducibility. Feature selection is therefore a necessary step: it reduces redundancy, enhances interpretability, and ensures that the retained features contribute directly to accurate and stable fault classification.
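The Top-20 versus Random-20 comparison can be reproduced in outline by scoring one top-ranked subset against several random subsets of equal size under cross-validation. This sketch assumes a logistic-regression scorer as a fast stand-in for TabNet and synthetic data from `make_classification`; because the ranking step is not repeated here, the known informative columns stand in for an L2-norm ranking. Names such as `subset_score` are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score


def subset_score(X, y, cols, cv):
    """Mean cross-validated accuracy using only the given feature columns."""
    model = LogisticRegression(max_iter=2000)
    return cross_val_score(model, X[:, cols], y, cv=cv, scoring="accuracy").mean()


# With shuffle=False the 10 informative columns come first (columns 0-9)
X, y = make_classification(n_samples=400, n_features=59, n_informative=10,
                           n_redundant=0, n_classes=4, shuffle=False, random_state=0)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
k = 20
top_cols = np.arange(k)                              # stands in for an L2-norm ranking
rng = np.random.default_rng(0)
random_scores = [subset_score(X, y, rng.choice(X.shape[1], size=k, replace=False), cv)
                 for _ in range(10)]
top_score = subset_score(X, y, top_cols, cv)
print(f"Top-{k}: {top_score:.3f}  Random-{k}: {np.mean(random_scores):.3f} "
      f"+/- {np.std(random_scores):.3f}")
```

Reporting the spread of the random-subset scores, not just their mean, is what exposes the instability the text attributes to arbitrary feature combinations.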

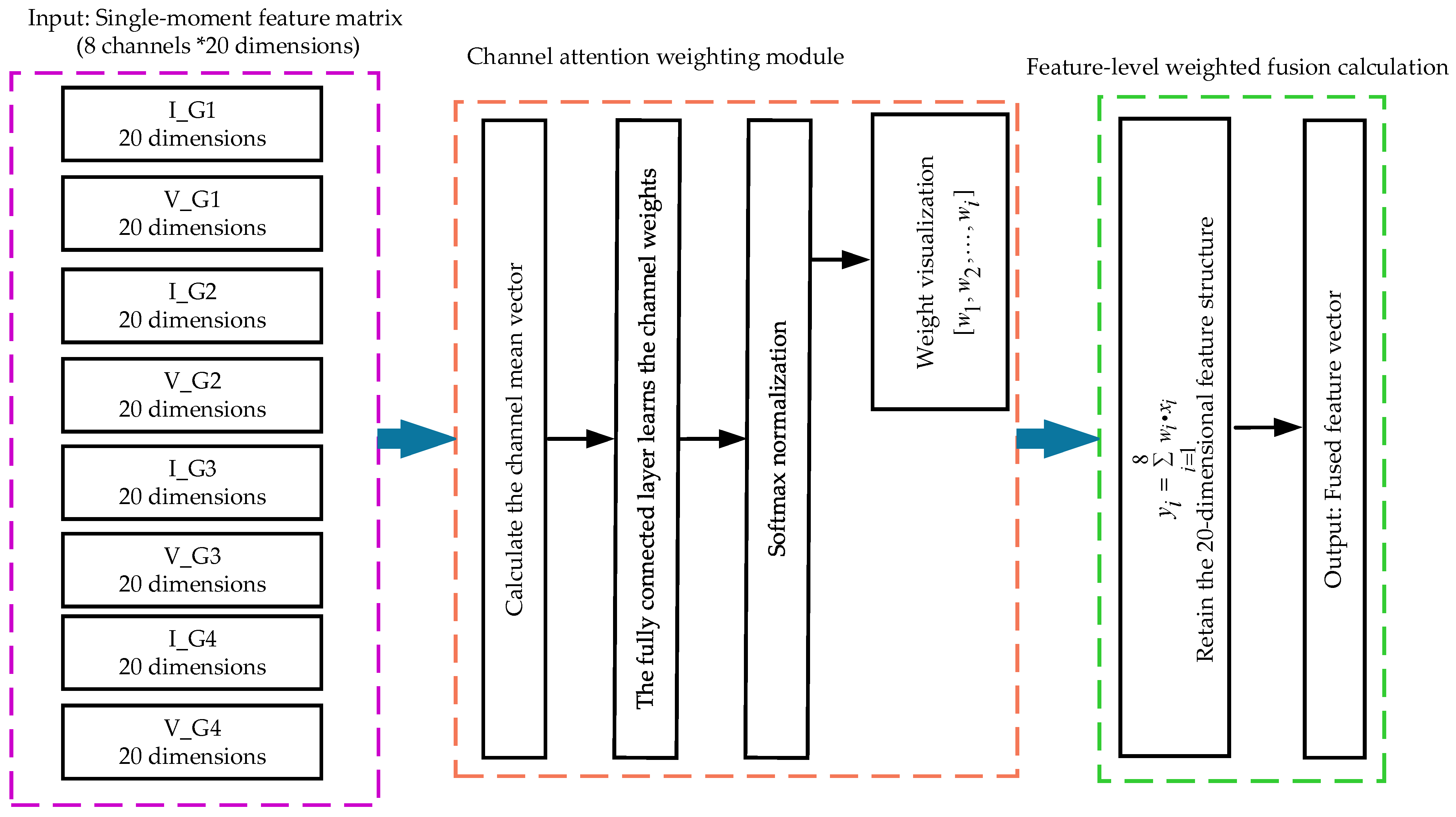

2.2.3. Multimodal Feature Fusion

For each fault instance, voltage and current signals from four feeder lines (L1 to L4) are collected, resulting in eight channels of raw input. Each channel captures a distinct spatial or electrical perspective of the same fault event. Relying on a single feeder’s data may lead to incomplete or biased interpretation, especially when the fault signal is weak or noisy. By fusing all eight channels, the model can leverage complementary and redundant patterns across lines, improving fault type discrimination and overall robustness. To integrate information from all eight channels effectively, a feature-level weighted fusion module is designed, as illustrated in Figure 7. The input is a single-moment feature matrix comprising eight channels, each with 20 dimensions, derived from the voltage and current signals of the four feeder lines. The detailed channel configuration is summarized in Table 2.

First, the channel attention weighting module computes the mean of each channel, giving a vector of channel-level global statistics $\mathbf{m} = [m_1, \dots, m_8]^{\top}$ with $m_c = \frac{1}{20}\sum_{j=1}^{20} x_{c,j}$. This vector is passed through a fully connected layer followed by a SoftMax function to generate normalized channel attention weights:

$$\boldsymbol{\alpha} = \mathrm{SoftMax}(\mathbf{W}\mathbf{m} + \mathbf{b}), \qquad \sum_{c=1}^{8} \alpha_c = 1,$$

where $\mathbf{W}$ and $\mathbf{b}$ are the learnable weight matrix and bias of the fully connected layer. These learned weights reflect the significance of each channel for fault classification. Feature fusion is then performed by applying the learned weights to the corresponding feature channels as a weighted sum:

$$f_j = \sum_{c=1}^{8} \alpha_c \, x_{c,j}, \qquad j = 1, \dots, 20,$$

where $x_{c,j}$ denotes the $j$th element of the $c$th channel and $f_j$ is the $j$th element of the final fused feature vector. This operation retains the 20-dimensional structure while adaptively emphasizing informative channels. The resulting fused feature vector captures complementary information across all feeders, enhancing the model’s ability to generalize across various fault conditions.
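A NumPy sketch of the forward pass of this fusion module, following the description above: the mean of each of the eight channels feeds a fully connected layer, a softmax turns its output into channel weights, and a weighted sum over channels yields a 20-dimensional fused vector. In the actual model the layer parameters would be learned jointly with the classifier; here `W` and `b` are random placeholders, so the resulting weights carry no physical meaning, and the function names are hypothetical.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(z - np.max(z))
    return e / e.sum()


def channel_attention_fusion(X, W, b):
    """Fuse an (8, 20) channel-by-feature matrix into one 20-dimensional vector.

    m_c = mean of channel c; alpha = softmax(W m + b); f_j = sum_c alpha_c * x_{c,j}.
    """
    m = X.mean(axis=1)            # channel-level global statistics, shape (8,)
    alpha = softmax(W @ m + b)    # normalized channel attention weights, shape (8,)
    fused = alpha @ X             # weighted sum over channels, shape (20,)
    return fused, alpha


rng = np.random.default_rng(0)
X = rng.normal(size=(8, 20))        # 8 channels (4 feeders x voltage/current), 20 features
W = 0.1 * rng.normal(size=(8, 8))   # placeholder for trained fully connected weights
b = np.zeros(8)
fused, alpha = channel_attention_fusion(X, W, b)
print(fused.shape, round(float(alpha.sum()), 6))
```

Because the weights sum to one, the fused vector is a convex combination of the channels: if every channel carried the same features, fusion would return them unchanged.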

4. Results and Analysis

This section presents the experimental results and discussion based on the optimized TabNet model applied to the collected grounding fault dataset. The model’s performance is evaluated using multiple metrics and compared against several baseline methods: Zhang et al. use a TCN for grounding fault detection in distribution systems [27]; Teimourzadeh et al. employ a CNN-LSTM framework to capture both spatial and temporal fault characteristics [28]; Fahim et al. introduce CNSF to enhance feature extraction for complex fault patterns [29]; and Gao et al. propose a Dual-Branch CNN that extracts complementary features from upstream and downstream zero-sequence currents and shows strong robustness under resonant grounding conditions [30]. As shown in Figure 11a, the per-class precision of TabNet remains consistently high across all 24 fault categories, demonstrating its reliability and stability at the class level. In contrast, the baseline models, particularly TCN and CNN-LSTM, show drops in precision for certain classes, such as classes 10, 14, and 16, indicating difficulty in handling complex or imbalanced fault conditions.

Figure 11b compares the overall performance of all models using three evaluation metrics: accuracy, kappa coefficient, and macro-F1 score. The optimized TabNet model outperforms all baselines, achieving an accuracy of 0.9733, a kappa coefficient of 0.9721, and a macro-F1 score of 0.9739. These results indicate the strong generalization ability of TabNet and its effectiveness in classifying diverse grounding fault types. To mitigate the effect of randomness, all models are also evaluated under a 5 × 5 stratified cross-validation scheme with multiple independent runs, and mean values, standard deviations, and 95% confidence intervals (CIs) are reported. In addition, pairwise Wilcoxon signed-rank tests are conducted to assess whether the observed improvements are statistically significant.
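For reference, the per-class precision in Figure 11a and the three summary metrics in Figure 11b can all be derived from a single confusion matrix. The sketch below assumes integer class labels from 0 to K − 1, takes the kappa coefficient to be Cohen's kappa, and defines macro-F1 as the unweighted mean of per-class F1 scores over all K classes; these are common conventions rather than details confirmed by the text.

```python
import numpy as np


def classification_metrics(y_true, y_pred, n_classes):
    """Per-class precision, accuracy, Cohen's kappa and macro-F1 (illustrative sketch)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    # Confusion matrix: rows = true class, columns = predicted class.
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)

    n = cm.sum()
    tp = np.diag(cm).astype(float)
    pred_tot = cm.sum(axis=0)  # how often each class was predicted
    true_tot = cm.sum(axis=1)  # how often each class actually occurs

    # Per-class precision and recall; classes with an empty denominator get 0.
    precision = np.divide(tp, pred_tot, out=np.zeros_like(tp), where=pred_tot > 0)
    recall = np.divide(tp, true_tot, out=np.zeros_like(tp), where=true_tot > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)

    accuracy = tp.sum() / n
    # Cohen's kappa: observed agreement corrected by the chance agreement p_e.
    p_e = (pred_tot * true_tot).sum() / n**2
    kappa = (accuracy - p_e) / (1 - p_e)
    return {"precision": precision, "accuracy": accuracy,
            "kappa": kappa, "macro_f1": f1.mean()}
```

In practice, scikit-learn's `precision_score(average=None)`, `cohen_kappa_score`, and `f1_score(average="macro")` provide well-tested equivalents; note that scikit-learn's macro average only covers labels that appear in the data unless `labels` is passed explicitly.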

The training dynamics of all evaluated models are shown in Figure 12. Figure 12a demonstrates that the optimized TabNet model exhibits smooth convergence, with steadily decreasing loss and consistently improving accuracy, indicating efficient and robust learning behavior. In contrast, CNSF in Figure 12b shows larger fluctuations in both loss and accuracy, reflecting sensitivity to class imbalance and unstable convergence. Figure 12c illustrates the TCN model, which converges rapidly in the early epochs but plateaus later, suggesting limited capacity to capture more complex patterns. Figure 12d presents the CNN+LSTM model, which converges quickly but also shows oscillations and early saturation. Finally, the Dual-Branch CNN in Figure 12e reaches high training accuracy but displays a small gap between training and test performance, indicating mild overfitting. Overall, TabNet achieves the most stable and reliable training dynamics, highlighting its generalization ability in SPG fault classification tasks.

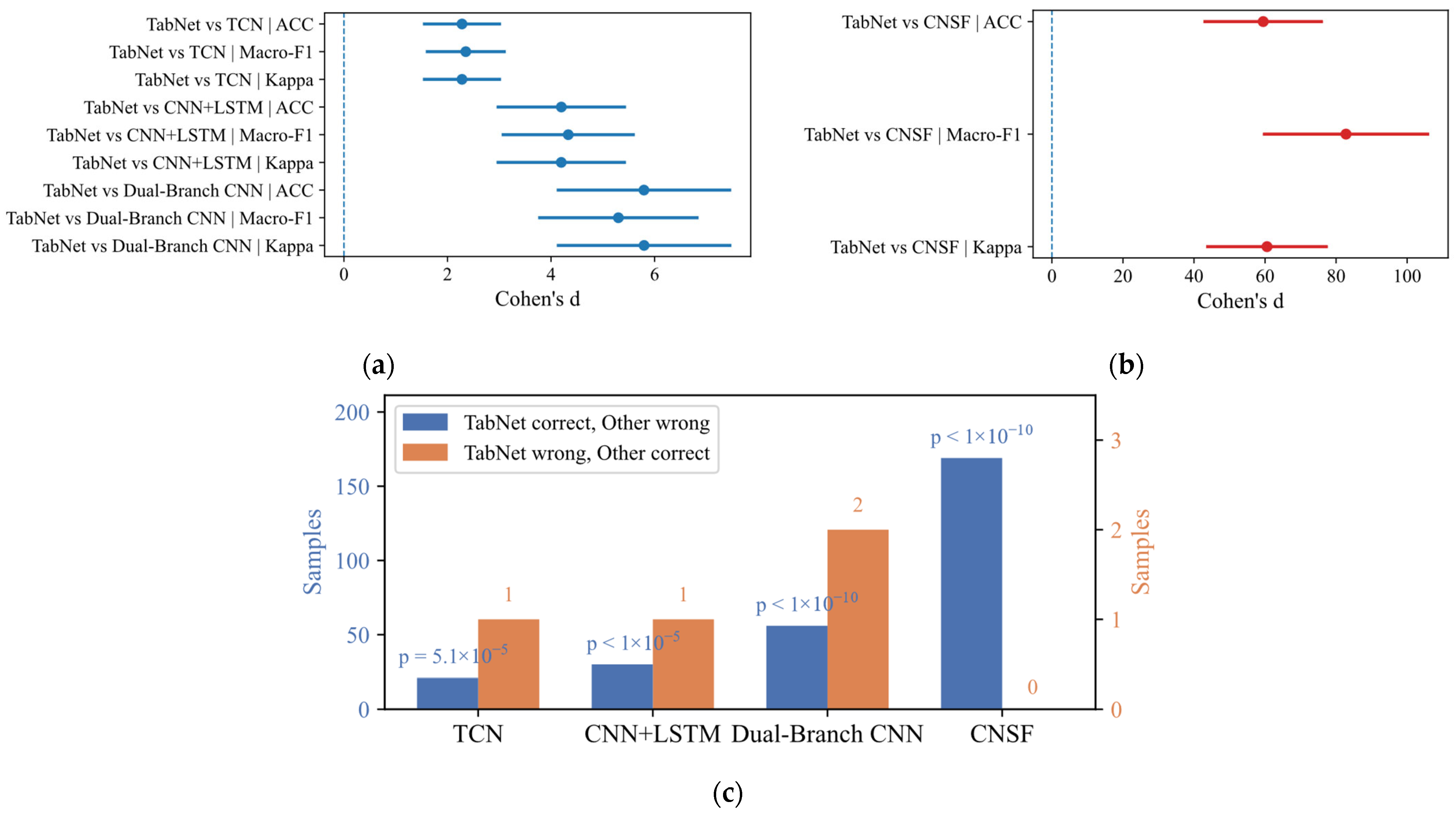

To ensure the robustness of the comparative analysis, an evaluation protocol is adopted that combines cross-validation, confidence interval estimation, and statistical hypothesis testing. Reporting only mean accuracy may conceal randomness and uncertainty, whereas statistical testing assesses whether improvements are consistent rather than incidental. All models are evaluated under a 5 × 5 stratified K-fold cross-validation scheme (stratified 5-fold cross-validation repeated five times), which preserves the class distribution in each fold and reduces the variance caused by random partitioning.
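A 5 × 5 stratified scheme of this kind can be sketched with NumPy alone: within each of five repeats, the samples of every class are shuffled and dealt across five folds, so each test fold keeps roughly the overall class proportions. The function name and seed handling below are illustrative assumptions; scikit-learn's `RepeatedStratifiedKFold(n_splits=5, n_repeats=5)` offers a maintained implementation of the same idea.

```python
import numpy as np


def repeated_stratified_kfold(y, n_splits=5, n_repeats=5, seed=0):
    """Yield (train_idx, test_idx) for n_repeats rounds of stratified n_splits-fold CV."""
    y = np.asarray(y)
    rng = np.random.default_rng(seed)
    for _ in range(n_repeats):
        fold_of = np.empty(len(y), dtype=int)
        for cls in np.unique(y):
            # Shuffle this class's samples, then deal them round-robin over the folds,
            # starting at a random fold so remainders do not always land in fold 0.
            idx = rng.permutation(np.flatnonzero(y == cls))
            start = rng.integers(n_splits)
            fold_of[idx] = (np.arange(len(idx)) + start) % n_splits
        for k in range(n_splits):
            yield np.flatnonzero(fold_of != k), np.flatnonzero(fold_of == k)
```

Each of the 25 (train, test) pairs would yield one score per model, giving the 25 paired scores from which mean ± standard deviation summaries and paired tests such as the Wilcoxon signed-rank test can be computed.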

As summarized in Table 5, the optimized TabNet model achieves the best overall performance, with ACC = 0.9560 ± 0.0093 (95% CI: 0.9523–0.9594), Macro-F1 = 0.9531 ± 0.0108, and Kappa = 0.9541 ± 0.0097. These results demonstrate not only high accuracy but also stability, as reflected by the narrow confidence interval. In contrast, TCN reaches 0.8730 ± 0.0322, CNN+LSTM 0.789 ± 0.0396, and Dual-Branch CNN 0.700 ± 0.0440, while CNSF performs very poorly at 0.074 ± 0.0088.
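Summaries of the form "mean ± standard deviation (95% CI)" can be computed from the 25 fold scores of a 5 × 5 scheme as sketched below. This assumes a Student-t interval that treats the fold scores as independent; the text does not state how its intervals were obtained, and because cross-validation folds share training data, this simple interval tends to be optimistic (corrected resampled t-tests address this). The helper name `summarize` is hypothetical.

```python
import math
import statistics

# 0.975 quantile of Student's t with 24 degrees of freedom (25 fold scores),
# taken from standard t tables.
T_975_DF24 = 2.0639


def summarize(scores, t_crit=None):
    """Return (mean, sample std, (ci_low, ci_high)) for a list of fold scores."""
    n = len(scores)
    if t_crit is None:
        if n != 25:
            raise ValueError("pass t_crit explicitly when n != 25")
        t_crit = T_975_DF24
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation (n - 1 denominator)
    half = t_crit * sd / math.sqrt(n)
    return mean, sd, (mean - half, mean + half)
```

For the 25 fold accuracies of one model, `summarize(scores)` returns the three quantities reported per metric in a table such as Table 5.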

Figure 13 presents additional statistical comparisons between TabNet and the baselines. Figures 13a,b display forest plots of Cohen's d effect sizes, showing consistently large to very large margins in favor of TabNet, especially against CNSF, while Figure 13c illustrates McNemar's test, where the asymmetric discordant counts (21 vs. 1 for TCN, 30 vs. 1 for CNN+LSTM, 56 vs. 2 for Dual-Branch CNN, and 169 vs. 0 for CNSF) yield highly significant p-values (p < 1 × 10⁻⁵). Collectively, Table 5 and Figure 13 provide strong and complementary evidence that TabNet not only achieves higher accuracy but also delivers significantly more reliable and generalizable performance than all baseline models.
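The two statistics behind Figure 13 can be sketched as follows. Cohen's d is computed here from summary statistics with a pooled standard deviation, which assumes two independent samples; the text does not specify its exact variant, and a paired variant on per-fold differences would be equally plausible. McNemar's test is shown in its exact binomial form on the discordant counts b and c; chi-square and mid-p variants give somewhat different p-values, and which one underlies Figure 13c is not stated.

```python
import math


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Cohen's d with a pooled standard deviation for two independent samples."""
    pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from discordant counts b and c.

    Under H0 the smaller count follows Binomial(b + c, 0.5); the p-value doubles
    the lower tail and is capped at 1.
    """
    n = b + c
    k = min(b, c)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2**n
    return min(1.0, 2.0 * tail)


# Example with values quoted in the text; n = 25 fold scores per model is assumed.
d_tcn = cohens_d(0.9560, 0.0093, 25, 0.8730, 0.0322, 25)
p_lstm = mcnemar_exact(30, 1)  # discordant counts reported for CNN+LSTM
```

With the Table 5 accuracy summaries for TabNet and TCN, this pooled variant gives d ≈ 3.5, far above the conventional 0.8 threshold for a large effect; for the CNN+LSTM counts (30 vs. 1), the exact test gives p ≈ 3 × 10⁻⁸.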

To further demonstrate interpretability, Figure 14 provides a multi-perspective visualization of the attention distributions learned by TabNet across different fault categories. Figure 14a shows the class-wise heatmap of attention scores aggregated over test samples, in which distinct feature patterns can be observed for different fault types. For instance, the model consistently attends to kurtosis and skewness in arc-related faults (e.g., Class 10 and Class 14), whereas wavelet energy and entropy features are more prominent in capacitor grounding conditions (e.g., Class 16). Figure 14b further highlights the top-10 features contributing to a specific arc fault class, indicating that the model prioritizes physically meaningful descriptors such as higher-order statistical moments. Beyond these class-level insights, Figures 14c,d quantitatively compare the attention mass allocated to the selected Top-20 features against multiple Random-20 feature groups. The Top-20 set consistently captures a significantly larger proportion of attention, with tighter and more stable distributions, supporting the robustness and discriminative power of the chosen features. These results indicate that TabNet does not merely rely on spurious correlations but adaptively emphasizes fault-relevant features in line with electrical and physical fault mechanisms. Together, these visualizations provide evidence for the interpretability of the proposed method: the model's decisions are grounded in domain-relevant features rather than forming a black box, which is critical for building practitioner trust and for enabling practical deployment in real-world fault diagnosis of distribution networks.
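The class-wise heatmap and the Top-20 versus Random-20 comparison can be sketched on any non-negative per-sample attention matrix `A` of shape (N, F). How `A` is obtained is an assumption here: if the pytorch-tabnet package is used, its `explain` method returns a per-sample feature-importance matrix that could serve this role. The function names, the number of random draws, and the index set `top_idx` (standing in for the selected Top-20 features) are hypothetical.

```python
import numpy as np


def class_attention_profile(A, y, n_classes):
    """Average row-normalized attention within each class -> (n_classes, F) heatmap.

    Assumes y is a NumPy array of labels in which every class occurs at least once.
    """
    A = A / A.sum(axis=1, keepdims=True)
    return np.stack([A[y == k].mean(axis=0) for k in range(n_classes)])


def attention_mass(A, feature_idx):
    """Fraction of each sample's total attention that falls on feature_idx -> (N,)."""
    return A[:, feature_idx].sum(axis=1) / A.sum(axis=1)


def top_vs_random_mass(A, top_idx, n_random=100, seed=0):
    """Mean attention mass of a fixed feature set vs. n_random same-size random sets."""
    rng = np.random.default_rng(seed)
    k = len(top_idx)
    top_mass = attention_mass(A, top_idx).mean()
    rand_mass = np.array([
        attention_mass(A, rng.choice(A.shape[1], size=k, replace=False)).mean()
        for _ in range(n_random)
    ])
    return top_mass, rand_mass
```

A per-class top-10 ranking in the spirit of Figure 14b then follows from the heatmap as `np.argsort(profile[k])[::-1][:10]` for class `k`. If the Top-20 set were chosen by ranking attention on the same samples, the comparison would be partly circular, so fixing `top_idx` from a separate feature-selection step keeps it meaningful.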