1. Introduction

A traditional fraud detection task is a machine learning task that identifies or predicts manipulated information or deceptive behavior, primarily in domains such as banking, public administration, insurance, and taxation [1]. However, as internet-based e-commerce platforms have grown, cases of fraud-related damage have increased rapidly, and new fraud detection methods suited to these platforms are now required [1,2]. Among them, detecting malicious fraud types such as fraudulent reviews is regarded as a major challenge [3].

Fraud detection methods using conventional data mining techniques primarily rely on statistical analysis grounded in domain knowledge, together with computer science-based approaches, to learn and predict the patterns of malicious data [1]. However, this approach has several limitations in environments such as e-commerce platforms. First, review data often include variable-length text, which conventional data mining techniques handle poorly. Second, the core attributes included in reviews vary by product, which makes it difficult to apply data mining consistently.

Some algorithms can handle variable-length text when detecting fraudulent reviews. These methods learn the textual patterns of ordinary reviews and detect abnormal keywords. However, attackers can bypass such detectors by altering spellings with special characters or numbers, which limits text analysis for fraudulent reviewer detection [4,5]. For example, a fraudulent review containing the phrase “Click this link to win money” can be easily filtered. However, if “to” is replaced with “2”, or “money” is replaced with a symbol or a term implying money, the fraudulent reviewer may be recognized as a regular user.
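To make the bypass concrete, the following minimal Python sketch uses a hypothetical exact-phrase blocklist (`BLOCKLIST` and `is_flagged` are illustrative names, not taken from any cited system). Learned text models are more flexible than a phrase match, but the sketch shows the same failure mode: a single-token substitution removes the surface pattern that the detector relies on.

```python
# Hypothetical exact-phrase blocklist; real text-based detectors learn patterns,
# but they share the weakness shown here under character substitution.
BLOCKLIST = ("to win money",)


def is_flagged(review: str) -> bool:
    """Flag a review if it contains any blocklisted phrase (case-insensitive)."""
    text = review.lower()
    return any(phrase in text for phrase in BLOCKLIST)


reviews = [
    "Click this link to win money",  # contains the exact phrase
    "Click this link 2 win money",   # "to" -> "2" breaks the phrase
    "Click this link to win $$$",    # "money" -> symbol breaks the phrase
]
for r in reviews:
    print(f"flagged={is_flagged(r)!s:5}  {r}")
```

Only the first review is flagged; the two obfuscated variants pass, even though a human reader recognizes all three as the same message.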

As an alternative to the fraud detection techniques reviewed above, analysis methods that model relationships between users on e-commerce platforms as a graph structure have attracted attention [2,6,7,8,9]. Because online platforms are built on user authentication, the data they generate involve interactions among the attributes and behaviors of different users, and these interactions can be represented effectively through graph modeling.

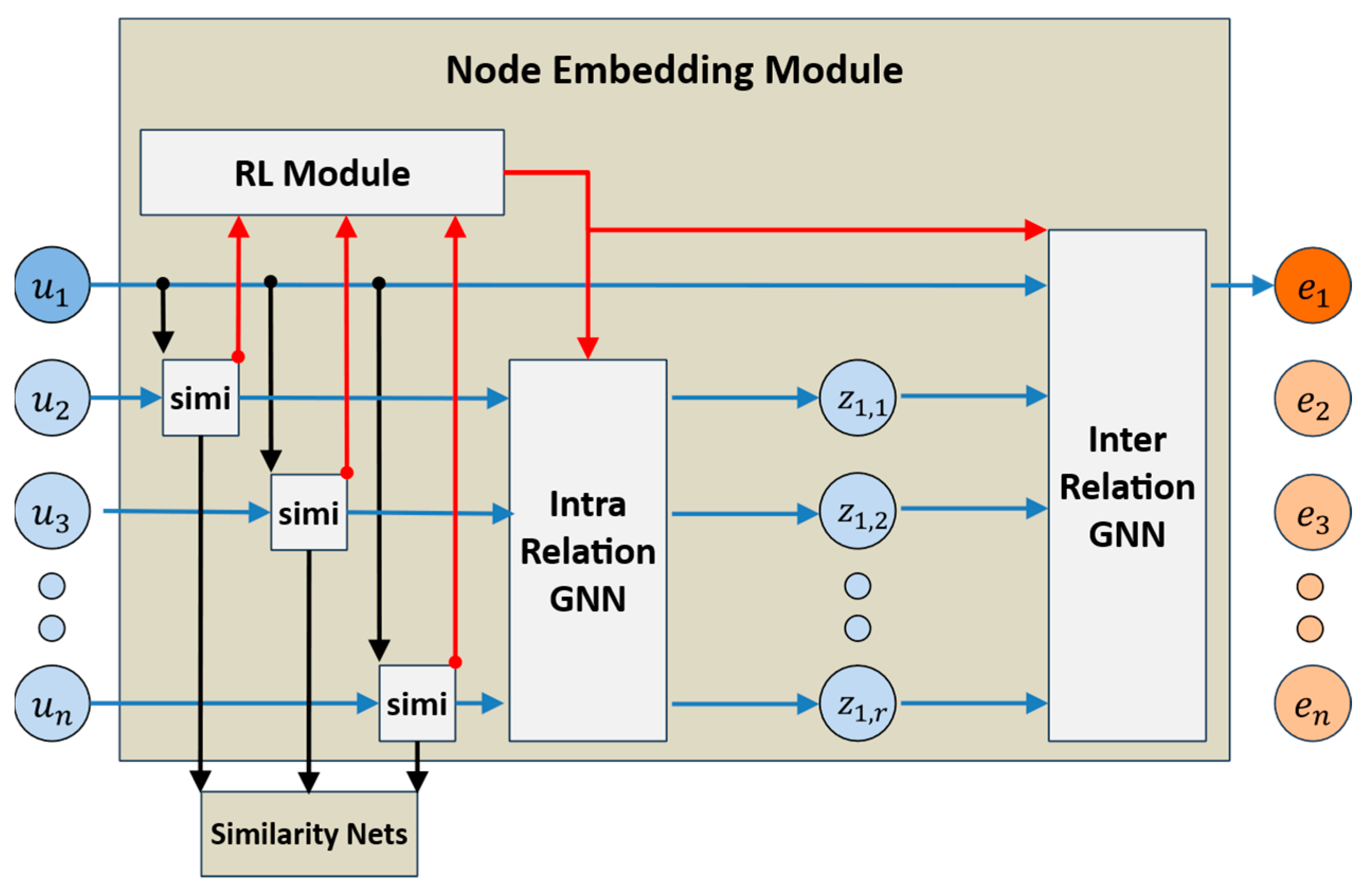

In this approach, platform users are modeled as nodes, and node attributes can include the number of reviews written, star ratings, and textual similarity to other reviews. Such graph representations can be analyzed using fraud detection models based on graph neural networks. However, graph neural networks that classify whether a node in the graph is malicious may suffer performance degradation depending on the range of the embedding vector values produced during node embedding. Therefore, in this paper, we propose a scale regularization method that can be applied within the operations of graph neural networks in graph-based fraud detection models. To validate its effectiveness, we evaluate performance on the Amazon-Fraud dataset, a multi-relational graph representation of user data from the Amazon E-Commerce platform.

2. Related Works

Various machine learning-based fraud detection approaches have been developed. Representative examples include association rules, decision trees, clustering, one-class classification [10,11], neural networks, and ensemble approaches. Among them, neural network-based fraud detection has recently been reported to achieve the highest detection performance, and graph neural networks in particular are regarded as an effective approach for e-commerce platforms [2].

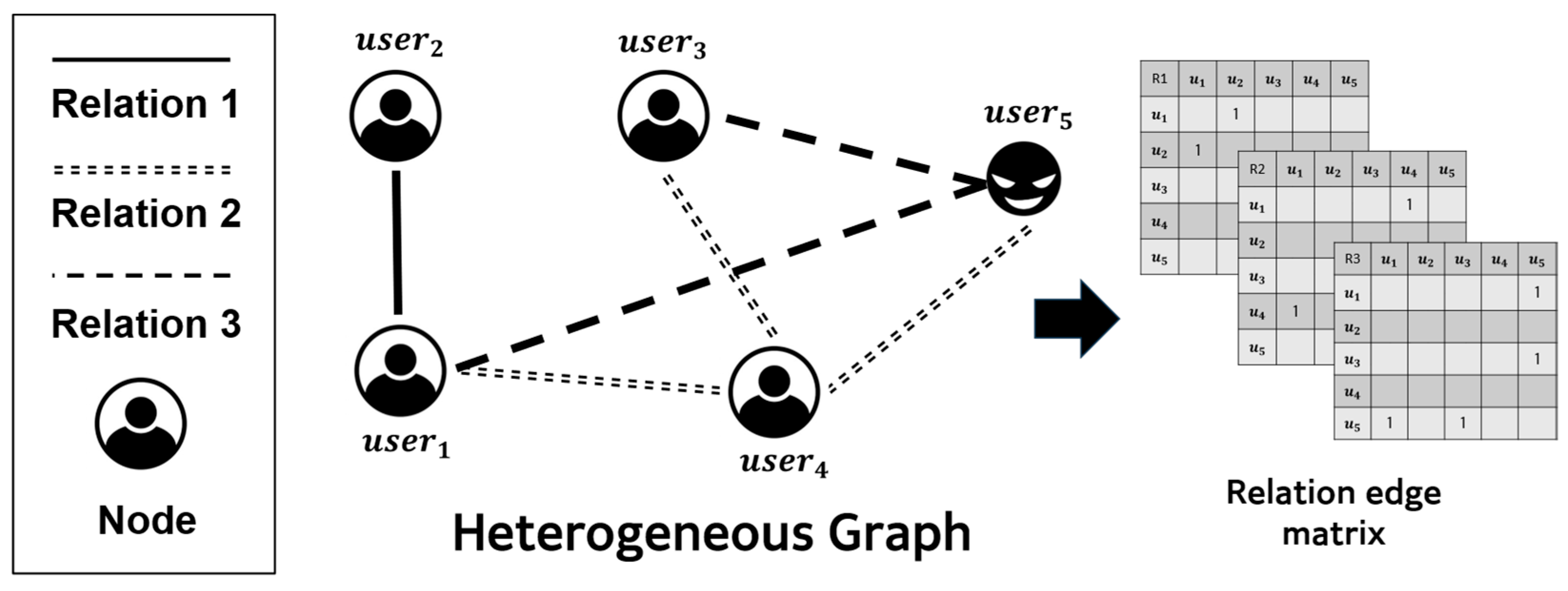

Fraud detection algorithms using graph neural networks represent the review patterns and structural relationships of e-commerce users as graphs and detect fraud on those graphs. Review data from e-commerce platforms are important because, when the multi-relational interactions between users are represented as a graph, they reveal heterogeneous relationships between nodes. This heterogeneity makes it difficult to apply general graph neural network models directly to the fraud detection task.

Previously reported graph fraud detection models include CARE-GNN [2], PC-GNN [7], RioGNN [6], RLC-GNN [8], and GTAN [9]. These models commonly incorporate graph adaptive filtering, a mechanism in which graph edges are dynamically pruned during training. CARE-GNN, the earliest of these, is a node classification model that learns contextual information between nodes in a relational graph to detect malicious nodes. It was the first to predict the neighbor selection threshold for edge filtering through reinforcement learning. However, its reward function is overly sensitive, which destabilizes the scale range of the embedding vectors. Subsequently, PC-GNN was proposed to address a limitation of CARE-GNN: the imbalance in the number of malicious labels in graph data. PC-GNN maintains performance under label imbalance through propagation and collaboration mechanisms. RioGNN was proposed to improve CARE-GNN’s graph filtering, allowing a clearer representation of relationships between adjacent nodes. RioGNN applies thresholding and weight adjustment independently to the edges within each subgraph, which yields higher performance. However, as the number of thresholds to be inferred through reinforcement learning increases, the instability of node embeddings becomes more pronounced. RLC-GNN was introduced to address the negative impact of deeper layers on node aggregation in CARE-GNN. GTAN presents a semi-supervised method for detecting malicious attacker nodes and is designed to handle non-static graph representations.
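The adaptive filtering mechanism, and the sensitivity of its reinforcement-learning threshold, can be illustrated with a simplified sketch. Assumptions: each node has a scalar predicted fraud score, neighbors are ranked by the L1 distance between scores and only the closest ceil(p × degree) are kept, and the threshold p moves by a fixed step depending on whether the average neighbor distance decreased since the previous epoch. This loosely follows the top-p neighbor selection and reward design described for CARE-GNN [2], but the function names and the step size of 0.02 are hypothetical, and the sketch omits the relation-aware aggregation that the actual models perform.

```python
import math
from typing import Dict, List


def top_p_filter(center_score: float, neighbor_scores: Dict[int, float], p: float) -> List[int]:
    """Keep the ceil(p * degree) neighbors (at least one) whose predicted fraud score
    is closest in L1 distance to the center node's score; other edges are pruned."""
    if not neighbor_scores:
        return []
    k = max(1, math.ceil(p * len(neighbor_scores)))
    ranked = sorted(neighbor_scores, key=lambda n: abs(neighbor_scores[n] - center_score))
    return ranked[:k]


def update_threshold(p: float, prev_avg_dist: float, curr_avg_dist: float,
                     step: float = 0.02) -> float:
    """Reward +1 if the average distance of selected neighbors did not increase
    since the previous epoch, otherwise -1; move p one step accordingly, within [0, 1]."""
    reward = 1 if prev_avg_dist - curr_avg_dist >= 0 else -1
    return min(1.0, max(0.0, p + reward * step))


# Hypothetical scores: node ids -> predicted fraud probability.
neighbors = {1: 0.8, 2: 0.1, 3: 0.6, 4: 0.95}
print(top_p_filter(0.9, neighbors, p=0.5))    # keeps the two neighbors closest to 0.9
print(update_threshold(0.5, 0.2500, 0.2501))  # tiny distance increase -> p shrinks
```

In this sketch, a change of only 0.0001 in the average distance is enough to flip the action and lower p from 0.5 to 0.48, which is one way to see how a sign-based reward can make the filtering threshold, and therefore the embeddings built from the kept neighbors, fluctuate from epoch to epoch.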

In this paper, we analyze the causes of the instability commonly observed in the graph filtering-based node embeddings used by CARE-GNN, RioGNN, and RLC-GNN. To solve this problem, we propose a scale regularization method that can be applied to node embeddings for graph fraud detection.

4. Experiments

To verify the effectiveness of the proposed scale regularization method, we conducted experiments on the Amazon-Fraud multi-relational graph dataset, constructed from review data on the Amazon E-Commerce platform. We chose Amazon-Fraud because it has been widely used as a benchmark in prior graph fraud detection studies, which allows direct comparison with related work. Amazon-Fraud is built from Amazon E-Commerce review data, including variable-length texts; it represents platform users as nodes and the relations between users based on shared review characteristics as relation edges. Because Amazon-Fraud contains multiple relation types, it is a representative example of the heterogeneous graphs that our study targets. In this graph representation, each node’s user attributes are represented as a multivariate vector.

Table 1 presents the three types of relations in the Amazon-Fraud dataset. U-P-U connects users who have reviewed at least one common product. U-S-U connects users who gave at least one common star rating within one week. U-V-U connects users whose review text similarity falls within the top 5% among all users. In addition to the complete graph constructed from the three relations, Amazon-Fraud also includes an individual graph for each relation.
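As an illustration of how such relations can be derived from raw reviews, the sketch below builds the three edge sets from a hypothetical toy `Review` record (user, product, star rating, day index). The review-text similarity measure behind U-V-U is not specified in this section, so `u_v_u` takes precomputed pairwise similarities as input. Treating “within one week” as at most 7 days apart, and keeping the top 5% of scored user pairs, are likewise assumptions about the exact construction of Amazon-Fraud.

```python
import math
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, NamedTuple, Set, Tuple

Edge = Tuple[int, int]


class Review(NamedTuple):
    user: int
    product: str
    stars: int
    day: int  # hypothetical day index of the review


def u_p_u(reviews: List[Review]) -> Set[Edge]:
    """U-P-U: connect users who reviewed at least one common product."""
    users_by_product: Dict[str, Set[int]] = defaultdict(set)
    for r in reviews:
        users_by_product[r.product].add(r.user)
    edges: Set[Edge] = set()
    for users in users_by_product.values():
        edges.update(combinations(sorted(users), 2))
    return edges


def u_s_u(reviews: List[Review], window_days: int = 7) -> Set[Edge]:
    """U-S-U: connect users who gave the same star rating at most `window_days` apart."""
    edges: Set[Edge] = set()
    for a, b in combinations(reviews, 2):
        if a.user != b.user and a.stars == b.stars and abs(a.day - b.day) <= window_days:
            edges.add((min(a.user, b.user), max(a.user, b.user)))
    return edges


def u_v_u(similarity: Dict[Edge, float], top_fraction: float = 0.05) -> Set[Edge]:
    """U-V-U: keep the user pairs whose precomputed text similarity is in the top fraction."""
    k = math.ceil(top_fraction * len(similarity))
    ranked = sorted(similarity, key=similarity.get, reverse=True)
    return set(ranked[:k])


reviews = [
    Review(user=0, product="A", stars=5, day=0),
    Review(user=1, product="A", stars=4, day=3),
    Review(user=2, product="B", stars=5, day=5),
    Review(user=3, product="C", stars=1, day=30),
]
print("U-P-U:", u_p_u(reviews))  # users 0 and 1 share product A
print("U-S-U:", u_s_u(reviews))  # users 0 and 2 both gave 5 stars, 5 days apart
```

Each relation yields its own edge set over the same user nodes, which is what makes the resulting graph multi-relational: a single pair of users can be connected under one relation and unconnected under another.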

The experiments compare the node classification performance of the scale-regularized model with that of existing graph fraud detection models, including CARE-GNN, PC-GNN, RioGNN, and GTAN. We applied the scale regularization method to RioGNN, refer to the resulting model as RioGNN-sr, and compared it with the other models. We also compared RioGNN-sr and RioGNN across varying embedding dimensions and observed that RioGNN-sr shows a more robust performance trend. Finally, to confirm whether the proposed method mitigates sharp variations in the L1 norm distribution of embedding vectors, we visualized the change in the L1 norm per epoch.

AUROC was selected as the primary evaluation metric because it does not depend on a decision threshold and is the metric reported by the prior graph fraud detection studies compared here. Unlike metrics that focus exclusively on either fraudulent or normal users, AUROC jointly reflects how well the model ranks fraudulent nodes above legitimate ones across all decision thresholds. Therefore, rather than isolating performance variations from a single perspective, AUROC provides a comprehensive measure of how effectively the proposed scale regularization enhances the discrimination capacity of graph adaptive filtering methods.
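The ranking interpretation of AUROC can be written down directly: it is the probability that a randomly chosen fraudulent node receives a higher score than a randomly chosen normal node, with ties counted as one half (the Mann-Whitney formulation). The sketch below is a plain quadratic-time illustration; the function name is hypothetical, and for binary labels its result should agree with scikit-learn’s `roc_auc_score`, which would be the practical choice for large graphs.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC as P(score of a fraudulent node > score of a normal node).

    labels: 1 for fraudulent nodes, 0 for normal nodes; ties count as 1/2.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one fraudulent and one normal node")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical scores for two fraudulent and two normal nodes.
print(auroc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))
```

Here three of the four fraudulent/normal pairs are ordered correctly (only 0.4 < 0.6 is not), giving an AUROC of 0.75; no threshold on the scores is ever chosen.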

Table 2 presents both the AUROC values reported in the existing literature and the results of our own reproductions of the same models. In the comparative experiments, RioGNN-sr (the proposed scale regularization applied to RioGNN) achieves the best AUROC among all models, at 98.11%. This is a 2.55% improvement over its base architecture, RioGNN. It is also 0.61% higher than GTAN, which previously showed the best performance in the related literature, and 1.12% higher than our reproduction of GTAN.

Figure 6 shows the results of a comparative experiment in which scale regularization was applied to the node embedding module of our reproduced RioGNN while the embedding dimension was varied. Each condition was repeated 30 times, and RioGNN-sr showed relatively superior performance compared to the reproduced RioGNN, as shown in Figure 6. These results suggest that the proposed scale regularization method is robust to changes in hyperparameters such as the embedding dimension.

Figure 7 visualizes how the distribution of the L1 norms of embedding vectors changes over epochs for the proposed RioGNN-sr and the original RioGNN. For a fair comparison, the RioGNN-sr distribution was computed from the embedding vectors before normalization, not from the normalized vectors. For RioGNN, the L1 norm distribution tended, on average, to increase more irregularly than that of RioGNN-sr during approximately half of the total training epochs. For RioGNN-sr, by contrast, the distribution decreased slightly and irregularly during the first quarter of the training epochs, after which its variance converged continuously and stably. As mentioned earlier, embedding vectors whose magnitudes or deviations become excessively large or change irregularly may negatively affect the classifier networks; from this perspective, RioGNN-sr exhibits the more favorable distribution. This visualization suggests that applying the proposed scale regularization method to node embedding contributes to the improved performance.
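One simple way to summarize the per-epoch distribution shown in Figure 7 is the mean and variance of the per-node L1 norms. The sketch below assumes that each epoch’s node embeddings are available as lists of floats; the function names and the toy values are hypothetical, and, following the text above, the inputs would be the embedding vectors before normalization.

```python
import statistics
from typing import Sequence, Tuple


def l1_norm(vec: Sequence[float]) -> float:
    """L1 norm: sum of the absolute values of the components."""
    return sum(abs(x) for x in vec)


def l1_norm_summary(embeddings: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Mean and population variance of the per-node L1 norms for one epoch."""
    norms = [l1_norm(h) for h in embeddings]
    return statistics.mean(norms), statistics.pvariance(norms)


# Hypothetical embeddings for two epochs (three nodes, two dimensions each).
epochs = [
    [[0.5, -0.5], [1.0, 0.0], [0.0, 1.5]],
    [[3.0, -1.0], [0.2, 0.1], [5.0, 2.0]],
]
history = [l1_norm_summary(e) for e in epochs]
for epoch, (mean, var) in enumerate(history):
    print(f"epoch {epoch}: mean L1 = {mean:.3f}, variance = {var:.3f}")
```

Appending one summary per training epoch produces the kind of trajectory plotted in Figure 7; a sudden jump in either the mean or the variance corresponds to the irregular scale changes discussed above.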

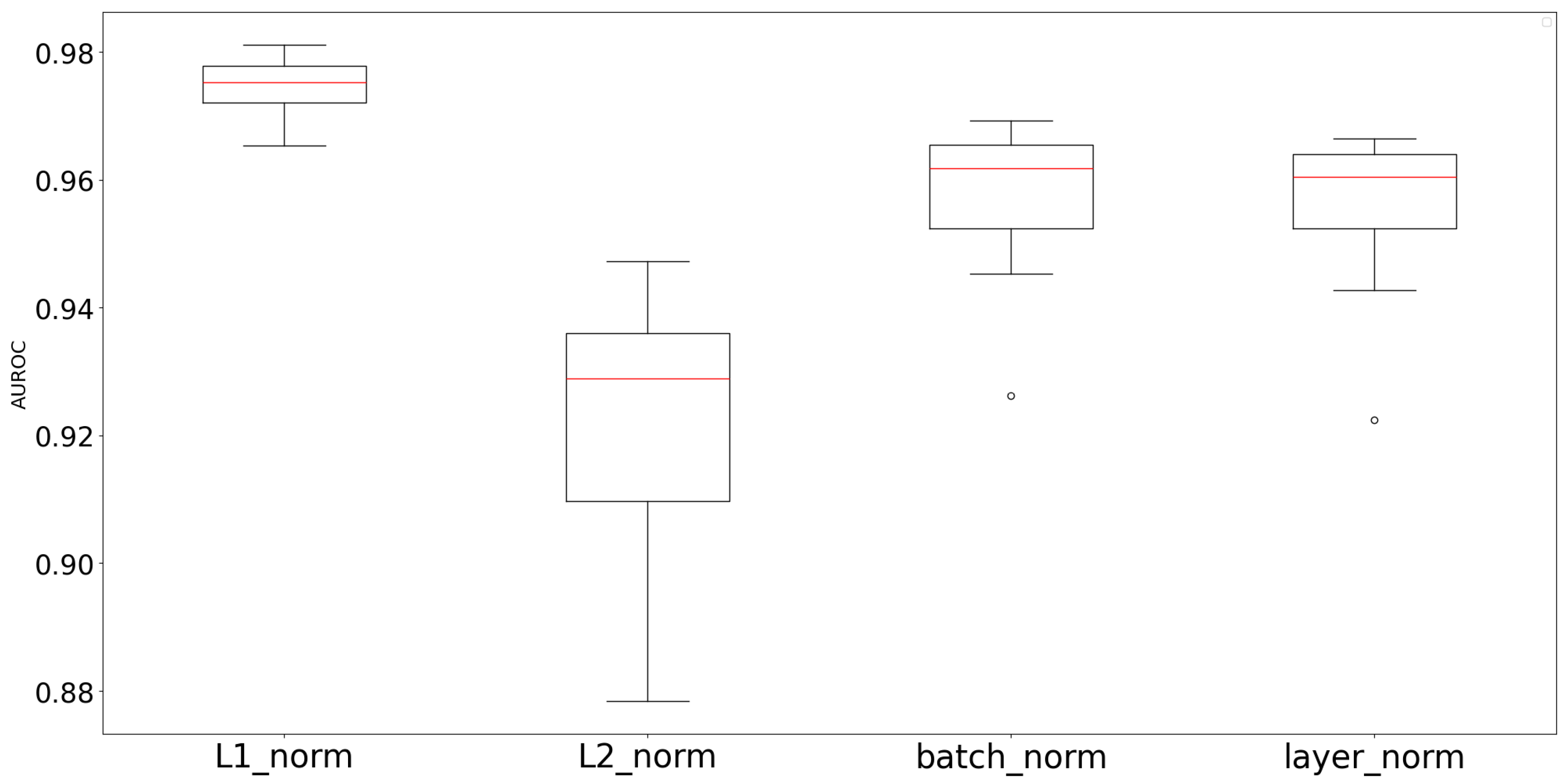

In addition, we conducted comparative experiments to investigate the effects of normalization methods other than the scale regularization based on the mean L1 norm of embedding vectors. In Figure 8, the tick label “L1 norm” denotes the vanilla version of RioGNN-sr, whereas the tick label “L2 norm” denotes the variant in which embedding vectors were normalized by the mean L2 norm in the scale regularization operation.

Figure 8 demonstrates that the proposed L1 norm-based scale regularization achieves the best performance.

In particular, this result highlights the limitations of batch normalization and layer normalization, which normalize each attribute across instances and each instance across attributes, respectively. By contrast, the proposed L1 norm-based scale regularization reduces excessive absolute deviations among embedding vectors while preserving their relative distances, thereby enhancing stability without distorting the structure of the embedding space.
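The distance-preservation argument can be checked numerically. The sketch below assumes a simplified form of scale regularization in which every embedding in a batch is divided by one shared scalar, the batch-mean Lp norm, optionally multiplied by a hypothetical `factor`; the exact formulation, including Equation (11) and the rc-factor, is defined in the method section and is not reproduced here. For contrast, `batch_norm_like` and `layer_norm_like` (hypothetical names) standardize per attribute and per instance, respectively, without learnable affine parameters.

```python
import math
import statistics
from typing import List, Sequence

Matrix = List[List[float]]


def scale_regularize(h: Sequence[Sequence[float]], p: int = 1, factor: float = 1.0) -> Matrix:
    """Divide every embedding by one shared scalar: the batch-mean Lp norm.

    `factor` is a hypothetical stand-in for any extra scaling coefficient.
    """
    norms = [sum(abs(x) ** p for x in row) ** (1.0 / p) for row in h]
    mean_norm = statistics.mean(norms)
    if mean_norm == 0.0:
        return [list(row) for row in h]  # all-zero batch: nothing to rescale
    return [[factor * x / mean_norm for x in row] for row in h]


def batch_norm_like(h: Sequence[Sequence[float]], eps: float = 1e-5) -> Matrix:
    """Standardize each attribute (column) across the batch; no learnable affine."""
    cols = list(zip(*h))
    mus = [statistics.mean(c) for c in cols]
    sds = [math.sqrt(statistics.pvariance(c) + eps) for c in cols]
    return [[(x - m) / s for x, m, s in zip(row, mus, sds)] for row in h]


def layer_norm_like(h: Sequence[Sequence[float]], eps: float = 1e-5) -> Matrix:
    """Standardize each instance (row) across its attributes; no learnable affine."""
    out = []
    for row in h:
        m = statistics.mean(row)
        s = math.sqrt(statistics.pvariance(row) + eps)
        out.append([(x - m) / s for x in row])
    return out


def distance_ratio(h: Sequence[Sequence[float]]) -> float:
    """d(h0, h1) / d(h0, h2) in Euclidean distance; unchanged by a shared rescaling."""
    return math.dist(h[0], h[1]) / math.dist(h[0], h[2])


# Hypothetical 3-dimensional embeddings for three nodes.
h = [[1.0, 10.0, 0.5], [2.0, 30.0, 1.0], [4.0, 20.0, 3.0]]
for name, out in [
    ("raw", h),
    ("scale reg (L1)", scale_regularize(h, p=1)),
    ("scale reg (L2)", scale_regularize(h, p=2)),
    ("batch-norm-like", batch_norm_like(h)),
    ("layer-norm-like", layer_norm_like(h)),
]:
    print(f"{name:16s} distance ratio = {distance_ratio(out):.4f}")
```

Both scale-regularized variants reproduce the raw distance ratio, while the per-attribute and per-instance standardizations change it. Because any shared scalar preserves relative distances, this property alone does not separate the L1 and L2 variants; the difference reported in Figure 8 would have to come from the value of the scalar itself, which this sketch does not attempt to explain.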

Limitations and Discussion

In this section, we examine both the strengths and the limitations of the proposed approach and discuss possible future work. A key strength of scale regularization is its ability to mitigate the shortcomings of the graph adaptive filtering strategies commonly used in existing graph fraud detection models. In this sense, scale regularization offers a generalizable way to stabilize node embeddings and is relatively easy to integrate into various models.

However, the introduction of the

inevitably adds a dependency on this additional hyperparameter. To investigate its influence on model performance, we ran several trials varying the rc-factor value based on parameter

defined in Equation (11). When substituting

value into the

, the results reported in Table 2 and Figure 6 show that favorable performance can be achieved.

Figure 9 presents experimental results where the

was varied around

to evaluate its concrete effects across models. As observed in Figure 9, the

newly introduced in scale regularization exhibits relatively low hyperparameter robustness. Therefore, similar to how the

value was derived in Equation (11), future work could explore analogous ways to derive the

value.