2. Materials and Methods

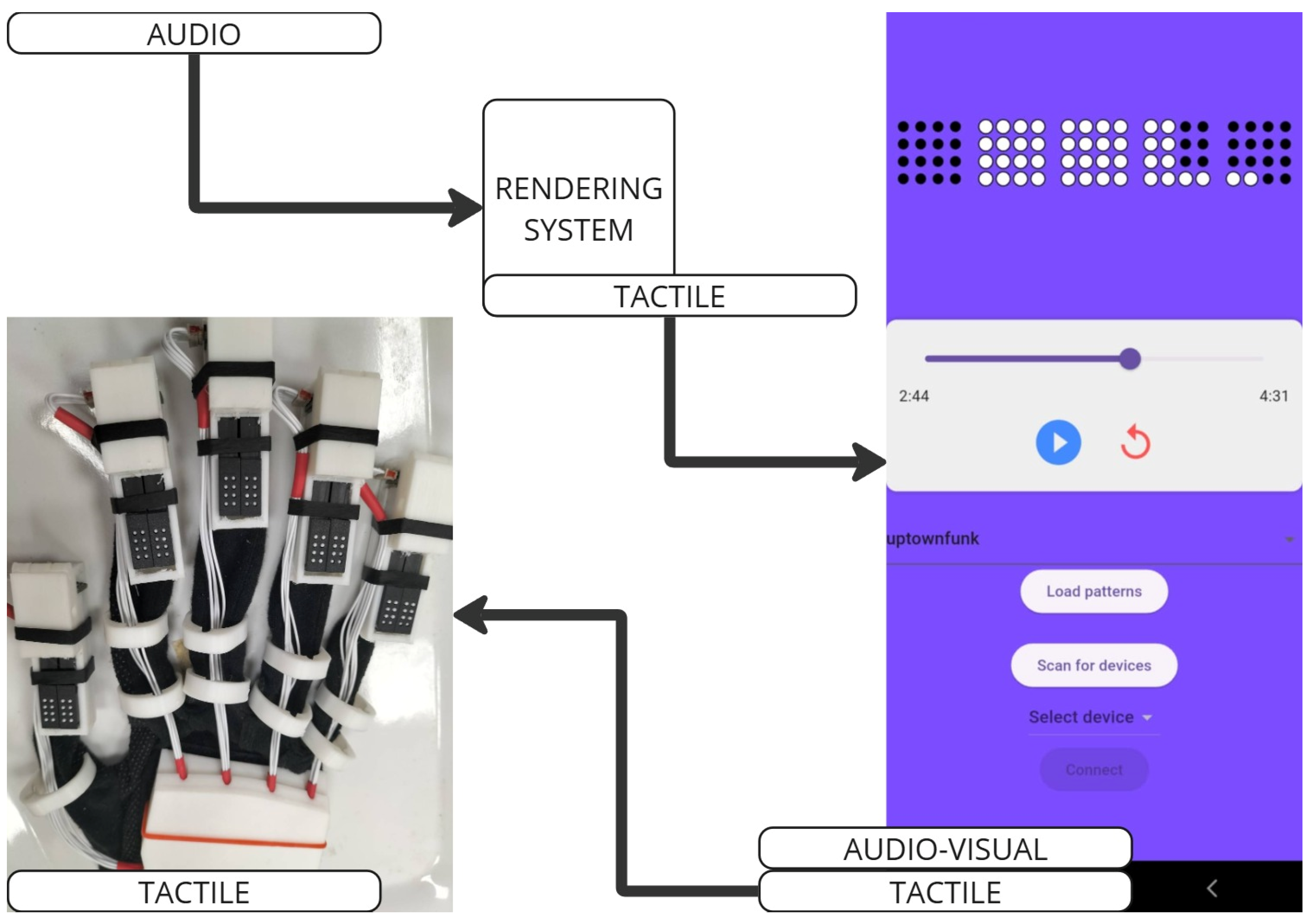

The HMP-WD is composed of (1) the haptic glove, (2) the mobile app, and (3) the audio-tactile rendering system. Given an audio file, the rendering system extracts four music features (pitch, melody, rhythm, and timbre) using source separation, spectral flux, YIN, discretization, and feature-pin mapping, and embeds the extracted features into tactile patterns. The tactile patterns are stored in the mobile app. When a song is played, the app sends the patterns to the haptic glove while synchronizing the visual and audio feedback with the tactile feedback. The haptic glove then processes the patterns and activates the corresponding pins.

Figure 1 illustrates the system architecture of the HMP-WD.

The haptic glove consists of P20 braille cells (metec Ingenieur GmbH, Stuttgart, Germany) connected to a custom-designed printed circuit board (PCB), both housed in 3D-printed enclosures attached to off-the-shelf gloves. The PCB features an STM32F10 microcontroller and a JDY33 Bluetooth module, which allows it to receive tactile patterns from the mobile app via Bluetooth Low Energy (BLE). The system is powered by a 5 V lithium battery with a 2 A current rating and supports automatic recharging. A DC-DC boost converter managed by an LM3488 controller supplies 185 V for the actuators and at least 3.3 V for control. Together, the glove's components balance the portability, efficiency, and reliability required for HMP-WD applications.

Each finger is equipped with two piezoelectric actuators, each containing eight pins that deliver a minimum tactile force of 17 cN with a fast dot rise time of 50 ms, well within the range of human tactile sensitivity (≥10 cN, 1–100 ms). This provides high-resolution vibrotactile feedback that can communicate rapid changes in music features. The HMP-WD takes advantage of the piezoelectric actuators' capacity for fine localization, which is further supported by their placement at the user's fingertips, where 44–60% of the hand's Pacinian corpuscles are located [31]. Although no HMP appears to have used piezoelectric actuators for audio-tactile feedback, the idea has been suggested before, as these actuators are known for their extremely fast response times and wide frequency ranges, which suit the requirements of a tactile music display [32]. Recently, the same actuator configuration has been used to provide localized cutaneous force feedback in robotic-assisted surgery systems [33] and virtual reality immersion [34,35], highlighting its reliability for HMP applications.

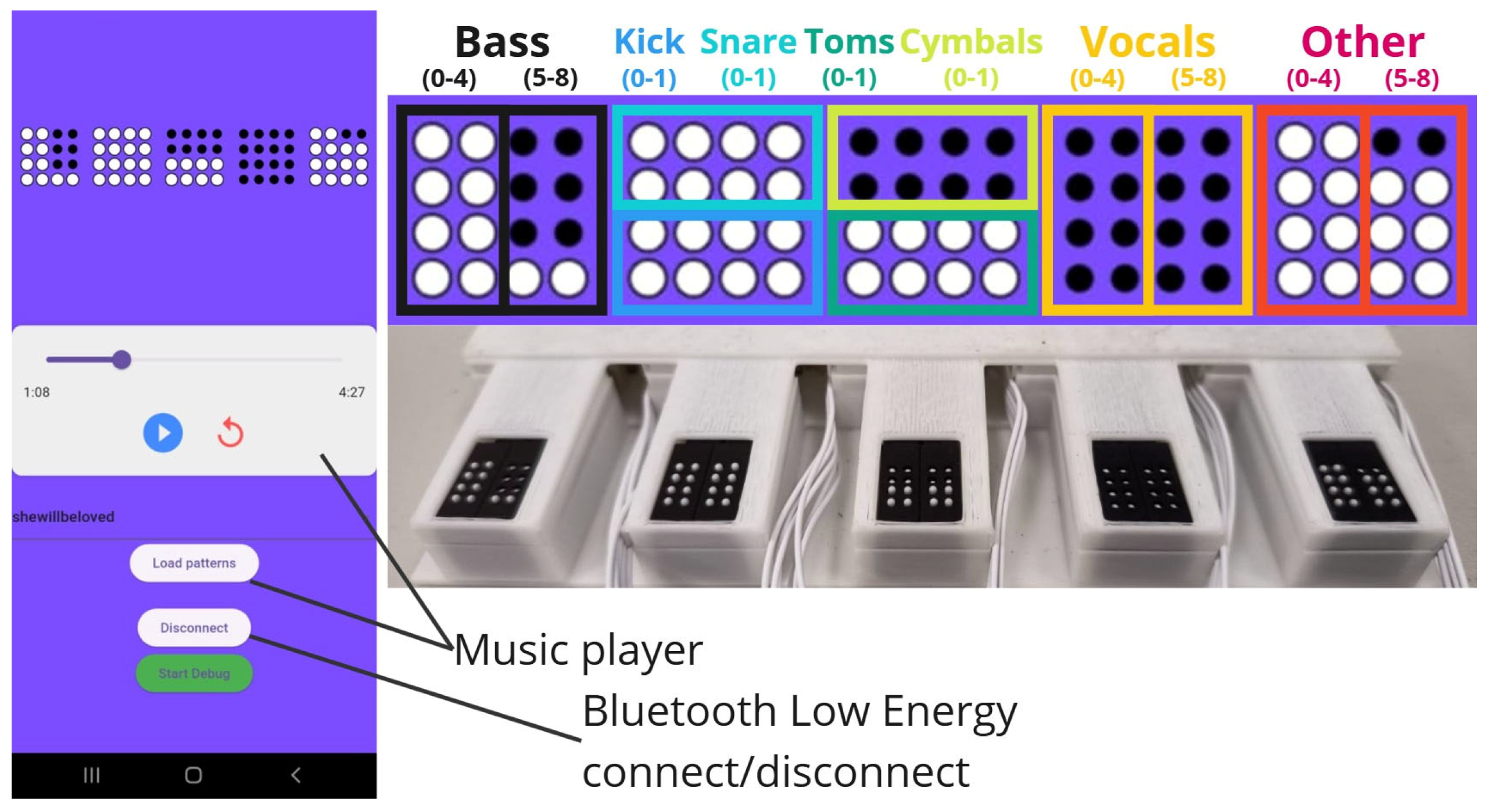

Figure 2 presents the components and control pipeline of the haptic gloves used by the HMP-WD.

The mobile app, developed using Flutter for cross-platform compatibility, delivers synchronized visual, audio, and vibrotactile stimuli through real-time BLE communication with the haptic gloves while the music plays. To communicate timbre, the feature-pin mapping assigns instruments to different fingers. The mapping is centered on the percussive elements, which are assigned to the index and middle fingers because these fingers are commonly used for tapping to the rhythm in finger-tapping studies [36]. Among the remaining melodic elements, the bass is assigned to the thumb because it occupies the lowest frequency band [23], while the vocals and accompaniment are sent to the ring and pinky fingers, respectively. The percussive elements are ordered from the lowest frequency band to the highest in a down-up, left-right manner: kick and snare are assigned to the index finger, while toms and cymbals belong to the middle finger. The pitch display of the melodic elements follows a similar pattern: a pitch movement from level 0 to 4 is rendered as an upward movement across the pins, while a movement from 0 to 8 is rendered as an upward, rightward, and then upward movement to communicate melody. Using a rightward movement to signify an increase in pitch mirrors the behavior of HMPs that use tactile illusions [24,26], where lower-pitched notes are assigned to the left side and higher-pitched notes to the right. An advantage of piezoelectric actuators for this application, however, is that pitch information can be localized across multiple levels on the fingertips alone, without tactile illusions, leaving enough space to give each instrument its own finger.

Figure 3 shows the mobile application with the assignment of music elements to their corresponding pins.

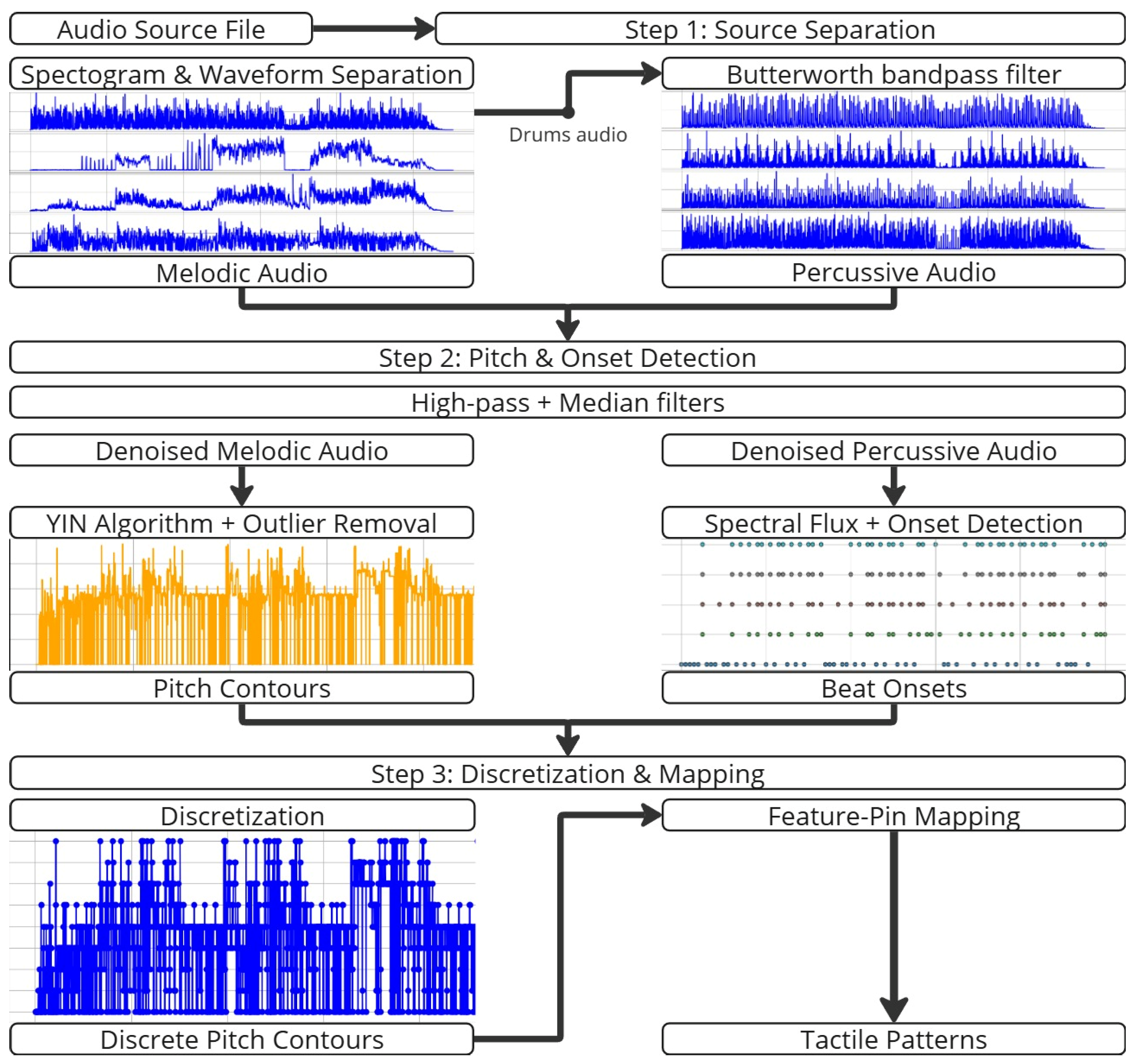

The rendering system is implemented in Python (version 3.11.5); it takes an audio file path, extracts music features, and embeds them in tactile signals. The algorithm performs three steps in sequence: (1) source separation, (2) pitch and onset detection, and (3) discretization and feature-pin mapping.

Source separation isolates the different instruments in a song into their own audio files. To achieve this, we employed a pre-trained hybrid separation model [37] that separates the audio into four stems: drums, bass, vocals, and other accompaniments, with the latter three forming the melodic tracks. The model uses a Transformer architecture and combines spectrogram-based and waveform-based techniques to achieve high-accuracy source separation. Because drums are unpitched percussive sounds, the drum stem is further split into four frequency subsets, which are later used to detect beat onsets. The subdivisions were selected heuristically based on where each drum sound is most distinctly heard: 30–150 Hz for kicks, 150–800 Hz for snares, 800–5000 Hz for toms, and 5000–12,000 Hz for cymbals. Each subset was extracted from the drum stem using a Butterworth bandpass filter, resulting in four percussive tracks.
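The band split can be sketched with SciPy's Butterworth design. Only the four band edges come from the text; the filter order (4), zero-phase filtering with `sosfiltfilt`, and the name `split_drum_stem` are assumptions. The sample rate must exceed 24 kHz so that the 12 kHz cymbal edge lies below the Nyquist frequency.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

# Band edges (Hz) for each drum subset, as listed in the text.
DRUM_BANDS_HZ = {
    "kick": (30.0, 150.0),
    "snare": (150.0, 800.0),
    "toms": (800.0, 5000.0),
    "cymbals": (5000.0, 12000.0),
}


def split_drum_stem(drums, sr, order=4):
    """Split a mono drum stem into four band-limited percussive tracks."""
    if sr / 2 <= max(hi for _, hi in DRUM_BANDS_HZ.values()):
        raise ValueError("sample rate too low for the 12 kHz cymbal band edge")
    x = np.asarray(drums, dtype=float)
    tracks = {}
    for name, (lo, hi) in DRUM_BANDS_HZ.items():
        # Second-order sections keep narrow low-frequency bands numerically stable.
        sos = butter(order, [lo, hi], btype="bandpass", fs=sr, output="sos")
        tracks[name] = sosfiltfilt(sos, x)  # zero-phase, so onset timing is preserved
    return tracks
```

Second-order sections are used because a 30–150 Hz band at 44.1 kHz is numerically fragile in transfer-function (`ba`) form.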

Pitch and onset detection performs feature extraction on each separated audio track individually, so that the features of one track are not masked by another. The process begins by removing noise at the signal level using two filters. A Butterworth high-pass filter removes low-frequency noise such as microphone rumble and noise from external movements. A median filter then replaces each sample with the median of its surrounding window to remove impulse noise such as clicks and pops. Denoising is an essential preprocessing step that makes voiced segments and real beats more salient for detection, so it is applied first to both the melodic and percussive tracks.

To extract pitch information, autocorrelation measures the similarity between the original signal and a time-lagged copy of itself; this similarity peaks periodically at certain lag values. The best match after zero lag occurs at the fundamental period, revealing the signal's pitch. The autocorrelation function can be defined as

$$R(\tau) = \sum_{n} x[n]\,x[n+\tau]$$

where $x[n]$ is the signal sample at time $n$, $\tau$ is the time lag, and $R(\tau)$ is the similarity between the signal and itself shifted by the time lag. Although autocorrelation is robust to amplitude changes and does not require frequency analysis, it tends to perform poorly in the presence of harmonics, which occur simultaneously with the pitch. The YIN algorithm improves on autocorrelation by using the difference function and the cumulative mean normalized difference function to suppress harmonic peaks and emphasize the true pitch [30]. Our previous method used the Short-Time Fourier Transform and the Quadratically Interpolated Fast Fourier Transform for spectral peak estimation [38], but these are at least as susceptible to the noise and harmonics that disrupt accurate pitch detection, suggesting that YIN may be a better fit for HMP applications.
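The core YIN steps can be sketched for a single frame in NumPy. The sketch follows the published algorithm's difference function, cumulative mean normalized difference, and absolute threshold, but omits parabolic interpolation and the best-local-estimate refinement. `yin_pitch`, the 0.1 threshold, and the 50–1000 Hz search range are assumptions rather than the study's settings. Unvoiced frames return 0, following the convention of setting empty pitch values to zero.

```python
import numpy as np


def yin_pitch(frame, sr, fmin=50.0, fmax=1000.0, threshold=0.1):
    """Estimate the pitch (Hz) of one frame with the core YIN steps; 0.0 if unvoiced."""
    x = np.asarray(frame, dtype=float)
    tau_min, tau_max = int(sr / fmax), int(sr / fmin)
    w = len(x) - tau_max  # integration window length
    if w <= 0:
        raise ValueError("frame too short for the requested fmin")

    # Step 1: difference function d(tau) = sum_j (x[j] - x[j + tau])^2
    d = np.zeros(tau_max + 1)
    for tau in range(1, tau_max + 1):
        diff = x[:w] - x[tau:tau + w]
        d[tau] = np.dot(diff, diff)

    # Step 2: cumulative mean normalized difference
    # d'(tau) = d(tau) / ((1 / tau) * sum_{j=1..tau} d(j)), with d'(0) = 1
    cmndf = np.ones(tau_max + 1)
    running_sum = np.cumsum(d[1:])
    taus = np.arange(1, tau_max + 1)
    cmndf[1:] = np.where(running_sum > 0,
                         d[1:] * taus / np.maximum(running_sum, 1e-12), 1.0)

    # Step 3: first lag below the absolute threshold, then slide to its local minimum
    for tau in range(max(tau_min, 1), tau_max + 1):
        if cmndf[tau] < threshold:
            while tau + 1 <= tau_max and cmndf[tau + 1] < cmndf[tau]:
                tau += 1
            return sr / tau
    return 0.0
```

Without interpolation the estimate is quantized to integer lags, so a 220 Hz tone at 16 kHz resolves to a lag of 73 samples (about 219.2 Hz). Adding a strong second harmonic does not pull the estimate to 440 Hz, because the normalized difference at half the period stays well above the threshold.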

To detect beat onsets, spectral flux computes how much the frequency-domain representation of the audio changes from one frame to the next. Sharp increases in the spectrum indicate points where a percussive instrument was likely struck, signifying a beat onset. Changes in the spectrum are quantified by the onset strength envelope, given by

$$o(t) = \sum_{f} \max\bigl(0,\; S(t, f) - S(t-1, f)\bigr)$$

where $o(t)$ is the onset strength at time frame $t$, $f$ is the frequency bin index, $S$ is the magnitude spectrum, and the max function implements half-wave rectification. Local thresholds are then applied to the envelope to pick the peaks that represent beat onsets [39]. Although spectral flux has been used for onset detection in pitched instruments [40,41], it has also proven reliable for beat onset detection in rhythm-based applications [42,43]. Energy-peak detection, as used in our previous method, remains a simple approach that is effective when beats always coincide with loudness spikes, but spectral flux looks beyond loudness to changes across frequencies while remaining efficient enough for use in HMPs.
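A compact spectral-flux onset detector in NumPy. `onset_strength` implements the envelope $o(t)$ with the magnitude spectrum as $S$. The frame size (1024), hop (512), Hann window, and the peak-picking rule (a local maximum over ±8 frames that also exceeds the local mean plus 0.1 times the global maximum) are assumptions standing in for the unspecified local thresholds.

```python
import numpy as np


def onset_strength(x, n_fft=1024, hop=512):
    """Spectral flux: summed half-wave-rectified increase in magnitude per frame."""
    x = np.asarray(x, dtype=float)
    if len(x) < n_fft:
        raise ValueError("signal shorter than one frame")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    S = np.abs(np.fft.rfft(frames, axis=1))             # S[t, f], magnitude spectrum
    flux = np.maximum(0.0, S[1:] - S[:-1]).sum(axis=1)  # max(0, .) = half-wave rectification
    return np.concatenate([[0.0], flux])                # frame 0 has no previous frame


def pick_onsets(o, sr, hop=512, win=8, delta=0.1):
    """Return onset times (s) of frames that are local maxima above an assumed local threshold."""
    o = np.asarray(o, dtype=float)
    if o.size == 0 or o.max() <= 0:
        return np.array([])
    onsets = []
    for t in range(len(o)):
        local = o[max(0, t - win):t + win + 1]
        threshold = local.mean() + delta * o.max()
        if o[t] > 0 and o[t] == local.max() and o[t] >= threshold:
            onsets.append(t * hop / sr)
    return np.array(onsets)
```

Because only increases in each bin count toward $o(t)$, a soft drum hit that adds energy in a few bins can still produce a peak even when the overall loudness barely changes.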

To remove unreliable pitch and beat detections as well as noise, the root mean square (RMS) energy of each frame is calculated. The RMS energy of an audio signal is defined as

$$\mathrm{RMS} = \sqrt{\frac{1}{N}\sum_{n=0}^{N-1} x[n]^{2}}$$

where $x[n]$ is the $n$-th sample of the signal frame and $N$ is the number of samples in the frame. The RMS energy is then expressed in decibels and compared against a predefined threshold (35 dB in this study) to remove frames in which the estimated pitch or beat onsets are too quiet and are likely false detections or noise. Because the pitch values generally follow non-normal distributions that exhibit skewness and platykurtosis, Tukey's method is used to detect and remove outliers. The technique uses the standard interquartile range (IQR) rule with a multiplier of 1.5 to clean the pitch contours.
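The RMS gate and Tukey fences can be sketched as follows. The decibel reference is an assumption: the text gives a 35 dB threshold without stating the reference level, so `ref` is exposed as a parameter (with `ref=1.0` and samples on a 16-bit integer scale, 35 dB corresponds to an RMS of about 56). Outliers are set to zero here, treating them like unvoiced frames; whether the study deletes or zeroes them is not stated, and all function names are hypothetical.

```python
import numpy as np


def rms_db(frame, ref=1.0, eps=1e-10):
    """RMS of one frame in decibels relative to `ref` (the reference level is an assumption)."""
    x = np.asarray(frame, dtype=float)
    rms = np.sqrt(np.mean(np.square(x)))
    return 20.0 * np.log10(max(rms, eps) / ref)


def gate_by_rms(values, frames, threshold_db=35.0, ref=1.0):
    """Zero out per-frame pitch or onset values whose frame is quieter than the threshold."""
    out = np.array(values, dtype=float)
    for i, frame in enumerate(frames):
        if rms_db(frame, ref) < threshold_db:
            out[i] = 0.0
    return out


def remove_outliers_tukey(pitch, k=1.5):
    """Zero out voiced pitch values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    p = np.array(pitch, dtype=float)
    voiced = p > 0                    # unvoiced (zero) frames are ignored
    if voiced.sum() < 4:              # too few points for stable quartiles
        return p
    q1, q3 = np.percentile(p[voiced], [25, 75])
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    p[voiced & ((p < low) | (p > high))] = 0.0
    return p
```

Tukey's fences depend only on quartiles, so they do not assume normality, which matches the skewed, platykurtic pitch distributions described above.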

Discretization transforms the continuous pitch contours into eight discrete levels based on their percentile relative to the maximum pitch value in the audio track; time points with no pitch value are set to zero. Since beat onsets are already binary, they are not discretized further. To ensure uniform spacing for BLE communication, both the discretized pitch contours and the beat onsets are aligned to 100 ms time bins, and empty bins are set to zero. Finally, the signals undergo feature-pin mapping: the onsets and discretized pitch values are formatted in a dictionary, which is then converted to strings. The resulting tactile patterns follow the feature-pin mappings and are stored in the mobile app, which later sends them to the haptic gloves.
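The discretization, 100 ms binning, and string packing can be sketched as below. Several details are assumptions: levels are spread evenly over the track's non-zero pitch range, each 100 ms bin keeps the highest level that falls in it, and the dictionary is serialized with `json.dumps` as one digit string per track. The actual string format sent over BLE is not given in the text, and all function names are hypothetical.

```python
import json
import numpy as np

N_LEVELS = 8
BIN_MS = 100  # 100 ms bins, as in the text


def discretize(pitch_hz):
    """Map pitch (Hz) to levels 1..8 over the non-zero range; unvoiced (0) stays 0."""
    p = np.asarray(pitch_hz, dtype=float)
    levels = np.zeros(len(p), dtype=int)
    voiced = p > 0
    if not voiced.any():
        return levels
    lo, hi = p[voiced].min(), p[voiced].max()
    if hi == lo:
        levels[voiced] = 1
        return levels
    scaled = 1 + np.floor(N_LEVELS * (p[voiced] - lo) / (hi - lo))
    levels[voiced] = np.clip(scaled, 1, N_LEVELS).astype(int)
    return levels


def _bin_index(times_s, n_bins):
    # Round to whole milliseconds first to avoid float artifacts such as 0.3 / 0.1 < 3.
    ms = np.round(np.asarray(times_s, dtype=float) * 1000).astype(int)
    return np.minimum(ms // BIN_MS, n_bins - 1)


def bin_levels(levels, frame_times_s, duration_s):
    """Keep the highest level per 100 ms bin; empty bins stay 0 (assumed aggregation)."""
    n_bins = -(-int(round(duration_s * 1000)) // BIN_MS)  # ceiling division
    out = np.zeros(n_bins, dtype=int)
    np.maximum.at(out, _bin_index(frame_times_s, n_bins), np.asarray(levels, dtype=int))
    return out


def bin_onsets(onset_times_s, duration_s):
    """Mark 1 in every 100 ms bin that contains at least one onset."""
    n_bins = -(-int(round(duration_s * 1000)) // BIN_MS)
    out = np.zeros(n_bins, dtype=int)
    out[_bin_index(onset_times_s, n_bins)] = 1
    return out


def to_pattern_string(tracks):
    """Serialize {track: per-bin values} as JSON with one digit string per track."""
    return json.dumps({name: "".join(str(int(v)) for v in vals)
                       for name, vals in tracks.items()})
```

Since levels never exceed 8, each 100 ms bin fits in a single character, which keeps the per-track strings compact.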

Figure 4 illustrates the proposed audio-tactile rendering algorithm.

4. Discussion

The HMP-WD attained relatively high scores in its respective metrics on the tactile display of pitch, melody, timbre, and rhythm, and improvements were also observed when using spectral flux and YIN in the feature extraction process. Furthermore, these improvements came with only a small increase in the algorithm’s processing time compared to our previous method.

For pitch display, the current methods were formulated with the hypothesis that increasing the number of discretization levels from four to eight would reduce the MAE of the tactile signals, since the distance between each discretized time point and the original pitch would become smaller. Compared with the previous method's MAE of 0.1240, the current implementation showed an overall improvement, with an MAE of 0.1020, deviating from the original audio by only 10.2%. While the improvement for the bass stem is negligible, the accompaniment and vocal stems improved substantially, with the latter reducing MAE by 0.0416.
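The intuition behind the four-to-eight-level hypothesis can be checked with a toy calculation: quantizing a normalized contour into $N$ equal levels bounds the per-point error by $1/N$, so for evenly spread pitch values the mean absolute error is roughly $1/(2N)$ and halves when $N$ doubles. The synthetic contour and ceiling quantizer below are chosen for illustration only; this is not the study's evaluation procedure.

```python
import numpy as np


def quantize(contour, n_levels):
    """Snap a normalized contour in (0, 1] up to the nearest of n_levels equal steps."""
    c = np.asarray(contour, dtype=float)
    k = np.clip(np.ceil(c * n_levels), 1, n_levels)
    return k / n_levels


def mae(a, b):
    return float(np.mean(np.abs(np.asarray(a, dtype=float) - np.asarray(b, dtype=float))))


contour = np.linspace(0.001, 1.0, 1000)  # synthetic, evenly spread pitch values
mae4 = mae(contour, quantize(contour, 4))
mae8 = mae(contour, quantize(contour, 8))
print(f"4 levels: {mae4:.4f}, 8 levels: {mae8:.4f}")
```

Real pitch contours are not uniformly distributed, which is one reason the measured improvement is smaller than this idealized halving.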

The disparity in results among the three stems can be explained by the non-zero pitch ranges in the original audio, which play an integral role during discretization. Notably, only the non-zero pitch range is considered when the eight levels are determined. The rationale is that anchoring the levels at zero reduces touch-perceptible level changes as the original pitch increases. For instance, a stem whose non-zero pitch range is 400–600 Hz would feel more muffled than one with a range of 200–400 Hz, even if both carry the same melody, because the former would be confined to fewer of the upper levels than the latter. Conversely, if both use only their non-zero pitch range, pitch movements are equally perceptible regardless of raw value, leading to better melody display. Returning to the disparity, the bass stem almost never has a higher raw pitch than the other stems, and it generally has a smaller non-zero pitch range. In one song, the estimated range for bass was 50–160 Hz, while the ranges were 50–725 Hz for vocals and 50–650 Hz for accompaniments. As a result, after discretization, the pitch levels for bass are shifted relatively higher or lower than those of the other stems. Despite this disparity across stems, increasing the number of discretization levels was still shown to improve the HMP-WD's pitch display.
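The 400–600 Hz versus 200–400 Hz example can be made concrete. In this sketch, both functions are hypothetical and all frames are assumed voiced. Anchoring the eight levels at zero confines the 400–600 Hz stem to levels 6–8 and the 200–400 Hz stem to levels 4–8, while range-based levels give both stems all eight levels for the same melodic shape.

```python
import numpy as np

N_LEVELS = 8


def levels_from_zero(f):
    """Zero-anchored levels: ceil(8 * f / f_max), clipped to 1..8."""
    f = np.asarray(f, dtype=float)
    return np.clip(np.ceil(N_LEVELS * f / f.max()), 1, N_LEVELS).astype(int)


def levels_from_range(f):
    """Levels spread evenly over the non-zero range [f_min, f_max]."""
    f = np.asarray(f, dtype=float)
    lo, hi = f.min(), f.max()
    return np.clip(1 + np.floor(N_LEVELS * (f - lo) / (hi - lo)), 1, N_LEVELS).astype(int)


shape = np.linspace(0.0, 1.0, 50)  # the same melody shape for both stems
high_stem = 400 + 200 * shape      # 400-600 Hz
low_stem = 200 + 200 * shape       # 200-400 Hz

for name, stem in [("400-600 Hz", high_stem), ("200-400 Hz", low_stem)]:
    print(name, sorted(set(levels_from_zero(stem))), sorted(set(levels_from_range(stem))))
```

The same logic explains the bass stem: its narrow 50–160 Hz range maps onto eight levels very differently from the much wider vocal and accompaniment ranges.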

For melody/timbre display, we hypothesized that using YIN would yield more stable pitch estimates that the tactile signals could replicate better than the coarse pitch contours obtained from spectral peaks, and that increasing the number of discretized pitch levels would allow the tactile signals to capture finer pitch movements. Compared with the previous DTW distance of 0.1392, the current implementation appears to display the overall melody worse, with a distance of 0.1518. A closer inspection reveals that the increase in overall distance is driven by the bass and accompaniment stems, whose distances each increased by about 0.04, even though the vocal stem's distance decreased by 0.0518.
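For reference, a textbook dynamic time warping (DTW) distance with an absolute-difference cost is sketched below. It assumes the two contours are already scaled to a common range such as [0, 1]; how the study normalizes its reported distances is not restated here, so the function is illustrative only.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic O(n*m) DTW with |a_i - b_j| as the local cost."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of a match, an insertion, or a deletion.
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return float(D[n, m])
```

Because DTW can align a stretched or delayed copy of a contour at no cost, it rewards matching the shape of a melody rather than its exact timing, which complements the pointwise MAE; rough, jagged contours such as those from polyphonic stems still accumulate cost at every misaligned step.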

Although YIN is reliable for general pitch estimation, it is best applied to monophonic vocal signals, as observed in other studies [44,45]. Thus, the substantial decrease in DTW distance for the vocal stem is not surprising and instead supports the working hypotheses. The accompaniment stem, on the other hand, is usually polyphonic, with some songs containing multiple instruments such as piano and guitar. YIN still accurately estimates the pitch in polyphonic signals, but because of harmonization, the resulting pitch contours are rougher, making it more difficult for the tactile signals to replicate these sharp pitch movements accurately. Similarly, although the bass stem is usually monophonic, its percussive character inevitably leads to rough pitch contours even with YIN, and combined with the pitch display issues discussed earlier, this degrades the resulting melody display. The working hypotheses were confirmed for the vocal stem, whereas future research can explore pitch estimation or discretization methods that improve the melody display for the bass and accompaniment stems.

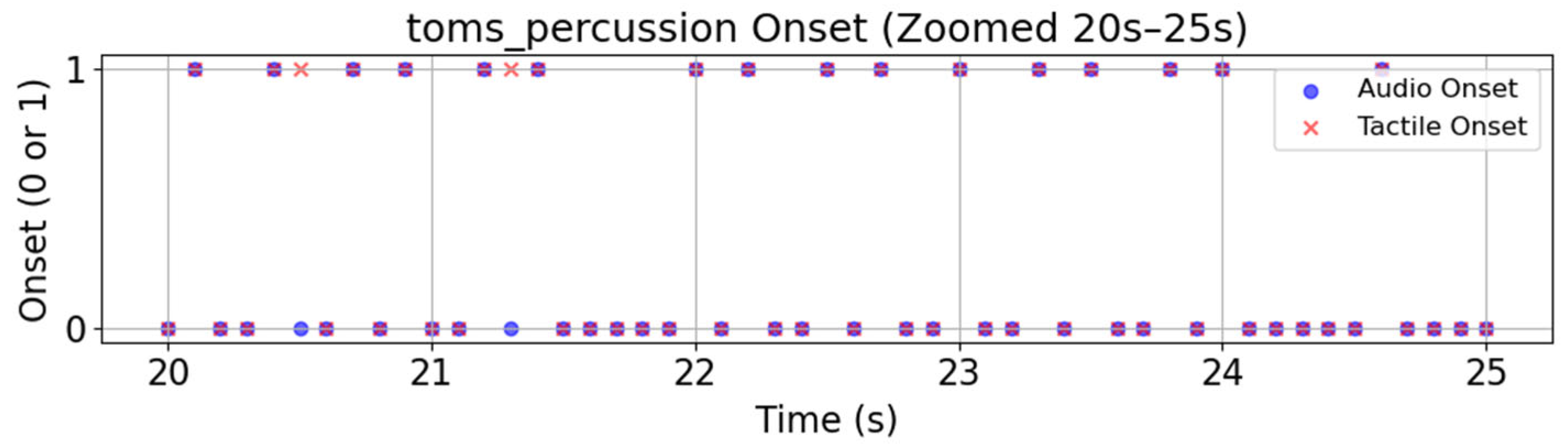

For the rhythm display, we sought to improve on our previous approach using spectral flux and software optimization. Because spectral flux detects beat onsets from changes across frequencies rather than from energy peaks, it no longer picks up volume spikes that may come merely from noise, leading to reduced payloads and latencies. At the same time, we optimized the mobile application's synchronization with the haptic gloves and music playback. As a result, accuracy improved drastically for all drum frequency subsets. Unlike related applications that detect beats directly from the mixed audio, the methods in this study account for softer beats and operate on four frequency subsets that further isolate each type of drum; these subsets are derived from a drum stem that source separation has already isolated from the vocals, bass, and accompaniments.

With these results, the HMP-WD has been shown to effectively display pitch, melody, timbre, and rhythm using only an audio file, extracting features and communicating them within a near-auditory frequency range. It retains the characteristics of the original audio while improving on prior approaches in four key ways. First, this study uses piezoelectric actuators, which offer fine localization and, unlike other popular actuators, can communicate frequencies beyond 1000 Hz through vibrotactile feedback. Among HMP-WD studies with a glove form factor, this device aligns with prior studies that applied stimuli to the fingertips [19,20,21] rather than only to the phalanges, back of the hand, or palm [17,18]. The use of a mobile app to transmit tactile signals via Bluetooth has been explored before [19], but this study advances the approach by using BLE to improve portability. Second, the study introduces an algorithm that does not require a song's MIDI file to extract pitch information, proposing YIN and discretization as an efficient and effective alternative. Unlike previous studies limited by external software [25,26] and a fixed number of notes [1,5,6,24,25,26], the proposed methods generate continuous pitch contours that can be discretized into any number of levels to match the number of actuators. This also removes the reliance on tactile illusions to increase pitch display capacity [24,26]. Third, this study provides a framework for beat extraction in HMP applications. While prior studies mentioned aligning vibrations with a beat [20], their accuracy was not validated. The proposed methods allow beat extraction without relying on a bass band limited to 200 Hz [23,27,28] and offer a frequency-based approach that detects soft-volume beats, in contrast to the energy-based approach [23]. Fourth, source separation is used to separate the sounds of different instruments to display timbre, similar to prior studies [5,26].

A limitation of the proposed algorithm is that the audio file must be preprocessed before it can be used in the HMP-WD. However, despite using spectral flux and YIN for multi-feature extraction, the methods take an average of only 180.45 s to process an audio file from start to finish, after which the resulting patterns are available for permanent offline use. This is an increase of less than 10 s compared with our previous methods, highlighting the overall efficiency of the audio-tactile rendering algorithm.

5. Conclusions

With a normalized MAE of 0.1020, a normalized DTW distance of 0.1518, and a rhythmic pattern accuracy of 97.56%, the wearable HMP effectively communicates multiple musical features. The proposed audio-tactile rendering algorithm achieves this without the pitfalls of prior approaches. By using piezoelectric actuators, this HMP also overcomes the 1000 Hz frequency range barrier of previous devices. Additionally, the HMP-WD is more efficient, with an average preprocessing time of only 180.45 s and no need for MIDI files or external software. The study also proposes a volume-independent method for extracting beats from music in HMP applications and a way to display timbre information using source separation and feature-pin mapping.

The findings suggest that the HMP-WD can communicate the pitch, melody, timbre, and rhythm of music at an auditory frequency range while retaining most of the characteristics of the original audio. The YIN algorithm was shown to greatly improve the tactile display of vocals and slightly improve the display of bass and accompaniments. Spectral flux and software optimizations significantly improved rhythm display.

Future research should focus on reducing the MAE and DTW distances of the melodic displays and validating the results with more song data. Other actuators could be explored to improve the results, and the methods could be adjusted, for example by using probabilistic YIN [45] or alternative source separation, beat extraction, and discretization methods. Subjective experiences with the device could also be validated through user testing. Furthermore, these methods could be used to enhance the experience of playing musical instruments [22] or integrated with other modalities such as VR [2]. Future work could also study human emotional and cognitive perception of music using the HMP-WD.