Abstract

Six years after its deployment, SatShipAI, an operational platform combining AI models with Sentinel-1 SAR imagery and AIS data, has provided robust maritime surveillance around Denmark. A meta-analysis of archived outputs, logs, and manual reviews shows stable vessel detection and classification performance over time, including successful cross-sensor application to X-band SAR data without retraining. Key operational challenges included orbit file delays, nearshore detection limits, and emerging infrastructure such as wind farms. The platform proved particularly valuable for detecting offshore “dark” vessels beyond AIS coverage, informing maritime security, traffic management, and emergency response. These findings demonstrate the feasibility, resilience, and adaptability of long-term AI–geospatial systems, offering practical guidance for future autonomous monitoring infrastructure.

1. Introduction

The integration of artificial intelligence (AI) into real-world engineering systems has progressed rapidly in recent years, yet long-term deployments remain relatively underexplored. This article presents insights from SatShipAI, an end-to-end AI-powered platform that has operated for six years, processing Copernicus Sentinel-1 synthetic aperture radar (SAR) satellite imagery for maritime surveillance tasks such as ship detection, type classification, and length estimation. Unlike studies that emphasize isolated benchmarks or state-of-the-art (SOTA) model performance, this work focuses on the sustained application of AI in a live operational setting, highlighting performance evaluation, system-level resilience, failure cases, and insights revealed over time.

The use of computer vision for image analysis, AI-driven autonomous decision-making, and the integration of intelligent systems into engineering applications is becoming increasingly prevalent. The SatShipAI platform serves as a practical example of how AI can be effectively applied to address complex and mission-critical engineering challenges in autonomous monitoring. Operating under real-world constraints and uncertainties, the platform demonstrates the potential and limitations of long-term AI deployment. By examining its life cycle, this study offers valuable insights for researchers and practitioners focused on developing, sustaining, and scaling intelligent systems for continuous operation in similarly demanding environments.

SatShipAI is an operational platform that leverages SAR satellite data in combination with AI models to automatically detect maritime vessels. For demonstration purposes, the system focuses on the waters surrounding Denmark. Upon the availability of a new Sentinel-1 satellite product, the primary processing pipeline is triggered, enabling rapid vessel detection using trained AI models. A secondary pipeline is executed approximately three days later, once historical automatic identification system (AIS) data are released by Danish maritime authorities. This step allows for cross-verification of detections; identification of “dark” vessels, i.e., those without AIS signals; and performance evaluation of the models. The platform demonstrates the practical efficiency of AI in real-world monitoring scenarios: results are produced in under 10 min and include KML outputs compatible with visualization tools such as Google Earth. The consistent performance of the deployed models further contributes to the robustness and reliability of the system.

In contrast to many existing studies or platforms that focus on single-use applications or retrospective analysis, SatShipAI operates continuously and autonomously, producing results in near-real time. This persistent deployment not only highlights the feasibility of long-term AI integration in maritime surveillance but also allows for in-depth analysis of operational reliability, failure modes, model drift, and performance under changing environmental conditions.

The publication of this work is motivated by the pressing necessity to consolidate and reflect upon the extensive operational experience derived from the platform over the past six years. As the development of an upgraded platform version is on the horizon, it has become imperative to document the acquired lessons, recurring challenges, and performance patterns observed throughout its deployment. This comprehensive documentation not only serves as a guide for future enhancements but also enriches the broader research community by sharing practical insights from an ongoing, real-world AI deployment in maritime surveillance. By examining both the strengths and limitations of the current system, this report lays a foundation for informed redesign, promoting transparency and collaboration with other researchers and practitioners in the field. The study provides practical insights into the sustainability and robustness of AI–geospatial platforms in real-world applications and proposes strategies for self-monitoring systems, output visualization, and swift failure recovery.

It is important to note that the SatShipAI platform has been developed within the framework of an ongoing project and is not released as open-source software. For this reason, certain implementation details cannot be disclosed in full. Nonetheless, this paper provides a transparent and comprehensive evaluation of the platform’s performance, focusing on its operational outcomes, detection accuracy, and practical utility. The emphasis is placed on evaluation procedures and measurable results, ensuring that the findings remain relevant and useful to the broader research community despite these limitations.

Beyond the scope of the present evaluation, the SatShipAI platform is expected to contribute to the MUlti-Sensor Inferred Trajectories (MUSIT) project [1]. MUSIT aims to address challenges in trajectory reconstruction and enrichment by integrating heterogeneous sensor data, including AIS, coastal cameras, and satellite imagery. Participation in MUSIT provides an opportunity for SatShipAI not only to contribute expertise in satellite-based vessel detection but also to experiment with advanced fusion methodologies. These outcomes are anticipated to inspire and support future enhancements in the field of maritime monitoring, complementing the findings reported in this work.

This work begins by establishing the role of AI in the evolving landscape of maritime surveillance, positioning the current study relative to broader technological and operational developments. It then introduces the developed platform, describing the underlying detection pipelines, output modalities, and technical challenges encountered during deployment. Emphasis is placed on analyzing the system’s limitations, including sources of delay and failure, to support reproducibility and robustness in future implementations. A comprehensive assessment follows, examining model performance over time, generalization to external datasets, and the platform’s responsiveness and adaptability under operational conditions. The discussion expands to cover real-world applications, system transparency, and public engagement. The manuscript concludes by reflecting on key lessons learned, emphasizing the importance of system resilience and the evolving balance between human oversight and automated decision-making in maritime AI systems.

While prior research on AI in maritime surveillance has largely focused on short-term benchmarks or retrospective analysis of isolated datasets, there is a lack of longitudinal studies assessing how AI-based detection and classification systems perform under sustained real-world deployment. This gap limits our understanding of system resilience, adaptability, and operational constraints over time. SatShipAI provides an ideal case study to address this gap: it is among the few operational platforms that have continuously processed satellite SAR data and integrated AIS information over a six-year period, enabling a rare, evidence-based evaluation of long-term AI performance in the maritime domain.

To make our contributions more explicit, Table 1 summarizes the key elements of this study. These include the longitudinal evaluation of SatShipAI’s six-year operation, evidence of cross-sensor generalization, assessment of operational resilience and bottlenecks, demonstration of practical maritime relevance through “dark” vessel detection, and guidance for future system development. Together, these contributions position this work as a rare longitudinal study of an AI–geospatial monitoring platform, directly addressing the gap in sustained evaluations of real-world maritime AI deployments.

Table 1.

Key contributions of the present study.

The remainder of this paper is structured as follows. Section 2 reviews related work in artificial intelligence for maritime surveillance, providing the context within which this study is situated. Section 3 introduces the SatShipAI platform, detailing its design and operational pipelines that form the basis for the subsequent analysis. Building on this, Section 4 presents a comprehensive evaluation of the platform, including long-term model performance, qualitative observations, cross-sensor validation, and an examination of operational failures. Section 5 then discusses the practical applications of these findings, highlights lessons learned, and outlines perspectives for future improvements. Finally, Section 6 concludes by reflecting on operational resilience and the balance between human oversight and automation.

2. AI in Maritime Surveillance

Maritime surveillance has greatly benefited from advances in AI over the past decade, particularly through the application of deep learning to remote sensing imagery [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29]. A structured summary of these studies is provided in Table 2, which highlights their sensor modalities, methodological contributions, and main findings.

Table 2.

Representative works in AI-based maritime surveillance.

Two primary sensor modalities—SAR and electro-optical (visual) imaging—provide complementary capabilities for the monitoring of vessel traffic and maritime activities. SAR can image the ocean surface during the day and night and through clouds, making it ideal for wide-area all-weather surveillance, while optical satellite imagery offers high-resolution visual detail in clear conditions. Traditionally, ship detection in imagery relied on classical techniques (e.g., CFAR thresholding in radar or feature engineering in optical data) and manual analysis, which often struggled with high false-alarm rates and environmental noise.

Recent advances in AI—particularly deep learning—have transformed maritime surveillance by enhancing vessel detection, classification, and tracking using remote sensing data [2,17,18]. Two key sensor modalities dominate this field: SAR, which offers all-weather, day-and-night monitoring, and optical imagery, which provides high spatial detail under clear skies [19].

Modern maritime surveillance systems greatly benefit from multi-sensor fusion and advanced signal analysis using satellite data. Reggiannini & Bedini developed a gradient-enhanced pipeline that integrates SAR and optical imagery to detect ships and estimate speed and heading from wake patterns [30]. Petrovic et al. showcased an effective IoT-over-satellite approach for equatorial maritime areas, using HF radar and IoT links to achieve consistent vessel tracking with minimal latency [31]. Lazarov’s analytical SAR model enhances moving-target imaging by compensating for phase errors and motion distortion, improving the visual clarity of sea-bound vessels [32].

Deep learning methods designed for maritime applications have also progressed rapidly. The AFSC network, introduced by He et al., employs anti-aliasing and shared convolutions to enhance the detection of small ships within noisy SAR imagery, achieving an mAP of approximately 69% on established SAR benchmarks [33]. Chen et al.’s approach integrates CNN detection with NSA filtering and introduces a novel Bounding-Box Similarity Index (BBSI), improving marine radar multiple-object tracking by 41% and reaching a high-level object tracking accuracy of 79% [34]. Furthermore, Rocha and de Figueiredo analyze vision-based ship-tracking datasets and metrics and provide recommendations for the implementation of YOLO-style optical methods in maritime environments [35].

Traditional SAR processing pipelines (CFAR thresholding, heuristic filters, and region-growing methods) have been largely replaced by CNN-based models that significantly outperform legacy techniques in both precision and generalizability [16,17]. Since the release of SSDD and similar public datasets, research has shifted toward real-time, robust detection using YOLO, Faster R-CNN, RetinaNet, and attention-based variants. These models often integrate scale-aware mechanisms, oriented bounding boxes, and speckle-noise tolerance to improve performance in maritime conditions.

Beyond detection, deep learning has also enabled accurate ship classification from radar backscatter patterns, despite the inherent imaging noise of SAR products. Hybrid CNN-LSTM frameworks and transfer learning have helped overcome data scarcity, while domain-specific augmentations further boost generalization [2,26].

For optical imagery, deep learning has improved detection under complex visual scenarios such as occlusions, cluttered harbors, and varying ship sizes. Algorithms such as YOLOv5, Swin Transformers, and RetinaNet have been adapted for satellite and aerial images [10,18]. Large datasets such as SeaShips and xView3 support supervised learning for ship detection, including cases of dark vessel activity [20,21]. Attention mechanisms and transformer backbones have shown particular success in improving robustness to background variation and spatial resolution changes.

Multi-sensor fusion, especially the combination of SAR, AIS, and optical data, offers a solid path to persistent maritime domain awareness. Systems like SatShipAI demonstrate how real-world platforms can automate vessel detection and correlate results with AIS to reduce false positives [27,36]. Government and commercial actors, including EMSA, ICEYE, and Global Fishing Watch, have implemented AI-enabled fusion pipelines at scale [22,24,25]. These systems combine public data (e.g., Copernicus) and proprietary feeds to support tasks like IUU (illegal, unreported, and unregulated) fishing detection and maritime security monitoring.

Despite progress, several challenges persist, such as limited amounts of labeled data in some regions, inconsistent ground truth from AIS, and variations in environmental conditions. Ongoing research is exploring self-supervised learning, domain adaptation, and active learning to improve model robustness across maritime zones [11,13]. The integration of additional sensors, such as UAV imagery, infrared cameras, and port surveillance networks, is a promising next step. There is also growing interest in explainable AI and uncertainty quantification, which are critical for trust and accountability in operational surveillance systems.

3. The SatShipAI Platform

SatShipAI (Satellite, Ships, and Artificial Intelligence) is a maritime monitoring initiative that combines AI techniques, which were advanced at the time of its release, with freely available Sentinel-1 SAR data. The system automatically detects ships, classifies ship types, and estimates ship lengths by processing high-resolution satellite imagery. Since its launch, it has provided near-real-time insights into maritime activity, with a particular focus on the waters surrounding Denmark. A publicly accessible demonstration of its output is available on the project’s website: https://satshipai.eu (accessed on 4 September 2025).

Initially developed in 2018 as an internal research project, SatShipAI has been recognized for its innovative approach to maritime surveillance. It was awarded first prize at the Copernicus hackathon in Athens (2018) and was selected as one of the top ten winners of NATO’s Defence Innovation Challenge (2019), highlighting its value in both civilian and defense applications.

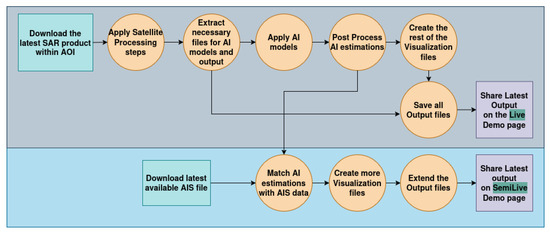

The platform features two modes: NearRealTime (or Live) and AfterNextDay (or SemiLive). The NearRealTime mode uses the most recently released Sentinel-1 SAR products, which are typically accessible approximately seven hours after image acquisition. The AfterNextDay mode integrates AI-based analysis of SAR imagery with AIS data. This mode is subject to a three-day delay, as the corresponding historical AIS data become available only after that period. The steps of both modes are shown in Figure 1.

Figure 1.

Flowchart of “NearRealTime” and “AfterNextDay” demo pages.

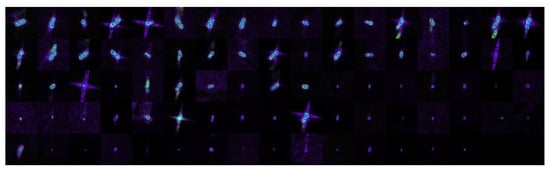

Figure 2 presents all AI-detected ships within a single Sentinel-1 SAR satellite image. The vessels appear to glow against the dark sea background, reminiscent of stars scattered across the night sky, a visual effect that highlights the contrast between ship targets and the surrounding sea. This visualization effectively showcases the density and distribution of maritime traffic within the imaged area. Importantly, the detection capability demonstrated here is largely resilient to external environmental conditions; since SAR imaging is not dependent on sunlight or atmospheric clarity, the system remains effective during nighttime and under heavy cloud cover. The only significant constraint stems from the spatial resolution of the satellite sensor in relation to the physical size of the vessels, as very small ships may not be reliably detected if they fall below the minimum detectable size threshold.

Figure 2.

All AI-detected vessels in an example Sentinel-1 image.

3.1. Detection Workflow

This section outlines the end-to-end processing workflow used to extract and classify ship detections from Sentinel-1 SAR imagery within the system. The pipeline is designed to operate efficiently on large datasets, using a combination of satellite data preprocessing, AI-based detection, and classification techniques, with automated resource and output management.

3.1.1. System Initialization

The workflow begins with the initialization of the working environment, where necessary geospatial libraries and dependencies are loaded. The system is configured to support GPU-accelerated operations and to interface with external tools for satellite data processing. Orbit correction data relevant to each SAR product is automatically retrieved to ensure precise geolocation.

3.1.2. Data Processing Pipeline

Each Sentinel-1 product within the dataset is processed through a structured sequence of operations:

- SAR Data Preprocessing: Satellite products undergo standard preprocessing steps, including radiometric calibration, speckle noise filtering, and terrain correction. This ensures the imagery is geophysically accurate and suitable for downstream analysis.

- Ship Detection, Classification, and Length Estimation: After preprocessing, each image is analyzed using a deep learning model trained to detect all vessels. Detected vessels are extracted and resized to a fixed pixel size for the classification and length estimation models. The classification model distinguishes between five ship types: cargo, fishing, passenger, tanker, and other. The results are saved in structured formats (including imagery previews and metadata) for visualization and analysis purposes.

- Post Processing and Spatial Filtering: To improve detection relevance and reduce false positives, the pipeline includes a spatial filtering stage that removes any vessels detected on land, as well as vessels detected within wind farm areas. This step ensures that only open-water detections are retained for further use.

- Output Generation and Archiving: The final detection results are prepared for visualization in downloadable and Web-based formats. Outputs are automatically organized and archived, while redundant data is cleaned to optimize storage. The system also provides standardized output packages for demonstration and sharing purposes.
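The spatial filtering stage described in the list above can be illustrated with a minimal sketch. The platform's actual implementation is not disclosed; the code below assumes that land and wind farm areas are available as simple polygons of (lon, lat) vertices and applies a standard ray-casting point-in-polygon test. The function names, the polygon coordinates, and the planar treatment of longitude and latitude are all illustrative assumptions.

```python
from typing import List, Tuple

Point = Tuple[float, float]   # (lon, lat) in degrees, treated as planar here
Polygon = List[Point]         # vertices in order; the ring is closed implicitly


def point_in_polygon(pt: Point, poly: Polygon) -> bool:
    """Ray-casting test: count edge crossings of a ray heading east from pt."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Does this edge straddle the horizontal line through pt?
        if (y1 > y) != (y2 > y):
            # x coordinate at which the edge crosses that line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def filter_detections(detections: List[Point], exclusion_zones: List[Polygon]) -> List[Point]:
    """Keep only detections that fall outside every exclusion polygon."""
    return [d for d in detections
            if not any(point_in_polygon(d, zone) for zone in exclusion_zones)]


# Hypothetical exclusion zones: a square "island" and a square "wind farm".
land = [(10.0, 55.0), (10.2, 55.0), (10.2, 55.2), (10.0, 55.2)]
wind_farm = [(11.0, 55.5), (11.1, 55.5), (11.1, 55.6), (11.0, 55.6)]

detections = [(10.1, 55.1), (11.05, 55.55), (12.0, 56.0)]
kept = filter_detections(detections, [land, wind_farm])
print(kept)
```

In an operational setting the exclusion polygons would more plausibly come from a coastline dataset and a wind farm registry, and a geospatial library with spatial indexing would replace the brute-force loop.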

Table 3 provides additional specifics regarding the preprocessing, detection, and classification pipelines.

Table 3.

Core stages of the SatShipAI ship detection/classification pipeline.

3.1.3. Workflow Management and Optimization

Throughout the process, key actions are logged to avoid redundant operations in future runs. Memory usage is optimized through systematic resource cleanup, ensuring stable performance, even when processing large volumes of satellite data. The pipeline is designed to be modular and scalable, supporting both individual product analysis and batch processing in operational environments.
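One simple way to log completed work so that later runs skip it is a persistent record of processed product identifiers. The sketch below is only an illustration of that idea under stated assumptions: the JSON log file, the function names, and the product identifiers are hypothetical and do not describe the platform's actual logging mechanism.

```python
import json
import os
import tempfile


def load_processed(log_path):
    """Return the set of product IDs already processed (empty if no log yet)."""
    if not os.path.exists(log_path):
        return set()
    with open(log_path, "r", encoding="utf-8") as fh:
        return set(json.load(fh))


def mark_processed(log_path, product_id):
    """Add a product ID to the log and rewrite the file."""
    done = load_processed(log_path)
    done.add(product_id)
    with open(log_path, "w", encoding="utf-8") as fh:
        json.dump(sorted(done), fh)


def run_batch(product_ids, log_path, process):
    """Process only products not yet logged; return the IDs handled in this run."""
    handled = []
    for pid in product_ids:
        if pid in load_processed(log_path):
            continue  # already done in a previous run
        process(pid)
        mark_processed(log_path, pid)
        handled.append(pid)
    return handled


# Two consecutive runs with overlapping (hypothetical) product lists.
log_path = os.path.join(tempfile.mkdtemp(), "processed.json")
first = run_batch(["S1_PRODUCT_A", "S1_PRODUCT_B"], log_path, process=lambda pid: None)
second = run_batch(["S1_PRODUCT_B", "S1_PRODUCT_C"], log_path, process=lambda pid: None)
print(first, second)
```

Marking a product only after `process` returns means that a run interrupted midway will retry the unfinished product on the next invocation.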

3.2. System Modes and Outputs

For each satellite product processed in the “NearRealTime” mode, a dedicated output directory is generated, containing a comprehensive set of files designed for analysis, visualization, and integration. These include the following:

- A CSV file summarizing the AI model’s estimations, including the geographic positions of all detected vessels, ship-type classifications with associated confidence scores, and estimated vessel lengths;

- A set of KML files enabling geospatial visualization of the results, compatible with both our demo website and Google Earth;

- Multiple background image layers prepared to support the projection and visualization of detection results;

- Image chips (cropped patches) centered around each detected vessel, suitable for further inspection or analysis;

- A preview image that provides an overview of the entire processed satellite product.
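To make the KML output listed above more concrete, the following sketch converts a few detection records into a KML document with one placemark per vessel. The field names (`lon`, `lat`, `ship_type`, `confidence`, `length_m`) and the sample values are assumptions chosen for illustration; the actual structure of the platform's CSV and KML files is not specified here.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def detections_to_kml(detections):
    """Build a KML document string with one Placemark per detection."""
    ET.register_namespace("", KML_NS)  # emit KML as the default namespace
    kml = ET.Element(f"{{{KML_NS}}}kml")
    doc = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    for det in detections:
        pm = ET.SubElement(doc, f"{{{KML_NS}}}Placemark")
        ET.SubElement(pm, f"{{{KML_NS}}}name").text = det["ship_type"]
        ET.SubElement(pm, f"{{{KML_NS}}}description").text = (
            f"confidence={det['confidence']:.2f}, length_m={det['length_m']:.0f}"
        )
        point = ET.SubElement(pm, f"{{{KML_NS}}}Point")
        # KML coordinates are ordered longitude,latitude[,altitude]
        ET.SubElement(point, f"{{{KML_NS}}}coordinates").text = (
            f"{det['lon']},{det['lat']},0"
        )
    return ET.tostring(kml, encoding="unicode")


# Hypothetical detections, as they might appear in the per-product CSV file.
example = [
    {"lon": 10.5, "lat": 56.1, "ship_type": "cargo", "confidence": 0.91, "length_m": 182.0},
    {"lon": 11.2, "lat": 55.3, "ship_type": "fishing", "confidence": 0.64, "length_m": 24.0},
]
kml_text = detections_to_kml(example)
print(kml_text)
```

The resulting string can be saved with a `.kml` extension and opened in Google Earth; styling such as type-specific symbols would be added through KML `Style` elements.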

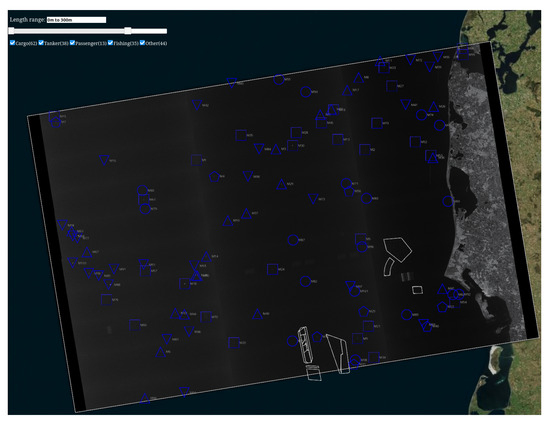

Figure 3 illustrates a demonstration of the “NearRealTime” mode, showcasing an SAR satellite image overlaid on a global map interface. The visualization is enriched with AI-driven ship detection and classification results. Each detected vessel is represented by a distinct geometric symbol corresponding to its estimated type, such as cargo, tanker, or fishing vessel. Users can interact with individual vessel markers to access additional information, including an estimated ship length. This interactive layer provides a clear and intuitive overview of maritime activity as analyzed in near-real time by the system.

Figure 3.

A “NearRealTime” output example.

In the “AfterNextDay” mode, the outputs from the “NearRealTime” processing are further extended. Each detected vessel is categorized into one of three classes based on its association with AIS transmissions: matched (correlated with AIS data), dark (no corresponding AIS signal), or unmatched (insufficient data for a definitive match). To facilitate this classification process, the large AIS dataset is initially sub-sampled to match the spatial coverage and acquisition time of each satellite product, thereby reducing its size. These tailored subsets are then combined with the AI-generated estimations using a straightforward custom proximity algorithm. The resulting fused data is used to produce new KML files, enabling detailed visualization and further analysis.
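The sub-sampling and proximity steps can be sketched as follows. This is not the platform's algorithm, which is not disclosed: the distance thresholds (`match_m`, `dark_m`), the time window, and in particular the rule that an intermediate distance yields "unmatched" are assumptions introduced only to show how a three-way labeling of this kind might work.

```python
import math
from datetime import datetime, timedelta


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    r = 6371000.0  # mean Earth radius
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def subset_ais(ais, bbox, t_acq, window):
    """Keep AIS messages inside the product footprint and acquisition time window."""
    lon_min, lat_min, lon_max, lat_max = bbox
    return [m for m in ais
            if lon_min <= m["lon"] <= lon_max
            and lat_min <= m["lat"] <= lat_max
            and abs(m["time"] - t_acq) <= window]


def label_detections(detections, ais, match_m=500.0, dark_m=5000.0):
    """Label each detection by its distance to the nearest AIS position (assumed rule)."""
    labels = []
    for det in detections:
        dists = [haversine_m(det["lat"], det["lon"], m["lat"], m["lon"]) for m in ais]
        nearest = min(dists) if dists else float("inf")
        if nearest <= match_m:
            labels.append("matched")
        elif nearest > dark_m:
            labels.append("dark")
        else:
            labels.append("unmatched")  # too ambiguous for a definitive call
    return labels


# Synthetic example: one AIS message is valid, one is too old, one is outside the footprint.
t_acq = datetime(2024, 6, 1, 5, 30)
ais_all = [
    {"lat": 55.0, "lon": 10.0, "time": t_acq + timedelta(minutes=2)},
    {"lat": 55.0, "lon": 10.0, "time": t_acq + timedelta(hours=2)},
    {"lat": 58.0, "lon": 10.0, "time": t_acq},
]
ais_sub = subset_ais(ais_all, bbox=(9.0, 54.0, 13.0, 57.0), t_acq=t_acq,
                     window=timedelta(minutes=10))
dets = [{"lat": 55.001, "lon": 10.0}, {"lat": 55.02, "lon": 10.0}, {"lat": 56.0, "lon": 12.0}]
labels = label_detections(dets, ais_sub)
print(labels)
```

This sketch does not enforce one-to-one assignment between detections and AIS tracks, nor does it interpolate AIS positions to the exact acquisition time; both refinements would matter in dense traffic.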

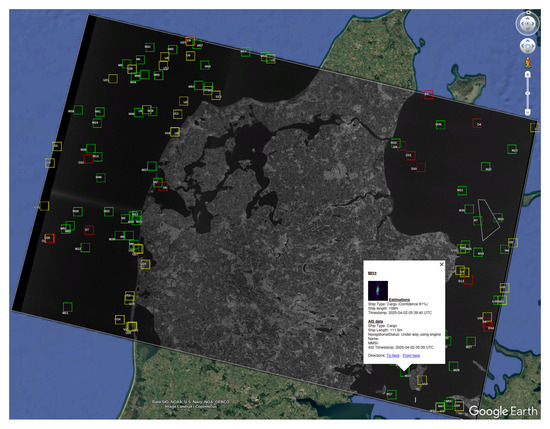

Figure 4 illustrates a SAR satellite image seamlessly integrated into the Google Earth interface. Each detected vessel is marked by a color-coded rectangle corresponding to its classification status—matched (green), dark (red), or unmatched (yellow). When a user clicks on a vessel marker, a detailed information panel appears, combining AI-derived detection insights from the “NearRealTime” mode with AIS data. This integrated view enables users to explore and analyze each vessel’s identification, classification, and estimated characteristics in a single, intuitive interface.

Figure 4.

“AfterNextDay” example on Google Earth.

4. Comprehensive System Assessment

This section presents a thorough evaluation of the platform’s long-term behavior and operational effectiveness. Drawing on both quantitative metrics and qualitative observations, we assess the stability and consistency of model performance over time, identify external factors contributing to failures and delays, and examine the system’s responsiveness and capacity for self-monitoring. Independent validation using X-band SAR imagery is also assessed, alongside a review of practical use cases that illustrate the platform’s real-world applicability and limitations. Together, these assessments provide a well-rounded understanding of the system’s maturity, robustness, and areas for future improvement.

4.1. Model Performance over Time

Although AI models and training techniques have advanced significantly in recent years, no updates have been made to the platform concerning the employed models or their implementation methods. The focus was not on continuously improving modeling performance but, rather, on serving as an end-to-end capabilities demonstrator. This approach allows us to extract valuable insights into how an unmodified model performs over time and to investigate the potential causes of performance degradation, if observed.

Table 4 presents yearly statistics resulting from the cross-matching of AI model outputs with AIS data. The limited number of satellite products processed in 2021 (one product) and 2022 is attributed to the temporary suspension of the platform’s operations; thus, statistics from 2021 should be considered unreliable. Similarly, 2019, the initial year of the project’s implementation, and the current year, which is still ongoing, also show reduced data availability. The decrease in processed data from 2023 to 2024 is primarily due to the failure of the Sentinel-1B satellite, while the expected increase from 2024 to 2025 will be due to the new Sentinel-1C satellite.

Table 4.

Vessel detections per satellite product statistics (AIS cross-matched).

Although the classification precision reported in Table 4 ranges between 59 and 62%, this level of performance is consistent with the results observed in comparable SAR-based ship classification studies, particularly under operational rather than laboratory conditions. Published benchmarks on curated datasets such as OpenSARShip [37] or FUSAR-Ship [38] often report accuracies in the 70–85% range when classes are balanced and image quality is controlled [38,39]. Even higher accuracies above 90% are typically achieved only in carefully selected experimental settings with limited ship categories or auxiliary cues such as AIS or wake signatures [40].

On the OpenSARShip benchmark, the reported performance varies significantly depending on the number of classes considered. For the three-class variant (typically bulk, container, and tanker), accuracies around 80% are common. For example, PFGFE-Net achieved 79.84% [41], DPIG-Net was slightly higher at 81.28% [42], and the lightweight SBNN model achieved 80.03% [43]. Earlier baselines also performed competitively: HOG-ShipCLSNet achieved 78.15% accuracy, while VGG and plain CNNs trailed, with accuracies between 67 and 74% [43]. When the benchmark is extended to the six-class setting, accuracies decline sharply to the 56–59% range, reflecting the increased difficulty and class imbalance. PFGFE-Net reported 56.83% [41], DPIG-Net 58.68% [42], SBNN 56.73% [44], and SE-LPN-DPFF 56.66% [44]. More recently, Wang et al. (2023) showed that their MSFA+AWC model modestly improved performance to 59.16% in the six-class setting while maintaining near-80% accuracy in the three-class setup [9].

In contrast, our system processes continuous multi-year Sentinel-1 acquisitions across heterogeneous traffic, environmental conditions, and ship types, where noise, imbalance, and imperfect labels are unavoidable. Importantly, when training earlier versions of the model over six years ago, we obtained similar accuracy levels, indicating that the problem has been inherently difficult from the very beginning and not simply a limitation of the current implementation. Furthermore, the presence of an ‘Other’ category—by design, a very generic class that aggregates different types of vessels—naturally reduces classification precision, but it is necessary to preserve coverage across the full range of ships encountered in real-world operations. Under these constraints, maintaining a stable accuracy around 60% while simultaneously delivering low MAE in ship length estimation is operationally valuable, as it enables robust, large-scale monitoring rather than idealized “in vitro” performance.

To further investigate the impact of satellite resolution on detection performance, we analyzed vessel detection accuracy across different size categories. Vessels were grouped into four classes: small (<10 m), medium–small (10–40 m), medium–large (40–100 m), and large (>100 m). The results summarized in Table 5 indicate that detection accuracy increases with vessel size, with the smallest vessels (<10 m) exhibiting the lowest detection rates due to the spatial resolution limit (approximately 10 m) of the satellite imagery. Conversely, larger vessels (>100 m) are detected with high reliability, highlighting that performance is strongly influenced by vessel size and image resolution.

Table 5.

Detection performance by vessel length class.
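A per-class detection rate of the kind reported in Table 5 can be computed along the following lines. The sketch assumes that each AIS-reported vessel comes with its length and a flag stating whether the model detected it; the records are synthetic and the handling of the class boundaries (a vessel of exactly 100 m counted as large) is an assumption, since the text leaves it open.

```python
from collections import defaultdict

# Length classes named in the text. Treating each lower bound as inclusive
# (so a 100 m vessel counts as "large") is an assumption of this sketch.
LENGTH_CLASSES = [
    ("small", 0.0, 10.0),
    ("medium-small", 10.0, 40.0),
    ("medium-large", 40.0, 100.0),
    ("large", 100.0, float("inf")),
]


def length_class(length_m):
    """Return the class name for an AIS-reported vessel length in metres."""
    for name, lower, upper in LENGTH_CLASSES:
        if lower <= length_m < upper:
            return name
    raise ValueError(f"invalid length: {length_m}")


def detection_rate_by_class(records):
    """records: iterable of (ais_length_m, detected) pairs -> {class: detection rate}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for length_m, detected in records:
        cls = length_class(length_m)
        totals[cls] += 1
        hits[cls] += int(detected)
    return {cls: hits[cls] / totals[cls] for cls in totals}


# Synthetic records, not platform data: (AIS length in metres, detected by the model?)
records = [(8, False), (9, False), (25, True), (30, False),
           (80, True), (150, True), (200, True)]
rates = detection_rate_by_class(records)
print(rates)
```

The same grouping works for vessel type by replacing `length_class` with a lookup of the AIS-reported ship type.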

Beyond vessel length, it is also important to consider how vessel type influences detection performance in SAR imagery. As shown in Table 6, cargo and tanker vessels are detected with high accuracy, as expected, given their relatively large size. Passenger vessels also achieve reasonably good detection rates, while fishing vessels are detected with moderate accuracy (slightly above 50%). The most challenging category to detect is the “other” class, with only about one in four vessels correctly identified. Interestingly, relying solely on Table 5 could be misleading, as one might assume that fishing vessels, which are often smaller in size, represent the most difficult class to detect. In reality, the “other” category proves more problematic, largely due to its heterogeneous nature: it aggregates diverse vessel types, including many small recreational craft and other less common categories, making it highly ambiguous and harder to capture consistently.

Table 6.

Detection performance by vessel type.

In terms of vessel detection, the AI models have demonstrated consistent performance over time. The number of vessels detected with matching AIS data, as well as the count of “dark” vessels, i.e., those without AIS signals, remains relatively stable across the years. A slight increase is observed in the number of vessels with AIS data that were not detected by the models, rising from approximately 40 in 2020 to around 47 in the past two to three years. Since undetected vessels are predominantly smaller in size, this variation might have been influenced by regulatory or behavioral changes related to the COVID-19 pandemic. Additionally, the ship type classification and ship length estimation models have shown strong consistency, with only minimal year-to-year fluctuations. Collectively, these results underscore the robustness and long-term reliability of the AI models, suggesting their suitability for extended use in diverse operational contexts.

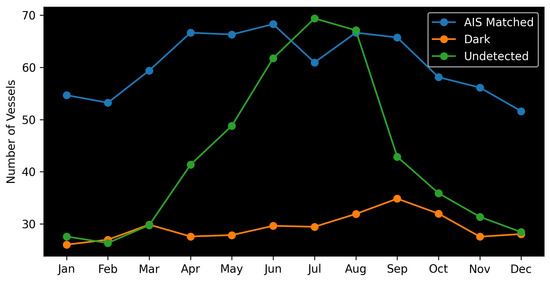

Figure 5 presents the monthly average number of vessels detected in satellite SAR imagery after cross-referencing with AIS data from the corresponding areas and time periods. The blue line represents vessels successfully detected by our AI models that also have matching AIS records. Monthly variations in vessel counts are primarily attributed to fluctuations in maritime activity. The green line indicates vessels with AIS signals that were not detected by our models. These undetected vessels show significant seasonal variation, with the majority occurring during the summer months. This is likely due to an increase in small fishing and recreational vessels during this period, which are more challenging to detect in Sentinel-1 imagery. The orange line denotes vessels detected by our models for which no corresponding AIS data were found. These may include so-called “dark” vessels, as well as potential false positives. A qualitative assessment of the “AfterNextDay” monitoring interface suggests that many of these “dark” detections are located far offshore, beyond the range of terrestrial AIS receivers. Given the known limitations of AIS coverage due to the Earth’s curvature, such cases highlight the value of satellite SAR, which extends vessel detection capabilities beyond traditional AIS reach.

Figure 5.

AI vessel detections and AIS cross-matches per month.

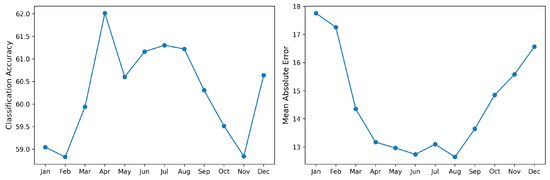

Figure 6 illustrates the monthly performance of the ship type classification and ship length estimation models. The two metrics exhibit similar seasonal trends. Although they represent opposite scales, where higher classification accuracy is preferable and a lower mean absolute error (MAE) is desired, they both reflect improved performance during the same periods.

Figure 6.

Ship type classification accuracy (left) and ship length MAE per month (right).

Specifically, higher classification accuracy and lower MAE values are observed during the summer months. This seasonal improvement is primarily attributed to the increased presence of smaller vessels, predominantly fishing boats, during this period. While these smaller vessels are generally more difficult to detect, once detected, they are more likely to be correctly classified due to their distinctive characteristics associated with the fishing category. Furthermore, their limited size range constrains the extent of potential error in length estimation, thereby contributing to a reduced MAE.
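The two monthly metrics in Figure 6 can be computed as in the following sketch. The record layout (keys `month`, `true_type`, `pred_type`, `true_len_m`, `pred_len_m`) is a hypothetical one chosen for illustration, with the AIS-reported type and length assumed to serve as the reference values.

```python
from collections import defaultdict


def monthly_metrics(records):
    """Per-month classification accuracy and length MAE (in meters)."""
    groups = defaultdict(list)
    for r in records:
        groups[r["month"]].append(r)

    out = {}
    for month, rows in sorted(groups.items()):
        n = len(rows)
        # Fraction of matched vessels whose predicted type equals the reference type
        accuracy = sum(r["true_type"] == r["pred_type"] for r in rows) / n
        # Mean absolute difference between predicted and reference length
        mae = sum(abs(r["true_len_m"] - r["pred_len_m"]) for r in rows) / n
        out[month] = {"accuracy": accuracy, "mae_m": mae, "n": n}
    return out
```

Because the MAE is an absolute quantity in meters, a month dominated by short vessels can show a lower MAE even if the relative error is unchanged, which is consistent with the seasonal pattern described above.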

SatShipAI stands as a pivotal contribution to the field of AI applications for Earth observation, demonstrating how experimental AI models can be successfully matured into robust, field-tested systems. Its sustained operational use in real-world maritime scenarios offers not only practical value for surveillance and monitoring tasks but also serves as a critical reference point in advancing research and guiding the deployment of AI in similarly complex and dynamic environments.

4.2. Qualitative Observations from Long-Term Manual Review

Over the course of several years of manual result inspection, a number of observations have emerged that are not easily captured through systematic data analysis alone. Overall, the vessel detection performance of the system appears to be highly effective—comparable, in many cases, to that of a human analyst. Larger vessels are more readily detected due to their stronger radar signatures, while smaller vessels pose a greater challenge, particularly given the approximately 10 m spatial resolution of Sentinel-1 SAR imagery.

Detection becomes significantly more difficult in nearshore environments, especially within harbors, where the dense clustering of vessels and the presence of port infrastructure can obscure or confound ship signatures. Additionally, offshore wind turbines are sometimes misclassified as vessels, reflecting a known source of false positives.

One of the most compelling strengths of the system lies in its ability to detect vessels operating far from shore, where AIS data is often absent or unavailable. In such regions, the model frequently provides the only means of surveillance. The ship classification component of the system demonstrates satisfactory performance, particularly given the inherent challenges of distinguishing vessel types in SAR imagery, a task that even experienced human analysts often find difficult and unreliable (see Figure 2).
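To give a sense of scale for the AIS coverage limit, the sketch below applies the standard line-of-sight radio horizon approximation for VHF signals (AIS transmits in the VHF band), using a 4/3 effective Earth radius. The antenna heights are illustrative assumptions; actual reception range varies with propagation conditions and receiver siting.

```python
import math


def vhf_radio_horizon_km(tx_height_m, rx_height_m):
    """Approximate VHF line-of-sight range in km.

    Uses d = 4.12 * (sqrt(h_tx) + sqrt(h_rx)) with heights in meters,
    which follows from a 4/3 effective Earth radius.
    """
    return 4.12 * (math.sqrt(tx_height_m) + math.sqrt(rx_height_m))


# Hypothetical example: ship antenna at 10 m, coastal receiver at 100 m
range_km = vhf_radio_horizon_km(10, 100)
```

Under these assumed heights the range is a few tens of kilometers, so vessels further offshore would typically be visible to terrestrial receivers only intermittently, if at all.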

Notably, the system’s performance may be slightly under-represented in automated evaluations, as SAR image edges provide limited useful information for detection, whereas AIS data may still include vessels in these regions. Finally, occasional mismatches between AI detections and AIS transmissions have been observed, where a single ship appears as both a “dark” detection and an “unmatched” AIS record instead of a single “matched” detection. These cases suggest the need for a more robust fusion algorithm to improve matching accuracy between the two data sources.

4.3. External Evaluation on X-Band SAR Imagery

To further assess the generalizability and robustness of the SatShipAI models, an external evaluation was conducted using SAR imagery acquired from a different satellite operating in the X-band frequency range. Unlike Sentinel-1, which captures SAR data in the C band, X-band systems have different backscatter characteristics and typically offer higher spatial resolution but can be more affected by atmospheric conditions [45]. These differences present a meaningful challenge when applying models trained exclusively on C-band imagery.

Despite these spectral and structural differences, the vessel detection model demonstrated strong performance during this assessment of its output. According to the external evaluator, the model identified maritime vessels with a level of accuracy that was consistent with expectations and aligned with operational needs. False positives and missed detections remained within acceptable limits, and no major adaptation or retraining was required to apply the models to the X-band dataset.

This successful cross-band evaluation highlights the inherent adaptability of the SatShipAI models and reinforces their potential for broader application across diverse SAR platforms. It also suggests that, with minimal adjustments, these models could support vessel detection tasks in systems beyond Sentinel-1, enabling wider use in operational and multi-sensor maritime surveillance frameworks.

4.4. Analysis of Failures and Delays

A substantial portion of the encountered operational failures stemmed from disruptions in the acquisition of satellite products and their associated orbit files. One major source of instability was the transition of the official Copernicus data access point from https://scihub.copernicus.eu/dhus (accessed on 1 January 2022) to https://dataspace.copernicus.eu (accessed on 4 September 2025). This change not only introduced a new access protocol but also required the integration of updated authentication credentials and data retrieval procedures. The most persistent and recurring challenge has been the availability of orbit files, which are essential for accurate image geolocation and calibration. These files are hosted separately from the main satellite image and are often stored in cloud-based directories that have either been relocated or become temporarily inaccessible. Such inconsistencies in availability have led to processing failures or delays, particularly when the orbit files could not be retrieved within the platform’s expected processing window.
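One common way to tolerate temporarily unavailable orbit files is to retry the download with exponential backoff and to defer the scene if the file is still missing. The sketch below is a generic pattern, not the platform's actual logic; `fetch` is a hypothetical zero-argument callable that downloads the file, and the attempt count and delays are placeholders.

```python
import time


def fetch_with_retry(fetch, max_attempts=5, base_delay_s=60.0, sleep=time.sleep):
    """Call fetch() until it succeeds or the attempts are exhausted.

    Returns the fetched result, or None so that the caller can defer the
    scene to a later run instead of failing the whole pipeline.
    """
    for attempt in range(max_attempts):
        try:
            return fetch()
        except OSError:  # covers network errors such as urllib.error.URLError
            if attempt == max_attempts - 1:
                return None
            # Wait 1x, 2x, 4x, ... the base delay before the next attempt
            sleep(base_delay_s * 2 ** attempt)
```

Injecting `sleep` as a parameter keeps the function testable without real waiting; in production the default `time.sleep` would be used.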

The unexpected failure of the Sentinel-1B satellite significantly reduced the availability of SAR data across the entire Copernicus program. For an extended period, only Sentinel-1A remained operational, effectively halving the overall data acquisition capacity. The resulting drop in the number of processed scenes affected not only this platform but also numerous Earth observation applications globally, particularly those dependent on consistent temporal coverage. Fortunately, the recent deployment of Sentinel-1C has partially restored SAR data coverage within the constellation, while the upcoming launch of Sentinel-1D, scheduled for next year, is expected to significantly expand the overall data acquisition capacity.

The procurement of AIS data constituted a less common but recurrent challenge. The source and method of access for this dataset, including its host location and protocols (e.g., FTP, HTTP, and Web interface), underwent a minimum of three significant changes within the six-year study period. This instability in the data source introduces a potential failure point, necessitating troubleshooting cycles at approximately two-year intervals.

The SatShipAI platform includes a post-processing step that excludes known offshore wind farm areas from analysis to reduce false detections. This strategy has been effective in minimizing misclassifications of static structures as vessels. However, in recent years, the Baltic Sea has experienced considerable growth in wind farm installations. Some of these newer wind farms were not yet incorporated into the exclusion zones. As a result, a small number of wind turbines located in these unlisted areas were occasionally misclassified by the AI models as ships, introducing a limited number of false positives. While the overall impact on detection accuracy remains low, this highlights the importance of regularly updating exclusion areas to reflect changes in the maritime infrastructure landscape.
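The exclusion step can be sketched as a point-in-polygon filter over the detection coordinates. The version below uses a plain ray-casting test in longitude/latitude, which is a reasonable approximation for small zones; the zone outlines and the detection record layout are hypothetical, and the platform's own masking method is not described in detail here.

```python
def point_in_polygon(lon, lat, polygon):
    """Ray-casting test; polygon is a list of (lon, lat) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def drop_excluded(detections, exclusion_zones):
    """Keep only detections that fall outside every exclusion zone."""
    return [
        d for d in detections
        if not any(point_in_polygon(d["lon"], d["lat"], zone)
                   for zone in exclusion_zones)
    ]
```

Since the zones are plain data, adding a newly built wind farm amounts to appending one polygon, which is why keeping this list up to date is mainly a maintenance task rather than a modeling one.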

4.5. System Responsiveness and Self-Awareness

The platform exhibited a failure-handling limitation in its user interface, whereby it did not adequately indicate when the system had ceased updating its displayed content. Consequently, the most recently displayed product remained unchanged, persistently showing outdated information. This lack of updates was readily apparent to experienced users familiar with the system’s expected behavior. The lack of automated notification delayed administrative awareness of the system’s degraded state, and as a result, operators were forced to perform manual, periodic checks to confirm whether the data feed had resumed or remained stalled.

In order to resolve such failures, direct human intervention was required to diagnose the issue and implement corrective actions, including restarting services or updating configurations. This dependency on manual oversight introduces inefficiencies and increases the risk of prolonged system downtime going unnoticed.

In terms of human oversight, the most frequent task involves manual verification of anomalous outputs, which typically requires only a few minutes per case but depends strongly on how soon the verification is needed. Processing failures are much rarer, occurring roughly once per year, but may require up to a few hours to resolve, depending on the underlying issue. While these oversight requirements are manageable in the current operational context, a wider deployment would necessitate additional human resources to sustain the same level of responsiveness. Otherwise, delays in identifying and addressing anomalies or failures would likely increase, limiting scalability.

To enhance system resilience and ensure timely operator awareness, it is essential to implement an automated notification mechanism that alerts users whenever a gap in product updates is detected. Such a mechanism would enable faster response times and reduce reliance on constant user monitoring. Additionally, the integration of a centralized dashboard displaying real-time system status, product availability metrics, and historical update logs would significantly improve transparency, facilitate troubleshooting, and reinforce the overall integrity of the platform.
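A gap detector of this kind can be very small. The following sketch compares the timestamp of the most recent product with the current time and returns an alert message when the gap exceeds a threshold; the 24-hour threshold and the message format are assumptions, and the delivery channel (e-mail, chat, dashboard) is left to the caller.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_product_time, now, max_gap=timedelta(hours=24)):
    """Return an alert message if no new product arrived within max_gap, else None."""
    gap = now - last_product_time
    if gap > max_gap:
        gap_h = gap.total_seconds() / 3600
        limit_h = max_gap.total_seconds() / 3600
        return f"No new product for {gap_h:.1f} h (threshold {limit_h:.0f} h)"
    return None


# Hypothetical usage from a scheduled job:
# message = check_freshness(latest_timestamp, datetime.now(timezone.utc))
# if message: notify_operators(message)   # notify_operators is a placeholder
```

Running such a check from a scheduler that is independent of the processing pipeline ensures that the alert still fires when the pipeline itself has stalled.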

5. Discussion and Perspectives

This section synthesizes the platform’s practical applications with a reflective analysis of its operational history and future development paths. Drawing on the long-term performance evaluation, we discuss SatShipAI’s real-world utility in maritime surveillance, its resilience under operational challenges, and the balance between automation and human oversight. We also outline forward-looking perspectives, including technical upgrades, expanded sensor integration, and improved data fusion, which can inform the design of next-generation AI–geospatial monitoring systems.

5.1. Assessment of Contribution and Impact

5.1.1. Significance

This paper is significant as one of the very few long-term studies of an AI-driven maritime surveillance system deployed in operational or near-operational conditions. By comparison, the great majority of previous studies are short-term tests, even when they apply SOTA detection methods. SatShipAI documents six years of steady performance, robustness, and adaptability under operational constraints. A particularly useful lesson concerns the integration of AIS information: while crucial to the validation and detection of ships, AIS reports are often incomplete, delayed, or inconsistent, especially for small vessels and offshore regions. Overlaying detections with AIS highlighted both the capability of the system to detect “dark” ships beyond AIS reach and the limitations of using AIS as ground truth in isolation. These findings underline the need for stronger fusion methodologies and multiple AIS sources and demonstrate how long-term deployment can reveal knowledge hidden in short-term studies.

5.1.2. Quality

The value of this paper lies in its systematic, multi-perspective evaluation approach, which goes beyond performance reporting. Firstly, the study uses quantitative metrics such as accuracy, mean absolute error (MAE), mean absolute percentage error (MAPE), and vessel detection rates, all measured consistently over multiple years of operation. These enable cross-year comparisons that provide evidence of stability under varying environmental and operating conditions. The reported results are similar to those achieved during the training phase of the models years ago (when a five-fold training/validation split was used). Secondly, the analysis is complemented by qualitative observations drawn from prolonged manual inspection of system outputs, which capture subtleties that purely automated evaluations may miss, e.g., misclassifications in harbor areas or wind farm interference. Thirdly, the system’s robustness is confirmed through external validation on X-band SAR imagery, where models trained purely on C-band imagery generalized without retraining.

Importantly, this paper integrates operational failure analysis and an assessment of resilience, so the evaluation is not restricted to controlled or ideal laboratory situations but reflects the complexities of in-the-field deployment. This includes documenting and dealing with recurring bottlenecks such as orbit file delays, instabilities in the AIS data source, and changes in maritime infrastructure. By documenting both successes and limitations, the study reports the performance of the system transparently.

5.1.3. Scientific Soundness

The scientific soundness of this work lies in its transparent methodology, reproducible evaluation design, and alignment with the latest practices in maritime AI at the time of deployment. Although certain implementation details cannot be disclosed for operational reasons, this paper provides a clear description of the end-to-end pipeline, including pre-processing, detection, classification, and fusion workflows, while also situating them among comparable benchmarks from the literature. At the time of its inception (2018–2020), the SatShipAI platform adopted and operationalized state-of-the-art methods—notably, a tight processing pipeline built around modular Python 3.8 scripts. This design enabled efficient orchestration of data ingestion, orbit file correction, SAR preprocessing, model inference, and output generation, ensuring stability and reproducibility over six years of continuous operation.

To increase robustness and reduce variance in predictions, the system relied on a two-model ensemble strategy, combining a RetinaNet-based ship detector (ResNet-50 backbone) with CNN classifiers (InceptionV3 and DenseNet201) for ship type identification and length estimation. This ensemble approach, coupled with test-time augmentation and spatial filtering, ensured consistent accuracy across heterogeneous scenes and environmental conditions. By cross-validating detections with AIS data in a structured fusion step, the platform also provided an additional layer of verification, reducing false positives and enhancing practical reliability.
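The combination of ensembling and test-time augmentation can be illustrated for the classification stage. In this sketch, each model is a hypothetical callable that maps an image chip (a 2D list of pixel values) to a list of class probabilities, and the predictions over all models and flipped views are simply averaged. How the platform actually combines its model outputs is not specified here, so uniform averaging is an assumption.

```python
def identity(chip):
    return chip


def flip_horizontal(chip):
    """Mirror each row of the chip (left-right flip)."""
    return [row[::-1] for row in chip]


def flip_vertical(chip):
    """Reverse the row order of the chip (up-down flip)."""
    return chip[::-1]


def ensemble_tta_predict(models, chip,
                         views=(identity, flip_horizontal, flip_vertical)):
    """Average class probabilities over every (model, augmented view) pair."""
    preds = [model(view(chip)) for model in models for view in views]
    n_classes = len(preds[0])
    return [sum(p[k] for p in preds) / len(preds) for k in range(n_classes)]
```

For classification, the flipped views need no inverse transformation because the class label does not depend on orientation; for detection, predicted boxes would have to be mapped back to the original geometry before merging.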

The consistency of the results over six years, including classification precision around 60% in operational conditions, and agreement with independent external evaluations on X-band SAR imagery confirm the reliability of the findings. While the methods were considered cutting-edge in 2020, their enduring stability and scalability in live deployment highlight the maturity of the implementation and the robustness of the system architecture. Collectively, these design choices underline the scientific soundness of the platform as both a technological demonstrator and a valuable case study in the long-term operationalization of AI for Earth observation.

5.1.4. Reader Interest

This work is positioned to serve the needs of both practitioners and researchers, reflecting its dual relevance as an operational case study and a scientific contribution.

- For practitioners including coast guard operators, port and harbor authorities, maritime security analysts, and industry stakeholders, the study provides actionable lessons on how satellite-driven AI can extend surveillance beyond AIS coverage, detect “dark” vessels, and maintain operational reliability despite challenges such as orbit file delays or wind farm interference. The demonstrated resilience strategies, ensemble approaches, and cross-sensor adaptability offer practical guidance for engineers and decision-makers deploying or upgrading maritime monitoring infrastructure.

- For researchers across AI, computer vision, Earth observation, and applied geospatial fields, the six-year longitudinal evaluation offers rare empirical evidence of how models behave over extended deployments. By reporting both stable performance and recurring limitations, the study contributes to discussions on trustworthy AI, model drift, self-monitoring, and human–automation interaction. Researchers in policy, environmental monitoring, and security studies may also find value in the evidence-based insights into how AI-driven platforms can contribute to maritime domain awareness and long-term sustainability.

By explicitly addressing both groups, this paper highlights how rigorous evaluation of real-world deployments can simultaneously inform practical applications and advance broader research agendas.

5.2. Practical Applications and Use Cases

Beyond technical benchmarks, assessing the long-term utility of the platform requires evaluation of how its outputs serve real-world maritime operations. Despite the inherent delay in SAR image availability—typically around seven hours post acquisition—the system’s automated image ingestion and AI-driven ship detection provide timely intelligence in environments where real-time data is limited or unreliable. This is particularly relevant in areas where AIS signals are disabled, spoofed, or absent, underscoring the platform’s value in bridging critical surveillance gaps.

In operational maritime security contexts, such as border enforcement or illegal vessel activity monitoring, the platform has demonstrated the ability to support decision-making, even with delayed inputs. Authorities can act on AI-verified detections to redirect patrols, initiate targeted monitoring, or plan interdiction efforts based on confirmed vessel locations. The system’s resilience under adverse conditions, such as cloud cover, darkness, or poor visibility, adds to its effectiveness in routine and crisis response scenarios alike.

Similarly, in port operations and vessel traffic management, AI-generated detections contribute to the optimization of berthing schedules and resource deployment. The provided actionable intelligence enables more efficient planning and reduces delays, especially when traditional AIS tracking is incomplete. In emergency situations, ranging from severe weather events to maritime accidents, SAR-based detections can aid in the rapid coordination of search and rescue efforts by providing situational awareness over large maritime areas.

For defense and strategic intelligence purposes, the system supports ongoing monitoring of sensitive or high-risk regions. Temporal analysis of vessel movement patterns can support anomaly detection and provide insights into strategic trends. When integrated with auxiliary data sources, such as historical AIS records, oceanographic data, or meteorological forecasts, these outputs enable predictive modeling that enhances operational foresight and informs pre-emptive decision-making.

Ultimately, the practical applications discussed here serve not only to demonstrate the platform’s utility but also to validate its functional robustness under diverse operational conditions. The consistent integration of AI with SAR data and its translation into usable maritime intelligence reinforce its role as a meaningful contributor to modern surveillance infrastructure. These use cases provide essential context for the interpretation of the performance metrics and systemic observations presented throughout this assessment.

5.3. Retrospective and Online Presence

A Web-based retrospective search reveals that SatShipAI has been referenced across a variety of third-party sources and platforms. It appears in policy-related discussions such as the article “Green Economy Policies: Where We Are” [46] and is listed in open-source directories like the GitHub repository for awesome geospatial companies (accessed on 21 May 2025). Furthermore, it was mentioned in a French National Centre for Scientific Research post (visualization of shipping lanes from Sentinel-1 (accessed on 21 May 2025)). Additionally, it is featured in blog posts and technical articles on prominent sites such as Alcimed’s insights on data analytics in maritime surveillance (accessed on 21 May 2025) and ACME AI’s post on geospatial AI for maritime surveillance (accessed on 21 May 2025). Finally, it has also been shared on social networks, such as in a maritime surveillance LinkedIn post (accessed on 21 May 2025).

These references underscore the project’s growing visibility and influence across both technical and policy-oriented domains. Its presence in scientific, governmental, and industry-related discussions highlights the platform’s relevance as a credible and practical tool for real-world maritime surveillance. The breadth of citations, from open-source repositories to policy analysis and specialized AI blogs, demonstrates its multidisciplinary significance and utility. This recognition affirms SatShipAI not only as a technological demonstration but also as a valuable contributor to the broader geospatial and maritime data ecosystems, informing best practices, raising awareness on AI integration in surveillance, and guiding future developments in autonomous monitoring platforms.

5.4. Perspectives

While the platform has successfully fulfilled its role as an end-to-end demonstrator, the current research provides a more comprehensive understanding of its performance and of the challenges encountered over time. Although some challenges, such as those related to the satellite imagery feed and the availability of orbit files, are beyond our control, there remains significant room for improvement. A future version of the platform, as well as any similar system, should address several key aspects, including the following:

- Integration of state-of-the-art models. Leveraging the latest advancements in deep learning and computer vision can significantly improve detection accuracy and robustness. New architectures such as vision transformers or hybrid attention-based models should be explored and benchmarked.

- Implementation of system health monitoring and alerts. Adding automated system health diagnostics and alerting mechanisms would enable early detection of failures in processing pipelines, data ingestion, or model degradation. This includes logging anomalies and resource bottlenecks and maintaining operational continuity through redundancy strategies.

- Model retraining with additional and diverse data. Expanding the training dataset with new SAR acquisitions across varying regions, seasons, sea states, and ship types will improve the model’s generalizability. Incorporating edge cases and mislabeled examples identified during operation can help refine model accuracy and resilience.

- Fusion with coastal camera networks. Integrating real-time data from coastal surveillance cameras can complement satellite detections, particularly in littoral zones where SAR resolution may be insufficient or cluttered by land–sea interference. This fusion would enhance situational awareness in nearshore environments.

- Utilization of Sentinel-2 optical imagery. Including optical data from Sentinel-2 (or other) satellite missions can enrich the detection process, especially under clear-sky conditions. Adding AI estimations on optical satellite data would provide another layer of information and expand our awareness of the vessels present within our area of interest (AOI).

- Enhancement of AI–AIS correlation algorithms. Enhancing the algorithm that links AI-detected vessels with AIS transmissions is essential in improving the accuracy of performance assessments and the identification of dark vessels. Integrating probabilistic modeling, spatiotemporal interpolation techniques, and vessel trajectory prediction could significantly strengthen the robustness and reliability of these associations.
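As an illustration of the last point, the sketch below interpolates each AIS track to the SAR acquisition time before matching, then pairs detections and AIS positions one-to-one by ascending distance. This matters because a vessel sailing at 12 knots covers one nautical mile (1852 m) in five minutes, so matching against a stale AIS fix can split one ship into a “dark” detection and an unmatched AIS record. The linear interpolation, the greedy assignment, and the 500 m gate are assumptions chosen for simplicity, not the platform's current algorithm.

```python
import math


def interpolate_ais(fix_before, fix_after, t):
    """Linearly interpolate two AIS fixes (time_s, lat, lon) to time t."""
    t0, lat0, lon0 = fix_before
    t1, lat1, lon1 = fix_after
    w = (t - t0) / (t1 - t0)
    return lat0 + w * (lat1 - lat0), lon0 + w * (lon1 - lon0)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters on a spherical Earth."""
    r = 6371000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def match(detections, ais_positions, max_dist_m=500.0):
    """Greedy one-to-one matching by ascending distance.

    detections:    {detection_id: (lat, lon)}
    ais_positions: {mmsi: (lat, lon)} interpolated to the SAR acquisition time
    """
    pairs = sorted(
        (haversine_m(*det, *ais), det_id, mmsi)
        for det_id, det in detections.items()
        for mmsi, ais in ais_positions.items()
    )
    matched, used_det, used_ais = {}, set(), set()
    for dist, det_id, mmsi in pairs:
        if dist > max_dist_m:
            break  # all remaining pairs are further apart
        if det_id not in used_det and mmsi not in used_ais:
            matched[det_id] = mmsi
            used_det.add(det_id)
            used_ais.add(mmsi)
    return matched
```

A probabilistic or globally optimal assignment could replace the greedy loop; the interpolation step is independent of that choice.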

6. Conclusions

Over six years of continuous operation, the SatShipAI platform has demonstrated that artificial intelligence can be reliably integrated into maritime surveillance workflows under real-world conditions. The system consistently delivered vessel detection, classification, and length estimation across diverse environments while also providing valuable insights into the presence of “dark” vessels beyond AIS coverage. Importantly, the platform’s modular design and ensemble-based processing enabled adaptability to new challenges, including cross-sensor application to X-band SAR imagery without retraining.

- Operational resilience. The platform exhibited notable resilience by maintaining performance despite recurring bottlenecks such as orbit file delays, satellite outages, and AIS instabilities. These disruptions, while outside the control of the system itself, revealed the importance of redundancy, continuous updates to exclusion masks (e.g., for wind farms), and automated system health monitoring to ensure long-term reliability.

- Human oversight vs. automation. SatShipAI highlights the ongoing balance between autonomy and human intervention in AI–geospatial systems. While the platform largely operated without manual input, critical tasks such as resolving anomalies, updating dependencies, and verifying false detections still required human expertise. This hybrid design underscores the necessity of transparency and oversight for trustworthy AI in high-stakes monitoring domains.

- Final remarks. This study contributes one of the few longitudinal evaluations of an AI-based Earth observation system in operation, offering lessons that extend beyond the maritime domain. By combining quantitative metrics with qualitative reviews and external validation, we show that operational maturity is not only a matter of algorithmic performance but also of resilience, adaptability, and human–AI collaboration. Lessons learned from AIS detection mapping, particularly regarding incomplete coverage and an inconsistent ground truth, highlight the need for more sophisticated fusion and trajectory reconstruction approaches in future platforms. Ultimately, SatShipAI provides both practitioners and researchers with a reference case for the design of next-generation monitoring systems that are sustainable, transparent, and capable of evolving alongside changing infrastructure and operational demands.

Author Contributions

Conceptualization, I.N.; methodology, I.N.; software, I.N. and K.V.; validation, I.N. and K.V.; investigation, I.N.; resources, I.N. and K.V.; data curation, I.N.; writing – original draft preparation, I.N.; writing – review and editing, K.V.; visualization, I.N. and K.V.; supervision, K.V.; project administration, I.N. and K.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data supporting the findings of this study are not publicly available as they originate from a private project and are subject to confidentiality restrictions.

Acknowledgments

The authors would like to thank the editor and the reviewers for reviewing the paper and providing valuable comments. While this research was not funded by the MUSIT project, the project’s activities served as inspiration and are expected to provide valuable insights for future work building on this study.

Conflicts of Interest

Author Ioannis Nasios was employed by the company Nodalpoint Systems LTD. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Ray, C.; Troupiotis-Kapeliaris, A.; Kontopoulos, I.; Andronikou, V.; Nasios, I.; Piliouras, N.; Chevallier, T.; Delmas, V.; Tserpes, K.; Zissis, D.; et al. Multi-Sensor Inferred Trajectories (MUSIT) for Vessel Mobility. In Proceedings of the OCEANS 2025 Brest, Brest, France, 16–19 June 2025; pp. 1–6.

- Awais, C.M.; Reggiannini, M.; Moroni, D.; Salerno, E. A Survey on SAR Ship Classification Using Deep Learning. arXiv 2025, arXiv:2503.11906.

- Chen, H.; Chen, C.; Wang, F.; Shi, Y.; Zeng, W. RSNet: A Light Framework for The Detection of Multi-scale Remote Sensing Targets. arXiv 2024, arXiv:2410.23073.

- El-Darymli, K.; Gierull, C.; Biron, K. Deep Learning vs. K-CFAR for Ship Detection in Spaceborne SAR Imagery. In Proceedings of the IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024.

- Im, Y.; Lee, Y. Ship Detection in Satellite Images from Radar and Optical Sensors using YOLO. In Proceedings of the IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024.

- Ma, X.; Cheng, J.; Li, A.; Zhang, Y.; Lin, Z. AMANet: Advancing SAR Ship Detection with Adaptive Multi-Hierarchical Attention Network. arXiv 2024, arXiv:2401.13214.

- Niranjan, A.; Patial, S.; Aryan, A.; Mittal, A.; Choudhury, T.; Rabiei-Dastjerdi, H.; Kumar, P. A Deep Learning Approach for Ship Detection Using Satellite Imagery. EAI Endorsed Trans. Internet Things 2024, 10.

- Tian, C.; Lv, Z.; Xue, F.; Wu, X.; Liu, D. Multi-Domain Joint Synthetic Aperture Radar Ship Detection Method Integrating Complex Information with Deep Learning. Remote Sens. 2024, 16, 3555.

- Wang, C.; Pei, J.; Luo, S.; Huo, W.; Huang, Y.; Zhang, Y.; Yang, J. SAR ship target recognition via multiscale feature attention and adaptive-weighed classifier. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Yin, Y.; Cheng, X.; Shi, F.; Liu, X.; Huo, H.; Chen, S. High-order Spatial Interactions Enhanced Lightweight Model for Optical Remote Sensing Image-based Small Ship Detection. arXiv 2023, arXiv:2304.03812.

- Deng, Y.; Guan, D.; Chen, Y.; Yuan, W.; Ji, J.; Wei, M. SAR-ShipNet: SAR-Ship Detection Neural Network via Bidirectional Coordinate Attention and Multi-resolution Feature Fusion. arXiv 2022, arXiv:2203.15480. [Google Scholar]

- Ke, X.; Zhang, X.; Zhang, T.; Shi, J.; Wei, S. SAR Ship Detection based on Swin Transformer and Feature Enhancement Feature Pyramid Network. arXiv 2022, arXiv:2209.10421. [Google Scholar] [CrossRef]

- Shi, W.; Zheng, W.; Xu, Z. Ship-Yolo: A Deep Learning Approach for Ship Detection in Remote Sensing Images. J. Mar. Sci. Eng. 2025, 13, 737. [Google Scholar] [CrossRef]

- Gong, Y.; Chen, Z.; Tan, J.; Yin, C.; Deng, W. Two-stage ship detection at long distances based on deep learning and slicing technique. PLoS ONE 2024, 19, e0313145. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Han, D.; Cui, M.; Chen, C. NAS-YOLOX: A SAR ship detection using neural architecture search and multi-scale attention. Intell. Syst. Remote Sens. 2023, 35, 1–32. [Google Scholar]

- Er, M.J.; Zhang, Y.; Chen, J.; Gao, W. Ship detection with deep learning: A survey. Artif. Intell. Rev. 2023, 56, 11825–11865. [Google Scholar] [CrossRef]

- Li, J.; Xu, C.; Su, H.; Gao, L.; Wang, T. Deep Learning for SAR Ship Detection: Past, Present and Future. Remote Sens. 2022, 14, 2712.

- Zhao, T.; Wang, Y.; Li, Z.; Gao, Y.; Chen, C. Ship Detection with Deep Learning in Optical Remote-Sensing Images: A Survey of Challenges and Advances. Remote Sens. 2024, 16, 1145.

- Kanjir, U.; Greidanus, H.; Oštir, K. Vessel detection and classification from spaceborne optical images: A literature survey. Remote Sens. Environ. 2018, 207, 1–26.

- Shao, Z.; Wang, W.; Liu, B.; Li, Y. SeaShips: A Large-Scale Precisely Annotated Dataset for Ship Detection in Visible Images. IEEE Trans. Multimed. 2018, 20, 2593–2604.

- Paolo, F.; Lin, T.T.T.; Gupta, R.; Goodman, B.; Patel, N.; Kuster, D.; Dunnmon, J. xView3-SAR: Detecting Dark Fishing Activity Using Synthetic Aperture Radar Imagery. In Proceedings of the NeurIPS 2022 Datasets and Benchmarks Track, Panama City, Panama, 19–21 October 2022; pp. 1–8.

- Global Fishing Watch. Emerging Technology Gives First Ever Global View of Hidden Vessels; Global Fishing Watch: Washington, DC, USA, 2022.

- Needhi, J. AI’s Role in Combating Illegal Fishing: The Case of Skylight; Medium: San Francisco, CA, USA, 2024.

- ICEYE. Combining SAR with AI & Optical Imagery to Support Maritime Awareness; ICEYE Official Blog: Helsinki, Finland, 2023.

- European Commission CORDIS. Throwing a Wider Net to Close Gaps in Civilian Maritime Surveillance; Results in Brief for COMPASS2020 Project; CORDIS: Brussels, Belgium, 2021.

- He, X.; Chen, X.; Du, X.; Wang, X.; Xu, S. Maritime Target Radar Detection and Tracking via DTNet Transfer Learning Using Multi-Frame Images. Remote Sens. 2025, 17, 836.

- Marti, C.; Ouerhani, N.; Bruzzone, L. Ranking Ship Detection Methods Using SAR Images Based on Machine Learning and AI. J. Mar. Sci. Eng. 2023, 11, 1916.

- Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A Deep Learning Dataset Dedicated to Small Ship Detection from Large-Scale Sentinel-1 SAR Images. Remote Sens. 2020, 12, 2997.

- Li, Z.; Li, W.; Tang, W.; Xu, Z. VesselSARNet: Lightweight Ship Detection For SAR Images. In Proceedings of the IPICE 2024: 2024 International Conference on Image Processing, Intelligent Control and Computer Engineering, Qingdao, China, 19–21 July 2024.

- Reggiannini, M.; Bedini, L. Multi-Sensor Satellite Data Processing for Marine Traffic Understanding. Electronics 2019, 8, 152.

- Petrovic, R.; Simic, D.; Cica, Z.; Drajic, D.; Nerandzic, M.; Nikolic, D. IoT OTH Maritime Surveillance Service over Satellite Network in Equatorial Environment: Analysis, Design and Deployment. Electronics 2021, 10, 2070.

- Lazarov, A. SAR Signal Formation and Image Reconstruction of a Moving Sea Target. Electronics 2022, 11, 1999.

- He, M.; Liu, J.; Yang, Z.; Yin, Z. Integrated Anti-Aliasing and Fully Shared Convolution for Small-Ship Detection in Synthetic Aperture Radar (SAR) Images. Electronics 2024, 13, 4540.

- Chen, C.; Ma, F.; Wang, K.; Liu, H.; Zeng, D.; Lu, P. ShipMOT: A Robust and Reliable CNN-NSA Filter Framework for Marine Radar Target Tracking. Electronics 2025, 14, 1492.

- do Lago Rocha, R.; de Figueiredo, F.A.P. Beyond Land: A Review of Benchmarking Datasets, Algorithms, and Metrics for Visual-Based Ship Tracking. Electronics 2023, 12, 2789.

- Kongsberg Satellite Services (KSAT). Maritime Monitoring Services with Satellite-Based AIS and SAR. 2023. Available online: https://www.ksat.no/services/maritime-monitoring/ (accessed on 24 June 2025).

- Huang, L.; Liu, B.; Li, B.; Guo, W.; Yu, W.; Zhang, Z.; Yu, W. OpenSARShip: A dataset dedicated to Sentinel-1 ship interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 195–208.

- Hou, X.; Ao, W.; Song, Q.; Lai, J.; Wang, H.; Xu, F. FUSAR-Ship: Building a high-resolution SAR-AIS matchup dataset of Gaofen-3 for ship detection and recognition. Sci. China Inf. Sci. 2020, 63, 140303.

- Yan, Z.; Song, X.; Yang, L.; Wang, Y. Ship classification in synthetic aperture radar images based on multiple classifiers ensemble learning and automatic identification system data transfer learning. Remote Sens. 2022, 14, 5288.

- Dechesne, C.; Lefèvre, S.; Vadaine, R.; Hajduch, G.; Fablet, R. Ship identification and characterization in Sentinel-1 SAR images with multi-task deep learning. Remote Sens. 2019, 11, 2997.

- Zhang, T.; Zhang, X. A polarization fusion network with geometric feature embedding for SAR ship classification. Pattern Recognit. 2022, 123, 108365.

- Shao, Z.; Zhang, T.; Ke, X. A Dual-Polarization Information-Guided Network for SAR Ship Classification. Remote Sens. 2023, 15, 2138.

- Zheng, S.; Hao, X.; Zhang, C.; Zhou, W.; Duan, L. Towards Lightweight Deep Classification for Low-Resolution Synthetic Aperture Radar (SAR) Images: An Empirical Study. Remote Sens. 2023, 15, 3312.

- Zhu, H.; Guo, S.; Sheng, W.; Xiao, L. SBNN: A Searched Binary Neural Network for SAR Ship Classification. Appl. Sci. 2022, 12, 6866.

- Tings, B.; Velotto, D. Comparison of ship wake detectability on C-band and X-band SAR. Int. J. Remote Sens. 2018, 39, 4451–4468.

- Cavallo, M.; Culver, J.K.; Rau, C. Green Economy Policies. Where We Are. 2022. Available online: https://www.researchgate.net/publication/358571764_Green_Ecomomy_Policies_Where_we_are (accessed on 4 September 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).