1. Introduction

With the integration of Internet of Things (IoT) technologies into the agricultural sector, smart agriculture has emerged as a vital direction to address critical challenges such as climate change, labor shortages, and food security. In particular, the convergence of sensor technologies, communication networks, and artificial intelligence has facilitated precise environmental monitoring, real-time data analytics, and intelligent decision-support systems. These innovations are key drivers of agricultural automation and sustainable development. Traditionally, IoT-based agricultural communication has relied on wireless protocols such as Wi-Fi, 4G, LoRaWAN, and NB-IoT for data acquisition and transmission. While these technologies provide fundamental connectivity, they suffer from limitations in bandwidth, latency, transmission stability, and scalability [1], rendering them inadequate for core smart agriculture applications such as dense sensor deployments, real-time image recognition, and remote device control. Consequently, deploying communication infrastructures characterized by high speed, high reliability, and ultra-low latency has become essential to advancing agricultural intelligence.

Fifth-generation mobile communication technology (5G), formally introduced by the 3rd Generation Partnership Project (3GPP) in Release 15 (2018) [2], incorporates the concept of private 5G networks. This architecture supports three major use cases: Enhanced Mobile Broadband (eMBB), Ultra-Reliable and Low-Latency Communications (URLLC), and Massive Machine-Type Communications (mMTC), which serve as foundational components for services requiring high bandwidth, high throughput, and low latency [3,4]. The private 5G network is built on a Service-Based Architecture (SBA) [5] and utilizes Network Functions Virtualization (NFV) [6] for dynamic core network resource allocation, thereby enhancing deployment flexibility, scalability, and resource efficiency [7]. Unlike public mobile networks, private 5G networks operate on dedicated licensed spectrum, allowing for independent frequency management and superior interference resistance [8], thus ensuring high connection quality and information security. These advantages make private 5G networks particularly suitable for scenarios such as smart agriculture, remote healthcare, and smart manufacturing, which demand highly reliable communication [9].

In wireless communication, the IEEE 802.11 Wireless Local Area Network (WLAN) standard [10], exemplified by IEEE 802.11ax (Wi-Fi 6), is often referenced as a benchmark when compared with 5G private networks. Wi-Fi 6 incorporates Orthogonal Frequency-Division Multiple Access (OFDMA) and Multi-User Multiple-Input Multiple-Output (MU-MIMO) [11], improving concurrent connectivity, spectral efficiency, throughput, and latency performance. At the Medium Access Control (MAC) layer, however, Wi-Fi 6 continues to rely on the Carrier Sense Multiple Access with Collision Avoidance (CSMA/CA) protocol [12], using the Distributed Coordination Function (DCF) for channel contention. Under heavy traffic, this mechanism may be affected by backoff delays and retransmissions, which can impact efficiency. To mitigate this, enhancements including multi-tiered priority schemes that adjust the Inter-Frame Space (IFS) and backoff range have been explored to improve packet access, reduce latency, and strengthen overall performance [13].

Although Wi-Fi 6 operates in unlicensed spectrum bands (e.g., 2.4 GHz and 5 GHz), which provides flexibility in deployment and relatively low installation costs, its communication performance may be affected in open or electromagnetically congested environments due to interference from nearby devices. This variability in link quality and transmission stability can pose challenges for applications that demand high reliability. In contrast, private 5G networks utilize licensed spectrum bands and support technologies such as Network Slicing [14] and Multi-access Edge Computing (MEC), enabling differentiated Quality of Service (QoS) provisioning and real-time data processing tailored to specific application requirements. Furthermore, private 5G networks adopt a cellular architecture [15] with dense deployments of small cells, forming modular coverage areas. This design allows dynamic capacity scaling to match site-specific demands and facilitates flexible expansion of base stations and spectrum resources. Seamless handover between cells ensures continuous connectivity, while inter-station coordination helps reduce interference and improve signal stability. Compared with the hotspot-based design of Wi-Fi 6, which offers less centralized management and adaptive coordination, the cellular approach of private 5G is generally better suited to scenarios involving high-density device deployments and large-scale remote-control applications.

On the other hand, wired Ethernet provides extremely high stability and ultra-low latency (under 1 ms), with transmission rates reaching up to 100 Gbps. However, its reliance on physical cabling restricts deployment flexibility, making it unsuitable for agricultural settings characterized by heterogeneous devices and high mobility demands. A comparative overview of the three primary communication technologies in the context of smart agriculture is presented in Table 1 [16].

In Taiwan, black fungus, commonly known in Western countries as “Jew’s Ear” due to its ear-like morphology, is widely cultivated. Rich in nutrients comparable to those in animal-based products, it is often referred to as “the meat among vegetarian foods” and has recently attracted attention as a functional health supplement. Species of black fungus are distributed across temperate to tropical regions and exhibit desirable traits for precision agriculture, including rapid mycelial growth, high yield, ease of cultivation, and consistent productivity.

However, the cultivation of black fungus requires precise environmental control. Key parameters such as temperature, relative humidity, light intensity, and carbon dioxide (CO2) levels directly influence both yield and quality. Optimal growth typically occurs between 23 °C and 35 °C with relative humidity levels between 70% and 90% [17]. Although modern greenhouses provide environmental regulation, farmers still rely on manual visual inspection to assess substrate bag conditions and harvest readiness, which may take one to three hours of daily in-greenhouse work. Prolonged exposure to micron-sized fungal spores poses respiratory health risks to workers [18], and frequent access to greenhouses can inadvertently introduce exogenous molds, increasing the risk of fungal outbreaks. For instance, Neurospora sitophila (commonly known as “red bread mold”) can rapidly proliferate in enclosed greenhouse environments, leading to substantial crop losses [19].

Given these challenges, the development of an intelligent cultivation system featuring real-time monitoring, object recognition, and remote control capabilities is crucial for improving black fungus yield, reducing labor intensity, and enhancing disease management. To address these needs, this study proposes a smart cultivation platform for black fungus based on a private 5G communication infrastructure. The system integrates an IoT-enabled environmental sensing module with a deep learning-based image recognition engine to facilitate automated environmental control and real-time alerting.

The remainder of this paper is structured as follows: Section 2 details the technical architecture and potential applications of private 5G networks. Section 3 outlines the design and deployment of the proposed system. Section 4 presents the experimental results and system performance evaluation, while Section 5 concludes the study and discusses future research directions.

2. Related Work

With the rapid advancement of the Internet of Things (IoT) and Artificial Intelligence (AI), increasing research efforts have focused on the integrated application of real-time data sensing, transmission, and distributed decision-making. Pham et al. proposed a cloud-based platform architecture that incorporates various types of IoT sensor nodes and communication modules, enabling real-time device status reporting and service scheduling control. This approach significantly enhances system operational efficiency and resource management capabilities [20]. Their work illustrates how the deep integration of IoT with communication and computing platforms can greatly improve information flow efficiency and remote controllability in the field.

To further improve edge inference and real-time responsiveness, Lin et al. developed a task scheduling system that integrates edge computing and deep reinforcement learning in a smart manufacturing context. By employing a multi-class Deep Q Network (DQN) model, the system achieved real-time decision-making, effectively reducing data transmission latency and enhancing overall system performance [21]. These studies underscore that, in scenarios requiring large-scale sensing and rapid responsiveness, implementing a Multi-access Edge Computing (MEC) platform for local model inference and control logic is essential for realizing intelligent management and high-efficiency applications.

A comprehensive evaluation comparing the performance of 5G Standalone (5G-SA) architecture, Wi-Fi 6, and public 4G networks in agricultural applications was conducted, along with the design of an autonomous driving system for weed detection and targeted spraying in farmland. Experimental results demonstrated that the 5G-SA network significantly outperformed the public 4G network in terms of data transmission latency, throughput, and system stability, highlighting its high reliability and operational efficiency [22].

In the domain of smart agriculture, Tang Nguyen-Tan et al. proposed a smart irrigation system built on a 5G Private Mobile Network (PMN). The system utilized UERANSIM and Free5GC to establish the 5G infrastructure and integrated a Quantum Key Distribution Framework (QKDF) to enhance data transmission security. Moreover, the research team applied the YOLOv8 deep learning model for crop health monitoring and growth stage prediction. By extracting critical features from real-time UAV-captured images, the system dynamically adjusted irrigation schedules to optimize water resource utilization and improve crop yield [23].

A system integrating a private 5G network, unmanned aerial vehicles (UAVs), and an automated spraying robot was developed for detecting and controlling Rumex obtusifolius in forage crop production. UAVs conducted real-time field inspections and transmitted high-resolution images to an edge computing server via a 5G-SA network. Weed detection and localization were carried out using a deep learning model. Upon detection, the spraying robot was guided for precise herbicide application. This study highlights the low latency and high uplink bandwidth advantages of private 5G networks, which enhance reliability and efficiency in agricultural automation [24].

In Japan, the Tokyo Metropolitan Agriculture, Forestry and Fisheries Promotion Foundation, NTT East Corporation, and NTT AgriTechnology Co., Ltd. jointly established the Tokyo Smart Agriculture Research and Development Platform. This platform employs local 5G networks, ultra-high-resolution cameras, smart glasses, and autonomous robots to enable remote operation of greenhouse facilities. Even under remote control conditions, the system maintains high operational quality and efficiency. Through advanced data analytics, the platform monitors farm conditions, optimizes facility management, and supports the practical implementation of innovative agricultural technologies [25].

In summary, private 5G network technology plays a pivotal role in the development of Agriculture 4.0. Its high reliability, ultra-low latency, and support for massive device connectivity position it as a foundational technology for future smart agriculture systems. Nevertheless, current research remains largely focused on environmental monitoring and data transmission. There is still considerable potential for deeper integration with AI and robotics. Building upon existing research, this study explores the application of private 5G networks in smart black fungus cultivation, with a particular emphasis on optimizing low-latency communication and real-time environmental monitoring to enhance the precision and stability of automated farm management systems.

3. Materials and Methods

In this study, the proposed 5G private network system for smart agriculture is applied to the cultivation of black fungus. The system architecture is illustrated in Figure 1.

The proposed method establishes an intelligent agriculture framework for black fungus cultivation through the integration of 5G private networks, Multi-access Edge Computing (MEC), deep learning models, and IoT sensors. The system architecture is designed to exploit the low latency and high bandwidth of 5G networks, enabling the transmission of sensor data and image streams to edge nodes for real-time processing. The analyzed results are subsequently returned to the user interface to support efficient decision-making. The method consists of three functional modules: (1) mycelial growth monitoring based on image inspection, (2) automated detection of mold contamination using deep learning models, and (3) environmental monitoring of temperature, humidity, and CO2 concentration via IoT devices. An Automated Guided Vehicle (AGV) equipped with a network camera serves as the data acquisition platform. The AGV follows predefined magnetic-rail paths to ensure consistent inspection coverage and incorporates a lift mechanism for capturing images across multiple rack levels. Through the integration of communication, computation, and sensing technologies, the method provides a systematic and automated approach to cultivation monitoring, reducing manual intervention while enhancing accuracy and efficiency in production management.

The captured images are transmitted in real time to a Multi-access Edge Computing (MEC) node, where a deep learning model performs inference to estimate the mycelial coverage rate of each substrate bag and detect visual indicators of mold infection. The system includes a real-time alert mechanism that notifies farmers immediately upon detection of anomalies by the model, enabling prompt isolation of contaminated substrate bags and reducing the risk of disease propagation.

In parallel with image-based analysis, the cultivation environment is instrumented with multiple sensors to continuously monitor key environmental parameters, including temperature, humidity, and CO2 concentration. Data are collected at five-minute intervals and transmitted to a backend database for storage. These environmental data are presented to users via a visualization interface, while image inference results from the deep learning model are computed and displayed in real time. Together, these two data streams provide farmers with a comprehensive basis for decision-making, facilitating more precise environmental control and production planning.
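As a rough illustration of how the five-minute environmental samples could be screened before being shown to the farmer, the sketch below checks each reading against the temperature and relative-humidity ranges quoted in the Introduction (23–35 °C, 70–90%). The `Reading` record and the `out_of_range` helper are hypothetical names introduced here; the text gives no CO2 limit, so CO2 is stored but not checked.

```python
from dataclasses import dataclass

# Ranges quoted in the Introduction for black fungus growth.
TEMP_RANGE_C = (23.0, 35.0)
RH_RANGE_PCT = (70.0, 90.0)
SAMPLE_INTERVAL_S = 5 * 60  # five-minute sampling interval from the text


@dataclass
class Reading:
    """One sensor sample (hypothetical record layout)."""
    timestamp_s: int
    temperature_c: float
    humidity_pct: float
    co2_ppm: float  # stored only; no CO2 limit is stated in the text


def out_of_range(reading):
    """Return the names of parameters outside the quoted ranges."""
    alerts = []
    if not TEMP_RANGE_C[0] <= reading.temperature_c <= TEMP_RANGE_C[1]:
        alerts.append("temperature")
    if not RH_RANGE_PCT[0] <= reading.humidity_pct <= RH_RANGE_PCT[1]:
        alerts.append("humidity")
    return alerts


# Two illustrative samples taken one interval apart (made-up values).
readings = [
    Reading(0, 26.5, 82.0, 900.0),
    Reading(SAMPLE_INTERVAL_S, 36.2, 65.0, 950.0),
]
flags = [out_of_range(r) for r in readings]
```

In a deployment, the returned names could feed the same alert channel that the image-inference results use; that coupling is an assumption rather than something stated above.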

3.1. Private 5G Network Planning

This study designs a comprehensive private 5G network architecture to serve as the communication infrastructure for smart agricultural environments. The system architecture is depicted in

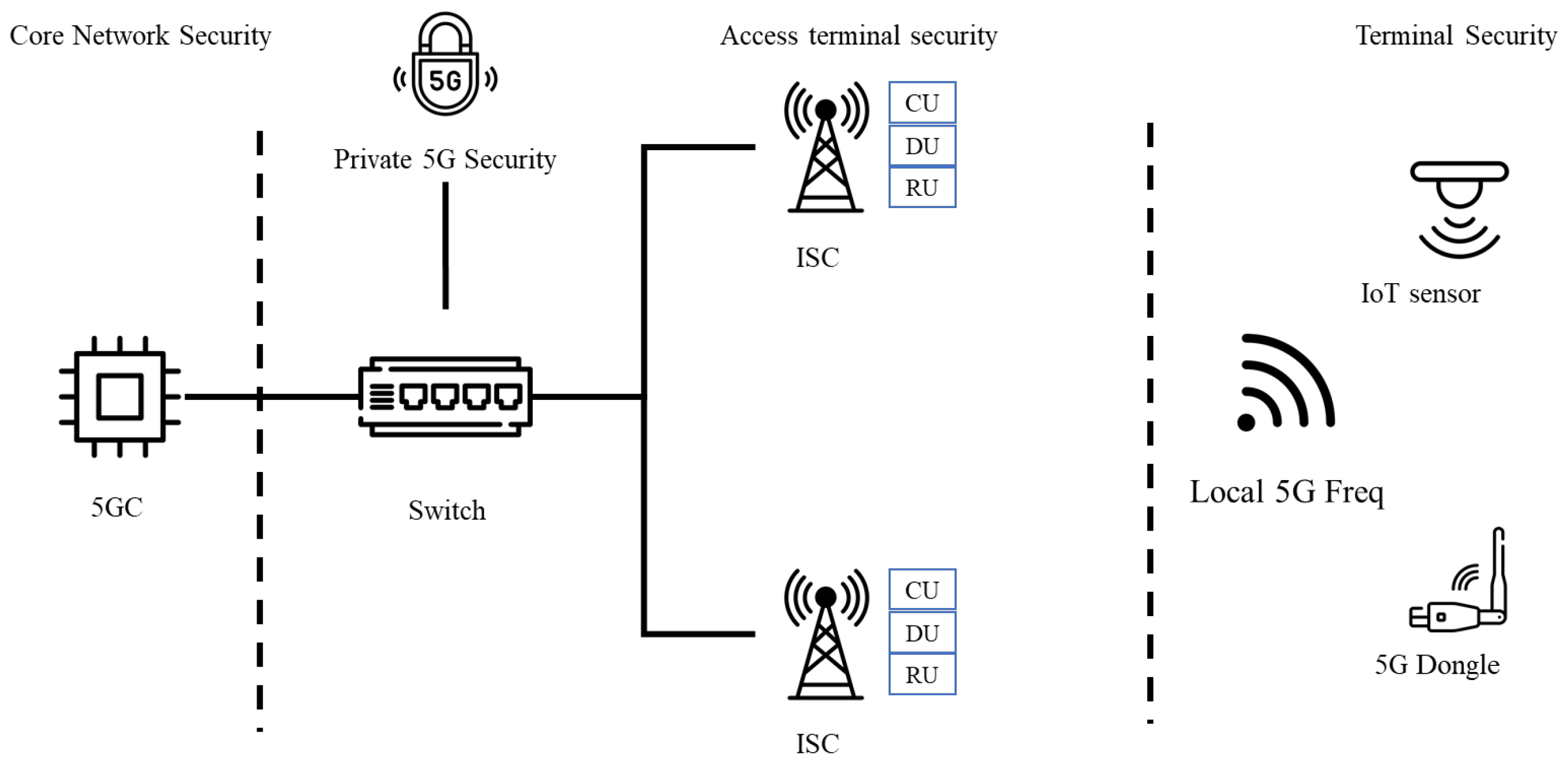

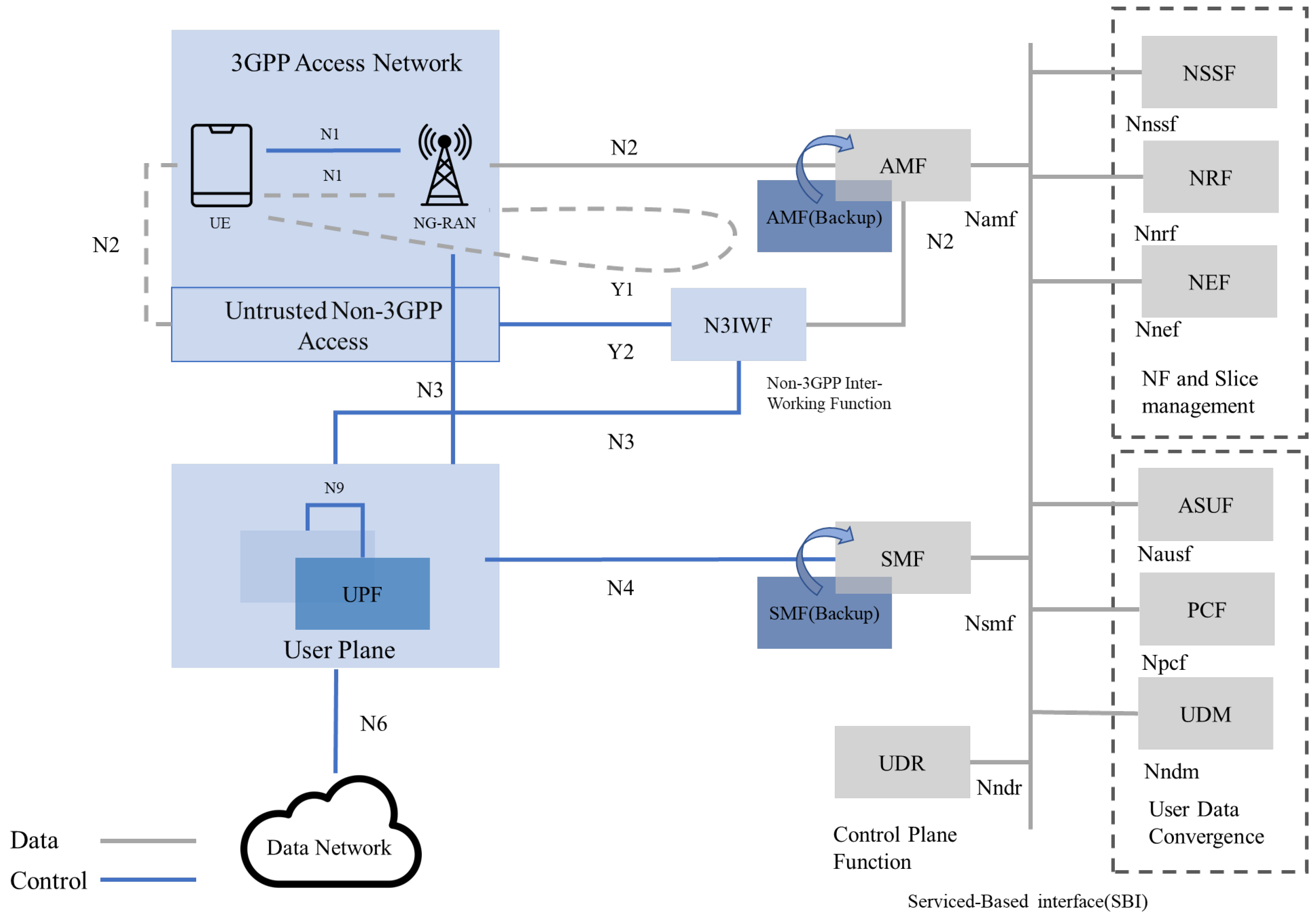

Figure 2.

The main components of the system include a Fifth Generation Core Network (5GC), an Integrated Small Cell (ISC), a Multi-access Edge Computing platform, a cybersecurity firewall, data terminal equipment (e.g., 5G dongles), and various network transmission and management modules. The architecture adheres to the 5G system reference model defined by the 3rd Generation Partnership Project (3GPP) and is structured into three primary domain layers: the Endpoint Device layer, the Radio Access Network (RAN) layer, and the Core Network (CN) layer.

Endpoint devices are categorized based on functionality into fixed-type devices (e.g., Customer Premises Equipment and environmental monitoring nodes) and mobile-type devices (e.g., AGVs equipped with 5G dongles). Within the RAN layer, integrated Distributed Unit/Centralized Unit (DU/CU) small cells are deployed to manage wireless signal processing and protocol scheduling. These small cells connect to the 5G Core Network via the N2 interface for control-plane signaling and the N3 interface for user-plane traffic.

The Core Network comprises several essential network functions, including the Authentication Server Function (AUSF), Session Management Function (SMF), Policy Control Function (PCF), and User Plane Function (UPF). Furthermore, through the N6 interface, the core network connects to internal office automation (OA) systems, IT infrastructure, or cloud-based management platforms. This configuration establishes a dedicated communication environment characterized by high bandwidth, ultra-low latency, and enhanced reliability.

3.1.1. 5G Core Network

This study adopts the 5G core network architecture developed by Saviah Technology as the foundational communication infrastructure for private network deployment. The architecture is based on the commercial version of Free5GC, the world’s first fully 3GPP Release 15 compliant open-source 5G core, and features a modular design offering high scalability and reliability. The overall system structure is illustrated in Figure 3 [26], encompassing multiple Network Functions (NFs), each responsible for distinct communication and management tasks. The primary components and their respective roles are outlined below:

Access and Mobility Management Function (AMF): The AMF handles the registration and connection management of User Equipment (UE) and manages handovers between different base stations (Next Generation Node B, gNB) to ensure seamless mobility and low-latency communication during user sessions.

Session Management Function (SMF): The SMF is responsible for establishing and maintaining PDU sessions for UE. It manages IP address allocation and data path resource scheduling to support stable transmission and consistent Quality of Service (QoS).

Policy Control Function (PCF): The PCF enforces unified QoS policies and traffic management rules. It enables dynamic resource allocation based on user profiles, application requirements, or service classifications.

Unified Data Management (UDM): The UDM stores user subscription and authentication data. In conjunction with the Authentication Server Function (AUSF), it performs identity verification and access control, serving as the central entity for user data management.

Authentication Server Function (AUSF): Working in tandem with the UDM, the AUSF carries out authentication procedures to validate user credentials and ensure secure access to the network.

Network Repository Function (NRF): The NRF enables registration, discovery, and service querying of network functions. It facilitates dynamic resource orchestration and enhances inter-module communication within the 5G core.

Network Exposure Function (NEF): The NEF provides a unified Application Programming Interface (API) for secure access to 5G network capabilities, such as QoS parameters, event notifications, and subscription data. This function enables seamless interoperability between smart agriculture applications and external systems.

Figure 3. Core Network Architecture Diagram [26].


In addition, to enhance network availability and fault tolerance, both the Access and Mobility Management Function (AMF) and the Session Management Function (SMF) are configured with redundant nodes, enabling automatic failover in the event of a primary node failure. This configuration ensures service continuity. On the data plane, the User Plane Function (UPF) connects to external data networks such as IoT servers and cloud platforms, providing high-speed data forwarding and seamless access to edge computing resources.

3.1.2. Integrated Small Cell

In this study, the SCE2200 5G Sub-6 small cell, developed by Askey Communication, is employed as the gNB within the private 5G network architecture. The technical specifications of the device are presented in Table 2. Compliant with the 3GPP Release 15 standard, the SCE2200 supports the n78 (3.3–3.8 GHz) and n79 (4.4–5.0 GHz) Sub-6 GHz frequency bands, two of the most widely adopted mid-band configurations for enterprise 5G deployments, making it well-suited for private network environments [27].

The SCE2200 is powered by the Qualcomm FSM10056 baseband platform and incorporates an NXP octa-core Neural Processing Unit (NPU). It utilizes a 2 × 2 MIMO antenna configuration and supports a maximum transmit power (TX Power) of 24 dBm, with an equivalent isotropically radiated power (EIRP) of up to 32 dBm, providing approximately 70 m of indoor line-of-sight (LOS) coverage.
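To put the quoted radio figures in context, the following sketch derives the antenna gain implied by the 24 dBm transmit power and 32 dBm EIRP, and estimates the free-space path loss at the 70 m line-of-sight range. It is an idealized calculation only: the 4850 MHz carrier is an assumption (the centre of the 4.8–4.9 GHz allocation used in this deployment), cable losses are ignored, and free-space propagation does not model racks, walls, or humidity inside a greenhouse.

```python
import math


def fspl_db(distance_m, freq_mhz):
    """Free-space path loss in dB (distance in metres, frequency in MHz)."""
    return 20 * math.log10(distance_m / 1000.0) + 20 * math.log10(freq_mhz) + 32.44


TX_POWER_DBM = 24.0   # maximum transmit power from the text
EIRP_DBM = 32.0       # maximum EIRP from the text
DISTANCE_M = 70.0     # indoor line-of-sight coverage from the text
FREQ_MHZ = 4850.0     # assumption: centre of the 4.8-4.9 GHz allocation

# EIRP = TX power + antenna gain (cable losses ignored in this sketch).
antenna_gain_dbi = EIRP_DBM - TX_POWER_DBM

# Idealised loss and received power for an isotropic receive antenna.
loss_db = fspl_db(DISTANCE_M, FREQ_MHZ)
rx_power_dbm = EIRP_DBM - loss_db

print(f"implied antenna gain: {antenna_gain_dbi:.1f} dBi")
print(f"free-space loss at {DISTANCE_M:.0f} m: {loss_db:.2f} dB")
print(f"received power (isotropic RX): {rx_power_dbm:.2f} dBm")
```

Under these assumptions the implied antenna gain is 8 dBi and the free-space loss is about 83 dB, leaving roughly -51 dBm at the receiver, which suggests a comfortable margin in unobstructed conditions.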

In terms of data transmission performance, the SCE2200 offers a maximum downlink rate of 750 Mbps and an uplink rate ranging from 100 to 200 Mbps. The device can support up to 64 simultaneous user connections, making it suitable for high-density agricultural scenarios involving sensor networks, high-bandwidth visual data transmission, and automated device control. The SCE2200 also features advanced time synchronization capabilities, including support for GPS, Precision Time Protocol (PTP), and 1PPS signals. These capabilities are essential for maintaining accurate and consistent timing—an important requirement for time-aligned visual inference and coordinated control operations.

The SCE2200 is equipped with both a 2.5G RJ45 and a 10G SFP+ Ethernet interface, enabling flexible integration with either the 5G core network or a Multi-access Edge Computing (MEC) platform. The device supports power supply through either a 12 V DC input or Power over Ethernet++ (PoE++, IEEE 802.3bt), with a maximum power consumption of less than 60 W. These features make the SCE2200 suitable for deployment in environments with limited power infrastructure or restrictive installation conditions, such as agricultural greenhouses and automated climate-controlled storage facilities. The actual deployment scenario of the device is shown in Figure 4.

3.1.3. Spectrum and Licensing

The private 5G network deployed in this study is classified as a Non-Public Network (NPN) [28] and operates on the n79 frequency band (4.8–4.9 GHz), which is allocated by Taiwan’s National Communications Commission (NCC). This band falls within the Sub-6 GHz mid-frequency range and offers favorable signal propagation characteristics, including improved wall penetration and reliable indoor coverage. These attributes make it particularly suitable for semi-enclosed environments such as greenhouse farms. The private network operating license is presented in Figure 5.

The private network architecture adopted in this system conforms to the “Local Area Network (LAN)” deployment model defined in 3GPP TS 22.261 [29]. This network type is optimized for communication within a specific or confined geographic area and offers a controllable, manageable, and operator-independent solution, eliminating the need for reliance on a Mobile Network Operator (MNO). Leveraging this architecture, the system can be tailored to meet the specific requirements of the deployment environment in terms of bandwidth allocation, core network functions, and service policies. It effectively supports applications that demand low latency, high reliability, and edge inference capabilities, making it particularly suitable for smart agriculture scenarios requiring high autonomy and real-time responsiveness.

3.1.4. Signal Coverage Area in the Deployment Site

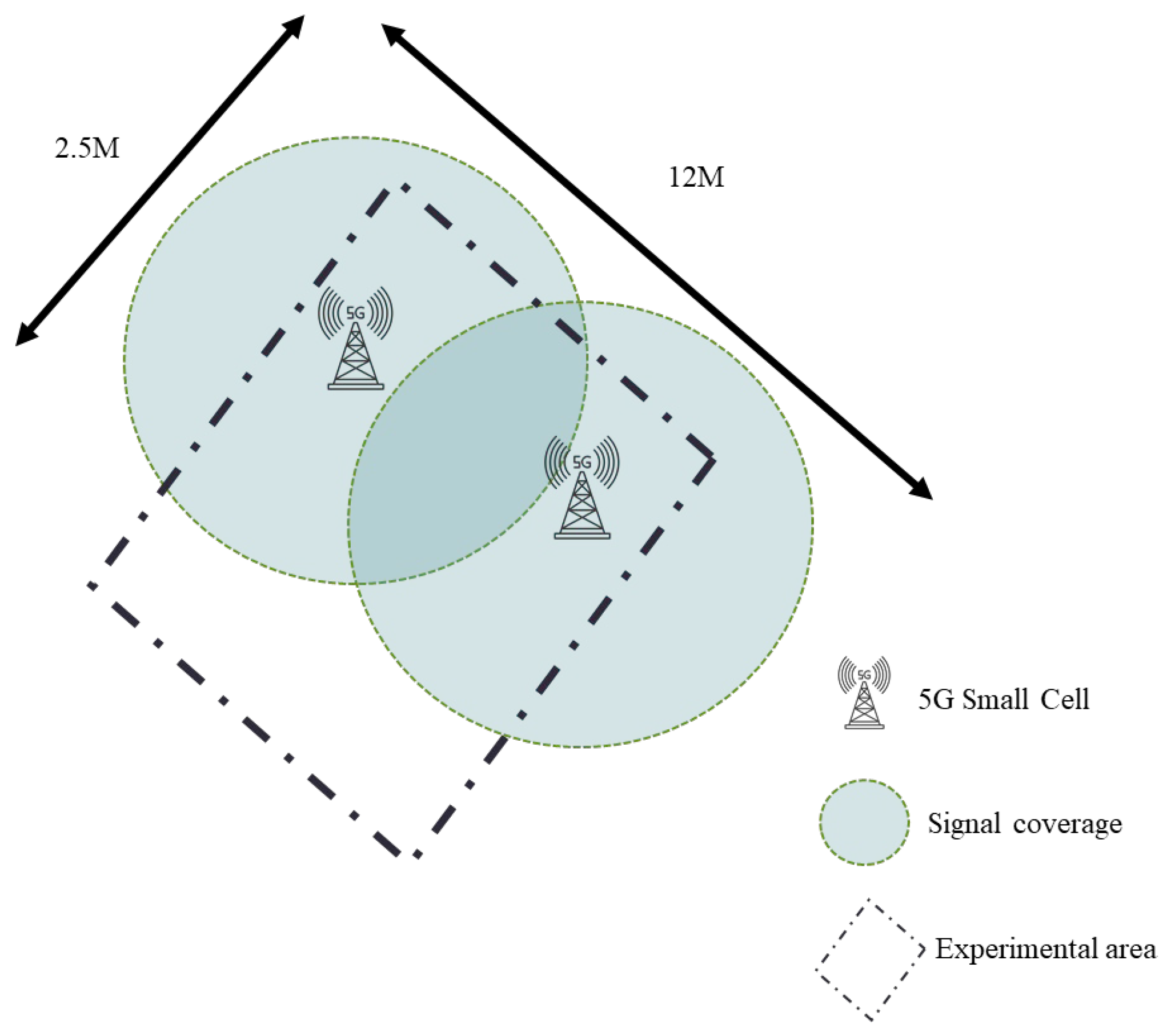

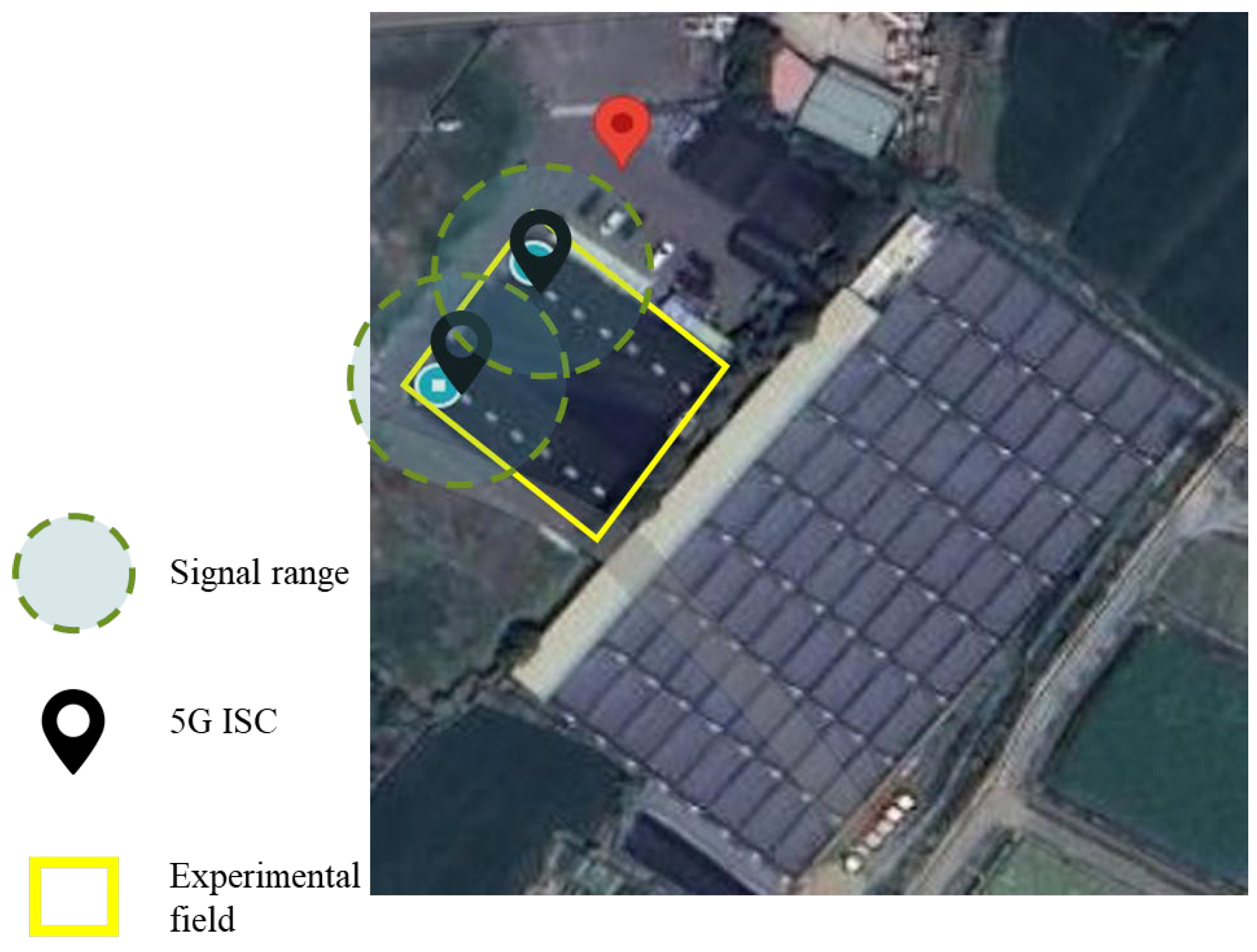

In the deployment of a private 5G network within a smart farm, the coverage area and signal distribution are critical design parameters that directly impact communication performance. In this study, high-quality wireless coverage of the core black fungus cultivation area was achieved through the deployment of Integrated Small Cells, ensuring stable connectivity and data transmission for the environmental sensors and the AGV. The deployment layout and signal coverage are illustrated in Figure 6.

The deployment uses two indoor small-cell base stations (gNBs). Together, they provide an effective service corridor of approximately 12 m × 2.5 m (length × width), as illustrated in Figure 6. The two gNBs are positioned collinearly along the aisle so that their coverage footprints intersect in the middle, forming a dedicated overlap/hand-over zone. This layout maximizes usable coverage within the corridor while eliminating dead zones and ensuring reliable, continuous connectivity for mobile automated equipment.
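The role of the overlap zone can be illustrated with a toy simulation of an AGV travelling along the 12 m corridor and switching cells only when the neighbouring cell is stronger by a hysteresis margin. Everything numeric below is hypothetical: the gNB positions (3 m and 9 m), the log-distance path-loss exponent, and the 3 dB margin are illustrative choices, and the rule is a simplification of the measurement-event-based handover used in real 5G networks.

```python
import math

# Hypothetical gNB positions along the 12 m corridor (metres).
CELLS = {"ISC1": 3.0, "ISC2": 9.0}
HYSTERESIS_DB = 3.0        # hypothetical handover margin
PATH_LOSS_EXPONENT = 2.0   # hypothetical log-distance exponent


def relative_rssi_db(agv_pos_m, cell_pos_m):
    """Relative signal level from a log-distance model (reference level omitted)."""
    distance = max(abs(agv_pos_m - cell_pos_m), 0.5)  # avoid log10(0) under a cell
    return -10.0 * PATH_LOSS_EXPONENT * math.log10(distance)


def simulate(positions, start_cell="ISC1"):
    """Walk the AGV along `positions`, recording (position, from, to) handovers."""
    serving = start_cell
    handovers = []
    for pos in positions:
        for name, cell_pos in CELLS.items():
            if name == serving:
                continue
            gain = relative_rssi_db(pos, cell_pos) - relative_rssi_db(pos, CELLS[serving])
            if gain > HYSTERESIS_DB:
                handovers.append((pos, serving, name))
                serving = name
                break
    return serving, handovers


positions = [i * 0.5 for i in range(25)]  # 0.0 m to 12.0 m in 0.5 m steps
final_cell, handovers = simulate(positions)
```

With these made-up values the single handover occurs slightly past the corridor midpoint, which shows why a hysteresis margin keeps the AGV from switching back and forth while it is inside the overlap zone.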

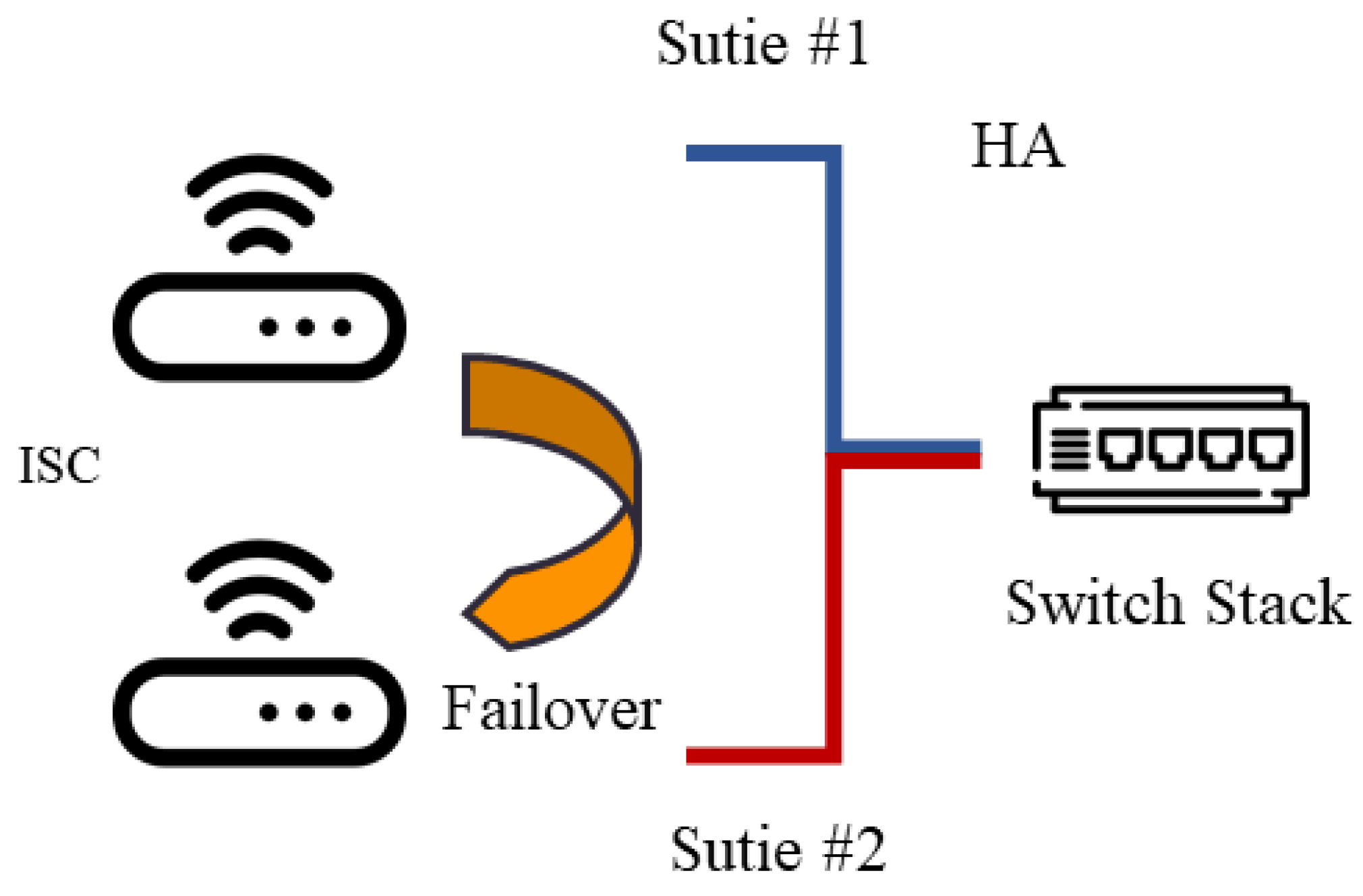

To ensure stable operation and uninterrupted service of the private 5G network within the smart agriculture environment, two Integrated Small Cells, designated as ISC1 and ISC2, were installed in the experimental site. Their configuration is illustrated in Figure 7. These two base stations are deployed using a High Availability (HA) architecture and are configured with a mutual failover mechanism to provide redundancy and fault tolerance [30].

3.1.5. Cybersecurity Testing

As shown in Figure 8, the cybersecurity design of the private 5G network in this study follows the Cybersecurity Testing Guidelines for 5G Mobile Communication Base Stations issued by the National Communications Commission [31]. A multi-layered security architecture was implemented, and cybersecurity testing and field verification were conducted by Auray OTIC, an accredited laboratory. These measures ensure that the system deployment complies with both national and international cybersecurity standards.

The cybersecurity testing of the 5G dongles and Wi-Fi devices used in this system was conducted in accordance with the European Union’s EN 303 645 standard for IoT security [32] and 3GPP TS 33.117 [33]. The testing scope included user equipment (UE) authentication and access control mechanisms. For the base station’s wireless communication interface, security verification was performed based on 3GPP TS 33.511 [34], which includes testing for confidentiality and integrity of signaling at the Radio Resource Control (RRC) layer [35].

At the core network level, cybersecurity design and management were implemented and reviewed according to the internal security policies and operational procedures defined by the core system operator. These measures include user data protection, anomaly detection, and access logging mechanisms.

The overall cybersecurity strategy encompasses the device layer, communication layer, and network layer, forming a comprehensive end-to-end security protection framework to ensure both communication stability and data security within the experimental deployment site.

3.2. Object Detection of Black Fungus Cultivation Substrate Bags

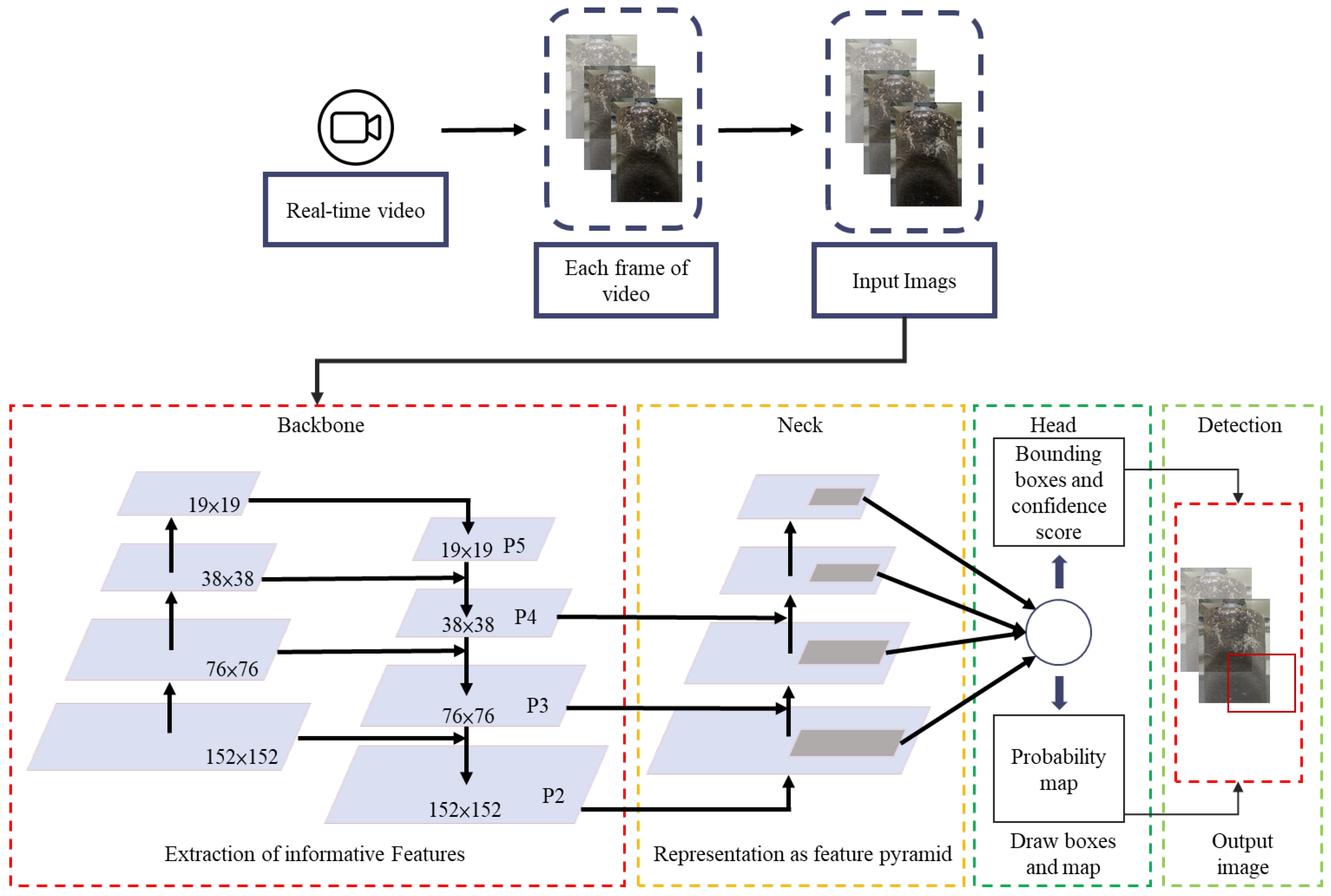

This study aims to extract mycelial image features from black fungus cultivation substrate bags as the basis for evaluating mycelial coverage and identifying potential diseases, thereby establishing an intelligent monitoring system with real-time and automated capabilities. The system employs the YOLOv8 deep learning model, which is based on a one-stage object detection architecture [36]. This model offers high inference speed and detection accuracy, making it particularly suitable for deployment in smart agricultural environments that require edge computing.

Figure 9 illustrates the YOLOv8-based pipeline used in this work. An input RGB image of a single substrate bag is resized to 608 × 608 × 3 and normalized. The backbone extracts multi-scale features that form P2–P5 maps of 152 × 152, 76 × 76, 38 × 38, and 19 × 19, respectively. A bidirectional feature pyramid neck fuses these maps, and an anchor-free decoupled detection head predicts object hypotheses on all four scales.
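The feature-map sizes quoted above follow from dividing the 608-pixel input side by the downsampling stride of each pyramid level. The short sketch below reproduces that arithmetic; the strides of 4, 8, 16, and 32 for P2–P5 are inferred from the quoted sizes rather than stated in the text, and `feature_map_sizes` is a hypothetical helper name.

```python
INPUT_SIZE = 608  # input side length from the text

# Strides inferred from the quoted map sizes (608 / 152 = 4, and so on).
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def feature_map_sizes(input_size, strides):
    """Return the side length of each pyramid level for a square input."""
    sizes = {}
    for name, stride in strides.items():
        if input_size % stride != 0:
            raise ValueError(f"{input_size} is not divisible by stride {stride}")
        sizes[name] = input_size // stride
    return sizes


sizes = feature_map_sizes(INPUT_SIZE, STRIDES)
for name, side in sizes.items():
    print(f"{name}: {side} x {side}")
```

The divisibility check also shows why the input side is a multiple of 32: every level then has an integer grid size.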

3.2.1. Detection of Black Fungus Mycelial Coverage Rate

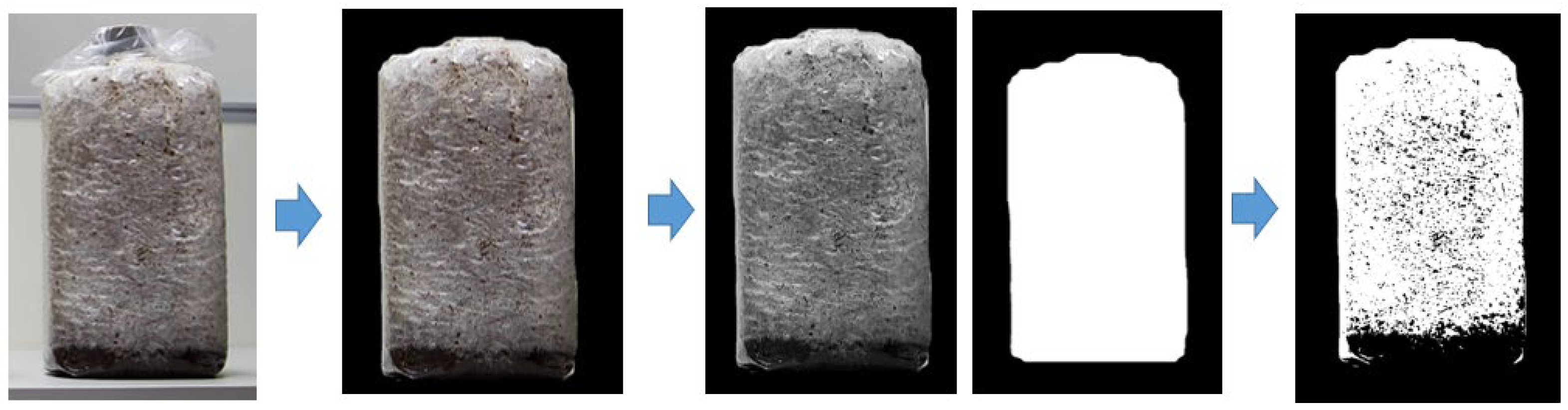

To enhance the generalization capability and recognition stability of the model, the training dataset includes a diverse and precisely annotated set of image samples. These samples cover various stages of mycelial growth, different camera angles, and varying distances and lighting conditions. All images were manually labeled, and feature enhancement was applied to highlight mycelial distribution and potential abnormal regions. This approach enables the model to effectively learn key visual characteristics, such as texture patterns and color distributions. In the image preprocessing stage, particular attention was given to the fact that black fungus mycelium typically exhibits a white visual appearance, as illustrated in Figure 10.

The system initially applies color thresholding and image binarization to perform preliminary region segmentation. This is followed by the use of the Canny edge detection algorithm [37] to enhance the boundaries of mycelial contours, thereby improving the model’s ability to recognize the progression of mycelial growth and detect pathological symptoms such as discoloration or uneven density. This preprocessing strategy significantly enhances the inference stability and disease warning capabilities of the model in real-world agricultural environments, providing a reliable tool for pest and disease management in smart agriculture.
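For readers unfamiliar with automatic binarization, the sketch below shows one standard way to choose a threshold, Otsu's method, which the processing steps later in this section name for the binarization stage. It is a plain-Python illustration on a flat list of 8-bit grayscale values, not the system's implementation; the function names and the sample pixel values are hypothetical.

```python
def otsu_threshold(pixels):
    """Return the threshold t that maximises between-class variance.

    Pixels with value <= t form the dark class, values > t the bright class.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in the dark class so far
    sum0 = 0    # intensity sum of the dark class so far
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mu0 - mu1) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


def binarize(pixels, threshold):
    """Mark bright (candidate mycelium) pixels as 1 and the rest as 0."""
    return [1 if p > threshold else 0 for p in pixels]


# Made-up example: dark substrate around 30-40, bright mycelium around 200-210.
pixels = [30] * 50 + [40] * 50 + [200] * 40 + [210] * 60
threshold = otsu_threshold(pixels)
binary = binarize(pixels, threshold)
```

Because white mycelium and dark substrate form two well-separated intensity clusters, a histogram-based threshold of this kind tends to separate them without hand tuning.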

In the deep learning model developed for this study, a novel Cross Stage Partial Fusion (C2f) module was introduced into the backbone architecture, replacing the traditional C3 structure [38]. The structure of the C2f module is illustrated in Figure 11.

By employing channel splitting followed by a series of bottleneck modules and subsequent feature fusion, the C2f module effectively enhances overall feature extraction efficiency. In addition to offering improved feature learning capabilities, this module significantly reduces both the number of parameters and computational load, thereby improving inference speed and stability on edge devices. Such characteristics make it particularly suitable for deployment on autonomous platforms and sensing devices in smart agriculture, where long-term, stable operation is essential.
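The split, bottleneck, and fusion steps can be made concrete by tracking channel counts through the module. The sketch below is bookkeeping only, with no tensors or learned weights, and it assumes the layout used in common open-source YOLOv8 implementations (a 1 × 1 convolution to twice the hidden width, a two-way split, a chain of bottlenecks on the last branch, then concatenation and a final 1 × 1 convolution). The function name, the expansion ratio of 0.5, and the example sizes are assumptions rather than details confirmed by the text.

```python
def c2f_channel_plan(c_in, c_out, n_bottlenecks, expansion=0.5):
    """Trace channel counts through a C2f-style block (assumed layout)."""
    hidden = int(c_out * expansion)

    # A first 1x1 convolution maps c_in to 2 * hidden channels, split in two.
    branches = [hidden, hidden]

    # Each bottleneck consumes the latest branch and emits `hidden` channels;
    # every intermediate output is kept for the final fusion.
    for _ in range(n_bottlenecks):
        branches.append(hidden)

    concat = sum(branches)  # (2 + n) * hidden channels after concatenation
    return {
        "c_in": c_in,
        "after_first_conv": 2 * hidden,
        "branches": branches,
        "concat": concat,
        "c_out": c_out,  # a final 1x1 convolution maps concat to c_out
    }


plan = c2f_channel_plan(c_in=128, c_out=128, n_bottlenecks=3)
print(plan)
```

Keeping every intermediate branch for the concatenation is what gives the block its richer gradient paths, while the halved hidden width keeps each bottleneck comparatively cheap.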

To improve the model’s accuracy in identifying black fungus mycelial growth conditions, the system incorporates a high-resolution, low-noise image preprocessing pipeline. This pipeline integrates the Canny edge detection technique to enhance morphological features. The processing steps are shown in Figure 12.

Image Cropping and Size Normalization: The original images, captured from the top-mounted camera on the autonomous platform, are first cropped to a resolution of 640 × 480 pixels to ensure consistency with the model input dimensions.

Brightness and Contrast Enhancement: A linear brightness and contrast enhancement technique is applied to increase the visual distinction between the bright white mycelium and the darker substrate background, thereby improving the model’s ability to identify relevant feature regions.

Grayscale Conversion: The enhanced images are converted to grayscale to reduce channel dimensionality and computational cost, while preserving the luminance characteristics critical to representing mycelial texture.

Masking and Binarization: To minimize background interference and noise, a binary mask is first generated using a predefined threshold range (50–220). Subsequently, the Otsu thresholding algorithm is applied to automatically determine the optimal threshold for adaptive binarization, effectively filtering out non-mycelial regions.

Edge Enhancement and Noise Suppression: The Canny edge detection algorithm is utilized in combination with Gaussian blur for smoothing, and a dual-threshold edge tracking strategy. Pixels with intensity values greater than 100 are classified as strong edges, while those between 50 and 100 are considered weak edges and are retained only if connected to strong edges. Isolated noise points are automatically discarded.
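The dual-threshold rule in the last step can be sketched as follows. The code covers only the hysteresis stage of Canny edge detection (not Gaussian smoothing, gradient computation, or non-maximum suppression) and operates on a small grid of gradient magnitudes. The thresholds 100 and 50 come from the text; treating the weak band as inclusive at both ends, using 8-connectivity, and the sample grid itself are assumptions.

```python
from collections import deque

STRONG_T = 100  # values above this are strong edges (from the text)
WEAK_T = 50     # values from WEAK_T up to STRONG_T are weak edges (from the text)


def hysteresis(magnitude):
    """Keep strong edges plus weak edges connected to them (8-connectivity)."""
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()

    # Seed the search with every strong pixel.
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] > STRONG_T:
                edges[r][c] = True
                queue.append((r, c))

    # Grow outwards through weak pixels that touch an accepted edge pixel.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < rows and 0 <= nc < cols:
                    if not edges[nr][nc] and WEAK_T <= magnitude[nr][nc] <= STRONG_T:
                        edges[nr][nc] = True
                        queue.append((nr, nc))
    return edges


# Made-up gradient magnitudes: one strong pixel, a weak chain, one isolated weak pixel.
grad = [
    [0, 0, 0, 0, 0],
    [0, 150, 80, 60, 0],
    [0, 0, 0, 0, 0],
    [70, 0, 0, 0, 0],
]
edge_map = hysteresis(grad)
kept = [(r, c) for r, row in enumerate(edge_map) for c, v in enumerate(row) if v]
```

In this example the weak pixels chained to the strong one survive, while the isolated weak pixel in the bottom-left corner is discarded as noise.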

Mycelial coverage is defined as the fraction of pixels within the bag region that are labeled as mycelium by the preprocessing–segmentation pipeline, as expressed in Equation (1).

$$\text{Coverage} = \frac{|M|}{|R|} \times 100\% \tag{1}$$

Here R is the bag region of interest, M is the final mycelium mask within R, and |·| denotes the number of pixels in a mask.

Concretely, the preprocessing–segmentation pipeline is defined as follows. An input image is first cropped to a 640 × 480 resolution and subjected to brightness and contrast enhancement, after which it is converted into a grayscale image. The bag region of interest R is extracted using intensity range masking (50–220), followed by morphological closing to eliminate small gaps. Within R, Otsu thresholding is applied to generate a binary mycelium candidate map B. To further refine the boundaries while suppressing noise, Canny edge detection (Gaussian smoothing with hysteresis thresholds of 100/50) is applied, and the resulting edges are lightly dilated. The final mycelium mask M is obtained from B and the dilated edge map, with small isolated components removed by an area filter. This definition confines the denominator to the substrate area, excludes background pixels, and yields a reproducible percentage used for growth assessment; low coverage accompanied by edge-enhanced speckle patterns triggers a contamination/disease alert in the monitoring system.
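The coverage definition (mycelium pixels inside the bag region divided by all bag-region pixels) can be sketched as follows. The function name and the nested-list mask representation are assumptions made for illustration; masks are taken to be equally sized 2D lists of 0/1 values.

```python
def coverage_percent(mycelium_mask, bag_mask):
    """Percentage of bag-region pixels that are labeled as mycelium.

    Both masks are 2D lists of 0/1 values with identical shape.
    Pixels outside the bag region never enter the numerator or denominator.
    """
    bag_pixels = 0
    mycelium_pixels = 0
    for m_row, r_row in zip(mycelium_mask, bag_mask):
        for m, r in zip(m_row, r_row):
            if r:
                bag_pixels += 1
                if m:
                    mycelium_pixels += 1
    if bag_pixels == 0:
        raise ValueError("bag region is empty")
    return 100.0 * mycelium_pixels / bag_pixels
```

Restricting the count to pixels where the bag mask is set is what keeps background pixels out of the denominator, as the definition requires.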

The final output edge images clearly depict the contours and distribution density of mycelial growth, serving as the input feature data for subsequent model training and inference. These processed images can also be utilized to assess mycelial coverage and detect potential abnormalities, thereby providing farmers with accurate information to support production management decisions.

3.2.2. Detection of Invasive Mold

During the cultivation of black fungus, substrate bags are susceptible to contamination by external molds, with Neurospora spp. (commonly referred to as red bread mold) being one of the most prevalent pathogens. Infected areas typically appear as orange-red to reddish-brown patches, exhibiting distinct color characteristics, as shown in Figure 13. Such infections not only inhibit mycelial growth but may also lead to substrate bag decay, ultimately affecting both yield and quality.

In response to this issue, the present study introduces a color-based image processing method to identify and extract abnormal lesions caused by red bread mold and similar pathogens. By converting the images into alternative color spaces, the method enhances hue and saturation stability under varying lighting conditions. This is followed by color threshold filtering, which effectively isolates diseased regions from the original image. These extracted features are then used for training deep learning models and for supporting anomaly detection and early warning analysis. This approach offers the advantages of low computational cost and high implementation flexibility, making it suitable for real-world agricultural environments.

To accurately extract regions infected by Neurospora (red bread mold) within black fungus cultivation substrate bags, this study first converts the original RGB color images into the HSV (Hue, Saturation, Value) color space to improve color separability and enhance the detectability of red-colored lesions. In the HSV color space, H represents hue, S denotes saturation, and V corresponds to brightness. Based on practical image observations, infected regions typically exhibit high-saturation red to reddish-orange tones. Therefore, a dual-threshold masking approach is applied using hue intervals of H ∈ [0, 10] ∪ [160, 180], with S ≥ 100 and V ≥ 100, to capture the relevant color spectrum of infected areas.
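As a minimal sketch of the dual-range hue test, the snippet below classifies a single RGB pixel using Python's standard `colorsys` module. It assumes the stated thresholds follow the common 8-bit convention in which hue is scaled to 0–180 and saturation and value to 0–255; the source does not state the scale explicitly, and the function name is hypothetical.

```python
import colorsys


def is_red_lesion(r, g, b):
    """Return True if an 8-bit RGB pixel falls in the red lesion range.

    Assumption: thresholds use a hue scale of 0-180 and S/V scales of 0-255.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    h_scaled = h * 180.0
    s_scaled = s * 255.0
    v_scaled = v * 255.0
    in_low_band = 0 <= h_scaled <= 10       # reds just above hue 0
    in_high_band = 160 <= h_scaled <= 180   # reds wrapping around the hue circle
    # The logical OR plays the role of combining the two range masks.
    return (in_low_band or in_high_band) and s_scaled >= 100 and v_scaled >= 100
```

Two hue bands are needed because red straddles the wrap-around point of the hue circle, so a single interval would miss either the orange-leaning or the magenta-leaning reds.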

After generating the initial masks for each threshold range, the two masks are combined using a bitwise OR operation to form a unified mask of the red lesion regions. To enhance mask continuity and suppress noise, morphological closing [39,40] is applied twice using a 5 × 5 square structuring element (kernel), which effectively connects fragmented regions and fills small holes. Subsequently, Connected Component Analysis (CCA) [38] is employed to compute and filter the area of each connected region within the mask, eliminating noise artifacts. This enables the quantification of the ratio between the area of red lesions and the total surface area of the substrate bag, providing a basis for determining the severity of Neurospora infection.
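The area filtering and lesion ratio described above can be sketched in pure Python as follows. The 4-connectivity, the `min_area` parameter, and the function names are assumptions for illustration; the source does not specify the connectivity or the area cutoff.

```python
from collections import deque


def filter_small_components(mask, min_area):
    """Keep only connected regions (4-connectivity) with at least min_area pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    kept = [[0] * cols for _ in range(rows)]
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first search collects one connected component.
            component = []
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                component.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(component) >= min_area:
                for r, c in component:
                    kept[r][c] = 1
    return kept


def lesion_ratio(lesion_mask, bag_area_pixels):
    """Fraction of the bag surface (in pixels) covered by lesion pixels."""
    lesion_pixels = sum(sum(row) for row in lesion_mask)
    return lesion_pixels / bag_area_pixels
```

In this sketch a single stray pixel is dropped by the area filter before the ratio is computed, so isolated noise does not inflate the estimated infection severity.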

Through the aforementioned image processing pipeline, the system can effectively distinguish between healthy mycelial regions and those infected by red bread mold, thereby enhancing both the accuracy and automation level of disease recognition. This technique can be integrated into an autonomous inspection platform to enable real-time disease detection and risk alerts, assisting farmers in identifying and isolating infection sources at an early stage. Ultimately, it reduces the risk of mold outbreak propagation within the cultivation environment and improves overall management efficiency and crop health stability.

3.3. Experimental Site Planning

The experimental site for this study is located in Wufeng District, Taichung City, Taiwan, utilizing a smart farm equipped with a fully deployed private 5G network as the application scenario, as illustrated in Figure 14.

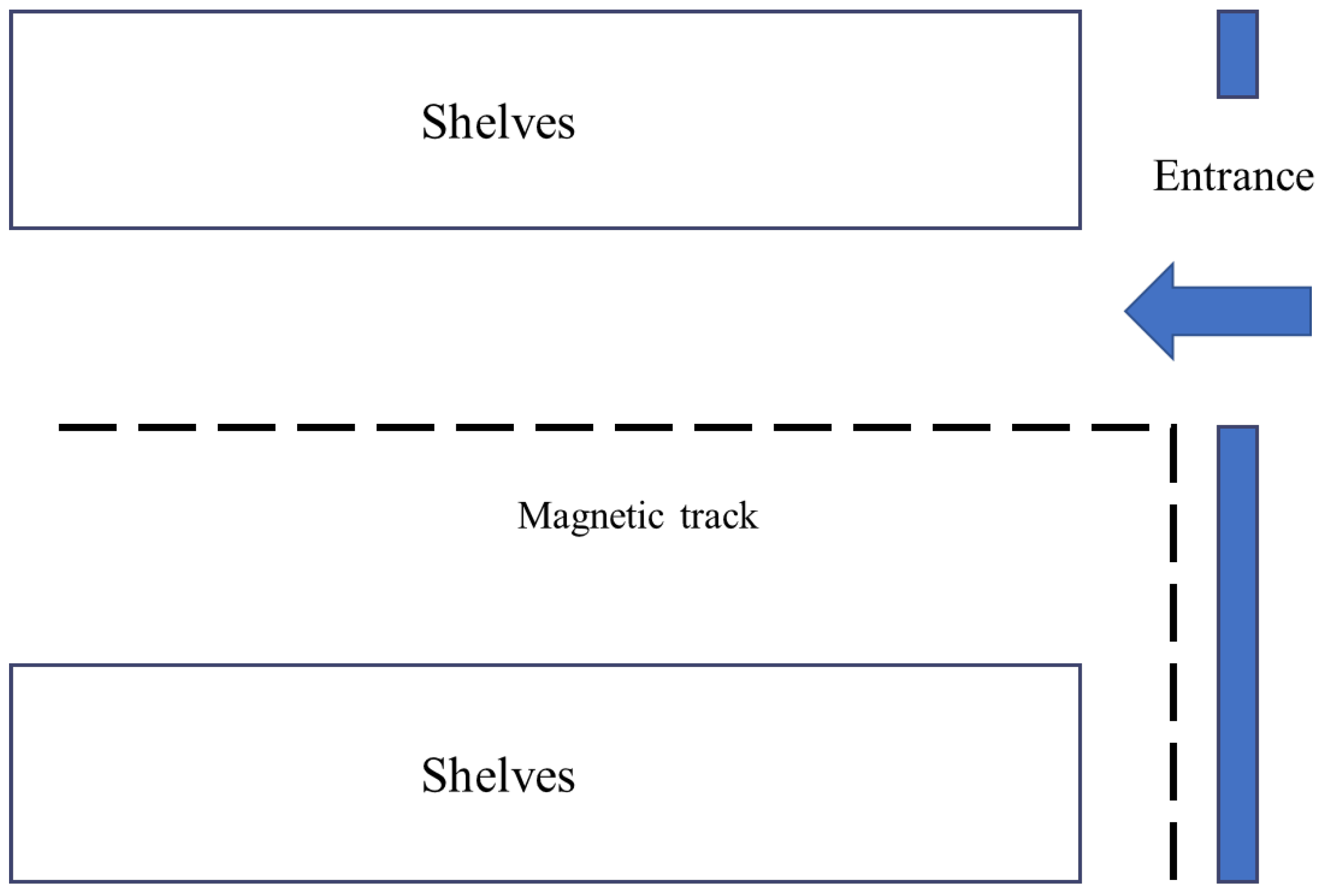

The farm adopts a container-based modular design to create an independent and controllable cultivation environment. The container measures 12 m in length, 2.3 m in width, and 2.3 m in height. Its interior is fully enclosed, effectively isolating the internal environment from external climatic influences to enhance environmental control precision and ensure experimental stability.

A magnetic guidance path is installed along the central aisle inside the container to facilitate the navigation of the AGV. Cultivation racks are arranged on both sides of the aisle to hold the black fungus substrate bags. Temperature, humidity, and carbon dioxide sensors are installed throughout the cultivation chamber, strategically distributed to collect environmental data across the experimental site. Additionally, a monitoring camera is mounted on the left side of the aisle to provide real-time surveillance of the air conditioning system, lighting equipment, ventilation fans, and the operation status of the unmanned vehicle, as illustrated in Figure 15.

3.3.1. Environmental Data Monitoring

To ensure that black fungus mushrooms are cultivated under optimal environmental conditions throughout all growth stages, various environmental sensing devices including temperature, humidity, and carbon dioxide sensors were installed within the cultivation chamber. These sensors were positioned above the cultivation racks in appropriate locations to minimize monitoring errors caused by obstruction or improper placement, as illustrated in Figure 16. The sensing devices transmit real-time data to the central control system via the on-site private 5G network. This setup allows administrators to promptly monitor environmental conditions in different cultivation zones through an intuitive user interface and make real-time adjustments based on actual measurements.

3.3.2. Environmental Control and User Interface

The environmental control system dynamically visualizes changes in indoor environmental parameters based on the growth stage requirements of black fungus, while also considering the spatial characteristics of the cultivation facility and local climate variations. An intuitive user interface presents the data in a clear and accessible format. Farmers can interpret the real-time sensor readings and manually adjust the operation of devices such as the air conditioning system, exhaust fans, or misting units to maintain optimal cultivation conditions.

Figure 17 shows the actual user interface, which displays the current sensor values and corresponding settings for each monitored zone. This facilitates precise environmental management and enables users to respond promptly to any changes in cultivation conditions.

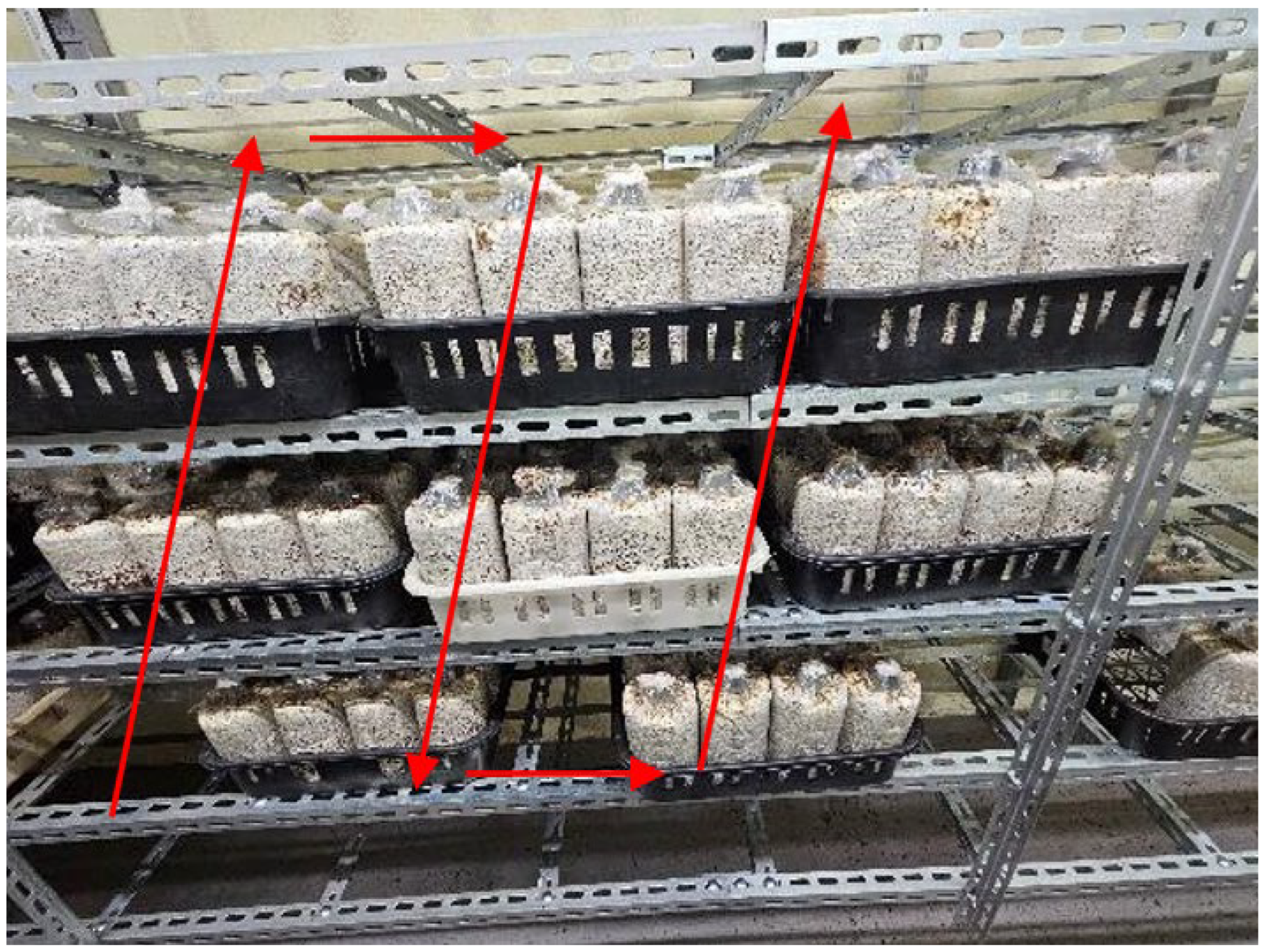

3.3.3. Autonomous Guided Vehicle Configuration

To reduce the labor burden associated with manual inspections and minimize the risk of external contamination in the black fungus cultivation chamber, this system introduces an AGV equipped with autonomous navigation and image monitoring capabilities. The AGV is designed to perform scheduled inspections of cultivation substrate bags, thereby avoiding the frequent entry and exit of personnel, which could increase the risk of mold contamination. Additionally, it reduces workers’ exposure to airborne spores, mitigating potential respiratory health issues.

As shown in Figure 18, the AGV is equipped with a vertically adjustable inspection platform that houses a network camera and a 5G communication module (5G dongle). This configuration enables automated navigation and image acquisition throughout the cultivation area based on predefined inspection tasks.

The AGV travels along a magnetic navigation track embedded in the floor, which runs approximately 11 m along the X-axis of the cultivation aisle, as illustrated in Figure 19. This magnetic-guided navigation approach offers advantages such as ease of installation, low cost, and high operational stability, making it particularly suitable for enclosed agricultural environments where space is limited and high reliability is required.

The camera module is mounted on a vertically adjustable platform with a lifting range of 0 to 75 cm, allowing it to perform vertical imaging tasks aligned with the varying heights of cultivation rack levels, as shown in Figure 20. The AGV operates according to a predefined scanning strategy, following a repetitive inspection pattern of “bottom-to-top, left-to-right, and then top-to-bottom” in its imaging and movement sequence. This ensures comprehensive coverage of all black fungus cultivation substrate bags and achieves complete, gap-free image acquisition. A top-mounted PTZ camera captures substrate-bag images, and onboard sensors stream data via the private 5G network to the edge server for real-time inference and alerts, as shown in Figure 21.
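One plausible reading of the “bottom-to-top, left-to-right, and then top-to-bottom” pattern is a serpentine sweep: the camera rises through the rack levels at one stop, the AGV advances to the next stop, and the camera then descends. The sketch below generates such a sequence. The function name, the number of stops, and the specific lift heights are hypothetical; only the 0 to 75 cm lifting range comes from the text.

```python
def scan_sequence(num_stops, levels_cm):
    """Return (stop_index, camera_height_cm) pairs in serpentine order.

    levels_cm lists the imaging heights from lowest to highest.
    The vertical direction alternates at each stop so the lift never
    has to return to the bottom before the next column is imaged.
    """
    sequence = []
    ascending = True
    for stop in range(num_stops):
        heights = levels_cm if ascending else list(reversed(levels_cm))
        for height in heights:
            sequence.append((stop, height))
        ascending = not ascending
    return sequence
```

With two stops and illustrative heights of 0, 25, 50, and 75 cm, the camera images the first column upward and the second column downward, visiting every (stop, height) pair exactly once.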

4. Results

This study was conducted at a smart farm located in Wufeng District, Taichung City, where a private 5G network was deployed to establish an integrated smart agriculture system with real-time communication capabilities. The system leverages the high-bandwidth and low-latency characteristics of the private 5G network in combination with the YOLOv8 deep learning architecture to enable real-time image recognition and object detection of black fungus cultivation substrate bags. Through real-time data transmission and data-driven decision-making control, the system significantly enhances production efficiency, quality stability, and overall operational sustainability in the cultivation process, demonstrating the practical potential of advanced communication technologies in agricultural applications.

4.1. Environmental Data Collection and Image Acquisition

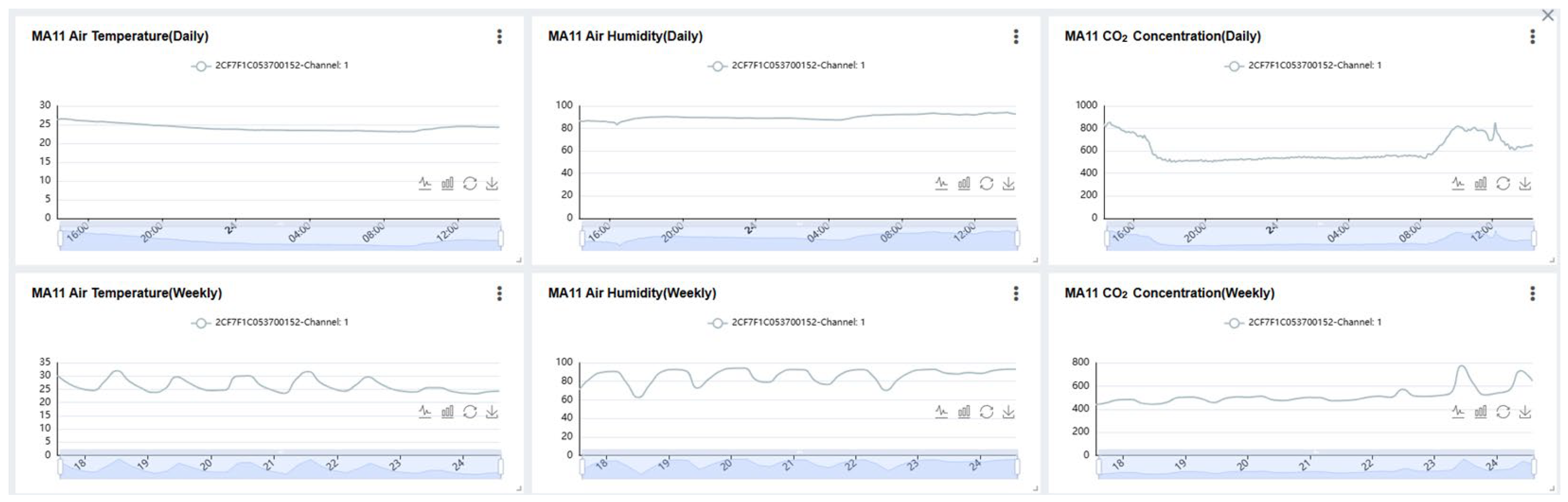

This study designed and deployed a multi-parameter environmental sensing system as the core architecture for automated smart cultivation control. The system features high-frequency data acquisition capabilities, with sensors automatically recording data every five minutes. Over a continuous two-week period, more than 4000 valid environmental data entries were collected. The monitored parameters include air temperature, relative humidity, and carbon dioxide concentration. The daily and weekly variations of these parameters are illustrated in Figure 22.
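As a quick plausibility check on the record count, the arithmetic below computes how many entries one logging stream would produce at a five-minute interval over 14 days. It assumes a single stream with no gaps, which the source does not state; the variable names are illustrative.

```python
SAMPLE_INTERVAL_MIN = 5   # logging interval stated in the text
DAYS = 14                 # "two-week period"

samples_per_day = 24 * 60 // SAMPLE_INTERVAL_MIN   # 288 entries per day
expected_samples = samples_per_day * DAYS          # 4032 entries in total
```

Under these assumptions the expected total of 4032 is consistent with the reported figure of more than 4000 valid entries.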

The collected sensor data are transmitted to a backend database, forming the foundation of an intelligent cultivation control system with real-time feedback and automatic regulation capabilities. Through a data-driven decision-making mechanism, the system dynamically adjusts key environmental factors—such as temperature, humidity, and carbon dioxide concentration—to ensure optimal conditions for black fungus mycelial growth while mitigating the effects of adverse environmental factors.

The unmanned autonomous platform developed in this study is capable of conducting scheduled patrols within the black fungus greenhouse cultivation area to perform image acquisition tasks. The platform follows a predefined magnetic track for navigation, enabling stable traversal along greenhouse aisles and achieving full coverage monitoring of the cultivation zones.

For image capture, the platform is equipped with a Sricam Italia SP008 IP camera, which supports High Efficiency Video Coding (HEVC, H.265). Compared to the traditional H.264 format, HEVC offers higher compression efficiency and reduced network bandwidth consumption. The camera is also equipped with infrared night vision and digital zoom capabilities, allowing for stable and clear imaging under low-light conditions—an essential feature for enabling accurate inference by deep learning models. Each patrol operation captures approximately 60 to 80 images, which are transmitted via the private 5G network to a Multi-access Edge Computing node. There, the YOLOv8 model performs real-time inference to support intelligent cultivation monitoring and anomaly detection.

4.2. Graphical Human–Machine Interface Design and Cultivation Data Visualization Interface

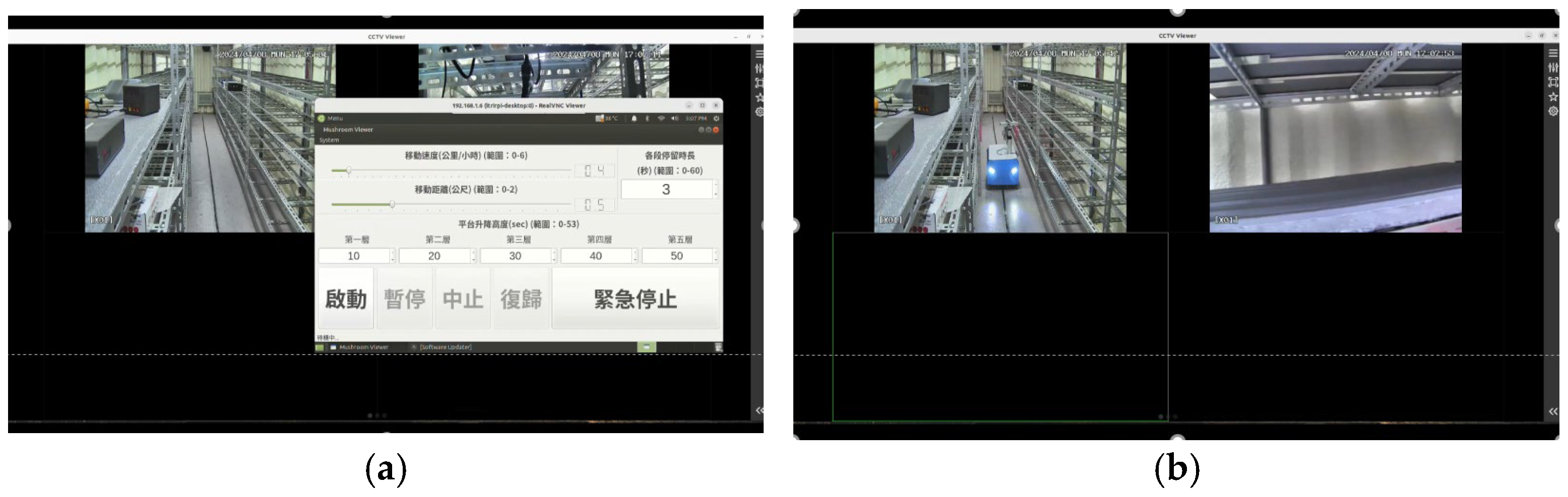

To enhance remote operation efficiency and control precision, a graphical Human–Machine Interface (HMI) was developed, as shown in Figure 23. Through this interface, users can configure platform movement parameters, including movement speed (in kilometers per hour), the number of movement steps per segment, and dwell time (in seconds). The interface also allows for the configuration of up to five stopping points to achieve precise navigation control.

A range of control commands is available within the interface, including “Start,” “Pause,” “Stop,” “Home,” and “Emergency Stop,” enabling users to maintain full control over the platform’s operational status. These functions also provide essential safety mechanisms to ensure operational stability and security within complex greenhouse environments.

Figure 23 presents both the real-time operation scene and the control interface of the autonomous platform.

To enhance the system’s operational efficiency and real-time information accessibility in practical applications, this study further integrates the environmental sensing module with the deep learning inference module, resulting in the development of an intuitive and highly integrated user interface platform. This platform is designed to assist farmers in efficiently conducting environmental monitoring and management tasks.

From the perspective of environmental monitoring, the system interface displays real-time data from various sensor nodes located within the greenhouse (e.g., front-left, front-right, rear-left, rear-right). Key environmental indicators—such as relative humidity, temperature, and carbon dioxide concentration—are presented in an easily interpretable format.

The system also supports additional functionalities, including time range selection, data queries, and historical data review. As shown in Figure 24, users can visualize environmental changes over a specified date and time range, providing a reliable basis for subsequent environmental control decisions.

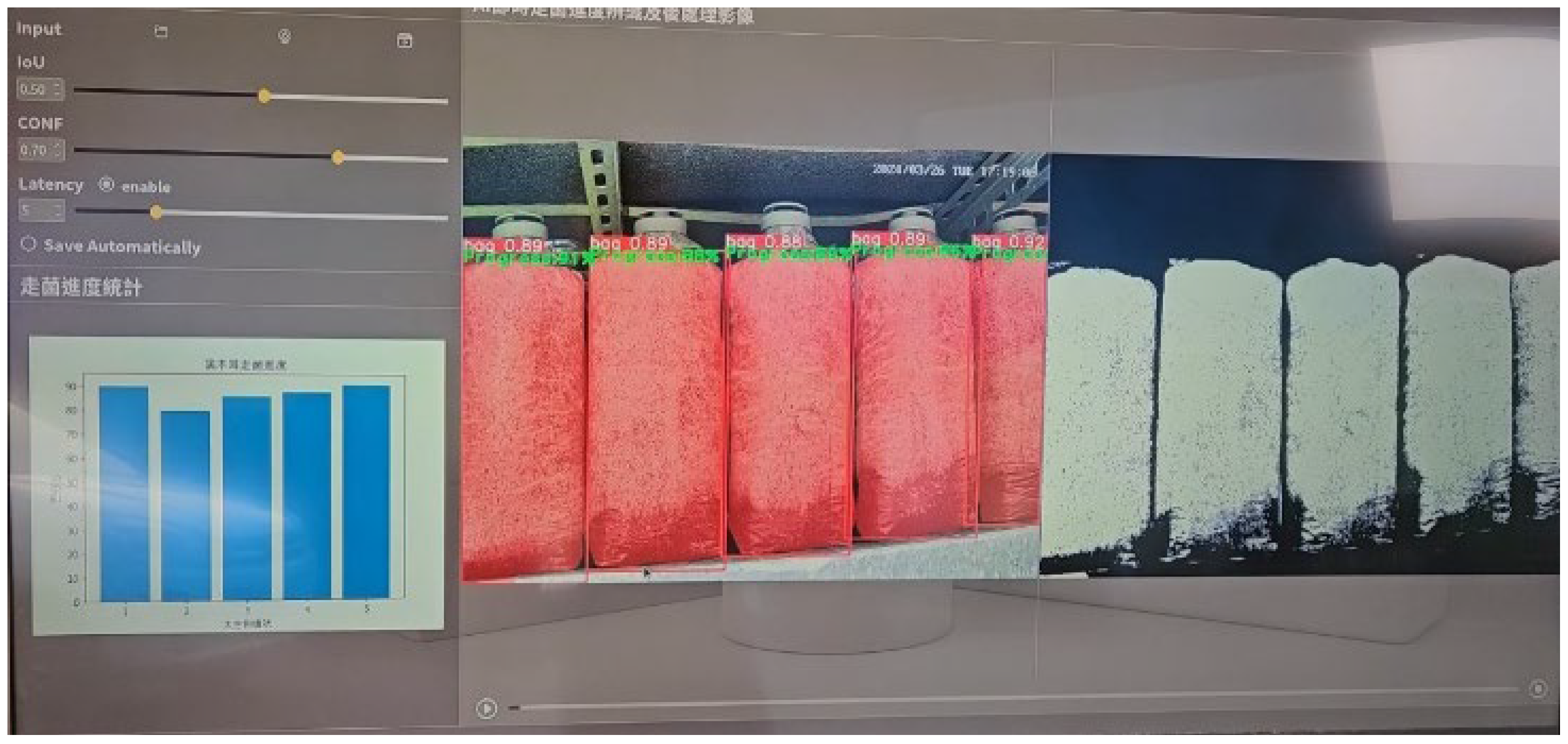

In terms of object detection and deep learning inference, the image recognition interface developed in this system enables real-time display of inference results for black fungus cultivation substrate bags, as shown in Figure 25. The interface highlights object bounding boxes along with their corresponding confidence scores and provides adjustable parameters, including the Intersection over Union (IoU) threshold and confidence threshold. Users can dynamically configure these output conditions based on specific application needs to achieve optimal recognition accuracy.
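To illustrate how the two adjustable thresholds typically interact, the sketch below filters detections by confidence and then applies greedy non-maximum suppression using IoU. It assumes the interface's IoU threshold controls suppression of overlapping boxes, as is common in YOLO-style inference; the source does not confirm this, and the function names and box format `(x1, y1, x2, y2)` are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, conf_threshold, iou_threshold):
    """Drop low-confidence boxes, then greedily suppress overlapping ones.

    detections is a list of (box, score) pairs.
    """
    candidates = sorted(
        (d for d in detections if d[1] >= conf_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, score in candidates:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept
```

Raising the confidence threshold removes uncertain boxes, while lowering the IoU threshold merges more overlapping boxes into a single detection per substrate bag.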

In addition, the interface integrates statistical information on mycelial coverage rates. A bar chart displays the coverage rates for multiple substrate bags, assisting users in evaluating growth conditions from a macro perspective and identifying potential anomalies.

Overall, the interface combines real-time inference visualization, flexible detection parameter settings, and integrated graphical statistics. These features enhance the practical feasibility and application value of deploying deep learning models in agricultural environments.

4.3. Performance Evaluation of the Deep Learning Model

We collected 2830 greenhouse images as the base dataset and held out an external test set of 318 images collected in subsequent batches to ensure temporal independence. The base set was stratified by disease presence and cultivation batch into 80% training (2264) and 20% validation (566). Data augmentation was applied only to the training set, expanding it to 42,540 labeled images via horizontal/vertical flipping, random cropping, and contrast adjustment. The validation and test sets were not augmented (only resized/normalized). The resulting split is summarized in Table 3. The model was trained for more than 10,000 epochs with early stopping and learning rate decay to improve convergence efficiency and recognition accuracy.
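The split sizes can be checked with simple arithmetic, shown below as a short sketch. The variable names are illustrative, and the augmentation factor is merely the ratio of the reported counts, not a parameter stated by the authors.

```python
base_images = 2830
train_images = base_images * 80 // 100        # 80% of the base set
val_images = base_images - train_images       # remaining 20%

augmented_train_images = 42540
# Average number of augmented images per original training image.
augmentation_factor = augmented_train_images / train_images
```

The integer arithmetic reproduces the reported 2264/566 split, and the ratio shows that augmentation expanded the training set by a factor of just under 19.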

To verify performance under practical deployment, we evaluated the model on the 318 externally collected test images. Predictions with confidence ≥ 0.90 were treated as valid and compared against manual annotations. The model correctly identified 298 samples (accuracy = 93.7%). A two-sided 95% confidence interval for accuracy was 90.5–95.9% (298/318). The mean prediction confidence was 0.96.
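The reported interval can be reproduced with a Wilson score interval for a binomial proportion, sketched below. Whether the authors used the Wilson method is an assumption; it is shown here because it yields bounds matching the reported 90.5% and 95.9% for 298 correct out of 318.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Two-sided Wilson score interval for a binomial proportion.

    z = 1.96 corresponds to a 95% confidence level.
    """
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half_width, center + half_width


lower, upper = wilson_interval(298, 318)
```

Unlike the simple normal approximation, the Wilson interval is not symmetric around the observed accuracy, which is why the bounds sit slightly lower than 93.7% ± a fixed margin.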

Figure 26 shows representative inference results.

The YOLOv8 model demonstrated stable and outstanding recognition performance in the real-world application environment, particularly in image samples with uniform lighting and clearly defined mycelial growth contours, where prediction accuracy was especially high. A summary of the model’s inference performance evaluation metrics is presented in Table 4 and Table 5.

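Using the confusion matrix in Table 4, we derive the model performance metrics (precision, recall, and accuracy) according to standard definitions [41]. The formulas are as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.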

The quantitative performance evaluation shows that the YOLOv8 model achieved an accuracy of 0.9371, a recall of 0.9728, and a precision of 0.9597, demonstrating its ability to detect positive cases with high sensitivity while maintaining reliable precision in distinguishing non-target samples.
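As a worked check of the standard definitions of precision, recall, and accuracy, the sketch below uses one set of confusion-matrix counts that reproduces the reported values on 318 test images. These counts are inferred from the reported metrics for illustration and are not copied from Table 4, so they should be treated as an assumption.

```python
# Assumed counts, inferred to be consistent with the reported metrics.
TP, FP, FN, TN = 286, 12, 8, 12


def precision(tp, fp):
    """Share of predicted positives that are truly positive."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Share of actual positives that the model detects."""
    return tp / (tp + fn)


def accuracy(tp, tn, fp, fn):
    """Share of all samples classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


metrics = {
    "precision": round(precision(TP, FP), 4),
    "recall": round(recall(TP, FN), 4),
    "accuracy": round(accuracy(TP, TN, FP, FN), 4),
}
```

With these counts the correctly classified samples total 298 of 318, which ties the accuracy figure here to the 93.7% reported for the external test set.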

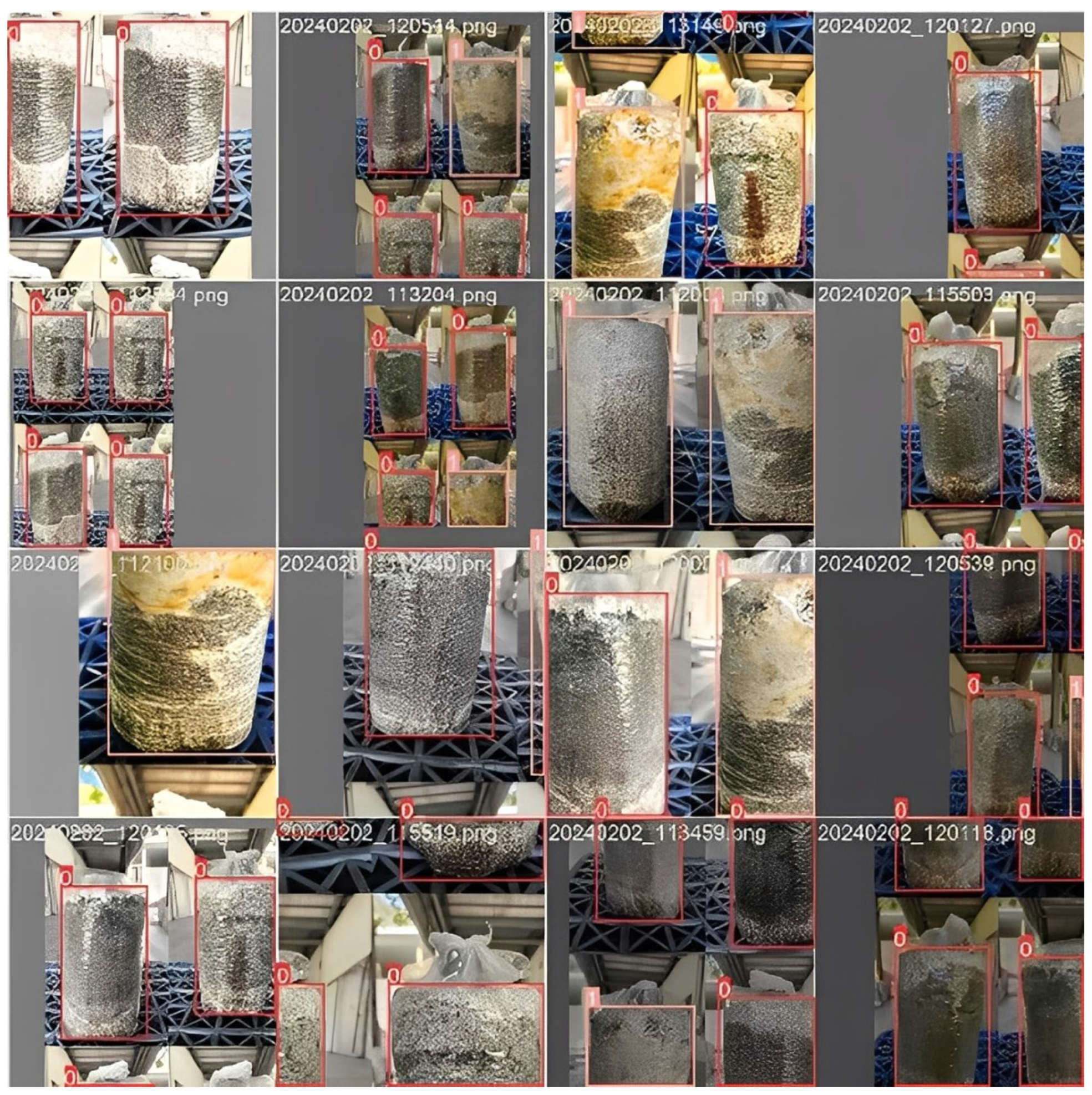

This study implemented feature data augmentation targeting abnormal mycelial patterns and simultaneously optimized the model architecture. Specifically, for disease-related features such as discoloration, surface pitting, and yellowing of the mycelium caused by Neurospora contamination, the research team collected and manually annotated approximately 40 real-world disease image samples, which were used as initial training data for the model. An example of the labeled training dataset is shown in Figure 27.

To further enhance the model’s ability to detect diverse disease manifestations, the YOLOv8 network architecture was modified. The originally employed Cross Stage Partial (C3) module was entirely replaced with the Cross Stage Partial Fusion (C2f) module. This module applies channel splitting and deep feature fusion mechanisms, enabling more effective extraction of fine-grained and high-level structural features within images. As a result, the model’s sensitivity and accuracy in identifying abnormal mycelial patterns—such as color variations and morphological irregularities—are significantly improved. This architectural optimization enhances the model’s ability to discriminate disease symptoms and reduces the risk of misclassification.

During the model training phase, the manually annotated disease image dataset described above was used for initial training. A total of 100 training epochs were conducted with hyperparameter tuning. The training results indicated that the model achieved an average precision of approximately 90% in the disease recognition task.

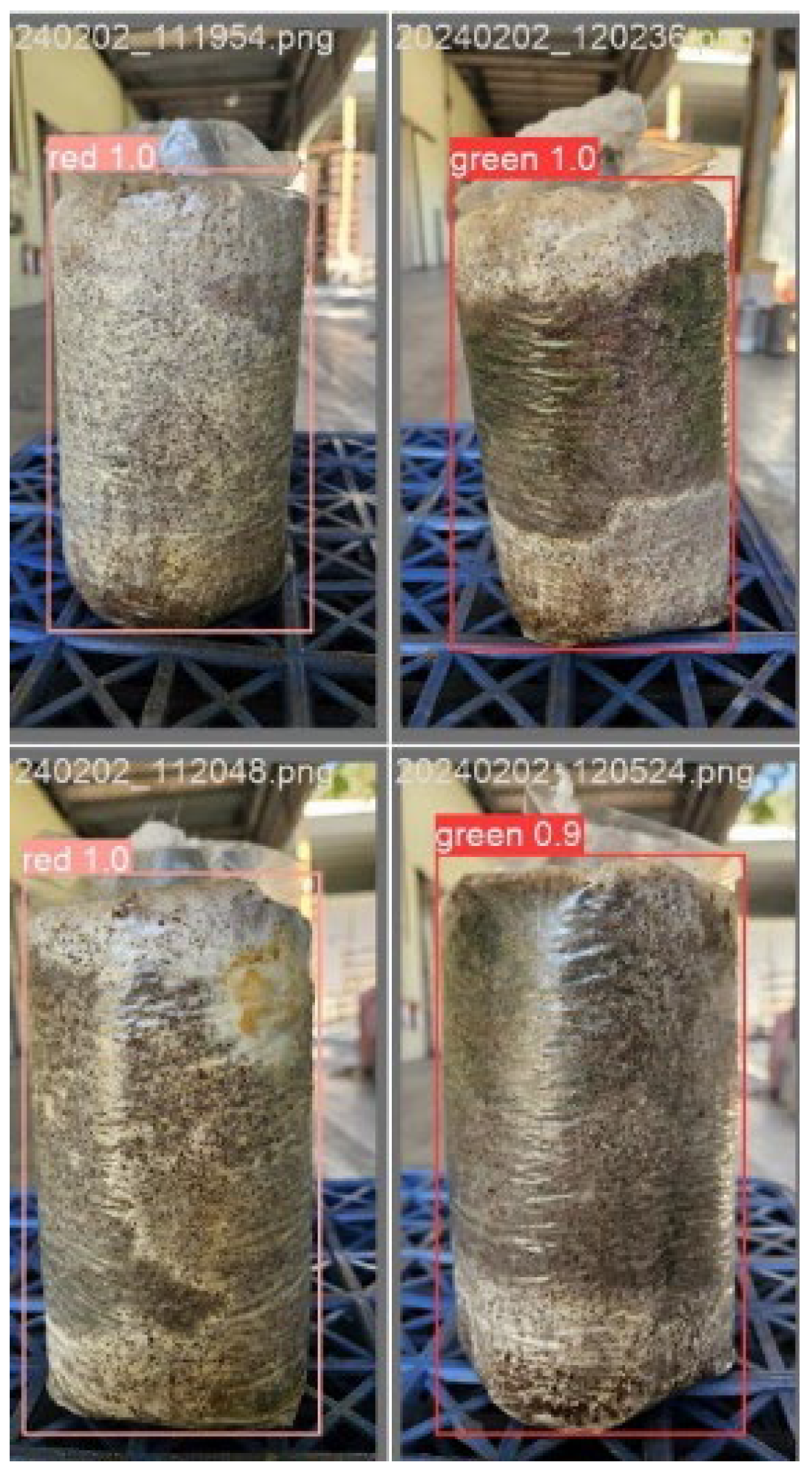

In the inference stage, the model was able to effectively identify suspicious diseased regions on the surface of the cultivation substrate bags and present the recognition results in a visualized format. As shown in Figure 28, the system uses red bounding boxes to indicate potential disease instances, while green bounding boxes represent healthy mycelial growth. Corresponding confidence scores are also displayed to support the inference results.

This visual interface not only enhances the interpretability of the recognition output but also provides a real-time risk alert mechanism, enabling early intervention and on-site management in the cultivation process.

Observations from selected test results indicate that the model demonstrates strong recognition capabilities for symptoms such as yellowing and localized atrophy of mycelium, highlighting the system’s potential for early-stage disease detection and intelligent cultivation management.

To validate the feasibility and effectiveness of deploying the proposed model in a real-world system, this study further references several related works that apply YOLOv8 in agricultural contexts for comparative analysis and supporting evidence. Zhou et al. [42] applied YOLOv8 to crop weed detection tasks and incorporated an enhanced C2f module along with extended vision convolutional networks. Their results showed that the model was capable of reliably identifying various types of weeds even under complex field conditions, demonstrating YOLOv8’s strong adaptability to environmental variability and its high recognition accuracy.

In another study, Sapkota et al. [43] deployed YOLOv8 for apple fruit and disease detection in orchard environments, using mobile devices and edge computing platforms. Their experimental results revealed that YOLOv8 outperformed previous versions in terms of response time and mean Average Precision (mAP) on embedded devices, confirming its suitability for resource-constrained edge computing scenarios.

Combining the findings from these prior studies with the experimental observations from this research, it can be reasonably concluded that the deep learning model developed herein possesses strong potential for on-site deployment. Furthermore, it can be effectively integrated with private 5G networks and mobile platforms to enable real-time cultivation monitoring and disease early warning within smart agriculture applications.

4.4. Performance Analysis of the Private 5G Network

This study adopts the Saviah 5GC as the 5G core network architecture and deploys two ASKEY SCE2200 5G Sub-6 small cells as gNB nodes to provide full coverage of the black fungus greenhouse cultivation area. To evaluate the practicality and stability of the constructed private 5G network in a smart agriculture context, real-world field tests were conducted to assess its data transmission performance, with results compared against theoretical maximum values.

The field measurements yielded an average downlink throughput of 645.2 Mbps and an average uplink throughput of 147.5 Mbps. The average ping latency was 19 ms, and the average jitter was 6.3 ms. Relative to the theoretical values in Table 6, the downlink corresponds to 80.7% of the 800 Mbps benchmark, whereas the uplink corresponds to 73.8% of the 200 Mbps benchmark. The uplink falling short of the roughly 80% level reached by the downlink is consistent with (i) a downlink-favored TDD slot configuration during operation, (ii) uplink MCS adaptation and HARQ retransmissions under indoor occlusion and interference, and (iii) UE power/spatial-stream constraints that typically cap UL spectral efficiency relative to DL. The latency is slightly above the nominal < 15 ms reference because our measurement captures the end-to-end path, while the jitter remains well within target.
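The benchmark ratios quoted above follow directly from the measured and theoretical throughputs, as the short sketch below shows. The dictionary names are illustrative; the numbers are those given in the text.

```python
measured_mbps = {"downlink": 645.2, "uplink": 147.5}
benchmark_mbps = {"downlink": 800.0, "uplink": 200.0}

# Measured throughput as a percentage of the theoretical benchmark.
ratio_percent = {
    link: 100.0 * measured_mbps[link] / benchmark_mbps[link]
    for link in measured_mbps
}
# Gap between the two directions, in percentage points.
uplink_gap_points = ratio_percent["downlink"] - ratio_percent["uplink"]
```

The computed ratios are about 80.65% for the downlink and 73.75% for the uplink, which round to the figures reported in the text and leave a gap of roughly seven percentage points between the two directions.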

Overall, the deployed private 5G network provides stable, low-jitter connectivity adequate for real-time object-recognition feedback, synchronized environmental-sensor display, and remote AGV control in the greenhouse.

A comparative summary of the theoretical Sub-6 GHz performance (based on the 3GPP Release 16 standards and the ASKEY SCE2200 specifications), along with the measured field test results, is presented in Table 6.

Overall, the private 5G network architecture implemented in this system not only achieves high-bandwidth and low-latency communication but also supports real-time transmission of deep learning inference results and environmental sensing data. This provides a robust and scalable technological foundation for the implementation of intelligent cultivation applications in agricultural environments.

4.5. Production Optimization Process

To address the practical requirements of black fungus cultivation environments, this study developed a production optimization system that integrates environmental parameter control, deep learning-based image recognition, and automated inspection modules. The goal is to establish an intelligent cultivation process characterized by real-time responsiveness, autonomy, and decision-support capabilities.

As illustrated in Figure 29, the overall system workflow comprises three core modules: (1) environmental parameter analysis for climate control, (2) evaluation of mycelial growth conditions, and (3) a standardized decision-making mechanism for production management.

First, the system automatically adjusts key environmental parameters—such as temperature and carbon dioxide concentration—based on the specific growth stage of black fungus, while continuously analyzing mycelial coverage and monitoring the growth rate. Leveraging historical trial data and statistical modeling, the system establishes an economic benefit evaluation model for black fungus cultivation, which serves as the basis for optimizing future environmental control strategies.

Second, a deep learning-based object detection module is integrated into the system to perform real-time analysis of mycelial growth through image processing. This module enables automated recognition of growth completeness and annotation of potential disease spots or anomalies, thereby significantly reducing the labor burden and misjudgment associated with manual inspection. During experimentation, the object detection model achieved an average accuracy of 93.7% and a mean confidence score of 0.96, indicating strong stability and high inference reliability.

Third, the system deploys an AGV to perform automated inspection and data collection across large cultivation areas. The platform is equipped with sensors and an image acquisition module to periodically collect mycelial growth information and upload it to a backend database for real-time object detection on the cultivation substrate bags.

In this study, the cultivation success rate (CSR) is defined at the bag level as the proportion of substrate bags that (i) complete the planned cultivation cycle and reach harvest readiness, and (ii) pass the harvest quality check without contamination (no disease/contamination alerts by the detection model at harvest, confirmed by a human inspector), as formulated in Equation (7).

$$\text{CSR} = \frac{N_{\text{success}}}{N_{\text{total}}} \times 100\% \tag{7}$$

Here $N_{\text{success}}$ is the number of bags satisfying both conditions and $N_{\text{total}}$ is the total number of substrate bags under evaluation. Bags discarded due to contamination, abnormal/stalled growth, or mechanical damage are counted as unsuccessful.
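The bag-level definition can be sketched as a small function that counts a bag as successful only when both conditions hold. The function name and the dictionary keys are hypothetical and serve only to make the two criteria explicit.

```python
def cultivation_success_rate(bags):
    """Percentage of bags that reached harvest AND passed the quality check.

    bags is a list of dicts with boolean fields 'reached_harvest' and
    'passed_quality_check'. Discarded bags simply have one or both set to False.
    """
    if not bags:
        raise ValueError("no bags to evaluate")
    successful = sum(
        1 for bag in bags
        if bag["reached_harvest"] and bag["passed_quality_check"]
    )
    return 100.0 * successful / len(bags)
```

A bag that reaches harvest but fails the contamination check counts as unsuccessful, so the rate reflects usable output rather than mere survival to the end of the cycle.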

Finally, to validate the system’s practical performance, on-site harvesting trials were conducted across five batches of black fungus in a greenhouse setting, with cultivation cycles ranging from 30 to 60 days. All batches were managed in accordance with the system’s recommended environmental parameters and inspection strategies. The results showed that the maximum yield per substrate bag reached 400 g, with an average cultivation success rate of 80%. Overall, the system demonstrated strong production stability and control efficiency in practical applications, indicating high potential for field deployment and commercial scalability.

5. Conclusions

This study directly addresses two longstanding bottlenecks identified in the Introduction, namely labor-intensive, subjective visual inspection and delayed discovery of diseases in bagged black fungus cultivation, by integrating a private 5G network with deep learning-based perception in a real farm setting in Taiwan. A high-reliability private 5G network was deployed, achieving an uplink throughput of 147.5 Mbps and a downlink throughput of 645.2 Mbps, providing the low-latency, high-throughput backbone required for real-time sensing, inference, and feedback across distributed IoT devices.

The proposed system couples an autonomous guided vehicle (AGV), multi-parameter environmental sensors, and a YOLOv8-based vision pipeline to replace intermittent, manual rounds with continuous, automatic image acquisition and risk screening. Over two weeks, environmental data logged every five minutes yielded more than 4000 valid records, enabling precise control of temperature, humidity, and CO2. Across five harvest cycles, the system maintained stable field operation, achieved an average cultivation success rate of 80%, and reached a maximum yield of 400 g per substrate bag. Together, these results show that the approach reduces routine manual intervention and shortens the time-to-alert for potential disease conditions via automated coverage estimation and anomaly flagging.

The key contributions of this study are threefold. (1) We provide a practical deployment blueprint for private 5G in agriculture, demonstrating reliability, low latency, and scalability that outperform conventional connectivity for always-on monitoring. (2) We show how edge AI converts labor-intensive inspection into automated, reproducible assessments of mycelial growth with early-warning capability for disease/contamination, thereby improving operational efficiency and crop quality. (3) To our knowledge, this is among the first 5G-enabled smart agriculture implementations in Taiwan, offering a replicable model for modernizing specialty-crop production.

Future work will extend the AI stack to pathogen-specific detection, growth-pattern prediction, and yield optimization; evaluate scalability in larger houses and diverse crops; and quantify end-to-end labor savings and disease-related outcomes over longer seasons to further validate impact.