Effectiveness of Modern Models Belonging to the YOLO and Vision Transformer Architectures in Dangerous Items Detection

Abstract

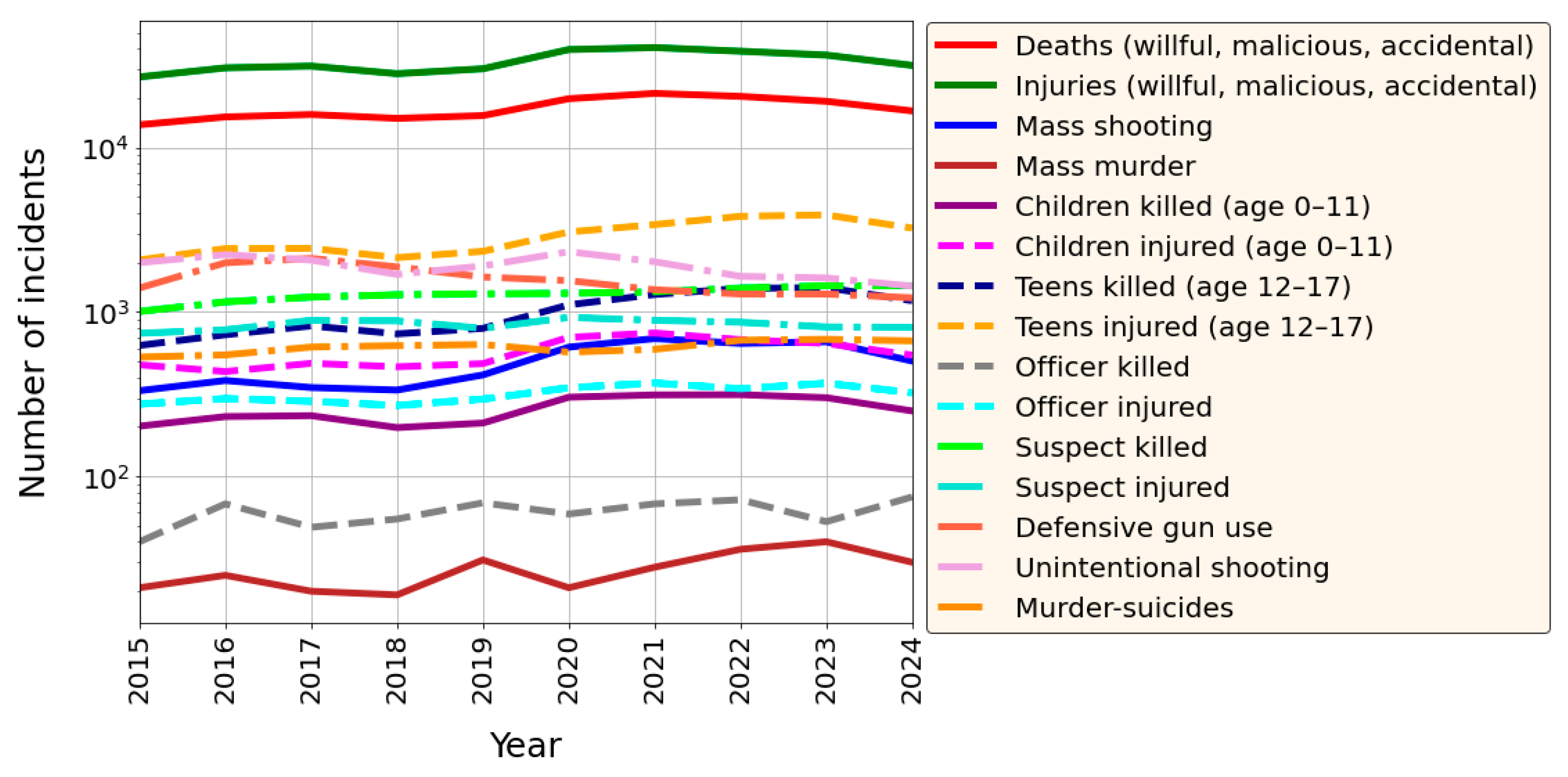

1. Introduction

- Viewpoint variation (the same item can have a different orientation);

- Scale and illumination variation (items can differ in apparent size, and illumination can vary at the pixel level);

- Intraclass variation (there can be several types of items with varying appearance within a class);

- Occlusion (only a small portion of the item of interest may be visible);

- Background clutter (items can blend into their environment, which will make them hard to identify).

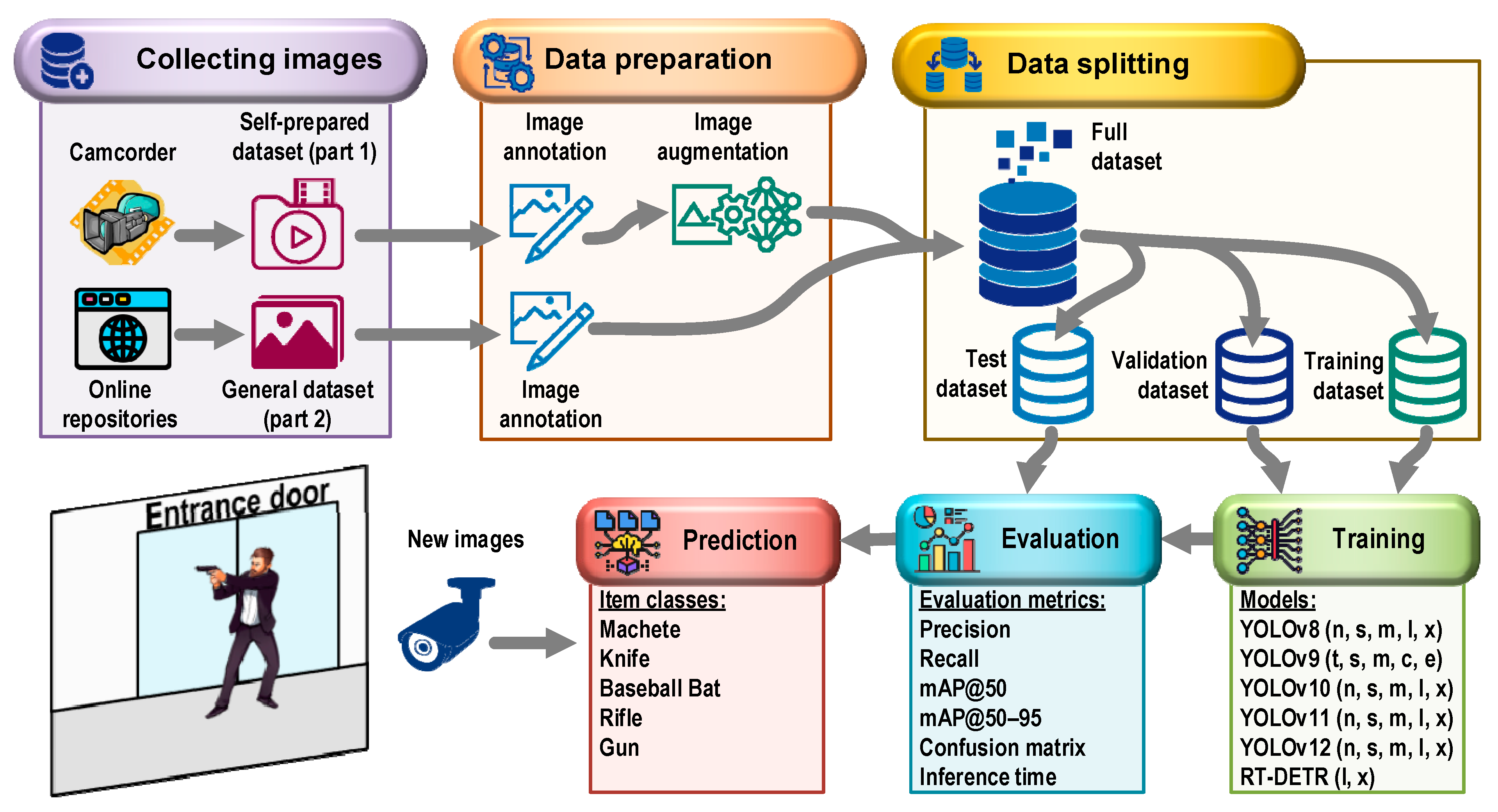

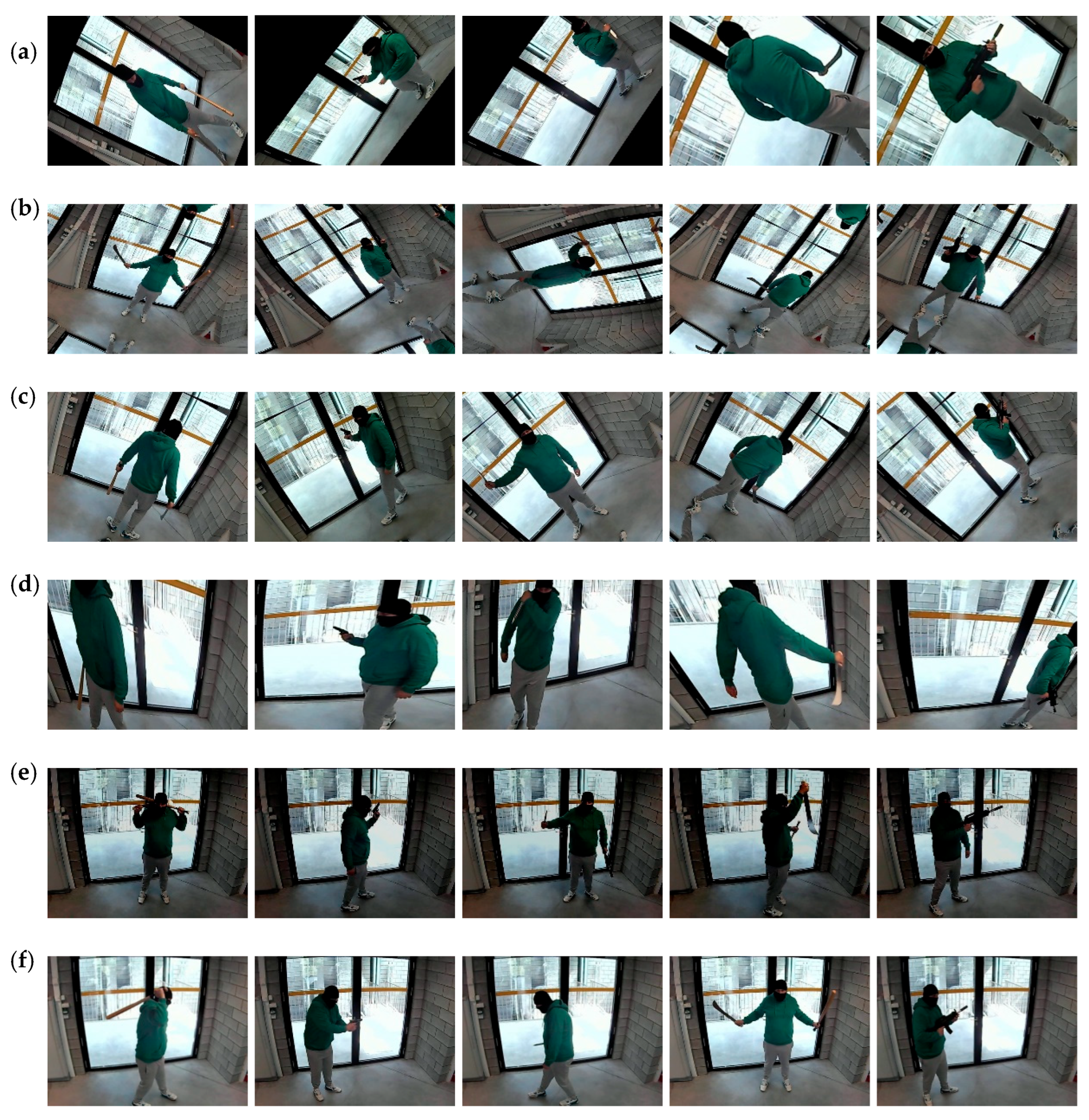

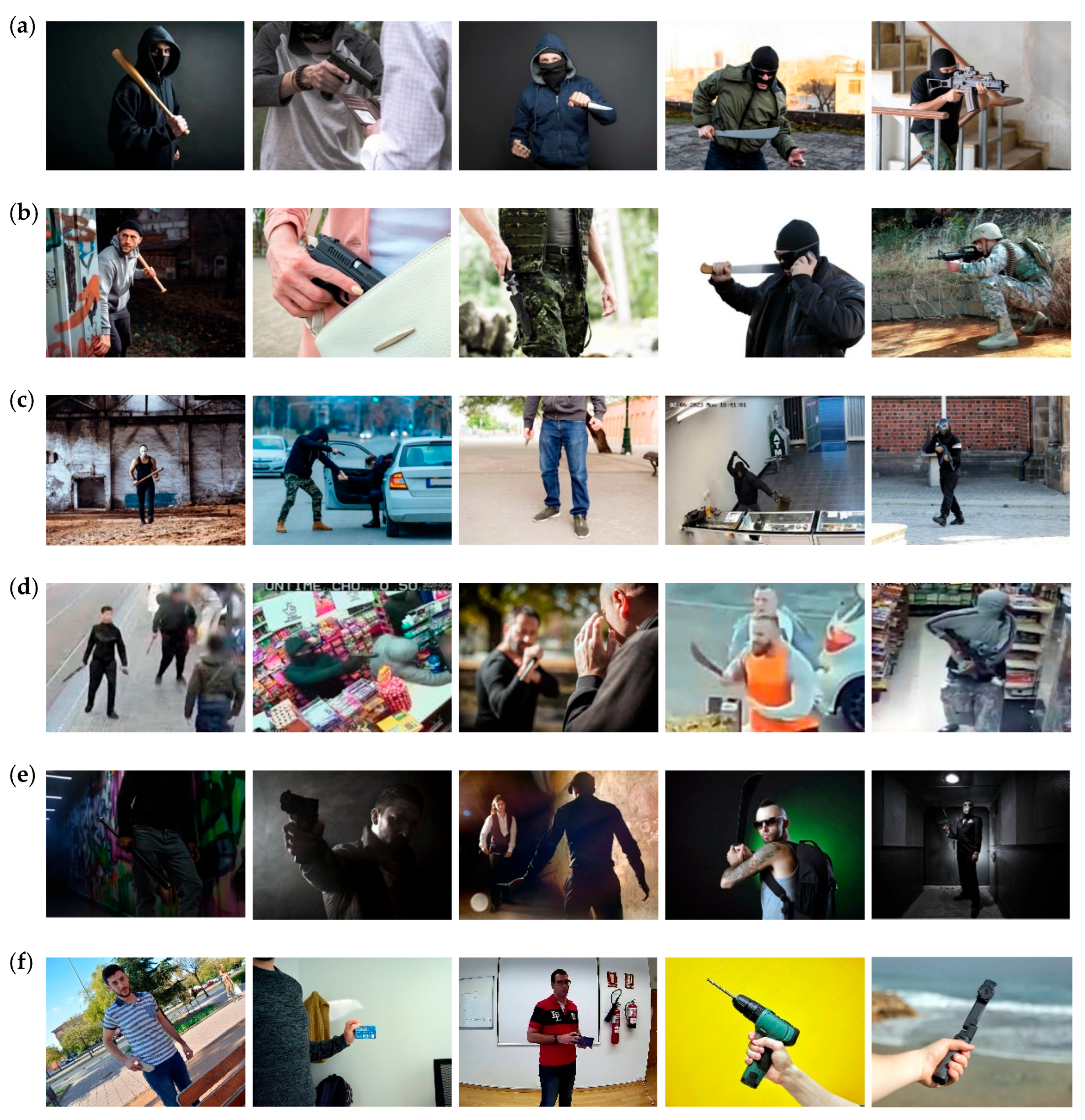

- Creating a new dataset, made available on the Zenodo platform, for detecting five types of dangerous items. The dataset contains several categories of images: clearly visible items, partially covered items, small items, images with poor sharpness, images with poor illumination, and background images. It reflects the actual conditions for detecting dangerous items better than previous datasets, so it allows a more realistic assessment of the performance of object detectors;

- Training state-of-the-art object detection models belonging to the YOLO and ViT architectures (27 models in total) and comparing their effectiveness. To the best of the author’s knowledge, no published research has applied these architectures to the detection of dangerous items on this scale. This breadth makes the results more reliable for assessing the potential of the constructed models and the feasibility of deploying them in public monitoring systems;

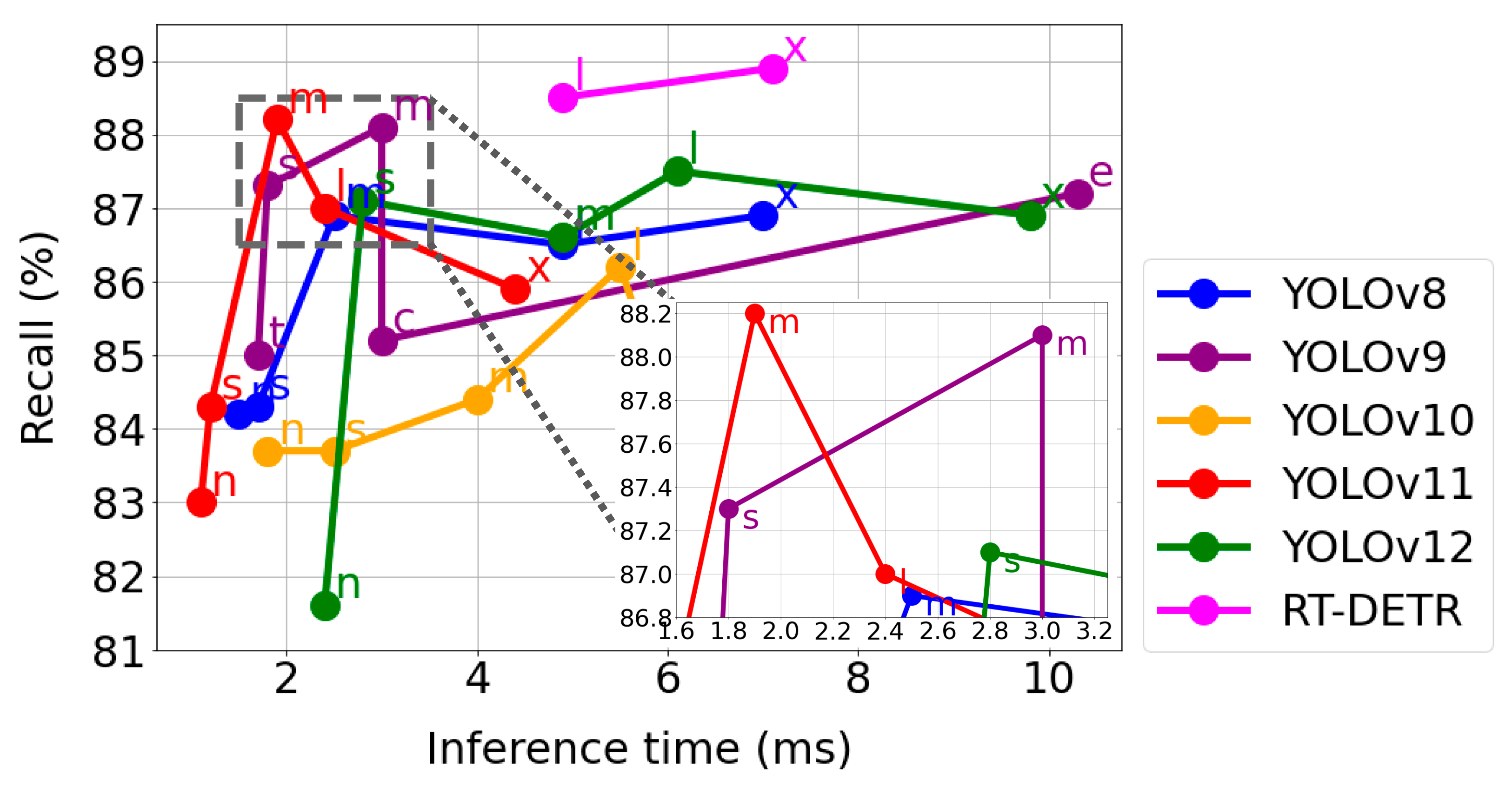

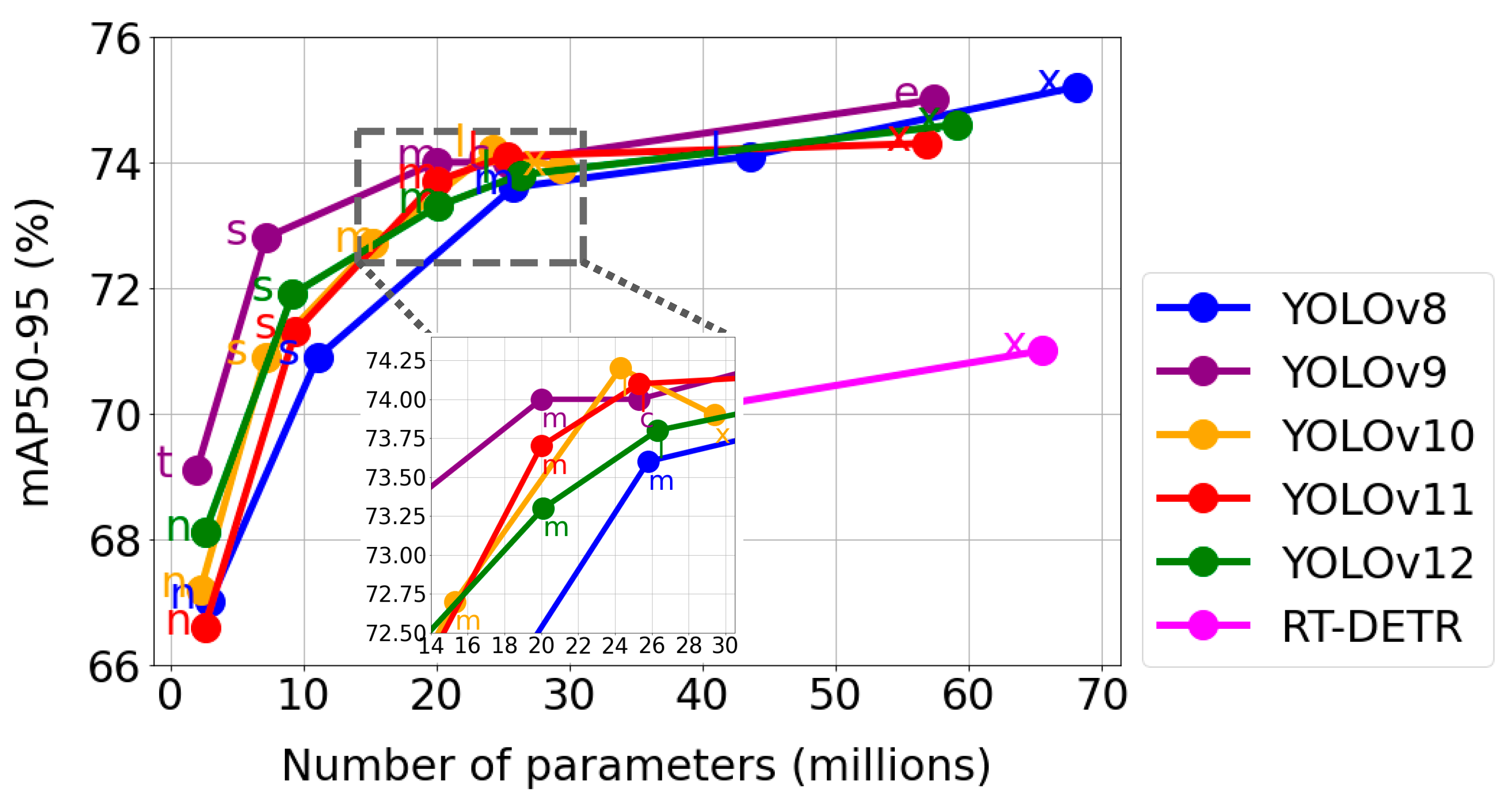

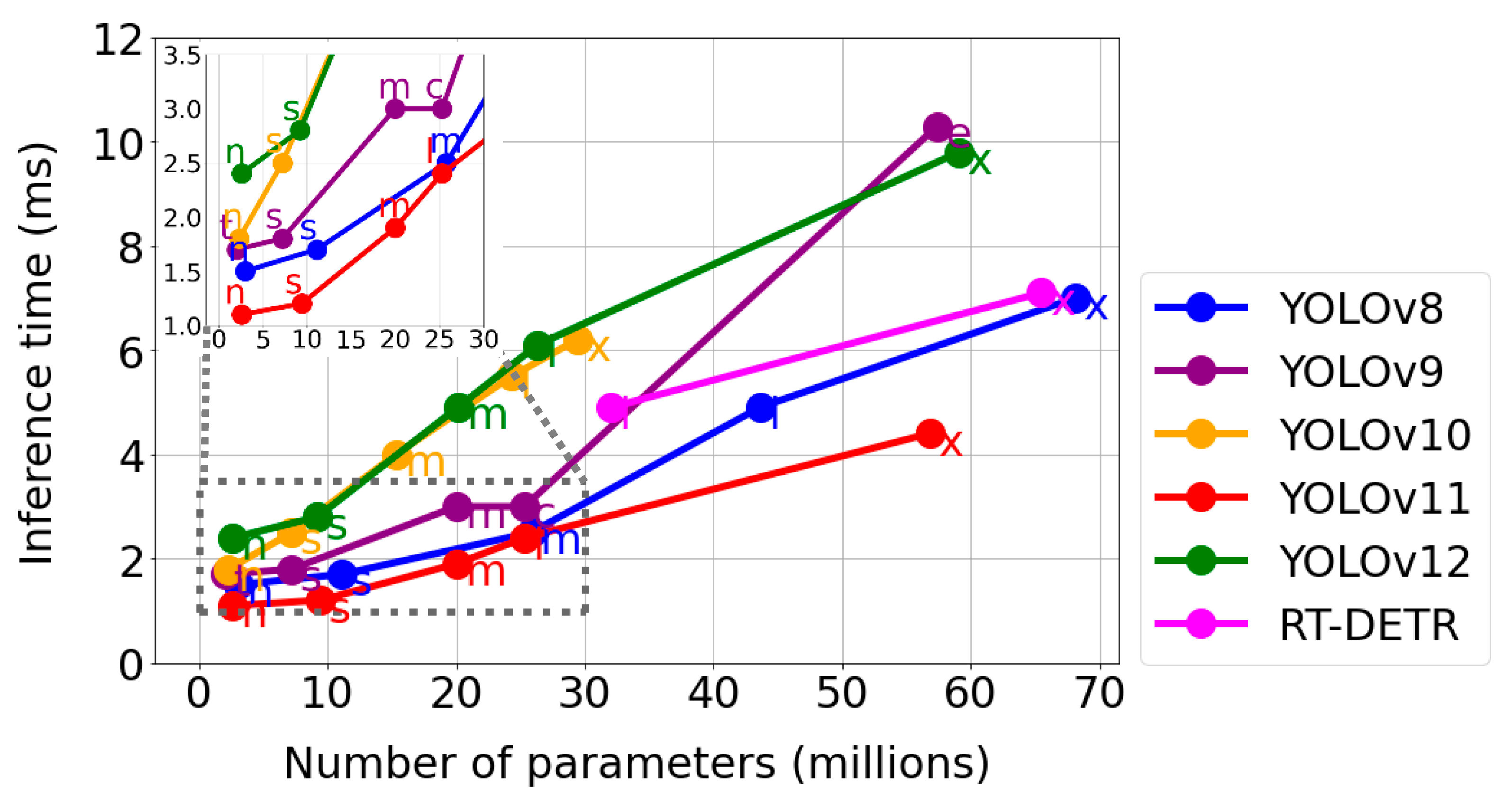

- The presentation of an original approach to analyzing the results of dangerous object detectors, which takes into account the relationships between the values of relevant parameter pairs: recall versus inference time, and mean average precision versus model complexity;

- Recommending, on the basis of this analysis, the best model for detecting dangerous items in public monitoring systems, and testing its effectiveness in real-world conditions.

2. Materials and Methods

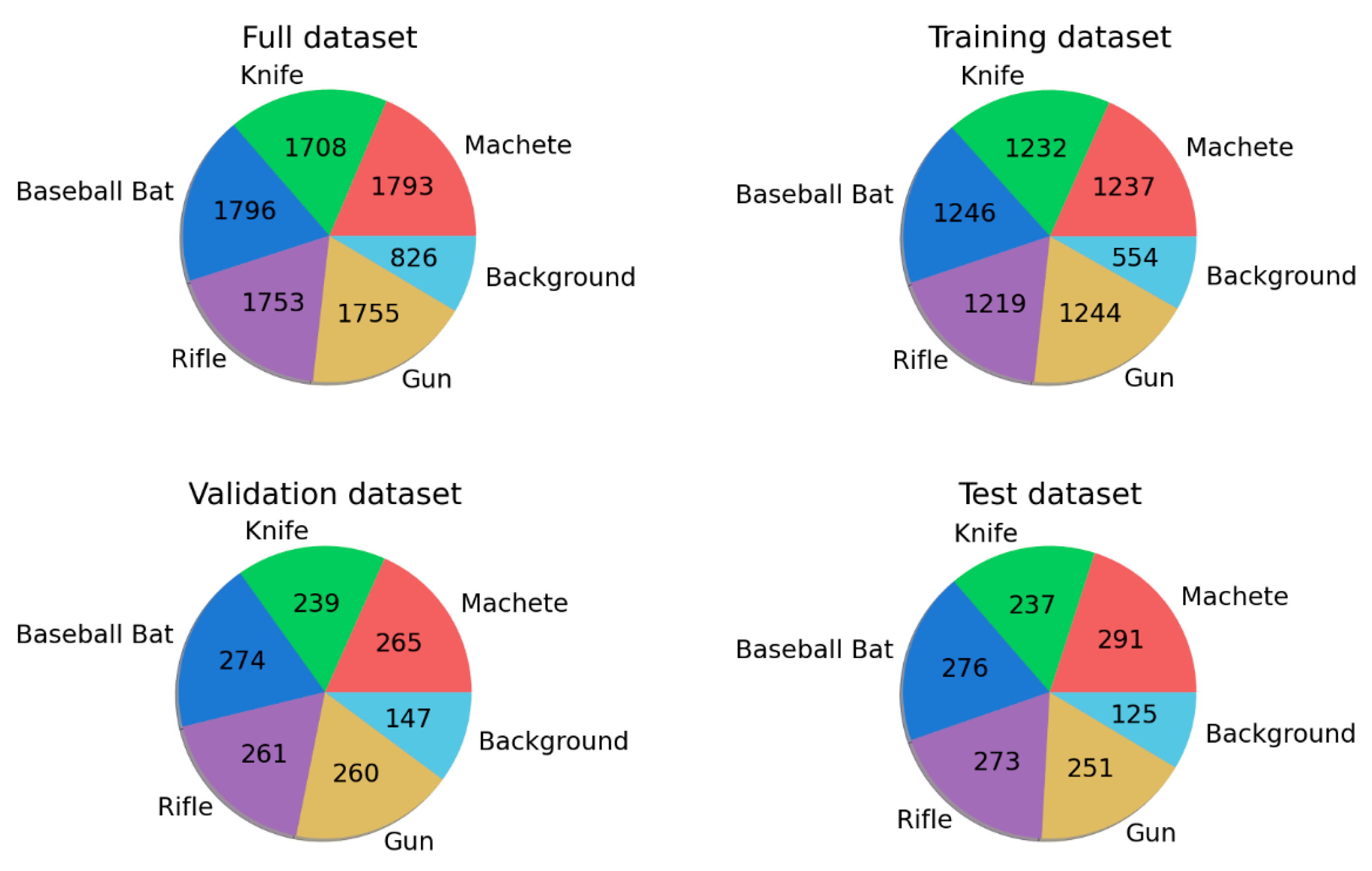

2.1. Research Material

- Affine—augmentation to apply affine transformations to images;

- SafeRotate—rotate the input inside the frame by an angle selected randomly from the uniform distribution;

- ShiftScaleRotate—randomly apply affine transforms: translate, scale, and rotate the input;

- Perspective—apply random four-point perspective transformation to the input.

- RandomBrightnessContrast—randomly changes the brightness and contrast of the input image;

- GaussianBlur—apply Gaussian blur to the input image using a randomly sized kernel.
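The spatial-level transforms listed above move objects within the frame, so bounding-box labels have to be transformed together with the image. The following is a minimal sketch of that idea for a pure rotation about the image centre. It is written in plain Python for illustration and is not the Albumentations implementation; the function name `rotate_bbox` and the `(x_min, y_min, x_max, y_max)` pixel box format are assumptions.

```python
import math


def rotate_bbox(box, angle_deg, width, height):
    """Rotate an axis-aligned box about the image centre.

    Returns the axis-aligned box enclosing the four rotated corners,
    clipped to the image. Hypothetical helper, for illustration only.
    """
    x_min, y_min, x_max, y_max = box
    cx, cy = width / 2.0, height / 2.0
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    xs, ys = [], []
    for x, y in [(x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max)]:
        dx, dy = x - cx, y - cy
        xs.append(cx + dx * cos_t - dy * sin_t)
        ys.append(cy + dx * sin_t + dy * cos_t)

    def clip(value, upper):
        return min(max(value, 0.0), upper)

    return (clip(min(xs), width), clip(min(ys), height),
            clip(max(xs), width), clip(max(ys), height))


# A 200 x 60 box becomes a 60 x 200 box after a quarter turn.
print(rotate_bbox((100, 200, 300, 260), 90, 640, 640))
```

For angles that are not multiples of 90 degrees the enclosing box is larger than the object, which is one reason rotation limits are usually kept moderate for detection data.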

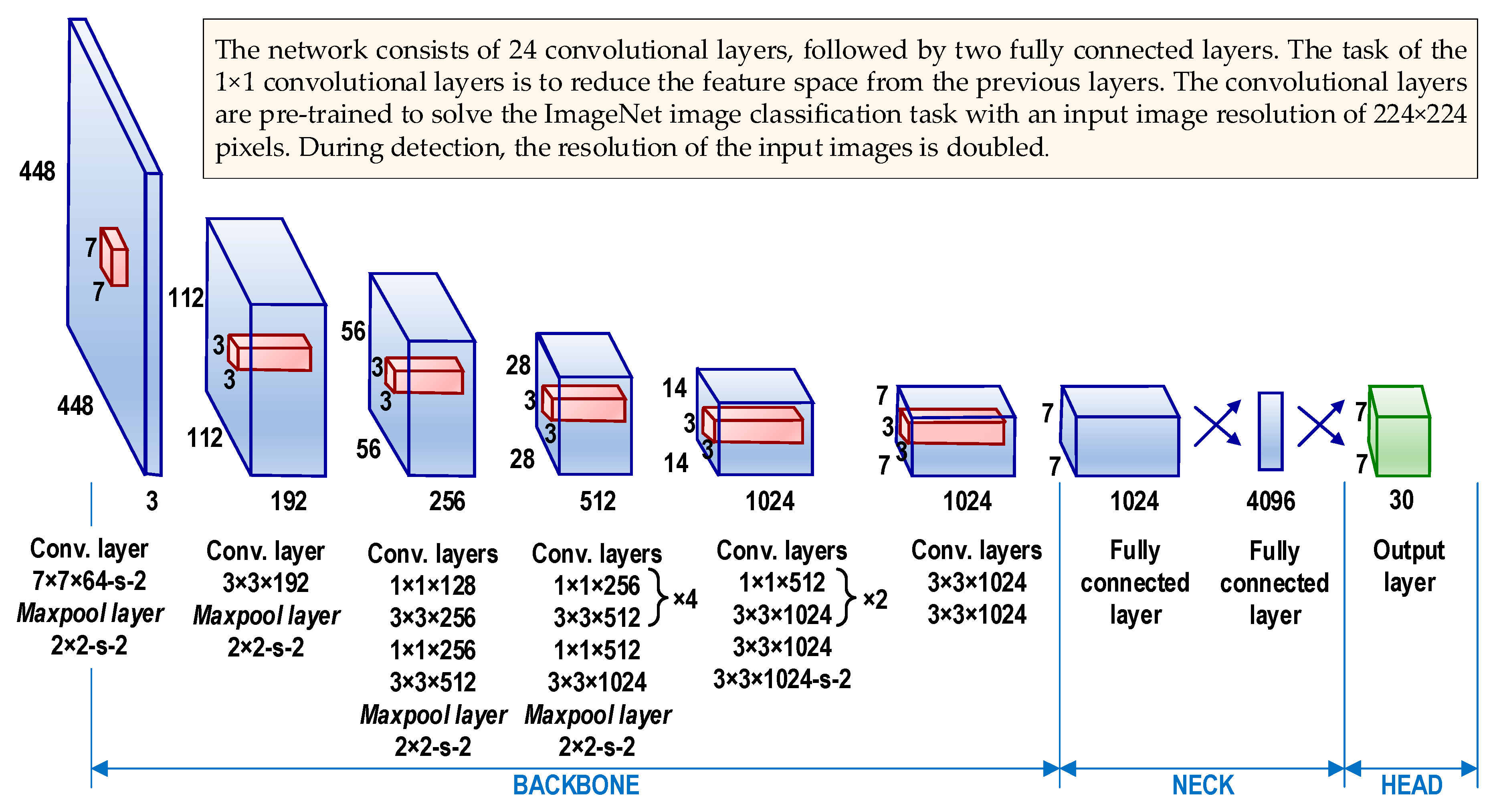

2.2. The Idea Behind YOLO and Its Architectures

2.3. Modifications Introduced by Subsequent Versions of the YOLO and ViT Networks

- YOLOv2 [32]:

- Darknet-19 backbone;

- Anchor boxes (simplifying network learning);

- Batch normalization (improved accuracy and model stability);

- New loss function based on the sum of squares of errors between ground-truth and predicted bounding boxes (better suited for object detection tasks);

- Replacement of fully connected layers with convolutional layers.

- YOLOv3 [33]:

- Darknet-53 backbone (improved accuracy);

- Feature Pyramid Network (FPN) performing detection at 3 different scales (improved detection of objects of different sizes).

- YOLOv4 [34]:

- Cross Stage Partial Darknet-53 backbone (better performance while maintaining computational efficiency);

- New method for generating anchor boxes (K-means clustering). The method clusters reference frames and then uses the group centroids as anchor boxes;

- Gradient Harmonizing Mechanism loss function (improved model performance for unbalanced datasets);

- Mosaic data augmentation (improved model generalization ability by introducing photometric and geometric distortions into the training set).

- YOLOv5 [35]:

- CSPDarknet-53 backbone;

- Migration from the Darknet framework to PyTorch 1.6.0 (simplification of the implementation and experimentation process);

- Mosaic data augmentation (increasing data diversity and improving small object detection by combining 4 training images into one);

- Introduction of 5 model variants—nano, small, medium, large, xlarge (adaptation to different computational requirements).

- YOLOv6 [36]:

- EfficientRep backbone;

- New method for generating anchor boxes, called dense anchor boxes.

- YOLOv7 [37]:

- Extended-ELAN backbone (increased learning and feature representation capabilities while maintaining gradient stability);

- Use of 9 predefined anchor boxes for detecting objects of various shapes;

- Use of Focal Loss;

- Higher resolution of input images (608 × 608 pixels) than previous versions.

- YOLOv8 [38]:

- CSPDarknet-53 backbone;

- Neck with dynamic label assignment (improved detection of objects of different scales);

- Head without anchor boxes (better accuracy and more efficient object detection);

- Advanced augmentation techniques, including image blending and affine transformations (increased resistance to interference).

- YOLOv9 [39]:

- Programmable Gradient Information technique—ensures that relevant data is retained in deep network layers, enabling the generation of reliable gradients that allow for accurate model updates and improved overall detection performance;

- Generalized Efficient Layer Aggregation Network technique—allows for flexible integration of different computational blocks, enabling the model to be used in a wide range of applications without compromising speed or accuracy.

- YOLOv10 [40]:

- Use of an improved version of Cross Stage Partial Network as the backbone;

- The neck aggregates features from different scales using Path Aggregation Network;

- Use of Dual-Head architecture: (1) the One-to-Many Head module generates multiple predictions for each object, providing an adequate supply of training signals and improving learning accuracy; (2) the One-to-One Head module generates a single, best prediction for each object, which eliminates the need for NMS, reduces latency, and improves performance.

- YOLOv11 [41]:

- Cross Stage Partial blocks with kernel size 2 (C3K2)—replace the previously used C2F blocks (improved processing speed while maintaining the ability to efficiently extract features);

- Spatial Pyramid Pooling Fast (SPPF) blocks—ensure effective aggregation of multi-scale features (improved processing speed for objects of different sizes while maintaining high precision);

- Parallel Spatial Attention (C2PSA) blocks—the parallel spatial attention mechanism allows the model to focus more precisely on relevant regions of the image (improved object detection accuracy).

- YOLOv12 [42]:

- A novel approach to the self-attention mechanism—feature maps are divided horizontally or vertically into regions of equal size—4 by default (avoiding complex operations and maintaining large effective receptive fields);

- Improved feature aggregation module based on Residual Efficient Layer Aggregation Networks (meeting optimization challenges, especially in attention-focused models on a larger scale).

- RT-DETR [43]:

- Abandoning the NMS algorithm;

- Using a belief-based framework;

- Efficient hybrid encoder (the network achieves high real-time performance);

- Separation of interactions between features within scales and combination of features between scales (efficient processing of multi-scale features);

- Flexible inference speed adjustment using different decoder layers without the need for retraining.
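Several items above (the One-to-One head of YOLOv10 and RT-DETR) are motivated by removing non-maximum suppression (NMS) from the pipeline. For reference, the sketch below shows the classic greedy NMS step that the earlier detectors rely on. It is a plain-Python illustration, not the code used by any of the cited frameworks; the function names and the `(x_min, y_min, x_max, y_max)` box format are assumptions, and the default threshold of 0.7 simply mirrors the `iou` value listed among the training hyperparameters of this paper.

```python
def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.7):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap any already kept box too strongly.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 260, 260)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # indices of the boxes that survive suppression
```

The loop is sequential and its cost grows with the number of candidate boxes, which is why NMS-free heads can reduce latency.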

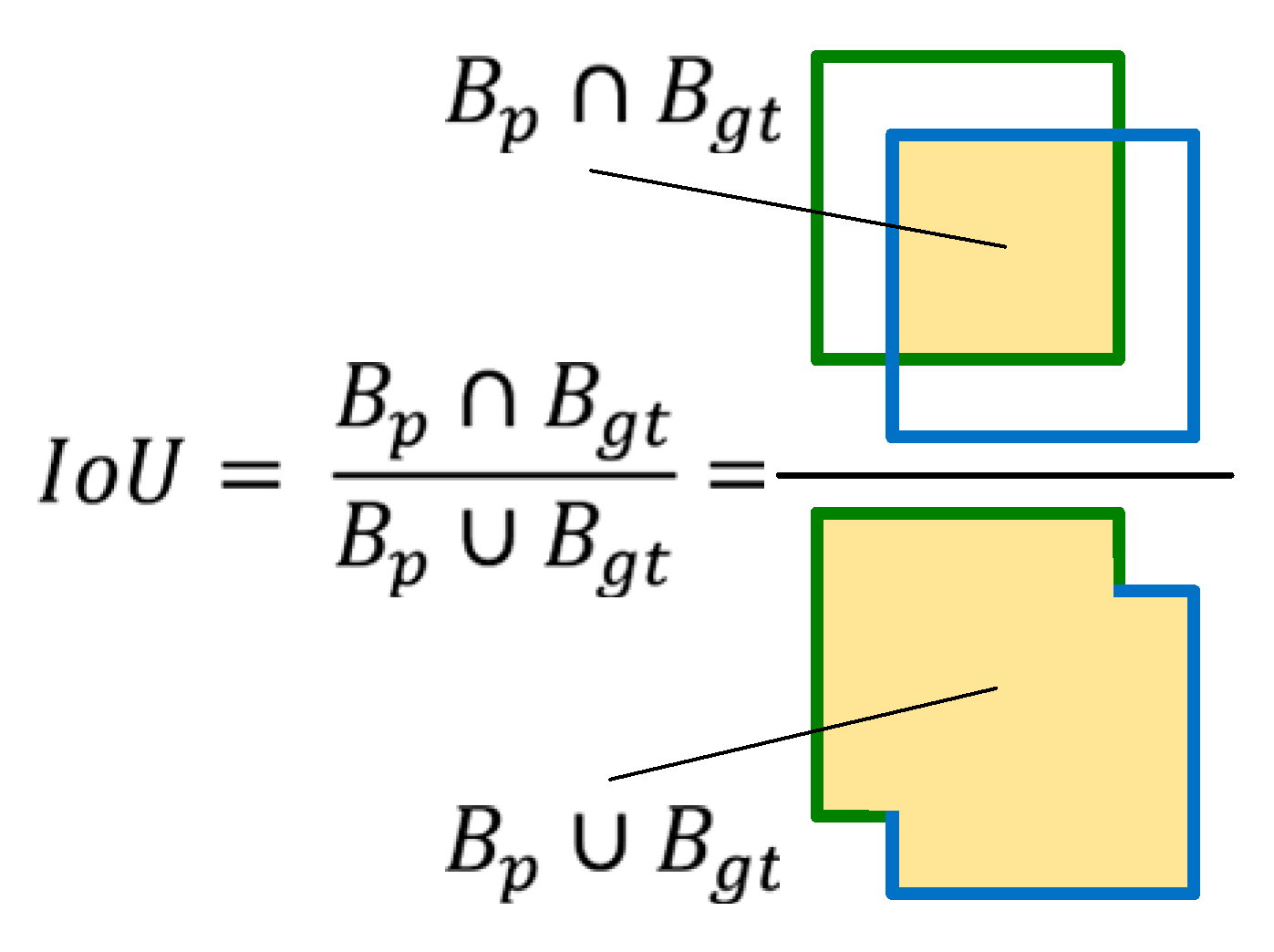

2.4. Model Quality Assessment Measures

- True positive (TP): occurs when the prediction confidence is greater than the accepted detection threshold (e.g., 0.5), the predicted class is the same as the class of the ground-truth box, and the IoU between the predicted box and the ground-truth box is greater than the IoU threshold;

- False positive (FP): a false detection that occurs when either of the last two conditions is not met;

- False negative (FN): occurs when the prediction confidence is lower than the detection threshold (the ground-truth box was not detected).
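The three definitions above can be sketched as a small counting routine. This is an illustrative plain-Python version under stated assumptions, not the evaluation code used in the study: boxes are `(x_min, y_min, x_max, y_max)` tuples, each ground-truth box can be matched at most once, predictions are processed in order of decreasing confidence, and the names `count_detections` and `precision_recall` are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_detections(predictions, ground_truths, conf_thr=0.5, iou_thr=0.5):
    """predictions: (box, class, confidence); ground_truths: (box, class)."""
    matched = set()
    tp = fp = 0
    for box, cls, conf in sorted(predictions, key=lambda p: p[2], reverse=True):
        if conf <= conf_thr:
            continue  # below the confidence threshold: not a detection
        best_iou, best_idx = 0.0, None
        for idx, (gt_box, gt_cls) in enumerate(ground_truths):
            if idx in matched or gt_cls != cls:
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou > iou_thr:
            tp += 1
            matched.add(best_idx)
        else:
            fp += 1  # wrong class or insufficient overlap
    fn = len(ground_truths) - len(matched)  # ground-truth boxes never detected
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


ground_truths = [((10, 10, 110, 110), "knife"), ((200, 200, 300, 300), "gun")]
predictions = [
    ((12, 12, 112, 112), "knife", 0.9),     # correct class, high overlap
    ((205, 205, 305, 305), "rifle", 0.8),   # wrong class
    ((400, 400, 450, 450), "gun", 0.3),     # below the confidence threshold
]
counts = count_detections(predictions, ground_truths)
print(counts, precision_recall(*counts))
```

In this toy case the knife is a true positive, the "rifle" prediction is a false positive, and the gun remains undetected, so it counts as a false negative.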

3. Results

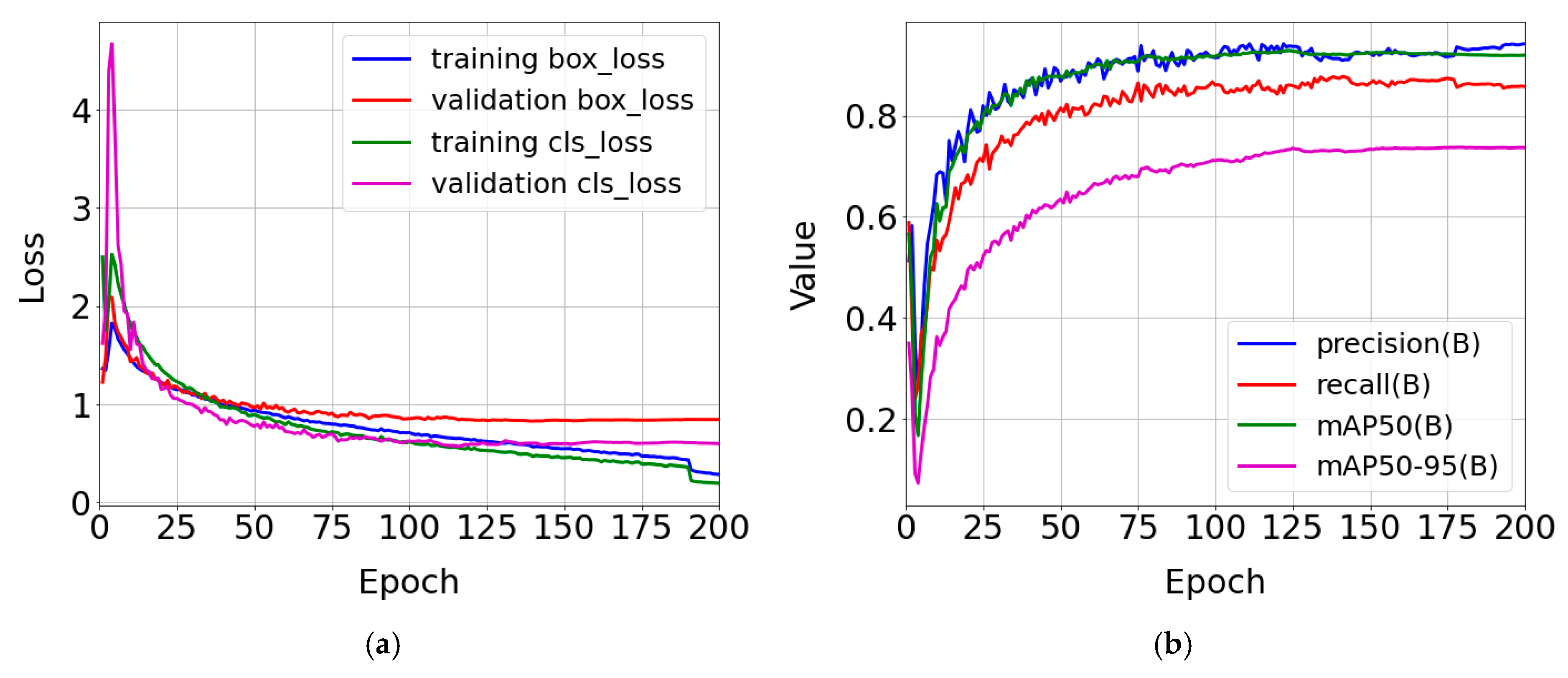

3.1. Training Process

3.2. Model Evaluation

4. Discussion

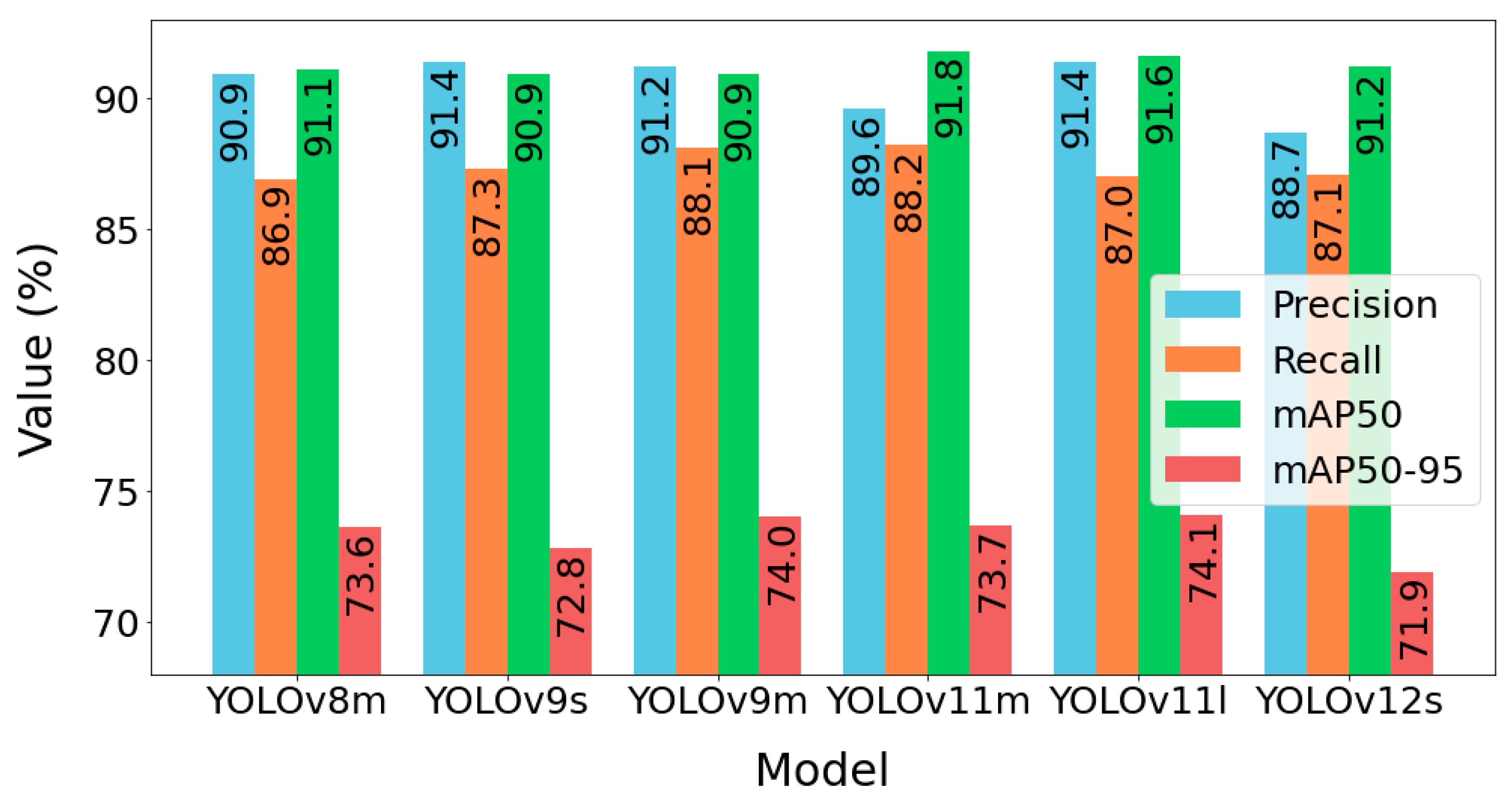

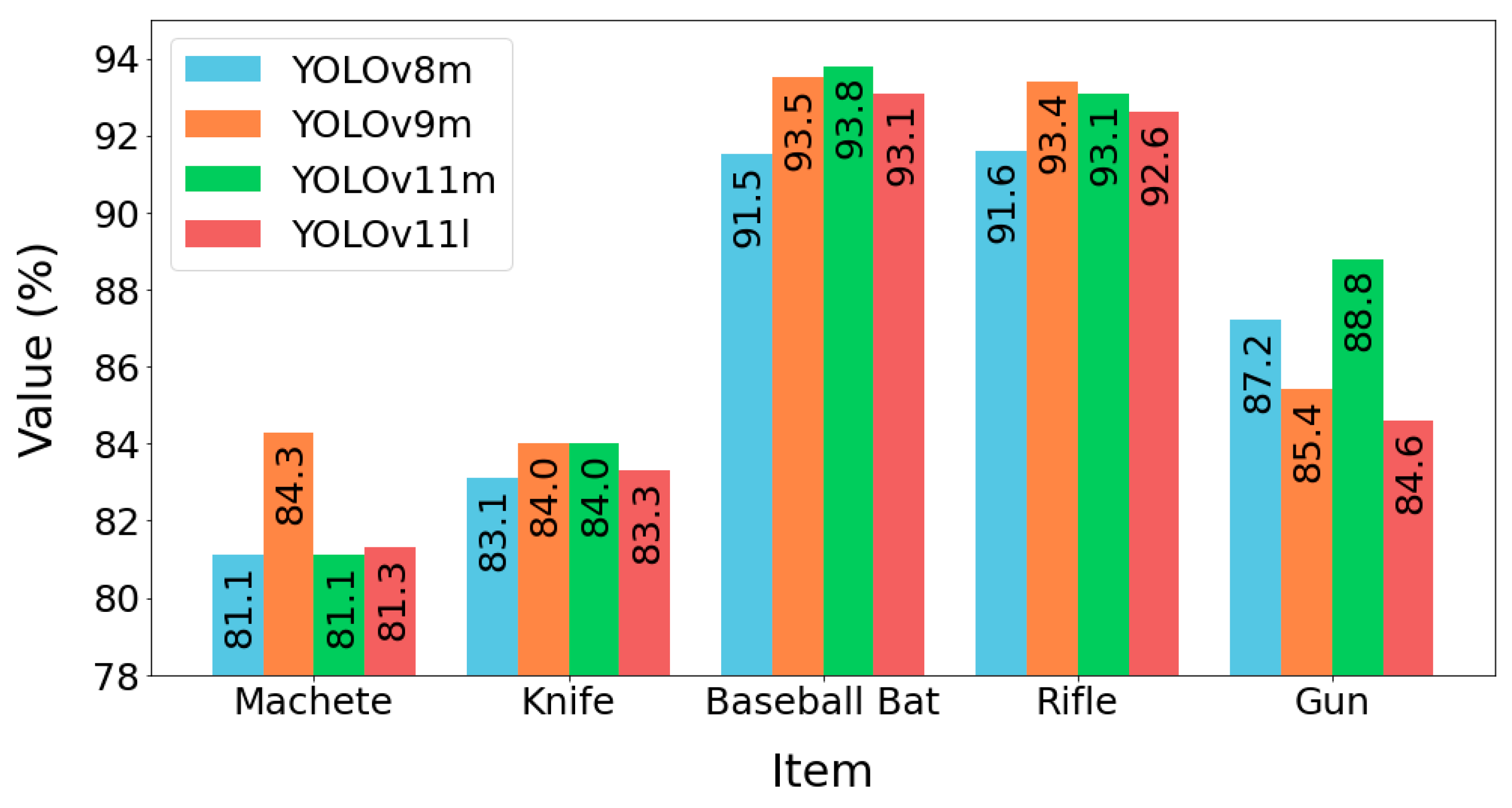

4.1. Results Analysis and Selection of the Best Model

4.2. Comparison of Results with Those of Other Authors

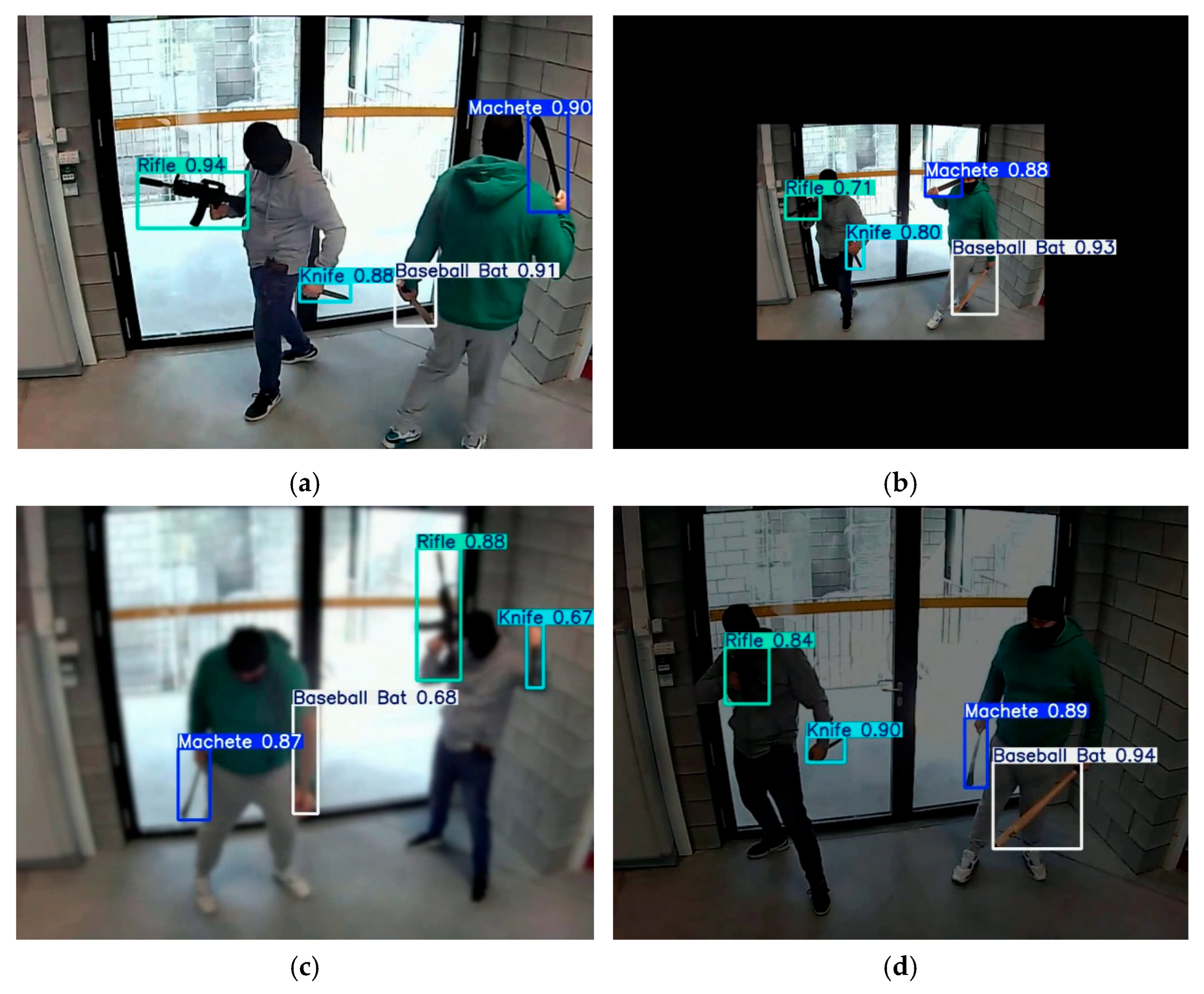

4.3. Testing the Model Using New Images

4.4. Model Limitations

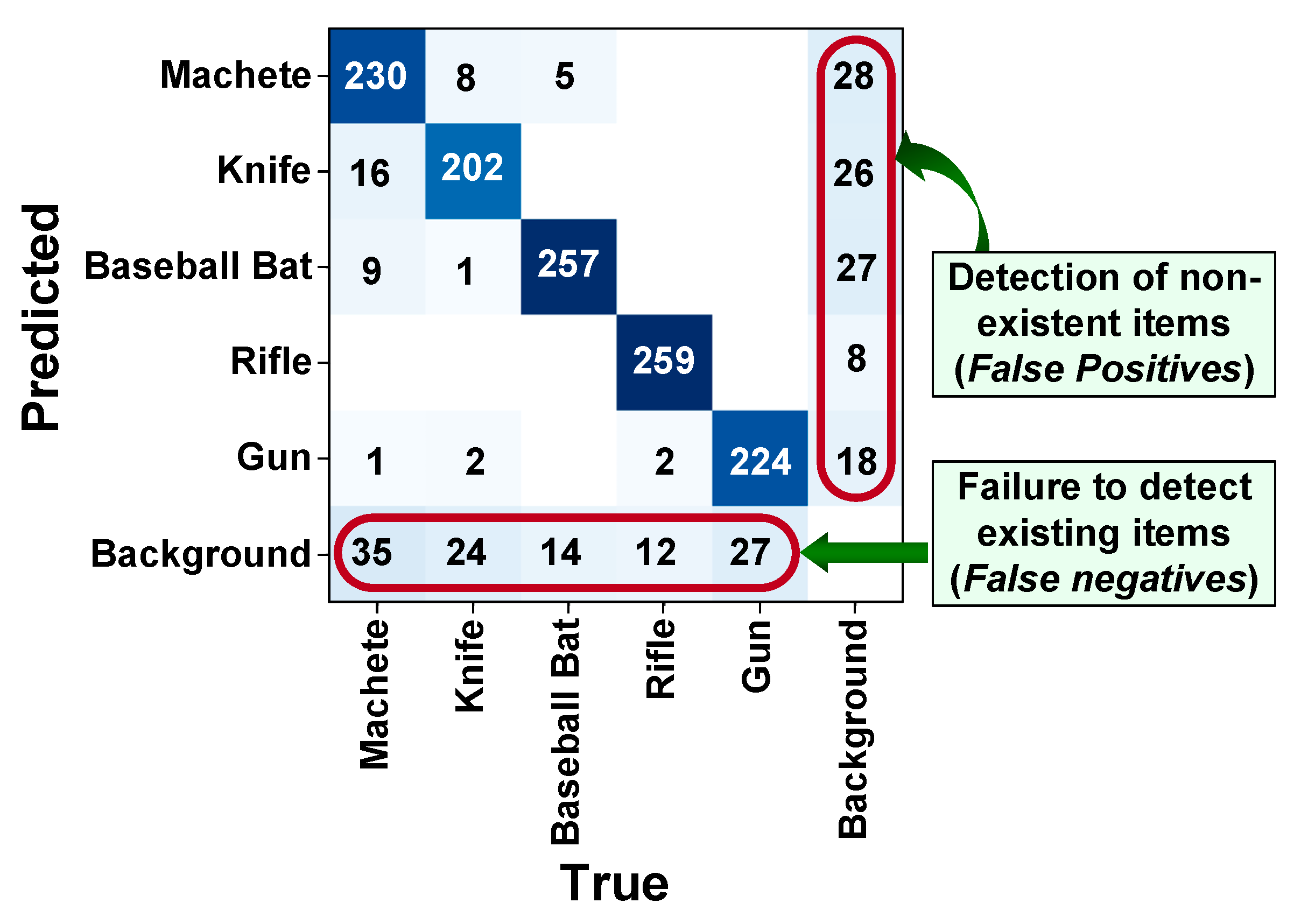

- The problem of accuracy and generalization. The model was trained on a limited set of dangerous items, so its accuracy may be lower for new, unseen images that show other forms of the same items. This problem was mitigated during the research by using the second part of the dataset, which was designed to improve the generalization ability of the model. The tests showed 112 false negatives (8.4%) and 107 false positives (8%). The number of false negatives can be reduced by expanding the training set with more images that show objects in positions that make them difficult to recognize, for example, machetes and knives with their blades pointed toward the camera, or rifles and guns with their barrels pointed toward the camera. Similarly, the number of images showing partially covered objects should be increased. The model had the most difficulty recognizing machetes and knives, because these objects look similar when they are pointed toward the camera lens. In addition, both classes include items of comparable shape and length. This confusion can be reduced by adding more training images that show machetes and knives in views that are difficult to recognize;

- Limited understanding of context. Models based on local characteristics ignore the surrounding context, which may be important for detection accuracy. For example, detecting a knife at a school entrance will be treated the same as detecting a knife in a kitchen. There are no ideal models that are universal for every application. In this study, it was emphasized that the purpose of the model is to detect dangerous items before entering a monitored room (e.g., a school). Therefore, an extensive set of training images (part 1) was used to teach the model to understand this specific environmental context;

- Sensitivity to input data. Model tests conducted in various environmental conditions revealed problems with detecting small objects (knife, gun) in cases of poorer image sharpness and lower light intensity. To mitigate this problem, various categories of training images were used (items clearly visible, partially covered, small items, poor image sharpness, poor image illumination, and background images). Further steps may involve the use of image preprocessing algorithms before sending images to the model input;

- Difficult detection of small and overlapping objects. The model detects small objects less reliably than large ones: the number of undetected knives and guns was more than twice the number of undetected baseball bats and rifles. Detecting small objects remains a challenge in the field of computer vision. One way to address this problem is to increase the number of small objects in the training set. It is also possible to use a network that is more accurate at detecting small objects, such as Faster R-CNN, at the cost of increased inference time;

- Limited number of categories. The model has been trained to detect only five potentially dangerous items (machete, knife, baseball bat, rifle, and gun). In real-world conditions, other objects may serve as “dangerous” items. Therefore, the model should be retrained to include items that should be detected in specific conditions and applications of the model;

- Implementation limitations. The model tests were performed on a computer with the following hardware, which sets the general framework for implementation: Intel Core i7-12650H 2.70 GHz processor, 32 GB RAM, and NVIDIA GeForce RTX 3060 GPU with 6 GB GDDR6. The following software components were installed: python 3.10.15, opencv-python 4.11.0.86, torch 2.5.1+cu118, ultralytics 8.3.75. This configuration allowed smooth display of prediction results at up to approximately 60 frames per second. In summary, the model does not place excessive demands on the technical parameters of the computer system and can be easily integrated with typical general-purpose computers. However, the model cannot be deployed directly on edge devices; in that case, appropriate model optimization is required.

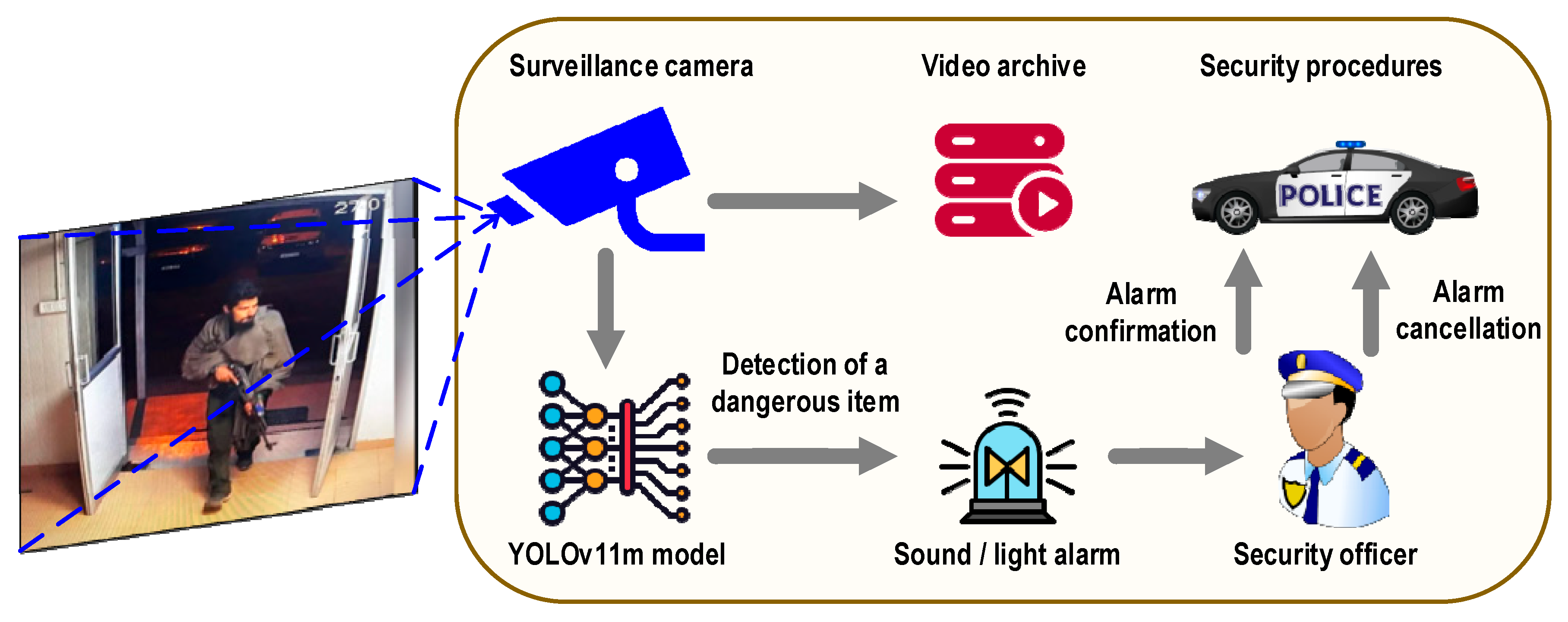

4.5. Potential Application of the Built Model

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

1. The Gun Violence Archive. Available online: https://www.gunviolencearchive.org/ (accessed on 18 June 2025).

2. Jang, S.; Battulga, L.; Nasridinov, A. Detection of Dangerous Situations using Deep Learning Model with Relational Inference. J. Multimed. Inf. Syst. 2020, 7, 205–214.

3. Triguero, F.; Tabik, S.; Lamas, A.; Hernández, F.; Pimentel, R. Weapons detection for security and video surveillance. Pattern Recognit. 2024, 147, 109192.

4. Ha, E.; Kim, H.; Na, D. HOD: New harmful object detection benchmarks for robust surveillance. Comput. Vis. Image Underst. 2024, 238, 103059.

5. Pérez-Hernández, F.; Tabik, S.; Lamas, A.; Olmos, R.; Fujita, H.; Herrera, F. Object Detection Binary Classifiers methodology based on deep learning to identify small objects handled similarly: Application in video surveillance. Knowl. Based Syst. 2020, 194, 105590.

6. Castillo, A.; Tabik, S.; Pérez, F.; Olmos, R.; Herrera, F. Brightness guided preprocessing for automatic cold steel weapon detection in surveillance videos with deep learning. Neurocomputing 2019, 330, 151–161.

7. Yadav, P.; Gupta, N.; Sharma, P.K. A Comprehensive Study towards High-level Approaches for Weapon Detection using Classical Machine Learning and Deep Learning Methods. Expert Syst. Appl. 2023, 212, 118698.

8. Gawade, S.; Vidhya, R.; Radhika, R. Automatic Weapon Detection for surveillance applications. In Proceedings of the International Conference on Innovative Computing & Communication (ICICC-2022), Delhi, India, 19–20 February 2022.

9. Dugyala, R.; Reddy, M.V.V.; Reddy, C.T.; Vijendar, G. Weapon Detection in Surveillance Videos Using YOLOv8 and PELSF-DCNN. In Proceedings of the 4th International Conference on Design and Manufacturing Aspects for Sustainable Energy (ICMED-ICMPC 2023), Hyderabad, India, 19–20 May 2023.

10. Azarov, I.; Gnatyuk, S.; Aleksander, M.; Azarov, I.; Mukasheva, A. Real-time ML Algorithms for the Detection of Dangerous Objects in Critical Infrastructures. In Proceedings of the 4th International Workshop on Intelligent Information Technologies and Systems of Information Security, Online Conference, 22–24 March 2023.

11. Gao, Q.; Li, Z.; Pan, J. A Convolutional Neural Network for Airport Security Inspection of Dangerous Goods. IOP Conf. Ser. Earth Environ. Sci. 2019, 252, 042042.

12. Andriyanov, N. Deep Learning for Detecting Dangerous Objects in X-rays of Luggage. Eng. Proc. 2023, 33, 20.

13. Omiotek, Z. Dangerous items’ detection in surveillance camera images using Faster R-CNN. Prz. Elektrotech. 2025, 101, 156–168.

14. Pistols Dataset. Available online: https://universe.roboflow.com/joseph-nelson/pistols (accessed on 30 August 2025).

15. OD WeaponDetection. Available online: https://github.com/brooksideas/OD-weapon-detection-dataset?tab=readme-ov-file (accessed on 30 August 2025).

16. Weapon Detection Dataset. Available online: https://www.kaggle.com/datasets/snehilsanyal/weapon-detection-test (accessed on 30 August 2025).

17. Gun Detection Datasets. Available online: https://www.linksprite.com/gun-detection-datasets/ (accessed on 30 August 2025).

18. Pistols YoloV5 Object Detection Dataset. Available online: https://universe.roboflow.com/weapons/pistols-yolov5-vlhoz/dataset/1 (accessed on 30 August 2025).

19. Pistol Labeled Image Dataset. Available online: https://images.cv/dataset/pistol-image-classification-dataset (accessed on 30 August 2025).

20. Weapons Labeled Image Dataset. Available online: https://images.cv/dataset/weapons-image-classification-dataset (accessed on 30 August 2025).

21. Weapons in Images. Available online: https://datasetninja.com/weapons-in-images (accessed on 30 August 2025).

22. Weapon Detection Dataset. Available online: https://www.kaggle.com/datasets/abhishek4273/gun-detection-dataset (accessed on 30 August 2025).

23. Weapon Detection System. Available online: https://github.com/HeeebsInc/WeaponDetection (accessed on 30 August 2025).

24. Mock Attack Dataset. Available online: https://github.com/Deepknowledge-US/US-Real-time-gun-detection-in-CCTV-An-open-problem-dataset (accessed on 30 August 2025).

25. Gun and Knife Detection. Available online: https://universe.roboflow.com/mahad-ahmed/gun-and-knife-detection/dataset/1 (accessed on 30 August 2025).

26. Pistol & Rifle & Knife Dataset. Available online: https://universe.roboflow.com/pistolrifle/pistol-rifle-knife/dataset/1 (accessed on 30 August 2025).

27. Gun and Knife Detection System Dataset. Available online: https://universe.roboflow.com/weapondetection-um7tj/gun-and-knife-detection-system/dataset/13 (accessed on 30 August 2025).

28. YouTube GDD. Available online: https://github.com/UCAS-GYX/YouTube-GDD (accessed on 30 August 2025).

29. Weapons-Dataset. Available online: https://github.com/tufailshah786/Weapons-Dataset (accessed on 30 August 2025).

30. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR-2016), Las Vegas, NV, USA, 26 June–1 July 2016.

31. Redmon, J. Darknet: Open Source Neural Networks in C. Available online: http://pjreddie.com/darknet/ (accessed on 18 June 2025).

32. Redmon, J.; Farhadi, A. Yolo9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

33. Redmon, J.; Farhadi, A. Yolov3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

34. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

35. Yolov5 Framework and Documentation. Available online: https://github.com/ultralytics/yolov5 (accessed on 18 June 2025).

36. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

37. Wang, C.Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

38. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. Available online: https://docs.ultralytics.com/models/yolov8/ (accessed on 18 June 2025).

39. Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

40. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

41. Jocher, G.; Qiu, J. Ultralytics YOLO11. Available online: https://github.com/ultralytics/ultralytics (accessed on 18 June 2025).

42. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524v1.

43. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2024, arXiv:2304.08069v3.

44. Al Rabbani, A.; Hussain, M. YOLOv1 to YOLOv10: A comprehensive review of yolo variants and their application in the agricultural domain. arXiv 2024, arXiv:2406.10139v1.

45. Wang, C.-Y.; Liao, H.-Y.M. YOLOv1 to YOLOv10: The fastest and most accurate real-time object detection systems. arXiv 2024, arXiv:2408.09332v1.

46. Hidayatullah, P.; Syakrani, N.; Sholahuddin, M.R.; Gelar, T.; Tubagus, R. YOLOv8 to YOLO11: A Comprehensive Architecture In-depth Comparative Review. arXiv 2025, arXiv:2501.13400v2.

47. Sapkota, R.; Flores-Calero, M.; Qureshi, R.; Badgujar, C.; Nepal, U.; Poulose, A.; Zeno, P.; Vaddevolu, U.B.P.; Khan, S.; Shoman, M.; et al. YOLO advances to its genesis: A decadal and comprehensive review of the You Only Look Once (YOLO) series. Artif. Intell. Rev. 2025, 58, 274.

48. Ramos, L.T.; Sappa, A.D. A Decade of You Only Look Once (YOLO) for Object Detection. arXiv 2025, arXiv:2504.18586v1.

49. Ultralytics Homepage. Available online: https://docs.ultralytics.com/models/ (accessed on 18 June 2025).

50. Ashraf, A.H.; Imran, M.; Qahtani, A.M.; Alsufyani, A.; Almutiry, O.; Mahmood, A.; Attique, M.; Habib, M. Weapons Detection for Security and Video Surveillance Using CNN and YOLO-V5s. Comput. Mater. Contin. 2022, 70, 2761–2775.

51. Shanthi, P.; Manjula, V. Weapon detection with FMR-CNN and YOLOv8 for enhanced crime prevention and security. Sci. Rep. 2025, 15, 26766.

52. Yadav, P.; Gupta, N.; Sharma, P.K. Robust weapon detection in dark environments using Yolov7-DarkVision. Digit. Signal Process. 2024, 145, 104342.

53. Bushra, S.N.; Shobana, G.; Maheswari, K.U.; Subramanian, N. Smart Video Survillance Based Weapon Identification Using Yolov5. In Proceedings of the 2022 International Conference on Electronic Systems and Intelligent Computing (ICESIC-2022), Chennai, India, 22–23 April 2022.

54. Sun, B.; Duan, Z.; Han, X.; Huang, X.; Xie, B.; Wu, X. Dangerous Object Detection Using YOLOv8 and Dynamic Snake Convolution. In Proceedings of the 2024 6th International Conference on Internet of Things, Automation and Artificial Intelligence (IoTAAI-2024), Guangzhou, China, 26–28 July 2024.

55. Thakur, A.; Shrivastav, A.; Sharma, R.; Kumar, T.; Puri, K. Real-Time Weapon Detection Using YOLOv8 for Enhanced Safety. arXiv 2024, arXiv:2410.19862v1.

56. Narejo, S.; Pandey, B.; Vargas, D.E.; Rodriguez, C.; Anjum, M.R. Weapon Detection Using YOLO V3 for Smart Surveillance System. Math. Probl. Eng. 2021, 2021, 9975700.

57. Ramon, A.O.; Guaman, L.B. Detection of weapons using Efficient Net and Yolo v3. In Proceedings of the 2021 IEEE Latin American Conference on Computational Intelligence (LA-CCI 2021), Temuco, Chile, 2–4 November 2021.

58. Bhatti, M.T.; Khan, M.G.; Aslam, M.; Fiaz, M.J. Weapon Detection in Real-Time CCTV Videos Using Deep Learning. IEEE Access 2021, 9, 34366–34382.

59. Haribharathi, S.; Arvind, R.V.; Ragavendhar, V.P.; Balamurugan, G. Novel Deep Learning Pipeline for Automatic Weapon Detection. arXiv 2023, arXiv:2309.16654v1.

60. Jain, H.; Vikram, A.; Kashyap, A.M.; Jain, A. Weapon Detection using Artificial Intelligence and Deep Learning for Security Applications. In Proceedings of the International Conference on Electronics and Sustainable Communication Systems (ICESCS 2020), Coimbatore, India, 28–30 April 2020.

61. Olmos, R.; Tabik, S.; Herrera, F. Automatic handgun detection alarm in videos using deep learning. Neurocomputing 2018, 275, 66–72.

62. Vijayakumar, K.P.; Pradeep, K.; Balasundaram, A.; Dhande, A. R-CNN and YOLOV4 based Deep Learning Model for intelligent detection of weaponries in real time video. Math. Biosci. Eng. 2023, 20, 21611–21625.

63. Iqbal, J.; Munir, M.A.; Mahmood, A.; Ali, A.R.; Ali, M. Leveraging Orientation for Weakly Supervised Object Detection with Application to Firearm Localization. arXiv 2021, arXiv:1904.10032v2.

64. Hnoohom, N.; Chotivatunyu, P.; Maitrichit, N.; Sornlertlamvanich, V.; Mekruksavanich, S.; Jitpattanakul, A. Weapon Detection Using Faster R-CNN Inception-V2 for a CCTV Surveillance System. In Proceedings of the 25th International Computer Science and Engineering Conference (ICSEC 2021), Chiang Rai, Thailand, 18–20 November 2021.

65. González, J.L.S.; Zaccaro, C.; Alvarez-Garcia, J.A.; Morillo, L.M.S.; Caparrini, F.S. Real-time gun detection in CCTV: An open problem. Neural Netw. 2020, 132, 297–308.

66. Fernandez-Carrobles, M.M.; Deniz, O.; Maroto, F. Gun and Knife Detection Based on Faster R-CNN for Video Surveillance. In Proceedings of the 9th Iberian Conference, IbPRIA 2019, Madrid, Spain, 1–4 July 2019.

| Dataset Name (Source) | No. of Images (Classes) | Refs. |
|---|---|---|
| Pistols Dataset (Roboflow) | 2986 (guns) | [14] |
| OD-WeaponDetection (GitHub) | 5859 (guns, knives, backgrounds) | [15] |
| Weapon Detection Dataset (Kaggle) | 714 (various weapons) | [16] |
| Gun Detection Datasets (LinkSprite) | 51,000 (guns) | [17] |
| Pistols-YoloV5 Object Detection Dataset (Roboflow) | 6752 (guns) | [18] |
| Pistol Labeled Image Dataset (Images.CV) | 7400 (guns) | [19] |
| Weapons Labeled Image Dataset (Images.CV) | 40,000 (various weapons) | [20] |
| Weapons in Images (Dataset Ninja) | 5695 (various weapons) | [21] |
| Weapon detection dataset (Kaggle) | 3000 (guns) | [22] |
| Weapon detection system (GitHub) | 4940 (guns) | [23] |
| Mock Attack Dataset (GitHub) | 5149 (knives, rifles) | [24] |
| Gun and Knife Detection (Roboflow) | 8451 (guns, knives) | [25] |
| Pistol and Rifle and Knife Dataset (Roboflow) | 12,932 (guns, knives, rifles) | [26] |
| Gun and Knife Detection System Dataset (Roboflow) | 2402 (guns, knives) | [27] |
| YouTube-GDD (GitHub) | 5000 (guns, rifles) | [28] |
| Weapons-Dataset (GitHub) | 7801 (guns, rifles) | [29] |

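| Type of Transform | Albumentations Function | Parameter | Value/Range |
|---|---|---|---|
| Spatial-level transforms | Affine | rotate | 45 |
| Spatial-level transforms | Affine | scale | [0.5, 2] |
| Spatial-level transforms | Affine | shear | 15 |
| Spatial-level transforms | Affine | translate_percent | 0.05 |
| Spatial-level transforms | SafeRotate | limit | 90 |
| Spatial-level transforms | ShiftScaleRotate | shift_limit | 0.0625 |
| Spatial-level transforms | ShiftScaleRotate | scale_limit | 0.1 |
| Spatial-level transforms | ShiftScaleRotate | rotate_limit | 45 |
| Spatial-level transforms | Perspective | scale | [0.15, 0.2] |
| Pixel-level transforms | RandomBrightnessContrast | brightness_limit | [−0.2, −0.1] |
| Pixel-level transforms | RandomBrightnessContrast | contrast_limit | [0, 0] |
| Pixel-level transforms | GaussianBlur | sigma_limit | [3, 5] |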
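In the table above, `brightness_limit` is strictly negative and `contrast_limit` is fixed at zero, so this transform can only darken an image, which is consistent with generating poorly illuminated training samples. The sketch below illustrates that effect in plain Python. It is an approximation under stated assumptions, not the Albumentations implementation: the brightness factor is assumed to be drawn uniformly from the given range and applied as a shift relative to the maximum pixel value, and the function name `darken` is hypothetical.

```python
import random


def darken(pixels, brightness_limit=(-0.2, -0.1), max_value=255, rng=random):
    """Shift all pixel values by beta * max_value, with beta sampled uniformly.

    pixels is a 2D list of grayscale values in [0, max_value].
    Returns the darkened image and the sampled beta.
    """
    beta = rng.uniform(*brightness_limit)
    shift = beta * max_value
    darkened = [
        [min(max(int(round(p + shift)), 0), max_value) for p in row]
        for row in pixels
    ]
    return darkened, beta


image = [[0, 128, 255], [64, 200, 30]]
darker, beta = darken(image)
print(beta, darker)
```

Because the contrast factor stays at its neutral value, the relative differences between pixels are preserved except where values are clipped at zero.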

| Name | Description | Value |
|---|---|---|
| epochs | Total number of training epochs | 200 |
| patience | Number of epochs to wait without improvement in validation metrics before early stopping the training | 100 |
| batch | Batch size | 32 |
| imgsz | Target image size for training | 640 |
| optimizer | Choice of optimizer for training | SGD |
| momentum | Momentum factor influencing the incorporation of past gradients in the current update | 0.937 |
| lr0 | Initial learning rate | 0.01 |
| lrf | Final learning rate as a fraction of the initial rate (final rate = lr0 × lrf), used in conjunction with schedulers to adjust the learning rate over time | 0.01 |
| weight_decay | L2 regularization term, penalizing large weights to prevent overfitting | 0.0005 |
| warmup_epochs | Number of epochs for learning rate warmup, gradually increasing the learning rate from a low value to the initial learning rate to stabilize training early on | 3.0 |
| warmup_momentum | Initial momentum for the warmup phase, gradually adjusting to the set momentum over the warmup period | 0.8 |
| warmup_bias_lr | Learning rate for bias parameters during the warmup phase, helping stabilize model training in the initial epochs | 0.1 |
| box | Weight of the box loss component in the loss function, influencing how much emphasis is placed on accurately predicting bounding box coordinates | 7.5 |
| cls | Weight of the classification loss in the total loss function, affecting the importance of correct class prediction relative to other components | 0.5 |
| dfl | Weight of the distribution focal loss, used in certain YOLO versions for fine-grained classification | 1.5 |
| iou | Intersection over Union threshold for Non-Maximum Suppression | 0.7 |
| max_det | Maximum number of detections per image | 300 |
| augment | Enables test-time augmentation during validation | false |
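The interaction of `lr0`, `lrf`, and `epochs` in the table above can be made concrete with a short calculation. The sketch below assumes a linear decay from `lr0` to `lr0 × lrf` over the training run and ignores the warm-up phase; the exact scheduler used in the study is not stated in this excerpt, so the shape of the curve is an assumption and the function name `learning_rate` is hypothetical.

```python
def learning_rate(epoch, epochs=200, lr0=0.01, lrf=0.01):
    """Linearly decayed learning rate: lr0 at epoch 0, lr0 * lrf at epoch == epochs."""
    remaining = max(1.0 - epoch / epochs, 0.0)
    factor = remaining * (1.0 - lrf) + lrf
    return lr0 * factor


for epoch in (0, 50, 100, 150, 200):
    print(epoch, learning_rate(epoch))
```

With the tabulated values, the learning rate falls by two orders of magnitude over the 200 epochs, from 0.01 to 0.0001.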

| Architecture | Version | Precision | Recall | mAP@50 | mAP@50–95 |
|---|---|---|---|---|---|
| YOLOv8 | n | 0.888 | 0.842 | 0.897 | 0.670 |
| YOLOv8 | s | 0.910 | 0.843 | 0.904 | 0.709 |
| YOLOv8 | m | 0.909 | 0.869 | 0.911 | 0.736 |
| YOLOv8 | l | 0.909 | 0.865 | 0.912 | 0.741 |
| YOLOv8 | x | 0.933 | 0.869 | 0.923 | 0.752 |
| YOLOv9 | t | 0.887 | 0.850 | 0.899 | 0.691 |
| YOLOv9 | s | 0.914 | 0.873 | 0.909 | 0.728 |
| YOLOv9 | m | 0.912 | 0.881 | 0.909 | 0.740 |
| YOLOv9 | c | 0.930 | 0.852 | 0.915 | 0.740 |
| YOLOv9 | e | 0.916 | 0.872 | 0.917 | 0.750 |
| YOLOv10 | n | 0.868 | 0.837 | 0.888 | 0.672 |
| YOLOv10 | s | 0.922 | 0.837 | 0.902 | 0.709 |
| YOLOv10 | m | 0.924 | 0.844 | 0.903 | 0.727 |
| YOLOv10 | l | 0.935 | 0.862 | 0.915 | 0.742 |
| YOLOv10 | x | 0.921 | 0.836 | 0.912 | 0.739 |
| YOLOv11 | n | 0.897 | 0.830 | 0.890 | 0.666 |
| YOLOv11 | s | 0.903 | 0.843 | 0.905 | 0.713 |
| YOLOv11 | m | 0.896 | 0.882 | 0.918 | 0.737 |
| YOLOv11 | l | 0.914 | 0.870 | 0.916 | 0.741 |
| YOLOv11 | x | 0.927 | 0.859 | 0.916 | 0.743 |
| YOLOv12 | n | 0.901 | 0.816 | 0.890 | 0.681 |
| YOLOv12 | s | 0.887 | 0.871 | 0.912 | 0.719 |
| YOLOv12 | m | 0.906 | 0.866 | 0.916 | 0.733 |
| YOLOv12 | l | 0.921 | 0.875 | 0.920 | 0.738 |
| YOLOv12 | x | 0.918 | 0.869 | 0.918 | 0.746 |
| RT-DETR | l | 0.927 | 0.885 | 0.899 | 0.698 |
| RT-DETR | x | 0.928 | 0.889 | 0.895 | 0.710 |
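Because the table above holds 27 rows, a small script makes it easier to see which model leads on each metric. The sketch below copies the table values and ranks the models by a chosen column. It only illustrates reading the table; it does not reproduce the selection procedure of the study, which also weighs inference time and model complexity, and the names `RESULTS` and `best` are hypothetical.

```python
# (architecture, version, precision, recall, mAP@50, mAP@50-95), copied from the table
RESULTS = [
    ("YOLOv8", "n", 0.888, 0.842, 0.897, 0.670),
    ("YOLOv8", "s", 0.910, 0.843, 0.904, 0.709),
    ("YOLOv8", "m", 0.909, 0.869, 0.911, 0.736),
    ("YOLOv8", "l", 0.909, 0.865, 0.912, 0.741),
    ("YOLOv8", "x", 0.933, 0.869, 0.923, 0.752),
    ("YOLOv9", "t", 0.887, 0.850, 0.899, 0.691),
    ("YOLOv9", "s", 0.914, 0.873, 0.909, 0.728),
    ("YOLOv9", "m", 0.912, 0.881, 0.909, 0.740),
    ("YOLOv9", "c", 0.930, 0.852, 0.915, 0.740),
    ("YOLOv9", "e", 0.916, 0.872, 0.917, 0.750),
    ("YOLOv10", "n", 0.868, 0.837, 0.888, 0.672),
    ("YOLOv10", "s", 0.922, 0.837, 0.902, 0.709),
    ("YOLOv10", "m", 0.924, 0.844, 0.903, 0.727),
    ("YOLOv10", "l", 0.935, 0.862, 0.915, 0.742),
    ("YOLOv10", "x", 0.921, 0.836, 0.912, 0.739),
    ("YOLOv11", "n", 0.897, 0.830, 0.890, 0.666),
    ("YOLOv11", "s", 0.903, 0.843, 0.905, 0.713),
    ("YOLOv11", "m", 0.896, 0.882, 0.918, 0.737),
    ("YOLOv11", "l", 0.914, 0.870, 0.916, 0.741),
    ("YOLOv11", "x", 0.927, 0.859, 0.916, 0.743),
    ("YOLOv12", "n", 0.901, 0.816, 0.890, 0.681),
    ("YOLOv12", "s", 0.887, 0.871, 0.912, 0.719),
    ("YOLOv12", "m", 0.906, 0.866, 0.916, 0.733),
    ("YOLOv12", "l", 0.921, 0.875, 0.920, 0.738),
    ("YOLOv12", "x", 0.918, 0.869, 0.918, 0.746),
    ("RT-DETR", "l", 0.927, 0.885, 0.899, 0.698),
    ("RT-DETR", "x", 0.928, 0.889, 0.895, 0.710),
]

PRECISION, RECALL, MAP50, MAP50_95 = 2, 3, 4, 5


def best(rows, metric_index):
    """Return the row with the highest value in the given metric column."""
    return max(rows, key=lambda row: row[metric_index])


yolo_only = [row for row in RESULTS if row[0].startswith("YOLO")]

best_recall_all = best(RESULTS, RECALL)
best_recall_yolo = best(yolo_only, RECALL)
best_map50_95 = best(RESULTS, MAP50_95)

print("Highest recall overall:", best_recall_all[:2], best_recall_all[RECALL])
print("Highest recall among YOLO models:", best_recall_yolo[:2], best_recall_yolo[RECALL])
print("Highest mAP@50-95:", best_map50_95[:2], best_map50_95[MAP50_95])
```

The ranking shows that the leader depends on the metric: the RT-DETR models have the highest recall but comparatively low mAP values, so a single column is not enough to choose a model.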

| Refs. | Network/Backbone | mAP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|
| Own results | YOLOv11m/C3K2 | 91.8 (baseball bat, gun, knife, machete, rifle) | 88.3 (machete); 84.4 (knife); 89.6 (baseball bat); 95.1 (rifle); 90.5 (gun) | 81.1 (machete); 84 (knife); 93.8 (baseball bat); 93.1 (rifle); 88.8 (gun) |
| [55] | YOLOv8/CSPDarknet-53 | 78 (weapon, no weapon) | 85 (weapon) | 80 (weapon) |
| [56] | YOLOv3/Darknet-53 | 98.9 (10 weapon classes) | – | – |
| [54] | YOLOv8/CSPDarknet-53 | – | 57.2 (knife) | – |
| [50] | YOLOv5s/CSPDarknet-53 | – | 81 (gun) | 99 (gun) |
| [53] | YOLOv5/CSPDarknet-53 | 95 (gun) | 98 (gun) | 87 (gun) |
| [57] | YOLOv3/Darknet-53 | – | 80 (gun) | 74 (gun) |
| [58] | YOLOv4/CSPDarknet-53 | 91.7 (gun) | 93 (gun) | 88 (gun) |
| [51] | FMR-CNN + YOLOv8/MobileNetV3 + CSPDarknet-53 | 90.1 * (pistol, revolver, rifle, hand-held firearms, gun) | 97.2 (pistol, revolver, rifle, hand-held firearms, gun) | 95 (pistol, revolver, rifle, hand-held firearms, gun) |
| [52] | YOLOv7-DarkVision/Extended-ELAN | 95.7 (gun) | 95.5 (gun) | 91.4 (gun) |
| [13] | Faster R-CNN/ResNet152 | 85 (baseball bat, gun, knife, machete, rifle) | 87.8 (baseball bat); 91.3 (gun); 80.8 (knife); 79.7 (machete); 85.3 (rifle) | 95.3 (baseball bat); 94.6 (gun); 90 (knife); 85.5 (machete); 88.5 (rifle) |
| [59] | Faster R-CNN/CNN | – | 84.7 (gun) | 86.9 (gun) |
| [60] | Faster R-CNN/CNN | 84.6 (gun, rifle) | – | – |
| [61] | Faster R-CNN/VGG-16 | – | 84.2 (gun) | 100 (gun) |
| [62] | Faster R-CNN/ResNet50 | 80.5 (axe, gun, knife, rifle, sword) | 96.6 (gun); 55.8 (knife); 100 (rifle) | 61 (gun); 52 (knife); 96 (rifle) |
| [63] | Faster R-CNN/VGG-16 | 79.8 (gun, rifle) | 80.2 (gun); 79.4 (rifle) | – |
| [64] | Faster R-CNN/Inception-ResNetV2 | – | 79.3 (gun) | 68.6 (gun) |
| [65] | Faster R-CNN/ResNet50 | – | 88.1 (gun) | 100 (gun) |
| [58] | Faster R-CNN/Inception-ResNetV2 | – | 86.4 (gun) | 89.2 (gun) |
| [66] | Faster R-CNN/SqueezeNet | – | 85.4 (gun) | – |
| [66] | Faster R-CNN/GoogleNet | – | 46.7 (knife) | – |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Omiotek, Z. Effectiveness of Modern Models Belonging to the YOLO and Vision Transformer Architectures in Dangerous Items Detection. Electronics 2025, 14, 3540. https://doi.org/10.3390/electronics14173540
