1. Introduction

The proliferation of Internet of Things (IoT) devices has created a growing demand for intelligent systems capable of performing real-time data analysis directly at the edge. Conventional approaches, which transmit raw sensor data to remote servers or cloud infrastructures for processing, suffer from several critical limitations. First, the need for constant connectivity introduces latency, which can degrade real-time responsiveness: round-trip delays for cloud inference typically range from 100 milliseconds to several seconds, depending on network congestion and physical distance from data centers. Second, the wireless transmission of continuous sensor data (e.g., at rates of 1–10 KB/s per device) rapidly drains the battery, with communication alone accounting for up to 80% of total energy consumption in typical battery-powered systems. Third, cloud-based processing raises privacy and data security concerns, particularly in scenarios involving sensitive behavioral or biometric information. Finally, network availability and bandwidth constraints limit the scalability and reliability of cloud-dependent systems, particularly in remote or infrastructure-poor environments.

The field of Tiny Machine Learning (TinyML) has emerged to address these challenges by deploying lightweight machine learning models on ultra-low-power microcontrollers [1,2]. These devices typically consume less than 1 mW of power during inference, a critical consideration in the development of energy-efficient machine learning systems. For instance, a model that has been optimized for efficiency and executed on a Cortex-M4 MCU can perform inference in under 10 milliseconds while using less than 100 KB of memory. By shifting computation from the cloud to the edge, TinyML facilitates real-time decision making, substantially reduces communication overhead, and enables systems to function autonomously, even in the absence of continuous connectivity. This makes it especially well suited to pervasive, distributed sensing applications where responsiveness, energy efficiency, and privacy are critical design constraints.

In this work, we propose a lightweight edge-based system that detects human motion patterns such as entering, exiting, approaching, or leaving a doorway, using only a single Time-of-Flight (ToF) sensor. The sensing unit’s core is the TDK InvenSense ICU-20201, a miniature ToF sensor that measures the distance to nearby objects by analyzing the round-trip time of a modulated infrared signal. The sensor, when installed in an elevated position above a gate or doorway, is able to capture the distance of a person over time. This provides a temporal profile that reflects movement direction and behavior.

To preserve mobility and reduce wiring complexity, Bluetooth Low Energy (BLE) is adopted as the communication protocol between the sensor and the processing unit. BLE provides low-power, short-range wireless communication, which makes it well suited to battery-operated or intermittently powered devices.

For the processing unit, the Arduino Nano 33 IoT microcontroller board was selected, as it offers integrated Bluetooth and Wi-Fi connectivity together with sufficient resources to run inference using LiteRT for Microcontrollers. The board is a pragmatic choice for real-world deployments owing to its compact size, low cost, and support for secure IoT development.

By training a small, efficient ML model on labeled distance sequences and deploying it to the Arduino board, we demonstrate that it is possible to accurately classify user movement at the edge, without relying on cloud infrastructure or external computation. Our approach is fully self-contained, scalable, and well suited to smart building applications such as occupancy detection, automated access control, or elderly monitoring. In particular, the proposed work is part of a project related to the development of smart domotic applications. Indeed, detecting the presence of a person inside a room enables the smart management of features like lighting, heating or cooling. The system proposed in this work takes a novel approach, leveraging a ToF sensor coupled with a TinyML model deployed on an ultra-low-power microcontroller to classify movement patterns with high temporal resolution. Unlike conventional binary motion detectors, our solution can detect presence and discern the nature and direction of movement, such as entering, exiting or approaching without crossing. This enables richer automation logic in intelligent environments. The combination of directional awareness, real-time processing and a fully embedded architecture makes this method particularly innovative, making it well suited to next-generation domotic applications that require precision, autonomy and minimal invasiveness.

This paper is organized as follows. Section 2 summarizes the related works, while Section 3 describes the adopted methodology. In particular, it describes the general concepts of the system, the features of the adopted sensors and the dataset acquisition and labelling. Moreover, it describes the wireless transmission and the design and deployment of the TinyML model. Section 4 details the experimental results and compares them with the state of the art. Finally, Section 5 concludes this paper.

2. Related Works

The convergence of embedded sensing and machine learning has given rise to a growing body of research in the field of low-power, on-device intelligence. The application of TinyML has seen a marked increase in recent years, with the technology being used to solve problems in a variety of fields. These include the identification of keywords [3], the recognition of gestures [4], the identification of anomalies in industrial environments [5], and the recognition of human activity [6]. These applications characteristically depend on lightweight models, frequently neural networks or decision trees, operating on microcontrollers with constrained memory and processing capacity.

In the field of human movement detection, a range of sensing modalities have been investigated, each offering distinct advantages and limitations. Passive infrared (PIR) sensors are utilized extensively due to their cost-effectiveness, minimal power consumption, and simplicity. PIR sensors are capable of detecting changes in infrared radiation, thus enabling effective basic presence detection. It has been established that the amount of current typically consumed by these devices is between 1 and 6 microamperes when operating in ultra-low-power modes [7]. However, the technology is subject to certain limitations, including low spatial resolution and inability to determine movement direction or distance [8]. This can result in false positives in complex environments. Radar-based systems, including Frequency-Modulated Continuous Wave (FMCW) and Millimeter-Wave (mmWave) radars, have been shown to offer superior sensitivity and the capability to detect fine-grained motion, including micro-gestures and velocity estimation. These systems function effectively under various lighting and environmental conditions and can penetrate obstacles such as walls. Recent advancements resulted in the development of radar sensors that exhibit a power consumption of as low as 80 μW. However, it should be noted that radar modules are generally more expensive and involve complex signal processing, which makes them less suitable for ultra-low-power, cost-constrained applications [9].

Camera-based solutions [10] can detect posture, count people, and infer direction, but raise significant privacy concerns and typically demand high computational power, limiting their applicability in embedded or battery-powered setups. Recently, ToF sensors have emerged as an attractive alternative due to their compact size, privacy-preserving nature, and ability to return accurate distance profiles at low power.

Some works have investigated ToF-based activity detection in smart environments [11], yet most rely on cloud-based processing or larger edge devices such as Raspberry Pi. In contrast, our approach performs on-device classification using a low-power microcontroller with BLE communication, achieving full autonomy without external computation. Other recent studies in the TinyML domain, such as [12,13], have shown promising results using IMUs or microphones, but do not address the specific task of doorway event classification with directionality.

ToF sensors have been demonstrated to provide precise distance measurements with relatively low power consumption and a compact form factor. ToF sensors determine the direct distance of an object by measuring the time it takes for a modulated light signal to travel to the object and back. For example, the TDK InvenSense ICU-20201 draws a current of 26 μA at 1 sample per second for a 5 m range and up to 296 μA at 25 samples per second. It offers a customizable field of view up to 180°, operates effectively in any lighting condition, and provides accurate range measurements up to 5 m. In comparison with PIR sensors, ToF sensors provide more comprehensive spatial information, facilitating the distinction between entering and exiting movements and the tracking of approach trajectories. Despite their lack of penetration ability when compared with radar, and their sensitivity to environmental factors such as strong ambient light, the affordability, ease of integration, and high sampling rates of ToF sensors make them a compelling choice for indoor human detection tasks. As demonstrated in previous studies, ToF technology has proven effective in detecting occupancy and classifying movement. However, the majority of implementations continue to rely on cloud-based post-processing or are deployed on more powerful edge devices rather than on ultra-constrained microcontrollers. Indeed, conventional human activity detection systems frequently rely on the continuous transmission of raw sensor data to the cloud for processing. Whilst this architecture facilitates complex inference on high-performance servers, it suffers from numerous well-documented disadvantages. These include communication latency, elevated energy consumption due to frequent wireless transmission, network dependency, and substantial privacy concerns, particularly in personal or clinical contexts [14,15,16,17]. Recent studies have shown that edge AI and TinyML can mitigate these limitations by enabling local decision making directly on microcontrollers or embedded platforms [13,18,19]. This paradigm shift facilitates real-time responses, reduced data exposure and improved energy efficiency. These factors are of critical importance for battery-powered, autonomous systems such as smart doorways and occupancy-aware environments.

The utilization of Bluetooth-based sensing systems has been proposed for applications necessitating wireless communication with minimal energy expenditure. For instance, BLE has been used in occupancy monitoring [20] and distributed sensor networks [21], often in conjunction with gateway nodes or edge hubs. However, there is a paucity of studies that have explored the direct integration of BLE communication and ML inference within the same ultra-low-power platform.

In recent years, the practical deployment of ML models on boards such as the Arduino Nano 33 IoT and STM32 has been enabled by frameworks such as LiteRT (https://ai.google.dev/edge/litert/microcontrollers/overview accessed on May 2025) and Edge Impulse (https://edgeimpulse.com/ accessed on 22 May 2025). These tools facilitate the connection between model design and real-world implementation, though case studies utilizing them for single-sensor, door-based people detection remain restricted.

To the best of our knowledge, this work is one of the first to take a fully integrated approach, combining a compact ToF sensor mounted above a doorway with BLE-based communication and real-time TinyML inference directly on a microcontroller. Indeed, we found only some industrial demos working with two sensors to detect if a person enters or exits a room (https://sadel.it/index.php/2023/08/01/people-counting/ accessed on 22 May 2025).

Exploiting the spatial precision of the ToF sensor enables the system to capture detailed distance profiles of individuals moving through the monitored area. This allows it to accurately discriminate between motion patterns such as entering, exiting, approaching and moving away from the entrance. A key advantage of this architecture is its ability to perform all data processing locally on the microcontroller using an ultra-lightweight machine learning model, thus eliminating the need for external computation or cloud-based services.

This fully embedded design ensures real-time responsiveness and offers robustness and resilience to network failures, privacy concerns and power interruptions. The combination of BLE communication and efficient inference ensures minimal energy consumption, making the system ideal for battery-operated or intermittently powered setups. Furthermore, the solution is designed to be cost-effective, relying on off-the-shelf components and straightforward installation procedures to facilitate scalable deployment in smart buildings, access control systems, and energy-efficient IoT environments. By combining precision sensing, low-power wireless communication and edge-based intelligence, this work provides a practical and reliable framework for next-generation human presence detection.

To the best of our knowledge, our work is the first to combine:

Direction-aware classification (entering, exiting, approaching&leaving),

A single overhead-mounted ToF sensor,

Real-time, fully embedded inference on a low-power microcontroller, and

BLE-based result transmission within a compact and privacy-preserving system.

3. Materials and Methods

3.1. System Overview

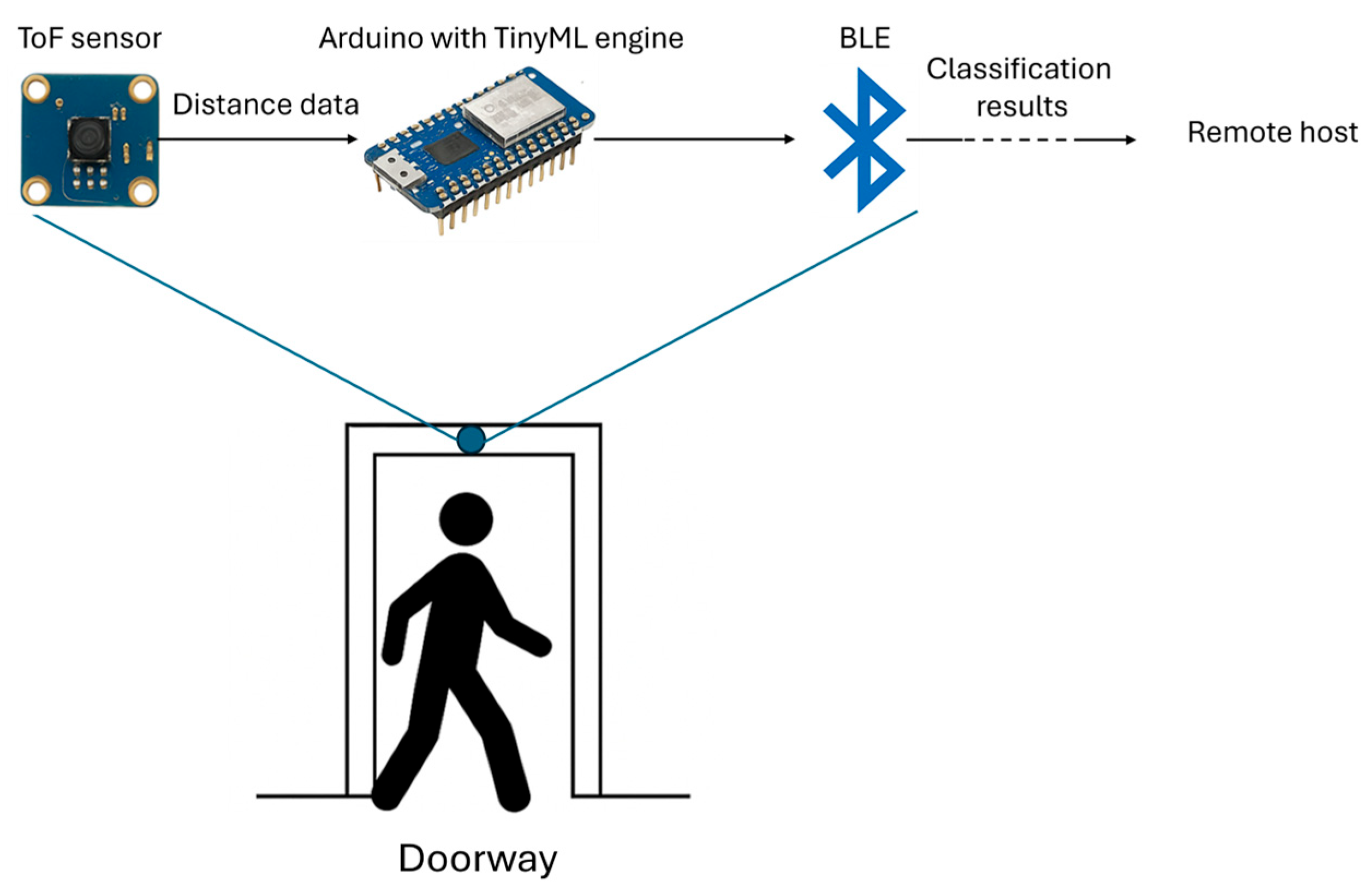

The proposed system is designed as a fully embedded, low-power solution for real-time detection and classification of human movement events near a doorway. The system is comprised of three closely integrated components: a ToF sensing unit, a microcontroller running a TinyML model, and a BLE communication module. It is evident that the amalgamation of these components gives rise to an end-to-end pipeline that is capable of capturing raw distance data, processing it locally, and transmitting actionable classification results to a host device. A notable aspect of this process is its independence from external computing resources or cloud connectivity, thereby ensuring a high degree of autonomy and security.

The sensing unit comprises a TDK InvenSense ICU-20201 ToF sensor, which is mounted above the doorway to continuously monitor the distance profile beneath it. As already discussed in Section 2, this sensor is suitable for ultra-low-power applications. The sensor is directly connected to the Arduino Nano 33 IoT microcontroller, which functions as the system’s processing node. The microcontroller receives the raw distance measurements from the ToF sensor, preprocesses the data, and feeds it into an embedded machine learning model implemented using LiteRT for Microcontrollers (formerly TensorFlow Lite for Microcontrollers). This lightweight model performs real-time inference to classify motion events into three predefined categories: “entering”, “exiting”, and “approaching&leaving”.

Subsequent to the completion of classification, the results are transmitted over a BLE link to a host device or central server, where they can be logged, visualized, or used to trigger higher-level actions, such as opening doors or updating occupancy records. The system’s local execution of sensing and inference operations ensures privacy preservation, minimizes latency, and significantly reduces the volume of data that must be transmitted wirelessly, thus conserving energy.

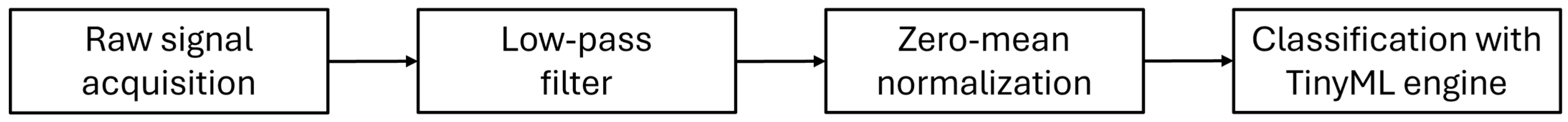

Figure 1 shows a general overview of the proposed system. The signal pipeline begins with the acquisition of raw distance values from the ToF sensor sampled at 25 Hz. Each data window consists of 100 consecutive samples (i.e., a 4 s sequence), which is then pre-processed prior to model inference. Initially, the signal is smoothed using a low-pass filter (window length = 11, polynomial order = 2) to reduce high-frequency noise whilst preserving movement trends. The filtered signal is then normalized to zero-mean and unit variance using:

$$\tilde{x}_i = \frac{x_i - \mu}{\sigma}$$

where $x_i$ is the $i$-th filtered sample, and $\mu$ and $\sigma$ are the mean and standard deviation computed over the 100-sample input window, respectively. No additional feature extraction or hand-engineered descriptors are used; the normalized 1D sequence is directly fed to the TinyML engine.
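The two pre-processing steps can be sketched in a few lines of Python. This is a minimal illustration, not the firmware implementation: it assumes that the filter with window length 11 and polynomial order 2 is a Savitzky-Golay smoother (the text does not name it), that window edges are handled by replicating the first and last samples, and that the population standard deviation is used. The function names `smooth`, `normalize` and `preprocess` are hypothetical.

```python
import statistics

# Standard 11-point quadratic Savitzky-Golay smoothing coefficients (they sum to 1).
# Assumption: the "window length = 11, polynomial order = 2" filter is of this type.
SG_COEFFS = [c / 429 for c in (-36, 9, 44, 69, 84, 89, 84, 69, 44, 9, -36)]


def smooth(window):
    """Smooth a sequence with the 11-point filter, replicating edge samples."""
    half = len(SG_COEFFS) // 2
    padded = [window[0]] * half + list(window) + [window[-1]] * half
    return [
        sum(c * padded[i + k] for k, c in enumerate(SG_COEFFS))
        for i in range(len(window))
    ]


def normalize(window):
    """Scale a window to zero mean and unit variance."""
    mu = statistics.fmean(window)
    sigma = statistics.pstdev(window)  # assumption: population standard deviation
    if sigma == 0:  # flat signal: avoid division by zero
        return [0.0] * len(window)
    return [(x - mu) / sigma for x in window]


def preprocess(window):
    """Smoothing followed by per-window normalization."""
    return normalize(smooth(window))


# Example: a synthetic 100-sample window in which the distance decreases steadily.
example = preprocess([2000.0 - 10.0 * i for i in range(100)])
```

Because the statistics are computed per window, the model sees the shape of the distance profile rather than its absolute scale, which is consistent with feeding the normalized sequence directly to the classifier.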

Figure 2 shows a flow chart of the signal pipeline.

The subsequent subsections provide a comprehensive description of each component, encompassing the sensor hardware, the dataset acquisition process for model training, the BLE communication framework, and the design and deployment of the TinyML inference model.

3.2. The ICU-20201 Sensor

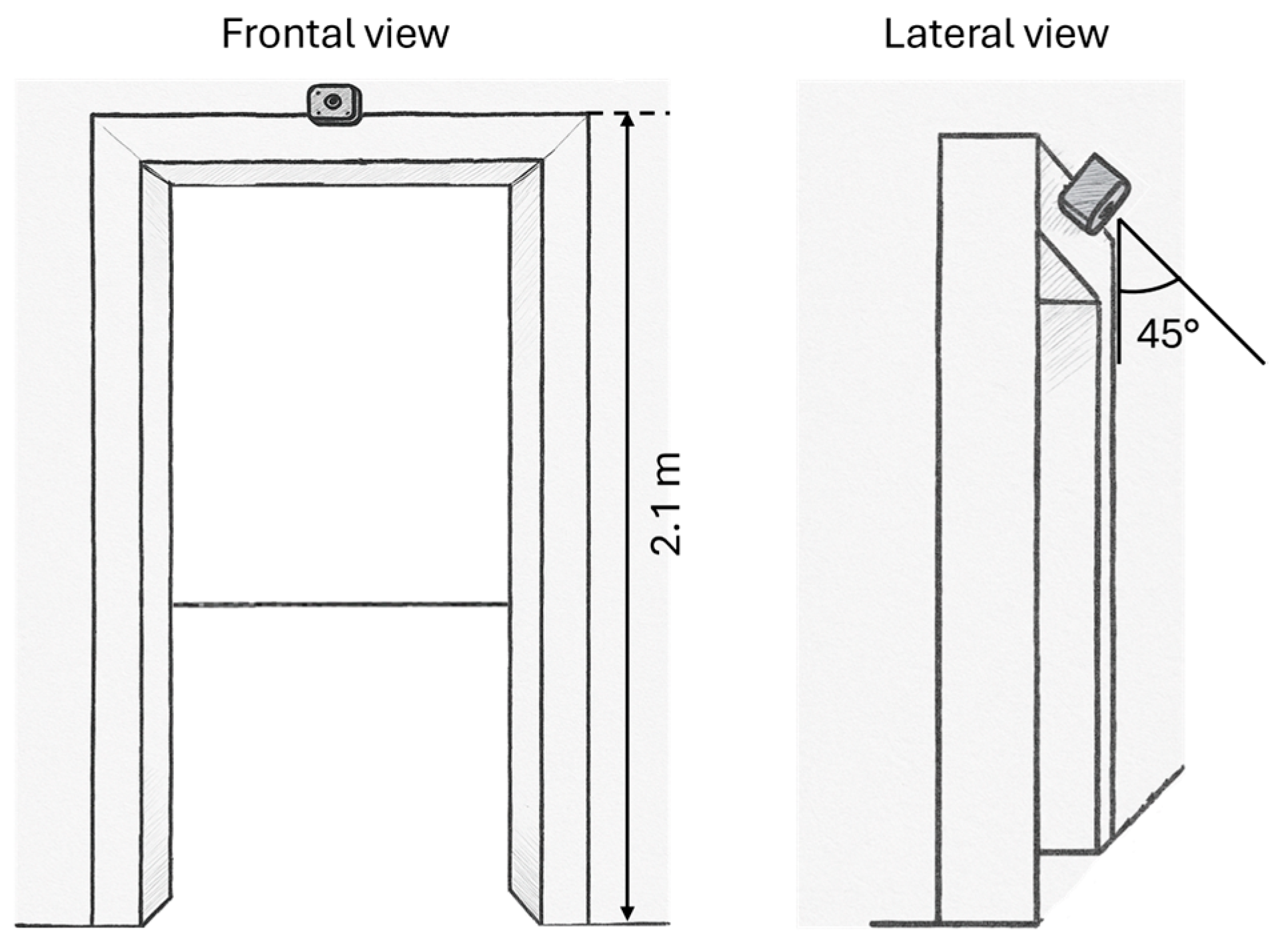

The sensing unit at the core of the system is the TDK InvenSense ICU-20201, a compact and low-power ToF sensor designed for precise distance measurement. The sensor emits modulated infrared light and measures the time taken for the reflected signal to return. This process provides accurate range information for objects within its field of view. The ICU-20201 is a particularly well-suited device for doorway monitoring applications, where tracking the position and movement of individuals over time is essential. It offers a configurable range of up to 5 m and a customizable field of view of up to 180°.

In the configuration under consideration, the sensor was mounted directly above the doorway, at a height of 2.1 m. In order to maximize coverage of the entrance area, the sensor was angled downward at 45°, thus providing an optimal view of individuals approaching or passing through the gate. The sensor was configured to operate at its maximum field of view of 180°, thus ensuring that lateral movements near the edges of the doorway were also captured. The configuration of the sensor in relation to the doorway is illustrated in Figure 3. The sampling rate was adjusted between 5 Hz and 25 Hz, depending on the desired trade-off between resolution and power consumption. The typical current consumption ranged from 26 μA (at 1 sample per second) to 296 μA (at 25 samples per second). These characteristics make the ICU-20201 a particularly attractive component for battery-powered or energy-constrained deployments.

The sensor collects distance data to form temporal profiles of human movement as individuals approach, pass through or move away from the monitored area. These time-series profiles capture key features such as the rate of distance change, the approach or retreat pattern, and the duration within the sensor’s range. All of this information is useful for classifying the type of motion event. The raw sensor readings are transmitted directly to the Arduino Nano 33 IoT microcontroller via a wired SPI interface, where they are preprocessed and passed to the TinyML inference engine.

3.3. Data Acquisition and Labelling

The TinyML classification model was trained and validated on a dedicated dataset acquired with the complete sensing setup described above. The dataset focuses on realistic human movement patterns near the doorway, covering a range of motion events relevant to access monitoring applications.

Fifteen participants (9 male, 6 female) with heights ranging from 1.55 m to 1.90 m were recruited to ensure diversity in body size and walking style. Each participant completed five trials for each of the three target motion classes, resulting in a total of 225 recorded sequences (15 participants × 5 trials × 3 classes). The three motion classes have been meticulously designed to mirror prevalent door-interaction scenarios:

entering: the participant approached and passed through the doorway, entering the space;

exiting: the participant started inside the room, walked toward the doorway and exited;

approaching&leaving: the participant walked toward the doorway but stopped before crossing, then turned and left, simulating hesitation or aborted entry.

Each acquisition trial was of a duration of approximately 4 s, during which the sensor continuously sampled distance readings at a frequency of 25 Hz. The resulting time-series data captured the dynamic distance profiles associated with each motion event, including approach speed, transition patterns, and departure behavior [22].

The labelling of all recordings was conducted manually, based on operator observations, to ensure the accuracy of the ground truth. The labelled dataset was subsequently segmented into individual sequences and normalized to fit the machine learning model’s input requirements. It is important to note that meticulous care was taken to ensure an equitable distribution of samples across the various classes, thereby averting the introduction of class imbalance during the training process.

3.4. Wireless Transmission via BLE

Upon completion of the local inference on the incoming sensor data by the Arduino Nano 33 IoT, the system is required to communicate the classification results to an external host device for logging, visualization, or integration with higher-level applications such as building automation or access control systems. In order to complete this task, a BLE communication layer was implemented, selected on the basis of its low power consumption, simplicity, and widespread compatibility with modern devices.

BLE is particularly well suited to embedded and battery-powered applications, as it offers a power-efficient alternative to classic Bluetooth and Wi-Fi. It is noteworthy that BLE is able to maintain sufficient bandwidth and low latency for transmitting small payloads, such as classification results. In the proposed system, solely the final inference outcome (i.e., the predicted class label) is transmitted over BLE, thereby significantly reducing the volume of data transferred in comparison to the transmission of raw sensor measurements. This design choice further contributes to minimizing power consumption and extending operational lifetime in scenarios where energy constraints are critical.

The BLE module on the Arduino was configured to operate as a peripheral device, with the module advertising its presence and establishing connections with a central host (such as a computer, smartphone, or gateway). Once connected, the Arduino transmits classification results in near real time, typically within tens of milliseconds of local inference completion. This low-latency communication ensures that downstream systems can respond promptly to detected events, such as updating occupancy databases or triggering automated actions.

3.5. TinyML Model Design and Deployment

In order to implement on-device classification of human motion events, a range of machine learning models supported by LiteRT (formerly TensorFlow Lite) for Microcontrollers were explored. LiteRT is an ultra-lightweight inference engine designed for microcontrollers with highly constrained memory and compute resources. In view of the fact that the task at hand concerns the analysis of time-series data (i.e., sequences of distance measurements), the focus was directed toward model architectures capable of capturing temporal patterns while maintaining sufficient efficiency to accommodate the memory constraints of the Arduino Nano 33 IoT, which possesses a mere 256 KB of flash memory and 32 KB of SRAM.

In the initial phase of the project, the utilization of fully connected neural networks (i.e., multi-layer perceptrons) was considered. These networks are characterized by their ability to process flattened input sequences through dense layers. Despite their simplicity and lightweight nature, such architectures frequently prove insufficient for the capture of temporal dependencies, necessitating the incorporation of extensive feature engineering, a process which we sought to circumvent. Recurrent neural networks (RNNs), particularly architectures such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs), were also evaluated, as they are well known for modelling time dependencies. However, these models are characterized by a high degree of parameterization and computational complexity, which hinders their deployment on microcontrollers without significant pruning or quantization. Finally, the focus was directed toward one-dimensional convolutional neural networks (1D CNNs), which implement temporal filters over the time axis, thereby enabling the automatic detection of local patterns (such as sudden distance changes or sustained approach phases) with high efficiency. This architecture has proven highly effective in embedded applications, offering an excellent balance between accuracy, computational cost, and memory use.

In the considered case, the input is a one-dimensional signal, denoted by $x$, which represents the distance measurements captured over time and is structured as a sequence of 100 samples (i.e., 4 s of acquisitions at a sampling rate of 25 Hz).

The convolution operation is the heart of the CNN. It involves applying a set of learnable filters (kernels) to the input sequence in order to extract local features. Mathematically, convolution at position $j$ for the $i$-th filter can be expressed as follows:

$$y_j^{(i)} = f\left(\sum_{k=0}^{K-1} w_k^{(i)}\, x_{j+k} + b^{(i)}\right)$$

where $x_{j+k}$ is the input at position $j+k$, $w_k^{(i)}$ is the weight of the $k$-th element of the $i$-th filter, $b^{(i)}$ is the bias of the $i$-th filter, $K$ is the kernel size and $f$ is the activation function. In this work, we adopted the ReLU activation function, which is defined as:

$$f(u) = \max(0, u)$$

After convolution, a pooling operation is applied to reduce the temporal dimension and enhance the robustness of the features. In the case of maximum pooling, the operation is defined as follows:

$$p_j^{(i)} = \max_{0 \le m < P} \, y_{jS+m}^{(i)}$$

where $P$ is the pool size, $S$ is the stride and $p_j^{(i)}$ is the pool output at position $j$ for the $i$-th filter.

After the convolutional and pooling layers, the resulting feature maps are flattened into a single vector $v$, which is passed to a fully connected layer, defined as:

$$z_c = \sum_{n=1}^{N} W_{c,n}\, v_n + b_c$$

where $W_{c,n}$ are the weights of the layer, $b_c$ are the biases and $N$ is the length of the flattened vector.

The final fully connected layer applies a softmax function to convert the raw scores into class probabilities, according to the following equation:

$$\hat{y}_c = \frac{e^{z_c}}{\sum_{c'=1}^{C} e^{z_{c'}}}$$

where $C$ is the number of classes (3 in the considered case) and $\hat{y}_c$ is the predicted probability for class $c$. The predicted class is determined by selecting the index $c$ with the highest probability.
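The layer operations described above can be illustrated with a small pure-Python sketch. It is meant only to make the definitions concrete for a single filter and a toy input; the function names and the numeric values are hypothetical and are not taken from the trained model.

```python
import math


def relu(u):
    """ReLU activation: max(0, u)."""
    return max(0.0, u)


def conv1d(x, weights, bias):
    """1D convolution of x with one filter (no padding, stride 1), then ReLU."""
    K = len(weights)
    return [
        relu(sum(weights[k] * x[j + k] for k in range(K)) + bias)
        for j in range(len(x) - K + 1)
    ]


def max_pool1d(y, pool_size, stride):
    """Maximum over windows of pool_size samples taken every stride samples."""
    return [
        max(y[j:j + pool_size])
        for j in range(0, len(y) - pool_size + 1, stride)
    ]


def dense(v, W, b):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * vn for w, vn in zip(row, v)) + bc for row, bc in zip(W, b)]


def softmax(z):
    """Softmax with the maximum subtracted for numerical stability."""
    m = max(z)
    exps = [math.exp(zc - m) for zc in z]
    total = sum(exps)
    return [e / total for e in exps]


# Toy example (illustrative values only).
x = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0]
y = conv1d(x, weights=[1.0, 0.0, -1.0], bias=0.0)
p = max_pool1d(y, pool_size=2, stride=2)
z = dense(p, W=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], b=[0.0, 0.5, 0.0])
probs = softmax(z)
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

In the real network each convolutional layer applies several such filters in parallel, and the second convolution sums over all channels produced by the first.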

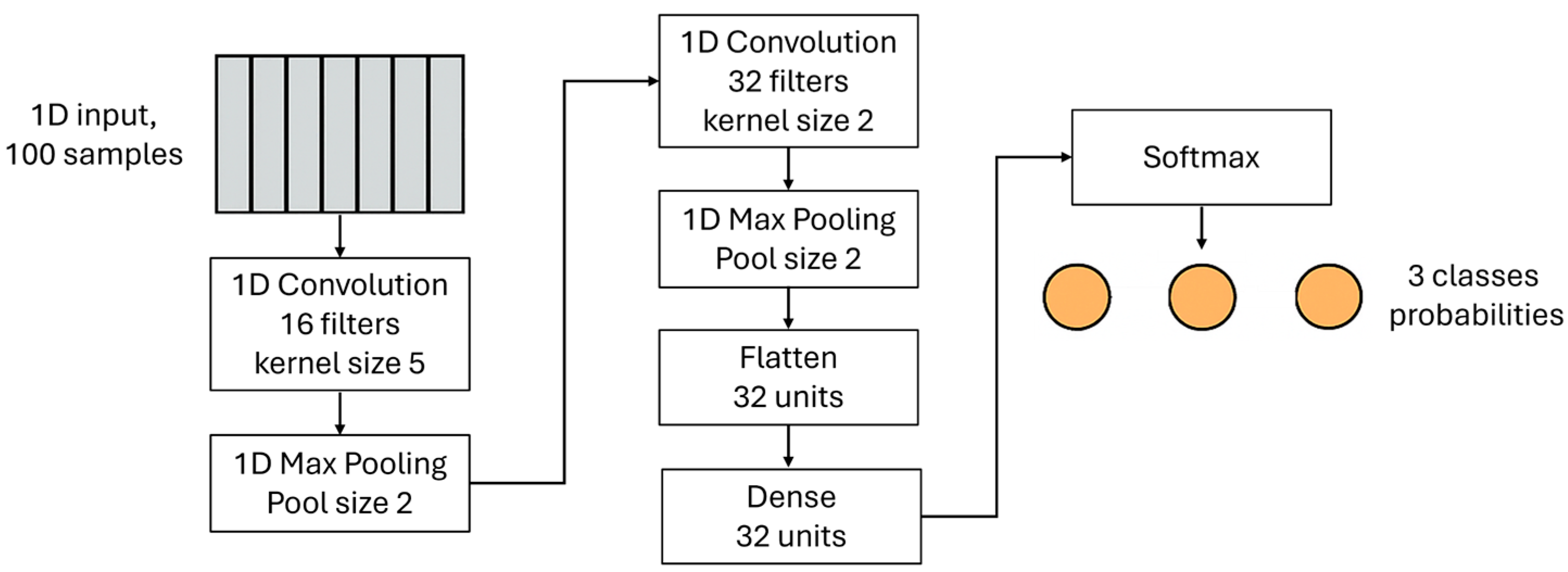

Based on an ablation study described in the following, we selected a 1D CNN architecture as the best trade-off between accuracy, latency, and memory efficiency. The final network adopted for on-device inference is a compact 1D CNN tailored to time-series sequences of 100 distance samples. The model begins with a one-dimensional convolutional layer with 16 filters, a kernel size of 5 and a stride of 1, followed by a ReLU activation function to introduce non-linearity. A one-dimensional max pooling layer with a pool size of 2 then reduces the temporal resolution while preserving the most salient features. A second convolutional layer follows, this time with 32 filters and a smaller kernel size of 3, again using ReLU as the activation function. A second max pooling layer, also with a pool size of 2, further compresses the feature representation. The resulting feature maps are flattened into a single vector of 736 elements, which is then passed to a fully connected layer comprising 32 neurons with ReLU activation. Finally, the network concludes with an output layer of three neurons, corresponding to the motion classes (entering, exiting, and approaching&leaving), which applies a softmax activation to generate class probabilities. The total number of layers, inclusive of convolutional, pooling, dense, and output layers, is seven.

Figure 4 summarizes the architecture.
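The stated layer sizes can be cross-checked with simple shape arithmetic. The sketch below assumes convolutions without padding and non-overlapping pooling (pool stride equal to pool size); these settings are not stated explicitly in the text, but they reproduce the reported flattened length of 736. The parameter count is derived here from the layer sizes and is not a figure reported in the paper.

```python
def conv_out_len(n, kernel, stride=1):
    """Output length of a 1D convolution without padding."""
    return (n - kernel) // stride + 1


def pool_out_len(n, pool):
    """Output length of non-overlapping max pooling."""
    return n // pool


n = 100                          # input samples (4 s at 25 Hz)
n = conv_out_len(n, kernel=5)    # first convolution, 16 filters: 96 steps
n = pool_out_len(n, pool=2)      # first pooling: 48 steps
n = conv_out_len(n, kernel=3)    # second convolution, 32 filters: 46 steps
n = pool_out_len(n, pool=2)      # second pooling: 23 steps
flat = n * 32                    # flattened vector: 23 steps x 32 channels

# Weights plus biases per trainable layer.
params = {
    "conv1": 5 * 1 * 16 + 16,
    "conv2": 3 * 16 * 32 + 32,
    "dense": flat * 32 + 32,
    "output": 32 * 3 + 3,
}
total_params = sum(params.values())
print(flat, total_params)
```

Under these assumptions the network has roughly 25 thousand parameters, most of them in the first dense layer, so an 8-bit model would need on the order of 25 KB for its weights before any file-format overhead.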

The deployment of the trained model onto the Arduino Nano 33 IoT followed a structured and efficient workflow. Initially, the model was trained offline using TensorFlow in a desktop environment, employing the previously described labelled dataset. Following the attainment of a satisfactory performance level, the model underwent full 8-bit integer quantization. This step resulted in a substantial reduction in the memory footprint by compressing both the weights and activations, making the model suitable for execution on a microcontroller with highly limited resources.
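To make the effect of 8-bit quantization concrete, the following sketch shows the affine mapping between real values and 8-bit integers that underlies this kind of scheme, where a real value is approximated as `scale * (q - zero_point)`. It is a simplified, per-tensor illustration with hypothetical function names; in practice the conversion tool derives the scale and zero point for each tensor from calibration data.

```python
def quant_params(x_min, x_max, q_min=-128, q_max=127):
    """Scale and zero point that map [x_min, x_max] onto the int8 range."""
    # The representable range must include 0 so that zero is encoded exactly.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (q_max - q_min)
    if scale == 0.0:  # degenerate all-zero range
        scale = 1.0
    zero_point = round(q_min - x_min / scale)
    return scale, zero_point


def quantize(x, scale, zero_point, q_min=-128, q_max=127):
    """Map a real value to an 8-bit integer, clamping to the valid range."""
    q = round(x / scale) + zero_point
    return max(q_min, min(q_max, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value from its 8-bit representation."""
    return scale * (q - zero_point)


# Example: activations assumed to lie in [-1, 3] (illustrative range).
scale, zero_point = quant_params(-1.0, 3.0)
q = quantize(0.7, scale, zero_point)
approx = dequantize(q, scale, zero_point)
```

For values inside the calibrated range the round-trip error is bounded by half the scale, which is consistent with a small accuracy change after quantization.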

After the process of quantization, the model was exported in the TensorFlow Lite format (.tflite), which is compatible with the LiteRT runtime. The lightweight format facilitates efficient execution on devices devoid of operating systems or floating-point units. The .tflite model was then compiled into the Arduino firmware using the LiteRT C++ API and integrated with the application logic. A statically allocated memory buffer, known as the tensor arena, was reserved in SRAM to accommodate the model’s input, output, and intermediate activation data. The buffer was dimensioned to approximately 12 KB, ensuring stable inference while allowing for sufficient memory for BLE communication and system management.

At runtime, the Arduino continuously acquires incoming distance measurements from the ToF sensor and buffers them into overlapping sequences of 100 samples. Each sequence is then subjected to normalization and subsequently passed to the LiteRT interpreter for inference. The classification result, indicating one of the three motion categories, is obtained and is then transmitted over BLE to the host device.
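The buffering into overlapping sequences can be sketched as a sliding window over the sample stream. The example below is written in Python for readability rather than as firmware code, and the hop of 25 samples between consecutive windows is an assumption, since the amount of overlap is not specified in the text.

```python
from collections import deque

WINDOW = 100  # samples per inference window (4 s at 25 Hz)
HOP = 25      # assumed hop between windows (1 s); not stated in the paper


def sliding_windows(samples, window=WINDOW, hop=HOP):
    """Yield overlapping windows from a stream of distance samples."""
    buf = deque(maxlen=window)   # oldest samples are dropped automatically
    since_last = 0
    for s in samples:
        buf.append(s)
        since_last += 1
        # Emit once the buffer is full and at least `hop` new samples arrived.
        if len(buf) == window and since_last >= hop:
            since_last = 0
            yield list(buf)


# Example: 200 consecutive sample indices stand in for distance readings.
windows = list(sliding_windows(range(200)))
```

A smaller hop gives faster reaction to an event at the cost of more inferences per second, so the value would be chosen against the latency and energy budget.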

4. Experimental Results

In order to evaluate the effectiveness and deployability of the proposed TinyML-based system for doorway-related human motion classification, an extensive set of experiments was conducted. These experiments covered both model performance and embedded system benchmarks. The objectives of this study were twofold: first, to validate the classification accuracy across realistic movement patterns, and second, to assess the feasibility of running the model on the Arduino Nano 33 IoT with respect to inference latency, memory usage, and energy consumption.

In order to assess the generalization capabilities of the trained models, two distinct evaluation strategies were adopted. In the initial configuration, a conventional 70–30 (150 records for the training and 75 for the test) random division of the dataset was employed, thereby ensuring the representation of data from all participants in both the training and test sets. This approach is based on simulating a scenario in which the system has previously encountered motion patterns from the same individuals. This makes the system suitable for use cases involving personalization or semi-supervised updates. In the second setup, a leave-one-subject-out (LOSO) cross-validation scheme was employed. In this particular instance, the model was trained on data from 14 participants and tested on the remaining unseen subject, with this process being repeated 15 times in total, once for each participant. This evaluation strategy emulates real-world conditions in which the system is tested with individuals never seen before, thereby facilitating the achievement of a more stringent and generalizable performance estimate.
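The LOSO protocol can be sketched as follows. The record layout (subject identifier, label, sequence) and the function name are hypothetical, and the placeholder dataset only mirrors the structure described in Section 3.3 (15 participants, 3 classes, 5 trials each), not the actual recordings.

```python
def loso_folds(records):
    """Yield (held_out_subject, train, test) for leave-one-subject-out validation.

    records: list of (subject_id, label, sequence) tuples.
    """
    subjects = sorted({subject for subject, _, _ in records})
    for held_out in subjects:
        train = [r for r in records if r[0] != held_out]
        test = [r for r in records if r[0] == held_out]
        yield held_out, train, test


# Placeholder dataset with the same structure as the one described in the paper.
CLASSES = ("entering", "exiting", "approaching&leaving")
records = [
    (subject, label, None)  # None stands in for the 100-sample sequence
    for subject in range(15)
    for label in CLASSES
    for _ in range(5)
]
folds = list(loso_folds(records))
```

Each of the 15 folds trains on 210 sequences and tests on the 15 sequences of the held-out participant, so no individual contributes to both sets within a fold.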

The two evaluation strategies were applied to all the models evaluated during the initial phase of this study, namely a Multi-Layer Perceptron (MLP), a GRU, and a 1D-CNN.

Table 1 reports the results of this preliminary evaluation, which showed that the 1D-CNN model outperforms the others. Therefore, the remainder of this paper addresses only the evaluation of the 1D-CNN model.

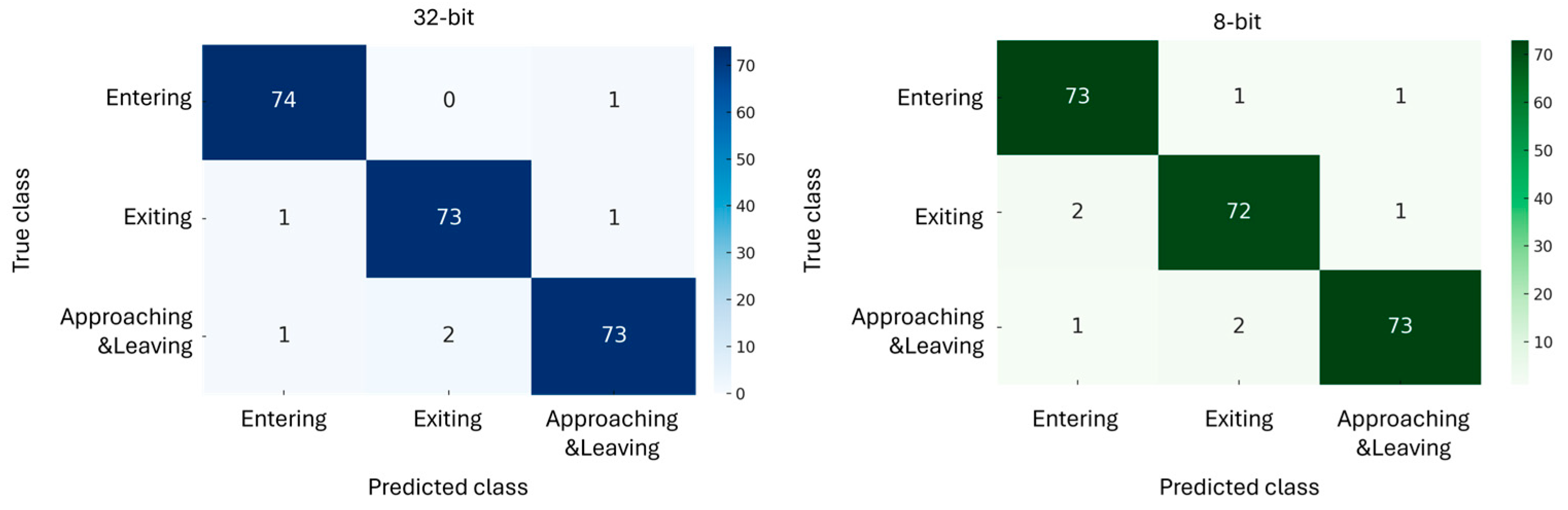

The IEEE 754 single precision floating point (32-bit) and fully quantized (8-bit) versions of the model were evaluated under the two training protocols. For each case, the following metrics were computed: overall accuracy, precision, recall, F1-score, and confusion matrices.
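
As a reminder of how these metrics follow from a confusion matrix, the sketch below computes them for a three-class problem. The matrix values are made up purely for illustration and are not the results reported in this paper; the function name is hypothetical, and rows are assumed to hold true classes while columns hold predicted classes.

```python
from typing import Dict, List, Tuple


def per_class_metrics(cm: List[List[int]]) -> Tuple[float, List[Dict[str, float]]]:
    """Return overall accuracy and per-class precision, recall, and F1.

    cm[r][c] counts samples of true class r predicted as class c.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    metrics = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, actually other
        fn = sum(cm[c]) - tp                       # actually c, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append({"precision": precision, "recall": recall, "f1": f1})
    return accuracy, metrics


if __name__ == "__main__":
    # Hypothetical counts; the third class name is not given in this section.
    labels = ["entering", "exiting", "third class"]
    example_cm = [[24, 1, 0],
                  [1, 23, 1],
                  [0, 1, 24]]
    acc, per_class = per_class_metrics(example_cm)
    print(f"accuracy = {acc:.3f}")
    for name, m in zip(labels, per_class):
        print(name, {k: round(v, 3) for k, v in m.items()})
```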

With the 70–30 split, the floating-point model attained an overall accuracy of 96.2%, with particularly robust performance on the “entering” and “exiting” classes. The quantized model, deployed using LiteRT, achieved a slightly lower but still high accuracy of 95.6%, confirming that quantization had minimal negative impact on classification quality.

Table 2 reports the per-class metrics obtained by both the 32-bit and 8-bit models, and Figure 5 shows the confusion matrices.

In the more challenging LOSO validation, the floating-point model attained a mean accuracy of 92.4%, while the quantized model achieved 91.1%. As expected, there was a slight decrease in performance due to inter-subject variability; however, the findings continue to substantiate robust generalization.

Table 3 shows the classification performance of the floating-point and 8-bit models under LOSO validation.

To verify the real-world deployability of the proposed system, the quantized 1D CNN model was benchmarked while running on the Arduino Nano 33 IoT. The benchmark measured inference latency, memory usage, and energy consumption. These metrics are critical in demonstrating the feasibility of operating the classification model entirely on-device within the severe hardware constraints typical of ultra-low-power microcontrollers.

The Arduino Nano 33 IoT is based on the Arm Cortex-M0+ SAMD21 microcontroller, which offers 256 KB of flash memory and 32 KB of SRAM. BLE communication is handled via the onboard u-blox NINA-W102 module, which is natively supported in the Arduino IDE through the ArduinoBLE library.

The distance measurements are acquired using the TDK InvenSense ICU-20201 Time-of-Flight (ToF) sensor, capable of high-resolution multi-zone depth readings at up to 60 Hz. It communicates with the microcontroller via I2C and operates with a typical current consumption of ~2.9 mA during measurement.

The machine learning model was implemented using LiteRT, an open-source framework designed to execute quantized neural networks on resource-constrained devices without operating system support. The Arduino sketch was developed using the Arduino IDE 2.2.1, and the model was trained and converted using TensorFlow 2.14 with post-training quantization enabled.
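
To make the effect of 8-bit quantization concrete, the sketch below applies a generic affine int8 mapping to a handful of values and reports the round-trip error. It is a simplified illustration of the principle, not the exact procedure applied by the TensorFlow converter; the function names and sample values are hypothetical.

```python
from typing import List, Tuple


def quant_params(values: List[float]) -> Tuple[float, int]:
    """Derive (scale, zero_point) mapping the value range onto int8.

    The range is widened to include 0.0 so that zero is exactly representable.
    """
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = int(round(-128 - lo / scale))
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to int8, saturating at the representable limits."""
    q = int(round(x / scale)) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value from its int8 code."""
    return (q - zero_point) * scale


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 2.0]  # made-up values for illustration
    scale, zp = quant_params(weights)
    for w in weights:
        q = quantize(w, scale, zp)
        print(f"{w:+.3f} -> {q:4d} -> {dequantize(q, scale, zp):+.4f}")
```

For values inside the calibrated range, the round-trip error is bounded by half the scale, which is consistent with quantization costing only a small amount of accuracy.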

BLE transmission was handled using the ArduinoBLE v1.2.0 library, with each classification result broadcast as a custom BLE service to a nearby host (e.g., smartphone or Raspberry Pi).

The entire application, including the inference engine, model, BLE communication stack, and data acquisition routines, had to fit within the 256 KB of flash memory and 32 KB of SRAM offered by the microcontroller.

Flash memory consumption was determined by compiling and linking the complete application with the quantized .tflite model, which was integrated via the LiteRT for Microcontrollers runtime. The quantized model itself occupies approximately 20 KB of flash memory. This includes 8-bit integer weights and biases across the two convolutional layers, one dense layer, and the softmax output. The LiteRT interpreter library, together with supporting utilities for model initialization, input/output tensor management, and operator kernels, requires an additional 50 KB. Application-level logic, including sensor interface drivers, signal normalization routines, and the BLE communication stack, accounts for a further 15 KB. Consequently, the total flash usage of the system is approximately 85 KB, well below the available 256 KB, leaving room for future expansion or additional features.

RAM usage is the more critical constraint because of the tighter 32 KB limit. The most significant allocation in SRAM is the tensor arena, a statically defined buffer used by LiteRT to store intermediate tensors, activation maps, input/output buffers, and workspace memory required by each operator. For the model under consideration, which takes 100 input samples and has two convolutional layers of 16 and 32 filters, respectively, a tensor arena of 12 KB was allocated. This size was determined experimentally as the minimum static size required to support inference without runtime errors or memory fragmentation. In addition to the tensor arena, approximately 4 KB are allocated for BLE buffers, connection state management, and event handling. The remaining memory, approximately 10 KB, is allocated for the stack, global variables, control logic, and sensor SPI communication buffers. Total SRAM utilization is therefore approximately 26 KB, which remains within the 32 KB limit of the microcontroller even during periods of peak operation.
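
The flash and SRAM budgets described above can be tallied with a short calculation. The figures are the approximate values quoted in the text; the grouping labels and the helper name `budget` are illustrative.

```python
from typing import Dict, Tuple

# Capacities of the microcontroller, in KB, as stated in the text.
FLASH_CAPACITY_KB = 256
SRAM_CAPACITY_KB = 32

# Approximate usage figures, in KB, as quoted in the text.
flash_usage_kb: Dict[str, int] = {
    "quantized model": 20,
    "interpreter and kernels": 50,
    "application logic": 15,
}
sram_usage_kb: Dict[str, int] = {
    "tensor arena": 12,
    "BLE buffers and state": 4,
    "stack, globals, sensor buffers": 10,
}


def budget(usage: Dict[str, int], capacity_kb: int) -> Tuple[int, int, float]:
    """Return (KB used, KB free, fraction of capacity used)."""
    used = sum(usage.values())
    return used, capacity_kb - used, used / capacity_kb


flash_used, flash_free, flash_frac = budget(flash_usage_kb, FLASH_CAPACITY_KB)
sram_used, sram_free, sram_frac = budget(sram_usage_kb, SRAM_CAPACITY_KB)

if __name__ == "__main__":
    print(f"flash: {flash_used} KB used, {flash_free} KB free ({flash_frac:.0%})")
    print(f"SRAM:  {sram_used} KB used, {sram_free} KB free ({sram_frac:.0%})")
```

The tally shows roughly one third of flash in use against about 81% of SRAM, which is why RAM rather than flash is the binding constraint.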

Inference time was measured by timestamping the interval from the moment a complete 100-sample window was buffered and normalized until the model output became available. Tests conducted at a clock speed of 48 MHz showed that each inference took an average of 275 ms, with minor variations attributable to background BLE operations and interrupt servicing. At the 25 Hz sampling frequency, a 100-sample window spans 4 s, so inference completes well within the window period and classification can run in real time without downsampling.
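
The real-time margin implied by these figures can be checked with a few lines of arithmetic. The constants are taken from the text; the minimum hop size is a derived quantity under the assumption that one inference must finish before the next window is due.

```python
import math

WINDOW_SAMPLES = 100     # samples per window (from the text)
SAMPLE_RATE_HZ = 25.0    # sensor sampling frequency (from the text)
INFERENCE_S = 0.275      # measured average inference time (from the text)

# Time needed to collect one full window of samples.
window_duration_s = WINDOW_SAMPLES / SAMPLE_RATE_HZ

# Fraction of a window period spent on inference for non-overlapping windows.
cpu_fraction = INFERENCE_S / window_duration_s

# Smallest hop (in samples) between overlapping windows for which each
# inference still completes before the next window is ready.
min_hop_samples = math.ceil(INFERENCE_S * SAMPLE_RATE_HZ)

if __name__ == "__main__":
    print(f"window duration: {window_duration_s:.1f} s")
    print(f"inference share of window period: {cpu_fraction:.1%}")
    print(f"minimum hop for real-time operation: {min_hop_samples} samples")
```

Under these assumptions, inference occupies under 7% of a 4 s window period, and windows could overlap heavily before inference time becomes the bottleneck.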

The energy estimate assumes that data transmission via Bluetooth draws a current of 4.8 mA. With the sensor sampled at 25 Hz, the microcontroller executing inference at regular intervals, and classification results transmitted via BLE, the system’s average power consumption was estimated to be approximately 16 mW. This figure covers sensor acquisition, computation, and wireless communication, and is consistent with power budgets reported in other TinyML deployments for low-duty-cycle motion recognition tasks. Assuming a 1000 mAh battery operating at 3.3 V, the system could operate continuously for more than eight days, or longer when working in a duty-cycled or event-triggered manner.
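
The battery-life figure follows directly from the stated capacity, voltage, and average power. The sketch below reproduces that arithmetic under the idealized assumption that the full rated capacity is usable at a constant 3.3 V; the function name is illustrative.

```python
def battery_life_days(capacity_mah: float, voltage_v: float,
                      avg_power_mw: float) -> float:
    """Estimate runtime in days from battery capacity and average power.

    Idealized: ignores regulator losses, self-discharge, and voltage sag.
    """
    energy_mwh = capacity_mah * voltage_v   # stored energy in mWh
    hours = energy_mwh / avg_power_mw
    return hours / 24.0


if __name__ == "__main__":
    days = battery_life_days(capacity_mah=1000, voltage_v=3.3, avg_power_mw=16)
    print(f"estimated runtime: {days:.1f} days")
```

With the values from the text this gives about 8.6 days, in line with the stated figure of more than eight days.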

The reported power consumption was estimated rather than measured directly with laboratory instrumentation. We used the known supply voltage (3.3 V) and the typical current consumption values provided in the datasheets of the Arduino Nano 33 IoT and the TDK InvenSense ICU-20201 sensor.

Specifically, the Arduino Nano 33 IoT draws approximately 9–10 mA when running at 48 MHz with BLE active, and the ICU-20201 sensor adds ~2–3 mA during measurement. Active BLE transmissions result in short peaks of 12–15 mA, depending on packet size and communication overhead. During inference, which lasts approximately 275 ms, total current draw is estimated at ~12–14 mA. When inference and BLE radio activity are combined, the system draws approximately 29 mA (including both MCU processing and the BLE radio). Each classification cycle consists of a 275 ms inference step and a BLE transmission of ~5 ms, repeating every 4 s. This results in an active window of 280 ms per cycle and an inactive (low-power or idle) state for the remaining 3.720 s. At 3.3 V, a draw of 29 mA corresponds to a power of less than 100 mW during the active window, and the energy dissipated by the system during inference and transmission is less than 35 mJ per cycle. These values confirm the system’s suitability for low-power battery operation while accounting for realistic communication overhead.
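
The per-cycle figures can be verified with a short calculation. The supply voltage, active current, and timings are taken from the text; the idle current is not given, so it is left as a parameter of the hypothetical helper `average_power_mw`.

```python
SUPPLY_V = 3.3            # supply voltage (from the text)
ACTIVE_CURRENT_MA = 29.0  # current during inference plus BLE (from the text)
INFERENCE_S = 0.275       # inference duration (from the text)
BLE_TX_S = 0.005          # BLE transmission duration (from the text)
CYCLE_S = 4.0             # classification cycle period (from the text)

active_s = INFERENCE_S + BLE_TX_S
idle_s = CYCLE_S - active_s

# Power while active (mW) and energy per active window (mJ = mW * s).
active_power_mw = ACTIVE_CURRENT_MA * SUPPLY_V
active_energy_mj = active_power_mw * active_s


def average_power_mw(idle_current_ma: float) -> float:
    """Average power over one cycle for a given idle current.

    The idle current is an assumption supplied by the caller.
    """
    idle_energy_mj = idle_current_ma * SUPPLY_V * idle_s
    return (active_energy_mj + idle_energy_mj) / CYCLE_S


if __name__ == "__main__":
    print(f"active window: {active_s * 1000:.0f} ms, idle: {idle_s:.3f} s")
    print(f"active power: {active_power_mw:.1f} mW")
    print(f"energy per active window: {active_energy_mj:.1f} mJ")
```

The active-window power of about 96 mW and energy of about 27 mJ sit below the 100 mW and 35 mJ bounds quoted above; the cycle-averaged power additionally depends on the idle current, which dominates because the system is idle for roughly 93% of each cycle.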

These estimates are consistent with similar setups reported in related literature and demonstrate the feasibility of long-term operation in battery-powered applications.

The current system uses BLE for transmitting inference results to a host device. BLE was selected due to its availability on the Arduino Nano 33 IoT, its widespread compatibility, and its relatively low power consumption compared to other protocols. Nevertheless, alternative wireless technologies exist and offer distinct trade-offs. Wi-Fi provides high throughput and broad support but is significantly more power-hungry, making it unsuitable for low-duty-cycle applications without aggressive power management. Zigbee offers low-power mesh networking but requires additional hardware and lacks native support on the selected microcontroller. LoRa enables ultra-low-power, long-range communication, but suffers from high latency and is better suited to low-data-rate applications. UWB provides high-precision positioning but at the cost of complexity and increased energy usage.

BLE remains a compelling choice due to its energy efficiency in short, periodic transmissions and its tight integration with battery-powered IoT platforms.

To further extend battery life, several strategies can be adopted: (1) lowering the sampling and inference rate (e.g., from 10 Hz to 1–2 Hz during idle periods), (2) implementing event-driven inference, where data acquisition is triggered only upon detecting significant motion, (3) enabling deep sleep modes on the microcontroller and waking it only for inference windows, and (4) transmitting only aggregated results (e.g., activity counts or state transitions) rather than individual predictions. Future versions of the system may also explore using dedicated ultra-low-power wake-up sensors to reduce energy costs during inactive phases.

The proposed system advances embedded human motion classification by integrating a 1D-CNN with a ToF sensor and BLE communication on a microcontroller with limited resources. To provide context for the contributions of the present study, a comparison is made with recent studies in similar domains, since there are no works on the same application considered in this paper.

The study in [23] explored the implementation of TinyML models for hand motion classification using an IMU sensor on the Arduino Nano 33 BLE. A comparison was made between CNN and Multi-Layer Perceptron (MLP) models, with both demonstrating an identical classification accuracy of 100%. However, the CNN model required 313 ms for inference and utilized 15.9 KB of RAM, whereas the MLP model was more efficient, taking only 4 ms and 4.0 KB of RAM. By comparison, the proposed system achieves a balance between accuracy and efficiency, with a 1D CNN model that performs inference in approximately 275 ms and utilizes approximately 26 KB of SRAM, thus demonstrating suitability for real-time applications.

Moreover, the findings in [24] demonstrated that 1D CNN models can effectively process raw sensor input data, achieving both feature extraction and classification. The model, comprising three 1D convolutional layers with 64 filters each, attained an accuracy of 97.62% on the UCI HAR dataset. While their work highlights the efficacy of 1D CNNs in human activity recognition, our system extends it by implementing a quantized model suitable for deployment on microcontrollers, which ensures a low memory footprint and low energy consumption.