1. Introduction

Alzheimer’s Disease (AD) is a progressive neurodegenerative disorder characterized by cognitive decline, memory impairment, and behavioral disturbances. Affecting millions of people around the world, it poses considerable challenges not only for healthcare systems but also for patients’ families and caregivers [1]. Early diagnosis and continuous monitoring are essential for the treatment of AD, as timely interventions can slow disease progression and significantly improve patient quality of life [2].

Recent advances in Artificial Intelligence (AI) and sensor technologies have opened new opportunities for the early detection and personalized monitoring of cognitive disorders [3]. AI-based models are increasingly effective in identifying subtle cognitive impairments through the analysis of complex behavioral and physiological patterns. For example, Machine Learning (ML) techniques have been successfully applied to analyze brain wave activity during sleep, providing predictive information on the onset of cognitive decline. In certain cases, AI-driven tools have even outperformed traditional neuropsychological tests in forecasting the progression of Alzheimer’s disease, underscoring their emerging clinical utility [4].

Concurrently, the proliferation of wearable and ambient technologies has improved the ability to collect real-time, non-invasive health data relevant to AD [5]. Devices such as smartwatches, biosensors, smart clothing, and connected environmental sensors can monitor health indicators, such as heart rate variability, gait anomalies, and physical activity levels, yielding continuous data streams critical for cognitive health assessment [6,7]. These tools allow for the early detection of behavioral deviations from baseline, which are often indicative of cognitive deterioration.

Building upon these foundations, we introduce LumiCare, a context-aware, hybrid AI infrastructure specifically designed to support individuals affected by Alzheimer’s disease through continuous monitoring, personalized cognitive assistance and stimulation, real-time emergency response, and caregiver alerting. The name LumiCare reflects the core objective of the system: to enhance situational awareness and clinical insight, illuminating the cognitive state and behavioral context of the patient through multimodal data fusion and intelligent service orchestration. LumiCare integrates multimodal data from heterogeneous sources, ranging from wearables and environmental sensors to connected vehicles, and combines edge/cloud AI inference with adaptive interaction mechanisms to construct a holistic representation of the user’s behavioral and physiological state. Upon detecting deviations that may suggest cognitive or behavioral anomalies, the system activates interactive assessments via chatbots or voice assistants, using both general orientation questions and personalized prompts. To enhance responsiveness and engagement, the system employs multimodal stimuli such as haptic feedback (e.g., smartwatch vibrations) and auditory alerts.

The architecture of LumiCare combines mobile and cloud-based AI processing within a hybrid framework. Most computations are executed locally on the user’s device to minimize latency and preserve data privacy. More complex inference tasks or uncertainty resolution routines are selectively offloaded to cloud resources. Additionally, an intelligent caregiver alert protocol is integrated to notify designated contacts when significant behavioral or cognitive changes are detected, facilitating prompt intervention.

Ongoing research initiatives, such as the SERENADE project, emphasize the importance of explainability and user trust in the deployment of AI-based dementia care technologies [8]. Similarly, the use of immersive environments, such as virtual reality, has been explored to increase the ecological validity of cognitive assessments [9]. These developments offer valuable insights for the design of adaptive, transparent, and user-centered solutions like LumiCare.

Looking ahead, the emergence of 6G networks, envisaged between 2028 and 2030, promises to revolutionize healthcare delivery through immersive, massive, and ubiquitous communication capabilities [10,11]. These advancements will rely on next-generation technologies, including smart rings, AR/VR headsets, connected vehicles, and Unmanned Aerial Vehicles (UAVs), to ensure seamless data integration and uninterrupted access.

Within this paradigm, 6G introduces novel architectural components that significantly expand the design space of intelligent health systems, such as the following:

AI Agent—an autonomous, intelligent entity capable of real-time learning and decision-making;

AI Service—a distributed, low-latency inference engine embedded in the network infrastructure;

Intelligent Communication Assistant—a customizable virtual interface capable of multimodal interactions, enabled by secure, consent-driven data access.

Such innovations pave the way for next-generation platforms like LumiCare, enabling real-time, adaptive, and privacy-conscious monitoring and support for individuals affected by degenerative cognitive disorders.

The main objectives of this paper are:

to provide a comprehensive review of state-of-the-art technologies supporting Alzheimer’s disease management, with a focus on AI, wearables, and IoT ecosystems;

to propose an effective system architecture based on AI agent technology and enhanced by the forthcoming 6G infrastructures;

to present LumiCare Companion, an interactive application designed to support patients throughout their daily routines and respond appropriately to emergency situations.

The remainder of this paper is structured as follows. Section 2 reviews current technological approaches for AD support, with emphasis on sensing, networking, and computational methods. Section 3 further explores these enabling technologies, with a focus on behavioral analysis, anomaly detection, and cognitive assessment. In Section 4, we detail the proposed architecture, highlighting the interplay between edge devices, cloud infrastructure, and 6G components. Section 5 introduces the LumiCare Companion, a user-focused mobile platform for interaction, cognitive engagement, and emergency handling. Finally, Section 6 summarizes our contributions and outlines directions for future research.

2. Related Works

The significant and growing burden of AD on individuals, caregivers, and healthcare systems has intensified the search for innovative solutions, particularly leveraging advancements in technology. Traditional diagnostic methods, often resource-intensive and subjective, are increasingly being complemented or replaced by technologies enabling early detection, continuous monitoring, and personalized support. Research in this area spans various technological approaches, often integrated into sophisticated systems.

Wearable Sensor-Based Systems—A prominent area of research focuses on the use of wearable devices to collect data relevant to AD management [12,13,14]. Devices such as smartwatches, fitness bands, bracelets, smart insoles, and actigraphs are used to gather real-time data on a variety of parameters [12,15]. These include physiological parameters such as heart rate, oxygen saturation (SpO2), and temperature [13,14,16]; activity levels encompassing steps, physical strain, posture, and detailed gait analysis [17,18,19]; sleep patterns and circadian rhythm [13,19,20]; and location tracking, including the ability to detect falls [14,21]. These data points can provide valuable insights into subtle changes in behavior and physical function indicative of disease progression or emergencies. Challenges include limited battery life, accuracy that depends on sensor placement and environment, and user acceptance. Despite these challenges, wearable sensors hold significant potential for early detection and monitoring.

Mobile Applications (mHealth)—Mobile applications running on smartphones and tablets serve as crucial tools for supporting AD patients and their caregivers. These apps offer a range of functionalities, such as GPS tracking for patient localization [14], medication and task reminders [21], facial recognition to help patients identify known individuals, and cognitive games designed to stimulate memory and thinking [12,14,21,22]. User feedback on these apps can provide valuable insights into their usability and the features desired by AD patients and caregivers. Key challenges include ensuring usability for individuals with cognitive decline, user acceptance, and technical issues.

Cloud Computing—The integration of Internet of Things (IoT) devices and mobile applications generates vast amounts of data that require robust infrastructure for storage and processing. Cloud computing provides the scalable resources needed for storing, managing, and enabling remote access to this data for healthcare providers and family members. It is a key enabler for data-driven healthcare solutions [14,16,23]. Challenges include data security and privacy, latency issues, and network reliability. Hybrid cloud-edge architectures are being explored to mitigate some of these challenges by performing initial processing closer to the data source [20].

ML and Deep Learning (DL) Algorithms—ML and DL algorithms are central to analyzing the large, complex datasets generated by IoT devices, mobile apps, and medical imaging. They are extensively applied to sensor data, medical imaging (Magnetic Resonance Imaging (MRI), Positron Emission Tomography (PET), Computed Tomography (CT)), and Electronic Medical Records (EMR) to facilitate early detection, classify disease stages, recognize patterns, predict risks, and support clinical decision-making [20]. Convolutional Neural Networks (CNNs) [13] are particularly prominent for image analysis, while Long Short-Term Memory (LSTM) networks [20] are effective for time-series data such as physiological signals or activity patterns. ML/DL can aid in diagnosing stages of AD and identifying abnormal behaviors. Challenges include data scarcity, heterogeneity, and imbalance; computational intensity; and the need for explainability in complex models [24,25].

Medical Imaging (Traditional and AI-Enhanced)—Medical imaging techniques such as MRI, PET, and CT scans are foundational for diagnosing structural and metabolic changes in the brain associated with AD. They can detect biomarkers such as beta-amyloid plaques and tau protein tangles [13]. However, these methods are often expensive, invasive (in the case of PET), and provide only episodic snapshots of the disease state [19]. Recent work integrates ML/DL algorithms with imaging data to improve the accuracy and efficiency of diagnosis and disease staging [26].

EMR and Clinical Data Analysis—EMRs and other clinical data sources provide a wealth of information, including patient history, demographics, cognitive test scores (such as the Mini-Mental State Examination (MMSE), Clinical Dementia Rating (CDR), and Montreal Cognitive Assessment (MoCA)), and various biomarkers [27]. While EMRs are often used for tracking disease progression and outcomes, they can also be analyzed using ML/DL techniques to aid in early detection and risk prediction. Challenges include the lack of standardization across records, missing information, and critical data privacy concerns [27].

In vitro Models (“Brain-on-a-chip”)—Representing a distinct line of research, in vitro models such as “brain-on-a-chip” systems aim to simulate brain structures and disease pathology in a controlled laboratory environment [26]. These models are used for studying the mechanisms of neural degeneration, such as the effects of beta-amyloid accumulation on neural networks, and for testing potential therapeutics in real time. They offer unique capabilities for dynamically monitoring the formation and loss of neural network connections.

The reviewed literature highlights the diverse technological landscape being developed to address the multifaceted challenges of AD, from early detection and monitoring through various sensing modalities to providing direct support and therapy. While significant progress has been made within each approach, challenges related to data integration, standardization, privacy, user acceptance, and clinical validation remain key areas for future research and development.

3. Technologies to Support Alzheimer’s Patients

AD is a progressive neurodegenerative disorder characterized by cognitive decline, memory loss, and changes in behavior and personality. It represents a significant and rapidly growing burden on healthcare systems worldwide. While currently incurable, early detection and effective management are considered crucial for potentially slowing progression, developing better treatment plans, improving quality of life for patients, and alleviating the burden on caregivers. Traditional diagnostic methods often involve clinical interviews and assessments that can be resource-intensive and time-consuming, and may identify the disease only in later stages. Furthermore, early signs of AD, such as memory loss, may not be immediately apparent to family members or even during initial medical examinations. This highlights the need for efficient and effective methods for early detection and continuous support. Recent advances in technology, particularly in the IoT, wearable devices, cloud computing, and AI (including ML and DL), offer transformative potential for the detection, monitoring, and personalized management of AD patients. These technologies enable the collection and analysis of objective, high-frequency, passive data on cognitive, behavioral, sensory, and motor changes, which can precede clinical manifestations. This section explores how these technologies support AD patients, focusing on the relevant technologies, strategies for detecting concerning events, and methods for measuring disease advancement, drawing solely on the reviewed literature.

3.1. Description of Technologies

Technological solutions for AD management primarily leverage ubiquitous sensing and data processing capabilities. At the core of these solutions are IoT-enabled devices and smart wearables, which serve as the primary data acquisition layer. Wearable devices, such as smartwatches and fitness trackers, can continuously capture a variety of data directly from the patient.

This includes the following:

Physiological signals—heart rate, body temperature, and oxygen saturation [12,19].

Activity levels and movement patterns—using Inertial Measurement Unit (IMU) sensors such as accelerometers and gyroscopes. This allows monitoring of parameters such as stride and walking speed [14,18].

Sleep patterns—important because sleep fragmentation is linked to AD risk and progression [20,22,27].

Location data—via GPS sensors [14,18,20].

In addition to wearables, environmental or smart home sensors can unobtrusively monitor behavioral patterns and interactions within the patient’s living space [15,22]. Examples include video cameras, tags on objects, infrared motion sensors, and sensors on furniture or doors. These distributed systems derive their outputs from long-term monitoring of activity patterns.

The vast amount of data generated by these IoT devices necessitates robust infrastructure for storage, management, and processing. Cloud computing architectures provide the scalability and remote accessibility that healthcare professionals and family members need to access the collected data [12,14,18,22].

To extract meaningful insights from this data, AI techniques, particularly ML and DL, are applied [13,16,20,23]. These algorithms analyze the sensor data to identify patterns and anomalies and to provide predictive insights. ML/DL models can be trained on collected sensor data to enable sophisticated real-time analysis, pattern identification, and classification. The combination of IoT, cloud, and AI is viewed as a revolutionary approach in healthcare, offering end-to-end solutions for disease identification and management.
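As a minimal sketch of the first step in this pipeline, the Python snippet below turns a raw 3-axis accelerometer stream into per-window features that an ML classifier could consume. The non-overlapping window scheme, the feature set, and the peak-counting step proxy (with its `peak_threshold` of 1.2 g) are illustrative assumptions, not parameters taken from the cited works.

```python
import math
from statistics import mean, pstdev

def magnitude(sample):
    """Euclidean norm of a 3-axis accelerometer sample (x, y, z) in g."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)

def count_peaks(values, threshold):
    """Count interior local maxima above `threshold`; a crude proxy for steps."""
    peaks = 0
    for i in range(1, len(values) - 1):
        if values[i] > threshold and values[i] > values[i - 1] and values[i] >= values[i + 1]:
            peaks += 1
    return peaks

def window_features(samples, window_size, peak_threshold=1.2):
    """Split the stream into non-overlapping windows and compute simple features.

    Returns one dict per complete window; a trailing partial window is dropped.
    """
    features = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        mags = [magnitude(s) for s in samples[start:start + window_size]]
        features.append({
            "mean_g": mean(mags),        # overall activity level
            "std_g": pstdev(mags),       # movement intensity / variability
            "peak_count": count_peaks(mags, peak_threshold),
        })
    return features
```

Each dict could become one row of a training set; real systems typically add overlapping windows and frequency-domain features, which are omitted here for brevity.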

3.2. Strategies for Detecting Concerning Events or Changes

While AD does not manifest as acute “attacks” in the same way as some other conditions, patients can experience concerning events or show changes indicative of worsening symptoms or dangerous situations. Technological systems are designed to detect these events and changes, often providing real-time alerts.

Strategies for detecting these events and changes include the following:

Early Symptom Detection—Sensors can capture subtle changes in behavior, activity levels, sleep patterns, and physiological parameters that may indicate the early stages or worsening of AD symptoms. These changes, captured with widely available mobile and wearable technologies, are referred to as “digital biomarkers.” Existing tests are often less effective at distinguishing such deviations from normal age-related cognitive decline in the earliest stages [15,22,23].

Monitoring Daily Activities and Behavior—Systems monitor how patients perform everyday tasks (Instrumental Activities of Daily Living (IADLs)), their movement patterns, and their interactions. A decrease in autonomy during task performance or changes in sleep/wake patterns, such as nighttime wandering, can signal a problem. AI algorithms are used to analyze these patterns and identify anomalies or deviations from a patient’s baseline [13,17,22].

Detection of Dangerous Conditions:

Fall Detection—Accelerometers and gyroscopes in wearable devices can detect fall events based on sudden changes in motion and orientation. Systems such as the Smart Wearable Medical Devices (SWMD) have demonstrated high sensitivity and specificity in fall detection. Alerts can be sent to caregivers upon detection [18,21,23].

Wandering and Location Monitoring—GPS sensors in wearables allow tracking of a patient’s location. Geofencing functionalities in applications such as Alzimio can establish safe zones and automatically detect when these zones are violated (e.g., exited), alerting caregivers [14,18,20].

Emergency Signals—Some devices include dedicated emergency buttons that the patient can press to signal distress [12,14,15,18,24].

Physiological Monitoring for Acute Changes—Continuous monitoring of vital signs (heart rate, temperature, oxygen saturation) can help detect acute health issues or significant physiological deviations [14,16,22,23].

These detection capabilities, often facilitated by real-time data processing via cloud-connected AI, allow for timely alerts and interventions by caregivers or healthcare professionals.
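To make the fall-detection strategy above more concrete, the following Python sketch flags a suspected fall when an accelerometer magnitude spike is followed by a period of stillness near 1 g (a person lying still still registers gravity). It uses only the accelerometer, without the gyroscope orientation check mentioned in the text, and the thresholds (`impact_g`, `still_tol_g`, `still_seconds`) are illustrative assumptions, not values reported for SWMD or the cited studies.

```python
def detect_fall(mags, fs, impact_g=2.5, still_tol_g=0.15, still_seconds=2.0):
    """Return the sample index of a suspected fall, or None.

    mags: accelerometer magnitudes in g, sampled at `fs` Hz.
    A fall is flagged when a sample exceeds `impact_g` and the following
    `still_seconds` of data stay within `still_tol_g` of 1 g (lying still).
    """
    still_n = int(still_seconds * fs)
    for i, m in enumerate(mags):
        if m < impact_g:
            continue
        after = mags[i + 1:i + 1 + still_n]
        if len(after) < still_n:
            break  # not enough data yet to confirm post-impact stillness
        if all(abs(a - 1.0) <= still_tol_g for a in after):
            return i
    return None
```

Requiring post-impact stillness is a common way to reduce false alarms from vigorous but harmless movements, such as sitting down heavily; it also delays the alert by `still_seconds`.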

3.3. Measuring Disease Advancement

Monitoring the progression of Alzheimer’s disease is essential for adapting care plans, evaluating treatments, and understanding the individual trajectory of the illness. Technology offers significant advantages over periodic clinical assessments by providing continuous, objective data over extended periods. Key strategies for measuring AD advancement using these technologies include the following:

Longitudinal Data Collection—IoT sensors and wearables enable the collection of massive amounts of data daily over months and years. This longitudinal data provides a detailed history of a patient’s activity levels, sleep patterns, physiological parameters, and behavioral metrics [22,27].

Tracking Decline in Activity and Function—Changes in the quantity and quality of daily activities, mobility, sleep efficiency, and performance of routine tasks monitored by sensors are objective indicators of functional and cognitive decline. Declining autonomy in daily activities, for instance, correlates with disease progression [12,13,19,20,22,23].

Monitoring Digital and Physiological Biomarkers—The continuous capture of physiological signals and activity metrics provides digital biomarkers that can track changes associated with disease progression. Changes in heart rate variability, sleep fragmentation, or gait characteristics can potentially indicate worsening neurological function [19].

Disease Stage Classification using AI—ML and DL algorithms can be trained on the collected sensor data, potentially combined with other data sources, to classify the patient’s stage of AD (e.g., mild, moderate, severe). Sophisticated analysis can identify subtle patterns in the data indicative of specific stages or transitions between stages [13,16,20,23].

Identifying Individual Progression Trajectories—The wealth of data allows for personalized monitoring, identifying deviations from an individual’s typical patterns rather than just population averages. This can help predict individual disease trajectories and adapt care proactively [12,13,17,22,27,28].

The capability for continuous, objective data collection and analysis provided by IoT, wearables, cloud computing, and AI is revolutionizing the ability to monitor and understand AD progression, enabling timely interventions and personalized care planning.
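The idea of comparing a patient against their own baseline rather than population averages can be sketched with a trailing z-score. In the Python example below, the 14-day baseline window and the 2.5 threshold are illustrative assumptions, and the daily metric (e.g., step count or sleep efficiency) is whatever summary the sensors provide.

```python
from statistics import mean, stdev

def baseline_deviations(daily_values, baseline_days=14, z_threshold=2.5):
    """Flag days whose value deviates from the patient's own trailing baseline.

    For each day after the first `baseline_days`, compute a z-score against the
    mean and sample standard deviation of the preceding `baseline_days` values.
    Returns a list of (day_index, z_score) for days with |z| >= z_threshold.
    """
    flagged = []
    for day in range(baseline_days, len(daily_values)):
        window = daily_values[day - baseline_days:day]
        mu, sigma = mean(window), stdev(window)
        if sigma == 0:
            continue  # a perfectly flat baseline gives no scale to compare against
        z = (daily_values[day] - mu) / sigma
        if abs(z) >= z_threshold:
            flagged.append((day, z))
    return flagged
```

For example, with two weeks of roughly 5000 to 5200 steps per day, a sudden drop to 1500 steps is flagged, while a return to 5100 the next day is not. Note that a flagged day enters the following baselines; a more robust variant could use the median and median absolute deviation, or exclude flagged days.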

4. System Architecture

In this section, we present the architectural design of LumiCare, a modular context-aware system that provides continuous, adaptive support for individuals with Alzheimer’s disease. Building on the technologies introduced earlier, such as wearable sensors, Mobile Health (mHealth) platforms, and intelligent dialogue systems, LumiCare integrates real-time monitoring, cognitive assistance, and emergency response in a unified framework.

LumiCare adopts a hybrid architecture that fuses data from different sources, including wearable and environmental sensors and mobile devices, and relies on locally or remotely deployed Large Language Models (LLMs). This setup enables responsive and privacy-aware operation, with most inference tasks handled locally and cloud resources used only when needed.

We begin by outlining key use cases that illustrate typical interactions between the patient and the system. Then, we detail the architecture’s core components: data acquisition, local/edge/cloud processing, communication protocols, and context-aware prompting. Finally, we describe the service flow under normal and emergency conditions, and conclude with a discussion of future extensions, which include 5G/6G connectivity, wearable AI, and smart infrastructure to enhance tracking, adaptability, and system interoperability.

4.1. Use Case Description

Consider a patient diagnosed with early-stage Alzheimer’s disease, experiencing mild cognitive impairment characterized by occasional memory lapses, disorientation, and difficulties maintaining daily routines. The patient’s primary goal is to preserve autonomy and maintain quality of life while minimizing dependence on continuous caregiver presence.

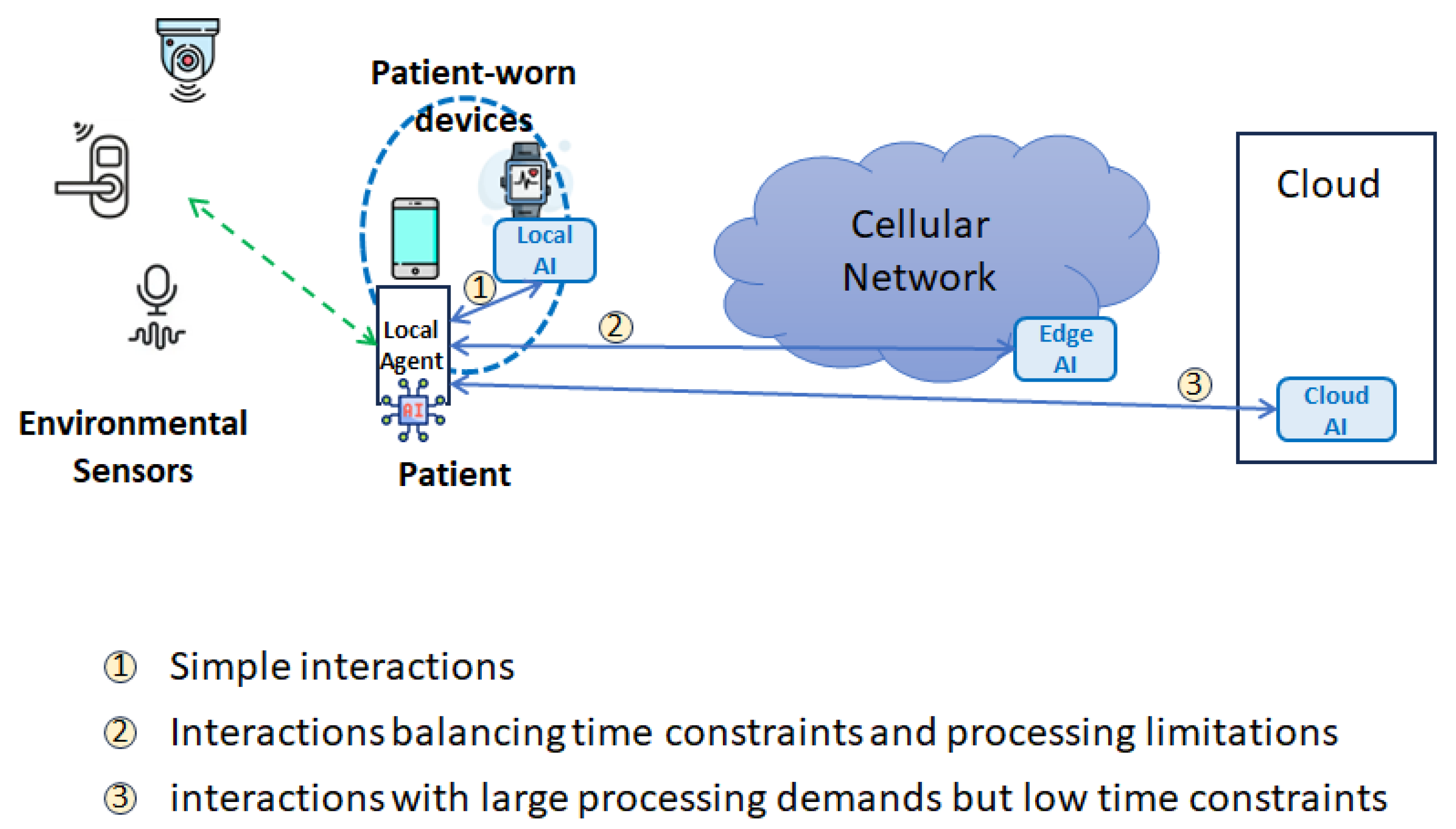

As illustrated in Figure 1, the LumiCare system integrates a comprehensive monitoring infrastructure consisting of wearable devices (e.g., smartwatches or fitness trackers) and ambient environmental sensors deployed within the patient’s living space. These devices continuously collect physiological data (such as heart rate variability, body temperature, and oxygen saturation) and behavioral metrics (including movement patterns, gait regularity, and adherence to daily routines).

In typical daily scenarios, such as morning routines or scheduled medication times, the wearable devices perform continuous real-time data acquisition and preliminary analysis. If deviations from established behavioral patterns or vital signs are detected—indicative, for instance, of confusion, disorientation, or physical distress—the system proactively initiates context-aware interventions. These interventions range from subtle cognitive prompts and reminders provided through a personalized AI-driven virtual assistant integrated into the patient’s smartphone to immediate caregiver alerts in more critical situations, such as detected falls or extended inactivity.
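The graded response described above, ranging from gentle prompts to immediate caregiver alerts, can be sketched as a small decision function. The event labels are hypothetical, and escalating an unanswered prompt to a caregiver alert is our own assumption rather than a behavior specified for LumiCare.

```python
from enum import Enum

class Action(Enum):
    NONE = "none"
    COGNITIVE_PROMPT = "cognitive_prompt"   # gentle reminder via the virtual assistant
    CAREGIVER_ALERT = "caregiver_alert"     # immediate notification to designated contacts

# Hypothetical labels for events treated as critical.
CRITICAL_EVENTS = {"fall", "extended_inactivity"}

def choose_intervention(event, prompt_unanswered=False):
    """Map a detected event to an intervention level.

    Critical events (falls, extended inactivity) bypass the assistant; milder
    deviations (e.g. 'missed_medication', 'disorientation') first get a prompt,
    escalating to a caregiver alert if the patient does not respond (assumption).
    """
    if event is None:
        return Action.NONE
    if event in CRITICAL_EVENTS or prompt_unanswered:
        return Action.CAREGIVER_ALERT
    return Action.COGNITIVE_PROMPT
```

Keeping this policy as an explicit, rule-based layer makes the escalation behavior auditable, independently of the LLM that phrases the prompts.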

The virtual assistant, powered by an AI model fine-tuned with Reinforcement Learning from Human Feedback (RLHF) [29], dynamically tailors interactions based on the patient’s medical history, current physiological state, and the context inferred from environmental sensor data. This capability ensures that interventions are both relevant and effective, reducing patient anxiety and enhancing compliance with non-pharmacological interventions.

The adaptive and real-time capabilities of the LumiCare system exemplify a sophisticated use of multimodal data fusion, low-latency processing, and context-sensitive support mechanisms. This integrated approach not only ensures safety through immediate detection and reaction to anomalies but also actively promotes autonomy and continuous cognitive engagement, significantly improving the patient’s day-to-day living experience.

4.2. Fundamental Elements in the System Architecture

Figure 2 presents the high-level architecture of the proposed LumiCare system. The patient is equipped with wearable devices (e.g., smartwatches or fitness bands) capable of continuously acquiring biometric signals such as heart rate, oxygen saturation, body temperature, and other health-related metrics. These may include parameters specifically associated with the diagnosed disease or derived from the patient’s clinical history (e.g., comorbidities such as diabetes).

The local agent within the wearable device performs continuous real-time monitoring and is able to locally process the collected data or transmit it to a cloud-based AI/ML inference server. The system hosts specific AI models, tuned on the patient’s medical history and disease-specific characteristics, which enables continuous evaluation of the patient’s physiological state and behavioral patterns.

The system may be extended with additional environmental sensors, including proprietary sensors deployed in or near the patient’s home. These may include video surveillance cameras, proximity detectors, and contact sensors on doors or gates. Such an IoT infrastructure enables detection of abnormal events such as unwanted exits from the home or accidental indoor falls. Subject to prior consent, additional environmental sensors may also be deployed in outdoor areas to further enhance safety and situational awareness. In order to allow the LumiCare system to engage with the patient through notifications, voice prompts, or other interfaces whenever anomalous behavior is detected (such as memory lapses, disorientation, or confusion) or for periodic health status updates and routine check-ins, the smartphone serves some essential functions:

Geolocation tracking, using GPS and mobile network functionality to determine the user’s real-time position;

Data collection from on-board and environmental sensors;

Human–system interaction (see Section 5 for more details);

User connectivity through the mobile network (e.g., 5G and forthcoming 6G technologies).
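Geolocation tracking makes it possible to detect unwanted exits from a safe area, in the spirit of the geofencing offered by apps such as Alzimio (whose actual implementation we do not reproduce). The Python sketch below assumes a hypothetical circular safe zone around the home and uses the haversine great-circle distance; the class name, radius, and event labels are illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

class CircularGeofence:
    """Circular safe zone that reports an event only when its boundary is crossed."""

    def __init__(self, center_lat, center_lon, radius_m):
        self.center = (center_lat, center_lon)
        self.radius_m = radius_m
        self.inside = True  # assume the patient starts inside the safe zone

    def update(self, lat, lon):
        """Return 'exited', 'entered', or None for a new GPS fix."""
        now_inside = haversine_m(*self.center, lat, lon) <= self.radius_m
        event = None
        if self.inside and not now_inside:
            event = "exited"
        elif not self.inside and now_inside:
            event = "entered"
        self.inside = now_inside
        return event
```

Reporting only boundary crossings, rather than every fix outside the zone, avoids flooding caregivers with repeated alerts while the patient remains outside. A production system would also need to handle GPS noise near the boundary, for example with a hysteresis margin.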

LumiCare is designed as a modular hybrid AI system, with a local LLM-based assistant at its core, supplemented by sensor-driven input modules and emergency-handling routines. The LLM component acts as a contextual dialogue engine that interprets multimodal cues from the user’s environment and wearable devices to generate timely prompts and reminders. For instance, using visual or location context captured by surrounding cameras or indoor tracking systems, the assistant might ask, “What item are you looking for on this shelf?” or “Did you remember to pick up milk on your way home?” Such contextual awareness is critical: as demonstrated in systems such as MemPal [30], leveraging real-time visual context and activity logs allows the LLM to create an ongoing “diary” of the user’s actions for memory retrieval and object-finding assistance. LumiCare similarly uses environmental context (e.g., store layout, home floor plan, nearby people) and conversational cues to engage the user in meaningful dialogue and offer personalized support, a capability emphasized in recent dementia-care reviews of LLM assistants.
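One way to ground a local LLM in this kind of context is to assemble the sensor-derived facts into a system prompt before each turn. The Python sketch below only builds the prompt text and does not call any model; the `Context` fields and the instruction wording are hypothetical, chosen to mirror the milk-reminder example above.

```python
from dataclasses import dataclass, field

@dataclass
class Context:
    """Hypothetical snapshot of what sensors currently report about the user."""
    location: str                                           # e.g. "kitchen"
    time_of_day: str                                        # e.g. "18:30"
    recent_activities: list = field(default_factory=list)   # oldest first
    pending_tasks: list = field(default_factory=list)

def build_prompt(ctx: Context, max_activities: int = 3) -> str:
    """Assemble a system prompt that grounds the local LLM in the current context."""
    lines = [
        "You are a calm, supportive assistant for a person with early-stage Alzheimer's disease.",
        "Use short, simple sentences and ask at most one question.",
        f"Current location: {ctx.location}. Local time: {ctx.time_of_day}.",
    ]
    if ctx.recent_activities:
        recent = ", ".join(ctx.recent_activities[-max_activities:])
        lines.append(f"Recent activities (oldest to newest): {recent}.")
    if ctx.pending_tasks:
        lines.append("Pending reminders: " + "; ".join(ctx.pending_tasks) + ".")
    return "\n".join(lines)
```

For instance, a context with location "street, walking home", recent activities "left office, passed grocery store", and the pending task "buy milk" gives the model what it needs to ask whether the user remembered the milk. Limiting the activity log to the last few entries keeps the prompt short for an on-device model.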

Sophisticated on-device processing first extracts high-level features (e.g., step count, gait speed, heart-rate variability, detected activities) from the raw sensor stream. ML models can infer stress or confusion from these signals (e.g., elevated heart rate or erratic motion may indicate agitation). These inferred “digital biomarkers” are passed to the LLM: if the user’s vitals indicate high stress, the LumiCare assistant might respond with soothing guidance or suggest a calming activity, whereas signs of fatigue might trigger a prompt to rest or hydrate. Contextual multimodal integration (e.g., audio, video, motion, and physiological signals) enhances personalization, as shown in the health-monitoring literature [28,31].
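As an example of a heart-rate-variability biomarker, the sketch below computes RMSSD (root mean square of successive differences between RR intervals), a standard time-domain HRV measure; lower RMSSD is commonly associated with stress. The decision rule combining RMSSD below 60% of the patient's baseline with a heart rate more than 20 bpm above resting is an illustrative assumption, not a validated clinical criterion or LumiCare's actual model.

```python
import math

def rmssd(rr_ms):
    """Root mean square of successive differences of RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

def infer_state(rr_ms, baseline_rmssd, resting_hr):
    """Very rough state label from one RR window (illustrative thresholds)."""
    hr = 60_000 / (sum(rr_ms) / len(rr_ms))  # mean heart rate in bpm
    if rmssd(rr_ms) < 0.6 * baseline_rmssd and hr > resting_hr + 20:
        return "possible_stress"
    return "calm"

# Hint forwarded to the LLM together with the user's context.
SUGGESTIONS = {
    "possible_stress": "Offer soothing guidance or suggest a calming activity.",
    "calm": "No intervention needed.",
}
```

Passing a coarse label such as `possible_stress`, instead of raw RR intervals, keeps the LLM prompt compact and limits how much physiological data the dialogue layer sees.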

A dedicated emergency detection subsystem operates in parallel on local hardware. It monitors for critical events using sensor fusion and domain rules. For example, abnormal acceleration patterns or sudden immobility may indicate a fall; erratic gait or repeated wandering near exits may signal disorientation or getting lost; and abnormal vital signs may flag tachycardia or hypoxia. LumiCare employs established IoT techniques that combine wearable and ambient sensors to increase detection accuracy, since multimodal sensor fusion is essential for reliable fall detection and hazard alerts. Upon detecting an event (e.g., a fall, prolonged immobility, or dangerous behavior such as leaving the stove on), the system triggers its emergency protocol. First, local alerts may be issued (e.g., “Help is on the way!”). Simultaneously, LumiCare uses its LLM to generate a concise status report (e.g., context, last actions, vitals) and automatically dispatches notifications to caregivers or emergency services (via chat messages, SMS, calls, or other dedicated apps). These reports include the user’s location and a natural-language summary (e.g., “User appears to have fallen in the kitchen; heart rate is 120 bpm; last seen drinking water”). Leveraging the LLM’s medical and contextual understanding helps ensure the clarity and relevance of alerts [31,32]. Emergency analytics run locally or on nearby edge servers, enabling rapid response as advocated in the edge-AI literature [28,33].
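The rule layer and the report described above can be sketched as follows. The `Snapshot` fields are a hypothetical fused sensor view, and the thresholds (600 s of immobility, heart rate above 100 bpm, SpO2 below 90%) are illustrative rather than clinical settings. The template summary is a deterministic fallback or structured input that an LLM could rephrase; it is not LumiCare's actual report format.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Snapshot:
    """Hypothetical fused sensor snapshot at the time of evaluation."""
    fall_detected: bool
    immobile_seconds: float
    heart_rate_bpm: float
    spo2_pct: float
    room: str
    last_activity: str
    lat: float
    lon: float

def detect_emergencies(s, max_immobile_s=600, tachy_bpm=100, hypoxia_pct=90):
    """Apply simple domain rules; thresholds are illustrative, not clinical."""
    events = []
    if s.fall_detected:
        events.append("fall")
    if s.immobile_seconds >= max_immobile_s:
        events.append("prolonged immobility")
    if s.heart_rate_bpm > tachy_bpm:
        events.append("tachycardia")
    if s.spo2_pct < hypoxia_pct:
        events.append("possible hypoxia")
    return events

def build_report(s, events):
    """JSON alert with location, a template summary, and the raw snapshot."""
    summary = (f"Detected: {', '.join(events)} in the {s.room}; "
               f"heart rate {s.heart_rate_bpm:.0f} bpm, SpO2 {s.spo2_pct:.0f}%; "
               f"last activity: {s.last_activity}.")
    return json.dumps({
        "events": events,
        "location": {"lat": s.lat, "lon": s.lon},
        "summary": summary,
        "snapshot": asdict(s),
    })
```

Keeping detection rule-based and using the LLM only for wording means that an alert still goes out, with the template summary, even if the language model is slow or unavailable.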

Deploying AI-based services locally on wearable devices or the patient’s smartphone presents several challenges due to the substantial computational, memory, and storage resources they require. To address these demands, LumiCare uses a hybrid edge-cloud architecture that balances efficiency, privacy, and performance. Lightweight inference and routine queries are handled entirely on-device or on proximal hardware; this includes the LLM (optimized for local execution) and the sensor-fusion logic. Computationally intensive or uncertain tasks (e.g., summarization, rare language queries) are offloaded to remote cloud servers as needed. The 5G system, and the forthcoming 6G, can decide whether to reserve radio resources to guarantee the transmission of data that is vital for the patient’s health. In this way, LumiCare can leverage vast computing resources, enabling cloud-based AI functionalities to perform highly sophisticated and resource-intensive tasks.

The LumiCare system can also be equipped with edge AI, which is located within the Communication Service Provider’s network. It acts as an intermediary node, balancing resource availability and minimizing latency, while being capable of executing more complex and computationally demanding AI tasks than those supported by the AI on the wearable device. This approach minimizes bandwidth usage and improves privacy by reducing data transmission. Crucially, processing sensitive information locally also aligns with healthcare data protection guidelines. If connectivity is unavailable, LumiCare gracefully degrades: core functionality (e.g., reminders, emergency detection) continues offline on local hardware. Edge deployment significantly reduces latency and mitigates network-dependency issues, enabling robust performance in disconnected environments.
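The placement logic across the local, edge, and cloud tiers, including offline degradation, can be summarized as a routing function. In the Python sketch below, the task names, the confidence threshold, and the `heavy` flag are hypothetical; in practice, the on-device model's confidence and the current connectivity state would come from the runtime.

```python
from enum import Enum

class Tier(Enum):
    LOCAL = "local"   # wearable or smartphone
    EDGE = "edge"     # AI node in the communication service provider's network
    CLOUD = "cloud"   # remote data centre

# Hypothetical names for functions that must never leave the device.
ESSENTIAL_TASKS = {"reminder", "emergency_detection"}

def route(task, local_confidence, edge_reachable, cloud_reachable,
          heavy=False, min_confidence=0.8):
    """Pick an execution tier for one inference request.

    Essential tasks always stay local. Other tasks stay local when the on-device
    model is confident enough; otherwise they go to the edge, or to the cloud
    for heavy jobs (e.g. long summaries). Without connectivity everything
    degrades to local execution.
    """
    if task in ESSENTIAL_TASKS or local_confidence >= min_confidence:
        return Tier.LOCAL
    if heavy and cloud_reachable:
        return Tier.CLOUD
    if edge_reachable:
        return Tier.EDGE
    if cloud_reachable:
        return Tier.CLOUD
    return Tier.LOCAL
```

Preferring the edge over the cloud for ordinary uncertain requests follows the latency and privacy argument above, while reserving the cloud for tasks that genuinely need large models.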

Finally, the architecture is flexibly configurable. Integrators can select different LLMs depending on their requirements for cost, performance, or regulatory compliance. For example, one deployment might run a compact LLM-based model on a smartwatch or smartphone, while another uses a home microserver to host a larger LLM. The sensor suite is likewise extensible: system designers may incorporate smart clothing, camera-based context detection, or vehicle GPS feeds as needed.

Regardless of configuration, emphasis is placed on local, low-power operation: all essential functionality (e.g., memory prompts, emergency alerts, and context-aware dialogue) must run on-device to ensure continuous support. This aligns with the trend of enabling AI-driven health interventions on ultra-low-power and edge devices [33,34].

Table 1 summarizes the main differences among the three processing paradigms adopted in the architecture, namely local, edge, and cloud computing. The comparison covers network load, latency, AI model accuracy, hardware requirements, and privacy, which are defined as follows.

Network load refers to the amount of data transmitted over the communication network as a result of the interaction between the application on the patient’s device and the AI model. It is negligible for local processing, since the network is not involved; limited to the radio interface for edge processing, since only the CSP network is involved; and high when cloud processing is required.

Latency is defined as the network delay between sending the application request to the AI model processor and receiving the inference result, excluding the inference processing time. The latency values reported in the table are derived from our previous work [35].

AI model accuracy refers to the accuracy limitations discussed earlier in relation to the architectural diagram. The response times of AI models hosted at different levels of the network are difficult to compare directly, as they depend strongly on the hardware and software available on the personal device and in the edge or cloud facilities.

Hardware requirements refer to the processing and storage capabilities needed at the different points of the communication network.

Privacy is defined with respect to the handling of personal data: it is maximal when no personal data leave the device, medium when data are shared only with the CSP, and minimal when personal data are sent to an AI model running in the cloud. When data sharing is necessary, anonymization strategies may be adopted to enhance privacy.
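When data must leave the device, one common strategy is pseudonymization, which is weaker than full anonymization because the data can still be re-linked by whoever holds the key. The sketch below, under an assumed record schema, drops direct identifiers, replaces the patient ID with a keyed HMAC-SHA256 pseudonym, and coarsens GPS coordinates. The field names and the `pseudonymize` function are hypothetical.

```python
import hashlib
import hmac

# Direct identifiers dropped before sharing (hypothetical record schema).
DIRECT_IDENTIFIERS = {"name", "phone", "address"}


def pseudonymize(record: dict, secret_key: bytes, gps_decimals: int = 2) -> dict:
    """Return a reduced copy of `record` for transmission to edge/cloud AI."""
    # Drop direct identifiers; the input record is left untouched.
    shared = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    # Replace the patient ID with a keyed pseudonym (HMAC-SHA256): the device,
    # which holds the key, can re-link results; the receiver cannot recompute it.
    if "patient_id" in shared:
        mac = hmac.new(secret_key, str(shared["patient_id"]).encode(), hashlib.sha256)
        shared["patient_id"] = mac.hexdigest()
    # Coarsen location: 2 decimals is a grid of about 1.1 km in latitude.
    for coord in ("lat", "lon"):
        if coord in shared:
            shared[coord] = round(shared[coord], gps_decimals)
    return shared
```

The key stays on the patient’s device, so results returned by the edge or cloud can be matched back to the patient locally without the remote service ever seeing the original identifier.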

4.3. Service Flow

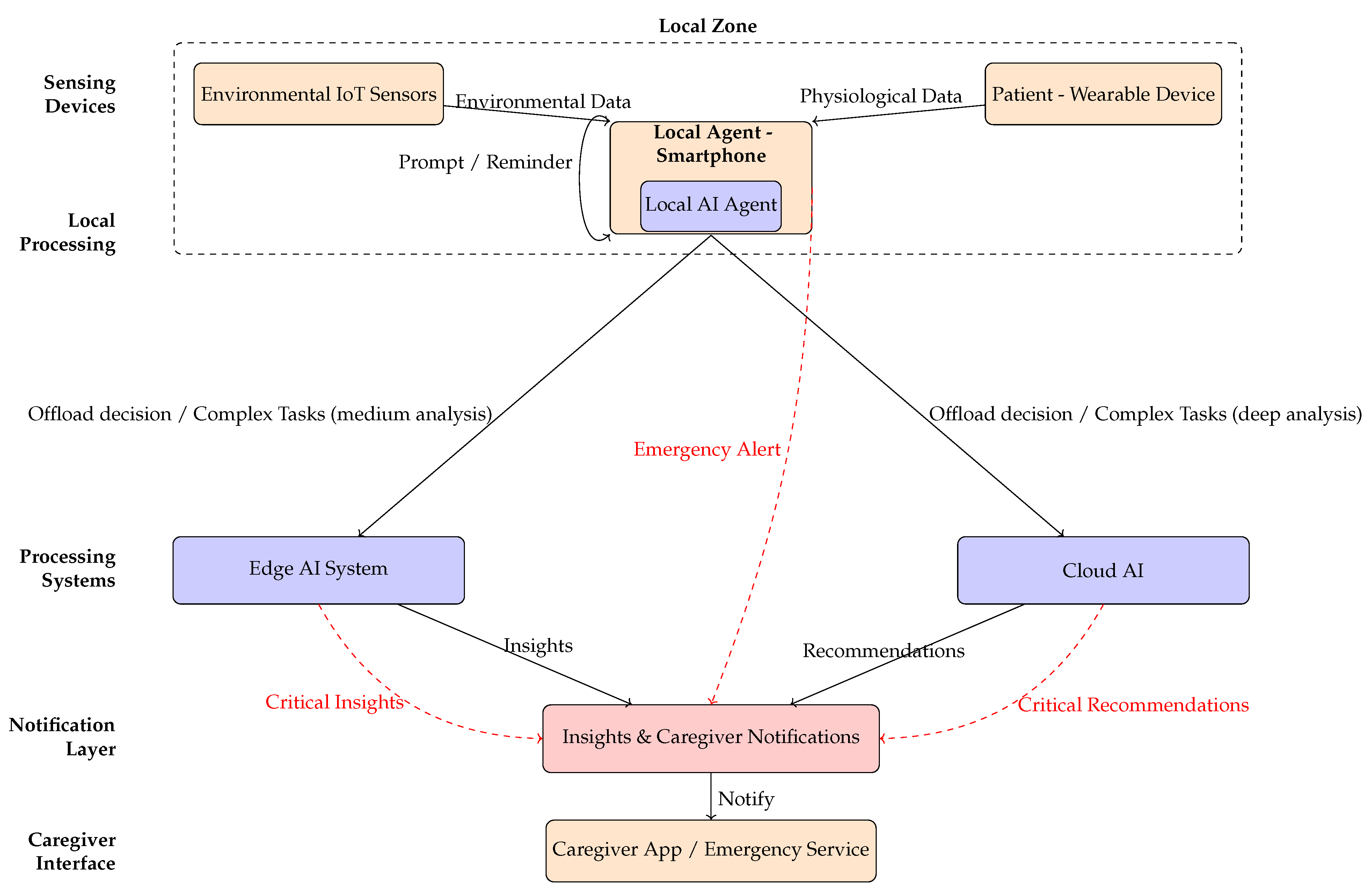

As illustrated in Figure 3, the service flow of the LumiCare system delineates the interactions among its primary components: wearable devices and environmental IoT sensors, the local AI agent embedded within the user’s smartphone, edge and cloud processing systems, and the notification layer interfacing with caregivers or emergency services. Under normal operating conditions, physiological and environmental data undergo lightweight fusion and rapid inference locally to ensure low latency and privacy preservation. Conversely, tasks requiring extensive computational resources or complex analytical models are selectively offloaded to edge or cloud systems.

In the figure, data paths associated with continuous monitoring are drawn with solid arrows, while those for emergency detection or intervention scenarios are drawn with dashed red arrows. The remainder of this subsection walks through an illustrative example of the message exchanges in this service flow.

The patient performs routine activities at home while wearing a health monitoring device (UE), which collects real-time biometric data;

If neither the wearable device detects any irregularity in the biometric data nor the environmental IoT sensors detect a potentially dangerous situation, the LumiCare system offers the user periodic activities, such as puzzles or questions to monitor the patient’s health (see the interactive application in Section 5).

If an irregular pattern is noticed in the user’s biometric data, the wearable device analyzes the irregularity. The local agent assesses whether it indicates stress, disorientation, or a potential health problem. In this case, it may provide recommendations to the user, e.g., “Take a break” or “Drink water”, or ask questions intended to keep the patient calm, following the interactive application.

Additional data can be collected by environmental IoT sensors with the aim of detecting potentially critical or dangerous situations, such as unexpected falls, unintended exits from the home, or deviations from the user’s daily routine. The processing of data collected from sensors can be computationally intensive, as in the case of image processing. In these scenarios, the data can be transmitted to an edge AI system for more accurate analysis. The local AI agent can define the network requirements that must be ensured to prevent delays or analysis inaccuracies.

If the AI model would take too long to execute locally on the UE, or cannot run there because of processing constraints or missing information, the collected biometric data (optionally encrypted) are quickly transferred to the AI/ML server (the cloud AI) through the 5G or 6G network. Biometric data can be enriched with additional data from environmental IoT sensors, such as the patient’s position (estimated by GPS outdoors or augmented by 5G/6G networks) or camera information. Moreover, the AI agent on the wearable device may specify requirements to the 5G/6G network, such as latency and data rate constraints, so that resources are properly allocated for the analysis.

Once the data are analyzed by the cloud AI, the UE receives the results through the 5G/6G network, possibly with actionable insights (e.g., “Sit down and wait for your daughter”, or reminiscence exercises and orientation reminders from the interactive application). At the same time, family members or caregivers can be notified of the potentially critical situation so that they can intervene before it escalates.
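The decision points in the service flow above can be summarized as a routing function over a fused sensor snapshot. This is a minimal sketch: the 25% heart-rate deviation threshold, the `Snapshot` fields, and the action names are hypothetical placeholders for the more elaborate local analytics the flow assumes.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Hypothetical fused reading from the wearable and ambient IoT sensors."""

    hr_bpm: float                         # current heart rate
    hr_baseline: float                    # personal resting baseline
    ambient_event: str = ""               # e.g. "fall", "door_exit"; "" if none
    needs_heavy_processing: bool = False  # e.g. camera frames to analyze
    local_feasible: bool = True           # can the UE run the model in time?


def route(s: Snapshot, hr_tolerance: float = 0.25) -> list[str]:
    """Map one snapshot to the service-flow actions described above."""
    irregular = abs(s.hr_bpm - s.hr_baseline) > hr_tolerance * s.hr_baseline
    if not irregular and not s.ambient_event:
        return ["periodic_activity"]             # normal operation
    actions = []
    if irregular:
        actions.append("local_recommendation")   # e.g. "Take a break"
    if s.ambient_event and s.needs_heavy_processing:
        actions.append("edge_analysis")
    if not s.local_feasible:
        actions.append("cloud_offload")          # with latency/data-rate requirements
    if s.ambient_event:
        actions.append("notify_caregiver")       # potentially critical situation
    return actions
```

Keeping the routing as a pure function over a snapshot makes each branch of the flow easy to test in isolation before the sensor and network layers are wired in.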

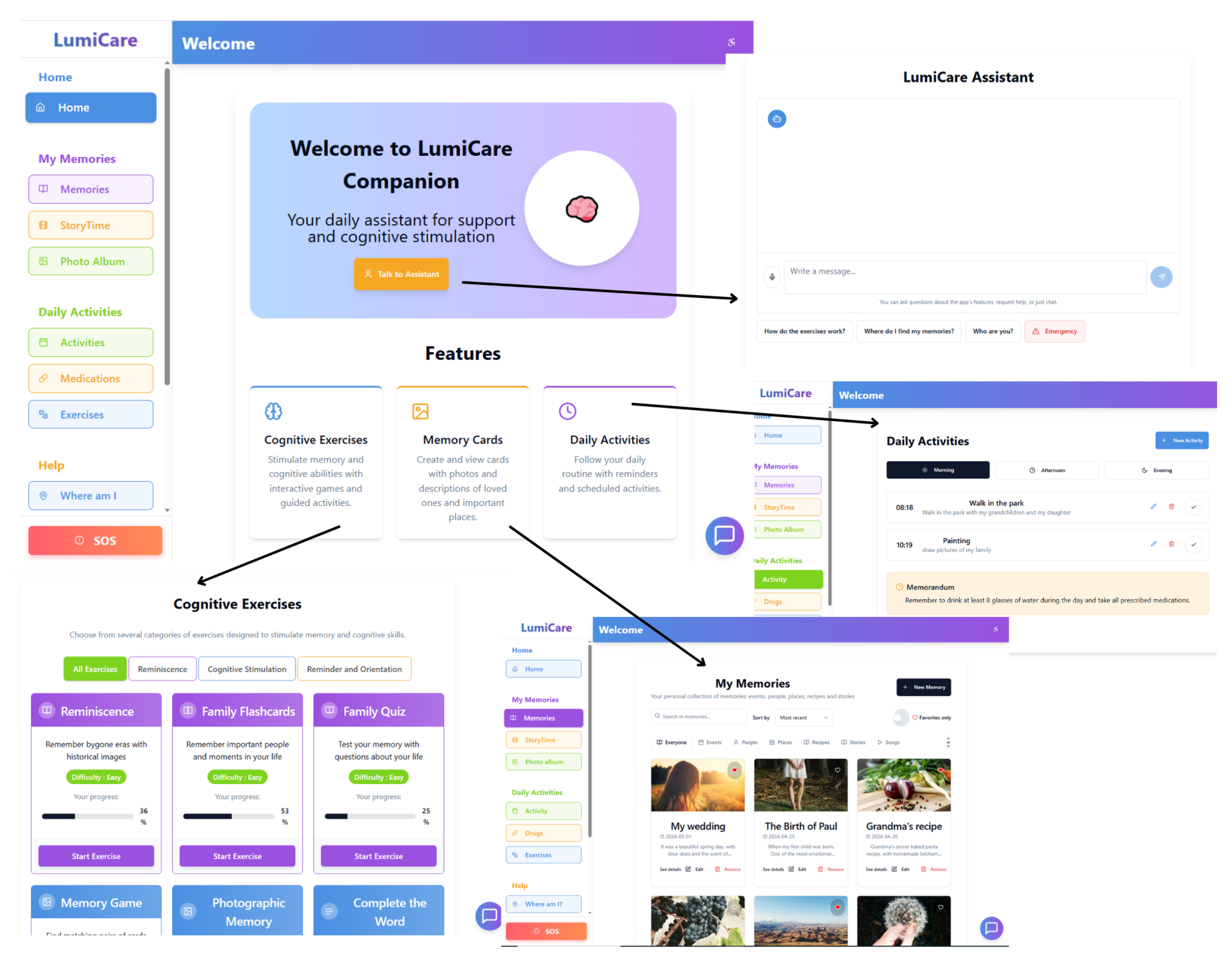

5. Interactive Application for Patient Support

In the daily lives of individuals affected by Alzheimer’s disease and their caregivers, technology can play a critical role in providing continuous, personalized assistance. This section introduces the LumiCare Companion, an interactive mobile application that is part of the LumiCare system, designed to assist patients throughout the day by combining cognitive stimulation, routine management, emergency support, and emotional well-being. By leveraging wearable devices and AI-driven functionalities, the application supports both the practical and psychological needs of users in a non-invasive and user-friendly manner.

The section begins by presenting the main features of the LumiCare Companion system, highlighting its user interface, accessibility options, virtual assistant integration, and tools for memory support and orientation. It then describes a typical day of use through a notification-based routine, showing how the system adapts to user behavior and responds to critical events. Finally, it outlines the internal workflow of the interactive application, explaining how the app coordinates with wearable sensors, context-aware prompts, and caregiver feedback mechanisms to ensure timely and effective support.

5.1. Daily Mental Stimulation

The interactive app offers a variety of customizable cognitive activities, shown in Figure 4, divided into three main categories:

Reminiscence: exercises that awaken memories using old photos, family flashcards, and small quizzes about the user’s own life;

Cognitive Stimulation: targeted games, from the classic memory game to a fun “Complete the Word” exercise, designed to keep language and memory functions active;

Orientation and Reminders: visual puzzles and simple quizzes that help improve time and space awareness, linking words and images naturally.

All activities are developed following the principles of non-pharmacological cognitive therapy.

5.2. An Accessible App for All

One of LumiCare’s strongest points is its focus on accessibility, including the following:

Simple and intuitive interface;

Readable fonts for users with visual impairments;

Adjustable contrast;

Multilingual support (e.g., English, French, Italian, Arabic, Hindi, and Chinese);

Integrated voice guidance that reads instructions in real time (in the selected language).

5.3. A Virtual Assistant That Makes You Feel Less Alone

Inside the app, a virtual assistant is always available, not only to guide the patient through the app but also to offer a friendly presence. It can perform the following:

Offer emotional support when the patient is alone;

Help the patient request assistance in emergencies;

Reduce anxiety and disorientation with continuous interaction.

5.4. Memories Are Precious

To support reminiscence and preserve personal identity, the Personal Memory Management feature lets users create digital photo albums, biographical cards, and collections of personal stories and family faces.

5.5. Daily Routines and Greater Independence

The interactive calendar allows users to easily plan their day, including the following:

A practical aid to support independence for as long as possible.

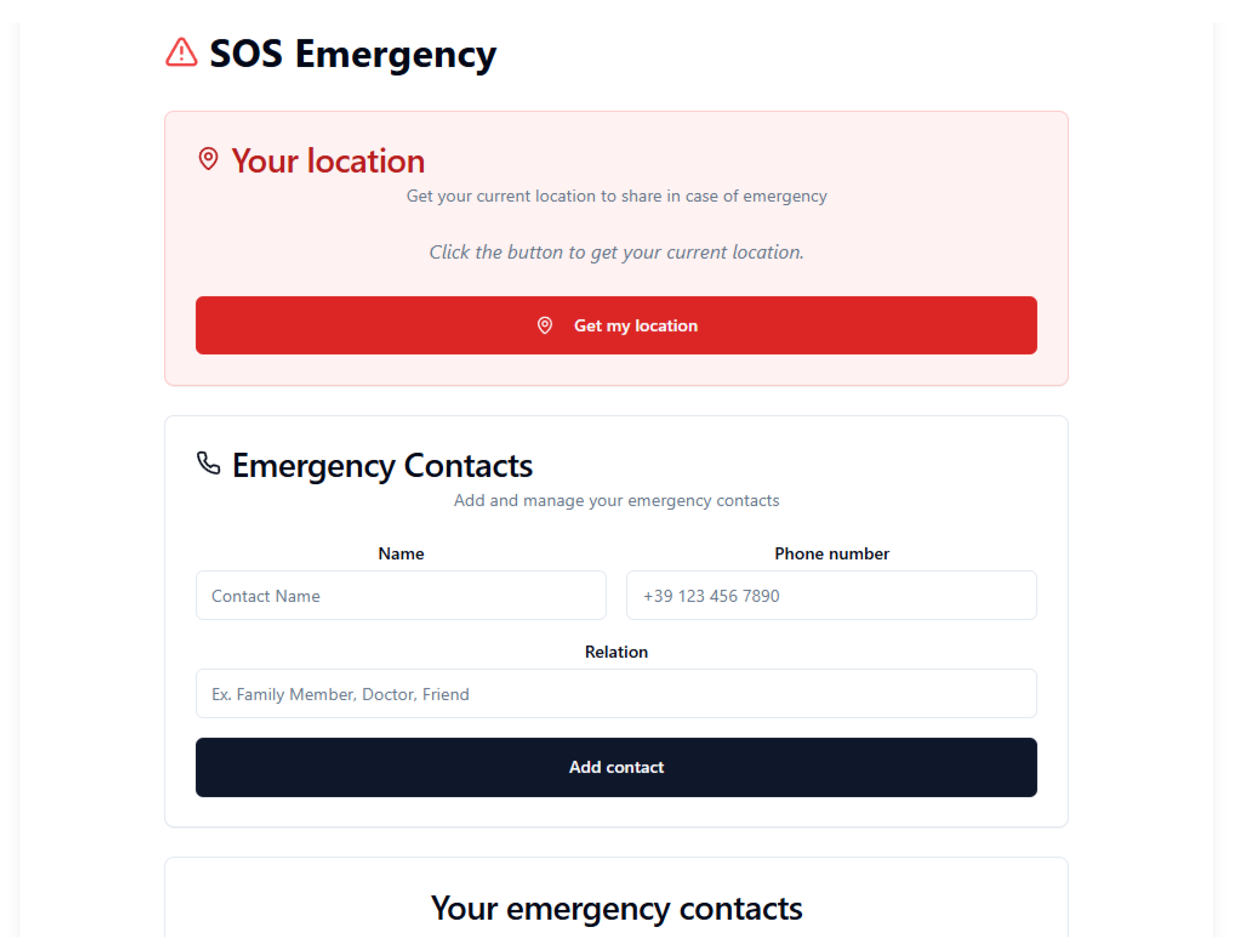

5.6. Greater Safety and Less Worry

In the SOS section, shown in Figure 5, users can perform the following:

A system designed to ensure maximum safety during critical moments.

5.7. A True Ally for Caregivers

LumiCare Companion is also a valuable support tool for caregivers, offering the following:

Stimulates the mind and slows cognitive decline;

Reinforces user independence;

Adapts to the user’s remaining abilities;

Reduces stress with relaxing activities and vocal support;

Allows caregivers to monitor progress.

5.8. How to Easily Set Daily Reminders

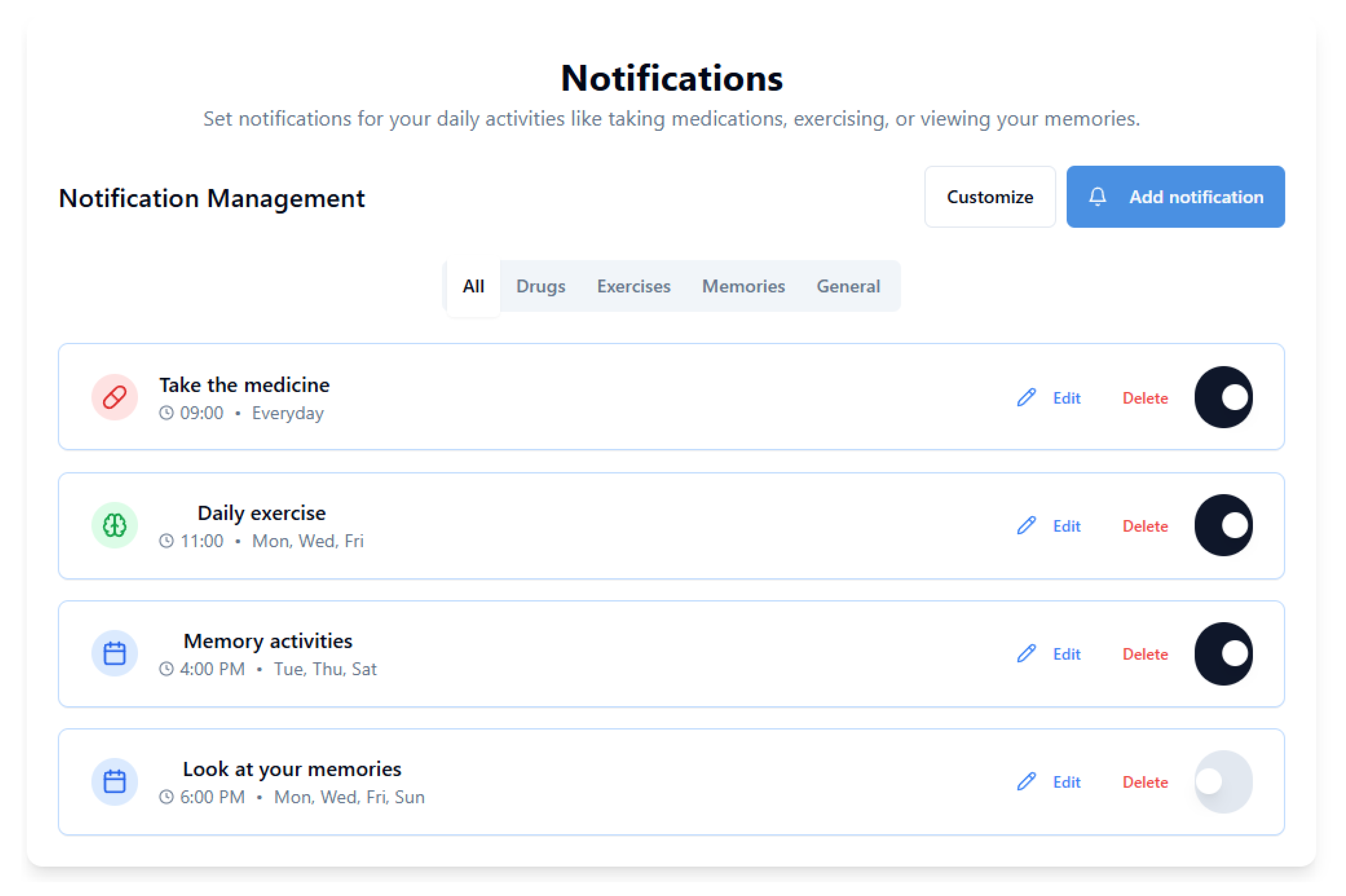

Setting daily notifications, as shown in Figure 6, is easy:

Go to the “Routine” section and set times for medications, meals, and personal hygiene or walks.

Enable silent or vocal push notifications.

Set automatic alerts in the “Daily Reminders” section.

Enable text-to-speech so the assistant reads all instructions aloud.

LumiCare Companion is much more than just an app: it is a bridge between technology and humanity, designed to accompany patients and their families through the most challenging moments, one day at a time.

5.9. Daily Routine with Alert and Notification System

5.9.1. Overview

The interactive application, integrated with a smart bracelet or smartwatch equipped with biometric, GPS, and motion sensors, provides continuous and personalized assistance to patients. It supports daily activities through intelligent notifications, monitoring, and adaptive interventions. The system responds dynamically to both completed and missed actions, issuing reminders, alerts, or caregiver notifications accordingly. A representative daily routine is detailed below.

Morning Routine (07:30–11:30)

07:30—Scheduled Wake-Up

Upon detecting wake-up activity, the system logs the start of the day and issues no further notifications. If no activity is detected within a predefined interval, auditory and haptic reminders are triggered. Persistent inactivity prompts a caregiver alert.

08:00—Personal Hygiene

Detected hygiene routines initiate guided voice and visual instructions. If undetected within a set time frame, a motivational voice prompt is issued. Continued inactivity results in an alert to the caregiver.

08:30—Breakfast and Morning Medication

Confirmed food intake and medication are logged and validated. Missed actions trigger multimodal reminders; lack of confirmation escalates to a caregiver alert.

10:00—Cognitive Exercise

Initiated exercises are monitored to assess engagement and update cognitive tracking. Missed sessions result in a notification, with repeated omissions escalating to caregiver alerts.

Afternoon Routine (12:00–17:30)

12:30—Lunch and Health Check

Normal vital signs and detected motion confirm that the meal was completed, and the biometric data are stored. Anomalies or prolonged inactivity trigger immediate alerts to caregivers.

14:00—Controlled Rest

If rest duration is within acceptable limits, the routine resumes automatically post-nap. Excessive rest time triggers a wake-up reminder, with further inaction prompting a caregiver alert.

16:00—Physical Activity / Walk

Successful activity within a predefined geofenced area is logged. Exiting the safe zone triggers a critical alert including GPS location, sent directly to the caregiver.

Evening Routine (18:00–22:00)

18:30—Dinner and Evening Medication

Medication intake is recorded, completing the daily medication cycle. Missed doses trigger repeated reminders; continued non-compliance results in caregiver notification.

20:00—Reminiscence Session

Participation is logged as cognitive feedback and informs future recommendations. Inactivity leads to alternative suggestions; persistent disengagement generates a warning alert.

21:30—Bedtime Preparation

Completing the bedtime routine switches the system to night mode with passive monitoring. If the patient remains active, a relaxing prompt is played; prolonged wakefulness triggers an alert.

22:00—Night Monitoring

If sleep patterns remain within normal parameters, data is silently recorded. Events such as falls, tachycardia, or frequent awakenings result in critical alerts and, if necessary, emergency contact notification.
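The geofence check in the 16:00 walk step can be illustrated with a circular safe zone and the haversine great-circle distance. The circular zone, the 200 m radius used below, and the `check_geofence` helper are assumptions for illustration; the routine does not specify how the safe zone is defined.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two WGS84 points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def check_geofence(lat: float, lon: float, center: tuple, radius_m: float):
    """Return None inside the safe zone, or a critical-alert dict outside it."""
    d = haversine_m(lat, lon, center[0], center[1])
    if d <= radius_m:
        return None
    # Outside the zone: critical alert carrying the current GPS position.
    return {"level": "critical", "lat": lat, "lon": lon, "distance_m": round(d)}


home = (45.0, 9.0)
print(check_geofence(45.001, 9.0, home, 200))  # about 111 m away: None
print(check_geofence(45.01, 9.0, home, 200))   # about 1.1 km away: critical alert
```

A polygonal zone (e.g., following the garden boundary) would need a point-in-polygon test instead, but the alerting logic would stay the same.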

5.9.2. Alert System Behavior

The system classifies events based on urgency:

Informative: Successful activity logged with positive feedback.

Warning: Missed or delayed activity triggers a patient notification and, if required, a caregiver alert.

Critical: Emergency (e.g., fall, abnormal vitals) initiates immediate caregiver notification and emergency response.
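The three urgency levels can be encoded as an ordered enumeration with a fixed response per level. In this sketch the event names in `CRITICAL_EVENTS` and the action labels are hypothetical, and the `escalate_to_caregiver` flag stands in for the "if required" condition of the warning level.

```python
from enum import IntEnum


class Level(IntEnum):
    INFORMATIVE = 0
    WARNING = 1
    CRITICAL = 2


# Hypothetical event names treated as emergencies.
CRITICAL_EVENTS = {"fall", "abnormal_vitals", "geofence_exit"}


def classify(event: str, completed: bool) -> Level:
    """Assign an urgency level to a monitored event."""
    if event in CRITICAL_EVENTS:
        return Level.CRITICAL
    return Level.INFORMATIVE if completed else Level.WARNING


def actions_for(level: Level, escalate_to_caregiver: bool = False) -> list[str]:
    """Return the responses associated with each urgency level."""
    if level is Level.CRITICAL:
        return ["notify_caregiver", "emergency_response"]
    if level is Level.WARNING:
        return ["notify_patient"] + (["notify_caregiver"] if escalate_to_caregiver else [])
    return ["log_activity", "positive_feedback"]
```

Using `IntEnum` keeps the levels comparable, so a diary view could, for instance, filter all events at or above `Level.WARNING`.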

5.9.3. Continuous Adaptation

All events are archived in a synchronized digital diary shared with caregivers. The system employs adaptive learning to tailor future interactions by adjusting prompt timing, modality, and content based on the user’s behavioral patterns and responsiveness. For example, if a patient consistently ignores notifications during a particular time window, the system may delay subsequent prompts or switch to a less intrusive modality such as visual reminders instead of audio prompts.

Over time, the system learns to identify optimal engagement strategies for each individual user. This includes selecting the most effective times for cognitive stimulation, choosing between audio or visual guidance, and fine-tuning the complexity of exercises based on historical performance. Patterns of compliance and delay are analyzed to derive predictive behavioral profiles that inform system decisions. For instance, if the system detects improved responsiveness following brief reminders instead of detailed guidance, it will prioritize brevity in future prompts.

Additionally, this adaptive logic is extended to emergency scenarios. If a user shows delayed reactions to standard alerts, LumiCare can escalate interventions more quickly or notify caregivers proactively. The same mechanism supports long-term personalization: as disease severity progresses, the system can gradually simplify instructions, increase repetition, and intensify caregiver involvement. Through this continuous feedback loop, LumiCare maintains a dynamic and evolving care model that aligns with each patient’s cognitive trajectory and daily rhythms.
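A minimal sketch of the adaptation rules above, assuming outcomes are tracked per hour of the day: if the user ignored most recent prompts in a given hour, the next prompt is delayed and switched from audio to a visual reminder, and caregiver escalation is brought forward when recent reactions were slow. The window size, thresholds, and `PromptAdapter` class are hypothetical; the text does not specify the learning method, and a deployed system could use a richer model.

```python
from collections import defaultdict, deque


class PromptAdapter:
    """Hypothetical per-user adapter for prompt modality, timing, escalation."""

    def __init__(self, window: int = 5, ignore_threshold: float = 0.6, delay_min: int = 15):
        # Last `window` outcomes (True = responded) for each hour of the day.
        self.history = defaultdict(lambda: deque(maxlen=window))
        self.ignore_threshold = ignore_threshold
        self.delay_min = delay_min

    def record(self, hour: int, responded: bool) -> None:
        self.history[hour].append(responded)

    def plan(self, hour: int) -> dict:
        """Use a delayed visual prompt if the user mostly ignores this slot."""
        outcomes = self.history.get(hour, ())
        ignored = sum(1 for r in outcomes if not r)
        rate = ignored / len(outcomes) if outcomes else 0.0
        if rate >= self.ignore_threshold:
            return {"modality": "visual", "delay_min": self.delay_min}
        return {"modality": "audio", "delay_min": 0}

    @staticmethod
    def escalation_timeout(base_min: float, recent_delays_min: list) -> float:
        """Halve the wait before caregiver escalation if reactions are slow."""
        if recent_delays_min and sum(recent_delays_min) / len(recent_delays_min) > base_min:
            return base_min / 2
        return base_min
```

The bounded `deque` makes the adapter forget old behavior, which loosely mirrors the need to track a patient whose abilities change over time.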

5.10. Interactive Application Workflow

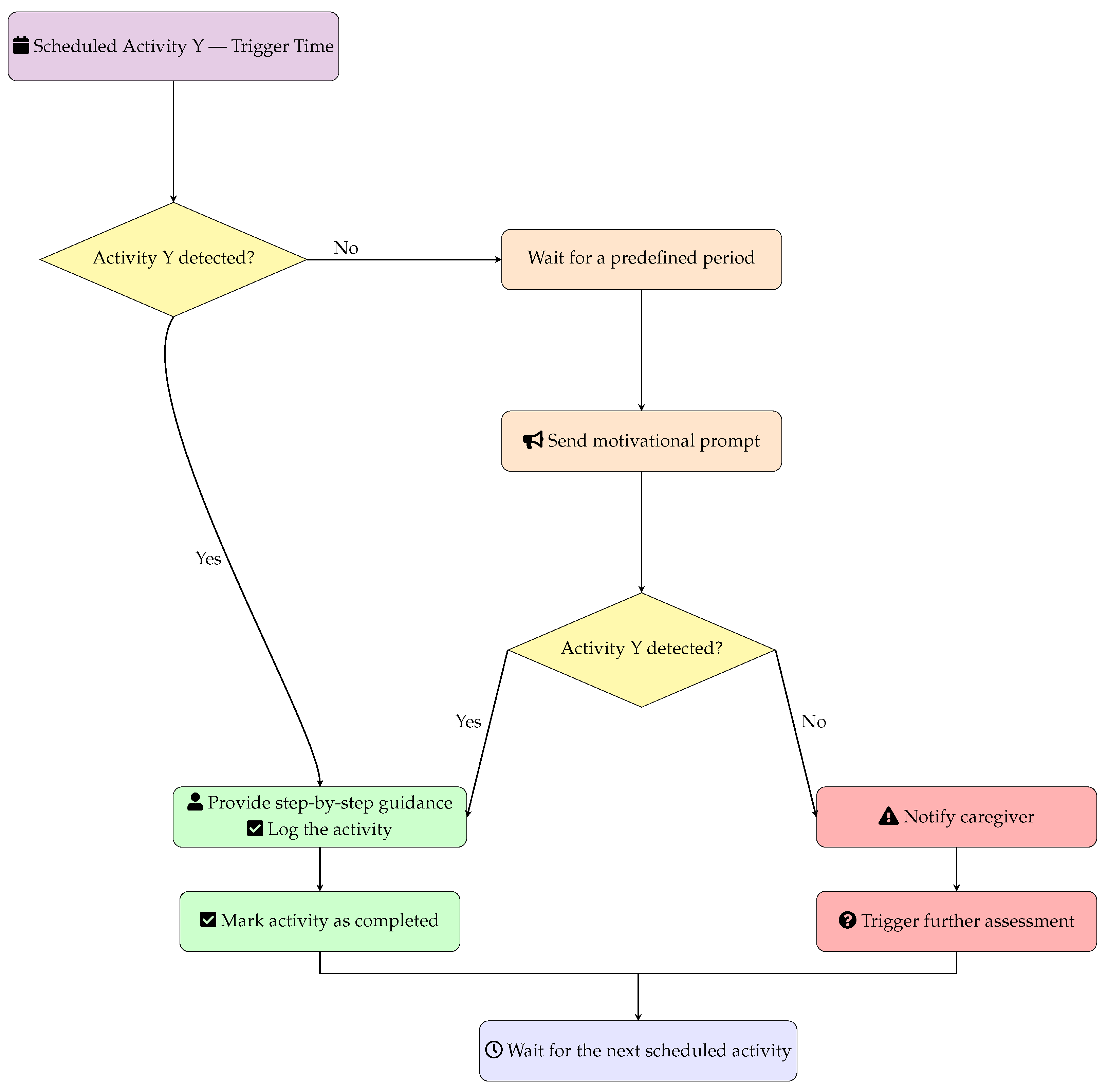

Figure 7 reports the generic workflow for automated activity monitoring, which is triggered for each scheduled activity in the care management system.

At the beginning, the system checks if the scheduled Activity Y is detected. The list of activities is reported above and includes, for example: personal hygiene, meals, medication intake, physical therapy, social activities, and daily living tasks. In case Activity Y is not detected within X minutes (e.g., 15 min from the scheduled time), the system automatically sends a motivational prompt to encourage the user to start the activity. This prompt can be delivered through various channels such as audio alerts, visual reminders, or mobile notifications. After the motivational prompt, the system waits an additional X minutes (same time frame as initial detection) to check if the activity has been initiated following the reminder. If the activity starts (either immediately when scheduled or after the motivational prompt), the system provides step-by-step guidance to help the user complete the task successfully and automatically logs the activity as completed in the care record. If the activity is still not started after the motivational prompt and additional waiting period, the system escalates by sending a caregiver alert to notify healthcare providers, family members, or care coordinators that immediate assessment and intervention may be required. Upon completion of either successful activity logging or caregiver alert generation, the system returns to standby mode and waits for the next scheduled activity trigger, creating a continuous monitoring cycle throughout the day. This automated approach ensures consistent care delivery while reducing caregiver workload and providing timely interventions when needed.
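The workflow above can be sketched as one monitoring cycle per scheduled activity. Here `detected_by` is a hypothetical stand-in for the sensor query, returning whether the activity has started within a given number of minutes after its scheduled time, and the returned strings simply name the actions taken.

```python
from typing import Callable


def monitor_activity(activity: str,
                     detected_by: Callable[[float], bool],
                     x_min: float = 15.0) -> list[str]:
    """Run one monitoring cycle for a scheduled activity."""
    events = []
    if detected_by(x_min):
        started = True
    else:
        # Not detected within X minutes: nudge the user, then wait X more.
        events.append(f"motivational_prompt:{activity}")
        started = detected_by(2 * x_min)
    if started:
        events += [f"step_by_step_guidance:{activity}", f"log_completed:{activity}"]
    else:
        events.append(f"caregiver_alert:{activity}")
    events.append("standby")  # wait for the next scheduled activity
    return events
```

With `x_min=15`, an activity detected only at minute 20 produces a motivational prompt followed by guidance and logging, while an activity that is never detected ends in a caregiver alert.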

The experimental setting is based on an Ubuntu 22.04 LTS environment running Ollama 2.1 with LLaMA 3.2. The model is given an initial set of instructions for interpreting clinical results and presenting them to the patient. Note that these results can be generated not only by LumiCare but also by other AI tools, including non-LLM solutions, operating locally, at the edge, or in the cloud. To foster user trust and ensure explainability, particularly for vulnerable populations affected by cognitive decline, we rely on a Retrieval-Augmented Generation (RAG) approach. In this configuration, the LLM is constrained to operate within a predefined knowledge base, which is intended to prevent the generation of content outside the validated domain. This allows the system to provide consistent, reliable responses limited to a specific range of topics, improving both transparency and safety. By restricting the model to information explicitly inserted and validated by clinicians and caregivers, the architecture keeps the AI-driven prompts trustworthy, explainable, and aligned with the needs of the target user group.
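The RAG constraint described above can be sketched as a gate in front of the LLM: questions are matched against a small, clinician-validated knowledge base, and the model is queried only with the retrieved passages. For simplicity this sketch uses keyword overlap instead of the embedding-based retrieval typical of RAG pipelines; the knowledge-base entries, the system prompt, and the function names are hypothetical. The payload fields (`model`, `system`, `prompt`, `stream`) follow Ollama's `/api/generate` endpoint, and `llama3.2` is the Ollama tag for LLaMA 3.2.

```python
import re

# Hypothetical clinician-validated knowledge base: curated keywords and
# approved text. Only these passages may reach the LLM.
KNOWLEDGE_BASE = [
    {"keywords": {"sleep", "slept", "tired", "night"},
     "text": "Try to keep a regular bedtime; a short rest after lunch can help."},
    {"keywords": {"water", "drink", "thirsty"},
     "text": "Remember to drink a glass of water every few hours."},
]

SYSTEM_PROMPT = ("Answer only with information in CONTEXT. If CONTEXT does not "
                 "cover the question, say that you will ask the caregiver.")


def retrieve(question: str) -> list[str]:
    """Return approved passages ranked by keyword overlap with the question."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    scored = [(len(words & e["keywords"]), e["text"]) for e in KNOWLEDGE_BASE]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for score, text in scored if score > 0]


def build_request(question: str, model: str = "llama3.2"):
    """Build an Ollama /api/generate payload, or None if out of scope."""
    passages = retrieve(question)
    if not passages:
        return None  # outside the validated domain: the LLM is not queried
    context = "\n".join(passages)
    return {
        "model": model,
        "system": SYSTEM_PROMPT,
        "prompt": f"CONTEXT:\n{context}\n\nQUESTION: {question}",
        "stream": False,
    }
```

On a default local installation the payload could be sent as JSON to `http://localhost:11434/api/generate`; returning `None` for out-of-scope questions keeps the LLM from answering outside the validated domain at all.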

6. Discussion and Conclusions

This paper presented LumiCare, a modular, edge-native architecture designed to support people with Alzheimer’s disease by seamlessly integrating wearable sensors, environmental IoT nodes, smartphone-based interaction, and a hierarchical AI framework that spans local devices, edge infrastructure, and cloud services. Through advanced fusion of multimodal data, including physiological signals, behavioral patterns, geospatial information, and conversational interactions, LumiCare provides continuous, context-aware cognitive assistance. The system is specifically designed to detect anomalies promptly, provide personalized cognitive and behavioral prompts, and generate timely notifications to caregivers or healthcare professionals.

Three core design principles underpin LumiCare: (i) unobtrusive longitudinal monitoring leveraging digital biomarkers such as sleep efficiency, gait regularity, and adherence to daily routines; (ii) low-latency and privacy-preserving inference executed directly on local devices, ensuring responsiveness and safeguarding user data; and (iii) intelligent, communication-aware off-loading of computationally intensive analytics tasks to edge or cloud platforms. The latter principle capitalizes on advanced 5G technologies, including network slicing, massive machine-type communication, and ultra-reliable low-latency communication, while proactively anticipating integration with forthcoming 6G enhancements such as integrated sensing-and-communication waveforms and sub-THz spectrum. This approach aims to ensure timely, reliable, and energy-efficient data exchange essential for high-quality cognitive assistance.

Despite its potential, LumiCare currently exists as a conceptual framework, and numerous assumptions regarding detection accuracy, system responsiveness, energy efficiency, and user acceptance require rigorous empirical validation. Practical deployment must consider diverse challenges, including hardware heterogeneity, sensor calibration, system interoperability, fault tolerance, and adaptive user-interface personalization to effectively accommodate cognitive and sensory variability among patients.

Ethical considerations remain paramount. Given the sensitive nature of continuous data collection from vulnerable populations, LumiCare emphasizes privacy-by-design methodologies, informed consent mechanisms, stringent data minimization strategies, and transparent, explainable algorithms. Furthermore, the system is explicitly designed to complement and enhance caregiver workflows without inducing alert fatigue or creating excessive reliance on automation. The overarching objective is to establish a balanced partnership between human caregivers and assistive technology, rather than replacing human involvement.

Future research efforts will involve pilot trials with patients and caregivers, integration with healthcare data standards (such as Fast Healthcare Interoperability Resources, FHIR), and the development of adaptive learning algorithms to enhance personalization. Additionally, a systematic evaluation of privacy, security, and explainability will be conducted in accordance with emerging regulatory frameworks. Future directions will explore the following: (a) real-time federated learning techniques that refine anomaly detection models locally, eliminating the need to centralize sensitive raw data; (b) extending LumiCare to support other neurodegenerative conditions, enabling cross-condition insights; (c) conducting longitudinal, multi-center clinical trials to quantify clinical outcomes, reductions in caregiver burden, and economic cost-effectiveness; and (d) leveraging secure hardware enclaves and promoting open health-data ecosystems to reinforce privacy, interoperability, and scalability.

Beyond other neurodegenerative diseases, the framework could also support patients with hearing, vision, speech, or motor impairments when connected to suitable actuators. For example, the system may detect a progressive hearing loss and assist the patient by displaying on-screen transcriptions of spoken sentences from people nearby. This functionality may also be applied adaptively, providing support only in noisy environments and simply monitoring under normal conditions. Similarly, the system may integrate with robotic elements to enhance mobility when motor impairments are detected.

In conclusion, LumiCare represents a promising approach toward personalized, ubiquitous, and ethically responsible cognitive assistance for dementia care. Although substantial engineering and clinical validation work remains necessary, the architecture detailed herein establishes a solid foundation for next-generation digital health interventions aimed at improving quality of life and reducing the burden on both patients and caregivers.