1. Introduction

Cross-site scripting (XSS) attacks remain a persistent security threat due to their widespread occurrence and ease of exploitation [1]. Machine learning-based detection, including reinforcement learning [2,3] and ensemble learning [4,5], has advanced significantly, with earlier studies [4,6,7] and more recent works [8,9,10,11] focusing on improving model architectures and feature extraction.

However, many methods still generalise poorly because the data are highly distributed and privacy concerns restrict sharing. Federated learning (FL) has emerged as a privacy-preserving alternative, allowing collaborative training without exposing raw data. This study explores the use of FL for XSS detection, addressing key challenges such as non-independent and identically distributed (non-IID) data, heterogeneity, and out-of-distribution (OOD) generalisation. While FL has been applied in cybersecurity [12,13], its role in XSS detection remains underexplored. Most prior works focus on network traffic analysis rather than text-based XSS payloads.

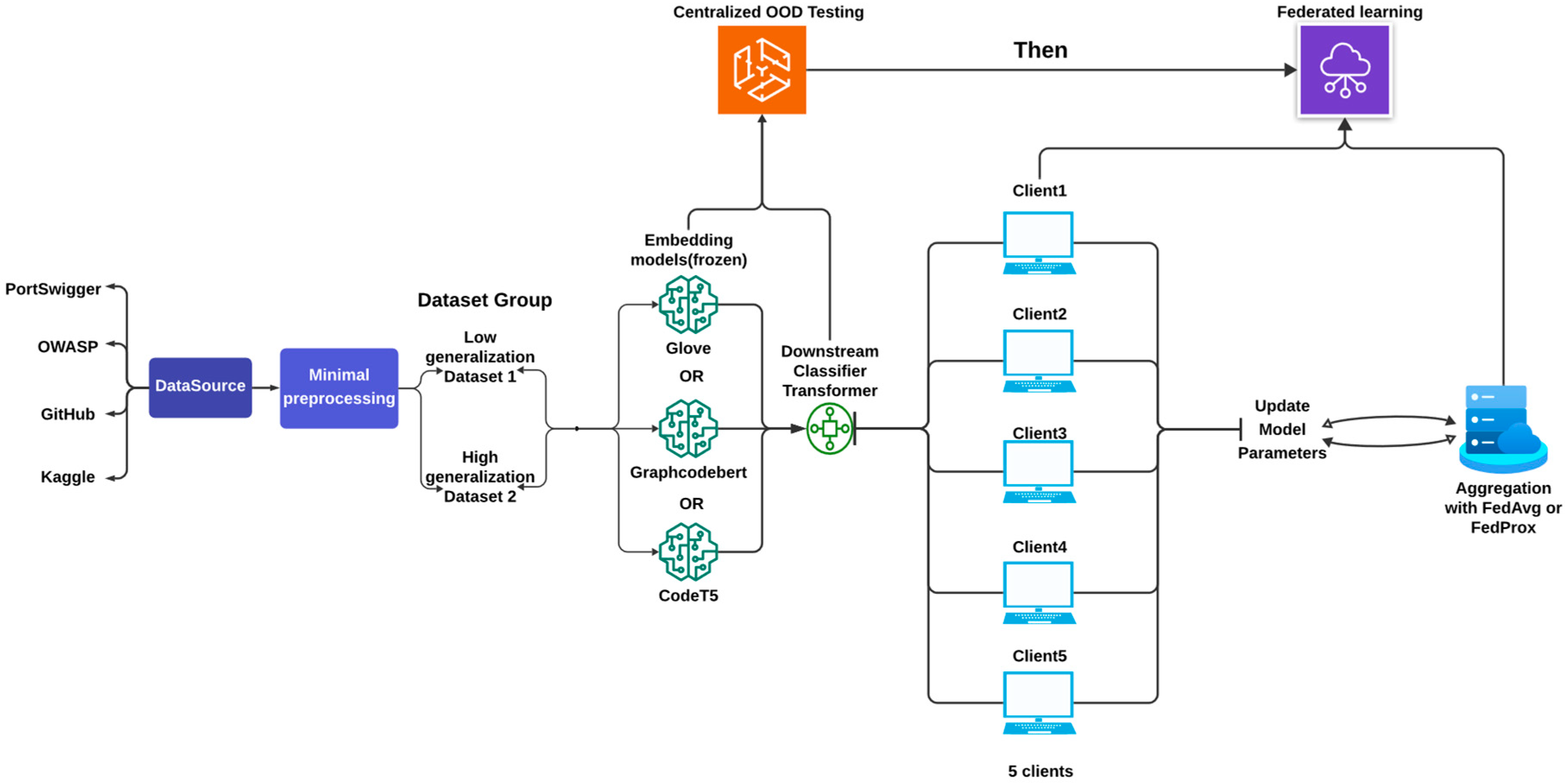

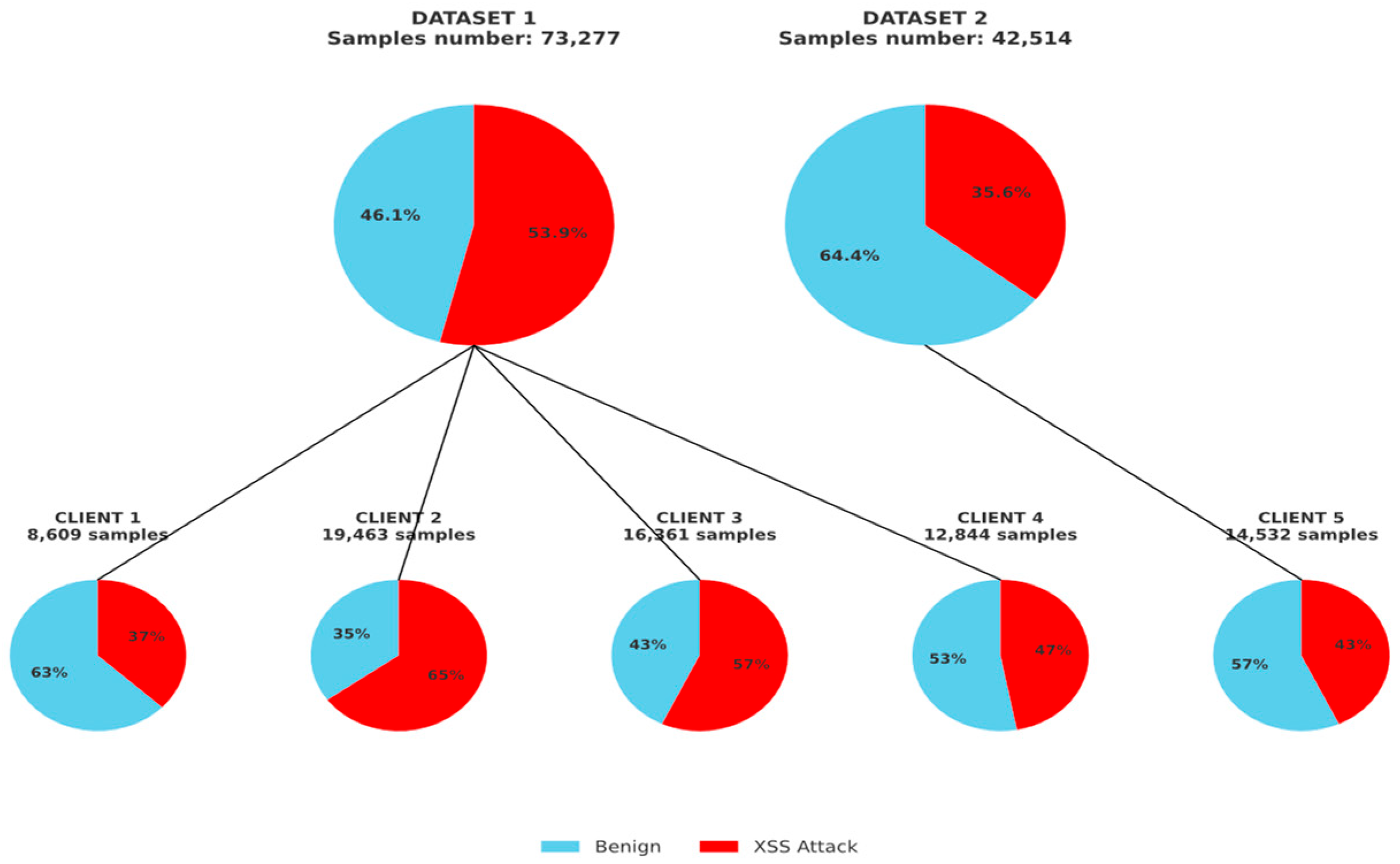

This study presents the first systematic application of federated learning to XSS detection under text-based XSS threat scenarios. Our key contributions are as follows.

We design a federated learning (FL) framework for XSS detection under structurally non-IID client distributions, incorporating diverse XSS types, obfuscation styles, and attack patterns. This setup reflects real-world asymmetry, where some clients contain partial or ambiguous indicators and others contain clearer attacks. Importantly, structural divergence also affects negatives, whose heterogeneity is a key yet underexplored factor in generalisation failure. Our framework enables the study of bidirectional OOD, where fragmented negatives cause high false positive rates under distribution mismatch.

Unlike prior work that mixes lexical or contextual features across splits, we maintain strict structural separation between training and testing data. By using an external dataset [14] as an OOD domain, we isolate bidirectional distributional shifts across both classes under FL. Our analysis shows that generalisation failure can also be driven by structurally complicated benign samples, not only by rare or obfuscated attacks, emphasising the importance of structure-aware dataset design.

We compare three embedding models (GloVe [15], CodeT5 [16], GraphCodeBERT [17]) in centralised and federated settings, showing that generalisation depends more on embedding compatibility with class heterogeneity than on model capacity. Using divergence metrics and ablation studies, we demonstrate that structurally complex and underrepresented negatives lead to severe false positives. Static embeddings like GloVe show more robust generalisation under structural OOD, indicating that stability relies more on representational resilience than expressiveness.

2. Related Work

Existing research on federated learning (FL) for XSS detection remains scarce. The most relevant work, by Jazi & Ben-Gal [18], investigated FL’s privacy-preserving properties using simplified setups and traditional models (e.g., MLP, KNN). Their non-IID configuration assumes an unrealistic “all-malicious vs. all-benign” client split, and evaluation is conducted separately on a handcrafted text-based XSS dataset [14] and the CICIDS2017 intrusion dataset [19]. However, they do not consider data heterogeneity or OOD generalisation. Still, the dataset [14] they selected is structurally rich and thus serves as a suitable OOD test dataset in our experiments (see Section 3.2).

Research on distributional shift or OOD has recently gained popularity [20,21,22,23], but most studies remain focused on computer vision, with few addressing specific cybersecurity scenarios in federated learning. For example, in addressing distribution shift in image anomaly detection [23], the authors proposed Generalised Normality Learning to mitigate differences between in-distribution (ID) and OOD data. In the FL domain, the FOOGD approach [22] utilised federated learning to handle two distinct types of distribution shift, namely covariate shift and semantic shift. That work offers valuable design insights (e.g., MMD and SM3D strategies), though its application domain is still primarily computer vision.

Heterogeneity in datasets remains a significant challenge for XSS detection [24,25,26,27]. The absence of standardised datasets, particularly in terms of class variety and sample volume, can have a substantial impact on the decision boundaries learned by detection models [28,29]. Most existing studies, including [6,9,10,11], attempt to address this issue through labour-intensive manual processing, aiming to ensure strict control over data quality, feature representation, label consistency, and class definitions.

However, we argue that complete reliance on manual curation often fails to reflect real-world conditions. In practical cybersecurity scenarios, data imbalance is both common and inevitable, especially regarding the ratio and diversity of attack versus non-attack samples [27,28,30]. This often results in pronounced structural and categorical divergence between positive and negative classes. For example, commonly used XSS filters frequently over-filter benign inputs [31], indicating a mismatch between curated datasets and actual deployment environments.

In light of these challenges, federated learning demonstrates strong potential. It enables models to share decision boundaries through privacy-preserving aggregation [20,32], offering an effective alternative to centralised data collection and manual intervention.

Meanwhile, we argue that findings from FL research on malicious URL detection [33,34] are partially transferable to XSS detection. Although some malicious URLs may embed XSS payloads, the two tasks differ in semantic granularity, execution contexts, and structural variability. Because the two tasks share challenges such as class imbalance, distribution shift, and non-IID data, we consider FL techniques proven effective for URL detection a reasonable foundation for XSS adaptation.

The high sensitivity of XSS-related information, such as emails or session tokens, makes sharing difficult without anonymisation. Yet studies [35,36] show that anonymisation often introduces significant distributional shifts due to strategy-specific biases. Disparities in logging, encoding, and user behaviour further distort data distributions, compromising generalisation [35,36].

For example, strings embedded in polyglot-style payloads are hard to anonymise, as minor changes may affect execution. Consider the following sample:

<javascript:/*-><img/src=‘x’onerror=eval(unescape(/%61%6c%65%72%74%28%27%45%78%66%69%6c%3A%20%2b%20%27%2b%60test@example.com:1849%60%29/))>

Naively replacing “test@example.com” with an unquoted *** breaks JavaScript syntax, rendering the sample invalid and misleading detectors. While AST-based desensitisation can preserve structure, it is complex, labour-intensive, and lacks scalability [37].
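The effect described above can be illustrated with a small sketch. It decodes the URL-encoded argument of the sample payload and compares two redaction strategies. The function names, the placeholder address `user@redacted.invalid`, and the delimiter count used as a crude stand-in for a syntax check are all illustrative assumptions, not part of the pipeline used in this study.

```python
import re
from urllib.parse import unquote

# URL-encoded argument of unescape(...) taken from the sample payload.
ENCODED = (
    "%61%6c%65%72%74%28%27%45%78%66%69%6c%3A%20%2b%20%27%2b"
    "%60test@example.com:1849%60%29"
)

# Deliberately simple e-mail pattern; real redaction rules would be stricter.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# A backtick-delimited template literal that contains an "@".
BACKTICK_LITERAL_WITH_EMAIL = re.compile(r"`[^`]*@[^`]*`")


def naive_redact(script):
    """Replace the whole literal, delimiters included, with an unquoted ***."""
    return BACKTICK_LITERAL_WITH_EMAIL.sub("***", script)


def shape_preserving_redact(script):
    """Replace only the address and leave the surrounding delimiters intact."""
    return EMAIL.sub("user@redacted.invalid", script)


def delimiter_profile(script):
    """Count quote, backtick, and parenthesis characters (not a real parser)."""
    return {ch: script.count(ch) for ch in "`'\"()"}


decoded = unquote(ENCODED)
naive = naive_redact(decoded)
preserved = shape_preserving_redact(decoded)

if __name__ == "__main__":
    print(decoded)
    print(naive, delimiter_profile(naive))
    print(preserved, delimiter_profile(preserved))
```

The naive variant leaves a bare `***` where a string expression is expected, whereas the shape-preserving variant keeps the same delimiter profile as the original. The delimiter count only approximates syntactic validity, so it shows the problem without replacing AST-based processing.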

To address these challenges, this study introduces a federated learning (FL) framework to enhance XSS detection while preserving data privacy, especially under an OOD scenario. FL enables collaborative training without exposing raw data [12,32], mitigating distributional divergence and improving robustness [22,32]. More importantly, our approach leverages structurally well-aligned, semantically coherent clients to anchor global decision boundaries, allowing their generalisation capabilities to be implicitly shared across clients with fragmented, noisy, or ambiguous data distributions. In doing so, we avoid the need for centralised, large-scale anonymisation or sanitisation and instead provide low-quality clients with clearer classification margins without direct data sharing or manual intervention. This decentralised knowledge transfer mechanism forms the basis of our FL framework, detailed in Section 5, and evaluated under dual OOD settings across three embedding models.
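As a rough illustration of how parameters, rather than raw payloads, move between clients and server, the sketch below runs one round of sample-weighted averaging with a FedProx-style proximal term in the local step. The toy quadratic client losses, the values of `mu`, `lr`, and `steps`, and all function names are assumptions made for illustration; they do not reproduce the configuration used in this study.

```python
def local_update(global_w, target, mu=0.1, lr=0.1, steps=20):
    """Toy client step.

    Minimises 0.5*||w - target||^2 + (mu/2)*||w - global_w||^2 by gradient
    descent. `target` stands in for whatever the client's local data prefer;
    the proximal term keeps the local model close to the global one.
    """
    w = list(global_w)
    for _ in range(steps):
        w = [
            wi - lr * ((wi - ti) + mu * (wi - gi))
            for wi, ti, gi in zip(w, target, global_w)
        ]
    return w


def aggregate(client_weights, client_sizes):
    """Sample-weighted average of client parameter vectors."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]


def run_round(global_w, client_targets, client_sizes, mu=0.1):
    """One communication round: local updates, then server-side aggregation."""
    updates = [local_update(global_w, t, mu=mu) for t in client_targets]
    return aggregate(updates, client_sizes)


if __name__ == "__main__":
    # Two hypothetical clients with different local optima and data volumes.
    print(run_round([0.0, 0.0], [[1.0, 1.0], [3.0, 3.0]], [100, 300]))
```

Only the parameter vectors cross the client boundary in this sketch, so a client with cleaner data can influence the shared model without revealing any sample.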

Section 4 will explain the centralised OOD testing.

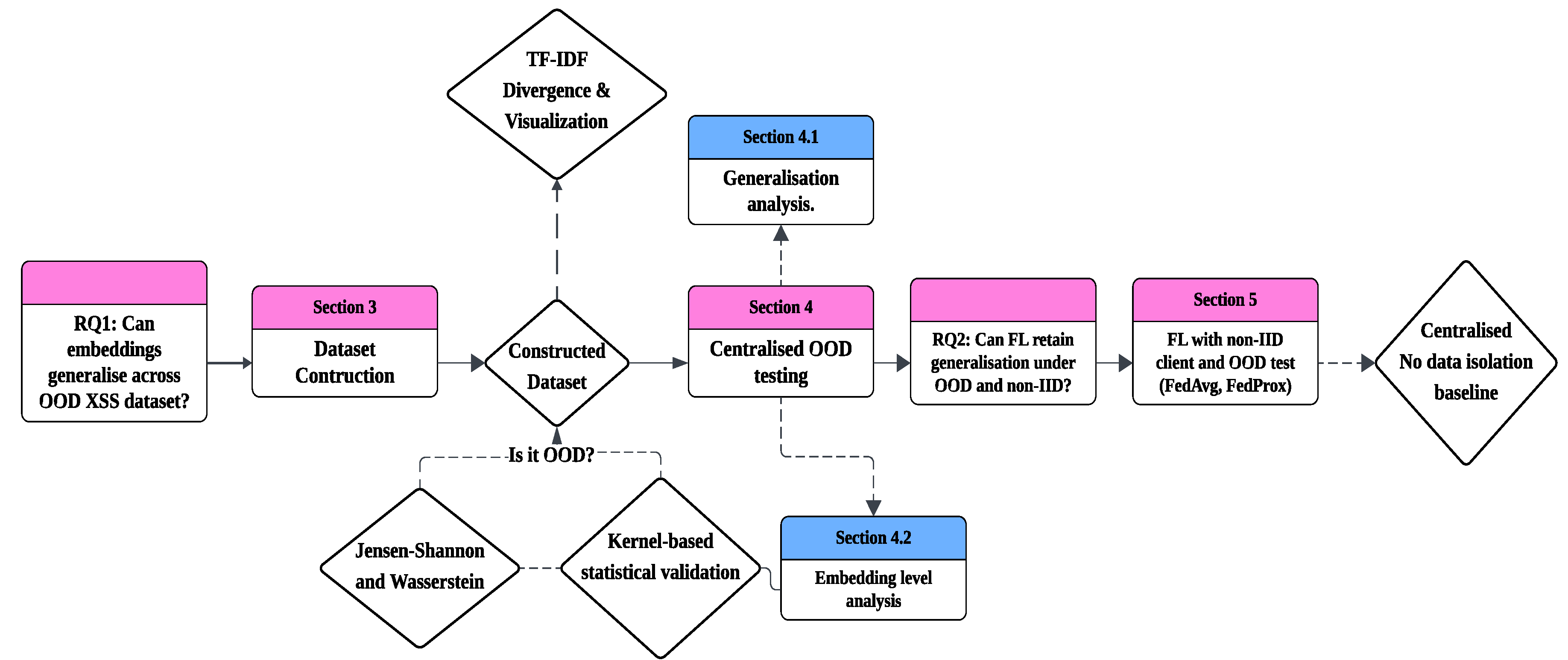

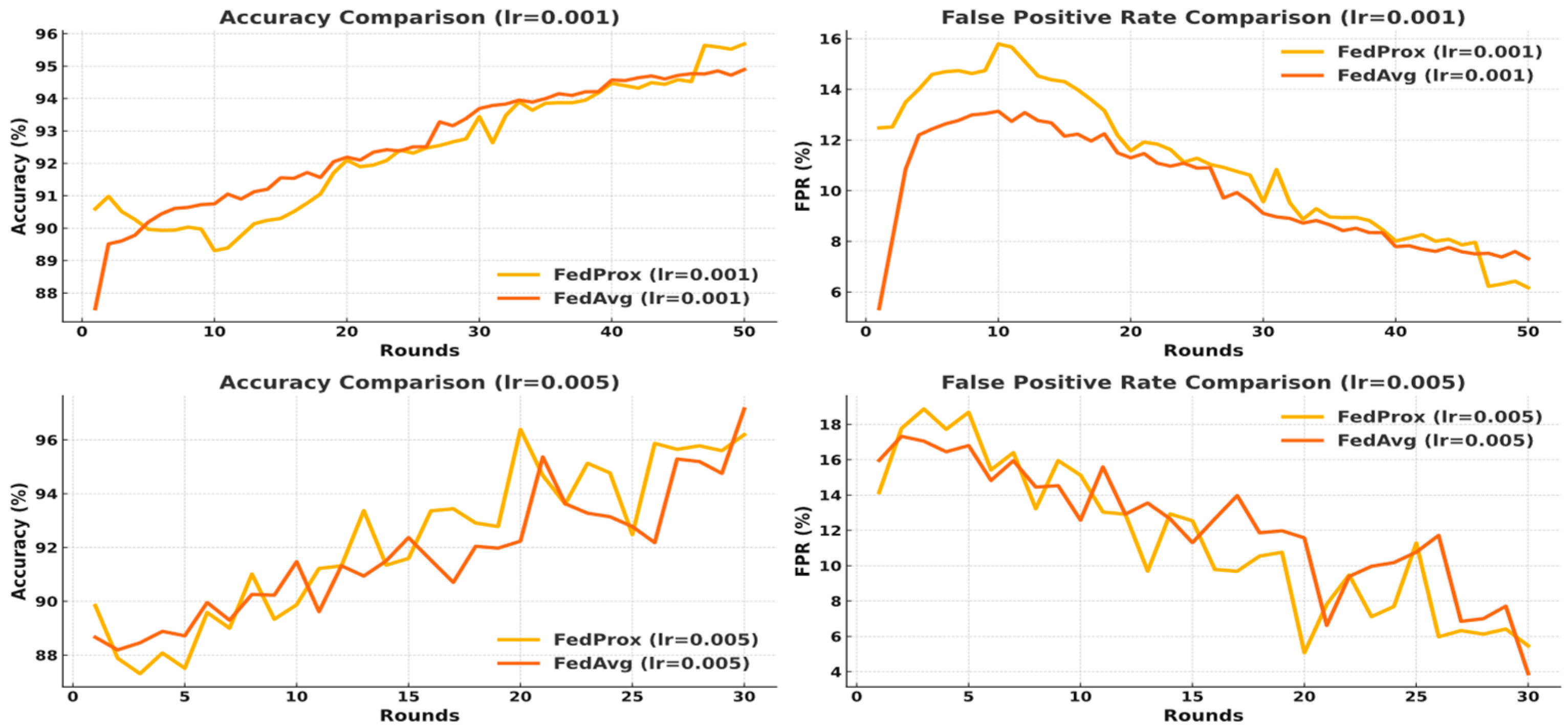

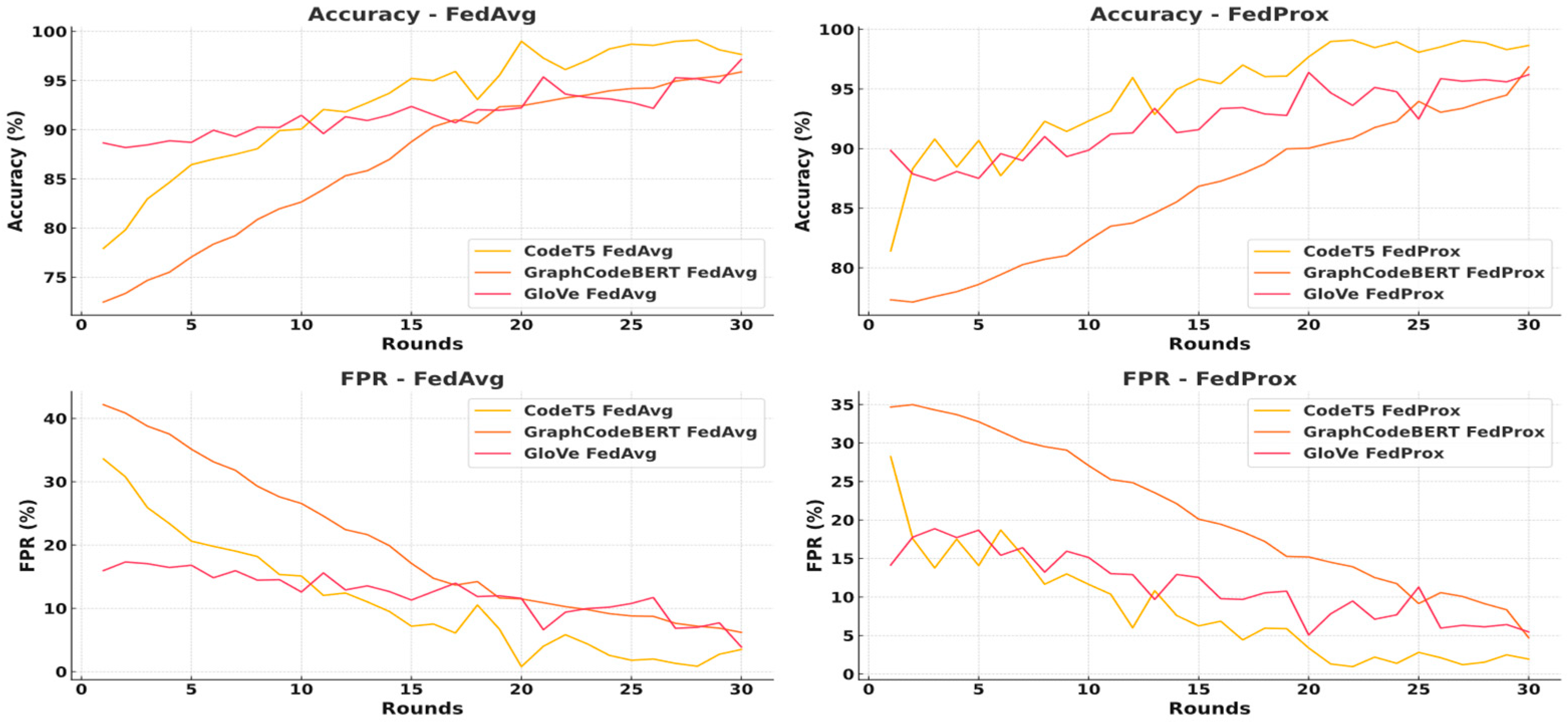

4. Independent Client Testing with OOD Distributed Data

In the first part of our evaluation, we trained on Dataset 1 and tested on Dataset 2, then reversed the setup. While both datasets target reflected XSS, they differ in structural and lexical characteristics, as detailed in Section 3.1. This asymmetry, present in both positive and negative samples, led to significant generalisation gaps. In particular, models trained on one dataset exhibited lower precision and increased false positive rates when tested on the other, reflecting the impact of data divergence under OOD settings.

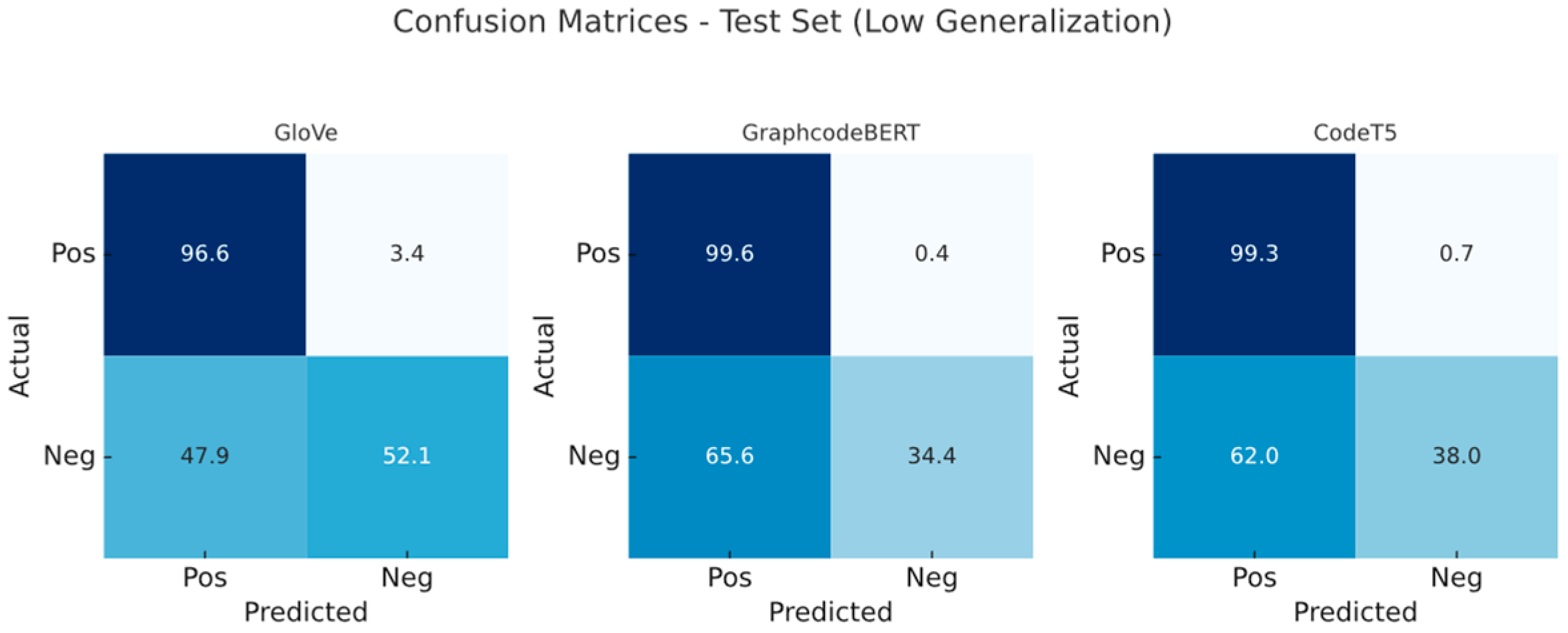

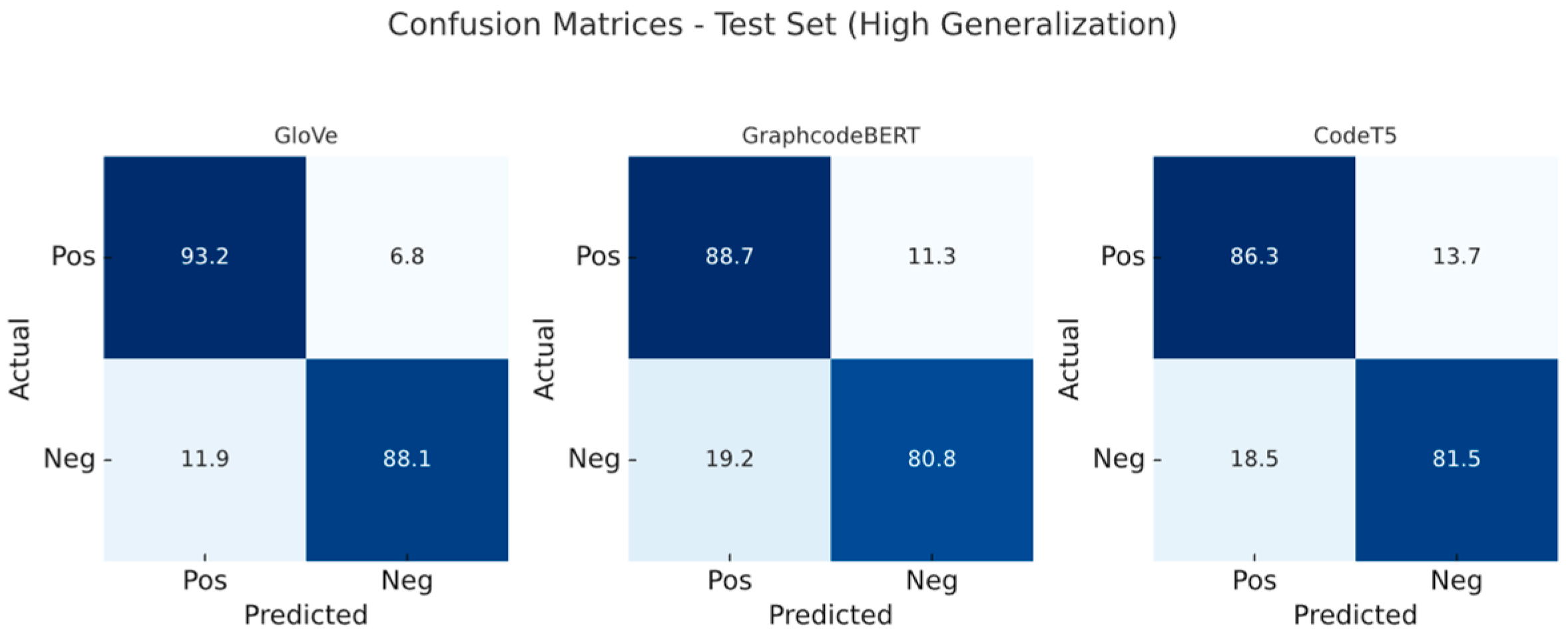

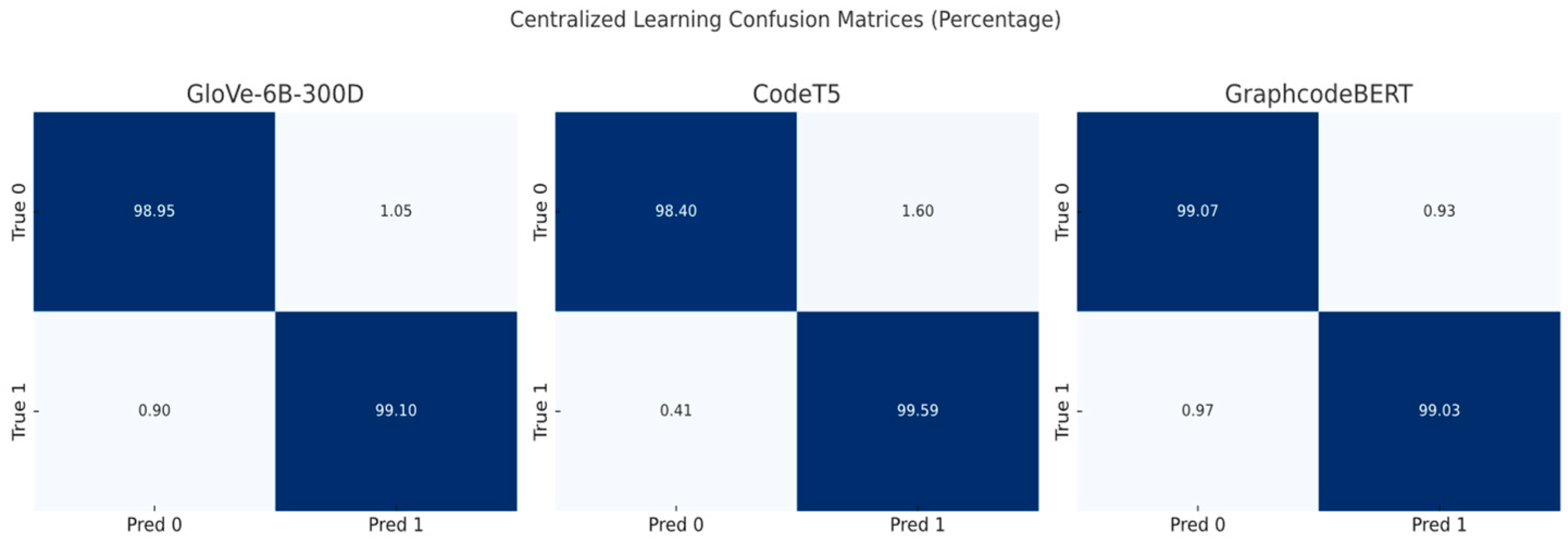

We evaluated all three embedding models under both configurations. Confusion matrices (Figure 4 and Figure 5) illustrate the classification differences when trained on low- versus high-generalisation data, respectively. Before this, we established performance baselines via 20% splits on the original training set to rule out overfitting (Table 3).

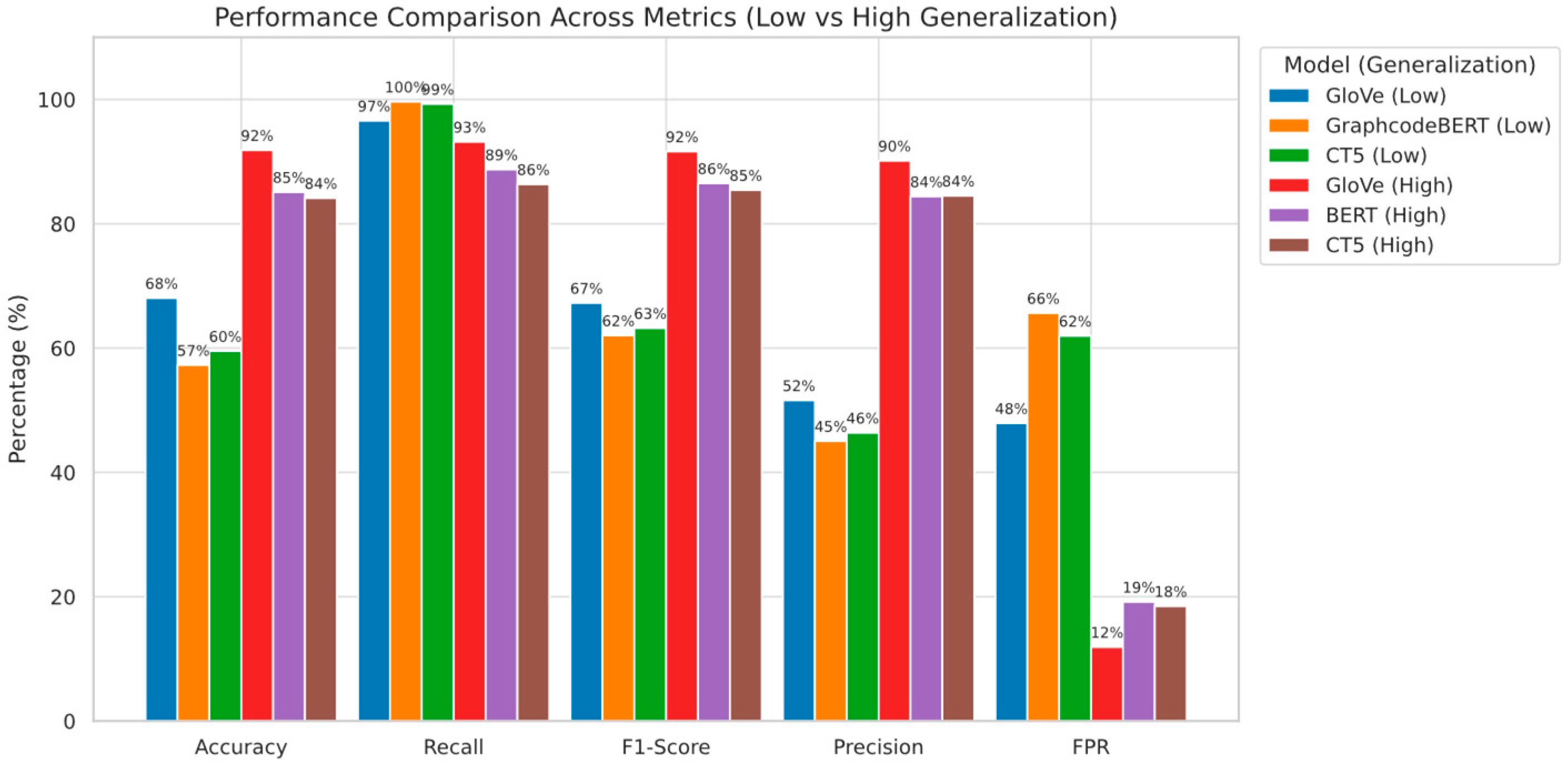

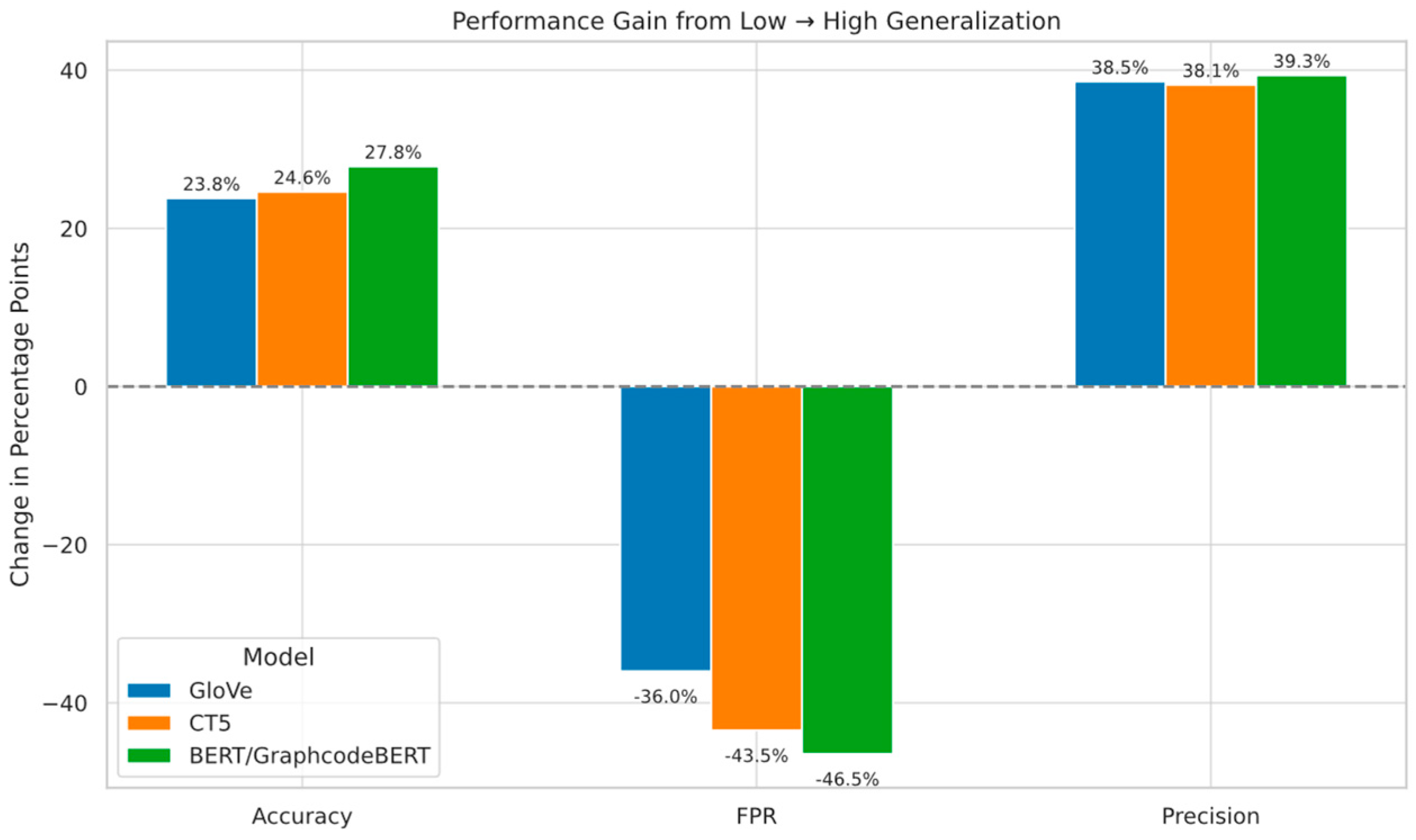

Figure 6 summarises cross-distribution performance under each model, and Figure 7 highlights the extent of performance shifts under structural OOD. These results confirm that both positive and negative class structures play a critical role in the generalisation performance of XSS detectors.

To isolate the impact of positive sample structure, we conducted cross-set training on the most structure-sensitive model, GraphCodeBERT, in which the training positives originated from the high-generalisation Dataset 2 while the fragmented negatives from Dataset 1 were retained. Compared to the baseline trained entirely on Dataset 1, this setup substantially improved accuracy (from 56.80% to 71.57%) and precision (from 44.82% to 68.39%), with recall slightly increased to 99.70%. These findings highlight that structural integrity in positive samples enhances model confidence and generalisability even under noisy negative supervision. Conversely, the fragmented negatives primarily increase false positives (FPR 68.19%). See Table 4.
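For readers who want to recompute the reported quantities, the following minimal sketch derives accuracy, precision, recall, and FPR from confusion-matrix counts. The function name and the example counts are illustrative assumptions and are not taken from the tables in this paper.

```python
def binary_metrics(tp, fp, tn, fn):
    """Return accuracy, precision, recall, and false positive rate.

    tp/fp/tn/fn are confusion-matrix counts with XSS as the positive class.
    """
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "fpr": fp / (fp + tn),
    }


if __name__ == "__main__":
    # Hypothetical counts: near-perfect recall, but many benign samples flagged.
    for name, value in binary_metrics(tp=99, fp=60, tn=40, fn=1).items():
        print(f"{name}: {value:.4f}")
```

With counts like these, recall stays high while precision and FPR degrade, which is the pattern discussed in this section: the errors are concentrated in benign samples that are flagged as attacks.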

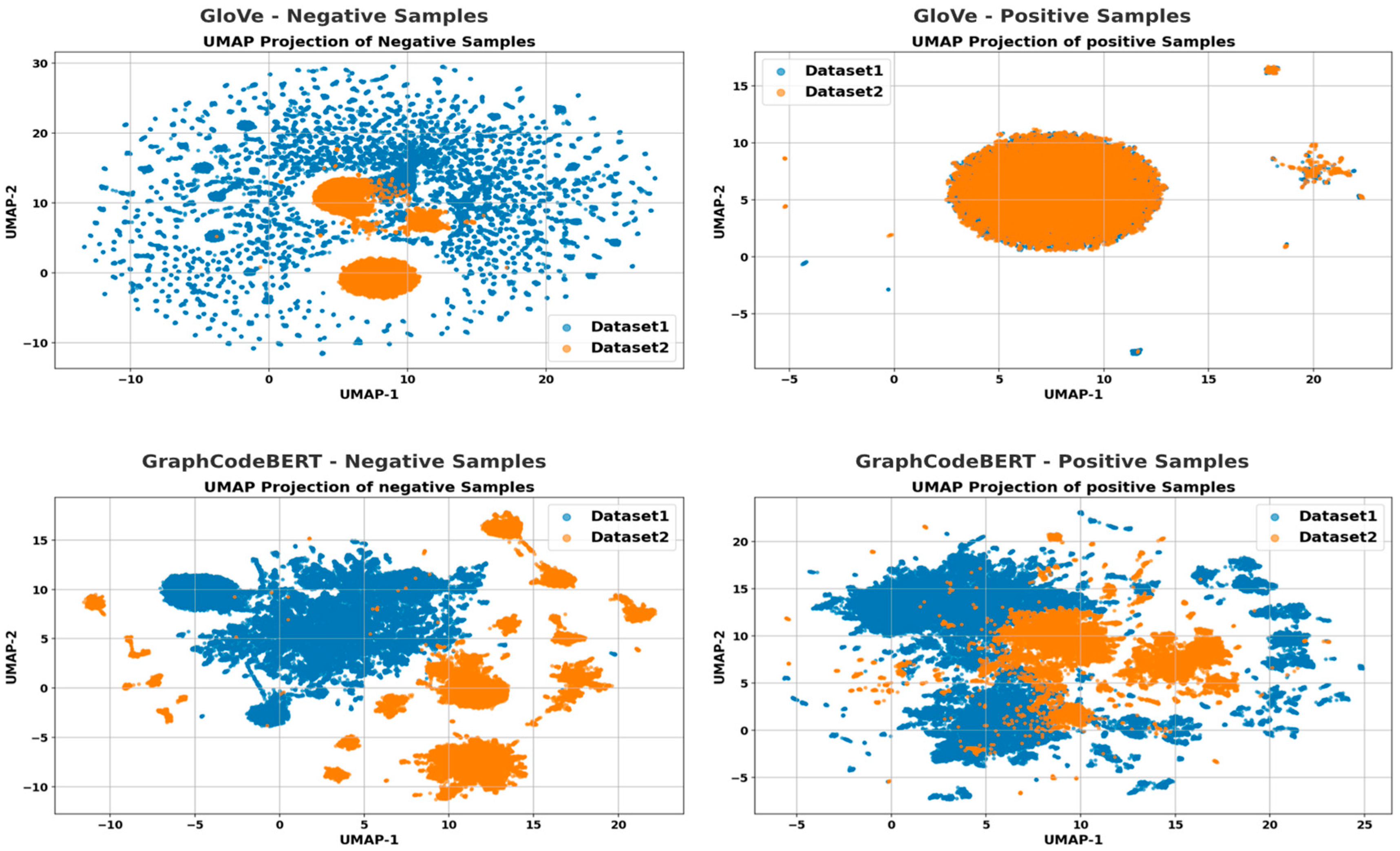

4.1. Generalisation Performance Analysis

We evaluate the generalisation ability of GloVe, GraphCodeBERT, and CodeT5 embeddings by training on the structurally diverse and fragmented Dataset 1 and testing on the high-generalisation Dataset 2. All models experience a significant drop in performance, particularly in precision and false positive rate (FPR), indicating high sensitivity to structural shifts across datasets.

GraphCodeBERT shows the most severe performance degradation, with precision dropping from 84.38% to 45.03% (−39.35 percentage points) and FPR increasing from 19.16% to 65.62% (+46.46 percentage points). Despite maintaining nearly perfect recall (99.63%), it heavily overpredicts positives when faced with unfamiliar structures, suggesting poor robustness to syntactic variance due to its code-centric pre-training.

CodeT5 suffers slightly less, but still significant, degradation: precision drops from 84.50% to 46.36% (−38.14 percentage points), and FPR rises from 18.47% to 61.95% (+43.48 percentage points). This suggests that while its span-masked pre-training aids structural abstraction, it still fails under negative class distribution shift.

GloVe demonstrates the most stable cross-dataset performance. Its precision decline from 90.13% to 51.58% (−38.55 percentage points) is comparable to that of the other models, but its FPR increase from 11.90% to 47.90% (+36.00 percentage points) is the smallest of the three, and its final precision remains the highest. Although static and context-agnostic, GloVe is less vulnerable to structural OOD, likely due to its reliance on global co-occurrence statistics rather than positional or syntactic features.

These results support that structural generalisation failure arises from both positive class fragmentation and negative class dissimilarity. Models relying on local syntax (e.g., GraphCodeBERT) are more prone to false positives, while those leveraging global distributional features (e.g., GloVe) exhibit relatively better robustness under extreme OOD scenarios.

Sensitivity of Embeddings to Regularisation Under OOD

Under structural OOD conditions, CodeT5 achieved high recall (≥99%) but suffered from low precision and high FPR, indicating overfitting to local patterns. Stronger regularisation (dropout = 0.3, lr = 0.0005) led to improved precision (+4.73%) and reduced FPR (−10.89%), showing modest gains in robustness. GloVe benefited the most from regularisation, with FPR dropping to 29.49% and precision rising to 63.41%. In contrast, GraphCodeBERT remained relatively insensitive to regularisation, with smaller changes across settings. These results suggest that structure-sensitive embeddings require tuning to remain effective under structural shift, while static embeddings like GloVe offer more stable performance.

Notably, we also observed that stronger dropout regularisation tends to widen the performance gap between the best and worst OOD scenarios, especially for GloVe (4% to 9%). See Table 5.

4.2. Embedding Level Analysis

To assess whether embedding similarity correlates with generalisation, we computed pairwise Jensen–Shannon divergence (JSD) [57] and Wasserstein distances (WD) [58] across models on both datasets. Here, $P$ and $Q$ are the probability distributions of the two embedding sets; $M$ is their mean distribution; KL is the Kullback–Leibler divergence from one distribution to another; and $F_P(x) - F_Q(x)$ is the difference between their cumulative distribution functions. $\mathrm{JSD}(P \parallel Q)$ is a symmetric, smoothed divergence metric capturing the balanced difference between $P$ and $Q$.
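For reference, the symbols listed above match the standard textbook definitions of the two metrics, reproduced here. It is an assumption that the experiments use exactly these forms, in particular the order-1 Wasserstein distance over one-dimensional distributions.

$$
M = \tfrac{1}{2}(P + Q), \qquad
\mathrm{JSD}(P \parallel Q) = \tfrac{1}{2}\,\mathrm{KL}(P \parallel M) + \tfrac{1}{2}\,\mathrm{KL}(Q \parallel M),
$$

$$
\mathrm{KL}(P \parallel M) = \sum_{x} P(x)\,\log\frac{P(x)}{M(x)}, \qquad
W_1(P, Q) = \int_{-\infty}^{\infty} \left| F_P(x) - F_Q(x) \right| \, dx .
$$

JSD is bounded (by 1 when the logarithm is taken to base 2) and compares probability mass bin by bin, whereas $W_1$ also accounts for how far the mass has to move. This is why the two metrics can rank the same pair of embedding sets differently.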

As shown in Table 6, the three embedding models respond differently to structural variation. GraphCodeBERT has the lowest JSD (0.2444) but the highest WD (0.0758), suggesting its embeddings shift more sharply in space despite low average token divergence. This sensitivity leads to poor generalisation, with false positive rates exceeding 65% under OOD tests. GloVe shows the highest JSD (0.3402) and moderate WD (0.0562), indicating broader but smoother distribution changes. It performs most stably in OOD scenarios, likely due to better tolerance of structural drift. CodeT5 has the lowest WD (0.0237), meaning its embeddings change little across structure shifts. However, this low sensitivity results in degraded precision, especially for negative-class drift.
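A minimal sketch of how the two divergence measures can be computed is given below. It assumes the embeddings have already been reduced to discrete histograms (for JSD) and to equal-sized one-dimensional samples (for the Wasserstein distance). How the embedding sets were actually binned or projected in this study is not specified here, so the inputs and function names are illustrative.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in bits for discrete distributions given as equal-length lists."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jsd(p, q):
    """Jensen-Shannon divergence with base-2 logarithm (range 0 to 1)."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def wasserstein_1d(xs, ys):
    """Order-1 Wasserstein distance between two equal-sized 1-D samples.

    For equal sample sizes this equals the mean absolute difference between
    the sorted samples.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


if __name__ == "__main__":
    print(jsd([0.5, 0.5, 0.0], [0.1, 0.4, 0.5]))
    print(wasserstein_1d([0.0, 1.0, 2.0], [0.5, 1.5, 4.0]))
```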

Kernel-Based Statistical Validation of OOD Divergence

While metrics like JSD and Wasserstein quantify distributional shifts, they do not assess statistical significance. To address this, we compute the Maximum Mean Discrepancy (MMD) between Dataset 1 and Dataset 2 using Random Fourier Features (RFFs) for efficiency, with 40,000 samples per set.

Across all samples, the MMD scores of the embeddings are 0.001633 (GraphCodeBERT), 0.082517 (GloVe), and 0.118169 (CodeT5).

For positive samples: 0.000176 (GloVe), 0.000853 (GraphCodeBERT), and 0.106470 (CodeT5).

For negative samples: 0.004105 (GraphCodeBERT), 0.007960 (CodeT5), and 0.517704 (GloVe).

The permutation-test p-values for all embeddings are below 0.001, indicating a distinct OOD shift.

These results confirm a statistically significant distributional shift and semantic OOD in negative samples. The formulation is given below.

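$$
\mathrm{MMD}^2(X, Y) = \left\| \frac{1}{n}\sum_{i=1}^{n} \phi(x_i) - \frac{1}{m}\sum_{j=1}^{m} \phi(y_j) \right\|^2
$$

$X = \{x_i\}_{i=1}^{n}$ and $Y = \{y_j\}_{j=1}^{m}$ refer to the sets of different embeddings, and $\phi$ is the kernel feature mapping approximated via Random Fourier Features (RFFs).

$$
p = \frac{1}{N}\sum_{i=1}^{N} \mathbb{1}\left[\mathrm{MMD}_i \ge \mathrm{MMD}_{\mathrm{obs}}\right]
$$

For $p$, $\mathrm{MMD}_{\mathrm{obs}}$ is the observed MMD score, $N$ represents the number of permutations, and $\mathrm{MMD}_i$ is the MMD value obtained for permutation $i$.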
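The RFF-based MMD estimate and permutation test described in this subsection can be sketched as follows. This is a small-scale, standard-library illustration that assumes an RBF kernel $k(x, y) = \exp(-\gamma \lVert x - y \rVert^2)$. The kernel choice, `gamma`, the number of random features, and the plain empirical p-value are assumptions, not the settings used for the 40,000-sample experiments.

```python
import math
import random


def make_rff(dim, n_features, gamma, rng):
    """Sample RFF parameters for the RBF kernel exp(-gamma * ||x - y||^2)."""
    scale = math.sqrt(2.0 * gamma)  # std of the kernel's spectral distribution
    weights = [[rng.gauss(0.0, scale) for _ in range(dim)]
               for _ in range(n_features)]
    offsets = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    return weights, offsets


def rff_map(x, weights, offsets):
    """Approximate kernel feature map: sqrt(2/D) * cos(w . x + b)."""
    norm = math.sqrt(2.0 / len(weights))
    return [norm * math.cos(sum(wk * xk for wk, xk in zip(w, x)) + b)
            for w, b in zip(weights, offsets)]


def mean_vector(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def mmd2(feats_x, feats_y):
    """Squared MMD as the squared distance between mean feature vectors."""
    mx, my = mean_vector(feats_x), mean_vector(feats_y)
    return sum((a - b) ** 2 for a, b in zip(mx, my))


def permutation_p_value(feats_x, feats_y, n_perm, rng):
    """Return (observed MMD^2, fraction of permutations at least as large)."""
    observed = mmd2(feats_x, feats_y)
    pooled = feats_x + feats_y  # new list, so the inputs are not reordered
    n = len(feats_x)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if mmd2(pooled[:n], pooled[n:]) >= observed:
            hits += 1
    return observed, hits / n_perm


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy 2-D "embeddings": the second set is shifted relative to the first.
    xs = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(40)]
    ys = [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(40)]
    w, b = make_rff(dim=2, n_features=64, gamma=0.5, rng=rng)
    fx = [rff_map(x, w, b) for x in xs]
    fy = [rff_map(y, w, b) for y in ys]
    print(permutation_p_value(fx, fy, n_perm=100, rng=rng))
```

Mapping each sample once and comparing mean feature vectors keeps the cost linear in the number of samples, which is the usual motivation for RFFs over an exact kernel matrix at this scale.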

The unusually high negative-class MMD of GloVe largely arises from lexical-surface drift along dimensions that have negligible classifier weights. This suggests that the decision boundary learned during hard-negative mining lies far from benign regions in these dimensions, so the model maintains a comparatively low false-positive rate under OOD settings despite the apparent distribution gap. Conversely, contextual models display a smaller negative-class MMD yet place their boundary closer to benign clusters, yielding higher FPR. Absolute MMD magnitude is therefore not a sufficient indicator of OOD robustness; alignment between drift directions and decision-relevant subspaces is critical.

These results, supported by lexical analysis (Section 3.2), indicate that the observed generalisation gap is attributable to systematic data divergence, particularly in negative sample distributions, rather than random fluctuations.

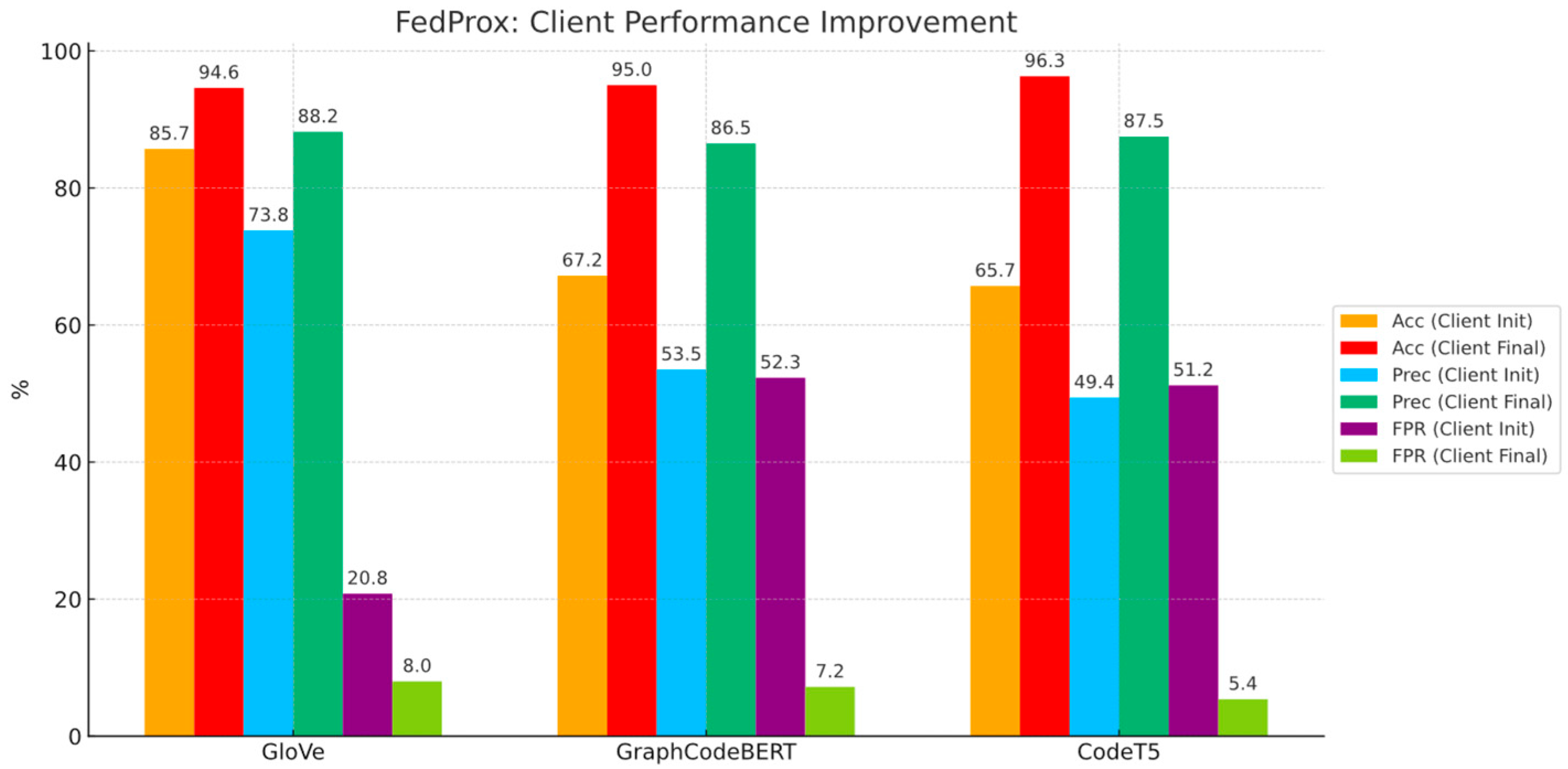

6. Conclusions

This study shows that federated learning (FL) can achieve privacy-preserving, OOD-resilient XSS detection provided that the embedding geometry aligns well with the aggregation strategy. Analyses with JSD, Wasserstein-1, and RFF-based MMD reveal a split drift pattern: benign inputs mainly reorder words, whereas malicious inputs drift along deeper structural axes. Under centralised training, the static, word-level GloVe benefits from stable term frequencies, while the structure-aware GraphCodeBERT is penalised, suggesting it overreacts to code edits. When data remain local and FedProx aggregates the updates, the variety of control- and data-flow graphs across clients is ensemble-smoothed, allowing GraphCodeBERT to converge most stably and to achieve the best balance between FPR and recall.

GloVe still reaches acceptable final accuracy, but its training curve oscillates, possibly because client-specific vocabularies pull its sparse lexical weights in conflicting directions. CodeT5, used solely as a frozen encoder, improves fastest during the first few rounds, yet later shows mild jitter, possibly because its representations are sensitive to local structural quirks.

Overall, FL effectively averages out the lexical and distributional shifts that harm each model when trained in isolation. Future work should pair FL with embeddings that are structure-aware without being oversensitive and should design aggregation rules that adapt to each client’s drift profile instead of applying uniform weighting.