Abstract

The proliferation of fake news, particularly in sensitive domains such as religious texts, necessitates robust authenticity verification methods. This study addresses the growing challenge of authenticating Hadith, where traditional methods based on analyzing the chain of narrators (Isnad) and the content (Matn) are increasingly strained by the sheer volume of Hadith in circulation. To address this issue, we explore machine learning (ML) and natural language processing (NLP) techniques, specifically transfer learning, to automatically classify Hadith into Genuine and Fake categories. Using an imbalanced dataset of 8544 Hadiths (7008 authentic and 1536 fake), we systematically investigate the combined impact of linguistic and contextual features, particularly the chain of narrators (Isnad), on Hadith authentication. For the first time in this specialized domain, state-of-the-art pre-trained language models (PLMs) such as Multilingual BERT (mBERT), CamelBERT, and AraBERT are evaluated alongside classical algorithms such as logistic regression (LR) and support vector machine (SVM) for Hadith authentication. Our best-performing model, AraBERT, achieved an F1 score of 99.94% when the chain of narrators was included, showing that contextual elements (Isnad) substantially improve classification performance. These findings provide new insights into the role of computational methods in Hadith authentication and reinforce the traditional scholarly emphasis on the Isnad. This research represents a significant advancement in combating misinformation in this important field.

1. Introduction

Hadith, the collected sayings and actions of the Prophet Muhammad (peace be upon him), are the second most important source of Islamic law, teaching, and guidance after the Holy Quran. Verifying the authenticity of Hadith is therefore a critical task, which Islamic scholars have traditionally carried out through a rigorous analysis of the chain of narrators (Isnad) and the content (Matn) of each reported saying. However, given the vast number of Hadith in circulation and their rapid spread on contemporary digital platforms such as social media, manual authentication is time-consuming and laborious, and it requires deep knowledge of both the chains of narrators and the linguistic style of the reported sayings and actions of the Prophet Muhammad (peace be upon him).

In recent years, there has been growing interest in applying computational methods to assist with Hadith classification and authentication. ML and NLP techniques offer the potential to automatically categorize Hadith based on their content and chain of narrators, and to detect fake Hadith that do not meet the strict criteria for authenticity.

While several computational studies have explored aspects of Hadith classification, a significant gap remains in the systematic application and comparative evaluation of advanced ML and deep learning (DL) models, particularly pre-trained language models (PLMs), for the explicit task of authenticity verification, where the crucial role of the chain of narrators is thoroughly investigated. Prior works have predominantly focused on topic classification or utilized a limited scope of traditional classifiers without a deep dive into contextual features like Isnad.

We explore a diverse range of models, including mBERT, CamelBERT, AraBERT, XLM-RoBERTa, as well as classical algorithms like LR, SVM, Decision Trees (DT), Random Forest (RF), K-Nearest Neighbors (KNN), and Naive Bayes (NB). The key objectives of this research are as follows:

- To implement and compare various ML models for classifying Hadith into Genuine and Fake categories, aiming for enhanced accuracy and robustness.

- To conduct a novel ablation study investigating the impact of including the chain of narrators on Hadith classification performance using both ML and DL models, providing insights into the computational significance of Isnad.

- To evaluate the effectiveness of PLMs in the context of Hadith authenticity classification for the first time, contributing new and critical insights into their applicability and superior performance in this specialized and culturally sensitive domain.

We evaluate our approach using a newly compiled dataset of 8544 Hadiths from reliable sources, including 7008 authentic Hadiths from Sahih Al-Bukhari, which Muslim scholars recognize as the gold standard, and 1536 labeled Fake Hadiths, which have issues with either the narrators or the content (details are provided in Section 3.2). The resulting dataset is imbalanced, but it represents both Genuine and Fake Hadiths. The data are carefully preprocessed for two experimental settings: in one, the chain of narrators is removed so that only the content (Matn) is passed to the ML and DL models; in the other, the original text including the chain of narrators is used. Our findings demonstrate the significant impact of the chain of narrators on classification performance, highlighting the importance of contextual elements in distinguishing between authentic and fabricated Hadiths.

The remainder of this paper is structured as follows. Section 2 provides a detailed overview of previous research on fake news and Hadith classification. Section 3 describes our experimental setup, data collection methods, and evaluation metrics. Section 4 presents and discusses the experimental results. Finally, Section 5 concludes the paper and outlines potential directions for future work.

2. Related Work

2.1. Overview of Previous Research on Fake News Detection

As misinformation continues to erode public trust and disrupt societal processes, fake news detection has become a highly active area of research across multiple domains. Efforts span politics, healthcare, economics, and other domains, with interdisciplinary methods being explored to tackle the growing complexity and scale of online disinformation [1]. Recent advances in DL have shown significant promise in combating misinformation and harmful online content. A hybrid CNN-LSTM-BERT model, enhanced through feature selection and stop word filtering, offers a robust framework for fake news detection [2]. Furthermore, DL’s broader application to domains such as cyberbullying and hate speech prevention underscores its transformative potential in safeguarding digital discourse [3].

Hadiths, along with the Holy Quran, constitute the primary legal foundation for Muslims, making the authentication of Hadiths crucial. While ML and DL techniques can be utilized to detect fake Hadiths, there is currently limited research specifically in this critical area. Existing approaches for classifying Hadiths largely focus on categorizing their topics and groups or classifying them into specific classes, rather than on their authenticity. For example, ref. [4] proposed a method for categorizing Hadith topics into 13 distinct classes (books) of Sahih Al-Bukhari. Similarly, ref. [5] examined the effectiveness of categorizing Hadiths into eight distinct classes (books) using ML classifiers. Subsequently, ref. [6] focused on Hadith quotes extracted from four different books, training and comparing three ML classifiers to predict these four classes. The authors in [7] developed a system to classify Sahih Hadith compiled by Bukhari, translated into Indonesian. This system aims to aid Muslims in easily recognizing the suggestions and restrictions within a Hadith. They chose the Backpropagation Neural Network algorithm for its ability to handle classification tasks with a large number of diverse features. Additionally, they introduced a novel approach by combining this algorithm with information gain for feature selection, allowing the selection of influential features for each class label of both multi-label and single-label data. Their results indicate that 88.42% of multi-label Hadith data and 65.275% of single-label Hadith data were correctly classified using this method.

The research [8] utilized text categorization to classify specific categories by analyzing interrelations between various Islamic resources, including the Quran and Hadiths, showing SVM’s effectiveness with term weighting. Three selected categories, namely Hajj, Prayer, and Zakat, were compared using three classification methods: NB, SVM, and KNN, utilizing term weighting through Term Frequency–Inverse Document Frequency (TF-IDF). The results showed that SVM, whether used alone or with term weighting, effectively addressed the interrelationship for both single- and multi-label classifications. Furthermore, SVM achieved better accuracy with a 10–20% improvement compared to the other methods, which showed only slight improvement in accuracy. However, these prior studies, while valuable for topic classification, have consistently overlooked the crucial aspect of verifying the authenticity of these Hadiths, which is the core focus of our work.

More directly related to authenticity, ref. [9] combined expert systems and ML techniques to authenticate 999 Hadiths based on their validity degree (e.g., Sahih or Hasan), using a DT classifier with extracted attributes. In contrast, ref. [10] proposed a technique that extracts Hadith phrases from web pages and uses a positional index created from a database of Hadiths to authenticate them as Sahih or Daif, without building or training a model. The authors in [11] proposed to authenticate and classify Hadiths into categories such as Sahih, Mawdu, or Daif according to a set of characteristics and codes, which would then be used to create a decision tree and establish a rule-based degree of Hadith authenticity. The authors in [12] proposed a method for representing Hadiths using the Vector Space Model (VSM) while considering the order of words, specifically the order of narrators, which is critical for classification. They introduced a document representation that includes word order and applied it to classify Hadiths. Using Learning Vector Quantization (LVQ), they achieved good results in classifying Hadiths into four categories: Sahih, Hasan, Da’if, and Maudu’. The classification performed well for the main categories (Sahih and Maudu’), but Hadiths with narrators not present in the training corpus were classified as Maudu’. Ref. [13] offered a theoretical authentication framework that would determine whether a Hadith is Sahih. Taking a different tack at authenticating Hadiths using the Sanad, ref. [14] addressed the problem by recognizing the Arabic names in the chain of narrators using Part-of-Speech (POS) tagging and Named Entity Recognition (NER).

Ref. [15] proposed a novel approach based entirely on the content of each Hadith to classify them into categories of authentic and non-authentic. They created a binary relation for each category, with Hadiths as objects and words as attributes. Keywords for each category were hierarchically ordered by importance using hyper-rectangular decomposition. These keywords were then fed into a logistic regression classifier. The method was validated on a database of approximately 1600 Hadiths, demonstrating that classification accuracy improved with the number of annotators who agreed on the authenticity of each Hadith. This suggests that their method effectively extracts relevant keywords and can complement traditional methods. Ref. [16] conducted comprehensive experiments to evaluate Hadith authenticity using various ML and DL classifiers. These included SVM, NB, and DT classifiers, as well as long short-term memory (LSTM), convolutional neural network (CNN), and CNN-LSTM DL classifiers.

While previous studies have made significant strides in classifying Hadith authenticity, they tend to focus solely on a limited selection of traditional classifiers. Additionally, their work lacks a systematic investigation into how linguistic and contextual features (chain of tellers in this study) collectively impact classification performance. This study addresses these gaps by exploring a more comprehensive approach to Hadith classification. We systematically evaluate the impact of the chain of tellers alongside the content of the Hadith, employing advanced models like multilingual BERT and classical algorithms. By leveraging a diverse dataset of 8544 Hadiths, our work not only contributes new insights into the applicability of PLMs in this domain but also establishes a more robust framework for distinguishing between authentic and fabricated Hadiths. In the following section, we provide an overview of the PLMs utilized in this study.

2.2. Overview of PLMs

PLMs have revolutionized the field of NLP by leveraging transfer learning to capture rich contextual information from large corpora. Traditional models such as Word2Vec and GloVe learn word embeddings from co-occurrence within a fixed window, but these embeddings are static and context-free: each word receives a single vector regardless of the sentence it appears in. This restricts their ability to handle the complexity of language, where the meaning of a word depends heavily on its context. Modern PLMs address this shortcoming by capturing deep, bidirectional contextual relationships between words. Among them, Bidirectional Encoder Representations from Transformers (BERT), introduced by [17], marked a major breakthrough in language modeling and sparked the widespread adoption of transformer-based architectures in NLP tasks. Unlike earlier left-to-right or shallowly bidirectional models, BERT uses a deeply bidirectional transformer encoder that conditions on both the left and the right context of every token at once, producing highly contextual word representations. This enables it to capture the nuanced meaning of words based on their surrounding context.

A critical component of BERT’s architecture is the attention mechanism, which helps the model determine the relevance of each word in a sentence with respect to every other word. This mechanism enables BERT to focus on the most important words when generating word embeddings, thus improving the model’s comprehension of context. The attention mechanism is defined mathematically as follows:

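$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $Q$ represents the query matrix, $K$ is the key matrix, $V$ is the value matrix, and $d_k$ is the dimensionality of the keys, used to scale the result.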
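To make the attention formula softmax(QK^T / √d_k)V concrete, the following NumPy sketch computes it for small random matrices. It is an illustration under stated assumptions rather than BERT’s actual implementation: it omits batching, masking, and dropout, and the function names and matrix sizes are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q: np.ndarray, K: np.ndarray, V: np.ndarray):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V.

    Q: (n_queries, d_k), K: (n_keys, d_k), V: (n_keys, d_v).
    """
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # (n_queries, n_keys) similarity scores
    weights = softmax(scores, axis=-1)   # each row is a distribution over keys
    return weights @ V, weights


rng = np.random.default_rng(0)
Q = rng.normal(size=(3, 4))   # 3 query tokens, d_k = 4
K = rng.normal(size=(5, 4))   # 5 key tokens
V = rng.normal(size=(5, 2))   # d_v = 2
output, weights = scaled_dot_product_attention(Q, K, V)
print(output.shape)            # (3, 2)
print(weights.sum(axis=1))     # each entry is 1 (up to floating-point rounding)
```

Dividing by √d_k keeps the dot products from growing with the key dimension, which would otherwise push the softmax toward near one-hot weights.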

The softmax function, applied row-wise, ensures that the attention weights for each query sum to 1, allowing the model to weigh each word’s importance in context. BERT further enhances this with a multi-head attention mechanism, in which several attention heads operate in parallel to capture multiple types of relationships between words. Each head focuses on different aspects of word interactions, providing a more detailed representation. The multi-head attention mechanism is expressed as follows:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}$$

where each head $i$ is computed as follows:

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$$

Here, $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are learned projection matrices for each attention head, and $W^{O}$ is the output projection matrix that transforms the concatenated result of all attention heads. By combining multiple attention heads, the model captures different types of word relationships, leading to more accurate contextual word embeddings.

In this study, several variants of BERT and other PLMs were explored to improve the detection of fake Hadiths in Arabic. mBERT (Multilingual BERT) Uncased (bert-base-multilingual-uncased) is a multilingual model pre-trained on Wikipedia text in 102 languages; it lowercases its input and supports cross-lingual tasks such as multilingual text classification. AraBERT (aubmindlab/bert-base-arabert) is pre-trained specifically on Arabic text and addresses the unique morphological and syntactic characteristics of the language. CAMeLBERT-CA (CAMeL-Lab/bert-base-arabic-camelbert-ca) is the CAMeLBERT variant pre-trained on Classical Arabic text. Lastly, XLM-RoBERTa Base (FacebookAI/xlm-roberta-base) is a multilingual PLM that applies the RoBERTa pre-training approach to data in 100 languages, including low-resource languages, to improve cross-lingual performance. We initially planned to use the larger versions of these models; however, due to resource constraints, we opted for the base versions instead.
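Returning to the multi-head attention mechanism described earlier in this section, where each head applies scaled dot-product attention to its own learned projections of Q, K, and V and the concatenated head outputs are multiplied by an output projection W^O, the following NumPy sketch shows the computation in the self-attention case (Q = K = V = X) used inside BERT’s encoder. Random matrices stand in for learned weights, and biases, masking, layer normalization, and dropout are deliberately omitted; the function names and sizes are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    return softmax(Q @ K.T / np.sqrt(K.shape[-1])) @ V


def multi_head_attention(Q, K, V, W_q, W_k, W_v, W_o):
    """Concat(head_1, ..., head_h) W_o with head_i = Attention(Q W_q[i], K W_k[i], V W_v[i]).

    W_q, W_k, W_v: lists of h per-head projections, each (d_model, d_head).
    W_o: output projection, (h * d_head, d_model).
    """
    heads = [attention(Q @ wq, K @ wk, V @ wv) for wq, wk, wv in zip(W_q, W_k, W_v)]
    return np.concatenate(heads, axis=-1) @ W_o


# Toy self-attention setting (Q = K = V = X); random weights stand in for learned ones.
rng = np.random.default_rng(42)
n_tokens, d_model, h = 5, 8, 2
d_head = d_model // h
X = rng.normal(size=(n_tokens, d_model))
W_q = [rng.normal(size=(d_model, d_head)) for _ in range(h)]
W_k = [rng.normal(size=(d_model, d_head)) for _ in range(h)]
W_v = [rng.normal(size=(d_model, d_head)) for _ in range(h)]
W_o = rng.normal(size=(h * d_head, d_model))

out = multi_head_attention(X, X, X, W_q, W_k, W_v, W_o)
print(out.shape)  # (5, 8): one d_model-dimensional vector per token
```

Splitting d_model across h heads keeps the total cost close to that of a single full-width head while letting each head attend to a different kind of relationship.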

2.3. Problem Statement

This study aims to propose DL models capable of automatically classifying Hadith texts into two distinct categories: genuine Hadith (denoted by $Y = 1$) and fake Hadith (denoted by $Y = 0$). To achieve this classification task, the models will be trained on a dataset $D = \{(H_i, Y_i)\}_{i=1}^{N}$, where $H_i$ represents the $i$th Hadith text and $Y_i \in \{0, 1\}$ represents its corresponding label (1 for genuine, 0 for fake). The model will learn a function $f$ that maps a Hadith text $H$ to a predicted label $\hat{Y} = f(H)$.

The effectiveness of the models will be evaluated using standard text classification metrics including accuracy (A), precision (P), recall (R), and F1 score (F1), as discussed in Section 3.3.

3. Experiments

3.1. Experimental Setup

We developed our models using a combination of PyTorch (v2.1.0, developed by the PyTorch Team at Meta AI, open source) for DL and scikit-learn for traditional statistical ML. The DL models were built in PyTorch and trained on Google Colab with an NVIDIA Tesla T4 GPU. We also evaluated several statistical ML algorithms from scikit-learn, including LR, SVM, RF, NB, DT, and KNN. For these models, the text data were converted into TF-IDF features, and their hyperparameters were optimized using GridSearchCV. For fine-tuning the BERT-based models, we used the AdamW optimizer, a variant of Adam with decoupled weight decay. We set the initial learning rate to , a common starting point for fine-tuning. We also employed a learning rate scheduler that adjusts the learning rate as training progresses, which helps stabilize training and can reduce the risk of overfitting the training data. To maintain a consistent input size and improve training efficiency, we truncated or padded each text to a maximum of 512 tokens, keeping the first 512 tokens of longer texts. This limit is the maximum input length of the BERT variants we employed; only a small number of texts are substantially longer. We used a batch size of four for both training and testing, and the models achieved their best performance after three epochs of training. The hyperparameter settings are summarized in Table 1.

Table 1.

Hyperparameter values for PLMs.
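The classical baselines described in Section 3.1 (TF-IDF features with hyperparameters tuned by GridSearchCV) can be sketched with scikit-learn as follows. This is an illustrative sketch, not the authors’ code: the placeholder texts, the choice of logistic regression, and the parameter grid are assumptions, and other classifiers (e.g., `SVC` or `RandomForestClassifier`) could be swapped into the `clf` step.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

# Hypothetical placeholder texts (not real Hadith); label 1 = genuine, 0 = fake.
texts = [
    "alpha beta gamma", "alpha gamma delta", "alpha beta delta", "beta gamma alpha",
    "omega sigma tau", "omega tau rho", "sigma omega rho", "tau rho omega",
]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

pipeline = Pipeline([
    ("tfidf", TfidfVectorizer()),                 # raw text -> sparse TF-IDF matrix
    ("clf", LogisticRegression(max_iter=1000)),   # linear classifier on TF-IDF features
])

# Illustrative grid; "step__param" names address parameters of each pipeline step.
param_grid = {
    "tfidf__ngram_range": [(1, 1), (1, 2)],
    "clf__C": [0.1, 1.0, 10.0],
}

# Weighted F1 as the selection criterion, matching the metric reported for imbalanced data.
search = GridSearchCV(pipeline, param_grid, cv=2, scoring="f1_weighted")
search.fit(texts, labels)

print(search.best_params_)
predictions = search.predict(["alpha delta beta", "omega rho sigma"])
print(predictions)
```

Fitting the vectorizer inside the pipeline means the TF-IDF vocabulary and IDF weights are learned only from each training fold, so the cross-validation scores are not inflated by information from the held-out fold.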

3.2. Data Collection

Islamic studies, particularly those focused on the Quran and Hadith, demand rigorous standards of authenticity, reliability, and validation when dealing with such data. Our project focuses on Hadith data. Accordingly, two types of Hadith are required: Genuine Hadith and Fake Hadith.

3.2.1. Genuine Hadith

Hadith, comprising the sayings and actions of the Prophet Muhammad (peace be upon him), are classified as genuine (Sahih or Hasan) when transmitted through an unbroken chain of narrators, each known for trustworthiness and sound memory. Hasan Hadith may include narrators with minor memory lapses, whereas Sahih Hadith demand complete reliability throughout the chain. Although numerous criteria exist for classifying genuine Hadith, this paper focuses solely on Hadith found in Sahih Al-Bukhari. Islamic scholars throughout history have recognized Sahih Al-Bukhari as the most accurate compilation of genuine Hadith, and it is considered the gold standard; we therefore treat all of its entries as authentic.

To collect our Genuine Hadith dataset from Sahih Al-Bukhari, we used data scraped from https://www.islambook.com/hadith/ (accessed on 21 March 2025). The website provides a collection of 7008 Hadith from the books that constitute Sahih Al-Bukhari. Each Hadith in Sahih Al-Bukhari contains both the chain of narrators and the actual Hadith text (Matn). Since our focus is on the actual Hadith, we separated each text into the chain of narrators and the Matn, and in the experiments without the chain of narrators, the Matn was the only text passed to the ML models.

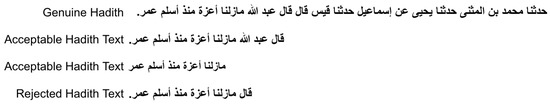

To perform the text separation, we used the REGEX approach, which applies regular expressions and pattern matching to identify and isolate specific sections of the text according to predefined rules. Because this method is known to fail in some cases, for example by extracting fragments from several different parts of the text, we added a validation step that checks the separated text. An observation was included only if the extracted Hadith text (Matn) appeared as a complete, contiguous part of the original text, confirming that the extraction preserved its integrity. Figure 1 illustrates our REGEX approach.

Figure 1.

Example of an acceptable Genuine Hadith after the removal of the chain of tellers using REGEX method.
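The separation-and-validation step described above can be sketched as follows. The actual regular expressions used in this study are not given, so this sketch assumes, purely for illustration, that the Matn is the text enclosed in double quotation marks; `MATN_PATTERN`, `extract_matn`, and the example strings are hypothetical placeholders rather than real Hadith. The part that mirrors the text is the validation check: when the pattern matches several separate segments, their concatenation no longer occurs as one contiguous span of the original, and the entry is rejected.

```python
import re
from typing import Optional

# Hypothetical pattern: assumes the Matn is the text between double quotes.
MATN_PATTERN = re.compile(r'"([^"]+)"')


def extract_matn(hadith_text: str) -> Optional[str]:
    """Split off the Matn and validate it; return None if the entry should be dropped."""
    segments = [s.strip() for s in MATN_PATTERN.findall(hadith_text)]
    if not segments:
        return None  # pattern did not match: nothing to keep
    matn = " ".join(segments)
    # Validation step: keep the entry only if the extracted Matn occurs as one
    # contiguous span of the original text. Fragments pulled from several
    # places in the text fail this check once they are joined together.
    return matn if matn in hadith_text else None


# Usage on placeholder strings (not real Hadith text):
clean = 'Narrator A, from Narrator B, that he said: "content of the report"'
fragmented = 'Narrator A said: "first part" and Narrator B said: "second part"'
print(extract_matn(clean))       # content of the report
print(extract_matn(fragmented))  # None
```

Rejecting fragmented extractions, rather than trying to repair them, trades a smaller dataset for cleaner inputs, which is the trade-off reported for the Genuine Hadith data.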

Our REGEX-based preprocessing reduced the Genuine Hadith dataset from 7008 to 6440 entries. In this process, 568 Hadiths were excluded because the extraction drew fragments from multiple sections of the original text, compromising textual integrity. This loss, about 8% of the Genuine Hadiths, is considered acceptable: all removed entries belong to the majority class, so their exclusion slightly reduces, rather than worsens, the existing class imbalance across the whole dataset, as discussed in the following section (Fake Hadith).

3.2.2. Fake Hadith

A Hadith is considered fake (also known as weak, fabricated, a lie, or forged) when it fails to meet the rigorous criteria for authenticity established for genuine Hadith. This includes instances where the chain of narrators contains individuals lacking sound character or reliable memory, or where the narrative exhibits inconsistencies with the Prophet’s (peace be upon him) known speech and behavior. The detailed criteria for identifying fake Hadith are beyond this project’s scope. However, we utilized a reputable source of known fake Hadith that specialists in Hadith authentication have rigorously reviewed.

To collect our Fake Hadith dataset, we used the website https://dorar.net/fake-hadith (accessed on 21 March 2025). This website is well maintained and widely recognized for its high standards of verification. It is supervised by experts and scholars in the field of Hadith authentication and has been endorsed by prominent Islamic scholars; in particular, it has received a commendation from Abdulaziz Al-Alshaikh, the Grand Mufti of Saudi Arabia.

To compile our Fake Hadith dataset, we developed a Python (v3.11.8, developed by the Python Software Foundation, open source) web scraper that collected 1536 Hadith from this source. These Hadith were carefully reviewed and verified by the website’s team of Islamic scholars, ensuring that they are not genuine Hadith. Some of these Hadiths circulate among Muslims who do not know that they are fake.

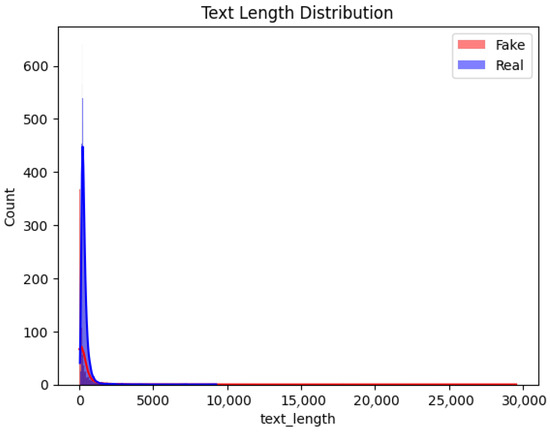

This section provides an exploratory analysis of the dataset. As shown in Figure 2, the X-axis represents text length, ranging from 0 to 30,000 characters, with the majority falling within a much shorter range and very few extending beyond 5000 characters. The Y-axis indicates the count of texts within each length interval.

Figure 2.

Text length distribution for fake and real Hadith texts.

As shown in Figures 2 and 3, the distribution is heavily right-skewed: most texts are relatively short, followed by a long tail of fewer, longer texts. The “fake” and “real” classes have comparable length characteristics, both peaking at short lengths and decreasing rapidly as length increases. Notably, some outliers reach lengths of up to 30,000 characters.

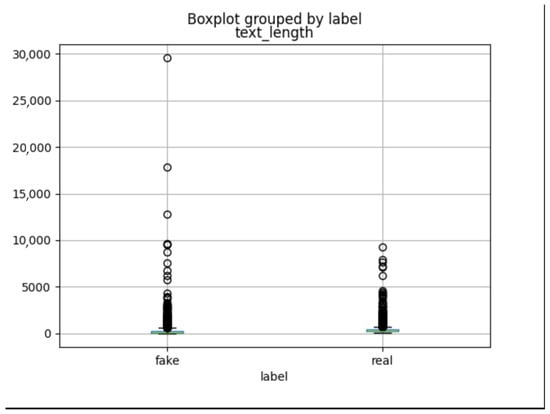

Figure 3.

Boxplot comparing the distribution of text lengths for fake and real Hadith texts.

As can be seen, there is a substantial number of outliers, with some texts reaching nearly 30,000 characters, far from the rest of the data. This preliminary analysis suggests that such a wide range of text lengths, including many extreme outliers, may affect the performance and behavior of the ML models: long texts may introduce noise or represent atypical cases that skew the training process.
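The outliers in a boxplot such as Figure 3 are conventionally the points beyond the whiskers at 1.5 × IQR from the quartiles, which is matplotlib’s default; whether Figure 3 used this default is an assumption. The following sketch, with a hypothetical `iqr_outliers` helper and made-up character counts, shows how such outliers can be flagged. The `method="inclusive"` option matches NumPy’s default linear interpolation for percentiles.

```python
from statistics import quantiles


def iqr_outliers(lengths, whisker=1.5):
    """Return the values a standard boxplot would draw as outliers.

    A value is flagged when it lies below Q1 - whisker * IQR or above
    Q3 + whisker * IQR, where IQR = Q3 - Q1.
    """
    q1, _, q3 = quantiles(lengths, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - whisker * iqr, q3 + whisker * iqr
    return [x for x in lengths if x < low or x > high]


# Hypothetical character counts, e.g. [len(text) for text in hadith_texts].
lengths = [10, 12, 11, 13, 12, 14, 300]
print(iqr_outliers(lengths))  # [300]
```

Because the quartiles ignore the extreme values, a handful of very long texts does not widen the fences, which is why a heavily right-skewed distribution shows many points beyond the upper whisker.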

3.3. Evaluation Metrics

We used four common metrics to evaluate the performance of our models in text classification: accuracy, precision, recall, and F1 score. The equations for these are shown below:

$$A = \frac{TP + TN}{TP + TN + FP + FN}$$

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}$$

The variables $TP$, $TN$, $FP$, and $FN$ stand for True Positives, True Negatives, False Positives, and False Negatives, respectively. We also examined a confusion matrix, which gives a detailed breakdown of each model’s predictions. For a visual comparison, we plotted Receiver Operating Characteristic (ROC) curves, which show the trade-off between the true positive rate (positives correctly identified) and the false positive rate (negatives incorrectly identified as positive); the closer a curve comes to the top-left corner, the better the model’s performance. We report the area under the ROC curve (AUC) for the best-performing models.
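The four metrics can be computed directly from the confusion-matrix counts: accuracy is (TP + TN)/(TP + TN + FP + FN), precision is TP/(TP + FP), recall is TP/(TP + FN), and F1 is the harmonic mean of precision and recall. The sketch below uses a hypothetical helper name and made-up counts, treating genuine Hadith (Y = 1) as the positive class; returning 0 for an empty denominator is a common convention and an assumption here.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    # Guard against empty denominators (e.g., a model that never predicts the positive class).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, treating genuine Hadith (Y = 1) as the positive class.
print(classification_metrics(tp=8, tn=5, fp=2, fn=1))
```

For these counts, accuracy is 13/16 = 0.8125, precision is 0.8, recall is 8/9, and F1 simplifies to 2TP/(2TP + FP + FN) = 16/19.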

Because the dataset is imbalanced, with many more authentic Hadiths than fake ones, there is a risk that a model becomes biased toward the majority class or ignores the minority class entirely. To address this challenge in evaluation, we used the F1 score, which is more robust than accuracy on imbalanced datasets because it accounts for both precision and recall. Our results, presented in Table 2 and Table 3, show strong performance on both classes, which suggests that the models were not simply defaulting to the majority class.

Table 2.

The experimental results w/o data cleaning and processing, using the original data without removing the chains of tellers. The weighted average of all metrics is reported.

Table 3.

The experimental results w/o data cleaning and processing, with the chains of tellers removed from the real Hadiths. The weighted average of all metrics is reported.
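To illustrate why F1 is preferred over accuracy on imbalanced data, and what the weighted averages in Tables 2 and 3 summarize, the sketch below scores a hypothetical baseline that labels every Hadith as genuine on a made-up test set of 8 genuine and 2 fake examples. The helper names are hypothetical; `weighted_f1` weights each class’s F1 by its share of the true labels, which mirrors the `average="weighted"` option of scikit-learn’s `f1_score`.

```python
def per_class_f1(y_true, y_pred, label):
    """F1 for one class, using the equivalent form 2TP / (2TP + FP + FN)."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def weighted_f1(y_true, y_pred):
    """Average of per-class F1 scores, each weighted by that class's share of y_true."""
    n = len(y_true)
    return sum(y_true.count(c) / n * per_class_f1(y_true, y_pred, c) for c in set(y_true))


# Hypothetical imbalanced test set: 8 genuine (1) and 2 fake (0) Hadiths,
# scored against a baseline that labels everything as genuine.
y_true = [1] * 8 + [0] * 2
y_pred = [1] * 10
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy)                          # 0.8
print(per_class_f1(y_true, y_pred, 1))   # about 0.889
print(per_class_f1(y_true, y_pred, 0))   # 0.0: the fake class is never detected
print(weighted_f1(y_true, y_pred))       # about 0.711
```

The per-class F1 of 0 for the fake class exposes a failure that the 80% accuracy hides. The weighted average still leans toward the majority class, so inspecting per-class scores alongside it gives the clearest view of minority-class performance.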

4. Results and Discussion

4.1. Key Innovations

This study introduces several significant contributions to the field of automated Hadith authentication.

- Systematic Investigation of Isnad: We conducted a systematic investigation into the combined effect of linguistic content (Matn) and contextual features (Isnad) on Hadith authentication, a critical aspect largely overlooked in prior research.

- First-time Evaluation of PLMs: For the first time in this specialized domain, we evaluated the effectiveness of state-of-the-art pre-trained language models (PLMs) like AraBERT, CamelBERT, and mBERT for the explicit task of Hadith authenticity verification.

- Novel Ablation Study: We performed a unique ablation study to quantitatively assess the impact of removing the chain of narrators (Isnad) on classification performance, providing crucial insights into its computational significance.

4.2. Performance Comparison and Discussion

The results of our fake Hadith detection experiments, detailed in Table 2 and Table 3, highlight the crucial role of the chain of tellers (Isnad) in assessing the authenticity of Hadiths. Comparing the two versions of the dataset, one with the chains of tellers and one without, shows the impact of these chains on model performance. As shown in Table 3, the pre-trained models consistently outperform traditional ML algorithms when the chain of narrators is removed. For example, AraBERT maintains an F1 score of 98.23%, while the best traditional model, LR, drops to 93.53%. This advantage is likely due to the architecture of PLMs and their pre-training on massive text corpora, through which they learn deep, bidirectional contextual relationships between words that suit the nuanced and complex nature of Hadith texts. Their ability to capture subtle linguistic patterns and semantic relationships allows them to maintain high performance even when a critical contextual feature such as the chain of narrators is removed. Traditional models, in contrast, rely on explicit feature engineering (e.g., TF-IDF) and lack the same level of contextual understanding. This finding underscores the significant advantage of modern language models in this specialized task.

The results in Table 2 also show strong performance for all models when the original data with the chains of tellers are used, with LR achieving an F1 score of 99.35% and SVM reaching 99.41%. This indicates that the chains of tellers provide valuable information that helps all models distinguish between authentic and fabricated Hadiths. The drop in performance shown in Table 3 highlights the challenges models face without this information, indicating that the chains are important for maintaining high accuracy.

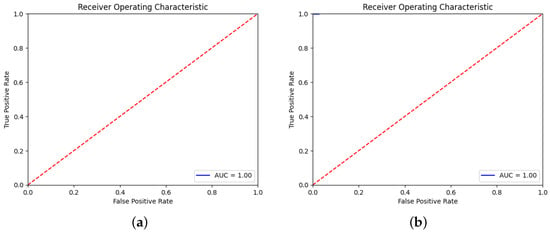

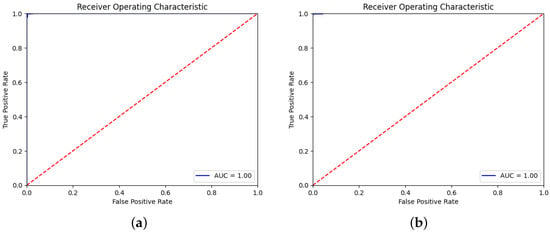

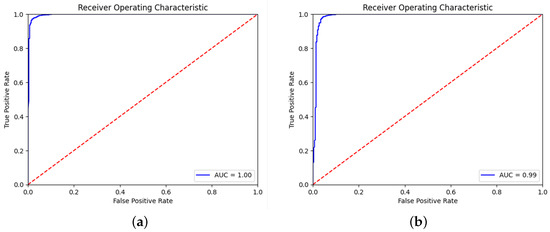

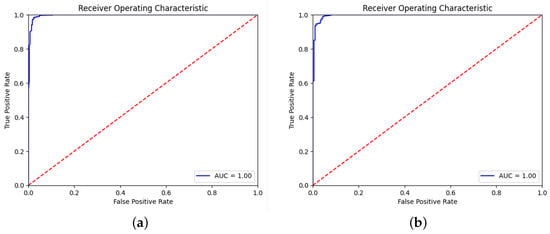

Overall, the results indicate that the chains of tellers contribute significantly to the identification of authentic Hadiths. Their presence improves model performance, suggesting that future approaches to fake Hadith detection should incorporate these contextual elements to improve accuracy and reliability. Figure 4 and Figure 5 present the ROC curves for AraBERT, XLM-RoBERTa, CamelBERT, and mBERT when the chains of tellers are included (i.e., without removing them), while Figure 6 and Figure 7 show the corresponding ROC curves when the chains of tellers are removed. With the chains included, all four models achieved an Area Under the Curve (AUC) score of 1.00, indicating perfect discrimination between fake and real Hadith on the test set: the ROC curves hug the top-left corner, far above the diagonal line that corresponds to random guessing.

Figure 4.

The ROC curve for (a) AraBERT and (b) XLM-RoBERTa w/o removing chains of tellers.

Figure 5.

The ROC curve for (a) CamelBERT and (b) mBERT w/o removing chains of tellers.

Figure 6.

The ROC curve for (a) AraBERT and (b) mBERT when removing chains of tellers.

Figure 7.

The ROC curve for (a) CamelBERT and (b) XLM-RoBERTa when removing chains of tellers.
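The AUC of 1.00 reported above has a direct interpretation: the AUC equals the probability that a randomly chosen genuine Hadith receives a higher score than a randomly chosen fake one, so a value of 1.00 means every genuine Hadith in the test set was scored above every fake one. The sketch below computes the AUC from this pairwise definition, counting ties as one half; the helper name and scores are hypothetical. The double loop is quadratic and meant only for illustration; scikit-learn’s `roc_auc_score` computes the same quantity efficiently.

```python
def roc_auc(y_true, scores):
    """Area under the ROC curve via its rank interpretation.

    AUC = P(score of a random positive > score of a random negative),
    with ties counted as one half.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical predicted probabilities of the "genuine" class (Y = 1).
y_true = [1, 1, 0, 0]
print(roc_auc(y_true, [0.9, 0.8, 0.3, 0.1]))  # 1.0: every genuine scored above every fake
print(roc_auc(y_true, [0.9, 0.2, 0.3, 0.1]))  # 0.75: one genuine/fake pair is mis-ordered
```

Because AUC depends only on the ordering of scores, it is unaffected by the choice of decision threshold, which complements the threshold-dependent precision, recall, and F1 scores in Tables 2 and 3.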

5. Conclusions

This study presents a comprehensive analysis of ML and DL approaches for authenticating Hadith texts, with a strong emphasis on the pivotal role of the chain of tellers (Isnad) in classification accuracy. The experimental results reveal several significant and novel findings in the field of automated Hadith authentication.

First, the analysis clearly demonstrates the crucial importance of the chain of tellers in the authentication process. Models trained on texts including a chain of tellers consistently outperformed those trained solely on Hadith content (Matn), showcasing notable performance differences across various models. This finding strongly aligns with traditional Islamic scholarship’s long-standing emphasis on the importance of the chain of tellers in Hadith authentication. It suggests that computational approaches must preserve and utilize this valuable contextual information for effective classification.

Second, a comparative analysis of different model architectures confirms that while both traditional ML and DL approaches can achieve high accuracy in Hadith classification, PLMs exhibit superior robustness and consistency. Specifically, AraBERT achieved the highest performance, with an F1 score of 99.94% when the chain of tellers was included, closely followed by CamelBERT-CA and XLM-RoBERTa-base (both at 99.71%). These results underscore the ability of modern language models to capture complex contextual relationships, making them well suited to this nuanced task.

Third, a novel ablation study that removes the chain of tellers provides critical insight into model behavior. DL models maintained strong performance without the chain of tellers, with F1 scores of 98.23% for AraBERT and 98.54% for CamelBERT-CA. Traditional ML approaches degraded much more sharply; for example, the F1 score of Decision Trees dropped from 99.59% to 87.89%. PLMs can therefore partially compensate for the missing contextual information. Even so, the chain of tellers remains highly beneficial for optimal performance and reliability.
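The contrast between PLMs and traditional models in the ablation study is easiest to see as a percentage-point drop in F1. The sketch below uses only the F1 scores reported in this section. The dictionary `reported` and the helper `ablation_drop` are illustrative names, not part of the study's code.

```python
# F1 scores (%) reported in this section: (with chain of tellers, without).
reported = {
    "AraBERT": (99.94, 98.23),
    "CamelBERT-CA": (99.71, 98.54),
    "Decision Tree": (99.59, 87.89),
}


def ablation_drop(with_isnad, without_isnad):
    """Percentage-point F1 loss when the chain of tellers is removed."""
    return round(with_isnad - without_isnad, 2)


for model, (with_isnad, without_isnad) in reported.items():
    print(f"{model:>13}: -{ablation_drop(with_isnad, without_isnad):.2f} pp")
```

This gives drops of 1.71 points for AraBERT, 1.17 for CamelBERT-CA and 11.70 for Decision Trees. When the chain of tellers is removed, the traditional model therefore loses roughly seven times as much F1 as AraBERT.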

These findings have important practical implications for Islamic studies. They show that automated tools have significant potential to assist scholars in the initial screening of Hadith authenticity. At the same time, they reinforce the importance of traditional authentication methods grounded in the chain of tellers. This research represents a significant step toward addressing misinformation in this important field.

Author Contributions

Conceptualization, J.A., A.A., and T.A.-D.; methodology, J.A., A.A., and T.A.-D.; software, J.A., A.A., and T.A.-D.; validation, J.A., A.A., and T.A.-D.; formal analysis, J.A., A.A., and T.A.-D.; investigation, J.A., A.A., and T.A.-D.; writing—original draft preparation, J.A., A.A., and T.A.-D.; writing—review and editing, J.A., A.A., and T.A.-D.; visualization, J.A., A.A., and T.A.-D.; project administration, J.A., A.A., and T.A.-D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alghamdi, J.; Lin, Y.; Luo, S. ABERT: Adapting BERT model for efficient detection of human and AI-generated fake news. Int. J. Inf. Manag. Data Insights 2025, 5, 100353.

- Zhao, J.H.S.; Al-Dala’in, T. The Hybrid Model Combination of Deep Learning Techniques, CNN-LSTM, BERT, Feature Selection, and Stop Words to Prevent Fake News. In Proceedings of the Third International Conference on Innovations in Computing Research (ICR’24), Athens, Greece, 12–14 August 2024; Daimi, K., Al Sadoon, A., Eds.; Springer: Cham, Switzerland, 2024; pp. 173–184.

- Al-Dala’in, T.; Zhao, J.H.S. Overview of the Benefits Deep Learning Can Provide Against Fake News, Cyberbullying and Hate Speech. In Proceedings of the Second International Conference on Innovations in Computing Research (ICR’23), Madrid, Spain, 4–6 September 2023; Daimi, K., Al Sadoon, A., Eds.; Springer: Cham, Switzerland, 2023; pp. 13–27.

- Jbara, K.M.A.; Sleit, A.T.; Hammo, B.H. Knowledge Discovery in Al-Hadith Using Text Classification Algorithm; University of Jordan: Amman, Jordan, 2009.

- Alkhatib, M. Classification of Al-Hadith Al-Shareef using data mining algorithm. In Proceedings of the European, Mediterranean and Middle Eastern Conference on Information Systems, EMCIS2010, Abu Dhabi, United Arab Emirates, 12–13 April 2010; pp. 1–23.

- Al-Kabi, M.N.; Wahsheh, H.A.; Alsmadi, I.M. A topical classification of hadith Arabic text. In Proceedings of the 2nd International Conference on Islamic Applications in Computer Science And Technology, Amman, Jordan, 12–13 October 2014.

- Bakar, M.Y.A.; Al Faraby, S.; Adiwijaya, K. Multi-label topic classification of hadith of Bukhari (Indonesian language translation) using information gain and backpropagation neural network. In Proceedings of the 2018 International Conference on Asian Language Processing (IALP), Bandung, Indonesia, 15–17 November 2018; IEEE: New York, NY, USA, 2018; pp. 344–350.

- Rostam, N.A.P.; Malim, N.H.A.H. Text categorisation in Quran and Hadith: Overcoming the interrelation challenges using machine learning and term weighting. J. King Saud Univ.-Comput. Inf. Sci. 2021, 33, 658–667.

- Aldhlan, K.A.; Zeki, A.M.; Zeki, A.M.; Alreshidi, H.A. Novel mechanism to improve hadith classifier performance. In Proceedings of the 2012 International Conference on Advanced Computer Science Applications and Technologies (ACSAT), Kuala Lumpur, Malaysia, 26–28 November 2012; IEEE: New York, NY, USA, 2012; pp. 512–517.

- Shatnawi, M.Q.; Abuein, Q.Q.; Darwish, O. Verification hadith correctness in islamic web pages using information retrieval techniques. In Proceedings of the International Conference on Information & Communication Systems, Avila, Spain, 11–13 March 2011; pp. 164–167.

- Najiyah, I.; Susanti, S.; Riana, D.; Wahyudi, M. Hadith degree classification for Shahih Hadith identification web based. In Proceedings of the 2017 5th International Conference on Cyber and IT Service Management (CITSM), Denpasar, Indonesia, 8–10 August 2017; IEEE: New York, NY, USA, 2017; pp. 1–6.

- Ghanem, M.; Mouloudi, A.; Mourchid, M. Classification of hadiths using LVQ based on VSM considering words order. Int. J. Comput. Appl. 2016, 148, 25–28.

- Ibrahim, N.K.; Samsuri, S.; Seman, M.S.A.; Ali, A.E.B.; Kartiwi, M. Frameworks for a computational isnad authentication and mechanism development. In Proceedings of the 2016 6th International Conference on Information and Communication Technology for The Muslim World (ICT4M), Jakarta, Indonesia, 22–24 November 2016; IEEE: New York, NY, USA, 2016; pp. 154–159.

- Balgasem, S.S.; Zakaria, L.Q. A hybrid method of rule-based approach and statistical measures for recognizing narrators name in hadith. In Proceedings of the 2017 6th International Conference on Electrical Engineering and Informatics (ICEEI), Langkawi, Malaysia, 25–27 November 2017; IEEE: New York, NY, USA, 2017; pp. 1–5.

- Hassaine, A.; Safi, Z.; Jaoua, A. Authenticity detection as a binary text categorization problem: Application to Hadith authentication. In Proceedings of the 2016 IEEE/ACS 13th International Conference of Computer Systems and Applications (AICCSA), Agadir, Morocco, 29 November–2 December 2016; IEEE: New York, NY, USA, 2016; pp. 1–7.

- Tarmom, T.; Atwell, E.; Alsalka, M. Deep learning vs compression-based vs traditional machine learning classifiers to detect Hadith authenticity. In Proceedings of the Annual International Conference on Information Management and Big Data, Virtual, 1–3 December 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 206–222.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).