Interaction-Based Vehicle Automation Model for Intelligent Vision Systems

Abstract

1. Introduction

2. Related Work

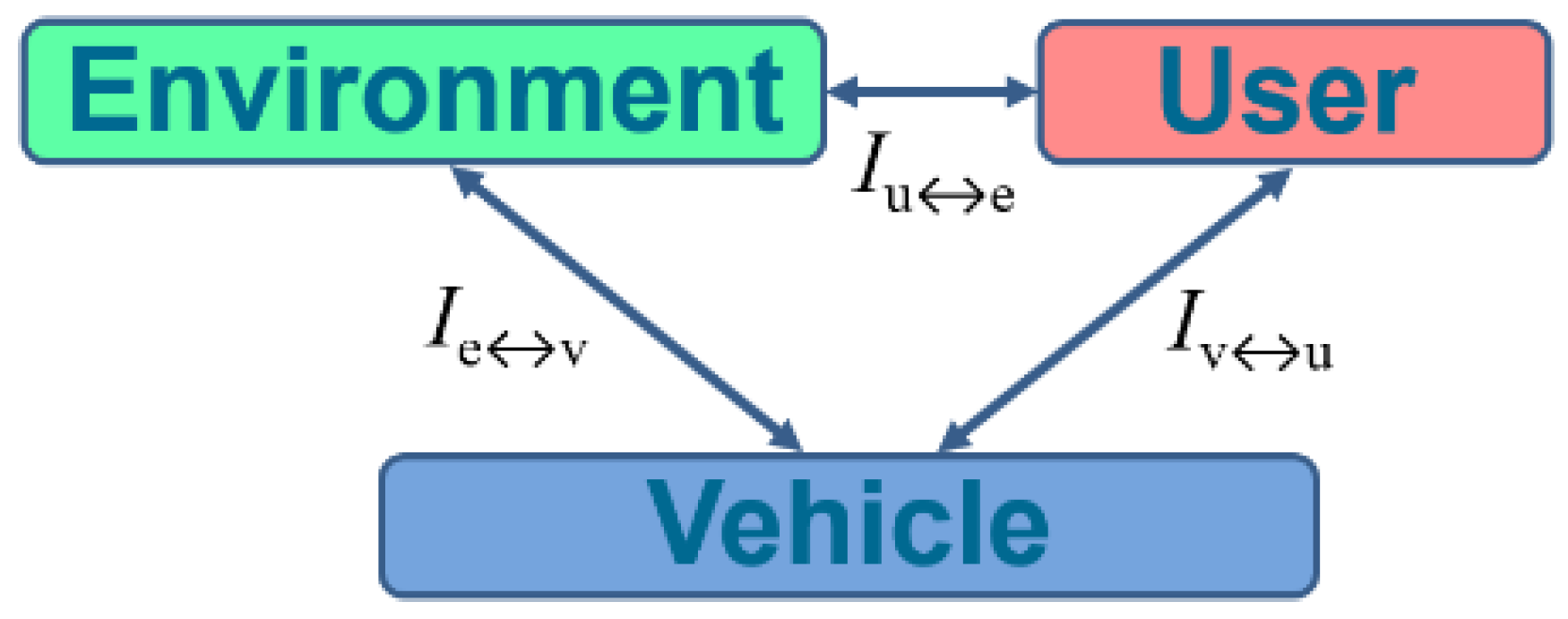

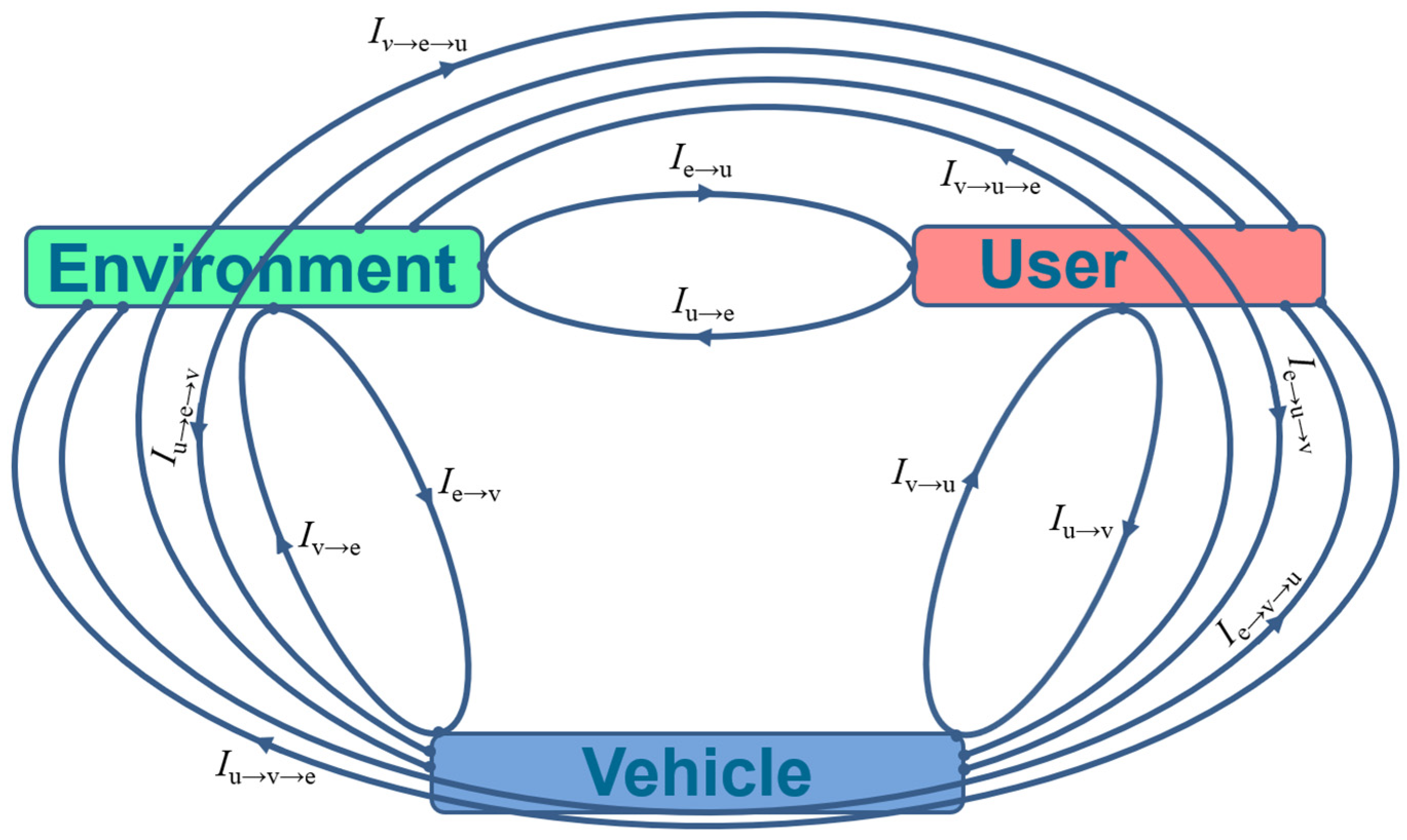

3. Proposed Interaction-Based Automation Analysis

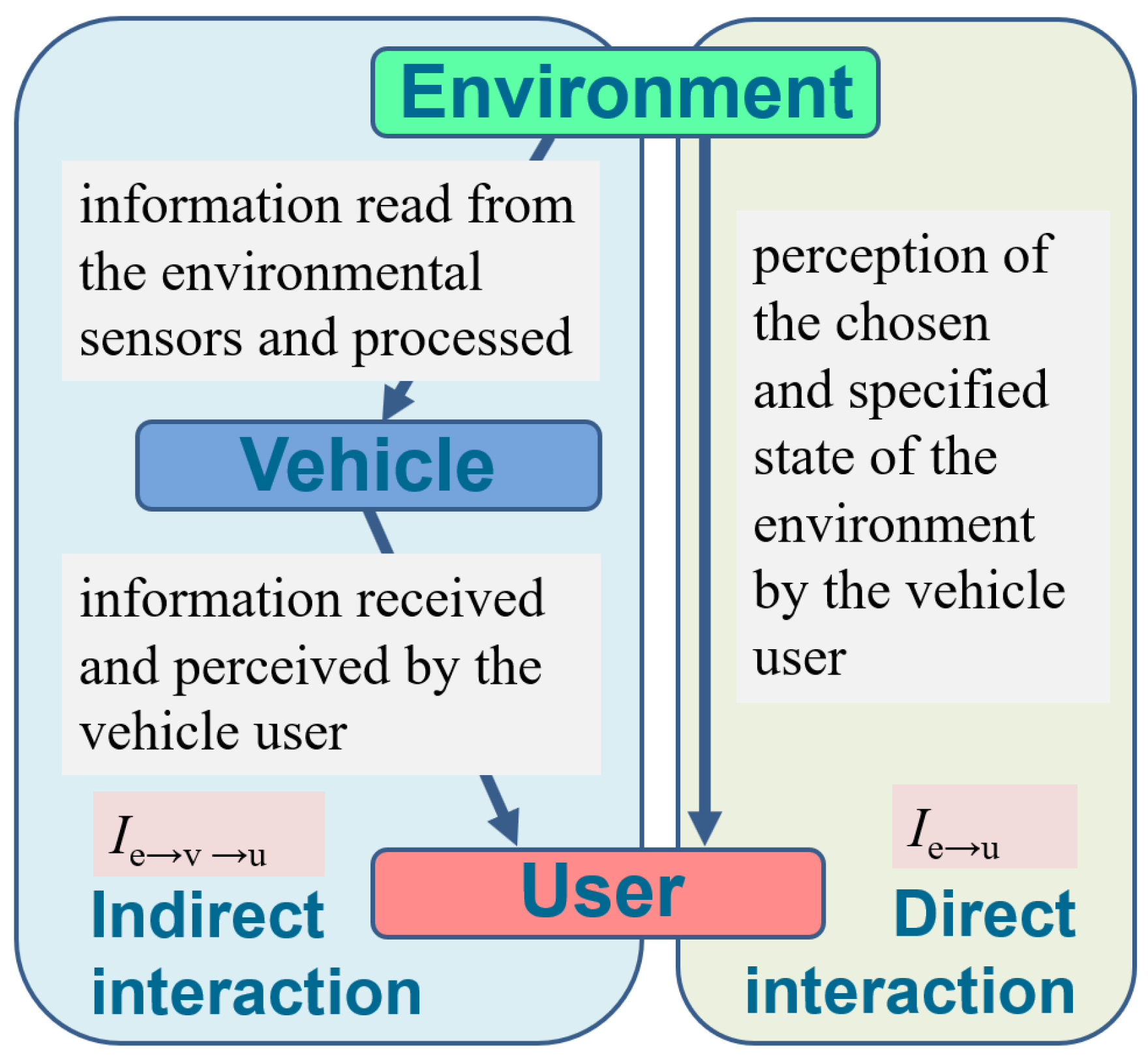

3.1. Definitions of Concepts and Notations

3.2. Mathematical Relationships

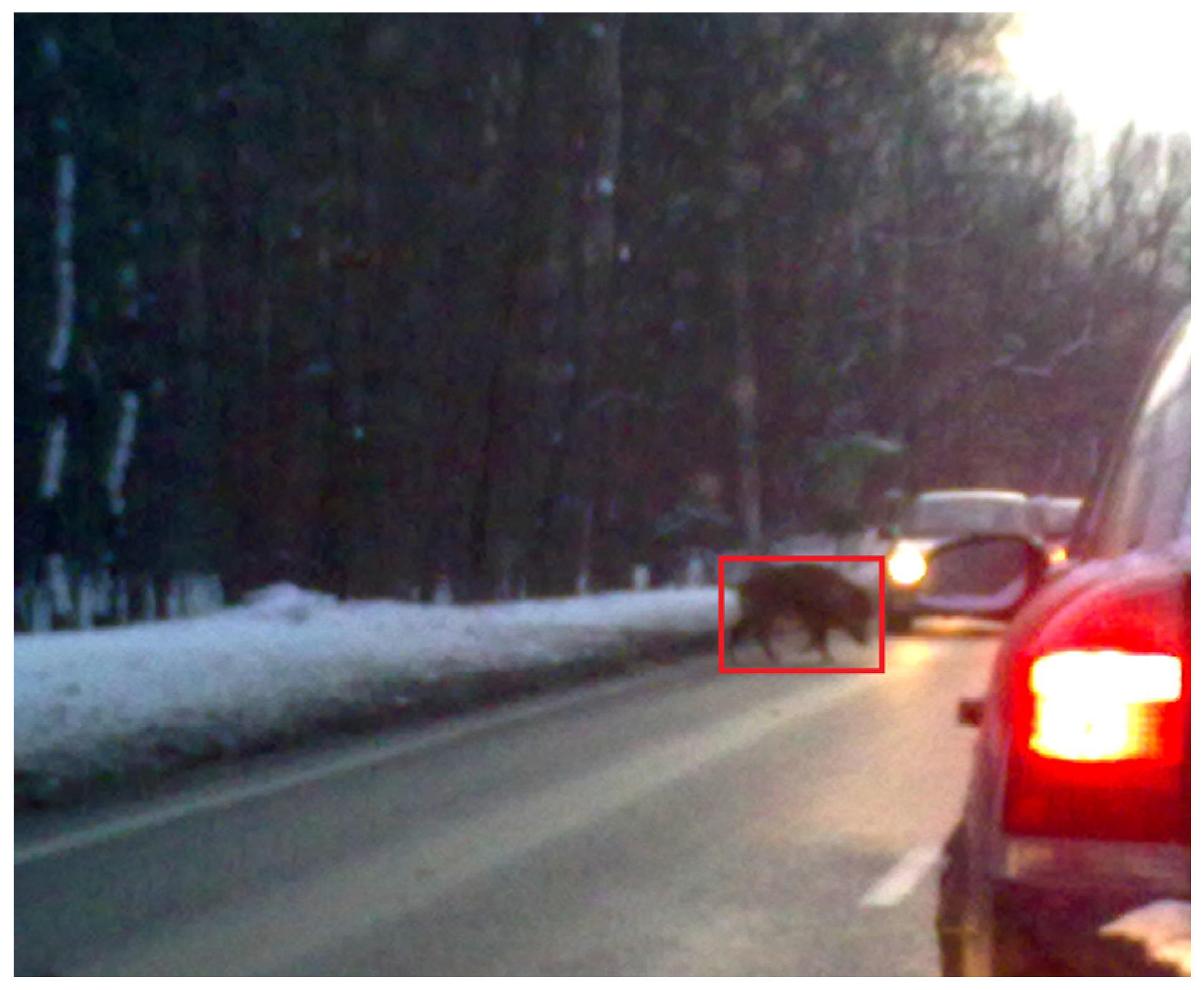

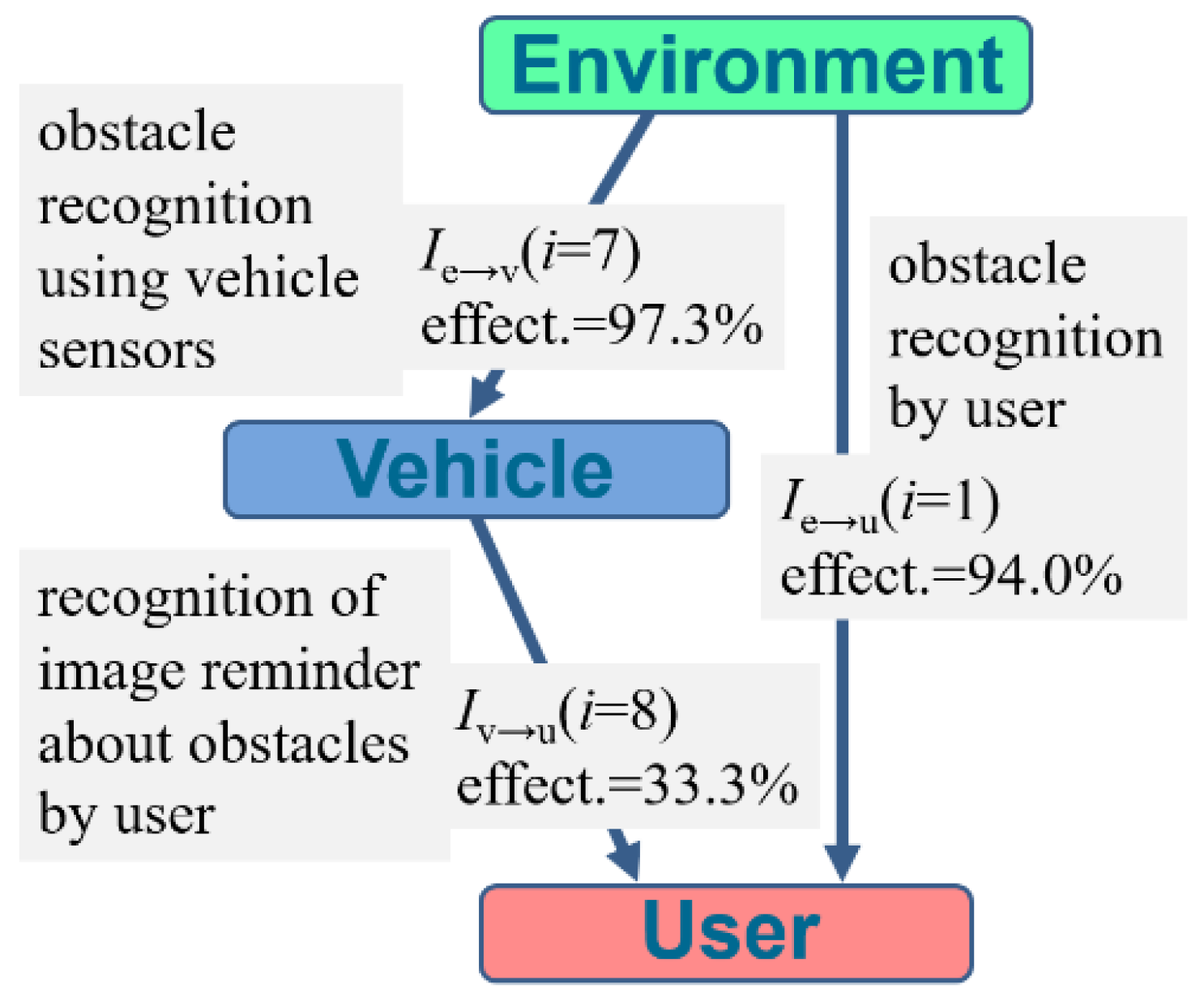

3.3. Reference to Vision Systems

3.4. Measures of Effectiveness

3.5. Analysis Based on Averaging and Multiplication

4. Application of the Proposed Model to Vision Systems

4.1. Input Data from the Literature Review of Intelligent Automotive Vision Systems

| Index (i) | Vehicle → Environment Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | classification of vehicle body elements (e.g., side indicator, door handle, fuel filler cap, headlight, front bumper) [21] | 99.0% |
| 2 | classification of vehicle interior elements (e.g., steering wheel, door handle, interior lamp, door lock mechanism) [21] | 100% |
| 3 | classification of vehicle engine elements (engine block, voltage regulator, air filter, coolant reservoir) [21] | 100% |
| 4 | car dashboard icons classification (e.g., power steering, brake system, seat belt, battery, exhaust control) [22] | 100% |
| 5 | tire inflation pressure recognition (normal and abnormal) [23] | 99.3% |
| 6 | tire tread height classification (proper and worn tread) [24] | 84.1% |
| 7 | vehicle corrosion detection (identification and analysis of surface rust and appraisal of its severity) [25] | 96.0% |
| 8 | vehicle damage detection and classification (various settings, lighting, makes and models; damages: bend, cover damage, crack, dent, glass shatter, light broken, missing, scratch) [26] | 91.0% |
| 9 | tire damage recognition (under various interfering conditions, including stains, wear, and complex mixed damages) [27] | 98.4% |
| 10 | rim damage detection (various weather and lighting conditions) [24] | 90.0% |
| | mean value of effectiveness | 95.8% |
| | product of effectiveness | 64.0% |
| | variance | 30.6 |
| | standard deviation | 5.5 |
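
The four summary rows of the table above can be reproduced from the ten listed effectiveness values. The sketch below is illustrative rather than the authors' procedure: the function name `summarize` is hypothetical, and it assumes the sample (n − 1) variance, which is the convention that matches the reported 30.6 and 5.5.

```python
import math
import statistics

# Recognition effectiveness [%] of the ten vehicle -> environment examples.
effectiveness = [99.0, 100.0, 100.0, 100.0, 99.3, 84.1, 96.0, 91.0, 98.4, 90.0]


def summarize(values_percent):
    """Return (mean, product, variance, standard deviation).

    Mean, variance and standard deviation are computed on the percentage
    values; the product is computed on fractions (p / 100) and converted
    back to percent.
    """
    mean = statistics.mean(values_percent)
    product = 100.0 * math.prod(v / 100.0 for v in values_percent)
    # Assumption: sample variance with the n - 1 denominator.
    variance = statistics.variance(values_percent)
    std_dev = statistics.stdev(values_percent)
    return mean, product, variance, std_dev


mean, product, variance, std_dev = summarize(effectiveness)
print(f"mean = {mean:.1f}%, product = {product:.1f}%, "
      f"variance = {variance:.1f}, std = {std_dev:.1f}")
```

The product falls well below the mean because every value under 100% compounds: a chain of ten recognitions that each succeed about 96% of the time succeeds as a whole only about 64% of the time.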

| Index (i) | User → Vehicle Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | facial recognition-based vehicle authentication (facial features analysis to authorize or reject a person) [28] | 98.0% |
| 2 | driver drowsiness detection using facial analysis (recognition of drowsy and awake driver behavior) [29] | 100% |
| 3 | driver emotion recognition using face images (anger, happiness, fear, sadness) [30] | 96.6% |
| 4 | driver distraction detection based on eye gaze analysis (recognition of the distracted state, i.e., gazing off the forward roadway, and the alert state, i.e., looking at the road ahead) [31] | 90.9% |
| 5 | hand gesture image recognition (multi-class hand gesture classification) [32] | 97.6% |
| 6 | visual detection of the presence of both hands on the steering wheel (images collected from the back of the steering wheel) [33] | 95.0% |
| 7 | vision-based feet detection for a power liftgate (various weather conditions, different illumination conditions, different types of shoes in various seasons, and various ground surfaces) [34] | 93.3% |
| 8 | pose classification of a driver operating the shift gear (driver hand position to predict safe/unsafe driving posture) [35] | 100% |
| 9 | pose classification of a driver eating or smoking (driver hand position to predict safe/unsafe driving posture) [35] | 100% |
| 10 | pose classification of a driver responding to a cell phone (driver hand position to predict safe/unsafe driving posture) [35] | 99.6% |
| | mean value of effectiveness | 97.1% |
| | product of effectiveness | 74.1% |
| | variance | 10.0 |
| | standard deviation | 3.2 |

| Index (i) | User → Environment Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | binary classification of presence/absence of a passenger along with the driver using external camera images (two cameras set at the oncoming traffic and perpendicular to it) [38] | 97.0% |
| 2 | rear passenger count using external camera images (two cameras set at the oncoming traffic and perpendicular to it) [38] | 91.1% |
| 3 | passenger detection using surveillance images without filter (various window tinting) [39] | 10.2% |
| 4 | passenger detection using surveillance images with neutral gray filter (various window tinting) [39] | 68.8% |
| 5 | passenger detection using surveillance images with near-infrared filter (various window tinting) [39] | 71.6% |
| 6 | passenger detection using surveillance images with near-infrared and ultraviolet filter (various window tinting) [39] | 72.2% |
| 7 | passenger detection using surveillance images with polarizing filter (various window tinting) [39] | 74.7% |
| 8 | passenger detection using surveillance images with near-infrared and neutral gray filter (various window tinting) [39] | 75.8% |
| 9 | passenger detection using surveillance images with near-infrared and polarizing filter (various window tinting) [39] | 76.6% |
| 10 | passenger detection using surveillance images with ultraviolet filter (various window tinting) [39] | 79.0% |
| | mean value of effectiveness | 71.7% |
| | product of effectiveness | 1.1% |
| | variance | 545.6 |
| | standard deviation | 23.4 |

| Index (i) | Environment → User Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | car recognition accuracy by human observers (human viewing behavior—spatial object recognition) [40] | 94.0% |
| 2 | human recognition accuracy by human observers (human viewing behavior—spatial object recognition) [40] | 98.0% |
| 3 | train recognition accuracy by human observers (human viewing behavior—spatial object recognition) [40] | 94.0% |
| 4 | dog recognition accuracy by human observers (human viewing behavior—spatial object recognition) [40] | 88.0% |
| 5 | cat recognition accuracy by human observers (human viewing behavior—spatial object recognition) [40] | 81.0% |
| 6 | bird recognition accuracy by human observers (human viewing behavior—spatial object recognition) [40] | 83.0% |
| 7 | counting people with various degrees of occlusion by observers in 2D images (several to a dozen people to count) [41] | 33.3% |
| 8 | counting people with various degrees of occlusion by observers in 3D images (several to a dozen people to count) [41] | 54.8% |
| 9 | estimation of the dependence of object motion trajectories with limited visibility by observers for 2D images (relationships between people, vehicles and the traffic sign line) [41] | 36.5% |
| 10 | estimation of the dependence of object motion trajectories with limited visibility by observers for 3D images (relationships between people, vehicles and the traffic sign line) [41] | 45.3% |
| | mean value of effectiveness | 70.8% |
| | product of effectiveness | 1.5% |
| | variance | 650.5 |
| | standard deviation | 25.5 |

| Index (i) | Vehicle → User Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | recognition of text reminder about safety belt displayed on the screen (noticed in pre-driving stage) [42] | 50.0% |
| 2 | recognition of image reminder about safety belt displayed on the screen (noticed in pre-driving stage) [42] | 83.3% |
| 3 | recognition of text reminder about fuel check displayed on the screen (noticed in pre-driving stage) [42] | 83.3% |
| 4 | recognition of image reminder about fuel check displayed on the screen (noticed in pre-driving stage) [42] | 83.3% |
| 5 | recognition of text reminder about mirror check displayed on the screen (noticed in pre-driving stage) [42] | 50.0% |
| 6 | recognition of image reminder about mirror check displayed on the screen (noticed in pre-driving stage) [42] | 66.7% |
| 7 | recognition of text reminder about obstacles displayed on the screen (noticed in during-driving stage) [42] | 33.3% |
| 8 | recognition of image reminder about obstacles displayed on the screen (noticed in during-driving stage) [42] | 33.3% |
| 9 | recognition of text reminder about drowsy driving displayed on the screen (noticed in during-driving stage) [42] | 33.3% |
| 10 | recognition of image reminder about drowsy driving displayed on the screen (noticed in during-driving stage) [42] | 16.7% |
| | mean value of effectiveness | 53.3% |
| | product of effectiveness | 0.1% |
| | variance | 604.5 |
| | standard deviation | 24.6 |

4.2. Effectiveness Averaging

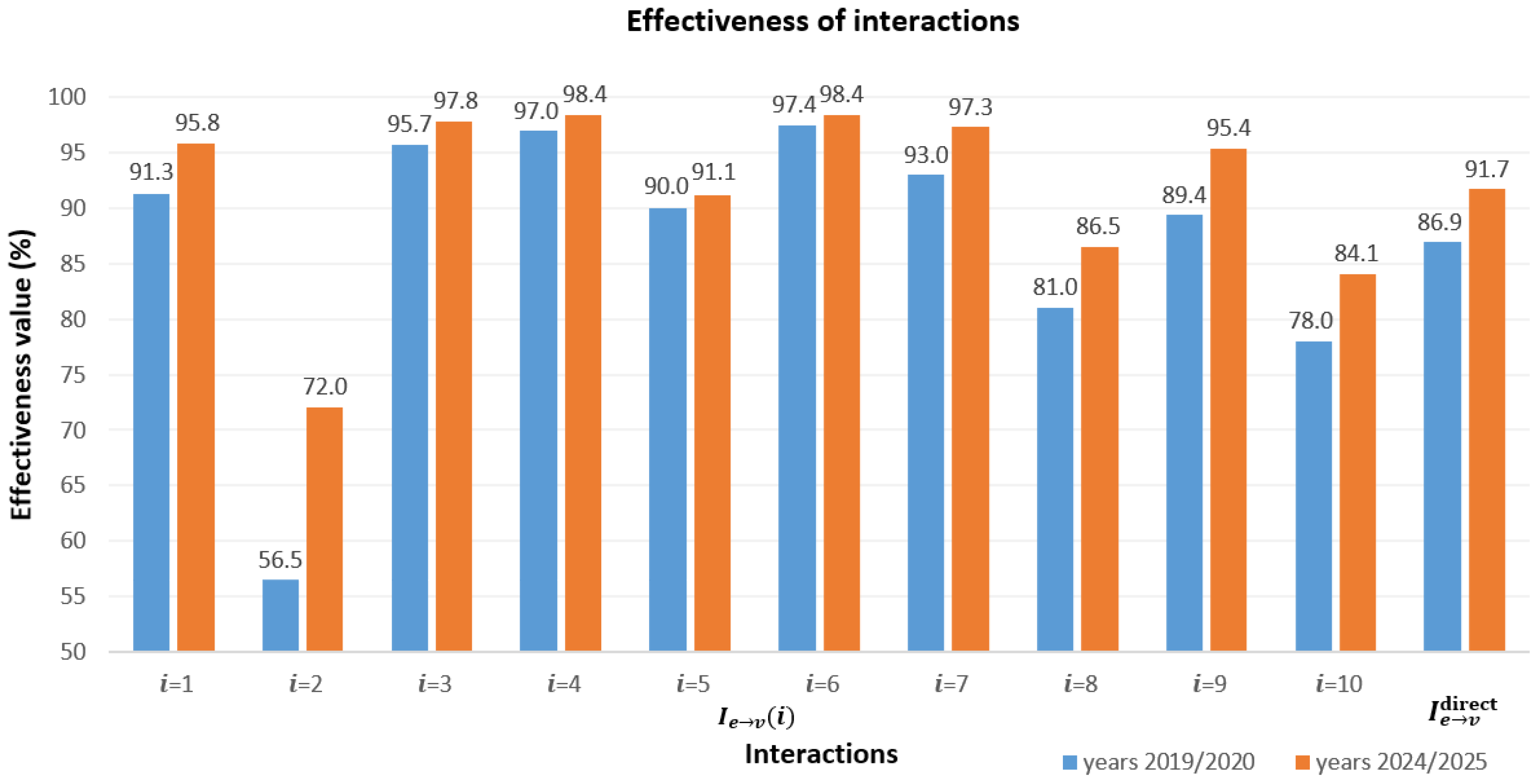

4.2.1. Effectiveness over Time

4.2.2. Effectiveness for Special Application Requirements

4.2.3. Effectiveness for Special Environmental Conditions

4.3. Effectiveness Multiplication

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Wagner, P. Traffic Control and Traffic Management in a Transportation System with Autonomous Vehicles. In Autonomous Driving: Technical, Legal and Social Aspects; Maurer, M., Gerdes, J.C., Lenz, B., Winner, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; pp. 301–316.

2. Ali, F.; Khan, Z.H.; Gulliver, T.A.; Khattak, K.S.; Altamimi, A.B. A Microscopic Traffic Model Considering Driver Reaction and Sensitivity. Appl. Sci. 2023, 13, 7810.

3. Balcerek, J.; Pawłowski, P.; Cierpiszewska, J.; Kus, Ł.; Zborowski, P. Automatic recognition of dangerous objects in front of the vehicle’s windshield. In Proceedings of the 2024 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 25–27 September 2024; IEEE: New York, NY, USA, 2024; pp. 91–96.

4. Tollner, D.; Zöldy, M. Road Type Classification of Driving Data Using Neural Networks. Computers 2025, 14, 70.

5. Balcerek, J.; Pawłowski, P. How to combine issues related to autonomous vehicles—A proposal with a literature review. In Proceedings of the 2023 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 20–22 September 2023; IEEE: New York, NY, USA, 2023; pp. 189–194.

6. Balcerek, J.; Dąbrowski, A.; Pawłowski, P. Vision signals and systems in the vehicle automation model. Prz. Elektrotech. 2024, 100, 208–211.

7. Dilek, E.; Dener, M. Computer Vision Applications in Intelligent Transportation Systems: A Survey. Sensors 2023, 23, 2938.

8. González-Saavedra, J.F.; Figueroa, M.; Céspedes, S.; Montejo-Sánchez, S. Survey of Cooperative Advanced Driver Assistance Systems: From a Holistic and Systemic Vision. Sensors 2022, 22, 3040.

9. Effectiveness, Awork, Glossary. Available online: https://www.awork.com/glossary/effectiveness (accessed on 26 August 2025).

10. Noola, D.A.; Basavaraju, D.R. Corn leaf image classification based on machine learning techniques for accurate leaf disease detection. Int. J. Electr. Comput. Eng. 2022, 12, 2509–2516.

11. Fan, X.; Liu, R. Automatic Detection and Classification of Surface Diseases on Roads and Bridges Using Fuzzy Neural Networks. In Proceedings of the 2024 International Conference on Electrical Drives, Power Electronics & Engineering (EDPEE), Athens, Greece, 27–29 February 2024; IEEE: New York, NY, USA, 2024; pp. 770–775.

12. Maddiralla, V.; Subramanian, S. Effective lane detection on complex roads with convolutional attention mechanism in autonomous vehicles. Sci. Rep. 2024, 14, 19193.

13. Prakash, A.J.; Sruthy, S. Enhancing traffic sign recognition (TSR) by classifying deep learning models to promote road safety. Signal Image Video Process. 2024, 18, 4713–4729.

14. Lin, H.-Y.; Lin, S.-Y.; Tu, K.-C. Traffic Light Detection and Recognition Using a Two-Stage Framework From Individual Signal Bulb Identification. IEEE Access 2024, 12, 132279–132289.

15. Wang, K.-C.; Meng, C.-L.; Dow, C.-R.; Lu, B. Pedestrian-Crossing Detection Enhanced by CyclicGAN-Based Loop Learning and Automatic Labeling. Appl. Sci. 2025, 15, 6459.

16. Dhatrika, S.K.; Reddy, D.R.; Reddy, N.K. Real-Time Object Recognition For Advanced Driver-Assistance Systems (ADAS) Using Deep Learning On Edge Devices. Procedia Comput. Sci. 2025, 252, 25–42.

17. Balcerek, J.; Pawłowski, P.; Dolny, K.; Skotnicka, J. Automatic recognition of emergency vehicles in images from video recorders. In Proceedings of the 2024 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 25–27 September 2024; IEEE: New York, NY, USA, 2024; pp. 85–90.

18. Parkavi, K.; Ganguly, A.; Sharma, S.; Banerjee, A.; Kejriwal, K. Enhancing Road Safety: Detection of Animals on Highways During Night. IEEE Access 2025, 13, 36877–36896.

19. Balcerek, J.; Pawłowski, P.; Trzciński, B. Vision system for automatic recognition of animals on images from car video recorders. Prz. Elektrotech. 2023, 99, 278–281.

20. Wiseman, Y. Real-time monitoring of traffic congestions. In Proceedings of the 2017 IEEE International Conference on Electro Information Technology (EIT), Lincoln, NE, USA, 14–17 May 2017; IEEE: New York, NY, USA, 2017; pp. 501–505.

21. Balcerek, J.; Dąbrowski, A.; Pawłowski, P.; Tokarski, P. Automatic recognition of elements of Polish historical vehicles. Prz. Elektrotech. 2024, 100, 212–215.

22. Balcerek, J.; Hinc, M.; Jalowski, Ł.; Michalak, J.; Rabiza, M.; Konieczka, A. Vision-based mobile application for supporting the user in the vehicle operation. In Proceedings of the 2019 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 18–20 September 2019; IEEE: New York, NY, USA, 2019; pp. 250–255.

23. Zhang, J.; Peng, J.; Kong, X.; Deng, L.; Obrien, E.J. Vision-based identification of tire inflation pressure using Tire-YOLO and deflection. Measurement 2025, 242, 116228.

24. Balcerek, J.; Konieczka, A.; Pawłowski, P.; Dankowski, C.; Firlej, M.; Fulara, P. Automatic recognition of vehicle wheel parameters. Prz. Elektrotech. 2022, 98, 205–208.

25. Dhonde, K.; Mirhassani, M.; Tam, E.; Sawyer-Beaulieu, S. Design of a Real-Time Corrosion Detection and Quantification Protocol for Automobiles. Materials 2022, 15, 3211.

26. Amodu, O.D.; Shaban, A.; Akinade, G. Revolutionizing Vehicle Damage Inspection: A Deep Learning Approach for Automated Detection and Classification. In Proceedings of the 9th International Conference on Internet of Things, Big Data and Security (IoTBDS 2024), Angers, France, 28–30 April 2024; SciTePress: Setúbal, Portugal, 2024; pp. 199–208.

27. Shen, D.; Cao, J.; Liu, P.; Guo, J. Intelligent recognition system of in-service tire damage driven by strong combination augmentation and contrast fusion. Neural Comput. Applic. 2025, 37, 5795–5813.

28. Gade, M.V.; Afroj, M.; Kadaskar, S.; Wagh, P. Facial Recognition Based Vehicle Authentication System. IJIREEICE 2025, 13, 124–129.

29. Essahraui, S.; Lamaakal, I.; El Hamly, I.; Maleh, Y.; Ouahbi, I.; El Makkaoui, K.; Filali Bouami, M.; Pławiak, P.; Alfarraj, O.; Abd El-Latif, A.A. Real-Time Driver Drowsiness Detection Using Facial Analysis and Machine Learning Techniques. Sensors 2025, 25, 812.

30. Luan, X.; Wen, Q.; Hang, B. Driver emotion recognition based on attentional convolutional network. Front. Phys. 2024, 12, 1387338.

31. Hermandez, J.-S.; Pardales, F.-T., Jr.; Lendio, N.M.-S.; Manalili, I.E.-S.; Garcia, E.-A.; Tee, A.-C., Jr. Real-time driver drowsiness and distraction detection using convolutional neural network with multiple behavioral features. World J. Adv. Res. Rev. 2024, 23, 816–824.

32. Ahmed, I.T.; Gwad, W.H.; Hammad, B.T.; Alkayal, E. Enhancing Hand Gesture Image Recognition by Integrating Various Feature Groups. Technologies 2025, 13, 164.

33. Borghi, G.; Frigieri, E.; Vezzani, R.; Cucchiara, R. Hands on the wheel: A Dataset for Driver Hand Detection and Tracking. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; IEEE: New York, NY, USA, 2018; pp. 564–570.

34. Liu, J.; Chen, Y.; Yan, F.; Zhang, R.; Liao, X.; Wu, Y.; Sun, Y.; Hu, D.; Chen, N. Vision-based feet detection power liftgate with deep learning on embedded device. J. Phys. Conf. Ser. 2022, 2302, 012010.

35. Yan, C.; Coenen, F.; Zhang, B. Driving posture recognition by convolutional neural networks. IET Comput. Vis. 2016, 10, 103–114.

36. Gu, X.; Lu, Z.; Ren, J.; Zhang, Q. Seat belt detection using gated Bi-LSTM with part-to-whole attention on diagonally sampled patches. Expert Syst. Appl. 2024, 252, 123784.

37. Balcerek, J.; Konieczka, A.; Pawłowski, P.; Rusinek, W.; Trojanowski, W. Vision system for automatic recognition of selected road users. In Proceedings of the 2022 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 21–22 September 2022; IEEE: New York, NY, USA, 2022; pp. 155–160.

38. Kumar, A.; Gupta, A.; Santra, B.; Lalitha, K.; Kolla, M.; Gupta, M.; Singh, R. VPDS: An AI-Based Automated Vehicle Occupancy and Violation Detection System. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9498–9503.

39. Kuchár, P.; Pirník, R.; Kafková, J.; Tichý, T.; Ďurišová, J.; Skuba, M. SCAN: Surveillance Camera Array Network for Enhanced Passenger Detection. IEEE Access 2024, 12, 115237–115255.

40. van Dyck, L.E.; Kwitt, R.; Denzler, S.J.; Gruber, W.L. Comparing Object Recognition in Humans and Deep Convolutional Neural Networks—An Eye Tracking Study. Front. Neurosci. 2021, 15, 750639.

41. Balcerek, J. Human-Computer Supporting Interfaces for Automatic Recognition of Threats. Ph.D. Thesis, Poznan University of Technology, Poznan, Poland, 2016.

42. Zou, Z.; Alnajjar, F.; Lwin, M.; Ali, L.; Jassmi, H.A.; Mubin, O.; Swavaf, M. A preliminary simulator study on exploring responses of drivers to driving system reminders on four stimuli in vehicles. Sci. Rep. 2025, 15, 4009.

43. Balcerek, J.; Konieczka, A.; Piniarski, K.; Pawłowski, P. Classification of road surfaces using convolutional neural network. In Proceedings of the 2020 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 23–25 September 2020; IEEE: New York, NY, USA, 2020; pp. 98–103.

44. Naddaf-Sh, S.; Naddaf-Sh, M.-M.; Kashani, A.R.; Zargarzadeh, H. An Efficient and Scalable Deep Learning Approach for Road Damage Detection. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; IEEE: New York, NY, USA, 2020; pp. 5602–5608.

45. Zhang, X.; Huang, H.; Meng, W.; Luo, D. Improved lane detection method based on convolutional neural network using self-attention distillation. Sens. Mater. 2020, 32, 4505–4516.

46. Radu, M.D.; Costea, I.M.; Stan, V.A. Automatic Traffic Sign Recognition Artificial Inteligence—Deep Learning Algorithm. In Proceedings of the 2020 12th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Bucharest, Romania, 25–27 June 2020; IEEE: New York, NY, USA, 2020; pp. 1–4.

47. Che, M.; Che, M.; Chao, Z.; Cao, X. Traffic Light Recognition for Real Scenes Based on Image Processing and Deep Learning. Comput. Inform. 2020, 39, 439–463.

48. Malbog, M.A. MASK R-CNN for Pedestrian Crosswalk Detection and Instance Segmentation. In Proceedings of the 2019 IEEE 6th International Conference on Engineering Technologies and Applied Sciences (ICETAS), Kuala Lumpur, Malaysia, 20–21 December 2019; IEEE: New York, NY, USA, 2019; pp. 1–5.

49. Kim, J.-A.; Sung, J.-Y.; Park, S.-h. Comparison of Faster-RCNN, YOLO, and SSD for Real-Time Vehicle Type Recognition. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics—Asia (ICCE-Asia), Seoul, Republic of Korea, 1–3 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–4.

50. Kaushik, S.; Raman, A.; Rajeswara Rao, K.V.S. Leveraging Computer Vision for Emergency Vehicle Detection-Implementation and Analysis. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

51. Gothankar, N.; Kambhamettu, C.; Moser, P. Circular Hough Transform Assisted CNN Based Vehicle Axle Detection and Classification. In Proceedings of the 2019 4th International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 5–7 September 2019; IEEE: New York, NY, USA, 2019; pp. 217–221.

52. Yudin, D.; Sotnikov, A.; Krishtopik, A. Detection of Big Animals on Images with Road Scenes using Deep Learning. In Proceedings of the 2019 International Conference on Artificial Intelligence: Applications and Innovations (IC-AIAI), Belgrade, Serbia, 30 September–4 October 2019; IEEE: New York, NY, USA, 2019; pp. 100–1003.

53. J3016_202104; Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. SAE International: Warrendale, PA, USA, 2021. Available online: https://www.sae.org/standards/content/j3016_202104/ (accessed on 22 August 2025).

| Interaction | Characteristics |
|---|---|
| Environment → Vehicle | recognition of environmental objects using the vehicle sensors |
| Vehicle → Environment | recognition of vehicle components by external applications (smartphone, surveillance) |
| User → Environment | recognition of vehicle user characteristics by external applications |
| Environment → User | recognition of environmental objects directly or displayed by external applications, by the vehicle user |
| Vehicle → User | recognition of objects displayed in the vehicle by the vehicle user |
| User → Vehicle | recognition of visible state, commands and activities of user by the vehicle |

| Index (i) | Environment → Vehicle Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | road type classification (city, rural, highway) [4] | 95.8% |
| 2 | detection and classification of surface diseases (on roads and bridges; damages: cracks, potholes) [11] | 72.0% |
| 3 | lane detection (on poor roads, particularly with curves, broken lanes, no lane markings, extreme weather conditions) [12] | 97.8% |
| 4 | traffic sign recognition (urban, rural, and highway scenes; in different weather conditions and lighting situations) [13] | 98.4% |
| 5 | traffic light recognition (on individual light signal bulbs) [14] | 91.1% |
| 6 | pedestrian-crossing detection (under varying environmental conditions: daytime, nighttime, and rainy) [15] | 98.4% |
| 7 | detecting various vehicles, pedestrians, and barrier cones (various conditions: sunny, rainy, daytime, and nighttime) [16] | 97.3% |
| 8 | recognition of emergency vehicles (Police, fire brigade, ambulance, military) [17] | 86.5% |
| 9 | recognition of dangerous objects in front of the vehicle’s windshield—car wheels (diverse weather and lighting conditions on the roads) [3] | 95.4% |
| 10 | detection of animals on highways (during night) [18] | 84.1% |
| | mean value of effectiveness | 91.7% |
| | product of effectiveness | 40.2% |
| | variance | 73.6 |
| | standard deviation | 8.6 |

| Human Driver Role | 0.0 (reduced) | 0.2 (reduced) | 0.4 (reduced) | 0.6 (reduced) | 0.8 (reduced) | 1.0 (eq.) | 1.2 (increased) | 1.4 (increased) | 1.6 (increased) | 1.8 (increased) | 2.0 (increased) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| entire model effectiveness [in %] | 87.5 | 86.0 | 84.5 | 83.0 | 81.6 | 80.1 | 78.6 | 77.1 | 75.6 | 74.1 | 72.6 |
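
The 80.1% entry at a human driver role of 1.0 (eq.) agrees with the plain average of the six "mean value of effectiveness" rows reported in the interaction tables. The sketch below checks only that one column. Reading the equal-role case as an unweighted mean is an assumption, and the weighting behind the other columns is not reproduced here.

```python
import statistics

# Mean recognition effectiveness [%] per interaction type, copied from the
# "mean value of effectiveness" rows of the six interaction tables.
interaction_means = {
    "environment -> vehicle": 91.7,
    "vehicle -> environment": 95.8,
    "user -> vehicle": 97.1,
    "user -> environment": 71.7,
    "environment -> user": 70.8,
    "vehicle -> user": 53.3,
}

# Assumption: at role 1.0 every interaction counts equally, so the
# entire-model effectiveness is the arithmetic mean of the six values.
entire_model = statistics.mean(list(interaction_means.values()))
print(f"entire model effectiveness at role 1.0: {entire_model:.1f}%")
```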

| Interaction Ie→v(i), i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | weighted mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| weight | 1.25 | 1.25 | 1.25 | 1.25 | 0 | 0 | 1.25 | 1.25 | 1.25 | 1.25 | |
| effectiveness [%] | 95.8 | 72.0 | 97.8 | 98.4 | 91.1 | 98.4 | 97.3 | 86.5 | 95.4 | 84.1 | 90.9 |
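
The final 90.9% in the table above is consistent with a weighted mean in which items 5 and 6 are switched off (weight 0) and the remaining eight weights are raised to 1.25, so the weights still sum to 10. The sketch below reproduces that arithmetic; the helper name `weighted_mean` is hypothetical, and normalizing by the sum of the weights is an assumption that matches the reported value.

```python
# Effectiveness [%] and weights of the ten environment -> vehicle examples.
effectiveness = [95.8, 72.0, 97.8, 98.4, 91.1, 98.4, 97.3, 86.5, 95.4, 84.1]
weights = [1.25, 1.25, 1.25, 1.25, 0.0, 0.0, 1.25, 1.25, 1.25, 1.25]


def weighted_mean(values, weights):
    """Return sum(w * v) / sum(w); a zero weight excludes the item."""
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("at least one weight must be non-zero")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


result = weighted_mean(effectiveness, weights)
plain_mean = sum(effectiveness) / len(effectiveness)
print(f"weighted mean = {result:.1f}%, unweighted mean = {plain_mean:.1f}%")
```

Dropping the two items lowers the result slightly from the unweighted 91.7%, because the excluded values (91.1% and 98.4%) average above the remaining eight.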

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Balcerek, J.; Pawłowski, P. Interaction-Based Vehicle Automation Model for Intelligent Vision Systems. Electronics 2025, 14, 3406. https://doi.org/10.3390/electronics14173406