Failure Detection with IWO-Based ANN Algorithm Initialized Using Fractal Origin Weights

Abstract

1. Introduction

2. Materials and Methods

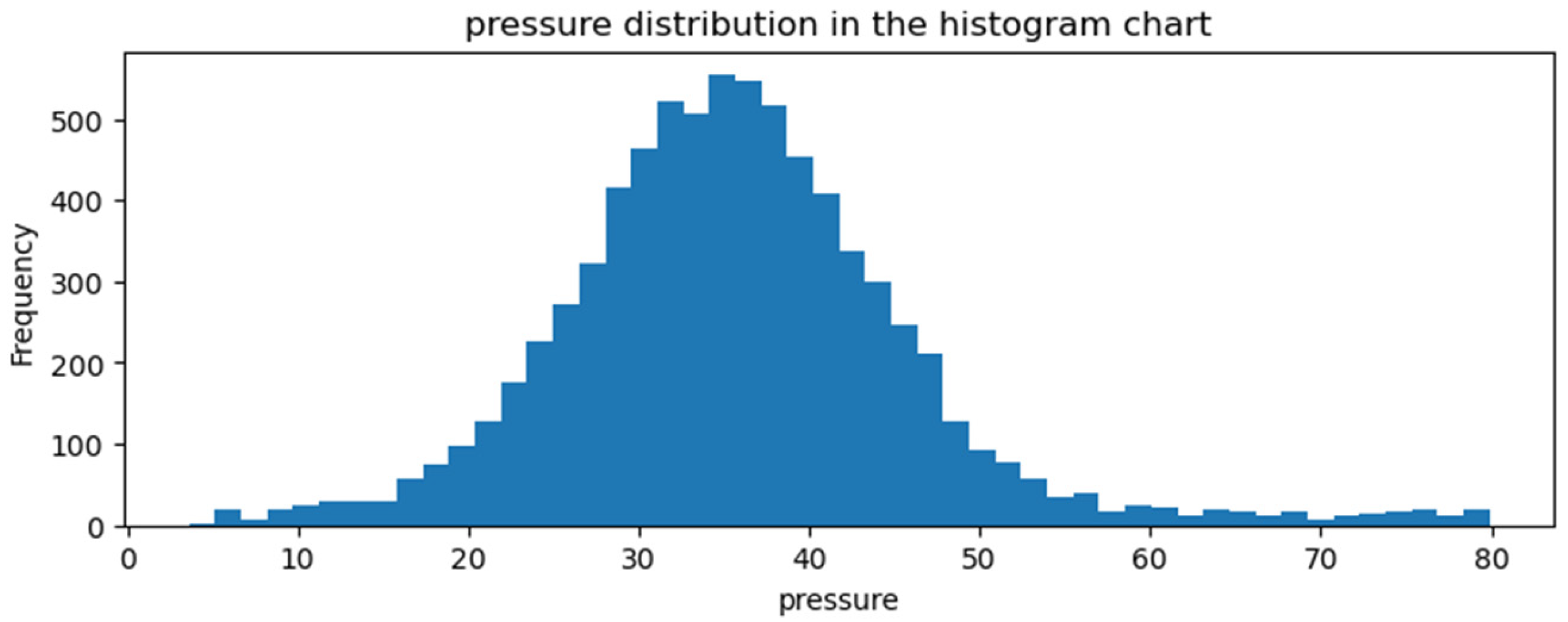

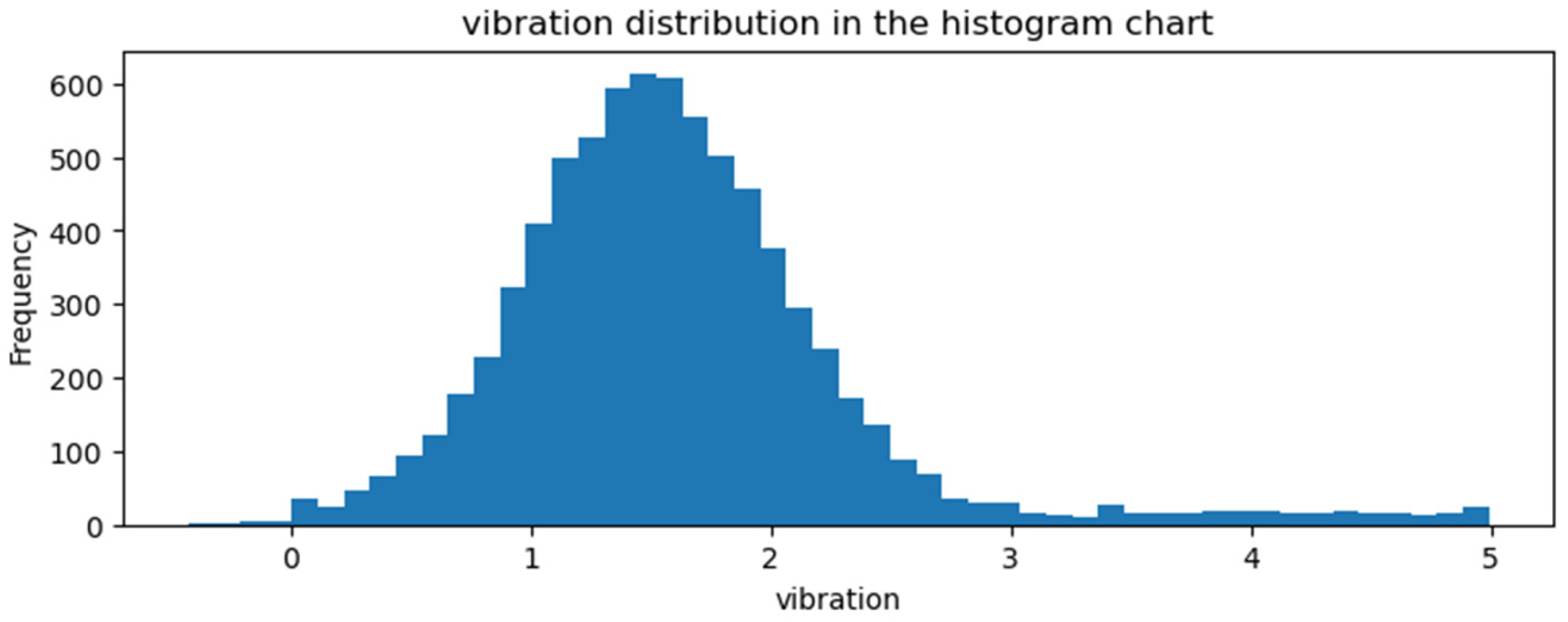

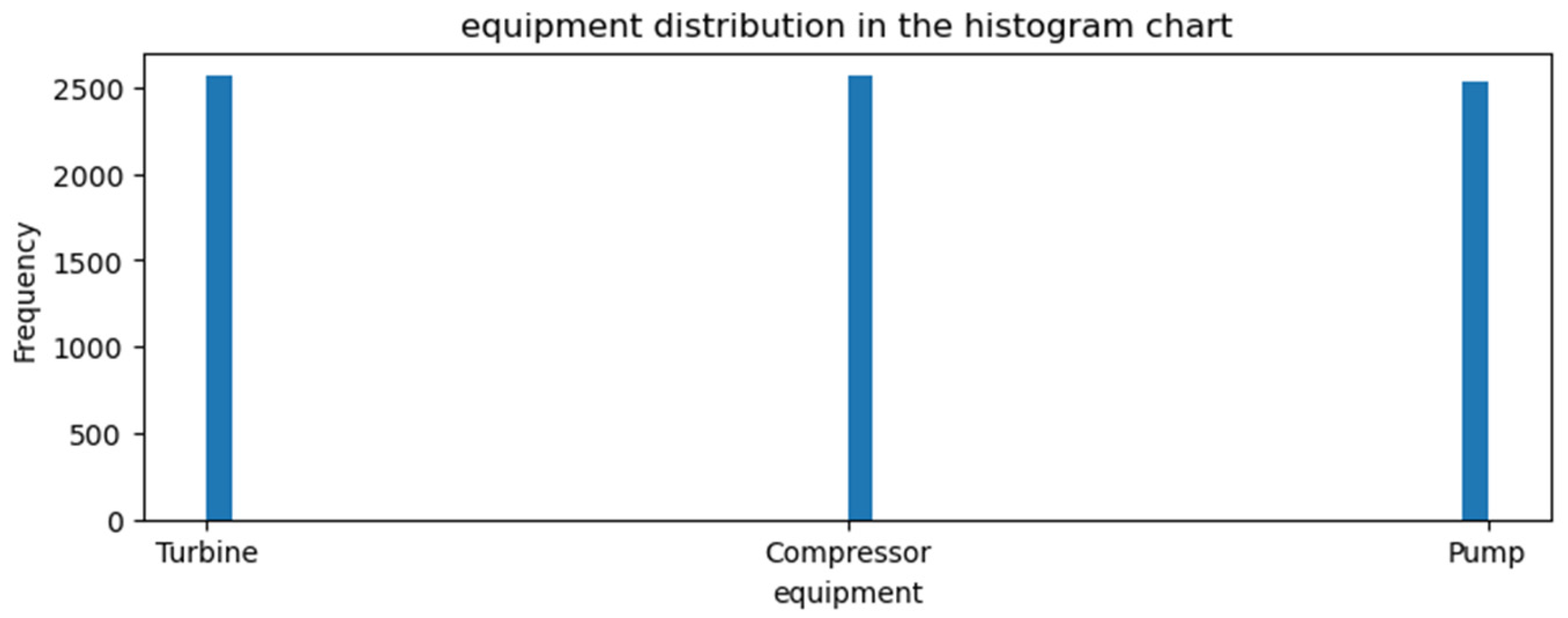

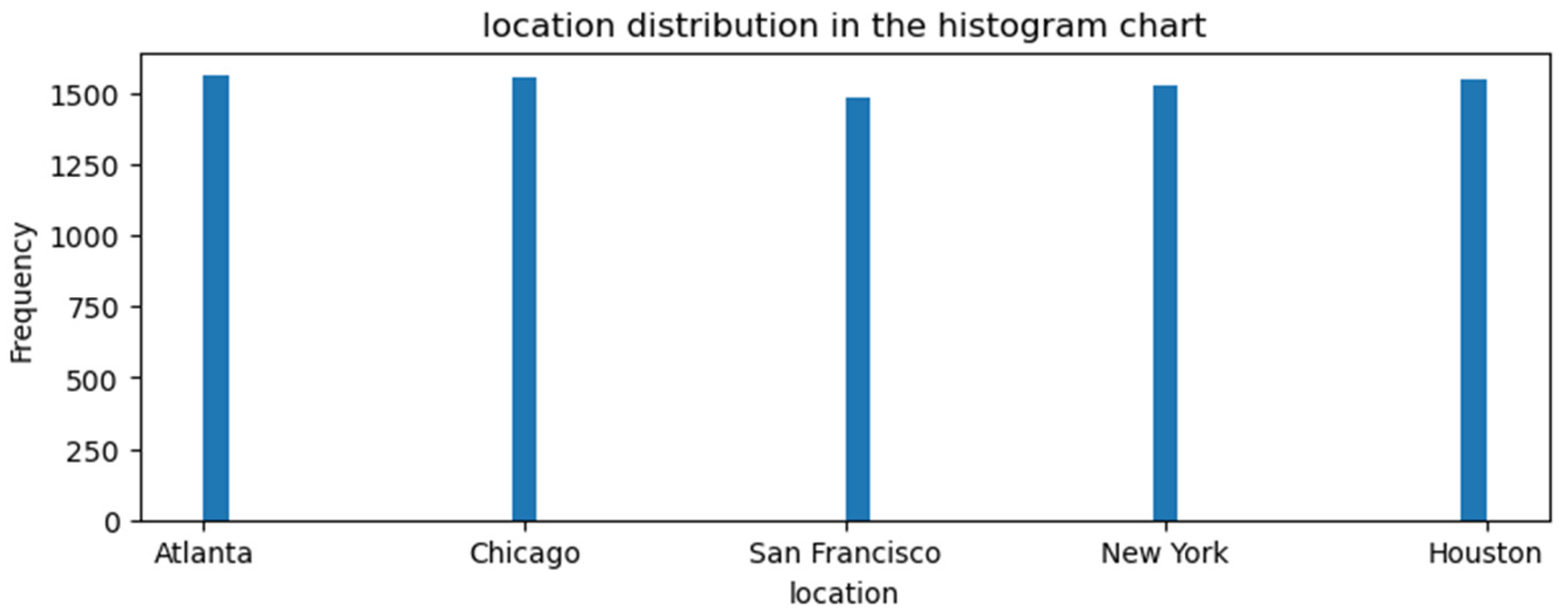

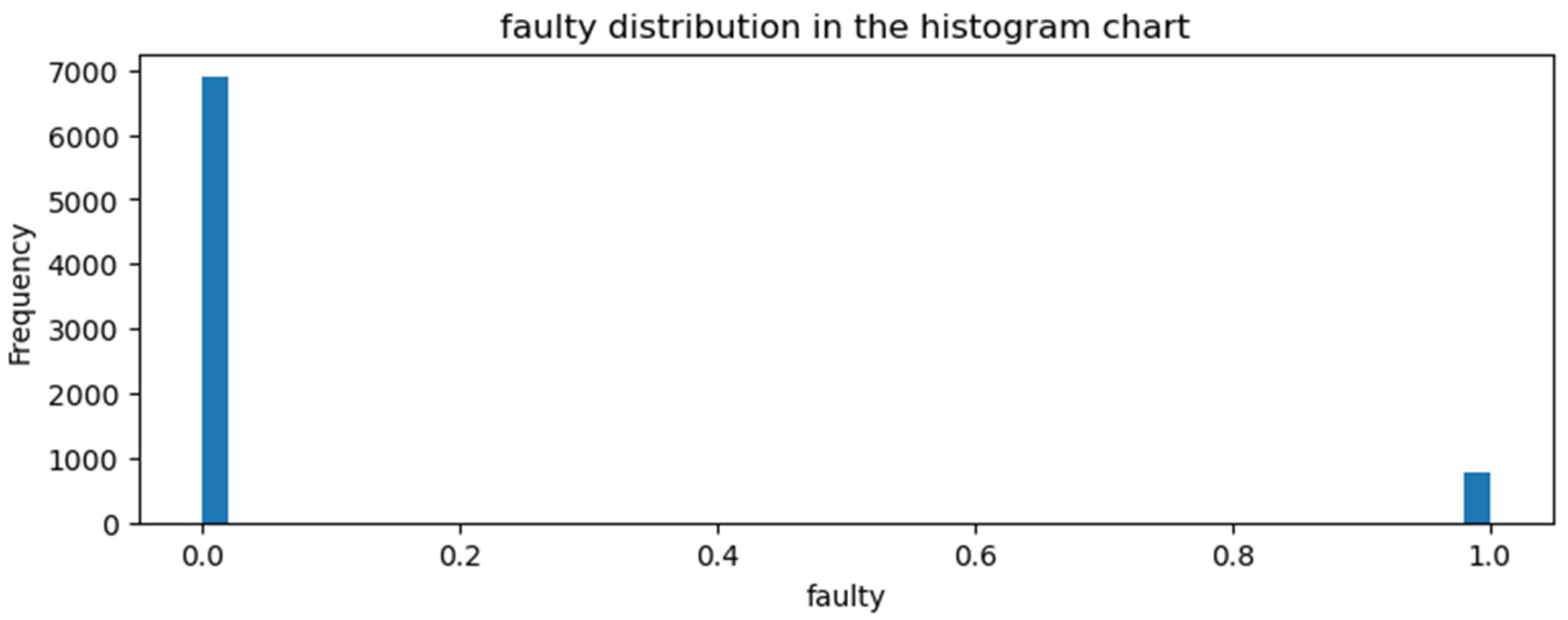

2.1. Dataset

2.2. Preprocessing

2.3. Optimization

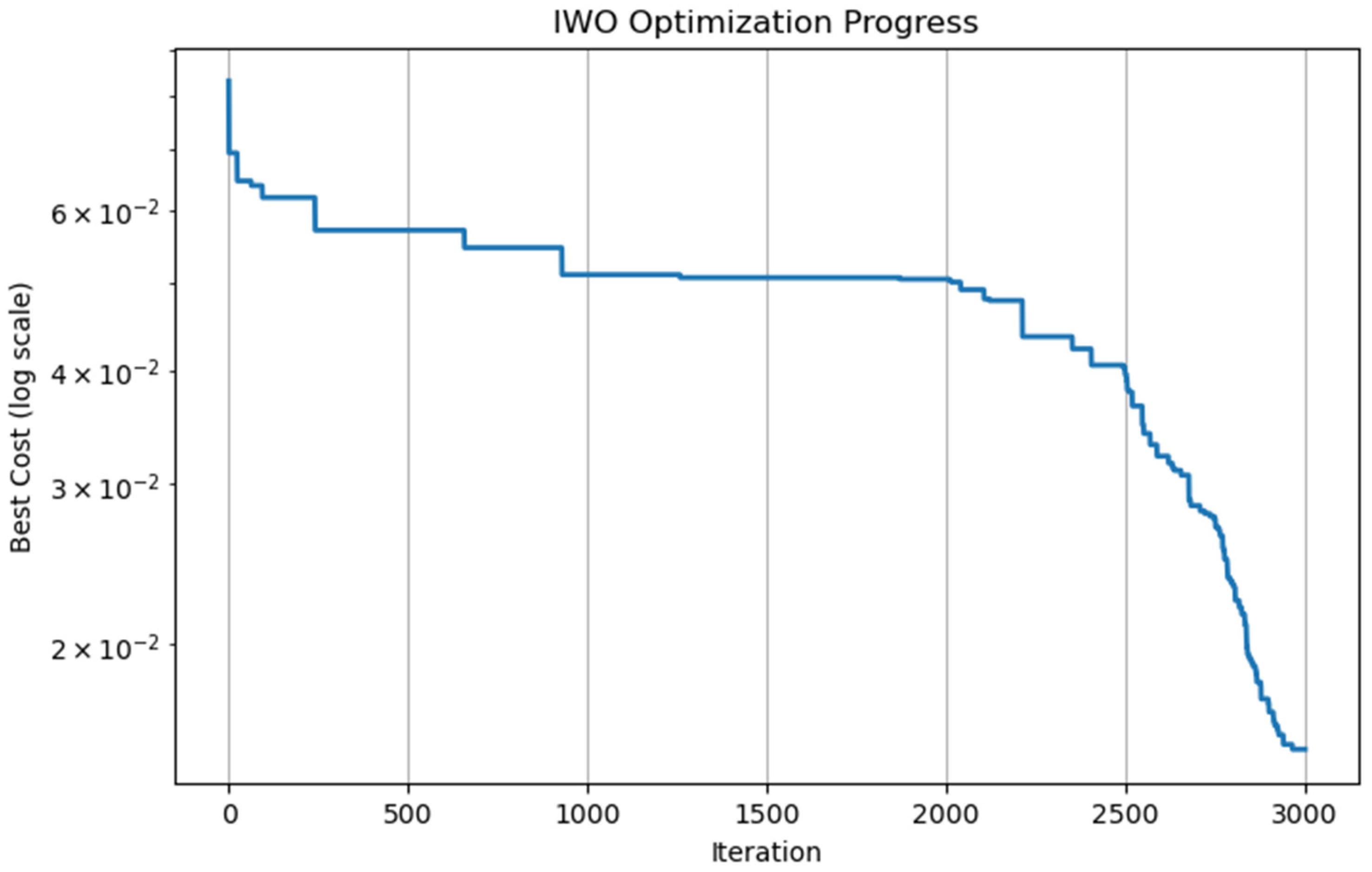

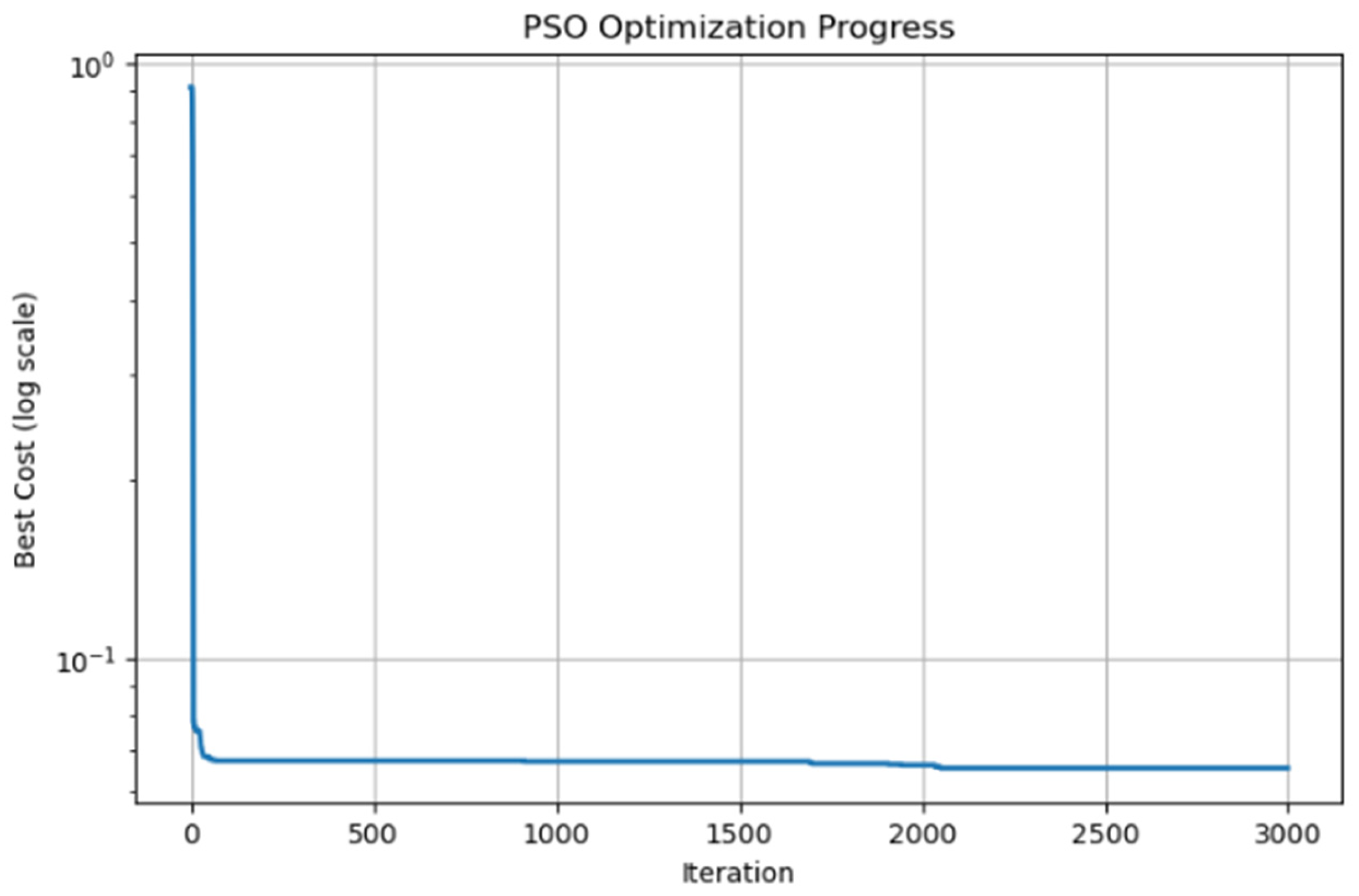

3. IWO-Based ANN Algorithm

- The first stage is population initialization. In this stage, an initial population of nPop0 seeds is distributed randomly over the solution space.

- The second stage is reproduction, in which plants produce seeds. The mathematical expressions for this stage are given in Equations (1) and (2). In Equation (1), f(pi) is the fitness value of individual pi, fworst is the worst cost value in the population, fbest is the best cost value in the population, and ε is a constant equal to 1 × 10⁻¹² that prevents division by zero. In Equation (2), Smin is the minimum number of seeds and Smax is the maximum number of seeds, so the number of seeds a plant produces lies between these two bounds.

- The third stage is the determination of new locations for the produced child seeds. Each child seed is placed by adding a random deviation, multiplied by the sigma value, to the position of its parent. The sigma value for the ith iteration is calculated using Equation (3), in which MaxIt is the total number of iterations, n is the rate of change of sigma, and σinitial and σfinal are the initial and final sigma values. Together, σinitial and σfinal first spread the seeds over a large area and then gradually narrow this area.

- The fourth stage is elimination. In this stage, the lowest-cost seeds are retained and the remaining seeds are eliminated.

- The fifth stage is termination. Model training is completed when the MaxIt iteration count is reached.
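The five stages above can be sketched in Python as follows. Equations (1) to (3) are not reproduced in this text, so the sketch assumes the forms from the original IWO formulation: the number of seeds grows linearly from Smin for the worst plant to Smax for the best plant, and σ decays from σinitial to σfinal as ((MaxIt − i)/MaxIt)^n. Rounding the seed count to the nearest integer, the population cap `n_pop_max`, Gaussian dispersal, clipping to the bounds, and all default values are hypothetical choices for illustration, not the paper's settings.

```python
import random


def seed_count(cost, best, worst, s_min, s_max, eps=1e-12):
    # Assumed form of Eqs. (1)-(2): the worst plant gets s_min seeds, the best gets s_max;
    # eps keeps the denominator nonzero when every plant has the same cost
    ratio = (worst - cost) / (worst - best + eps)
    return s_min + round((s_max - s_min) * ratio)


def sigma_at(i, max_it, n, sigma_initial, sigma_final):
    # Assumed form of Eq. (3): nonlinear decay from sigma_initial to sigma_final
    return ((max_it - i) / max_it) ** n * (sigma_initial - sigma_final) + sigma_final


def iwo(cost_fn, dim, lower, upper, n_pop0=10, n_pop_max=25, max_it=200,
        s_min=0, s_max=5, n=2.0, sigma_initial=0.5, sigma_final=0.001, seed=0):
    rng = random.Random(seed)
    # Stage 1: scatter n_pop0 seeds uniformly over the search space
    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_pop0)]
    costs = [cost_fn(p) for p in pop]
    for i in range(max_it):  # Stage 5: stop after max_it iterations
        sigma = sigma_at(i, max_it, n, sigma_initial, sigma_final)
        best, worst = min(costs), max(costs)
        children, child_costs = [], []
        for plant, c in zip(pop, costs):
            # Stage 2: lower-cost plants produce more seeds
            for _ in range(seed_count(c, best, worst, s_min, s_max)):
                # Stage 3: child = parent + sigma * N(0, 1), clipped to the bounds
                child = [min(max(x + sigma * rng.gauss(0.0, 1.0), lower), upper)
                         for x in plant]
                children.append(child)
                child_costs.append(cost_fn(child))
        # Stage 4: keep the n_pop_max lowest-cost individuals (parents and children)
        ranked = sorted(zip(costs + child_costs, pop + children), key=lambda t: t[0])
        ranked = ranked[:n_pop_max]
        costs = [c for c, _ in ranked]
        pop = [p for _, p in ranked]
    return pop[0], costs[0]


if __name__ == "__main__":
    def sphere(v):
        return sum(x * x for x in v)

    best_w, best_cost = iwo(sphere, dim=3, lower=-5.0, upper=5.0)
    print(best_w, best_cost)
```

Because elimination keeps parents alongside their children, the best cost found never increases from one iteration to the next; the decaying σ shifts the search from exploration to local refinement.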

Code 1.

1. The Industrial Equipment Monitoring dataset is given as input to the artificial neural network (ANN).

2. Predictions are obtained from the feedforward pass of the ANN during training.

3. The error value is calculated by comparing the actual and predicted values, and this error is then propagated back through the network layers.

4. The weight values are updated once the backpropagated gradient information has been calculated. Unlike the classical gradient descent method, however, the update is performed by IWO optimization.

5. IWO is an optimization algorithm based on swarm intelligence. It searches for the combination of weight and bias values that minimizes the error.
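Steps 4 and 5 amount to treating every weight and bias of the network as one coordinate of the vector that IWO searches over, with the network's error on the training data as the cost. The following is a minimal sketch under stated assumptions: a fully connected network with tanh hidden layers, a single sigmoid output for the binary failure label, and mean binary cross-entropy as the cost. The paper does not specify these choices here, and the function names `param_count`, `forward` and `cost` are hypothetical. The hidden sizes 10 and 20 mirror Experiment 5 in the experiments table, while the input size of 4 and the two sample rows are placeholders.

```python
import math


def param_count(layer_sizes):
    """Total number of weights and biases, i.e. the dimension of the IWO search space."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def forward(flat, layer_sizes, x):
    """Feedforward pass that reads each layer's weights and biases from one flat vector."""
    pos, a = 0, list(x)
    last = len(layer_sizes) - 2
    for k, (n_in, n_out) in enumerate(zip(layer_sizes, layer_sizes[1:])):
        w = flat[pos:pos + n_in * n_out]  # row-major n_in x n_out weight matrix
        pos += n_in * n_out
        b = flat[pos:pos + n_out]
        pos += n_out
        z = [sum(a[i] * w[i * n_out + j] for i in range(n_in)) + b[j] for j in range(n_out)]
        if k == last:
            # numerically stable sigmoid for the output unit
            a = [0.5 * (1.0 + math.tanh(v / 2.0)) for v in z]
        else:
            a = [math.tanh(v) for v in z]
    return a[0]


def cost(flat, layer_sizes, X, y, eps=1e-12):
    """Mean binary cross-entropy; IWO would minimize this value directly."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = forward(flat, layer_sizes, xi)
        total -= yi * math.log(p + eps) + (1 - yi) * math.log(1.0 - p + eps)
    return total / len(X)


# Hypothetical example: 4 inputs, hidden layers of 10 and 20 neurons, 1 output
sizes = [4, 10, 20, 1]
dim = param_count(sizes)  # 4*10+10 + 10*20+20 + 20*1+1 = 291
X, y = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.9]], [0, 1]
print(dim, cost([0.0] * dim, sizes, X, y))
```

Passing `lambda v: cost(v, sizes, X, y)` as the cost function of an IWO routine lets it train the network without gradients; the deeper architectures in the experiments table simply enlarge `dim`, and with it the search space.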

4. Julia Set

5. Results and Discussion

6. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| | Number of Layers | Number of Neurons Used in Each Layer |
|---|---|---|
| Experiment 1 | 1 | 5 |
| Experiment 2 | 1 | 20 |
| Experiment 3 | 1 | 40 |
| Experiment 4 | 1 | 60 |
| Experiment 5 | 2 | 10, 20 |
| Experiment 6 | 2 | 20, 40 |
| Experiment 7 | 3 | 10, 30, 50 |
| Experiment 8 | 3 | 10, 20, 10 |
| Experiment 9 | 3 | 20, 40, 20 |
| Experiment 10 | 4 | 20, 40, 60, 80 |
| Experiment 11 | 5 | 10, 20, 30, 40, 50 |

| | TP | FN | FP | TN | Accuracy | Weighted Precision | Weighted Recall | Weighted F1 Score |
|---|---|---|---|---|---|---|---|---|
| Training Dataset | 4802 | 10 | 92 | 373 | 0.9807 | 0.9806 | 0.9807 | 0.9798 |
| Testing Dataset | 2087 | 6 | 36 | 132 | 0.9814 | 0.9811 | 0.9814 | 0.9806 |
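The weighted scores in these tables can be recomputed from the four counts in each row. In the minimal sketch below, each row's counts are placed, in the order listed, into a 2 × 2 matrix with rows as the actual class and columns as the predicted class; this layout is an assumption, chosen because it reproduces the reported weighted precision and weighted F1. The sketch also shows why accuracy and weighted recall coincide in every row: weighting each class's recall by its support reduces the sum to correct predictions divided by all predictions. The function names are hypothetical.

```python
def per_class_scores(cm):
    """Per-class (precision, recall, F1, support); cm[i][j] counts actual class i predicted as j."""
    k = len(cm)
    scores = []
    for i in range(k):
        support = sum(cm[i])                         # samples whose actual class is i
        predicted = sum(cm[r][i] for r in range(k))  # samples predicted as class i
        precision = cm[i][i] / predicted
        recall = cm[i][i] / support
        f1 = 2 * precision * recall / (precision + recall)
        scores.append((precision, recall, f1, support))
    return scores


def weighted_report(cm):
    """Accuracy plus support-weighted precision, recall and F1."""
    total = sum(sum(row) for row in cm)
    scores = per_class_scores(cm)

    def weighted(idx):
        # weight each class's score by its share of the samples
        return sum(s[idx] * s[3] for s in scores) / total

    accuracy = sum(cm[i][i] for i in range(len(cm))) / total
    return {"accuracy": accuracy, "precision": weighted(0),
            "recall": weighted(1), "f1": weighted(2)}


# First training row: counts 4802, 10, 92, 373 in the order listed
train = weighted_report([[4802, 10], [92, 373]])
print({name: round(value, 4) for name, value in train.items()})
# {'accuracy': 0.9807, 'precision': 0.9806, 'recall': 0.9807, 'f1': 0.9798}
```

The same function applied to the testing row, `[[2087, 6], [36, 132]]`, gives 0.9814, 0.9811, 0.9814 and 0.9806 after rounding.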

| | TP | FN | FP | TN | Accuracy | Weighted Precision | Weighted Recall | Weighted F1 Score |
|---|---|---|---|---|---|---|---|---|
| Training Dataset | 4806 | 6 | 72 | 393 | 0.9852 | 0.9852 | 0.9852 | 0.9847 |
| Testing Dataset | 2083 | 10 | 25 | 143 | 0.9845 | 0.9842 | 0.9845 | 0.9842 |

| 2 − 2j | TP | FN | FP | TN | Accuracy | Weighted Precision | Weighted Recall | Weighted F1 Score |
|---|---|---|---|---|---|---|---|---|
| Training Dataset | 4801 | 11 | 70 | 395 | 0.9847 | 0.9845 | 0.9847 | 0.9842 |
| Testing Dataset | 2083 | 10 | 21 | 147 | 0.9863 | 0.9860 | 0.9863 | 0.9861 |

| 2 − 2j | TP | FN | FP | TN | Accuracy | Weighted Precision | Weighted Recall | Weighted F1 Score |
|---|---|---|---|---|---|---|---|---|
| Training Dataset | 7067 | 4 | 64 | 365 | 0.9909 | 0.9909 | 0.9909 | 0.9906 |
| Testing Dataset | 16,468 | 64 | 223 | 745 | 0.9836 | 0.9830 | 0.9836 | 0.9829 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Akalın, F. Failure Detection with IWO-Based ANN Algorithm Initialized Using Fractal Origin Weights. Electronics 2025, 14, 3403. https://doi.org/10.3390/electronics14173403
