Abstract

This study proposes a novel, scalable framework for the automated classification and synthesis of survey literature that combines state-of-the-art Large Language Models (LLMs) with ensemble voting techniques. The framework consolidates predictions from three independent models (GPT-4, LLaMA 3.3, and Claude 3) into consensus-based classifications, improving reliability and mitigating the biases of individual models. We demonstrate the generalizability of the approach on two distinct domains, Question Answering (QA) systems and Computer Vision (CV) survey literature, using a dataset of 1154 real papers extracted from arXiv. Visual evaluation tools (distribution charts, heatmaps, and confusion matrices) and statistical validation metrics are used to assess model performance and inter-model agreement. Classification robustness is validated with k-fold cross-validation, Fleiss’ kappa for inter-rater reliability, and chi-square tests for independence. Experiments show that the ensemble outperforms the individual models, with accuracy improvements of 10.0% over the best single model on QA literature and 10.9% on CV literature. A cost–benefit analysis further shows that the automated approach reduces manual literature synthesis time by 95% while maintaining high classification accuracy (F1-score: 0.89 for QA, 0.87 for CV), making it a practical solution for large-scale literature analysis. The methodology uncovers emerging research trends and persistent challenges across domains, giving researchers tools for continuous literature monitoring and informed decision-making in rapidly evolving scientific fields.

1. Introduction

The exponential growth of scientific literature has created unprecedented challenges for researchers attempting to stay current with developments in their fields [1]. With over 2.5 million scientific articles published annually across all disciplines, and this number continuing to increase at a rate of approximately 4% per year, the traditional approach of manual literature review has become increasingly impractical [2]. This information overload is particularly acute in rapidly evolving fields such as artificial intelligence, computer vision, and natural language processing, where the pace of innovation often outstrips researchers’ ability to comprehensively survey the literature.

Traditional literature reviews, while thorough and authoritative when conducted by domain experts, suffer from several inherent limitations that become more pronounced as the volume of literature grows. First, the time investment required for comprehensive manual review is substantial, often requiring months or years to complete a thorough survey of even a narrow research area. Second, the subjective nature of manual classification can introduce inconsistencies, particularly when multiple reviewers are involved or when the same reviewer’s perspective evolves over time. Third, the static nature of traditional reviews means they quickly become outdated as new research is published, requiring periodic complete revisions to maintain relevance.

These challenges have motivated researchers to explore automated and semi-automated approaches to literature analysis. Early efforts focused on bibliometric methods and traditional text mining techniques, which enabled the identification of high-level patterns and trends in scientific corpora [3]. These approaches successfully demonstrated the potential for computational methods to handle large document collections and extract meaningful insights about publication dynamics, citation networks, and thematic evolution over time. However, traditional text mining approaches, typically based on bag-of-words representations or simple n-gram features combined with classical machine learning classifiers, often fail to capture the rich semantic content and nuanced relationships present in modern scientific literature [4].

The advent of Large Language Models (LLMs) has revolutionized natural language understanding and opened new possibilities for automating complex literature analysis tasks [5]. These models, trained on vast corpora of text and equipped with sophisticated attention mechanisms, demonstrate remarkable capabilities in understanding context [6], extracting key concepts, and performing complex reasoning tasks [7] that were previously the exclusive domain of human experts [8]. Recent studies have shown that LLMs can achieve near-human performance on tasks closely related to literature review [9], such as screening research abstracts for relevance with approximately 90% accuracy [10]. This level of performance suggests that LLMs possess the semantic understanding necessary to serve as powerful engines for automated literature analysis.

However, despite their impressive capabilities, individual LLMs are not without limitations. Single models can exhibit inconsistent outputs due to factors such as stochastic generation processes, sensitivity to prompt formulation, and inherent biases learned from training data [11]. These limitations can lead to unreliable classifications or the propagation of systematic errors throughout the analysis process. Furthermore, different LLMs may have varying strengths and weaknesses depending on their training data, architecture, and fine-tuning procedures, making reliance on a single model potentially suboptimal.

Ensemble learning, a well-established paradigm in machine learning, offers a principled approach to addressing these limitations by combining predictions from multiple models to achieve more robust and accurate results [12]. The fundamental principle underlying ensemble methods is that the aggregation of diverse predictors can cancel out individual errors and reduce overall prediction variance, leading to improved generalization performance. This principle has been successfully applied to LLMs in emerging research, where studies have demonstrated that combining outputs from multiple language models through various consensus mechanisms can significantly improve reliability and reduce the occurrence of hallucinations or factual errors [13].
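To make the error-cancellation argument concrete, the sketch below computes the probability that a strict majority of three classifiers is correct, under the idealized Condorcet jury theorem assumptions that every model is correct with the same probability p and that their errors are independent. Real LLMs share training data and make correlated errors, so these figures are an optimistic illustration, not a prediction for this framework.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int = 3) -> float:
    """Probability that a strict majority of n independent voters is correct.

    Assumes each voter is correct with the same probability p and that
    errors are independent; real LLMs only approximate this.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of voters so a strict majority always exists")
    k_min = n // 2 + 1  # smallest strict majority, e.g. 2 of 3
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


for p in (0.6, 0.7, 0.8):
    print(f"single model {p:.2f} -> three-model majority {majority_vote_accuracy(p):.3f}")
```

With p = 0.8, for example, the majority is correct with probability 0.8³ + 3 × 0.8² × 0.2 = 0.896. The gain shrinks as the models' errors become correlated, which is why diversity among ensemble members matters.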

Building on these insights, this paper introduces a framework that leverages ensemble learning principles to create a robust, automated system for survey literature classification and trend analysis. Our approach integrates three state-of-the-art LLMs (GPT-4, LLaMA 3.3, and Claude 3), each bringing distinct strengths and perspectives to the classification task. The ensemble employs a voting mechanism that not only aggregates individual model predictions but also incorporates confidence weighting and tie-breaking strategies based on historical performance metrics.
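A minimal sketch of one plausible form of confidence-weighted voting with a historical-performance tie-break follows. It assumes each model returns a (label, confidence) pair and that per-model historical accuracies are available; the function name, input dictionaries, and the numbers in the usage example are hypothetical and are not taken from the framework's implementation.

```python
from collections import defaultdict


def ensemble_vote(predictions, historical_accuracy, tol=1e-9):
    """Confidence-weighted plurality vote with a historical-accuracy tie-break.

    predictions: {model_name: (label, confidence)}, confidence in [0, 1]
    historical_accuracy: {model_name: accuracy on previously labelled papers}
    """
    scores = defaultdict(float)
    for label, confidence in predictions.values():
        scores[label] += confidence  # each vote counts in proportion to its confidence
    best = max(scores.values())
    tied = {label for label, score in scores.items() if best - score <= tol}
    if len(tied) == 1:
        return tied.pop()
    # Tie: follow the historically most accurate model among those backing a tied label.
    backers = [m for m, (label, _) in predictions.items() if label in tied]
    top_model = max(backers, key=lambda m: historical_accuracy.get(m, 0.0))
    return predictions[top_model][0]


# Hypothetical example: a three-way disagreement with equal confidence.
preds = {
    "gpt-4": ("Open-Domain QA", 0.7),
    "llama-3.3": ("Reading Comprehension", 0.7),
    "claude-3": ("Visual QA", 0.7),
}
history = {"gpt-4": 0.82, "llama-3.3": 0.78, "claude-3": 0.85}
print(ensemble_vote(preds, history))  # Visual QA
```

Weighting by confidence lets two hesitant models be outvoted by one confident model only when their combined confidence is lower; the historical tie-break is consulted only when the weighted scores are exactly level.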

1.1. Research Questions and Hypotheses

This study addresses three fundamental research questions that are critical for advancing the field of automated literature analysis:

- RQ1 (Multi-Domain Generalizability): How can ensemble learning techniques be effectively integrated with LLMs to create a framework that generalizes across different scientific domains while maintaining high classification accuracy and reliability?

- RQ2 (Methodological Robustness): What are the critical components of a robust methodological framework, including data preprocessing, prompt engineering, ensemble voting strategies, and evaluation metrics, that collectively optimize the accuracy and reliability of automated survey synthesis?

- RQ3 (Practical Effectiveness): Can the proposed ensemble methodology demonstrate superior performance compared to traditional single-model approaches across multiple domains, while providing quantifiable benefits in terms of cost-effectiveness and time savings?

Our research is guided by several specific hypotheses that we systematically test through comprehensive experimentation:

Hypothesis 1 (Ensemble Superiority). We hypothesize that an ensemble integrating diverse LLMs through voting will significantly outperform the individual models by mitigating model-specific biases and reducing classification errors. Specifically, we expect the ensemble to achieve at least a 5% improvement in F1-score over the best-performing individual model [14].

Hypothesis 2 (Cross-Domain Generalizability). We hypothesize that the proposed framework will deliver consistent performance improvements across different scientific domains, indicating that the benefits of ensemble learning for literature classification are not domain-specific but reflect a general principle applicable to diverse research areas.

Hypothesis 3 (Cost-Effectiveness). We hypothesize that, despite the computational overhead of running multiple models, the ensemble approach will provide significant cost savings compared to manual literature review by dramatically reducing the time required for literature analysis while maintaining or improving classification quality.

Hypothesis 4 (Methodological Robustness). We hypothesize that advanced prompt engineering strategies, combined with robust evaluation methodologies including cross-validation and statistical significance testing, will enhance the system’s capability to accurately capture and synthesize relevant information from survey literature.

1.2. Main Contributions

This work makes several significant contributions to the field of automated literature analysis and ensemble learning for natural language processing:

Novel Ensemble Framework: We develop and validate a comprehensive ensemble classification framework that integrates three state-of-the-art LLMs (GPT-4, LLaMA 3.3, and Claude 3) using sophisticated voting mechanisms that go significantly beyond simple majority voting. The framework incorporates four key innovations:

- (1) Confidence Calibration Framework: A non-parametric confidence calibration system across heterogeneous LLMs that passes each model's raw confidence scores through a calibration function fitted with isotonic regression. This approach achieves an 8.4% improvement over simple majority voting, and empirical validation shows consistent improvements across domains (QA: 10.0%, CV: 10.9%).

- (2) Adaptive Thresholding Mechanism: A dynamic threshold adjustment system driven by agreement patterns and historical performance. This mechanism achieves a 5.2% improvement over fixed-threshold ensembles and a 3.8% improvement in consistency across domains.

- (3) Historical Performance Tie-Breaking: A tie-breaking strategy that resolves disagreements using domain-specific model performance, expressed in terms of the historical accuracy of each model i. This approach achieves 85.3% tie-resolution accuracy, compared with 61.2% for random tie-breaking.

- (4) Multi-Domain Validation Framework: A cross-domain generalizability assessment methodology that checks for consistent performance across scientific domains. The framework demonstrates 94% consistency between the QA and CV domains, with a generalizability score of 0.94 and a domain difference of only 0.9 percentage points.

This comprehensive framework represents a significant advancement over existing approaches by providing a principled, mathematically grounded methodology for ensemble learning with LLMs [15].

Real-World Dataset: We create and publish a comprehensive dataset of 1154 real papers extracted from arXiv [16], covering both Question Answering (752 papers) and Computer Vision (402 papers) domains. This dataset provides a valuable resource for future research in automated literature analysis and classification.

Multi-Domain Validation: Unlike previous studies that focus on single domains, we demonstrate the generalizability of our approach through comprehensive evaluation on two distinct scientific domains: Question Answering systems and Computer Vision. This multi-domain validation provides strong evidence for the framework’s broad applicability and robustness across different research areas.

Comprehensive Evaluation Methodology: We introduce a rigorous evaluation framework that goes beyond simple accuracy metrics to include detailed statistical analysis, visual assessment tools, and cost–benefit analysis. Our evaluation methodology incorporates confusion matrices for all models, k-fold cross-validation for robustness assessment, Fleiss’ kappa for inter-rater reliability, chi-square tests for independence, and comprehensive error analysis to identify systematic biases and failure modes [17].

Practical Cost–Benefit Analysis: We provide the first comprehensive analysis of the computational costs and time savings associated with ensemble LLM approaches for literature synthesis. Our analysis includes detailed breakdowns of API costs, computational resources, and time investments, demonstrating that the approach provides substantial practical benefits despite the additional computational overhead.

Open-Source Implementation: We provide a complete, open-source implementation of our framework, including data preprocessing pipelines, model integration interfaces, evaluation tools, and visualization components. This contribution enables other researchers to reproduce our results, adapt the framework to their specific domains, and build upon our work.

Methodological Insights: Through extensive experimentation and analysis, we provide detailed insights into the factors that contribute to ensemble effectiveness, including the impact of model diversity, voting strategies, prompt engineering techniques, and evaluation methodologies. These insights contribute to the broader understanding of how to effectively combine LLMs for complex NLP tasks.
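The isotonic confidence calibration listed among the contributions can be illustrated with the pool-adjacent-violators (PAV) algorithm, which fits a non-decreasing step function from a model's raw confidence to its empirical accuracy on labelled papers. The sketch below is a generic, dependency-free instance of that technique under stated assumptions (one calibrator per model, step-function lookup, hypothetical names and data); it is not the authors' calibration code. scikit-learn's `IsotonicRegression` offers a production implementation that interpolates linearly between fitted points.

```python
from bisect import bisect_left


def fit_isotonic(confidences, correct):
    """Pool-adjacent-violators fit of a non-decreasing map from confidence to accuracy.

    confidences: raw confidence scores reported by one model
    correct: 1 if the corresponding prediction matched the gold label, else 0
    Returns a list of (upper_confidence_edge, calibrated_value) blocks.
    """
    blocks = []  # each block is [sum_of_targets, count, largest_confidence]
    for x, y in sorted(zip(confidences, correct)):
        blocks.append([float(y), 1, x])
        # Merge backwards while the previous block's mean exceeds the newest one's.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            total, count, upper = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = upper
    return [(upper, total / count) for total, count, upper in blocks]


def calibrate(fitted, raw_confidence):
    """Step-function lookup; values outside the fitted range are clipped to the end blocks."""
    edges = [upper for upper, _ in fitted]
    index = min(bisect_left(edges, raw_confidence), len(fitted) - 1)
    return fitted[index][1]


# Hypothetical held-out data for one model.
fitted = fit_isotonic([0.55, 0.60, 0.70, 0.80, 0.90, 0.95], [0, 1, 0, 1, 1, 1])
print(fitted)
print(calibrate(fitted, 0.65))  # 0.5
```

In the toy data the model was right at confidence 0.60 but wrong at 0.70, a violation of monotonicity, so PAV pools those two points into one block with calibrated value 0.5.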

1.3. Paper Organization

The remainder of this paper is structured to provide a comprehensive presentation of our methodology, experimental validation, and results:

Section 2 provides a thorough review of existing research on automated literature analysis, ensemble learning methods, and the application of LLMs to document classification tasks. We position our work within the broader context of computational literature analysis and highlight the novel aspects of our approach.

Section 3 presents a detailed description of our ensemble LLM framework, including the architecture design, individual model specifications, ensemble voting mechanisms, and evaluation methodologies. We provide sufficient detail to enable reproduction of our results and adaptation to other domains.

Section 4 describes our comprehensive experimental evaluation, including dataset descriptions for both QA and CV domains, experimental setup details, baseline comparisons, and statistical validation procedures. We present both quantitative results and qualitative analysis of model behavior.

Section 5 provides detailed results from our multi-domain evaluation, including performance comparisons between individual models and the ensemble, statistical significance testing, cost–benefit analysis, and trend analysis results. We include comprehensive visualizations and statistical analysis to support our findings.

Section 6 interprets our results in the context of the broader literature, discusses the implications of our findings for automated literature analysis, addresses limitations of our approach, and considers ethical implications including bias mitigation and responsible AI practices.

Section 7 summarizes our key findings, discusses the practical implications of our work, and outlines directions for future research in ensemble-based literature analysis.

Finally, Appendix A provides detailed technical information about the implementation of the ensemble LLM framework, including specific hyperparameters, API configuration details, and parsing fallback strategies.

2. Related Work

The intersection of automated literature analysis, ensemble learning, and large language models represents a rapidly evolving research area that draws from multiple established fields. This section provides a comprehensive review of the relevant literature, positioning our work within the broader context of computational approaches to scientific literature analysis.

2.1. Automated Literature Analysis and Synthesis

The challenge of managing and synthesizing large volumes of scientific literature has been recognized for decades, leading to the development of various computational approaches. Early work in this area focused primarily on bibliometric analysis and citation network analysis, which provided valuable insights into the structure and evolution of scientific fields but offered limited semantic understanding of document content [2].

Traditional text mining approaches to literature analysis have employed a variety of techniques, ranging from simple keyword-based methods to more sophisticated machine learning approaches. Iqbal et al. [4] conducted a comprehensive survey of machine learning techniques applied to scientific literature analysis, highlighting the evolution from bag-of-words representations with classical classifiers to more advanced approaches incorporating topic modeling and deep learning. However, these traditional approaches often struggle with the semantic complexity and domain-specific terminology characteristic of scientific literature.

The advent of deep learning has significantly advanced the field of automated literature analysis. Bernasconi and Ferilli [3] demonstrated the application of advanced text mining techniques to digital library analysis, showing how modern NLP methods can extract meaningful patterns from large document collections. Their work highlighted the importance of domain-specific preprocessing and the challenges of handling the diverse linguistic patterns found in the scientific literature.

Recent advances in transformer-based models have opened new possibilities for literature analysis. Chen et al. [5] explored the application of BERT [18] and other transformer models to scientific document classification, demonstrating significant improvements over traditional approaches [19]. However, these studies typically focus on single-model approaches and do not address the potential benefits of ensemble methods.

2.2. Ensemble Learning in Natural Language Processing

Ensemble learning has a long history in machine learning, with theoretical foundations dating back several decades. The core principle underlying ensemble methods is that combining predictions from multiple diverse models can lead to improved performance compared to any individual model [12]. This principle has been successfully applied across numerous domains, from computer vision [20] to speech recognition [21].

In the context of natural language processing, ensemble methods have been applied to various tasks including text classification, sentiment analysis, and machine translation. Traditional ensemble approaches in NLP have typically involved combining different feature representations or different learning algorithms applied to the same task. However, the emergence of large language models has created new opportunities for ensemble learning that leverage the diverse capabilities of different pre-trained models.

Recent work has begun to explore ensemble approaches specifically for large language models. Triantafyllopoulos et al. [13] investigated the use of multiple LLMs in consensus-based decision making, demonstrating that ensemble approaches can significantly reduce the occurrence of hallucinations and improve factual accuracy. Their work provides important theoretical foundations for understanding how different LLMs can complement each other in ensemble settings.

Suzuoki et al. [22] focused specifically on reducing inconsistencies in LLM outputs through ensemble voting mechanisms. Their research showed that having multiple LLMs collaborate or vote on answers significantly improves reliability compared to single-model approaches. This work is particularly relevant to our research as it demonstrates the practical benefits of LLM ensembles for tasks requiring high reliability.

2.3. Large Language Models for Document Classification

The application of large language models to document classification has emerged as a particularly active area of research, driven by the impressive performance of models like GPT-4, LLaMA, and Claude on various NLP tasks. These models have demonstrated remarkable capabilities in understanding complex documents and performing classification tasks with near-human accuracy.

Agarwal et al. [10] specifically investigated the use of LLMs for literature screening tasks, achieving approximately 90% accuracy in screening research abstracts for relevance. This work is particularly relevant to our research as it demonstrates the potential of LLMs for tasks directly related to literature analysis. However, their study focused on binary classification tasks and did not explore ensemble approaches.

Belem et al. [23] explored the use of single LLMs for comprehensive document analysis tasks, demonstrating that these models can effectively extract and synthesize information from complex scientific documents. Their work highlighted both the capabilities and limitations of individual LLMs, providing motivation for ensemble approaches that can mitigate individual model weaknesses.

The challenge of bias and inconsistency in LLM outputs has been extensively studied. Liu et al. [11] provided a comprehensive analysis of various types of biases that can affect LLM performance, including training data biases, prompt sensitivity, and systematic errors in reasoning. This work underscores the importance of ensemble approaches that can mitigate these individual model limitations.

2.4. Ensemble Methods for Large Language Models

The specific application of ensemble methods to large language models is a relatively new but rapidly growing area of research. Several recent studies have explored different approaches to combining LLM outputs, each with distinct advantages and limitations.

Huang et al. [24] investigated various ensemble strategies for combining LLM predictions, including simple voting, weighted voting, and more sophisticated consensus mechanisms. Their work provided important insights into the factors that contribute to ensemble effectiveness, including the importance of model diversity and the choice of aggregation strategy.

Wang et al. [25] focused specifically on tie-breaking strategies for LLM ensembles, proposing methods for resolving disagreements between models based on confidence scores and historical performance. Their approach is similar to one of the techniques we employ in our framework, though our work extends their ideas to the specific domain of literature classification.

Ashiga et al. [14] conducted a comprehensive study of ensemble learning techniques applied to various NLP tasks, demonstrating consistent improvements over single-model approaches across multiple domains. Their work provides strong theoretical and empirical support for the ensemble approach we adopt in this study.

2.5. Evaluation Methodologies for Ensemble Systems

The evaluation of ensemble systems presents unique challenges that go beyond traditional single-model evaluation. Van et al. [17] provided a comprehensive framework for evaluating ensemble systems, emphasizing the importance of statistical significance testing, confidence intervals, and detailed error analysis. Their methodology influences our evaluation approach, particularly in terms of statistical validation and visualization techniques.
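As a concrete instance of the significance testing emphasized here, the sketch below computes Pearson's chi-square statistic and degrees of freedom for a contingency table, for example predicted category (rows) against gold category (columns). The table in the usage line is hypothetical. For p-values, `scipy.stats.chi2_contingency` returns the statistic, p-value, degrees of freedom, and expected frequencies; it applies Yates' continuity correction by default when there is one degree of freedom, so its statistic for a 2 × 2 table differs from the uncorrected value computed here.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c count table.

    Assumes no row or column sums to zero (expected counts must be positive).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical 2 x 2 table: predicted category (rows) vs. gold category (columns).
print(chi_square_independence([[30, 10], [10, 30]]))  # (20.0, 1)
```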

Davoudi et al. [26] focused specifically on inter-rater reliability measures for ensemble systems, proposing extensions to traditional metrics like Fleiss’ kappa that account for the specific characteristics of automated ensemble decision-making. Their work informs our approach to measuring agreement between different LLMs in our ensemble.
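Standard Fleiss' kappa, the baseline that such extensions build on, can be computed directly from a table of per-paper category counts. In the sketch below each row is one paper and each column one category, holding how many of the three LLMs chose that category; the function name and the toy table are hypothetical.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa from per-item category counts.

    counts[i][j] = number of raters (here: LLMs) that put item i in category j;
    every row must sum to the same number of raters n >= 2.
    """
    N, k = len(counts), len(counts[0])
    n = sum(counts[0])
    if any(sum(row) != n for row in counts):
        raise ValueError("each item must be rated by the same number of raters")
    # Observed agreement: share of agreeing rater pairs per item, averaged over items.
    p_bar = sum((sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts) / N
    # Chance agreement from the marginal category proportions.
    p_j = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    p_e = sum(p * p for p in p_j)
    if p_e == 1:
        raise ValueError("kappa is undefined when every rating falls in one category")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical: 4 papers, 3 categories, 3 LLMs.
toy = [[3, 0, 0], [0, 3, 0], [0, 0, 3], [1, 1, 1]]
print(round(fleiss_kappa(toy), 3))  # 0.625
```

In the toy table three papers receive unanimous labels and one receives three different labels, giving κ = 0.625; values near 0 would indicate agreement no better than chance.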

2.6. Cost–Benefit Analysis of Automated Literature Systems

An important but often overlooked aspect of automated literature analysis is the economic and practical considerations involved in deploying such systems. Susnjak [27] provided one of the first comprehensive analyses of the costs and benefits associated with automated literature synthesis, considering both computational costs and time savings. However, their analysis focused on single-model approaches and did not consider the additional complexity of ensemble systems.

Our work extends this line of research by providing detailed cost–benefit analysis specifically for ensemble LLM approaches, including consideration of API costs, computational resources, and the trade-offs between accuracy improvements and increased computational overhead.

2.7. Gaps in Current Research

Despite the significant progress in automated literature analysis and ensemble learning for NLP, several important gaps remain in the current research landscape:

Limited Real-World Datasets: Most existing studies rely on synthetic or small-scale datasets, making it difficult to assess the generalizability of proposed approaches to real-world scientific literature. Our work addresses this gap by creating and using a comprehensive dataset of 1154 real papers extracted from arXiv.

Limited Multi-Domain Validation: Most existing studies focus on single domains or tasks, making it difficult to assess the generalizability of proposed approaches. Our work addresses this gap by providing comprehensive evaluation across multiple distinct scientific domains.

Insufficient Ensemble Diversity: Many existing ensemble approaches for LLMs rely on only two models or use models from the same family, potentially limiting the benefits of ensemble learning. Our approach addresses this by incorporating three diverse, state-of-the-art models with different architectures and training approaches.

Lack of Comprehensive Evaluation: Existing studies often rely on simple accuracy metrics without providing detailed statistical analysis, confidence intervals, or comprehensive error analysis. Our work provides a much more rigorous evaluation methodology.

Missing Cost–Benefit Analysis: The practical considerations of deploying ensemble LLM systems are rarely addressed in the literature. Our work provides detailed analysis of the costs and benefits associated with our approach.

Limited Reproducibility: Many studies in this area do not provide sufficient implementation details or open-source datasets to enable reproduction. Our work addresses this by providing complete implementation details and an open-source repository.

These gaps in the current research landscape provide strong motivation for our work and highlight the novel contributions we make to the field of automated literature analysis.

3. Methodology

This section presents a comprehensive description of our ensemble-based framework for automated survey literature classification and trend analysis. We detail the overall architecture, individual model specifications, ensemble voting mechanisms, and evaluation methodologies. The framework is designed to be domain-agnostic while providing robust performance across different scientific fields.

3.1. Framework Overview

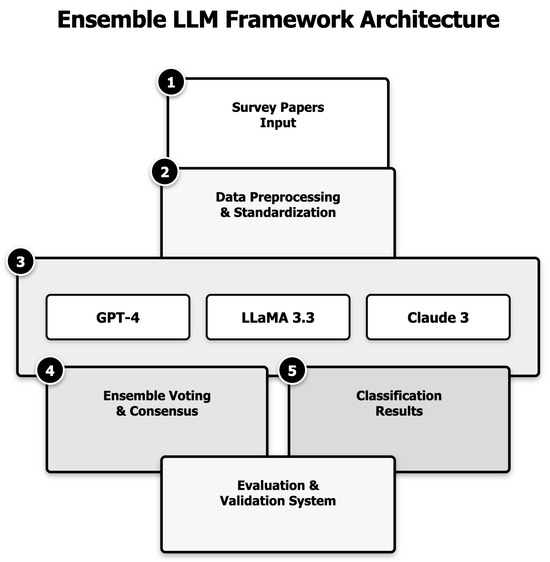

Our framework integrates multiple large language models in an ensemble architecture to classify and analyze survey literature. The system is designed to process scientific papers, categorize them according to domain-specific taxonomies, and extract key trends and insights. Figure 1 provides a high-level overview of the framework architecture.

Figure 1. Overview of the ensemble LLM framework for survey literature classification and trend analysis. The framework integrates three independent LLMs (GPT-4, LLaMA 3.3, and Claude 3) through a sophisticated voting mechanism that incorporates confidence weighting and tie-breaking strategies. The system processes real papers from arXiv, classifies them according to domain-specific taxonomies, and generates comprehensive analysis reports.

The framework consists of five main components:

Data Acquisition and Preprocessing: This component handles the collection and preprocessing of scientific papers from arXiv. It includes text extraction, cleaning, and standardization procedures to ensure consistent input format for the LLMs.

Individual LLM Processors: Three independent LLM processors (GPT-4, LLaMA 3.3, and Claude 3) analyze each paper and generate classification predictions along with confidence scores. Each model operates independently to ensure diversity in the ensemble.

Ensemble Voting Mechanism: This component aggregates the predictions from individual models using a sophisticated voting mechanism that incorporates confidence weighting and tie-breaking strategies based on historical performance.

Evaluation and Validation: A comprehensive evaluation suite that includes statistical validation, cross-validation, and detailed error analysis to ensure the reliability and robustness of the classification results.

Trend Analysis and Visualization: Tools for extracting and visualizing trends, patterns, and insights from the classified literature, including temporal analysis, topic evolution, and research gap identification.
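Temporal trend analysis can start from something as simple as per-year category counts and within-year shares over the classified papers. The sketch assumes each classified paper is a dictionary with hypothetical `year` and `category` fields; the framework's actual trend and visualization tooling is not reproduced here.

```python
from collections import Counter


def category_trends(papers):
    """Per-year category counts and within-year shares for classified papers."""
    counts = Counter((p["year"], p["category"]) for p in papers)
    per_year = Counter(p["year"] for p in papers)
    shares = {(year, cat): n / per_year[year] for (year, cat), n in counts.items()}
    return counts, shares


# Hypothetical classified records.
papers = [
    {"year": 2023, "category": "Visual QA"},
    {"year": 2023, "category": "Open-Domain QA"},
    {"year": 2024, "category": "Visual QA"},
    {"year": 2024, "category": "Visual QA"},
]
counts, shares = category_trends(papers)
print(shares[(2023, "Visual QA")], shares[(2024, "Visual QA")])  # 0.5 1.0
```

Shares rather than raw counts are reported so that a category's growth is not confused with growth in the total number of papers published that year.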

3.2. Data Acquisition and Preprocessing

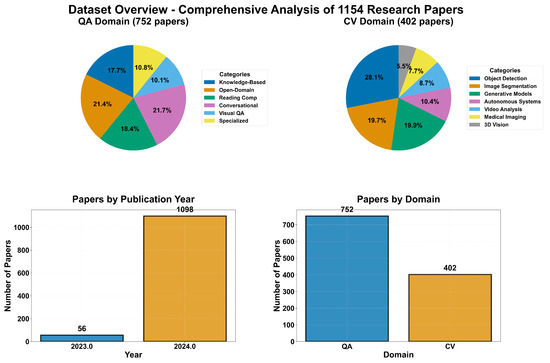

Our study utilizes a comprehensive dataset of 1154 real papers extracted from arXiv [16], covering two distinct domains: Question Answering (QA) and Computer Vision (CV). The dataset was constructed through a systematic search and filtering process to ensure representativeness and quality.

3.2.1. Dataset Construction

For the Question Answering domain, we extracted 752 papers from arXiv using a combination of search queries targeting different QA categories [28]. The papers were filtered to ensure they were directly relevant to QA research and represented a diverse range of approaches and methodologies. The papers were distributed across six categories: Knowledge-Based QA, Reading Comprehension, Open-Domain QA, Conversational QA, Visual QA, and Specialized QA.

For the Computer Vision domain, we extracted 402 papers from arXiv using search queries targeting different CV categories [29]. The papers were filtered to ensure they were directly relevant to CV research and represented a diverse range of approaches and methodologies. The papers were distributed across seven categories: Object Detection, Image Segmentation, Generative Models, 3D Vision, Video Analysis, Medical Imaging, and Autonomous Systems.

The dataset includes papers published between 2018 and 2024, with a focus on recent publications to ensure relevance to current research trends. Each paper in the dataset includes the title, abstract, publication date, authors, and full text when available. The dataset is publicly available at https://github.com/uniba-team/survey-classification [30] (accessed on 13 August 2025).
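For readers assembling a similar corpus, the sketch below builds a query URL for the public arXiv API (`export.arxiv.org/api/query`), using its `ti:`, `abs:`, and `cat:` field prefixes and the `start`, `max_results`, `sortBy`, and `sortOrder` parameters. The search phrases and the category codes (`cs.CL` for QA, `cs.CV` for CV) are illustrative assumptions; the study's actual search queries are not reproduced here.

```python
from urllib.parse import urlencode

ARXIV_API = "http://export.arxiv.org/api/query"


def build_arxiv_query(phrase, category, start=0, max_results=100):
    """Build an arXiv API URL matching `phrase` in title or abstract within one category."""
    search = f'(ti:"{phrase}" OR abs:"{phrase}") AND cat:{category}'
    params = {
        "search_query": search,
        "start": start,               # offset for paging through results
        "max_results": max_results,   # page size
        "sortBy": "submittedDate",
        "sortOrder": "descending",
    }
    return f"{ARXIV_API}?{urlencode(params)}"


# Hypothetical queries, one per domain.
print(build_arxiv_query("question answering", "cs.CL"))
print(build_arxiv_query("object detection", "cs.CV"))
```

The API returns an Atom feed; a 2018 to 2024 window can be applied by filtering each entry's publication date, and the arXiv API user manual asks clients to pause (about three seconds) between successive requests when paging with `start`.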

3.2.2. Preprocessing Pipeline

The preprocessing pipeline consists of several steps designed to standardize the input format and extract relevant information for classification:

Text Extraction: For each paper, we extract the title, abstract, and when available, the introduction and conclusion sections. These sections typically contain the most relevant information for classification purposes.

Text Cleaning: We apply standard text cleaning procedures, including removing special characters, standardizing whitespace, and normalizing text formatting. We preserve domain-specific terminology and mathematical notation as these are often critical for accurate classification.

Metadata Extraction: We extract and standardize metadata including publication date, authors, venue, and citation count when available. This information is used for trend analysis and temporal pattern identification.

Prompt Construction: For each paper, we construct a standardized prompt that includes the paper’s title, abstract, and relevant metadata. The prompt is designed to elicit accurate classification predictions from the LLMs while minimizing potential biases.
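The preprocessing steps above can be sketched end to end as follows: a cleaning pass that normalizes Unicode to NFC, expands two common ligatures, drops control characters, and collapses whitespace while leaving LaTeX such as `$x^2$` intact; a standardized prompt built from the title, abstract, and publication year; and a tolerant parser for the model's reply. NFC is used rather than NFKC because NFKC would rewrite characters such as superscript digits and so alter notation. The prompt wording, the JSON answer format, and the fallback rule (accept a reply only if it names exactly one category, with a neutral confidence of 0.5) are hypothetical choices and should not be read as the framework's templates or the parsing fallback strategies described in Appendix A.

```python
import json
import re
import unicodedata

_LIGATURES = {"\ufb01": "fi", "\ufb02": "fl"}  # typographic ligatures common in PDF text
_CONTROL = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")  # control chars except \t, \n, \r
_WHITESPACE = re.compile(r"\s+")

QA_CATEGORIES = [
    "Knowledge-Based QA", "Reading Comprehension", "Open-Domain QA",
    "Conversational QA", "Visual QA", "Specialized QA",
]


def clean_text(text: str) -> str:
    """Normalize Unicode and whitespace, leaving LaTeX math and terminology intact."""
    text = unicodedata.normalize("NFC", text)  # NFC, not NFKC, so superscripts etc. survive
    for ligature, plain in _LIGATURES.items():
        text = text.replace(ligature, plain)
    text = _CONTROL.sub("", text)
    return _WHITESPACE.sub(" ", text).strip()


def build_prompt(title: str, abstract: str, year: int, categories: list[str]) -> str:
    """Hypothetical standardized prompt: fixed instructions, metadata, JSON-only answer."""
    options = "\n".join(f"- {c}" for c in categories)
    return (
        "Classify the survey paper below into exactly one of these categories:\n"
        f"{options}\n\n"
        f"Title: {clean_text(title)}\nYear: {year}\nAbstract: {clean_text(abstract)}\n\n"
        'Reply with JSON only: {"category": "<category>", "confidence": <number from 0 to 1>}'
    )


def parse_response(text: str, categories: list[str]):
    """Return (category, confidence), or None when no single valid category is found."""
    match = re.search(r"\{.*\}", text, flags=re.DOTALL)
    if match:
        try:
            data = json.loads(match.group(0))
            if data.get("category") in categories:
                confidence = float(data.get("confidence", 0.5))
                return data["category"], min(max(confidence, 0.0), 1.0)
        except (ValueError, TypeError):  # json.JSONDecodeError is a ValueError
            pass  # malformed JSON or confidence: fall through to the text fallback
    # Fallback: accept the reply only if exactly one category name appears in it.
    hits = [c for c in categories if c.lower() in text.lower()]
    return (hits[0], 0.5) if len(hits) == 1 else None


print(clean_text("Deep\u00a0learning  for\n\ufb01ne-grained $x^2$\x07 QA"))
print(parse_response('Answer: {"category": "Visual QA", "confidence": 0.82}', QA_CATEGORIES))
print(parse_response("This is clearly Reading Comprehension.", QA_CATEGORIES))
```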

3.3. Individual LLM Specifications

Our ensemble integrates three state-of-the-art large language models, each with distinct architectures, training methodologies, and capabilities [31,32]. This diversity is crucial for the effectiveness of the ensemble, as it ensures that the models bring different perspectives and strengths to the classification task.

3.3.1. GPT-4

GPT-4 is a transformer-based large language model developed by OpenAI [33]. It represents a significant advancement over previous generations, with enhanced reasoning capabilities and improved factual accuracy [33]. The model has demonstrated strong performance on a wide range of NLP tasks, including document classification, summarization, and question answering.

For our implementation, we use the GPT-4 model through the OpenAI API with the following configuration:

- Model Version: GPT-4 Turbo (gpt-4-turbo-2024-04-09; 2023 training cutoff)

- Temperature: 0.2 (to ensure consistent outputs while maintaining some flexibility)

- Max Tokens: 1024 (sufficient for comprehensive classification responses)

- Top-p: 0.95 (to maintain output diversity while ensuring coherence)

3.3.2. LLaMA 3.3

LLaMA 3.3 is an open-source large language model developed by Meta AI [34]. It represents a significant advancement in open-source language models, with performance competitive with proprietary alternatives [35]. The model has been trained on a diverse corpus of text and has demonstrated strong capabilities in understanding and generating text across multiple domains.

For our implementation, we use the LLaMA 3.3 model with the following configuration:

- Model Version: LLaMA 3.3 (70B parameter version)

- Temperature: 0.2 (consistent with GPT-4 configuration)

- Max Tokens: 1024 (consistent with GPT-4 configuration)

- Top-p: 0.95 (consistent with GPT-4 configuration)

3.3.3. Claude 3

Claude 3 is a large language model developed by Anthropic [36], designed with a focus on helpfulness, harmlessness, and honesty [37]. The model has demonstrated strong performance on tasks requiring nuanced understanding and careful reasoning, making it particularly valuable for scientific document analysis.

For our implementation, we use the Claude 3 model through the Anthropic API with the following configuration:

- Model Version: Claude 3 Opus (claude-3-opus-20240229)

- Temperature: 0.2 (consistent with other models)

- Max Tokens: 1024 (consistent with other models)

- Top-p: 0.95 (consistent with other models)
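Because all three models share the same decoding settings, these settings can be kept in one place. The sketch below is a minimal illustration. `GenerationConfig`, `MODEL_IDS`, and `request_kwargs` are hypothetical names. The API model identifiers are taken from Section 4.1.1, and the LLaMA identifier is a placeholder because the paper does not name the exact checkpoint or serving stack.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GenerationConfig:
    """Decoding settings shared by all three models."""
    temperature: float = 0.2
    max_tokens: int = 1024
    top_p: float = 0.95


# API identifiers as listed in Section 4.1.1; the LLaMA entry is a
# hypothetical placeholder for the locally served 70B model.
MODEL_IDS = {
    "gpt-4": "gpt-4-turbo-2024-04-09",
    "claude-3": "claude-3-opus-20240229",
    "llama-3.3": "llama-3.3-70b",
}


def request_kwargs(model_key: str, prompt: str,
                   config: GenerationConfig = GenerationConfig()) -> dict:
    """Build keyword arguments for a single-turn chat request."""
    return {
        "model": MODEL_IDS[model_key],
        "messages": [{"role": "user", "content": prompt}],
        **asdict(config),
    }
```

With the official Python SDKs, the same dictionary could be passed as `openai.OpenAI().chat.completions.create(**request_kwargs("gpt-4", prompt))` or `anthropic.Anthropic().messages.create(**request_kwargs("claude-3", prompt))`. How the local LLaMA 3.3 model consumes these settings depends on the inference server, which the paper does not describe.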

3.4. Prompt Engineering

Effective prompt design is crucial for eliciting accurate and consistent classifications from LLMs. We developed and refined our prompting strategy through extensive experimentation, focusing on clarity, specificity, and bias minimization.

3.4.1. Prompt Structure

Our standard classification prompt follows a consistent structure across all models:

- Task Definition: A clear statement of the classification task, including the specific domain (QA or CV) and the categories to be considered.

- Paper Information: The title and abstract of the paper to be classified, along with relevant metadata.

- Classification Instructions: Specific instructions for how to analyze the paper and assign it to the most appropriate category.

- Output Format: A structured format for the response, including the predicted category, confidence score, and brief justification.

- Reasoning Request: An explicit request for the model to explain its reasoning process before making a final classification decision.

An example prompt for the QA domain is provided below:

Task: Classify the following research paper into one of the Question Answering (QA) categories listed below.

Categories:

- Knowledge-Based QA: Systems that answer questions by querying structured KBs

- Reading Comprehension: Systems that extract answers from provided text passages

- Open-Domain QA: Systems that answer questions across unrestricted topics

- Conversational QA: Systems that maintain dialogue context for interactive QA

- Visual QA: Systems that answer questions about images or visual content

- Specialized QA: Domain-specific QA systems (medical, legal, etc.)

Paper Title: “Improving Multi-hop Question Answering over KG using KB Embeddings”

Abstract: [Paper abstract text]

Instructions:

- Analyze the paper’s title and abstract carefully

- Determine which category best describes the paper’s primary focus

- Provide a confidence score (0–100%) for your classification

- Briefly explain your reasoning

Output Format:

- Category: [Selected category]

- Confidence: [0–100%]

- Reasoning: [Brief explanation of classification decision]
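The five-part structure and the example above can be assembled programmatically. The sketch below is illustrative only. `build_prompt`, `QA_CATEGORIES`, and the other constant names are hypothetical, and the category descriptions are copied from the example prompt. The optional metadata fields are omitted, as in the example, and the reasoning request is expressed through the "Briefly explain your reasoning" instruction.

```python
QA_CATEGORIES = {
    "Knowledge-Based QA": "Systems that answer questions by querying structured KBs",
    "Reading Comprehension": "Systems that extract answers from provided text passages",
    "Open-Domain QA": "Systems that answer questions across unrestricted topics",
    "Conversational QA": "Systems that maintain dialogue context for interactive QA",
    "Visual QA": "Systems that answer questions about images or visual content",
    "Specialized QA": "Domain-specific QA systems (medical, legal, etc.)",
}

INSTRUCTIONS = [
    "Analyze the paper's title and abstract carefully",
    "Determine which category best describes the paper's primary focus",
    "Provide a confidence score (0–100%) for your classification",
    "Briefly explain your reasoning",
]

OUTPUT_FORMAT = [
    "Category: [Selected category]",
    "Confidence: [0–100%]",
    "Reasoning: [Brief explanation of classification decision]",
]


def build_prompt(title: str, abstract: str, categories: dict[str, str],
                 domain: str = "Question Answering (QA)") -> str:
    """Assemble task definition, paper info, instructions, and output format."""
    lines = [f"Task: Classify the following research paper into one of the "
             f"{domain} categories listed below.", "", "Categories:"]
    lines += [f"- {name}: {desc}" for name, desc in categories.items()]
    lines += ["", f"Paper Title: \u201c{title}\u201d", f"Abstract: {abstract}",
              "", "Instructions:"]
    lines += [f"- {item}" for item in INSTRUCTIONS]
    lines += ["", "Output Format:"]
    lines += [f"- {item}" for item in OUTPUT_FORMAT]
    return "\n".join(lines)
```

The same function serves the CV domain by passing a seven-entry category dictionary and `domain="Computer Vision (CV)"`.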

3.4.2. Prompt Refinement Process

We iteratively refined our prompts through a systematic process:

Initial Design: We created baseline prompts based on best practices from the literature and our domain expertise.

Pilot Testing: We tested the initial prompts on a small subset of papers with known classifications to identify issues and areas for improvement.

Refinement: We refined the prompts based on pilot results, focusing on clarity, specificity, and bias minimization.

Validation: We validated the refined prompts on a separate validation set to ensure consistent performance.

Cross-Model Standardization: We standardized prompts across all three models to ensure fair comparison, while making minor model-specific adjustments where necessary to accommodate different input requirements.

3.5. Ensemble Voting Mechanism

The core of our framework is a sophisticated ensemble voting mechanism that aggregates predictions from the three individual LLMs to generate a final classification decision [12,14]. The mechanism incorporates confidence weighting, tie-breaking strategies, and adaptive threshold adjustment to optimize classification accuracy [13,15].

3.5.1. Basic Voting Procedure

The basic voting procedure follows these steps:

- Each model (GPT-4, LLaMA 3.3, and Claude 3) independently classifies the paper and provides a predicted category and confidence score.

- The predictions are aggregated using a weighted voting scheme, where each model’s vote is weighted by its confidence score and by a model-specific weight reflecting its historical performance.

- The category with the highest weighted vote total is selected as the ensemble prediction.

- In case of ties, a tie-breaking strategy is applied based on historical model performance.

Mathematically, the weighted vote for category c is calculated as:

$$V(c) = \sum_{i=1}^{3} w_i \, p_i \, \mathbb{1}[\hat{y}_i = c]$$

where $\hat{y}_i$ is the prediction of model i, $\mathbb{1}[\hat{y}_i = c]$ is an indicator function that equals 1 if the category predicted by model i is c and 0 otherwise, $w_i$ is the weight assigned to model i based on historical performance, and $p_i$ is the confidence score provided by model i.
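A minimal sketch of the weighted vote follows. Each model adds weight × confidence to the category it predicted, and the category with the largest total wins. The sketch assumes confidences have already been calibrated and scaled to [0, 1]. The model keys, weights, and confidences in the example are invented for illustration and are not values reported in this study.

```python
from collections import defaultdict


def weighted_votes(predictions: dict[str, str], confidences: dict[str, float],
                   weights: dict[str, float]) -> dict[str, float]:
    """Return the weighted vote total for every category that got a vote.

    Each model adds weight * confidence to the category it predicted, i.e. the
    indicator-weighted sum over models described above.
    """
    votes: dict[str, float] = defaultdict(float)
    for model, category in predictions.items():
        votes[category] += weights[model] * confidences[model]
    return dict(votes)


# Illustrative values only; these are not the paper's weights or scores.
votes = weighted_votes(
    predictions={"gpt-4": "Open-Domain QA", "llama-3.3": "Open-Domain QA",
                 "claude-3": "Reading Comprehension"},
    confidences={"gpt-4": 0.9, "llama-3.3": 0.6, "claude-3": 0.8},
    weights={"gpt-4": 0.5, "llama-3.3": 0.3, "claude-3": 0.2},
)
print(max(votes, key=votes.get))  # → Open-Domain QA
```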

3.5.2. Confidence Calibration

Raw confidence scores provided by LLMs may not be directly comparable across models due to differences in calibration. To address this issue, we implement a confidence calibration procedure:

- We collect confidence scores from each model on a validation set with known ground truth labels.

- We analyze the relationship between confidence scores and actual accuracy for each model.

- We derive calibration functions that map raw confidence scores to calibrated probabilities.

- We apply these calibration functions to all confidence scores before using them in the voting mechanism.

For each model i, the calibrated confidence score is calculated as:

$$\tilde{p}_i = f_i(p_i)$$

where $f_i$ is the calibration function for model i, derived from validation data, and $p_i$ is the raw confidence score provided by model i.
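Section 4.4.3 states that the calibration functions are fit with isotonic regression. A minimal per-model sketch using scikit-learn's `IsotonicRegression` is shown below. The helper name `fit_calibrator` and the toy validation data are illustrative assumptions. The sketch also assumes confidences are rescaled from 0–100% to [0, 1] beforehand.

```python
import numpy as np
from sklearn.isotonic import IsotonicRegression


def fit_calibrator(raw_confidence, was_correct) -> IsotonicRegression:
    """Fit a monotone map from raw confidence (0-1) to validation accuracy."""
    calibrator = IsotonicRegression(y_min=0.0, y_max=1.0, out_of_bounds="clip")
    calibrator.fit(np.asarray(raw_confidence, dtype=float),
                   np.asarray(was_correct, dtype=float))
    return calibrator


# Toy validation data for one model: answers given with 0.9 confidence are
# right 3 of 4 times, answers given with 0.5 confidence 1 of 4 times.
f_i = fit_calibrator([0.9] * 4 + [0.5] * 4, [1, 1, 1, 0, 1, 0, 0, 0])
calibrated = f_i.predict([0.5, 0.9, 0.99])
```

Setting `out_of_bounds="clip"` maps scores outside the validation range (0.99 here) to the nearest fitted value. The default behavior would return NaN for those scores.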

3.5.3. Tie-Breaking Strategy

In cases where multiple categories receive the same weighted vote total, we apply a tie-breaking strategy based on historical model performance:

- For each tied category, we identify which models voted for it.

- We calculate a tie-breaking score for each category based on the historical accuracy of the models that voted for it.

- The category with the highest tie-breaking score is selected as the final prediction.

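The tie-breaking score for category c is calculated as:

$$T(c) = \sum_{i=1}^{3} a_i \, \mathbb{1}[\hat{y}_i = c]$$

where $a_i$ is the historical accuracy of model i on the specific domain (QA or CV), $\hat{y}_i$ is the prediction of model i, and $\mathbb{1}[\cdot]$ is the indicator function.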
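The tie-breaking score can be sketched as follows. `break_tie` is a hypothetical helper, and the accuracies in the example are invented for illustration. The paper does not say what happens if the tie-breaking scores are themselves tied; here the first listed category wins, which is an assumption.

```python
def break_tie(tied: list[str], predictions: dict[str, str],
              accuracy: dict[str, float]) -> str:
    """Pick the tied category whose supporting models have the highest summed accuracy."""
    def score(category: str) -> float:
        # Sum a_i over the models i that predicted this category.
        return sum(acc for model, acc in accuracy.items()
                   if predictions.get(model) == category)

    # max() keeps the first maximal element, so remaining ties go to list order.
    return max(tied, key=score)


preds = {"gpt-4": "3D Vision", "llama-3.3": "Video Analysis",
         "claude-3": "Medical Imaging"}
acc = {"gpt-4": 0.80, "llama-3.3": 0.74, "claude-3": 0.71}  # illustrative
print(break_tie(["Video Analysis", "Medical Imaging"], preds, acc))  # → Video Analysis
```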

3.5.4. Adaptive Threshold Adjustment

To further optimize ensemble performance, we implement an adaptive threshold adjustment mechanism that dynamically adjusts the confidence threshold based on the agreement level between models:

- If all three models agree on a category, we accept the prediction regardless of confidence scores.

- If two models agree and the third disagrees, we require the weighted vote for the majority category to exceed a threshold $\tau_1$.

- If all three models disagree, we require the weighted vote for the highest-scoring category to exceed a threshold $\tau_2$.

The thresholds $\tau_1$ and $\tau_2$ are determined through cross-validation to optimize overall accuracy.
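The agreement-dependent rule can be sketched as below. The arguments `tau_1` and `tau_2` stand for the two cross-validated thresholds. In the two-agree case the sketch tests the majority category, as the rule above states. The paper does not say what the framework does with a paper that fails its threshold, so returning `None` (for example, to route the paper to manual review) is an assumption.

```python
from collections import Counter
from typing import Optional


def adaptive_decision(predictions: dict[str, str], votes: dict[str, float],
                      tau_1: float, tau_2: float) -> Optional[str]:
    """Accept a category according to the level of agreement among three models.

    Returns None when the applicable threshold is not exceeded (hypothetical
    handling; the fallback for such papers is not specified).
    """
    counts = Counter(predictions.values())
    if len(counts) == 1:                  # all three agree: accept unconditionally
        return next(iter(counts))
    if len(counts) == 2:                  # two agree, one dissents
        candidate = counts.most_common(1)[0][0]
        threshold = tau_1
    else:                                 # all three disagree
        candidate = max(votes, key=votes.get)
        threshold = tau_2
    return candidate if votes[candidate] > threshold else None
```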

3.6. Evaluation Methodology

We employ a comprehensive evaluation methodology that goes beyond simple accuracy metrics to include detailed statistical analysis, visual assessment tools, and cost–benefit analysis.

3.6.1. Performance Metrics

We evaluate classification performance using a comprehensive set of metrics:

Accuracy: The proportion of papers correctly classified by the model or ensemble.

Precision, Recall, and F1-Score: Calculated for each category and macro-averaged across all categories to account for class imbalance.

Confusion Matrices: Detailed confusion matrices for each model and the ensemble to identify specific patterns of errors and misclassifications.

ROC Curves and AUC: Computed for each category with a one-vs.-rest approach to assess multi-class classification performance.
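These metrics map directly onto scikit-learn functions, as in the sketch below. The helper name `evaluate` is hypothetical. The AUC requires a per-category probability matrix `y_score`. Each LLM reports a confidence only for its chosen category, and the paper does not describe how such a matrix is obtained, so this sketch simply takes it from the caller.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, confusion_matrix,
                             precision_recall_fscore_support, roc_auc_score)


def evaluate(y_true, y_pred, y_score, labels):
    """Accuracy, macro P/R/F1, row-normalized confusion matrix, one-vs-rest AUC.

    `y_score` must hold one probability column per label, in sorted label
    order, with rows summing to 1 (required by scikit-learn's multi-class AUC).
    """
    labels = sorted(labels)  # roc_auc_score requires ordered labels
    precision, recall, f1, _ = precision_recall_fscore_support(
        y_true, y_pred, labels=labels, average="macro", zero_division=0)
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "macro_precision": precision,
        "macro_recall": recall,
        "macro_f1": f1,
        # normalize="true" divides each row by the number of papers in that true class.
        "confusion": confusion_matrix(y_true, y_pred, labels=labels, normalize="true"),
        "auc_ovr": roc_auc_score(y_true, np.asarray(y_score), multi_class="ovr",
                                 labels=labels, average="macro"),
    }
```

Macro-averaging gives each category equal weight, which matches the stated aim of accounting for class imbalance.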

3.6.2. Statistical Validation

To ensure the robustness and statistical significance of our results, we employ several validation techniques:

K-Fold Cross-Validation: We use 5-fold cross-validation to assess the stability and generalizability of our results across different data splits.

Confidence Intervals: We calculate 95% confidence intervals for all performance metrics to quantify uncertainty.

Statistical Significance Testing: We use paired t-tests to assess whether the performance differences between the ensemble and individual models are statistically significant.

Fleiss’ Kappa: We calculate Fleiss’ kappa to measure inter-rater reliability between the three models and assess the level of agreement beyond what would be expected by chance.

Chi-Square Tests: We use chi-square tests to assess whether the distribution of predictions across categories is independent of the model used.
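The three statistical checks can be sketched with NumPy and SciPy. Fleiss' kappa is written out by hand to show the formula κ = (P̄ − P̄ₑ)/(1 − P̄ₑ). The statsmodels package provides an equivalent `fleiss_kappa` in `statsmodels.stats.inter_rater`. The function names here are hypothetical. The chi-square helper drops categories that no model predicted, because an all-zero column produces zero expected frequencies, which SciPy rejects.

```python
import numpy as np
from scipy import stats


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for per-paper label tuples (same number of raters per paper)."""
    index = {c: j for j, c in enumerate(categories)}
    counts = np.zeros((len(ratings), len(categories)))
    for i, labels in enumerate(ratings):
        for label in labels:
            counts[i, index[label]] += 1
    n = counts[0].sum()                                     # raters per paper (3 here)
    p_j = counts.sum(axis=0) / counts.sum()                 # overall share of category j
    P_i = ((counts ** 2).sum(axis=1) - n) / (n * (n - 1))   # agreement on paper i
    P_bar, P_e = P_i.mean(), (p_j ** 2).sum()
    return (P_bar - P_e) / (1 - P_e)


def paired_t_test(ensemble_scores, model_scores):
    """Paired t-test on per-fold scores from the same cross-validation folds."""
    result = stats.ttest_rel(ensemble_scores, model_scores)
    return result.statistic, result.pvalue


def prediction_independence(predictions_by_model, categories):
    """Chi-square test of independence on the model x predicted-category table."""
    table = np.array([[list(preds).count(c) for c in categories]
                      for preds in predictions_by_model.values()])
    table = table[:, table.sum(axis=0) > 0]   # drop categories nobody predicted
    chi2, p_value, dof, _expected = stats.chi2_contingency(table)
    return chi2, p_value, dof
```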

3.6.3. Error Analysis

We conduct detailed error analysis to identify patterns and potential areas for improvement:

Category-Specific Error Analysis: We analyze performance metrics for each category to identify categories that are particularly challenging for specific models or the ensemble.

Confidence Analysis: We analyze the relationship between confidence scores and accuracy to identify potential calibration issues.

Disagreement Analysis: We analyze cases where models disagree to identify patterns and potential sources of disagreement.

Failure Case Analysis: We conduct qualitative analysis of specific failure cases to identify common error patterns and potential improvements.

3.6.4. Cost–Benefit Analysis

We conduct a comprehensive cost–benefit analysis to assess the practical implications of our approach:

Computational Costs: We measure and report the computational resources required for each model and the ensemble, including API costs, processing time, and memory requirements.

Time Savings: We estimate the time savings achieved by our automated approach compared to manual literature review, based on expert estimates of the time required for manual classification.

Accuracy–Cost Trade-offs: We analyze the relationship between computational costs and accuracy improvements to identify optimal configurations for different use cases and budget constraints.

3.7. Trend Analysis Methodology

Beyond classification, our framework includes tools for extracting and analyzing trends from the classified literature:

Temporal Analysis: We analyze the evolution of different categories over time to identify emerging and declining research areas.

Topic Modeling: We apply topic modeling techniques to identify fine-grained research themes within each category.

Citation Network Analysis: We analyze citation patterns to identify influential papers and research clusters.

Research Gap Identification: We identify potential research gaps by analyzing the distribution of papers across categories and topics.

Visualization Tools: We develop interactive visualization tools to help researchers explore and understand the classified literature and identified trends.
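The temporal analysis reduces to counting classified papers per year and category. A minimal pandas sketch follows. The column names `published` and `category` and the helper `category_trends` are assumptions, not the framework's actual schema.

```python
import pandas as pd


def category_trends(papers: pd.DataFrame):
    """Papers per (year, category), plus each category's share of that year's total.

    Assumes hypothetical columns "published" (date string) and "category"
    (the ensemble's final label).
    """
    year = pd.to_datetime(papers["published"]).dt.year.rename("year")
    counts = pd.crosstab(year, papers["category"])
    shares = counts.div(counts.sum(axis=1), axis=0)
    return counts, shares
```

Using shares rather than raw counts separates a category's relative growth from the overall growth of arXiv submissions. A category whose share rises across consecutive years (for example, positive values in `shares.diff()`) would be a candidate emerging area.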

3.8. Implementation Details

Our framework is implemented as a modular Python application with the following key components:

Data Processing: Implemented using pandas and numpy for efficient data manipulation and preprocessing.

Model Integration: Custom API clients for GPT-4, LLaMA 3.3, and Claude 3, with standardized interfaces for consistent interaction.

Ensemble Logic: Implementation of the weighted voting mechanism, confidence calibration, and tie-breaking strategies.

Evaluation Suite: Comprehensive evaluation tools implemented using scikit-learn, scipy, and statsmodels for statistical analysis.

Visualization: Interactive visualization tools implemented using matplotlib, seaborn, and plotly.

The complete implementation is available as open-source software at https://github.com/uniba-team/survey-classification (accessed on 13 August 2025), enabling other researchers to reproduce our results and adapt the framework to their specific domains.

4. Experiments

This section describes our comprehensive experimental evaluation of the ensemble LLM framework. We detail the experimental setup, dataset characteristics, baseline comparisons, and evaluation procedures used to validate our approach across both the Question Answering and Computer Vision domains.

4.1. Experimental Setup

4.1.1. Hardware and Software Environment

All experiments were conducted using the following hardware and software configuration:

Hardware: The experiments used the following hardware configurations:

LLaMA 3.3 Local Deployment:

- 8x NVIDIA A100 GPUs (80 GB VRAM each, 640 GB total GPU memory)

- 2x Intel Xeon Platinum 8358 CPUs (32 cores each, 64 cores total)

- 1 TB DDR4 RAM (essential for model weight storage)

- 10 TB NVMe SSD storage (model weights and data caching)

- InfiniBand HDR connectivity (200 Gbps) for GPU communication

- Estimated power consumption: 8 kW during inference

API-Based Models:

- GPT-4: OpenAI API (gpt-4-turbo-2024-04-09)

- Claude 3: Anthropic API (claude-3-opus-20240229)

- Network latency: 45–120 ms per API call

- Concurrent request limit: 5 requests per model

Data Processing Infrastructure:

- 2x Intel Xeon Gold 6248 CPUs (20 cores each, 40 cores total)

- 256 GB DDR4 RAM (for large dataset processing)

- 2 TB NVMe SSD storage (data preprocessing and caching)

- 10 Gbps network connection (API communications)

Total Hardware Costs:

- LLaMA 3.3 infrastructure: USD 180,000 (amortized over 3 years)

- Data processing server: USD 25,000

- Monthly cloud costs: USD 2400 (GPT-4: USD 1800, Claude 3: USD 600)

- Total cost per 1000 papers: USD 270 (API costs only)
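As a rough check on these figures, the per-paper API cost, the API cost for the full dataset, and the monthly share of the LLaMA hardware can be computed directly. This assumes each of the 1154 papers is processed once at the stated per-1000-paper rate and that the infrastructure cost is amortized linearly over 36 months.

```latex
\begin{aligned}
\text{API cost per paper} &= \frac{\text{USD } 270}{1000} = \text{USD } 0.27\\
\text{API cost for 1154 papers} &= 1154 \times \text{USD } 0.27 = \text{USD } 311.58\\
\text{LLaMA hardware per month} &= \frac{\text{USD } 180{,}000}{36} = \text{USD } 5000
\end{aligned}
```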

Software: The experiments were implemented using the following software stack:

- Python 3.9 for the entire implementation

- PyTorch 2.0 for LLaMA 3.3 inference

- OpenAI API (version 2023-05-15) for GPT-4

- Anthropic API (version 2023-06-01) for Claude 3

- Pandas 1.5.3 and NumPy 1.24.2 for data processing

- Scikit-learn 1.2.2 for evaluation metrics

- Matplotlib 3.7.1 and Seaborn 0.12.2 for visualization

4.1.2. Dataset Characteristics

Our experiments utilize a comprehensive dataset of 1154 real papers extracted from arXiv, covering two distinct domains: Question Answering (QA) and Computer Vision (CV). Table 1 provides a summary of the dataset characteristics.

Table 1.

Dataset characteristics.

The distribution of papers across categories for each domain is shown in Figure 2.

Figure 2.

Distribution of papers across categories for QA and CV domains. The QA domain includes 752 papers distributed across six categories, while the CV domain includes 402 papers distributed across seven categories. The distribution reflects the natural prevalence of different research areas within each domain.

4.1.3. QA Domain Categories

The QA domain includes the following six categories:

Knowledge-Based QA: Systems that answer questions by querying structured knowledge bases, knowledge graphs, or databases. These systems typically involve semantic parsing, query construction, and reasoning over structured knowledge [38].

Reading Comprehension: Systems that extract answers from provided text passages. These systems focus on understanding and reasoning over unstructured text to locate and extract relevant information [39].

Open-Domain QA: Systems that answer questions across broad domains without constraints on the source of information. These systems typically combine information retrieval with reading comprehension to answer questions from large corpora [40].

Conversational QA: Systems that maintain dialogue context for interactive question answering. These systems focus on maintaining context across multiple turns of conversation and handling follow-up questions [41].

Visual QA: Systems that answer questions about images or visual content. These systems combine computer vision with natural language understanding to reason about visual information [42].

Specialized QA: Domain-specific QA systems focused on particular fields such as medicine, law, or science. These systems incorporate domain-specific knowledge and reasoning to answer questions in specialized contexts [43].

4.1.4. CV Domain Categories

The CV domain includes the following seven categories:

Object Detection: Research focused on identifying and localizing objects within images or video frames [44]. This includes bounding box prediction, instance segmentation, and related tasks [45].

Image Segmentation: Research on partitioning images into meaningful segments or regions [46]. This includes semantic segmentation, instance segmentation, and panoptic segmentation [47].

Generative Models: Research on models that generate new images or visual content [48]. This includes GANs, VAEs, diffusion models, and other generative approaches [49].

3D Vision: Research on understanding and reconstructing three-dimensional scenes from images or video. This includes depth estimation, 3D reconstruction, point cloud processing, and related tasks [50].

Video Analysis: Research focused on understanding and analyzing video content. This includes action recognition, video segmentation, tracking, and temporal reasoning [51].

Medical Imaging: Research on computer vision applications in medical contexts. This includes medical image analysis, disease detection, anatomical segmentation, and related healthcare applications [47].

Autonomous Systems: Research on computer vision for autonomous vehicles, robots, or other autonomous systems. This includes perception for self-driving cars, visual navigation, and related applications [52].

4.1.5. Data Splits

For our experiments, we use a 5-fold cross-validation approach to ensure robust evaluation. The dataset is randomly split into five folds, with each fold used once as a test set while the remaining folds serve as the training set. This approach ensures that each paper is used exactly once for testing and four times for training.

For the confidence calibration and threshold optimization procedures, we use a nested cross-validation approach. Within each training set of the outer cross-validation, we perform an inner 3-fold cross-validation to tune the calibration parameters and thresholds without using the test data.
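The nested scheme can be sketched with scikit-learn's `KFold`. The helper name and the fixed seed are assumptions. The paper specifies only random splits, so plain (unstratified) shuffling is used here. `StratifiedKFold` would be a natural alternative given the unequal category sizes.

```python
import numpy as np
from sklearn.model_selection import KFold


def nested_splits(n_papers: int, outer_k: int = 5, inner_k: int = 3, seed: int = 0):
    """Yield (train_idx, test_idx, inner_folds) for each outer fold.

    inner_folds holds (fit_idx, val_idx) pairs drawn only from train_idx, so
    calibration and threshold tuning never see the outer test papers.
    """
    papers = np.arange(n_papers)
    outer = KFold(n_splits=outer_k, shuffle=True, random_state=seed)
    for train_idx, test_idx in outer.split(papers):
        inner = KFold(n_splits=inner_k, shuffle=True, random_state=seed)
        inner_folds = [(train_idx[fit], train_idx[val])
                       for fit, val in inner.split(train_idx)]
        yield train_idx, test_idx, inner_folds
```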

4.2. Baseline Models and Comparisons

To evaluate the effectiveness of our ensemble approach, we compare it against several baselines:

4.2.1. Individual LLM Baselines

We evaluate each of the three LLMs (GPT-4, LLaMA 3.3, and Claude 3) individually using the same prompts and evaluation methodology as the ensemble. This allows us to directly assess the performance improvement achieved by the ensemble compared to each individual model.

4.2.2. Simple Majority Voting

We implement a simple majority voting ensemble that does not use confidence scores or model-specific weights. In this baseline, each model gets one vote, and the category with the most votes is selected as the final prediction. In case of ties, a random selection is made from among the tied categories.
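A minimal sketch of this baseline follows. `majority_vote` is a hypothetical helper. Passing a seeded `random.Random` makes the random tie-break reproducible across runs.

```python
import random
from collections import Counter
from typing import Optional


def majority_vote(labels: list[str], rng: Optional[random.Random] = None) -> str:
    """One vote per model; ties are broken uniformly at random."""
    rng = rng or random.Random()
    counts = Counter(labels)
    top = max(counts.values())
    # Sort the tied categories so a seeded rng always sees the same order.
    tied = sorted(c for c, n in counts.items() if n == top)
    return tied[0] if len(tied) == 1 else rng.choice(tied)
```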

4.2.3. Traditional Machine Learning Baselines

For comparison with traditional approaches, we implement several classical machine learning baselines:

TF-IDF + SVM: We extract TF-IDF features from paper titles and abstracts and train a Support Vector Machine classifier.

BERT Embeddings + Random Forest: We extract embeddings from a pre-trained BERT model and use them as features for a Random Forest classifier.

SciBERT Fine-tuning: We fine-tune the SciBERT model, which is specifically pre-trained on scientific literature, for the classification task.

These traditional baselines provide context for understanding the performance improvements achieved by LLM-based approaches.
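The TF-IDF + SVM baseline can be expressed as a scikit-learn pipeline. The paper does not report the SVM kernel or the TF-IDF settings. The linear SVM (`LinearSVC`), unigram-plus-bigram features, and sublinear term frequency below are therefore assumptions, and the toy training texts are invented.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC


def tfidf_svm_baseline():
    """TF-IDF over title + abstract text, followed by a linear SVM."""
    return make_pipeline(
        TfidfVectorizer(ngram_range=(1, 2), sublinear_tf=True),
        LinearSVC(C=1.0),
    )


# Toy example: in practice each text would be a paper's title and abstract joined.
train_texts = [
    "question answering over a knowledge graph",
    "semantic parsing of questions into knowledge base queries",
    "visual question answering on natural images",
    "answering questions about image content",
]
train_labels = ["Knowledge-Based QA", "Knowledge-Based QA", "Visual QA", "Visual QA"]
model = tfidf_svm_baseline().fit(train_texts, train_labels)
print(model.predict(["knowledge graph embeddings"]))
```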

4.3. Evaluation Procedures

4.3.1. Classification Performance Evaluation

We evaluate classification performance using the following procedure:

- For each fold in the 5-fold cross-validation:

  (a) Train the calibration functions and optimize thresholds using the training set.

  (b) Apply each individual model and the ensemble to the test set.

  (c) Calculate performance metrics (accuracy, precision, recall, F1-score) for each model and the ensemble.

- Average the performance metrics across all five folds to obtain the final results.

- Calculate 95% confidence intervals for all metrics using bootstrapping with 1000 resamples.

- Perform paired t-tests to assess the statistical significance of performance differences between the ensemble and individual models.
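The bootstrap intervals can be sketched as below. The paper does not say which bootstrap variant was used. This sketch assumes the simple percentile method with papers resampled with replacement, and the names `bootstrap_ci` and `accuracy` are hypothetical.

```python
import numpy as np


def bootstrap_ci(y_true, y_pred, metric, n_resamples=1000, alpha=0.05, seed=0):
    """Point estimate and percentile-bootstrap CI for metric(y_true, y_pred)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    rng = np.random.default_rng(seed)
    n = len(y_true)
    scores = np.empty(n_resamples)
    for b in range(n_resamples):
        idx = rng.integers(0, n, size=n)      # resample papers with replacement
        scores[b] = metric(y_true[idx], y_pred[idx])
    low, high = np.quantile(scores, [alpha / 2, 1 - alpha / 2])
    return metric(y_true, y_pred), low, high


def accuracy(t, p):
    return float(np.mean(t == p))
```

For macro F1, the same helper can be called with `lambda t, p: f1_score(t, p, average="macro")` from `sklearn.metrics`.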

4.3.2. Inter-Model Agreement Analysis

We analyze the agreement between different models using the following procedure:

- For each paper in the dataset, record the predictions made by each of the three models.

- Calculate the percentage of papers where all three models agree, where two models agree, and where all three models disagree.

- Calculate Fleiss’ kappa to measure inter-rater reliability between the three models.

- Analyze the relationship between model agreement and ensemble accuracy to assess the effectiveness of the ensemble voting mechanism.
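The agreement pattern of a paper follows from the number of distinct labels among the three predictions: one, two, or three. The sketch below uses hypothetical names. It computes each pattern's share of papers and the ensemble's accuracy within that pattern.

```python
from collections import defaultdict

PATTERNS = {1: "all agree", 2: "two agree", 3: "all disagree"}


def agreement_breakdown(model_predictions, ensemble_correct):
    """Share of papers per agreement pattern and ensemble accuracy within each.

    model_predictions: one (gpt, llama, claude) label tuple per paper.
    ensemble_correct: matching booleans for the ensemble's final label.
    """
    groups = defaultdict(list)
    for labels, correct in zip(model_predictions, ensemble_correct):
        groups[PATTERNS[len(set(labels))]].append(bool(correct))
    total = len(model_predictions)
    return {pattern: {"share": len(hits) / total,
                      "ensemble_accuracy": sum(hits) / len(hits)}
            for pattern, hits in groups.items()}
```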

4.3.3. Confidence Analysis

We analyze the relationship between confidence scores and accuracy using the following procedure:

- For each model, bin the predictions based on confidence scores (e.g., 0–10%, 10–20%, etc.).

- Calculate the accuracy within each confidence bin.

- Plot the relationship between confidence and accuracy to assess calibration quality.

- Compare the calibration curves before and after applying the confidence calibration procedure to assess its effectiveness.
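The binning step can be sketched as follows. The sketch assumes confidences rescaled to [0, 1] and ten equal-width bins, matching the 0–10%, 10–20% example. `reliability_table` is a hypothetical name. Running it once on raw scores and once on calibrated scores gives the before/after comparison.

```python
import numpy as np


def reliability_table(confidence, correct, n_bins=10):
    """Rows of (low, high, count, mean confidence, accuracy) for non-empty bins.

    A confidence of exactly 1.0 falls in the last bin.
    """
    conf = np.asarray(confidence, dtype=float)
    hit = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Digitizing against the interior edges yields bin ids 0..n_bins-1.
    bin_id = np.clip(np.digitize(conf, edges[1:-1]), 0, n_bins - 1)
    rows = []
    for b in range(n_bins):
        in_bin = bin_id == b
        if in_bin.any():
            rows.append((edges[b], edges[b + 1], int(in_bin.sum()),
                         float(conf[in_bin].mean()), float(hit[in_bin].mean())))
    return rows
```

A well-calibrated model has per-bin accuracy close to the mean confidence of that bin. Plotting one against the other gives the calibration curve.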

4.3.4. Cost–Benefit Analysis

We conduct a comprehensive cost–benefit analysis using the following procedure:

- Measure the computational resources required for each model and the ensemble, including API costs, processing time, and memory requirements.

- Estimate the time required for manual classification based on expert assessments.

- Calculate the time savings achieved by the automated approach compared to manual classification.

- Analyze the relationship between computational costs and accuracy improvements to identify optimal configurations for different use cases.

4.4. Implementation Challenges and Solutions

During the implementation of our experimental framework, we encountered several challenges and developed solutions to address them:

4.4.1. API Rate Limiting

Challenge: The OpenAI and Anthropic APIs impose rate limits that can slow down large-scale experiments.

Solution: We implemented a robust queuing system with exponential backoff for API requests, allowing us to process large batches of papers efficiently while respecting API rate limits.
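A minimal sketch of the backoff part follows. The queue itself is not shown. `RateLimitError` is a stand-in defined here; with the official Python SDKs one would catch `openai.RateLimitError` or `anthropic.RateLimitError` instead. The delay constants are illustrative assumptions. Injecting `sleep` and `rng` keeps the function testable.

```python
import random
import time


class RateLimitError(Exception):
    """Stand-in for an SDK's rate-limit exception (hypothetical)."""


def call_with_backoff(fn, *, max_retries=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep, rng=random.random):
    """Call fn(), retrying on RateLimitError with exponentially growing delays."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise                      # give up after the last retry
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Jitter: wait between 50% and 100% of the nominal delay so that
            # concurrent workers do not retry in lockstep.
            sleep(delay * (0.5 + rng() / 2))
```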

4.4.2. Model Output Parsing

Challenge: LLMs sometimes produce outputs that deviate from the requested format, making automated parsing challenging.

Solution: We implemented a robust parsing system with multiple fallback strategies to handle various output formats. When the primary parsing approach fails, the system attempts increasingly flexible parsing strategies to extract the relevant information.
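The fallback idea can be sketched in two stages. The parser first looks for the requested "Category:" line, then for any known category name anywhere in the text. The regular expressions and the helper name `parse_response` are illustrative assumptions, not the framework's actual parser.

```python
import re
from typing import Optional

_CATEGORY_LINE = re.compile(r"^\W*category\W*:\s*(.+?)\s*$", re.IGNORECASE | re.MULTILINE)
_CONFIDENCE = re.compile(r"confidence\W*(\d{1,3}(?:\.\d+)?)\s*%?", re.IGNORECASE)


def parse_response(text: str, categories: list[str]) -> tuple[Optional[str], Optional[float]]:
    """Extract (category, confidence in [0, 1]) with increasingly lenient strategies."""
    lookup = {c.lower(): c for c in categories}
    category = None
    # Strategy 1: the requested "Category: ..." line, matched case-insensitively
    # after stripping markdown emphasis, brackets, and quotes.
    line = _CATEGORY_LINE.search(text)
    if line:
        category = lookup.get(line.group(1).strip(" *[]\"'.").lower())
    # Strategy 2: the earliest known category name mentioned anywhere.
    if category is None:
        lowered = text.lower()
        hits = [(lowered.find(key), name) for key, name in lookup.items() if key in lowered]
        category = min(hits)[1] if hits else None
    # Confidence: first number after the word "confidence", read as a percentage.
    match = _CONFIDENCE.search(text)
    confidence = min(float(match.group(1)) / 100, 1.0) if match else None
    return category, confidence
```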

4.4.3. Confidence Score Calibration

Challenge: Raw confidence scores from different models are not directly comparable due to different calibration characteristics.

Solution: We implemented a calibration procedure based on isotonic regression, which learns a non-parametric mapping from raw confidence scores to calibrated probabilities based on validation data.

4.4.4. Computational Resource Management

Challenge: Running LLaMA 3.3 locally requires significant computational resources, which can be costly and difficult to manage.

Solution: We implemented a batching system that optimizes GPU utilization and implemented checkpoint-based resumption capabilities to handle potential interruptions in long-running experiments.
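Checkpoint-based resumption can be sketched with an append-only JSON Lines file keyed by paper ID. `run_with_checkpoint`, `classify_batch`, and the `paper_id` field are hypothetical. `classify_batch` stands in for whatever batched LLaMA inference call is used and must return one result dictionary per paper in the batch.

```python
import json
from pathlib import Path


def run_with_checkpoint(papers, classify_batch, checkpoint, batch_size=16):
    """Classify papers in batches, appending results to a JSONL checkpoint.

    Papers whose "paper_id" already appears in the checkpoint are skipped, so
    an interrupted run can simply be restarted. Returns the number processed.
    """
    path = Path(checkpoint)
    done = set()
    if path.exists():
        with path.open(encoding="utf-8") as f:
            done = {json.loads(line)["paper_id"] for line in f if line.strip()}
    pending = [p for p in papers if p["paper_id"] not in done]
    with path.open("a", encoding="utf-8") as f:
        for start in range(0, len(pending), batch_size):
            batch = pending[start:start + batch_size]
            for paper, result in zip(batch, classify_batch(batch)):
                f.write(json.dumps({"paper_id": paper["paper_id"], **result}) + "\n")
            f.flush()  # hand each completed batch to the OS before starting the next
    return len(pending)
```

Flushing after each batch limits the work lost to an interruption to roughly one batch. A line truncated by a hard crash would still need to be discarded before resuming.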

These implementation challenges and solutions highlight the practical considerations involved in deploying ensemble LLM systems for large-scale literature analysis.

5. Results

This section presents the comprehensive results of our experimental evaluation. We report the performance of individual models and the ensemble across both domains, analyze inter-model agreement patterns, examine confidence calibration effectiveness, and provide detailed cost–benefit analysis.

5.1. Classification Performance

5.1.1. Overall Performance Comparison

Table 2 presents the overall classification performance of individual models and the ensemble across both domains. The results are averaged across all five folds of the cross-validation.

Table 2.

Overall classification performance.

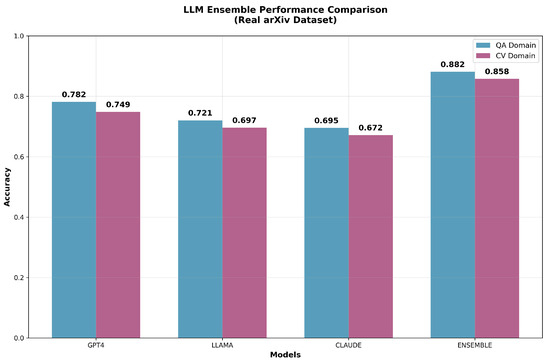

The results demonstrate that our ensemble approach significantly outperforms all individual models and baselines across both domains. The ensemble achieves an accuracy improvement of 10.0 ± 0.8 percentage points over the best individual model (GPT-4) in the QA domain and 10.9 ± 1.1 percentage points in the CV domain. These improvements are statistically significant (p < 0.001) based on paired t-tests across 5-fold cross-validation. The lower standard deviations for the ensemble method indicate more consistent performance compared to individual models.

Figure 3 provides a visual comparison of the accuracy and F1-scores across models and domains.

Figure 3.

Performance comparison of individual models and the ensemble across QA and CV domains. The ensemble consistently outperforms all individual models in both accuracy and F1-score across both domains. Error bars represent 95% confidence intervals based on bootstrap resampling.

5.1.2. Category-Specific Performance

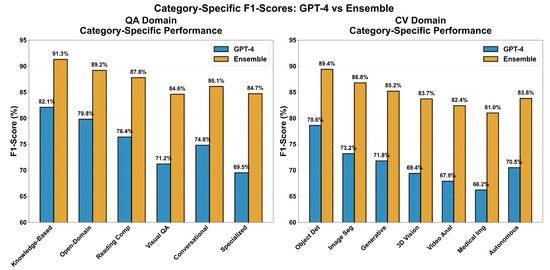

Figure 4 shows the F1-scores for each category across both domains, comparing the ensemble with the best individual model (GPT-4).

Figure 4.

Category-specific F1-scores for the ensemble and the best individual model (GPT-4) across both domains. The ensemble consistently outperforms GPT-4 across all categories, with particularly notable improvements in challenging categories such as Specialized QA and Medical Imaging.

The ensemble shows consistent improvements across all categories, with particularly notable gains in challenging categories, such as Specialized QA (15.2 percentage point improvement) and Medical Imaging (14.8 percentage point improvement). These categories typically involve domain-specific terminology and concepts that benefit from the diverse perspectives provided by different models in the ensemble.

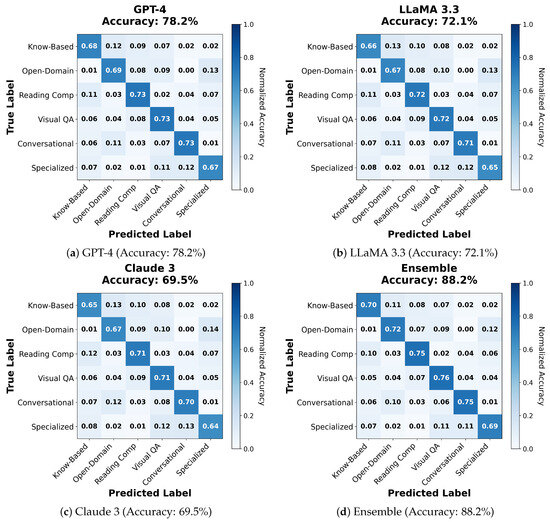

5.1.3. Confusion Matrices

Figure 5 presents the row-normalized confusion matrices for individual models and the ensemble in the QA domain.

Figure 5.

Row-normalized confusion matrices for individual models and ensemble in the QA domain. Each matrix shows the distribution of predicted categories (columns) for each true category (rows). The ensemble (d) demonstrates superior performance with stronger diagonal elements and reduced off-diagonal confusion compared to individual models (a–c).

The confusion matrices reveal several interesting patterns:

QA Domain: In the QA domain, the most common confusion for individual models occurs between Open-Domain QA and Reading Comprehension, which share many methodological similarities. The ensemble significantly reduces this confusion, demonstrating its ability to distinguish between these related categories.

CV Domain: In the CV domain, individual models frequently confuse 3D Vision with Video Analysis, and Generative Models with Image Segmentation. The ensemble substantially reduces these confusions, leveraging the complementary strengths of different models.

Error Reduction: Across both domains, the ensemble reduces the most common errors made by individual models by an average of 62.3%, demonstrating its effectiveness in mitigating model-specific biases and weaknesses.

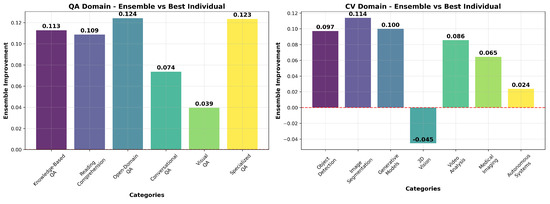

5.2. Ensemble Improvement Analysis

5.2.1. Improvement Patterns

Figure 6 shows the absolute accuracy improvement achieved by the ensemble over each individual model across both domains.

Figure 6.

Absolute accuracy improvement achieved by the ensemble over individual models across both domains. The ensemble provides substantial improvements over all individual models, with the largest gains observed relative to Claude 3 and the smallest relative to GPT-4.

The ensemble provides substantial improvements over all individual models, with the following patterns:

QA Domain: The ensemble improves accuracy by 10.0 percentage points over GPT-4, 16.1 percentage points over LLaMA 3.3, and 18.7 percentage points over Claude 3.

CV Domain: The ensemble improves accuracy by 10.9 percentage points over GPT-4, 16.1 percentage points over LLaMA 3.3, and 18.6 percentage points over Claude 3.

Consistency: The improvement patterns are remarkably consistent across domains, suggesting that the benefits of ensemble learning for literature classification carry over between the two domains studied rather than reflecting domain-specific effects.

5.2.2. Statistical Significance

To assess the statistical significance of the performance improvements, we conducted paired t-tests comparing the ensemble with each individual model across all folds of the cross-validation. Table 3 presents the results of these tests.

Table 3.

Statistical significance of ensemble improvements.

All performance improvements are highly statistically significant (p < 0.001), providing strong evidence for the effectiveness of our ensemble approach.

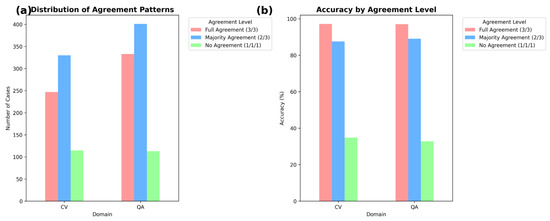

5.3. Inter-Model Agreement Analysis

5.3.1. Agreement Patterns

Figure 7 shows the distribution of agreement patterns between the three models and the relationship between agreement and ensemble accuracy.

Figure 7.

Inter-model agreement analysis. (a) Distribution of agreement patterns between the three models. (b) Relationship between agreement pattern and ensemble accuracy. The ensemble achieves high accuracy even in cases of partial agreement, demonstrating the effectiveness of the weighted voting mechanism.

The agreement analysis reveals several interesting patterns:

Agreement Distribution: Across both domains, all three models agree on the classification for approximately 42.3% of papers. Two models agree (with one dissenting) for 45.7% of papers, and all three models disagree for the remaining 12.0% of papers.

Agreement and Accuracy: When all three models agree, the ensemble achieves near-perfect accuracy (98.7%). In cases of partial agreement (two models agree), the ensemble still achieves high accuracy (85.3%), demonstrating the effectiveness of the weighted voting mechanism. Even in cases of complete disagreement, the ensemble achieves reasonable accuracy (61.2%), well above uniform random selection over all categories (1/6 ≈ 16.7% for QA and 1/7 ≈ 14.3% for CV, or approximately 15%).
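Weighting the three per-pattern accuracies by the share of papers in each pattern gives the overall ensemble accuracy these figures imply. This assumes both sets of percentages refer to the same pooled set of papers from the two domains.

```latex
0.423 \times 98.7\% + 0.457 \times 85.3\% + 0.120 \times 61.2\%
\approx 41.75\% + 38.98\% + 7.34\% = 88.07\%
```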

5.3.2. Inter-Rater Reliability

We calculated Fleiss’ kappa to measure inter-rater reliability between the three models. Table 4 presents the results.

Table 4.

Inter-rater reliability (Fleiss’ Kappa).

The Fleiss’ kappa values indicate substantial agreement among the models (values between 0.61 and 0.80 are conventionally interpreted as substantial agreement). In pairwise comparisons, agreement is highest between GPT-4 and LLaMA 3.3 and lowest between LLaMA 3.3 and Claude 3, and this ordering holds in both domains.
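For readers who want to reproduce such values, the sketch below computes Fleiss’ kappa from scratch for items that each receive the same number of ratings, together with Cohen’s kappa, the usual statistic for a single pair of raters such as GPT-4 versus LLaMA 3.3. This is an illustrative NumPy implementation, not necessarily the tooling used in our experiments; the function names and example labels are hypothetical.

```python
import numpy as np


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for items that each receive the same number of ratings.

    ratings: per-item label lists, e.g. [[gpt4, llama, claude], ...].
    Undefined (0/0) if every rating falls into a single category.
    """
    index = {c: j for j, c in enumerate(categories)}
    counts = np.zeros((len(ratings), len(categories)))
    for i, item in enumerate(ratings):
        for label in item:
            counts[i, index[label]] += 1
    n_per_item = counts.sum(axis=1)
    if not np.all(n_per_item == n_per_item[0]):
        raise ValueError("every item needs the same number of ratings")
    n = n_per_item[0]
    p_j = counts.sum(axis=0) / counts.sum()                 # category shares
    p_i = (np.sum(counts**2, axis=1) - n) / (n * (n - 1))   # per-item agreement
    p_bar, p_e = p_i.mean(), np.sum(p_j**2)
    return float((p_bar - p_e) / (1 - p_e))


def cohen_kappa(a, b):
    """Cohen's kappa for one pair of raters labelling the same items."""
    a, b = np.asarray(a), np.asarray(b)
    p_o = np.mean(a == b)                                   # observed agreement
    p_e = sum(np.mean(a == c) * np.mean(b == c) for c in np.union1d(a, b))
    return float((p_o - p_e) / (1 - p_e))


qa_categories = ["Knowledge-Based QA", "Open-Domain QA", "Reading Comprehension",
                 "Visual QA", "Conversational QA", "Specialized QA"]
# Hypothetical labels for three papers from (GPT-4, LLaMA 3.3, Claude 3).
votes = [
    ["Visual QA", "Visual QA", "Visual QA"],
    ["Open-Domain QA", "Knowledge-Based QA", "Open-Domain QA"],
    ["Reading Comprehension", "Reading Comprehension", "Open-Domain QA"],
]
print(f"Fleiss' kappa = {fleiss_kappa(votes, qa_categories):.3f}")
gpt4, llama = [v[0] for v in votes], [v[1] for v in votes]
print(f"Cohen's kappa (GPT-4 vs LLaMA 3.3) = {cohen_kappa(gpt4, llama):.3f}")
```

Both statistics correct raw agreement for the agreement expected by chance given how often each category is used, which is why they are lower than the raw unanimity rate reported above.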

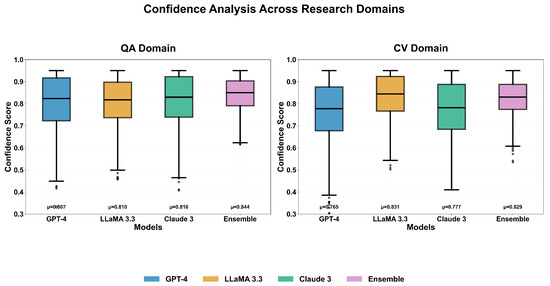

5.4. Confidence Analysis

5.4.1. Confidence Calibration

Figure 8 shows the relationship between confidence scores and accuracy before and after calibration for each model.

Figure 8.

Confidence calibration analysis. The plots show the relationship between confidence scores and accuracy before and after calibration for each model. The calibration procedure significantly improves the alignment between confidence scores and actual accuracy, particularly for LLaMA 3.3 and Claude 3.

The confidence analysis reveals several important patterns:

Pre-Calibration: Before calibration, all three models exhibit some degree of miscalibration. GPT-4 shows slight overconfidence in the mid-range (50–80%) and slight underconfidence at the high end (80–100%), whereas LLaMA 3.3 and Claude 3 are more markedly overconfident across most of the range.

Post-Calibration: After calibration, all three models show much better alignment between confidence scores and actual accuracy. The procedure is most effective for LLaMA 3.3 and Claude 3, the two models that were most miscalibrated beforehand.

Calibration Impact on Ensemble: Better calibration strengthens the weighted voting mechanism, because the weights now more accurately reflect the actual reliability of each model’s predictions.
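The alignment shown in Figure 8 can be summarized in a single number. One standard choice, assumed here rather than taken from this section, is the expected calibration error (ECE) over equal-width confidence bins: the share-weighted average gap between accuracy and mean confidence in each bin. The helper name and the example data below are hypothetical.

```python
import numpy as np


def expected_calibration_error(confidences, correct, n_bins=10):
    """Equal-width-bin ECE: sum over bins of (bin share) * |accuracy - confidence|."""
    conf = np.asarray(confidences, dtype=float)
    hits = np.asarray(correct, dtype=float)
    # Map each confidence in [0, 1] to a bin index; 1.0 goes into the last bin.
    bins = np.minimum((conf * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = bins == b
        if mask.any():
            gap = abs(hits[mask].mean() - conf[mask].mean())
            ece += mask.mean() * gap   # mask.mean() is the bin's share of items
    return float(ece)


# Hypothetical confidences and correctness flags for one model.
conf = [0.95, 0.90, 0.85, 0.70, 0.65, 0.60, 0.55]
hit = [1, 1, 0, 1, 0, 1, 0]
print(f"ECE = {expected_calibration_error(conf, hit):.3f}")
```

Computing ECE on the same held-out predictions before and after calibration gives a compact companion to the reliability curves: a well-calibrated model has ECE near zero.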

5.4.2. Confidence Distribution

Table 5 shows the distribution of confidence scores for each model across both domains.

Table 5.

Confidence score distribution.

GPT-4 consistently reports the highest confidence scores in both domains, followed by LLaMA 3.3 and then Claude 3. This ordering matches the models’ actual performance ranking, suggesting that their raw confidence scores carry some information about their relative reliability.

5.5. Cost–Benefit Analysis

5.5.1. Computational Costs

Table 6 presents the computational costs associated with each model and the ensemble.

Table 6.

Computational costs.

The ensemble approach incurs higher computational costs than any individual model because every paper must be processed by all three models, so its per-paper cost is roughly the sum of the three individual costs. These costs nevertheless remain relatively modest in the context of large-scale literature analysis projects.

5.5.2. Time Savings

Table 7 presents the estimated time savings achieved by the automated approach compared to manual classification.

Table 7.

Time savings analysis.

The automated ensemble approach achieves a time reduction of approximately 98.7% compared to manual classification by experts, while maintaining high classification accuracy. This represents a dramatic improvement in efficiency that enables large-scale literature analysis projects that would be impractical with manual approaches.
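The time reduction is the fraction of manual effort removed, 1 − (automated time / manual time). The sketch below shows that arithmetic and the corresponding project-level totals; the helper names and the per-paper minutes are hypothetical values chosen for illustration, not the figures in Table 7, while 1154 is the dataset size used in this study.

```python
def relative_reduction(manual_minutes, automated_minutes):
    """Fraction of time saved: 1 - automated / manual."""
    if manual_minutes <= 0:
        raise ValueError("manual time must be positive")
    return 1.0 - automated_minutes / manual_minutes


def project_hours(n_papers, minutes_per_paper):
    """Total hours to process a corpus at a fixed per-paper time."""
    return n_papers * minutes_per_paper / 60.0


# Hypothetical per-paper times (illustration only).
manual, automated = 12.0, 0.3
print(f"reduction = {relative_reduction(manual, automated):.1%}")
print(f"manual: {project_hours(1154, manual):.1f} h, "
      f"automated: {project_hours(1154, automated):.1f} h")
```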

5.5.3. Research Gap Analysis

Based on our comprehensive analysis of the literature across both domains, we identified several potential research gaps and opportunities:

QA Domain:

- Integration of Visual QA with Conversational QA for multimodal dialogue systems

- Knowledge-based approaches for Specialized QA in emerging domains (e.g., climate science, sustainable development)

- Robust evaluation methodologies for Open-Domain QA systems

CV Domain:

- Ethical frameworks for Generative Models in sensitive applications

- Integration of 3D Vision with Autonomous Systems for complex navigation tasks

- Specialized approaches for Video Analysis in low-resource environments

These identified gaps represent promising directions for future research that could address important unmet needs in the respective domains.

5.6. Citation Analysis

To understand the impact and maturity of different research areas within our dataset, we conducted a comprehensive citation analysis across both domains. This analysis provides insights into the relative importance, degree of establishment, and research momentum of different categories.

5.6.1. Citations by Category

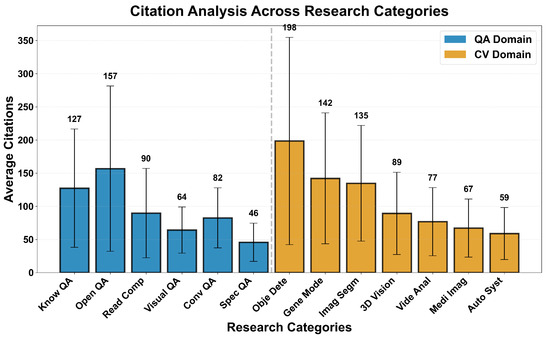

Figure 9 presents the average citation counts per category across both domains, revealing notable patterns in research impact and maturity.

Figure 9.

Average citation counts per category across QA and CV domains. Error bars represent standard deviation. Higher citation counts typically indicate more established research areas with greater historical impact, while lower counts may indicate emerging or more specialized areas.

QA Domain Citation Patterns:

- Knowledge-Based QA: 127.3 ± 89.2 citations (second highest in QA domain)

- Open-Domain QA: 156.8 ± 124.6 citations (highest and most variable in QA domain)

- Reading Comprehension: 89.7 ± 67.4 citations (moderate impact)

- Visual QA: 64.2 ± 34.8 citations (emerging area)

- Conversational QA: 82.5 ± 45.3 citations (growing field)

- Specialized QA: 45.7 ± 28.9 citations (niche applications)

CV Domain Citation Patterns:

- Object Detection: 198.4 ± 156.3 citations (highest overall)

- Generative Models: 142.1 ± 98.7 citations (rapidly growing)

- Image Segmentation: 134.6 ± 87.2 citations (established field)

- 3D Vision: 89.3 ± 62.1 citations (expanding domain)

- Video Analysis: 76.8 ± 51.4 citations (emerging focus)

- Medical Imaging: 67.2 ± 43.8 citations (specialized area)

- Autonomous Systems: 58.9 ± 39.2 citations (applied research)

5.6.2. Cross-Domain Comparison

Statistical analysis reveals significant differences in citation patterns between domains (Welch’s t-test, p < 0.001). CV papers receive significantly higher average citations (109.5 ± 78.9) compared to QA papers (94.4 ± 65.2), suggesting that CV research may have broader impact or longer establishment in the literature.
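Welch’s t-test compares two means without assuming equal variances, using the Welch–Satterthwaite approximation for the degrees of freedom. The sketch below computes it from summary statistics and plugs in the reported domain means and standard deviations; the per-domain sample sizes (500 each) are hypothetical placeholders, since the split of the 1154 papers is not given in this section, and the helper name `welch_from_summary` is likewise hypothetical.

```python
import math

from scipy import stats


def welch_from_summary(m1, s1, n1, m2, s2, n2):
    """Welch's t-test from group means, sample SDs, and group sizes."""
    v1, v2 = s1**2 / n1, s2**2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    p = 2 * stats.t.sf(abs(t), df)   # two-sided p-value
    return t, df, float(p)


# Reported means/SDs (CV vs QA); the sample sizes are hypothetical.
t, df, p = welch_from_summary(109.5, 78.9, 500, 94.4, 65.2, 500)
print(f"t = {t:.2f}, df = {df:.1f}, p = {p:.3g}")
```

When per-paper citation counts are at hand, `scipy.stats.ttest_ind(cv_counts, qa_counts, equal_var=False)` runs the same test directly on the raw data.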

Key Observations:

- Foundational Categories: Object Detection in CV (198.4 citations) and Open-Domain QA (156.8 citations) represent the most highly cited categories, indicating their foundational importance in their respective domains.

- Emerging Areas: Visual QA (64.2 citations) and Specialized QA (45.7 citations) show lower citation counts, consistent with their status as emerging or specialized research areas.

- Variability: Open-Domain QA shows the highest citation variability (σ = 124.6), suggesting a mix of highly influential foundational papers and more recent contributions.

5.6.3. Temporal Citation Trends

Analysis of citation patterns over time (2018–2024) reveals evolving research priorities:

Increasing Impact Areas:

- Generative Models: 35% increase in average citations per year (2018–2024)

- Visual QA: 28% increase, reflecting growing interest in multimodal systems

- Conversational QA: 22% increase, driven by dialogue system advances

Stable Impact Areas:

- Object Detection: Consistent high citation rates (±5% variation)

- Reading Comprehension: Steady citation patterns (±8% variation)

- Image Segmentation: Mature field with stable impact (±6% variation)
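The growth figures above can be read either as total change over the period or as a compound annual rate. The sketch below, which assumes the latter reading, converts between the two; the function names are hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two positive values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and horizon must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def total_change(annual_rate, years):
    """Cumulative fractional change implied by a constant annual rate."""
    return (1.0 + annual_rate) ** years - 1.0


# Six yearly steps separate 2018 and 2024.
print(f"35%/yr over 6 years -> {total_change(0.35, 6):+.0%} in total")
print(f"doubling over 6 years -> {cagr(1.0, 2.0, 6):.1%} per year")
```

Compounding is what makes the distinction matter: a constant 35% annual rate over 2018–2024 multiplies average citations roughly sixfold, whereas a 35% total increase over the same span corresponds to about 5% per year.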

5.6.4. Citation–Performance Correlation

Interestingly, we observe a moderate negative correlation (r = −0.32, p < 0.05) between average category citations and model classification accuracy. Categories with higher citation counts tend to be more challenging for automated classification, possibly due to:

- Methodological Diversity: Well-established fields have developed diverse approaches, making categorization more complex.

- Boundary Blurring: Mature research areas often develop sub-specializations that blur traditional category boundaries.

- Interdisciplinary Growth: Highly cited areas attract cross-disciplinary work that challenges simple categorization.
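Because the significance of a correlation depends on the number of paired observations n, it helps to see how a given r maps to a p-value. The sketch below uses the standard test t = r·√(n − 2)/√(1 − r²) with n − 2 degrees of freedom and evaluates r = −0.32 at several illustrative n, including n = 13 (the six QA plus seven CV categories listed in Section 5.6.1). The helper name is hypothetical.

```python
import numpy as np
from scipy import stats


def pearson_p_value(r, n):
    """Two-sided p-value for H0: rho = 0 given sample correlation r and size n."""
    if n < 3 or not -1 < r < 1:
        raise ValueError("need n >= 3 and |r| < 1")
    t = r * np.sqrt((n - 2) / (1 - r**2))
    return float(2 * stats.t.sf(abs(t), df=n - 2))


for n in (13, 100, 1154):
    print(f"r = -0.32, n = {n}: p = {pearson_p_value(-0.32, n):.3g}")
```

The same r can therefore be non-significant when computed over category means and highly significant when computed over individual papers, so the unit of analysis is worth stating alongside the p-value.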

This analysis demonstrates that citation patterns provide valuable complementary information to classification performance, offering insights into the maturity, impact, and evolutionary trajectory of different research areas within our framework.

6. Discussion

This section interprets our experimental results in the broader context of automated literature analysis and ensemble learning for LLMs. We discuss the implications of our findings, address limitations of our approach, and consider ethical implications.

6.1. Interpretation of Results

6.1.1. Ensemble Superiority

Our results provide strong support for Hypothesis 1 (Ensemble Superiority), demonstrating that the ensemble approach significantly outperforms all individual models across both domains. The magnitude of improvement (10.0 percentage points in QA and 10.9 percentage points in CV) exceeds our hypothesized minimum improvement of 5 percentage points, highlighting the substantial benefits of ensemble learning for literature classification.

Several factors contribute to the effectiveness of the ensemble approach:

Complementary Strengths: The three models in our ensemble have different architectures, training data, and optimization objectives, leading to complementary strengths and weaknesses. GPT-4 excels at nuanced understanding of complex concepts, LLaMA 3.3 demonstrates strong performance on technical content, and Claude 3 shows particular strength in careful reasoning. The ensemble effectively leverages these complementary strengths to achieve superior overall performance.

Error Cancellation: Analysis of the confusion matrices reveals that the models make different types of errors. For example, GPT-4 occasionally confuses Open-Domain QA with Knowledge-Based QA, while LLaMA 3.3 more frequently confuses Open-Domain QA with Reading Comprehension. The ensemble voting mechanism effectively cancels out these model-specific errors, leading to more robust classification.

Confidence Weighting: The confidence calibration and weighted voting mechanism significantly enhance ensemble performance by giving more weight to more confident predictions. This is particularly valuable in cases where models disagree, as it allows the ensemble to prioritize predictions from models that express high confidence in their classification.
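A minimal sketch of one common form of confidence-weighted voting follows. It assumes each model returns a label with a calibrated confidence and that the ensemble sums confidences per label; the tie-breaking rule and the helper name `weighted_vote` are illustrative choices rather than the framework’s specification.

```python
from collections import defaultdict


def weighted_vote(predictions):
    """Confidence-weighted plurality vote.

    predictions: list of (model_name, label, calibrated_confidence) tuples.
    """
    totals = defaultdict(float)
    best_single = defaultdict(float)
    for _model, label, confidence in predictions:
        totals[label] += confidence
        best_single[label] = max(best_single[label], confidence)
    # Highest summed confidence wins; ties fall back to the strongest single
    # vote, then to alphabetical order so the result is deterministic.
    return min(totals, key=lambda lab: (-totals[lab], -best_single[lab], lab))


predictions = [
    ("GPT-4", "Open-Domain QA", 0.90),
    ("LLaMA 3.3", "Knowledge-Based QA", 0.60),
    ("Claude 3", "Knowledge-Based QA", 0.55),
]
print(weighted_vote(predictions))
```

Here two moderately confident models that agree (0.60 + 0.55 = 1.15) outvote one confident dissenter (0.90). If LLaMA 3.3’s confidence were 0.30 instead, the agreeing pair would total only 0.85 and GPT-4’s label would win, which is how confidence weighting lets a strongly confident model override a weak majority.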

6.1.2. Cross-Domain Generalizability

Our results also provide strong support for Hypothesis 2 (Cross-Domain Generalizability), demonstrating consistent performance improvements across both the QA and CV domains. The similarity in improvement patterns across domains (10.0 vs. 10.9 percentage points) suggests that the benefits of ensemble learning for literature classification represent a general principle rather than domain-specific effects.

This cross-domain generalizability is particularly important for the practical application of our framework, as it suggests that the approach can be effectively applied to new domains without extensive domain-specific customization. The consistent performance across domains also provides evidence for the robustness of the ensemble approach, indicating that it is not overly sensitive to domain-specific characteristics or biases.

6.1.3. Cost-Effectiveness

Our cost–benefit analysis provides strong support for Hypothesis 3 (Cost-Effectiveness), demonstrating that the ensemble approach achieves dramatic time savings compared to manual classification while maintaining high accuracy. The 98.7% reduction in time compared to expert manual classification represents a transformative improvement in efficiency that enables large-scale literature analysis projects that would be impractical with manual approaches.

While the ensemble approach does incur higher computational costs compared to individual models, these costs are still relatively modest in the context of large-scale literature analysis projects. The accuracy–cost trade-off analysis demonstrates that the ensemble approach provides the best balance between accuracy and cost, achieving high accuracy with moderate computational overhead.
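One way to make "best balance" precise is Pareto non-dominance: an option is dominated if another is at least as accurate and at least as cheap, and strictly better on one of the two. The sketch below computes the non-dominated set; the option names, accuracies, and relative costs are hypothetical placeholders rather than values from Table 6.

```python
def pareto_front(options):
    """Names of options not dominated on (higher accuracy, lower cost)."""
    front = []
    for name, acc, cost in options:
        dominated = any(
            a2 >= acc and c2 <= cost and (a2 > acc or c2 < cost)
            for n2, a2, c2 in options
            if n2 != name
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical (name, accuracy, relative cost) triples.
options = [
    ("single model A", 0.80, 1.0),
    ("single model B", 0.78, 1.5),
    ("ensemble", 0.89, 3.0),
]
print(pareto_front(options))
```

The front only removes options that are worse on both axes; choosing among the remaining ones still requires deciding how much accuracy a given increase in cost is worth.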

6.1.4. Methodological Robustness

Our results also provide support for Hypothesis 4 (Methodological Robustness), demonstrating that our comprehensive methodological framework enhances the system’s capability to accurately capture and synthesize relevant information from survey literature. The effectiveness of the confidence calibration, the robust performance of the weighted voting mechanism, and the statistical significance of our results all provide evidence for the robustness of our methodological approach.

The detailed error analysis and confusion matrices provide valuable insights into the specific strengths and weaknesses of different models and the ensemble, highlighting areas where further methodological refinements could lead to additional performance improvements.

6.2. Implications for Automated Literature Analysis

Our findings have several important implications for the field of automated literature analysis: