1. Introduction

Accurate and reliable occupancy detection has become a cornerstone in the advancement of smart building technologies, providing critical capabilities for enhancing energy efficiency, enabling sophisticated automation, and strengthening security measures [1]. Buildings account for 32% of global energy consumption and contribute 34% of global CO2 emissions, with significant wastage attributed to the inefficient operation of heating, ventilation, and air conditioning (HVAC) systems and lighting [2]. Traditional static schedules often lead to energy use in unoccupied spaces, resulting in unnecessary costs and environmental impact [3]. Occupancy-driven management enables the dynamic adjustment of building operations based on real-time presence information, ensuring that energy is expended only when necessary. This not only reduces operational expenses but also supports global efforts toward sustainability, positioning smart buildings as vital contributors to energy conservation goals [4].

Beyond energy efficiency, occupancy detection plays a pivotal role in delivering intelligent, adaptive building automation [5]. Modern smart buildings utilize occupancy data to optimize various subsystems, including dynamic lighting control, personalized temperature settings, and air quality management [6]. Real-time occupancy insights enable predictive maintenance strategies, space utilization optimization, and emergency preparedness systems that adapt evacuation routes based on actual occupant distribution [7]. Moreover, occupancy data integrated into access control and security systems enhances building safety by detecting anomalies and unauthorized presence [8]. The rapid growth of the smart building market, driven by urbanization, rising energy costs, advancements in IoT, and regulatory emphasis on green building practices, is expected to spur significant innovation and investment in occupancy detection technologies in the coming years.

However, achieving accurate occupancy detection in complex real-world environments presents formidable challenges. Environmental signals such as temperature, humidity, CO2 concentration, and light intensity exhibit delayed, nonlinear, and noisy relationships with human presence [9]. Variations in external weather, building design, ventilation patterns, and occupant behavior further complicate the interpretation of sensor readings [10]. Traditional approaches, such as passive infrared (PIR) sensors [11] and rule-based methods, offer simplicity and cost-effectiveness but often fail to detect stationary occupants or generate high rates of false alarms [12]. More advanced camera- and audio-based systems improve detection fidelity but introduce significant privacy risks and require substantial computational resources, hindering their scalability for widespread deployment.

Furthermore, the integration of smart building technologies introduces additional complexities in occupancy detection. Modern environments comprise heterogeneous sensors and platforms, generating vast volumes of asynchronous, multimodal data [13,14]. Merging these disparate data streams into a unified, actionable understanding of occupancy states requires sophisticated data-processing pipelines capable of handling inconsistencies, missing data, and sensor drift over time [13]. Occupancy detection models must perform reliably under variable operational conditions, such as changing lighting levels, fluctuating indoor temperatures, and evolving occupant behaviors. Simultaneously, strict privacy regulations, such as the General Data Protection Regulation (GDPR), mandate non-intrusive, privacy-respecting detection mechanisms, emphasizing the need for solutions that avoid capturing visual, audio, or personally identifiable data [12].

These realities highlight the limitations of conventional machine learning (ML) and deep learning (DL) approaches in occupancy detection. Classical ML models, including Random Forests, Support Vector Machines, and Multi-layer Perceptrons, rely heavily on engineered features and often cannot model the complex temporal relationships inherent in dynamic building environments. While deep learning architectures, such as convolutional neural networks (CNNs) [15] and recurrent neural networks (RNNs), offer improved feature extraction and sequential modeling, they typically struggle to capture long-range temporal dependencies and adapt to non-stationary patterns over time [16]. Moreover, many existing models are not designed to cope with noisy, partially missing, or degraded sensor data, reducing their robustness in practical deployments [17].

Despite advancements, a pressing need exists for occupancy detection models capable of simultaneously capturing both fine-grained local variations and long-term temporal dependencies across multiple sensor modalities. Existing hybrid CNN-RNN models, which combine different architectures, often excel at local feature extraction or sequential modeling but fail to effectively integrate both temporal scales due to inherent limitations such as vanishing gradients or restricted receptive fields [18,19]. Pure Transformer models, on the other hand, are adept at modeling long-range dependencies but sometimes struggle with encoding fine-grained local occupancy events, especially under high-frequency sensor sampling rates where spatial patterns play an essential role, and can be sensitive to noisy sensor inputs. Addressing these diverse and complex requirements simultaneously is crucial for developing reliable, scalable, and socially acceptable smart building systems that can operate effectively in real-world conditions.

To address these fundamental limitations and fill this critical research gap, we propose Translution, a novel hybrid Transformer-based architecture specifically designed for robust, privacy-preserving occupancy detection from multivariate sensor time-series data. Unlike prior hybrid or Transformer models, which exhibit one or both of the aforementioned shortcomings, Translution integrates multi-scale local feature extraction and long-range dependency modeling with an adaptive gating mechanism for enhanced robustness and generalization. This comprehensive solution is tailored to the complex temporal dynamics of occupancy patterns, achieving high accuracy while respecting privacy constraints by relying solely on non-intrusive environmental sensor data.

Translution fuses multi-scale convolutional encoding, which extracts localized temporal features, with self-attention mechanisms that capture long-range dependencies across environmental sensor signals. An adaptive feature gating mechanism dynamically prioritizes informative inputs and suppresses irrelevant noise, enhancing the model’s resilience to environmental variability and sensor imperfections. Our contributions are as follows:

We propose Translution, a novel hybrid deep learning architecture that integrates self-attention mechanisms for long-range temporal modeling with multi-scale convolutional encoding to capture localized and diverse environmental patterns. This design enables the model to effectively learn both transient and sustained occupancy signals from sensor data.

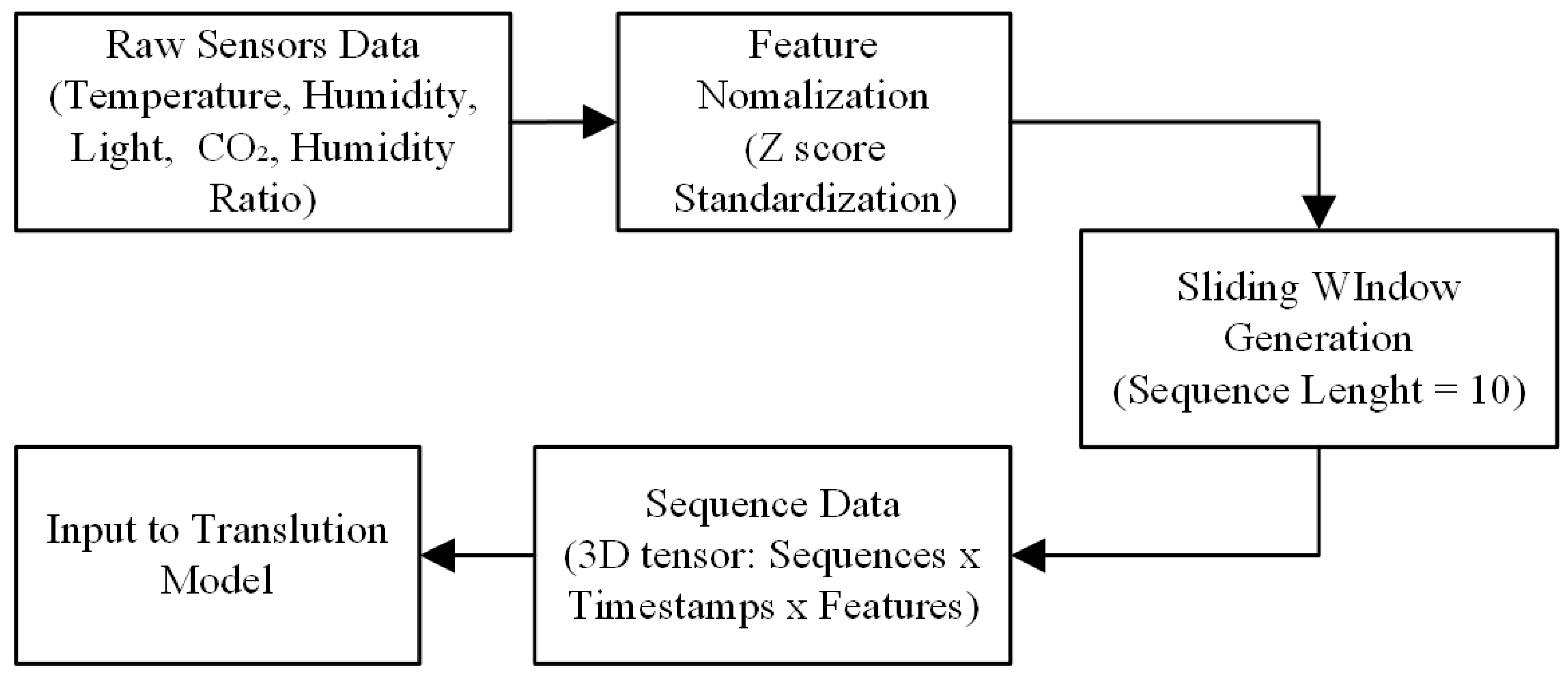

A dedicated preprocessing pipeline transforms raw 2D sensor readings into sequential inputs, facilitating temporal reasoning. Within the model, an adaptive gating mechanism dynamically fuses convolutional and attention-derived features, allowing Translution to prioritize salient information while suppressing noise without relying on handcrafted features.

Translution exclusively uses non-intrusive environmental sensor modalities (temperature, humidity, CO2, and light), avoiding cameras and microphones, and ensuring compliance with modern privacy standards. Its architecture is designed for generalization across varying occupancy conditions, making it well-suited for deployment in diverse smart building contexts.

The subsequent sections of the paper are organized as follows:

Section 2 provides a review of related work in the area of occupancy detection for smart buildings.

Section 3 presents the proposed Translution model, detailing its architecture and methodology.

Section 4 describes the experimental setup, evaluation metrics, and performance comparison between traditional machine learning, deep learning approaches, and Translution.

Finally, Section 5 concludes the paper by summarizing key challenges and outlining directions for future research.

2. Related Work

Accurate occupancy detection in smart buildings has been a key research focus over the past decade, driven by the critical need to improve energy efficiency, enable intelligent automation, and ensure occupant comfort. A wide range of ML and DL approaches have been explored, each with its advantages and limitations [20]. In this section, we categorize the prior work into traditional ML methods, deep learning architectures, Transformer-based models, and privacy-aware occupancy detection frameworks.

Traditional Machine Learning Approaches: Earlier efforts in occupancy detection heavily relied on classical ML algorithms trained on environmental sensor data. Decision trees, Support Vector Machines (SVMs), and Random Forests were commonly employed due to their simplicity and interpretability. For instance, the authors of [21] presented a comprehensive review highlighting that Random Forest classifiers using temperature, humidity, CO2, and light-intensity readings could achieve moderate success in occupancy prediction. However, these methods often required extensive manual feature engineering and struggled with dynamic and non-linear occupancy patterns, limiting their robustness in real-world smart building environments.

Deep Learning-Based Models: The limitations of traditional approaches led researchers to explore deep learning models capable of learning complex, non-linear feature representations from raw sensor streams [22]. The authors of that work developed a hybrid CNN-LSTM model in which convolutional layers captured spatial features and LSTM layers learned temporal occupancy patterns, significantly improving detection accuracy. Similarly, the authors of [23,24] demonstrated that deep learning models, particularly recurrent neural networks (RNNs) and gated recurrent units (GRUs), are more capable of handling sequential dependencies than shallow models. Nevertheless, deep learning models still face challenges, such as overfitting on small datasets and sensitivity to noisy sensor inputs.

Transformer-Based Architectures: With the introduction of attention mechanisms, Transformer-based models have recently gained popularity in occupancy modeling. The authors in [25] proposed a distributed Transformer network that could dynamically predict energy consumption by modeling occupancy patterns across smart building clusters. They demonstrated that self-attention layers could effectively capture long-term dependencies that traditional RNNs failed to model. Similarly, the authors in [26] introduced the DMFF (Deep Multimodal Feature Fusion) framework, combining convolutional feature extractors with Transformer encoders. Their approach successfully integrated local and global features, resulting in faster convergence and improved generalization across heterogeneous occupancy patterns. However, pure Transformer models sometimes struggle with encoding fine-grained local occupancy events, especially when operating under high-frequency sensor sampling rates, where spatial patterns play a crucial role.

Multimodal and Privacy-Preserving Occupancy Detection: Recent research has increasingly emphasized the importance of privacy-respecting occupancy detection methods. The authors in [19,27] advocated for occupancy inference using only non-intrusive environmental sensors, such as temperature, humidity, light, and CO2 measurements, to avoid the privacy concerns associated with camera- or audio-based approaches. Their studies demonstrated that, when combined with appropriate machine learning techniques, environmental data alone can achieve competitive occupancy detection performance while adhering to modern data protection standards, such as the General Data Protection Regulation (GDPR). Additionally, the authors of [28] explored lightweight CNN-LSTM frameworks for predicting occupancy and energy consumption on embedded platforms, such as the Jetson Nano, highlighting the growing trend toward efficient, real-time, and privacy-compliant smart building solutions.

To provide an overview and structured comparison of the different occupancy detection approaches discussed, Table 1 summarizes their key characteristics across dimensions such as algorithms, sensor modalities, privacy considerations, temporal modeling scope, typical performance, and limitations. This comparative analysis helps to highlight the specific challenges addressed by Translution and contextualize its novel contributions within the existing literature. We summarize the gaps identified in the literature as follows:

Existing models tend to specialize in capturing either short-term occupancy changes or long-term occupancy patterns, but often fail or struggle to capture both types of patterns together.

Deep learning models often overfit to specific datasets, limiting robustness across different buildings and occupancy patterns.

Many occupancy detection frameworks still rely on intrusive sensing modalities (e.g., cameras, microphones), which raises privacy concerns and limits their practical applicability in real-world smart building environments.

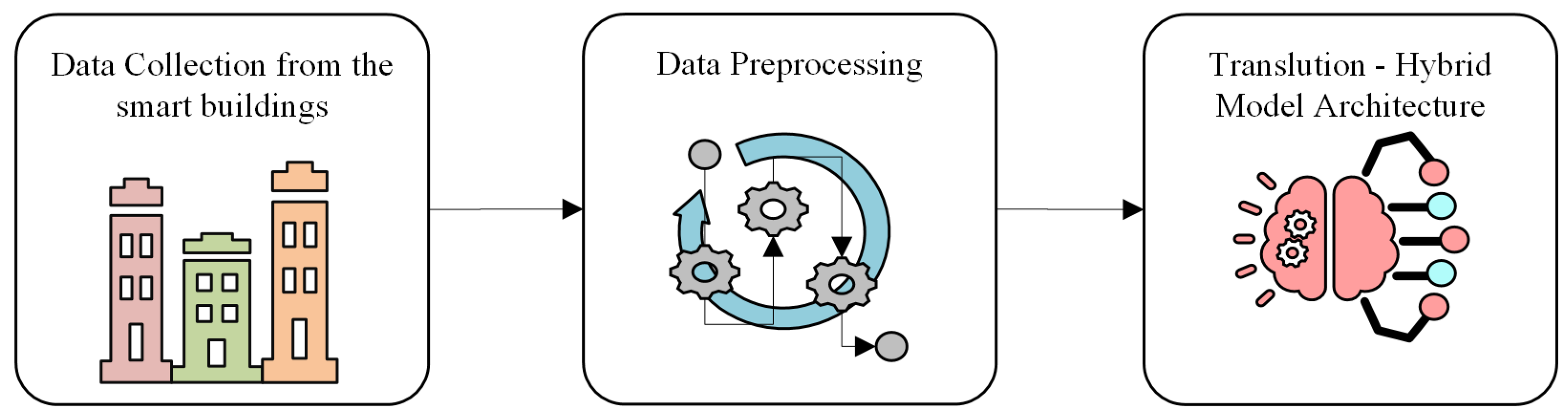

4. Experiment

We evaluated our model’s performance through a five-step process: data collection, data analysis, model training, model evaluation, and comparison with traditional machine learning and deep learning models.

4.1. Data Collection

The data used in this study is derived from the University of California, Irvine (UCI) Occupancy Detection Dataset [32], a well-known benchmark for evaluating models in indoor presence recognition using ambient sensor signals. This dataset provides real-world, timestamped readings from a typical room environment in a building, monitored through non-intrusive environmental sensors. The sensors include temperature (°C), relative humidity (%), light intensity (Lux), CO2 concentration (ppm), and humidity ratio. Each reading is accompanied by a ground-truth occupancy label (0 for unoccupied and 1 for occupied), verified using a PIR (Passive Infrared) motion sensor [32].

To ensure robust training and generalization, the dataset is segmented into three distinct parts, covering different periods and varying occupancy patterns: a training subset of 8143 samples collected under normal working conditions; an initial testing subset of 2804 samples, collected during a different period for generalization testing; and a second testing subset of 9752 samples, which contains more abrupt changes and varying lighting or ventilation conditions and simulates challenging deployment scenarios [32].

Each row in the dataset represents a single reading captured at one-minute intervals, resulting in a rich temporal structure suitable for sequence modeling. By leveraging this structure, we could create input windows of consecutive time steps to form sequences that preserve short- and medium-term temporal dependencies in occupancy behavior. These sequences are further normalized using z-score scaling to reduce variance between features and enable more stable convergence during training. The sensors involved in the data-collection process are entirely non-intrusive, requiring no visual, audio, or biometric data, thereby ensuring full compliance with privacy-preserving standards, such as GDPR. This choice supports our objective of developing occupancy detection models that are both effective and ethically sound for real-world smart building environments.
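The windowing and scaling steps described above can be sketched as follows. This is a minimal illustration rather than the pipeline used in the study: the function names, the stride of one reading, the choice to label each window with the occupancy of its last time step, and the random toy data are all assumptions. Scaling is applied per feature before windowing here, which gives the same values as scaling the windows afterwards.

```python
import numpy as np

def zscore_fit(train: np.ndarray):
    """Return per-feature mean and standard deviation estimated on training rows."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std[std == 0] = 1.0  # guard against constant features
    return mean, std

def make_windows(readings: np.ndarray, labels: np.ndarray, seq_len: int = 10):
    """Build overlapping windows of `seq_len` consecutive readings (stride 1).

    Each window takes the label of its last time step. This labelling rule is
    an assumption; the text does not state which one was used.
    """
    n = readings.shape[0] - seq_len + 1
    if n <= 0:
        raise ValueError("not enough rows for a single window")
    windows = np.stack([readings[i:i + seq_len] for i in range(n)])
    return windows, labels[seq_len - 1:]

# Toy data standing in for the five sensor channels (not real measurements).
rng = np.random.default_rng(0)
raw = rng.normal(size=(100, 5))
occ = rng.integers(0, 2, size=100)

mean, std = zscore_fit(raw)
X, y = make_windows((raw - mean) / std, occ, seq_len=10)
print(X.shape, y.shape)  # (91, 10, 5) (91,)
```

In a real split, the mean and standard deviation would be estimated on the training subset only and reused unchanged for both test subsets.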

4.2. Data Assessment

To design a robust and efficient deep learning model for occupancy detection, a thorough analysis of the dataset was carried out to gain empirical insights into the temporal behavior and variability of environmental sensor signals. This analysis guided key decisions on preprocessing strategies, sequence structuring, and model input configurations. Two central questions drove this exploration: (1) What are the distributional characteristics of each environmental feature? (2) What is the optimal sequence length for capturing occupancy patterns without introducing noise or computational overhead?

The dataset, derived from a real-world smart building environment, includes five non-intrusive features (temperature, relative humidity, light intensity, CO2 concentration, and humidity ratio) sampled at regular intervals. Statistical profiling revealed that light and CO2 had the most distinguishable ranges across occupied and unoccupied classes. For example, average light levels during occupancy peaked at over 600 Lux, while unoccupied periods consistently remained below 50 Lux. Likewise, CO2 values showed a gradual accumulation during occupied sessions, due to human respiration, with averages exceeding 900 ppm, while unoccupied sessions remained around 400–500 ppm. Humidity and temperature demonstrated moderate variability but also contributed meaningful temporal trends when analyzed over time sequences.

We further evaluated the sequential nature of the data by computing moving averages and temporal autocorrelations to understand how quickly signals changed. This helped in selecting a fixed sequence length of 10 time steps, which provided a balanced window to capture both immediate fluctuations and longer occupancy cycles without overwhelming the model. For example, transient changes in light were observable within 2–3 time steps, while CO2 trends required 8–10 intervals for meaningful shifts.
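A rough sketch of the two diagnostics mentioned above, a moving average and a lagged autocorrelation, is given below. The helper names and the synthetic signals are illustrative assumptions; they are not taken from the study's code or data.

```python
import numpy as np

def moving_average(x, window: int) -> np.ndarray:
    """Simple moving average over `window` consecutive samples."""
    kernel = np.ones(window) / window
    return np.convolve(np.asarray(x, dtype=float), kernel, mode="valid")

def autocorr(x, lag: int) -> float:
    """Sample autocorrelation of a 1-D series at a non-negative lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = float(np.dot(x, x))
    if denom == 0.0 or lag >= len(x):
        return 0.0
    return float(np.dot(x[:len(x) - lag], x[lag:]) / denom)

# Synthetic signals: a slow drift (CO2-like) and a fast alternation (light-like).
t = np.arange(200)
slow = np.sin(2 * np.pi * t / 100.0)
fast = np.where(t % 4 < 2, 1.0, -1.0)

for lag in (1, 2, 5, 10):
    print(lag, round(autocorr(slow, lag), 3), round(autocorr(fast, lag), 3))
```

A signal whose autocorrelation stays high over many lags changes slowly and benefits from a longer window, while one whose autocorrelation collapses within a few lags is already captured by a short window.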

The analysis also informed feature normalization. Since environmental sensors operate on different scales (e.g., CO2 in ppm vs. humidity as a ratio), standardization was applied using z-score normalization to ensure all features contributed equally during training. Additionally, we confirmed that the dataset had no missing values and minimal class imbalance, which was addressed using class weighting during model training to avoid bias toward the dominant class.
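The text does not state which class-weighting formula was used. One common choice is the inverse-frequency heuristic, which is also what scikit-learn's "balanced" option computes: each class receives the weight n_samples / (n_classes × class_count). The sketch below illustrates that heuristic on made-up labels; the function name is hypothetical.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency class weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}

# Made-up labels: 8 unoccupied readings, 2 occupied readings.
weights = balanced_class_weights([0] * 8 + [1] * 2)
print(weights)  # {0: 0.625, 1: 2.5}
```

The minority class receives the larger weight, so each of its errors contributes more to the loss.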

4.3. Evaluation Metrics

To assess model performance, several standard evaluation metrics were used, ensuring a robust evaluation of the occupancy detection models. These metrics were chosen to measure the model’s ability to correctly classify occupancy status while addressing issues like class imbalance and prediction reliability [44].

Accuracy: Measures the overall correctness of the model’s predictions. It is useful when class distribution is balanced, but can be misleading in imbalanced datasets [44]. Accuracy was used as a general performance indicator but was supplemented with other metrics to provide a more nuanced analysis.

Precision: Evaluates how many of the predicted positive cases are correct. High precision is crucial in applications where false positives (incorrectly predicting occupancy) need to be minimized [44]. This metric was used to ensure that the model does not falsely predict occupancy too frequently, which is important for energy-efficient decision-making.

Recall: Measures how well the model detects actual positive cases. High recall is essential when missing an occupied state could have significant consequences, such as suboptimal energy use in smart buildings [44]. This metric was particularly important in ensuring that occupied spaces were correctly identified and not mistakenly classified as unoccupied.

F1-score: The harmonic mean of precision and recall, which balances both metrics. It is particularly useful when the dataset is imbalanced, thereby preventing reliance on accuracy alone [44]. The F1-score was chosen as a primary metric to assess the trade-off between precision and recall, ensuring a well-balanced model.

AUROC: Evaluates the model’s ability to distinguish between classes across different probability thresholds. A higher AUROC indicates better discrimination between occupied and unoccupied states. This metric was useful for analyzing model robustness across varying classification thresholds, allowing for better decision-making in practical applications [44].

These metrics provided a comprehensive assessment of model effectiveness across different test datasets, enabling a detailed comparison of deep-learning models for occupancy detection.
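For concreteness, the five metrics can be computed from binary labels and predicted scores as in the sketch below. It uses the standard definitions; the function names and the toy labels and scores are illustrative assumptions, not data from the study. AUROC is computed here through its pairwise-ranking interpretation: the probability that a randomly chosen occupied sample scores higher than a randomly chosen unoccupied one, with ties counted as one half.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels (1 = occupied, 0 = unoccupied)."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == 1 and t == 1:
            tp += 1
        elif p == 1 and t == 0:
            fp += 1
        elif p == 0 and t == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1-score from hard predictions."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": (tp + tn) / total, "precision": precision,
            "recall": recall, "f1": f1}

def auroc(y_true, scores):
    """AUROC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one sample of each class")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy example (not results from the paper).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.1, 0.3, 0.6, 0.2, 0.7]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print(classification_metrics(y_true, y_pred))
print(auroc(y_true, scores))  # 0.9375
```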

4.4. Optimal Sequence Length Determination

The data assessment in Section 4.2 suggested a fixed sequence length of 10 time steps, since transient changes in light were observable within 2–3 time steps, while CO2 trends required 8–10 intervals for meaningful shifts.

To empirically validate this design decision and identify the optimal input sequence length for Translution, we conducted a series of experiments evaluating the model’s performance across various sequence lengths: 5, 10, 15, and 20 time steps. The results, summarized in Table 2 and Table 3, demonstrate the impact of sequence length on Translution’s accuracy, F1-score, and AUROC.

As evidenced by these tables, Translution consistently achieved its highest performance across all metrics (Accuracy, F1-score, and AUROC) with a sequence length of 10 for both Test Dataset 1 and Test Dataset 2. Shorter sequences (e.g., 5) likely failed to capture sufficient temporal context, resulting in reduced recall and a lower overall F1-score. Conversely, while longer sequences (15 and 20) contain more historical data, they often introduce irrelevant noise or dilute crucial short-term patterns, leading to a decline in performance. This empirical validation confirms that a 10-timestep window balances the need to capture essential temporal dynamics with computational efficiency, supporting its selection as the standard input sequence length for Translution.

4.5. Hyperparameter Settings

To ensure effective learning and consistent benchmarking, the hyperparameters used in the Translution model were carefully selected and tuned, balancing model complexity, generalization ability, and computational efficiency. These settings, summarized in Table 4, were primarily determined through a systematic process involving preliminary experiments and iterative optimization based on validation-set performance [45].

An initial set of hyperparameter values was chosen, drawing upon common practices for Transformer-based architectures and deep learning models in time-series analysis. Subsequently, these parameters were refined by evaluating their impact on key performance metrics (F1-score and AUROC) on a dedicated validation set, which was held out from the training data. For instance, the internal representation dimension of 64 and the use of 2 stacked Translution blocks were identified as providing an optimal balance between model capacity and computational demand, through iterative testing to observe their effect on model performance and training time.

The dropout rate of 0.2 was selected to mitigate overfitting, a common challenge in deep learning, by monitoring the divergence between training and validation loss curves. This helped ensure the model’s ability to generalize effectively to unseen data. The Adam optimizer, with a learning rate of 0.001 and a batch size of 32, was chosen for its proven efficiency and stability in deep learning training, and further fine-tuned for optimal convergence behavior during preliminary runs. Global Average Pooling was employed before the final classification layer due to its lightweight and permutation-invariant aggregation properties, which also contribute to reducing overfitting.
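The settings named in the text can be gathered into a single configuration object, as in the sketch below. The class and field names are hypothetical; only the values come from the paper, and Table 4 may list further settings that are not reproduced here. The epoch count is the one stated in Section 4.6.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TranslutionConfig:
    """Hyperparameter values stated in the text; field names are illustrative."""
    seq_len: int = 10               # input window length (Section 4.4)
    n_features: int = 5             # temperature, humidity, light, CO2, humidity ratio
    d_model: int = 64               # internal representation dimension
    n_blocks: int = 2               # stacked Translution blocks
    dropout: float = 0.2
    optimizer: str = "adam"
    learning_rate: float = 1e-3
    batch_size: int = 32
    epochs: int = 15                # stated in Section 4.6
    pooling: str = "global_average"

    @property
    def input_shape(self):
        """Per-sample input shape: (time steps, features)."""
        return (self.seq_len, self.n_features)

cfg = TranslutionConfig()
print(cfg.input_shape)  # (10, 5)
```

Freezing the dataclass keeps one immutable record of the settings for every experiment that reuses them.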

4.6. Model Training

The training phase of the Translution architecture was designed to strike a balance between model complexity, learning efficiency, and generalization performance. To begin, environmental sensor data—comprising temperature, humidity, light intensity, CO2 concentration, and humidity ratio—was preprocessed through normalization and temporal sequence construction. Specifically, the data was reshaped into overlapping sequences of 10 time steps, allowing the model to observe short-term fluctuations and emerging occupancy trends across small time windows. This resulted in a structured 3D input format of the shape (samples, 10, 5).

Rather than training on static features, the model learned from evolving temporal patterns in a way that mimics real-world occupancy fluctuations. The training leveraged the Scikit-learn ‘Pipeline’ wrapper to tightly couple preprocessing and model fitting steps, ensuring consistency during validation and future deployment. The core model was trained using the Adam optimizer with binary cross-entropy loss, suitable for binary occupancy classification. A batch size of 32 and 15 epochs were chosen to balance convergence speed and training stability.

Although early stopping was not activated in this setup, the model incorporated built-in regularization mechanisms: dropout layers within attention and convolution modules, adaptive gating for dynamic noise suppression, and batch normalization for gradient stability. These collectively mitigated overfitting and helped the model generalize well across two separate test datasets.

4.7. Comparative Analysis of Different Machine and Deep Learning Models for Occupancy Detection

To rigorously assess the efficacy of the proposed Translution architecture, we designed a comparative experimental setup using the UCI Occupancy Detection Dataset [32]. The dataset was split into three parts: a training set and two distinct test sets, one representing stable occupancy conditions and the other comprising more variable and challenging scenarios. Each sample includes five environmental sensor readings (temperature, humidity, CO2, light, and humidity ratio) and a binary occupancy label. We trained all baseline models using the same preprocessed input sequences, ensuring consistency in evaluation. Our objective was not just to report raw accuracy but to examine how well different models handle temporal variation, noise, and generalization across changing occupancy patterns.

We included five baseline models in the experiment: Random Forest, MLP, OCSVM, CNN, and RNN [1]. These models span a range of paradigms, from traditional supervised classifiers to temporal sequence learners. Random Forest and MLP were selected for their widespread use in sensor-based classification tasks. OCSVM provided an unsupervised baseline to examine model behavior under minimal label supervision. CNNs were chosen for their ability to learn spatial features from fixed-size windows, while RNNs represented conventional sequence models capable of capturing short-term temporal dependencies.

During the evaluation, all models were assessed using five standard metrics: accuracy, precision, recall, F1-score, and AUROC. The results, shown in Table 5 and Table 6, reveal distinct patterns in performance. In Test 1, Translution achieved an accuracy of 98.1%, the highest among all models. It also led in recall (99.8%) and F1-score (97.3%), outperforming RNN (F1 = 95.7%), CNN (F1 = 84.6%), and traditional models such as Random Forest (F1 = 95.1%). In Test 2, which involved more irregular occupancy behavior, Translution maintained robust performance with an accuracy of 98.9% and an equally high F1-score of 97.3%. Competing models, by contrast, saw notable drops: for instance, MLP dropped to an F1-score of 90.2%, and CNN fell to just 76.3%.

OCSVM consistently underperformed in both test sets, particularly in terms of precision and F1-score, confirming its limited utility in structured occupancy tasks. CNNs, though precise, missed many true positives due to their inability to model temporal dependencies. RNNs fared better but still failed to generalize across test sets. Translution’s advantage lies in its multi-scale convolutional layers, which capture short- and mid-range feature patterns, and self-attention modules, which handle long-range dependencies without recency bias. Additionally, the adaptive gating mechanism enables dynamic feature weighting at each time step, making the model robust to noise and environmental variability.
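To make the idea of per-time-step gating concrete, the sketch below shows one common form of gated fusion between a convolutional feature map and an attention feature map. The exact gating equation used in Translution is not given in this section, so the sigmoid gate, the concatenation of both inputs, the weight shapes, and all names here are assumptions for illustration only.

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def gated_fusion(conv_feat, attn_feat, W, b):
    """Blend two (seq_len, d_model) feature maps with a learned gate.

    gate = sigmoid([conv_feat ; attn_feat] @ W + b)   # one value per step and channel
    out  = gate * conv_feat + (1 - gate) * attn_feat
    This is a generic gating form, assumed here; it is not taken from the paper.
    """
    joint = np.concatenate([conv_feat, attn_feat], axis=-1)  # (T, 2 * d)
    gate = sigmoid(joint @ W + b)                            # (T, d)
    return gate * conv_feat + (1.0 - gate) * attn_feat, gate

# Random stand-ins for the two branches: 10 time steps, 64 channels.
rng = np.random.default_rng(0)
T, d = 10, 64
conv = rng.normal(size=(T, d))
attn = rng.normal(size=(T, d))
W = rng.normal(scale=0.1, size=(2 * d, d))  # would be learned during training
b = np.zeros(d)

fused, gate = gated_fusion(conv, attn, W, b)
print(fused.shape, float(gate.min()), float(gate.max()))
```

Because the gate lies strictly between 0 and 1, every fused value is a convex combination of the two branches, so a noisy branch can be down-weighted at a given time step without being discarded entirely.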

Overall, the experimental findings demonstrate that Translution is not only highly accurate but also generalizes better across diverse conditions, maintains high recall (minimizing false negatives), and handles real-world complexities more effectively than conventional ML/DL approaches. Its consistent performance across both test sets establishes it as a reliable, scalable, and privacy-compliant model for real-time occupancy detection in smart building environments.

Table 7 presents the computation time analysis for training and testing various machine learning and deep learning models, including our proposed Translution architecture. As expected, simpler models such as Random Forest and OCSVM exhibited minimal computation time—training in under 0.1 s and completing inference on both test sets in fractions of a second. These models are highly efficient, but they come with significant trade-offs in terms of detection accuracy and generalization, as demonstrated in prior evaluations. In contrast, deep learning models such as CNN and RNN required longer training times (83.61 s and 85.96 s, respectively) and showed increased inference latency, especially on the more complex Test Set 2. Translution, being the most complex architecture, required the longest training time at 222.19 s and exhibited the highest inference time: 2.22 s on Test Set 1 and 11.81 s on Test Set 2. This increase is attributed to its multi-scale convolutional layers, attention mechanisms, and dynamic gating modules, which collectively model both short-term and long-term dependencies in the input data. This trade-off between performance and computational cost is a critical consideration for real-world deployment, especially in diverse scenarios such as edge computing or large-scale smart building infrastructures.

For edge deployment, where a model needs to run directly on a resource-constrained device (e.g., an IoT gateway or a microcontroller), Translution’s current latency may be too high for real-time applications, and its memory footprint could be prohibitive. In such cases, the priority shifts from maximum accuracy to a balanced solution that offers high enough performance with minimal latency and power consumption. For large-scale deployments across many smart buildings, the cumulative computational demand and energy consumption for training and inference become significant. While Translution’s performance justifies its use in a central server environment, its scalability is a key challenge. The quadratic complexity of the attention mechanism and the parallel multi-scale convolutions contribute to this overhead.

4.8. Ablation Study

To validate the necessity and individual contributions of Translution’s core components, we conducted a comprehensive ablation study. We systematically evaluated four model configurations: the full Translution architecture and three ablated versions, each with one of the key components removed (dynamic gating, multi-scale convolution, and adaptive attention). All models were trained and evaluated under identical conditions on both Test Dataset 1 and Test Dataset 2. The results are summarized in Table 8.

The results in Table 8 demonstrate the critical role of each component in achieving Translution’s superior performance. When any of the three main components is removed, the model’s performance on both test sets, particularly in terms of the F1-score and AUROC, experiences a significant degradation.
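The four configurations described above can be enumerated as a simple leave-one-out grid. This is a minimal sketch with hypothetical names (`AblationConfig`, `ablation_grid`, and the three boolean flags); it shows only how the variants relate to one another, not how the authors built or trained their models.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical switches for the three components named in the text."""
    name: str
    use_gating: bool = True
    use_multiscale_conv: bool = True
    use_attention: bool = True


def ablation_grid() -> List[AblationConfig]:
    """Full model plus three variants, each with exactly one component removed."""
    full = AblationConfig(name="full")
    return [
        full,
        replace(full, name="no_gating", use_gating=False),
        replace(full, name="no_multiscale_conv", use_multiscale_conv=False),
        replace(full, name="no_attention", use_attention=False),
    ]


for cfg in ablation_grid():
    # Every variant would share the same data splits, seed, and training
    # schedule, so that differences are attributable to the removed component.
    print(cfg)
```

Keeping every other setting fixed across the grid is what allows a performance difference to be attributed to the single component that was switched off.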

Specifically, the removal of the dynamic gating mechanism resulted in a notable drop in performance, with the F1-score falling to 88.0% and 91.0% on Test 1 and Test 2, respectively. This degradation, particularly in recall, validates the importance of the gating mechanism in dynamically prioritizing relevant sensor signals and suppressing irrelevant noise, which is essential for robust detection in variable environments.
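As an illustration of how a gate can prioritize one feature stream over another, the sketch below blends two feature vectors with an element-wise sigmoid gate. This is a common gating form and is assumed here for illustration only; the names `gated_fusion`, `conv_feat`, `attn_feat`, and `gate_logits` are hypothetical, and the exact formulation used in Translution may differ.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gated_fusion(conv_feat: Sequence[float],
                 attn_feat: Sequence[float],
                 gate_logits: Sequence[float]) -> List[float]:
    """Blend two feature vectors element-wise: g * conv + (1 - g) * attn.

    In a trained model the gate logits would be produced from the input;
    here they are passed in directly (an assumption for illustration).
    """
    if not (len(conv_feat) == len(attn_feat) == len(gate_logits)):
        raise ValueError("all inputs must have the same length")
    fused = []
    for c, a, z in zip(conv_feat, attn_feat, gate_logits):
        g = sigmoid(z)
        fused.append(g * c + (1.0 - g) * a)
    return fused


# A logit of 0 weights both streams equally; a large positive logit
# passes the convolutional feature almost unchanged.
balanced = gated_fusion([1.0], [3.0], [0.0])
conv_dominant = gated_fusion([1.0], [3.0], [20.0])
```

Because the gate values lie between 0 and 1 and depend on the input, a noisy feature can be down-weighted for one sample and emphasized for another, which is the behavior the ablation result attributes to the gating mechanism.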

The ablation of the multi-scale convolution module led to the most substantial performance drop. The F1-score plummeted to 78.3% on Test 1 and 85.2% on Test 2. This finding empirically confirms the necessity of the convolutional component for capturing fine-grained local temporal patterns and feature interactions, which are crucial for detecting transient occupancy events. The model’s inability to learn these localized features effectively without the multi-scale convolution severely impacts its overall accuracy.

Finally, removing the adaptive attention mechanism also resulted in a significant performance reduction, with the F1-score decreasing to 90.1% and 90.3% on Test 1 and Test 2, respectively. Although this variant still outperforms the no-convolution model, the outcome underscores the vital role of the attention mechanism in modeling long-range temporal dependencies. Its absence limits the model’s ability to contextualize occupancy events across the entire input sequence, which is essential for detecting subtle and delayed changes in environmental data.

The ablation study provides strong empirical evidence that all three components—the multi-scale convolution, adaptive attention, and dynamic gating—are indispensable. Their synergistic integration is what enables the full Translution model to achieve state-of-the-art performance and robust generalization across diverse real-world occupancy scenarios.

While Translution demonstrates superior predictive accuracy and robustness, its advanced architecture results in significantly higher training and inference times compared to simpler baselines. This computational overhead is a critical consideration for practical deployment, particularly in resource-constrained edge devices or large-scale smart building infrastructures that require real-time responses. For such scenarios, future optimizations, such as model pruning, quantization, or knowledge distillation, will be essential to reduce model complexity and inference latency while preserving performance.
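Of the optimizations mentioned above, quantization is the simplest to illustrate. The following is a minimal sketch of symmetric per-tensor 8-bit weight quantization, written in plain Python for clarity. It is an assumed, generic scheme and not a procedure reported by the authors; the function names and example weights are hypothetical.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Map float weights to integers in [-127, 127] with a single scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.3, 1.0]  # hypothetical example values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is stored in 8 bits instead of 32, and the reconstruction error per weight is bounded by about half the scale. Whether the resulting accuracy loss is acceptable for occupancy detection would need to be measured empirically.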

5. Conclusions

In this study, we introduced Translution, a novel hybrid deep learning model for accurate and privacy-preserving occupancy detection in smart buildings. The proposed architecture integrates multi-scale convolutional layers to capture short-term temporal patterns, complemented by Transformer-based attention blocks that effectively model long-range dependencies in environmental sensor data. Additionally, dynamic feature fusion via adaptive gating allows Translution to prioritize relevant features and suppress noisy signals, improving robustness across diverse conditions.

We evaluated Translution using the publicly available UCI Occupancy Detection Dataset, which comprises real-world sensor readings from office environments. Extensive experiments demonstrated that Translution significantly outperforms conventional models, such as Random Forest, MLP, CNN, RNN, and One-Class SVM, across multiple performance metrics, including accuracy, F1-score, AUROC, precision, and recall. Notably, Translution achieved superior generalization on test datasets with unseen temporal conditions, validating its ability to adapt to dynamic occupancy patterns.

Beyond predictive performance, our model upholds strong privacy standards by exclusively relying on non-intrusive environmental features—such as temperature, humidity, light, and CO2—thereby avoiding the use of audio or video data that may compromise user privacy. This design aligns with regulatory frameworks, such as GDPR, making Translution a suitable solution for real-world deployments in privacy-sensitive smart environments.

Despite its strong performance, Translution’s architecture introduces significant computational complexity, particularly due to the layered attention and convolutional components. This can limit its scalability for real-time deployment on edge or resource-constrained devices. Additionally, the fixed sequence length design may reduce flexibility across varied sensor sampling rates.

In future work, we aim to optimize Translution for real-world use by exploring techniques such as model pruning to remove redundant connections and parameters, quantization to reduce the model’s memory footprint and accelerate inference, and knowledge distillation, where a smaller model is trained to mimic the behavior of the larger Translution architecture. These methods could allow for a significant reduction in model complexity and latency while preserving a substantial portion of Translution’s high performance, making it a more viable solution for diverse deployment scenarios. We also plan to evaluate its performance on larger, more diverse datasets and extend the framework to support variable-length sequences and online adaptation to dynamic building environments.