A Survey of Analog Computing for Domain-Specific Accelerators

Abstract

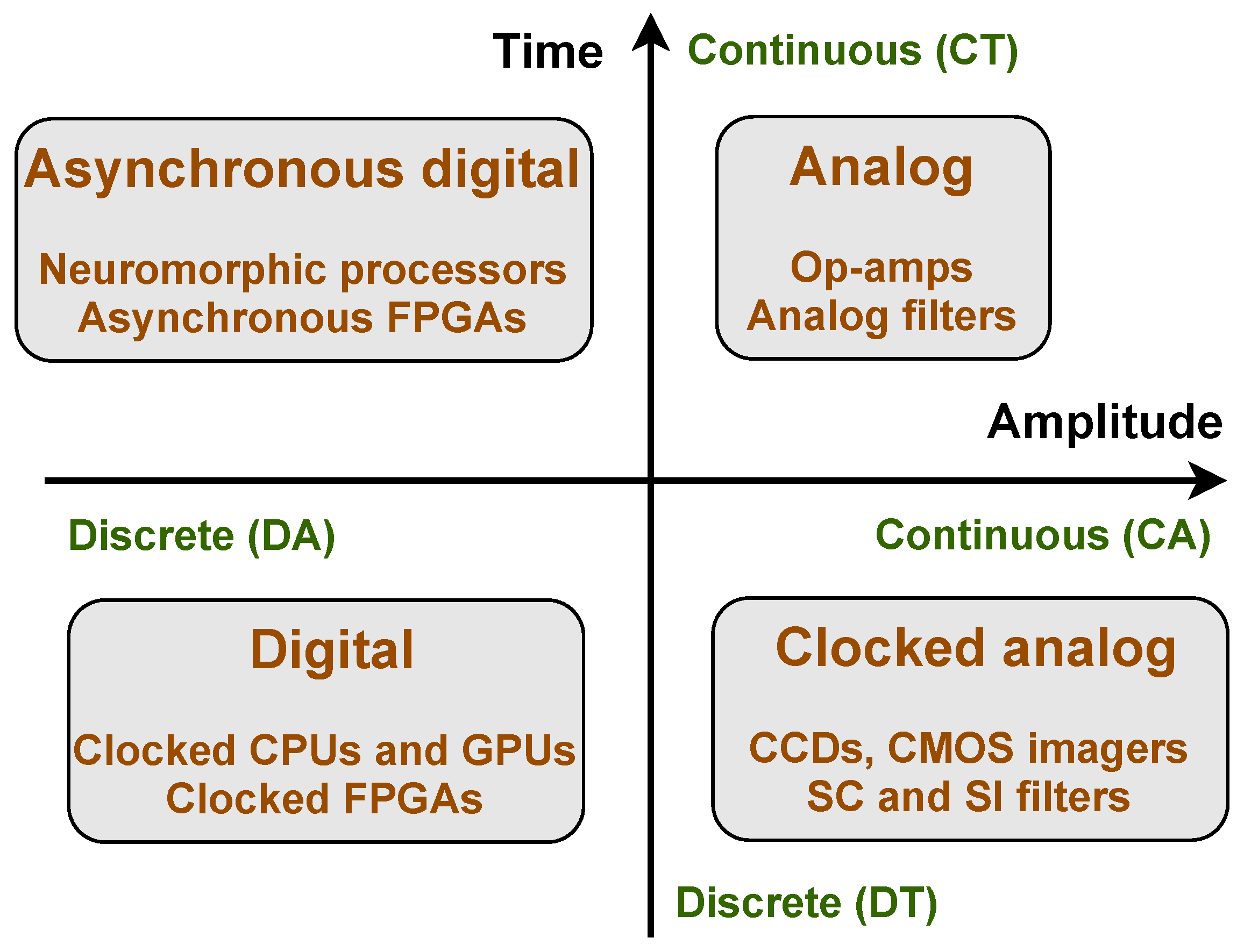

1. Introduction

2. In-Memory Computing

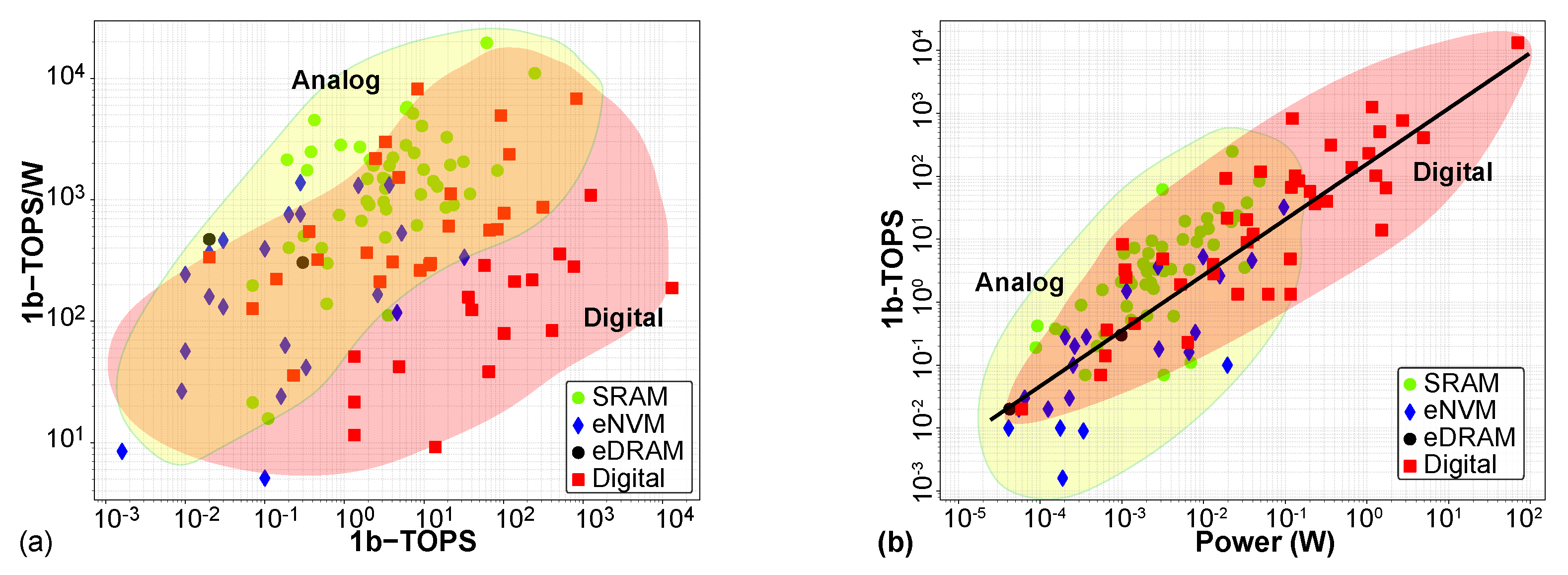

- Normalized efficiency: Reported bank-level energy efficiency is scaled by the arithmetic precision (the product of input and weight bit precisions) to yield normalized efficiency (in units of 1b-TOPS/W).

- ADC analysis: Column ADC parameters are extracted to determine the number of bits processed per read cycle, input information content, and row/column parallelism.

- Throughput: Normalized throughput (in units of 1b-TOPS) is computed from the ADC and energy parameters and checked against the reported power (since 1b-TOPS = 1b-TOPS/W × W).

- Density: Compute density (in units of 1b-TOPS/mm²) is verified by dividing the bottom-up throughput by the layout area (estimated from the die photo if needed).

- Raw energy-efficiency numbers (in TOPS/W, i.e., before precision normalization) are reported as well, to avoid ambiguity.

- Trend-line labels (from the figure): overall trend, 1b-TOPS = 215; analog IMC trend, 1b-TOPS = 500; digital accelerator trend, 1b-TOPS = 170.
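The normalization and verification steps above reduce to simple arithmetic. The sketch below is a minimal, hypothetical illustration and not the benchmarking code used in the surveyed work. The helper names (`ImcBank`, `normalized_efficiency`, `bottom_up_throughput`, `compute_density`, `implied_power_w`) are invented for this example. It assumes that precision normalization multiplies by the product of input and weight bit-widths, and that one multiply-accumulate at b_in × b_w precision counts as b_in·b_w one-bit MACs of 2 operations each.

```python
"""Hypothetical sketch of precision-normalized IMC benchmarking metrics."""
from dataclasses import dataclass


@dataclass
class ImcBank:
    """Illustrative description of one IMC bank; every field is an assumption."""
    rows: int               # rows summed per column read (dot-product length)
    cols: int               # columns read in parallel (one ADC conversion each)
    in_bits_per_cycle: int  # input bits applied per read cycle (1 = bit-serial)
    w_bits_per_cell: int    # weight bits stored per cell
    cycle_time_s: float     # duration of one read + ADC conversion, in seconds
    area_mm2: float         # bank layout area, in mm^2


def normalized_efficiency(ee_tops_per_w: float, bx: int, bw: int) -> float:
    """Scale reported TOPS/W by input (bx) and weight (bw) precision -> 1b-TOPS/W."""
    return ee_tops_per_w * bx * bw


def bottom_up_throughput(bank: ImcBank) -> float:
    """1b-TOPS from array parallelism; each 1b x 1b MAC counts as 2 ops."""
    ops_per_cycle = (2 * bank.rows * bank.cols
                     * bank.in_bits_per_cycle * bank.w_bits_per_cell)
    return ops_per_cycle / bank.cycle_time_s / 1e12


def compute_density(throughput_1b_tops: float, area_mm2: float) -> float:
    """Compute density in 1b-TOPS/mm^2."""
    return throughput_1b_tops / area_mm2


def implied_power_w(throughput_1b_tops: float, ee_1b_tops_per_w: float) -> float:
    """Power implied by throughput and efficiency, to compare with the reported power."""
    return throughput_1b_tops / ee_1b_tops_per_w


if __name__ == "__main__":
    # Example numbers are made up for illustration only.
    bank = ImcBank(rows=256, cols=64, in_bits_per_cycle=1, w_bits_per_cell=1,
                   cycle_time_s=10e-9, area_mm2=0.5)
    ee_1b = normalized_efficiency(ee_tops_per_w=10.0, bx=4, bw=4)
    tput = bottom_up_throughput(bank)
    print(f"EE: {ee_1b:.1f} 1b-TOPS/W")
    print(f"Throughput: {tput:.3f} 1b-TOPS")
    print(f"Density: {compute_density(tput, bank.area_mm2):.3f} 1b-TOPS/mm^2")
    print(f"Implied power: {implied_power_w(tput, ee_1b) * 1e3:.2f} mW")
```

The implied-power check is one way to reconcile a bottom-up throughput estimate with a paper's reported power. A large mismatch would suggest that an ADC or array parameter was misread from the publication.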

3. Ising Machines

3.1. Applications to NP-Hard Problems

3.2. Probabilistic Ising Machines (PIMs)

3.3. Discussion

4. Analog Solvers

4.1. PDE Solvers

4.2. Linear Algebra Solvers

4.3. Low-Complexity Beamforming

4.4. Antenna Array Signal Processing

4.5. Programmable Wave-Based Metastructures

5. Neuromorphic Processors

6. Machine Learning

7. Other Applications of Analog Computing

7.1. Quantum-Inspired Systems

7.2. Stochastic Simulations

7.3. Statistical Inference

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lundstrom, M.S.; Alam, M.A. Moore’s law: The journey ahead. Science 2022, 378, 722–723. [Google Scholar] [CrossRef]

- Lundberg, K.H. The history of analog computing: Introduction to the special section. IEEE Control Syst. Mag. 2005, 25, 22–25. [Google Scholar] [CrossRef]

- Small, J.S. General-purpose electronic analog computing: 1945–1965. IEEE Ann. Hist. Comput. 1993, 15, 8–18. [Google Scholar] [CrossRef]

- Ulmann, B. Analog Computing, 2nd ed.; Walter de Gruyter GmbH & Co KG: Berlin, Germany, 2022; 460p. [Google Scholar]

- Tsividis, Y. Not your Father’s analog computer. IEEE Spectr. 2018, 55, 38–43. [Google Scholar] [CrossRef]

- Köppel, S.; Ulmann, B.; Heimann, L.; Killat, D. Using analog computers in today’s largest computational challenges. Adv. Radio Sci. 2021, 19, 105–116. [Google Scholar] [CrossRef]

- Ulmann, B. Beyond zeros and ones – analog computing in the twenty-first century. Int. J. Parallel. Emergent. Distrib. Syst. 2024, 39, 139–151. [Google Scholar] [CrossRef]

- MacLennan, B.J. Analog computation. In Unconventional Computing; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–33. [Google Scholar] [CrossRef]

- Sarpeshkar, R. Ultra Low Power Bioelectronics: Fundamentals, Biomedical Applications, and Bio-Inspired Systems; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar]

- Mandal, S.; Liang, J.; Malavipathirana, H.; Udayanga, N.; Silva, H.; Hariharan, S.; Madanayake, A. Integrated Analog Computers as Domain-Specific Accelerators: A Tutorial Review. In Proceedings of the 2024 IEEE 67th International Midwest Symposium on Circuits and Systems (MWSCAS), Springfield, MA, USA, 11–14 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 875–881. [Google Scholar] [CrossRef]

- Hasler, J.; Black, E. Physical Computing: Unifying Real Number Computation to Enable Energy Efficient Computing. J. Low Power Electron. Appl. 2021, 11, 14. [Google Scholar] [CrossRef]

- Mead, C. Neuromorphic electronic systems. Proc. IEEE 1990, 78, 1629–1636. [Google Scholar] [CrossRef]

- Sarpeshkar, R. Universal Principles for Ultra Low Power and Energy Efficient Design. IEEE Trans. Circuits Syst. II Express Briefs 2012, 59, 193–198. [Google Scholar] [CrossRef]

- Kinget, P. Device mismatch and tradeoffs in the design of analog circuits. IEEE J. Solid-State Circuits 2005, 40, 1212–1224. [Google Scholar] [CrossRef]

- Li, Y.; Ni, X.; Achour, S.; Murmann, B. Open-ALOE: An Analog Layout Automation Flow for the Open-Source Ecosystem. In Proceedings of the 2025 26th International Symposium on Quality Electronic Design (ISQED), San Francisco, CA, USA, 23–25 April 2025; pp. 1–6. [Google Scholar] [CrossRef]

- Chen, H.; Liu, M.; Xu, B.; Zhu, K.; Tang, X.; Li, S.; Lin, Y.; Sun, N.; Pan, D.Z. MAGICAL: An open-source fully automated analog IC layout system from netlist to GDSII. IEEE Design Test 2020, 38, 19–26. [Google Scholar] [CrossRef]

- Kunal, K.; Madhusudan, M.; Sharma, A.K.; Xu, W.; Burns, S.M.; Harjani, R.; Hu, J.; Kirkpatrick, D.A.; Sapatnekar, S.S. ALIGN: Open-Source Analog Layout Automation from the Ground Up. In Proceedings of the 56th Annual Design Automation Conference (DAC), Las Vegas, NV, USA, 2–6 June 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Kunal, K.; Dhar, T.; Madhusudan, M.; Poojary, J.; Sharma, A.K.; Xu, W.; Burns, S.M.; Hu, J.; Harjani, R.; Sapatnekar, S.S. GANA: Graph Convolutional Network Based Automated Netlist Annotation for Analog Circuits. In Proceedings of the 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2020; pp. 55–60. [Google Scholar] [CrossRef]

- Wei, P.H.; Murmann, B. Analog and mixed-signal layout automation using digital place-and-route tools. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2021, 29, 1838–1849. [Google Scholar] [CrossRef]

- Liu, B.; Zhang, H.; Gao, X.; Kong, Z.; Tang, X.; Lin, Y.; Wang, R.; Huang, R. LayoutCopilot: An LLM-Powered Multiagent Collaborative Framework for Interactive Analog Layout Design. IEEE Trans.-Comput.-Aided Des. Integr. Circuits Syst. 2025, 44, 3126–3139. [Google Scholar] [CrossRef]

- Zadeh, D.N.; Elamien, M.B. Generative AI for analog integrated circuit design: Methodologies and applications. IEEE Access 2025, 13, 58043–58059. [Google Scholar] [CrossRef]

- Chen, P.H.; Lin, Y.S.; Lee, W.C.; Leu, T.Y.; Hsu, P.H.; Dissanayake, A.; Oh, S.; Chiu, C.S. MenTeR: A fully-automated Multi-agenT workflow for end-to-end RF/Analog Circuits Netlist Design. arXiv 2025, arXiv:2505.22990. [Google Scholar]

- Shapero, S.; Hasler, P. Mismatch characterization and calibration for accurate and automated analog design. IEEE Trans. Circuits Syst. I Regul. Pap. 2012, 60, 548–556. [Google Scholar] [CrossRef]

- Chakrabartty, S.; Shaga, R.K.; Aono, K. Noise-shaping gradient descent-based online adaptation algorithms for digital calibration of analog circuits. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 554–565. [Google Scholar] [CrossRef]

- Rumberg, B.; Graham, D.W.; Kulathumani, V.; Fernandez, R. Hibernets: Energy-Efficient Sensor Networks Using Analog Signal Processing. IEEE J. Emerg. Sel. Top. Circuits Syst. 2011, 1, 321–334. [Google Scholar] [CrossRef]

- Fried, D. Analog sample-data filters. IEEE J. Solid-State Circuits 1972, 7, 302–304. [Google Scholar] [CrossRef]

- Caves, J.; Rosenbaum, S.; Copeland, M.; Rahim, C. Sampled analog filtering using switched capacitors as resistor equivalents. IEEE J. Solid-State Circuits 1977, 12, 592–599. [Google Scholar] [CrossRef]

- Gilbert, B. Translinear circuits: An historical overview. Analog Integr. Circuits Signal Process. 1996, 9, 95–118. [Google Scholar] [CrossRef]

- D’Angelo, R.J.; Sonkusale, S.R. A Time-Mode Translinear Principle for Nonlinear Analog Computation. IEEE Trans. Circuits Syst. I Regul. Pap. 2015, 62, 2187–2195. [Google Scholar] [CrossRef]

- Zhang, Y.; Mirchandani, N.; Abdelfattah, S.; Onabajo, M.; Shrivastava, A. An Ultra-Low Power RSSI Amplifier for EEG Feature Extraction to Detect Seizures. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 329–333. [Google Scholar] [CrossRef]

- Mirchandani, N.; Zhang, Y.; Abdelfattah, S.; Onabajo, M.; Shrivastava, A. Modeling and Simulation of Circuit-Level Nonidealities for an Analog Computing Design Approach With Application to EEG Feature Extraction. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2023, 42, 229–242. [Google Scholar] [CrossRef]

- Zhang, Y.; Mirchandani, N.; Onabajo, M.; Shrivastava, A. RSSI Amplifier Design for a Feature Extraction Technique to Detect Seizures with Analog Computing. In Proceedings of the 2020 IEEE International Symposium on Circuits and Systems (ISCAS), Seville, Spain, 12–14 October 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Safari, M.M.; Pourrostam, J.; Tazehkand, B.M. Innovative Analogue Processing-Based Approach for Power-Efficient Wireless Transceivers. IEEE Access 2024, 12, 130273–130291. [Google Scholar] [CrossRef]

- Safari, M.M.; Pourrostam, J.; Mousavi, S.H. MIMO Transceiver with Ultra-Power-Efficient Analog-Based Processing Toward Tbps Wireless Communication. In Proceedings of the 2024 11th International Symposium on Telecommunications (IST), Tehran, Iran, 9–10 October 2024; pp. 674–679. [Google Scholar]

- Verma, N.; Jia, H.; Valavi, H.; Tang, Y.; Ozatay, M.; Chen, L.Y.; Zhang, B.; Deaville, P. In-Memory Computing: Advances and Prospects. IEEE Solid-State Circuits Mag. 2019, 11, 43–55. [Google Scholar] [CrossRef]

- He, Y.; Hu, X.; Jia, H.; Seo, J.S. SRAM-and eDRAM-based compute-in-memory designs, accelerators, and evaluation frameworks: Macro-level and system-level optimization andevaluation. IEEE Solid-State Circuits Mag. 2025, 17, 49–60. [Google Scholar] [CrossRef]

- Conti, F.; Garofalo, A.; Rossi, D.; Tagliavini, G.; Benini, L. Open source hetero-geneous SoCs for artificial intelligence: The PULP platform experience. IEEE Solid-State Circuits Mag. 2025, 17, 49–60. [Google Scholar] [CrossRef]

- Hasler, J. Energy-Efficient Programable Analog Computing: Analog computing in a standard CMOS process. IEEE Solid-State Circuits Mag. 2024, 16, 32–40. [Google Scholar] [CrossRef]

- Hall, T.S.; Twigg, C.M.; Gray, J.D.; Hasler, P.; Anderson, D.V. Large-scale field-programmable analog arrays for analog signal processing. IEEE Trans. Circuits Syst. I Regul. Pap. 2005, 52, 2298–2307. [Google Scholar] [CrossRef]

- George, S.; Kim, S.; Shah, S.; Hasler, J.; Collins, M.; Adil, F.; Wunderlich, R.; Nease, S.; Ramakrishnan, S. A Programmable and Configurable Mixed-Mode FPAA SoC. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2016, 24, 2253–2261. [Google Scholar] [CrossRef]

- Hasler, J. Large-Scale Field-Programmable Analog Arrays. Proc. IEEE 2020, 108, 1283–1302. [Google Scholar] [CrossRef]

- Li, Y.; Song, W.; Wang, Z.; Jiang, H.; Yan, P.; Lin, P.; Li, C.; Rao, M.; Barnell, M.; Wu, Q.; et al. Memristive field-programmable analog arrays for analog computing. Adv. Mater. 2023, 35, 2206648. [Google Scholar] [CrossRef] [PubMed]

- Chawla, R.; Bandyopadhyay, A.; Srinivasan, V.; Hasler, P. A 531 nW/MHz, 128×32 current-mode programmable analog vector-matrix multiplier with over two decades of linearity. In Proceedings of the IEEE Custom Integrated Circuits Conference, Orlando, FL, USA, 6 October 2004; pp. 651–654. [Google Scholar] [CrossRef]

- Schlottmann, C.R.; Hasler, P.E. A Highly Dense, Low Power, Programmable Analog Vector-Matrix Multiplier: The FPAA Implementation. IEEE J. Emerg. Sel. Top. Circuits Syst. 2011, 1, 403–411. [Google Scholar] [CrossRef]

- Mathews, P.O.; Raj Ayyappan, P.; Ige, A.; Bhattacharyya, S.; Yang, L.; Hasler, J.O. A 65 nm CMOS Analog Programmable Standard Cell Library for Mixed-Signal Computing. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2024, 32, 1830–1840. [Google Scholar] [CrossRef]

- Hasler, J.; Ayyappan, P.R.; Ige, A.; Mathews, P. A 130nm CMOS programmable analog standard cell library. IEEE Trans. Circuits Syst. I Regul. Pap. 2024, 71, 2497–2510. [Google Scholar] [CrossRef]

- Miyashita, D.; Kousai, S.; Suzuki, T.; Deguchi, J. A Neuromorphic Chip Optimized for Deep Learning and CMOS Technology With Time-Domain Analog and Digital Mixed-Signal Processing. IEEE J. Solid-State Circuits 2017, 52, 2679–2689. [Google Scholar] [CrossRef]

- Thakur, C.S.; Wang, R.; Hamilton, T.J.; Etienne-Cummings, R.; Tapson, J.; van Schaik, A. An Analogue Neuromorphic Co-Processor That Utilizes Device Mismatch for Learning Applications. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 65, 1174–1184. [Google Scholar] [CrossRef]

- Guo, N.; Huang, Y.; Mai, T.; Patil, S.; Cao, C.; Seok, M.; Sethumadhavan, S.; Tsividis, Y. Energy-Efficient Hybrid Analog/Digital Approximate Computation in Continuous Time. IEEE J. Solid-State Circuits 2016, 51, 1514–1524. [Google Scholar] [CrossRef]

- Skrzyniarz, S.; Fick, L.; Shah, J.; Kim, Y.; Sylvester, D.; Blaauw, D.; Fick, D.; Henry, M.B. A 36.8 2b-TOPS/W self-calibrating GPS accelerator implemented using analog calculation in 65nm LP CMOS. In Proceedings of the IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 31 January–4 February 2016; pp. 420–422. [Google Scholar] [CrossRef]

- Fick, L.; Skrzyniarz, S.; Parikh, M.; Henry, M.B.; Fick, D. Analog Matrix Processor for Edge AI Real-Time Video Analytics. In Proceedings of the IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 20–26 February 2022; Volume 65, pp. 260–262. [Google Scholar] [CrossRef]

- Fick, D. Analog Compute-in-Memory For AI Edge Inference. In Proceedings of the 2022 International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 3–7 December 2022; pp. 21.8.1–21.8.4. [Google Scholar] [CrossRef]

- Xue, Y. Recent development in analog computation: A brief overview. Analog. Integr. Circuits Signal Process. 2016, 86, 181–187. [Google Scholar] [CrossRef]

- Zhu, Z.; Feng, L. A Review of Sub-μW CMOS Analog Computing Circuits for Instant 1-Dimensional Audio Signal Processing in Always-On Edge Devices. IEEE Trans. Circuits Syst. I Regul. Pap. 2024, 71, 4009–4018. [Google Scholar] [CrossRef]

- Safari, M.M.; Pourrostam, J. The role of analog signal processing in upcoming telecommunication systems: Concept, challenges, and outlook. Signal Process. 2024, 220, 109446. [Google Scholar] [CrossRef]

- Sahay, S.; Bavandpour, M.; Mahmoodi, M.R.; Strukov, D. Energy-Efficient Moderate Precision Time-Domain Mixed-Signal Vector-by-Matrix Multiplier Exploiting 1T-1R Arrays. IEEE J. Explor. Solid-State Comput. Devices Circuits 2020, 6, 18–26. [Google Scholar] [CrossRef]

- Spear, M.; Kim, J.E.; Bennett, C.H.; Agarwal, S.; Marinella, M.J.; Xiao, T.P. The Impact of Analog-to-Digital Converter Architecture and Variability on Analog Neural Network Accuracy. IEEE J. Explor. Solid-State Comput. Devices Circuits 2023, 9, 176–184. [Google Scholar] [CrossRef]

- Laleni, N.; Müller, F.; Cuñarro, G.; Kämpfe, T.; Jang, T. A High-Efficiency Charge-Domain Compute-in-Memory 1F1C Macro Using 2-bit FeFET Cells for DNN Processing. IEEE J. Explor. Solid-State Comput. Devices Circuits 2024, 10, 153–160. [Google Scholar] [CrossRef]

- Jin, J.; Gao, S.; Lu, C.; Qiu, X.; Zhao, Y. Device Nonideality-Aware Compute-in-Memory Array Architecting: Direct Voltage Sensing, I–V Symmetric Bitcell, and Padding Array. IEEE J. Explor. Solid-State Comput. Devices Circuits 2025, 11, 19–24. [Google Scholar] [CrossRef]

- Navidi, M.M.; Graham, D.W.; Rumberg, B. Below-ground injection of floating-gate transistors for programmable analog circuits. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Dilello, A.; Andryzcik, S.; Kelly, B.M.; Rumberg, B.; Graham, D.W. Temperature compensation of floating-gate transistors in field-programmable analog arrays. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Hasler, J.; Basu, A. Historical perspective and opportunity for computing in memory using floating-gate and resistive non-volatile computing including neuromorphic computing. Neuromorphic. Comput. Eng. 2025, 5, 012001. [Google Scholar] [CrossRef]

- Huang, X.; Liu, C.; Tang, Z.; Zeng, S.; Wang, S.; Zhou, P. An ultrafast bipolar flash memory for self-activated in-memory computing. Nat. Nanotechnol. 2023, 18, 486–492. [Google Scholar] [CrossRef] [PubMed]

- Seo, J.S.; Saikia, J.; Meng, J.; He, W.; Suh, H.S.; Anupreetham; Liao, Y.; Hasssan, A.; Yeo, I. Digital Versus Analog Artificial Intelligence Accelerators: Advances, trends, and emerging designs. IEEE Solid-State Circuits Mag. 2022, 14, 65–79. [Google Scholar] [CrossRef]

- Sebastian, A.; Le Gallo, M.; Khaddam-Aljameh, R.; Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 2020, 15, 529–544. [Google Scholar] [CrossRef]

- Salehi, S.; Fan, D.; Demara, R.F. Survey of STT-MRAM cell design strategies: Taxonomy and sense amplifier tradeoffs for resiliency. ACM J. Emerg. Technol. Comput. Syst. (JETC) 2017, 13, 1–16. [Google Scholar] [CrossRef]

- Na, T.; Kang, S.H.; Jung, S.O. STT-MRAM Sensing: A Review. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 12–18. [Google Scholar] [CrossRef]

- Yusuf, A.; Adegbija, T.; Gajaria, D. Domain-Specific STT-MRAM-Based In-Memory Computing: A Survey. IEEE Access 2024, 12, 28036–28056. [Google Scholar] [CrossRef]

- Antolini, A.; Paolino, C.; Zavalloni, F.; Lico, A.; Scarselli, E.F.; Mangia, M.; Pareschi, F.; Setti, G.; Rovatti, R.; Torres, M.L.; et al. Combined HW/SW Drift and Variability Mitigation for PCM-Based Analog In-Memory Computing for Neural Network Applications. IEEE J. Emerg. Sel. Top. Circuits Syst. 2023, 13, 395–407. [Google Scholar] [CrossRef]

- Murmann, B. Mixed-Signal Computing for Deep Neural Network Inference. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2021, 29, 3–13. [Google Scholar] [CrossRef]

- Killat, D.; Koeppel, S.; Ulmann, B.; Wetzel, L. Solving partial differential equations with Monte Carlo/random walk on an analog-digital hybrid computer. arXiv 2023, arXiv:2309.05598. [Google Scholar] [CrossRef]

- Verhelst, M.; Shi, M.; Mei, L. ML Processors Are Going Multi-Core: A performance dream or a scheduling nightmare? IEEE Solid-State Circuits Mag. 2022, 14, 18–27. [Google Scholar] [CrossRef]

- Shanbhag, N.R.; Roy, S.K. Benchmarking In-Memory Computing Architectures. IEEE Open J. Solid-State Circuits Soc. 2022, 2, 288–300. [Google Scholar] [CrossRef]

- Shanbhag, N.R.; Roy, S.K. Comprehending In-memory Computing Trends via Proper Benchmarking. In Proceedings of the IEEE Custom Integrated Circuits Conference (CICC), Newport Beach, CA, USA, 24–27 April 2022; pp. 1–7. [Google Scholar] [CrossRef]

- Shanbhag, N.R.; Roy, S.K. IMC-Benchmarking GitHub Repository. 2024. Available online: https://github.com/UIUC-IMC/UIUC-IMC-Benchmarking (accessed on 6 July 2025).

- Peierls, R. On Ising’s model of ferromagnetism. Math. Proc. Camb. Philos. Soc. 1936, 32, 477–481. [Google Scholar] [CrossRef]

- Dutta, S.; Khanna, A.; Assoa, A.S.; Paik, H.; Schlom, D.G.; Toroczkai, Z.; Raychowdhury, A.; Datta, S. An Ising Hamiltonian solver based on coupled stochastic phase-transition nano-oscillators. Nat. Electron. 2021, 4, 502–512. [Google Scholar] [CrossRef]

- Bashar, M.K.; Mallick, A.; Truesdell, D.S.; Calhoun, B.H.; Joshi, S.; Shukla, N. Experimental Demonstration of a Reconfigurable Coupled Oscillator Platform to Solve the Max-Cut Problem. IEEE J. Explor. Solid-State Comput. Devices Circuits 2020, 6, 116–121. [Google Scholar] [CrossRef]

- Lee, Y.W.; Kim, S.J.; Kim, J.; Kim, S.; Park, J.; Jeong, Y.; Hwang, G.W.; Park, S.; Park, B.H.; Lee, S. Demonstration of an energy-efficient Ising solver composed of Ovonic threshold switch (OTS)-based nano-oscillators (OTSNOs). Nano Converg. 2024, 11, 20. [Google Scholar] [CrossRef]

- Böhm, F.; Vaerenbergh, T.V.; Verschaffelt, G.; Van der Sande, G. Order-of-magnitude differences in computational performance of analog Ising machines induced by the choice of nonlinearity. Commun. Phys. 2021, 4, 149. [Google Scholar] [CrossRef]

- Sutton, B.; Faria, R.; Ghantasala, L.A.; Jaiswal, R.; Camsari, K.Y.; Datta, S. Autonomous Probabilistic Coprocessing With Petaflips per Second. IEEE Access 2020, 8, 157238–157252. [Google Scholar] [CrossRef]

- Wang, T.; Wu, L.; Nobel, P.; Roychowdhury, J. Solving combinatorial optimisation problems using oscillator based Ising machines. Nat. Comput. 2021, 20, 287–306. [Google Scholar] [CrossRef]

- Chou, J.; Bramhavar, S.; Ghosh, S.; Herzog, W. Analog Coupled Oscillator Based Weighted Ising Machine. Sci. Rep. 2019, 9, 14786. [Google Scholar] [CrossRef] [PubMed]

- Kaisar, T.; Habermehl, S.T.; Casilli, N.; Mandal, S.; Rais-Zadeh, M.; Roukes, M.L.; Cassella, C.; Feng, P.X.L. Synchronization Dynamics of MEMS Oscillators with Sub-harmonic Injection Locking (SHIL) for Emulating Artificial Ising Spins. IEEE J. Microelectromechanical. Syst. 2025; in press. [Google Scholar]

- Lu, J.; Young, S.; Arel, I.; Holleman, J. A 1 TOPS/W Analog Deep Machine-Learning Engine With Floating-Gate Storage in 0.13 μm CMOS. IEEE J. Solid-State Circuits 2015, 50, 270–281. [Google Scholar] [CrossRef]

- Tanamoto, T.; Higashi, Y.; Deguchi, J. Calculation of a capacitively-coupled floating gate array toward quantum annealing machine. J. Appl. Phys. 2018, 124, 154301. [Google Scholar] [CrossRef]

- Bae, J.; Oh, W.; Koo, J.; Kim, B. CTLE-Ising: A 1440-Spin Continuous-Time Latch-Based isling Machine with One-Shot Fully-Parallel Spin Updates Featuring Equalization of Spin States. In Proceedings of the 2023 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 19–23 February 2023; pp. 142–144. [Google Scholar] [CrossRef]

- Xie, S.; Raman, S.R.S.; Ni, C.; Wang, M.; Yang, M.; Kulkarni, J.P. Ising-CIM: A Reconfigurable and Scalable Compute Within Memory Analog Ising Accelerator for Solving Combinatorial Optimization Problems. IEEE J. Solid-State Circuits 2022, 57, 3453–3465. [Google Scholar] [CrossRef]

- Aonishi, T.; Nagasawa, T.; Koizumi, T.; Gunathilaka, M.D.S.H.; Mimura, K.; Okada, M.; Kako, S.; Yamamoto, Y. Highly Versatile FPGA-Implemented Cyber Coherent Ising Machine. IEEE Access 2024, 12, 175843–175865. [Google Scholar] [CrossRef]

- Sajeeb, M.; Aadit, N.A.; Wu, T.; Smith, C.; Chinmay, D.; Raut, A.; Camsari, K.Y.; Delacour, C.; Srimani, T. Scalable Connectivity for Ising Machines: Dense to Sparse. arXiv 2025, arXiv:2503.01177. [Google Scholar] [CrossRef]

- Sikhakollu, V.P.S.; Sreedhara, S.; Manohar, R.; Mishchenko, A.; Roychowdhury, J. High Quality Circuit-Based 3-SAT Mappings for Oscillator Ising Machines. In Proceedings of the International Conference on Unconventional Computation and Natural Computation, Pohang, South Korea, 17–21 June 2024; Springer: Cham, Switzerland, 2024; pp. 269–285. [Google Scholar] [CrossRef]

- Si, J.; Yang, S.; Cen, Y.; Chen, J.; Huang, Y.; Yao, Z.; Kim, D.J.; Cai, K.; Yoo, J.; Fong, X.; et al. Energy-efficient superparamagnetic Ising machine and its application to traveling salesman problems. Nat. Commun. 2024, 15, 3457. [Google Scholar] [CrossRef]

- Lechner, W.; Hauke, P.; Zoller, P. A quantum annealing architecture with all-to-all connectivity from local interactions. Sci. Adv. 2015, 1, e1500838. [Google Scholar] [CrossRef]

- Razmkhah, S.; Huang, J.Y.; Kamal, M.; Pedram, M. SAIM: Scalable Analog Ising Machine for Solving Quadratic Binary Optimization Problems. arXiv 2024. [Google Scholar] [CrossRef]

- Aadit, N.A.; Grimaldi, A.; Carpentieri, M.; Theogarajan, L.; Martinis, J.M.; Finocchio, G.; Camsari, K.Y. Massively parallel probabilistic computing with sparse Ising machines. Nat. Electron. 2022, 5, 460–468. [Google Scholar] [CrossRef]

- Chowdhury, S.; Çamsari, K.Y.; Datta, S. Emulating Quantum Circuits With Generalized Ising Machines. IEEE Access 2023, 11, 116944–116955. [Google Scholar] [CrossRef]

- Nishimori, H.; Tsuda, J.; Knysh, S. Comparative study of the performance of quantum annealing and simulated annealing. Phys. Rev. E 2015, 91, 012104. [Google Scholar] [CrossRef]

- Raimondo, E.; Garzón, E.; Shao, Y.; Grimaldi, A.; Chiappini, S.; Tomasello, R.; Davila-Melendez, N.; Katine, J.A.; Carpentieri, M.; Chiappini, M.; et al. High-performance and reliable probabilistic Ising machine based on simulated quantum annealing. arXiv 2025, arXiv:2503.13015. [Google Scholar]

- Zhang, T.; Tao, Q.; Liu, B.; Grimaldi, A.; Raimondo, E.; Jiménez, M.; Avedillo, M.J.; Nuñez, J.; Linares-Barranco, B.; Serrano-Gotarredona, T.; et al. A Review of Ising Machines Implemented in Conventional and Emerging Technologies. IEEE Trans. Nanotechnol. 2024, 23, 704–717. [Google Scholar] [CrossRef]

- Udayanga, N.; Madanayake, A.; Hariharan, S.I.; Liang, J.; Mandal, S.; Belostotski, L.; Bruton, L.T. A Radio Frequency Analog Computer for Computational Electromagnetics. IEEE J. Solid-State Circuits 2021, 56, 440–454. [Google Scholar] [CrossRef]

- Liang, J.; Udayanga, N.; Madanayake, A.; Hariharan, S.I.; Mandal, S. An Offset-Cancelling Discrete-Time Analog Computer for Solving 1-D Wave Equations. IEEE J. Solid-State Circuits 2021, 56, 2881–2894. [Google Scholar] [CrossRef]

- Malavipathirana, H.; Hariharan, S.I.; Udayanga, N.; Mandal, S.; Madanayake, A. A Fast and Fully Parallel Analog CMOS Solver for Nonlinear PDEs. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 68, 3363–3376. [Google Scholar] [CrossRef]

- Kimovski, D.; Saurabh, N.; Jansen, M.; Aral, A.; Al-Dulaimy, A.; Bondi, A.B.; Galletta, A.; Papadopoulos, A.V.; Iosup, A.; Prodan, R. Beyond Von Neumann in the Computing Continuum: Architectures, Applications, and Future Directions. IEEE Internet Comput. 2024, 28, 6–16. [Google Scholar] [CrossRef]

- Hughes, T.W.; Williamson, I.A.; Minkov, M.; Fan, S. Wave physics as an analog recurrent neural network. Sci. Adv. 2019, 5, eaay6946. [Google Scholar] [CrossRef]

- Afshari, E.; Bhat, H.S.; Hajimiri, A. Ultrafast analog Fourier transform using 2-D LC lattice. IEEE Trans. Circuits Syst. I Regul. Pap. 2008, 55, 2332–2343. [Google Scholar] [CrossRef]

- Tousi, Y.M.; Afshari, E. 2-D Electrical Interferometer: A Novel High-Speed Quantizer. IEEE Trans. Microw. Theory Tech. 2010, 58, 2549–2561. [Google Scholar] [CrossRef]

- Mandal, S.; Zhak, S.M.; Sarpeshkar, R. A Bio-Inspired Active Radio-Frequency Silicon Cochlea. IEEE J. Solid-State Circuits 2009, 44, 1814–1828. [Google Scholar] [CrossRef]

- Mandal, S.; Sarpeshkar, R. A Bio-Inspired Cochlear Heterodyning Architecture for an RF Fovea. IEEE Trans. Circuits Syst. I Regul. Pap. 2011, 58, 1647–1660. [Google Scholar] [CrossRef]

- Wang, Y.; Mendis, G.J.; Wei-Kocsis, J.; Madanayake, A.; Mandal, S. A 1.0-8.3 GHz Cochlea-Based Real-Time Spectrum Analyzer With Δ-Σ-Modulated Digital Outputs. IEEE Trans. Circuits Syst. I Regul. Pap. 2020, 67, 2934–2947. [Google Scholar] [CrossRef]

- Wang, Y.; Mandal, S. Bio-inspired radio-frequency source localization based on cochlear cross-correlograms. Front. Neurosci. 2021, 15, 623316. [Google Scholar] [CrossRef]

- Uy, R.F.; Bui, V.P. Solving ordinary and partial differential equations using an analog computing system based on ultrasonic metasurfaces. Sci. Rep. 2023, 13, 13471. [Google Scholar] [CrossRef]

- Huang, Y.; Guo, N.; Seok, M.; Tsividis, Y.; Mandli, K.; Sethumadhavan, S. Hybrid analog-digital solution of nonlinear partial differential equations. In Proceedings of the 50th Annual IEEE/ACM International Symposium on Microarchitecture, Cambridge, MA, USA, 14–18 October 2017; pp. 665–678. [Google Scholar] [CrossRef]

- Udayanga, N.; Hariharan, S.I.; Mandal, S.; Belostotski, L.; Bruton, L.T.; Madanayake, A. Continuous-Time Algorithms for Solving Maxwell’s Equations Using Analog Circuits. IEEE Trans. Circuits Syst. I Regul. Pap. 2019, 66, 3941–3954. [Google Scholar] [CrossRef]

- Malavipathirana, H.; Mandal, S.; Udayanga, N.; Wang, Y.; Hariharan, S.I.; Madanayake, A. Analog Computing for Nonlinear Shock Tube PDE Models: Test and Measurement of CMOS Chip. IEEE Access 2025, 13, 2862–2875. [Google Scholar] [CrossRef]

- Enz, C.; Temes, G. Circuit techniques for reducing the effects of op-amp imperfections: Autozeroing, correlated double sampling, and chopper stabilization. Proc. IEEE 1996, 84, 1584–1614. [Google Scholar] [CrossRef]

- Liang, J.; Tang, X.; Hariharan, S.I.; Madanayake, A.; Mandal, S. A Current-Mode Discrete-Time Analog Computer for Solving Maxwell’s Equations in 2D. In Proceedings of the 2023 IEEE International Symposium on Circuits and Systems (ISCAS), Monterey, CA, USA, 21–25 May 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Li, Z.; Wijerathne, D.; Mitra, T. Coarse-Grained Reconfigurable Array (CGRA). In Handbook of Computer Architecture; Springer: Singapore, 2022; pp. 1–41. [Google Scholar] [CrossRef]

- Dudek, P. SCAMP-3: A vision chip with SIMD current-mode analogue processor array. In Focal-Plane Sensor-Processor Chips; Springer: New York, NY, USA, 2011; pp. 17–43. [Google Scholar] [CrossRef]

- Hasler, J.; Natarajan, A. Continuous-Time, Configurable Analog Linear System Solutions With Transconductance Amplifiers. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 68, 765–775. [Google Scholar] [CrossRef]

- Ulmann, B.; Killat, D. Solving systems of linear equations on analog computers. In Proceedings of the 2019 Kleinheubach Conference, Miltenberg, Germany, 23–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4. [Google Scholar]

- Huang, Y.; Guo, N.; Seok, M.; Tsividis, Y.; Sethumadhavan, S. Analog computing in a modern context: A linear algebra accelerator case study. IEEE Micro 2017, 37, 30–38. [Google Scholar] [CrossRef]

- Rappaport, T.S.; Xing, Y.; Kanhere, O.; Ju, S.; Madanayake, A.; Mandal, S.; Alkhateeb, A.; Trichopoulos, G.C. Wireless Communications and Applications Above 100 GHz: Opportunities and Challenges for 6G and Beyond. IEEE Access 2019, 7, 78729–78757. [Google Scholar] [CrossRef]

- Zhang, L.; Krishnaswamy, H. Arbitrary Analog/RF Spatial Filtering for Digital MIMO Receiver Arrays. IEEE J. Solid-State Circuits 2017, 52, 3392–3404. [Google Scholar] [CrossRef]

- Suarez, D.; Cintra, R.J.; Bayer, F.M.; Sengupta, A.; Kulasekera, S.; Madanayake, A. Multi-beam RF aperture using multiplierless FFT approximation. Electron. Lett. 2014, 50, 1788–1790. [Google Scholar] [CrossRef]

- Reshma, P.G.; Gopi, V.P.; Babu, V.S.; Wahid, K.A. Analog CMOS implementation of FFT using cascode current mirror. Microelectron. J. 2017, 60, 30–37. [Google Scholar] [CrossRef]

- Ariyarathna, V.; Kulasekera, S.; Madanayake, A.; Lee, K.S.; Suarez, D.; Cintra, R.J.; Bayer, F.M.; Belostotski, L. Multi-beam 4 GHz microwave apertures using current-mode DFT approximation on 65 nm CMOS. In Proceedings of the 2015 IEEE MTT-S International Microwave Symposium, Phoenix, AZ, USA, 17–22 May 2015; pp. 1–4. [Google Scholar] [CrossRef]

- Ariyarathna, V.; Udayanga, N.; Madanayake, A.; Belostotski, L.; Ahmadi, P.; Mandal, S.; Nikoofard, A. Analog 65/130 nm CMOS 5 GHz Sub-Arrays with ROACH-2 FPGA Beamformers for Hybrid Aperture-Array Receivers; Defense Technical Information Center (DTIC): Fort Belvoir, VA, USA, 2017; AD1041390. [Google Scholar]

- Ariyarathna, V.; Madanayake, A.; Tang, X.; Coelho, D.; Cintra, R.J.; Belostotski, L.; Mandal, S.; Rappaport, T.S. Analog Approximate-FFT 8/16-Beam Algorithms, Architectures and CMOS Circuits for 5G Beamforming MIMO Transceivers. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 466–479. [Google Scholar] [CrossRef]

- Zhao, H.; Madanayake, A.; Cintra, R.J.; Mandal, S. Analog Current-Mode 8-Point Approximate-DFT Multi-Beamformer With 4.7 Gbps Channel Capacity. IEEE Access 2023, 11, 53716–53735. [Google Scholar] [CrossRef]

- Lehne, M.; Raman, S. An Analog/Mixed-Signal FFT Processor for Wideband OFDM Systems. In Proceedings of the 2006 IEEE Sarnoff Symposium, Princeton, NJ, USA, 27–28 March 2006; pp. 1–4. [Google Scholar] [CrossRef]

- Lehne, M.; Raman, S. A prototype analog/mixed-signal fast fourier transform processor IC for OFDM receivers. In Proceedings of the 2008 IEEE Radio and Wireless Symposium, Orlando, FL, USA, 22–24 January 2008; pp. 803–806. [Google Scholar] [CrossRef]

- Handagala, S.; Madanayake, A.; Belostotski, L.; Bruton, L.T. Delta-sigma noise shaping in 2D spacetime for uniform linear aperture array receivers. In Proceedings of the 2016 Moratuwa Engineering Research Conference (MERCon), Moratuwa, Sri Lanka, 5–6 April 2016; pp. 114–119. [Google Scholar] [CrossRef]

- Gu, B.; Liang, J.; Wang, Y.; Ariando, D.; Ariyarathna, V.; Madanayake, A.; Mandal, S. 32-Element Array Receiver for 2-D Spatio-Temporal Δ-Σ Noise-Shaping. In Proceedings of the 2019 IEEE National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 15–19 July 2019; pp. 499–502.

- Wang, Y.; Handagala, S.; Madanayake, A.; Belostotski, L.; Mandal, S. N-port LNAs for mmW array processors using 2-D spatio-temporal Δ-Σ noise-shaping. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; pp. 1473–1476.

- Silva, N.; Mandal, S.; Belostotski, L.; Madanayake, A. A Spatial-LDI Δ-Σ LNA Design in 65nm CMOS. In Proceedings of the 2024 International Applied Computational Electromagnetics Society Symposium (ACES), Xi’an, China, 16–19 August 2024; pp. 1–2.

- Radpour, M.; Kabirkhoo, Z.; Madanayake, A.; Mandal, S.; Belostotski, L. Demonstration of Receiver-Noise/Distortion Shaping in Antenna Arrays by Using a Spatio-Temporal Δ-Σ Method. IEEE Trans. Microw. Theory Tech. 2024, 72, 4660–4670.

- Madanayake, A.; Pilippange, H.; Lawrance, K.; Uddin, A.; Mandal, S.; Di, J.; Tennant, M.; Workman, C.; Cintra, R.J. Analog-Digital Approximate DFT with Spatial Δ-Σ LNA Multi-beam RF Apertures. In Proceedings of the 2025 IEEE International Symposium on Circuits and Systems (ISCAS), London, UK, 25–28 May 2025; pp. 1–5.

- Silva, A.; Monticone, F.; Castaldi, G.; Galdi, V.; Alù, A.; Engheta, N. Performing mathematical operations with metamaterials. Science 2014, 343, 160–163.

- Zangeneh-Nejad, F.; Sounas, D.L.; Alù, A.; Fleury, R. Analogue computing with metamaterials. Nat. Rev. Mater. 2021, 6, 207–225.

- Miscuglio, M.; Gui, Y.; Ma, X.; Ma, Z.; Sun, S.; El Ghazawi, T.; Itoh, T.; Alù, A.; Sorger, V.J. Approximate analog computing with metatronic circuits. Commun. Phys. 2021, 4, 196.

- Abdollahramezani, S.; Hemmatyar, O.; Adibi, A. Meta-optics for spatial optical analog computing. Nanophotonics 2020, 9, 4075–4095.

- Sol, J.; Smith, D.R.; Del Hougne, P. Meta-programmable analog differentiator. Nat. Commun. 2022, 13, 1713.

- Tzarouchis, D.C.; Edwards, B.; Engheta, N. Programmable wave-based analog computing machine: A metastructure that designs metastructures. Nat. Commun. 2025, 16, 908.

- Ren, B.W.; Qi, C.; Li, P.; He, X.; Wong, A.M. A Self-Adaptive Reconfigurable Metasurface for Electromagnetic Wave Sensing and Dynamic Reflection Control. Adv. Sci. 2025; in press.

- Chicca, E.; Stefanini, F.; Bartolozzi, C.; Indiveri, G. Neuromorphic Electronic Circuits for Building Autonomous Cognitive Systems. Proc. IEEE 2014, 102, 1367–1388.

- Thakur, C.S.; Molin, J.L.; Cauwenberghs, G.; Indiveri, G.; Kumar, K.; Qiao, N.; Schemmel, J.; Wang, R.; Chicca, E.; Olson Hasler, J.; et al. Large-scale neuromorphic spiking array processors: A quest to mimic the brain. Front. Neurosci. 2018, 12, 891.

- Sung, C.; Hwang, H.; Yoo, I.K. Perspective: A review on memristive hardware for neuromorphic computation. J. Appl. Phys. 2018, 124, 151903.

- Huang, Y.; Ando, T.; Sebastian, A.; Chang, M.F.; Yang, J.J.; Xia, Q. Memristor-based hardware accelerators for artificial intelligence. Nat. Rev. Electr. Eng. 2024, 1, 286–299.

- Zhu, R.; Lilak, S.; Loeffler, A.; Lizier, J.; Stieg, A.; Gimzewski, J.; Kuncic, Z. Online dynamical learning and sequence memory with neuromorphic nanowire networks. Nat. Commun. 2023, 14, 6697.

- Schmitt, S.; Klähn, J.; Bellec, G.; Grübl, A.; Guettler, M.; Hartel, A.; Hartmann, S.; Husmann, D.; Husmann, K.; Jeltsch, S.; et al. Neuromorphic hardware in the loop: Training a deep spiking network on the BrainScaleS wafer-scale system. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2227–2234.

- Pehle, C.; Billaudelle, S.; Cramer, B.; Kaiser, J.; Schreiber, K.; Stradmann, Y.; Weis, J.; Leibfried, A.; Müller, E.; Schemmel, J. The BrainScaleS-2 accelerated neuromorphic system with hybrid plasticity. Front. Neurosci. 2022, 16, 795876.

- Muir, D.; Sheik, S. The road to commercial success for neuromorphic technologies. Nat. Commun. 2025, 16, 3586.

- Adiletta, J.; Guler, U. A Fourier Dot Product Analog Circuit. In Proceedings of the 2023 IEEE MIT Undergraduate Research Technology Conference (URTC), Cambridge, MA, USA, 6–8 October 2023; pp. 1–5.

- Ielmini, D. Brain-inspired computing with resistive switching memory (RRAM): Devices, synapses and neural networks. Microelectron. Eng. 2018, 190, 44–53.

- Bouvier, M.; Valentian, A.; Mesquida, T.; Rummens, F.; Reyboz, M.; Vianello, E.; Beigne, E. Spiking neural networks hardware implementations and challenges: A survey. ACM J. Emerg. Technol. Comput. Syst. (JETC) 2019, 15, 1–35.

- Seo, J.O.; Seok, M.; Cho, S. ARCHON: A 332.7TOPS/W 5b Variation-Tolerant Analog CNN Processor Featuring Analog Neuronal Computation Unit and Analog Memory. In Proceedings of the 2022 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 20–26 February 2022; Volume 65, pp. 258–260.

- Seo, J.O.; Seok, M.; Cho, S. A 44.2-TOPS/W CNN Processor With Variation-Tolerant Analog Datapath and Variation Compensating Circuit. IEEE J. Solid-State Circuits 2024, 59, 1603–1611.

- Long, Y.; Na, T.; Mukhopadhyay, S. ReRAM-Based Processing-in-Memory Architecture for Recurrent Neural Network Acceleration. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2018, 26, 2781–2794.

- Hsieh, Y.T.; Anjum, K.; Pompili, D. Ultra-low Power Analog Recurrent Neural Network Design Approximation for Wireless Health Monitoring. In Proceedings of the 2022 IEEE 19th International Conference on Mobile Ad Hoc and Smart Systems (MASS), Denver, CO, USA, 19–23 October 2022; pp. 211–219.

- Sarpeshkar, R. Emulation of Quantum and Quantum-Inspired Spectrum Analysis and Superposition with Classical Transconductor-Capacitor Circuits. U.S. Patent US10204199B2, 12 February 2019.

- Liang, J.; Malavipathirana, H.; Hariharan, S.; Madanayake, A.; Mandal, S. Analog switched-capacitor circuits for solving the Schrödinger equation. In Proceedings of the 2021 IEEE International Symposium on Circuits and Systems (ISCAS), Daegu, Korea, 22–28 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.

- Mourya, S.; La Cour, B.R.; Sahoo, B.D. Emulation of Quantum Algorithms Using CMOS Analog Circuits. IEEE Trans. Quantum Eng. 2023, 4, 1–16.

- Cressman, A.J.; Sarpeshkar, R. Emulation of Density Matrix Dynamics With Classical Analog Circuits. IEEE Trans. Quantum Eng. 2025, 6, 1–16.

- Mandal, S.; Sarpeshkar, R. Log-domain circuit models of chemical reactions. In Proceedings of the 2009 IEEE International Symposium on Circuits and Systems, Taipei, Taiwan, 24–27 May 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 2697–2700.

- Mandal, S.; Sarpeshkar, R. Circuit models of stochastic genetic networks. In Proceedings of the 2009 IEEE Biomedical Circuits and Systems Conference, Beijing, China, 26–28 November 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 109–112.

- Woo, S.S.; Kim, J.; Sarpeshkar, R. A Cytomorphic Chip for Quantitative Modeling of Fundamental Bio-Molecular Circuits. IEEE Trans. Biomed. Circuits Syst. 2015, 9, 527–542.

- Woo, S.S.; Kim, J.; Sarpeshkar, R. A Digitally Programmable Cytomorphic Chip for Simulation of Arbitrary Biochemical Reaction Networks. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 360–378.

- Kim, J.; Woo, S.S.; Sarpeshkar, R. Fast and Precise Emulation of Stochastic Biochemical Reaction Networks With Amplified Thermal Noise in Silicon Chips. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 379–389.

- Beahm, D.R.; Deng, Y.; Riley, T.G.; Sarpeshkar, R. Cytomorphic Electronic Systems: A review and perspective. IEEE Nanotechnol. Mag. 2021, 15, 41–53.

- Zhao, H.; Sarpeshkar, R.; Mandal, S. A compact and power-efficient noise generator for stochastic simulations. IEEE Trans. Circuits Syst. I Regul. Pap. 2022, 70, 3–16.

- Vigoda, B.W. Continuous-Time Analog Circuits for Statistical Signal Processing. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2003.

- Vigoda, B.; Reynolds, D. Belief Propagation Processor. U.S. Patent US8799346B2, 3 March 2015.

- Coughlan, J. A Tutorial Introduction to Belief Propagation; The Smith-Kettlewell Eye Research Institute: San Francisco, CA, USA, 2009.

- Vigoda, B.; Reynolds, D.; Bernstein, J.; Weber, T.; Bradley, B. Low power logic for statistical inference. In Proceedings of the 16th ACM/IEEE International Symposium on Low Power Electronics and Design, Austin, TX, USA, 18–20 August 2010; pp. 349–354.

- Solli, D.R.; Jalali, B. Analog optical computing. Nat. Photonics 2015, 9, 704–706.

- Daniel, R.; Rubens, J.R.; Sarpeshkar, R.; Lu, T.K. Synthetic analog computation in living cells. Nature 2013, 497, 619–623.

- Rubens, J.R.; Selvaggio, G.; Lu, T.K. Synthetic mixed-signal computation in living cells. Nat. Commun. 2016, 7, 11658.

- Fages, F.; Le Guludec, G.; Bournez, O.; Pouly, A. Strong Turing completeness of continuous chemical reaction networks and compilation of mixed analog-digital programs. In Proceedings of the International Conference on Computational Methods in Systems Biology, Darmstadt, Germany, 27–29 September 2017; pp. 108–127.

- Kendon, V.M.; Nemoto, K.; Munro, W.J. Quantum analogue computing. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2010, 368, 3609–3620.

- Daley, A.J.; Bloch, I.; Kokail, C.; Flannigan, S.; Pearson, N.; Troyer, M.; Zoller, P. Practical quantum advantage in quantum simulation. Nature 2022, 607, 667–676.

- Ige, A.; Yang, L.; Yang, H.; Hasler, J.; Hao, C. Analog system high-level synthesis for energy-efficient reconfigurable computing. J. Low Power Electron. Appl. 2023, 13, 58.

- Ige, A.; Hasler, J. ASHES 1.5: Analog Computing Synthesis for FPAAs and ASICs. In Proceedings of the 2025 Design, Automation & Test in Europe Conference (DATE), Lyon, France, 31 March–2 April 2025; pp. 1–6.

- Achour, S. Towards Design Optimization of Analog Compute Systems. In Proceedings of the 30th Asia and South Pacific Design Automation Conference, Tokyo, Japan, 20–23 January 2025; pp. 857–864.

- Wang, Y.N.; Cowan, G.; Rührmair, U.; Achour, S. Design of Novel Analog Compute Paradigms with Ark. In Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, La Jolla, CA, USA, 27 April–1 May 2024; Volume 2, pp. 269–286.

- Hasler, J.; Hao, C. Programmable analog system benchmarks leading to efficient analog computation synthesis. ACM Trans. Reconfigurable Technol. Syst. 2024, 17, 12001.

| Challenge | Description and Implications |
|---|---|
| Limited Precision | Susceptibility to noise, nonlinearity, and mismatch limits accuracy. Analog systems typically operate at 4–8 bits of effective precision. |
| Calibration | Device characteristics vary with temperature, aging, and process variations, requiring regular calibration, which is complex and often manual. |
| Programmability | The lack of high-level software and compiler toolchains makes analog systems hard to reconfigure for general-purpose use. |
| Scalability | Interconnect complexity and device variability make large-scale analog systems significantly harder to implement. |
| Toolchain Maturity | Unlike digital flows (e.g., Verilog/VHDL + synthesis), analog flows lack standardized, modular toolchains for design, simulation, and verification. |
| Variability | Device mismatch and manufacturing tolerances introduce computational uncertainty, necessitating adaptive or robust design techniques. |
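
The 4–8 bit figure in the Limited Precision row can be related to signal quality through the standard effective-number-of-bits relation for a full-scale sine input, ENOB = (SINAD − 1.76 dB)/6.02 dB. The minimal Python sketch below evaluates that relation in both directions. As a simplifying assumption, it lumps noise, mismatch, and nonlinearity into one rms error term; the 0.5 V peak swing and 10 mV rms error in the example are hypothetical values chosen for illustration, not figures from any surveyed design.

```python
import math


def enob_from_sinad(sinad_db: float) -> float:
    """Effective number of bits implied by a SINAD (dB) for a full-scale sine input."""
    return (sinad_db - 1.76) / 6.02


def sinad_for_enob(bits: float) -> float:
    """Inverse relation: SINAD (dB) required to reach a given effective resolution."""
    return 6.02 * bits + 1.76


def enob_from_noise(peak_amplitude: float, noise_rms: float) -> float:
    """ENOB of a full-scale sine with the given peak amplitude against an rms error floor.

    Assumption: thermal noise, mismatch, and nonlinearity are lumped into a
    single rms error term, so SINAD is just the signal-to-error power ratio.
    """
    signal_rms = peak_amplitude / math.sqrt(2.0)  # rms of a sine wave
    sinad_db = 20.0 * math.log10(signal_rms / noise_rms)
    return enob_from_sinad(sinad_db)


if __name__ == "__main__":
    # SINAD values bounding the 4-8 bit band quoted in the table.
    for bits in (4, 8):
        print(f"{bits} bits -> {sinad_for_enob(bits):.2f} dB SINAD")
    # Hypothetical analog output stage: 0.5 V peak swing, 10 mV rms lumped error.
    print(f"ENOB = {enob_from_noise(0.5, 0.010):.2f} bits")
```

Under these assumptions, 4 and 8 effective bits correspond to roughly 26 dB and 50 dB of SINAD, and the hypothetical output stage lands near 4.85 bits, inside the band the table quotes. Each additional effective bit costs about 6 dB of SINAD, which is why pushing analog accelerators past this range is expensive.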

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Belostotski, L.; Uddin, A.; Madanayake, A.; Mandal, S. A Survey of Analog Computing for Domain-Specific Accelerators. Electronics 2025, 14, 3159. https://doi.org/10.3390/electronics14163159
