1. Introduction

Web 2.0 technologies have catalyzed social media platforms [1], enabling instantaneous opinion sharing that generates substantial user-generated content online [2]. As dietary habits constitute a fundamental aspect of daily life, they feature prominently in public discourse on these platforms. Review aggregators like Yelp and Dianping now host extensive restaurant evaluations covering food quality, hygiene standards, and dining experiences. Crucially, the emotion dimensions within these reviews provide valuable insights for consumer decision making [3].

This study employs natural language processing and deep learning techniques to collect extensive dietary review data from major dietary platforms and extract objective information, such as dietary features, and subjective information, including various emotion words. These data are integrated to construct a binary or ternary dietary review knowledge base, which facilitates rapid access to and credible retrieval of dining information. Additionally, the knowledge base serves as a robust foundation for generating dietary reviews using large language models (LLMs). Accordingly, this paper proposes a multi-emotional knowledge extraction method for online dietary review texts. Specifically, a schema layer for multivariate knowledge extraction is designed based on an in-depth analysis of online reviews gathered from platforms, such as Meituan, Dianping, and Yelp. To enhance the accuracy and efficiency of knowledge extraction with the DRE-UIE (Dietary-Review Emotional-Unified Information-Extraction) model, a dataset optimization method is proposed. The optimized dataset is used to fine-tune the model and extract dietary–emotional knowledge. The advantages of the proposed approach are validated on Chinese and English datasets.

The design of the schema layer forms the foundation of knowledge extraction. Existing studies generally categorize schema layers into the following four categories: top-level ontology, domain ontology, task ontology, and application ontology. Based on an analysis of the characteristics of dietary reviews, the schema layer for dietary knowledge tends to align more closely with task or application ontology. For example, Zhao et al. [4] constructed a health-domain schema layer grounded in the entity attributes and relationships related to food. From the perspective of user needs, Cui [5] selected common edible flower recipes with health benefits and developed an ontology model focused on these recipes. Through an analysis of data from health and food websites, Yuan [6] employed a top-down approach to construct a diet-domain schema layer. Huang [7] integrated traditional Chinese medicine concepts, such as “medical-food homology”, with modern nutrition science, employing a seven-step methodology to represent and model a healthy-diet-domain ontology. This ontology has also found application in medicine and related fields. The construction of the aforementioned schema layers largely overlooks the rich emotional information embedded in the reviews, making it difficult to capture users’ subjective experiences and preferences. Zheng et al. [8] derived an emotional hierarchy of needs for the slimming population based on their characteristics, integrating emotional information analysis. References [9,10] incorporate emotion into the objective descriptions of restaurants and service quality, including aspects such as service attitude, restaurant facilities, and location [11]. However, further analysis reveals that the existing research rarely correlates subjective and objective information simultaneously or extracts multi-knowledge to support more downstream tasks. Additionally, current emotional knowledge bases lack coverage of emergent online emotional expressions and negative terms, such as emoticons and “Yanti” characters. Therefore, considering the rich emotional content and diverse expression in online dietary reviews, this paper combines emotional and dietary features to construct a two-dimensional professional dietary–emotional schema layer. This framework enables knowledge extraction that better integrates subjective and objective information while maintaining strong scalability.

In recent years, deep learning technologies, especially pre-trained language models based on the Transformer architecture, have made breakthrough progress in the field of natural language processing. These techniques have demonstrated significant advantages in knowledge extraction tasks, gradually replacing traditional rule-based and statistical machine learning-based methods and becoming the mainstream paradigm in current knowledge extraction research [12,13,14]. For example, Reference [15] describes an entity recognition algorithm that combines a domain-specific dictionary with a conditional random field (CRF) model, which enhances the quality of entity extraction to a certain extent. Li [16] introduced an attention mechanism into the BERT-BiLSTM-CRF model for named entity recognition on a Chinese dietary culture dataset, achieving promising results. Reference [17] employs a weakly supervised learning approach to extract relationships from heterogeneous, large-scale data. Although these methods achieve knowledge extraction in the dietary domain, all rely heavily on extensive annotated datasets for training, and producing such annotations remains time-consuming and labor-intensive. In addition, the number of dietary labels in reviews is extensive, whereas the distribution of subjective emotion labels is sparse. This imbalance adversely affects the performance of knowledge extraction models. Data-enhanced few-shot learning methods have been shown to effectively address this issue, with several relevant studies reported in the literature [18]. Among these, the few-shot learning methods based on data synthesis [19] and feature enhancement [20] demonstrate greater flexibility and applicability. Therefore, to tackle the feature content imbalance problem in online dietary review texts, this paper proposes a novel doubly constrained dataset optimization approach that integrates both data synthesis and feature enhancement techniques. Firstly, the data synthesis method is employed to moderately increase both the quantity and diversity of few-shot optimization datasets, thereby enabling the model to better capture the semantic features of the sentence. Secondly, the feature enhancement technique is applied to balance the distribution of sample categories within the optimized dataset, mitigating model bias caused by class imbalance. As a result, the UIE model fine-tuned on this optimized dataset significantly improves learning and recognition performance, achieving multivariate, multi-task knowledge extraction of dietary attributes and emotions. This advancement also establishes a solid data foundation for the construction of a comprehensive multivariate emotional knowledge base.

2. Construction of Emotional Knowledge Extraction Schema Layer for Dietary Reviews

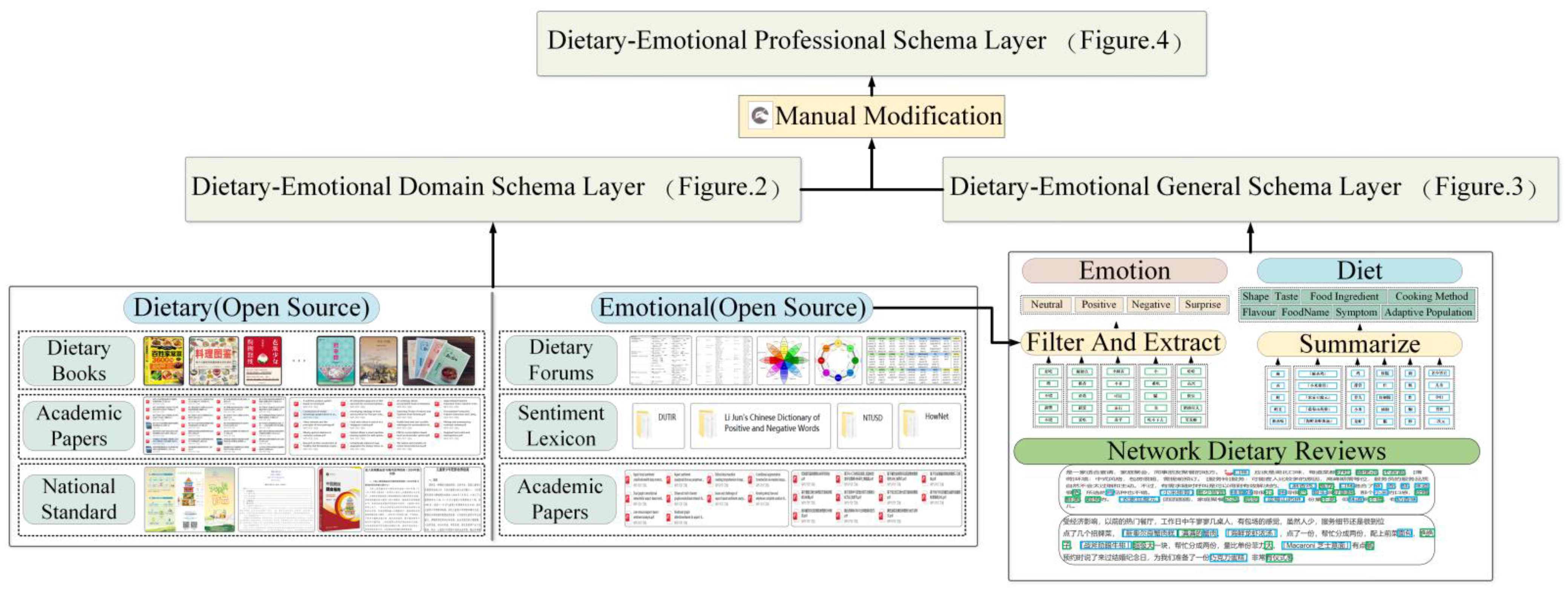

Dietary reviews not only describe the objective attributes of food but also incorporate consumers’ rich experiential and emotional responses. By integrating these two dimensions (objective dietary attributes and subjective emotional categories), this paper proposes a complementary coupled schema layer construction method. This method provides structured guidance for the simultaneous extraction of both objective food attributes and subjective emotional expressions from dietary reviews. The overall process is illustrated in Figure 1.

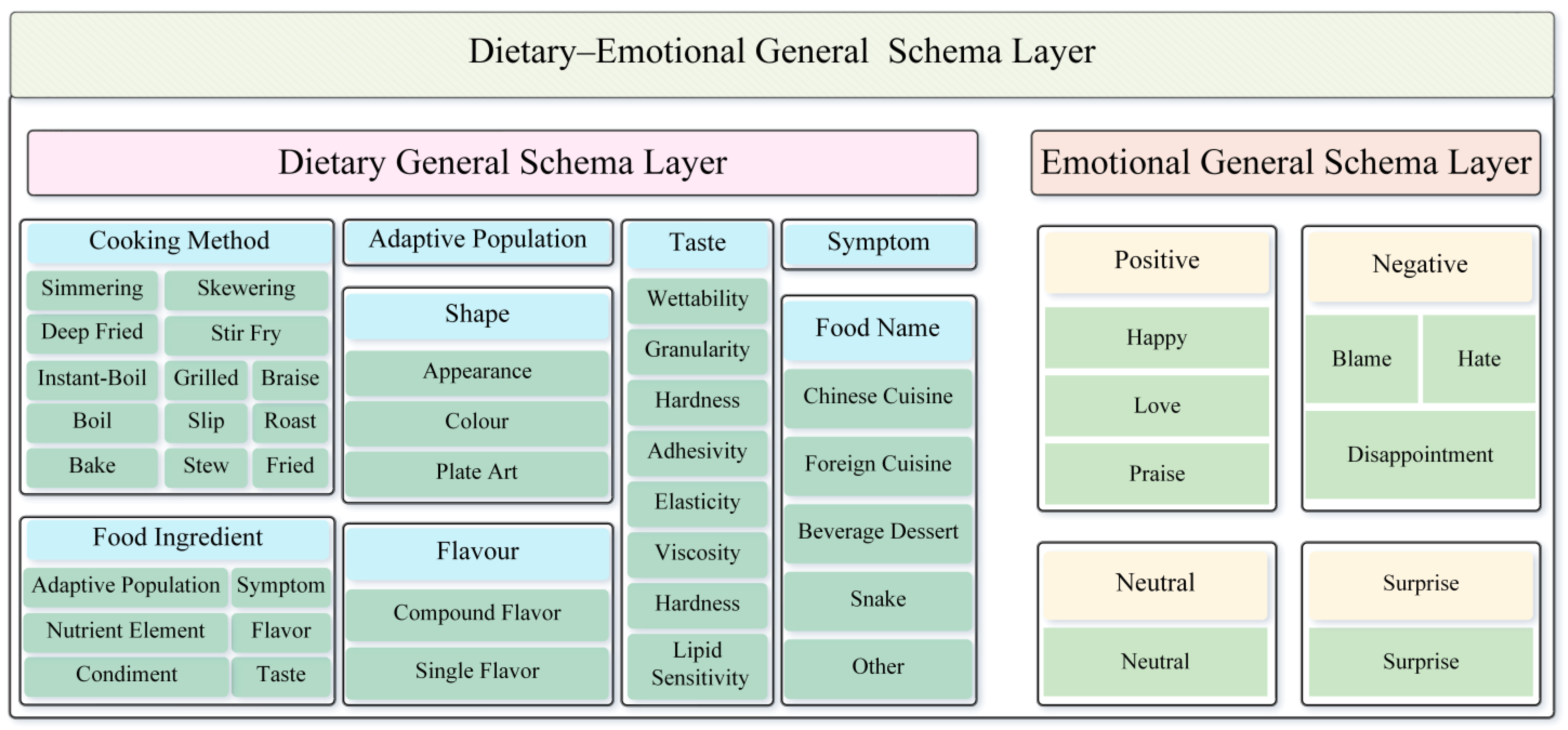

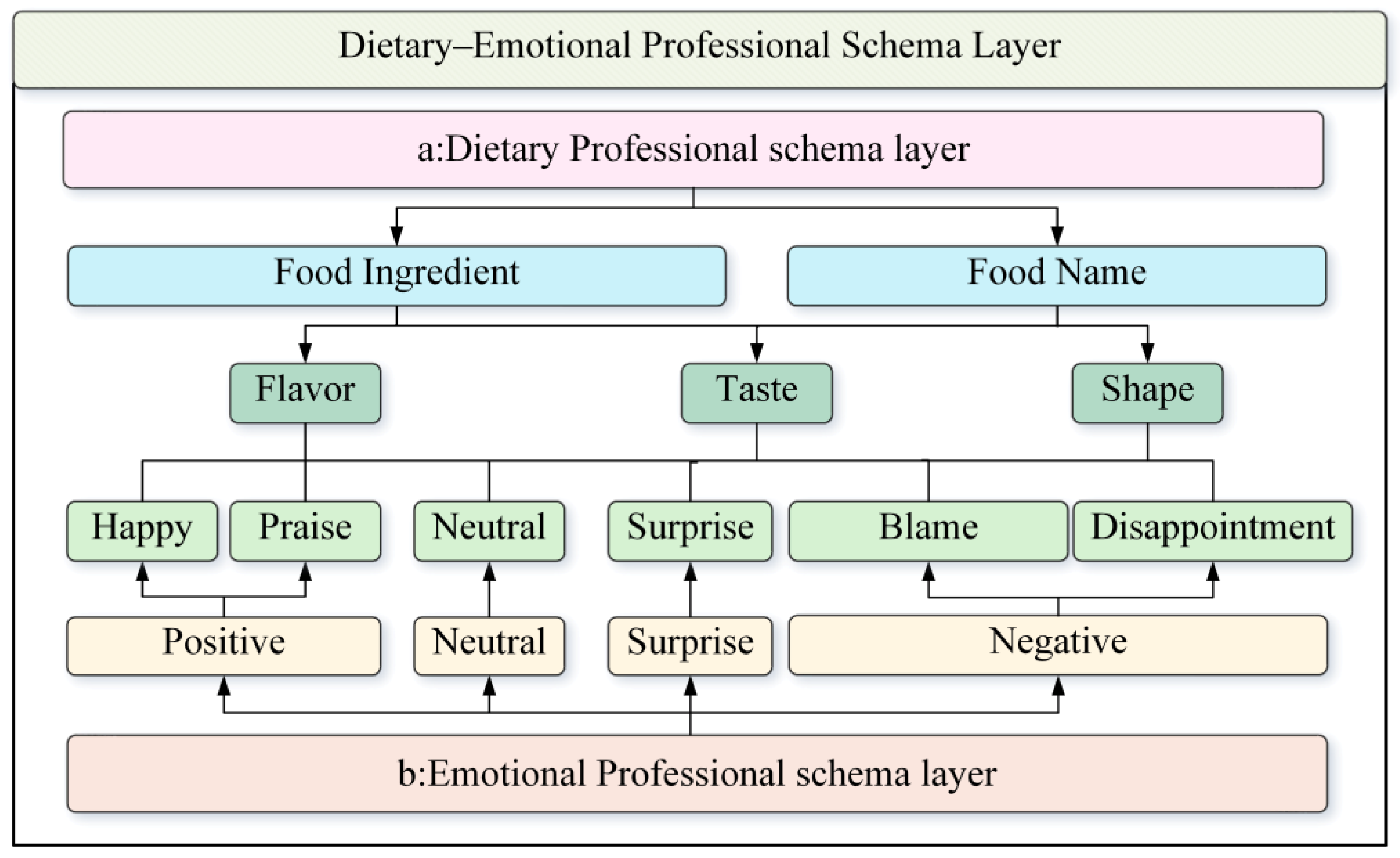

Based on the concepts illustrated in Figure 1, this paper constructs the following two complementary schema layers for dietary–emotional knowledge extraction: the domain schema layer (see Figure 2) and the general schema layer (see Figure 3). The domain schema layer is developed by synthesizing authoritative academic research in the dietary field [9,10,15,21,22,23,24,25]. However, this layer mainly addresses objective information about diets and does not incorporate emotional aspects. Due to its strong reliance on manual labor, the domain schema layer construction is also not well suited for large-scale data processing. In contrast, the general schema layer is data-driven. It involves manually summarizing and organizing knowledge elements directly from the data, abstracting them into conceptual schemas. However, the uneven quality of the underlying data may reduce the accuracy of the extracted concepts. To address this issue, this paper first constructs a dietary–emotional domain schema layer based on professional knowledge. Subsequently, it abstracts the relevant concepts from the real-time review datasets collected from platforms such as Meituan and Dianping to form a general schema layer. During this process, the domain schema layer serves as a standardizing framework for concepts, while the concepts derived from the general schema layer are used to enrich and supplement the domain schema. By combining these two approaches, a professional and practical dietary–emotional schema layer, referred to as the professional dietary–emotional schema layer, is created (illustrated in Figure 4). The knowledge base structure developed under the guidance of this schema exhibits clear hierarchy, low redundancy, and rich diversity.

3. Design of Dietary–Emotional Knowledge Joint Extraction Model

When using traditional models for information extraction, each extraction task requires dedicated input structures, making the process cumbersome and time-consuming. In contrast, the UIE framework enables modeling of diverse information extraction tasks in a unified manner, facilitating a seamless text-to-structure conversion across different tasks [26]. This capability is realized through the following two key components: the Structural Schema Instructor (SSI) and the Structured Extraction Language (SEL). The SSI decomposes the information extraction task into two fundamental elements, spotting and associating, which are then used to construct a tailored prompt for the extraction task. This prompt design within the SSI is crucial for enabling the UIE model to effectively extract knowledge across diverse tasks. The dietary–emotional knowledge joint extraction model based on the UIE framework, as proposed in this paper, is illustrated in Figure 5.

Figure 5 illustrates the architecture of our model, which employs ERNIE 3.0 as the semantic understanding backbone. The design features a dual-layer structure: the lower layer serves as a general representation module that captures fundamental natural language features, while the upper layer functions as a task-specific representation module that encodes features tailored to the particular extraction task. For different information extraction tasks, the lower layer remains fixed with shared parameters, and only the upper task-specific layer is fine-tuned, thereby enhancing training efficiency.

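The ability of Transformer-XL in the module to capture the desired underlying linguistic features, such as lexicon and syntax, is enhanced by appropriately setting the number of layers and parameters [27]. The task-specific representation module then learns the high-level semantic features according to different tasks. Specifically, it is assumed that the segment length used for training Transformer-XL is $L$, and its two consecutive segments are denoted as $S_{\tau} = [X_{\tau,1}, \ldots, X_{\tau,L}]$ and $S_{\tau+1} = [X_{\tau+1,1}, \ldots, X_{\tau+1,L}]$. The state of the hidden node of $S_{\tau}$ is denoted as $h_{\tau}^{n} \in \mathbb{R}^{L \times d}$, where $d$ is the dimension of the hidden node, and the calculation formula for state $h_{\tau+1}^{n}$ of the hidden node of $S_{\tau+1}$ is as follows:

$$\tilde{h}_{\tau+1}^{n-1} = \left[\mathrm{SG}\left(h_{\tau}^{n-1}\right) \circ h_{\tau+1}^{n-1}\right] \tag{1}$$

$$q_{\tau+1}^{n},\; k_{\tau+1}^{n},\; v_{\tau+1}^{n} = h_{\tau+1}^{n-1} W_{q}^{\top},\; \tilde{h}_{\tau+1}^{n-1} W_{k}^{\top},\; \tilde{h}_{\tau+1}^{n-1} W_{v}^{\top} \tag{2}$$

$$h_{\tau+1}^{n} = \text{Transformer-Layer}\left(q_{\tau+1}^{n}, k_{\tau+1}^{n}, v_{\tau+1}^{n}\right) \tag{3}$$

In the above formulas, $\tau$ represents the segment number, $n$ indicates the index of the hidden layer, $h$ is the output of the hidden layer, $\mathrm{SG}(h_{\tau}^{n-1})$ stands for the hidden layer state of the previous segment (with its gradient stopped), $\tilde{h}_{\tau+1}^{n-1}$ denotes the hidden state of the previous layer that is linearly transformed and used as an input to the current layer, $W$ denotes the parameters to be learned by the model, and $q_{\tau+1}^{n}$, $k_{\tau+1}^{n}$, and $v_{\tau+1}^{n}$ represent the query vector, the key vector, and the value vector of layer $n$ calculated at segment $\tau + 1$.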
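The segment-level recurrence described above can be illustrated with a small, dependency-free sketch. It is only an approximation under stated assumptions: a single attention head, no causal mask, and no relative positional encoding, all of which a real Transformer-XL layer includes. The function and variable names (`recurrent_attention`, `h_prev_seg`, `rand_matrix`, and so on) are hypothetical and do not come from the model implementation used in this paper.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def recurrent_attention(h_prev_seg, h_cur_seg, w_q, w_k, w_v):
    """Simplified single-head attention with segment-level recurrence.

    h_prev_seg: cached hidden states of segment tau (L x d), used as constants
    h_cur_seg:  hidden states of segment tau+1 from the layer below (L x d)
    """
    # Extended context: cached previous segment followed by the current one (2L x d).
    h_tilde = [row[:] for row in h_prev_seg] + h_cur_seg
    q = matmul(h_cur_seg, w_q)  # queries come from the current segment only
    k = matmul(h_tilde, w_k)    # keys and values also see the cached segment
    v = matmul(h_tilde, w_v)
    d = len(w_q[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of k, shape (d x 2L)
    scores = matmul(q, k_t)               # shape (L x 2L)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v), weights


def rand_matrix(rows, cols):
    """Random matrix used only to exercise the sketch."""
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
seg_len, dim = 3, 4
h_tau, h_next = rand_matrix(seg_len, dim), rand_matrix(seg_len, dim)
w_q, w_k, w_v = rand_matrix(dim, dim), rand_matrix(dim, dim), rand_matrix(dim, dim)
out, weights = recurrent_attention(h_tau, h_next, w_q, w_k, w_v)
print(len(out), len(out[0]), len(weights[0]))  # 3 4 6
```

Each of the 3 positions in the current segment attends over 6 positions, because the cached segment doubles the visible context without recomputing its hidden states.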

In the decoding layer, a multilayer label pointer network, implemented using fully connected layers, is used to predict the start and end positions of entities by decoding the hidden semantics obtained from the encoding layer, outputting the relational triples. The entity prediction loss function is calculated as follows:

$$L_{start} = \mathrm{CrossEntropy}\left(P_{start}, Y_{start}\right) \tag{4}$$

$$L_{end} = \mathrm{CrossEntropy}\left(P_{end}, Y_{end}\right) \tag{5}$$

$$L_{span} = \mathrm{CrossEntropy}\left(P_{i,j}, Y_{i,j}\right) \tag{6}$$

where CrossEntropy is the cross-entropy loss function, $L_{start}$ is the start position loss value, $P_{start}$ is the predicted entity start position, $Y_{start}$ is the entity start position, $L_{end}$ is the end position loss value, $P_{end}$ is the predicted entity end position, $Y_{end}$ is the entity end position, $L_{span}$ is the entity loss value, $P_{i,j}$ denotes the probability of predicting the entity, and $Y_{i,j}$ indicates the entity position.
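To make the start and end position losses concrete, the following sketch computes them for one toy sentence. It is a minimal illustration and rests on assumptions not stated in the text: binary cross-entropy is applied to each token position, and the two position losses are averaged into a single value. The helper names `binary_cross_entropy` and `pointer_loss` are hypothetical.

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def pointer_loss(p_start, y_start, p_end, y_end):
    """Start loss, end loss, and their average for one sequence."""
    loss_start = binary_cross_entropy(p_start, y_start)
    loss_end = binary_cross_entropy(p_end, y_end)
    # Assumption: equal weighting of the two losses; the text does not state it.
    return loss_start, loss_end, (loss_start + loss_end) / 2.0


# Tokens: ["spicy", "hot", "pot", "is", "great"]; the gold entity "hot pot"
# starts at token 1 and ends at token 2.
y_start = [0, 1, 0, 0, 0]
y_end = [0, 0, 1, 0, 0]
p_start = [0.10, 0.80, 0.05, 0.02, 0.03]
p_end = [0.05, 0.10, 0.70, 0.10, 0.05]

loss_start, loss_end, loss_total = pointer_loss(p_start, y_start, p_end, y_end)
print(loss_start, loss_end, loss_total)
```

The loss shrinks as the predicted probabilities at the gold start and end tokens approach 1 and the probabilities elsewhere approach 0.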

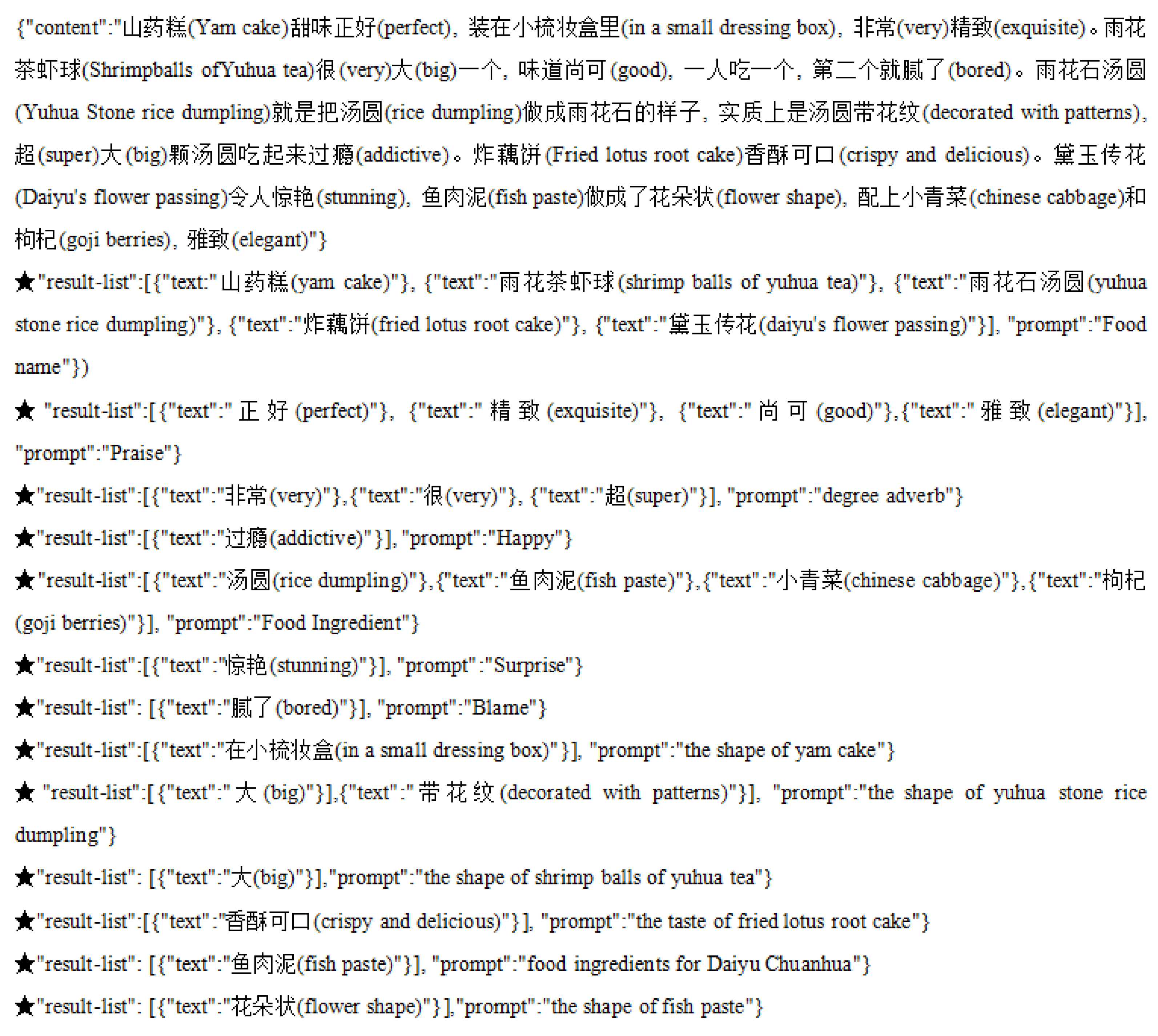

The output of decoded entities in a structured language format primarily depends on the design of the SSI template. Based on the knowledge structure defined in the schema layer, the input guidance template format for knowledge extraction is designed as in Equation (7), where I is the actual sentence input to the UIE model, Text is the original text, Prompttype is the type of knowledge extraction (its content differs with the extraction type), and RL is a representation of the annotation content of the sentence. An example of the design of the data input format for the extraction model in this paper is shown in Figure 6.
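As a rough illustration of how the three parts of the input (original text, extraction type, and annotated content) might be packed into one training record, consider the sketch below. The field names `content`, `prompt`, and `result_list`, as well as the helper `build_record`, are assumptions chosen for illustration; they are not taken from Equation (7) or Figure 6, and the actual template used in this paper may differ.

```python
import json


def build_record(text, prompt_type, annotated_spans):
    """Assemble one record: original text, prompt type, and annotated results."""
    result_list = []
    for span in annotated_spans:
        start = text.find(span)  # first occurrence only; enough for a sketch
        if start == -1:
            raise ValueError(f"span {span!r} not found in text")
        result_list.append({"text": span, "start": start, "end": start + len(span)})
    # Hypothetical layout: one record per (text, prompt type) pair.
    return {"content": text, "prompt": prompt_type, "result_list": result_list}


record = build_record("The hot pot was amazing", "food name", ["hot pot"])
print(json.dumps(record, ensure_ascii=False))
```

A second record with the prompt `praise` and the span `amazing` would cover the emotional side of the same sentence, which is how one text can serve several extraction types.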

4. Experimental Design and Model Performance Evaluation

4.1. Experimental Environment and Parameter Settings

The specific configuration of the experimental environment is shown in Table 1. The training parameter settings for the six UIE basic models are shown in Table 2.

To evaluate the entity extraction effectiveness of the model, three evaluation indicators are used in this paper: precision (P), recall (R), and F1, which are calculated as shown in Equations (8), (9), and (10), respectively.

$$P = \frac{T_p}{T_p + F_p} \tag{8}$$

$$R = \frac{T_p}{T_p + F_n} \tag{9}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{10}$$

where $T_p$ is the number of positive samples correctly identified in the output sequence of the model, $F_p$ is the number of incorrect samples predicted as positive samples in the output sequence of the model, and $F_n$ is the number of correct samples predicted as negative samples in the output sequence of the model. P denotes the ratio of samples correctly predicted as positive to all samples predicted as positive, R denotes the ratio of samples correctly predicted as positive to all samples that are actually positive, and F1 is the harmonic mean of P and R.
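For reference, the three indicators can be computed from the raw counts as in the short sketch below. The function name `precision_recall_f1` and the example counts are hypothetical and are not results from this paper; returning 0.0 for an empty denominator is a convention chosen here.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Illustrative counts: 6 correct entities, 2 spurious ones, 4 missed ones.
p, r, f1 = precision_recall_f1(tp=6, fp=2, fn=4)
print(round(p, 2), round(r, 2), round(f1, 2))  # 0.75 0.6 0.67
```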

4.2. Dataset Construction

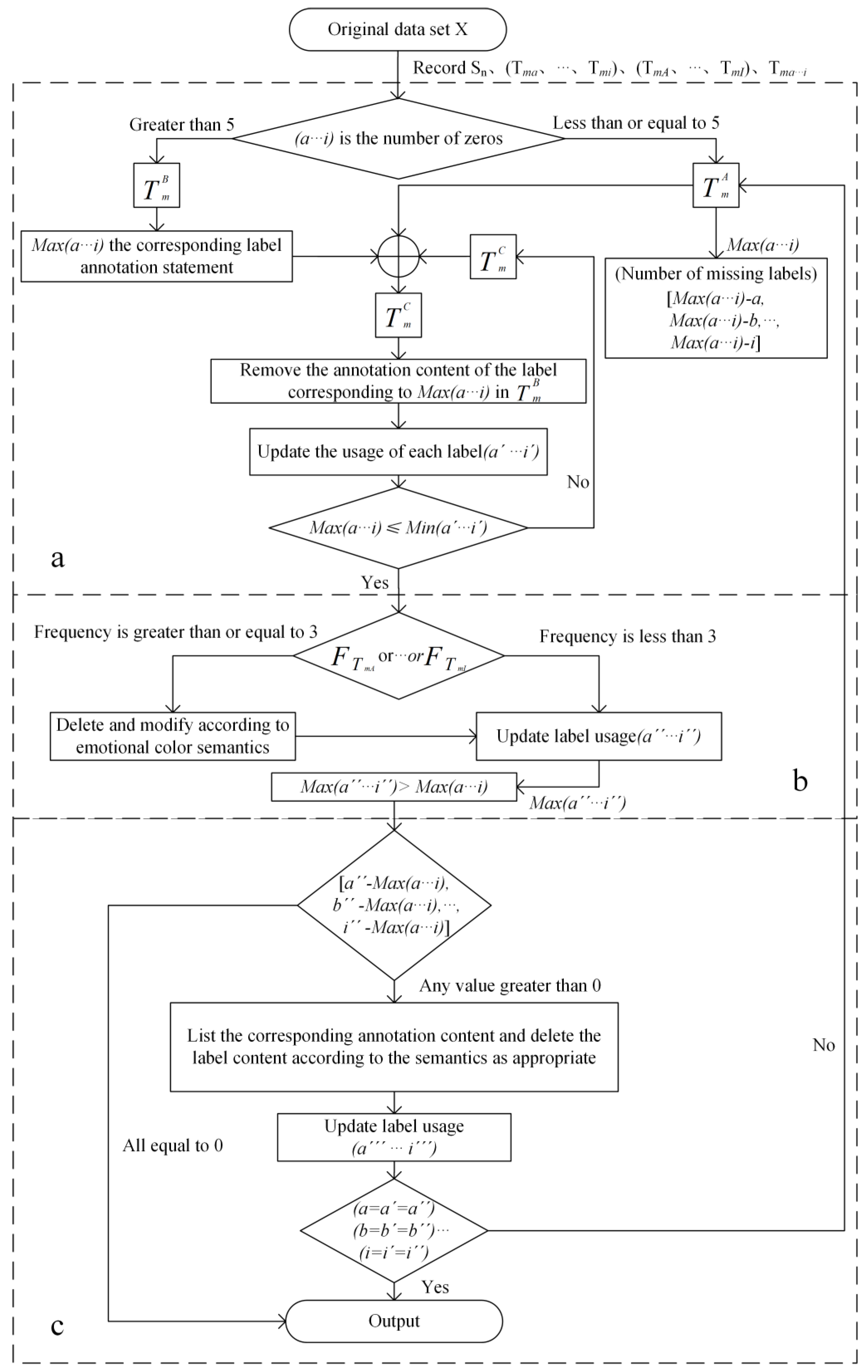

The experimental corpus for this study consists of 48,000 dietary reviews collected from Meituan and Dianping. After preprocessing and cleaning, the resulting dataset was used for analysis. A labeled subset of 80 sentences was employed to fine-tune the UIE model, which was subsequently applied to extract dietary–emotional knowledge from the original dataset. The experimental results showed that the optimal F1 score ranged between 0.58 and 0.79. Various optimization methods, including increasing the data volume and adjusting model parameters, were attempted but produced limited improvements. Further statistical analysis of the annotated sentences reveals a substantial imbalance between the number of emotion words and objective attribute words in the dietary review data. Additionally, there is a significant disparity in the distribution of emotion categories across sentences. This imbalance in the dietary–emotional information adversely affects the model’s training effectiveness. To address this issue, this paper proposes a dual-constraint method based on label quantity and frequency to construct a few-shot optimized dataset. The UIE model is then fine-tuned using this optimized dataset to enhance its performance. The detailed construction process is illustrated in Figure 7.

The main ideas are the following: Let $X$ denote the original dataset and $T_m$ represent the annotated sentences within $X$. The frequency and content of each label in every sentence of $X$ are recorded. The dietary–emotional schema is defined as $S_n$ ($n = 1, \ldots, 9$), where the values of $n$ from 1 to 9 represent the food name, food ingredient, degree adverb, surprise, happy, praise, neutral, blame, and disappointment labels, in order. Based on $S_n$, $T_{ma}, \ldots, T_{mi}$ denote the number of annotations for a certain label in the $m$-th sentence, $T_{mA}, \ldots, T_{mI}$ denote the content of a certain label in the $m$-th sentence, and $T_{ma \cdots i}$ is the number of annotations for each label in the $m$-th sentence. To balance the number of each label in the dataset, the optimization process is divided into three steps, as follows.

First is sentence splicing. This step aims to supplement emotional labels with fewer occurrences in the corpus, addressing the issue of imbalanced label distribution that negatively impacts the overall model recognition performance. The procedure is as follows: determine the maximum number of labels present in the A sentence set as the threshold, and select the corresponding annotated content as the reference. Then, search in the B sentence set for phrases containing this reference content and concatenate them directly into the corresponding sentences in the A sentence set. Count the number of each label in the set of A sentences after splicing and judge whether the corresponding value is greater than or equal to the threshold before proceeding to the next step.

Second, frequency-based deletion is applied. After the initial step, the identical annotated content in the A sentence set may appear frequently, which can reduce the model’s generalization ability. Therefore, based on experimental observations, the labeled content with a high frequency of occurrence in a sentence is reduced to less than 3. To preserve the sentence’s affective semantics, the objective attribute word in the A sentence set is retained, while subsequent affective words are deleted, and the corresponding affective word in sentence B is then directly appended after the objective attribute.

Third, label elimination is performed. After the first two steps, a large number of sentences with partial labels exceeding the threshold will be produced. This step focuses on removing the labeled content with a low repetition rate but exceeding the threshold.
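The first of the three steps, sentence splicing, can be sketched roughly as follows. This is a loose interpretation under several assumptions rather than the procedure actually used in this paper: sentences are represented as dictionaries with a `text` field and a list of `(label, content)` pairs, the threshold is the count of the most frequent label in set A, and a sentence from set B is spliced in when it shares annotated content with a sentence in A and carries at least one label still below the threshold. The names `count_labels` and `splice` are hypothetical, and the deletion and elimination steps are not shown.

```python
from collections import Counter


def count_labels(sentences):
    """Count how often each label is annotated across a set of sentences."""
    return Counter(label for s in sentences for label, _ in s["labels"])


def splice(set_a, set_b):
    """Append matching sentences from set B to sentences in set A (step 1 only)."""
    counts = count_labels(set_a)
    threshold = max(counts.values())  # count of the most frequent label in A
    spliced = [{"text": s["text"], "labels": list(s["labels"])} for s in set_a]
    used = set()
    for target in spliced:
        # Reference content: what is already annotated in this A sentence.
        anchors = {content for _, content in target["labels"]}
        for idx, cand in enumerate(set_b):
            if idx in used:
                continue
            shares_anchor = any(content in anchors for _, content in cand["labels"])
            adds_rare = any(counts[label] < threshold for label, _ in cand["labels"])
            if shares_anchor and adds_rare:
                target["text"] += " " + cand["text"]
                target["labels"].extend(cand["labels"])
                counts.update(label for label, _ in cand["labels"])
                used.add(idx)
    return spliced, threshold


set_a = [
    {"text": "The noodles are tasty", "labels": [("food name", "noodles"), ("praise", "tasty")]},
    {"text": "I ordered dumplings", "labels": [("food name", "dumplings")]},
]
set_b = [
    {"text": "dumplings were a letdown",
     "labels": [("food name", "dumplings"), ("disappointment", "letdown")]},
    {"text": "wow, noodles again", "labels": [("food name", "noodles"), ("surprise", "wow")]},
]

spliced, threshold = splice(set_a, set_b)
print(threshold, dict(count_labels(spliced)))
```

In this toy run the sparse `surprise` and `disappointment` labels enter set A, while the already frequent `food name` label grows past the threshold, which is the kind of excess the later deletion and elimination steps are meant to trim.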

4.3. Experiment and Result Analysis

4.3.1. Schema Layer Verification Experiment

To verify the applicability of the three dietary–emotional schema layers designed in Section 2 for knowledge extraction from review texts, this experiment randomly selects 10 unannotated sentences from the original dataset, extracts dietary–emotional knowledge using the UIE model based on the zero-shot method, and compares it with manual annotation to evaluate the reasonableness of the schema layer construction. The detailed experimental results are shown in Table 3. (For brevity and clarity, the table abbreviates key metrics as follows: Total Number of Labels Annotated as TNLA, Rate of Detected Labels as RDL, Ratio of Number of Detected Labels as RNDL, and Rate of Correct Labels as RCL.)

In Table 3, RDL is the ratio of labels defined by the schema layer that are identified within the corpus, RNDL reflects the ratio of labels identified by the model compared to the manually annotated labels, and RCL refers to the accuracy of the automatically identified annotated labels. The analysis of Table 3 shows that, for an equal number of sentences, the dietary–emotional professional schema layer constructed in this paper outperforms the others across all indices. This result suggests that the schema layer’s concept and entity definitions and classifications are more precise and coherent, making it a more effective framework for guiding knowledge extraction.

4.3.2. UIE Model Fine-Tuning Experiment

The UIE architecture encompasses multiple model variants with distinct semantic processing capabilities, arising from differences in their parameters. To determine the optimal UIE configuration for dietary–emotional knowledge extraction, each base UIE model is fine-tuned on the optimized dataset using specifically designed prompt templates aimed at maximizing performance. Subsequently, the knowledge extraction performance of these fine-tuned models is verified, and the experimental results are shown in Table 4. For each model, the learning rate is set to 1e-5 and the batch size to 2. To visually demonstrate the recognition effectiveness of the various base UIE models on the dataset, the results are depicted in Figure 8.

From Table 4 and Figure 8, it is evident that the fine-tuned DRE-UIE model achieves the highest F1 score, indicating that it has the best overall performance. This advantage stems from the DRE-UIE model’s larger parameter scale and deeper network structure compared to the medium, micro, mini, and nano models. Specifically, its multi-head self-attention layers enable more effective capture of long-range dependencies and the global contextual information within the textual sequences. This enhanced ability to aggregate and interact with input features makes the DRE-UIE model the preferred knowledge extraction model in this study.

4.3.3. Comparative Experiment Between This Model and Other SOTA Models

To further validate the superior performance of the DRE-UIE model in dietary–emotional knowledge extraction, it was fine-tuned and experimentally compared with SOTA models, including TENER, using 100 samples from the original dataset. Each SOTA model compared has its own characteristics in semantic understanding, feature extraction, and sequence annotation tasks. The experimental results are shown in Table 5, and the corresponding visualizations are shown in Figure 9.

From Table 5, it can be seen that DRE-UIE has the best performance in entity recognition on the original dataset. Notably, adding BERT improves the F1 value of the BiLSTM-CRF model by 19.17%, and the F1 value of the BERT-BiLSTM-CRF model is 2.89% higher than that of Word2vec-BiLSTM-CRF, reflecting its enhanced contextual understanding capabilities. Moreover, the TENER model outperforms the BERT-BiLSTM-CRF model by 15.69% in F1 score, which can be attributed to its use of relative position encoding and a directional attention mechanism that bolster the recognition of low-frequency labels. The DRE-UIE model’s highest F1 value stems not only from ERNIE’s knowledge-enhanced embeddings but, more importantly, from the prompt template design introduced in this study and the fine-tuning performed on the optimized dataset. Because the prompt template incorporates dietary–emotional domain knowledge, it enables the model to better understand the semantic context using prior knowledge, thereby enhancing both the model’s recognition ability and the overall quality of knowledge extraction. Additionally, the prompt template serves as an explicit guide for the model, directing it to accurately extract aspect-level information from the text.

4.3.4. Optimize the Dataset Method Validation

To validate the effectiveness of our proposed optimized dataset construction method in addressing low-resource annotation challenges, we conducted comparative few-shot learning experiments using the original and optimized datasets. This study systematically evaluates label-wise recognition performance across varying sample sizes (5–30 shots). Each experimental configuration was repeated four times, with averaged results recorded in Table 6 and Table 7, while Figure 10 provides a visual representation of the corresponding results in Table 7.

Table 6 reveals consistent performance improvements with increasing sample sizes across both datasets, likely attributable to enhanced feature learning and dietary–emotional semantic understanding. However, as shown in Table 6 and Figure 10, once the number of samples reaches 20-shot, the recognition performance of the model no longer improves as the number of samples increases. This experiment thus identifies 20-shot as the optimal sample size for few-shot learning in the DRE-UIE model.

Table 7 shows that on the original dataset, DRE-UIE achieves a high recognition rate for the five labels of ingredients, food name, praise, blame, and neutral emotion, whereas the recognition rates for other emotion labels remain relatively low. The reason is that these five labels are annotated with a large amount of content and have distinctive features, which makes it easy for the model to understand and distinguish them; labels with sparse annotations and less distinctive features are correspondingly harder to recognize. To evaluate the few-shot learning capability of the DRE-UIE model, 5-shot and 20-shot experiments are conducted. At 5-shot on the original dataset, the model’s recognition rates for disappointment, surprise, and disparagement are effectively zero, with only poor performance for the praise emotion type. The sparse distribution of these emotions hinders the model’s capacity to learn and recognize them. In contrast, when using an equivalent number of optimized data samples, the recognition metrics for these emotions improve markedly, as follows: praise improves by 18%, disparagement and disappointment by about 60%, surprise by 76%, and neutral by 9%. These results, illustrated in Figure 10, demonstrate the effectiveness of our dataset optimization method in mitigating data scarcity problems.

On the basis of obtaining optimal entity recognition results, this paper further conducts relationship extraction experiments on the optimized dataset. The results, presented in Table 8, demonstrate that the model maintains strong performance in relationship recognition on the optimized data.

4.3.5. Model’s Experiment on Emotion Recognition in Chinese and English Corpora

To verify the generalization ability and robustness of the model across languages, the performance of the model on dietary–emotional entity recognition is compared on both the Chinese and English original corpora. The English corpus is sourced from the Yelp website, and the English prompt template is consistent with that of the Chinese setting. The Chinese and English corpora consist of 130 samples each. The experimental results are summarized in Table 9.

From the experimental results in Table 9, it can be seen that the model achieves comparable recognition performance on both Chinese and English corpora, demonstrating strong robustness and generalization capabilities across languages. The possible reasons are as follows: firstly, the original data in Chinese and English used in the experiments are of high quality, providing reliable training and evaluation material. Secondly, the model is effectively designed to handle textual inputs from different languages. Thirdly, the prompt designed in this paper for the text features of food reviews exhibits a high degree of universality. After knowledge extraction and knowledge cleaning of 50,000 Chinese corpora and 1,000,000 English corpora, along with the collection of expressions and kaomoji and the construction of sentiment lexicons, a multivariate dietary–emotional knowledge base was formed. This knowledge base includes 300,000 Chinese triples, 4,960,000 English triples, 1073 expressions, 489 kaomoji, sentiment lexicons containing six emotions each for both Chinese and English, and a total of 1,510,000 emotional words. The dietary–emotional multivariate knowledge base is shown in Figure 11. To demonstrate the associations within the knowledge more intuitively, a knowledge graph is used for visualization. Part of the knowledge graph is shown in Figure 12, including the Chinese knowledge graph (a) and the English knowledge graph (b).