Abstract

Large Language Models (LLMs) have been the cutting-edge technology in natural language processing (NLP) in recent years, making machine-generated text often indistinguishable from human-generated text. By contrast, “rule-based” Natural Language Generation (NLG) and Natural Language Understanding (NLU) algorithms were developed earlier and have performed well in certain areas of NLP. A demanding task today is estimating the quality of the produced text. This process depends on which aspects of the text need to be assessed, ranging from correct grammar and syntax to subtler aspects such as coherence and semantic fluency. Although the performance of LLMs is high, the open question is whether LLMs can cooperate with rule-based NLG/NLU technology, leveraging its strengths to overcome their own weak points. This paper presents the basics of these two families of technologies and the applications, strengths, and weaknesses of each approach, analyzes the different ways of evaluating a machine-generated text, and, lastly, focuses on a first-level approach to possible combinations of these two approaches to enhance performance in specific tasks.

1. Introduction

Artificial Intelligence has been the “talk of the town” in the scientific community during the last few years, making scientists hold their breath regarding its potential, with the most “threatening” question that arises being the following: Will machines replace humans or not? One of the critical aspects regarding this question is how well a machine can mimic the verbal or written skills of a human being.

Natural Language Processing (NLP) is the branch of Artificial Intelligence (AI) responsible for answering the previous challenge. NLP is the umbrella under which Natural Language Generation (NLG) and Natural Language Understanding (NLU) technologies pursue the goals their names suggest. The rise of Large Language Models (LLMs) has triggered a profound paradigm shift, from the older “rule-based” technologies to technologies based on neural networks. Today, LLMs, with their wide-ranging applications, are at the forefront of AI research. However, are the “rule-based” technologies obsolete, or, after acknowledging the advantages and drawbacks of both technologies, could they be combined to achieve increased performance in NLP tasks?

At their core, LLMs owe their cutting-edge performance to the relatively new transformer architecture, scaled up to hundreds of billions of parameters or even more. These neural models undergo extensive training on vast amounts of textual data from a wide range of sources, such as internet content, articles, and book libraries. This extensive pre-training phase equips the models with the ability to discern the statistical patterns and intricacies inherent in human language. Today, LLMs serve an ever-increasing array of NLP applications.

On the other hand, rule-based NLG/NLU algorithms were used extensively in earlier times, being the only available approach for NLP. Rule-based NLU, as a subfield of NLP, provides computers with the appropriate algorithms to comprehend human language. Based on lexicons, grammar rules, semantics, and logic inference rules, these algorithms can extract meanings, name the entities involved, detect the emotions expressed, and highlight the possible intention of a text. NLU is usually followed by the NLG stage, where algorithms focus on generating new text as a natural response to the given prompt. Rule-based NLG/NLU algorithms have excelled in fields like virtual assistants and chatbots, sentiment analysis, text translation, and in applications where numerical or other complex data must be rendered as text in a comprehensible form.

In [1], the authors, after extensively discussing NLP applications for both approaches, give a first-level presentation of their technologies, capabilities, strengths, and weaknesses, contributing to a deeper comprehension of the different ways Artificial Intelligence in general, and language understanding and generation more specifically, benefit from them.

Extending [1], this paper starts by introducing the architecture and operational features of LLM and rule-based NLG/NLU technologies. Next, it presents applications, merits, and limitations of each approach, along with the evaluation procedures used to assess the quality of a machine-generated text. Lastly, it proposes new ways in which these two technologies could be combined to improve their performance. Therefore, this paper aims to highlight the potential synergies of these two technologies and contribute to promising research directions arising from such synergies.

More specifically, Section 2 and Section 3 give a thorough description of the architecture of LLMs and rule-based NLG/NLU. Section 4 outlines the applications, assets, and liabilities of each technology, while Section 5 presents alternative procedures that can be used to evaluate a machine-generated text. Section 6 discusses the different ways of blending these technologies to surpass drawbacks and increase proficiency. Lastly, Section 7 concludes the paper and discusses future trends in the field of NLP.

2. LLMs Technology

Large language models have undergone extensive and intensive research in recent years, providing, in most cases, state-of-the-art performance in a wide variety of tasks. LLMs depend on a vast amount of data to perform adequately and to simulate effectively the language responses, or the more general language capabilities, of a human being. In this direction, research has led to the adoption of a specific kind of deep neural network called a transformer [2].

2.1. Architecture

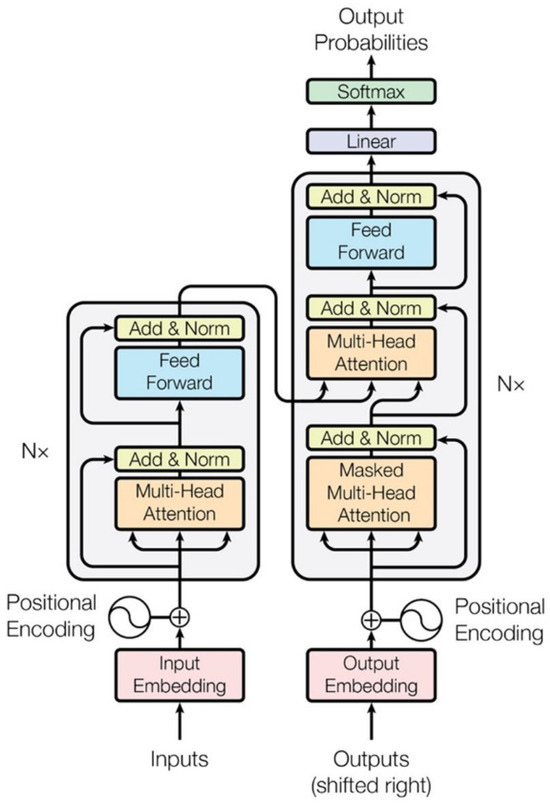

Transformers are the fundamental building block of LLMs [2] (Figure 1). They are a specific type of deep neural network designed to handle textual data effectively; in LLMs, they contain billions of trainable parameters.

Figure 1.

The architecture of a transformer as presented in the original paper [2]. Figure reproduced by permission granted from [3].

2.1.1. The Encoder–Decoder Structure

The transformer’s overall architecture is based on an encoder–decoder structure (left and right schemes in Figure 1). The encoder part (left scheme in Figure 1) receives an input sequence of symbol representations (x1, …, xn), which is mapped by the encoder to a sequence of continuous representations z = (z1, …, zn). Using the encoder’s output, z, the decoder then generates an output sequence (y1, …, ym) of symbols one at a time. At each step, the model is auto-regressive, taking the already generated symbols as additional input in order to generate the next symbol.
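The auto-regressive loop described above can be sketched as follows. This is a minimal illustration, not the procedure of [2]: `greedy_decode`, `toy_next_symbol`, and the end symbol `</s>` are hypothetical names, and the toy function merely stands in for a trained encoder and decoder.

```python
from typing import Callable, List


def greedy_decode(
    next_symbol: Callable[[List[str], List[str]], str],
    source: List[str],
    end_symbol: str = "</s>",
    max_len: int = 20,
) -> List[str]:
    """Generate output symbols one at a time (auto-regressive loop)."""
    generated: List[str] = []
    for _ in range(max_len):
        # The next symbol depends on the input and on everything produced so far.
        symbol = next_symbol(source, generated)
        if symbol == end_symbol:
            break
        generated.append(symbol)
    return generated


def toy_next_symbol(source: List[str], generated: List[str]) -> str:
    """Hypothetical stand-in for encoder + decoder: copies the source in upper case."""
    position = len(generated)
    return source[position].upper() if position < len(source) else "</s>"


print(greedy_decode(toy_next_symbol, ["x1", "x2", "x3"]))  # ['X1', 'X2', 'X3']
```

In a real model, `next_symbol` would run the decoder over the encoder output z and the symbols generated so far, and pick (or sample) the most probable next symbol.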

Encoder: The proposed encoder in [2] is composed of a stack of n = 6 identical layers. Each layer has two sub-layers: a multi-head attention (MHA) mechanism as a first sub-layer, and a simple, position-wise fully connected feed-forward network as a second sub-layer. A residual connection (shortcut connection) is added around each of the sub-layers, followed by layer normalization.

Decoder: The decoder in [2] is also composed of a stack of n = 6 identical layers. In addition to the two sub-layers found in each encoder layer, the decoder inserts a third sub-layer, which performs multi-head attention over the output of the encoder stack. Moreover, the decoder's self-attention sub-layer is masked (Masked MHA), so that each position can attend only to earlier positions of the output sequence. As in the encoder, residual connections are employed around each of the sub-layers, followed by layer normalization.

2.1.2. Multi-Head Attention (MHA)

Transformers build on multi-head attention, which performs scaled dot-product attention on its input h times in parallel (h is the number of heads) and then concatenates the resulting outputs. Dot-product attention is a powerful tool for modeling dependencies and capturing relevant information in data sequences. Specifically, it measures the similarity and relative importance of the various elements (words or tokens), which allows the model to focus selectively on the most crucial parts of the input.

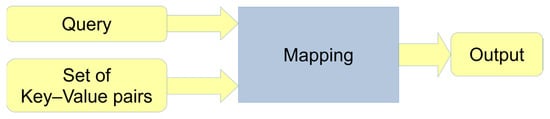

The attention mechanism can be described as the process of mapping a query and a set of key–value pairs to an output (Figure 2); in this case the query, key, value, and the output are all vectors. The result (attention output) is calculated as a weighted sum of the values, where the weight assigned to each value is given by a compatibility function of the query with the corresponding key.

Figure 2.

The attention mechanism (abstract view).
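The mapping described above can be written compactly using the notation of [2]. That notation is not introduced in the text here: Q, K, and V are matrices whose rows are the query, key, and value vectors, d_k is the key dimension, and the W matrices are learned projections.

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V$$

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}, \qquad \mathrm{head}_i = \mathrm{Attention}(Q W_i^{Q}, K W_i^{K}, V W_i^{V})$$

The softmax turns the scaled query–key dot products into weights that sum to one, and the h heads are concatenated before the final projection.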

In the encoder–decoder attention of the proposed model, the keys and the values are both derived from the input words (tokens), each of which is first converted into a hidden state (a word-embedding vector), while the query is derived from the embedding vector of the translated (output) word. Each value carries the information associated with the corresponding position in the input sequence. Last, the output vector is calculated as the sum of the values weighted by the attention scores, which the attention function computes from the query and the keys.
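To make the computation concrete, the sketch below implements scaled dot-product attention for a single query in plain Python. It is an illustration under simplifying assumptions: there are no learned projection matrices, only one head, and the function names and toy vectors are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Compatibility of the query with every key (scaled dot product).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Output = sum of the value vectors, weighted by the attention weights.
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
output = attention([5.0, 0.0], keys, values)
print(output)  # the first component dominates because the query matches the first key
```

A query that is equally compatible with both keys (for example `[0.0, 0.0]`) yields equal weights, so the output is the plain average of the two values.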

2.2. LLMs’ Taxonomy Regarding Their Architecture

Next, we classify specific LLMs regarding the part of the encoder–decoder structure they follow.

2.2.1. Encoder–Decoder LLMs

We review below some representative LLMs that follow the standard encoder–decoder transformer architecture as presented in [2]:

BART

BART (Bidirectional and Auto-Regressive Transformers) [4] uses the standard transformer architecture, except that the ReLU activation functions were replaced with GELUs (Gaussian Error Linear Units). BART features a bidirectional encoder similar to BERT and a left-to-right decoder like GPT.

T5

T5 [5], the Text-to-Text Transfer Transformer, is a model in which transfer learning is effectively exploited for NLP by casting tasks as text-to-text generation. Its authors found that a standard encoder–decoder structure achieved good results on both generative and classification tasks.

2.2.2. Encoder-Only LLMs

BERT (Bidirectional Encoder Representations from Transformers) [7] is one of the most widely used encoder-only language models. It consists of three modules: (1) an embedding module that converts input text into a sequence of embedding vectors, (2) a stack of transformer encoders, and (3) a fully connected output layer. Some improved variants of BERT are RoBERTa, ALBERT, DeBERTa, etc.

2.2.3. Decoder-Only LLMs

GPT

A prominent decoder-only LLM has been the Generative Pre-trained Transformer 1 (GPT-1) [8], developed by OpenAI and released in 2018. This model laid the foundation for more powerful LLMs, such as its successors GPT-2, GPT-3, and GPT-4. When GPT-1 was released, it demonstrated for the first time that good performance over a wide range of natural language tasks can be obtained by generative pre-training of a decoder-only transformer model.

LLaMA

The LLaMA [6] model is based on the decoder-only transformer architecture, with some improvements: (1) Pre-normalization: to improve training stability, the input of each transformer sub-layer is normalized, instead of the output. (2) The SwiGLU activation function. (3) Rotary Positional Embeddings (RoPE Embeddings).

PaLM

PaLM [9], the “Pathways Language Model”, is a densely activated decoder-only transformer pre-trained language model, with some further modifications: (1) SwiGLU activation. (2) Parallel layers: each transformer block uses a “parallel” formulation rather than the standard “serialized” one. (3) RoPE Embeddings.

Gemini

Gemini [10] is a family of highly capable multimodal models developed at Google. It builds on transformer decoders enhanced with improvements in architecture and model optimization to enable stable training at large scale and optimized inference on Google’s Tensor Processing Units (TPUs). The Gemini models were trained jointly across image, audio, video, and textual data in order to build a model with advanced generic capabilities.

2.3. The Different Kinds of Training

A crucial stage in LLMs’ deployment is their training phase. To acquire broad knowledge of the human world, a model goes through a long training phase in which it “digests” a vast amount of information and billions of parameters are adjusted to tune its performance. This process produces the so-called pre-trained model [11]. Different techniques are then employed to enhance the abilities of such a model.

Fine-tuning is an additional phase of training where the pre-trained model gets further “education” on specific tasks and data, augmenting its general knowledge with more refined, specialized knowledge. A different process, instruction-tuning, improves a model’s performance by providing it with explicit instructions and guidance to generate text that aligns with the users’ specific requirements [12]. The idea is to train the language model to answer a wide variety of prompts so that it learns how to respond to new, unseen prompts. In-context learning [13] (also known as few-shot prompting) is a further ability: the user includes one or more examples of inputs and outputs for a given task before prompting the model to complete the same task.
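As an illustration of few-shot prompting, the sketch below assembles an instruction, a few worked examples, and a new input into a single prompt string. The function name, the `Input:`/`Output:` layout, and the sentiment task are assumptions made for this example; no particular model or API is implied.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    new_input: str,
) -> str:
    """Assemble an instruction, worked examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The final "Output:" is left empty for the model to complete.
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The film was wonderful.", "positive"), ("I want my money back.", "negative")],
    "The plot dragged, but the acting was superb.",
)
print(prompt)
```

The model's parameters are not updated in this setting; the examples only condition the completion of the final line.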

Moreover, human evaluation is introduced through Reinforcement Learning from Human Feedback (RLHF) [14]. RLHF is generally based on a reward function, derived from human preference judgments, that the model is optimized against. Finally, multi-step reasoning techniques elicit a reasoning ability in the presented models. For example, Chain-of-Thought (CoT) prompting [3] encourages reasoning by presenting the model with several examples of a problem together with their step-by-step solutions.

Nevertheless, we have to highlight that some of the aforementioned kinds of training, as noted in the literature [15], can be exploited only when the size of models goes beyond a certain limit.

3. NLG/NLU Technology

NLG [16] can be defined as the generation of human-like text without human intervention. NLG systems increase efficiency by automating time-consuming tasks, reducing errors in text, and permitting the production of information that is personalized to individuals. NLG belongs to the broader area known as natural language processing (NLP), and it uses NLU to achieve its purpose.

NLU analyzes the meaning of human language, which means that rather than simply quoting the meanings of individual words that make up sentences, NLU aims to discover connotations and implications existing in human statements, such as the emotion, effort, intent, or goal. NLU enables the comprehension of the sentiments expressed in natural language text.

3.1. NLG Internals

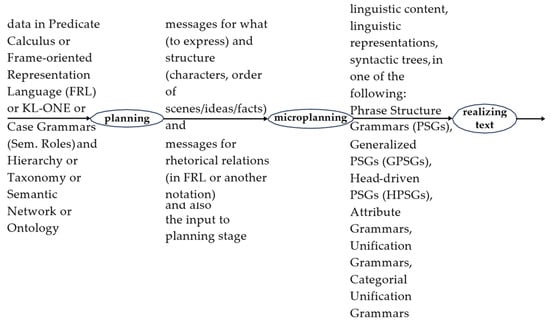

NLG systems and applications need some sources of knowledge, and they are usually organized in three or four stages [17] that perform various steps for the production of discourse/speech. We first briefly describe the stages and the steps performed in each one, which makes it easier to understand what the required sources of knowledge are. We then turn to these sources and to the forms of input and output information for each stage.

Stages and Steps. The stages are Planning, Microplanning, Realizing, and (optionally) Presenting.

Planning is needed for the system/application to decide which parts of the input data are most interesting and should be expressed in the discourse/speech, and what structure the produced discourse/speech will have (communicated ideas/facts, rhetorical relations implicitly provided through the output).

Microplanning is needed in order for the system/application to perform aggregation, anaphora generation, and lexical item selection, and determine the syntactic structure of each sentence/phrase of the output. Aggregation is needed when there are input (structured) data having similar meanings or being obvious consequences of other input data. Microplanning merges all these (similar meanings and obvious consequences) in a single structure/sentence. Anaphora generation is needed for reducing the repetition of entities’ names. Otherwise, the output is monotonous, and obviously artificial. The selection of the appropriate lexical items is another way to make a discourse/speech understandable by the audience and not seem strange. For example, in a discourse/speech about a football game, it is better to use the lexical item “ball” instead of “sphere”. Syntactic structures are needed for structuring the lexical items in a sentence. Sometimes the same concept can be expressed with sentences having different syntactic structures and the same lexical items. Usually, behind the different syntaxes, there are hidden rhetorical relations that the NLG introduces.

Realizing has to do with morphologically and orthographically correct discourse/speech. It deals with inflection (gender, number, and case for noun phrases [NPs]; tense, mood, voice, number, and person for verb phrases [VPs]), orthography, and ordering of adjectives.

Presenting is an optional stage. For written texts, it deals with titles, emphasis (bold), and punctuation marks. For oral speeches, it deals with intonation and sentence type (affirmative, negative, imperative, interrogative, etc.).
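The first three stages can be sketched as a toy rule-based pipeline. Everything here is hypothetical and chosen for illustration: the weather record, its field names, the thresholds, and the tiny verb table. A real system would draw on the knowledge sources discussed in this section.

```python
from typing import Dict, List


def plan(data: Dict[str, float]) -> List[str]:
    """Planning: select the facts worth reporting and fix their order."""
    facts = ["temperature"]
    if data["rain_mm"] > 0:
        facts.append("rain")
    if data["wind_kmh"] >= 40:
        facts.append("wind")
    return facts


def microplan(facts: List[str], data: Dict[str, float]) -> Dict[str, object]:
    """Microplanning: lexical choice and aggregation into one clause."""
    adjectives: List[str] = []
    for fact in facts:
        if fact == "temperature":
            # Lexical item selection driven by the data.
            adjectives.append("warm" if data["temp_c"] >= 20 else "cold")
        elif fact == "rain":
            adjectives.append("rainy")
        elif fact == "wind":
            adjectives.append("windy")
    # Aggregation: facts sharing subject and verb are merged into a single clause.
    return {"subject": "the weather", "verb": "be", "tense": "future",
            "complements": adjectives}


def realize(spec: Dict[str, object]) -> str:
    """Realizing: inflect the verb, coordinate complements, apply orthography."""
    verb_forms = {("be", "future"): "will be", ("be", "present"): "is"}
    verb = verb_forms[(spec["verb"], spec["tense"])]
    items = list(spec["complements"])
    if len(items) > 1:
        complement = ", ".join(items[:-1]) + " and " + items[-1]
    else:
        complement = items[0]
    sentence = f"{spec['subject']} {verb} {complement}."
    return sentence[0].upper() + sentence[1:]


data = {"temp_c": 12.0, "rain_mm": 3.5, "wind_kmh": 15.0}
print(realize(microplan(plan(data), data)))  # The weather will be cold and rainy.
```

Each function consumes the structured output of the previous one, which mirrors the staged organization described above; the optional Presenting stage is omitted.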

The sources of knowledge used in the various NLG stages are presented in Appendix A.1.

3.2. NLU as a Prerequisite for NLG

In some NLG applications, the input data can be knowledge sources in some structured form (Predicate Calculus/PC, Frame-oriented Representation Language/FRL, KL-ONE, case grammars/CGs, or even database records). For example, in a weather forecasting system, the input data are in a structured form. In other cases, the input data can be unstructured (human) natural language text (NLT, e.g., English or Greek text). For example, the input data for a summarization or a machine translation system are usually NLT. Also, the input data for a question answering (Q&A) or a virtual assistant system are short interrogative sentences (a form of NLT). Consequently, before executing their NLG stage, such systems must perform some understanding of the input NLT. By understanding, we mean the translation of NLT to some structured form (PC, FRL, KL-ONE, CG, etc.). In other words, NLU is a prerequisite for NLG.

3.3. NLU Internals

In more technical terms, NLU is the translation of NLT to some knowledge representation (structured) form (e.g., PC, FRL, KL-ONE, CG, etc.). A conceptual/mental perception of NLU is the effort to make computers able to comprehend what the ideas/scenes/facts communicated by an NLT monologue or dialogue are. By “comprehend”, we mean that the computer can extract data in structures that it can maintain, process, and utilize for human-serving applications.

NLU (usually) consists of the following tasks:

- Tokenization;

- Part-of-Speech tagging;

- Syntactic Analysis;

- Structural Disambiguation (Resolution of Syntactic Ambiguity);

- Word Sense Disambiguation;

- Semantic representation;

- Anaphora resolution;

- Optionally, Affective Computing, discourse analysis, and Pragmatics.

Tokenization is the process where the boundaries between words are identified, and the punctuation marks are removed. Tokenization also includes the identification of the root form of a word (the so-called lexeme).

Part-of-Speech tagging is the process for labeling the tokens with their syntactic category (e.g., “noun” (N), “verb” (V), “adjective” (ADJ), etc.). In this stage, it is possible to assign some other features (e.g., tense for verbs, case for nouns, etc.) to each token.
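The two tasks above can be sketched with a regular expression and a lexicon lookup. The mini-lexicon, the tag names, and the `UNK` fallback are hypothetical; a practical rule-based tagger would rely on a full lexicon or on morphology rules.

```python
import re
from typing import Dict, List

# Hypothetical mini-lexicon: inflected form -> (lexeme, POS tag, extra features).
LEXICON = {
    "the": ("the", "DET", {}),
    "dog": ("dog", "N", {"number": "sg"}),
    "dogs": ("dog", "N", {"number": "pl"}),
    "chased": ("chase", "V", {"tense": "past"}),
    "cat": ("cat", "N", {"number": "sg"}),
}


def tokenize(text: str) -> List[str]:
    """Identify word boundaries and drop punctuation marks."""
    return re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text.lower())


def tag(tokens: List[str]) -> List[Dict[str, str]]:
    """Label each token with its lexeme, syntactic category, and other features."""
    tagged = []
    for token in tokens:
        # Unknown words keep their surface form and receive the tag "UNK".
        lexeme, pos, features = LEXICON.get(token, (token, "UNK", {}))
        tagged.append({"token": token, "lexeme": lexeme, "pos": pos, **features})
    return tagged


tokens = tokenize("The dogs chased the cat.")
print(tokens)       # ['the', 'dogs', 'chased', 'the', 'cat']
print(tag(tokens))
```

Here the lexeme is recovered during the lexicon lookup; the text above assigns that step to tokenization, and either placement works for a sketch of this size.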

Syntactic Analysis is the process that checks if a sentence is well formed and returns the derivation/phrase structure tree [18] of the sentence.

Dependency Analysis is an alternative to Syntactic Analysis. Dependency grammars are grammars that define the dependency (grammatical) relations between the words forming a sentence. A dependency tree depicts the head words (up) and the dependent words (down). The head word at the top is the root of the dependency tree. The arcs linking the vertices are labeled with the dependency (grammatical) relations. See more in Chapter 18, “Dependency Parsing”, of [19]. Dependency grammars use a fixed inventory of grammatical relations [20].
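A dependency tree of this kind can be stored as a list of labeled arcs. The sketch below does so for the sentence "The dog chased a cat"; the sentence, the tuple layout, and the helper names are assumptions for illustration, while the relation labels (`nsubj`, `obj`, `det`) follow the Universal Dependencies naming.

```python
from typing import List, Optional, Tuple

# Each arc is (dependent, relation, head); the root word has no head.
Arc = Tuple[str, str, Optional[str]]

ARCS: List[Arc] = [
    ("chased", "root", None),
    ("dog", "nsubj", "chased"),
    ("the", "det", "dog"),
    ("cat", "obj", "chased"),
    ("a", "det", "cat"),
]


def root(arcs: List[Arc]) -> str:
    """Return the head word at the top of the dependency tree."""
    return next(dependent for dependent, _, head in arcs if head is None)


def dependents(arcs: List[Arc], head: str) -> List[Tuple[str, str]]:
    """Return the (dependent, relation) pairs directly below a head word."""
    return [(dependent, relation) for dependent, relation, h in arcs if h == head]


print(root(ARCS))                  # chased
print(dependents(ARCS, "chased"))  # [('dog', 'nsubj'), ('cat', 'obj')]
```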

Structural Disambiguation (Resolution of Syntactic Ambiguity) is a requirement when a grammar assigns more than one possible syntactic/phrase structure tree to the input sentence. See also Chapter 6, “Structural disambiguation”, of [21]. A well-known type of syntactic ambiguity is the prepositional phrase (PP) attachment ambiguity. Selecting the wrong phrase structure tree results in a wrong semantic representation (wrong meaning).

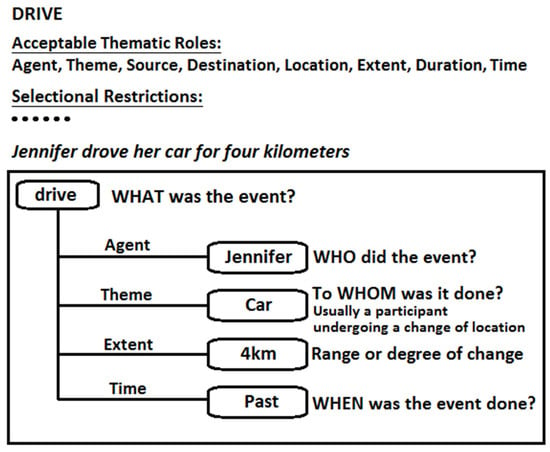

Word Sense Disambiguation. There are cases of polysemy, that is, words having two or more meanings. See also Chapter 4, “Lexical disambiguation”, of [21]. In such cases, it is necessary to have some method for selecting the appropriate meaning. In some cases, the context (the nearby words and the nearby sentences) can resolve the ambiguity [22] of word sense selection. In other cases, restrictions (“selectional restrictions”) on the examined thematic role of the sentence’s verb allow only one of the meanings of the polysemous word. For the application of selectional restrictions, the NLU system must have a knowledge resource: a hierarchy, taxonomy, semantic network, or ontology of meanings.
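The use of selectional restrictions can be sketched as a lookup in a small taxonomy. The senses of "bank", the is-a hierarchy, and the restrictions attached to the verbs "lend" and "erode" are all hypothetical and deliberately simplified.

```python
from typing import Dict, List, Optional

# Hypothetical is-a taxonomy: concept -> parent concept.
TAXONOMY: Dict[str, str] = {
    "financial_institution": "organization",
    "organization": "entity",
    "river_bank": "landform",
    "landform": "location",
    "location": "entity",
}

# Candidate senses of a polysemous word.
SENSES: Dict[str, List[str]] = {"bank": ["financial_institution", "river_bank"]}

# Selectional restrictions: verb -> thematic role -> required category.
RESTRICTIONS: Dict[str, Dict[str, str]] = {
    "lend": {"Agent": "organization"},
    "erode": {"Object": "landform"},
}


def is_a(concept: Optional[str], category: str) -> bool:
    """Walk up the taxonomy to test whether a concept falls under a category."""
    while concept is not None:
        if concept == category:
            return True
        concept = TAXONOMY.get(concept)
    return False


def disambiguate(word: str, verb: str, role: str) -> List[str]:
    """Keep only the senses allowed by the verb's restriction on the given role."""
    required = RESTRICTIONS[verb][role]
    return [sense for sense in SENSES[word] if is_a(sense, required)]


print(disambiguate("bank", "lend", "Agent"))    # ['financial_institution']
print(disambiguate("bank", "erode", "Object"))  # ['river_bank']
```

If more than one sense survives the restriction, the remaining ambiguity has to be resolved by other means, such as the surrounding context.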

Semantic representation. One possible semantic representation of (the meaning of) a sentence is case grammar. The theory was created by the American linguist Charles J. Fillmore in 1968 [23]. It analyzes the surface syntactic structure of sentences by studying the combination of deep cases (i.e., semantic roles) required by a specific verb. Deep cases can be Agent, Object, Beneficiary, Location, Instrument, etc. See more in [24]. As a newer, advanced version of case grammars, we can mention VerbNet [25]. In VerbNet, the available set of possible semantic/thematic roles is much more detailed than the basic ones defined by Fillmore. The VerbNet Reference Page [20] describes all possible thematic roles. At the end of semantic processing, a frame or some other similar structure holding the meaning of a sentence is created. An example of a semantic representation is given in Appendix A.2.

Anaphora resolution. The problem of resolving what a pronoun or a noun phrase refers to is called anaphora resolution. There are various anaphora types. We can mention pronominal (simple pronoun), possessive pronoun, reflexive pronoun, reciprocal pronoun, lexical, and one-anaphora. An anaphora resolution system, based on “c-command” constraints and implemented in Prolog, is discussed by Karanikolas [26]. This system, like every other anaphora resolution solution based on “c-command” constraints, requires detailed syntactic (phrase structure) trees and consequently cannot be implemented when shallow parsing is used.

Affective Computing [27], Discourse Analysis, Rhetorical Relations [28], and Pragmatics [29,30] are not discussed here due to size and focus restrictions.

Resources needed. According to the above discussion, the resources needed are the following:

- Off-the-shelf Part-of-Speech (POS) taggers or morphology rules to create rule-based POS taggers.

- Lexicons containing the lexeme (basic form, e.g., “eat”) and all inflected forms (e.g., “eats” and “eaten”, listed or computed based on inflectional rules) for the same lexeme.

- Repository with Feature Structures for each inflected word form. Features could be mood, tense, voice, number, person for verbs; gender, number, case for nouns; etc. Alternatively, Feature Structures can be created dynamically by software that encodes the relevant rules (morphology, etc.).

- Some semantic network or ontology expressing the relations between different lexemes (e.g., the relation of the Greek noun “τρέξιμο” and the Greek verb “τρέχω”). Alternatively, software implementing word-formation (derivation) rules in order to uncover the relations of lexemes.

- Lexicons for sentiment, affect, and connotation.

- Grammars and parsers (software using the grammars to extract the parse trees).

- Some mechanism for Feature Agreement. For example, the Lexical Functional Grammar (LFG), the Generalized Phrase Structure Grammar (GPSG), and the Head-driven Phrase Structure Grammar (HPSG) are able to check Feature Agreement.

- Case frame/frame-based/slot-filler representations for semantic representation and integration of selectional restrictions.

- Taxonomy/Hierarchy/Semantic Network/Ontology for checking selectional restrictions.

- Explicit knowledge representation of Beliefs for the extraction of the Implicatures that humans draw.

- Some other needed resources for Speech Acts, Rhetorical Relations, and anaphoras.
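As an illustration of two of the resources listed above (Feature Structures and Feature Agreement), the sketch below checks subject–verb agreement by unifying flat feature structures. It is a strong simplification: formalisms such as LFG, GPSG, and HPSG use nested structures and richer unification, and the feature values assigned to the four word forms are hypothetical (for instance, "eat" is treated as plural only).

```python
from typing import Dict, Optional

FeatureStructure = Dict[str, str]

# Hypothetical repository of feature structures for inflected word forms.
FEATURES: Dict[str, FeatureStructure] = {
    "dog": {"number": "sg", "person": "3"},
    "dogs": {"number": "pl", "person": "3"},
    "eats": {"number": "sg", "person": "3", "tense": "present"},
    "eat": {"number": "pl", "tense": "present"},
}


def unify(a: FeatureStructure, b: FeatureStructure) -> Optional[FeatureStructure]:
    """Merge two flat feature structures; return None if a shared feature conflicts."""
    merged = dict(a)
    for feature, value in b.items():
        if feature in merged and merged[feature] != value:
            return None
        merged[feature] = value
    return merged


def agrees(subject: str, verb: str) -> bool:
    """Subject and verb agree when their feature structures unify."""
    return unify(FEATURES[subject], FEATURES[verb]) is not None


print(agrees("dog", "eats"))   # True
print(agrees("dogs", "eats"))  # False
```

Features present in only one structure (such as tense) never block unification; only features present in both with different values do.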

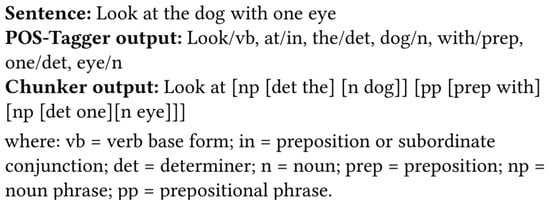

3.4. Shallow Parsing

Deep (complete) parsing (almost) always delivers syntactic ambiguity. The ambiguity can be resolved later by the next steps, e.g., through semantic processing, but this complicates NLU. However, partial parsing (parsing of chunks) can be useful for various NLP tasks like Named Entity Recognition, information retrieval, classification, question answering, virtual assistants, etc. Partial parsing is widely known as shallow parsing [31,32].

A shallow parser usually works in a three-step process. The steps are as follows:

- Word Identification (POS tagging);

- Chunk Identification (using regular expressions, Context-Free Grammars, or Phrase Structure Grammars);

- Merging/splitting of chunks (via rules).

The final chunks, after the third step, are valuable information to feed the Semantic Analysis and Semantic Representation modules of an NLU system. So deep parsing can possibly be replaced by the cheaper shallow parsing. To build a shallow parser, there are two main approaches:

- The rule-based approach requires the definition of a simple chunk grammar;

- The machine learning approach requires us to train a classifier using a supervised classification algorithm and an annotated (with POS and Chunk tags) corpus (collection of texts).

For a shallow parsing example, see Appendix A.3.
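Independently of the appendix, the three-step process of this section can be sketched as a small rule-based chunker. The tag lexicon, the one-letter tag codes, the chunk grammar, and the single merging rule are all hypothetical choices for this illustration.

```python
import re
from typing import List, Tuple

Chunk = Tuple[str, List[str]]

# Step 1 resource: hypothetical word -> POS tag lexicon (unknown words default to N).
TAGS = {"the": "DET", "a": "DET", "quick": "ADJ", "lazy": "ADJ",
        "fox": "N", "dog": "N", "jumped": "V", "over": "P"}
# One-letter codes so that a chunk grammar can be written as regular expressions.
CODES = {"DET": "D", "ADJ": "A", "N": "N", "V": "V", "P": "P"}
# Step 2 resource: a simple chunk grammar over the tag codes.
CHUNK_GRAMMAR = [("NP", re.compile(r"D?A*N+")), ("VP", re.compile(r"V+")),
                 ("P", re.compile(r"P"))]


def pos_tag(tokens: List[str]) -> List[Tuple[str, str]]:
    """Step 1: word identification (POS tagging)."""
    return [(token, TAGS.get(token.lower(), "N")) for token in tokens]


def chunk(tagged: List[Tuple[str, str]]) -> List[Chunk]:
    """Step 2: chunk identification with regular expressions over tag codes."""
    codes = "".join(CODES[tag] for _, tag in tagged)
    chunks: List[Chunk] = []
    position = 0
    while position < len(codes):
        for label, pattern in CHUNK_GRAMMAR:
            match = pattern.match(codes, position)
            if match:
                chunks.append((label, [w for w, _ in tagged[position:match.end()]]))
                position = match.end()
                break
        else:
            # No rule applies: emit the word outside any chunk.
            chunks.append(("O", [tagged[position][0]]))
            position += 1
    return chunks


def merge(chunks: List[Chunk]) -> List[Chunk]:
    """Step 3: merging rule, a preposition followed by an NP forms a PP."""
    merged: List[Chunk] = []
    for label, words in chunks:
        if label == "NP" and merged and merged[-1][0] == "P":
            _, preposition = merged.pop()
            merged.append(("PP", preposition + words))
        else:
            merged.append((label, words))
    return merged


sentence = "The quick fox jumped over the lazy dog".split()
print(merge(chunk(pos_tag(sentence))))
```

For this sentence the chunker yields an NP ("The quick fox"), a VP ("jumped"), and a PP ("over the lazy dog"), without ever building a full parse tree.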

A more detailed presentation of NLG/NLU technology is provided by [33].

3.5. Example Pipelines of NLG/NLU and LLM Technologies

Next, in Table 1, we present indicative pipelines of the two technologies for specific question answering, highlighting the different ways answers are created.

Table 1.

Pipelines of NLG/NLU and LLM technologies for specific question answering.

4. Assessing the Merits and Limitations of LLM-Based NLP and Traditional NLP

In this section, we present the applications and benefits, as well as the limitations of each of the two technologies, LLMs and rule-based NLG/NLU.

4.1. LLM-Based NLP, NLG, and NLU: Benefits Unveiled

Large language models (LLMs), built upon deep learning frameworks, represent revolutionary assets in the domain of natural language processing (NLP), propelling advancements in both LLM-based NLP and general NLP techniques, including Natural Language Understanding (NLU) and Natural Language Generation (NLG). These models exhibit extraordinary capabilities in understanding, generating, and processing human-like text.

LLMs exhibit strengths that have driven their widespread adoption across applications. They can generate text that is often indistinguishable from human-written content and can automate a wide range of tasks across different domains, leveraging their capacity to process vast amounts of text data [34] and learn from it. They also respond quickly, which makes them suitable for real-time applications, and they can be tailored to specific use cases through additional training and fine-tuning [13]. Through large-scale pre-training and fine-tuning, LLMs produce high-quality outputs that mimic human writing styles and show proficiency in tasks such as summarization, translation, and creative writing. They also support interaction in multiple languages.

LLMs also contribute to accessibility. When paired with speech-to-text or text-to-speech solutions, they can assist people with visual impairments, and image captioning, when used by screen readers, can help the same group. When coupled with group and remote tutoring options, these models can contribute to inclusive learning approaches, offering support for tasks like adaptive writing and translation.

Additionally, LLMs excel at extracting meaning and intent from unstructured text data: comprehending nuances, inferring context, and extracting valuable insights. This proficiency is particularly valuable in applications such as sentiment analysis, entity recognition [35], and question-answering systems [36]. Overall, LLMs enhance user experience by providing meaningful and context-aware responses, thereby advancing human–computer interaction and natural language processing capabilities.

Rule-based Natural Language Understanding (Rb-NLU), in the realm of computational linguistics, distinguishes itself by its ability to empower machines to comprehend human language, thereby enabling a diverse array of applications. It enhances user experience by facilitating seamless communication through voice-activated assistants, chatbots, and customer service platforms [37]. Moreover, Rb-NLU revolutionizes information extraction from vast textual data sources. In an era inundated with vast volumes of textual information, Rb-NLU algorithms serve as invaluable tools for discerning meaningful insights. Whether it is sentiment analysis to gauge public opinion [38], entity recognition to identify key entities, or topic modeling to uncover trends, Rb-NLU streamlines the extraction and synthesis of actionable intelligence, empowering organizations to make informed decisions swiftly and effectively. By autonomously categorizing, summarizing, and extracting pertinent information from text, Rb-NLU systems alleviate the burden of manual labor, freeing up human resources to focus on higher-value initiatives. This boosts operational efficiency and productivity, yielding tangible results across sectors. Additionally, Rb-NLU enables personalized content delivery tailored to individual preferences and behaviors. Analyzing user interactions, it offers customized recommendations, news articles, advertisements, and product suggestions, enhancing user engagement and fostering brand loyalty [39]. From a societal perspective, Rb-NLU promotes accessibility by democratizing digital interfaces. Voice-activated assistants and text-to-speech systems make technology more inclusive for individuals with developmental disabilities [40], elderly populations, and non-native speakers. 
As Rb-NLU technology continues to evolve and mature, its transformative impact on human–machine interaction, information retrieval, decision-making processes, and accessibility is poised to reshape the digital landscape, driving innovation and progress in the years to come.

Rule-based Natural Language Generation (Rb-NLG) offers a myriad of benefits across various industries, primarily driven by its ability to automate the creation of human-like text. One of its key advantages lies in enhancing communication and engagement by generating personalized content tailored to individual preferences [41]. This personalized approach fosters stronger connections between businesses and their customers, leading to improved customer satisfaction and loyalty. Another significant benefit of Rb-NLG is its capacity to streamline and accelerate content production processes. By automating the generation of reports, summaries, and product descriptions, Rb-NLG enables organizations to increase efficiency and reduce time-to-market [42]. This increase in efficiency not only conserves resources but also enables teams to dedicate their efforts to tasks of greater significance that demand human ingenuity and analytical thinking. Rb-NLG also plays a pivotal role in data-driven decision-making by transforming complex datasets into actionable insights presented in easily understandable language. By converting raw data into narrative form, Rb-NLG enables stakeholders to grasp key information quickly, facilitating faster and more informed decision-making processes. This capability is particularly valuable in fields such as healthcare, marketing, and finance, where thorough data examination is of utmost importance. Furthermore, Rb-NLG contributes to accessibility by making information more readily available to individuals with disabilities. By converting text-based content into alternative formats such as audio or braille, Rb-NLG helps bridge the accessibility gap, ensuring that everyone can access and comprehend the information regardless of their abilities. In addition to accessibility, Rb-NLG enhances multilingual communication by automatically translating content into different languages [43]. 
This capability not only facilitates global outreach but also ensures linguistic accuracy and consistency across diverse markets, thereby strengthening brand reputation and credibility on a global scale.

The synergy between Rb-NLG and Rb-NLU, facilitated by LLMs, unlocks numerous benefits across various domains and industries. For instance, in healthcare, these systems, empowered with image captioning for diagnostic images of MRIs and X-rays, can automate accurate medical report generation [44], while Rb-NLU capabilities aid in analyzing patient records to support clinical decision-making. Similarly, in finance, these models enable the automated generation of financial reports and the extraction of insights from market news and social media data. Moreover, LLMs contribute to advancements in human–computer interaction by enabling more natural and intuitive communication interfaces. Chatbots, virtual assistants, and recommendation systems leverage Rb-NLG to deliver personalized responses, while Rb-NLU capabilities enhance user interactions by understanding and responding to user queries and commands accurately. Text classification and image captioning can help detect fraud in text, classify our email or legal documents, and can automatically recognize and flag offensive or damaging information within text or images.

Overall, the integration of LLMs, Rb-NLG, and Rb-NLU holds promise for revolutionizing how humans interact with machines and process natural language data. Table 2 contains, point-by-point, the comparative merits of both technologies.

Table 2.

Comparative merits of LLM-based vs. rule-based NLP systems.

4.2. Navigating the Limitations of LLM-Based NLP, Rb-NLG, and Rb-NLU

Large language models (LLMs) have attracted considerable interest due to their remarkable capabilities in generating human-like text. However, they are not without limitations. One major drawback is their voracious appetite for computational resources. Training and fine-tuning LLM-based NLP systems require immense computing power and energy, resulting in negative environmental impact [45], rendering them inaccessible to numerous individuals and organizations constrained by limited resources. Furthermore, LLM-based NLP systems struggle with context understanding and coherence, particularly when generating longer passages of text or responding to nuanced prompts. While they excel at surface-level understanding and can produce grammatically correct sentences, LLM-based NLP systems often lack deeper comprehension of context, leading to nonsensical or irrelevant responses in certain situations. This limitation hampers their utility in applications requiring a nuanced understanding and contextual relevance. From an economic standpoint, LLM-based NLP systems present a risk of displacing jobs in certain sectors by automating tasks that have historically been carried out by humans. This situation calls for societal adaptations and the implementation of new approaches to workforce training [46]. Another worry is cultural uniformity, where the prevalence of certain languages and cultures in the training data of LLM-based NLP systems could result in the misrepresentation or underrepresentation of minority cultures [46]. Privacy issues arise because LLMs have the capability to unintentionally remember and reveal sensitive personal data, which poses a major concern in environments with different standards for data protection [46]. Additionally, LLMs raise concerns about intellectual property and content ownership [45]. Since they are trained on vast amounts of copyrighted text, questions arise regarding the ownership and licensing of the generated content. 
Determining the legal rights and responsibilities associated with LLM-generated text poses challenges for content creators and users alike, especially in commercial or proprietary contexts. Issues regarding transparency and accountability also surface due to the challenge of identifying who is responsible for the outputs of large language models and the opaque nature of these models, often referred to as black box systems [46]. Another limitation of LLMs is their susceptibility to biases present in the data they are trained on. This risk is particularly evident in varied social settings, possibly resulting in unjust or damaging consequences, due to the creation of discriminatory or stereotypical content [46]. Given that LLMs have absorbed vast quantities of text created by humans, there is a constant possibility that the flow of conversation could veer in directions unintended or unforeseen by the designer of the chatbot [47]. For instance, a mental health chatbot could offer a higher frequency of negative remarks compared to human therapists [48]. Additionally, there is a chance that unethical or prejudiced expressions present in the datasets used for training the models might surface in the chatbot’s responses, leading to the dissemination of socially biased [49] or toxic language [50]. Finally, there are ethical considerations surrounding the potential misuse of LLMs for nefarious intents, such as impersonating individuals, spreading misinformation, or fabricating fake news. The democratization of powerful language generation technology brings with it the risk of misuse and manipulation, necessitating robust safeguards and ethical guidelines to mitigate these risks and promote responsible usage. Overall, while LLMs offer immense potential, addressing their limitations and ethical considerations is crucial for harnessing their benefits responsibly and equitably.

Rb-NLU systems, while powerful, also have their limitations. One significant challenge lies in the ambiguity of natural language and its contextual understanding. Given that natural language inherently contains ambiguity, words and phrases frequently possess diverse interpretations depending on the context. This ambiguity manifests across syntactic, semantic, and lexical levels, where sentences can be parsed into various syntactical forms, words may carry multiple interpretations, and individual terms might harbor diverse meanings [51]. Rb-NLU systems may struggle to accurately interpret ambiguous language, leading to errors or misunderstandings in their understanding of user inputs. Another limitation is the difficulty in handling complex language structures and nuances. Human language is rich in nuances, idioms, and colloquialisms that can be challenging for Rb-NLU systems to grasp accurately. This complexity can result in misinterpretations or misclassifications of user intents, impacting the effectiveness of Rb-NLU-driven applications. Furthermore, Rb-NLU systems may face difficulties with out-of-vocabulary words or rare language patterns. While they may excel at understanding common language constructs, encountering unfamiliar vocabulary or unconventional language usage can pose challenges for Rb-NLU models, affecting their ability to accurately process user inputs. Additionally, Rb-NLU systems are prone to biases present in the training data [52]. Like other machine learning models, Rb-NLU systems learn from large datasets, which may contain biases based on factors such as demographic trends or societal stereotypes. These biases can be inadvertently perpetuated by Rb-NLU systems, leading to skewed or unfair outcomes in their understanding and processing of natural language inputs. Moreover, Rb-NLU typically necessitates significant quantities of labeled training data to reach peak efficiency. 
Obtaining and annotating such datasets can prove to be time-intensive and expensive, particularly in niche domains or languages with restricted resources. This data dependency can hinder the scalability and accessibility of Rb-NLU solutions, particularly for smaller organizations or niche applications. Finally, privacy and security concerns arise with the use of Rb-NLU systems [53], particularly in applications involving sensitive user data. Rb-NLU models may inadvertently reveal private information or be vulnerable to adversarial attacks aimed at manipulating their outputs. Safeguarding user privacy and ensuring the security of Rb-NLU-driven applications is essential to building trust and fostering widespread adoption of these technologies.

Rb-NLG offers numerous benefits, but it also has its limitations. One significant challenge is the difficulty in generating truly human-like and contextually appropriate text [54]. Rb-NLG systems require an extensive understanding of human behavior, language, and communication patterns to effectively interact with humans. Given the intricate nature of these aspects, Rb-NLG systems necessitate a considerable amount of complex knowledge. This complexity is a significant factor contributing to the difficulty in acquiring knowledge for Rb-NLG systems [55]. While Rb-NLG systems can produce grammatically correct sentences, they may struggle with nuances of language, resulting in output that lacks the fluency and coherence of human writing. Another challenge in Rb-NLG arises from Content Determination, which involves determining the domain necessary for generating text based on input. This task is influenced by diverse communicative goals, as different users may require varying content to fulfill their objectives. Furthermore, the expertise level of users and the content within the system’s information sources add layers of complexity to this process [54]. Balancing these factors to produce contextually appropriate and meaningful content presents a formidable challenge for Rb-NLG systems. Furthermore, Rb-NLG systems may struggle with handling ambiguity and generating natural variations in language. Human language is inherently diverse and dynamic, with multiple ways to express the same idea. Rb-NLG systems may have difficulty capturing this richness and may produce output that feels robotic or unnatural to human readers. Additionally, the performance of Rb-NLG systems could be restricted by both the quantity and quality of accessible training data. Training Rb-NLG models demands extensive text data, and the caliber of this data can profoundly influence the effectiveness of the resultant system. 
Rb-NLU models frequently encounter unintentional biases within their datasets, leading to the acquisition of erroneous simplifications and resulting in inaccurate or overly specific predictions [35]. Moreover, Rb-NLG systems may face challenges in generating content that is truly insightful or creative. While they can automate the production of factual information or straightforward narratives, Rb-NLG systems may struggle with tasks requiring higher-level reasoning or imaginative storytelling. Finally, privacy and ethical concerns may arise with the use of Rb-NLG systems, particularly in applications involving sensitive or proprietary information. Rb-NLG systems may inadvertently disclose confidential information or perpetuate biases present in the training data. Safeguarding against these risks and ensuring responsible use of Rb-NLG technology is essential to building trust and mitigating potential harm.

Table 3 contains the comparative limitations of both technologies with respect to several required features.

Table 3.

Comparative limitations of LLM-based vs. rule-based NLP systems.

5. Evaluation

Viewing technological advances in their entirety, one could argue that, although machines achieve remarkable results in areas such as automation, industry, space exploration, and many more, far exceeding and “extending” human abilities, their performance in understanding or generating language is very impressive but not always equally excellent. From the introduction of the Turing test in 1950 until the recent introduction of LLMs, humans have been trying to assess the correctness, coherence, reliability, and other characteristics concerning the quality of machine-generated text.

Interestingly, current research has shown that there is not a unified approach when the issue of evaluating such a text arises. This is because text generation and understanding are applied in a large variety of tasks, each one exhibiting its own requirements. For example, chatbots need to give coherent and accurate responses to a customer’s questions, using mostly everyday language, while an essay needs to be rich in context and coherent, with a more formal use of language. Consequently, evaluation metrics need to focus on the demands of each separate task.

Moreover, tasks can be divided into two categories. In the first, which includes summarization and translation, a reference text can exist that serves as the gold standard for the expected response (a text that meets all the requirements the task poses and that the automated text will ideally match). In the second, which includes chatbots, no such text can exist, and ad hoc responses are expected instead. “Reference” texts are mostly provided by humans, who prepare properly annotated datasets for different kinds of applications to help assess machine-produced responses.

In the following, there is a brief presentation and discussion regarding the evaluation metrics that have been defined, up to today’s LLM-based evaluation.

5.1. The Metrics

According to the above, and before going deeper into the process of evaluation, we must acknowledge that the gold standard of text is human-generated text. Humans are almost irreplaceable for tasks requiring a detailed understanding and qualities like sensitivity or creativity. Moreover, humans prevail when metrics such as coherence and fluency (grammatical and semantical) are concerned. Consequently, the closer we get to the gold standard, the better the overall quality of the produced text is.

The evaluation metrics that were initially introduced, often called automatic metrics (e.g., BLEU score, ROUGE score, or Perplexity), focused only on the surface, that is, the morphology, and not on the semantics, text nuances, or generated emotions. Most of them are based on a reference text and measure the similarity between the reference and the generated text. Therefore, they seem to fail to capture similarity when sentences use different structures and vocabulary, despite leading to the same semantics. In addition, they are characterized by limited interpretability and a limited range of evaluation features that they can assess.
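To illustrate why surface-overlap metrics can miss paraphrases, the following minimal sketch computes a clipped n-gram precision, the basic ingredient of BLEU-style scores. It is not the full BLEU metric, which also combines several n-gram orders and a brevity penalty; the function name and the example sentences are illustrative assumptions.

```python
from collections import Counter


def clipped_ngram_precision(candidate: str, reference: str, n: int = 1) -> float:
    """Fraction of candidate n-grams that also occur in the reference (counts clipped)."""

    def ngrams(text: str) -> Counter:
        tokens = text.lower().split()
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram is credited at most as often as it appears in the reference.
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    return overlap / total


reference = "the cat sat on the mat"
verbatim = "the cat sat on the mat"
paraphrase = "a feline rested upon a rug"  # same meaning, no shared words

print(clipped_ngram_precision(verbatim, reference))
print(clipped_ngram_precision(paraphrase, reference))
```

The paraphrase shares no tokens with the reference, so an overlap-based score treats it as a complete mismatch even though the meaning is preserved.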

With the introduction of deep learning algorithms, the process of evaluation has been enriched with new, model-based metrics. Metrics like BERTScore [56] and BARTScore [57], for example, use those models’ different language representations (embeddings) and generation probabilities to compute similarity. These metrics have been extensively used because they seem to succeed in capturing the overall quality of the text in aspects such as fluency, coherence, coverage, and faithfulness. However, researchers [58] question their adequacy and show that automatic evaluation still lags behind human evaluation, pointing to aspects that require improvement.
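The idea of embedding-based similarity can be sketched with a toy example. The code below performs greedy token matching by cosine similarity, in the spirit of BERTScore, but it uses a tiny hand-written embedding table instead of a contextual language model. The table, the function names, and the vectors are all assumptions made for illustration, so the numbers carry no empirical meaning.

```python
import math

# Hypothetical 2-D "embeddings", chosen by hand so that synonyms lie close together.
TOY_EMBEDDINGS = {
    "cat": (1.0, 0.1), "feline": (0.9, 0.2),
    "sat": (0.1, 1.0), "rested": (0.2, 0.9),
    "car": (-1.0, 0.3),
}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def greedy_f1(candidate, reference):
    """Match every token to its most similar counterpart, then combine P and R."""
    cand = [TOY_EMBEDDINGS[t] for t in candidate]
    ref = [TOY_EMBEDDINGS[t] for t in reference]
    precision = sum(max(cosine(c, r) for r in ref) for c in cand) / len(cand)
    recall = sum(max(cosine(r, c) for c in cand) for r in ref) / len(ref)
    return 2 * precision * recall / (precision + recall)


print(greedy_f1(["feline", "rested"], ["cat", "sat"]))  # paraphrase
print(greedy_f1(["car", "sat"], ["cat", "sat"]))        # one unrelated word
```

Unlike an exact-match metric, the paraphrase receives a high score here because its tokens are close to the reference tokens in the embedding space.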

Nowadays, we are all witnesses to the fact that the advent of LLMs has broadened the use of machine-generated text; however, it has not always done so accurately or faultlessly. Notably, recent research has suggested the use of LLMs in one more task, that of measuring their own performance [59,60,61,62]. Although strange at first (the creator is also the judge of its own creation), this suggestion proves to be very promising [63]. LLMs and their emergent abilities have performed extremely well when estimating the quality of texts. Processes like fine-tuning, instruction-tuning, and reinforcement learning from human feedback seem to come to their aid.

5.2. LLM-Driven Evaluation

In [64], the authors present a full description of the ongoing research regarding the use of LLMs in the process of evaluation. Moreover, several benchmarks have been developed recently to support the evaluation process; these are usually devoted to specific tasks and measure specific aspects of performance (e.g., BigBench [65], ChatEval [66], HumanEval [67]). In addition, certain benchmarks have been developed recently to cover the rising need to evaluate performance on modalities other than text, like images, video, or time-series data (Koala [68], MTBench [69]). In what follows, the different ways an LLM can be exploited to assist evaluation are described, along with a short description of the resulting benefits and drawbacks.

Prompting LLMs: LLMs’ feature of prompting can be leveraged, helping both the evaluation criteria and the process to be explained to the system in natural language. Specifically, strong LLM features such as the Chain-Of-Thought process (e.g., G-Eval [70]) and instruction-tuning [71] advance evaluation: humans can coach an LLM, giving it a full set of instructions and paradigms in natural language.
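A prompt-based evaluator of this kind could be sketched as follows. The prompt wording, the 1–5 scale, and the `llm` callable are assumptions introduced for illustration; `fake_llm` is a stand-in so that the example runs without any model, and a real system would replace it with a call to an actual LLM.

```python
import re
from typing import Callable, Optional


def build_eval_prompt(criterion: str, source: str, output: str) -> str:
    """Explain the criterion and the expected answer format in natural language."""
    return (
        f"You are evaluating a generated text for {criterion}.\n"
        "First explain your reasoning step by step, then give a final line "
        "of the form 'Score: <1-5>'.\n\n"
        f"Source:\n{source}\n\nGenerated text:\n{output}\n"
    )


def parse_score(reply: str) -> Optional[int]:
    """Extract the final score, or None if the reply does not follow the format."""
    match = re.search(r"Score:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def evaluate(llm: Callable[[str], str], criterion: str, source: str, output: str) -> Optional[int]:
    return parse_score(llm(build_eval_prompt(criterion, source, output)))


def fake_llm(prompt: str) -> str:
    # Hypothetical canned reply standing in for a real model call.
    return "The summary covers the main point and reads smoothly.\nScore: 4"


print(evaluate(fake_llm, "coherence", "Long source article ...", "Short summary ..."))
```

Asking for the reasoning before the score mirrors the Chain-of-Thought idea, and returning `None` for malformed replies keeps unparseable judgements out of the aggregated results.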

Fine-tuning: Fine-tuning, when applied to LLMs, helps them “get educated” in niche tasks, in order to further improve their efficiency. However, fine-tuning can also be leveraged in the evaluation process, as in [62]. Using prepared, annotated evaluation data, an LLM can be tuned to focus on, and consequently improve, certain evaluation aspects regarding text generation. More specifically, the practice that is becoming standard in recent research is to avoid using heavy, energy-intensive LLMs and to replace them with more compact, open-source ones. These are fine-tuned to improve evaluation assessments, aiming to achieve performance equivalent to that of a more elaborate LLM. The training dataset usually includes carefully selected examples of annotated data, mostly provided by humans, of both adequate and inadequate outputs, along with annotations regarding aspects like coherence, factual accuracy, and creativity. After fine-tuning, LLMs can be used to measure other LLMs’ output.

Human–LLM Collaborative Evaluation: As already mentioned, although very competent, LLMs cannot always replace humans, especially in more demanding tasks that need nuanced understanding and/or human intuition and consciousness. On the other hand, human evaluation is labor-intensive. Therefore, collaboration between humans and LLM systems can leverage their advantages and diminish their shortcomings, achieving robust and reliable evaluations (EvalGen [72]). RLHF (Reinforcement Learning from Human Feedback) [73] is one such paradigm, where humans reward LLMs when they give the response that is best aligned with human preferences. An extensive study on this task is presented in [74], where researchers, aiming to improve (separately) the helpfulness and harmlessness of the generated text, employed crowdworkers to act as reward estimators and explored different ways of training.

Peer Review and Discussion: Exactly like humans, LLMs can be used in the process of peer review (aiming for a more elaborate evaluation process), where the drawbacks of a single LLM will be balanced by the strong points of other LLMs. The main idea is to challenge several LLMs to generate text relevant to a certain prompt, and then call them to evaluate each other’s output, concurrently generating feedback regarding the strengths and weaknesses in each case.
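The peer-review idea can be sketched as a loop in which every model's output is scored by all the other models and never by its author. The `judge` callable and the toy scoring rule below are assumptions; in practice, `judge` would wrap a call to the reviewing LLM and could also return written feedback.

```python
from statistics import mean
from typing import Callable, Dict


def peer_review(outputs: Dict[str, str], judge: Callable[[str, str], float]) -> Dict[str, float]:
    """Average the scores each output receives from all models except its author.

    Requires at least two models; otherwise there is no peer to review with.
    """
    results = {}
    for author, text in outputs.items():
        peer_scores = [judge(reviewer, text) for reviewer in outputs if reviewer != author]
        results[author] = mean(peer_scores)
    return results


def toy_judge(reviewer: str, text: str) -> float:
    # Placeholder rule standing in for an LLM call: longer answers score higher, capped at 5.
    return min(5.0, len(text.split()) / 2)


outputs = {
    "model_a": "Paris is the capital of France.",
    "model_b": "Paris.",
    "model_c": "The capital of France is Paris, on the Seine.",
}
print(peer_review(outputs, toy_judge))
```

Excluding the author from its own review is one simple way to reduce the self-preference effect that a single LLM judge may exhibit.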

5.3. Assets and Liabilities of LLM-Based Evaluation

Besides the above-mentioned benefits, a strong point in adopting LLMs in the process of evaluation is that of auditing the trail of responses. LLMs’ responses can be fully explained, facilitating interpretability and a high correlation with human judgements. In addition, the diversity of the used models, along with the respective diverse training phase, helps to present equally diverse evaluation points, covering more aspects of the text quality. Moreover, LLMs can evaluate a large volume of text outputs quickly, making them suitable for large-scale tasks.

However, by having an LLM assess another LLM’s output, potential biases and drawbacks of the specific model might arise. Research has shown that the length of a text seems to play a role in evaluation, and that there seems to be a preference for factual rather than grammatical errors, affecting LLMs’ scoring [60]. In addition, it seems that there might be a bias towards texts that are generated by the same LLM that conducts the evaluation, or that LLMs might present inferior performance in closed-ended scenarios. Moreover, robustness arises as an issue, as has been shown through adversarial perturbations and other stress tests [73], conducted to assess the stability of LLMs’ evaluation in different challenging scenarios. Language can also affect the performance since there seems to be a difference in performance when text in Latinate or non-Latinate languages is considered.

Another important issue is efficiency, because LLMs are extremely demanding of time and computational resources, especially those with large volumes of parameters. Collaboration between humans and LLMs can balance efficiency and cost; however, the respective price needs to be paid compared to the fully automated version. Fairness is also a critical factor in the use of LLMs. LLMs, both in the process of generating text and when evaluating it, might present social bias to a significant degree, depending on the training they have undergone.

Lastly, another aspect of evaluation that only recently has come into practice is that of attribution [75]. Considering the hallucinations that are often present in LLM-produced responses, resulting in undermining their reliability, researchers have tried to alleviate this problem. By introducing external tools that focus on incorporating references, they try to justify the correctness of the response. However, research has shown that only half of the presented references really account for it. Therefore, automatic evaluation methods are proposed to be applied to examine attribution.

Reflecting on the above and on the peculiarities present in different text-understanding or text-generation tasks, one should not consider older metrics as obsolete. On the contrary, a deep understanding of text nuances that influence each metric is required to follow a thorough and valid evaluation procedure.

Below, in Section 6, where possible collaboration of LLMs and rule-based NLP (Rb-NLP) systems is described, we propose a procedure for the evaluation of collaboration patterns in specific tasks.

6. LLMs Working with Rule-Based NLG/NLU

The collaboration between LLMs and rule-based NLG/NLU technologies in hybrid systems has the potential to produce numerous advances and state-of-the-art performance across various domains and tasks. Older NLP technologies like rule-based systems (RBSs) are not really outdated, though they may seem to represent a step backward from newer deep-learning technologies (i.e., LLMs). RBSs are still competitive in text-understanding and -generation tasks, especially in specific domains such as medical or legal texts, while having lower operational demands. On the other hand, LLMs have proven to be more flexible, presenting naturalness and expressing nuances that align closely with human responses. The question that naturally arises is whether the “old” and the “new” can cooperate to improve each other’s performance. Below, we introduce some prominent examples of state-of-the-art systems incorporating both LLMs and Rb-NLU/NLG techniques.

In the domain of educational question-answering chatbots, and university admission chatbots in particular, Nguyen and Quan [76] propose the Unified RAG (URAG) architecture to enhance lightweight LLMs. URAG creates a two-tiered approach by combining the flexibility of Retrieval-Augmented Generation (RAG) with the dependability of rule-based systems. To provide precise answers to frequently asked questions, particularly those involving sensitive or important information, the first tier makes use of an extensive frequently asked questions (FAQ) system. If there is no match in the FAQ, the second tier finds pertinent documents in an enriched database and creates an LLM response. RAG is now a commonly used method for developing LLM-based question-answering (QA) systems. This method is preferred because of its cost-effectiveness and its capacity to integrate the generative power of LLMs with retrieval-based techniques. The Retriever and the Generator are the two main parts of a typical RAG pipeline.
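A two-tier flow of this kind might look like the sketch below. It is not the implementation from [76]: the string-similarity matching, the threshold value, the FAQ entries, and the `retrieve` and `generate` callables are all assumptions chosen to keep the example self-contained.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict, List, Tuple


def similarity(a: str, b: str) -> float:
    return SequenceMatcher(None, a, b).ratio()


def two_tier_answer(
    question: str,
    faq: Dict[str, str],
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str, List[str]], str],
    threshold: float = 0.8,
) -> Tuple[str, str]:
    """Tier 1: curated FAQ lookup. Tier 2: retrieval followed by LLM generation."""
    normalized = question.lower().strip(" ?!.")
    best = max(faq, key=lambda known: similarity(normalized, known))
    if similarity(normalized, best) >= threshold:
        return faq[best], "faq"
    return generate(question, retrieve(question)), "rag"


# Placeholder data and stubs (hypothetical, for demonstration only).
FAQ = {
    "what is the application deadline": "See the deadline published on the admissions page.",
    "how much is the tuition fee": "See the fee table published by the finance office.",
}


def toy_retrieve(question: str) -> List[str]:
    return ["(retrieved passage placeholder)"]


def toy_generate(question: str, documents: List[str]) -> str:
    return f"Answer drafted from {len(documents)} retrieved passage(s)."


print(two_tier_answer("What is the application deadline?", FAQ, toy_retrieve, toy_generate))
print(two_tier_answer("Is there on-campus parking for visitors?", FAQ, toy_retrieve, toy_generate))
```

Returning the tier label alongside the answer makes it easy to audit which responses came from the curated FAQ and which were generated.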

Additionally, in the field of domain-specific chatbots, Panagoulias et al. [77] introduce their method, called “Med—Primary AI assistant,” which is a chatbot focused on user interactions with medical software that is powered by AI and offers medical advice in the form of suggested diagnostics and symptom analysis. They establish the framework of domain-specific knowledge and the user-relevant domain-specific content using natural language processing (NLP) methods. This improves the experience of interacting with AI in a medical setting. The system, which makes use of the GPT-4 engine, has been thoroughly tested using multiple-choice questions that center on general pathology symptomatology.

Sanchi et al. [78] advocate for LLMs to advance the accessibility, usage, and explainability of rule-based legal systems, contributing to a democratic view and utilization of legal technology. The issue of explaining the intricate syntax and specialized vocabulary used in legal provisions to laypeople has been discussed since the 1960s. So, they developed a methodology to explore the potential use of LLMs for translating the explanations produced by rule-based systems, allowing all users a fast, clear, and accessible interaction with such technologies. Their study continues by building upon these explanations to empower common people—not trained in law, science, or legal matters—with the ability to execute complex juridical tasks on their own, using prompting (they define it as a Chain-of-Prompts—CoP—approach) for the autonomous legal comparison of different rule-based inferences, applied to the same factual case.

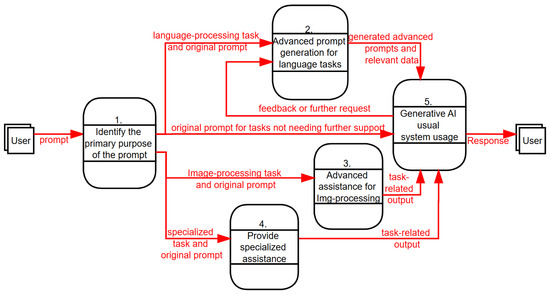

Next, extending the efforts described above, certain architectures of such a collaboration are given in Section 6.1, along with task-based collaboration examples in Section 6.2. Later, in Section 6.3, a proposal for evaluating a collaboration of Rb-NLP and LLM patterns is given, and, in Section 6.4, detailed examples of use cases entailing such a collaboration are described.

6.1. Architecture-Based Collaboration from a Generic Standpoint

In the following, the serial and parallel architectures of collaboration are described.

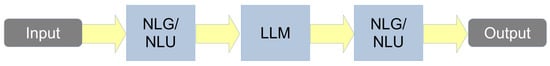

6.1.1. Serial Collaboration

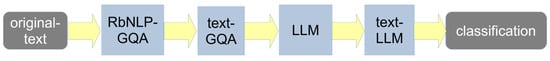

A hybrid LLM and rule-based NLG/NLU system could be designed by implementing the serial architecture presented in Figure 3. In the first stage, an NLG/NLU system (first box in Figure 3) receives the input text, i.e., the user’s prompt, and runs a thorough syntactic and linguistic check. For example, it may analyze the user’s input and filter out irrelevant or meaningless text, semantically incorrect sentences, etc. In the second stage, the first system’s output is provided as input to an LLM (second box in Figure 3). The LLM completes its execution and passes its own output (depending on the task, this may be masked language modeling, question answering, translation, etc.) to an NLG/NLU system again (third box), which then applies an advanced refinement (e.g., a syntactic and linguistic check) to the LLM’s response and provides the final output.

Figure 3.

Serial collaboration of rule-based NLG/NLU and LLMs.
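The three stages of the serial architecture can be sketched as a simple function composition. The specific rules (whitespace normalization, rejection of prompts without words, removal of immediately repeated words, final punctuation) and the `toy_llm` stub are assumptions standing in for the much richer checks a real rule-based component would perform.

```python
import re
from typing import Callable


def rule_based_precheck(prompt: str) -> str:
    """Stage 1: normalize the prompt and reject input that contains no words."""
    cleaned = re.sub(r"\s+", " ", prompt).strip()
    if not re.search(r"[A-Za-z]", cleaned):
        raise ValueError("prompt contains no recognisable words")
    return cleaned


def rule_based_refine(text: str) -> str:
    """Stage 3: light surface repair of the LLM response."""
    text = re.sub(r"\s+", " ", text).strip()
    # Collapse immediately repeated words such as "the the".
    text = re.sub(r"\b(\w+)( \1\b)+", r"\1", text, flags=re.IGNORECASE)
    if text and text[-1] not in ".!?":
        text += "."
    return text[:1].upper() + text[1:]


def serial_pipeline(prompt: str, llm: Callable[[str], str]) -> str:
    """Rule-based check -> LLM -> rule-based refinement."""
    return rule_based_refine(llm(rule_based_precheck(prompt)))


def toy_llm(prompt: str) -> str:
    # Hypothetical model output with deliberate surface flaws.
    return f"the the answer to '{prompt}' is  42"


print(serial_pipeline("  what   is six times seven ", toy_llm))
```

Because each stage only consumes the previous stage's output, any of the three components can be replaced independently.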

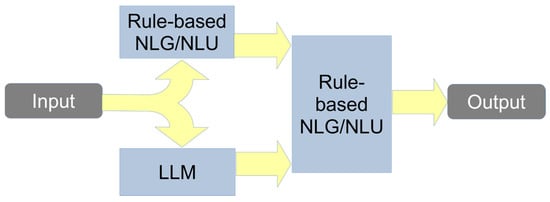

6.1.2. Parallel Collaboration

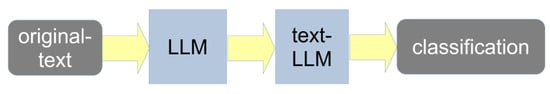

Another example of a hybrid system’s architecture, combining LLMs and rule-based NLG/NLU in a parallel way, is depicted in Figure 4. The input text (user’s prompt) is inserted in a parallel way into two systems, a rule-based NLG/NLU and an LLM, as shown in the figure. The two systems will provide their output according to the relevant task, and these two outputs will be combined, refined, and/or summarized by a rule-based NLG/NLU system again, which is responsible for producing the final system’s output. In addition to the outputs that the two parallel systems give in the first phase, they will also provide the reasoning process through which they have arrived at their response, in order to enhance the comprehensibility of the next step. Finally, the next rule-based NLG/NLU part could evaluate the two processes and give as the final output the response that is logically better explained and supported.

Figure 4.

Parallel collaboration of rule-based NLG/NLU and LLMs.
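The parallel architecture can be sketched in the same style. Both systems return an answer together with their reasoning steps, and a final rule-based arbiter selects the better-supported response. The scoring heuristic used here (counting the reasoning steps that mention a term from the prompt) is only a placeholder assumption, as are the two stub systems.

```python
from typing import Callable, List, NamedTuple


class Response(NamedTuple):
    answer: str
    reasoning: List[str]


def support_score(prompt: str, response: Response) -> int:
    """Placeholder heuristic: number of reasoning steps that refer to the prompt."""
    prompt_terms = set(prompt.lower().split())
    return sum(1 for step in response.reasoning if prompt_terms & set(step.lower().split()))


def parallel_pipeline(
    prompt: str,
    rule_based: Callable[[str], Response],
    llm: Callable[[str], Response],
) -> Response:
    candidates = [rule_based(prompt), llm(prompt)]
    # max() keeps the first maximal element, so ties favour the rule-based system.
    return max(candidates, key=lambda r: support_score(prompt, r))


def toy_rule_based(prompt: str) -> Response:
    return Response("Yes", ["keyword termination found in clause", "keyword notice found"])


def toy_llm(prompt: str) -> Response:
    return Response("Probably", ["it sounds like a standard agreement"])


chosen = parallel_pipeline("does the clause mention termination notice", toy_rule_based, toy_llm)
print(chosen.answer, chosen.reasoning)
```

In a real system, the arbiter would apply a more principled check of the two reasoning traces, but the control flow would remain the same.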

6.2. Tasks That Would Highly Benefit from LLMs and Rule-Based NLG/NLU Collaboration

Named Entity Recognition—Part-of-Speech Tagging

Firstly, LLMs’ performance seems to have room for improvement in Named Entity Recognition (NER) and in Part-of-Speech (POS) tagging, tasks where traditional rule-based NLP methods are known to work very well. When such a task is needed, traditional methods could work at a pre-processing level, performing NER or POS tagging on the given prompt, before passing their output as an aid to an LLM, which then carries on with the user’s request. NER is a key component of NLP systems, such as chatbots, sentiment analysis applications, and search engines.
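Such a pre-processing step could be sketched as follows, using regular expressions and a small gazetteer as the rule-based recognizer. The patterns, the gazetteer entries, and the annotation format appended to the prompt are illustrative assumptions, not a complete NER system.

```python
import re
from typing import List, Tuple

MONTHS = (
    "January|February|March|April|May|June|July|"
    "August|September|October|November|December"
)
PATTERNS = [
    ("DATE", re.compile(rf"\b\d{{1,2}} (?:{MONTHS}) \d{{4}}\b")),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
]
# Hypothetical gazetteer of known names.
GAZETTEER = {"Athens": "LOCATION", "Greece": "LOCATION"}


def rule_based_ner(text: str) -> List[Tuple[str, str]]:
    """Return (entity, label) pairs found by the patterns and the gazetteer."""
    entities = [(m.group(), label) for label, pattern in PATTERNS for m in pattern.finditer(text)]
    entities += [
        (name, label)
        for name, label in GAZETTEER.items()
        if re.search(rf"\b{re.escape(name)}\b", text)
    ]
    return entities


def annotate_prompt(prompt: str) -> str:
    """Append the detected entities so that a downstream LLM can use them."""
    tags = "; ".join(f"{text} = {label}" for text, label in rule_based_ner(prompt))
    return f"{prompt}\n\n[Entities detected by rules: {tags or 'none'}]"


print(annotate_prompt("Send the form to info@example.org before 5 March 2025 in Athens."))
```

The annotated prompt is then handed to the LLM unchanged, so the rule-based output acts as an aid rather than a constraint.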

Emotion Identification—Sentiment Analysis

In the same direction, identifying prompts that entail emotions, determining whether machine-produced text is written in a certain emotional state, or even whether it evokes emotions in a reader, are all aspects of text processing that could be assisted by traditional NLP algorithms. Traditional NLP is acknowledged to be effective at identifying emotions; therefore, passing the user’s prompt first through an RBS could help identify them. As a second step, this outcome could be given, along with the original text, to an LLM to assist its performance regarding emotional nuances. In addition, at a post-processing level, an RBS could assist in detecting and, consequently, removing offensive content that might arise in an LLM’s response.
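Both roles of the RBS described above, detecting sentiment before the LLM and masking offensive content after it, can be sketched with simple lexicons. The word lists are tiny placeholder assumptions; a practical system would rely on curated lexicons and would also handle negation and intensity.

```python
import re

# Hypothetical miniature lexicons, for illustration only.
POSITIVE = {"great", "happy", "love", "excellent"}
NEGATIVE = {"sad", "angry", "terrible", "hate"}
BLOCKLIST = {"idiot", "stupid"}


def lexicon_sentiment(text: str) -> str:
    """Pre-processing: label the prompt by counting lexicon hits."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    return "negative" if score < 0 else "neutral"


def mask_offensive(text: str) -> str:
    """Post-processing: replace blocklisted words in the LLM response with asterisks."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, sorted(BLOCKLIST))) + r")\b", re.IGNORECASE
    )
    return pattern.sub(lambda m: "*" * len(m.group()), text)


print(lexicon_sentiment("I hate waiting, this is terrible"))
print(mask_offensive("Don't be stupid."))
```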

Chatbots

NLU systems and LLMs enable chatbots to interact with humans in a more accurate, knowledgeable, and personalized manner. The interaction between LLMs and NLU systems enables chatbots to keep a coherent conversational flow. While the LLM consults its knowledge base and reacts suitably, the NLU system offers intent recognition and rule-based consistency within conversations. This way, far more interesting conversations are produced, which simulate human-to-human interactions.

Reasoning—Logical Coherence

Another liability present in LLMs is their reasoning process, especially in sensitive tasks that need a certain degree of reliability. Although extremely competent in producing text, situations come up where the LLM’s response presents nonsensical inconsistencies with respect to the given prompt. Studies have already focused on this issue, trying to improve reasoning, or else logical coherence, mostly through the Chain-of-Thought (CoT) process. Traditional NLP could act as a guardrail, checking whether the prompt and resulting text follow a certain reasoning process or not. In case they do not, human collaboration could be a solution to help the LLM with extra information to improve its performance.
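As one narrow example of such a guardrail, the sketch below checks whether the simple arithmetic statements in an LLM response are internally consistent and escalates to a human when they are not. Restricting the check to integer equations of the form `a op b = c` is an assumption made to keep the rule small; logical coherence in general requires far broader checks.

```python
import re
from typing import List, Tuple

EQUATION = re.compile(r"(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)")
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}


def find_inconsistencies(response: str) -> List[str]:
    """Return every stated equation whose right-hand side is wrong."""
    errors = []
    for a, op, b, claimed in EQUATION.findall(response):
        if OPS[op](int(a), int(b)) != int(claimed):
            errors.append(f"{a} {op} {b} = {claimed}")
    return errors


def guardrail(response: str) -> Tuple[str, List[str]]:
    """Accept consistent responses; route inconsistent ones to a human reviewer."""
    errors = find_inconsistencies(response)
    return ("needs_human_review", errors) if errors else ("accepted", [])


print(guardrail("We have 3 + 4 = 7 apples, then 7 * 2 = 15."))
print(guardrail("2 + 2 = 4"))
```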

Text Annotation—Dataset Production

Older models seem to be less demanding of resources; consequently, they are still applied in many situations. In a “reverse” direction, LLMs could assist the work of older models by being utilized for annotation purposes. LLMs present human-like behavior in the process of annotation, a necessary process to produce varied datasets. This necessity is especially prominent in environments where there is a shortage of such datasets, and they could help to improve the performance of older models.
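The “reverse” direction can be sketched as a small annotation loop in which an LLM proposes a label for each unlabeled text and a rule keeps only the labels that belong to the allowed set. The `llm_label` callable, the label set, and the stub labeler are assumptions; rejected items would typically be sent to a human annotator.

```python
from typing import Callable, Iterable, List, Set, Tuple

Example = Tuple[str, str]


def annotate(
    texts: Iterable[str],
    llm_label: Callable[[str], str],
    allowed_labels: Set[str],
) -> Tuple[List[Example], List[Example]]:
    """Split LLM-labelled texts into a usable dataset and a rejected pile."""
    dataset, rejected = [], []
    for text in texts:
        label = llm_label(text).strip().lower()
        (dataset if label in allowed_labels else rejected).append((text, label))
    return dataset, rejected


def toy_labeler(text: str) -> str:
    # Hypothetical stand-in for an LLM call that returns a category name.
    return "Divorce " if "divorce" in text.lower() else "unsure"


texts = ["The parties agree to divorce.", "The weather was mild."]
dataset, rejected = annotate(texts, toy_labeler, {"divorce", "theft"})
print(dataset)
print(rejected)
```

The resulting `dataset` could then be used to train or tune a lighter, older model for the same task.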

6.3. Proposal for Evaluating Potential Collaboration of Rb-NLP and LLM Patterns

In what follows, we introduce an evaluation process for a hybrid system in which Rb-NLP and LLM-NLP sub-systems collaborate. The task we select is text classification, but the presented evaluation procedure could potentially be applied to other NLP tasks, according to the needs of the user.

Firstly, we select a suitable dataset containing a large number of texts pre-classified into various categories, which may or may not be mutually exclusive; e.g., the selected dataset could be a collection of legal cases with relevant categories such as divorce, homicide, theft, drugs, pimping, etc.

Next, we feed each text (legal case) of the dataset into the following alternative text classification patterns:

- System #1 is composed of two sub-systems, an Rb-NLP system and an LLM-NLP system, in this order, as shown in Figure 5. The Rb-NLP stage passes the text, together with generic question-answering (GQA) information, to the LLM stage, which then acts as a generative stage that adds more context to the original text, producing a text-LLM. The text-LLM is an adorned, enriched, and extended text. Finally, the system classifies the text-LLM into one of the above-mentioned categories.

Figure 5. System #1.

- System #2 consists of two sub-systems, an LLM-NLP system and an Rb-NLP system, in this order, as shown in Figure 6. It executes the same steps as System #1 but with the LLM-NLP and Rb-NLP sub-systems in reverse order, producing a new intermediate text, the text-GQA. Finally, the system classifies the text-GQA into one of the above-mentioned categories.

Figure 6. System #2.

- System #3 is a pure LLM-NLP system, as shown in Figure 7, producing a text-LLM. The text-LLM is an adorned, enriched, and extended text. Finally, it classifies the adorned, enriched, and extended text into one of the above-mentioned categories.

Figure 7. System #3.

After prompting the three systems with every legal case in the dataset, the success rate of the classification task (the fraction of cases assigned to the correct category) is calculated for each of them. Comparing these success rates identifies the best-performing collaboration pattern.
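The comparison step can be sketched as follows. This is a minimal illustration, assuming single-label (mutually exclusive) categories and treating each of the three systems as an opaque function from a text to a predicted category; the names `success_rate` and `compare_systems` are hypothetical, and the internals of the Rb-NLP and LLM-NLP stages are deliberately not modeled.

```python
from typing import Callable, Sequence

# A system is modeled as any callable mapping a text to one predicted category.
Classifier = Callable[[str], str]


def success_rate(system: Classifier, texts: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of texts for which the system predicts the gold category."""
    if len(texts) != len(gold):
        raise ValueError("texts and gold labels must have the same length")
    if not texts:
        raise ValueError("the dataset must not be empty")
    correct = sum(system(text) == label for text, label in zip(texts, gold))
    return correct / len(texts)


def compare_systems(
    systems: dict[str, Classifier],
    texts: Sequence[str],
    gold: Sequence[str],
) -> tuple[str, dict[str, float]]:
    """Return the name of the best system and the success rate of every system."""
    rates = {name: success_rate(fn, texts, gold) for name, fn in systems.items()}
    best = max(rates, key=rates.get)  # ties resolve to the first system listed
    return best, rates
```

For a dataset with overlapping categories, the equality test inside `success_rate` would have to be replaced by a multi-label measure; that choice is left open here.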

We believe that this evaluation procedure could be used as a compass and should be executed whenever we need to create a high-performance, state-of-the-art NLP system. Engineers and researchers, using the above-mentioned evaluation procedure, can find the optimum way to harness the power of both Rb-NLP and LLM-NLP systems.

6.4. Specific Use Cases of Collaboration

Next, certain examples of collaborating systems are described in the areas of sentiment analysis, translation, and voice-to-text conversion.

6.4.1. NLG/NLU Hybrid System for Emotion Identification–Sentiment Analysis (EI-SA)

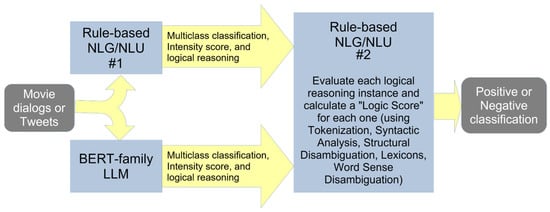

At this point, we would like to present, in more detail, the architecture of a hybrid system that could combine rule-based NLG/NLU and LLMs in a parallel way, as shown in Figure 8. The output of the system is a two-way text classification of the input: positive or negative.

Figure 8. NLG/NLU hybrid system for Emotion Identification–Sentiment Analysis (EI-SA).

In the training phase, a dataset from [79], which follows Ekman’s emotion classification model, consisting of six basic emotions (anger, fear, sadness, joy, disgust, and surprise), can be used. Such datasets (following Ekman’s model, plus a neutral category) are the DailyDialog dataset, comprising 13k movie dialogs in the English language, and the EmoEvent dataset, comprising 8.5k tweets, also in English. This way, we would have a dataset with a quite satisfactory number of different emotions (categories) and a medium size of total sentences (examples).

Regarding the LLM part, a member of the BERT family, such as RoBERTa, DeBERTa, or DistilBERT, is employed. This choice is justified by the fact that this family appears to be widely used and supported, both in the research community (as described in the state-of-the-art literature) and on the Hugging Face AI platform/community (https://huggingface.co/ (accessed on 4 June 2025)), for the specific task of EI-SA and for text classification in general.

Further on, the "Rule-based NLG/NLU #1" part consists of the following steps:

- Tokenization;

- Part-of-Speech tagging;

- Syntactic Analysis;

- Structural Disambiguation;

- Word Sense Disambiguation;

- Rules specifically designed for EI-SA.

These first two stages (BERT and Rule-based NLG/NLU #1) will accomplish three sub-tasks:

- Solve the multiclass classification problem. They would classify the input sentence (a movie dialog or a Tweet) into one of the emotions/categories of Ekman’s emotion classification model [79]. This way, we would have one or two distinct categories for each input. For example, the Tweet “ultimately I will lose you forever because I feel like my only role now would be to tear your sails with my pessimism and discontent” could be classified as “fear” by the Rule-based NLG/NLU #1 stage and as “sadness” by the LLM stage, or as “sadness” by both of them.

- Calculate an intensity score of the relevant class. For example, the class “fear” could receive an intensity measure of 0.4 from the Rule-based NLG/NLU #1 stage, or the class “sadness” could receive an intensity measure of 0.99 from the LLM stage.

- Provide some logical reasoning output. This would be in order to enhance the comprehensibility of the next step, namely the Rule-based NLG/NLU #2 stage.

Next, the Rule-based NLG/NLU #2 stage would receive the above-mentioned set of inputs: the multiclass classification, the intensity scores, and the text-based logical reasoning. This stage would apply a set of specific rules in order to come up with a single, final output: positive or negative classification. The rules implemented inside the Rule-based NLG/NLU #2 stage have to be designed and implemented on a use-case or task basis.
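Since the rules of the Rule-based NLG/NLU #2 stage are left to be designed per task, the following is only one conceivable rule, shown to make the data flow concrete. The polarity table (joy as positive, anger, fear, sadness, and disgust as negative, surprise and neutral as zero), the signed-sum rule, the tie-break toward "negative", and the names `StageOutput`, `POLARITY`, and `fuse` are all assumptions of this sketch rather than part of the proposed system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageOutput:
    """What each first-stage component hands over to the second rule-based stage."""
    label: str           # one of Ekman's six emotions, or "neutral"
    intensity: float     # intensity score in [0, 1]
    reasoning: str = ""  # free-text justification, carried along but unused here


# Assumed polarity of each emotion; a real rule set would be task-specific.
POLARITY = {
    "joy": 1, "anger": -1, "fear": -1, "sadness": -1,
    "disgust": -1, "surprise": 0, "neutral": 0,
}


def fuse(rule_based: StageOutput, llm: StageOutput) -> str:
    """Combine both stage outputs into a single positive/negative decision."""
    score = sum(POLARITY[out.label] * out.intensity for out in (rule_based, llm))
    # Assumed tie-break: a non-positive score is reported as "negative".
    return "positive" if score > 0 else "negative"
```

With the intensities used in the example above ("fear" at 0.4 from the rule-based stage and "sadness" at 0.99 from the LLM stage), the signed sum is negative, so this rule would output "negative".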

Evidently, the above-mentioned architecture/system must be compared with a pure LLM and a pure rule-based NLG/NLU system, respectively, to be able to argue that the performance of the presented system is superior and reaches the state-of-the-art level. This is among our plans for future work.

6.4.2. Rule-Based NLUs for the Improvement of LLMs’ Translations

The following two sentences are in the Greek language, and they have exactly the same meaning, even though they have some surface differences:

#1Gr. Δεν ξέρω εάν η ανεπιφύλακτη χρήση των επιβαλλόμενων άνωθεν κλισέ από τους κατέχοντες θέσεις ευθύνης είναι συνέπεια της εν μέρει ικανότητας αντίληψης τους ή/και της εν μέρει κοινωνικής ευαισθησίας τους ή/και της εν μέρει αυτοκυριαρχίας τους.

[Den xero ean i anepifylakti chrisi ton epivallomenon anothen klise apo tous katechontes theseis efthynis einai synepeia tis en merei ikanotitas antilipsis tous i/kai tis en merei koinonikis evaisthisias tous i/kai tis en merei aftokyriarchias tous.]

#2Gr. Δεν ξέρω εάν η ανεπιφύλακτη χρήση από τους κατέχοντες θέσεις ευθύνης των επιβαλλόμενων άνωθεν κλισέ είναι συνέπεια της εν μέρει ικανότητας αντίληψης τους ή/και της εν μέρει κοινωνικής ευαισθησίας τους ή/και της εν μέρει αυτοκυριαρχίας τους.

[Den xero ean i anepifylakti chrisi apo tous katechontes theseis efthynis ton epivallomenon anothen klise einai synepeia tis en merei ikanotitas antilipsis tous i/kai tis en merei koinonikis evaisthisias tous i/kai tis en merei aftokyriarchias tous.]

Unfortunately, the translations from applications using LLMs do not have the same meaning:

#1En. I don’t know if the unreserved use of clichés imposed from above by those in positions of responsibility is a consequence of their partly perceptive ability and/or partly their social sensitivity and/or partly their self-control.

#2En. I don’t know if the unreserved use by those in positions of responsibility of the clichés imposed from above is a consequence of their partial ability to perceive and/or their partial social sensitivity and/or their partial self-control.

#1En should have “their partial” in all three marked (with bold) positions in order to be the correct translation of #1Gr. #2En is a correct translation of #2Gr.

Let us analyze #1Gr, providing human-prepared English translations for the reader’s convenience:

(I don’t know (oblique question—A))

(I don’t know (if (main sentence—B)))

(I don’t know (if ((secondary nominal sentence—C) is a consequence (lined-up sentences—D))))

C stands for the original utterance “η ανεπιφύλακτη χρήση των επιβαλλόμενων άνωθεν κλισέ από τους κατέχοντες θέσεις ευθύνης”, and it is indicated with a solid underline above. Its translation (prepared by a human) is “the unreserved use of clichés imposed from above by those holding positions of responsibility”.

D stands for the original utterance “της εν μέρει ικανότητας αντίληψης τους ή/και της εν μέρει κοινωνικής ευαισθησίας τους ή/και της εν μέρει αυτοκυριαρχίας τους”, and it is indicated with dotted underline above. Its translation (prepared by a human) is “their partial perception ability and/or their partial social sensitivity and/or their partial self-control”.

The syntax (constituency) tree of the translated English C utterance is as follows:

    np_ss (det (the), adj (unreserved), noun (use),
        pp1 (prep (of), np1 (noun (clichés), adj (imposed),
            pp3 (prep (from), noun (above)))),
        pp2 (prep (by), np2 (those holding positions of responsibility)))

Let us now analyze #2Gr, again providing human-prepared English translations for the reader’s convenience.

It has the same construction (generation) as #1Gr. The only difference is that C is replaced by C”.

C” stands for the original utterance “η ανεπιφύλακτη χρήση από τους κατέχοντες θέσεις ευθύνης των επιβαλλόμενων άνωθεν κλισέ”, and it is indicated with the dashed underline above. Its translation (prepared by a human) is “the unreserved use by those holding positions of responsibility of clichés imposed from above”.

The syntax (constituency) tree of the translated English of the C” utterance is as follows:

    np_ss (det (the), adj (unreserved), noun (use),
        pp2 (prep (by), np2 (those holding positions of responsibility)),
        pp1 (prep (of), np1 (noun (clichés), adj (imposed),
            pp3 (prep (from), noun (above)))))

Our analysis for C and C” has been primarily done for the original utterances in Greek. We presented here the analysis with the English (by a human) translations for convenience, and because the syntactic trees between Greek and English versions are almost identical, and the conclusions about the relation of C and C” are exactly the same for both languages. C and C” have the same syntax except that in C” there is left extraposition [80] of np2.

Consequently, sentence #2Gr is the same as #1Gr, except that the constituent (secondary nominal sentence) C has been replaced by C”, in which there is left extraposition [80] of np2 (those holding positions of responsibility), possibly to give emphasis to np2. This is a discourse analysis phenomenon (a hidden path) that does not change the meaning of the complete, isolated sentence #2Gr. This knowledge, i.e., the conclusion drawn by a Rule-based NLU that the two sentences have the same semantics and the same syntax with the exception of an extraposition, is what will be fed to an LLM-based translator. It will drive the translator to try another solution/pattern of generation and return a correct translation of the #1Gr sentence.

Another, even simpler, conclusion drawn by a Rule-based NLU is that D (indicated above with a dotted underline) is exactly the same in both the #1Gr and #2Gr sentences and, consequently, should have been translated identically in #1En and #2En. As before, this will drive the translator to try another solution/pattern of generation and return a correct translation of the #1Gr sentence.
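The structural observation about the two nominal constituents can be checked mechanically. The sketch below encodes the two constituency trees given earlier as nested tuples and tests whether they contain the same constituents once sibling order is ignored. It is a deliberately coarse approximation of what a Rule-based NLU would conclude: ignoring order at every level is an assumption of this sketch, and the names `C_TREE`, `C_ALT_TREE`, `canonical`, and `same_constituents` are hypothetical.

```python
# Each node is (label, child, ...); a child is either another node or a word string.
PP1 = ("pp1", ("prep", "of"),
       ("np1", ("noun", "clichés"), ("adj", "imposed"),
        ("pp3", ("prep", "from"), ("noun", "above"))))
PP2 = ("pp2", ("prep", "by"),
       ("np2", "those holding positions of responsibility"))
HEAD = (("det", "the"), ("adj", "unreserved"), ("noun", "use"))

C_TREE = ("np_ss", *HEAD, PP1, PP2)      # constituent of sentence #1
C_ALT_TREE = ("np_ss", *HEAD, PP2, PP1)  # reordered constituent of sentence #2


def canonical(tree):
    """Return an order-insensitive form of a tree by sorting the children of every node."""
    if isinstance(tree, str):
        return tree
    label, *children = tree
    return (label, *sorted((canonical(child) for child in children), key=repr))


def same_constituents(a, b) -> bool:
    """True if two trees contain the same constituents, ignoring sibling order."""
    return canonical(a) == canonical(b)
```

When `same_constituents` holds for two source constituents whose surface order differs, a hybrid system could flag any divergence between their translations as suspicious and ask the LLM-based translator for another candidate.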

6.4.3. Rule-Based NLUs for the Improvement of LLM’s Active-to-Passive Syntax Conversions

A more specific kind of language request is the transformation of utterances/sentences from active to passive syntax. We examine it specifically for the Greek language. To this end, we tried a range of example Greek sentences in active syntax and asked several LLM-based systems to provide their equivalents in passive syntax. We found that the LLM transformation works well for sentences with some categories of verbs but performs very poorly for others. Among the latter, we single out the so-called “deponent verbs” (“αποθετικά ρήματα” [apothetika rimata] in Greek). These verbs have only passive morphology (the endings of verbs in the passive voice) but an active mood [81]. We first illustrate the contrast with a counter-example (a non-deponent verb).

The Greek verb (ρήμα) “πλένω” [pleno] has an active morphology (ending “-ω”) and an active mood and means “wash”. As an example, we provide the sentence “(Εγώ) πλένω το πουκάμισο μου” [(Ego) pleno to poukamiso mou], which is translated into English as “I am washing my shirt”. For this verb, there is also its corresponding one in passive morphology (voice) and passive mood. It is the verb “πλένομαι” [plenomai] (the passive morphology is denoted by the ending “-ομαι”). The corresponding sentence in passive syntax is “Το πουκάμισο μου πλένεται (από εμένα)” [To poukamiso mou plenetai (apo emena)], and it is translated as “My shirt is being washed (by me)”.