Deep Edge IoT for Acoustic Detection of Queenless Beehives

Abstract

1. Introduction

2. Materials and Methods

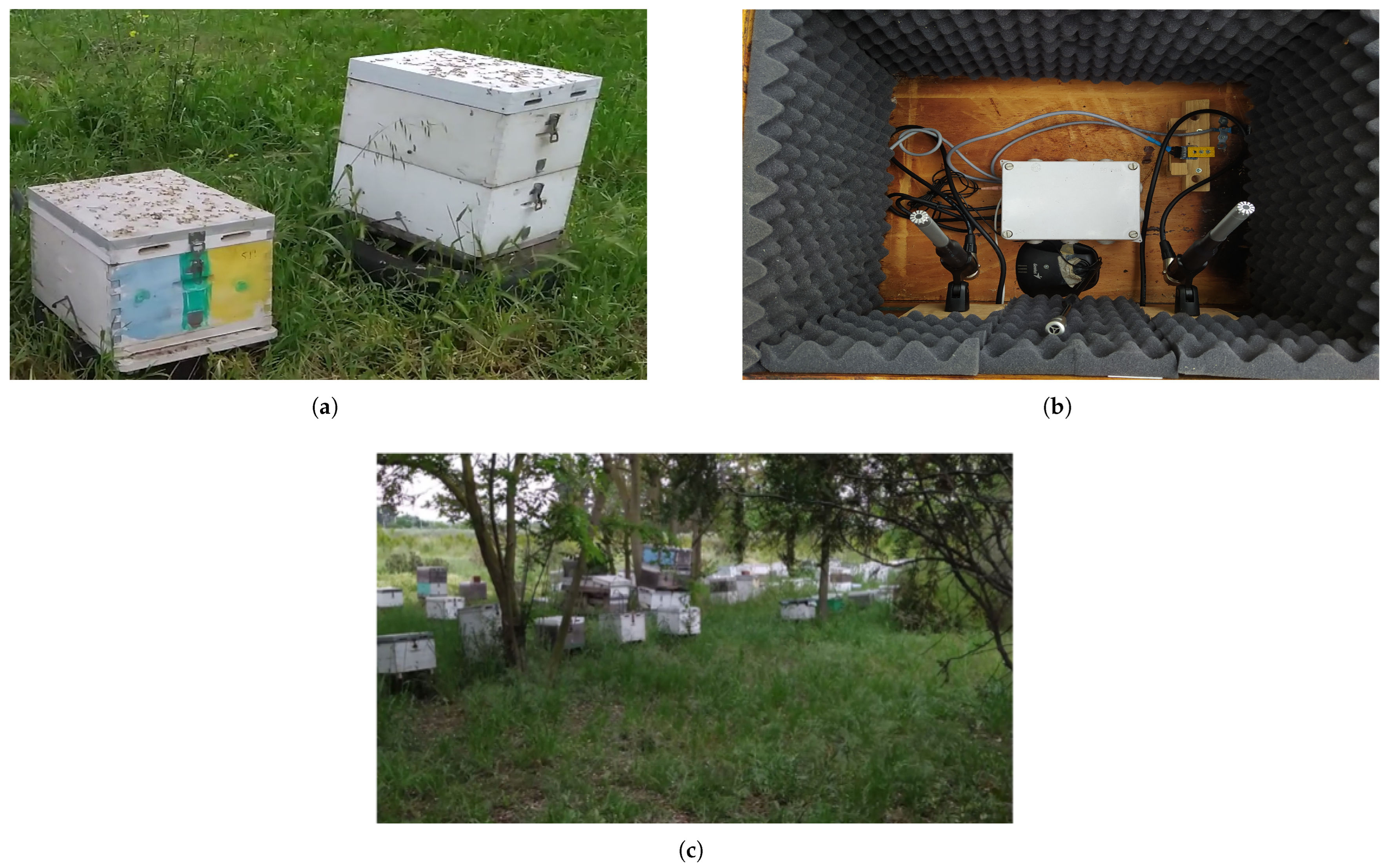

2.1. Dataset Acquisition

2.2. Pre-Processing—Feature Extraction

2.3. Feature Importance

2.4. Machine Learning Approach

2.5. Hardware Implementation

- high-quality sampling rate: 44.1 kHz;

- system sampling rate: 4–16 kHz;

- recording duration: 60 s;

- audio content: sounds of an active hive;

- placement of the two microphones (high-quality and system’s): in close proximity inside the hive;

- the analysis of the recordings was performed using the Welch method.

3. Results

3.1. PCA

3.2. ML Models Evaluation

3.3. Hardware Implementation

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| IoT | Internet of Things |

| ML | Machine Learning |

| TL | Transfer Learning |

| MFCC | Mel Frequency Cepstral Coefficients |

| HHT | Hilbert Huang Transform |

| LPC | Linear Predictive Coding |

| STFT | Short-Time Fourier Transform |

| DL | Deep Learning |

| NN | Neaural Networks |

| SVM | Support Vector Machine |

| KNN | K-Nearest Neighbor |

| MLP | Multilayer Perceptron |

| RF | Random Forest |

| CNN | Convolutional Neural Networks |

| LSTM | Long Short-Term Memory |

| FFT | Fast Fourier Transform |

| DCT | Discrete Cosine Transformation |

| PCs | Principal Components |

| PCA | Principal Component Analysis |

| LR | Logistic Regression |

| TP | True Positive |

| TN | True Negative |

| FP | False Positive |

| FN | False Negative |

| lr | learning rate |

| FC | Fully Connected |

References

- Kanelis, D.; Liolios, V.; Papadopoulou, F.; Rodopoulou, M.A.; Kampelopoulos, D.; Siozios, K.; Tananaki, C. Decoding the Behavior of a Queenless Colony Using Sound Signals. Biology 2023, 12, 1392. [Google Scholar] [CrossRef] [PubMed]

- Albayrak, A.; Çeven, S.; Bayır, R. Modeling of migratory beekeeper behaviors with machine learning approach using meteorological and environmental variables: The case of Turkey. Ecol. Inform. 2021, 66, 101470. [Google Scholar] [CrossRef]

- Kaplan Berkaya, S.; Sora Gunal, E.; Gunal, S. Deep learning-based classification models for beehive monitoring. Ecol. Inform. 2021, 64, 101353. [Google Scholar] [CrossRef]

- Hrncir, M.; Friedrich, B.; Juergen, D. Vibratory and Airborne-Sound Signals in Bee Communication (Hymenoptera). In Insect Sounds and Communication: Physiology, Behaviour, Ecology, and Evolution; Drosopoulos, S., Claridge, M.F., Eds.; CRC Press: Boca Raton, FL, USA, 2006; pp. 421–422. [Google Scholar]

- Nolasco, I.; Benetos, E. To bee or not to bee: Investigating machine learning approaches for beehive sound recognition. In Proceedings of the Proceedings of the Detection and Classification of Acoustic Scenes and Events Workshop (DCASE2018), Surrey, UK, 19–20 November 2018. [Google Scholar]

- Dietlein, D.G. A method for remote monitoring of activity of honeybee colonies by sound analysis. J. Apic. Res. 1985, 24, 176–183. [Google Scholar] [CrossRef]

- Uthoff, C.; Homsi, M.N.; von Bergen, M. Acoustic and vibration monitoring of honeybee colonies for beekeeping-relevant aspects of presence of queen bee and swarming. Comput. Electron. Agric. 2023, 205, 107589. [Google Scholar] [CrossRef]

- Qandour, A.; Ahmad, I.; Habibi, D.; Leppard, M. Remote beehive monitoring using acoustic signals. Acoust. Aust. 2014, 42, 204–209. [Google Scholar]

- Fahrenholz, L.; Lamprecht, I.; Schricker, B. Calorimetric investigations of the different castes of honey bees, Apis mellifera carnica. J. Comp. Physiol. B 1992, 162, 119–130. [Google Scholar] [CrossRef]

- Bell, H.; Kietzman, P.; Nieh, J. The Complex World of Honey Bee Vibrational Signaling: A Response to Ramsey et al. (2017). arXiv 2024, arXiv:2408.14430. [Google Scholar]

- Greggers, U.; Koch, G.; Schmidt, V.; Dürr, A.; Floriou-Servou, A.; Piepenbrock, D.; Göpfert, M.C.; Menzel, R. Reception and learning of electric fields in bees. Proc. R. Soc. B Biol. Sci. 2013, 280, 20130528. [Google Scholar] [CrossRef]

- Liao, Y.; McGuirk, A.; Biggs, B.; Chaudhuri, A.; Langlois, A.; Deters, V. Noninvasive Beehive Monitoring Through Acoustic Data Using SAS® Event Stream Processing and SAS® Viya®; Technical report; SAS Institute Inc.: Belgrade, Serbia, 2020. [Google Scholar]

- Cejrowski, T.; Szymanski, J.; Mora, H.; Gil, D. Detection of the bee queen presence using sound analysis. In Proceedings of the Asian Conference on Intelligent Information and Database Systems, Dong Hoi City, Vietnam, 19–21 March 2018; pp. 297–306. [Google Scholar]

- Phan, T.T.H.; Nguyen, H.D.; Nguyen, D.D. Evaluation of Feature Extraction Methods for Bee Audio Classification. In Intelligence of Things: Technologies and Applications; Nguyen, N.T., Dao, N.N., Pham, Q.D., Le, H.A., Eds.; Springer: Cham, Switzerland, 2022; pp. 194–203. [Google Scholar]

- Polyniak, Y.; Fedasyuk, D.; Marusenkova, T. Identification of Bee Colony Acoustic Signals using the Dynamic Time Warping Algorithm. ECONTECHMOD Int. Q. J. Econ. Technol. Model. Process 2019, 8, 19–27. [Google Scholar]

- Cecchi, S.; Spinsante, S.; Terenzi, A.; Orcioni, S. A Smart Sensor-Based Measurement System for Advanced Bee Hive Monitoring. Sensors 2020, 20, 2726. [Google Scholar] [CrossRef]

- Andrijević, N.; Urošević, V.; Arsić, B.; Herceg, D.; Savić, B. IoT Monitoring and Prediction Modeling of Honeybee Activity with Alarm. Electronics 2022, 11, 783. [Google Scholar] [CrossRef]

- Ngo, T.N.; Wu, K.C.; Yang, E.C.; Lin, T.T. A real-time imaging system for multiple honey bee tracking and activity monitoring. Comput. Electron. Agric. 2019, 163, 104841. [Google Scholar] [CrossRef]

- Voudiotis, G.; Kontogiannis, S.; Pikridas, C. Proposed Smart Monitoring System for the Detection of Bee Swarming. Inventions 2021, 6, 87. [Google Scholar] [CrossRef]

- Voudiotis, G.; Moraiti, A.; Kontogiannis, S. Deep Learning Beehive Monitoring System for Early Detection of the Varroa Mite. Signals 2022, 3, 506–523. [Google Scholar] [CrossRef]

- Mrozek, D.; Gorny, R.; Wachowicz, A.; Małysiak-Mrozek, B. Edge-Based Detection of Varroosis in Beehives with IoT Devices with Embedded and TPU-Accelerated Machine Learning. Appl. Sci. 2021, 11, 1078. [Google Scholar] [CrossRef]

- Ngo, T.N.; Rustia, D.J.A.; Yang, E.C.; Lin, T.T. Automated monitoring and analyses of honey bee pollen foraging behavior using a deep learning-based imaging system. Comput. Electron. Agric. 2021, 187, 106239. [Google Scholar] [CrossRef]

- Kulyukin, V. Audio, Image, Video, and Weather Datasets for Continuous Electronic Beehive Monitoring. Appl. Sci. 2021, 11, 4632. [Google Scholar] [CrossRef]

- De Simone, A.; Barbisan, L.; Turvani, G.; Riente, F. Advancing Beekeeping: IoT and TinyML for Queen Bee Monitoring Using Audio Signals. IEEE Trans. Instrum. Meas. 2024, 73, 2527309. [Google Scholar] [CrossRef]

- Otesbelgue, A.; de Lima Rodrigues, Í.; dos Santos, C.F.; Gomes, D.G.; Blochtein, B. The missing queen: A non-invasive method to identify queenless stingless bee hives. Apidologie 2025, 56, 28. [Google Scholar] [CrossRef]

- Maralit, A.D.; Imperial, A.A.; Cayangyang, R.T.; Tan, J.B.; Maaño, R.A.; Belleza, R.C.; De Castro, P.J.C.; Oreta, D.E.S. QueenBuzz: A CNN-based architecture for Sound Processing of Queenless Beehive Towards European Apis Mellifera Bee Colonies’ Survivability. In Proceedings of the 2023 International Conference on Computer Science, Information Technology and Engineering (ICCoSITE), Jakarta, Indonesia, 16 February 2023; pp. 691–696. [Google Scholar] [CrossRef]

- Doinea, M.; Trandafir, I.; Toma, C.V.; Popa, M.; Zamfiroiu, A. IoT Embedded Smart Monitoring System with Edge Machine Learning for Beehive Management. Int. J. Comput. Commun. Control 2024, 19, 632. [Google Scholar] [CrossRef]

- Kulyukin, V.; Mukherjee, S.; Amlathe, P. Toward Audio Beehive Monitoring: Deep Learning vs. Standard Machine Learning in Classifying Beehive Audio Samples. Appl. Sci. 2018, 8, 1573. [Google Scholar] [CrossRef]

- Ruvinga, S.; Hunter, G.; Duran, O.; Nebel, J.C. Identifying Queenlessness in Honeybee Hives from Audio Signals Using Machine Learning. Electronics 2023, 12, 1627. [Google Scholar] [CrossRef]

- Ruvinga, S.; Hunter, G.J.; Duran, O.; Nebel, J.C. Use of LSTM Networks to Identify “Queenlessness” in Honeybee Hives from Audio Signals. In Proceedings of the 2021 17th International Conference on Intelligent Environments (IE), Dubai, United Arab Emirates, 21–24 June 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Music Group Ltd. ECM8000 Technical Specifications: Ultra-Linear Measurement Condenser Microphone; Behringer: Willich, Germany, 2013; Datasheet; Available online: https://cdn.mediavalet.com/aunsw/musictribe/ri9AgIhkSkeKygnwBCmTbQ/QNqLVKUimkqAIilLXp9r3Q/Original/ECM8000_P0118_S_EN.pdf (accessed on 22 July 2025).

- Cross Spectrum Labs. Microphone Frequency Response Measurement Report; Cross Spectrum Labs: Burlington, MA, USA, 2011; Report; Available online: https://www.cross-spectrum.com/cslmics/001_mic_report.pdf (accessed on 23 July 2025).

- Focusrite Audio Engineering Limited. Scarlett 2i2 (3rd Gen) User Guide, 2nd ed.; Focusrite: High Wycombe, UK, 2021; Available online: https://fael-downloads-prod.focusrite.com/customer/prod/downloads/Scarlett%202i2%203rd%20Gen%20User%20Guide%20V2.pdf (accessed on 22 July 2025).

- Molau, S.; Pitz, M.; Schluter, R.; Ney, H. Computing mel-frequency cepstral coefficients on the power spectrum. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing, Salt Lake City, UT, USA, 7–11 May 2001; Proceedings (cat. No. 01CH37221). IEEE: Piscataway, NJ, USA, 2001; Volume 1, pp. 73–76. [Google Scholar]

- Giordani, P. Principal component analysis. In Encyclopedia of Social Network Analysis and Mining; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1831–1844. [Google Scholar]

- Odhiambo Omuya, E.; Onyango Okeyo, G.; Waema Kimwele, M. Feature Selection for Classification using Principal Component Analysis and Information Gain. Expert Syst. Appl. 2021, 174, 114765. [Google Scholar] [CrossRef]

- Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437. [Google Scholar] [CrossRef]

- He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284. [Google Scholar] [CrossRef]

- STMicroelectronics. MP34DT01-M: MEMS Audio Sensor Omnidirectional Digital Microphone, 3rd ed.; STMicroelectronics: Geneva, Switzerland, 2014; Datasheet; Available online: https://www.st.com/resource/en/datasheet/mp34dt01-m.pdf (accessed on 23 July 2025).

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Quaderi, S.J.; Labonno, S.; Mostafa, S.; Akhter, S. Identify The Beehive Sound Using Deep Learning. arXiv 2022, arXiv:2209.01374. [Google Scholar] [CrossRef]

- Howard, A.G. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar] [CrossRef]

- Lin, J.; Chen, W.M.; Lin, Y.; Gan, C.; Han, S. Mcunet: Tiny deep learning on iot devices. Adv. Neural Inf. Process. Syst. 2020, 33, 11711–11722. [Google Scholar]

- Fedorov, I.; Stamenovic, M.; Jensen, C.; Yang, L.C.; Mandell, A.; Gan, Y.; Mattina, M.; Whatmough, P.N. TinyLSTMs: Efficient neural speech enhancement for hearing aids. arXiv 2020, arXiv:2005.11138. [Google Scholar] [CrossRef]

- Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized neural networks: Training deep neural networks with weights and activations constrained to+ 1 or-1. arXiv 2016, arXiv:1602.02830. [Google Scholar]

- Terven, J.; Cordova-Esparza, D.M.; Ramirez-Pedraza, A.; Chavez-Urbiola, E.A.; Romero-Gonzalez, J.A. Loss functions and metrics in deep learning. arXiv 2023, arXiv:2307.02694. [Google Scholar] [CrossRef]

| Dataset Configuration | Fs (Hz) | N_FFT | N_mels | Fmax (Hz) |

|---|---|---|---|---|

| config_0 | 4096 | 1024 | 9 | 60–2000 |

| config_1 | 8192 | 1024 | 16 | 60–4000 |

| config_2 | 8192 | 2048 | 32 | 60–4000 |

| config_3 | 16384 | 2048 | 32 | 60–8000 |

| Mel Band | Frequency Range (Hz) | Importance |

|---|---|---|

| mel_0 | [56–240] | 0.2013 |

| mel_1 | [144–336] | 0.0973 |

| mel_5 | [504–688] | 0.0444 |

| mel_2 | [232–424] | 0.0438 |

| mel_4 | [416–600] | 0.0343 |

| mel_22 | [2888–3488] | 0.0327 |

| mel_14 | [1376–1664] | 0.0303 |

| Dataset Configuration | Hive | Precision (Class 0) | Recall (Class 0) | F1-Score (Class 0) | Precision (Class 1) | Recall (Class 1) | F1-Score (Class 1) | Average F1 |

|---|---|---|---|---|---|---|---|---|

| config_0 | m11 | 0.84 | 0.90 | 0.87 | 0.63 | 0.49 | 0.55 | 0.81 |

| m12 | 0.88 | 0.91 | 0.90 | 0.84 | 0.79 | 0.82 | 0.87 | |

| config_1 | m11 | 0.85 | 0.91 | 0.88 | 0.67 | 0.54 | 0.60 | 0.81 |

| m12 | 0.91 | 0.95 | 0.93 | 0.83 | 0.71 | 0.77 | 0.89 | |

| config_2 | m11 | 0.86 | 0.93 | 0.89 | 0.73 | 0.56 | 0.64 | 0.83 |

| m12 | 0.93 | 0.96 | 0.95 | 0.87 | 0.80 | 0.84 | 0.92 | |

| config_3 | m11 | 0.86 | 0.95 | 0.90 | 0.78 | 0.56 | 0.65 | 0.85 |

| m12 | 0.92 | 0.96 | 0.94 | 0.85 | 0.76 | 0.80 | 0.90 |

| Model | Average F1-Score (Original Dataset, m12) | Average F1-Score (New Dataset with TL, m11) |

|---|---|---|

| NN | 0.91 | 0.82 |

| KNN | 0.94 | 0.81 |

| Data | Feature Extraction | Inference | ||

|---|---|---|---|---|

| Memory (kB) | Processing Time (ms) | Memory (kB) | Processing Time (ms) | |

| config_0 | ||||

| config_1 | ||||

| config_2 | ||||

| config_3 | ||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sad, C.; Kampelopoulos, D.; Sofianidis, I.; Kanelis, D.; Nikolaidis, S.; Tananaki, C.; Siozios, K. Deep Edge IoT for Acoustic Detection of Queenless Beehives. Electronics 2025, 14, 2959. https://doi.org/10.3390/electronics14152959

Sad C, Kampelopoulos D, Sofianidis I, Kanelis D, Nikolaidis S, Tananaki C, Siozios K. Deep Edge IoT for Acoustic Detection of Queenless Beehives. Electronics. 2025; 14(15):2959. https://doi.org/10.3390/electronics14152959

Chicago/Turabian StyleSad, Christos, Dimitrios Kampelopoulos, Ioannis Sofianidis, Dimitrios Kanelis, Spyridon Nikolaidis, Chrysoula Tananaki, and Kostas Siozios. 2025. "Deep Edge IoT for Acoustic Detection of Queenless Beehives" Electronics 14, no. 15: 2959. https://doi.org/10.3390/electronics14152959

APA StyleSad, C., Kampelopoulos, D., Sofianidis, I., Kanelis, D., Nikolaidis, S., Tananaki, C., & Siozios, K. (2025). Deep Edge IoT for Acoustic Detection of Queenless Beehives. Electronics, 14(15), 2959. https://doi.org/10.3390/electronics14152959