SS-LIO: Robust Tightly Coupled Solid-State LiDAR–Inertial Odometry for Indoor Degraded Environments

Abstract

1. Introduction

- (1)

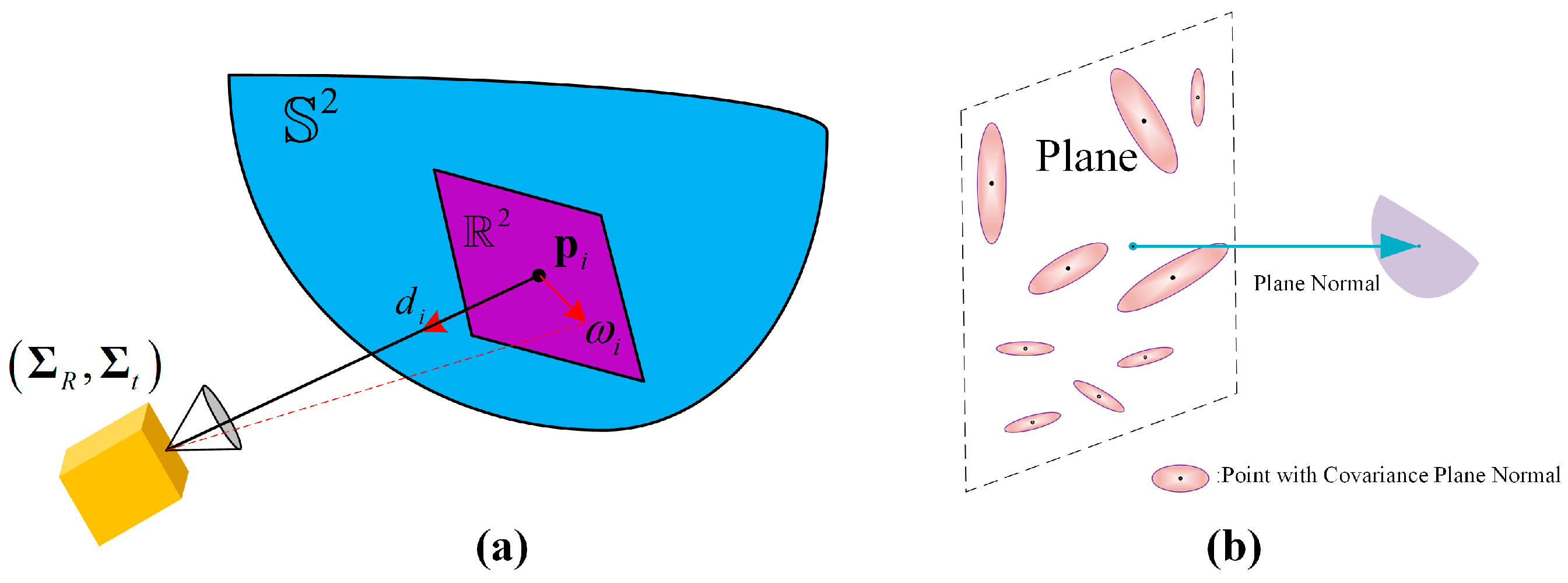

- A probabilistic model for planar elements is built for mitigating degradation effects.

- (2)

- A tightly coupled iterative extended Kalman filter with an IMU is employed to integrate LiDAR feature points with IMU data, thus alleviating the issue of geometric degradation

- (3)

- A set of voxels which contain planar features for generating a new map is formed by facilitating probabilistic and precise alignment of LiDAR scans with the environment.
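To make the idea of probabilistic alignment against planar features more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a unit plane normal, a plane that is known exactly (a full probabilistic plane model would also propagate the plane's own uncertainty), and a 3×3 covariance for the measured point. The function names `point_to_plane` and `normalized_squared_residual` are hypothetical.

```python
def dot(a, b):
    """Dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def mat_vec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [dot(row, v) for row in m]


def point_to_plane(point, point_cov, normal, plane_point):
    """Signed point-to-plane distance and its variance.

    Assumptions (illustrative only): `normal` has unit length and the plane
    itself is treated as exact, so the variance comes from the point alone:
    Var(n^T p) = n^T Sigma_p n.
    """
    diff = [p - q for p, q in zip(point, plane_point)]
    residual = dot(normal, diff)
    variance = dot(normal, mat_vec(point_cov, normal))
    return residual, variance


def normalized_squared_residual(residual, variance, floor=1e-9):
    """Squared residual scaled by its variance; noisy points count less."""
    return residual * residual / max(variance, floor)


# Hypothetical example: a floor plane z = 0 and one noisy measurement.
normal = [0.0, 0.0, 1.0]
plane_point = [0.0, 0.0, 0.0]
point = [1.0, 2.0, 0.1]
point_cov = [
    [0.01, 0.0, 0.0],
    [0.0, 0.01, 0.0],
    [0.0, 0.0, 0.04],
]

r, var = point_to_plane(point, point_cov, normal, plane_point)
cost = normalized_squared_residual(r, var)
```

In this sketch only the noise component along the plane normal affects the weight, so a point that is uncertain along the wall but well measured across it still contributes a strong constraint.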

2. Related Work

2.1. LiDAR (-Inertial) Odometry

2.2. Mapping Methods

3. Methodology

3.1. Notation

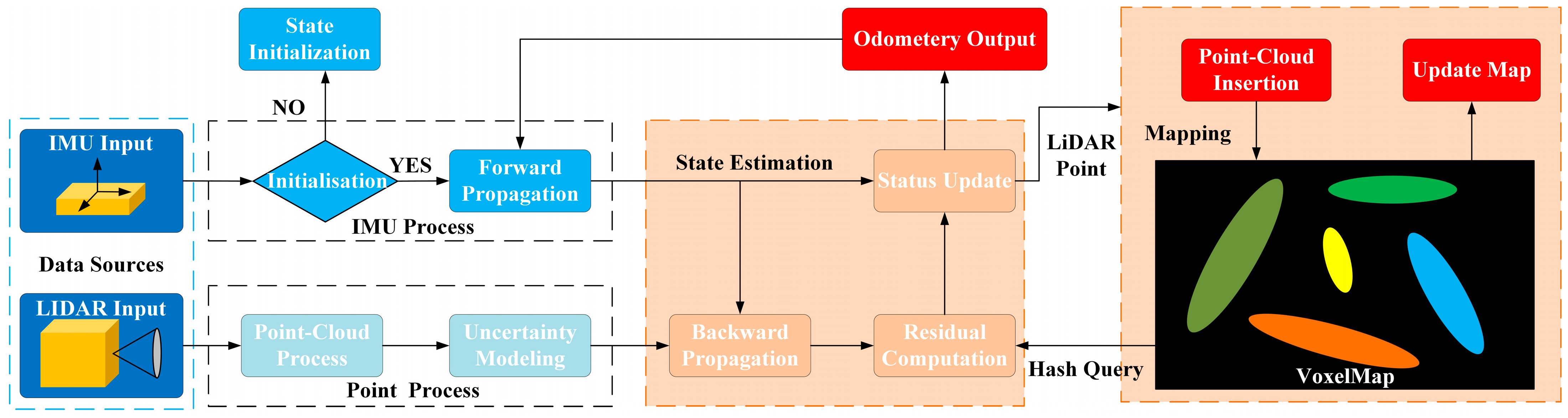

3.2. System Description

- (1) Kinematic Model

- (2) Measurement Model

3.3. Data Process

- (1) IMU Process

- (2) LiDAR Process

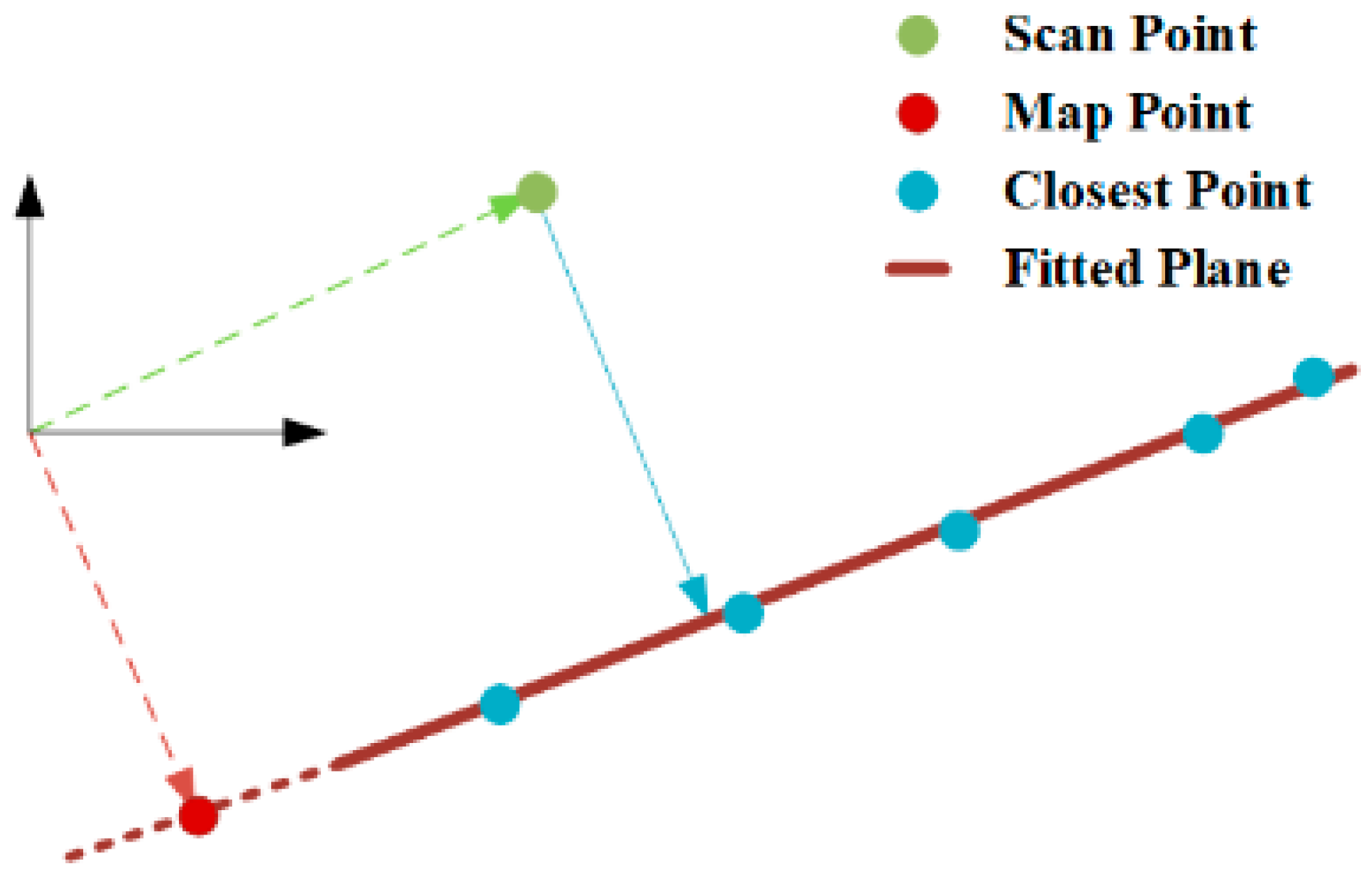

3.4. Iterated State Estimation

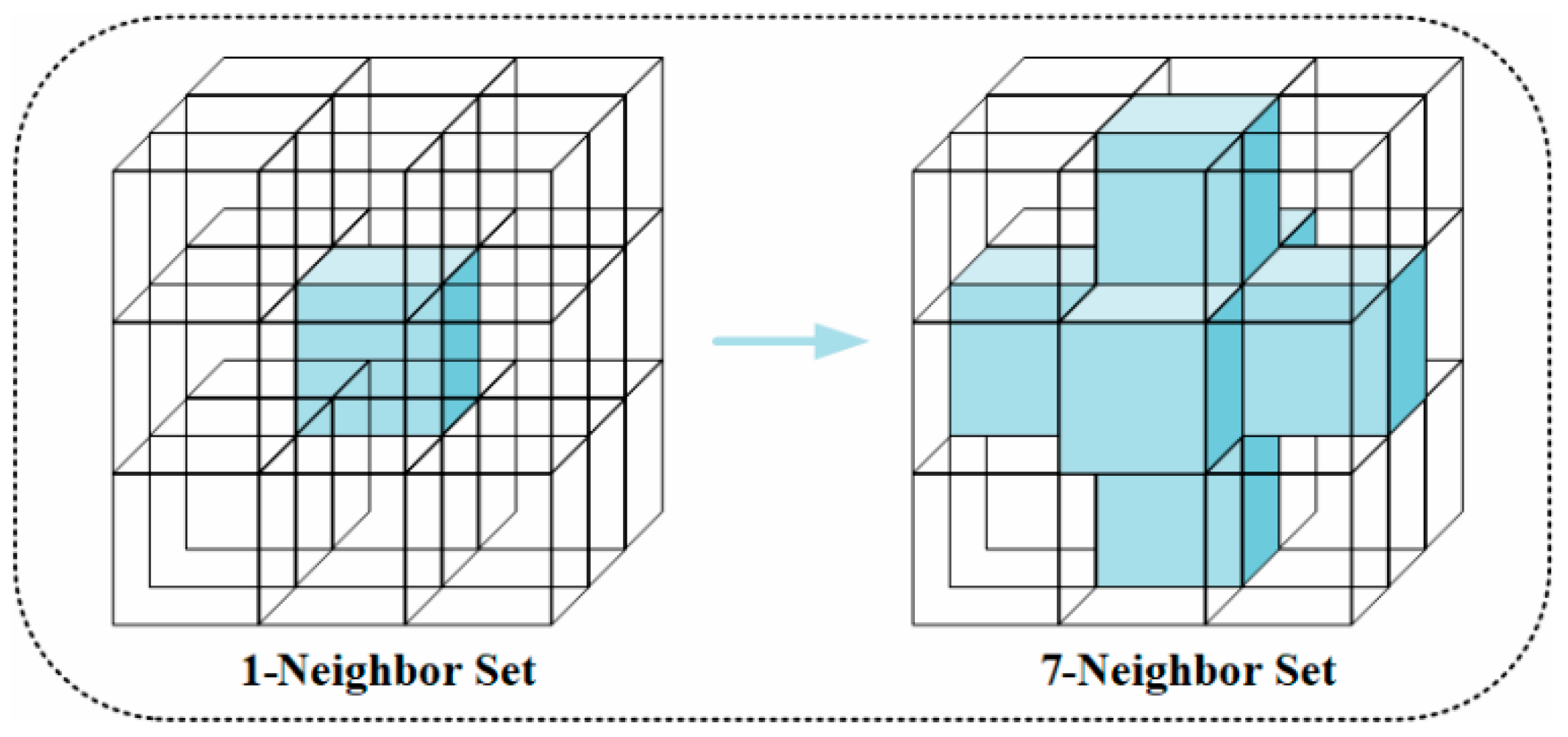

3.5. Incremental Global Map

4. Experimental Results and Analysis

4.1. Experimental Setup

- (i)

- The system exhibits estimation accuracy on par with cutting-edge LIO systems.

- (ii)

- It delivers precise pose estimation across diverse environments and motion profiles.

- (iii)

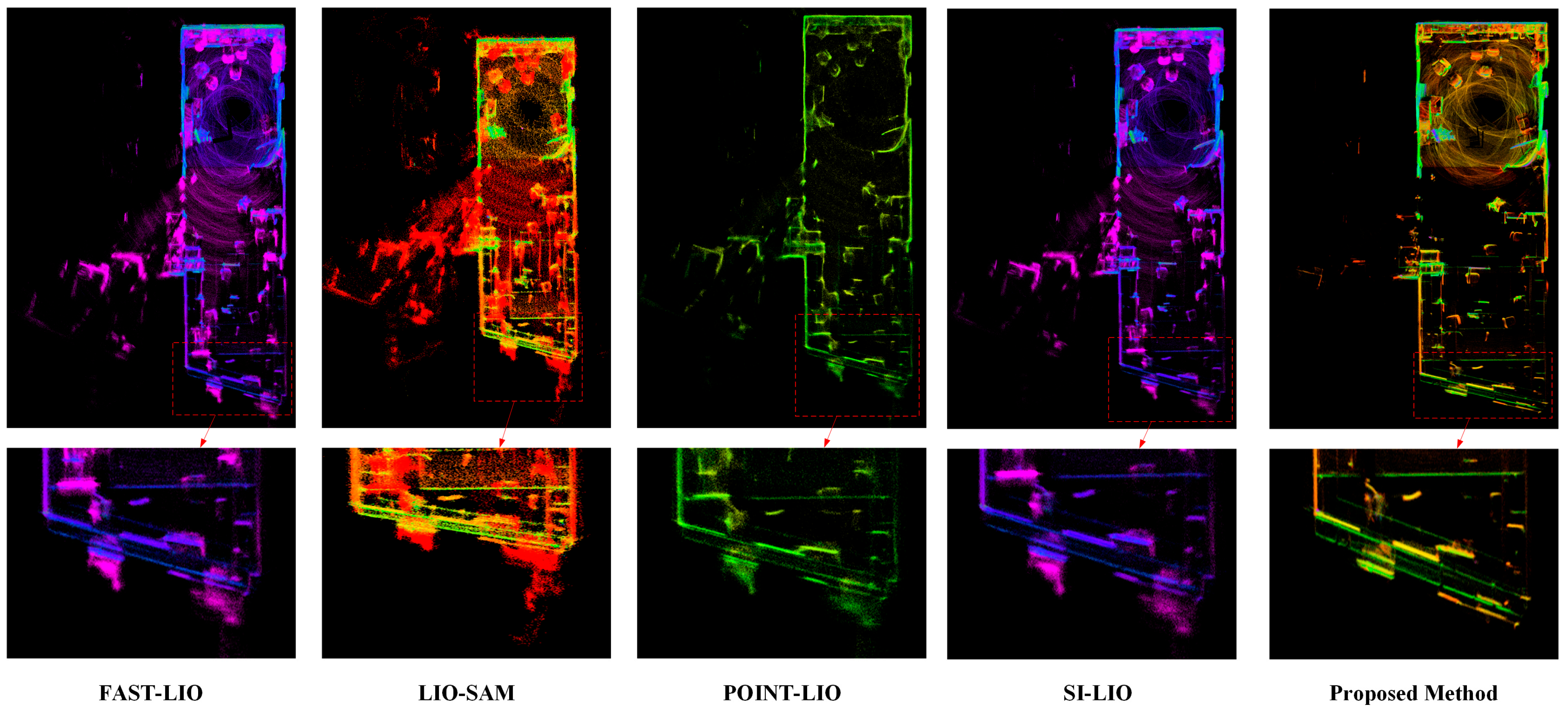

- It achieves superior localization and mapping in indoor environments with degraded conditions.

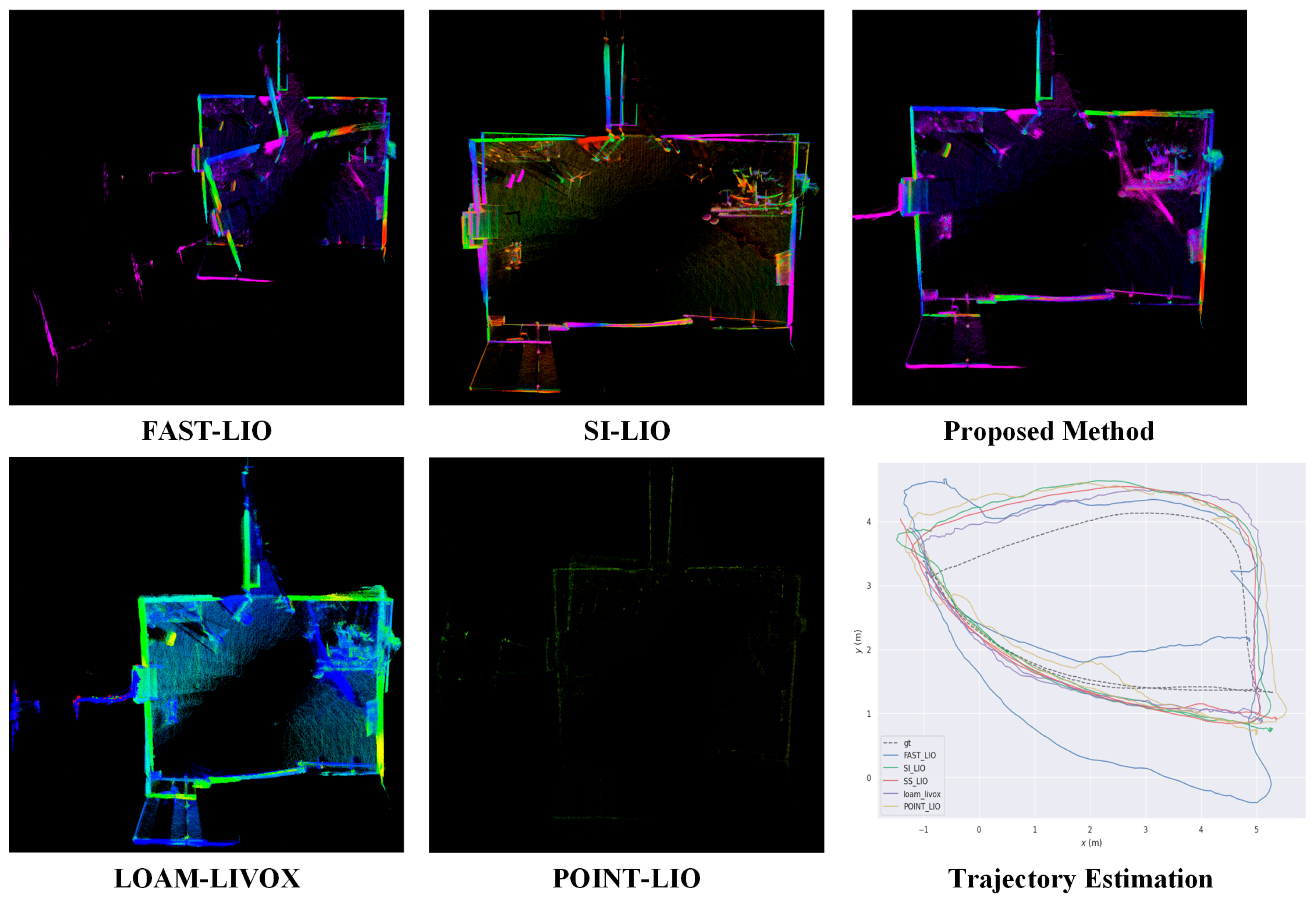

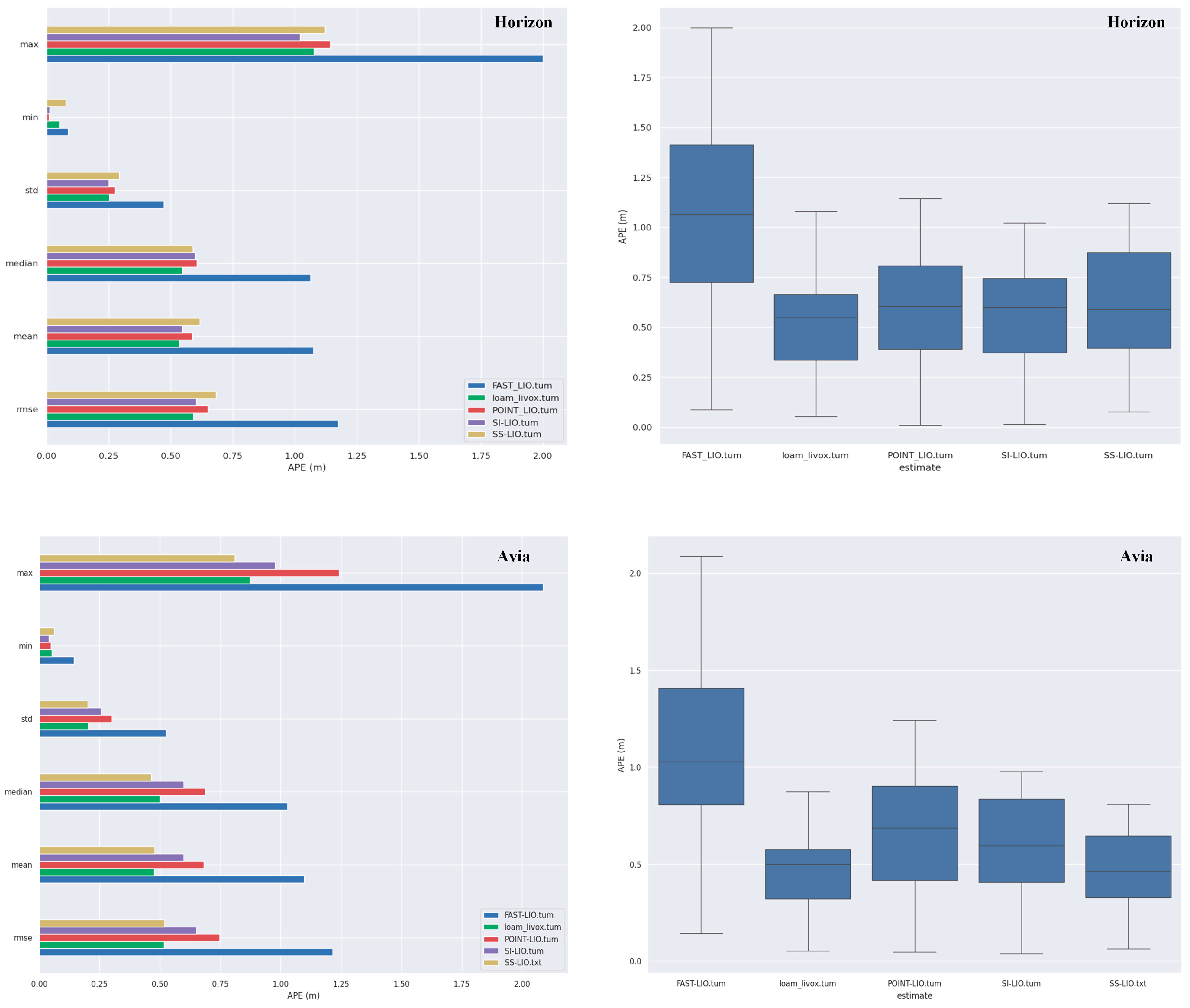

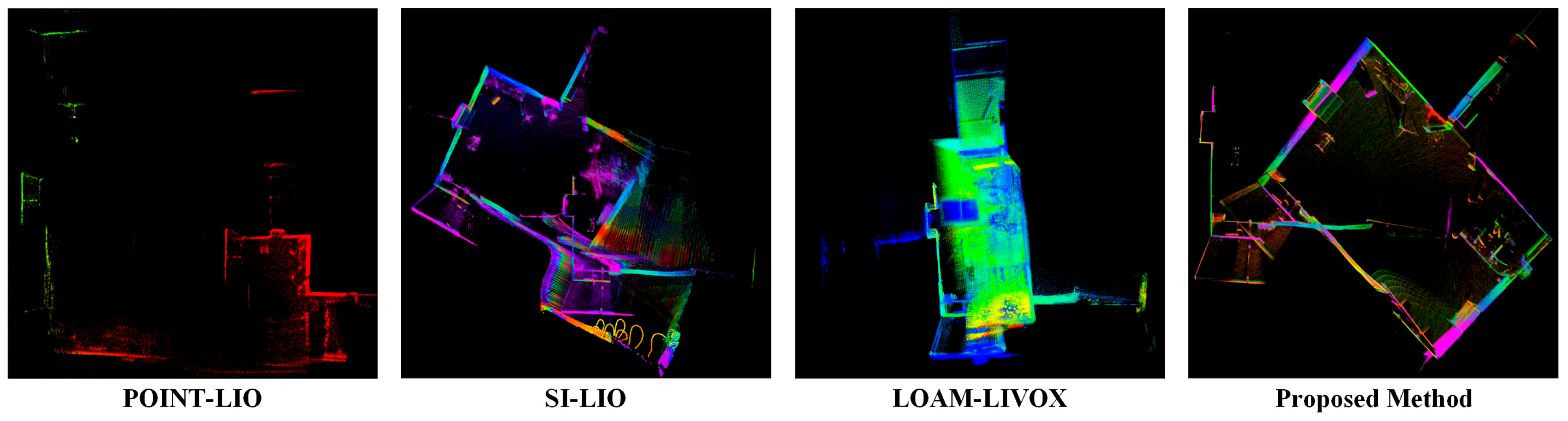

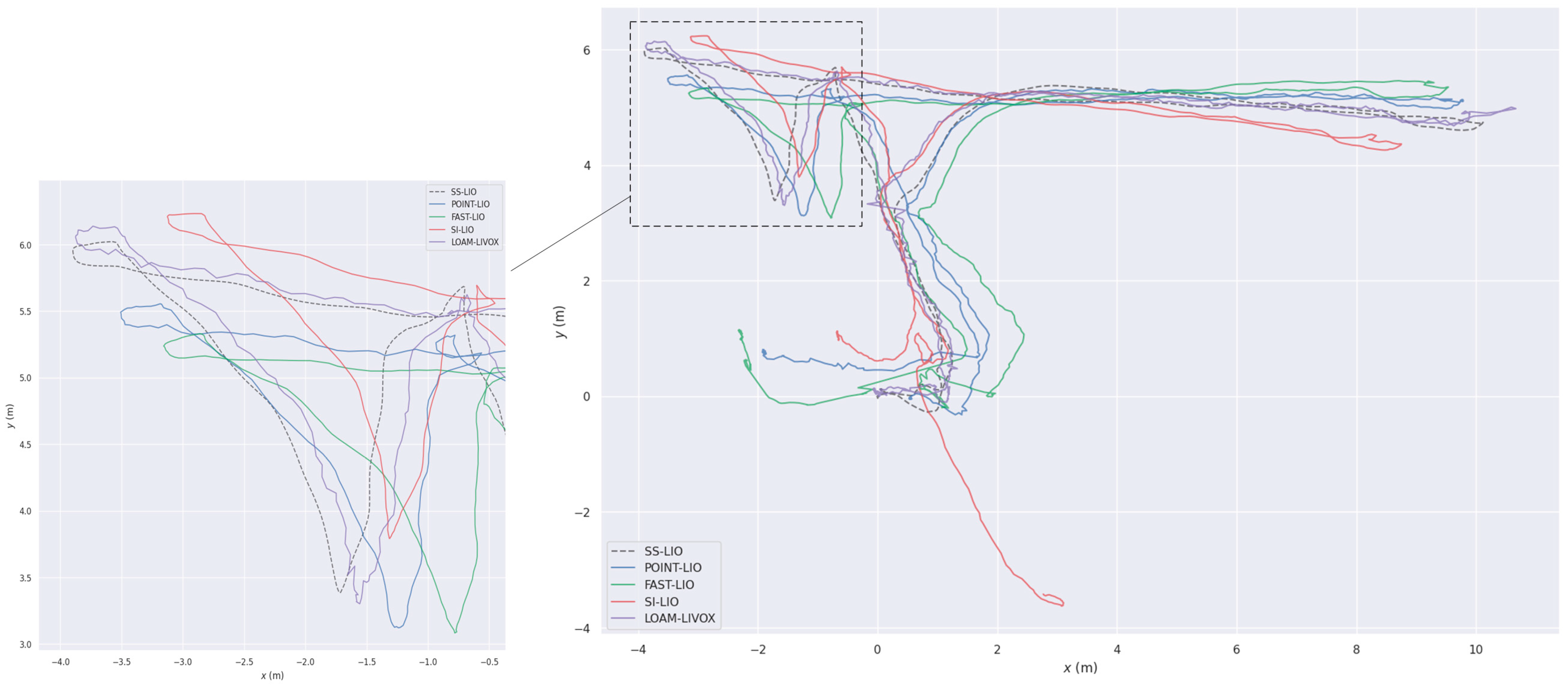

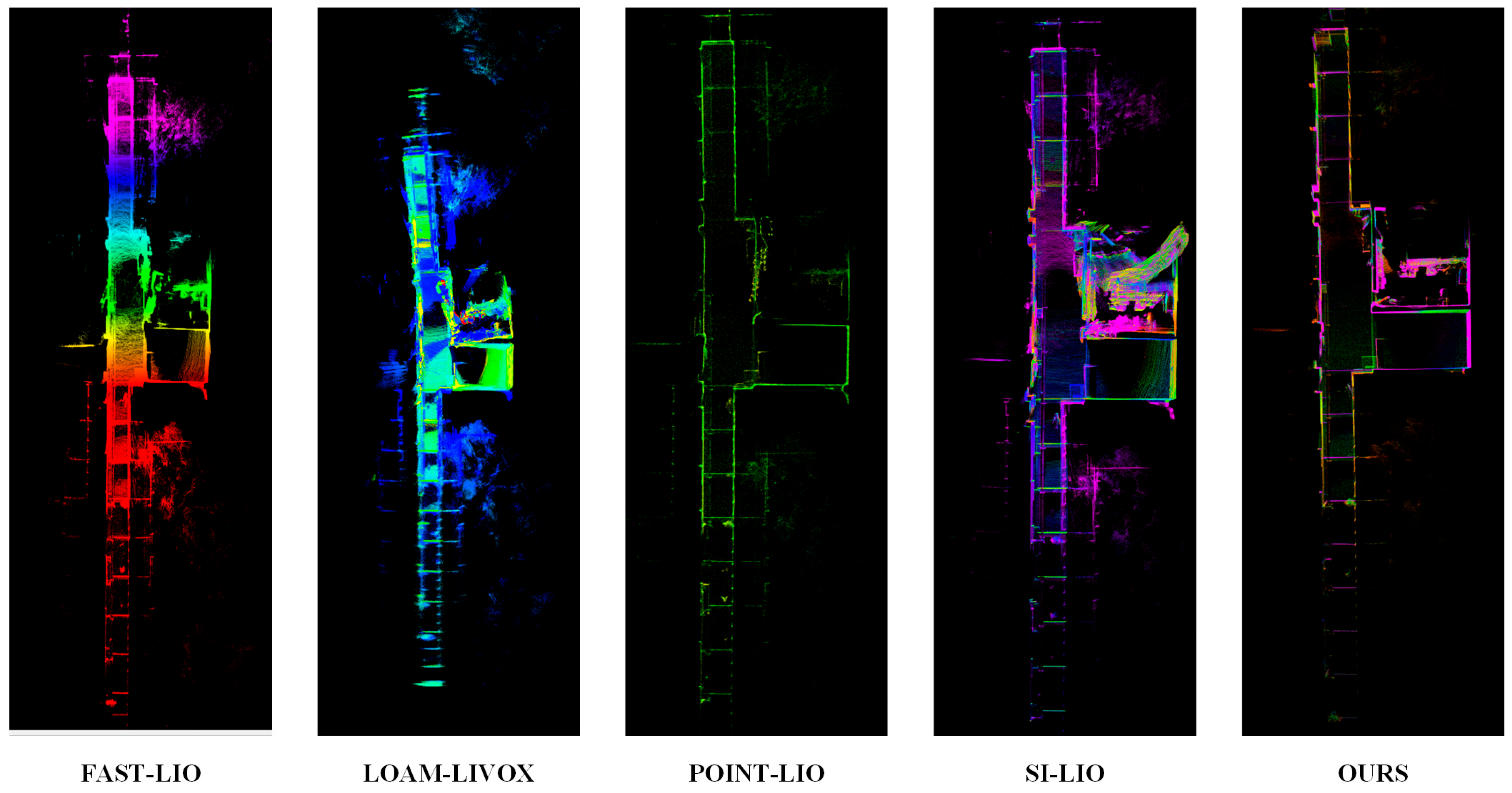

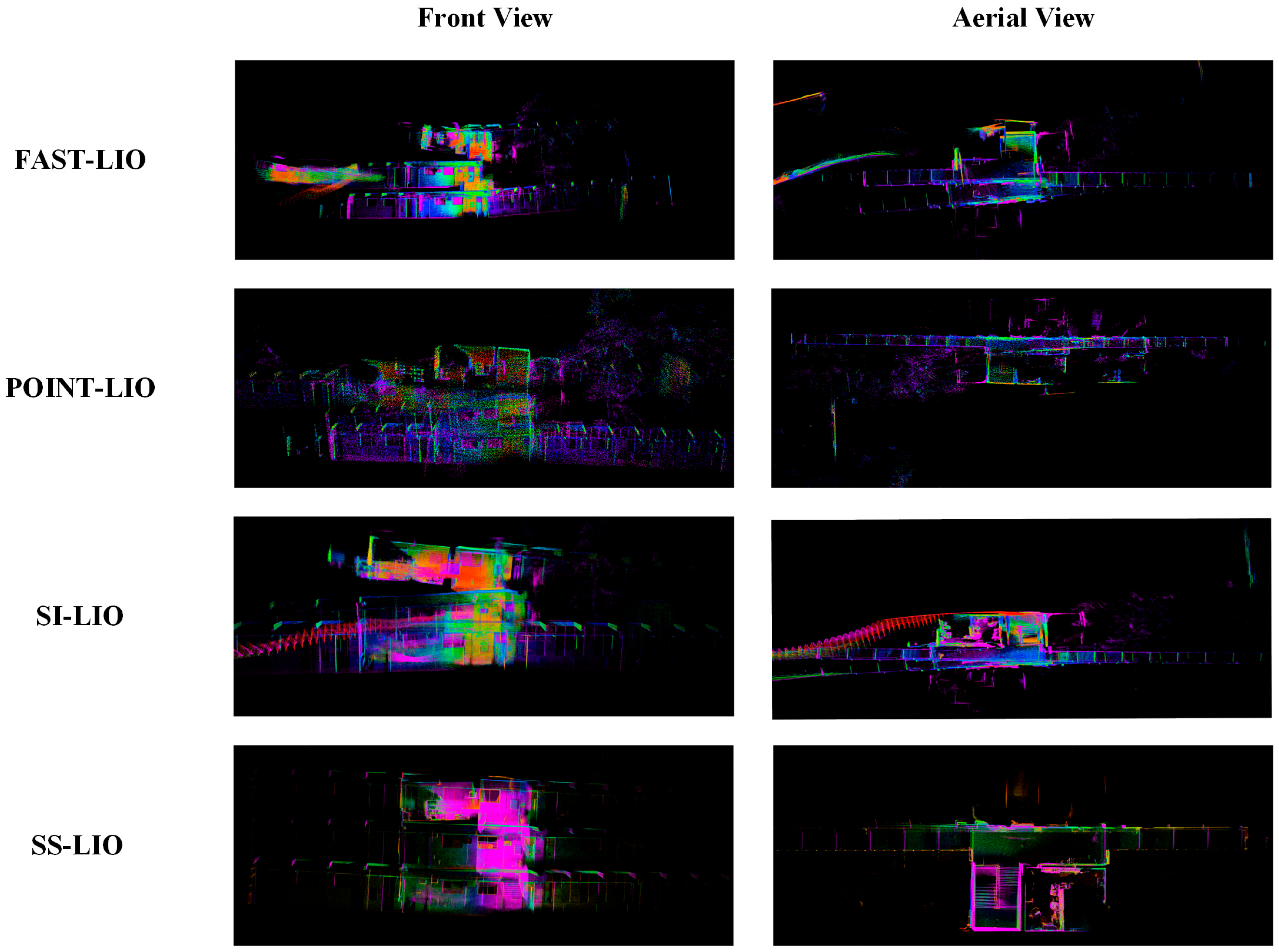

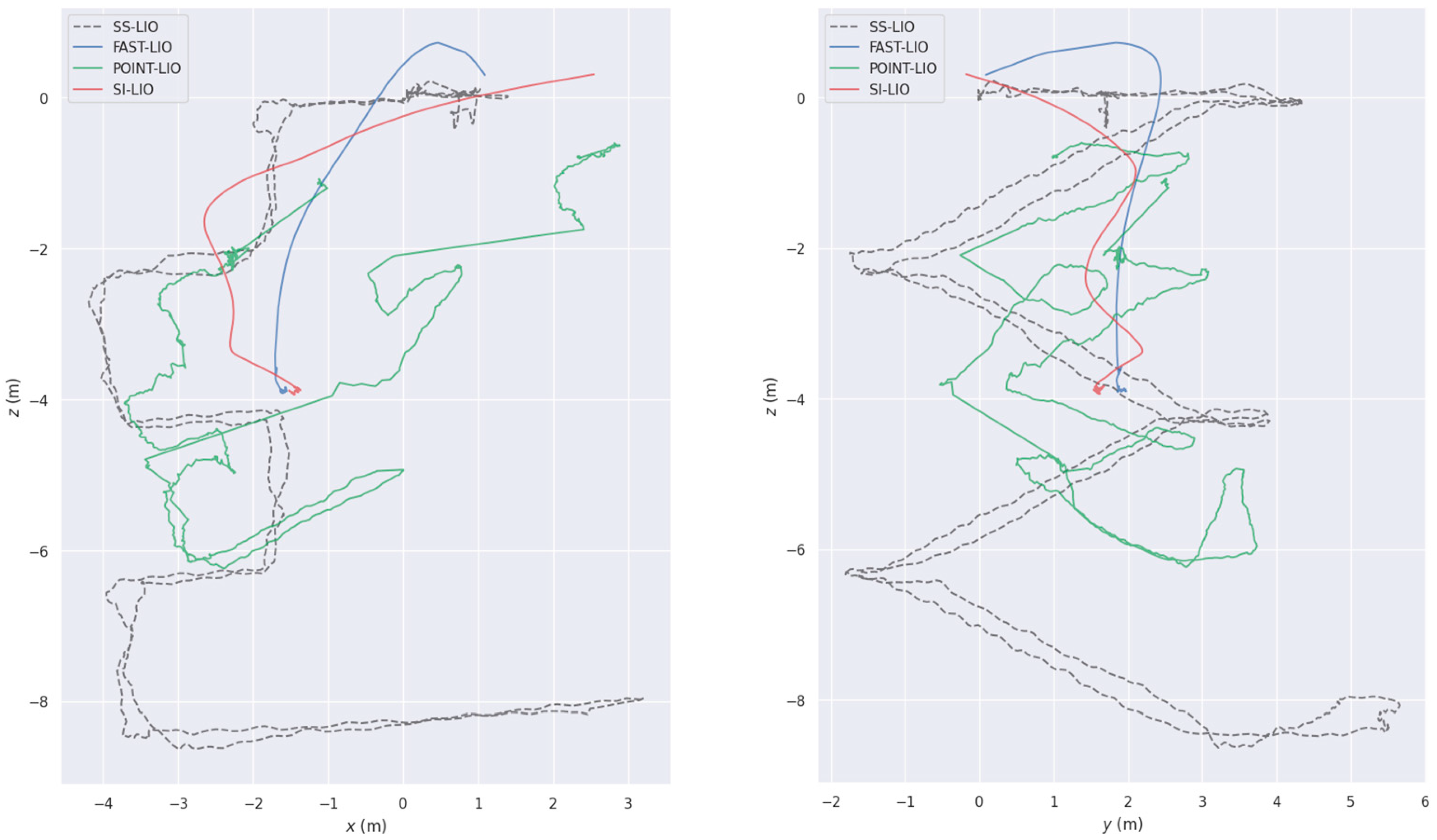

4.2. Evaluation of Odometry Accuracy

- (1)

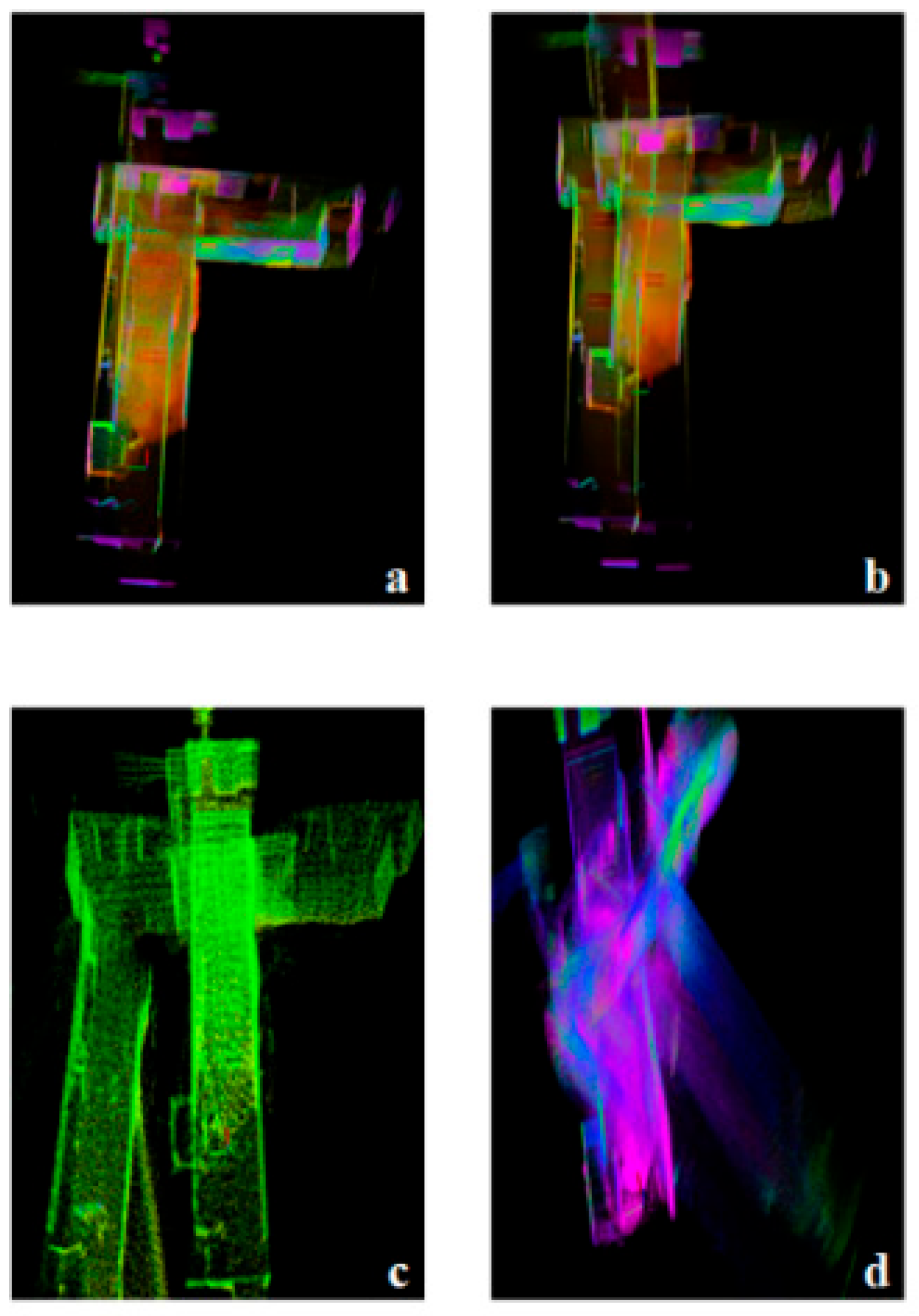

- LiDAR degeneration experiment: Evaluation is conducted on the indoor degenerate sequence degenerate_seq_02 from the R3LIVE dataset [27] and the indoor split of the LiDAR_Degenerated dataset [28], both recorded with a Livox Avia operating at 10 Hz and offering a 70.4° × 77.2° non-repetitive field of view. These sequences highlight the geometric weakness inherent to solid-state LiDAR in corridor-like environments. To broaden the scope, indoor02 and indoor03 from the Tiers-LiDAR [29] dataset are included, providing time-synchronized measurements from the same Avia and a Livox Horizon running at 10 Hz with an 81.7° × 25.1° field of view. The Horizon’s wider horizontal but substantially narrower vertical coverage delivers complementary geometric constraints, enabling a controlled assessment of degeneracy effects across two distinct solid-state LiDAR configurations.

- (2)

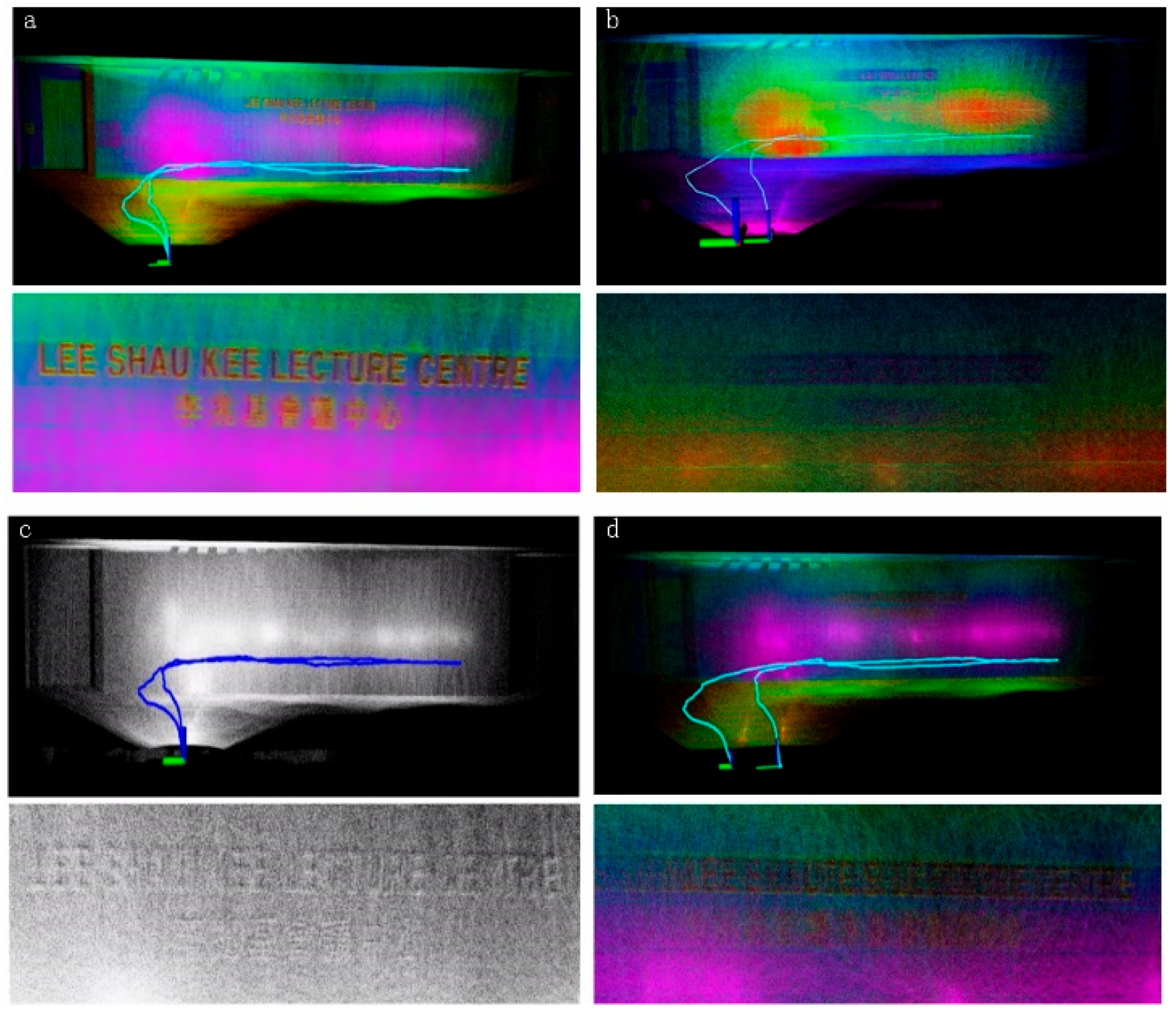

- Private Dataset: The SS-LIO system is evaluated on two purposely collected indoor sequences—a geometrically degraded long corridor and a corridor with integrated stairs—engineered to rigorously test localization stability and mapping accuracy under demanding conditions. Figure 11 shows the photos of our data collection equipment.
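The geometric weakness of corridor-like scenes mentioned above can be illustrated with a small sketch. It is a simplified check and not the method used in the paper: for point-to-plane residuals, the translational information along a unit direction d is the sum of squared projections of the matched plane normals onto d. Full systems typically inspect the eigenvalues of the complete information matrix instead. The counts of matched normals, the `ratio` threshold, and the function names are hypothetical.

```python
def directional_information(normals, direction):
    """Sum of squared projections of unit plane normals onto a unit direction.

    This equals d^T (sum_i n_i n_i^T) d, i.e. how strongly the matched planes
    constrain translation along `direction`.
    """
    return sum(
        sum(n_k * d_k for n_k, d_k in zip(n, direction)) ** 2 for n in normals
    )


def weak_directions(normals, candidates, ratio=0.05):
    """Names of candidate directions whose information is below ratio * max."""
    info = {
        name: directional_information(normals, d)
        for name, d in candidates.items()
    }
    strongest = max(info.values())
    return [name for name, value in info.items() if value < ratio * strongest]


# Hypothetical corridor aligned with the x axis: only side walls, floor and
# ceiling are observed, so no plane normal has a component along x.
corridor_normals = (
    [[0.0, 1.0, 0.0]] * 40 + [[0.0, -1.0, 0.0]] * 40    # side walls
    + [[0.0, 0.0, 1.0]] * 15 + [[0.0, 0.0, -1.0]] * 5   # floor and ceiling
)
axes = {"x": [1.0, 0.0, 0.0], "y": [0.0, 1.0, 0.0], "z": [0.0, 0.0, 1.0]}

print(weak_directions(corridor_normals, axes))  # ['x']
```

In this toy scene the LiDAR constraints leave translation along the corridor unobserved, which is the direction where inertial measurements have to carry the estimate.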

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Sun, K.; Mohta, K.; Pfrommer, B.; Watterson, M.; Liu, S.; Mulgaonkar, Y.; Taylor, C.J.; Kumar, V. Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2018, 3, 965–972.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Bloesch, M.; Czarnowski, J.; Clark, R.; Leutenegger, S.; Davison, A.J. CodeSLAM-Learning a Compact, Optimisable Representation for Dense Visual SLAM. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2560–2568.

- Dellenbach, P.; Deschaud, J.-E.; Jacquet, B.; Goulette, F. CT-ICP: Real-Time Elastic LiDAR Odometry with Loop Closure. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 5580–5586.

- Chen, K.; Lopez, B.T.; Agha-mohammadi, A.; Mehta, A. Direct LiDAR Odometry: Fast Localization With Dense Point Clouds. IEEE Robot. Autom. Lett. 2022, 7, 2000–2007.

- Vizzo, I.; Guadagnino, T.; Mersch, B.; Wiesmann, L.; Behley, J.; Stachniss, C. KISS-ICP: In Defense of Point-to-Point ICP–Simple, Accurate, and Robust Registration If Done the Right Way. IEEE Robot. Autom. Lett. 2023, 8, 1029–1036.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-Coupled Lidar Inertial Odometry via Smoothing and Mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 5135–5142.

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

- Xu, W.; Zhang, F. FAST-LIO: A Fast, Robust LiDAR–Inertial Odometry Package by Tightly-Coupled Iterated Kalman Filter. IEEE Robot. Autom. Lett. 2021, 6, 3317–3324.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast Direct LiDAR–Inertial Odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

- Bai, C.; Xiao, T.; Chen, Y.; Wang, H.; Zhang, F.; Gao, X. Faster-LIO: Lightweight Tightly Coupled LiDAR–Inertial Odometry Using Parallel Sparse Incremental Voxels. IEEE Robot. Autom. Lett. 2022, 7, 4861–4868.

- Lim, H.; Kim, D.; Kim, B.; Myung, H. AdaLIO: Robust Adaptive LiDAR–Inertial Odometry in Degenerate Indoor Environments. In Proceedings of the 2023 20th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 25–28 June 2023; pp. 48–53.

- He, D.; Xu, W.; Chen, N.; Kong, F.; Yuan, C.; Zhang, F. Point-LIO: Robust High-Bandwidth Light Detection and Ranging Inertial Odometry. Adv. Intell. Syst. 2023, 5, 2200459.

- Zhang, J.; Singh, S. LOAM: Lidar Odometry and Mapping in Real-Time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014.

- Wang, H.; Wang, C.; Chen, C.-L.; Xie, L. F-LOAM: Fast LiDAR Odometry and Mapping. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4390–4396.

- Qin, C.; Ye, H.; Pranata, C.E.; Han, J.; Zhang, S.; Liu, M. LINS: A LiDAR–Inertial State Estimator for Robust and Efficient Navigation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 8899–8906.

- Yuan, C.; Xu, W.; Liu, X.; Hong, X.; Zhang, F. Efficient and Probabilistic Adaptive Voxel Mapping for Accurate Online LiDAR Odometry. IEEE Robot. Autom. Lett. 2022, 7, 8518–8525.

- Park, C.; Moghadam, P.; Williams, J.L.; Kim, S.; Sridharan, S.; Fookes, C. Elasticity Meets Continuous-Time: Map-Centric Dense 3D LiDAR SLAM. IEEE Trans. Robot. 2022, 38, 978–997.

- Nguyen, T.-M.; Duberg, D.; Jensfelt, P.; Yuan, S.; Xie, L. SLICT: Multi-Input Multi-Scale Surfel-Based LiDAR–Inertial Continuous-Time Odometry and Mapping. IEEE Robot. Autom. Lett. 2023, 8, 2102–2109.

- Li, K.; Li, M.; Hanebeck, U.D. Towards High-Performance Solid-State-LiDAR–Inertial Odometry and Mapping. IEEE Robot. Autom. Lett. 2021, 6, 5167–5174.

- Liu, Z.; Zhang, F. BALM: Bundle Adjustment for Lidar Mapping. IEEE Robot. Autom. Lett. 2021, 6, 3184–3191.

- Zhang, C.; Zhang, J.; Liu, Q.; Liu, Y.; Qin, J. SI-LIO: High-Precision Tightly-Coupled LiDAR-Inertial Odometry via Single-Iteration Invariant Extended Kalman Filter. IEEE Robot. Autom. Lett. 2025, 10, 564–571.

- Hertzberg, C.; Wagner, R.; Frese, U.; Schröder, L. Integrating Generic Sensor Fusion Algorithms with Sound State Representations through Encapsulation of Manifolds. Inf. Fusion 2013, 14, 57–77.

- Ji, X.; Yuan, S.; Yin, P.; Xie, L. LIO-GVM: An Accurate, Tightly-Coupled LiDAR–Inertial Odometry With Gaussian Voxel Map. IEEE Robot. Autom. Lett. 2024, 9, 2200–2207.

- Lin, J.; Zhang, F. Loam Livox: A Fast, Robust, High-Precision LiDAR Odometry and Mapping Package for LiDARs of Small FoV. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3126–3131.

- Lin, J.; Zhang, F. R3LIVE: A Robust, Real-Time, RGB-Colored, LiDAR–Inertial-Visual Tightly-Coupled State Estimation and Mapping Package. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 10672–10678.

- Zheng, C.; Zhu, Q.; Xu, W.; Liu, X.; Guo, Q.; Zhang, F. FAST-LIVO: Fast and Tightly-Coupled Sparse-Direct LiDAR–Inertial-Visual Odometry. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 4003–4009.

- Li, Q.; Yu, X.; Queralta, J.P.; Westerlund, T. Multi-Modal Lidar Dataset for Benchmarking General-Purpose Localization and Mapping Algorithms. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 3837–3844.

| Notations | Explanation |
|---|---|
| | The connection between Lie algebra and rotation. |
| | Rotation, position, and velocity in the IMU coordinate system relative to the world coordinate system. |
| | The error state of the state. |
| | A posteriori estimation of the state. |
| | The update of the state at a given iteration of the optimization. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zou, Y.; Meng, P.; Xiong, J.; Wan, X. SS-LIO: Robust Tightly Coupled Solid-State LiDAR–Inertial Odometry for Indoor Degraded Environments. Electronics 2025, 14, 2951. https://doi.org/10.3390/electronics14152951