Negative Expressions by Social Robots and Their Effects on Persuasive Behaviors

Abstract

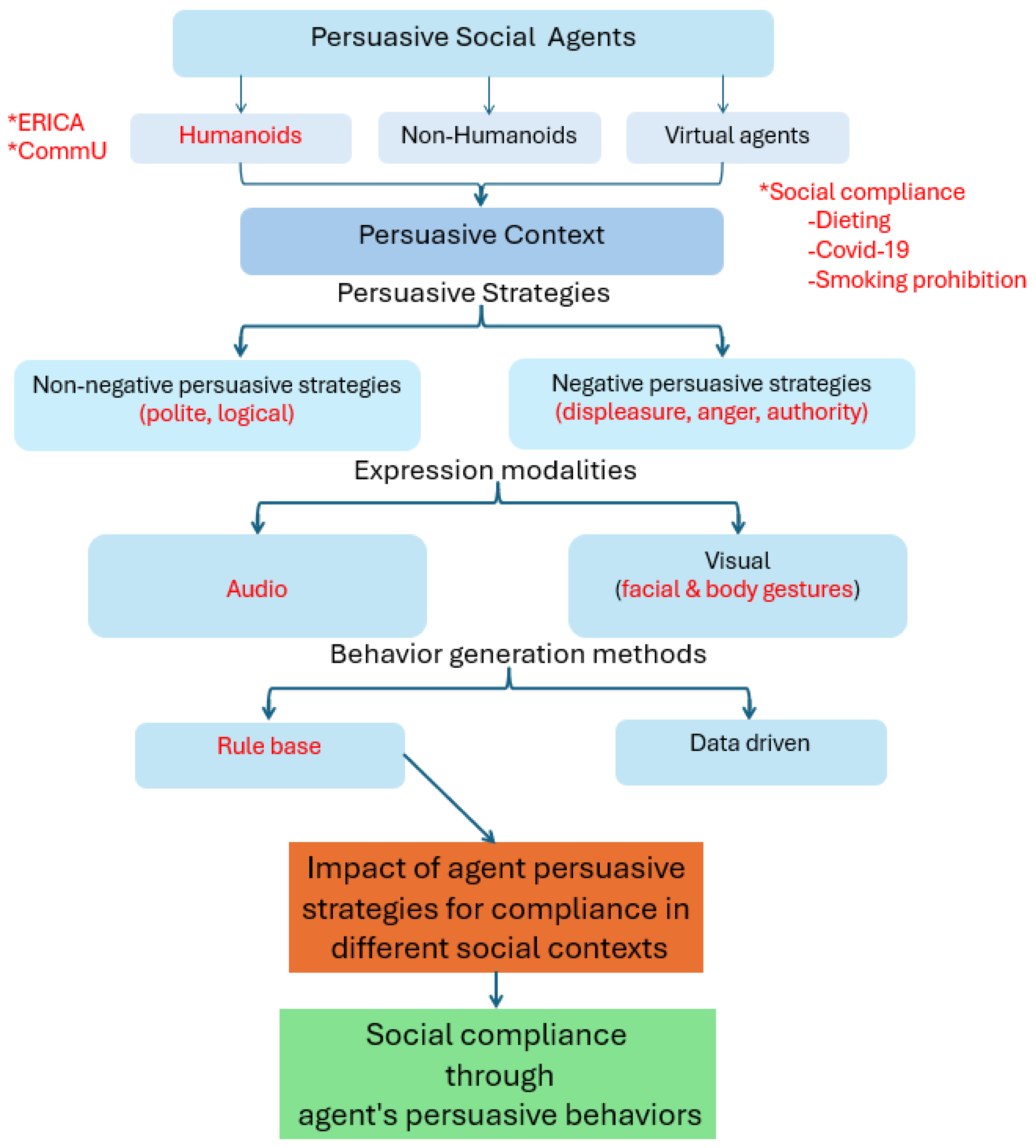

1. Introduction

2. Related Work

2.1. Emotional Social Agents

2.2. Persuasive Social Agents

2.3. Situation Awareness and Persuasion

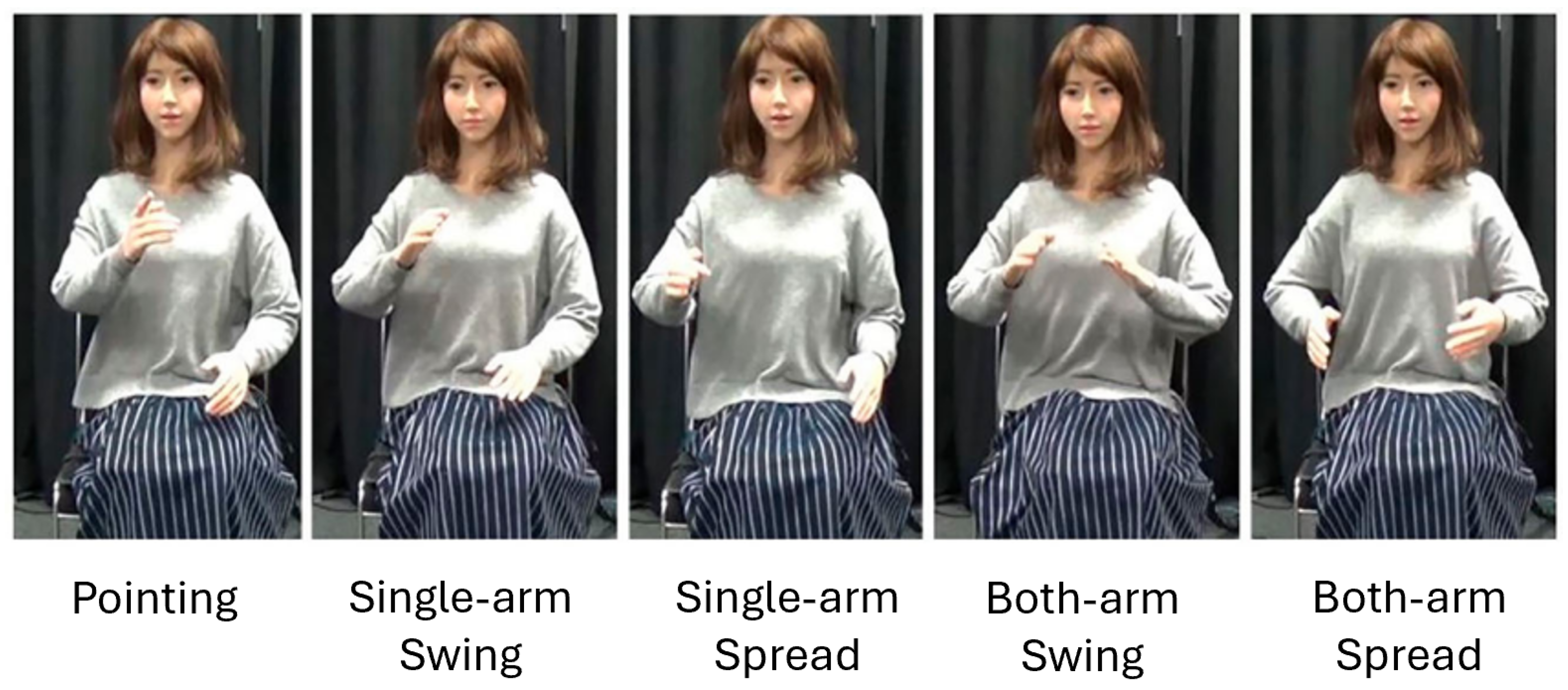

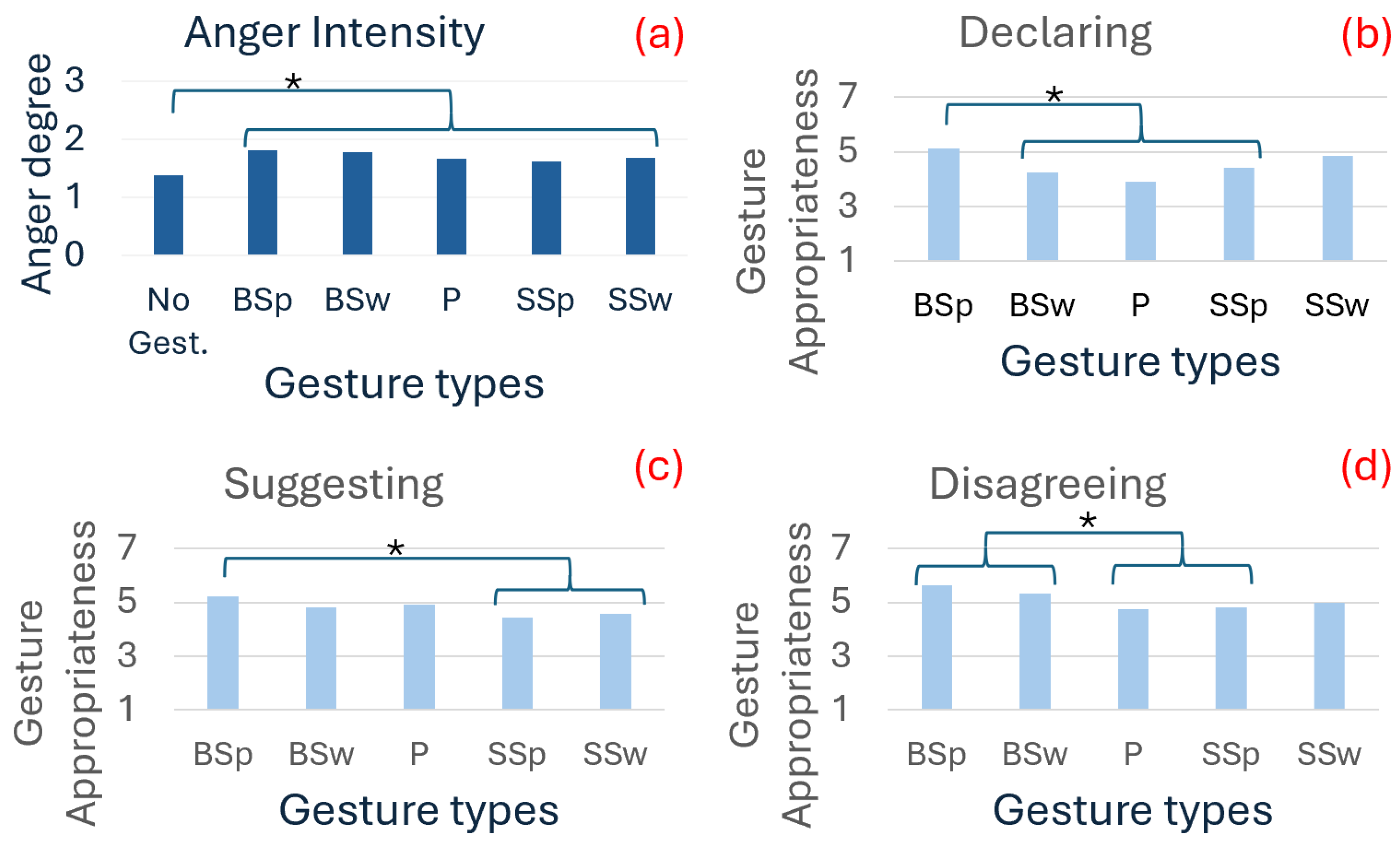

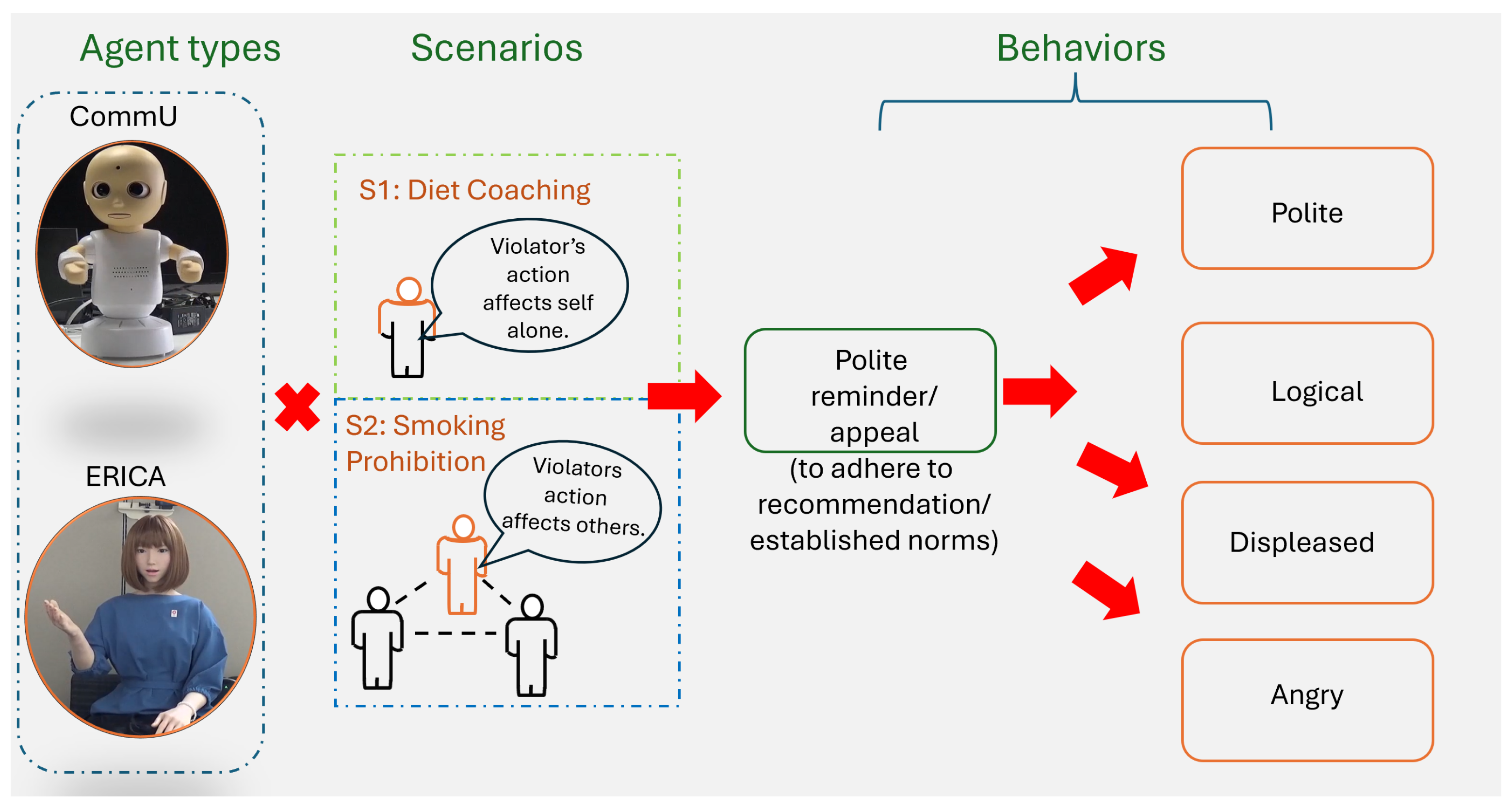

3. Study 1: Negative Persuasive Behavior by Android Robot

3.1. Background and Hypothesis

3.2. Experiment Design and Procedures

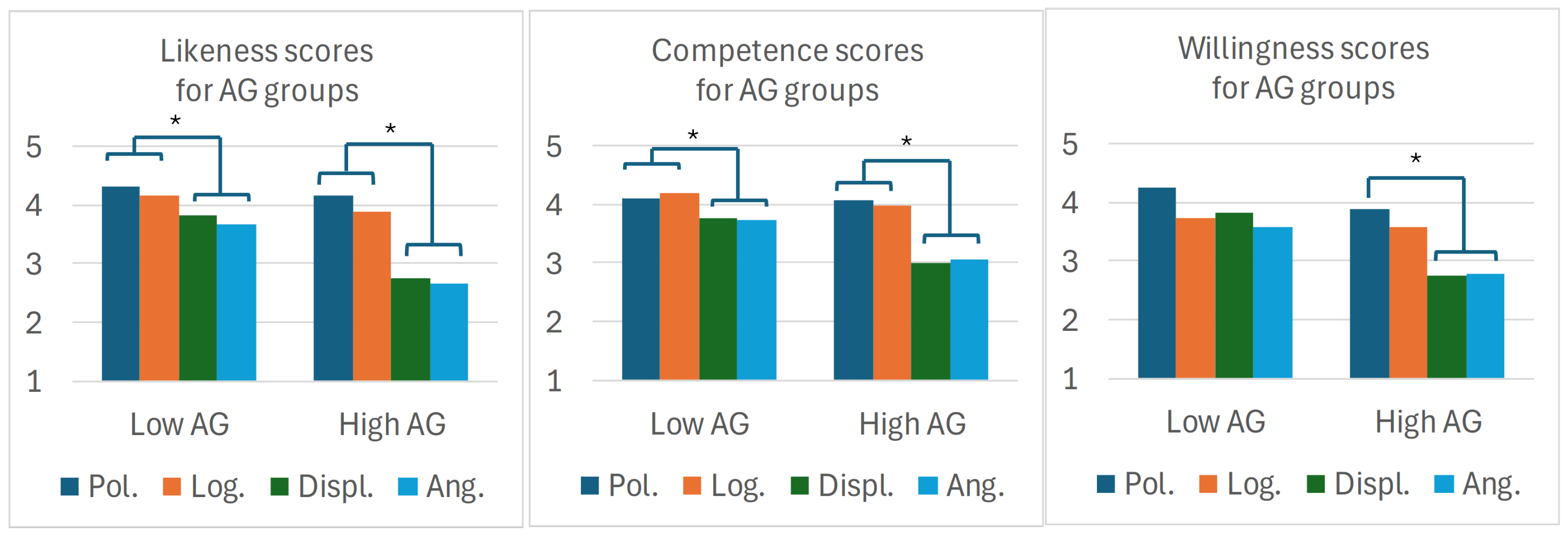

3.3. Results and Discussions

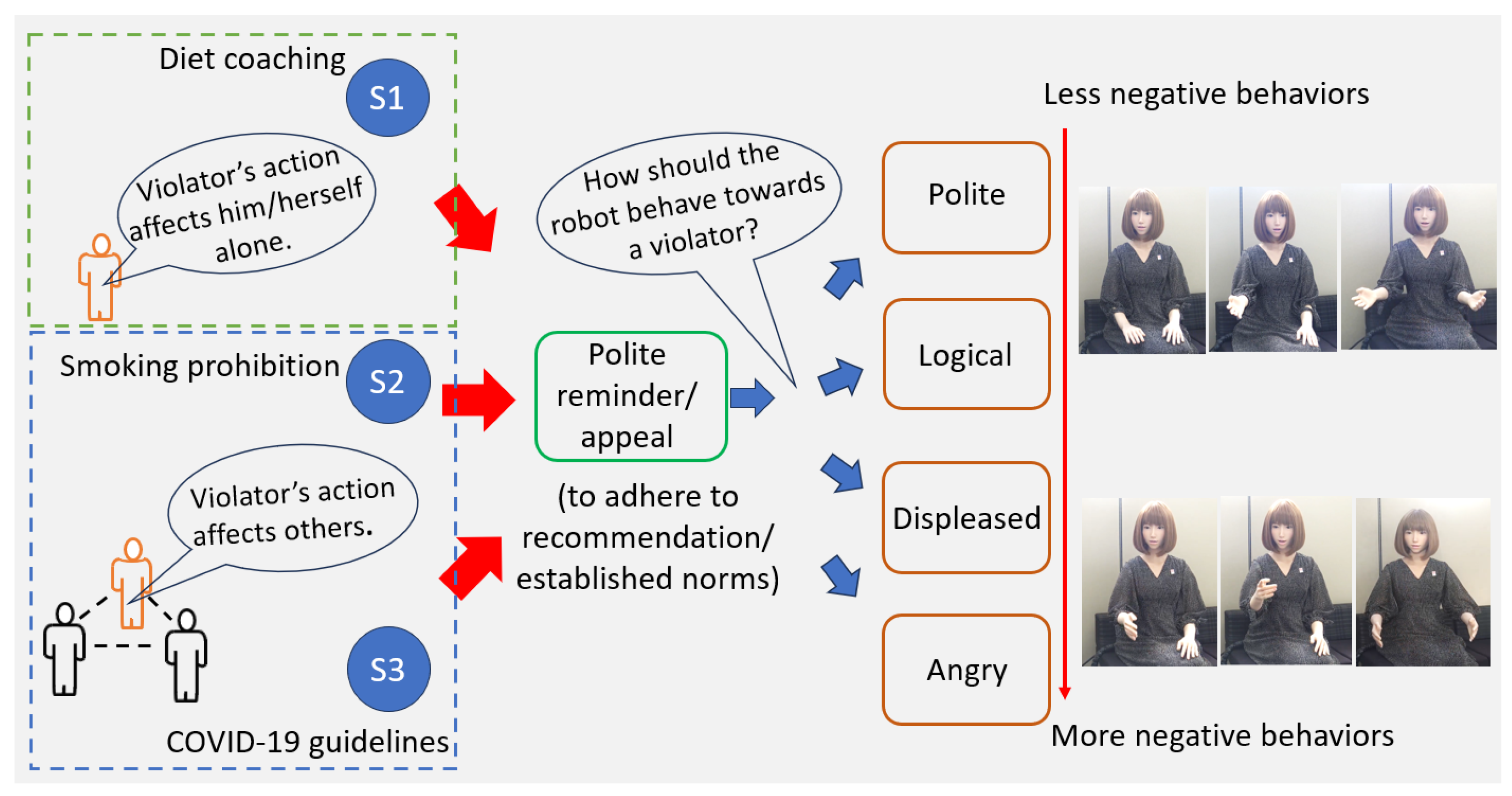

4. Study 2: Robot’s Persuasive Behaviors and Contexts of Violation

4.1. Background and Hypothesis

4.2. Experiment Design and Procedures

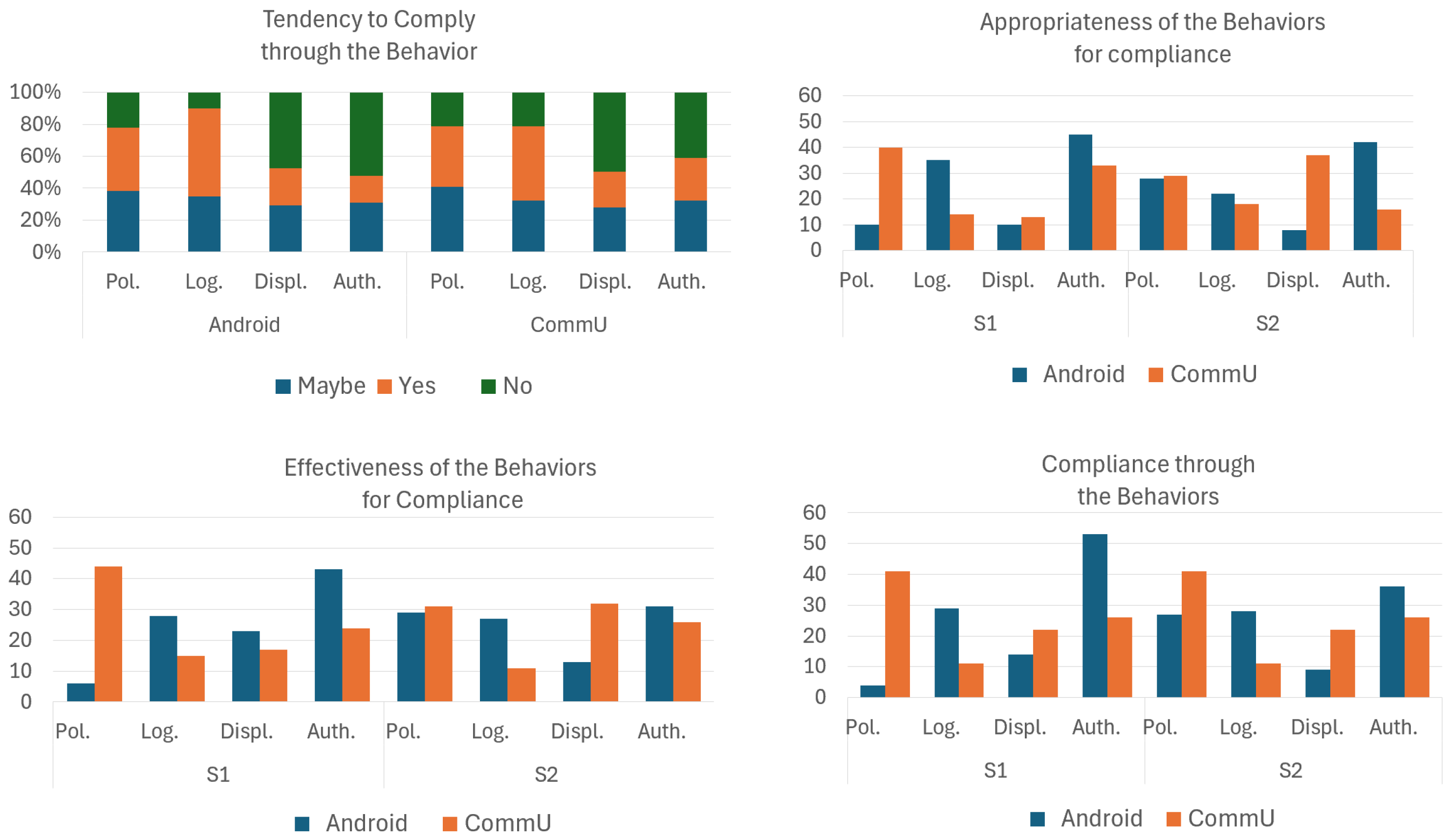

4.3. Results

4.4. Discussions Relative to Hypothesis

5. Study 3: Robot’s Appearance on Persuasive Behaviors

5.1. Background and Hypothesis

5.2. Experiment Design and Procedures

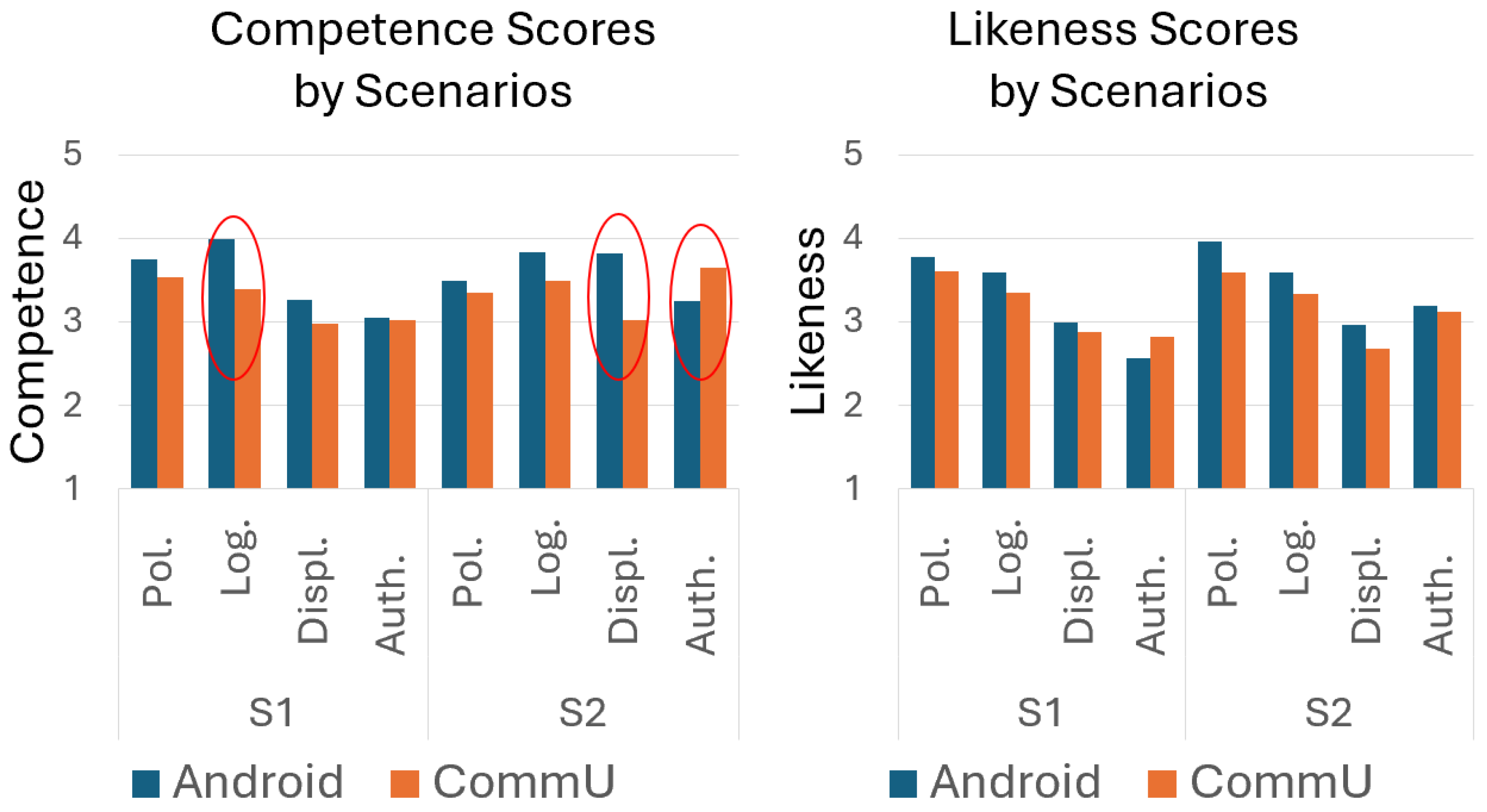

5.3. Results and Discussions

6. Limitations

7. Implications

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

List of Abbreviations

| Abbreviation | Definition |
|---|---|
| AG | Agreeableness |
| AMT | Amazon Mechanical Turk |
| ANOVA | Analysis of variance |
| AI | Artificial Intelligence |
| AMA | Artificial Moral Agents |
| CA | Compliance Awareness |
| COVID-19 | Coronavirus Disease 2019 |
| DoF | Degrees of Freedom |
| HHI | Human–Human Interaction |
| HRI | Human–Robot Interaction |
| MELD | Multimodal Multi-Party Dataset for Emotion Recognition in Conversation |
| RAISA | Robots, AI, Service Automation |
| WHO | World Health Organization |
| Gen Z | Generation Z |

| Traits | Threshold | Distribution |
|---|---|---|
| CA | 1.00 ≤ X ≤ 3.49 | Low CA (N = 47: 30 Male, 17 Female; M = 3.24, SD = 0.55) |
| CA | 3.50 ≤ X ≤ 5.00 | High CA (N = 51: 30 Male, 21 Female; M = 4.38, SD = 0.34) |
| AG | 1.00 ≤ X ≤ 2.99 | Low AG (N = 51: 35 Male, 16 Female; M = 2.69, SD = 0.58) |
| AG | 3.00 ≤ X ≤ 5.00 | High AG (N = 47: 25 Male, 22 Female; M = 4.47, SD = 0.46) |
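
The threshold split in the table above can be sketched in a few lines of Python. This is only an illustration of the cutoffs listed in the table, not the authors' analysis code: the names `HIGH_CUTOFF` and `trait_group` are hypothetical, and the sketch assumes trait scores lie on a 1.00 to 5.00 scale reported to two decimals.

```python
# Illustrative sketch only; names are hypothetical and not from the article.
# Cutoffs are the lower bounds of the "High" rows in the table above.
HIGH_CUTOFF = {"CA": 3.50, "AG": 3.00}


def trait_group(trait: str, score: float) -> str:
    """Return 'Low' or 'High' for a trait score on the assumed 1.00-5.00 scale."""
    if trait not in HIGH_CUTOFF:
        raise KeyError(f"unknown trait: {trait}")
    if not 1.0 <= score <= 5.0:
        raise ValueError("score must lie between 1.00 and 5.00")
    return "High" if score >= HIGH_CUTOFF[trait] else "Low"


if __name__ == "__main__":
    for trait, score in [("CA", 3.49), ("CA", 3.50), ("AG", 2.99), ("AG", 3.00)]:
        print(trait, score, trait_group(trait, score))
```

Note that the two traits use different cutoffs (3.50 for CA, 3.00 for AG), so the same raw score of 3.20 would fall in the Low CA group but the High AG group.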

| Impression Items | Scen-Beh F(6, 576) | CA-Beh F(3, 288) | AG-Beh F(3, 288) |
|---|---|---|---|
| Likeness | 3.4 * | 5.9 * | 14.4 ** |
| Appropriateness | 22.7 ** | 1.8 (ns) | 6.5 * |
| Effectiveness | 15.0 ** | 2.2 (ns) | 1.6 (ns) |
| Competence | 1.8 (ns) | 4.3 * | 7.4 * |
| Willingness | 12.3 ** | 6.3 * | 8.4 ** |

| Impression Items | CA F(1, 96) | AG F(1, 96) | Scen F(2, 192) | Beh F(3, 288) |
|---|---|---|---|---|
| Likeness | 29.8 ** | 18.5 ** | 2.8 * | 65.2 ** |
| Appropriateness | NaN (ns) | NaN (ns) | NaN (ns) | 17.0 ** |
| Effectiveness | NaN (ns) | NaN (ns) | NaN (ns) | 5.9 * |
| Competence | 22.7 ** | 11.8 ** | 0.3 (ns) | 35.3 ** |
| Willingness | 33.6 ** | 16.5 ** | 2.4 * | 34.1 ** |
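
The degrees of freedom in the F-statistic column headers can be cross-checked with the standard formulas for a mixed-design ANOVA with one two-level between-subjects factor. The sketch below is a reader's consistency check, not the authors' procedure. It takes N = 98 from the trait distribution table (47 + 51) and assumes three scenario levels and four behavior levels; those level counts are inferred from the reported degrees of freedom, not stated in this section, and no sphericity correction is assumed. The function names are hypothetical.

```python
from math import prod


def between_df(n_participants: int, n_groups: int) -> tuple[int, int]:
    """(effect df, error df) for a between-subjects factor."""
    return n_groups - 1, n_participants - n_groups


def within_df(n_participants: int, n_groups: int, within_levels: list[int],
              with_between: bool = False) -> tuple[int, int]:
    """(effect df, error df) for a within-subjects effect.

    within_levels lists the level count of each within factor involved
    (one entry for a main effect, two for a within-by-within interaction).
    Set with_between=True for the interaction with the between factor.
    """
    base = prod(k - 1 for k in within_levels)
    effect = base * (n_groups - 1) if with_between else base
    error = base * (n_participants - n_groups)
    return effect, error


if __name__ == "__main__":
    N, GROUPS = 98, 2          # N from the trait table; Low/High split
    SCEN, BEH = 3, 4           # assumed level counts, inferred from the df
    print("CA or AG:", between_df(N, GROUPS))
    print("Scen:", within_df(N, GROUPS, [SCEN]))
    print("Beh:", within_df(N, GROUPS, [BEH]))
    print("Scen-Beh:", within_df(N, GROUPS, [SCEN, BEH]))
    print("CA-Beh:", within_df(N, GROUPS, [BEH], with_between=True))
```

Under these assumptions the formulas give (1, 96), (2, 192), (3, 288), (6, 576), and (3, 288), which agree with the degrees of freedom shown in the table headers.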

| Impression Items | Beh-Scen F(3, 300) | Beh-Agt F(3, 300) |
|---|---|---|
| Likeness | 7.3 * | 2.1 (ns) |
| Competence | 16.5 * | 8.6 * |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ajibo, C.A.; Ishi, C.T.; Ishiguro, H. Negative Expressions by Social Robots and Their Effects on Persuasive Behaviors. Electronics 2025, 14, 2667. https://doi.org/10.3390/electronics14132667