AI-Powered Gamified Scaffolding: Transforming Learning in Virtual Learning Environment

Abstract

1. Introduction

- The research examines how various gamification modes affect learner engagement, experience, and perceived outcomes in a second-language virtual environment.

- Our study involved a cohort of 100 ESL (English as a second language) students and compared learning environments with three different levels of gamification.

- The results indicate that understanding learners' backgrounds is essential for effective virtual learning.

- While higher interactivity in virtual learning environments can enhance the learning experience, it might have a negative impact on actual learning outcomes.

- H1: In the blended language-learning environment, learners are more engaged when learning with a non-linear gamified guiding mode (exploration mode) than with the other two learning modes.

- H2: In the blended language-learning environment, learners have a better learning experience with a non-linear gamified guiding mode (exploration mode) than with the other two learning modes.

- H3: In the blended language-learning environment, learners perceive a better understanding of the learning content with a non-linear gamified guiding mode (exploration mode) than with the other two learning modes.

2. Related Work

2.1. Guiding Strategies in Learning

2.2. Second-Language Learning in a Virtual Learning Environment

2.3. Gamification in Language Learning

2.4. Artificial Intelligence in Education

2.5. AI-Powered Gamification in Education

3. User Study

3.1. Participants

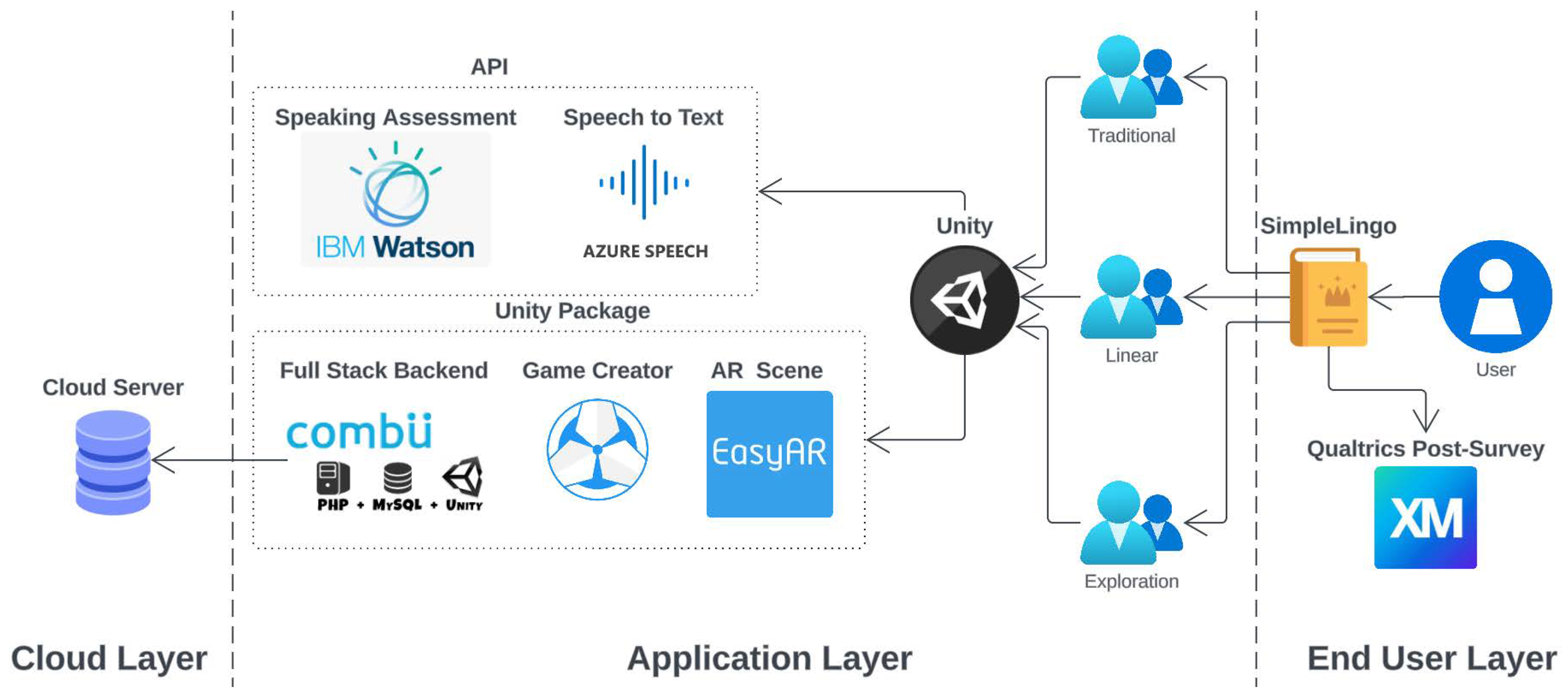

3.2. System Design

3.3. Procedure

3.3.1. Traditional Group

3.3.2. Linear Group

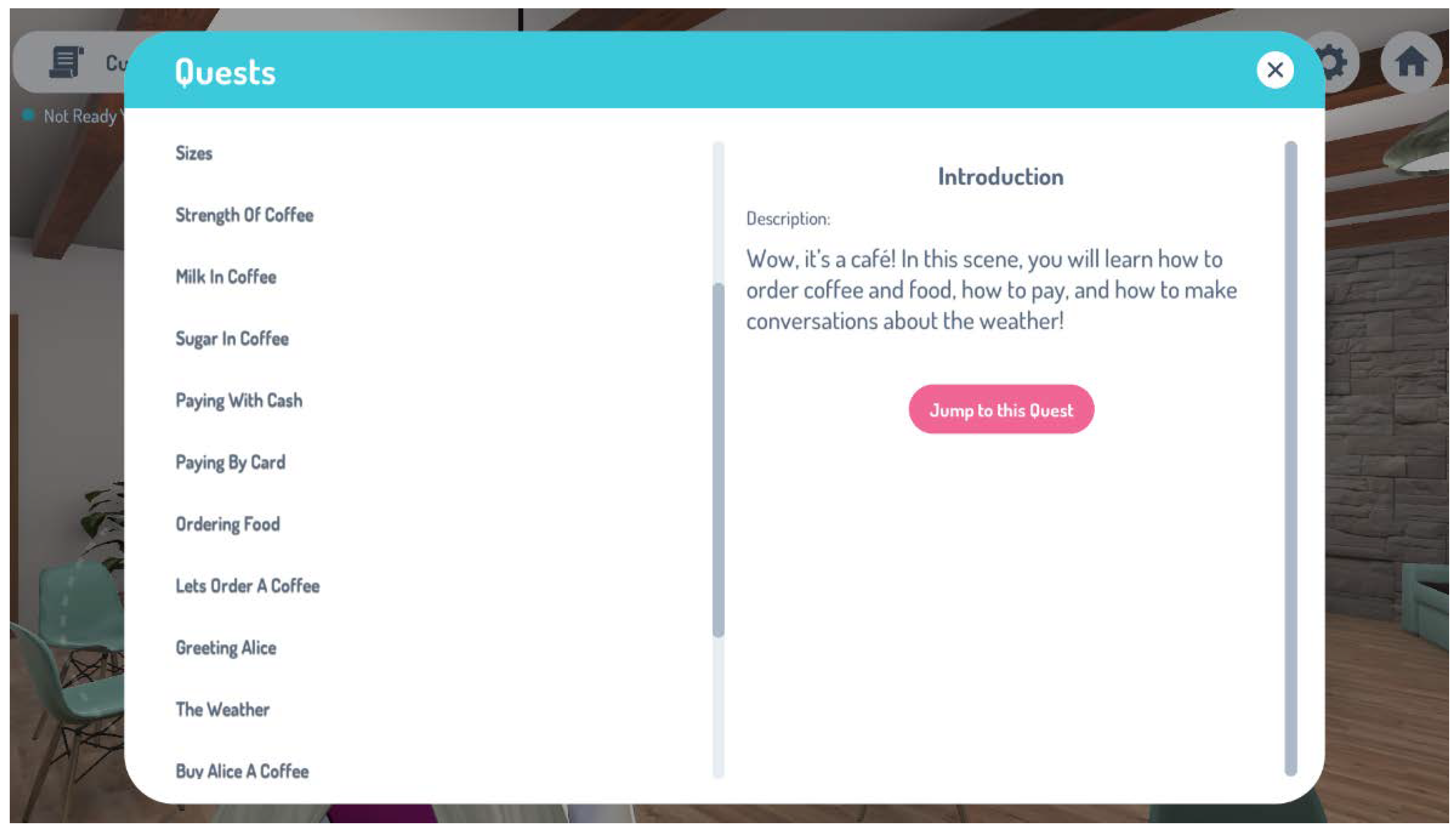

3.3.3. Exploration Group

3.3.4. Data Collection and Analysis

4. Results

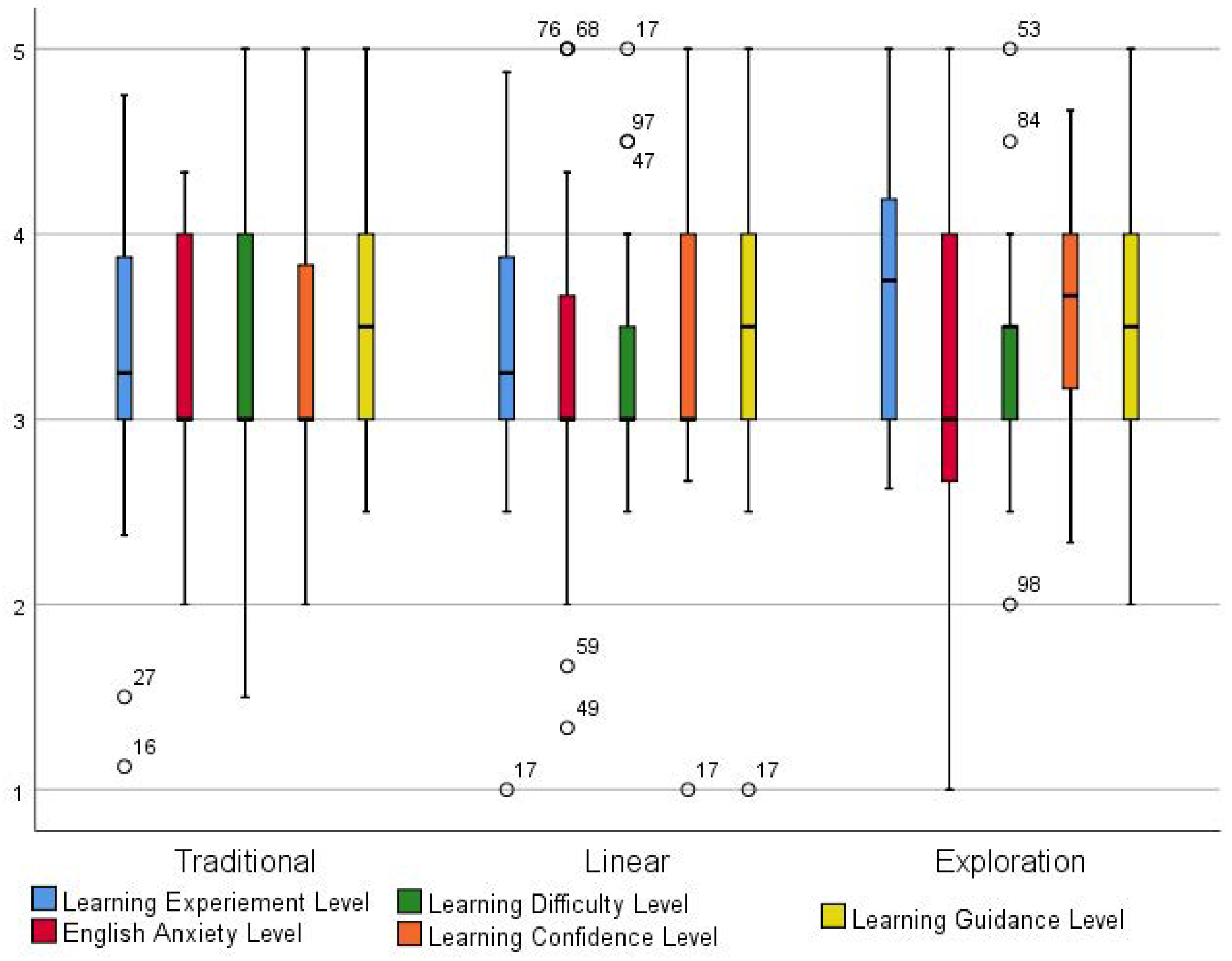

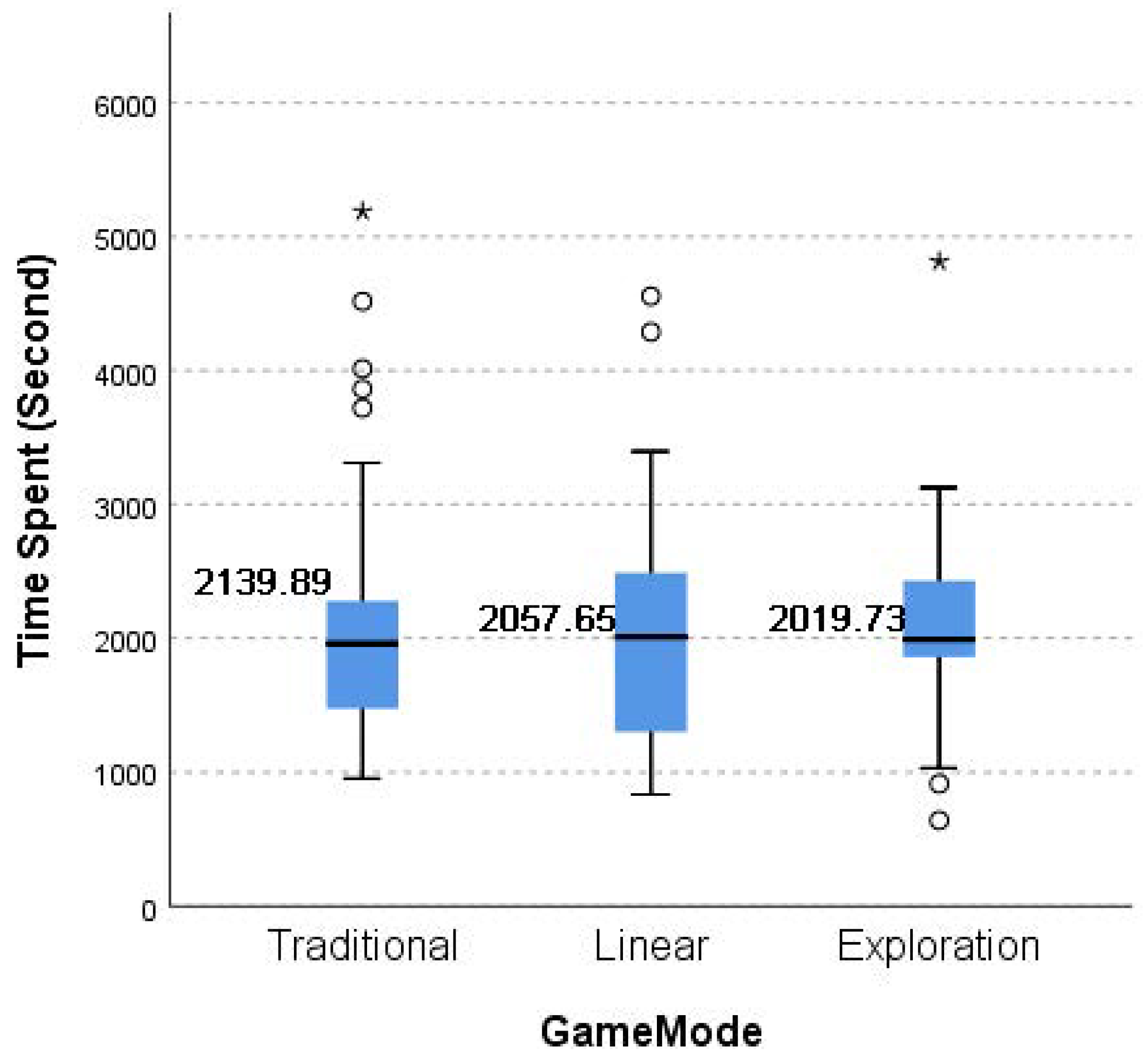

4.1. The Impact of Gamified Scaffolding on L2 Learning

4.2. The Impact of Second-Language Anxiety on L2 Learning

4.3. The Impact of Other Factors on L2 Learning

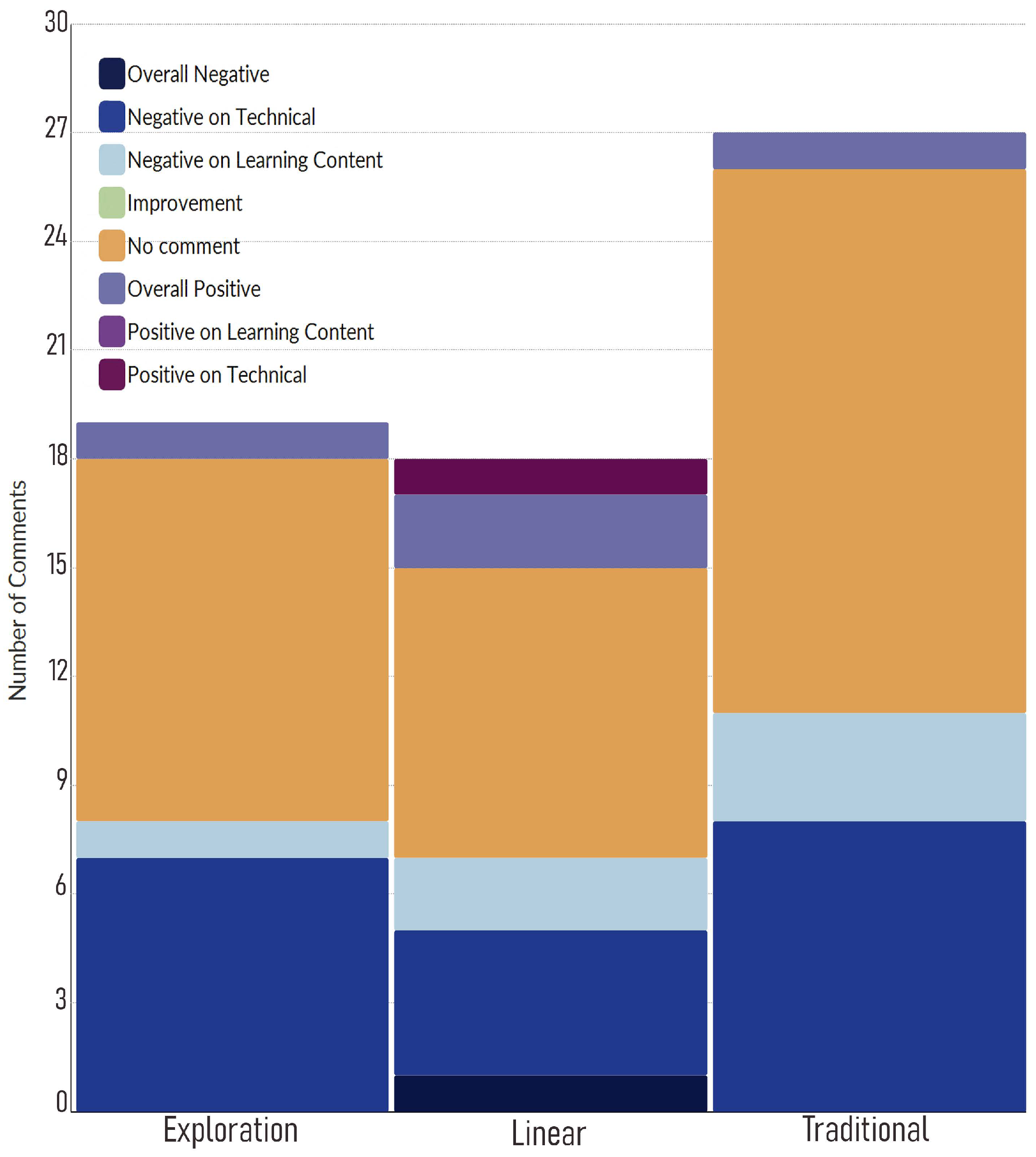

5. Discussion

5.1. Interactivity

5.2. Motivation and Engagement

5.3. Limitations and Future Research

1. Limited learning resources: As an in-house proof-of-concept prototype, our application offered limited learning resources and functionality, which could have reduced engagement among users who expected a commercial-grade application experience.

2. Lack of adaptive design: In our study, the gamified environment was not personalised to learners' cognitive styles, motivational drivers, or individual gamification preferences. Future work should include pre-assessments of learner profiles to better match gamification strategies to learner needs.

3. Comprehensive user profile: The participants came from a very similar demographic background: most were university undergraduates, and the majority had no experience of studying in an English-speaking country. This could have affected the results, because some participants felt the content was less relevant to them. Moreover, participants' prior gaming experience was not factored in, which provided a neutral ground for evaluating their engagement and perceptions in the virtual learning environment without biases from past experience. Future research should recruit participants from more diverse demographic backgrounds to mitigate these potential biases and produce more robust outcomes.

4. Short-term learning duration: This research was designed during a Master's study, which limited the duration of the learning session. While short-term studies like ours offer immediate insights, they might not capture how engagement and perceptions evolve over time. Longer learning sessions in a longitudinal design may reveal engagement patterns and perception trends that were not evident in our short-term investigation. Future research could extend this framework to a long-term study to explore the longitudinal benefits and develop a comprehensive gamification guide for language-learning environments.

6. Conclusions

1. Our study revealed that second-language anxiety was not the key factor affecting the learning experience in any of the three modes in the virtual environment. This result indicates that a gamified virtual learning environment could be a powerful tool for language learning, as it has the potential to ease users' second-language anxiety.

2. The traditional learning context, with fewer interactions, yielded the lowest learning-experience ratings among the three groups, while participants in both the linear mode and the exploration mode reported higher levels of learning experience and engagement. However, because these differences were not statistically significant, further investigation is needed to fully understand the role of interaction in L2 virtual learning environments.

3. As our study found that the different modes of virtual language learning did not significantly affect L2 learning in terms of learning experience, engagement, or perceived learning, we suggest that such applications can adopt whichever learning mode, or combination of modes, best suits the learning content.

4. AI should be integrated strategically to enhance specific pedagogical approaches, such as providing adaptive scaffolding for task-based learning, rather than being treated as a one-size-fits-all solution. Its value lies in its ability to personalise, adapt, and provide targeted feedback within a well-defined learning strategy that guides the learning process.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Feature (by game mode) | Traditional | Linear | Exploration |
|---|---|---|---|
| Full Learning Content | ✓ | ✓ | ✓ |
| Role-play Practice | ✓ | ✓ | ✓ |
| Movement Control | ✗ | ✓ | ✓ |
| Interactive Environment | ✗ | ✓ | ✓ |
| Progress Control | ✗ | ✗ | ✓ |

| Section | Questions |
|---|---|
| General questions | What is your ID on the home screen? What is your first language? Which country are you living in now? Please indicate your self-assessed English level. |
| Survey questions | (1) You get nervous and confused when you are speaking English. (2) You want to speak less because you feel shy while speaking English. (3) You start to panic when you have to speak English without preparation in advance. (4) You feel less anxious to speak English after you have practised and prepared the conversation. (5) Rate the difficulty you experienced in carrying out the task. (6) The contents/materials distributed were helpful. (7) You were making progress toward the end of the session. (8) You are confident that you are mastering the content of the simulation activity that the training presented to me. (9) Overall, how interested are you in the learning session? (10) Participation and interaction were encouraged in the learning session. (11) You were motivated to continue learning new content in the learning session. (12) Did you find yourself becoming so involved that you were unaware you were even using controls at any point in the learning session? (13) The teaching methods used in this training were helpful and effective. (14) Overall, you were guided toward the end of the session. (15) You know how to get help when you do not understand the concepts covered in this training. (16) Overall, the learning session was easy to follow. (17) Did you enjoy the learning session? (18) You would recommend the learning materials to your friends or classmates. Do you have any other comments regarding the questions above? |

| Item | Component 1 | Component 2 | Component 3 | Component 4 | Component 5 |
|---|---|---|---|---|---|
| 1 | −0.028 | 0.897 | −0.041 | 0.009 | 0.054 |
| 2 | −0.063 | 0.926 | −0.071 | 0.000 | −0.117 |
| 3 | 0.177 | 0.813 | −0.041 | −0.159 | −0.011 |
| 4 | 0.293 | 0.117 | 0.752 | −0.047 | −0.236 |
| 5 | 0.111 | 0.209 | −0.789 | 0.068 | −0.254 |
| 6 | 0.554 | 0.125 | −0.023 | −0.835 | −0.074 |
| 7 | 0.547 | 0.072 | 0.016 | −0.891 | 0.093 |
| 8 | 0.274 | 0.044 | 0.307 | −0.704 | 0.557 |
| 9 | 0.844 | 0.174 | 0.106 | −0.501 | 0.128 |
| 10 | 0.813 | 0.179 | 0.134 | −0.579 | 0.091 |
| 11 | 0.873 | 0.148 | 0.177 | −0.543 | 0.111 |
| 12 | 0.792 | −0.058 | 0.092 | −0.362 | 0.031 |
| 13 | 0.890 | −0.012 | 0.072 | −0.337 | 0.132 |
| 14 | 0.777 | −0.005 | 0.094 | −0.480 | 0.217 |
| 15 | 0.556 | 0.117 | −0.073 | −0.185 | 0.721 |
| 16 | 0.571 | −0.081 | 0.358 | −0.433 | 0.608 |
| 17 | 0.832 | 0.008 | 0.031 | −0.417 | 0.309 |
| 18 | 0.884 | 0.075 | 0.046 | −0.333 | 0.216 |
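One common way to read a component matrix like the one above is to assign each survey item to the component on which it has the largest absolute loading. The sketch below applies that rule to the 18 items, using the loadings exactly as tabulated. The assignment rule is our assumption for illustration only: the extraction and rotation methods, and the authors' own item-to-construct mapping, are not stated in this section.

```python
# Loadings copied from the component matrix above: item -> (C1, C2, C3, C4, C5).
# Assumption: items are grouped by their largest absolute loading (illustrative rule).
LOADINGS = {
    1: (-0.028, 0.897, -0.041, 0.009, 0.054),
    2: (-0.063, 0.926, -0.071, 0.000, -0.117),
    3: (0.177, 0.813, -0.041, -0.159, -0.011),
    4: (0.293, 0.117, 0.752, -0.047, -0.236),
    5: (0.111, 0.209, -0.789, 0.068, -0.254),
    6: (0.554, 0.125, -0.023, -0.835, -0.074),
    7: (0.547, 0.072, 0.016, -0.891, 0.093),
    8: (0.274, 0.044, 0.307, -0.704, 0.557),
    9: (0.844, 0.174, 0.106, -0.501, 0.128),
    10: (0.813, 0.179, 0.134, -0.579, 0.091),
    11: (0.873, 0.148, 0.177, -0.543, 0.111),
    12: (0.792, -0.058, 0.092, -0.362, 0.031),
    13: (0.890, -0.012, 0.072, -0.337, 0.132),
    14: (0.777, -0.005, 0.094, -0.480, 0.217),
    15: (0.556, 0.117, -0.073, -0.185, 0.721),
    16: (0.571, -0.081, 0.358, -0.433, 0.608),
    17: (0.832, 0.008, 0.031, -0.417, 0.309),
    18: (0.884, 0.075, 0.046, -0.333, 0.216),
}


def dominant_component(loadings):
    """Return the 1-based index of the component with the largest |loading|."""
    # Sign is ignored: a strong negative loading (e.g. item 5 on C3) still counts as strong.
    return max(range(len(loadings)), key=lambda i: abs(loadings[i])) + 1


def group_items(table):
    """Group item numbers (in ascending order) by their dominant component."""
    groups = {}
    for item, loadings in sorted(table.items()):
        groups.setdefault(dominant_component(loadings), []).append(item)
    return groups


if __name__ == "__main__":
    for component, items in sorted(group_items(LOADINGS).items()):
        print(f"Component {component}: items {items}")
```

Under this rule, items 1 to 3 (the speaking-anxiety questions) fall on Component 2 and items 9 to 14, 17, and 18 on Component 1. Items 8, 15, and 16 also carry sizeable secondary loadings (above 0.55 on another component), so their assignment is less clear-cut than the others.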

| Test | H1.1 | H1.2 | H1.3 | H1.4 | H1.5 |
|---|---|---|---|---|---|
| Normality | ≤ 0.05 | ≤ 0.05 | ≤ 0.05 | ≤ 0.05 | ≤ 0.05 |
| Kruskal-Wallis ANOVA | ≥ 0.05 | ≥ 0.05 | ≥ 0.05 | ≥ 0.05 | ≥ 0.05 |
| Descriptives | No Diff | No Diff | No Diff | No Diff | No Diff |
| Homogeneity | ≥ 0.05 | ≤ 0.05 | ≥ 0.05 | ≥ 0.05 | ≥ 0.05 |
| One-way ANOVA | ≥ 0.05, F < 0.05 | ≥ 0.05, F > 0.05 | ≥ 0.05, F > 0.05 | ≥ 0.05, F > 0.05 | ≥ 0.05, F > 0.05 |
| Tukey HSD | ≤ 0.05 | N/A | N/A | N/A | N/A |
| Power Calculation | ≥ 0.8 | ≥ 0.8 | ≥ 0.8 | ≥ 0.8 | ≥ 0.8 |
| Null Hypothesis | Not rejected | Not rejected | Not rejected | Not rejected | Not rejected |

| Measure | Group | N | Mean | Std. Deviation |
|---|---|---|---|---|
| Positive Interactions | Traditional | 36 | 56.69 | 54.393 |
| | Linear | 34 | 89.12 | 168.374 |
| | Exploration | 30 | 55.60 | 75.664 |
| | Total | 100 | 67.39 | 111.440 |
| Negative Interactions | Traditional | 36 | 38.03 | 16.455 |
| | Linear | 34 | 32.26 | 13.583 |
| | Exploration | 30 | 30.53 | 17.336 |
| | Total | 100 | 33.82 | 15.994 |
| Total Interactions | Traditional | 36 | 94.72 | 56.996 |
| | Linear | 34 | 121.38 | 171.438 |
| | Exploration | 30 | 86.13 | 80.451 |
| | Total | 100 | 101.21 | 114.304 |
| Time Spent (sec) | Traditional | 36 | 2139.89 | 1005.020 |
| | Linear | 34 | 2057.65 | 914.918 |
| | Exploration | 30 | 2019.73 | 803.709 |
| | Total | 100 | 2075.88 | 909.887 |
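The Total rows in the interaction table above follow from the group rows rather than being separate measurements. The grand mean is the N-weighted mean of the group means, and the total variance combines within-group variation with the spread of the group means around the grand mean:

$$s^2 = \frac{\sum_i (n_i - 1)s_i^2 + \sum_i n_i(\bar{x}_i - \bar{x})^2}{N - 1}.$$

The sketch below recomputes the Negative Interactions total as a consistency check. It assumes the tabulated standard deviations are sample (n − 1) standard deviations, which is the usual convention in statistics software but is not stated in this section.

```python
import math

# (n, mean, sd) per group, copied from the Negative Interactions rows above.
NEGATIVE = [(36, 38.03, 16.455), (34, 32.26, 13.583), (30, 30.53, 17.336)]


def combine_groups(groups):
    """Return (N, grand mean, total sample SD) from per-group (n, mean, sd) summaries.

    Assumes each sd is a sample standard deviation (denominator n - 1).
    """
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    # Within-group sum of squares, recovered from each group's sample SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    total_sd = math.sqrt((ss_within + ss_between) / (total_n - 1))
    return total_n, grand_mean, total_sd


if __name__ == "__main__":
    n, mean, sd = combine_groups(NEGATIVE)
    print(f"N = {n}, mean = {mean:.2f}, SD = {sd:.3f}")
```

Running it reproduces the tabulated Total row for Negative Interactions (N = 100, mean 33.82, SD 15.994); the same function applied to the Positive Interactions rows recovers 67.39 and 111.440.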

| Construct | Group | N | Mean | Std. Deviation |
|---|---|---|---|---|
| Experience | Traditional | 35 | 3.3714 | 0.78969 |
| | Linear | 34 | 3.3750 | 0.74366 |
| | Exploration | 31 | 3.6935 | 0.72875 |
| | Total | 100 | 3.4725 | 0.76264 |
| English Anxiety | Traditional | 35 | 3.2571 | 0.70981 |
| | Linear | 34 | 3.1961 | 0.92521 |
| | Exploration | 31 | 3.2043 | 0.94154 |
| | Total | 100 | 3.2200 | 0.85309 |
| Difficulty | Traditional | 35 | 3.3286 | 0.70651 |
| | Linear | 34 | 3.3382 | 0.59950 |
| | Exploration | 31 | 3.3387 | 0.62433 |
| | Total | 100 | 3.3350 | 0.63982 |
| Confidence | Traditional | 35 | 3.3048 | 0.66848 |
| | Linear | 34 | 3.4216 | 0.80949 |
| | Exploration | 31 | 3.6452 | 0.57048 |
| | Total | 100 | 3.4500 | 0.70013 |
| Guidance | Traditional | 35 | 3.5857 | 0.65849 |
| | Linear | 34 | 3.4412 | 0.83271 |
| | Exploration | 31 | 3.5806 | 0.77564 |
| | Total | 100 | 3.5350 | 0.75296 |
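A classic (equal-variance) one-way ANOVA across the three groups can be approximated from these summaries alone: the between-group and within-group sums of squares follow from N, mean, and SD. With k = 3 groups the numerator has 2 degrees of freedom, and for that case the upper tail of the F distribution has the closed form $p = (1 + 2F/d_2)^{-d_2/2}$, so no statistics library is needed. The sketch applies this to the Experience rows. It is an illustrative cross-check under stated assumptions (sample SDs; no Welch correction), not a reproduction of the authors' analysis, and which of H1.1 to H1.5 corresponds to Experience is not stated in this section.

```python
import math

# (n, mean, sd) per group, copied from the Experience rows above.
EXPERIENCE = [(35, 3.3714, 0.78969), (34, 3.3750, 0.74366), (31, 3.6935, 0.72875)]


def anova_from_summary(groups):
    """Classic one-way ANOVA F statistic and degrees of freedom from (n, mean, sd) summaries.

    Assumes sample SDs and equal population variances (no Welch correction).
    """
    k = len(groups)
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = k - 1, total_n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def f_sf_df1_2(f_stat, df_within):
    """Upper-tail p-value of F(2, df_within); this closed form is valid only for df1 = 2."""
    return (1 + 2 * f_stat / df_within) ** (-df_within / 2)


if __name__ == "__main__":
    f_stat, df1, df2 = anova_from_summary(EXPERIENCE)
    assert df1 == 2, "closed-form p-value assumes exactly three groups"
    p = f_sf_df1_2(f_stat, df2)
    print(f"F({df1}, {df2}) = {f_stat:.2f}, p = {p:.3f}")
```

With these inputs F is roughly 1.9 on 2 and 97 degrees of freedom and p is well above 0.05, consistent with the non-significant differences reported in the conclusions. For more than three groups, or when variances differ markedly, a general F-distribution routine and a Welch-type test would be the appropriate substitutes.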

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, X.; Wang, R.; Hoang, T.; Ranaweera, C.; Dong, C.; Myers, T. AI-Powered Gamified Scaffolding: Transforming Learning in Virtual Learning Environment. Electronics 2025, 14, 2732. https://doi.org/10.3390/electronics14132732
