Abstract

Industrial defect detection in edge computing environments must balance accuracy, efficiency, and adaptability under data scarcity. To address these challenges, we propose the Hybrid Anomaly Detection System (HyADS), a lightweight framework for edge-based industrial defect detection. HyADS integrates three complementary modules: (1) a feature extractor that combines Histogram of Oriented Gradients (HOG) and Local Binary Patterns (LBP) to capture robust texture features; (2) a lightweight U-Net autoencoder that reconstructs normal patterns while preserving spatial details, so that small-scale defects stand out; and (3) an adaptive patch-matching module, inspired by memory-bank retrieval, that accurately localizes local outliers. The outputs of these modules are fused and fed into a segmentation head that combines global reconstruction errors and local anomaly maps into pixel-accurate defect masks. Extensive experiments on the MVTec AD, NEU, and Severstal datasets demonstrate state-of-the-art performance: HyADS achieves an F1-score of 94.1% for anomaly detection on MVTec AD and IoU scores of 85.5% (NEU) and 82.8% (Severstal) for segmentation. Designed for edge deployment, the framework runs in real time (40–45 FPS on an NVIDIA RTX 4080 GPU) with low computational overhead, providing a practical solution for industrial quality control in resource-constrained environments.

1. Introduction

The rapid advancement of smart manufacturing and the Industrial Internet of Things (IIoT) has made high-precision, real-time defect detection a critical component for ensuring product quality and minimizing production losses. In edge computing architectures, detection systems must run directly on factory-floor devices (e.g., embedded cameras and industrial robots), which imposes stringent requirements on lightweight algorithm design, real-time performance, and data efficiency. However, three fundamental challenges persist in industrial scenarios: the extreme scarcity of anomalous samples (e.g., <1% defect occurrence in the MVTec AD dataset []), the multi-scale nature of defects (ranging from millimeter-scale cracks to meter-level structural deformations), and the limited computational resources of edge devices (e.g., GPUs with <8 GB of memory). Together, these challenges hinder the deployment of existing solutions in practical settings.

Current methodologies exhibit notable limitations under these constraints. Traditional handcrafted features (e.g., LBP [] and HOG []), while stable in low-data regimes, fail to capture subtle anomalies in complex textures. Autoencoders [] enable unsupervised detection by reconstructing normal samples but often overlook localized defects because of their global optimization objectives. Patch-based retrieval approaches such as PatchCore [] improve sensitivity to local anomalies but lack end-to-end pixel-level segmentation, and their high-dimensional feature matching further increases computational overhead. Crucially, these methods typically rely on isolated detection mechanisms and neglect the integration of global structural context with local anomaly cues, which compromises the balance between generalization and efficiency in dynamic industrial environments.

To address these challenges, we propose the Hybrid Anomaly Detection System (HyADS), a framework designed for edge-based anomaly detection. This study focuses on detecting defects on production lines efficiently and in real time when industrial computing power is limited and labeled data are scarce. Specifically, we design a lightweight detection solution that quickly locates defective areas and outputs pixel-level segmentation results without requiring large numbers of manually annotated samples, meeting the practical needs of edge devices. HyADS integrates three core components. First, building on classical texture analysis, a hybrid feature extractor combines Histogram of Oriented Gradients (HOG) and Local Binary Patterns (LBP) to capture rotation- and lighting-robust texture features, providing baseline robustness against environmental variations. Second, a lightweight U-Net autoencoder preserves spatial details through skip connections, reconstructing normal patterns while amplifying the reconstruction errors caused by subtle defects. Third, an adaptive patch-matching module compares local regions against a precomputed memory bank of normal samples to isolate anomalies at the pixel level. The framework fuses the hybrid texture features, the global reconstruction error maps generated by the U-Net, and the pixel-wise anomaly scores from the patch-matching module; these outputs are adaptively weighted and fed into a segmentation head that generates pixel-level defect masks. Extensive experiments on industrial benchmarks, including the MVTec AD, NEU, and Severstal datasets, demonstrate state-of-the-art performance.
HyADS achieves an F1-score of 94.1% on MVTec AD, surpassing PatchCore [] by 2.2%, and delivers segmentation IoU scores of 85.5% on NEU [] and 82.8% on Severstal [], a 5–7% improvement over existing state-of-the-art methods. Owing to its lightweight design, the framework achieves real-time inference at 40–45 FPS on an NVIDIA RTX 4080 GPU, providing a practical basis for edge deployment. The main contributions of this work are summarized as follows:

- We propose the Hybrid Anomaly Detection System (HyADS), a novel lightweight framework for edge-based industrial systems that achieves real-time inference (40–45 FPS) under extreme data scarcity (<1% anomalies).

- We develop three synergistic components: a hybrid HOG-LBP feature extractor that captures lighting-robust texture patterns, a spatial-detail-preserving U-Net autoencoder that amplifies global anomalies, and an adaptive patch-matching module, inspired by memory-bank mechanisms, for pixel-level defect localization.

- We design a fusion strategy that integrates three complementary sources of anomaly information (global reconstruction errors, local anomaly scores, and hybrid texture features) through a lightweight convolutional head.

The remainder of this article is structured as follows: Section 2 reviews related work in industrial anomaly detection. Section 3 details the HyADS architecture and algorithmic components. Section 4 presents experimental results and comparisons with baselines. Section 5 discusses practical implications and limitations, while Section 6 concludes with future research directions.

2. Related Work

In the quest to design robust industrial anomaly detection techniques, researchers initially relied on classical descriptors to capture local intensity patterns and gradients. Ojala et al. [] introduced the Local Binary Pattern (LBP), which encodes the neighborhood of each pixel with a simple binary comparison and operates effectively with small training sets. Although LBP is well suited to basic textures, it may overlook intricate surface variations and therefore struggle with subtle or complex anomalies. Subsequently, Dalal and Triggs [] presented the Histogram of Oriented Gradients (HOG), a gradient-based feature descriptor. While HOG captures edges and characteristic shapes, it is less effective for irregularly shaped or very small defects.

Bergmann et al. [] proposed a reconstruction-based strategy using autoencoders, hypothesizing that normal patterns can be learned from defect-free data and that reconstruction errors would reveal anomalies. This global approach can identify a variety of defect types, although local or high-frequency irregularities are sometimes overlooked. In an effort to address such limitations, Venkataramanan et al. [] explored skip connections and attention mechanisms within autoencoders to preserve spatial details; however, purely reconstruction-oriented methods may still miss subtle local deviations, particularly in challenging environments.

Roth et al. [] introduced PatchCore, a nearest-neighbor search technique that detects anomalous patches by comparing them against a reference set of normal features. PatchCore demonstrates high sensitivity to isolated or small defects but does not inherently produce segmentation masks. Akçay et al. [] proposed GANomaly, which leverages adversarial training for reconstruction to amplify the global error signal; nonetheless, it does not explicitly isolate small-scale anomalies.

Among recent industrial defect detection methods, DRAEM [] improves model generalization by synthesizing anomalous data in a self-supervised manner, but its complex network structure imposes a heavy computational burden when deployed on edge devices. PaDiM [] detects anomalies by modeling the statistical distribution of high-dimensional features; although it performs well on conventional hardware, it has shortcomings in memory usage and real-time inference. Compared with these methods, the proposed HyADS framework combines traditional LBP and HOG texture features with a lightweight U-Net structure to achieve better real-time performance and resource utilization, making it more suitable for industrial sites with limited computing resources.

The comparison in Table 1 highlights various industrial anomaly detection approaches. These methods may rely on classical descriptors, autoencoder-based reconstruction, patchwise retrieval, or segmentation, each with particular strengths and weaknesses. For example, limited-data conditions often favor traditional descriptors, whereas global reconstruction methods and patch-based techniques can handle different facets of anomalies but may omit fine-grained mask generation or have difficulties in capturing detailed defects. By contrast, the framework presented in this paper integrates these ideas—classical descriptors, a reconstruction-based autoencoder, patchwise retrieval, and segmentation—into a unified system capable of detecting both global and highly localized anomalies.

Table 1.

Comparative overview of industrial anomaly detection methods, indicating the usage of classical descriptors (LBP/HOG), autoencoder reconstruction, patchwise retrieval, and segmentation. The “Approach and Limitation” column concisely describes the primary technique and its main shortcoming. (Symbol “🗸” indicates the method adopts that component, “✗” means it is absent.)

Although these methods offer valuable insights, they often face trade-offs between data utility and computational overhead, particularly as data volumes grow or the number of end users rises. Such constraints limit the usability of existing solutions in scenarios such as UAV-based inspection or distributed industrial monitoring. Additional factors, including compatibility with existing infrastructure, user-friendliness, and interoperability across platforms, further complicate efforts to achieve robust, privacy-aware detection on resource-constrained edge devices. In particular, ensuring both anonymity and efficient learning in systems where private information must remain on the edge is a non-trivial challenge.

To address these issues, the subsequent sections of this paper propose a novel hybrid detection pipeline that integrates the best elements of classical and deep learning-based methods under minimal supervision. Furthermore, a tailored Federated Learning (FL) framework with local differential privacy (LDP) and shuffle strategies is introduced to enhance the confidentiality of user data without substantially degrading model performance. Shuffling model parameters weakens the correlation between uploaded data and specific participants, providing a higher degree of anonymity. The resulting approach, termed FedShufde, merges privacy protection with effective anomaly detection, illustrating how advanced techniques can be extended to large-scale industrial or UAV-centric environments. Further details are provided in Section 4.

3. Methodology

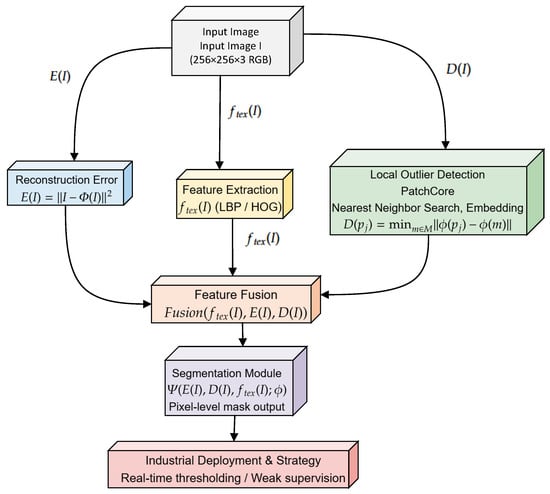

This section introduces our proposed Hybrid Anomaly Detection System (HyADS), which effectively integrates classical texture descriptors, a U-Net autoencoder for global reconstruction, PatchCore-based local anomaly detection, and a final fusion-driven segmentation module. This comprehensive framework is specifically designed to identify both large-scale and subtle defects under challenging industrial conditions, including limited annotation availability and varying operational environments. An overview of the complete pipeline and its modules is illustrated in Figure 1.

Figure 1.

Overview of our hybrid anomaly detection framework. Given an input image $x$, classical texture features $\mathbf{t}$ are first extracted using LBP and HOG. Subsequently, a U-Net autoencoder produces a global reconstruction error map $E$, while PatchCore computes a patch-based local anomaly map $A$ by comparing image patches to a memory bank of normal embeddings. These outputs ($E$, $A$, $\mathbf{t}$) are then fused within a lightweight segmentation module $S_\psi$, generating precise pixel-level defect masks suitable for real-time industrial inspection under limited annotation constraints.

We selected the traditional LBP and HOG texture features because they capture texture details without extensive training, which suits data-scarce environments; the U-Net autoencoder was chosen for its efficient image reconstruction, which exposes abnormal areas; and the PatchCore module was included specifically to capture small local anomalies. The flowchart in Figure 1 details the data flow between modules: starting from the input image, the texture features, U-Net reconstruction errors, and PatchCore local anomaly distance maps are fused, and the lightweight segmentation network then generates a pixel-level defect mask.

To address scarce annotations and the limited computing power of edge devices in real industrial scenarios, we use LBP and HOG texture features to reduce the need for large amounts of training data, and the U-Net autoencoder's reconstruction error to locate global structural anomalies. The PatchCore module locates subtle defects through local feature comparison; together, these components enable real-time defect detection with reduced dependence on data.

3.1. Framework Overview

This section briefly introduces the overall data flow and core process of the HyADS framework. As shown in Figure 1, traditional texture features (LBP and HOG) are first extracted from the input image, and a pre-trained U-Net autoencoder generates the image's reconstruction error map. In parallel, ResNet-18 features are extracted from the image and passed to the PatchCore module, which generates the local anomaly distance map. The three resulting feature maps are then adaptively weighted, fused, and fed into the lightweight segmentation head.

3.2. Classical Texture Feature Extraction

Classical texture descriptors, such as the Local Binary Pattern (LBP) [] and the Histogram of Oriented Gradients (HOG) [], offer a robust initial characterization of image textures, which is particularly advantageous when labeled data are scarce or environmental variations are significant. LBP encodes local pixel intensity differences as binary patterns, capturing texture information effectively, while HOG summarizes local gradient orientations over a grid of cells, emphasizing edge-related features. In our framework, LBP with radius 1 and 8 neighbors and HOG with 8 × 8-pixel cells and 2 × 2-cell blocks are selected based on prior studies [] and validation experiments. These settings allow effective feature extraction at minimal computational cost. We integrate these descriptors specifically to improve detection robustness under limited training data, making the method more suitable for edge deployment. Each input image is resized, and its LBP and HOG features are extracted and concatenated to form a texture vector $\mathbf{t}$.
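The texture branch can be sketched as follows. This is a minimal, dependency-free NumPy approximation and not the authors' implementation: the paper uses scikit-image, whereas here the 8 radius-1 neighbours are taken on the integer pixel grid (rather than sampled on a circle), HOG uses hard magnitude-weighted orientation voting with 9 unsigned bins (an assumed value) and plain L2 block normalization, and the function names are hypothetical.

```python
import numpy as np


def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Radius-1, 8-neighbour LBP codes summarised as a normalised 256-bin histogram."""
    g = gray.astype(np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbour offsets (dy, dx) on the integer grid, clockwise from the top-left.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy : h - 1 + dy, 1 + dx : w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()


def hog_descriptor(gray: np.ndarray, cell: int = 8, block: int = 2, bins: int = 9) -> np.ndarray:
    """Simplified HOG: per-cell orientation histograms, L2-normalised over sliding blocks."""
    g = gray.astype(np.float64)
    gy, gx = np.gradient(g)  # central differences along rows (y) and columns (x)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation in [0, 180)
    bin_idx = np.minimum((ang / (180.0 / bins)).astype(np.int64), bins - 1)
    n_cy, n_cx = g.shape[0] // cell, g.shape[1] // cell
    cells = np.zeros((n_cy, n_cx, bins))
    for i in range(n_cy):
        for j in range(n_cx):
            rows = slice(i * cell, (i + 1) * cell)
            cols = slice(j * cell, (j + 1) * cell)
            # Magnitude-weighted vote of every pixel in the cell into its orientation bin.
            cells[i, j] = np.bincount(bin_idx[rows, cols].ravel(),
                                      weights=mag[rows, cols].ravel(), minlength=bins)
    blocks = []
    for i in range(n_cy - block + 1):
        for j in range(n_cx - block + 1):
            v = cells[i : i + block, j : j + block].ravel()
            blocks.append(v / np.sqrt(np.sum(v ** 2) + 1e-12))
    return np.concatenate(blocks)


def texture_vector(gray: np.ndarray) -> np.ndarray:
    """Concatenate the LBP histogram and the HOG descriptor into one texture vector."""
    return np.concatenate([lbp_histogram(gray), hog_descriptor(gray)])
```

For a 64 × 64 grayscale image this yields 256 LBP bins plus 7 × 7 blocks of 36 HOG values each, i.e. a 2020-dimensional texture vector. In practice, `skimage.feature.local_binary_pattern` and `skimage.feature.hog` are the faster and more faithful choices.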

3.3. Autoencoder-Based Reconstruction (U-Net)

To capture global structural deviations, we adopt a U-Net autoencoder trained solely on defect-free (normal) images. Let $f_\theta$ denote the U-Net parameterized by $\theta$. The autoencoder is optimized by minimizing the mean squared error (MSE) between the normal training samples $x_i$ and their reconstructions $f_\theta(x_i)$:

$$\mathcal{L}(\theta) = \frac{1}{N} \sum_{i=1}^{N} \left\lVert x_i - f_\theta(x_i) \right\rVert_2^2,$$

where $N$ is the total number of normal samples. During inference, the autoencoder generates a global reconstruction error map, computed element-wise:

$$E(x) = \left( x - f_\theta(x) \right)^{2},$$

where elevated values in $E(x)$ signal significant deviations from normal patterns.
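Given a trained autoencoder, both the training objective and the inference-time error map reduce to a few lines of NumPy. A minimal sketch, assuming images are stored as (C, H, W) arrays and that the reconstruction has already been produced by some U-Net (not shown); averaging the squared error over channels to obtain a single-channel map is an assumption made here, and the function names are illustrative.

```python
import numpy as np


def mse_objective(batch: np.ndarray, recon: np.ndarray) -> float:
    """(1/N) * sum_i ||x_i - f(x_i)||_2^2 for a batch of N samples of any shape."""
    diff = (batch - recon).reshape(batch.shape[0], -1)
    return float(np.mean(np.sum(diff ** 2, axis=1)))


def reconstruction_error_map(x: np.ndarray, x_hat: np.ndarray) -> np.ndarray:
    """Element-wise squared error for a (C, H, W) image, averaged over channels -> (H, W).

    The channel average is an assumption used here to obtain one map per image.
    """
    return np.mean((x - x_hat) ** 2, axis=0)


# Toy check: a "reconstruction" that reproduces only the normal (all-zero) pattern.
x = np.zeros((3, 8, 8))
x[:, 5, 2] = 1.0              # hypothetical bright defect at row 5, column 2
x_hat = np.zeros_like(x)      # stand-in for f_theta(x)
err = reconstruction_error_map(x, x_hat)
peak = np.unravel_index(np.argmax(err), err.shape)  # location of the largest error
```

Because the autoencoder has only learned normal appearance, the defect pixel is the one it fails to reproduce, so the error peak lands on it.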

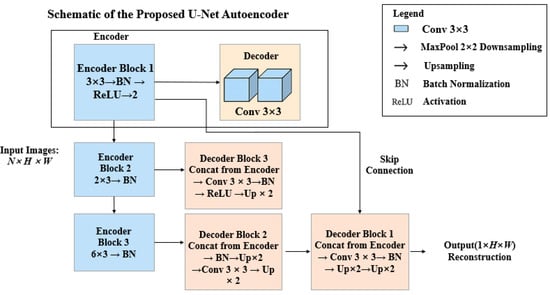

Figure 2 illustrates the detailed architecture of the U-Net autoencoder. The encoder comprises three successive downsampling blocks (Conv 3×3 → BN → ReLU → MaxPool), with feature channel dimensions progressively increasing (64, 128, 256, and 512). The decoder utilizes transposed convolutions (or upsampling), followed by convolutional layers (Conv+BN+ReLU), and integrates corresponding encoder features through skip connections, effectively preserving spatial detail critical for highlighting subtle defects.

Figure 2.

Detailed structure of the U-Net autoencoder. Encoder: Three downsampling stages with channel dimensions sequentially expanding from 64 to 512, each comprising Conv 3×3, batch normalization, ReLU activation, and max pooling. Decoder: Corresponding upsampling stages with transposed convolutions and concatenated skip connections from the encoder, restoring fine-grained spatial detail.

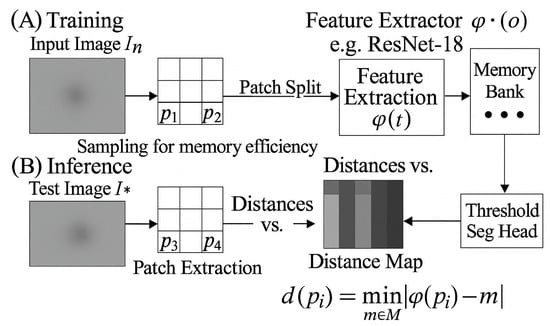

3.4. PatchCore-Based Local Anomaly Detection

While the autoencoder effectively identifies global anomalies, subtle or localized defects may evade detection if they barely affect overall reconstruction quality. PatchCore [] addresses this limitation with a patch-wise anomaly detection strategy. To reduce memory and computational overhead, we adopt a simplified PatchCore variant. During training (Figure 3A), features from normal patches are embedded with a ResNet-18 backbone and selectively stored in memory using reservoir sampling. This strategy limits storage to 10% of the patches, maintaining diversity while controlling resource usage.
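Reservoir sampling (the classic Algorithm R) maintains a uniform random subset of fixed size k from a stream whose length need not be known in advance. The sketch below is the textbook algorithm in plain Python; how the authors' variant chooses k or batches the feature vectors is not specified, so `reservoir_sample` and its arguments are illustrative.

```python
import random
from typing import Iterable, List, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int, seed: int = 0) -> List[T]:
    """Algorithm R: keep a uniform random sample of k items from a stream of unknown length."""
    rng = random.Random(seed)
    reservoir: List[T] = []
    for n, item in enumerate(stream):
        if n < k:
            reservoir.append(item)          # fill the reservoir with the first k items
        else:
            j = rng.randint(0, n)           # uniform over 0..n inclusive
            if j < k:
                reservoir[j] = item         # item n is kept with probability k / (n + 1)
    return reservoir
```

To retain roughly 10% of the patches, k can be set to a tenth of the expected number of normal patch embeddings; every embedding seen so far then has the same probability of being in the memory bank, and its size never exceeds k.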

Figure 3.

PatchCore anomaly detection approach. (A) Training phase: Patches from normal images are extracted, embedded, and selectively stored in a memory bank. (B) Inference phase: Test patch embeddings are compared against the memory, forming a local anomaly map that highlights anomalous regions.

During inference (Figure 3B), each test patch $p$ is embedded in the same way, yielding $\phi(p)$, and its local anomaly score is computed as the minimal distance to the patch embeddings in the memory bank $\mathcal{M}$:

$$a(p) = \min_{m \in \mathcal{M}} \left\lVert \phi(p) - m \right\rVert_2.$$

The set of these distances forms the patch-based local anomaly map $A$, which isolates fine-grained anomalies. In the training phase (Figure 3A), we first use the pre-trained feature extractor (a ResNet-18 backbone in this paper) to obtain high-dimensional feature representations of all normal image patches. To balance computational efficiency and feature diversity, we use reservoir sampling to draw about 10% of all normal feature vectors uniformly at random and store them in the memory bank $\mathcal{M}$. Because reservoir sampling maintains a fixed-size uniform sample of a stream, the memory bank can be updated dynamically as new normal samples arrive while remaining representative, without memory overhead growing indefinitely.
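The inference-time scoring can be sketched in NumPy as an exact nearest-neighbour search. This is an illustration under stated assumptions: patch embeddings are already extracted (the ResNet-18 step is not shown), distances are Euclidean, and the patch-level map is upsampled by simple pixel repetition, whereas the original PatchCore uses bilinear interpolation followed by Gaussian smoothing. The function name `local_anomaly_map` is hypothetical.

```python
from typing import Optional, Tuple

import numpy as np


def local_anomaly_map(test_emb: np.ndarray, memory_bank: np.ndarray,
                      grid_hw: Tuple[int, int],
                      out_hw: Optional[Tuple[int, int]] = None) -> np.ndarray:
    """Score each test patch by its L2 distance to the nearest memory-bank embedding.

    test_emb:    (P, D) patch embeddings of one image, row-major over a gh x gw grid.
    memory_bank: (K, D) stored normal embeddings.
    """
    # Squared distances via ||a||^2 - 2 a.b + ||b||^2; clip tiny negatives from rounding.
    d2 = (np.sum(test_emb ** 2, axis=1, keepdims=True)
          - 2.0 * test_emb @ memory_bank.T
          + np.sum(memory_bank ** 2, axis=1)[None, :])
    scores = np.sqrt(np.maximum(d2.min(axis=1), 0.0))
    amap = scores.reshape(grid_hw)
    if out_hw is not None:
        # Nearest-neighbour upsampling by integer factors (assumes out_hw is a multiple of grid_hw).
        fy, fx = out_hw[0] // grid_hw[0], out_hw[1] // grid_hw[1]
        amap = np.kron(amap, np.ones((fy, fx)))
    return amap
```

The dense (P × K) distance matrix is what makes memory-bank subsampling pay off: search cost grows linearly with K, so keeping about 10% of the embeddings cuts it roughly tenfold. For very large banks, an approximate nearest-neighbour index would typically replace the dense computation.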

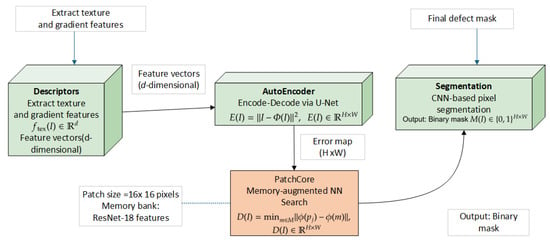

3.5. Fusion Strategy and Synergistic Integration

Fusion Strategy. Effective industrial inspection demands precise pixel-level defect localization. We fuse three complementary sources of anomaly information: the global reconstruction error map $E$ from the U-Net autoencoder, the patch-based local anomaly map $A$ from PatchCore, and the texture feature vector $\mathbf{t}$ derived from LBP and HOG. These fused features are input into a lightweight segmentation network $S_\psi$, producing a binary defect mask of resolution $H \times W$:

$$\hat{Y} = S_\psi\left(E, A, \mathbf{t}\right) \in \{0, 1\}^{H \times W}.$$

When pixel-level annotations are scarce, we adopt an adaptive weighted averaging strategy for the global reconstruction error map $E$ and the local anomaly map $A$. The weights are relative confidence scores derived empirically from validation performance, which allows reliable segmentation without extensive annotations.

In the adaptive fusion stage, the fusion weights are determined by an empirical parameter search: we evaluate how the U-Net reconstruction error map and the PatchCore local anomaly distance map affect the final segmentation performance on the validation set, and select the relative weights whose combination yields the highest validation F1-score. Weights obtained in this way balance the strengths of the global and local anomaly detection modules and optimize overall segmentation quality when annotations are scarce.
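The weighting and validation search described above can be sketched as follows. This is an assumption-laden illustration, not the authors' procedure: the source does not specify how the global (E) and local (A) maps are normalized, the candidate weight grid, or the binarization threshold, so min-max normalization, an 11-point grid over [0, 1], a fixed threshold of 0.5, and pixel-level F1 pooled over the validation set are choices made here. The texture vector, which enters the segmentation head separately, is not involved.

```python
from typing import Iterable, Sequence, Tuple

import numpy as np


def minmax(m: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Rescale a map to [0, 1] so that global and local scores are comparable."""
    return (m - m.min()) / (m.max() - m.min() + eps)


def fuse(E: np.ndarray, A: np.ndarray, w_E: float) -> np.ndarray:
    """Convex combination w_E * E + (1 - w_E) * A of the normalised maps."""
    return w_E * minmax(E) + (1.0 - w_E) * minmax(A)


def pixel_f1(pred: np.ndarray, gt: np.ndarray) -> float:
    """F1 = 2TP / (2TP + FP + FN) over all pixels of binary masks."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp = np.logical_and(pred, gt).sum()
    fp = np.logical_and(pred, ~gt).sum()
    fn = np.logical_and(~pred, gt).sum()
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else float(2 * tp / denom)


def search_fusion_weight(val_set: Sequence[Tuple[np.ndarray, np.ndarray, np.ndarray]],
                         weights: Iterable[float] = np.linspace(0.0, 1.0, 11),
                         tau: float = 0.5) -> Tuple[float, float]:
    """Grid-search w_E on (E, A, ground-truth mask) triples, maximising pooled pixel F1."""
    best_w, best_f1 = 0.0, -1.0
    gts = np.concatenate([gt.ravel() for _, _, gt in val_set])
    for w in weights:
        preds = np.concatenate([(fuse(E, A, w) > tau).ravel() for E, A, _ in val_set])
        f1 = pixel_f1(preds, gts)
        if f1 > best_f1:                # keep the first weight on ties
            best_w, best_f1 = float(w), f1
    return best_w, best_f1
```

Normalizing both maps first matters: reconstruction errors and feature-space distances live on unrelated scales, so without it the weight would mostly compensate for units rather than express relative confidence.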

Synergistic Integration. Figure 4 presents a detailed workflow for the fusion-driven segmentation module. The U-Net excels at detecting global deviations, PatchCore reliably identifies local anomalies, and classical texture features provide robustness under challenging scenarios. Ablation studies (Section 4) confirm that integrating these components yields a 5–7% improvement in Intersection-over-Union (IoU), significantly enhancing detection accuracy across diverse defect types.

Figure 4.

Dataflow diagram of the fusion-driven segmentation module. The segmentation network $S_\psi$ integrates U-Net's global reconstruction errors $E$, PatchCore's local anomaly distances $A$, and supplementary texture descriptors $\mathbf{t}$, generating a precise binary mask crucial for industrial defect monitoring.

4. Experiments and Results

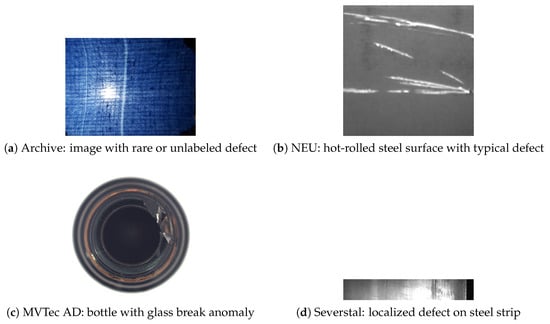

We evaluated our anomaly detection framework on four industrial datasets to assess its adaptability and effectiveness across various defect types and annotation granularities: the MVTec AD dataset [], the NEU Surface Defect Database [], the Severstal Steel Defect Detection dataset [], and a smaller internal archive. These datasets cover diverse textures and defect morphologies, with labels ranging from image-level annotations to precise pixel-level masks. Representative samples from each dataset are shown in Figure 5.

Figure 5.

Representative samples from four industrial anomaly detection datasets, each illustrating distinct defect patterns in different manufacturing scenarios. (a) Archive provides scarce or unlabeled anomalies; (b) NEU highlights hot-rolled steel with recurring defects; (c) MVTec AD captures complex object-level anomalies; and (d) Severstal offers pixel-level defect annotations on steel strips.

All experiments were implemented in PyTorch 1.13.1 and run on an NVIDIA RTX 4080 GPU (16 GB memory) with CUDA 12.1. Images were resized to pixels and lightly augmented with random cropping and horizontal flipping. Classical descriptors (LBP and HOG) were extracted with scikit-image: LBP with radius 1 and 8 neighbors, and HOG with a cell size of 8 × 8 pixels and a block size of 2 × 2 cells []. Both the plain and U-Net autoencoders were trained exclusively on defect-free samples using the Adam optimizer (learning rate=) for 30 epochs. PatchCore used a ResNet-18 backbone pretrained on ImageNet, with selective patch sampling to manage memory. Pixel-level segmentation maps were produced either by thresholding anomaly scores or, when annotations were available, by training a simple convolutional segmentation module.

The inference speed reported here (about 40–45 FPS) was measured on an NVIDIA RTX 4080 GPU. This platform offers far more computing power and memory than typical edge devices (e.g., embedded platforms such as the NVIDIA Jetson series). Deployment in real industrial scenarios will therefore require further optimizing the model for the specific edge platform, for example by reducing network depth and width, lowering feature dimensions, and applying model compression, so that it fits within the limited computing and storage resources of edge devices.

4.1. Implementation Details

Table 2 summarizes the hyperparameters used across all modules, with settings drawn from previous studies [,,]. All training was carried out on a single NVIDIA RTX 4080 GPU, and inference ran at approximately 40–45 FPS at resolution. To reach this real-time performance, we applied the following optimizations: (1) the U-Net autoencoder was streamlined from the conventional 5 layers to 3, with 32, 64, and 128 channels, respectively; (2) the feature memory bank in the PatchCore module was built with reservoir sampling, retaining only about 10% of the sample features, which greatly reduces the nearest-neighbor search cost during inference; and (3) LBP and HOG features were extracted with the efficient implementations in the scikit-image library, and the feature map was reduced to pixels to lower the computational load. These lightweight measures enable HyADS to reach the above inference speed on the RTX 4080 GPU.

Table 2.

Detailed hyperparameters for classical descriptors, autoencoders, PatchCore, and segmentation head modules. Typical values are shown, with default choices indicated in parentheses [,,].
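To give a rough sense of how much the channel reduction in optimization (1) saves, the encoder's learnable parameters can be tallied by hand. This back-of-the-envelope sketch assumes RGB input and a single 3 × 3 Conv-BN block (with convolution bias) per stage, and counts the encoder only; it compares the widths listed for the U-Net in Section 3.3 (64 to 512) with the streamlined widths (32, 64, 128). Real implementations often use two convolutions per stage and omit the bias before BatchNorm, so absolute numbers will differ.

```python
from typing import Sequence


def conv_bn_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Learnable parameters of one k x k convolution (with bias) followed by BatchNorm."""
    conv = c_in * c_out * k * k + c_out   # kernel weights + bias
    bn = 2 * c_out                        # BatchNorm scale (gamma) and shift (beta)
    return conv + bn


def encoder_params(channels: Sequence[int], in_ch: int = 3) -> int:
    """Sum over encoder stages, assuming one Conv-BN block per stage (pooling has no parameters)."""
    total, c_prev = 0, in_ch
    for c in channels:
        total += conv_bn_params(c_prev, c)
        c_prev = c
    return total


wide = encoder_params([64, 128, 256, 512])
slim = encoder_params([32, 64, 128])
print(wide, slim, round(wide / slim, 1))  # 1552896 93696 16.6
```

Because the weight count of a convolution scales with the product of its input and output widths, dropping the 512-channel stage and halving the remaining widths removes most of the parameters.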

The LBP and HOG parameter settings follow standard configurations widely used in industrial defect detection [] and were verified experimentally in this study. Specifically, LBP uses a radius of 1 with 8 neighboring pixels, a setting that captures texture well across a variety of surface defect detection tasks. HOG uses a cell size of 8 × 8 pixels and a block size of 2 × 2 cells; in our experiments, this configuration offered high computational efficiency and good sensitivity to defect textures, making it suitable for real-time applications.

To provide a fair comparison of inference efficiency, we evaluated several existing anomaly detection methods under the same hardware conditions. Table 3 summarizes the inference speed (FPS) and F1-score of HyADS and baseline methods, all tested on an NVIDIA RTX 4080 GPU with input resolution.

Table 3.

Runtime and detection accuracy comparison with competing methods on the same hardware setup.
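A fair FPS comparison depends on a consistent timing protocol. The harness below is a generic sketch, not the protocol used for Table 3 (which is not described): it runs a few warm-up passes, then times a fixed number of single-image inferences with `time.perf_counter`. For GPU models, the inference callable must block until the device has finished (in PyTorch, for example, by calling `torch.cuda.synchronize()` before returning); otherwise asynchronous kernel launches inflate the measured FPS. The name `measure_fps` is hypothetical.

```python
import time
from typing import Any, Callable


def measure_fps(infer: Callable[[Any], Any], sample: Any,
                warmup: int = 10, iters: int = 100) -> float:
    """Average throughput (frames per second) of `infer` on one repeated input."""
    for _ in range(warmup):            # exclude one-off costs (allocation, caching, autotuning)
        infer(sample)
    start = time.perf_counter()
    for _ in range(iters):
        infer(sample)
    elapsed = time.perf_counter() - start
    return iters / elapsed
```

Timing every method with the same harness, input resolution, and batch size of one is what makes the FPS column in such a comparison meaningful.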

4.2. Global Detection Performance

We first assessed image-level anomaly detection performance using the MVTec AD dataset (15 classes). As summarized in Table 4, our hybrid framework, integrating classical descriptors, autoencoder reconstructions, and patch-based anomaly detection, consistently achieved superior performance compared to single-component methods [,,]. Notably, the hybrid approach attained an average F1-score of 94.1%, significantly outperforming baseline methods by approximately 5–6%.

Table 4.

Image-level anomaly detection results on MVTec AD (averaged over 15 categories). Our proposed HyADS method outperforms the baseline methods.

To further analyze how HyADS handles defects of different types and sizes, we computed performance statistics for representative small-scale defects (e.g., scratches and stains) and large-scale defects (e.g., cracks and breakage) in the MVTec AD dataset. HyADS achieves an average F1-score above 95% on large-scale defects, while its F1-score on small-scale defects is slightly lower, at about 91%. HyADS thus performs well overall, but there is still room for improvement in localizing small defects.

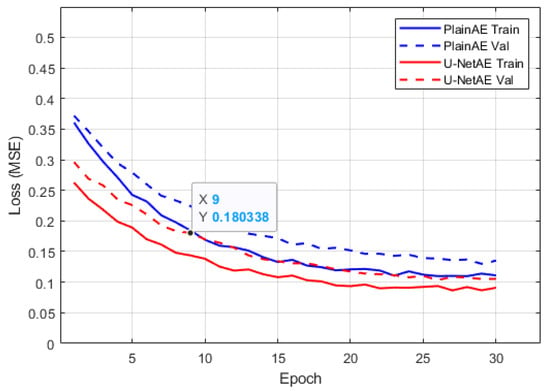

Figure 6 illustrates the training dynamics of plain and U-Net autoencoders. The U-Net architecture exhibited stable and smooth convergence, attributed to the preservation of spatial details via skip connections.

Figure 6.

Comparison of training loss curves (MSE) for plain AE and U-Net AE on selected MVTec AD classes over 30 epochs. U-Net demonstrates smoother convergence.

4.3. Pixel-Level Segmentation

Pixel-wise segmentation results on the NEU and Severstal datasets are summarized in Table 5. Our fusion approach, integrating global and local anomaly scores, notably improved segmentation accuracy, with IoU improvements of 5–7% compared to threshold-based methods.

Table 5.

Segmentation performance (IoU and Dice) on the NEU and Severstal datasets. Our hybrid approach significantly outperforms single-component methods.
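For reference, the two segmentation metrics in Table 5 can be computed per mask as below. This is the standard definition, sketched under the assumption that predictions are already binarized; whether the reported scores are averaged per image or pooled over all pixels of a dataset is not stated.

```python
from typing import Tuple

import numpy as np


def iou_dice(pred: np.ndarray, gt: np.ndarray) -> Tuple[float, float]:
    """IoU = intersection / union and Dice = 2 * intersection / (|pred| + |gt|) for binary masks."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    inter = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    total = pred.sum() + gt.sum()
    iou = 1.0 if union == 0 else inter / union      # two empty masks count as a perfect match
    dice = 1.0 if total == 0 else 2 * inter / total
    return float(iou), float(dice)
```

For a single mask the two are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank predictions identically; once averaged over a dataset, the two summaries can diverge slightly.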

4.4. Ablation Experiment

To clarify the contribution of each module to the overall performance of HyADS, we conducted ablation experiments with six configurations: (1) LBP and HOG texture features only; (2) U-Net reconstruction error only; (3) the PatchCore module only; (4) LBP/HOG combined with U-Net; (5) U-Net combined with PatchCore; and (6) the complete HyADS (LBP/HOG + U-Net + PatchCore). With LBP/HOG features alone, small defects are poorly localized and only obvious texture changes are detected; with U-Net alone, global defects are readily found but fine-grained defects are often missed; with PatchCore alone, small defects are detected well but false positives increase. Pairwise fusion improves performance significantly, and the complete HyADS performs best on all test sets, improving the overall detection accuracy (F1-score) by more than 10% on average compared with the single-module methods.

4.5. Method Comparison Experimental Results

To improve the representativeness of the comparison, we included two recent SOTA methods published in 2024–2025. As shown in Table 6, the proposed HyADS framework demonstrates better inference speed and a competitive F1-score compared to FastAD and EdgeCore under the same testing conditions.

Table 6.

Performance comparison including recent state-of-the-art (2024–2025) anomaly detection algorithms.

5. Discussion

By integrating classical texture descriptors, global reconstruction methods, local anomaly retrieval, and pixel-level segmentation, the proposed HyADS framework provides a robust and versatile solution tailored for anomaly detection in complex industrial settings. Empirical results clearly demonstrate that the fusion of global reconstruction signals (from the U-Net autoencoder) and local anomaly indicators (from the PatchCore distance maps) significantly reduces false positives and enhances detection stability. Indeed, subtle anomalies that typically escape detection by relying solely on either global or local methods can be accurately localized through this integrated approach.

Despite these demonstrated strengths, several practical challenges remain. First, industrial data often exhibit significant variability due to dynamic operational conditions such as fluctuating illumination, changing surface textures, and varying production speeds. While classical descriptors such as LBP and HOG inherently provide resilience against these fluctuations, sustained optimal performance still requires periodic parameter tuning and systematic domain adaptation techniques. Future research may investigate automated adaptation strategies, including incremental parameter updates and domain-invariant feature learning, to maintain detection robustness without extensive manual interventions.

Second, the scarcity and high cost of pixel-level annotations remain a critical bottleneck for fully supervised segmentation models. Recent advances in synthetic anomaly generation [] and weakly supervised segmentation have partially mitigated the annotation challenge. However, accurate, fully unsupervised pixel-level anomaly segmentation under practical industrial constraints remains difficult. State-of-the-art generative approaches, such as diffusion models and generative adversarial networks (GANs), offer promising directions for synthesizing realistic defect patterns, potentially reducing reliance on manual annotations.

The HyADS framework proposed in this study is particularly suited to real-time defect detection in precision manufacturing, for example, identifying tiny surface defects on electronic components, detecting cracks and corroded areas on steel surfaces in real time, and monitoring quality precisely in automotive parts manufacturing. These scenarios impose strict requirements on both detection speed and accuracy, which are difficult to satisfy simultaneously with conventional deep learning methods that depend on large labeled datasets and substantial computing resources. By combining classical features with lightweight deep models, HyADS has shown significant advantages in industrial settings with limited computing resources and scarce annotations.

Third, the detection of subtle local anomalies demands memory-efficient patch retrieval strategies within PatchCore. While our approach demonstrates that the careful sampling of normal patch embeddings significantly enhances accuracy, large-scale manufacturing scenarios featuring diverse defect characteristics may require dynamic and adaptive updating of the patch memory bank. Future work involving incremental learning frameworks and memory-efficient algorithms [] could enable seamless adaptation to evolving defect distributions, enhancing long-term stability and scalability of the anomaly detection system.
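In PatchCore, the sampling of normal patch embeddings is based on greedy coreset subsampling, and anomaly scores come from nearest-neighbour distances to the retained memory bank. The sketch below is a simplified NumPy version under stated assumptions: random Gaussian vectors stand in for real backbone embeddings, the 10% ratio is hypothetical, and PatchCore's random projection before selection and its score reweighting are omitted. It shows the mechanism only and does not reproduce the HyADS configuration.

```python
import numpy as np


def greedy_coreset(features: np.ndarray, ratio: float, seed: int = 0) -> np.ndarray:
    """Greedy k-center coreset: repeatedly keep the embedding farthest from those already kept.

    features: [N, D] array of normal patch embeddings. Returns indices of the kept rows.
    """
    n = features.shape[0]
    k = max(1, min(n, int(round(n * ratio))))
    rng = np.random.default_rng(seed)
    selected = [int(rng.integers(n))]
    # Distance from every embedding to its nearest kept embedding so far.
    min_dist = np.linalg.norm(features - features[selected[0]], axis=1)
    for _ in range(k - 1):
        idx = int(np.argmax(min_dist))  # the worst-covered embedding
        selected.append(idx)
        min_dist = np.minimum(min_dist, np.linalg.norm(features - features[idx], axis=1))
    return np.array(selected)


def patch_scores(queries: np.ndarray, memory_bank: np.ndarray) -> np.ndarray:
    """Anomaly score of each query patch: Euclidean distance to its nearest memory-bank entry."""
    d2 = ((queries[:, None, :] - memory_bank[None, :, :]) ** 2).sum(axis=-1)
    return np.sqrt(d2.min(axis=1))


rng = np.random.default_rng(0)
normal = rng.normal(size=(1000, 16))               # stand-in for normal patch embeddings
bank = normal[greedy_coreset(normal, ratio=0.1)]   # keep 10% of the embeddings
queries = np.vstack([normal[:5], normal[:5] + 8.0])  # last five are shifted "defect" patches
scores = patch_scores(queries, bank)
print(bank.shape)  # (100, 16)
```

Because exact nearest-neighbour search costs one distance evaluation per query-bank pair, shrinking the bank with a coreset reduces both storage and inference time; an incremental variant could append newly observed normal embeddings and re-run the selection.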

Finally, incorporating advanced generative modeling methods such as diffusion-based reconstruction techniques [] can potentially provide richer representations of defect-free textures, further refining the accuracy and granularity of anomaly localization. Coupled with domain-adaptive and continual learning strategies, these generative approaches can effectively manage evolving industrial processes, minimizing performance degradation in the face of shifting production conditions.

In addition, the inference speeds reported for HyADS were measured on an NVIDIA RTX 4080 GPU (NVIDIA Corporation, Santa Clara, CA, USA). However, real industrial deployments usually rely on edge hardware with more limited computing power, such as the NVIDIA Jetson Nano or Jetson Xavier NX (NVIDIA Corporation, Santa Clara, CA, USA), whose GPU throughput and memory are significantly lower than those of the RTX 4080. We therefore expect the inference speed on these devices to drop substantially (to an estimated 20–40% of the reported results), which must be confirmed through actual deployment tests. In future work, we plan to optimize the model specifically for these edge devices (e.g., TensorRT inference acceleration, network pruning, and weight quantization) to meet the inference performance requirements of real industrial applications.
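As a rough worked estimate, applying the expected 20–40% ratio $r$ to the 40–45 FPS measured on the RTX 4080 gives the following range. This assumes that throughput scales by a single factor; actual edge figures depend on the device, input resolution, and optimizations such as TensorRT.

```latex
\mathrm{FPS}_{\text{edge}} \approx r \cdot \mathrm{FPS}_{\text{RTX 4080}},
\qquad r \in [0.2,\ 0.4],\quad \mathrm{FPS}_{\text{RTX 4080}} \in [40,\ 45]
\;\Rightarrow\; \mathrm{FPS}_{\text{edge}} \in [0.2 \times 40,\ 0.4 \times 45] = [8,\ 18],
\qquad t_{\text{frame}} = \frac{1000}{\mathrm{FPS}_{\text{edge}}} \in [55.6,\ 125]\ \text{ms}.
```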

Although the HyADS framework has demonstrated good performance at the scale of the experimental datasets, it may face limitations when handling the larger data volumes of real industrial production environments. The storage overhead of the feature memory bank grows significantly with the amount of normal training data, and the computational cost of real-time inference grows accordingly, because each query patch must be compared against more stored entries. Therefore, for large-scale industrial scenarios, we believe it will be necessary to adopt measures such as model pruning, knowledge distillation, feature dimensionality reduction, and distributed deployment to improve the model’s scalability and preserve computational efficiency and real-time performance under larger-scale data conditions.
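The growth of the memory bank can be quantified with simple arithmetic: storage is roughly the number of stored patches times the embedding dimension times the bytes per value. The sketch below uses hypothetical settings (200 normal training images, a 28 × 28 patch grid, 1536-dimensional float32 embeddings, a 10% coreset, and a projection to 256 dimensions) chosen only for illustration; they are not the HyADS configuration, and `memory_bank_bytes` is a hypothetical helper.

```python
def memory_bank_bytes(num_images: int, patches_per_image: int, dim: int,
                      coreset_ratio: float = 1.0, bytes_per_value: int = 4) -> int:
    """Approximate memory-bank storage: stored patches x embedding dimension x bytes per value."""
    n_patches = int(round(num_images * patches_per_image * coreset_ratio))
    return n_patches * dim * bytes_per_value


MIB = 2 ** 20
# Hypothetical setting: 200 normal training images, a 28 x 28 patch grid,
# 1536-dimensional float32 embeddings.
full = memory_bank_bytes(200, 28 * 28, 1536)
coreset = memory_bank_bytes(200, 28 * 28, 1536, coreset_ratio=0.1)
reduced = memory_bank_bytes(200, 28 * 28, 256, coreset_ratio=0.1)  # after projecting to 256 dims
print(full / MIB, coreset / MIB, reduced / MIB)  # 918.75 91.875 15.3125
```

The estimate scales linearly with the number of training images, so a tenfold increase in normal data multiplies the uncompressed bank tenfold; combining coreset subsampling with dimensionality reduction shrinks it by roughly 60× in this example.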

Overall, the modular design of the HyADS framework—integrating classical handcrafted descriptors, U-Net-based global reconstruction, PatchCore-based local anomaly detection, and adaptive pixel-level segmentation—positions it advantageously for continued evolution in response to emerging industrial inspection needs and technological advances.

6. Conclusions and Future Work

In this paper, we presented a novel hybrid anomaly detection approach that integrates classical texture descriptors, a U-Net autoencoder for global reconstruction, PatchCore-based local anomaly retrieval, and a final segmentation step. Our extensive experiments on diverse industrial datasets verified that combining local and global cues significantly enhances both detection accuracy and segmentation quality. The synergy between reconstruction error maps and patchwise distance measures effectively reduces false positives, while the addition of classical features improves robustness under limited labeled data and varying environmental conditions.

Despite these accomplishments, several avenues remain open for future exploration. First, continual and adaptive learning techniques are essential for industrial settings where material properties, product designs, or defect characteristics may evolve over time. Investigating strategies such as incremental model updates [] or memory-efficient domain adaptation could extend the framework’s longevity without demanding extensive retraining. Second, generating high-fidelity synthetic anomalies through generative networks [] or diffusion-based methods [] may further reduce reliance on manual annotations, accelerating the deployment of the system in label-scarce environments. Lastly, integrating more advanced embedding mechanisms, such as parametric or hierarchical representations, into the PatchCore module could unify local and global context in a single embedding space, yielding even finer-grained detection results.

Although HyADS shows clear advantages, the method still has some limitations. It performs slightly worse on extremely subtle defects; its real-time performance has so far been achieved only on a high-performance GPU, so performance on actual edge devices may be lower; and memory usage and computational cost increase significantly as the feature memory bank grows. These limitations require further optimization in future work.

By addressing these challenges, our proposed hybrid pipeline can evolve into a scalable, versatile platform suited to the broad array of inspection tasks arising in modern, fast-changing manufacturing lines. Subsequent research will focus on developing and validating the incremental learning capabilities of HyADS in actual production line scenarios, enabling the system to adapt automatically when new defects arise without frequent human intervention. At the same time, we will incorporate domain adaptation techniques to improve the model’s generalization across different factories and processes. Specifically, we will select typical scenarios, such as electronic component surfaces and steel production, for field testing and performance evaluation to confirm the method’s value for industrial deployment. We anticipate that ongoing developments in domain adaptation, weakly supervised segmentation, and memory-based retrieval will bolster the framework’s adaptability and reliability, ultimately paving the way for more intelligent and resilient industrial anomaly detection solutions.

Author Contributions

Conceptualization, Y.Y.; Methodology, X.M.; Formal analysis, D.S.; Writing—original draft, F.C.K.; Writing—review & editing, C.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bergmann, P.; Löwe, S.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD—A comprehensive real-world dataset for unsupervised anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9592–9600.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Akçay, S.; Atapour-Abarghouei, A.; Breckon, T.P. GANomaly: Semi-supervised anomaly detection via adversarial training. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; pp. 622–637.

- Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14318–14328.

- Sun, Q.; Cai, J.; Sun, Z. Detection of surface defects on steel strips based on singular value decomposition of digital image. Math. Probl. Eng. 2016, 2016, 5797654.

- Severstal Steel Defect Detection Dataset. Kaggle, 2019. Available online: https://www.kaggle.com/c/severstal-steel-defect-detection (accessed on 20 March 2025).

- Venkataramanan, S.; Peng, K.C.; Singh, R.V.; Mahalanobis, A. Attention guided anomaly localization in images. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 247–263.

- Zavrtanik, V.; Kristan, M.; Skočaj, D. DRAEM: A discriminatively trained reconstruction embedding for surface anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 8330–8339.

- Defard, T.; Setkov, A.; Loesch, A.; Audigier, R. PaDiM: A patch distribution modeling framework for anomaly detection and localization. In Proceedings of the International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; pp. 475–489.

- Luo, Q.; Sun, Y.; Li, P.; Simpson, O.; Tian, L.; He, Y. Generalized completed local binary patterns for time-efficient steel surface defect classification. IEEE Trans. Instrum. Meas. 2019, 68, 667–679.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, Proceedings of the MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Deng, S.; Sun, Z.; Zhuang, R.; Gong, J. Noise-to-Norm reconstruction for industrial anomaly detection and localization. Appl. Sci. 2023, 13, 12436.

- Faber, K.; Corizzo, R.; Sniezynski, B.; Japkowicz, N. Continual learning for industrial anomaly detection. IEEE Trans. Ind. Inform. 2023, 19, 7532–7542.

- He, H.; Zhang, J.; Chen, H.; Chen, X.; Li, Z.; Chen, X.; Wang, Y.; Wang, C.; Xie, L. DiAD: A Diffusion-based Framework for Multi-class Anomaly Detection. IEEE Trans. Autom. Sci. Eng. 2023, 20, 1505–1516.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).