EnvMat: A Network for Simultaneous Generation of PBR Maps and Environment Maps from a Single Image

Abstract

1. Introduction

2. Related Work

2.1. Material Capture and Estimation

2.2. Deep Learning-Based Material Estimation

2.3. Generative Models for Material Synthesis

2.4. Differences from Existing Work

3. Methodology

3.1. Datasets

3.1.1. PBR Maps

3.1.2. Environment Maps (Env Maps)

3.1.3. Rendered Images

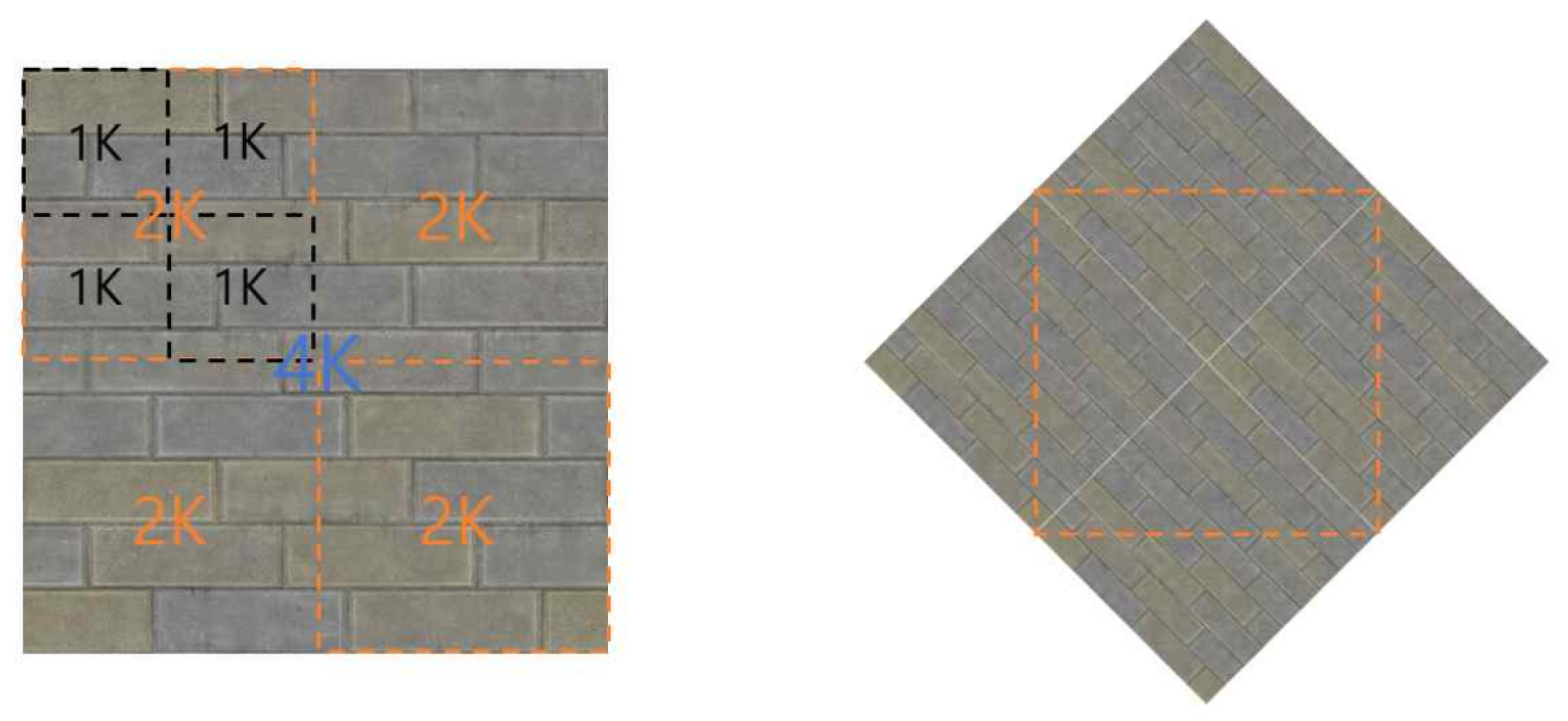

3.2. Model Training

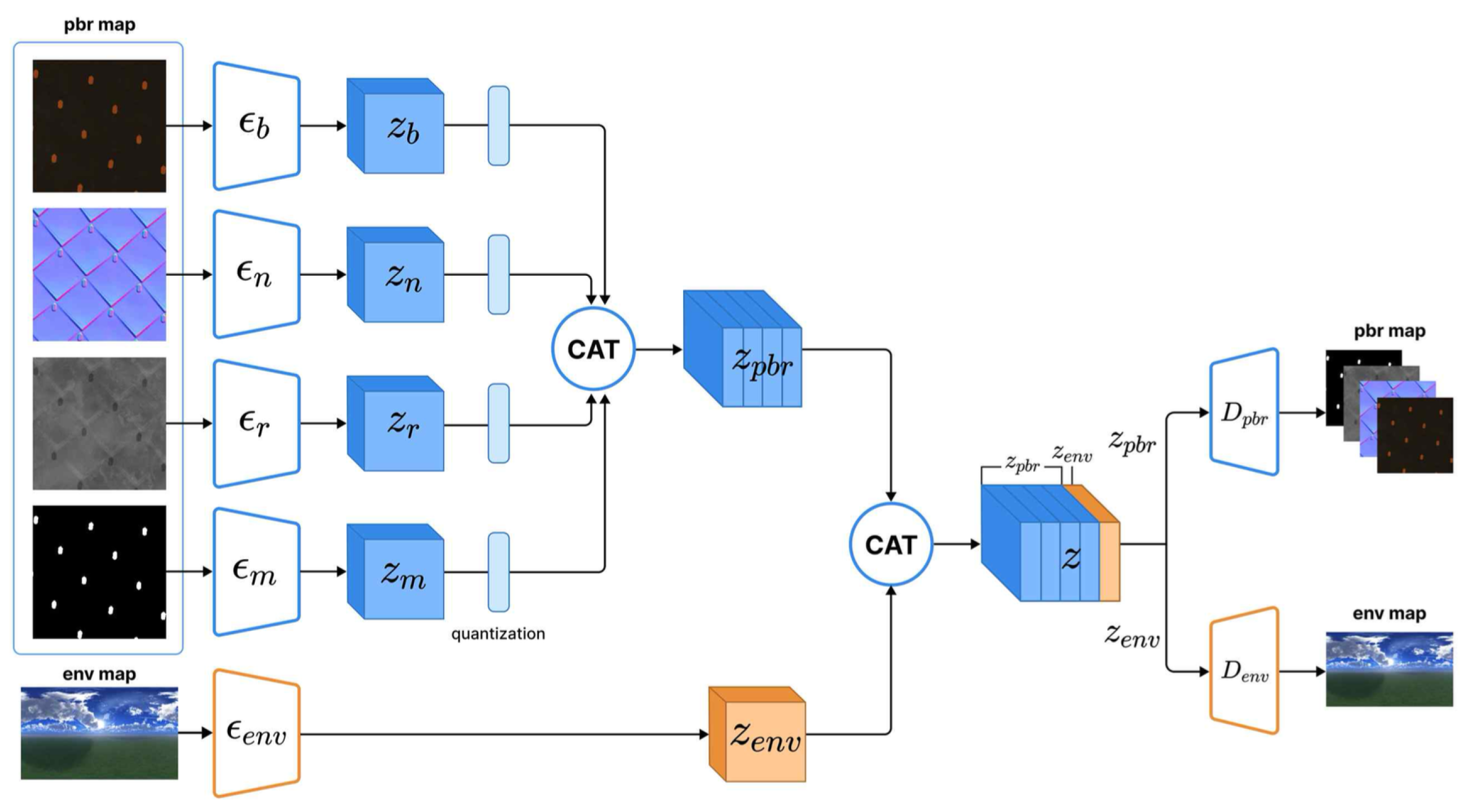

3.2.1. PBR VAE

3.2.2. ENV VAE

3.2.3. Latent Diffusion Model (LDM)

4. Experiment

4.1. Evaluation Method

- L-PIPS measures perceptual similarity by computing differences between deep feature maps obtained from pretrained vision models such as VGG-16. This metric effectively captures high-level visual similarities including textures, styles, and structures. An L-PIPS score closer to zero indicates higher similarity between the two images.

- MS-SSIM is an extended version of the Structural Similarity Index Measure (SSIM), evaluating structural similarities at multiple scales. While SSIM comprehensively evaluates luminance, contrast, and structural similarity, MS-SSIM enhances this evaluation across various resolutions, providing a more robust measure of structural similarity. An MS-SSIM score closer to one indicates higher similarity.

- CIEDE2000 measures color difference based on the Lab color coordinates (L: lightness, a: green-to-red axis, b: blue-to-yellow axis). This metric accounts for human visual sensitivity to lightness, chroma, and hue. A higher CIEDE2000 value indicates a greater color difference. Generally, differences below three are barely noticeable or indistinguishable to the human eye, differences between three and six are clearly distinguishable, and differences above six are considered significantly noticeable.
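
The CIEDE2000 description above can be made concrete with a short sketch. It assumes both colors are already given in CIELAB coordinates and that the parametric weights `k_l`, `k_c`, and `k_h` are all 1. The function names `ciede2000` and `perceptibility_band` are hypothetical and are not taken from the authors' code, and the handling of the exact boundary values 3 and 6 is an assumption, since the text does not specify it.

```python
import math


def ciede2000(lab1, lab2, k_l=1.0, k_c=1.0, k_h=1.0):
    """CIEDE2000 color difference between two CIELAB triples (L, a, b)."""
    l1, a1, b1 = lab1
    l2, a2, b2 = lab2

    # Rescale the a axis to correct chroma behaviour near neutral colors.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar**7 / (c_bar**7 + 25.0**7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0

    # Differences in lightness, chroma, and hue.
    d_l = l2 - l1
    d_c = c2p - c1p
    if c1p * c2p == 0.0:
        d_h_deg = 0.0
    else:
        d_h_deg = h2p - h1p
        if d_h_deg > 180.0:
            d_h_deg -= 360.0
        elif d_h_deg < -180.0:
            d_h_deg += 360.0
    d_h = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_h_deg / 2.0))

    # Means used by the weighting functions.
    l_bar = (l1 + l2) / 2.0
    c_bar_p = (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0

    t = (1.0
         - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    s_l = 1.0 + 0.015 * (l_bar - 50.0) ** 2 / math.sqrt(20.0 + (l_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * c_bar_p
    s_h = 1.0 + 0.015 * c_bar_p * t

    # Rotation term that couples chroma and hue in the blue region.
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(c_bar_p**7 / (c_bar_p**7 + 25.0**7))
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c

    term_l = d_l / (k_l * s_l)
    term_c = d_c / (k_c * s_c)
    term_h = d_h / (k_h * s_h)
    squared = term_l**2 + term_c**2 + term_h**2 + r_t * term_c * term_h
    return math.sqrt(max(0.0, squared))  # guard against tiny negative rounding


def perceptibility_band(delta_e):
    """Map a CIEDE2000 value to the three bands described in the text."""
    if delta_e < 3.0:
        return "barely noticeable"
    if delta_e <= 6.0:  # assumption: both boundary values fall in the middle band
        return "clearly distinguishable"
    return "significantly noticeable"
```

For an image pair, one plausible use is to apply `ciede2000` per pixel after converting both images to CIELAB and then average the result; whether the paper aggregates in exactly this way is not stated in this section.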

4.2. Training Results

4.2.1. Baseline Model Reproduction

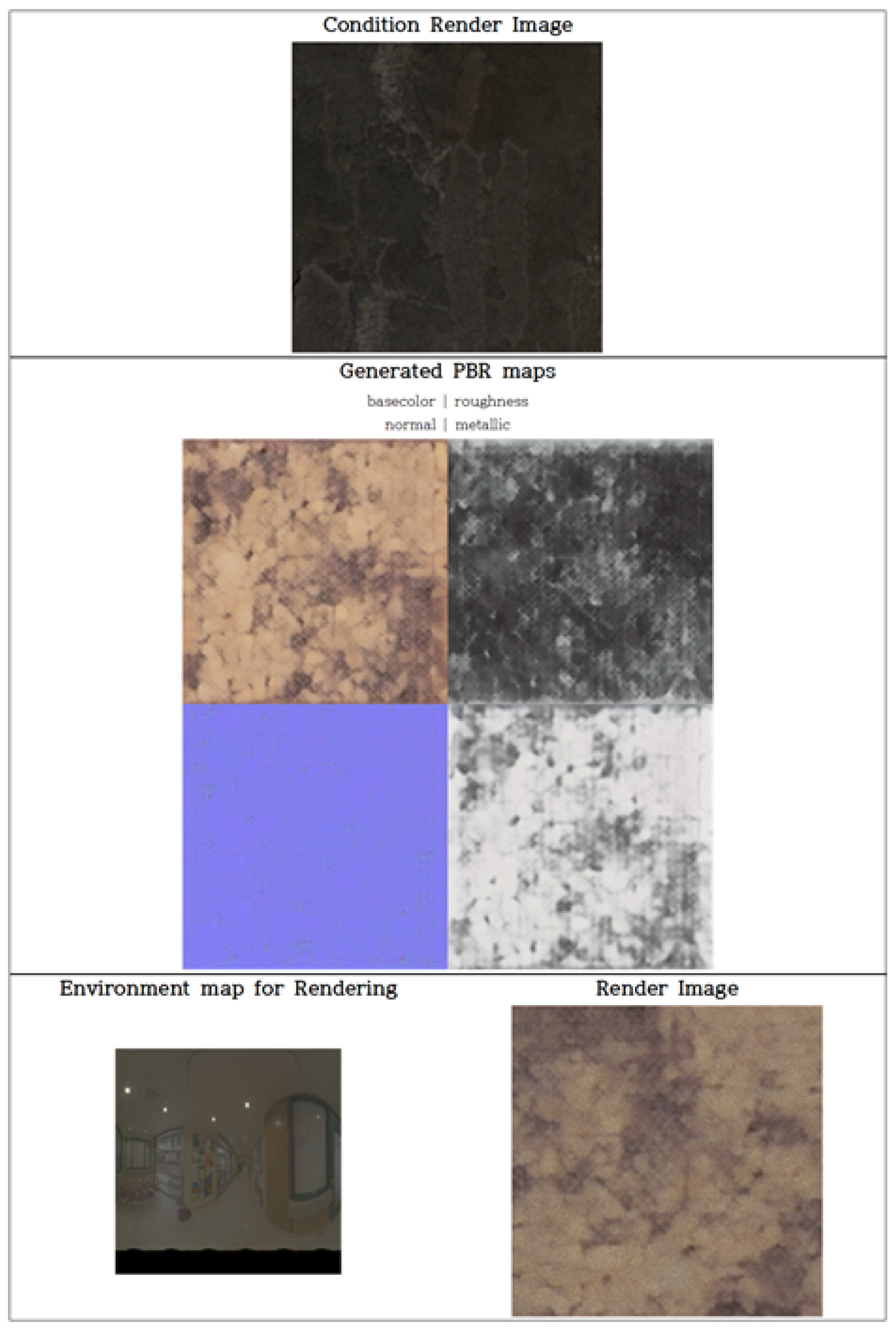

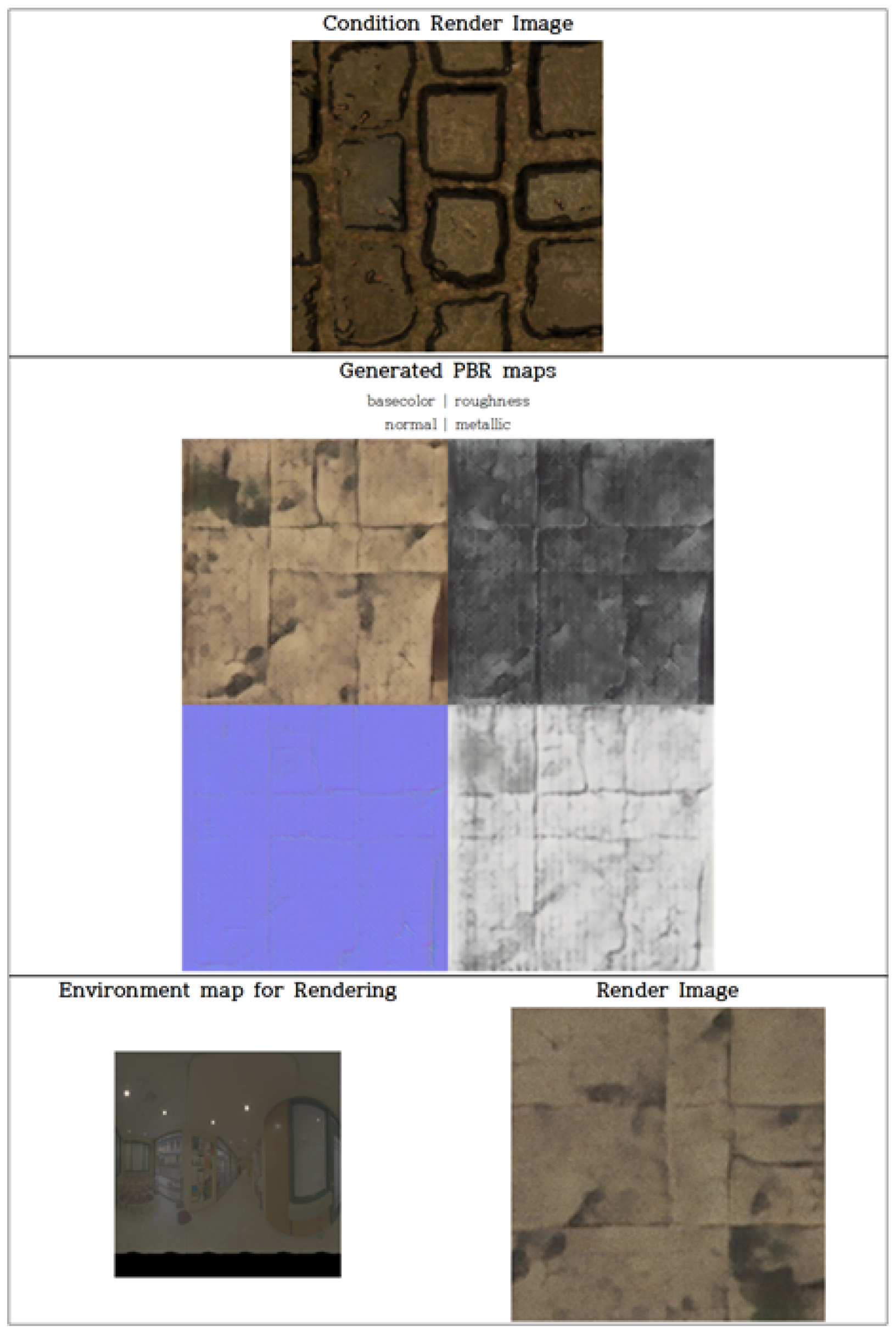

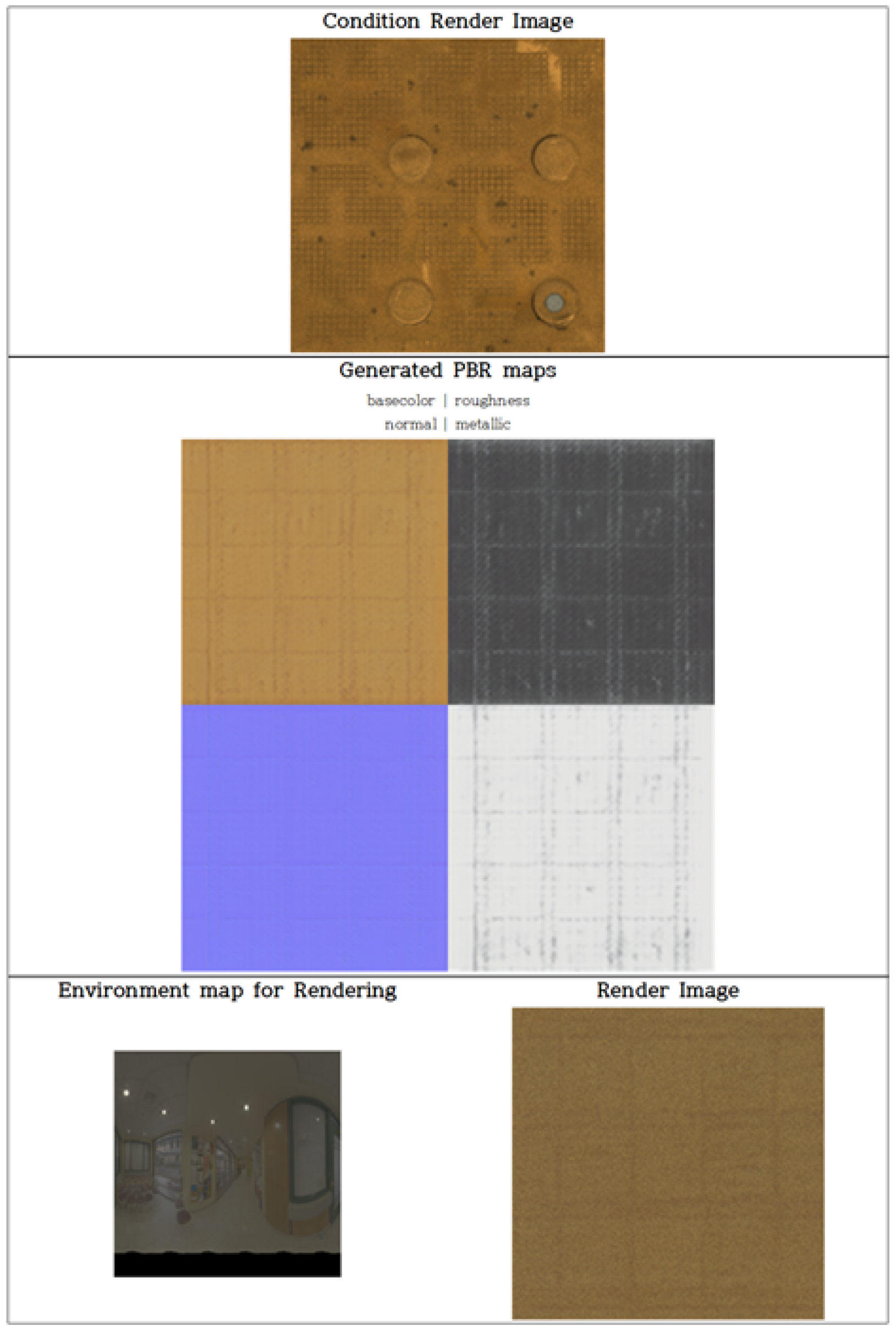

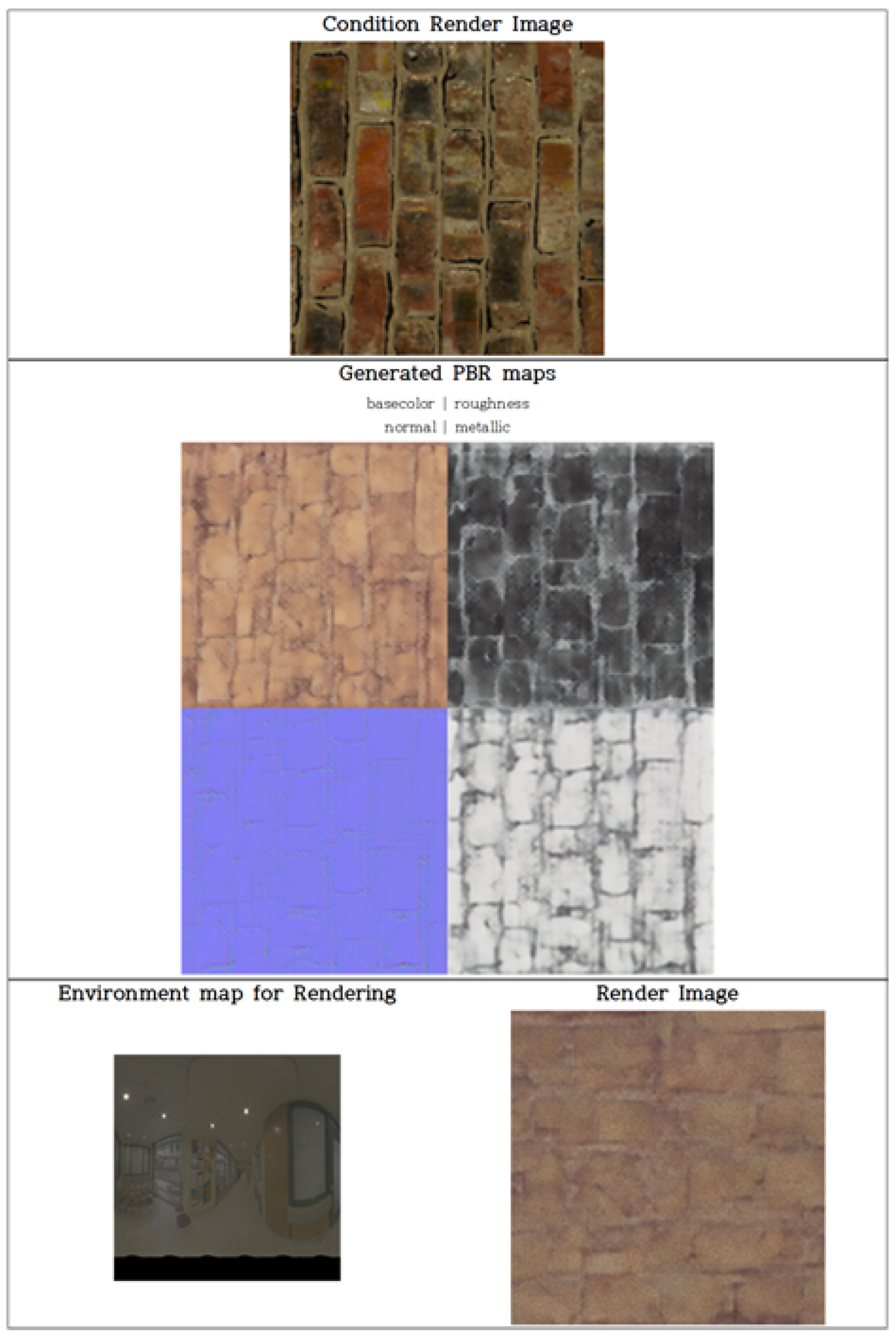

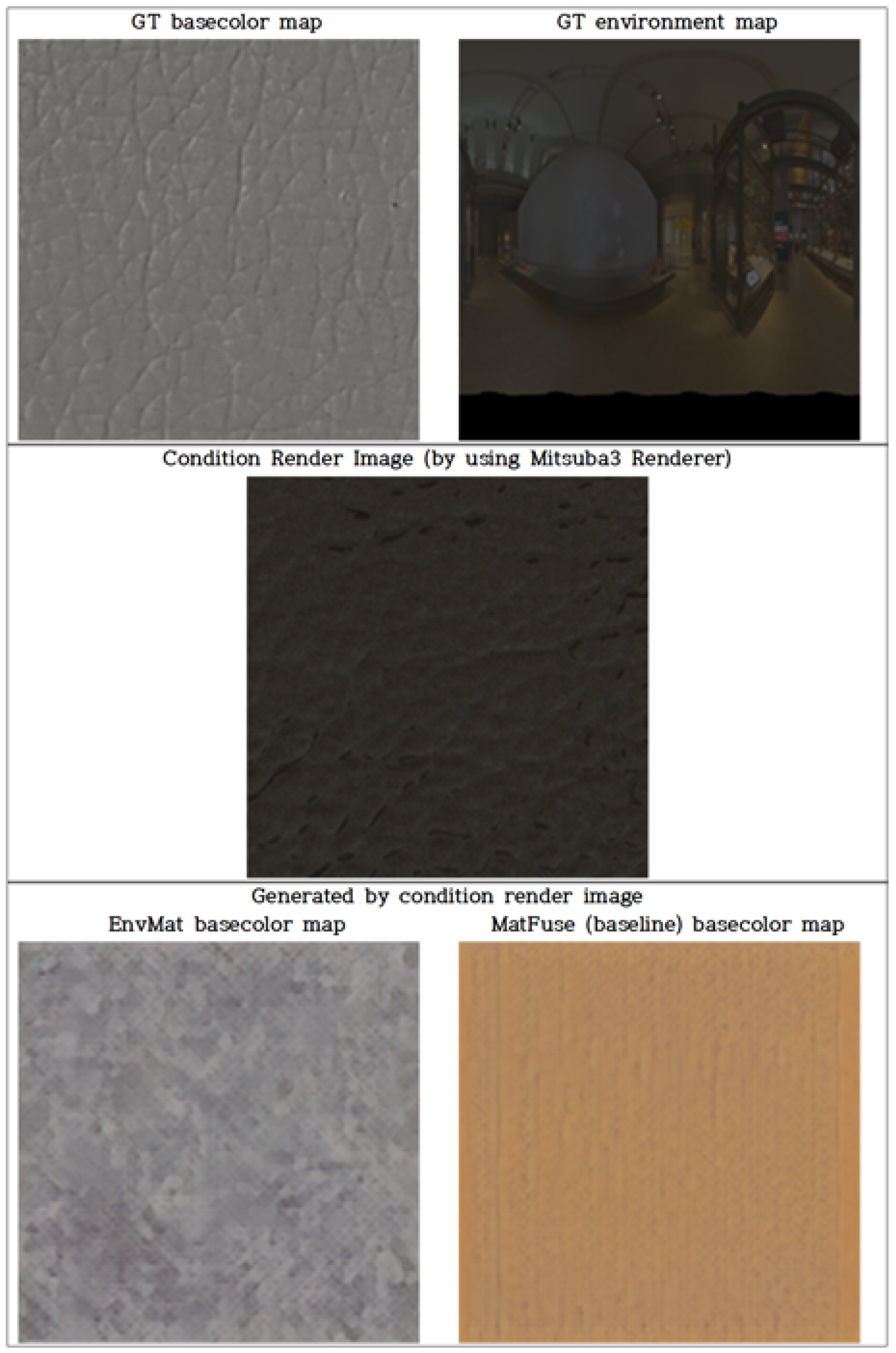

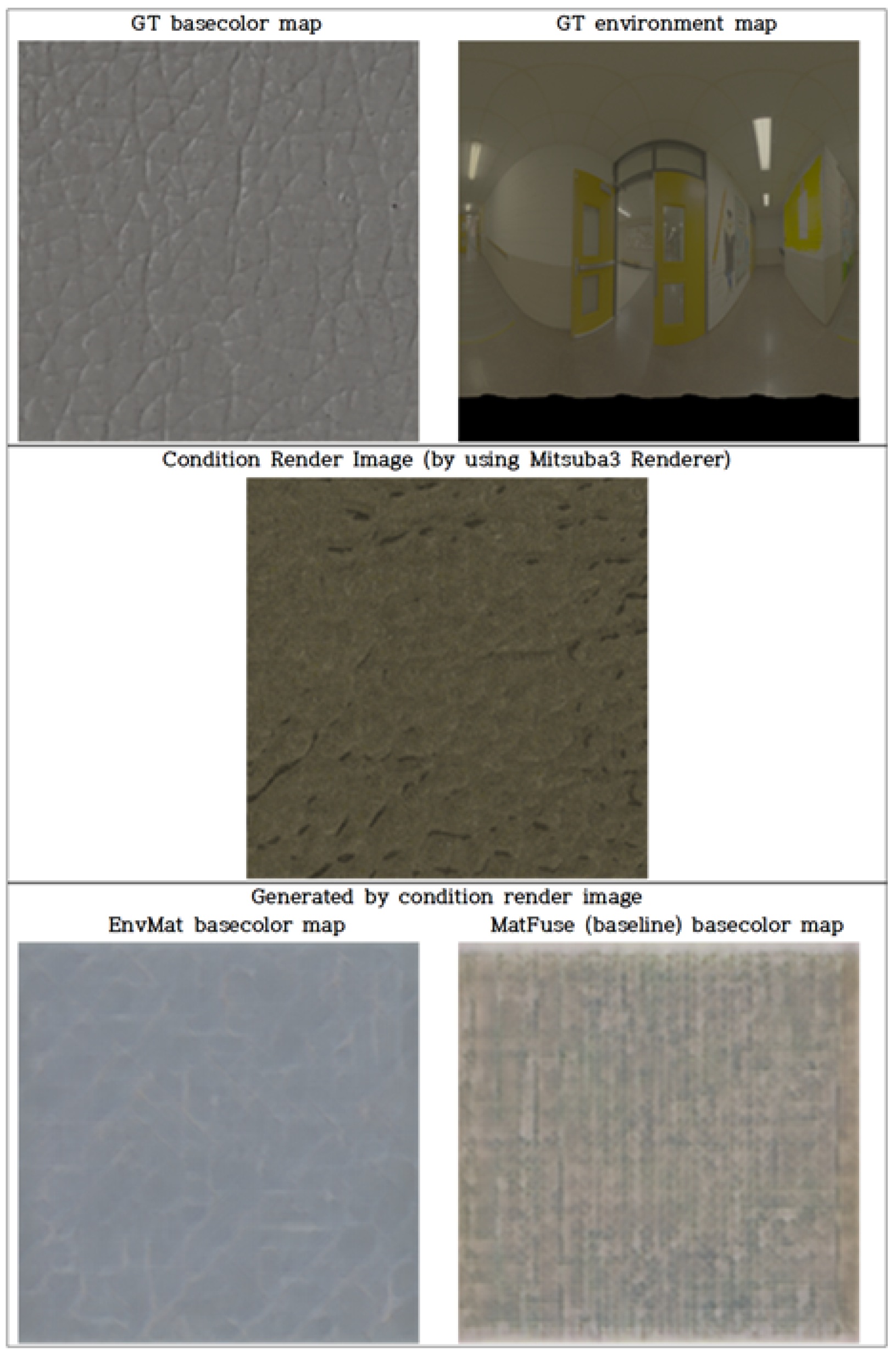

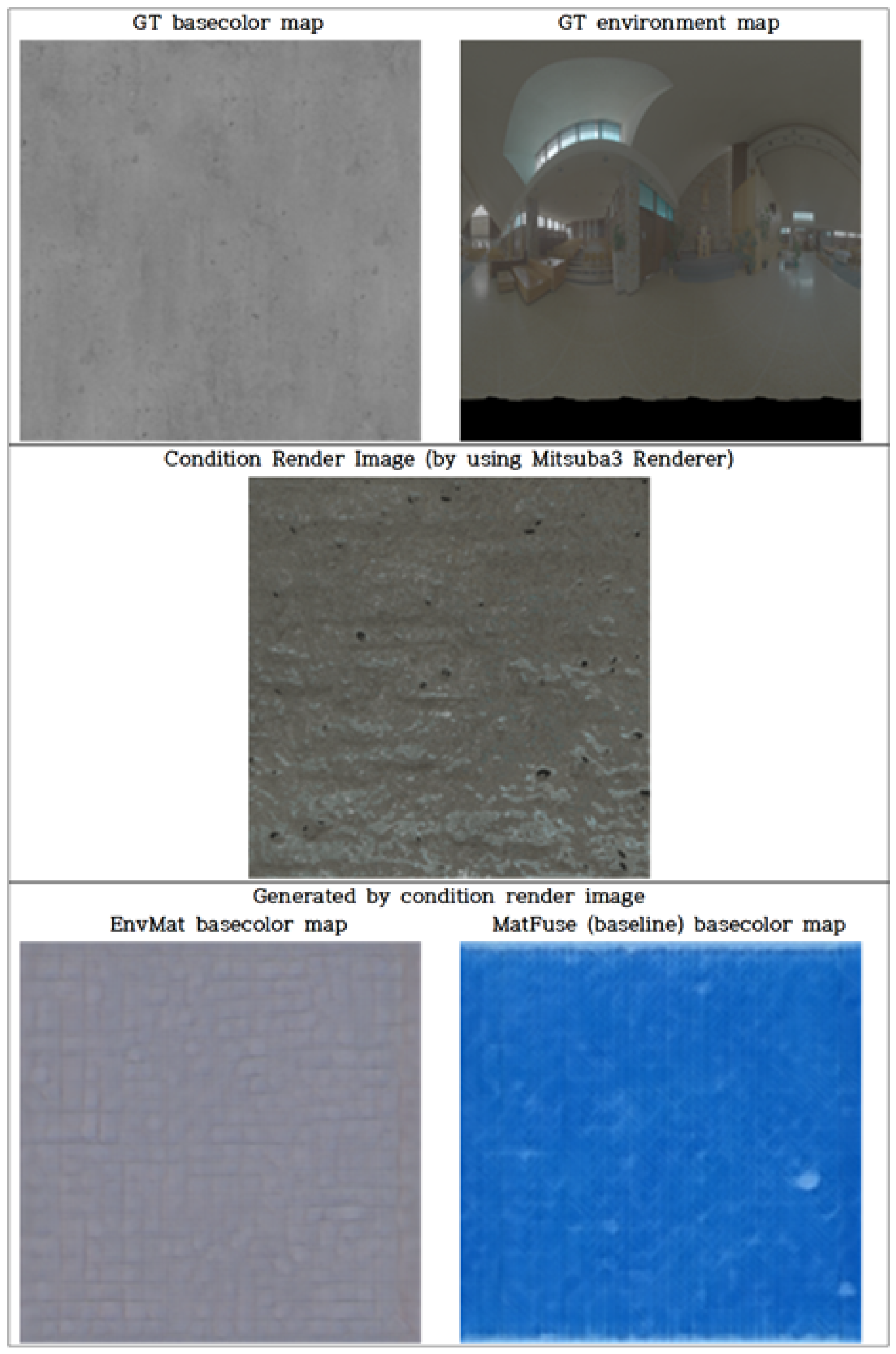

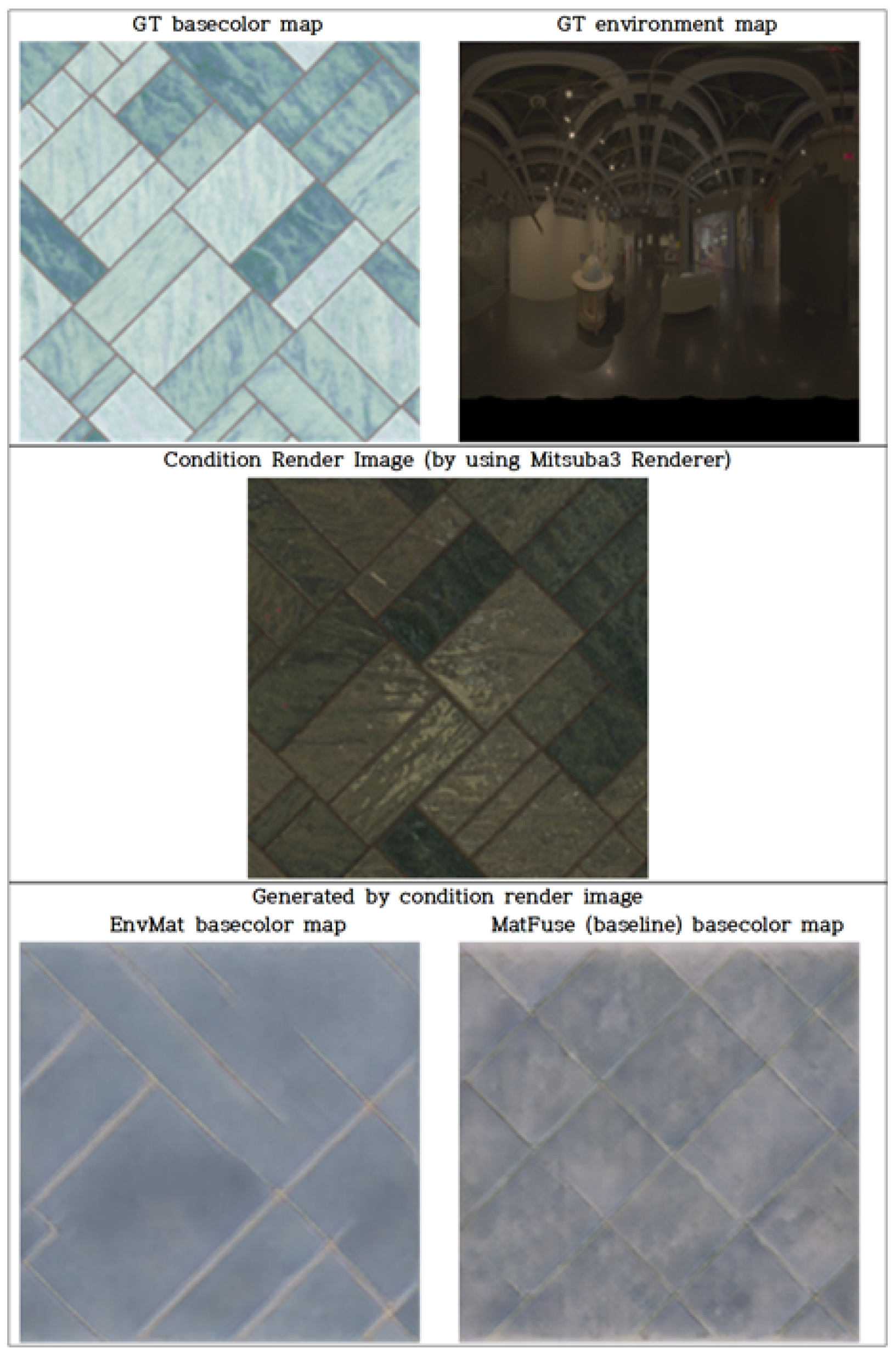

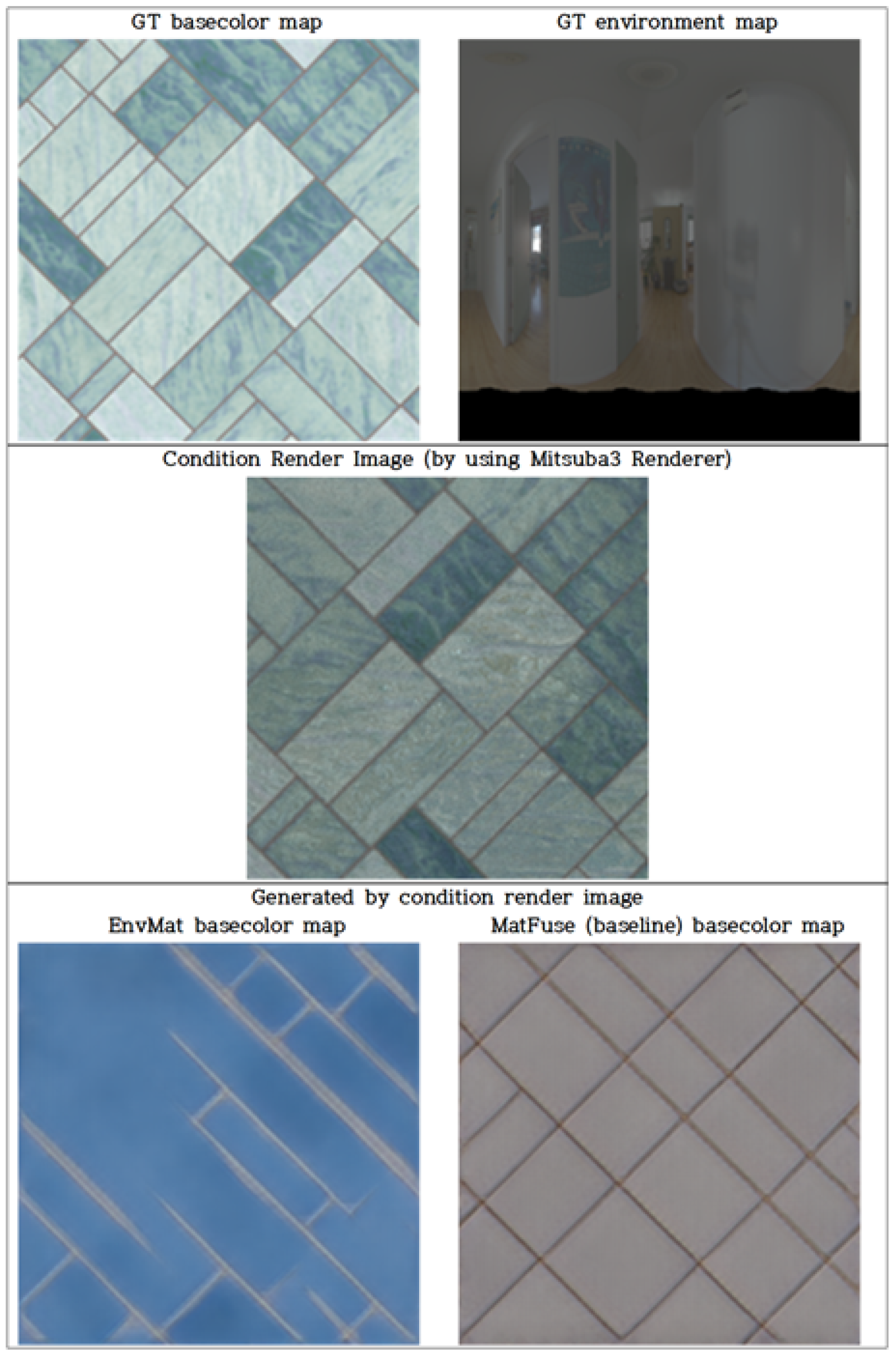

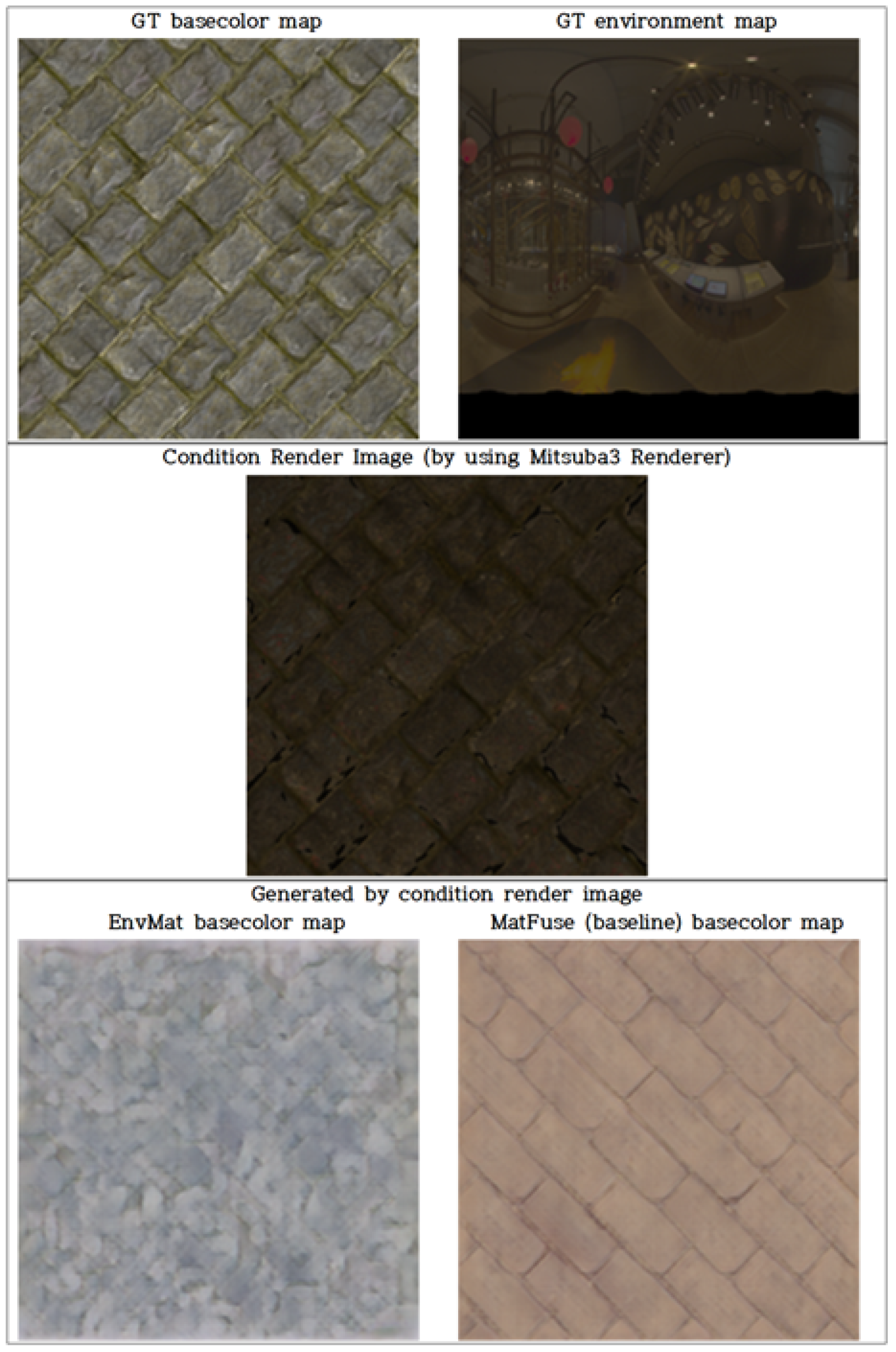

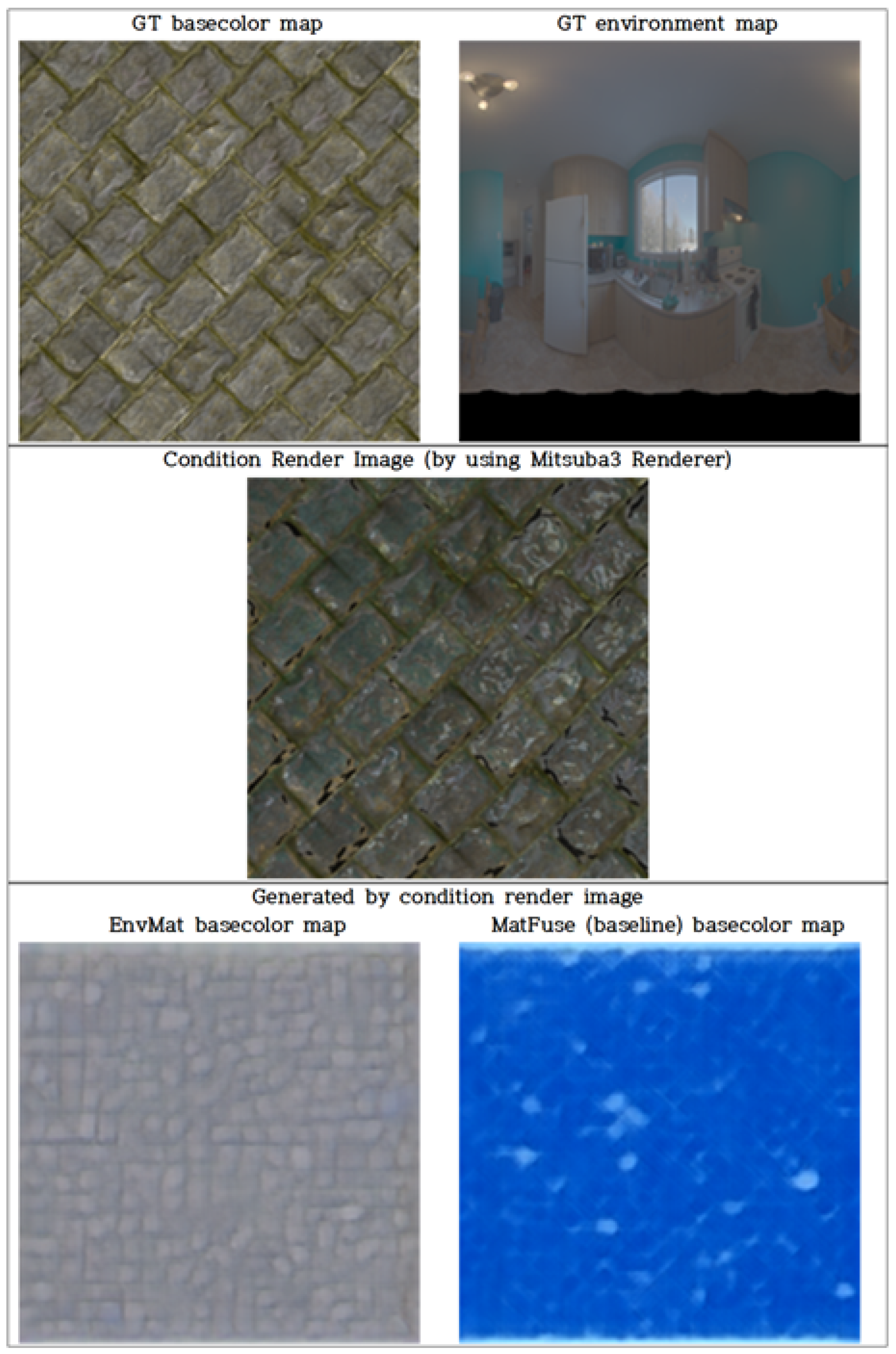

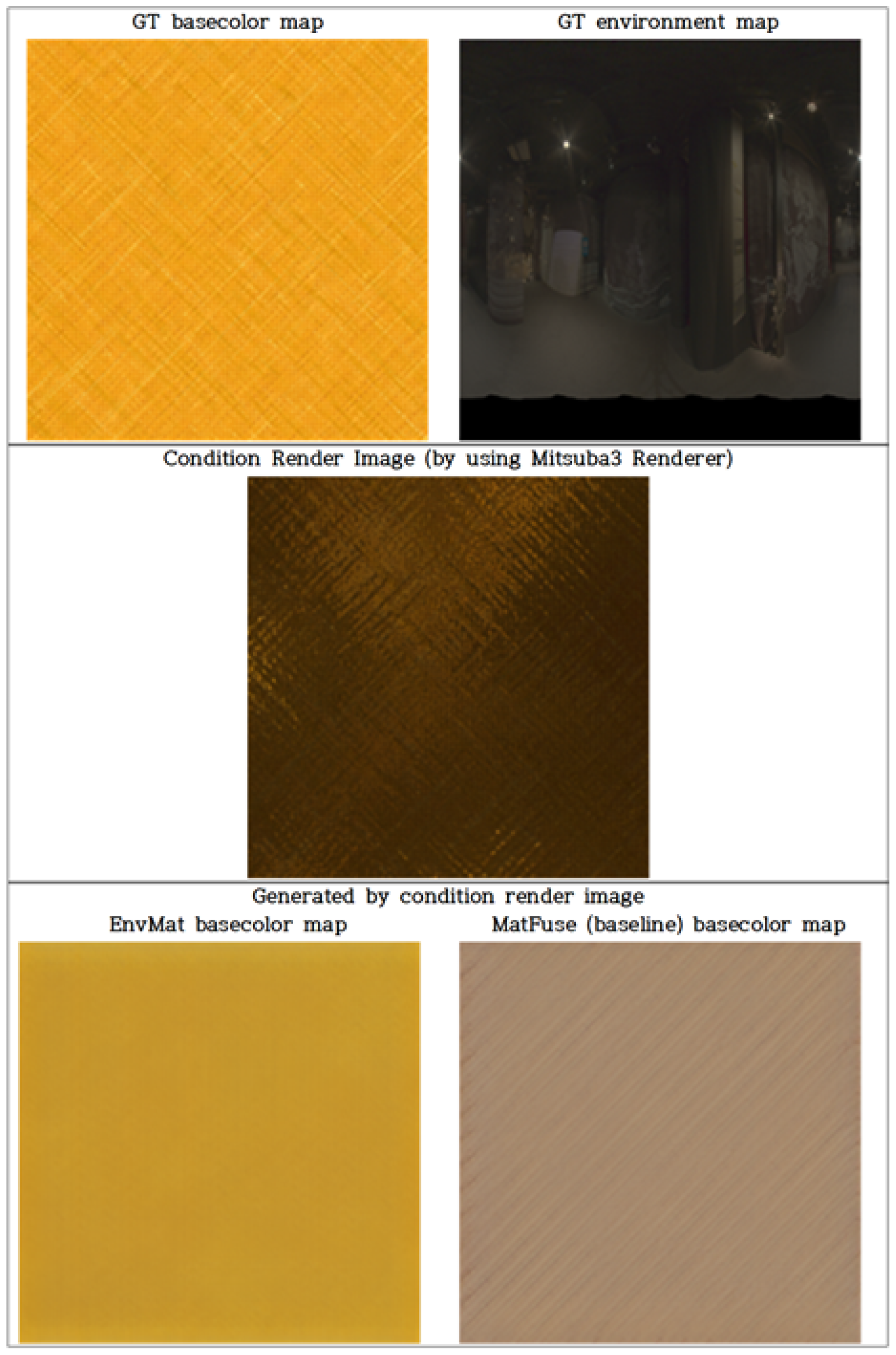

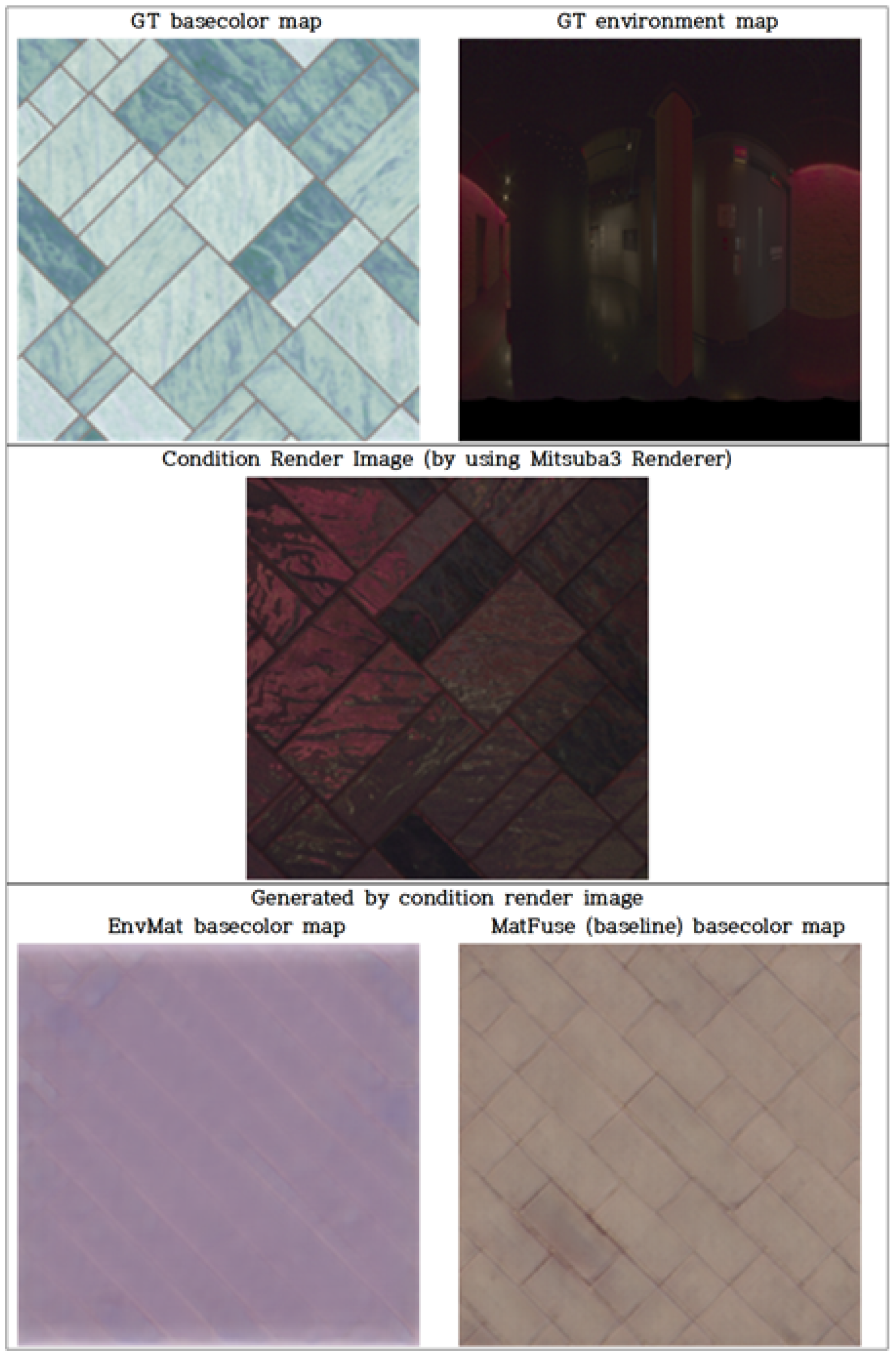

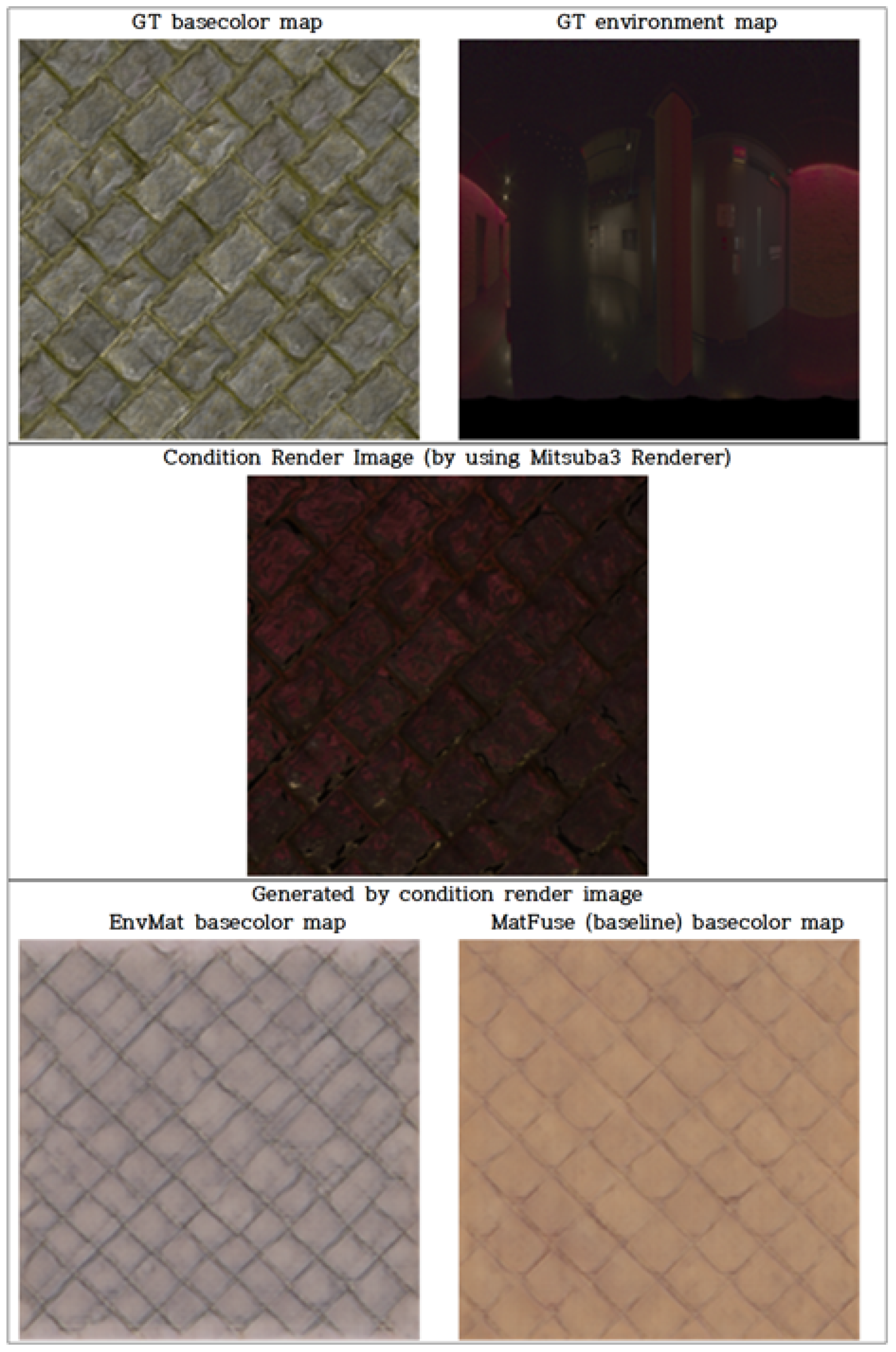

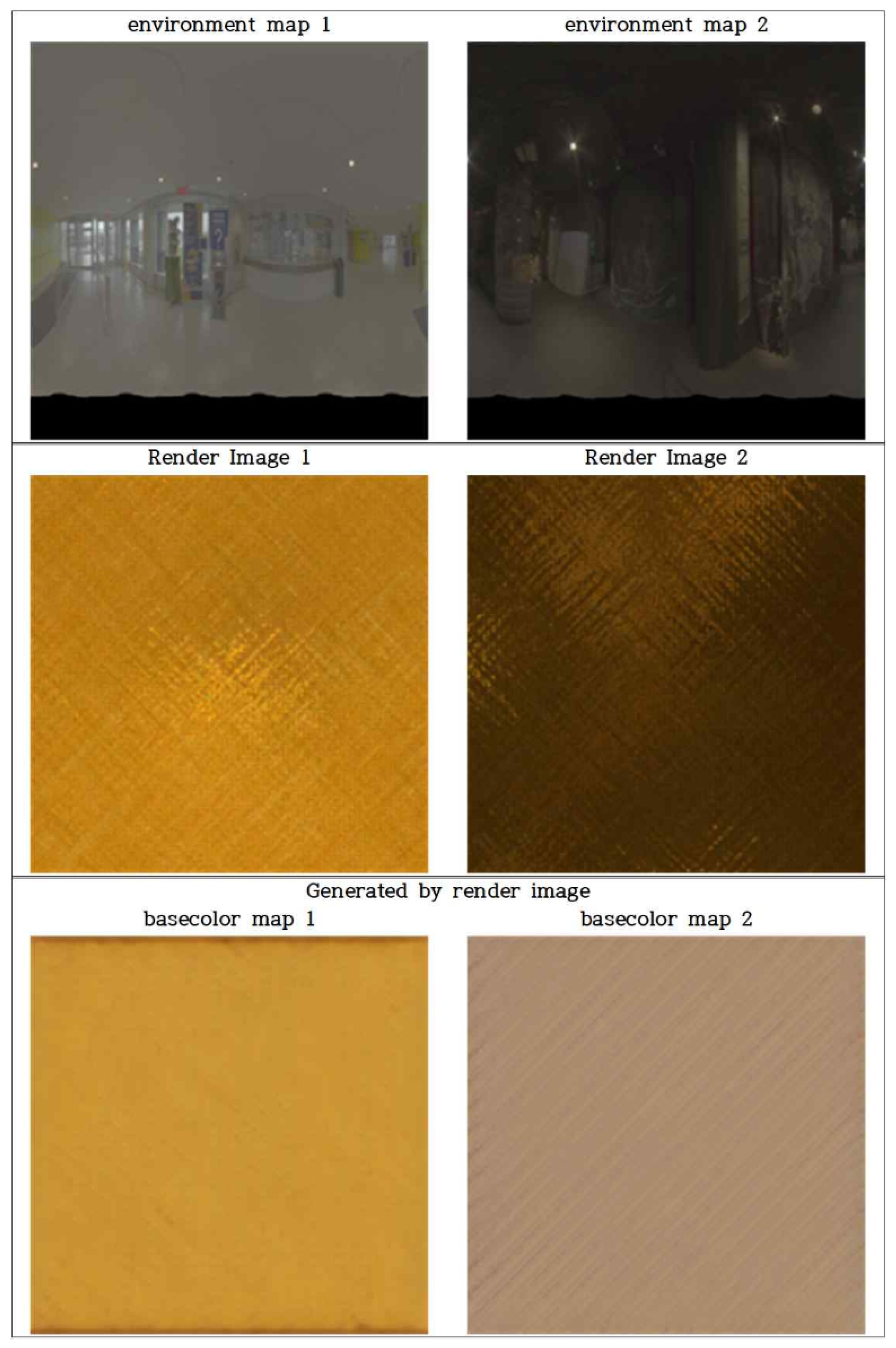

4.2.2. Qualitative Evaluation

4.2.3. Quantitative Evaluation

4.3. Performance Analysis

5. Conclusions and Future Work

5.1. Conclusions

5.2. Limitations

5.3. Future Work

- Increased Generalization through Expanded Datasets: Future research should employ larger and more diverse datasets to further enhance the model’s generalization capability. Expanding the training dataset with additional MatSynth materials, env maps featuring diverse color distributions, and env maps captured in various environments (including outdoor and studio lighting conditions) should improve the robustness and applicability of the proposed model.

- Incorporation of Render Loss in UNet Training: Render Loss is often beneficial for optimizing physically based rendering (PBR) assets because it evaluates the difference between renderings generated from the predicted maps and the original renderings. Applying it directly to our Latent UNet, however, presents a structural challenge. Render Loss compares renderings at the pixel level, whereas the Latent UNet operates on and generates representations in a latent space rather than the pixel space, so intermediate PBR and environment maps cannot be rendered directly for Render Loss computation during its training. This limitation is common to Latent Diffusion Models. Render Loss was employed effectively during VAE training, but, consistent with previous work that uses UNets in similar latent spaces, we did not employ it during UNet training. Future research should explore methods for integrating Render Loss into UNet training, which could bring the generated results closer to the original renderings and potentially improve their accuracy.
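
To illustrate why Render Loss needs pixel-space maps, the following minimal sketch computes a render loss from maps that have already been decoded. It is not the renderer used in the paper: it assumes simple Lambertian shading under a few directional lights as a stand-in for a full PBR model lit by an environment map, and the names `shade_pixel`, `render`, and `render_loss` are hypothetical.

```python
def shade_pixel(albedo, normal, light_dir):
    """Lambertian shading of one pixel: albedo * max(0, n . l)."""
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return tuple(channel * n_dot_l for channel in albedo)


def render(albedo_map, normal_map, light_dir):
    """Render a flat list of pixels from per-pixel albedo and normal maps."""
    return [shade_pixel(a, n, light_dir) for a, n in zip(albedo_map, normal_map)]


def render_loss(pred_maps, target_maps, light_dirs):
    """Mean absolute difference between renderings of predicted and target maps."""
    total, count = 0.0, 0
    for light in light_dirs:
        pred_img = render(pred_maps["albedo"], pred_maps["normal"], light)
        target_img = render(target_maps["albedo"], target_maps["normal"], light)
        for pred_px, target_px in zip(pred_img, target_img):
            for pred_c, target_c in zip(pred_px, target_px):
                total += abs(pred_c - target_c)
                count += 1
    return total / count


# Toy one-pixel example: the predicted albedo is half as bright as the target.
target = {"albedo": [(1.0, 1.0, 1.0)], "normal": [(0.0, 0.0, 1.0)]}
pred = {"albedo": [(0.5, 0.5, 0.5)], "normal": [(0.0, 0.0, 1.0)]}
lights = [(0.0, 0.0, 1.0)]  # assumption: a single light along the surface normal
loss = render_loss(pred, target, lights)
```

Every input to `render_loss` here is a pixel-space map. In a latent diffusion setting the UNet output is a latent, so it would first have to be decoded by the VAE before a function of this kind could be applied, which is the integration step the item above leaves to future work.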

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems 25, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and Improving the Image Quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis with Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Vecchio, G.; Sortino, R.; Palazzo, S.; Spampinato, C. MatFuse: Controllable Material Generation with Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 4429–4438.

- Deschaintre, V.; Aittala, M.; Durand, F.; Drettakis, G.; Bousseau, A. Single-Image SVBRDF Capture with a Rendering-Aware Deep Network. ACM Trans. Graph. 2018, 37, 128:1–128:15.

- Vecchio, G.; Palazzo, S.; Spampinato, C. SurfaceNet: Adversarial SVBRDF Estimation from a Single Image. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 12840–12848.

- Guo, Y.; Smith, C.; Hašan, M.; Sunkavalli, K.; Zhao, S. MaterialGAN: Reflectance Capture Using a Generative SVBRDF Model. ACM Trans. Graph. 2020, 39, 254:1–254:13.

- Dhariwal, P.; Nichol, A. Diffusion Models Beat GANs on Image Synthesis. In Proceedings of the Advances in Neural Information Processing Systems 34, Virtual, 6–14 December 2021; pp. 8780–8794.

- Esser, P.; Rombach, R.; Ommer, B. Taming Transformers for High-Resolution Image Synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 12873–12883.

- He, Z.; Guo, J.; Zhang, Y.; Tu, Q.; Chen, M.; Guo, Y.; Wang, P.; Dai, W. Text2Mat: Generating Materials from Text. In Proceedings of the Pacific Graphics 2023—Short Papers and Posters, Daejeon, Republic of Korea, 10–13 October 2023; pp. 89–97.

- Martin, R.; Roullier, A.; Rouffet, R.; Kaiser, A.; Boubekeur, T. MaterIA: Single Image High-Resolution Material Capture in the Wild. Comput. Graph. Forum 2022, 41, 163–177.

- Vecchio, G.; Martin, R.; Roullier, A.; Kaiser, A.; Rouffet, R.; Deschaintre, V.; Boubekeur, T. ControlMat: A Controlled Generative Approach to Material Capture. ACM Trans. Graph. 2024, 43, 164:1–164:17.

- Vecchio, G.; Deschaintre, V. MatSynth: A Modern PBR Materials Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 22109–22118.

- Laval Indoor HDR Environment Map Dataset. 2014. Available online: http://hdrdb.com/indoor/ (accessed on 12 May 2025).

- Chung, H.; Kim, J.; McCann, M.T.; Klasky, M.L.; Ye, J.C. Diffusion Posterior Sampling for General Noisy Inverse Problems. arXiv 2022, arXiv:2209.14687.

| Model | CLIP-IQA (↑) | FID (↓) |
|---|---|---|
| GT Render Image | - | |
| MatFuse (paper) | 158.53 | |
| MatFuse (baseline) | 196.32 | |
| EnvMat (ours) | 188.02 | |

| Model | L-PIPS (↓) | MS-SSIM (↑) | CIEDE2000 (↓) |
|---|---|---|---|
| MatFuse (Baseline) | 0.6899 | 0.4861 | 47.4045 |
| EnvMat (Proposed) | 0.6476 | 0.5195 | 37.2581 |
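
The size of the gains in the table above is easier to read as relative changes. The sketch below is plain arithmetic on the reported values; the helper name `relative_improvement` is hypothetical, and the sign convention (positive means the proposed model is better) is a choice made here for illustration.

```python
def relative_improvement(baseline, proposed, lower_is_better):
    """Percentage by which the proposed score improves on the baseline score."""
    change = (baseline - proposed) if lower_is_better else (proposed - baseline)
    return 100.0 * change / baseline


# (baseline, proposed, lower_is_better), copied from the table above.
scores = {
    "L-PIPS": (0.6899, 0.6476, True),
    "MS-SSIM": (0.4861, 0.5195, False),
    "CIEDE2000": (47.4045, 37.2581, True),
}

improvements = {
    name: relative_improvement(baseline, proposed, lower_is_better)
    for name, (baseline, proposed, lower_is_better) in scores.items()
}

for name, percent in improvements.items():
    print(f"{name}: {percent:.2f}% improvement over the baseline")
```

This works out to roughly 6.1% for L-PIPS, 6.9% for MS-SSIM, and 21.4% for CIEDE2000, so the largest relative gain is in color accuracy.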

| Model | Inference Time (s) | Memory Usage (MiB) |
|---|---|---|
| MatFuse (Baseline) | 3.6–3.7 | 8232 |
| EnvMat (Proposed) | 3.6–3.8 | 8498 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oh, S.; Jung, M.; Kim, T. EnvMat: A Network for Simultaneous Generation of PBR Maps and Environment Maps from a Single Image. Electronics 2025, 14, 2554. https://doi.org/10.3390/electronics14132554