Boundary-Aware Camouflaged Object Detection via Spatial-Frequency Domain Supervision

Abstract

1. Introduction

- We design SFNet, a two-stage boundary supervision framework that extracts object body features by dynamically capturing fine-grained detail changes and adaptively fuses them with boundary information obtained through a dual-domain boundary supervision mechanism, enabling accurate detection of camouflaged objects.

- To characterize the body and the boundary of a camouflaged object consistently, we introduce a multi-scale dynamic attention module, a dual-domain boundary supervision mechanism, and an adaptive gated boundary guidance module, which extract, enhance, and fuse object body and boundary features, respectively.

- Extensive experiments on four benchmark datasets (CAMO, CHAMELEON, COD10K, and NC4K) show that SFNet achieves the best results on all four evaluation metrics, outperforming 18 existing advanced methods while incurring lower computational overhead and memory cost.
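The dual-domain idea named in the contributions above can be illustrated with a minimal NumPy sketch. It is not the SFNet implementation: it assumes that boundary cues are derived from a binary ground-truth mask, once in the spatial domain (foreground pixels that touch the background) and once in the frequency domain (an ideal high-pass filter applied to the 2-D FFT of the mask). The function names `spatial_boundary` and `frequency_boundary` and the `cutoff` parameter are hypothetical and chosen only for illustration.

```python
import numpy as np


def spatial_boundary(mask: np.ndarray) -> np.ndarray:
    """Spatial-domain boundary (assumed definition): foreground pixels
    that have at least one background pixel among their 4-neighbours."""
    m = mask.astype(bool)
    padded = np.pad(m, 1, mode="constant", constant_values=False)
    # A pixel is interior if it and its four neighbours are all foreground.
    interior = (
        padded[1:-1, 1:-1]
        & padded[:-2, 1:-1] & padded[2:, 1:-1]
        & padded[1:-1, :-2] & padded[1:-1, 2:]
    )
    return (m & ~interior).astype(np.float32)


def frequency_boundary(mask: np.ndarray, cutoff: float = 0.1) -> np.ndarray:
    """Frequency-domain boundary cue (assumed definition): magnitude of the
    mask after an ideal high-pass filter; `cutoff` is in cycles per pixel."""
    h, w = mask.shape
    spectrum = np.fft.fftshift(np.fft.fft2(mask.astype(np.float32)))
    fy = np.fft.fftshift(np.fft.fftfreq(h))[:, None]
    fx = np.fft.fftshift(np.fft.fftfreq(w))[None, :]
    keep = np.sqrt(fx ** 2 + fy ** 2) > cutoff  # discard low frequencies
    high = np.fft.ifft2(np.fft.ifftshift(spectrum * keep))
    return np.abs(high).astype(np.float32)


if __name__ == "__main__":
    demo = np.zeros((8, 8), dtype=np.float32)
    demo[2:6, 2:6] = 1.0  # a 4x4 square object
    print(spatial_boundary(demo))
    print(frequency_boundary(demo).round(2))
```

In a training setup, maps like these could serve as two complementary supervision targets for a boundary branch; whether SFNet builds its targets this way is an assumption of this sketch.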

2. Related Work

2.1. Feature Representation Learning

2.2. Boundary-Supervised Learning

3. Methodology

3.1. Multi-Scale Dynamic Attention Module

3.2. Dual-Domain Boundary Supervision Module

3.3. Adaptive Gated Boundary Guided Module

3.4. Loss Function

4. Experiments

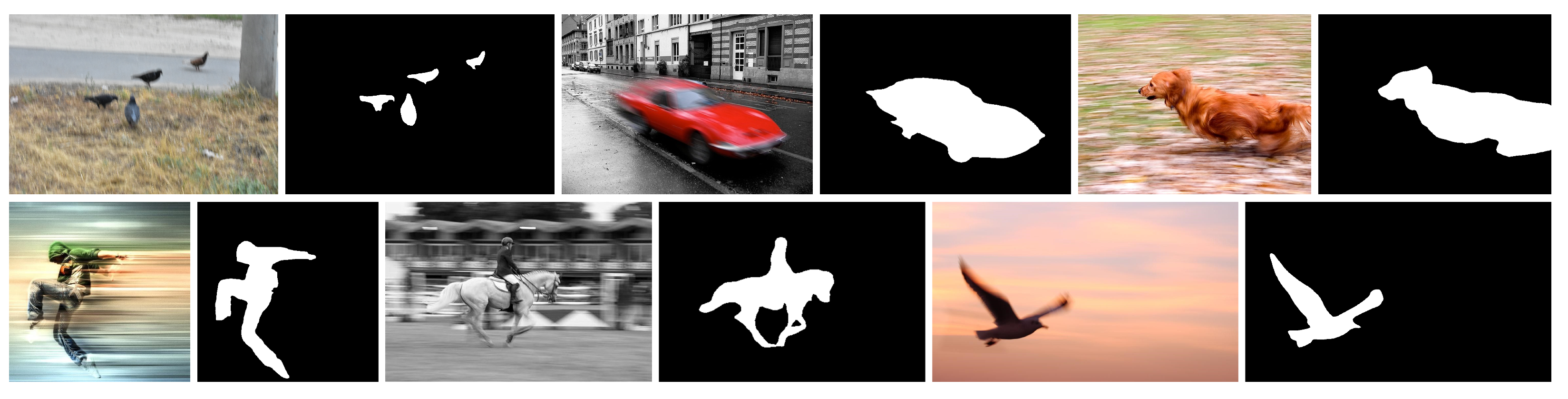

4.1. Datasets

4.2. Evaluation Metrics

4.3. Training Settings

4.4. Comparison to the State of the Art

4.5. Quantitative Analysis

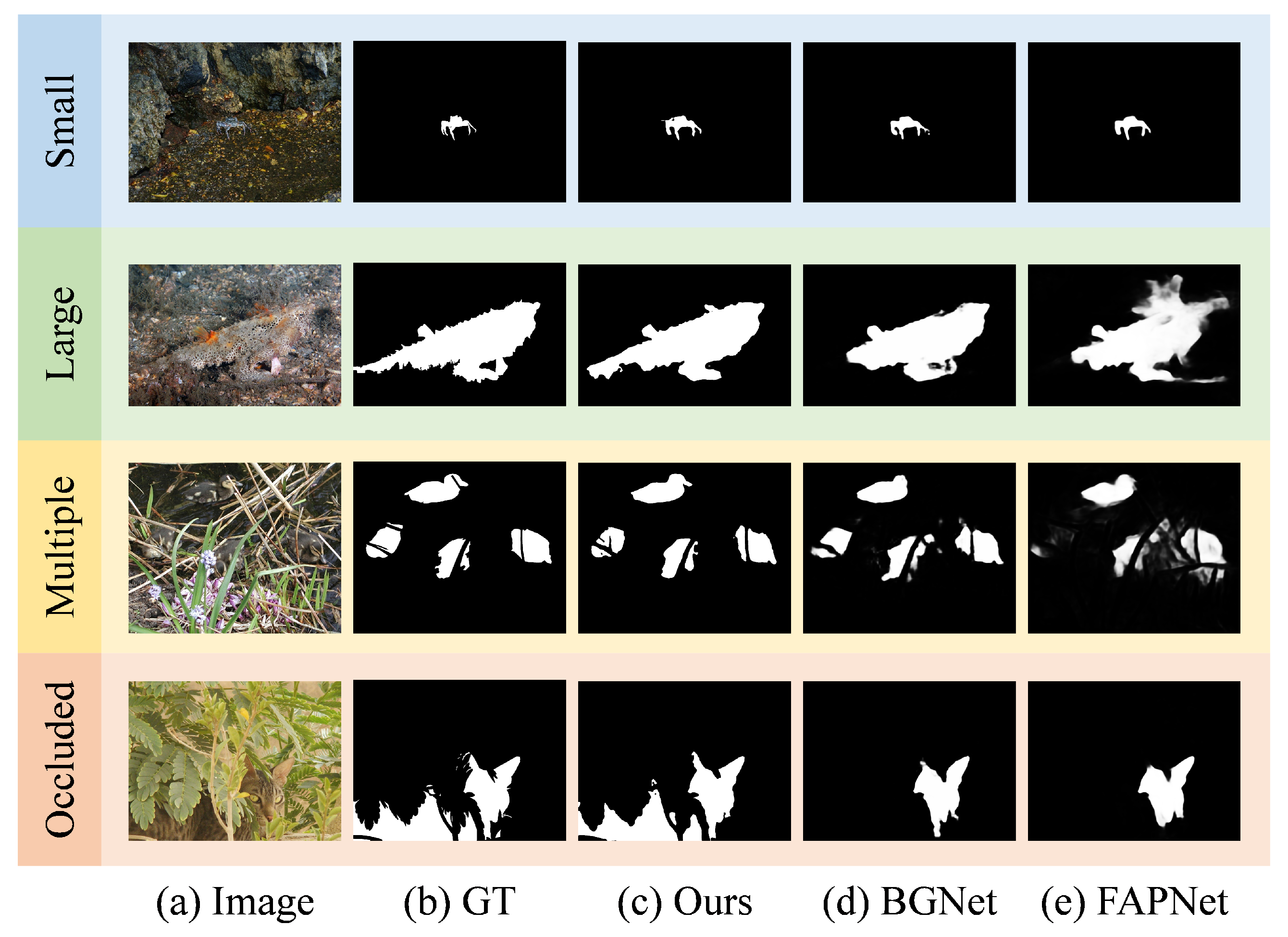

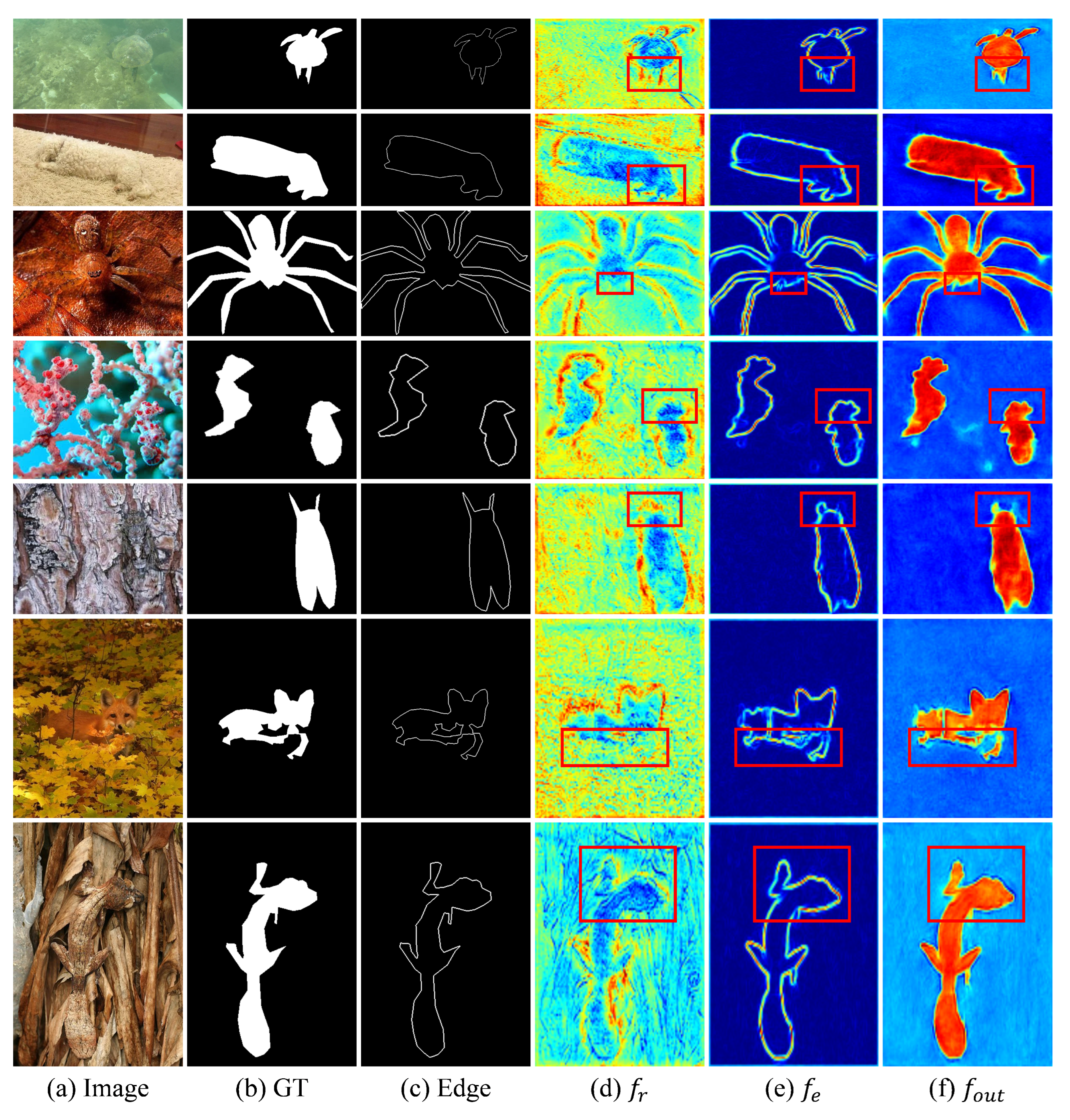

4.6. Qualitative Analysis

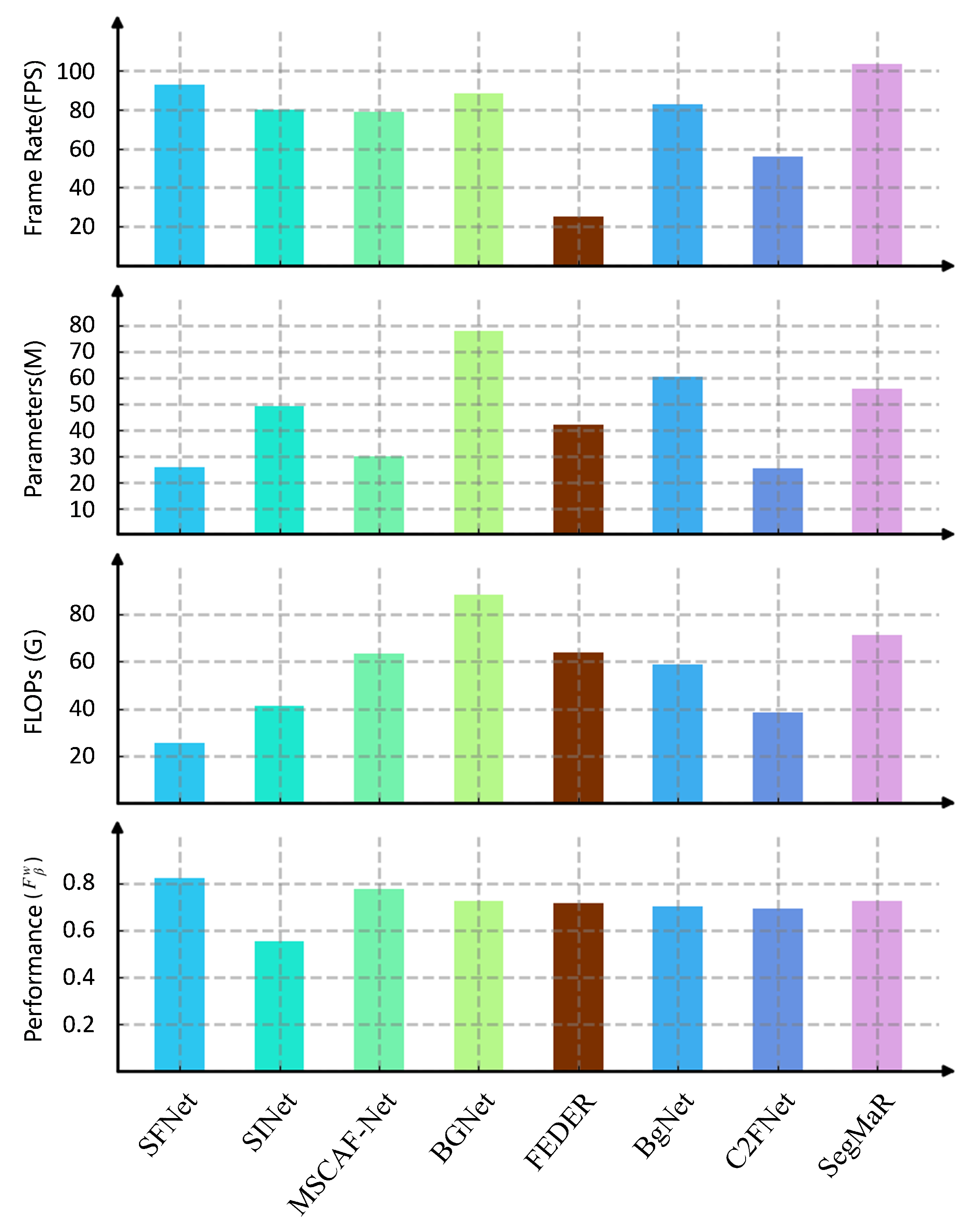

4.7. Efficiency Analysis

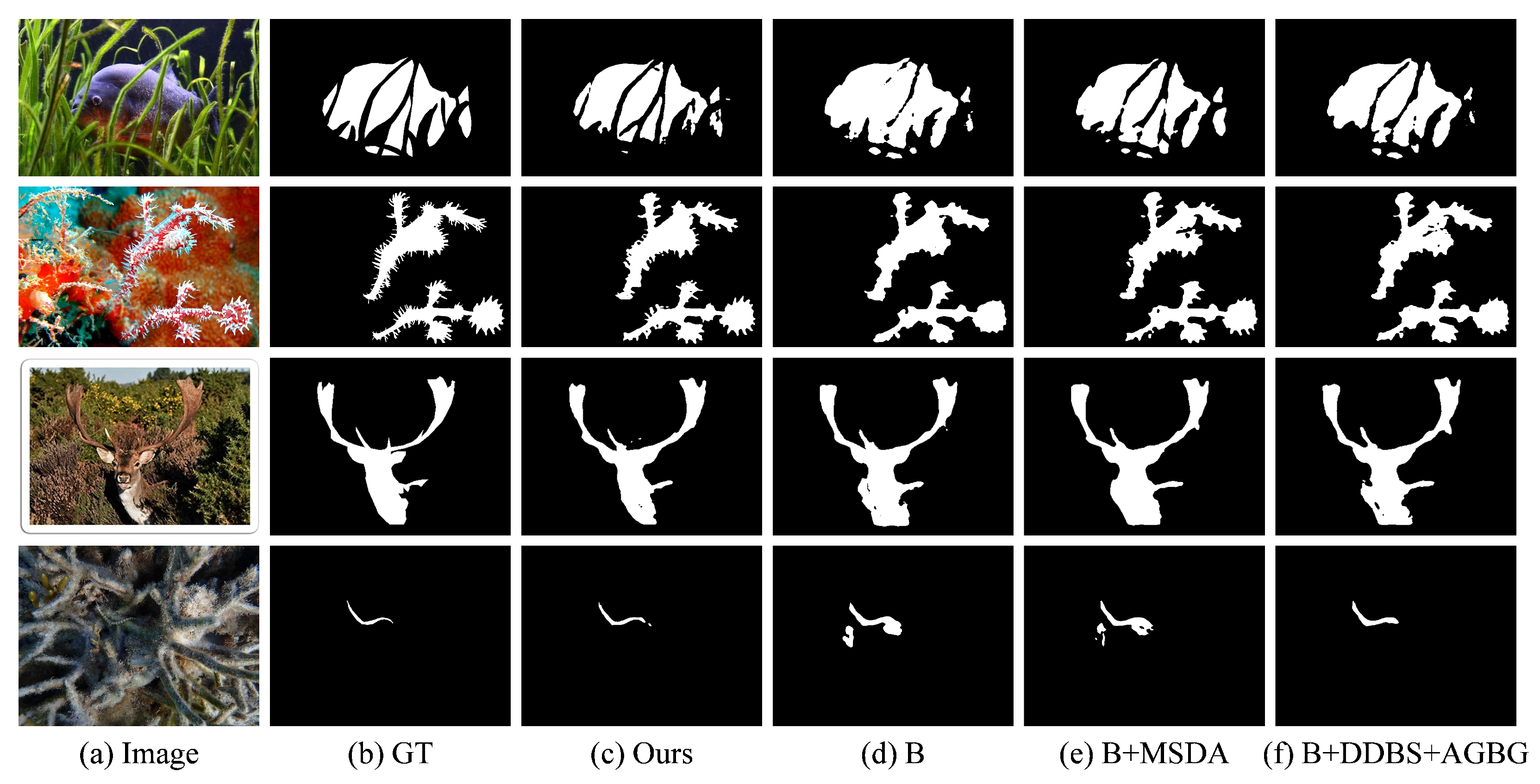

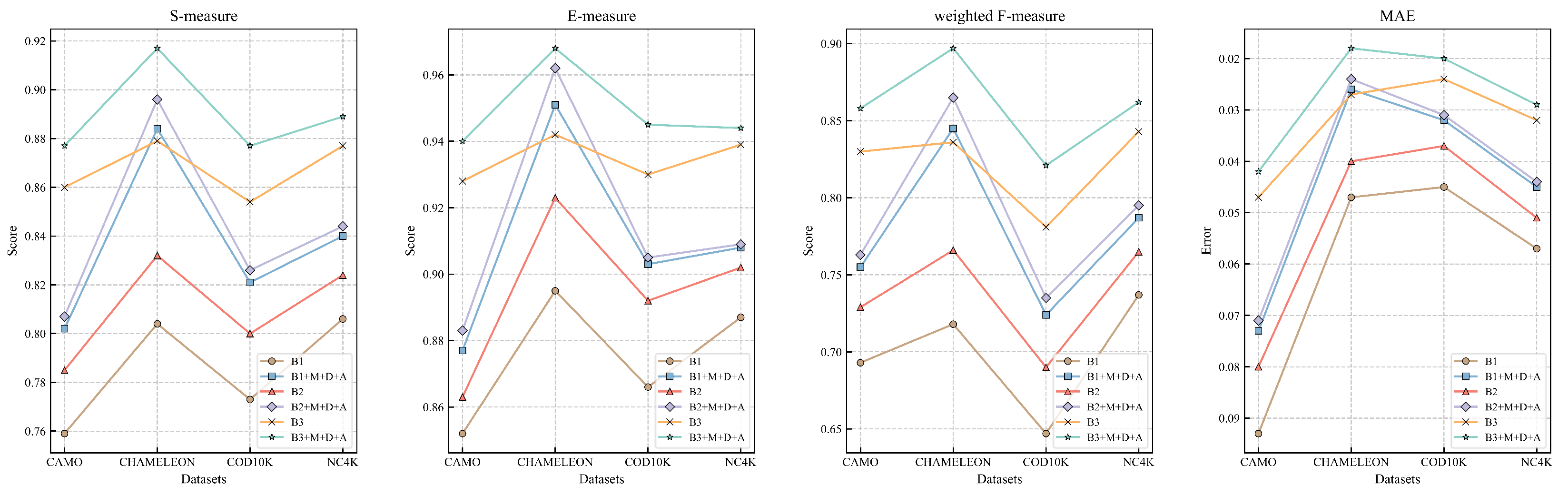

5. Ablation Study

5.1. Effectiveness of Multi-Scale Dynamic Attention

5.2. Effectiveness of Dual-Domain Boundary Supervision and Adaptive Gated Guidance

5.3. Effectiveness of the PVTv2

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Method | Category | CAMO Sα ↑ | CAMO Eφ ↑ | CAMO Fwβ ↑ | CAMO M ↓ | CHAMELEON Sα ↑ | CHAMELEON Eφ ↑ | CHAMELEON Fwβ ↑ | CHAMELEON M ↓ | COD10K Sα ↑ | COD10K Eφ ↑ | COD10K Fwβ ↑ | COD10K M ↓ | NC4K Sα ↑ | NC4K Eφ ↑ | NC4K Fwβ ↑ | NC4K M ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SINet [1] | Biology | 0.751 | 0.771 | 0.606 | 0.100 | 0.869 | 0.891 | 0.740 | 0.044 | 0.771 | 0.806 | 0.551 | 0.051 | ‡ | ‡ | ‡ | ‡ |
| SegMaR [53] | Biology | 0.815 | 0.874 | 0.753 | 0.071 | 0.906 | 0.951 | 0.860 | 0.025 | 0.833 | 0.899 | 0.724 | 0.034 | ‡ | ‡ | ‡ | ‡ |
| ZoomNet [11] | Biology | 0.820 | 0.877 | 0.752 | 0.066 | 0.902 | 0.943 | 0.845 | 0.023 | 0.838 | 0.888 | 0.729 | 0.029 | 0.853 | 0.896 | 0.784 | 0.043 |
| MFFN [54] | Biology | ‡ | ‡ | ‡ | ‡ | ‡ | ‡ | ‡ | ‡ | 0.851 | 0.897 | 0.752 | 0.028 | 0.858 | 0.902 | 0.793 | 0.043 |
| SARNet [60] | Biology | 0.868 | 0.927 | 0.828 | 0.047 | 0.912 | 0.957 | 0.871 | 0.021 | 0.864 | 0.931 | 0.777 | 0.024 | 0.886 | 0.937 | 0.842 | 0.032 |
| MSCAF-Net [61] | Biology | 0.873 | 0.929 | 0.828 | 0.046 | 0.912 | 0.958 | 0.865 | 0.022 | 0.865 | 0.927 | 0.775 | 0.024 | 0.887 | 0.934 | 0.838 | 0.032 |
| TINet [12] | Texture | 0.781 | 0.847 | 0.678 | 0.087 | 0.874 | 0.916 | 0.783 | 0.038 | 0.793 | 0.848 | 0.635 | 0.043 | ‡ | ‡ | ‡ | ‡ |
| TANet [22] | Texture | 0.830 | 0.884 | 0.763 | 0.066 | 0.903 | 0.963 | 0.862 | 0.023 | 0.823 | 0.884 | 0.763 | 0.066 | ‡ | ‡ | ‡ | ‡ |
| UGTR [52] | Uncertainty | 0.785 | 0.823 | 0.686 | 0.086 | 0.888 | 0.911 | 0.796 | 0.031 | 0.818 | 0.853 | 0.667 | 0.035 | 0.839 | 0.874 | 0.747 | 0.052 |
| OCENet [24] | Uncertainty | 0.802 | 0.852 | 0.723 | 0.080 | 0.897 | 0.940 | 0.833 | 0.027 | 0.827 | 0.894 | 0.707 | 0.033 | 0.853 | 0.902 | 0.785 | 0.045 |
| RISNet [59] | Depth | 0.870 | 0.922 | 0.827 | 0.050 | ‡ | ‡ | ‡ | ‡ | 0.873 | 0.931 | 0.799 | 0.025 | 0.882 | 0.925 | 0.834 | 0.037 |
| VSCode [28] | Prompt | 0.836 | 0.892 | 0.768 | 0.060 | ‡ | ‡ | ‡ | ‡ | 0.847 | 0.913 | 0.744 | 0.028 | 0.874 | 0.920 | 0.813 | 0.038 |
| MGL [16] | Boundary | 0.775 | 0.812 | 0.673 | 0.088 | 0.893 | 0.917 | 0.812 | 0.031 | 0.814 | 0.851 | 0.666 | 0.035 | 0.833 | 0.867 | 0.739 | 0.053 |
| BGNet [18] | Boundary | 0.812 | 0.870 | 0.749 | 0.073 | 0.901 | 0.943 | 0.850 | 0.027 | 0.831 | 0.901 | 0.722 | 0.033 | 0.851 | 0.907 | 0.788 | 0.044 |
| BgNet [56] | Boundary | 0.831 | 0.884 | 0.762 | 0.065 | 0.894 | 0.943 | 0.823 | 0.029 | 0.826 | 0.898 | 0.703 | 0.034 | 0.855 | 0.908 | 0.784 | 0.040 |
| FEDER [33] | Boundary+Frequency | 0.802 | 0.867 | 0.738 | 0.071 | 0.887 | 0.946 | 0.834 | 0.030 | 0.822 | 0.900 | 0.716 | 0.032 | ‡ | ‡ | ‡ | ‡ |
| FPNet [58] | Boundary+Frequency | 0.851 | 0.905 | 0.802 | 0.056 | 0.914 | 0.960 | 0.868 | 0.022 | 0.850 | 0.912 | 0.755 | 0.028 | 0.889 | 0.934 | 0.851 | 0.032 |

References

1. Fan, D.P.; Ji, G.P.; Sun, G.; Cheng, M.M.; Shen, J.; Shao, L. Camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2777–2787.

2. Fan, D.P.; Ji, G.P.; Zhou, T.; Chen, G.; Fu, H.; Shen, J.; Shao, L. PraNet: Parallel reverse attention network for polyp segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 4–8 October 2020; pp. 263–273.

3. Li, J.; He, W.; Li, Z.; Guo, Y.; Zhang, H. Overcoming the uncertainty challenges in detecting building changes from remote sensing images. ISPRS J. Photogramm. Remote Sens. 2025, 220, 1–17.

4. Liu, M.; Di, X. Extraordinary MHNet: Military high-level camouflage object detection network and dataset. Neurocomputing 2023, 549, 126466.

5. Li, J.; Wei, Y.; Wei, T.; He, W. A Comprehensive Deep-Learning Framework for Fine-Grained Farmland Mapping from High-Resolution Images. IEEE Trans. Geosci. Remote Sens. 2024, 63.

6. Chu, H.K.; Hsu, W.H.; Mitra, N.J.; Cohen-Or, D.; Wong, T.T.; Lee, T.Y. Camouflage images. ACM Trans. Graph. 2010, 29, 51–61.

7. Galun; Sharon; Basri; Brandt. Texture segmentation by multiscale aggregation of filter responses and shape elements. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 716–723.

8. Pulla Rao, C.; Guruva Reddy, A.; Rama Rao, C. Camouflaged object detection for machine vision applications. Int. J. Speech Technol. 2020, 23, 327–335.

9. Tankus, A.; Yeshurun, Y. Detection of regions of interest and camouflage breaking by direct convexity estimation. In Proceedings of the 1998 IEEE Workshop on Visual Surveillance, Bombay, India, 2 January 1998; pp. 42–48.

10. Beiderman, Y.; Teicher, M.; Garcia, J.; Mico, V.; Zalevsky, Z. Optical technique for classification, recognition and identification of obscured objects. Opt. Commun. 2010, 283, 4274–4282.

11. Pang, Y.; Zhao, X.; Xiang, T.Z.; Zhang, L.; Lu, H. Zoom in and out: A mixed-scale triplet network for camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2160–2170.

12. Zhu, J.; Zhang, X.; Zhang, S.; Liu, J. Inferring camouflaged objects by texture-aware interactive guidance network. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 3599–3607.

13. Kajiura, N.; Liu, H.; Satoh, S. Improving camouflaged object detection with the uncertainty of pseudo-edge labels. In Proceedings of the 3rd ACM International Conference on Multimedia in Asia, Gold Coast, Australia, 1–3 December 2021; pp. 1–7.

14. Wang, Q.; Yang, J.; Yu, X.; Wang, F.; Chen, P.; Zheng, F. Depth-aided camouflaged object detection. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 3297–3306.

15. Tang, L.; Jiang, P.T.; Shen, Z.H.; Zhang, H.; Chen, J.W.; Li, B. Chain of visual perception: Harnessing multimodal large language models for zero-shot camouflaged object detection. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, Australia, 28 October–1 November 2024; pp. 8805–8814.

16. Zhai, Q.; Li, X.; Yang, F.; Jiao, Z.; Luo, P.; Cheng, H.; Liu, Z. MGL: Mutual graph learning for camouflaged object detection. IEEE Trans. Image Process. 2022, 32, 1897–1910.

17. Zhong, Y.; Li, B.; Tang, L.; Kuang, S.; Wu, S.; Ding, S. Detecting camouflaged object in frequency domain. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4504–4513.

18. Sun, Y.; Wang, S.; Chen, C.; Xiang, T.Z. Boundary-Guided Camouflaged Object Detection. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI-22, Vienna, Austria, 23–29 July 2022; pp. 1335–1341.

19. Zhou, T.; Zhou, Y.; Gong, C.; Yang, J.; Zhang, Y. Feature aggregation and propagation network for camouflaged object detection. IEEE Trans. Image Process. 2022, 31, 7036–7047.

20. Fan, D.P.; Ji, G.P.; Cheng, M.M.; Shao, L. Concealed object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6024–6042.

21. He, C.; Li, K.; Zhang, Y.; Zhang, Y.; You, C.; Guo, Z.; Li, X.; Danelljan, M.; Yu, F. Strategic Preys Make Acute Predators: Enhancing Camouflaged Object Detectors by Generating Camouflaged Objects. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

22. Ren, J.; Hu, X.; Zhu, L.; Xu, X.; Xu, Y.; Wang, W.; Deng, Z.; Heng, P.A. Deep texture-aware features for camouflaged object detection. IEEE Trans. Circuits Syst. Video Technol. 2021, 33, 1157–1167.

23. Ji, G.P.; Fan, D.P.; Chou, Y.C.; Dai, D.; Liniger, A.; Van Gool, L. Deep gradient learning for efficient camouflaged object detection. Mach. Intell. Res. 2023, 20, 92–108.

24. Liu, J.; Zhang, J.; Barnes, N. Modeling aleatoric uncertainty for camouflaged object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1445–1454.

25. Zhang, Y.; Zhang, J.; Hamidouche, W.; Deforges, O. Predictive uncertainty estimation for camouflaged object detection. IEEE Trans. Image Process. 2023, 32, 3580–3591.

26. Wu, Z.; Wang, J.; Zhou, Z.; An, Z.; Jiang, Q.; Demonceaux, C.; Sun, G.; Timofte, R. Object segmentation by mining cross-modal semantics. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 3455–3464.

27. Hu, J.; Lin, J.; Gong, S.; Cai, W. Relax image-specific prompt requirement in SAM: A single generic prompt for segmenting camouflaged objects. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 12511–12518.

28. Luo, Z.; Liu, N.; Zhao, W.; Yang, X.; Zhang, D.; Fan, D.P.; Khan, F.; Han, J. VSCode: General visual salient and camouflaged object detection with 2D prompt learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 17169–17180.

29. Wang, L.; Lu, H.; Wang, Y.; Feng, M.; Wang, D.; Yin, B.; Ruan, X. Learning to detect salient objects with image-level supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 136–145.

30. Li, P.; Yan, X.; Zhu, H.; Wei, M.; Zhang, X.P.; Qin, J. FindNet: Can you find me? Boundary-and-texture enhancement network for camouflaged object detection. IEEE Trans. Image Process. 2022, 31, 6396–6411.

31. Guan, J.; Fang, X.; Zhu, T.; Qian, W. SDRNet: Camouflaged object detection with independent reconstruction of structure and detail. Knowl.-Based Syst. 2024, 299, 112051.

32. Zhao, Z.; Liu, Z.; Peng, C. AGFNet: Attention guided fusion network for camouflaged object detection. In Proceedings of the CAAI International Conference on Artificial Intelligence, Beijing, China, 27–28 August 2022; Springer: Berlin/Heidelberg, Germany; pp. 478–489.

33. He, C.; Li, K.; Zhang, Y.; Tang, L.; Zhang, Y.; Guo, Z.; Li, X. Camouflaged object detection with feature decomposition and edge reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22046–22055.

34. Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. PVT v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424.

35. Liu, S.; Huang, D.; Wang, Y. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400.

36. Pang, Y.; Zhao, X.; Zhang, L.; Lu, H. Multi-scale interactive network for salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9413–9422.

37. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

38. Nam, J.H.; Syazwany, N.S.; Kim, S.J.; Lee, S.C. Modality-agnostic domain generalizable medical image segmentation by multi-frequency in multi-scale attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 11480–11491.

39. Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

40. Zhao, R.; Li, Y.; Zhang, Q.; Zhao, X. Bilateral decoupling complementarity learning network for camouflaged object detection. Knowl.-Based Syst. 2025, 314, 113158.

41. Wei, J.; Wang, S.; Huang, Q. F3Net: Fusion, feedback and focus for salient object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12321–12328.

42. Le, T.N.; Nguyen, T.V.; Nie, Z.; Tran, M.T.; Sugimoto, A. Anabranch network for camouflaged object segmentation. Comput. Vis. Image Underst. 2019, 184, 45–56.

43. Skurowski, P.; Abdulameer, H.; Błaszczyk, J.; Depta, T.; Kornacki, A.; Kozieł, P. Animal camouflage analysis: Chameleon database. Unpubl. Manuscr. 2018, 2, 7.

44. Lv, Y.; Zhang, J.; Dai, Y.; Li, A.; Liu, B.; Barnes, N.; Fan, D.P. Simultaneously localize, segment and rank the camouflaged objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11591–11601.

45. Fan, D.P.; Cheng, M.M.; Liu, Y.; Li, T.; Borji, A. Structure-measure: A new way to evaluate foreground maps. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4548–4557.

46. Fan, D.P.; Gong, C.; Cao, Y.; Ren, B.; Cheng, M.M.; Borji, A. Enhanced-alignment Measure for Binary Foreground Map Evaluation. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI-18, Stockholm, Sweden, 13–19 July 2018; pp. 698–704.

47. Margolin, R.; Zelnik-Manor, L.; Tal, A. How to evaluate foreground maps? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 248–255.

48. Perazzi, F.; Krähenbühl, P.; Pritch, Y.; Hornung, A. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 733–740.

49. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

50. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

51. Gao, S.H.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P. Res2Net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 652–662.

52. Yang, F.; Zhai, Q.; Li, X.; Huang, R.; Luo, A.; Cheng, H.; Fan, D.P. Uncertainty-guided transformer reasoning for camouflaged object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4146–4155.

53. Jia, Q.; Yao, S.; Liu, Y.; Fan, X.; Liu, R.; Luo, Z. Segment, magnify and reiterate: Detecting camouflaged objects the hard way. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4713–4722.

54. Zheng, D.; Zheng, X.; Yang, L.T.; Gao, Y.; Zhu, C.; Ruan, Y. MFFN: Multi-view feature fusion network for camouflaged object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 6232–6242.

55. Chen, G.; Liu, S.J.; Sun, Y.J.; Ji, G.P.; Wu, Y.F.; Zhou, T. Camouflaged object detection via context-aware cross-level fusion. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6981–6993.

56. Chen, T.; Xiao, J.; Hu, X.; Zhang, G.; Wang, S. Boundary-guided network for camouflaged object detection. Knowl.-Based Syst. 2022, 248, 108901.

57. Huang, Z.; Dai, H.; Xiang, T.Z.; Wang, S.; Chen, H.X.; Qin, J.; Xiong, H. Feature shrinkage pyramid for camouflaged object detection with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5557–5566.

58. Cong, R.; Sun, M.; Zhang, S.; Zhou, X.; Zhang, W.; Zhao, Y. Frequency perception network for camouflaged object detection. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1179–1189.

59. Wang, L.; Yang, J.; Zhang, Y.; Wang, F.; Zheng, F. Depth-aware concealed crop detection in dense agricultural scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 17201–17211.

60. Xing, H.; Gao, S.; Wang, Y.; Wei, X.; Tang, H.; Zhang, W. Go closer to see better: Camouflaged object detection via object area amplification and figure-ground conversion. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 5444–5457.

61. Liu, Y.; Li, H.; Cheng, J.; Chen, X. MSCAF-Net: A general framework for camouflaged object detection via learning multi-scale context-aware features. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4934–4947.

| Method | Backbone | CAMO Sα ↑ | CAMO Eφ ↑ | CAMO Fwβ ↑ | CAMO M ↓ | CHAMELEON Sα ↑ | CHAMELEON Eφ ↑ | CHAMELEON Fwβ ↑ | CHAMELEON M ↓ | COD10K Sα ↑ | COD10K Eφ ↑ | COD10K Fwβ ↑ | COD10K M ↓ | NC4K Sα ↑ | NC4K Eφ ↑ | NC4K Fwβ ↑ | NC4K M ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SINet [1] | ResNet50 | 0.751 | 0.771 | 0.606 | 0.100 | 0.869 | 0.891 | 0.740 | 0.044 | 0.771 | 0.806 | 0.551 | 0.051 | ‡ | ‡ | ‡ | ‡ |
| MGL [16] | ResNet50 | 0.775 | 0.812 | 0.673 | 0.088 | 0.893 | 0.917 | 0.812 | 0.031 | 0.814 | 0.851 | 0.666 | 0.035 | 0.833 | 0.867 | 0.739 | 0.053 |
| UGTR [52] | ResNet50 | 0.785 | 0.823 | 0.686 | 0.086 | 0.888 | 0.911 | 0.796 | 0.031 | 0.818 | 0.853 | 0.667 | 0.035 | 0.839 | 0.874 | 0.747 | 0.052 |
| OCENet [24] | ResNet50 | 0.802 | 0.852 | 0.723 | 0.080 | 0.897 | 0.940 | 0.833 | 0.027 | 0.827 | 0.894 | 0.707 | 0.033 | 0.853 | 0.902 | 0.785 | 0.045 |
| SegMaR [53] | ResNet50 | 0.815 | 0.874 | 0.753 | 0.071 | 0.906 | 0.951 | 0.860 | 0.025 | 0.833 | 0.899 | 0.724 | 0.034 | ‡ | ‡ | ‡ | ‡ |
| ZoomNet [11] | ResNet50 | 0.820 | 0.877 | 0.752 | 0.066 | 0.902 | 0.943 | 0.845 | 0.023 | 0.838 | 0.888 | 0.729 | 0.029 | 0.853 | 0.896 | 0.784 | 0.043 |
| FEDER [33] | ResNet50 | 0.802 | 0.867 | 0.738 | 0.071 | 0.887 | 0.946 | 0.834 | 0.030 | 0.822 | 0.900 | 0.716 | 0.032 | ‡ | ‡ | ‡ | ‡ |
| MFFN [54] | ResNet50 | ‡ | ‡ | ‡ | ‡ | ‡ | ‡ | ‡ | ‡ | 0.851 | 0.897 | 0.752 | 0.028 | 0.858 | 0.902 | 0.793 | 0.043 |
| C2FNet [55] | Res2Net50 | 0.799 | 0.859 | 0.730 | 0.077 | 0.893 | 0.946 | 0.845 | 0.028 | 0.811 | 0.887 | 0.691 | 0.036 | ‡ | ‡ | ‡ | ‡ |
| BGNet [18] | Res2Net50 | 0.812 | 0.870 | 0.749 | 0.073 | 0.901 | 0.943 | 0.850 | 0.027 | 0.831 | 0.901 | 0.722 | 0.033 | 0.851 | 0.907 | 0.788 | 0.044 |
| FAPNet [19] | Res2Net50 | 0.815 | 0.865 | 0.734 | 0.076 | 0.893 | 0.940 | 0.825 | 0.028 | 0.822 | 0.888 | 0.694 | 0.036 | 0.851 | 0.899 | 0.775 | 0.047 |
| BgNet [56] | Res2Net50 | 0.831 | 0.884 | 0.762 | 0.065 | 0.894 | 0.943 | 0.823 | 0.029 | 0.826 | 0.898 | 0.703 | 0.034 | 0.855 | 0.908 | 0.784 | 0.040 |
| FSPNet [57] | ViT | 0.856 | 0.899 | 0.799 | 0.050 | 0.908 | 0.943 | 0.851 | 0.023 | 0.851 | 0.895 | 0.735 | 0.026 | 0.879 | 0.915 | 0.816 | 0.035 |
| VSCode [28] | Swin | 0.836 | 0.892 | 0.768 | 0.060 | ‡ | ‡ | ‡ | ‡ | 0.847 | 0.913 | 0.744 | 0.028 | 0.874 | 0.920 | 0.813 | 0.038 |
| FPNet [58] | PVT | 0.851 | 0.905 | 0.802 | 0.056 | 0.914 | 0.960 | 0.868 | 0.022 | 0.850 | 0.912 | 0.755 | 0.028 | 0.889 | 0.934 | 0.851 | 0.032 |
| RISNet [59] | PVT | 0.870 | 0.922 | 0.827 | 0.050 | ‡ | ‡ | ‡ | ‡ | 0.873 | 0.931 | 0.799 | 0.025 | 0.882 | 0.925 | 0.834 | 0.037 |
| SARNet [60] | PVTv2 | 0.868 | 0.927 | 0.828 | 0.047 | 0.912 | 0.957 | 0.871 | 0.021 | 0.864 | 0.931 | 0.777 | 0.024 | 0.886 | 0.937 | 0.842 | 0.032 |
| MSCAF-Net [61] | PVTv2 | 0.873 | 0.929 | 0.828 | 0.046 | 0.912 | 0.958 | 0.865 | 0.022 | 0.865 | 0.927 | 0.775 | 0.024 | 0.887 | 0.934 | 0.838 | 0.032 |
| SFNet (Ours) | PVTv2 | 0.877 | 0.940 | 0.858 | 0.042 | 0.917 | 0.968 | 0.897 | 0.018 | 0.877 | 0.945 | 0.821 | 0.020 | 0.889 | 0.944 | 0.862 | 0.029 |
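For readers unfamiliar with the error column, the following is a minimal sketch of how a mean absolute error (MAE) score is conventionally computed in camouflaged object detection benchmarks. It assumes that the fourth value reported for each dataset in the table above is the MAE between a predicted map and the binary ground truth, both scaled to [0, 1]; the function name `mean_absolute_error` is hypothetical and the code is not taken from the SFNet evaluation toolkit.

```python
def mean_absolute_error(pred, gt):
    """Average per-pixel absolute difference between a prediction map and
    a ground-truth mask, both given as equally sized 2-D lists in [0, 1]."""
    if len(pred) != len(gt) or any(len(p) != len(g) for p, g in zip(pred, gt)):
        raise ValueError("prediction and ground truth must have the same shape")
    total = sum(
        abs(p - g)
        for pred_row, gt_row in zip(pred, gt)
        for p, g in zip(pred_row, gt_row)
    )
    count = sum(len(row) for row in gt)
    return total / count


if __name__ == "__main__":
    prediction = [[0.0, 1.0], [0.5, 0.5]]
    ground_truth = [[0.0, 1.0], [1.0, 0.0]]
    print(mean_absolute_error(prediction, ground_truth))  # 0.25
```

Lower values are better for this metric, which is why the smallest entry in each error column marks the best method.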

| Method | CAMO Sα ↑ | CAMO Eφ ↑ | CAMO Fwβ ↑ | CAMO M ↓ | CHAMELEON Sα ↑ | CHAMELEON Eφ ↑ | CHAMELEON Fwβ ↑ | CHAMELEON M ↓ | COD10K Sα ↑ | COD10K Eφ ↑ | COD10K Fwβ ↑ | COD10K M ↓ | NC4K Sα ↑ | NC4K Eφ ↑ | NC4K Fwβ ↑ | NC4K M ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 0.860 | 0.928 | 0.830 | 0.047 | 0.879 | 0.942 | 0.836 | 0.027 | 0.854 | 0.930 | 0.781 | 0.024 | 0.877 | 0.939 | 0.843 | 0.032 |
| Baseline+MDSA | 0.870 | 0.939 | 0.847 | 0.043 | 0.897 | 0.957 | 0.867 | 0.024 | 0.868 | 0.941 | 0.805 | 0.021 | 0.886 | 0.945 | 0.859 | 0.029 |
| Baseline+DDBS+AGBG | 0.867 | 0.939 | 0.843 | 0.043 | 0.892 | 0.954 | 0.854 | 0.023 | 0.865 | 0.943 | 0.802 | 0.021 | 0.885 | 0.946 | 0.856 | 0.029 |
| Baseline+MDSA+DDBS+AGBG | 0.877 | 0.940 | 0.858 | 0.042 | 0.917 | 0.968 | 0.897 | 0.018 | 0.877 | 0.945 | 0.821 | 0.020 | 0.889 | 0.944 | 0.862 | 0.029 |
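The contribution of each component can be read off the ablation table by subtracting the baseline row. The short sketch below does this for the COD10K columns, using the values exactly as reported above. It assumes the four values per dataset are ordered as structure measure, enhanced-alignment measure, weighted F-measure, and MAE; the names `COD10K` and `deltas` are hypothetical.

```python
# COD10K values copied from the ablation table; the metric order
# (S, E, weighted F, MAE) is an assumption based on common COD practice.
COD10K = {
    "Baseline": (0.854, 0.930, 0.781, 0.024),
    "Baseline+MDSA": (0.868, 0.941, 0.805, 0.021),
    "Baseline+DDBS+AGBG": (0.865, 0.943, 0.802, 0.021),
    "Baseline+MDSA+DDBS+AGBG": (0.877, 0.945, 0.821, 0.020),
}


def deltas(variant, reference="Baseline"):
    """Per-metric difference between a variant and the reference row."""
    return tuple(
        round(v - r, 3) for v, r in zip(COD10K[variant], COD10K[reference])
    )


if __name__ == "__main__":
    for name in COD10K:
        if name != "Baseline":
            print(name, deltas(name))
```

Positive differences in the first three entries and a negative difference in the last entry both indicate an improvement, since the last metric is an error.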

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, P.; Zhao, Y.; Hu, Z. Boundary-Aware Camouflaged Object Detection via Spatial-Frequency Domain Supervision. Electronics 2025, 14, 2541. https://doi.org/10.3390/electronics14132541
