Quantifying Cyber Resilience: A Framework Based on Availability Metrics and AUC-Based Normalization

Abstract

1. Introduction

2. Related Research

2.1. Recent Trends and Emerging Directions in Cybersecurity Research

2.2. Trends in Cyber Resilience Policies and Frameworks

2.3. Research on ICT and Web Service Quality Metrics

2.4. Cyber Resilience Quantification Models and Simulation-Based Research

3. Criteria for Selecting Quantitative Metrics

3.1. The Necessity of Criteria for Selecting Quantitative Metrics

3.2. Selection Criteria for Quantitative Cyber Resilience Metrics

3.2.1. Objectivity

3.2.2. Reproducibility

3.2.3. Scalability

3.2.4. Practicality

3.2.5. Resilience Representation

3.3. Complementary Metric Design Criteria

ME and CE Principles

4. Process for Selecting Quantitative Metrics

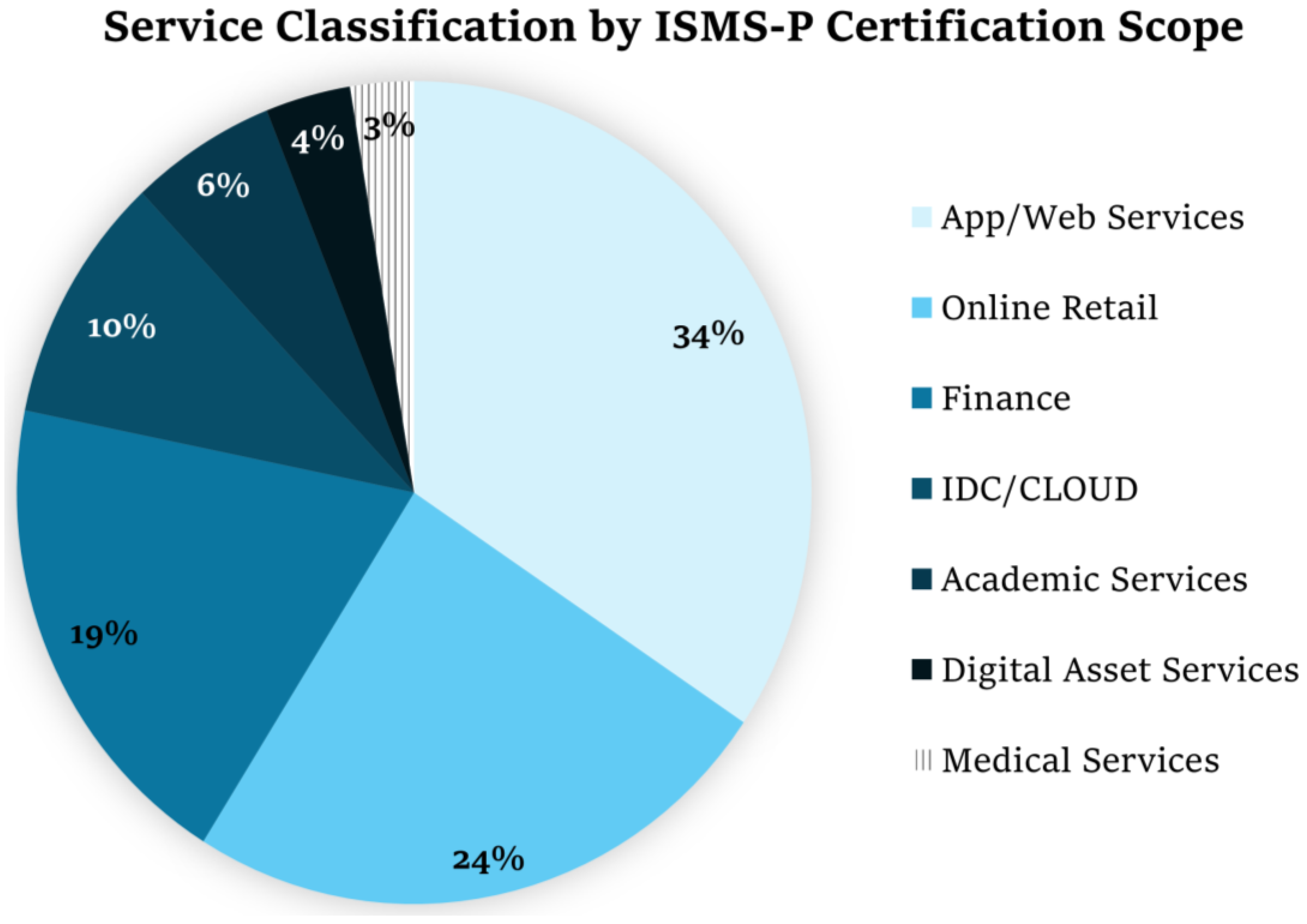

4.1. Selection of Target Services

4.2. Identification of Key Services and Candidate Quantitative Metrics

- Preliminary Metric Selection: A literature review and sector case analyses were conducted to identify metrics aligned with the resilience attributes required in key industries such as finance, public services, and healthcare.

- Derivation of Candidate Metrics: Based on the analysis, we derived a list of candidate metrics that reflect real-world operational characteristics.

4.3. Incorporation of Resilience Quantitative Metrics Selection Criteria

4.4. Application of Complementary Design Criteria for Metrics Selection

4.4.1. Mutually Exclusive (ME)

4.4.2. Collectively Exhaustive (CE)

4.5. Final Selection of Quantitative Metrics

5. Normalization of Cyber Resilience Metrics

5.1. Necessity of Normalizing Quantitative Metrics

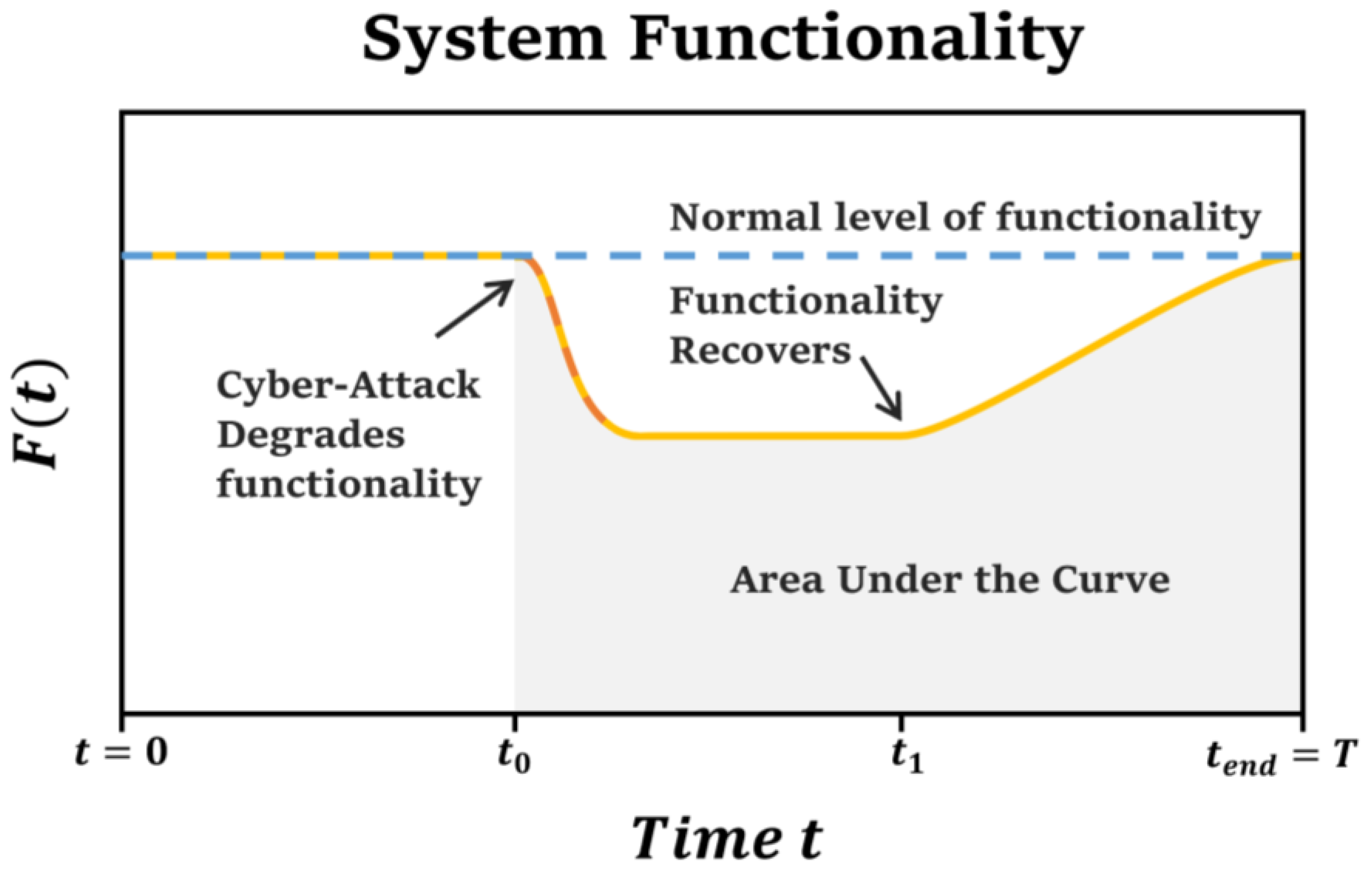

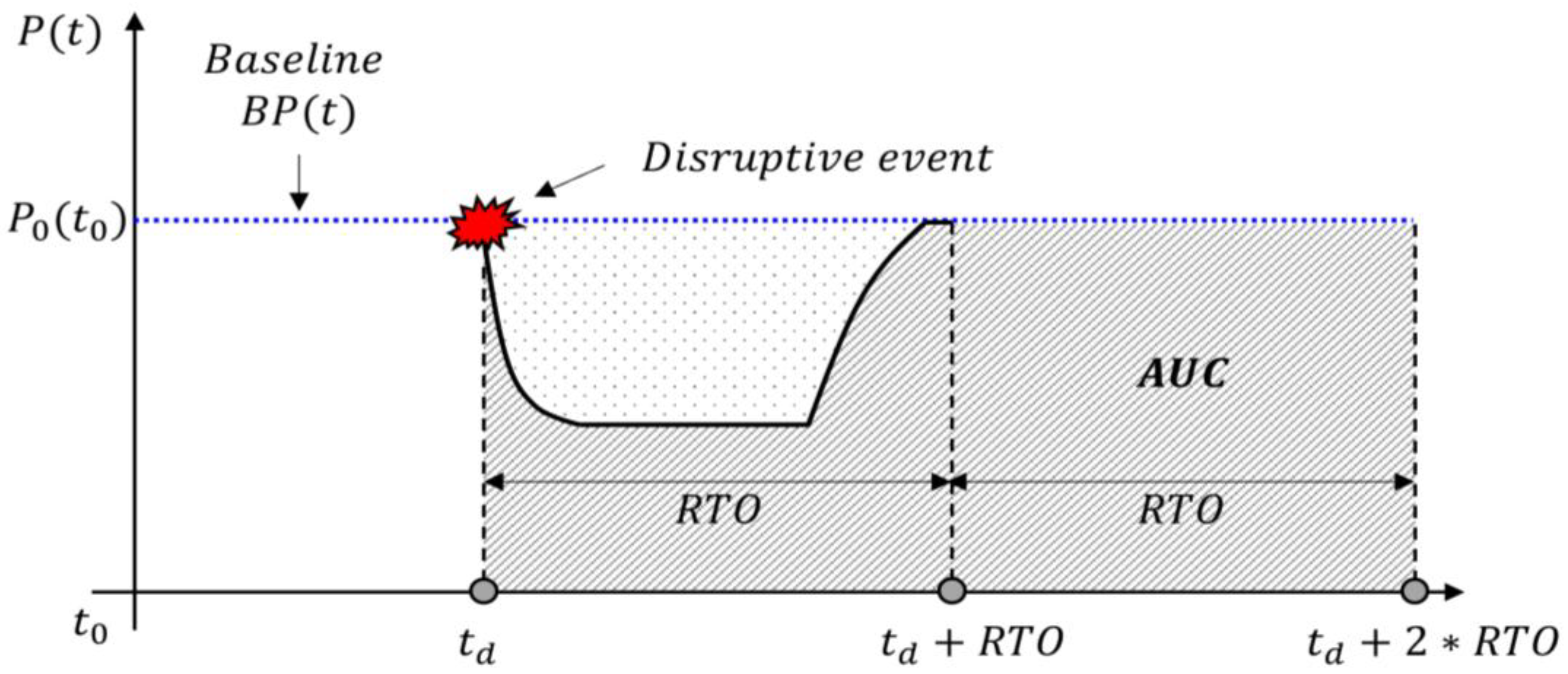

5.2. AUC-Based Normalization Evaluation

6. Demonstration of Cyber Resilience Metrics

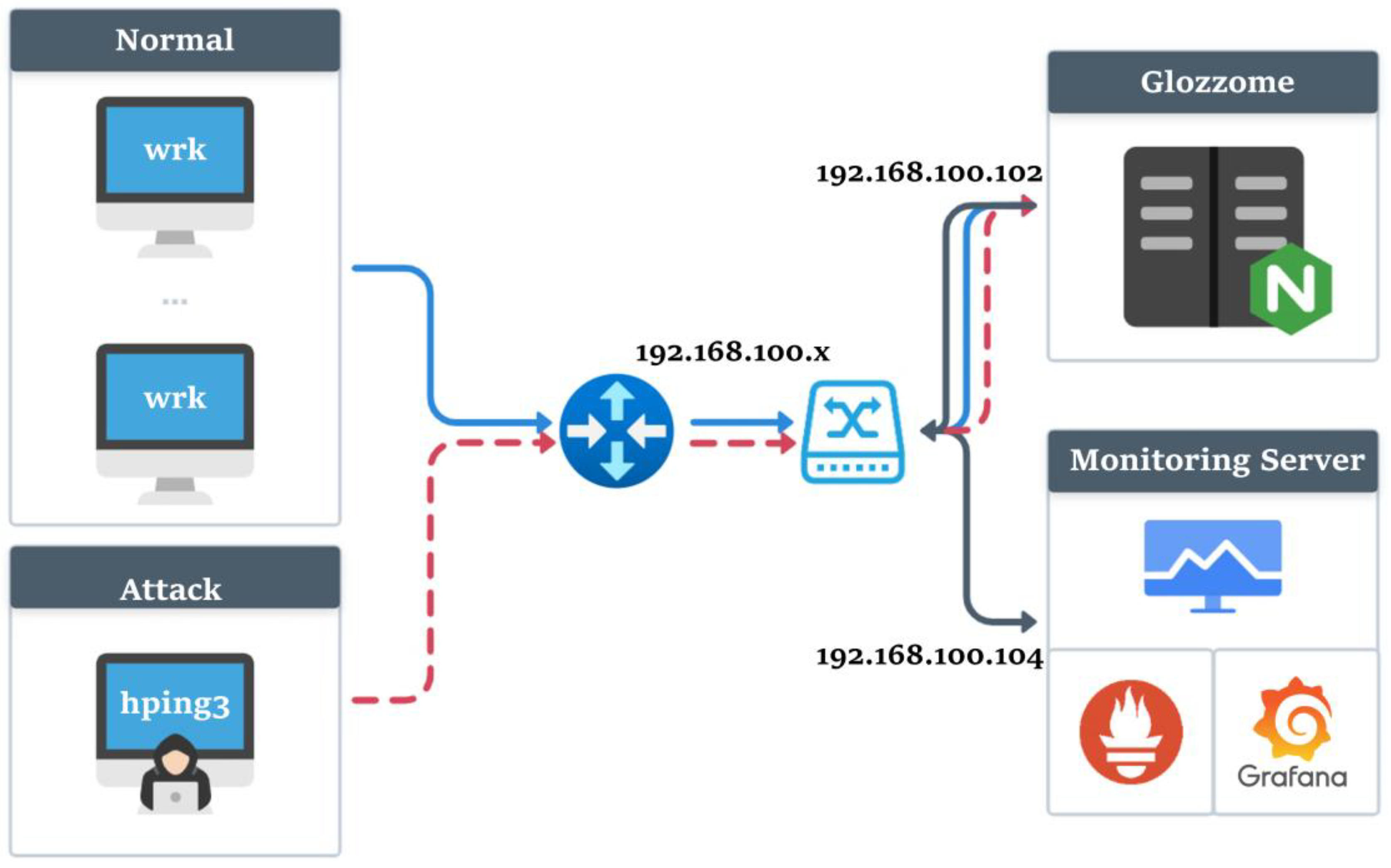

6.1. Experimental Environment

6.2. Experimental Process

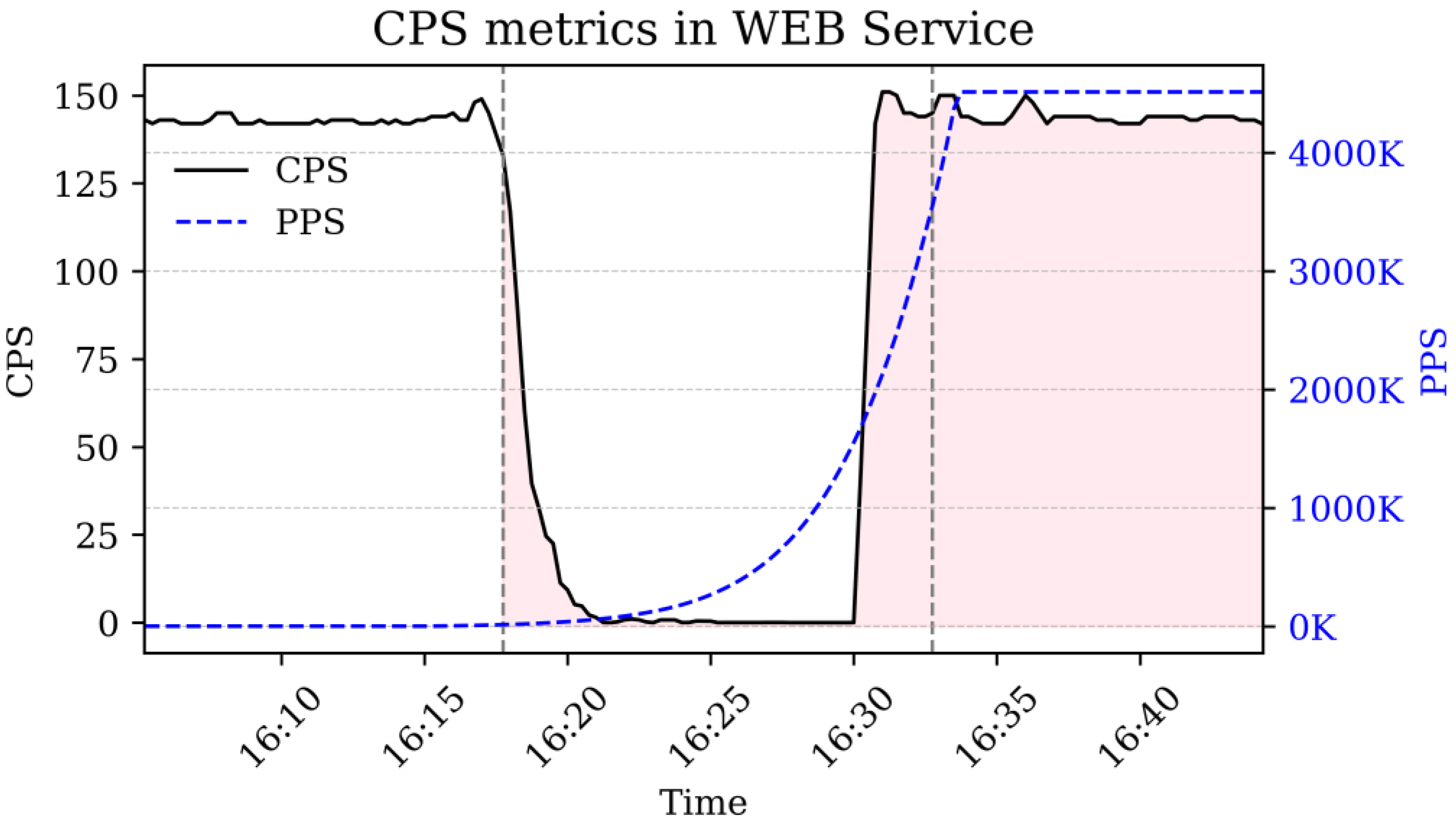

6.2.1. Normal Service Phase (Normal)

6.2.2. Initial Attack Phase (Survive)

6.2.3. Sustained Attack Phase (Sustain)

6.2.4. Defense and Recovery Phase (Recovery)

6.3. Normalized Resilience Index

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Service Type | Classification Criteria | Key Resilience Requirements |
|---|---|---|
| App/Web Service | Public Portals, Content Services, Communities, ERP | Fast response and page loading, Scalability, Data integrity |
| Online Retail | E-commerce, Payment Systems, Logistics Integration Platforms | Service availability, Transaction integrity and reliability, Prompt response, Scalability, Security |
| Academic Service | LMS, Academic Administration, Examination Systems | Concurrent user handling, Stable performance, Data accuracy/integrity |
| IDC/Cloud | IaaS, PaaS, CDN, DNS, NMS | Automatic fault recovery and prompt restoration, Network quality assurance, Scalability, Security |
| Digital Asset Service | Blockchain Exchange, Smart Contracts, Wallet Service | Transaction integrity and consistency, Prompt transaction processing, Scalability, Security |
| Finance | Internet Banking, Card Payments, Securities Trading, Insurance Information | Data integrity/accuracy, Low latency and stable response, Security, Prompt recovery |
| Medical Service | EMR, Telemedicine, HIS | Data integrity/accuracy, Security, Real-time performance, Prompt fault recovery |

| No. | Metric | App/Web Service | Online Retail | Academic Service | IDC/Cloud | Digital Asset Service | Finance | Medical Service |
|---|---|---|---|---|---|---|---|---|
| 1 | Connection Success Rate | O | O | O | | | | |
| 2 | CPS | O | O | O | O | | | |
| 3 | Latency | O | O | O | O | | | |
| 4 | Throughput | O | O | | | | | |
| 5 | DataLossRate | O | O | O | O | O | O | O |
| 6 | Authentication | O | O | O | O | O | O | |
| 7 | TPS | O | O | O | O | O | O | O |
| 8 | Capacity | O | O | O | O | | | |
| 9 | Response Time | O | O | O | O | O | O | |
| 10 | MTTR | O | O | O | O | O | O | O |
| 11 | CC | O | O | O | O | | | |
| 12 | Jitter | O | O | O | O | | | |
| 13 | Packet Loss | O | O | | | | | |
| 14 | End-to-End Delay | O | | | | | | |
| 15 | Provisioning Time | O | | | | | | |
| 16 | Failure Detection Time | O | O | O | O | O | | |
| 17 | Page Load Time | O | O | O | O | | | |
| 18 | Purchase Completion Rate | O | | | | | | |
| 19 | Test Submission Success | O | | | | | | |

| No. | Metric | Objectivity | Reproducibility | Scalability | Practicality | Resilience Representation | ME | Duplicated | Selected |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Connection Success Rate | O | O | O | O | △ | X | CPS | |
| 2 | CPS | O | O | O | O | O | X | CPS | O |
| 3 | Latency | O | △ | O | O | O | X | Response Time | |
| 4 | Throughput | O | O | O | O | O | X | Throughput | O |
| 5 | DataLossRate | O | △ | O | △ | △ | X | Packet Loss Rate | |
| 6 | Authentication | O | O | O | O | O | O | - | O |
| 7 | TPS | O | O | O | O | O | X | TPS | O |
| 8 | Capacity | X | X | X | X | X | X | Throughput | |
| 9 | Response Time | O | O | O | O | O | X | Response Time | O |
| 10 | MTTR | O | O | O | O | O | O | MTTR | O |
| 11 | CC | O | O | O | O | O | O | - | O |
| 12 | Jitter | X | X | O | △ | △ | X | Response Time | |
| 13 | Packet Loss Rate | O | △ | O | O | O | X | Packet Loss Rate | O |
| 14 | End-to-End Delay | O | △ | O | O | O | X | Response Time | |
| 15 | Provisioning Time | O | O | O | O | O | O | MTTR | O |
| 16 | Failure Detection Time | O | O | O | O | O | O | - | O |
| 17 | Page Load Time | O | O | O | O | △ | X | Response Time | |
| 18 | Purchase Completion Rate | O | O | O | O | △ | X | TPS | |
| 19 | Test Submission Success | O | O | △ | △ | △ | X | TPS | |

| No. | Metric | Definition | Formula | Unit |
|---|---|---|---|---|
| 1 | Failure Detection Time | Time elapsed from the occurrence of a system failure until it is detected. | Detection Time − Failure Occurrence Time | sec |
| 2 | Packet Loss Rate | The ratio of lost packets to the total packets transmitted over the network. | ((Number of Transmitted Packets − Number of Received Packets)/Number of Transmitted Packets) × 100 | % |
| 3 | CPS | The number of new connections that a system or device (e.g., load balancer) can establish per second. | Total Number of Connections/Time (seconds) | connections/s |
| 4 | Response Time | The total time from when the client sends a request to when it receives a response from the server. | Response Received Time − Request Sent Time | ms |
| 5 | Throughput | The amount of data successfully transmitted or processed through the system over a specified period, measured in bits per second. | Data Transferred/Time | bps |
| 6 | Authentication | The ratio of successful authentications (logins) without error or delay to the total number of authentication attempts. | (Number of Successful Authentication Attempts/Total Number of Authentication Attempts) × 100 | % |
| 7 | TPS | The number of transactions the system can process per second, indicating the transactions executed within a given time interval. | Total Number of Transactions/Time (seconds) | requests/s |
| 8 | MTTR | Mean Time to Recovery: the average time required to restore the system to its pre-failure (normal operating) state following a system failure. | Total Recovery Time/Number of Failures | time |
| 9 | CC | The number of active connections (sessions) the system can maintain concurrently. | Number of Concurrent Sessions | cnt |
| 10 | Provisioning Time | A metric reflecting how quickly and completely a virtual machine (VM) in a clustered environment recovers after a failure. | (Number of Recovered Resources/Number of Resources Affected by the Failure) × 100 | % |
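
The formulas in the table above are simple enough to express directly in code. The following Python sketch is illustrative only: the function names, argument names, and input units (seconds for timestamps, bits for data volume) are assumptions made for this example and are not taken from the authors' measurement tooling.

```python
from typing import Sequence


def percentage(part: float, total: float) -> float:
    """(part / total) x 100; shared form of Authentication and Provisioning Time."""
    if total <= 0:
        raise ValueError("total must be positive")
    return part / total * 100.0


def rate_per_second(count: float, seconds: float) -> float:
    """count / time in seconds; shared form of CPS and TPS."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return count / seconds


def failure_detection_time(failure_ts: float, detection_ts: float) -> float:
    """Detection time minus failure occurrence time, in seconds."""
    return detection_ts - failure_ts


def packet_loss_rate(transmitted: int, received: int) -> float:
    """((transmitted - received) / transmitted) x 100, in percent."""
    return percentage(transmitted - received, transmitted)


def response_time_ms(request_sent_s: float, response_received_s: float) -> float:
    """Response received time minus request sent time, converted to milliseconds."""
    return (response_received_s - request_sent_s) * 1000.0


def throughput_bps(bits_transferred: float, seconds: float) -> float:
    """Data transferred / time, in bits per second."""
    return rate_per_second(bits_transferred, seconds)


def mttr(recovery_times: Sequence[float]) -> float:
    """Total recovery time / number of failures (same unit as the inputs)."""
    if not recovery_times:
        raise ValueError("at least one failure is required")
    return sum(recovery_times) / len(recovery_times)


if __name__ == "__main__":
    # Hypothetical sample values, not measurements from the paper.
    print("Packet loss rate (%):", packet_loss_rate(1000, 990))
    print("CPS:", rate_per_second(500, 10))
    print("MTTR (s):", mttr([120.0, 60.0]))
```

Grouping CPS, TPS, and Throughput under one rate helper, and Authentication and Provisioning Time under one percentage helper, mirrors the shared structure of their formulas in the table.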

| | | | RTO | IRI (TPS) | NRI (TPS) |
|---|---|---|---|---|---|
| 16:15 | 16:17:45 | 16:31 | 5 min | 0.28 | 0.13 |
| 16:15 | 16:17:45 | 16:31 | 10 min | 0.28 | 0.41 |
| 16:15 | 16:17:45 | 16:31 | 15 min | 0.28 | 0.60 |
| 16:15 | 16:17:45 | 16:31 | 20 min | 0.28 | 0.71 |
| 16:15 | 16:17:45 | 16:45 | 5 min | 0.61 | 0.13 |
| 16:15 | 16:17:45 | 16:45 | 10 min | 0.61 | 0.41 |
| 16:15 | 16:17:45 | 16:45 | 15 min | 0.61 | 0.60 |
| 16:15 | 16:17:45 | 16:45 | 20 min | 0.61 | 0.71 |
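
As a rough illustration of what an AUC-based index over a TPS time series can look like, the Python sketch below integrates a sampled performance curve with the trapezoidal rule and divides the result by the area a constant baseline would cover over the same window. This is a generic area-ratio calculation under stated assumptions, not the IRI or NRI definition used for the table above: the function names, the constant-baseline choice, the clipping to [0, 1], and the sample series are all hypothetical.

```python
from typing import Sequence


def auc_trapezoid(times: Sequence[float], values: Sequence[float]) -> float:
    """Area under a piecewise-linear curve, computed with the trapezoidal rule."""
    if len(times) != len(values) or len(times) < 2:
        raise ValueError("need two or more samples, with matching lengths")
    area = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        if dt <= 0:
            raise ValueError("times must be strictly increasing")
        area += 0.5 * (values[i] + values[i - 1]) * dt
    return area


def auc_ratio(times: Sequence[float], values: Sequence[float], baseline: float) -> float:
    """Observed AUC divided by the AUC of a constant baseline, clipped to [0, 1].

    Assumption: the baseline is the normal-phase performance level and the
    evaluation window is the full span of `times`.
    """
    ideal = baseline * (times[-1] - times[0])
    if ideal <= 0:
        raise ValueError("baseline and window length must be positive")
    return min(1.0, max(0.0, auc_trapezoid(times, values) / ideal))


if __name__ == "__main__":
    # Hypothetical TPS samples (seconds, transactions/s); not data from the paper.
    t = [0.0, 60.0, 120.0, 180.0]
    tps = [100.0, 20.0, 20.0, 100.0]
    print("AUC ratio:", round(auc_ratio(t, tps, baseline=100.0), 3))
```

A ratio near 1 means performance stayed close to the baseline throughout the window, and a ratio near 0 means service was almost entirely lost. How the evaluation window relates to the RTO column is not specified here and would have to follow the paper's own definition.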

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cho, H.; Sung, J.-H.; Kang, H.-J.; Jang, J.; Shin, D. Quantifying Cyber Resilience: A Framework Based on Availability Metrics and AUC-Based Normalization. Electronics 2025, 14, 2465. https://doi.org/10.3390/electronics14122465