Abstract

In external inspection of electrical equipment, poor lighting conditions often cause uneven illumination, insufficient brightness, and loss of detail, which directly affect subsequent analysis. To solve this problem, a Retinex image enhancement method based on the Component Generation Network (CGNet) is proposed in this paper. It employs CGNet to estimate and generate the illumination and reflection components of the target image. The CGNet, based on UNet, integrates Residual Branch Dual-convolution blocks (RDBConv) and the Channel Attention Mechanism (CAM) to improve the feature-learning capability. Setting different numbers of network layers for the two components yields the best estimates of the illumination and reflection components. To obtain the enhancement results, gamma correction is applied to adjust the estimated illumination component, while the HSV transformation model preserves color information. Finally, the effectiveness of the proposed method is verified on a dataset of poorly illuminated images from external inspection of electrical equipment. The results show that this method not only requires no external datasets for training but also improves the detail clarity and color richness of the target image, effectively addressing poor lighting of images in external inspection of electrical equipment.

1. Introduction

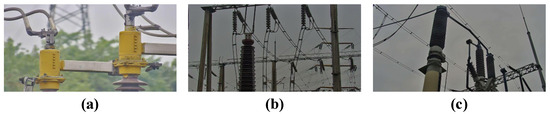

In the complex environments of electrical equipment external inspection, lighting conditions are often a critical determinant for acquiring high-quality videos or images. In scenarios such as nighttime, cloudy conditions, or rainy weather, however, insufficient natural illumination is inevitable, so captured images of electrical equipment frequently suffer from low brightness and loss of detail, as depicted in Figure 1 [1,2,3]. This severely impedes maintenance personnel and machines from accurately discerning key information within the captured images. Image enhancement technology is therefore essential for addressing these challenges.

Figure 1.

Low-illumination image samples in external inspection of electrical equipment.

In general, image enhancement techniques can be divided into three categories: traditional image enhancement methods, deep learning-based image enhancement methods, and hybrid image enhancement methods. Traditional image enhancement methods include histogram equalization, gamma correction, tone mapping, distribution-based mapping, Retinex decomposition, and dark channel prior [4]. Among these, histogram equalization enhances global or local contrast by redistributing pixel intensities [5,6], while gamma correction improves brightness and contrast by nonlinearly transforming the gray values of an image [7,8]. Tone mapping [9,10] optimizes image brightness and contrast by adjusting dynamic range, and Retinex decomposition first separates an image into reflectance and illumination components before enhancing the image by individually optimizing these components [11,12]. The dark channel prior estimates atmospheric light and transmission maps using dark channel information to precisely compensate for lighting conditions, thereby improving image quality [13,14]. However, despite these advantages, traditional image enhancement methods often struggle to effectively enhance local details. Especially in external inspection of electrical equipment with complex backgrounds, their enhancement performance remains constrained.

Recent advances in artificial intelligence have spurred significant developments in deep learning-based image enhancement techniques. Pioneering work by Lore et al. [15] introduced the Low-Light Image Enhancement Network (LLNet), a neural network architecture that employs stacked sparse denoising autoencoders to simultaneously address brightness improvement and noise suppression in low-light conditions. This framework utilizes a latent representation compressed by the encoder that is subsequently reconstructed into enhanced images through the decoder pathway. Further innovations emerged with the Multi-branch Low-Light Enhancement Network (MBLLEN) [16], an end-to-end multi-branch network that synergistically combines feature extraction, enhancement, and fusion modules to achieve superior performance. Cai et al.’s Single Image Contrast Enhancer (SICE) framework [17] represents another milestone, implementing a three-stage enhancement pipeline where dedicated subnetworks sequentially handle illumination adjustment, detail recovery, and global texture refinement. The Global Illumination Awareness and Detail-preserving Network (GLADNet) architecture [18] introduced a novel dual-component approach: initial global illumination estimation via encoder–decoder networks followed by CNN-based detail preservation. Although these supervised methods deliver impressive results, their reliance on paired low/normal-light image datasets limits practical applicability due to acquisition challenges. To address this limitation, unsupervised methods have emerged as promising alternatives. Guo et al.’s Zero-Reference Deep Curve Estimation (Zero-DCE) [19] reformulates enhancement as a curve estimation problem, where a lightweight network dynamically predicts pixel-wise mapping functions. Similarly, EnlightenGAN [20] leverages adversarial training to learn illumination transformations without paired supervision, demonstrating the potential of generative approaches in this domain.

Hybrid image enhancement methods combine traditional and deep learning approaches. Inspired by Retinex theory, several researchers have reformulated the image enhancement pipeline into subtasks including image decomposition, illumination adjustment, and reflectance enhancement, with neural networks employed to execute these components. Chen et al. [21] pioneered the Retinex-Net framework. This framework consists of two core modules. The first is a decomposition network, which separates input images into illumination and reflectance components. The second is an enhancement network, which is specifically designed to amplify brightness and suppress noise in the illumination component. Zhu et al.’s Robust Retinex Decomposition Network (RRDNet) [22] advanced this paradigm through a triple-branch fully convolutional architecture that decomposes images into distinct reflectance, illumination, and noise maps, with network weights iteratively optimized via loss function minimization. Further innovating this direction, Zhao et al. [23] developed RetinexDIP, which leverages deep networks for Retinex decomposition while strategically decoupling the interdependence between illumination and reflectance components. This approach not only enhances the derived illumination map but also significantly improves visual quality through optimized component separation.

Although existing methods have shown promising results for natural image enhancement, their direct application to electrical equipment external inspection images often introduces artifacts and color distortions. To address these domain-specific challenges, this paper proposes a Retinex-based illumination enhancement method with a component generation strategy, specifically designed for enhancing images from external inspection of electrical equipment. The proposed method consists of two key stages: Retinex decomposition and illumination enhancement. First, the Component Generation Network (CGNet) is employed to estimate and generate the target illumination and reflectance components from random noise inputs. Moreover, to further improve component estimation accuracy, we configure different numbers of CGNet layers to generate the illumination and reflection components. Next, the estimated illumination component is refined using gamma correction to achieve a balanced lighting distribution. The preliminary enhanced image is then obtained through an element-wise division of the original image by the adjusted illumination map. Finally, to preserve color fidelity, only the Value (V) channel in the HSV color space is modified, while the Hue (H) and Saturation (S) components remain unchanged, which prevents unnatural color shifts in the enhanced results. The proposed method eliminates the need for external paired image datasets as training samples and handles images with uneven or insufficient lighting in external inspection of electrical equipment. It also avoids white spots, artifacts, and other imperfections, so that the images can meet the subsequent requirements for understanding and analyzing the key components.

2. Materials and Methods

2.1. Overall Architecture

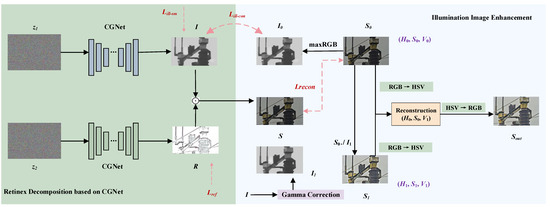

Inspired by the RetinexDIP method, we combine the Retinex image enhancement theory and deep learning techniques to obtain the illumination and reflection components in the model. As illustrated in Figure 2, the proposed algorithm comprises two core modules: Retinex Decomposition based on CGNet and Illumination Image Enhancement.

Figure 2.

An overview of the proposed method.

The proposed framework executes enhancement through the following steps. First, the CGNet architecture generates latent illumination and reflectance components, with iterative optimization governed by loss functions to achieve image decomposition based on Retinex theory. Subsequently, gamma correction is applied to the estimated illumination component, yielding the adjusted illumination map I1. The enhanced image S1 is then obtained through the element-wise division between the original image S0 and the refined illumination component I1. Both the original image S0 and the enhanced image S1 undergo RGB-to-HSV conversion, and then only the V channel is substituted while preserving H and S. The final output is produced by converting the recombined HSV image back to RGB space. The detailed algorithm steps of our model are shown in Appendix A.1.

It should be noted that our algorithm differs from conventional end-to-end deep learning models. Enhancing an image is an iterative refinement process that requires no training on a dataset. For each input image, the generation networks are optimized by minimizing the loss function until they converge to a stable state, and the enhanced image is then produced from the model output. When a new image is input, the iterative refinement process is repeated. Therefore, there is no separate network training procedure as in the conventional end-to-end deep learning style.

2.2. Retinex Decomposition Based on CGNet

In Retinex theory, each pixel value of an image can be regarded as the product of object reflectance and scene illumination, where the reflectance component represents the true color and texture of the object, while the illumination component reflects the lighting conditions of the scene. Traditional Retinex models usually use complex mathematical operations to decompose these two components, but this process is often limited by computational efficiency and accuracy.
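In symbols, the Retinex model described above can be sketched as follows. The names are assumptions chosen to match the notation used later in this paper: $S_0$ for the observed image, $R$ for the reflectance, $I$ for a single-channel illumination map, $p$ for a pixel, and $c$ for a color channel.

```latex
S_0^{c}(p) = R^{c}(p) \cdot I(p), \qquad c \in \{r, g, b\}
```

Under this reading, decomposition amounts to finding an $R$ and an $I$ whose per-pixel product reproduces $S_0$, which is an ill-posed problem without additional constraints on the two components.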

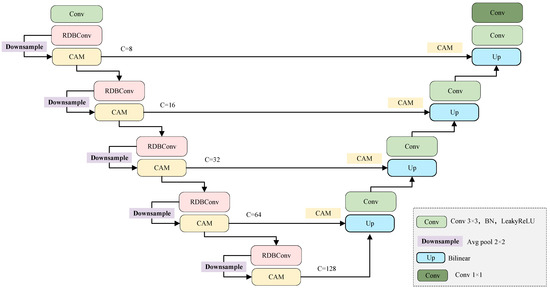

2.2.1. The Structure of CGNet

To overcome the above problems, we developed CGNet, a Component Generation Network built upon a modified UNet encoder–decoder architecture. As illustrated in Figure 3, the proposed network enhances the standard UNet framework through two key innovations: the Residual Branch Dual-convolution block (RDBConv) and the Channel Attention Mechanism (CAM). These additions strengthen the network’s ability to learn image features, enabling it to generate more realistic illumination and reflection components.

Figure 3.

Network structure of CGNet.

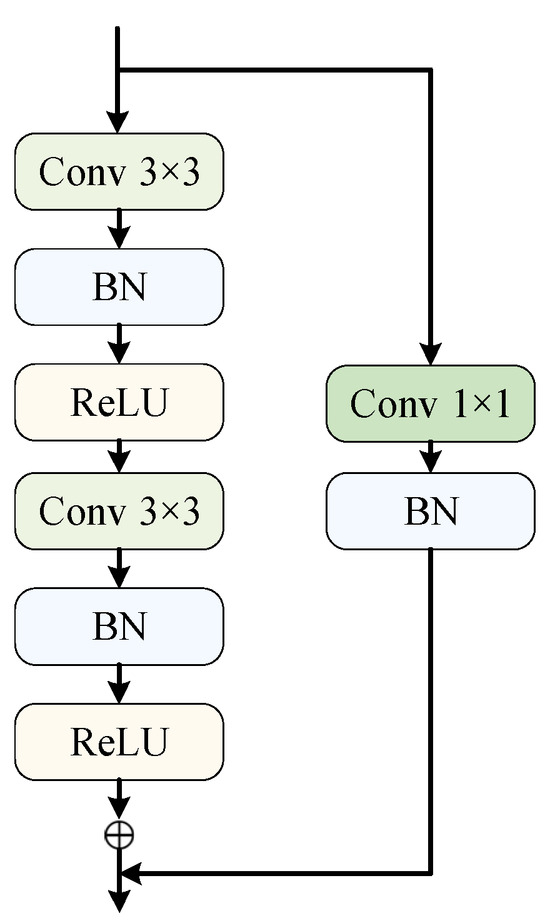

The structure of the RDBConv is shown in Figure 4. Rather than simply stacking dual-convolution layers in the encoder section, this structure embeds a residual branch composed of convolutional layers and batch normalization layers, allowing the input feature map to be passed directly to the output and thus preserving and transferring the detailed information of the input image across multiple layers. The residual connection, which routes input features past the convolutions to deeper layers, makes it easier for the model to learn useful features during optimization while preventing the performance degradation associated with network depth. This mechanism maintains robust gradient flow even in deep network configurations, improving both optimization efficiency and overall network performance. During the generation of the illumination and reflection components in particular, this architecture improves feature propagation and learning, so the information of the original image is retained more accurately. The generated components are therefore richer in features, and the enhanced image is less distorted. In this way, the network not only learns the image features better but also maintains the naturalness and authenticity of the image, which is particularly important for image enhancement.

Figure 4.

The structure of RDBConv.

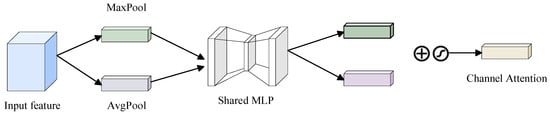

In UNet architecture, the downsampling process plays a pivotal role in shaping feature representation capabilities. This study introduces the CAM during the downsampling phase, and its structure is illustrated in Figure 5. The mechanism employs parallel global max-pooling and average-pooling operations to capture global statistical characteristics of feature channels, followed by a multilayer perceptron (MLP) to model nonlinear interdependencies among channels, thereby generating discriminative channel attention weights. This design enables dynamic modulation of channel-wise contributions during downsampling: it automatically enhances high-frequency detail features critical for image reconstruction while effectively suppressing interference from noisy or redundant channels. Such attention-guided downsampling not only preserves richer semantic information but also establishes a robust foundation for subsequent feature fusion.

Figure 5.

The structure of the CAM.

To further optimize the fusion of shallow and deep features, the CAM is also incorporated into skip connections. The mechanism first performs channel-wise recalibration on encoder output features before concatenation with decoder features. This architecture allows a more precise selection of features most relevant to the current reconstruction stage while effectively filtering out noise and irrelevant information. Consequently, the model achieves more efficient multi-scale feature integration and significant suppression of noise amplification, leading to substantially improved image quality.
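The channel reweighting performed by the CAM can be sketched in NumPy as below. This is a minimal illustration, not the authors' implementation: the text specifies parallel global max- and average-pooling followed by an MLP, while the single hidden layer with ReLU, the sharing of the MLP between both pooled vectors, the summation of the two outputs, and the sigmoid are assumptions borrowed from common CBAM-style channel attention. The function name and weight shapes are hypothetical.

```python
import numpy as np

def channel_attention(x, w1, w2):
    """Reweight the channels of a (C, H, W) feature map.

    Assumed design (not stated in the text): a shared one-hidden-layer MLP
    with ReLU is applied to both pooled vectors, the two outputs are summed,
    and a sigmoid maps the result to per-channel weights in (0, 1).
    """
    max_pool = x.max(axis=(1, 2))   # global max-pooling, shape (C,)
    avg_pool = x.mean(axis=(1, 2))  # global average-pooling, shape (C,)

    def mlp(v):
        # w1: (hidden, C), w2: (C, hidden)
        return w2 @ np.maximum(w1 @ v, 0.0)

    logits = mlp(max_pool) + mlp(avg_pool)
    weights = 1.0 / (1.0 + np.exp(-logits))  # sigmoid, shape (C,)
    return x * weights[:, None, None], weights

rng = np.random.default_rng(0)
C, H, W, hidden = 8, 16, 16, 2
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((hidden, C)) * 0.1
w2 = rng.standard_normal((C, hidden)) * 0.1
y, weights = channel_attention(x, w1, w2)
```

In a skip connection, the same operation would be applied to the encoder features before they are concatenated with the decoder features.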

It is noteworthy that we adopt differentiated network depth configurations for the illumination and reflection component generation branches, motivated by their distinct physical characteristics. Specifically, the reflection component generator employs a deeper network architecture compared to its illumination counterpart, as the former requires the enhanced capacity to preserve fine-grained texture details and color fidelity, whereas the latter utilizes shallower layers to ensure smooth transition characteristics. Detailed specifications will be presented in Section 3.5.

2.2.2. Loss Function

In the proposed component generation strategy, both component generation networks employ randomly sampled Gaussian white noise as their input, i.e., $z \sim \mathcal{N}(0, \sigma^2)$, where $\sigma$ denotes the standard deviation of the Gaussian distribution. The noise matrices are the same size as the target image, and they are randomly sampled in each iteration. The model learns to generate realistic illumination and reflectance components by minimizing an objective that consists of four loss terms: Reconstruction Loss ($L_{rec}$), Illumination-Consistency Loss ($L_{ill\text{-}con}$), Reflectance Loss ($L_{ref}$), and Illumination Smoothness Loss ($L_{ill\text{-}sm}$).

$L_{rec}$ serves as the fundamental objective, ensuring the model’s ability to faithfully recover the original input image. It penalizes the difference between the input image $S_0$ and the reconstructed image $I \circ R$, where $I$ indicates the generated illumination component, $R$ indicates the generated reflection component, and $\circ$ denotes element-wise multiplication.

$L_{ill\text{-}con}$ aims to ensure that the illumination component generated in each iteration is structurally consistent with the initial illumination, thus maintaining the coherence of the illumination distribution during the image enhancement process. It penalizes the deviation of the generated illumination $I$ from the initial illumination map $I_0$, which is defined per pixel as

$$I_0(p) = \max_{c \in \{r, g, b\}} S_0^{c}(p)$$

where $S_0^{c}(p)$ denotes the value of pixel $p$ in channel $c$ of the RGB image, and $I_0(p)$, the maximum value of pixel $p$ over the three RGB channels, is used as the initial illuminance value of that pixel. The initial illuminance values of all pixels together form the complete initial illuminance map $I_0$ of the image.
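The initial illumination map described above is simply a per-pixel maximum over the color channels, as the following NumPy sketch shows. The function names are hypothetical, and the mean-absolute-error form of the consistency term is an assumption, since the text only states that the generated illumination should stay consistent with the initial one.

```python
import numpy as np

def initial_illumination(image):
    """Per-pixel maximum over the RGB channels of an (H, W, 3) image."""
    return image.max(axis=2)

def illumination_consistency(illum, illum0):
    """Mean absolute deviation from the initial illumination (assumed L1 form)."""
    return np.abs(illum - illum0).mean()

# Two pixels of a made-up image, shape (1, 2, 3).
image = np.array([[[0.2, 0.5, 0.1], [0.9, 0.3, 0.4]]])
i0 = initial_illumination(image)            # shape (1, 2)
loss_at_start = illumination_consistency(i0, i0)
```

Here the first pixel contributes its green value and the second its red value to the initial map, and the consistency term is zero when the generated illumination equals the initial one.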

$L_{ref}$ is concerned with the quality of the generated reflection component $R$, in particular its smoothness and naturalness. This loss suppresses noise by minimizing the gradient variation of the model-generated reflection component, ensuring that it is smooth and close to the true reflection characteristics. $L_{ref}$ is computed from $\nabla R$, where $\nabla$ denotes the first-order gradient operator. To make the generated reflection component close to the ideal noise-free reflection component, we adopt the Total Variation (TV) loss as the constraint, which reduces the noise in the reflection component and makes it look more natural.

$L_{ill\text{-}sm}$ is designed to maintain the smoothness of the illumination component while avoiding the loss of image details due to excessive smoothing. It is computed from the gradients of the illumination component $I$, taken in the horizontal and vertical directions, and the effect of this loss term is controlled by imposing appropriate weights on those gradients.

Consequently, the composite loss function guiding the iterative optimization process combines all four losses through weighted summation, where $\lambda_1$, $\lambda_2$, and $\lambda_3$ represent tunable parameters balancing the contribution of each loss term.
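For orientation, one set of expressions consistent with the definitions in this subsection is sketched below. The choice of norms, the weight map $W$, the assignment of $\lambda_1$, $\lambda_2$, $\lambda_3$ to particular terms, and the symbol names are all assumptions patterned on common Retinex-based formulations such as RetinexDIP [23]; the exact expressions used by the proposed method may differ.

```latex
\begin{aligned}
L_{rec} &= \lVert S_0 - I \circ R \rVert_1 \\
L_{ill\text{-}con} &= \lVert I - I_0 \rVert_1 \\
L_{ref} &= \lVert \nabla R \rVert_1 \\
L_{ill\text{-}sm} &= \lVert W \circ \nabla I \rVert_1 \\
L &= L_{rec} + \lambda_1 L_{ill\text{-}con} + \lambda_2 L_{ref} + \lambda_3 L_{ill\text{-}sm}
\end{aligned}
```

With three weights and four terms, the reconstruction loss is taken here to have unit weight, so the remaining parameters express the strength of each regularizer relative to reconstruction fidelity.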

2.2.3. Retinex Decomposition Process

Based on Retinex theory, we propose a generative strategy that generates the illumination and reflection components separately. First, CGNet takes a random noise matrix as input and produces a rough illumination map containing lighting information and a rough reflection map containing structural details. Second, the optimization is driven by minimizing the loss functions so that the generated components comply with the requirements of Retinex theory. Third, the network parameters are updated over multiple iterations until the total loss converges, yielding the optimized illumination and reflection components. As a result, our algorithm obtains more realistic components, which produce enhanced images with fewer artifacts.
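The per-image iterative character of this process can be illustrated with a deliberately simplified toy. The sketch below is a stand-in rather than the proposed method: it optimizes the illumination and reflectance arrays directly by projected gradient descent instead of updating CGNet weights, it uses squared-error versions of only the reconstruction and illumination-consistency terms, and the function name, step size, weight `lam`, and synthetic image are all hypothetical.

```python
import numpy as np

def decompose(image, lam=0.5, lr=0.05, steps=500, seed=0):
    """Toy Retinex decomposition of an (H, W, 3) image with values in [0, 1].

    Returns a single-channel illumination (H, W), a reflectance (H, W, 3),
    and the loss recorded before each update.
    """
    rng = np.random.default_rng(seed)
    illum0 = image.max(axis=2)  # initial illumination: per-pixel channel maximum
    illum = np.clip(0.5 + 0.05 * rng.standard_normal(illum0.shape), 0.0, 1.0)
    refl = np.clip(0.5 + 0.05 * rng.standard_normal(image.shape), 0.0, 1.0)
    history = []
    for _ in range(steps):
        residual = illum[..., None] * refl - image  # I * R - S0
        loss = (residual ** 2).sum(axis=2) + lam * (illum - illum0) ** 2
        history.append(loss.mean())
        # Gradients of the per-pixel objective with respect to I and R.
        grad_illum = 2 * (residual * refl).sum(axis=2) + 2 * lam * (illum - illum0)
        grad_refl = 2 * residual * illum[..., None]
        # Gradient step followed by projection onto the valid range [0, 1].
        illum = np.clip(illum - lr * grad_illum, 0.0, 1.0)
        refl = np.clip(refl - lr * grad_refl, 0.0, 1.0)
    return illum, refl, history

rng = np.random.default_rng(1)
image = rng.uniform(0.0, 0.3, size=(8, 8, 3))  # synthetic dark image
illum, refl, history = decompose(image)
```

As in the described procedure, nothing is learned ahead of time: every new image starts a fresh optimization, and the loop stops once the loss has settled.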

2.3. Illumination Image Enhancement

In the proposed algorithm, the illumination and reflection components generated by CGNet are iteratively optimized until the loss function converges to its minimum, ensuring the accuracy of the image decomposition. Then, to obtain the final illumination-enhanced image, we carry out the following processing.

Gamma correction is first applied to the illumination component as follows:

$$I_1 = I^{\gamma}$$

where $\gamma$ denotes the correction factor, $I$ represents the illumination component, and $I_1$ denotes the adjusted illumination component, which is a single-channel (grayscale) image.
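A minimal NumPy sketch of this step is given below, assuming the illumination map is stored as floats in [0, 1] and that the correction takes the power-law form $I^{\gamma}$. The function name and the value 0.5 for the correction factor are placeholders, not the setting used in this paper.

```python
import numpy as np

def gamma_correct(illum, gamma=0.5):
    """Raise an illumination map with values in [0, 1] to the power gamma."""
    return np.clip(illum, 0.0, 1.0) ** gamma

illum = np.array([[0.04, 0.25], [0.64, 1.0]])
adjusted = gamma_correct(illum, gamma=0.5)
```

For a correction factor below 1, every value strictly between 0 and 1 is raised, with dark values moving proportionally more than bright ones, which compresses the range of the illumination map.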

Then, the following formula is applied to obtain the enhanced image $S_1$:

$$S_1^{c} = S_0^{c} \oslash I_1, \qquad c \in \{r, g, b\}$$

where $S_0$ denotes the input image, $\oslash$ denotes element-wise division, and each RGB channel of the original image $S_0$ is divided element-wise by the single-channel illumination component $I_1$. This operation is performed separately for each color channel.
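The channel-wise division can be written with NumPy broadcasting as in the sketch below. The small floor `eps` on the divisor and the final clipping to [0, 1] are safeguards added here as assumptions, and the function name is hypothetical.

```python
import numpy as np

def enhance(image, illum_adj, eps=1e-3):
    """Divide each channel of an (H, W, 3) image by a single-channel (H, W) map."""
    divisor = np.maximum(illum_adj, eps)[..., None]  # avoid division by zero
    return np.clip(image / divisor, 0.0, 1.0)

# Two pixels of a made-up image and their adjusted illumination values.
image = np.array([[[0.1, 0.2, 0.05], [0.3, 0.6, 0.9]]])
illum_adj = np.array([[0.5, 0.9]])
enhanced = enhance(image, illum_adj)
```

The first pixel, with an adjusted illumination of 0.5, is doubled in every channel, while the second, already well lit, changes only slightly.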

The obtained $S_1$ has a good enhancement effect, but its hue and chroma may be inconsistent with those of the original image $S_0$. To solve this problem, we convert $S_0$ and $S_1$ to the HSV color space, substitute only the V channel while preserving H and S, and then convert the result back to the RGB space. The details are as follows. First, the original image $S_0$ and the enhanced image $S_1$ are converted from the RGB color space to the HSV color space, giving the HSV components $(H_0, \mathrm{Sat}_0, V_0)$ and $(H_1, \mathrm{Sat}_1, V_1)$, respectively. Then, the V channel, which represents the brightness of the image, is extracted, and $V_0$ is replaced with $V_1$ to obtain the recombined HSV image $(H_0, \mathrm{Sat}_0, V_1)$. Finally, the recombined HSV image is converted from the HSV space back to the RGB space, and the resulting RGB image is used as the final output. In this way, the HSV transformation model reduces color distortion by replacing only the V channel while preserving the H and S channels of the original image.
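The V-channel substitution can be sketched with Python's standard `colorsys` module, as below. The per-pixel loop is chosen for clarity rather than speed, the function name is hypothetical, and both images are assumed to be RGB float arrays in [0, 1].

```python
import colorsys
import numpy as np

def replace_value_channel(original, enhanced):
    """Keep H and S from `original` and take V from `enhanced` (both (H, W, 3))."""
    out = np.empty_like(original, dtype=float)
    height, width, _ = original.shape
    for y in range(height):
        for x in range(width):
            h0, s0, _ = colorsys.rgb_to_hsv(*original[y, x])
            _, _, v1 = colorsys.rgb_to_hsv(*enhanced[y, x])
            out[y, x] = colorsys.hsv_to_rgb(h0, s0, v1)
    return out

# One made-up pixel before and after enhancement.
original = np.array([[[0.10, 0.20, 0.05]]])
enhanced = np.array([[[0.45, 0.80, 0.35]]])
result = replace_value_channel(original, enhanced)
```

Because hue and saturation depend only on the ratios between channels, keeping them fixed while changing V is equivalent to scaling the original RGB triple uniformly. In this example the brightness rises from 0.2 to 0.8, so the original pixel is multiplied by four.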

3. Experiments

3.1. Datasets

This study uses external inspection data of electrical equipment provided by an electric power research institute. The data cover the states of various electrical equipment, such as isolating switches, bushings, and neutral equipment, under different scenarios and lighting conditions. Images with poor lighting are classified and organized according to the corresponding scenarios and equipment types, forming a dataset of poor-lighting images in external inspection of electrical equipment.

Figure 6 shows examples of images from external inspection of electrical equipment under different lighting conditions, including backlit and twilight scenarios. Subfigure (a) displays a properly illuminated scene where the threads and colors of a disconnect switch are clearly visible. In contrast, subfigure (b) presents an underexposed scenario where the edges of a bushing appear blurred with indistinguishable color information, and subfigure (c) shows an image taken from an upward perspective, where insufficient light intake results in poor visibility of neutral equipment. For algorithm validation, we selected 85 images from the organized image dataset to establish the experimental dataset, designated as the Low-Illumination External Inspection of Electrical Equipment (LIEE) Dataset. Part of the LIEE dataset is available at: https://github.com/Fancin/CGNet_Image_Enhancement (accessed on 12 May 2025).

Figure 6.

Comparison of lighting conditions in external inspection of electrical equipment. (a) Well-illuminated scene; (b) poorly illuminated scene; (c) upward perspective with insufficient illumination.

3.2. Implementation Details

For our experiments, we established the development environment using PyTorch 2.0.0 on a Windows 11 64-bit operating system. The parameter configuration of the proposed algorithm is detailed in Appendix A.2. Specifically, the CGNet of the reflection component was configured with 6 layers, and the illumination component was configured with 4 layers. The rationale behind these architectural decisions will be comprehensively analyzed in Section 3.5.

3.3. Performance Criteria

To objectively evaluate the performance of the lighting image enhancement algorithm, this paper adopts three no-reference image quality evaluation metrics: Natural Image Quality Evaluator (NIQE), Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE), and Contrast and Energy-based Image Quality (CEIQ). The NIQE measures image naturalness by assessing image quality based on natural scene statistical features, where a lower score indicates better naturalness [24]. The BRISQUE evaluates image distortion by analyzing changes in natural image statistical characteristics, with smaller values corresponding to reduced distortion [25]. The CEIQ comprehensively considers factors such as color saturation, contrast, and brightness, where higher scores reflect minimized color distortion [26].

3.4. Comparison with Other Methods

To verify the effect of the proposed algorithm in external inspection of electrical equipment, we conducted a comparative analysis with several widely used image enhancement methods, most of which operate without reference images. The benchmark methods include LIME [27], NeurBR [28], RetinexDIP [23], SCI [29], URetinex-Net [30], and Zero-DCE [19]. All benchmark models were run with their default parameter values and the provided weights.

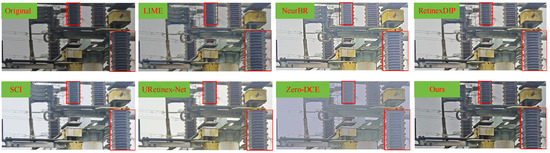

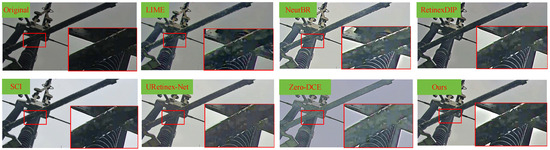

For subjective evaluation, we randomly selected test images P1 and P2 from the LIEE dataset. The enhancement results are presented in Figure 7 and Figure 8, and the critical local details are highlighted with red bounding boxes and corresponding zoomed-in insets for each result.

Figure 7.

Subjective comparison of enhancement results on Image P1. From left to right and top to bottom: the original image, the enhanced results by LIME, NeurBR, RetinexDIP, SCI, URetinex-Net, Zero-DCE, and the proposed method in this paper.

Figure 8.

A subjective comparison of the enhancement results for Image P2. From left to right and top to bottom: the original image, the enhanced results by LIME, NeurBR, RetinexDIP, SCI, URetinex-Net, Zero-DCE, and the proposed method in this paper.

Figure 7 demonstrates that in the enhanced results of LIME, NeurBR, SCI, URetinex-Net, and Zero-DCE, the edges of the bushing threads are less clearly visible than those in the images enhanced by RetinexDIP and our proposed method. Meanwhile, the overall colors of the images enhanced by NeurBR, SCI, and Zero-DCE are unnatural with color distortion: the image enhanced by NeurBR has excessively high saturation, the image enhanced by SCI appears whitish, and the image enhanced by Zero-DCE has an overall gray-pink tone. In contrast, both RetinexDIP and the algorithm in this paper perform excellently in terms of color presentation and detailed information. However, the zoomed-in insets reveal that our method achieves finer texture clarity in bushing threads compared to RetinexDIP.

Figure 8 shows the comparison of test results for Image P2. The edges of pipes in the images enhanced by LIME, NeurBR, URetinex-Net, and Zero-DCE are blurred. Additionally, color distortion remains in the images enhanced by NeurBR, SCI, and Zero-DCE. Both the algorithm in this paper and the RetinexDIP algorithm show better enhancement effects on P2 than the other five methods, demonstrating rich and clear detailed information with natural color presentation. The algorithm in this paper performs well in processing dark areas, making the threads of bushings in dark regions clearly visible.

Therefore, the images enhanced by the algorithm in this paper exhibit good visual effects, restoring the clarity of image details while improving the overall color naturalness of the images.

To further objectively evaluate the effect of the proposed algorithm, the LIEE dataset was used for testing, and the average values of objective metrics NIQE, BRISQUE, and CEIQ for different algorithms across different images were compared. The specific results are shown in Table 1. The results indicate that our algorithm ranks first in all metrics, demonstrating that the enhanced images have minimal distortion and more natural color contrast. Bold fonts in the table denote the best results in each column, where ↓ indicates that smaller values are better and ↑ indicates that larger values are better.

Table 1.

Image quality metrics of LIEE enhanced by different algorithms.

In addition, we calculated the standard deviation of each method across different images, as shown in the brackets in Table 1. Our method not only achieved the best average values in all three metrics but also exhibited lower standard deviations, indicating that our algorithm has higher stability and consistency in enhancing low-light images.

Furthermore, we used the Friedman test to assess the significance of the differences among the methods across all images. The Friedman test is a non-parametric statistical method that compares the performance rankings of different methods across multiple images to determine whether there are significant differences among them. The results of the Friedman test revealed that the p-values for NIQE, BRISQUE, and CEIQ among the seven compared methods are all significantly lower than 0.05. This indicates that there are significant differences among the seven methods. Our method achieved the best results in all three metrics, further demonstrating its superior performance in image enhancement.
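To make the test concrete, the sketch below computes the Friedman statistic from a table of per-image scores. The scores are made up for illustration and are not results from this study, the function name is hypothetical, and the ranking step assumes that no two methods tie on the same image.

```python
import numpy as np

def friedman_statistic(scores):
    """Friedman chi-square statistic for an (n_images, k_methods) score table.

    Assumes no tied scores within a row, so ranks follow from a double argsort.
    """
    n, k = scores.shape
    ranks = scores.argsort(axis=1).argsort(axis=1) + 1  # rank 1 = smallest score
    rank_sums = ranks.sum(axis=0)
    return 12.0 / (n * k * (k + 1)) * np.sum(rank_sums ** 2) - 3.0 * n * (k + 1)

# Made-up scores: 5 images, 3 methods, identical ordering in every row.
scores = np.array([
    [3.1, 4.0, 5.2],
    [2.9, 3.5, 4.8],
    [3.3, 3.9, 5.0],
    [3.0, 4.2, 4.9],
    [2.8, 3.7, 5.5],
])
stat = friedman_statistic(scores)
```

When every image ranks the methods identically, as here, the statistic reaches its maximum of n(k - 1). Under the null hypothesis it approximately follows a chi-square distribution with k - 1 degrees of freedom, from which the p-value is obtained; SciPy's `scipy.stats.friedmanchisquare` performs the complete test, including tie handling.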

Additionally, we obtained ten real-world images of electrical scenes from domain experts and invited these experts to subjectively rate the enhanced images. Specifically, Grade A indicates that the enhanced image has ideal brightness, clear detail information, and natural color. Grade B indicates that the enhanced image has moderate brightness, partially visible details, and generally natural color. Grade C indicates that the enhanced image exhibits only moderate improvement in brightness, with texture details remaining somewhat obscured and noticeable color distortion present. The results are summarized in Table 2, where each value is the number of images that received the corresponding grade. The results show that our method received more Grade A ratings and the fewest Grade C ratings, which further validates the effectiveness of our method.

Table 2.

Subjective ratings of enhanced images, as given by experts.

3.5. Impact of CGNet Depth on Image Enhancement

In the proposed image enhancement algorithm, the illumination and reflection component generation networks have similar structures but different numbers of layers. To investigate the impact of the number of layers in the CGNet on image enhancement effects and to obtain the optimal layer configuration, experiments were conducted using 40 images selected from the LIEE dataset for testing. The test results are shown in Table 3, where the subjective evaluation aims to determine whether enhanced images have undesirable issues such as white spots or artifacts from the perspective of human visual perception. The red numbers indicate the top-ranked index, and the green numbers indicate the second-ranked index.

Table 3.

Test results of layer settings for illumination and reflection CGNet.

By analyzing the data in Table 3, it is found that the proposed algorithm performs well in the three metrics under Approaches 4, 6, 7, and 8, while the metric values are relatively poor under the other approaches. This difference is attributed to the fact that the reflection component contains richer detail information, requiring a deeper network structure. Therefore, when the number of layers in the reflection CGNet exceeds that in the illumination CGNet, the algorithm achieves better enhancement performance and better metrics. Based on the experimental results and comprehensive consideration of subjective and objective evaluations, Approach 7 is selected as the optimal configuration of the algorithm in this paper.

3.6. Ablation Study

In this study, to evaluate the impact of the RDBConv and CAM module in the CGNet network architecture on image enhancement effects, an ablation experiment was conducted using the LIEE dataset. By comparing experimental results under different configurations, we can more accurately understand the specific roles of these improvements in enhancing image quality. The results are shown in Table 4, where bold fonts indicate the best results in each column.

Table 4.

Comparison of ablation experiment metrics.

As shown in the table, Experiment 1, without the RDBConv and CAM module, has the largest number of unsatisfactory images, with the worst evaluation values in NIQE and BRISQUE. When the CAM module is added in Experiment 2, the number of unsatisfactory images decreases, and the values of NIQE and BRISQUE improve, demonstrating the effectiveness of introducing the CAM module. In Experiment 3, after adding the RDBConv, all three metrics are significantly improved, and the number of unsatisfactory images is greatly reduced, verifying the effectiveness and necessity of the RDBConv. Finally, Experiment 4, combining both the RDBConv and CAM module, achieves the best overall performance, with only a marginal compromise in NIQE. Notably, none of its enhanced images is unsatisfactory, validating the efficacy and practicality of our proposed method.

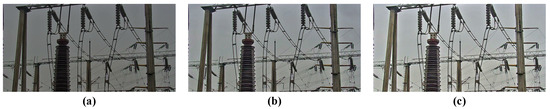

To verify the effectiveness of the HSV transformation module, a randomly selected image from the LIEE dataset was tested, as shown in Figure 9. Subfigure (a) shows that the original low-illumination image is dark overall, with blurred details. Subfigure (b) presents the result enhanced without the HSV transformation module: although brightness and saturation are moderately improved, the image remains visually dim and its details are insufficiently clear. In contrast, subfigure (c), enhanced with the HSV transformation module, exhibits significantly higher brightness, sharper details, and more natural color reproduction than subfigure (b). This comparison indicates that the HSV transformation module improves image brightness, clarity, and color restoration.

Figure 9.

Impact analysis of HSV transformation on enhancement quality. (a) Original low-illumination image; (b) enhanced result without HSV transformation; (c) enhanced result with HSV transformation.
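The idea of preserving color through an HSV transformation can be illustrated with a short sketch. It assumes that enhancement is applied to the V (value) channel only, while hue and saturation are left untouched. The helper `enhance_value_channel` and the square-root brightening function are hypothetical stand-ins, not the module used in the paper.

```python
import colorsys
import numpy as np

def enhance_value_channel(rgb, enhance_v):
    """Apply enhance_v to V only; H and S are copied through unchanged.

    rgb is a float array of shape (H, W, 3) with values in [0, 1].
    """
    out = np.empty_like(rgb)
    for i in range(rgb.shape[0]):
        for j in range(rgb.shape[1]):
            h, s, v = colorsys.rgb_to_hsv(*rgb[i, j])
            v_new = min(max(enhance_v(v), 0.0), 1.0)   # keep V inside [0, 1]
            out[i, j] = colorsys.hsv_to_rgb(h, s, v_new)
    return out

# Two dark pixels; the brightening function is only an example.
dark = np.array([[[0.10, 0.05, 0.02], [0.02, 0.08, 0.10]]])
bright = enhance_value_channel(dark, lambda v: v ** 0.5)
```

Because only V changes, the ratio between color channels is retained, which is one way to avoid the color shifts that can occur when each RGB channel is brightened independently.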

3.7. Experimental Validation on Other Datasets

To further evaluate the proposed algorithm in practical applications, we test it on four other publicly available image datasets: DICM, Fusion, LIME, and NPE. We compare its results with those of LIME, NeurBR, RetinexDIP, SCI, URetinex-Net, Zero-DCE, MBLLEN [16], LCENet [31], and Pan’s method [32]. Among these, MBLLEN, LCENet, and Pan’s method are deep learning approaches, and Pan’s method is designed specifically for underground scenarios. The experimental results are shown in Table 5.

Table 5.

Comparative BRISQUE scores across datasets.

As shown in Table 5, the proposed algorithm ranks first on the Fusion and LIME datasets, ranks second on the DICM dataset, and also performs strongly on the NPE dataset. Moreover, our method achieves the best average score across the four datasets.

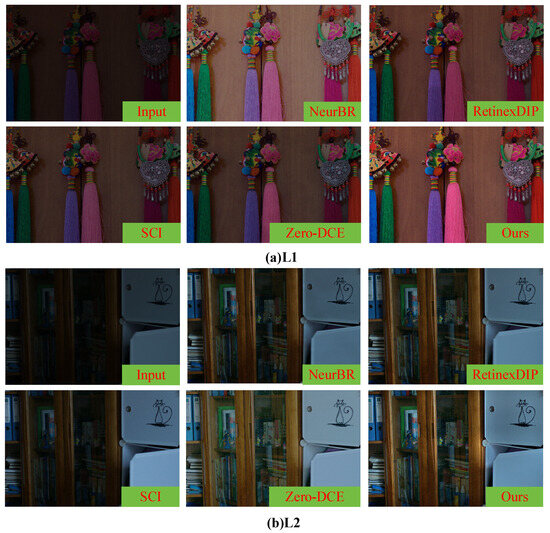

Additionally, we use the LOL dataset, which contains paired “ideal image-low light image” samples, to evaluate the performance of the different algorithms in terms of PSNR and SSIM. The results in Table 6 show that our algorithm achieves favorable results on the LOL dataset. We also select images L1 and L2 from the LOL dataset for subjective comparison. As shown in Figure 10, the images enhanced by our method exhibit the best texture sharpness and the most natural color.

Table 6.

A comparison of PSNR and SSIM metrics on the LOL dataset.

Figure 10.

Enhanced image of LOL dataset.
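For readers who want to reproduce the full-reference evaluation, PSNR can be computed directly from a reference image and an enhanced image. The sketch below uses the standard definition and assumes both images are floating-point arrays scaled to [0, 1]. The function name `psnr` is illustrative, and SSIM is omitted because it is considerably longer to implement.

```python
import numpy as np

def psnr(reference, test, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=float)
    test = np.asarray(test, dtype=float)
    mse = np.mean((reference - test) ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

# Toy example: a constant error of 0.1 gives MSE = 0.01, that is, about 20 dB.
ideal = np.zeros((4, 4, 3))
enhanced = np.full((4, 4, 3), 0.1)
score = psnr(ideal, enhanced)
```

A higher PSNR means the enhanced image is closer to the ideal image in the mean-squared-error sense.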

3.8. Discussion About Key Parameters

In this section, we discuss the key parameters of our model, focusing on the weights of the loss functions and the gamma value used for gamma correction. Following the experience of Retinex-based image enhancement methods, two of the loss terms in Equation (7) are very important to the model, so their weights are both set to 1. We therefore focus on the weights of the remaining two loss terms.

The first of these two terms constrains the generation network to produce noise-free reflectance, thereby preserving the details and textures of the image. The second distinguishes textural details from strong edges to avoid over-smoothing. We test weights of 0, 0.001, and 0.0001 for the first term and 0.1 and 0.5 for the second, and report the results of the different settings in Table 7. Group 5 performs well across all three metrics, so we set the two weights to 0.0001 and 0.5, respectively, in our algorithm.

Table 7.

The impact of key parameters.
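The weight study can be pictured as a small grid search over a weighted sum of loss terms. The sketch below is an assumption-laden illustration: the names `loss_a` to `loss_d` and `total_loss` are hypothetical placeholders for the four terms of Equation (7), and the ordering of the generated combinations is arbitrary and need not match the group numbering in Table 7.

```python
from itertools import product

def total_loss(loss_a, loss_b, loss_c, loss_d, weight_c, weight_d):
    """Weighted sum with unit weights on the two primary terms (assumed form)."""
    return loss_a + loss_b + weight_c * loss_c + weight_d * loss_d

# Candidate weights quoted in the text for the two secondary terms.
candidates_c = [0, 0.001, 0.0001]
candidates_d = [0.1, 0.5]

# Every combination that such a study could cover.
groups = list(product(candidates_c, candidates_d))
selected = (0.0001, 0.5)    # the setting adopted in the paper
```

Each pair in `groups` would correspond to one full enhancement run on the evaluation images, after which the quality metrics are compared.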

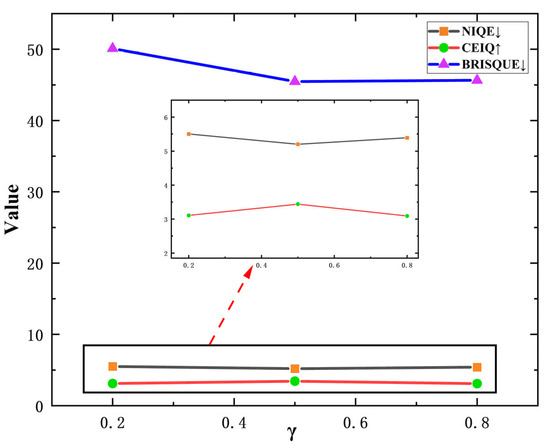

Gamma correction is a crucial step in our algorithm. As shown in Equation (8), it adjusts the illumination component generated by CGNet to achieve a more natural visual effect. A smaller gamma value increases the brightness of the illumination component, while a larger gamma value darkens it. We test gamma values of 0.2, 0.5, and 0.8 to observe their impact on image enhancement. The results are shown in Figure 11.

Figure 11.

Quantitative metrics for the LIEE dataset with different gamma values.

As shown in Figure 11, the best performance is achieved when the gamma value is 0.5. Therefore, we set the gamma value to 0.5 in our algorithm.
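The effect of the gamma value can be checked numerically. The sketch below assumes the common power-law form, in which the illumination map is raised to the power gamma and then multiplied by the reflectance. Equation (8) may differ in detail, and the function names `gamma_correct` and `reconstruct` are illustrative.

```python
import numpy as np

def gamma_correct(illumination, gamma=0.5, eps=1e-6):
    """Power-law adjustment of an illumination map with values in [0, 1]."""
    return np.clip(illumination, eps, 1.0) ** gamma

def reconstruct(reflectance, illumination):
    """Retinex recombination: per-pixel product of reflectance and illumination."""
    return np.clip(reflectance * illumination[..., None], 0.0, 1.0)

illum = np.array([[0.04, 0.25], [0.64, 1.0]])      # toy illumination map
for g in (0.2, 0.5, 0.8):                          # the three values tested in the text
    print(g, gamma_correct(illum, g).round(3))

reflectance = np.full((2, 2, 3), 0.6)              # toy reflectance, constant gray
enhanced = reconstruct(reflectance, gamma_correct(illum, 0.5))
```

For illumination values below 1, a smaller exponent yields a larger result, which matches the statement that smaller gamma values brighten the illumination component.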

3.9. Analysis About Computational Complexity and Inference Time

To evaluate computational complexity, we compared seven methods. The results are shown in Table 8, where the inference time is the mean time measured over 10 test images from the LIEE dataset. Table 8 shows that SCI, URetinex-Net, and Zero-DCE have short inference times, because these methods are trained in advance and the trained model can then be applied directly. In contrast, LIME, NeurBR, RetinexDIP, and our method require no training, so their inference times are longer. Our method also occupies slightly more memory during inference. Even so, the images enhanced by our method have clearer textures and more natural colors. Moreover, paired “ideal image–low-quality image” data are not available for images captured in electrical scenes, which makes our training-free method better suited to this application.

Table 8.

Comparison of model complexity and inference time across different methods.
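A mean inference time of this kind can be measured with a small timing helper. The sketch below is a generic illustration rather than the authors' benchmarking script: `mean_inference_time`, the warm-up count, and the dummy `enhance` callable are all assumptions.

```python
import statistics
import time

def mean_inference_time(enhance, images, warmup=1):
    """Return the mean wall-clock time in seconds of enhance(image) over images."""
    for image in images[:warmup]:
        enhance(image)                      # warm-up runs are not timed
    durations = []
    for image in images:
        start = time.perf_counter()
        enhance(image)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)

# Dummy workload standing in for an enhancement method and 10 test images.
test_images = [[0.1, 0.2, 0.3]] * 10
mean_time = mean_inference_time(lambda img: [p * 2 for p in img], test_images)
```

For methods that optimize on each input image, the timed call must include the whole optimization loop, which is why their reported times are longer than those of pre-trained models.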

4. Discussion

The proposed CGNet-based Retinex enhancement method addresses low-light conditions in external inspection of electrical equipment. Unlike conventional deep learning approaches that rely on paired training data (e.g., low-light/normal-light image pairs), our method leverages the intrinsic priors of Retinex decomposition within an unsupervised framework and requires no external datasets for training. Comparative experiments with six state-of-the-art methods demonstrate the superiority of our approach in terms of detail preservation and color fidelity. The key limitation of our method is its inference time. Unlike pre-trained deep learning models, our approach enhances each input image by optimizing the losses for that image individually, and therefore takes longer to generate the enhanced image. The advantage is that it requires no training and can directly process images captured under poor lighting. In future work, we will aim to reduce the inference time of the algorithm.

5. Conclusions

The proposed Retinex-based illumination enhancement method, which employs Component Generation Networks (CGNets), demonstrates superior performance in external inspection of electrical equipment. Built upon a UNet backbone, the CGNet architecture incorporates Residual Branch Dual-convolution Blocks (RBDConv) and a Channel Attention Mechanism (CAM) to significantly enhance feature extraction and component generation capabilities. The framework strategically applies gamma correction to optimize the estimated illumination component while utilizing HSV color space transformation to preserve color fidelity in the final enhanced output. Through comprehensive evaluation using NIQE, BRISQUE, and CEIQ metrics compared with six enhancement algorithms, the experimental results demonstrate that it reduces the average NIQE by 0.24 and BRISQUE by 4.67 compared to existing methods, indicating superior noise suppression and perceptual quality enhancement. Furthermore, the method exhibits excellent color preservation capabilities, as evidenced by an average CEIQ improvement of 0.42. Comparative analysis confirms that our approach more effectively improves the detail clarity and color richness of enhanced images, effectively addressing issues of uneven or insufficient illumination in external inspection of electrical equipment.

Author Contributions

Conceptualization, X.L. and Q.C.; methodology, J.Z. and Y.Z.; software, X.L. and J.Z.; validation, X.L. and J.Z.; formal analysis, Q.W.; investigation, Q.W. and Y.L.; resources, Q.W.; data curation, Z.Z. and Y.L.; writing—original draft preparation, X.L. and J.Z.; writing—review and editing, Q.C. and Y.Z.; visualization, J.Z.; supervision, Q.C., Y.Z., and Z.Z.; project administration, X.L. and Q.C.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Research on Image Quality Improvement Technology for Remote Intelligent Inspection Terminal in UHV Substations, grant number 522023240018.

Data Availability Statement

The datasets presented in this article are not readily available because they contain confidential monitoring images of critical power infrastructure, which are protected under industrial data security regulations. Requests to access the datasets should be directed to the corresponding author and will require formal approval from the Chongqing Electric Power Research Institute.

Conflicts of Interest

Authors Xiong Liu, Qian Wang, Zining Zhao and Yong Li were employed by the State Grid Chongqing Electric Power Company. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CGNet | Component Generation Network |
| RBDConv | Residual Branch Dual-convolution blocks |
| CAM | Channel Attention Mechanism |
| HSV | Hue, Saturation, Value |
| LIEE | Low-Illumination External Inspection of Electrical Equipment Dataset |
| NIQE | Natural Image Quality Evaluator |
| BRISQUE | Blind/Referenceless Image Spatial Quality Evaluator |
| CEIQ | Contrast and Energy-based Image Quality |

Appendix A

Appendix A.1

To illustrate the workflow and implementation details of our model more clearly, we provide the complete algorithm steps in Appendix A.1. These steps describe the entire process from inputting a low-light image to generating an enhanced image, including Retinex decomposition based on CGNet, illumination enhancement, and the final image reconstruction. The specific steps are given in Algorithm A1:

| Algorithm A1. Detailed algorithm steps of our model |

|
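The workflow of per-image decomposition, illumination enhancement, and reconstruction can be illustrated with a deliberately simplified sketch. It is not Algorithm A1: free reflectance and illumination tensors stand in for the two CGNets, only a reconstruction loss is minimized, and Adam is written out by hand in NumPy. The function name `decompose_per_image` and all variable names are hypothetical.

```python
import numpy as np

def decompose_per_image(image, iterations=500, lr=0.01, seed=0):
    """Toy per-image Retinex fit: minimize mean((R * L - I)^2) with Adam."""
    rng = np.random.default_rng(seed)
    h, w, _ = image.shape
    params = {"R": rng.uniform(0.4, 0.6, size=(h, w, 3)),   # reflectance
              "L": rng.uniform(0.4, 0.6, size=(h, w, 1))}   # illumination
    first = {k: np.zeros_like(p) for k, p in params.items()}
    second = {k: np.zeros_like(p) for k, p in params.items()}
    beta1, beta2, eps = 0.9, 0.999, 1e-8
    losses = []
    for t in range(1, iterations + 1):
        residual = params["R"] * params["L"] - image
        losses.append(float(np.mean(residual ** 2)))
        n = residual.size
        grads = {"R": 2.0 * residual * params["L"] / n,
                 "L": 2.0 * np.sum(residual * params["R"], axis=2, keepdims=True) / n}
        for k in params:
            first[k] = beta1 * first[k] + (1 - beta1) * grads[k]
            second[k] = beta2 * second[k] + (1 - beta2) * grads[k] ** 2
            m_hat = first[k] / (1 - beta1 ** t)              # bias-corrected moments
            v_hat = second[k] / (1 - beta2 ** t)
            step = lr * m_hat / (np.sqrt(v_hat) + eps)
            params[k] = np.clip(params[k] - step, 1e-3, 1.0)
    return params["R"], params["L"][..., 0], losses

rng = np.random.default_rng(1)
low_light = rng.uniform(0.0, 0.2, size=(4, 4, 3))            # toy dark image
R, L, losses = decompose_per_image(low_light)
enhanced = np.clip(R * (L ** 0.5)[..., None], 0.0, 1.0)      # gamma 0.5, then recombine
```

The loop runs once per input image, which is the reason this family of methods needs no training data but pays for it with a longer inference time.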

Appendix A.2

In this section, we provide the training details and parameter settings of our model. These details include the optimizer configuration, learning rate scheduling and data preprocessing during training. The specific settings are summarized in the following table:

Table A1.

Network structure and training parameter settings.

| Parameter | Configuration |
|---|---|
| 1 | |
| 0.0001 | |
| 0.5 | |
| 0.5 | |
| Reflection network layer numbers | 6 |
| Illumination network layer numbers | 4 |
| Learning rate | 0.01 |
| Maximum iterations K | 500 |
| Optimizer | Adam |
| Data preprocessing | Normalize pixel values to [0,1], and resize to |
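For reproducibility, settings of this kind are often collected in a single configuration object. The sketch below records only the values that are legible in Table A1 and in Section 3.8. The class name `EnhancementConfig` and its field names are hypothetical, and the resize target and loss-weight symbols are omitted because they are not recoverable from the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EnhancementConfig:
    """Hypothetical container for the settings listed in Table A1."""
    reflection_layers: int = 6        # layers in the reflection CGNet
    illumination_layers: int = 4      # layers in the illumination CGNet
    learning_rate: float = 0.01
    max_iterations: int = 500         # maximum iterations K
    optimizer: str = "Adam"
    gamma: float = 0.5                # gamma value selected in Section 3.8
    pixel_range: tuple = (0.0, 1.0)   # inputs are normalized to [0, 1]

config = EnhancementConfig()
```

Freezing the dataclass prevents accidental changes to the settings during a run.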

References

1. Aboalia, H.; Hussein, S.; Mahmoud, A. Enhancing Power Lines Detection Using Deep Learning and Feature-Level Fusion of Infrared and Visible Light Image. Arab. J. Sci. Eng. 2024, 50, 1–13.

2. Xi, Y.; Zhang, Z.; Wang, W. Low-Light Image Enhancement Method for Electric Power Operation Sites Considering Strong Light Suppression. Appl. Sci. 2023, 13, 9645.

3. Liu, K.; Wu, Y.; Ge, Y.; Ji, S. Enhancing the Visual Effectiveness of Overexposed and Underexposed Images in Power Marketing Field Operations Using Gray Scale Logarithmic Transformation and Histogram Equalization. Appl. Math. Nonlinear Sci. 2024, 9, 1–16.

4. Sun, F.; Lyu, Z.; Lyu, Z.W. Review of low light image enhancement based on deep learning. Appl. Res. Comput. 2025, 42, 19–27.

5. Rao, B.S. Dynamic Histogram Equalization for contrast enhancement for digital images. Appl. Soft Comput. 2020, 89, 106114.

6. Hidayah, S.N.; Mat, I.N.A.; Mohd, S.H. Nonlinear Exposure Intensity Based Modification Histogram Equalization for Non-Uniform Illumination Image Enhancement. IEEE Access 2021, 9, 93033–93061.

7. Li, X.; Liu, M.; Ling, Q. Pixel-Wise Gamma Correction Mapping for Low-Light Image Enhancement. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 681–694.

8. Jeon, J.J.; Park, J.Y.; Eom, I.K. Low-light image enhancement using gamma correction prior in mixed color spaces. Pattern Recognit. 2024, 146, 110001.

9. Du, C.; Li, J.; Yuan, B. Low-illumination image enhancement with logarithmic tone mapping. Open Comput. Sci. 2023, 13, 20220274.

10. Amanda, G.C.; Barry, T.M.; David, F.G. Weibull Tone Mapping (WTM) for the Enhancement of Underwater Imagery. Sensors 2023, 23, 3533.

11. Luo, Y.; Lv, G.; Ling, J.; Hu, X. Low-light image enhancement via an attention-guided deep Retinex decomposition model. Appl. Intell. 2024, 55, 194.

12. Yin, M.; Yang, J. ILR-Net: Low-light image enhancement network based on the combination of iterative learning mechanism and Retinex theory. PLoS ONE 2025, 20, e0314541.

13. Li, D.; Shi, H.; Wang, H.; Liu, W.; Wang, L. Image Enhancement Method Based on Dark Channel Prior. In Proceedings of the 2022 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shijiazhuang, China, 22–24 July 2022; pp. 200–204.

14. Ghada, S.; Randa, A.; Arafat, A.H.; Farouk, A.R. A low-light image enhancement method based on bright channel prior and maximum colour channel. IET Image Process. 2021, 15, 1759–1772.

15. Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662.

16. Lv, F.; Lu, F.; Wu, J.; Lim, C. MBLLEN: Low-Light Image/Video Enhancement Using CNNs. Br. Mach. Vis. Conf. 2018, 220, 4.

17. Cai, J.R.; Gu, S.H.; Zhang, L. Learning a Deep Single Image Contrast Enhancer from Multi-Exposure Images. IEEE Trans. Image Process. 2018, 27, 2049–2062.

18. Wang, W.; Wei, C.; Yang, W.; Liu, J. GLADNet: Low-Light Enhancement Network with Global Awareness. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 751–755.

19. Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-Reference Deep Curve Estimation for Low-Light Image Enhancement. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1777–1786.

20. Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep Light Enhancement Without Paired Supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

21. Chen, W.; Wang, W.; Yang, W.; Liu, J. Deep Retinex Decomposition for Low-Light Enhancement. arXiv 2018, arXiv:1808.04560.

22. Zhu, A.; Zhang, L.; Shen, Y.; Ma, Y.; Zhao, S.; Zhou, Y. Zero-Shot Restoration of Underexposed Images via Robust Retinex Decomposition. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6.

23. Zhao, Z.; Xiong, B.; Wang, L.; Ou, Q.; Yu, L.; Kuang, F. RetinexDIP: A Unified Deep Framework for Low-Light Image Enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1076–1088.

24. Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

25. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-Reference Image Quality Assessment in the Spatial Domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

26. Yan, J.; Li, J.; Fu, X. No-Reference Quality Assessment of Contrast-Distorted Images using Contrast Enhancement. arXiv 2019, arXiv:1904.08879.

27. Guo, X.; Li, Y.; Ling, H. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

28. Zhao, Z.; Lin, H.; Shi, D.; Zhou, G. A non-regularization self-supervised Retinex approach to low-light image enhancement with parameterized illumination estimation. Pattern Recognit. 2024, 146, 110025.

29. Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5637–5646.

30. Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. URetinex-Net: Retinex-based Deep Unfolding Network for Low-light Image Enhancement. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5891–5900.

31. Zhou, Z.; Shi, Z.; Ren, W. Linear Contrast Enhancement Network for Low-Illumination Image Enhancement. IEEE Trans. Instrum. Meas. 2023, 72, 1–16.

32. Pan, S.; Yu, T.; Chen, W.; Tian, Z.J.; Yue, Z.W. Underground low-light self-supervised image enhancement method based on structure and texture perception. J. China Coal Soc. 2025, 50, 2310–2320.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).