User Experience-Oriented Content Caching for Low Earth Orbit Satellite-Enabled Mobile Edge Computing Networks

Abstract

1. Introduction

- We characterize each user's QoE in terms of the effective transmission rate and adopt the average QoE of users as the performance metric, since it captures how well the network satisfies users' heterogeneous content requests. Our analysis further reveals that, once interference at the user end is taken into account, the average QoE of users is determined by the content caching decisions at the satellites.

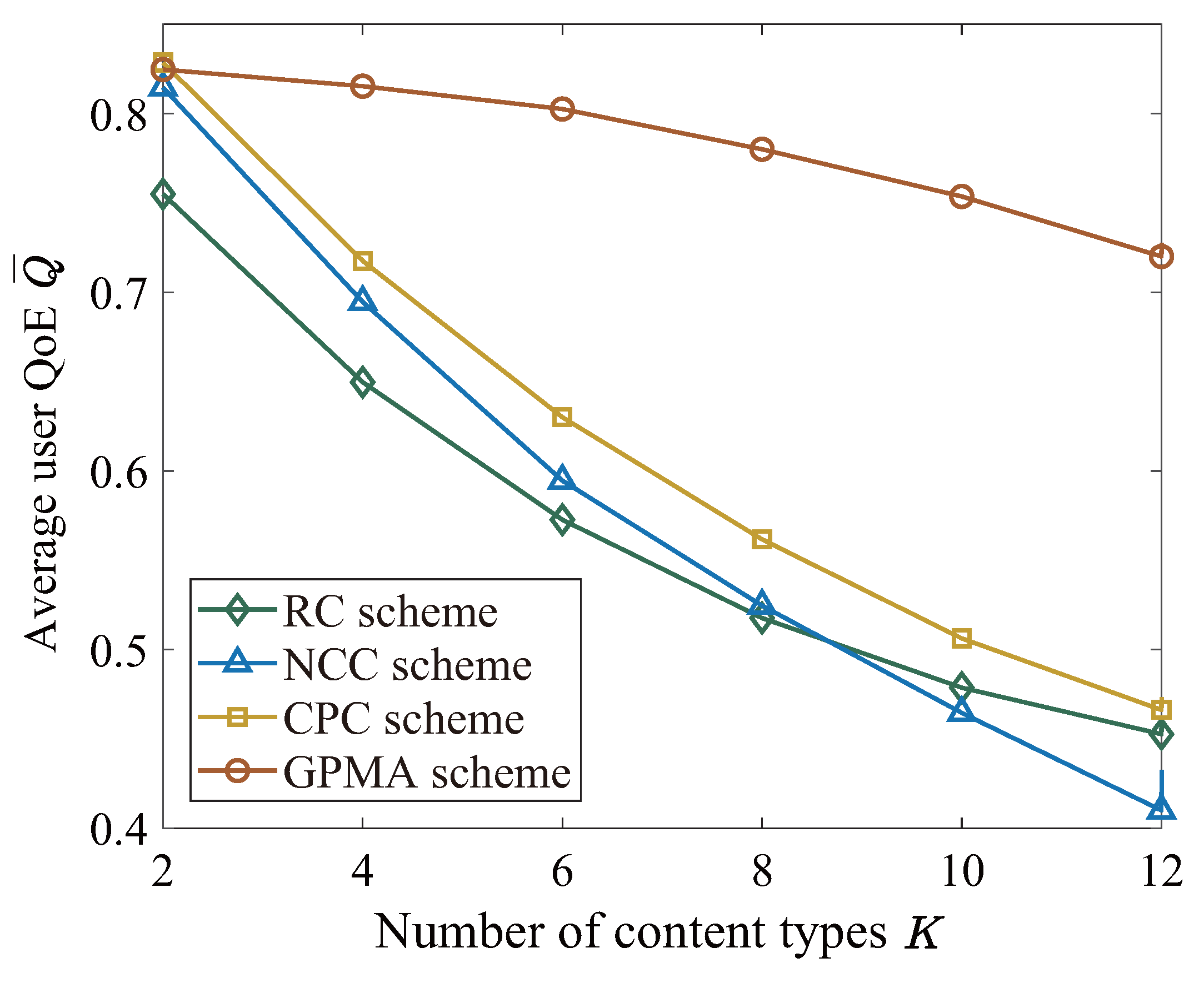

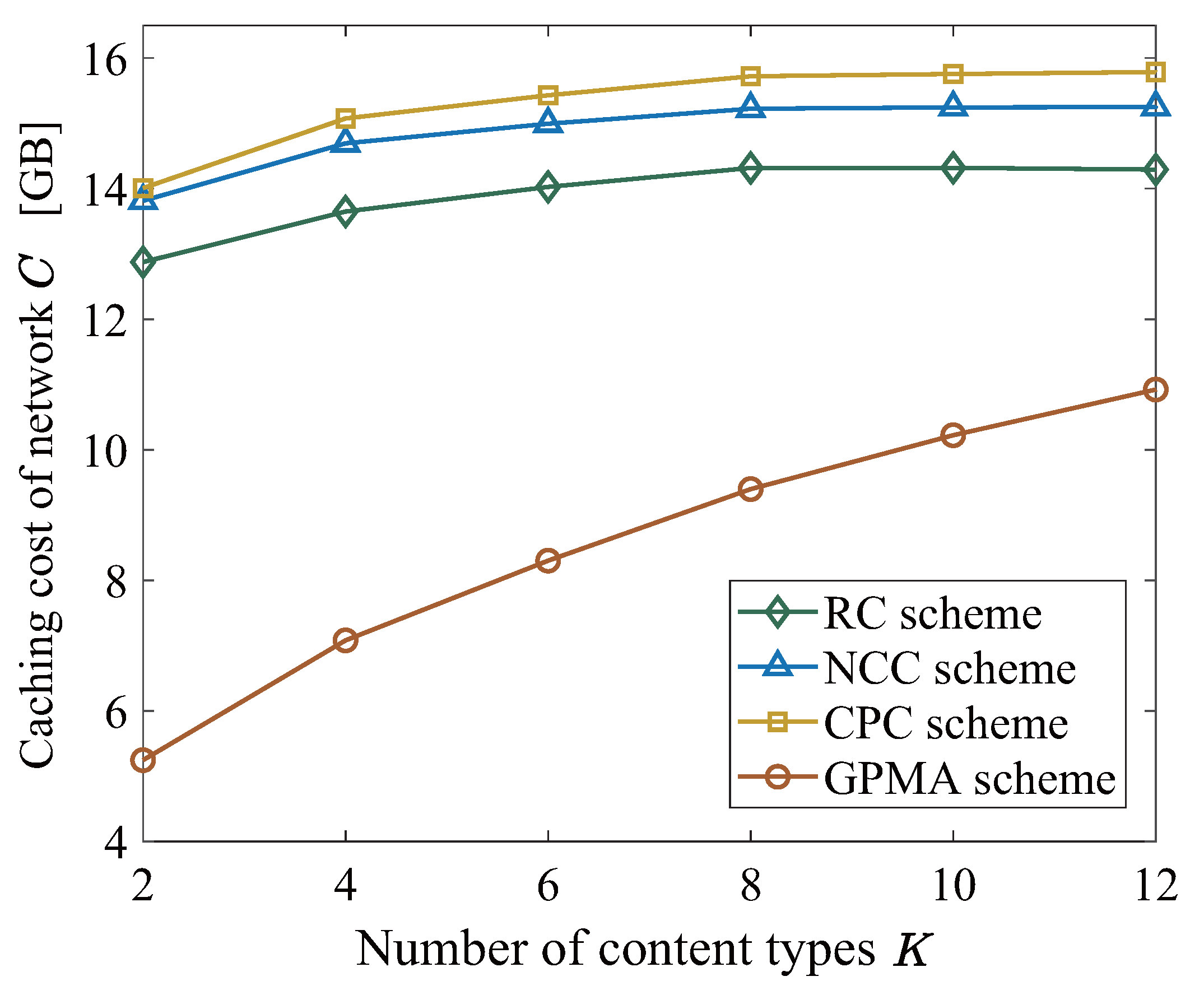

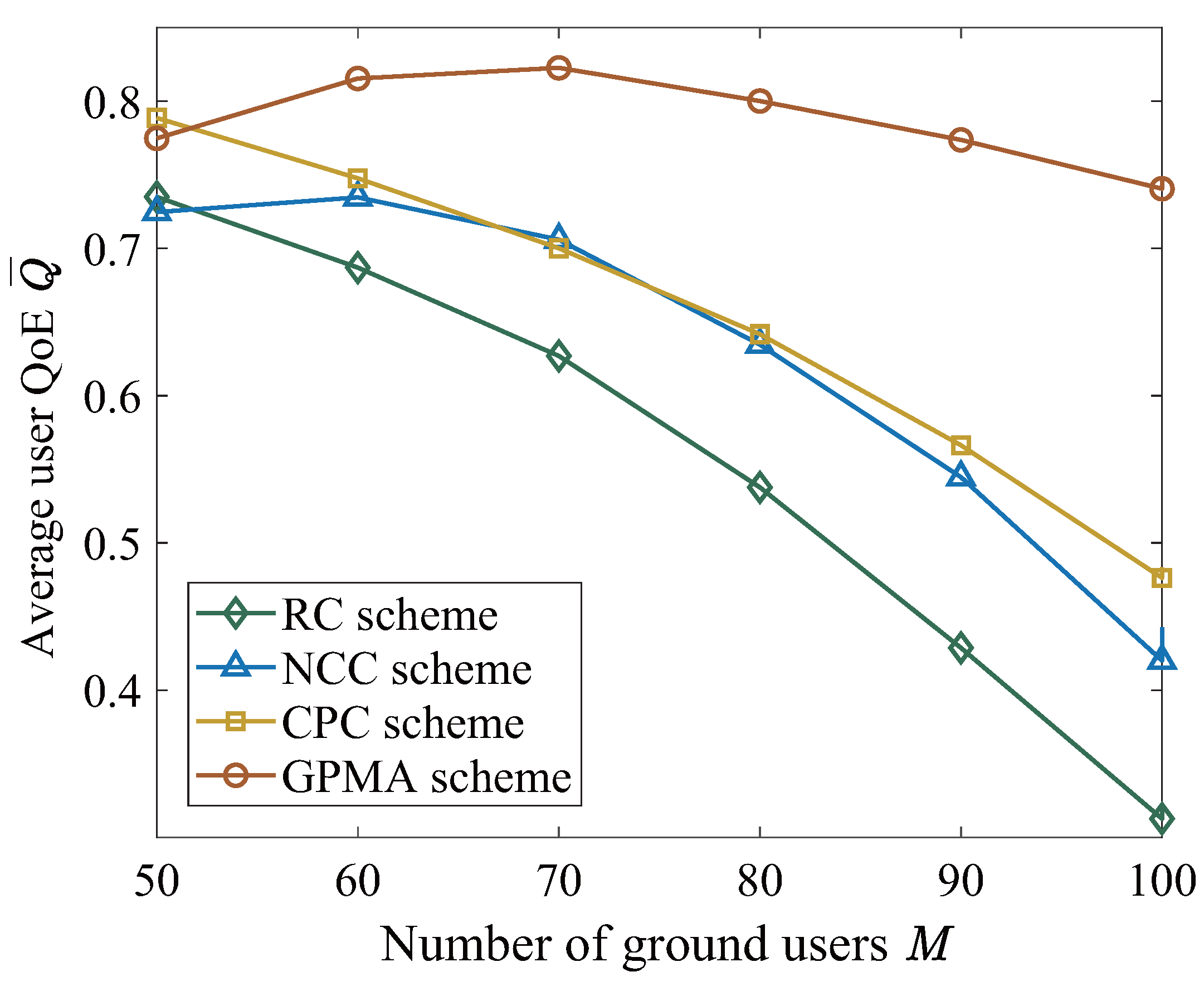

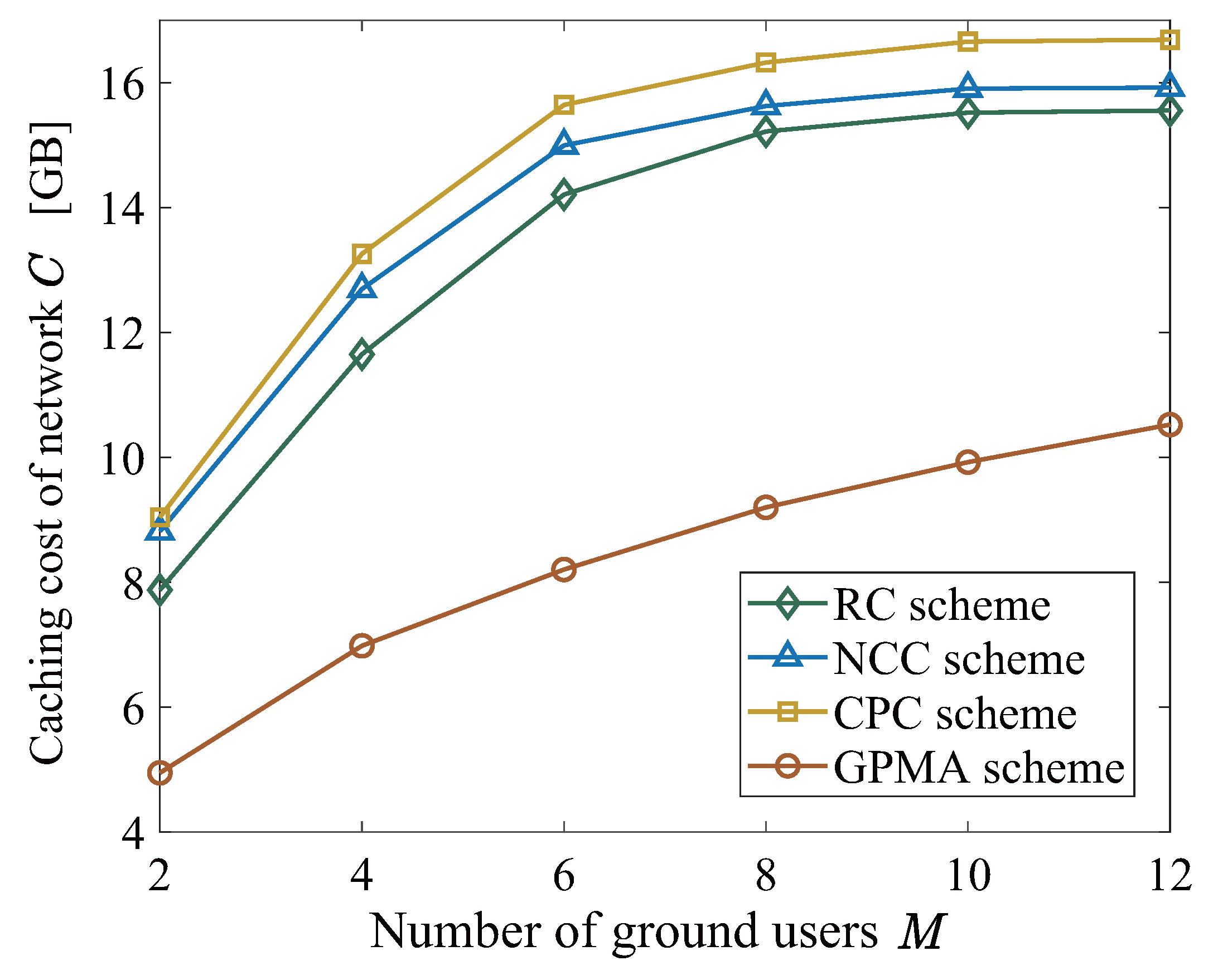

- To enhance the ability of LEO satellite-enabled MEC networks to satisfy users' content demands, we formulate an average user QoE maximization problem subject to practical constraints on the satellites' caching and computation capabilities. To solve this non-convex problem, we design a two-stage content caching algorithm based on divide-and-conquer and greedy strategies. Numerical results verify the effectiveness of the proposed algorithm through comparisons with various benchmarks, especially when the caching resources at the satellites are limited.
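The contributions above tie the average QoE to an interference-aware effective transmission rate. The exact rate and QoE expressions of Section 2.5 are not reproduced in this text, so the following is only a minimal sketch under stated assumptions: the effective rate is taken as the Shannon rate W·log2(1 + SINR), with interference summed over co-channel transmitters, and the per-user QoE uses a hypothetical IQX-style mapping (the exponential QoE–QoS form of Fiedler et al. [28]) applied to the download time of one content instance. The function names and the parameters `alpha`, `beta`, and `gamma` are illustrative assumptions, not taken from the paper.

```python
import math
from typing import Iterable, List


def dbm_to_watts(p_dbm: float) -> float:
    """Convert a power level from dBm to watts."""
    return 10 ** (p_dbm / 10) / 1000


def effective_rate(bandwidth_hz: float, tx_power_w: float, gain: float,
                   interference_w: Iterable[float], noise_w: float) -> float:
    """Assumed Shannon rate (bit/s) of one link under SINR.

    interference_w holds the received powers (W) of co-channel
    transmitters that interfere at the user end.
    """
    sinr = tx_power_w * gain / (noise_w + sum(interference_w))
    return bandwidth_hz * math.log2(1.0 + sinr)


def iqx_qoe(rate_bps: float, size_bits: float,
            alpha: float = 4.0, beta: float = 1.0, gamma: float = 1.0) -> float:
    """Hypothetical IQX-style QoE that decays exponentially with download time.

    An unserved user (rate 0) receives the limiting value gamma.
    """
    if rate_bps <= 0:
        return gamma
    download_time_s = size_bits / rate_bps
    return alpha * math.exp(-beta * download_time_s) + gamma


def average_qoe(per_user_qoe: List[float]) -> float:
    """Average QoE over all ground users."""
    return sum(per_user_qoe) / len(per_user_qoe)


if __name__ == "__main__":
    # Illustrative numbers only: the 1 MHz bandwidth and 1 W transmit power
    # mirror the parameter table; gains, interference and noise are made up.
    noise = dbm_to_watts(-90.0)
    r_clean = effective_rate(1e6, 1.0, 1e-9, [], noise)
    r_interfered = effective_rate(1e6, 1.0, 1e-9, [1e-9], noise)
    qoes = [iqx_qoe(r, 8e6) for r in (r_clean, r_interfered, 0.0)]
    print(average_qoe(qoes))
```

In this sketch a single strong interferer collapses the SINR from roughly 30 dB to about 0 dB, which lengthens the download and pulls that user's QoE toward the floor value; this is the kind of coupling between interference and caching placement the contribution describes.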

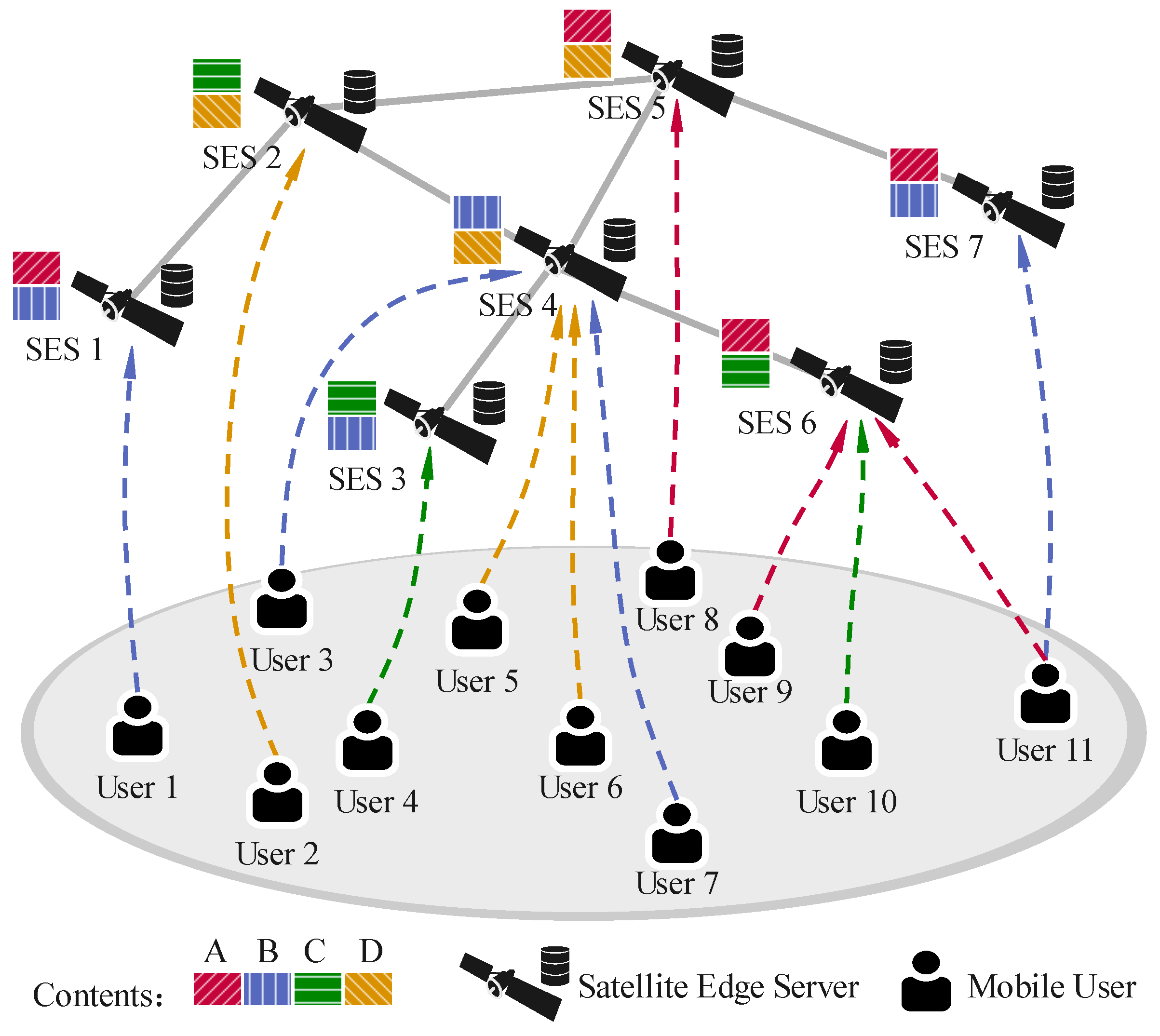

2. System Model and Problem Formulation

2.1. Network Model

2.2. Cache Model

2.3. User Access Model

2.4. Interference Model

2.5. Performance Metrics

2.6. Problem Formulation

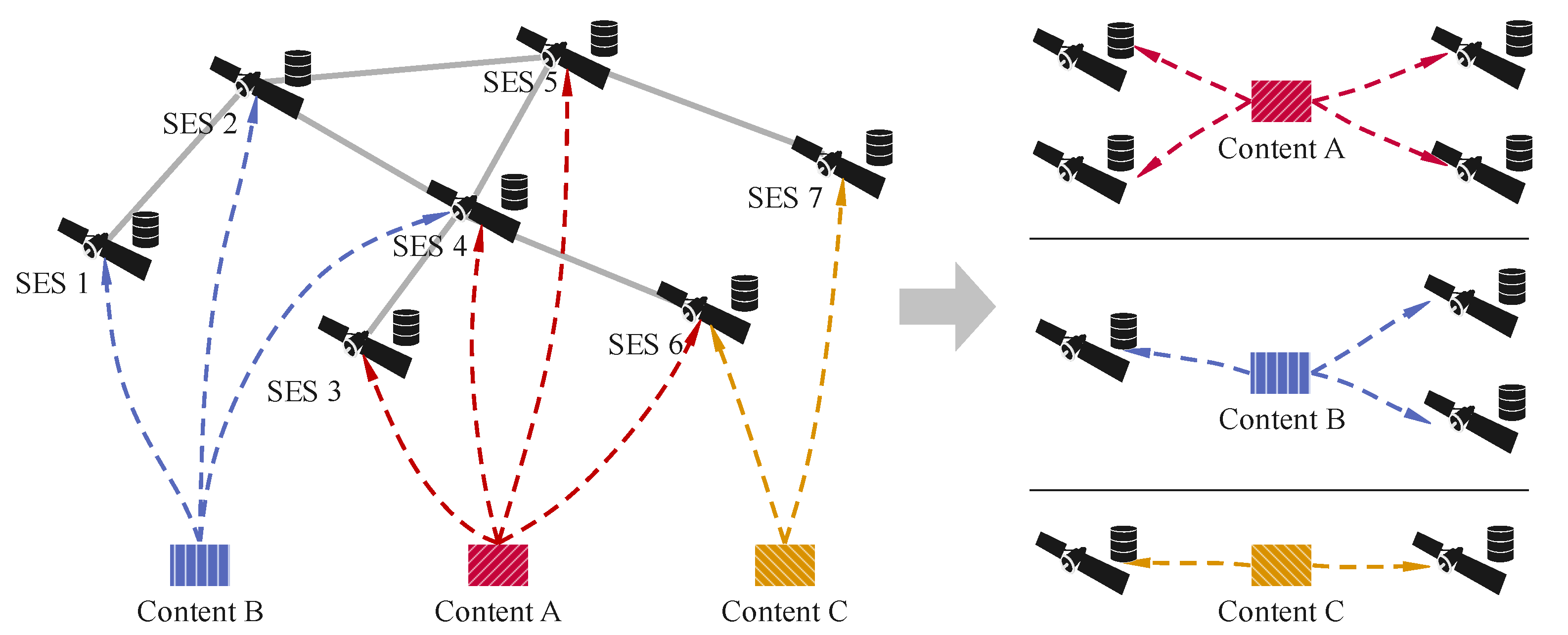

3. Proposed Satellite Edge Network Content Caching Design

3.1. A Two-Stage Content Caching Algorithm Based on Greedy Policies

- Prioritize SESs that yield a larger effective unit value for caching the content;

- Prioritize SESs that can satisfy more previously unmet user requests.
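The two greedy rules above can be illustrated with the following sketch. It is a hypothetical reconstruction, not the paper's Algorithm 1: Stage 1 treats each content independently (the divide-and-conquer step) and repeatedly picks the SES with the largest effective value over still-unmet requesters, breaking ties by the number of unmet requests it covers; Stage 2 resolves storage conflicts by keeping, on each over-full SES, the highest-utility contents that fit. The inputs `reach` (requesting users that can obtain the content from each SES), `qoe` (an assumed per SES-user value), and `utility` are illustrative data structures, and users whose copy is evicted in Stage 2 are simply left unserved rather than reassigned.

```python
from typing import Dict, List, Set, Tuple


def stage1_select(requesters: Set[int], reach: Dict[int, Set[int]],
                  qoe: Dict[Tuple[int, int], float]) -> Set[int]:
    """Greedily choose SESs caching one content until all reachable requesters are served.

    reach[s]: requesting users that can obtain the content from SES s.
    qoe[(s, m)]: assumed value of serving user m from SES s.
    """
    unmet = set(requesters)
    chosen: Set[int] = set()

    def score(s: int) -> Tuple[float, int]:
        newly = reach[s] & unmet
        # Rule 1: larger effective value first; Rule 2: more unmet requests breaks ties.
        return (sum(qoe[(s, m)] for m in newly), len(newly))

    while unmet:
        candidates = [s for s in reach if s not in chosen and reach[s] & unmet]
        if not candidates:
            break  # the remaining requesters are outside every SES's reach
        best = max(candidates, key=score)
        chosen.add(best)
        unmet -= reach[best]
    return chosen


def stage2_resolve(plan: Dict[int, Set[int]], capacity: float,
                   size: Dict[int, float],
                   utility: Dict[Tuple[int, int], float]) -> Dict[int, Set[int]]:
    """Enforce each SES's storage capacity on a per-content plan.

    plan[k]: SESs selected for content k in Stage 1.
    utility[(s, k)]: assumed utility of keeping content k on SES s.
    """
    per_ses: Dict[int, List[int]] = {}
    for k, ses_set in plan.items():
        for s in ses_set:
            per_ses.setdefault(s, []).append(k)

    result = {k: set(ses_set) for k, ses_set in plan.items()}
    for s, contents in per_ses.items():
        # Keep the highest-utility contents first.
        contents.sort(key=lambda k: utility[(s, k)], reverse=True)
        used = 0.0
        for k in contents:
            if used + size[k] <= capacity:
                used += size[k]  # content k stays on SES s
            else:
                result[k].discard(s)  # evicted: exceeds the storage capacity of s
    return result


if __name__ == "__main__":
    reach_k = {0: {1, 2}, 1: {2, 3}, 2: {3}}
    qoe_k = {(0, 1): 1.0, (0, 2): 1.0, (1, 2): 1.0, (1, 3): 1.0, (2, 3): 1.0}
    print(stage1_select({1, 2, 3}, reach_k, qoe_k))  # -> {0, 1}
```

Sorting by a `(value, count)` tuple encodes the first rule as the primary criterion and the second as a tie-breaker; weighting the two rules differently would be an equally plausible reading of the text.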

3.2. Algorithm Convergence and Complexity Analysis

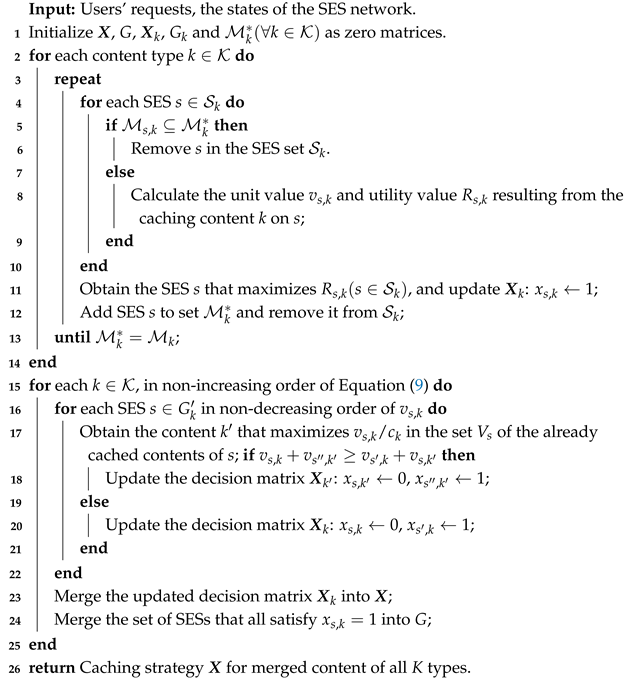

Algorithm 1: Two-stage content caching algorithm based on greedy policy.

4. Numerical Results

- Non-cooperation caching [13] (NCC): All SESs ignore potential communication with other SESs and focus solely on optimizing the QoE for ground users within their own wireless coverage areas. Each SES independently determines its content caching strategy.

- Coverage prioritization caching [29] (CPC): For each content, the algorithm greedily selects the SES that can deliver the content to the largest number of still-unsatisfied requesting users and caches an instance there, repeating until all requests for that content are fulfilled.

- Random caching [22] (RC): SESs randomly cache various content instances until the content requests of all users are covered.
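The CPC and RC baselines described above can be sketched per content as follows. This is a simplified reading of their one-line descriptions, not the implementations of [29] and [22]: storage limits are ignored, `reach` is an assumed map from each SES to the requesting users it can serve, and RC draws SESs uniformly at random, without replacement, from a seeded generator.

```python
import random
from typing import Dict, Set


def cpc_select(requesters: Set[int], reach: Dict[int, Set[int]]) -> Set[int]:
    """Coverage prioritization: repeatedly pick the SES covering the most unsatisfied requesters."""
    unmet = set(requesters)
    chosen: Set[int] = set()
    while unmet:
        best = max(reach, key=lambda s: len(reach[s] & unmet))
        if not reach[best] & unmet:
            break  # no SES can serve the remaining requesters
        chosen.add(best)
        unmet -= reach[best]
    return chosen


def rc_select(requesters: Set[int], reach: Dict[int, Set[int]],
              rng: random.Random) -> Set[int]:
    """Random caching: add randomly drawn SESs until every reachable requester is covered."""
    unmet = set(requesters)
    chosen: Set[int] = set()
    remaining = list(reach)
    while unmet and remaining:
        s = remaining.pop(rng.randrange(len(remaining)))
        chosen.add(s)
        unmet -= reach[s]
    return chosen


if __name__ == "__main__":
    reach_k = {0: {1, 2, 3}, 1: {1}, 2: {4}}
    print(cpc_select({1, 2, 3, 4}, reach_k))  # -> {0, 2}
    print(rc_select({1, 2, 3, 4}, reach_k, random.Random(0)))
```

Unlike CPC, RC may add SESs that cover no new requester, so it typically places more copies than necessary; this redundancy is one intuition for why random placement fares worse when caching resources are limited.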

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Notation | Description |
|---|---|
| | Set of SESs |
| | Set of ground users |
| | Set of content types |
| | Indicator of whether content k is requested by user m |
| | Indicator of whether SES s has content k in its cache |
| | Size of each content instance |
| | Storage capacity of an SES |
| | Indicator of the wireless coverage of a user-SES pair |
| | Indicator of whether user m can communicate with SES s |
| | Set of SESs adjacent to SES s |
| | Set of users that can access the content from SES s |
| | Effective transmission rate when receiving content from SES s |
| | Channel gain between user m and SES s |
| | Data transmission rate between SES s and user m |
| | Perceived QoE of user m |
| | Average QoE of all ground users |
| C | Caching cost of the SES network |
| | Set of SESs capable of caching content k |
| | Set of users requesting content k |
| | Set of users requesting content k from SES s |
| | Unit value of caching content k on SES s |
| | Utility value of caching content k on SES s |
| | Set of users whose requests have been satisfied |
| | Caching decisions for content k |
| | Caching decisions for all K types of content |
| G | Set of SESs that no longer have caching conflicts |
| | Set of all SESs where caching content k is obtained |
| | Set of SESs that have conflicts between G and |

References

1. Daurembekova, A.; Schotten, H.D. Opportunities and Limitations of Space-Air-Ground Integrated Network in 6G Systems. In Proceedings of the 2023 IEEE 34th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Toronto, ON, Canada, 5–8 September 2023.

2. Azari, M.M.; Solanki, S.; Chatzinotas, S.; Kodheli, O.; Sallouha, H.; Colpaert, A. Evolution of Non-Terrestrial Networks From 5G to 6G: A Survey. IEEE Commun. Surv. Tutor. 2022, 24, 2633–2672.

3. Su, Y.; Liu, Y.; Zhou, Y.; Yuan, J.; Cao, H.; Shi, J. Broadband LEO Satellite Communications: Architectures and Key Technologies. IEEE Wireless Commun. 2019, 26, 55–61.

4. Massoglia, P.; Pozesky, M.; Germana, G. The use of satellite technology for oceanic air traffic control. Proc. IEEE 1989, 77, 1695–1708.

5. Zhang, B.; Wu, Y.; Zhao, B.; Chanussot, J.; Hong, D.; Yao, J.; Gao, L. Progress and Challenges in Intelligent Remote Sensing Satellite Systems. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1814–1822.

6. Casoni, M.; Grazia, C.A.; Klapez, M.; Patriciello, N.; Amditis, A.; Sdongos, E. Integration of satellite and LTE for disaster recovery. IEEE Commun. Mag. 2015, 53, 47–53.

7. Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A Survey on Mobile Edge Computing: The Communication Perspective. IEEE Commun. Surv. Tutor. 2017, 19, 2322–2358.

8. Yang, L.; Cao, J.; Liang, G.; Han, X. Cost Aware Service Placement and Load Dispatching in Mobile Cloud Systems. IEEE Trans. Comput. 2016, 65, 1440–1452.

9. He, X.; Wang, S.; Wang, X.; Xu, S.; Ren, J. Age-Based Scheduling for Monitoring and Control Applications in Mobile Edge Computing Systems. In Proceedings of the IEEE INFOCOM 2022—IEEE Conference on Computer Communications, London, UK, 2–5 May 2022.

10. Kim, T.; Sathyanarayana, S.D.; Chen, S.; Im, Y.; Zhang, X.; Ha, S. MoDEMS: Optimizing Edge Computing Migrations for User Mobility. In Proceedings of the IEEE INFOCOM 2022—IEEE Conference on Computer Communications, London, UK, 2–5 May 2022.

11. Farhadi, V.; Mehmeti, F.; He, T.; La Porta, T.F.; Khamfroush, H.; Wang, S. Service Placement and Request Scheduling for Data-Intensive Applications in Edge Clouds. IEEE/ACM Trans. Netw. 2021, 29, 779–792.

12. Xu, J.; Chen, L.; Zhou, P. Joint Service Caching and Task Offloading for Mobile Edge Computing in Dense Networks. In Proceedings of the IEEE INFOCOM 2018—IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018.

13. Zhao, Y.; Liu, C.; Hu, X.; He, J.; Peng, M.; Ng, D.W.K. Joint Content Caching, Service Placement, and Task Offloading in UAV-Enabled Mobile Edge Computing Networks. IEEE J. Sel. Areas Commun. 2025, 43, 51–63.

14. Gao, Y.; Guan, H.; Qi, Z.; Hou, Y.; Liu, L. A multi-objective ant colony system algorithm for virtual machine placement in cloud computing. J. Comput. Syst. Sci. 2013, 79, 1230–1242.

15. Liu, Y.; Mao, Y.; Shang, X.; Liu, Z.; Yang, Y. Distributed Cooperative Caching in Unreliable Edge Environments. In Proceedings of the IEEE INFOCOM 2022—IEEE Conference on Computer Communications, London, UK, 2–5 May 2022.

16. Xie, R.; Tang, Q.; Wang, Q.; Liu, X.; Yu, F.R.; Huang, T. Satellite-Terrestrial Integrated Edge Computing Networks: Architecture, Challenges, and Open Issues. IEEE Netw. 2020, 34, 224–231.

17. Li, Q.; Wang, S.; Ma, X.; Sun, Q.; Wang, H.; Cao, S. Service Coverage for Satellite Edge Computing. IEEE Internet Things J. 2022, 9, 695–705.

18. Pasteris, S.; Wang, S.; Herbster, M.; He, T. Service Placement with Provable Guarantees in Heterogeneous Edge Computing Systems. In Proceedings of the IEEE INFOCOM 2019—IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 514–522.

19. Zhu, X.; Jiang, C.; Kuang, L.; Zhao, Z. Cooperative Multilayer Edge Caching in Integrated Satellite-Terrestrial Networks. IEEE Trans. Wireless Commun. 2022, 21, 2924–2937.

20. Tang, J.; Li, J.; Chen, X.; Xue, K.; Zhang, L.; Sun, Q. Cooperative Caching in Satellite-Terrestrial Integrated Networks: A Region Features Aware Approach. IEEE Trans. Veh. Technol. 2024, 73, 10602–10616.

21. Han, P.; Liu, Y.; Guo, L. Interference-Aware Online Multicomponent Service Placement in Edge Cloud Networks and its AI Application. IEEE Internet Things J. 2021, 8, 10557–10572.

22. Zhao, L.; Tan, W.; Li, B.; He, Q.; Huang, L.; Sun, Y. Joint Shareability and Interference for Multiple Edge Application Deployment in Mobile-Edge Computing Environment. IEEE Internet Things J. 2022, 9, 1762–1774.

23. Li, B.; He, Q.; Cui, G.; Xia, X.; Chen, F.; Jin, H. READ: Robustness-Oriented Edge Application Deployment in Edge Computing Environment. IEEE Trans. Serv. Comput. 2022, 15, 1746–1759.

24. Cui, G.; He, Q.; Chen, F.; Jin, H.; Yang, Y. Trading off Between Multi-Tenancy and Interference: A Service User Allocation Game. IEEE Trans. Serv. Comput. 2022, 15, 1980–1992.

25. Geoffrion, A.M. Generalized benders decomposition. J. Optim. Theory Appl. 1972, 10, 237–260.

26. Billionnet, A.; Soutif, É. An exact method based on Lagrangian decomposition for the 0–1 quadratic knapsack problem. Eur. J. Oper. Res. 2004, 157, 565–575.

27. Tan, W.; Zhao, L.; Li, B.; Xu, L.; Yang, Y. Multiple Cooperative Task Allocation in Group-Oriented Social Mobile Crowdsensing. IEEE Trans. Serv. Comput. 2022, 15, 3387–3401.

28. Fiedler, M.; Hossfeld, T.; Tran-Gia, P. A generic quantitative relationship between quality of experience and quality of service. IEEE Netw. 2010, 24, 36–41.

29. He, T.; Khamfroush, H.; Wang, S.; La Porta, T.; Stein, S. It’s Hard to Share: Joint Service Placement and Request Scheduling in Edge Clouds with Sharable and Non-Sharable Resources. In Proceedings of the 2018 IEEE 38th International Conference on Distributed Computing Systems (ICDCS), Vienna, Austria, 2–6 July 2018; pp. 365–375.

| Parameters | Values |
|---|---|
| Number of SESs, S | 16 |
| Number of ground users, M | |
| Number of content types, K | |
| SES storage space | 2 GB |
| Size of an instance of content k | MB |
| Wireless link rate between SESs | 40 MB/s |
| Wireless bandwidth of a subchannel, W | 1 MHz |
| Signal transmission power of a user | 1 W |
| Noise power | dBm |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, J.; Zhao, Y.; Ma, Y.; Wang, Q. User Experience-Oriented Content Caching for Low Earth Orbit Satellite-Enabled Mobile Edge Computing Networks. Electronics 2025, 14, 2413. https://doi.org/10.3390/electronics14122413

He J, Zhao Y, Ma Y, Wang Q. User Experience-Oriented Content Caching for Low Earth Orbit Satellite-Enabled Mobile Edge Computing Networks. Electronics. 2025; 14(12):2413. https://doi.org/10.3390/electronics14122413

Chicago/Turabian Style: He, Jianhua, Youhan Zhao, Yonghua Ma, and Qiang Wang. 2025. "User Experience-Oriented Content Caching for Low Earth Orbit Satellite-Enabled Mobile Edge Computing Networks" Electronics 14, no. 12: 2413. https://doi.org/10.3390/electronics14122413

APA Style: He, J., Zhao, Y., Ma, Y., & Wang, Q. (2025). User Experience-Oriented Content Caching for Low Earth Orbit Satellite-Enabled Mobile Edge Computing Networks. Electronics, 14(12), 2413. https://doi.org/10.3390/electronics14122413