Abstract

Edge intelligence is an emerging paradigm arising from the deep integration of artificial intelligence (AI) and edge computing. It allows data to remain at the edge rather than being sent to remote cloud servers, which lowers response time, saves bandwidth, and opens up new development opportunities for multi-user intelligent services (MISs). Although edge intelligence can address the problems of centralized MISs, its inherent characteristics also introduce new challenges that can lead to serious security issues. Malicious attackers may use inference attacks and other methods to obtain private information, or upload poisoned updates that corrupt the model and cause severe damage. This paper provides a comprehensive review of multi-user privacy protection mechanisms, compares the network architectures of the centralized and edge intelligence paradigms, and explores the privacy and security issues introduced by edge intelligence. We then survey state-of-the-art defense mechanisms under the edge intelligence paradigm and classify them systematically. Through experiments, we compare the privacy–utility trade-offs of existing methods. Finally, we propose future research directions for privacy protection in MISs under the edge intelligence paradigm, with the aim of advancing user privacy protection frameworks.

1. Introduction

In recent years, the continuous development of big data, edge computing [1,2], AI, and communication technologies has ushered society into a new era of intelligent information. Intelligent services now improve the lives of a growing number of users, yet multi-user scenarios pose several long-standing problems for these services. First, intelligent services rely on large numbers of users providing personal information, and users who upload personal data face serious privacy threats. Second, service providers must address adaptation challenges caused by diverse devices (such as smartphones, PCs, and smart wearables) and by users’ individual needs. The massive volume of heterogeneous data places enormous strain on the processing capabilities of intelligent systems. Third, the growing number of mobile devices in multi-user scenarios demands services that can adapt to device mobility and unstable network conditions. Where bandwidth is limited or connections are unstable, intelligent services struggle to deliver immediate responses.

Currently, AI applications are gradually shifting toward the edge, and the deep integration of edge computing and AI has established a new research domain, edge intelligence, which is receiving widespread attention from both academia and industry [3,4]. Edge intelligence is a technological paradigm in which AI algorithms run directly on edge devices to process data, without transmitting the data to the cloud or data centers for processing [5,6]. Here, the “edge” denotes any network-connected device, including smartphones, sensors, smart home devices, smartwatches, smart cameras, and other IoT devices. Multi-user intelligent services (MISs) are widely used on mobile smart devices, and their requirement for intelligent, real-time responses aligns well with the capabilities of edge intelligence. Unlike traditional cloud-based intelligence, which requires end devices to upload generated or collected data to remote clouds, edge intelligence processes and analyzes data locally; this denies malicious attackers direct access to raw user data, protects user privacy, reduces response time, and saves bandwidth [7]. In practice, edge intelligence has already been deployed in typical scenarios such as autonomous driving, intelligent security, and remote healthcare. For example, Apple’s Neural Engine (ANE), along with the large-scale models it supports (such as the on-device generative models in iOS 17), enables tasks such as speech recognition, image classification, and text generation to run locally on iPhones and iPads without relying on cloud services. Google’s Edge TPU platform allows developers to deploy TensorFlow Lite models on embedded devices for edge AI inference. Meanwhile, frameworks such as TensorFlow Lite, PyTorch Mobile, OpenVINO, and Core ML have significantly lowered the difficulty of migrating and deploying AI models to edge devices.
The emergence of edge intelligence offers a better development outlook for MISs.

During training in edge intelligence, a central server aggregates updates from edge devices to construct a shared global model. Most deep learning models are currently optimized with variants of stochastic gradient descent (SGD). Federated learning, for instance, a typical training method in edge intelligence [8], enables neural network models to be trained in a distributed manner within a mobile edge computing framework. Participating clients do not upload their local data; instead, after local training they upload updated model parameters, which the edge server aggregates, and the optimized parameters are then redistributed to the clients [9]. This approach effectively reduces the risk of users’ local data being leaked.
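The training loop described above can be sketched in a few lines. This is a minimal FedAvg-style illustration under stated assumptions, not the exact procedure of [8] or [9]: the model is a hypothetical one-parameter linear regressor, each client runs a few local gradient steps, and the server weights each client’s parameters by its number of local samples. The function names are illustrative. Only parameters, never the raw `(x, y)` pairs, leave a client.

```python
from typing import List, Sequence, Tuple

Dataset = List[Tuple[float, float]]


def local_update(params: List[float], data: Dataset, lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Client-side training on a toy model y = w * x with mean squared error."""
    w = params[0]
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad  # plain gradient descent on this client's data only
    return [w]


def fedavg(client_params: Sequence[List[float]], num_samples: Sequence[int]) -> List[float]:
    """Server-side aggregation: sample-weighted average of client parameters."""
    if not client_params or len(client_params) != len(num_samples):
        raise ValueError("need one sample count per client")
    total = sum(num_samples)
    global_params = [0.0] * len(client_params[0])
    for params, n_k in zip(client_params, num_samples):
        for i, p in enumerate(params):
            global_params[i] += (n_k / total) * p
    return global_params


def run_rounds(client_data: Sequence[Dataset], rounds: int = 10) -> List[float]:
    global_params = [0.0]
    for _ in range(rounds):
        # server broadcasts global_params; each client trains locally and returns parameters
        updates = [local_update(global_params, data) for data in client_data]
        global_params = fedavg(updates, [len(d) for d in client_data])
    return global_params


if __name__ == "__main__":
    clients = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]  # both clients follow y = 2x
    print(run_rounds(clients))  # prints a value very close to [2.0]
```

Weighting by sample count is the usual FedAvg choice; a deployment may instead use uniform weights or weights tied to data quality.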

If edge intelligence is to be widely applied in MISs, its structural characteristics will introduce new threats. These challenges can be summarized in the following two aspects:

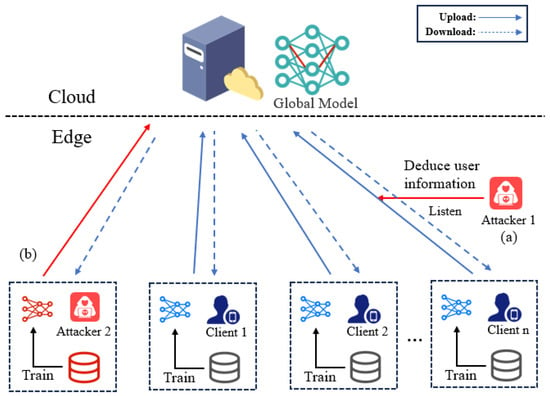

The first concerns data-centric privacy issues with respect to MISs. As shown in Figure 1(a), although the updates uploaded by each edge device typically contain little of the user’s local data, existing research shows that attackers can use techniques such as membership inference attacks or model inversion attacks to infer a user’s private data from partial information in the updates or from interaction data between users. For example, by analyzing the computational processes and shared data of multiple users, attackers may be able to infer a user’s location, activity history, preferences, and other personal information.

Figure 1.

Potential threats of MISs in the context of edge intelligence. (a) Data-centric privacy issues with respect to MISs. (b) Model-centric privacy issues with respect to MISs.

The second concerns model-centric privacy issues with respect to MISs. In multi-user scenarios, edge intelligence systems typically rely on multiple devices (such as smartphones and IoT devices) to collaboratively process data and train models. As shown in Figure 1(b), backdoor attacks can inject malicious inputs into model training, resulting in inaccurate predictions or decisions. In edge intelligence systems, such attacks can lead to unfair resource allocation and decision-making among users. By injecting malicious samples, attackers can bias the model toward specific users, undermining fairness, causing user dissatisfaction, and driving users away.

These two risks are closely interconnected, as attackers can exploit privacy leakage to acquire additional user information, which, in turn, enables them to craft more effective backdoor attacks, manipulate the model training and decision-making processes, and compromise the fairness and security of edge intelligence systems.

Existing reviews on edge intelligence primarily investigate the methods used in various application scenarios and mention the privacy and security issues it introduces only briefly. If these issues are not addressed, edge intelligence, which can bring many benefits to multi-user systems, will encounter significant practical challenges, leaking sensitive user information and compromising service reliability. This gap has prompted us to investigate the new threats that edge intelligence poses to MISs. In this paper, we study in detail the challenges facing edge intelligence in the widespread application of MISs, together with their solutions [10]. Specifically, the contributions of this paper are as follows:

- First, we compare the common centralized and edge intelligence-based frameworks for MISs and examine in detail the new challenges that edge intelligence faces.

- Second, we survey and summarize the relevant literature on MISs under edge intelligence from multiple perspectives, including privacy protection and model security protection. We analyze their strengths and weaknesses and compare these methods through experiments.

- Finally, we discuss the research directions on multi-user privacy and security in future edge intelligence frameworks from five different aspects, with the hope of providing insights for the field.

The rest of the paper is organized as follows: Section 2 introduces the preliminary concepts needed to follow this survey. Section 3 discusses the differences between MISs in the traditional and edge intelligence modes, as well as the new challenges that edge intelligence poses for MIS privacy. Section 4 summarizes the state-of-the-art methods that address these challenges and compares them through experiments. Finally, Section 5 discusses potential future research directions, and Section 6 concludes the paper.

2. Preliminaries

2.1. Privacy Protection Mechanisms

In multi-user intelligent services, researchers have proposed various methods to safeguard user privacy. According to the protection technique used, these methods fall into three main categories: encryption-based, perturbation-based, and anonymization-based methods, each with its own technologies and application scenarios. Yin previously summarized the related technologies of these mechanisms [10]. Table 1 classifies these methods, and the brief descriptions below are intended to help readers follow the content and classification criteria of Table 1.

Table 1.

Classification of common privacy protection methods.

Encryption-based mechanisms: The basic principle of encryption mechanisms is to encrypt users’ sensitive information using cryptographic methods. When users send service requests, their real data is encrypted before transmission, ensuring that even if the data is maliciously intercepted, it cannot be read. Commonly used related techniques include homomorphic encryption, space transformation, and secure multi-party computation.
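Of the techniques listed above, secure multi-party computation is the easiest to illustrate compactly. The sketch below shows additive secret sharing, one common building block for secure aggregation: each user splits an integer-encoded value into random shares, so that no single share reveals anything about the value, yet the shares of all users combine into the exact sum. The modulus `2**61 - 1` and the function names are illustrative assumptions; real protocols also handle dropouts, authentication, and fixed-point encoding of real-valued updates.

```python
import secrets
from typing import List

MODULUS = 2**61 - 1  # illustrative prime modulus; values must be encoded as integers below it


def share(value: int, n_parties: int) -> List[int]:
    """Split value into n_parties shares that sum to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares: List[int]) -> int:
    return sum(shares) % MODULUS


def secure_sum(private_values: List[int]) -> int:
    """Each party sees only random-looking shares, yet the total is exact."""
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]  # row i: shares produced by user i
    # party j adds up the j-th share from every user and publishes only this partial sum
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    return reconstruct(partial_sums)


if __name__ == "__main__":
    print(secure_sum([5, 7, 11]))  # 23
```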

Perturbation-based mechanisms: The key idea of perturbation is to add noise or variations to users’ private data. The goal is to make the private data imprecise for potential attackers while keeping statistics computed from the perturbed data close to those of the original data, so that individual sensitive information is not leaked. The three most common perturbation approaches are differential privacy, additive perturbation, and multiplicative perturbation [10].
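The three perturbation approaches can each be sketched in a few lines. The example below is a minimal illustration with hypothetical function names: the Laplace mechanism releases a count with ε-differential privacy (a count has sensitivity 1, so Laplace noise of scale 1/ε suffices), while additive and multiplicative perturbation distort each value with Gaussian noise. The noise parameters are arbitrary placeholders, not recommended settings.

```python
import random
from typing import Callable, Iterable, List, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # the difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale)
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Iterable, predicate: Callable, epsilon: float,
                  rng: Optional[random.Random] = None) -> float:
    """Laplace mechanism for a counting query (sensitivity 1)."""
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


def additive_perturb(values: List[float], sigma: float, rng: random.Random) -> List[float]:
    return [v + rng.gauss(0.0, sigma) for v in values]  # x' = x + noise


def multiplicative_perturb(values: List[float], sigma: float, rng: random.Random) -> List[float]:
    return [v * rng.gauss(1.0, sigma) for v in values]  # x' = x * noise, noise centred on 1


if __name__ == "__main__":
    rng = random.Random(0)
    print(private_count(range(1000), lambda r: r % 2 == 0, epsilon=0.5, rng=rng))
```

A smaller ε means a larger noise scale and therefore stronger privacy but lower accuracy, which is the utility cost discussed later in this survey.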

Anonymization-based mechanisms: Anonymization techniques hide users’ identity information while retaining the utility of the published data, removing identifiable information to protect user privacy. In MISs, users’ identity information (such as names and addresses) is separated from their private data to prevent attackers from inferring a user’s identity from private information. Three widely used anonymization techniques are k-anonymity, l-diversity, and t-closeness.
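k-anonymity and (distinct) l-diversity can both be checked by grouping records on their generalized quasi-identifiers. In the sketch below, the generalization rule (10-year age bands, three-digit ZIP prefixes), the field names, and the sample records are hypothetical; a real anonymizer searches for a generalization that reaches the target k while losing as little utility as possible.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, Hashable, List

Record = Dict[str, object]


def generalize(record: Record) -> tuple:
    """Hypothetical quasi-identifier generalization: age -> decade band, ZIP -> 3-digit prefix."""
    decade = (record["age"] // 10) * 10
    return (f"{decade}-{decade + 9}", record["zip"][:3] + "**")


def is_k_anonymous(records: List[Record], qi: Callable[[Record], Hashable], k: int) -> bool:
    # every equivalence class (records sharing the same quasi-identifiers) needs >= k members
    return all(size >= k for size in Counter(qi(r) for r in records).values())


def is_l_diverse(records: List[Record], qi: Callable[[Record], Hashable],
                 sensitive: str, l_min: int) -> bool:
    # distinct l-diversity: each equivalence class holds >= l_min different sensitive values
    classes = defaultdict(set)
    for r in records:
        classes[qi(r)].add(r[sensitive])
    return all(len(values) >= l_min for values in classes.values())


RECORDS = [
    {"age": 34, "zip": "47677", "diagnosis": "flu"},
    {"age": 36, "zip": "47602", "diagnosis": "cold"},
    {"age": 38, "zip": "47678", "diagnosis": "flu"},
    {"age": 52, "zip": "47905", "diagnosis": "cancer"},
    {"age": 57, "zip": "47909", "diagnosis": "cancer"},
]

if __name__ == "__main__":
    print(is_k_anonymous(RECORDS, generalize, k=2))              # True: classes of size 3 and 2
    print(is_l_diverse(RECORDS, generalize, "diagnosis", l_min=2))  # False: second class is all "cancer"
```

The second result shows why l-diversity was introduced: a 2-anonymous table can still disclose that everyone in the 50-59 group has the same diagnosis.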

2.2. Edge Intelligence

Edge intelligence emerges from the integration of edge computing frameworks with AI models, such as machine learning and deep learning models, with the aim of improving data processing and user data privacy protection while making full use of edge resources. Existing computing architectures face unavoidable limitations when processing massive amounts of data. End devices cannot support the computation that big data processing requires because of their limited computational capacity, while cloud computing architectures suffer from heavy backbone network pressure and high transmission latency. Edge intelligence emerged in response, pushing AI applications to the network edge.

In the edge intelligence framework, data are increasingly processed at edge servers or end devices rather than being transmitted directly over the backbone network. As the level of edge intelligence increases, the amount of data uploaded across the network decreases significantly, lowering the risk of information leakage and data tampering caused by network attacks during transmission.

Edge intelligence also makes applications with strict real-time requirements feasible. Compared with traditional cloud-based computing architectures, edge intelligence significantly shortens the physical distance between edge servers and end devices, which reduces data transmission latency and improves low-latency data processing. Moving computation from cloud servers to edge servers thus removes much of the otherwise unavoidable network transmission latency.
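A back-of-the-envelope calculation shows why physical distance matters. Assuming signals travel through optical fiber at roughly 2 × 10^8 m/s, and taking hypothetical distances d of 1,500 km to a cloud data center and 1.5 km to an edge server, the round-trip propagation delay alone is:

```latex
t_{\mathrm{RTT}} = \frac{2d}{v}, \qquad v \approx 2 \times 10^{8}\ \mathrm{m/s}

t_{\mathrm{RTT}}^{\mathrm{cloud}} = \frac{2 \times 1.5 \times 10^{6}\ \mathrm{m}}{2 \times 10^{8}\ \mathrm{m/s}} = 1.5 \times 10^{-2}\ \mathrm{s} = 15\ \mathrm{ms}

t_{\mathrm{RTT}}^{\mathrm{edge}} = \frac{2 \times 1.5 \times 10^{3}\ \mathrm{m}}{2 \times 10^{8}\ \mathrm{m/s}} = 1.5 \times 10^{-5}\ \mathrm{s} = 15\ \mu\mathrm{s}
```

Queuing, transmission, and processing delays come on top of these figures, so they are lower bounds on propagation delay only, not end-to-end latency estimates.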

3. MIS Protection and New Challenges

This section reviews traditional privacy protection methods for MISs, as well as those developed in the context of edge intelligence. In addition, it highlights the new challenges to privacy and security introduced by edge intelligence.

3.1. MIS Protection Framework

The network model for MISs consists of three entities: mobile users, wireless communication networks, and service providers [11]. In a typical MIS, mobile users (clients) send requests to the cloud server via the wireless communication network, and the cloud server returns the results that answer each user’s service request.

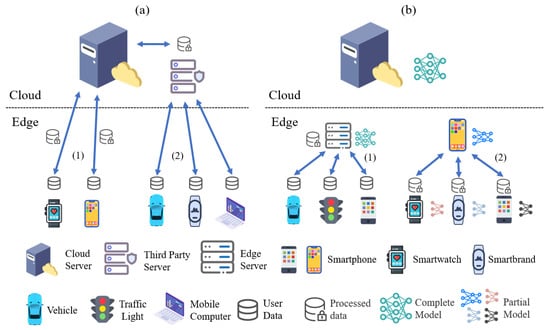

Figure 2a illustrates the privacy protection mechanisms used in a traditional MIS design. There are two main architectural types: third-party-based and third-party-free.

Figure 2.

Comparison of traditional intelligence and edge intelligence from the perspective of implementation. In traditional intelligence, all data must be uploaded to a central cloud server, while in edge intelligence, intelligent application tasks are done at the edge with locally generated data in a distributed manner. (a) Centralized intelligence. (b) Edge intelligence.

Third-party-based architecture: This architecture, shown in Figure 2a(1), consists of mobile users, a third-party proxy, and cloud servers. The third party’s main duty is to collect and process users’ raw data, protect their private information using privacy-preserving technologies, and then forward the processed data to the cloud server. After receiving the data, the cloud server evaluates the request and returns the results to the third-party proxy, which then sends them to the appropriate users.

Third-party-free architecture: The architecture shown in Figure 2a(2) consists solely of mobile users and cloud servers. This structure requires users’ mobile devices to have sufficient processing and storage capabilities. Depending on their privacy needs, mobile users apply privacy-preserving technologies to encrypt their personal data on the device before sending it to the server.

3.2. Changes and Challenges in the Context of Edge Intelligence

Figure 2 compares traditional centralized intelligence and edge intelligence from an implementation standpoint. As illustrated in Figure 2a, traditional centralized intelligence requires all edge devices to upload their data to a central server before intelligent operations such as model training or inference are performed. This data center is typically located in a remote cloud. After processing at the central server, the results (such as recognition or prediction outcomes) are returned to the edge devices. Figure 2b depicts the deployment of edge intelligence, in which tasks such as recognition and prediction are handled by edge servers and peer devices, or by a collaborative edge–cloud framework. In this approach, little or no data is uploaded to the cloud. For example, in scenario (b)(1), the edge can run an entire intelligent model to serve edge devices directly. In scenario (b)(2), the model is split into functional components executed by multiple edge devices, which work together to complete the task [3].

However, the characteristics of edge intelligence introduce new challenges for MIS privacy protection, which fall into two principal aspects. First, parameter updates can leak private information, for example through inference attacks. Second, model training can be compromised, most commonly through backdoor attacks.

Inference attacks: The objective of inference attacks is to analyze shared models or model updates during the edge intelligence training process to infer sensitive information about participants’ data [12]. Different types of inference attacks include: (1) property inference attacks, (2) model inversion attacks, and (3) membership inference attacks [13].

Property inference attacks analyze changes in data distribution across model update cycles to infer sensitive attributes unrelated to the learning objective, such as a user’s age, gender, location, or preferences [14]. By analyzing model updates uploaded from different devices, particularly when the distribution of sensitive attributes changes significantly, attackers can gain additional information. In edge intelligence scenarios, attackers may expose users’ sensitive information through changes reflected in model updates, and this information can then be exploited for malicious advertising, personalized recommendations, or social engineering attacks.

Model inversion attacks analyze the input–output behavior of a machine learning model to infer training data or model parameters. Even though raw data is never uploaded directly to the cloud, attackers can still analyze model updates to recover users’ private data or features. For example, in smart device applications, attackers may reverse-engineer sensitive information such as a user’s health status or movement trajectory.

Membership inference attacks query a trained AI model to determine whether a given data point was part of its training set [15,16]. Attackers construct specific inputs, obtain the model’s outputs, and use these outputs to infer whether the data took part in training [17]. Even if the data remains on local devices, attackers can thus determine from model outputs alone whether a user’s records were used in training, leaking information about the training data.
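The simplest membership inference attack compares a model’s loss on a candidate record with a threshold, exploiting the fact that overfitted models have much lower loss on their training records. The sketch below is a generic loss-threshold attack, not a method from [15,16,17]; the deliberately overfitted toy model, the threshold of 0.3, and the sample points are all hypothetical.

```python
import math
from typing import Callable, Hashable, List, Sequence, Tuple

Sample = Tuple[Hashable, int]  # (input, true label)


def cross_entropy(prob_true_label: float) -> float:
    return -math.log(max(prob_true_label, 1e-12))


def loss_threshold_mia(predict_proba: Callable[[Hashable], Sequence[float]],
                       candidates: Sequence[Sample], threshold: float) -> List[bool]:
    """Guess 'member' whenever the model's loss on (x, y) falls below the threshold."""
    return [cross_entropy(predict_proba(x)[y]) < threshold for x, y in candidates]


# Hypothetical, deliberately overfitted model: it has memorised its two training points.
TRAIN_SET = {(0.1,): 1, (0.7,): 0}


def toy_predict_proba(x: Hashable) -> List[float]:
    if x in TRAIN_SET:
        return [0.02, 0.98] if TRAIN_SET[x] == 1 else [0.98, 0.02]  # very confident on members
    return [0.5, 0.5]  # uncertain on everything else


if __name__ == "__main__":
    candidates = [((0.1,), 1), ((0.7,), 0), ((0.4,), 1), ((0.9,), 0)]
    print(loss_threshold_mia(toy_predict_proba, candidates, threshold=0.3))
    # [True, True, False, False]
```

In practice an attacker might set the threshold from the model’s average training loss or calibrate it with shadow models; the attack only needs black-box access to output probabilities.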

Backdoor attacks: During training in edge intelligence, each user of an edge device can act as a participant, observing the intermediate model state and contributing arbitrary updates to the globally shared model [18]. Most studies assume that participants are benign, providing genuine training data and uploading updates accordingly. However, if some participants are malicious, they may upload manipulated updates to the central server, potentially causing training to fail. In some cases, such attacks can cause significant economic losses. For instance, in a facial recognition-based authentication system compromised by a backdoor, an attacker could trick the system into recognizing them as an authorized individual and gain unauthorized access to a building.

Based on attack strategies, backdoor attacks can be classified into two categories: data poisoning attacks and model poisoning attacks. Data poisoning attacks tamper with the training data (e.g., by corrupting labels), degrading the model’s overall performance and causing it to produce incorrect outputs on normal inputs [19]. Model poisoning attacks, by contrast, alter only the model’s response to specific inputs without affecting its performance on other inputs. In this way, attackers can manipulate the model’s decisions in specific situations without causing widespread anomalies in the system [20,21].

In edge intelligence, backdoor attacks not only compromise the reliability of the model but also may lead the model to respond abnormally to malicious inputs, affecting the fairness and security of the system, and may even be used to manipulate system behavior, creating significant security risks. Therefore, preventing backdoor attacks is key to ensuring the stability and reliability of edge intelligence systems.

In real-world multi-user service scenarios, when malicious participants are few, the impact of data poisoning is diluted during aggregation: malicious attackers make up only a small fraction of all participants, so data poisoning-based attacks are relatively inefficient. In contrast, model poisoning attacks, which directly manipulate the global model, are more effective. We therefore focus primarily on this attack vector.
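Why directly manipulating updates is more effective than poisoning a few clients’ data can be seen with plain averaging. In the hypothetical sketch below, one of n clients scales the difference between its target model and the current global model by n (a boosting trick associated with model-replacement attacks), so that after averaging the global model lands almost exactly on the attacker’s target. A coordinate-wise median, one robust aggregation rule, is shown for contrast. All names and values are illustrative.

```python
from statistics import median
from typing import List, Sequence

Vector = List[float]


def mean_aggregate(updates: Sequence[Vector]) -> Vector:
    return [sum(col) / len(col) for col in zip(*updates)]


def median_aggregate(updates: Sequence[Vector]) -> Vector:
    return [median(col) for col in zip(*updates)]


def boosted_update(global_model: Vector, target_model: Vector, n_clients: int) -> Vector:
    # scale the malicious delta by n so it survives division by n during averaging
    return [n_clients * (t - g) for g, t in zip(global_model, target_model)]


def apply_delta(global_model: Vector, delta: Vector) -> Vector:
    return [g + d for g, d in zip(global_model, delta)]


if __name__ == "__main__":
    n = 10
    global_model, target = [0.0, 0.0], [1.0, -1.0]
    benign = [[0.01, -0.01]] * (n - 1)  # small honest updates near convergence
    updates = benign + [boosted_update(global_model, target, n)]
    print(apply_delta(global_model, mean_aggregate(updates)))    # about [1.009, -1.009]: hijacked
    print(apply_delta(global_model, median_aggregate(updates)))  # [0.01, -0.01]: attack suppressed
```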

4. Existing Advanced Protection Methods

In this section, we conduct an in-depth study of existing multi-user privacy protection methods in edge intelligence from two perspectives, that is, data-centric privacy issues with respect to MISs and model-centric privacy issues with respect to MISs. The performance of representative methods is then experimentally evaluated in terms of privacy protection effectiveness and utility trade-offs.

4.1. Data-Centric Privacy Issues with Respect to MISs

This section discusses the privacy protection methods used in edge intelligence. Based on the privacy protection techniques they employ [22], privacy-preserving methods are classified into three types: (1) encryption-based, (2) perturbation-based, and (3) anonymization-based approaches. Table 2 summarizes advanced methods and evaluates them along the following dimensions: privacy protection method, consideration of the privacy–utility trade-off, lightweight design, and types of attacks defended against.

Table 2.

Comparison of privacy protection methods concerning data-centric privacy issues in MISs.

4.1.1. Encryption-Based Methods

Encryption-based privacy protection methods mainly leverage cryptographic techniques to ensure data privacy [10]. Among these, homomorphic encryption is a commonly used and effective privacy protection mechanism. However, traditional homomorphic encryption methods often incur high computational and communication overhead, especially in cross-institutional (heterogeneous) federated learning scenarios. Yan et al. proposed FedPHE [23], a homomorphic encryption-based federated learning framework that protects privacy while addressing client heterogeneity. FedPHE combines CKKS homomorphic encryption with sparsification to achieve efficient encrypted weighted aggregation, lowering communication costs and speeding up training. However, the framework sacrifices some model accuracy, suggesting that information may be lost during encryption.

Jin et al. [24] introduced a more efficient homomorphic encryption framework, FedML-HE, which reduces computational and communication overhead by selectively encrypting sensitive parameters and performs well when training large-scale models (e.g., ResNet-50). FedML-HE improves the model aggregation algorithm to provide customizable privacy protection while substantially reducing the burden of homomorphic encryption. Despite these performance gains in large-scale federated learning, computational cost remains an issue when client resources are limited.
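The idea of encrypting only the most sensitive parameters can be sketched as a mask over the flattened parameter vector. The sensitivity scores and the 50% budget below are hypothetical placeholders, and how FedML-HE actually scores parameter sensitivity is not reproduced here. Values outside the mask would be sent in plaintext, so the homomorphic-encryption cost scales with the mask size rather than with the full model size.

```python
import math
from typing import List, Sequence, Tuple


def selective_encryption_mask(sensitivity: Sequence[float], fraction: float) -> List[bool]:
    """Mark the top `fraction` of parameters (by sensitivity score) for encryption."""
    k = max(1, math.ceil(fraction * len(sensitivity)))
    ranked = sorted(range(len(sensitivity)), key=lambda i: sensitivity[i], reverse=True)
    mask = [False] * len(sensitivity)
    for i in ranked[:k]:
        mask[i] = True
    return mask


def split_update(update: Sequence[float], mask: Sequence[bool]) -> Tuple[List[float], List[float]]:
    """Separate the values to encrypt from those sent in plaintext."""
    to_encrypt = [v for v, m in zip(update, mask) if m]
    plaintext = [v for v, m in zip(update, mask) if not m]
    return to_encrypt, plaintext


if __name__ == "__main__":
    scores = [0.10, 0.90, 0.50, 0.05]  # hypothetical per-parameter sensitivity scores
    mask = selective_encryption_mask(scores, fraction=0.5)
    print(mask)                                      # [False, True, True, False]
    print(split_update([1.0, 2.0, 3.0, 4.0], mask))  # ([2.0, 3.0], [1.0, 4.0])
```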

Nguyen et al. proposed the CoLR framework [25], which uses low-rank structures to lower communication costs in federated recommendation systems. CoLR updates only a small set of lightweight trainable parameters while keeping most parameters fixed, reducing communication overhead without sacrificing recommendation performance. Although this strategy greatly reduces payload size, further optimization may be needed to cope with bandwidth variability.
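The communication saving from low-rank updates is simple arithmetic: if an m × n update matrix is expressed as the product of an m × r and an r × n factor, a client uploads r(m + n) numbers instead of mn. The sketch below assumes a generic LoRA-style factorization, not necessarily CoLR’s exact parameterization, and the table size (10,000 items, 64-dimensional embeddings, rank 4) is hypothetical.

```python
from typing import List, Optional

Matrix = List[List[float]]


def payload_floats(m: int, n: int, rank: Optional[int] = None) -> int:
    """Numbers uploaded per round: a full m x n update, or two low-rank factors."""
    return m * n if rank is None else rank * (m + n)


def reconstruct_update(a: Matrix, b: Matrix) -> Matrix:
    """Server side: rebuild the m x n update as the product A (m x r) @ B (r x n)."""
    r = len(b)
    return [[sum(a[i][k] * b[k][j] for k in range(r)) for j in range(len(b[0]))]
            for i in range(len(a))]


if __name__ == "__main__":
    full = payload_floats(10_000, 64)          # 640000
    low = payload_floats(10_000, 64, rank=4)   # 40256
    print(full, low, round(full / low, 1))     # 640000 40256 15.9
    print(reconstruct_update([[1.0], [2.0]], [[3.0, 4.0]]))  # [[3.0, 4.0], [6.0, 8.0]]
```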

In the field of cryptography, Janbaz et al. [26] proposed a non-interactive publicly verifiable secret sharing (PVSS) scheme based on homogeneous linear recursions (HLRs). By reducing the number of group exponentiations, this scheme outperforms the existing Schoenmakers scheme in computational complexity and supports fast key generation and verification, making it suitable for secret sharing in distributed systems. However, its practical applicability and communication latency in large-scale distributed systems still require further investigation.

Zhang et al. proposed a privacy-preserving scheme that combines the Chinese Remainder Theorem (CRT) with Paillier homomorphic encryption [27]. The scheme ensures privacy by processing shared gradients and introduces bilinear aggregate signature techniques to verify the correctness of the aggregated gradients. Experimental results show that the scheme maintains high accuracy and efficiency while preserving privacy, but its scalability on large-scale datasets remains to be fully validated.
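The additive homomorphism that Paillier-based gradient aggregation relies on can be shown with a toy implementation. The sketch below is textbook Paillier with g = n + 1, fixed-point encoding of signed gradients, and two Mersenne primes that are far too small for real use. It omits the CRT-based processing and bilinear aggregate signatures of the scheme in [27], and all names are hypothetical. Multiplying ciphertexts adds the underlying plaintexts, so a server can sum encrypted gradients without decrypting any individual one.

```python
import math
import secrets
from typing import List

SCALE = 10**6  # fixed-point scale for real-valued gradients (assumption)


class ToyPaillier:
    """Textbook Paillier with g = n + 1. Toy key size: insecure, for illustration only."""

    def __init__(self, p: int = 2**31 - 1, q: int = 2**61 - 1):  # two Mersenne primes
        self.n = p * q
        self.n_sq = self.n * self.n
        self.lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
        self.mu = pow(self.lam, -1, self.n)  # valid shortcut because g = n + 1

    def encrypt(self, m: int) -> int:
        while True:
            r = secrets.randbelow(self.n - 1) + 1
            if math.gcd(r, self.n) == 1:
                break
        # (n + 1)^m mod n^2 equals 1 + m*n mod n^2
        return ((1 + m * self.n) * pow(r, self.n, self.n_sq)) % self.n_sq

    def decrypt(self, c: int) -> int:
        x = pow(c, self.lam, self.n_sq)
        return ((x - 1) // self.n * self.mu) % self.n

    def add(self, c1: int, c2: int) -> int:
        return (c1 * c2) % self.n_sq  # Enc(m1) * Enc(m2) = Enc(m1 + m2 mod n)


def encode(x: float, n: int) -> int:
    return round(x * SCALE) % n  # negative values wrap around modulo n


def decode(v: int, n: int) -> float:
    return (v - n if v > n // 2 else v) / SCALE


def encrypted_gradient_sum(he: ToyPaillier, gradients: List[float]) -> float:
    total = he.encrypt(0)
    for g in gradients:
        total = he.add(total, he.encrypt(encode(g, he.n)))  # aggregator never sees plaintext g
    return decode(he.decrypt(total), he.n)


if __name__ == "__main__":
    he = ToyPaillier()
    print(encrypted_gradient_sum(he, [0.25, -0.5, 0.125]))  # -0.125
```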

4.1.2. Perturbation-Based Methods

Perturbation mechanisms safeguard user privacy by adding noise to or modifying private data, so the key design question is how the noise is introduced. Hu et al. introduced Fed-SMP [28], a differentially private federated learning approach that provides client-level differential privacy (DP) guarantees while preserving high model accuracy. Fed-SMP employs sparse model perturbation (SMP): local model updates are first sparsified, and Gaussian noise is then added to the selected coordinates. This strategy achieves a better balance between privacy protection and model accuracy, particularly for high-dimensional datasets.
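The sparsify-then-perturb idea can be sketched as follows. This is a minimal illustration, not the Fed-SMP implementation: the update is first clipped to an L2 bound (a standard step in DP-SGD-style mechanisms, assumed here), k coordinates are selected at random (a random-k choice, also assumed), and Gaussian noise is added only to those k coordinates. Computing the resulting client-level privacy guarantee requires a privacy accountant, which is omitted; all names and parameter values are hypothetical.

```python
import math
import random
from typing import List


def clip_l2(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm (bounds sensitivity)."""
    norm = math.sqrt(sum(v * v for v in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * factor for v in update]


def sparse_perturb(update: List[float], k: int, clip_norm: float,
                   noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip, keep k randomly chosen coordinates, and add Gaussian noise only to them."""
    clipped = clip_l2(update, clip_norm)
    selected = rng.sample(range(len(update)), k)  # random-k sparsification
    sparse = [0.0] * len(update)
    for i in selected:
        sparse[i] = clipped[i] + rng.gauss(0.0, noise_multiplier * clip_norm)
    return sparse  # only the k non-zero entries (and their indices) need to be uploaded


if __name__ == "__main__":
    rng = random.Random(42)
    update = [0.8, -0.3, 0.05, 0.6, -0.9, 0.2]
    print(sparse_perturb(update, k=2, clip_norm=1.0, noise_multiplier=0.5, rng=rng))
```

Because noise is added to k coordinates rather than to all of them, the total injected noise shrinks with k, which is one intuition for why sparsification can improve the privacy–accuracy balance.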

In real-world multi-user applications, privacy requirements vary among users. To address this, Hu and Song proposed a user-consent federated recommendation system (UC-FedRec) [29], which allows users to customize privacy preferences, meeting diverse privacy needs while minimizing the cost of recommendation accuracy. The UC-FedRec framework protects sensitive personal information by applying sensitive attribute filters flexibly.

Mai and Pang introduced the VerFedGNN framework [30], a vertical federated graph neural network designed for training recommendation systems while preserving the privacy of user interaction information. VerFedGNN achieves privacy protection by aggregating neighbor embeddings and perturbing public parameter gradients using random projections and ternary quantization mechanisms.
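Ternary quantization maps each gradient entry to one of three values, {-s, 0, +s}. The sketch below is a generic stochastic ternary quantizer in the style of TernGrad, offered as an assumption since VerFedGNN’s exact quantizer and its random-projection step are not reproduced here. Each entry becomes ±s with probability |g|/s, where s is the largest magnitude, so the quantized gradient equals the original in expectation while most exact values are replaced by coarse, randomized levels.

```python
import random
from typing import List


def ternary_quantize(grad: List[float], rng: random.Random) -> List[float]:
    """Stochastic ternary quantization: unbiased, outputs only -s, 0, or +s."""
    s = max((abs(g) for g in grad), default=0.0)
    if s == 0.0:
        return [0.0] * len(grad)
    out = []
    for g in grad:
        keep = rng.random() < abs(g) / s  # Bernoulli(|g| / s)
        out.append((s if g > 0 else -s) if keep else 0.0)
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    print(ternary_quantize([0.5, -0.2, 0.1, 0.0], rng))
```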

Chen et al. proposed a federated learning scheme named “Piggyback DP” [31], which achieves differential privacy by combining gradient compression with the additive white Gaussian noise (AWGN) inherent in wireless communication channels. By jointly exploiting gradient quantization, sparsification, and the natural AWGN of the channel, the method reduces the need for manually injected noise while still safeguarding training data, improving the efficiency of privacy preservation.
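The noise accounting behind this idea can be sketched under a simplifying assumption: if the aggregated gradient must carry Gaussian noise of standard deviation σ_req to meet a privacy target, and the channel already contributes independent Gaussian noise of standard deviation σ_ch (expressed in the same units as the gradient after receiver scaling), then variances add and only the remainder must be injected manually. The required σ_req below uses the classical Gaussian-mechanism calibration σ = Δ·sqrt(2 ln(1.25/δ))/ε, valid for 0 < ε < 1. This is not the analysis of [31], which also accounts for compression, and the numbers are hypothetical.

```python
import math


def gaussian_mechanism_std(sensitivity: float, epsilon: float, delta: float) -> float:
    """Classical (epsilon, delta) calibration of the Gaussian mechanism, for 0 < epsilon < 1."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("this calibration assumes 0 < epsilon < 1")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def artificial_noise_std(required_std: float, channel_std: float) -> float:
    """Extra noise to inject when the channel already supplies some: variances add."""
    return math.sqrt(max(0.0, required_std**2 - channel_std**2))


if __name__ == "__main__":
    sigma_req = gaussian_mechanism_std(sensitivity=1.0, epsilon=0.5, delta=1e-5)
    print(round(sigma_req, 2))                             # 9.69
    print(round(artificial_noise_std(sigma_req, 6.0), 2))  # 7.61
```

When the channel noise alone exceeds the requirement, the function returns zero: no artificial noise is needed at all.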

Chamikara et al. proposed a distributed data perturbation algorithm named DISTPAB [32], which aims to protect the privacy of horizontally partitioned data. The algorithm perturbs data through multidimensional transformations and random expansion, and selects optimal perturbation parameters using the -separation privacy model, thereby achieving strong privacy protection while maintaining high data utility. Experimental results demonstrate that DISTPAB performs well in terms of classification accuracy, efficiency, scalability, and robustness against attacks. However, the algorithm may be inefficient when handling vertically partitioned data, and its performance on resource-constrained devices has not been fully evaluated.

Wang et al. [33] proposed a defense mechanism that protects privacy by adding random noise on the client side and performing global update compensation on the server side. This mechanism outperforms baseline defense methods in reducing the PSNR and SSIM of reconstructed images and maintains training accuracy through global gradient compensation. However, its scalability in large-scale distributed systems and its defense capability against malicious servers still need further evaluation.

4.1.3. Anonymization-Based Methods

Although perturbation-based privacy protection approaches offer strong privacy guarantees, they frequently reduce utility. As a result, many researchers have turned to anonymization techniques, which are primarily used to achieve group anonymity by deleting identifying information while retaining the usefulness of the released data. Jin et al. proposed a dynamic quality of service (QoS) optimization strategy [34] that optimizes user service quality in mobile edge networks while safeguarding user privacy. The strategy uses incremental learning and federated learning to optimize edge service caching and assign edge servers to users, protecting users’ privacy and feature information. By incorporating the concept of “k-anonymity” and restoring user mobility models, this approach maintains regional QoS optimization while significantly improving training efficiency.

Choudhury et al. introduced a syntactic anonymization method [35] for privacy protection in federated learning environments. This method incorporates a local syntactic anonymization step and adopts an “anonymize-and-mine” approach to process raw data, enabling subsequent data mining tasks. Empirical evaluations in the medical field demonstrate its ability to provide the required level of privacy protection while maintaining high model performance. Compared with differential privacy-based methods, this syntactic anonymization approach delivers higher model performance across different datasets and privacy budgets.

Zhao et al. [36] proposed an anonymous and privacy-preserving federated learning scheme that employs proxy servers and differential privacy mechanisms to safeguard data privacy. The scheme reduces the number of shared parameters and adds Gaussian noise to protect privacy while ensuring participant anonymity. Experimental results indicate that the scheme maintains accuracy comparable to non-enhanced methods while protecting privacy, but its performance with non-independent and identically distributed (non-IID) data needs further validation.

4.1.4. Summary and Analysis

Early encryption methods had significant computational overhead, which affected their feasibility in practical applications. FedPHE reduces communication costs and improves training speed by integrating sparse techniques. This approach not only represents an advancement in homomorphic encryption technology but also promotes the integration of encryption methods with edge intelligence systems. However, while encryption methods effectively protect data, their high computational and communication costs remain a bottleneck limiting their widespread application. In contrast, perturbation-based methods offer the advantage of relatively low computational overhead, but this often results in weaker privacy protection compared to encryption methods, especially when faced with complex attacks.

Anonymization-based methods are computationally lightweight but offer relatively weak privacy protection, especially for high-dimensional data or against attackers with auxiliary information, which makes them susceptible to re-identification attacks that can lead to privacy breaches. Anonymization methods may therefore not provide sufficient protection in high-risk scenarios involving privacy leakage.

Through the analysis of the three privacy protection methods above, it is clear that each method has its unique advantages and challenges. With the advancement of research, privacy protection methods are gradually moving toward integration and dynamic adaptability. For example, by combining homomorphic encryption from encryption methods and differential privacy from perturbation methods, the complementary advantages of both can enable more flexible and efficient privacy protection in different scenarios. Additionally, the introduction of hardware acceleration technologies, such as using GPUs and FPGAs to accelerate privacy protection algorithms, will help enhance the privacy protection capabilities of edge intelligence systems.

4.2. Model-Centric Privacy Issues with Respect to MISs

To mitigate the threat of backdoor attacks in edge intelligence, a variety of defense techniques have been proposed. Based on existing strategies, these defenses can be broadly categorized into two types, as follows: (1) anomaly detection and (2) model tolerance. Table 3 presents a summary of state-of-the-art methods from the following perspectives: defense strategy, consideration of the privacy–utility trade-off, lightweight design, and the types of attacks that can be defended against [37].

Table 3.

Comparison of privacy protection methods concerning model-centric privacy issues in MISs.

4.2.1. Anomaly Detection

Anomaly detection focuses primarily on identifying abnormal behaviors during data input or model training. By monitoring data distribution, feature patterns, or gradient changes during training, it aims to detect signs that deviate from expected behavior. Once an anomaly is detected, the system can promptly take countermeasures, such as discarding suspicious data, pausing the training process, or issuing alerts, thereby preventing poisoning attacks from compromising the model training process.
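As a minimal illustration of monitoring update statistics, the sketch below flags clients whose update norm deviates strongly from the median. It uses the median absolute deviation (MAD) as a robust scale estimate. This is a generic heuristic rather than any of the methods surveyed in this subsection. The threshold of 3 is an assumption, and an adaptive attacker can evade a norm-only test by scaling its update to a benign-looking norm.

```python
import math
import statistics
from typing import List, Sequence


def l2_norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in v))


def flag_outlier_updates(updates: List[Sequence[float]], threshold: float = 3.0) -> List[bool]:
    """Flag updates whose L2 norm is more than `threshold` robust z-scores from the median norm."""
    norms = [l2_norm(u) for u in updates]
    med = statistics.median(norms)
    mad = statistics.median([abs(x - med) for x in norms])
    if mad == 0.0:
        # Degenerate case: most norms are identical, so flag anything that differs.
        return [x != med for x in norms]
    scale = 1.4826 * mad  # MAD-to-standard-deviation factor under a Gaussian assumption
    return [abs(x - med) / scale > threshold for x in norms]
```

A flagged update could then be discarded, down-weighted, or reported, matching the countermeasures listed above.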

Yan et al. [38] proposed RECESS, a proactive defense mechanism designed to protect federated learning from model poisoning attacks. Rather than passively analyzing the updates it receives, RECESS queries each participating client with a carefully constructed aggregated test gradient and examines the client's response, enabling precise differentiation between malicious and benign clients. By comparing the constructed test gradients with the clients' responses, RECESS significantly improves the accuracy of the final trained model compared with traditional passive analysis methods.

Traditional defenses often rely on assumptions of IID (independent and identically distributed) data and a majority of benign clients, which are not always valid in real-world applications. To address these vulnerabilities, Xie et al. introduced FedREDefense [39], a novel defense mechanism against model poisoning attacks in federated learning. Unlike previous methods, FedREDefense does not depend on the similarity of data distributions across clients or a dominant presence of benign clients. Instead, it utilizes model update reconstruction errors to identify malicious clients. The core idea is that updates from genuine training processes can be reconstructed using local knowledge, while updates from poisoned models cannot. Despite its effectiveness, some limitations remain. For instance, the method’s reliance on reconstruction errors might be less effective against sophisticated attack strategies that manipulate model updates to closely resemble legitimate ones. Additionally, while the defense is robust against non-IID data, its performance could degrade in cases of extreme data heterogeneity.

Yan et al. [40] proposed DeFL, a defense mechanism that counters model poisoning attacks by identifying critical learning periods (CLPs) in deep neural networks. DeFL uses easily computable joint gradient norm vectors to identify CLPs and detect fraudulent clients, whose updates are then excluded during the aggregation phase. DeFL significantly reduces the impact of poisoning attacks on the global model while remaining robust to detection errors.

Xu et al. [41] proposed a dual-defense framework called DDFed, which combines fully homomorphic encryption (FHE) and differential privacy (DP) to simultaneously protect model privacy and effectively defend against model poisoning attacks. The framework incorporates a two-stage anomaly detection mechanism, consisting of secure similarity computation and feedback-driven collaborative selection, which enables the identification of malicious models within encrypted model updates. DDFed exhibits strong robustness and privacy protection in both cross-device and cross-institution federated learning scenarios. However, it incurs high computational overhead and shows limitations when faced with a high proportion of adversarial clients.

Qin et al. [42] introduced a novel backdoor defense framework named Snowball, which selects model updates from an individual perspective using a bidirectional election mechanism, comprising bottom-up and top-down elections. Snowball leverages variational autoencoders (VAEs) to learn the nonlinear features of model updates, thereby enhancing its capability to detect malicious models. Snowball demonstrates superior robustness under complex non-independent and identically distributed (non-IID) data and high adversary ratios.

Zheng et al. [43] proposed a defense mechanism named GCAMA, which combines gradient-weighted class activation mapping (Grad-CAM) and autoencoders (AEs) to transform local model updates into low-dimensional heatmaps and amplify their salient features, thereby improving the detection of malicious updates. Experimental validation shows that GCAMA outperforms existing methods on the CIFAR-10 and GTSRB datasets, achieving fast convergence and maintaining high global model test accuracy. However, its dependence on test data and high computational cost limit its applicability in large-scale scenarios.

4.2.2. Model Tolerance

Model tolerance focuses on enhancing the model’s intrinsic resistance to poisoning attacks. Even if an attacker successfully tampers with part of the data or model parameters, the model can still maintain relatively stable performance and avoid serious erroneous outputs. This can be achieved through a variety of techniques, such as employing robust model training algorithms like adversarial training, which introduces adversarial examples during training to help the model learn to resist malicious data interference. Alternatively, model architectures with regularization mechanisms can be designed to prevent overfitting to abnormal data. In addition, model validation and calibration techniques can be used to assess and fine-tune the trained model [15], ensuring it maintains reliable performance in the presence of potential poisoning attacks.

Panda et al. [44] presented SparseFed, a technique that mitigates model poisoning attacks in federated learning through global top-k updates and device-level gradient clipping. The authors also provide a theoretical framework for analyzing the resilience of defenses against poisoning attacks, together with a convergence analysis of the algorithm.
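The two ingredients named for SparseFed, per-client clipping and global top-k sparsification of the aggregate, can be sketched as below. This is a simplified sketch assuming plain averaging of clipped updates. The actual algorithm in [44] also accumulates the coordinates that are not selected (error feedback), which is omitted here, and `max_norm` and `k` are hypothetical parameters.

```python
import math
from typing import List, Sequence


def clip(update: Sequence[float], max_norm: float) -> List[float]:
    """Device-level clipping: rescale an update whose L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm <= max_norm:
        return list(update)
    return [x * max_norm / norm for x in update]


def top_k(vec: Sequence[float], k: int) -> List[float]:
    """Keep the k largest-magnitude coordinates and zero out the rest."""
    keep = sorted(range(len(vec)), key=lambda i: abs(vec[i]), reverse=True)[:k]
    out = [0.0] * len(vec)
    for i in keep:
        out[i] = vec[i]
    return out


def sparse_robust_aggregate(updates: List[Sequence[float]], max_norm: float, k: int) -> List[float]:
    """Clip every client update, average them, then apply a global top-k to the average."""
    clipped = [clip(u, max_norm) for u in updates]
    dim = len(clipped[0])
    mean = [sum(u[i] for u in clipped) / len(clipped) for i in range(dim)]
    return top_k(mean, k)
```

Clipping caps how far any single client can move a coordinate. The global top-k then limits the number of coordinates changed per round, restricting where a small malicious minority can push the model.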

Jia et al. [45] proposed FedGame, a game-theory-based defense mechanism for mitigating backdoor attacks in federated learning. FedGame formalizes the defense problem as a minimax game, creating an interactive defense by modeling the strategic interplay between the defender and dynamic attackers. The authors' theoretical analysis shows that, under proper assumptions, the global model trained with FedGame under backdoor attacks performs similarly to a model trained without any attack.

Zhu et al. [46] proposed LeadFL, a client-side self-defense method based on Hessian matrix optimization. This method introduces a regularization term into local model training to minimize the influence of the Hessian matrix, thereby defending against model poisoning attacks.

Zhang et al. [47] proposed the FLIP framework, which defends against backdoor attacks through trigger reverse engineering and low-confidence sample filtering. FLIP performs well across various datasets and attack scenarios, significantly reducing the attack success rate (ASR) while maintaining high accuracy for the main task. FLIP primarily focuses on model tolerance and does not address data privacy protection.

Huang et al. [48] introduced Lockdown, a method that restricts parameter access for malicious clients through isolated subspace training, thus preventing contamination of the global model. Lockdown significantly reduces the success rate of backdoor attacks while also lowering communication overhead and model complexity. However, it relies on sparse training techniques, whose acceleration benefits remain underdeveloped, and offers limited defense against advanced attacks such as Omniscience.

Mai et al. [49] proposed the RFLPA framework, which leverages secure aggregation (SecAgg) to protect local gradient privacy. It defends against model poisoning by computing cosine similarity and applying verifiable packed Shamir secret sharing. RFLPA maintains high accuracy while significantly reducing communication and computational costs. However, it depends on a small, clean dataset on the server side, which may not be feasible in certain scenarios, and its high computational complexity may limit scalability in large-scale systems.
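The cosine-similarity filtering step can be illustrated in plaintext with an FLTrust-style trust score (Cao et al.). Each client update is compared with a reference update computed on the server's small clean dataset, negative similarities are clipped to zero, and accepted updates are rescaled to the reference norm before weighting. This is a sketch of the general idea only. RFLPA evaluates its checks under verifiable packed Shamir secret sharing, which is omitted here, and its exact scoring rule may differ.

```python
import math
from typing import List, Sequence


def l2(v: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in v))


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    na, nb = l2(a), l2(b)
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def trust_weighted_aggregate(client_updates: List[Sequence[float]],
                             server_update: Sequence[float]) -> List[float]:
    """FLTrust-style aggregation in plaintext.

    server_update is assumed to come from a small clean dataset held by the server.
    """
    dim = len(server_update)
    ref_norm = l2(server_update)
    # ReLU-clipped cosine similarity: updates pointing away from the reference get zero trust.
    scores = [max(0.0, cosine(u, server_update)) for u in client_updates]
    total = sum(scores)
    if total == 0.0:
        return [0.0] * dim
    agg = [0.0] * dim
    for score, u in zip(scores, client_updates):
        if score == 0.0:
            continue
        # Rescale each accepted update to the reference norm so magnitude cannot dominate.
        scale = score * ref_norm / l2(u)
        for i, x in enumerate(u):
            agg[i] += scale * x
    return [x / total for x in agg]
```

The dependence on `server_update` makes the limitation noted above concrete: without a clean server-side dataset, there is no trusted reference direction to score against.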

Yu et al. [50] proposed a non-targeted attack method called ClusterAttack for federated recommender systems, along with a defense mechanism named UNION based on uniformity constraints. ClusterAttack uploads malicious gradients to force item embeddings to converge into a few dense clusters, thereby disrupting ranking order. In contrast, UNION optimizes the embedding distribution into a uniform structure via contrastive learning to resist such non-targeted attacks.
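The uniformity metric of Wang and Isola from contrastive representation learning can quantify what a "uniform" embedding distribution means, and why the dense clusters produced by ClusterAttack are undesirable. The sketch below uses that metric as a stand-in. The exact constraint used by UNION in [50] may differ, and the temperature `t = 2` and the toy embeddings are assumptions.

```python
import math
from itertools import combinations
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def uniformity_loss(embeddings: List[Sequence[float]], t: float = 2.0) -> float:
    """log of the mean Gaussian potential exp(-t * ||z_i - z_j||^2) over all pairs of
    L2-normalized embeddings; lower values mean a more uniform spread on the sphere."""
    if len(embeddings) < 2:
        raise ValueError("need at least two embeddings")
    z = [normalize(e) for e in embeddings]
    potentials = [
        math.exp(-t * sum((a - b) ** 2 for a, b in zip(x, y)))
        for x, y in combinations(z, 2)
    ]
    return math.log(sum(potentials) / len(potentials))
```

Item embeddings collapsed into a few tight clusters score close to 0, the worst possible value. Well-spread embeddings score much lower, so minimizing this quantity as a regularizer pushes back against the collapse that ClusterAttack induces.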

Kabir et al. [51] introduced the FLShield framework, which addresses model poisoning attacks in federated learning through a verification mechanism. The framework validates local model updates using representative models and the loss impact per class (LIPC) metric, effectively filtering out malicious updates. Representative models are generated by aggregating multiple local updates, enhancing generalization while preserving data privacy. Furthermore, FLShield demonstrates robustness against gradient inversion attacks, ensuring the protection of local data privacy.

Sun et al. [52] proposed FL-WBC (white blood cell for federated learning), a client-side defense method aimed at mitigating model poisoning attacks that have already compromised the global model. FL-WBC quantifies the long-term attack effect on global model parameters (AEP) and perturbs the null space of the Hessian matrix during local training to alleviate the lasting impact of the attack. Experimental results show that FL-WBC effectively mitigates attack effects within a few communication rounds with minimal performance degradation. Moreover, FL-WBC complements existing server-side robust aggregation methods, such as coordinate-wise median and trimmed mean, further enhancing the robustness of federated learning systems.
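For reference, the two server-side robust aggregators named above can be sketched per coordinate as follows. This is a minimal sketch assuming equally weighted clients and flat parameter vectors. `beta`, the number of values trimmed from each end, is a hypothetical parameter that should be at least the expected number of malicious clients.

```python
import statistics
from typing import List, Sequence


def coordinate_median(updates: List[Sequence[float]]) -> List[float]:
    """Take the median of each coordinate independently across clients."""
    return [statistics.median(column) for column in zip(*updates)]


def trimmed_mean(updates: List[Sequence[float]], beta: int) -> List[float]:
    """Per coordinate, drop the beta largest and beta smallest values, then average the rest."""
    n = len(updates)
    if 2 * beta >= n:
        raise ValueError("need more than 2 * beta clients")
    result = []
    for column in zip(*updates):
        kept = sorted(column)[beta:n - beta]
        result.append(sum(kept) / len(kept))
    return result
```

Both aggregators bound the influence of extreme values in any single round. However, an attack whose effect persists in the global parameters across rounds can survive them, which is the gap that a client-side method such as FL-WBC targets.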

4.2.3. Summary and Analysis

Anomaly detection and model tolerance play a crucial role in defending against model poisoning attacks, such as backdoor attacks. RECESS and FedREDefense both identify and isolate malicious clients by analyzing anomalies in model updates, preventing attacks from corrupting model training. However, anomaly detection methods often incur high computational and communication overhead, and their effectiveness can degrade significantly when data distributions are uneven or attack behaviors are complex. In contrast, model tolerance focuses on enhancing the robustness of the model itself, allowing it to maintain stable performance even when some updates are malicious. Methods such as SparseFed and FedGame enhance the model’s resilience to poisoning, either through gradient clipping and top-k sparsification of the aggregated update (SparseFed) or by modeling the interaction between the defender and the attacker as a minimax game (FedGame). They are particularly effective when only a small number of participants in a multi-user environment are malicious. The advantage of these methods lies in improving the model’s inherent fault tolerance, but they typically cannot identify attacks directly and instead rely on the stability of the model and its optimization strategy.

Although anomaly detection and model tolerance are two independent strategies, they are not mutually exclusive and can be combined to form a more robust privacy protection system. The proposal of a cross-layer collaborative defense system also reflects the parallel development of both strategies. In this multi-layer defense architecture, anomaly detection at the client layer and model tolerance at the server layer complement each other, forming a more comprehensive defense system.

Existing anomaly detection and model tolerance methods each have their own computational overhead and performance bottlenecks. Since edge devices are often limited by performance constraints, reducing the computational and communication costs of these methods while ensuring efficient privacy protection will be a key area of future research.

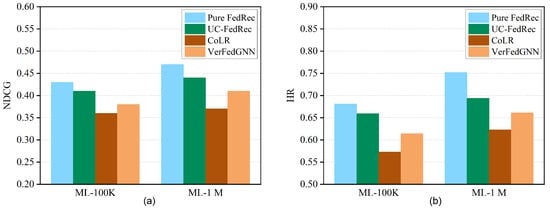

4.3. Experimental Evaluation

Because the articles employing anonymization-based methods do not provide source code, we compared the approaches from [25,29,30] to evaluate the performance of encryption-based and perturbation-based methods. For the evaluation, we used the ML-100K and ML-1M datasets [53]. ML-100K consists of 100,000 ratings from 943 users on 1682 movies collected from the MovieLens website, while ML-1M is a larger dataset containing 1 million ratings from 6039 users on 3705 movies. We treat users’ gender and age as private attributes and adopt FedGNN [54] as the base model. Following the methodology in [29], we use the leave-one-out approach to evaluate recommendation utility. The quality of the ranking lists is measured using the hit rate (HR) and normalized discounted cumulative gain (NDCG), both computed on ranking lists truncated at 10 (HR@10 and NDCG@10), and we report the average scores. For privacy protection evaluation, we use the widely adopted F1 score to assess the attacker’s inference accuracy and report results for train–test split ratios of 10% and 20%. Our goal is to prevent attribute inference attacks, so a lower F1 score indicates better privacy protection.
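The evaluation protocol described above can be sketched as follows. Under leave-one-out, each user has one held-out item: HR@10 records whether that item appears in the top 10, and NDCG@10 discounts the hit by its position. This is a minimal sketch, and the candidate-sampling strategy of [29] is not reproduced. `f1_score` computes binary F1, which suits a binary attribute such as gender; a multi-class attribute such as age would need an averaged F1 whose variant is not specified here.

```python
import math
from typing import Dict, List, Sequence, Tuple


def hit_ratio_at_k(ranked: Sequence[int], target: int, k: int = 10) -> float:
    """1 if the held-out item appears in the top-k list, else 0."""
    return 1.0 if target in ranked[:k] else 0.0


def ndcg_at_k(ranked: Sequence[int], target: int, k: int = 10) -> float:
    """With a single relevant item, NDCG@k = 1 / log2(position + 1) for a 1-based position."""
    top = list(ranked[:k])
    if target not in top:
        return 0.0
    return 1.0 / math.log2(top.index(target) + 2)  # index() is 0-based


def evaluate(rankings: Dict[int, Sequence[int]], held_out: Dict[int, int],
             k: int = 10) -> Tuple[float, float]:
    """Average HR@k and NDCG@k over users, one held-out item per user (leave-one-out)."""
    users = list(held_out)
    hr = sum(hit_ratio_at_k(rankings[u], held_out[u], k) for u in users) / len(users)
    ndcg = sum(ndcg_at_k(rankings[u], held_out[u], k) for u in users) / len(users)
    return hr, ndcg


def f1_score(y_true: List[int], y_pred: List[int], positive: int = 1) -> float:
    """Binary F1 of an attacker's attribute predictions (lower = better protection)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

For example, a held-out item ranked third contributes 1 to HR@10 but only 1/log2(4) = 0.5 to NDCG@10.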

As shown by our results in Table 4 and Figure 3, all three privacy-protecting methods [25,29,30] yield substantially lower attribute-inference F1 scores than the vanilla FedRec baseline, demonstrating their effectiveness in safeguarding user attributes. Notably, CoLR consistently achieves the lowest F1 values on both ML-100K and ML-1M (train–test split ratios of 10% and 20%), indicating the strongest privacy protection among the three. UC-FedRec attains intermediate F1 reductions, while VerFedGNN generally exhibits higher F1 (weaker privacy protection). In terms of recommendation utility (Figure 3), CoLR also maintains accuracy closest to the baseline. UC-FedRec incurs a somewhat larger accuracy reduction, and VerFedGNN suffers the greatest utility loss (approximately a 0.10 drop in HR/NDCG). Thus, CoLR offers the best privacy–utility trade-off, achieving large privacy gains (very low F1) with only a modest sacrifice in HR/NDCG, whereas the other two methods incur larger accuracy losses for less pronounced privacy improvements. It is also worth noting that methods based on encryption mechanisms provide stronger privacy protection than those based on perturbation mechanisms, though this may come at the cost of higher computational and communication overhead. These observations, grounded in Table 4 and Figure 3, clarify the relative strengths and weaknesses of the evaluated approaches.

Table 4.

Performance of methods [25,29,30] in terms of recommendation and privacy.

Figure 3.

Utility evaluation of methods [25,29,30], (a) NDCG and (b) HR performance on two datasets.

5. Discussion and Future Research Directions

Based on an analysis of existing papers, multi-user privacy protection in edge intelligence faces numerous challenges, which also point to several important directions for future research. Future research directions concerning data-centric privacy issues with respect to MISs are discussed below.

- Balancing privacy protection and computational efficiency: Privacy protection technologies, such as homomorphic encryption and differential privacy, considerably improve privacy security but also impose large computational overhead and delays, becoming the key bottleneck in current research [25]. Future research should aim to resolve the tension between privacy protection and computing performance. On the one hand, hardware acceleration solutions, such as using GPUs and FPGAs to improve the execution efficiency of encryption algorithms, can be investigated. On the other hand, new encryption algorithms (e.g., lightweight homomorphic encryption or quantum encryption) and hybrid privacy protection methods (e.g., combining differential privacy with local perturbation) may reduce computational costs and provide new solutions for efficient privacy protection.

- Privacy protection in multi-party collaboration: In multi-party collaboration scenarios, although federated learning and vertical federated learning have proposed preliminary privacy protection schemes, incomplete trust among participants remains a key challenge in research. Future work could combine homomorphic encryption and trusted execution environments to achieve efficient computation while maintaining data security. Furthermore, the introduction of blockchain technology has the potential to help construct transparent and tamper-proof data transmission and computation procedures [4], hence improving privacy protection in multi-party collaborations. In particular, zero-knowledge proof (ZKP) technology can verify the correctness and compliance of data computation without exposing the actual data [55], thus further improving the privacy protection capabilities of data transmission and increasing the system’s credibility.

- Dynamic adjustment and adaptive mechanisms for privacy protection: Existing privacy protection methods are often statically designed and struggle to meet the demands of dynamic environments [29]. Future research could concentrate on creating adaptive privacy protection algorithms that dynamically modify the level of privacy protection in response to real-time feedback, such as data sensitivity, system status, or participant trust levels. Furthermore, incorporating reinforcement learning into privacy protection may enable intelligent optimization of privacy measures, resulting in a balance of privacy and utility. Moreover, blockchain not only provides data transparency but also enables an automated privacy consent process through smart contracts [56]. Users can authorize or revoke the use of their data through smart contracts, further enhancing the autonomy and transparency of privacy protection.

- User consent and privacy transparency: Although numerous user privacy protection schemes have emerged, implementing transparent and clear user consent mechanisms remains a challenge. Future studies could use blockchain technology to record and maintain users’ consent histories, giving them more control over their privacy settings. Furthermore, the usage of smart contracts could automate the privacy consent process, improving compliance while maintaining users’ privacy rights.

- Application of emerging technologies: Machine unlearning, an emerging privacy protection technique, is gaining increasing attention in edge intelligence systems [57]. Traditional privacy protection methods, such as homomorphic encryption and differential privacy, are effective but incur high computational overhead and latency. Unlearning removes the influence of specific users’ data from a trained model without retraining the entire model from scratch, which reduces computational cost and improves efficiency. In edge intelligence, when a user requests the deletion of personal data, unlearning aims to remove only the parts of the model influenced by that user while minimizing the impact on other users and on the accuracy of the global model. This allows edge devices to process deletion requests locally and efficiently, avoiding the costs associated with data transmission and storage. In the future, unlearning is expected to play an important role in privacy protection for edge intelligence, helping achieve privacy compliance and efficient data management.
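The idea of unlearning without full retraining, raised in the last direction above, can be illustrated with sharded training in the style of SISA (Bourtoule et al.). SISA is one well-known exact unlearning approach, used here as a stand-in rather than the method of [57]. In the toy sketch below, each shard trains an isolated constituent model (simply the mean of its users' values), and deleting a user retrains only that user's shard. The class name, the mean "model", and the CRC32-based shard assignment are hypothetical.

```python
import zlib
from typing import Dict, List, Optional


class ShardedMeanModel:
    """Toy SISA-style ensemble: each shard trains an isolated constituent model
    (here just the mean of its users' values) and predictions average the shards."""

    def __init__(self, num_shards: int) -> None:
        self.num_shards = num_shards
        self.shards: List[Dict[str, float]] = [{} for _ in range(num_shards)]
        self.models: List[Optional[float]] = [None] * num_shards
        self.retrain_count = 0  # counts shard-level (re)training runs

    def _shard_of(self, user_id: str) -> int:
        # Deterministic user-to-shard assignment (built-in hash() is salted per process).
        return zlib.crc32(user_id.encode("utf-8")) % self.num_shards

    def _train_shard(self, s: int) -> None:
        values = list(self.shards[s].values())
        self.models[s] = sum(values) / len(values) if values else None
        self.retrain_count += 1

    def fit(self, data: Dict[str, float]) -> None:
        for user_id, value in data.items():
            self.shards[self._shard_of(user_id)][user_id] = value
        for s in range(self.num_shards):
            self._train_shard(s)

    def unlearn(self, user_id: str) -> None:
        """Delete one user's data and retrain only the shard that contained it."""
        s = self._shard_of(user_id)
        del self.shards[s][user_id]
        self._train_shard(s)

    def predict(self) -> float:
        trained = [m for m in self.models if m is not None]
        if not trained:
            raise ValueError("no trained shards")
        return sum(trained) / len(trained)
```

Because the shards are isolated, the unlearned ensemble is identical to one trained from scratch without the deleted user. The cost is retraining one shard instead of all of them.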

As for model-centric privacy issues with respect to MISs, we propose the following three potential future research directions that merit further investigation.

- Privacy-security co-optimization: Most existing methods rely on the default privacy guarantees of edge intelligence, but a fundamental conflict exists between encrypted aggregation and robust defense. For instance, while DDFed’s fully homomorphic encryption preserves gradient privacy [41], it prevents the server from performing similarity detection. Conversely, the reconstruction error analysis in FedREDefense [39] may leak data distribution characteristics through gradient inversion. A promising breakthrough lies in designing dual-secure aggregation protocols that integrate secure multi-party computation with robust statistics (e.g., trimmed mean) within the encrypted domain, allowing the filtering of malicious clients without decrypting model updates. Additionally, a quantitative model linking the differential privacy budget to defense effectiveness should be established, enabling the dynamic adjustment of the privacy–robustness trade-off via adaptive noise injection. Federated feature distillation is also worth investigating. By constructing a shared feature space through knowledge transfer, this approach can protect data privacy while disrupting the propagation pathways of poisoned features.

- Cross-layer collaborative defense systems: A single-layer defense is vulnerable to targeted attacks, necessitating a multi-level protection strategy. At the client level, integrating model sanitization techniques with gradient obfuscation can mitigate the persistence of attack parameters via noise injection. At the edge level, secure verification modules based on trusted execution environments can be deployed, using isolation and encryption to filter model updates in real time. At the global level, inter-node defense knowledge-sharing protocols can be designed to synchronize the attack signature databases of edge servers through federated learning, fostering a co-evolutionary defense ecosystem. This three-tier architecture—comprising terminal-level immunity, edge-level filtering, and global coordination—can significantly enhance overall system robustness.

- Improved theoretical evaluation systems: Current studies lack rigorous proof of model convergence under defensive mechanisms, and existing evaluation standards remain fragmented. Future work should establish a joint convergence-robustness analysis framework to quantify how defense intensity affects model convergence rates based on stochastic optimization theory. Designing composite evaluation metrics should go beyond single-dimensional constraints, forming a triangular evaluation framework that includes computational cost, communication overhead, and defense effectiveness. In addition, developing defense cost-efficiency models will aid in selecting suitable solutions for resource-constrained scenarios, using Pareto frontier analysis to identify optimal trade-offs among privacy, security, and efficiency.
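The conflict described under privacy-security co-optimization shows up clearly in a minimal sketch of pairwise-masking secure aggregation in the style of Bonawitz et al. Each pair of clients shares a random vector that one adds and the other subtracts, so the server can recover only the sum of updates. Because individual masked updates look random, per-client checks such as cosine similarity cannot be run on them. The sketch assumes no client dropouts, integer-quantized updates, and a shared seed in place of a real key agreement. `random.Random` is not cryptographically secure and is used only for illustration.

```python
import random
from typing import Dict, List

MODULUS = 2 ** 32  # updates are assumed to be quantized to non-negative integers below this


def pairwise_masks(client_ids: List[str], dim: int, session_seed: str) -> Dict[str, List[int]]:
    """Give every pair (a, b) a shared random vector s_ab; a adds it, b subtracts it.
    Assumption: the shared seed stands in for a pairwise key agreement; random.Random
    is NOT cryptographically secure and is used only for illustration."""
    masks = {c: [0] * dim for c in client_ids}
    for i, a in enumerate(client_ids):
        for b in client_ids[i + 1:]:
            rng = random.Random(f"{session_seed}|{a}|{b}")
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            masks[a] = [(m + s) % MODULUS for m, s in zip(masks[a], shared)]
            masks[b] = [(m - s) % MODULUS for m, s in zip(masks[b], shared)]
    return masks


def mask_update(update: List[int], mask: List[int]) -> List[int]:
    """What a client actually sends: its quantized update plus its combined mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """The server sums masked vectors; pairwise masks cancel, leaving only the sum."""
    dim = len(masked_updates[0])
    return [sum(u[i] for u in masked_updates) % MODULUS for i in range(dim)]
```

A dual-secure protocol of the kind proposed above would have to evaluate robust statistics, such as the trimmed mean, inside this masked or encrypted domain rather than on the revealed sum.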

6. Conclusions

Edge intelligence enables user data to be retained at the edge rather than uploaded to the cloud, reducing response times and saving bandwidth resources, but it poses new challenges for privacy protection. In this paper, we present a comprehensive overview of multi-user privacy protection mechanisms and the issues introduced by edge intelligence. We categorize and describe existing studies and compare several representative strategies through experimental evaluation. Finally, we propose several open research issues, with the hope that this work will shed more light on multi-user privacy protection.

Author Contributions

Conceptualization, X.L. and B.L.; methodology, X.L.; software, B.L.; validation, X.L., B.L. and S.C.; formal analysis, B.L.; investigation, B.L.; resources, B.L.; data curation, B.L.; writing—original draft preparation, B.L.; writing—review and editing, Z.X.; visualization, B.L.; supervision, X.L.; project administration, X.L.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646. [Google Scholar] [CrossRef]

- Chen, J.; Ran, X. Deep Learning with Edge Computing: A Review. Proc. IEEE 2019, 107, 1655–1674. [Google Scholar] [CrossRef]

- Xu, D.; Li, T.; Li, Y.; Su, X.; Tarkoma, S.; Jiang, T.; Crowcroft, J.; Hui, P. Edge Intelligence: Empowering Intelligence to the Edge of Network. Proc. IEEE 2021, 109, 1778–1837. [Google Scholar] [CrossRef]

- Ahvar, E.; Ahvar, S.; Lee, G.M. Artificial Intelligence of Things: Architectures, Applications, and Challenges. In Springer Handbook of Internet of Things; Springer Handbooks; Ziegler, S., Radócz, R., Quesada Rodriguez, A., Matheu Garcia, S.N., Eds.; Springer International Publishing: Cham, Switzerland, 2024; pp. 443–462. [Google Scholar] [CrossRef]

- Zhu, G.; Lyu, Z.; Jiao, X.; Liu, P.; Chen, M.; Xu, J.; Cui, S.; Zhang, P. Pushing AI to Wireless Network Edge: An Overview on Integrated Sensing, Communication, and Computation towards 6G. Sci. China-Inf. Sci. 2023, 66, 130301. [Google Scholar] [CrossRef]

- McEnroe, P.; Wang, S.; Liyanage, M. A Survey on the Convergence of Edge Computing and AI for UAVs: Opportunities and Challenges. IEEE Internet Things J. 2022, 9, 15435–15459. [Google Scholar] [CrossRef]

- Zhu, R.; Liu, Y.; Gao, Y.; Shi, Y.; Peng, X. Edge Intelligence Based Garbage Classification Detection Method. In Edge Computing and IoT: Systems, Management and Security; Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Xiao, Z., Zhao, P., Dai, X., Shu, J., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2023; Volume 478, pp. 128–141. [Google Scholar] [CrossRef]

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and Applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 12. [Google Scholar] [CrossRef]

- Nguyen, T.D.; Nguyen, T.; Le Nguyen, P.; Pham, H.H.; Doan, K.D.; Wong, K.-S. Backdoor Attacks and Defenses in Federated Learning: Survey, Challenges and Future Research Directions. Eng. Appl. Artif. Intell. 2024, 127, 107166. [Google Scholar] [CrossRef]

- Yin, X.; Zhu, Y.; Hu, J. A Comprehensive Survey of Privacy-Preserving Federated Learning: A Taxonomy, Review, and Future Directions. ACM Comput. Surv. 2021, 54, 131:1–131:36. [Google Scholar] [CrossRef]

- Abi-Char, P.E. A User Location Privacy Protection Mechanism for LBS Using Third Party-Based Architectures. In Proceedings of the 2022 45th International Conference on Telecommunications and Signal Processing (TSP), Prague, Czech Republic, 13–15 July 2022; pp. 139–145. [Google Scholar] [CrossRef]

- Rigaki, M.; Garcia, S. A Survey of Privacy Attacks in Machine Learning. ACM Comput. Surv. 2024, 56, 101. [Google Scholar] [CrossRef]

- Hu, H.; Salcic, Z.; Sun, L.; Dobbie, G.; Yu, P.S.; Zhang, X. Membership Inference Attacks on Machine Learning: A Survey. ACM Comput. Surv. 2022, 54, 235. [Google Scholar] [CrossRef]

- Xie, Y.-A.; Kang, J.; Niyato, D.; Van, N.T.T.; Luong, N.C.; Liu, Z.; Yu, H. Securing Federated Learning: A Covert Communication-Based Approach. IEEE Netw. 2022, 37, 118–124. [Google Scholar] [CrossRef]

- Thai, M.T.; Phan, H.N.; Thuraisingham, B. (Eds.) Handbook of Trustworthy Federated Learning; Springer Optimization and Its Applications; Springer International Publishing: Cham, Switzerland, 2025; Volume 213. [Google Scholar] [CrossRef]

- Zhang, Y.; Bai, G.; Chamikara, M.A.P.; Ma, M.; Shen, L.; Wang, J.; Nepal, S.; Xue, M.; Wang, L.; Liu, J. AgrEvader: Poisoning Membership Inference against Byzantine-Robust Federated Learning. In Proceedings of the ACM Web Conference 2023, Austin, TX, USA, 30 April 2023; WWW ’23. Association for Computing Machinery: New York, NY, USA, 2023; pp. 2371–2382. [Google Scholar] [CrossRef]

- Zhang, L.; Li, L.; Li, X.; Cai, B.; Gao, Y.; Dou, R.; Chen, L. Efficient Membership Inference Attacks against Federated Learning via Bias Differences. In Proceedings of the 26th International Symposium on Research in Attacks, Intrusions and Defenses, Hong Kong, China, 16–18 October 2023; pp. 222–235. [Google Scholar] [CrossRef]

- Li, Y.; Jiang, Y.; Li, Z.; Xia, S.-T. Backdoor Learning: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 5–22. [Google Scholar] [CrossRef] [PubMed]

- Sun, G.; Cong, Y.; Dong, J.; Wang, Q.; Lyu, L.; Liu, J. Data Poisoning Attacks on Federated Machine Learning. IEEE Internet Things J. 2022, 9, 11365–11375. [Google Scholar] [CrossRef]

- Sharma, A.; Marchang, N. A Review on Client-Server Attacks and Defenses in Federated Learning. Comput. Secur. 2024, 2024, 103801. [Google Scholar] [CrossRef]

- Cao, X.; Gong, N.Z. MPAF: Model Poisoning Attacks to Federated Learning Based on Fake Clients. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3396–3404. [Google Scholar]

- Du, R.; Liu, C.; Gao, Y. Anonymous Federated Learning Framework in the Internet of Things. Concurr. Comput. 2024, 36, e7901. [Google Scholar] [CrossRef]

- Yan, N.; Li, Y.; Chen, J.; Wang, X.; Hong, J.; He, K.; Wang, W. Efficient and Straggler-Resistant Homomorphic Encryption for Heterogeneous Federated Learning. In Proceedings of the IEEE INFOCOM 2024-IEEE Conference on Computer Communications, Vancouver, BC, Canada, 20–23 May 2024; pp. 791–800. [Google Scholar]

- Jin, W.; Yao, Y.; Han, S.; Gu, J.; Joe-Wong, C.; Ravi, S.; Avestimehr, S.; He, C. FedML-HE: An Efficient Homomorphic-Encryption-Based Privacy-Preserving Federated Learning System. arXiv 2024. [Google Scholar] [CrossRef]

- Nguyen, N.-H.; Nguyen, T.-A.; Nguyen, T.; Hoang, V.T.; Le, D.D.; Wong, K.-S. Towards Efficient Communication and Secure Federated Recommendation System via Low-Rank Training. In Proceedings of the ACM Web Conference 2024, Stuttgart, Germany, 21–24 May 2024; ACM: Singapore, 2024; pp. 3940–3951. [Google Scholar] [CrossRef]

- Janbaz, S.; Asghari, R.; Bagherpour, B.; Zaghian, A. A Fast Non-Interactive Publicly Verifiable Secret Sharing Scheme. In Proceedings of the 2020 17th International ISC Conference on Information Security and Cryptology (ISCISC), Tehran, Iran, 9–10 September 2020; pp. 7–13. [Google Scholar] [CrossRef]

- Zhang, X.; Fu, A.; Wang, H.; Zhou, C.; Chen, Z. A Privacy-Preserving and Verifiable Federated Learning Scheme. In Proceedings of the ICC 2020–2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Hu, R.; Guo, Y.; Gong, Y. Federated Learning with Sparsified Model Perturbation: Improving Accuracy under Client-Level Differential Privacy. IEEE Trans. Mob. Comput. 2023, 23, 8242–8255. [Google Scholar] [CrossRef]

- Hu, Q.; Song, Y. User Consented Federated Recommender System Against Personalized Attribute Inference Attack. In Proceedings of the 17th ACM International Conference on Web Search and Data Mining, Mérida, Mexico, 4–8 March 2024; ACM: Merida, Mexico, 2024; pp. 276–285. [Google Scholar] [CrossRef]

- Mai, P.; Pang, Y. Vertical Federated Graph Neural Network for Recommender System. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 23516–23535.

- Chen, R.; Huang, C.; Qin, X.; Ma, N.; Pan, M.; Shen, X. Energy Efficient and Differentially Private Federated Learning via a Piggyback Approach. IEEE Trans. Mob. Comput. 2023, 23, 2698–2711.

- Wang, J.; Guo, S.; Xie, X.; Qi, H. Protect Privacy from Gradient Leakage Attack in Federated Learning. In Proceedings of the IEEE INFOCOM 2022-IEEE Conference on Computer Communications, Online, 2–5 May 2022; pp. 580–589.

- Chamikara, M.A.P.; Bertok, P.; Khalil, I.; Liu, D.; Camtepe, S. Privacy Preserving Distributed Machine Learning with Federated Learning. Comput. Commun. 2021, 171, 112–125.

- Jin, H.; Zhang, P.; Dong, H.; Wei, X.; Zhu, Y.; Gu, T. Mobility-Aware and Privacy-Protecting QoS Optimization in Mobile Edge Networks. IEEE Trans. Mob. Comput. 2022, 23, 1169–1185.

- Choudhury, O.; Gkoulalas-Divanis, A.; Salonidis, T.; Sylla, I.; Park, Y.; Hsu, G.; Das, A. Anonymizing Data for Privacy-Preserving Federated Learning. arXiv 2020, arXiv:2002.09096.

- Zhao, B.; Fan, K.; Yang, K.; Wang, Z.; Li, H.; Yang, Y. Anonymous and Privacy-Preserving Federated Learning with Industrial Big Data. IEEE Trans. Ind. Inform. 2021, 17, 6314–6323.

- Majeed, A.; Lee, S. Anonymization Techniques for Privacy Preserving Data Publishing: A Comprehensive Survey. IEEE Access 2020, 9, 8512–8545.

- Yan, H.; Zhang, W.; Chen, Q.; Li, X.; Sun, W.; Li, H.; Lin, X. RECESS Vaccine for Federated Learning: Proactive Defense Against Model Poisoning Attacks. Adv. Neural Inf. Process. Syst. 2023, 36, 8702–8713.

- Xie, Y.; Fang, M.; Gong, N.Z. FedREDefense: Defending against Model Poisoning Attacks for Federated Learning Using Model Update Reconstruction Error. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Yan, G.; Wang, H.; Yuan, X.; Li, J. DeFL: Defending against Model Poisoning Attacks in Federated Learning via Critical Learning Periods Awareness. Proc. AAAI Conf. Artif. Intell. 2023, 37, 10711–10719.

- Xu, R.; Gao, S.; Li, C.; Joshi, J.; Li, J. Dual Defense: Enhancing Privacy and Mitigating Poisoning Attacks in Federated Learning. Adv. Neural Inf. Process. Syst. 2024, 37, 70476–70498.

- Qin, Z.; Chen, F.; Zhi, C.; Yan, X.; Deng, S. Resisting Backdoor Attacks in Federated Learning via Bidirectional Elections and Individual Perspective. Proc. AAAI Conf. Artif. Intell. 2024, 38, 14677–14685.

- Zheng, J.; Li, K.; Yuan, X.; Ni, W.; Tovar, E. Detecting Poisoning Attacks on Federated Learning Using Gradient-Weighted Class Activation Mapping. In Companion Proceedings of the ACM Web Conference 2024 (WWW ’24), Singapore, 13–17 May 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 714–717.

- Jia, J.; Yuan, Z.; Sahabandu, D.; Niu, L.; Rajabi, A.; Ramasubramanian, B.; Li, B.; Poovendran, R. FedGame: A Game-Theoretic Defense against Backdoor Attacks in Federated Learning. Adv. Neural Inf. Process. Syst. 2023, 36, 53090–53111.

- Panda, A.; Mahloujifar, S.; Bhagoji, A.N.; Chakraborty, S.; Mittal, P. SparseFed: Mitigating Model Poisoning Attacks in Federated Learning with Sparsification. In Proceedings of the 25th International Conference on Artificial Intelligence and Statistics, Valencia, Spain, 28–30 March 2022; pp. 7587–7624.

- Zhu, C.; Roos, S.; Chen, L.Y. LeadFL: Client Self-Defense against Model Poisoning in Federated Learning. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023.

- Zhang, K.; Tao, G.; Xu, Q.; Cheng, S.; An, S.; Liu, Y.; Feng, S.; Shen, G.; Chen, P.-Y.; Ma, S.; et al. FLIP: A Provable Defense Framework for Backdoor Mitigation in Federated Learning. arXiv 2022, arXiv:2210.12873.

- Huang, T.; Hu, S.; Chow, K.-H.; Ilhan, F.; Tekin, S.F.; Liu, L. Lockdown: Backdoor Defense for Federated Learning with Isolated Subspace Training. Adv. Neural Inf. Process. Syst. 2023, 36, 10876–10896.

- Mai, P.; Yan, R.; Pang, Y. RFLPA: A Robust Federated Learning Framework against Poisoning Attacks with Secure Aggregation. Adv. Neural Inf. Process. Syst. 2024, 37, 104329–104356.

- Yu, Y.; Liu, Q.; Wu, L.; Yu, R.; Yu, S.L.; Zhang, Z. Untargeted Attack against Federated Recommendation Systems via Poisonous Item Embeddings and the Defense. Proc. AAAI Conf. Artif. Intell. 2023, 37, 4854–4863.

- FLShield: A Validation Based Federated Learning Framework to Defend Against Poisoning Attacks. Available online: https://ieeexplore.ieee.org/document/10646613 (accessed on 16 March 2025).

- Sun, J.; Li, A.; DiValentin, L.; Hassanzadeh, A.; Chen, Y.; Li, H. FL-WBC: Enhancing Robustness against Model Poisoning Attacks in Federated Learning from a Client Perspective. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34, pp. 12613–12624.

- Harper, F.M.; Konstan, J.A. The MovieLens Datasets: History and Context. ACM Trans. Interact. Intell. Syst. 2016, 5, 1–19.

- Wu, C.; Wu, F.; Cao, Y.; Huang, Y.; Xie, X. FedGNN: Federated Graph Neural Network for Privacy-Preserving Recommendation. Nat. Commun. 2022, 13, 3091.

- Sun, X.; Yu, F.R.; Zhang, P.; Sun, Z.; Xie, W.; Peng, X. A Survey on Zero-Knowledge Proof in Blockchain. IEEE Netw. 2021, 35, 198–205.

- Cao, L. Decentralized AI: Edge Intelligence and Smart Blockchain, Metaverse, Web3, and DeSci. IEEE Intell. Syst. 2022, 37, 6–19.

- Bourtoule, L.; Chandrasekaran, V.; Choquette-Choo, C.A.; Jia, H.; Travers, A.; Zhang, B.; Lie, D.; Papernot, N. Machine Unlearning. In Proceedings of the 2021 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 24–27 May 2021; pp. 141–159.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).