Abstract

To address the need for comprehensive terminology construction in rapidly evolving domains such as blockchain, this study examines how large language models (LLMs), particularly GPT, enhance automatic term extraction through human feedback. The experimental part involves 60 bachelor’s students interacting with GPT in a three-step iterative prompting process: initial prompt formulation, intermediate refinement, and final adjustment. At each step, the students’ prompts are evaluated by teachers using a structured rubric based on 6C criteria (clarity, complexity, coherence, creativity, consistency, and contextuality), with the summed scores forming an overall grade. The analysis indicates that (1) students’ overall grades correlate with GPT’s performance across all steps, reaching the highest correlation (0.87) at Step 3; (2) the importance of rubric criteria varies across steps, e.g., clarity and creativity are the most crucial initially, while complexity, coherence, and consistency influence subsequent refinements, and contextuality has no effect at any step; and (3) the linguistic accuracy of prompt formulations significantly outweighs domain-specific factual content in influencing GPT’s performance. These findings suggest that GPT has a robust foundational understanding of blockchain terminology, making clear, consistent, and linguistically structured prompts more effective than contextual domain-specific explanations for automatic term extraction.

1. Introduction

Artificial intelligence (AI) is increasingly being integrated into educational settings, offering new ways to support both teaching and learning. The advent of large language models (LLMs) like OpenAI’s GPT series (e.g., ChatGPT) has brought about what some call a paradigm shift in pedagogy. Generative AI tools are reconfiguring the educational landscape by enabling more dialogic, learner-centric approaches. ChatGPT has demonstrated performance comparable to that of human learners on professional examinations and has shown potential as a virtual intelligent tutor in fields such as medicine, physics [1], and programming [2].

One critical factor in effectively using AI in education is how questions or tasks are communicated to it, that is, the prompt given to the AI model. The quality and strategy of prompts can strongly influence the relevance and accuracy of the AI’s responses. Indeed, prompt engineering has emerged as a key skill for both educators and students when interacting with LLMs. Although prompt engineering is a relatively new field, early explorations indicate that well-crafted prompts can significantly enhance the usefulness of AI in educational tasks [3,4]. For example, giving the model a specific role or context, clearly defining the task, or providing example answers can all steer it to produce more relevant and detailed responses. Conversely, poorly phrased or vague prompts may lead to misleading or incorrect answers, a known challenge when people use AI tools without guidance [5]. Therefore, understanding and teaching prompt engineering is increasingly seen as vital to AI-assisted learning [6].

In this context, our study explores prompt engineering in an educational environment. We conducted an experiment with 60 bachelor’s students using a ChatGPT-based application for automatic term extraction (ATE). Our goal is to examine how various prompt strategies affect GPT’s performance and the learning support it offers. As students rely more on AI tools, knowing how to ask questions or provide instructions becomes a crucial skill. Automatic term extraction is a prime example: while GPT can help students identify key concepts, its effectiveness depends on how the task is formulated in the prompt, and different prompt strategies can produce widely varying results. This variability motivates a systematic investigation of which prompt characteristics lead to more accurate, reliable GPT outputs. By understanding effective prompt design, we aim to enhance AI-assisted learning and help students obtain better support from GPT. Our study addresses these challenges through the following research questions:

- RQ1. What should be the criteria for evaluating student prompts?

- RQ2. Is there a relationship between teacher-assigned grades, based on established criteria, and GPT performance?

- RQ3. How does varying prompt formulation affect GPT’s performance?

- RQ4. Are there clear patterns indicating that certain prompt strategies lead to better GPT performance?

The rest of the paper is organized as follows. The “Related work” section reviews prior studies on GPT applications in education and demonstrates the importance of prompt engineering for AI-assisted learning. The “Materials and Methods” section outlines our approach to prompt evaluation and describes the experimental work. The “Results and Discussion” section presents and interprets the experimental results, highlighting their implications for AI-assisted learning. Finally, the “Conclusions” section formulates important insights and suggests directions for future research.

2. Related Work

The use of AI, and specifically large language models like GPT, in education has grown rapidly in recent years. Several studies have highlighted the potential of ChatGPT and similar models to transform teaching and learning practices [7,8]. For instance, Kamalov et al. reviewed the multifaceted impact of AI on education and identified four key areas of influence: personalized learning, intelligent tutoring systems, assessment automation, and teacher–student collaboration [9]. Their findings align with the work of Yu and Guo, who identified four key perspectives on the role of generative AI in education: personalized education, intelligent tutoring, collaborative education, and virtual learning [10]. These studies collectively emphasize several complementary dimensions through which AI is increasingly reshaping educational methodologies and teacher–student interactions.

Personalized learning with generative AI may include the following: (1) generating unique learning content based on students’ interests, abilities, and knowledge levels, (2) supporting unique learning routes based on each student’s progress, and (3) personalized assessment and feedback [10]. For example, Diyab et al. developed an AI-based assessment system that uses GPT-4 to automatically grade exam questions, identify knowledge gaps, and provide instant feedback to students [4]. Their system demonstrated that GPT-4 could successfully produce relevant practice exercises based on course outcomes, flag areas where a student’s understanding is weak, and supply feedback tailored to the student’s answers. Ref. [11] presented an AI-enhanced evaluation tool that customizes GPT-4 to automate the evaluation of students’ image-based typography designs. The authors observed generally good alignment between the AI tool and human evaluators, although some notable differences emerged that require further investigation. Ref. [12] explored the efficacy of using ChatGPT as an automatic grader for student essays. The researcher examined how ChatGPT’s grades for written responses compared with those of human raters, discussing issues such as grading consistency and feedback quality.

Such results underscore that, when properly directed, AI can reduce teacher workload and enhance the learning experience by personalizing support at scale. A study reported that ChatGPT performed at a near-human level in specialized medical licensing tests and acted as a virtual tutor in domains like healthcare training [13]. These successes come with caveats: concerns about misinformation, bias, or over-reliance on AI have also been noted [14,15]. Nonetheless, the consensus in the recent literature is that generative AI, if guided appropriately, can be a powerful tool for education, from intelligent tutoring systems and content generation to collaborative learning facilitation.

Considering this potential, prompt engineering has emerged as an important area of study because the quality of AI output in educational contexts is highly dependent on the prompt [3]. Preliminary work by White et al. introduced a prompt pattern catalog to guide effective prompt engineering with ChatGPT [16]. They identified a variety of prompt patterns that can improve the model’s understanding and responses, such as giving role instructions, providing step-by-step examples, or asking the model to “think aloud” (chain-of-thought prompting).

In the realm of education, Leung outlined several strategies that teachers can use to craft optimal prompts for student learning, for example, assigning specific roles to the AI, clearly defining objectives in the question, applying constraints, using structured formats or templates for prompts, and engaging in Socratic questioning through dialogue [3]. These strategies correspond closely to the prompt types we examine in our study and are believed to foster more accurate, detailed, and useful answers from the AI. A survey of teachers cited by Leung found that such prompt-crafting techniques enhance students’ learning experiences with GPT, as well as their engagement and critical thinking [3].

While educators have started to document best practices, there is a growing interest in understanding how students themselves interact with ChatGPT and learn to optimize prompts. A qualitative study by Carl et al. observed non-expert teachers co-creating an educational game with ChatGPT and noted that through iterative interactions, the teachers tacitly learned the principles of prompt engineering [17]. A study by Chen et al. introduced a system called StuGPTViz to analyze how students prompt ChatGPT in classroom tasks [18]. By coding hundreds of student-AI conversation logs, the researchers identified common prompt patterns and linked them to students’ cognitive processes. They categorized prompts on two axes: learning-related (reflecting the student’s cognitive level or intent, such as recalling facts vs. creating new ideas) and ChatGPT-related (how well the student leverages the tool, e.g., providing context, examples, or instructions).

Scholl and Kiesler conducted large-scale surveys on GPT usage in educational settings (e.g., surveying 298 students in an introductory programming course) and discovered a remarkable diversity in how students apply and interact with GPT [19]. The majority reported using the tool for problem comprehension, conceptual understanding, code generation, debugging, and syntax correction. Although some students noted that ChatGPT could provide satisfactory answers to simple prompts even when spelling or syntax errors were present, a larger proportion encountered challenges in obtaining adequate responses for more complex queries, thus underscoring the importance of carefully crafted prompts. In a related study, Sun et al. found that incorporating structured prompts into programming instructions can improve students’ learning experiences when working with ChatGPT [20].

A recent study by Bozkurt emphasized that prompt engineering is more than a set of technical tricks: it is “the key to unlocking the full potential” of generative AI and stands at the intersection of art and science [21]. Effective prompt design transcends mere technical manipulation and involves creativity, strategic thinking, and a deep understanding of what AI models can (and cannot) do. The study outlined a range of strategies for crafting effective prompts, such as clarifying objectives, providing context, assigning roles or personas, iterating and refining prompts, ensuring step-by-step reasoning, specifying desired output formats, and asking for evidence.

Cain highlighted three core components as the foundation of effective prompt engineering: content knowledge, critical thinking, and iterative design [22]. Firstly, a deep understanding of the content is crucial for guiding AI responses toward user objectives. This goes beyond knowing facts; it involves grasping their broader implications and contexts. As a result, crafting prompts encourages not only nuanced language use but also innovative engagement with the material. Secondly, critical thinking comes into play by questioning, verifying, and evaluating the AI’s outputs. Finally, as an iterative design process, prompt engineering typically begins with a vision and then moves through planning, design, testing, and refinement. The author emphasizes that this iterative process “is more than just a methodology, it’s the roadmap” for achieving optimal results from AI.

However, recent studies highlight the difficulties of incorporating prompt engineering into formal education due to its rapid evolution and short skill lifespan [23]. Each update to generative AI models necessitates new techniques, forcing practitioners to relearn the skill repeatedly. Oppenlaender et al. noted that, while industry leaders caution against investing heavily in mastering prompt engineering due to its mutability, demand for these skills continues to grow across both the tech industry and government institutions [23]. Moreover, their analysis of job postings revealed that prompt engineering roles demand a broader technical background, including Python programming, deep learning frameworks, and model evaluation, rather than just writing effective prompts. They argue that this complexity, coupled with rapid obsolescence, may widen the gap between academia and industry.

In summary, previous studies suggest that despite its challenges, prompt engineering is emerging as a key skill for the 21st century [24,25,26,27]. However, there is a notable lack of quantitative, controlled studies measuring the impact of different prompt strategies on objective performance metrics in student-AI interactions [28]. Empirical evidence remains scarce on whether students improve their interaction performance after applying specific strategies or tactics. Addressing this gap is crucial for transitioning from theoretical discussions to evidence-based practice. Our study contributes by experimentally comparing different prompt types in a real classroom setting and using statistical analysis to validate their effects.

3. Materials and Methods

3.1. Participants and Context

The experiment was conducted with 60 bachelor’s students majoring in Big Data who were learning to use AI in research. Although the task was nominally part of the “Research Methods and Tools” (RMT) course, the trimester’s curriculum also included two concurrent courses, “Big Data and Distributed Algorithms” (BDDA) and “Natural Language Processing” (NLP). By asking students to design prompts for automatic term extraction (ATE) in the blockchain domain, we placed the exercise in a cross-disciplinary context where the domain content came from BDDA, the methodological foundation came from NLP, and the meta-skill of prompt engineering was learned in RMT. Beyond the cross-disciplinary rationale, two additional motives drove our choice of ATE as the task and blockchain as the domain.

First, ATE provided a clearly defined, measurable task that could be completed within a single lab or practical session. Despite the limited time frame, this task embodied the full prompt-engineering cycle from query design and model interaction to result validation. The expected output was a finite list of domain terms, so success criteria could be quantified with straightforward precision, recall, and F1 metrics, allowing students to receive immediate feedback. ATE also minimized extraneous cognitive load, allowing students to focus on refining prompts instead of struggling with complex downstream evaluation pipelines.
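For reference, the three metrics can be written in the standard set-based form below, where $E$ denotes the set of terms extracted by GPT and $G$ the gold (reference) list. We assume here that a term counts as correct only when it matches a reference term exactly; the numbers in the second line are a purely hypothetical illustration (45 extracted terms, 36 of them in a 50-term reference list), not results from this study.

$$
P = \frac{|E \cap G|}{|E|}, \qquad R = \frac{|E \cap G|}{|G|}, \qquad F_1 = \frac{2PR}{P + R}
$$

$$
P = \frac{36}{45} = 0.80, \qquad R = \frac{36}{50} = 0.72, \qquad F_1 = \frac{2 \cdot 0.80 \cdot 0.72}{0.80 + 0.72} \approx 0.758
$$

On the 0 to 100 scale used in the results, this hypothetical case corresponds to an F1-score of about 75.8.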

Second, the fast-evolving, jargon-rich blockchain lexicon generates new terms at a pace rarely matched even in other IT domains. Recent benchmarks indicate that state-of-the-art LLMs perform noticeably worse on blockchain topics than on mainstream NLP tasks [29]. Consequently, the blockchain domain served as a stress test for prompt design, forcing students to elicit reliable answers from models whose prior knowledge was partial or outdated and making the effect of prompt structure easy to observe and quantify.

The experiment was conducted as part of a flipped-learning format, as shown in Figure 1. During the lab session, students worked independently with GPT-4o via a Python 3.11.12 application, generating and iteratively refining prompts without explicit guidance. This exploratory phase revealed individual misconceptions and knowledge gaps. After reviewing the results, teachers led a feedback practical session in which they introduced the 6C evaluation criteria and discussed selected student prompts. A subsequent lecture then supplied the underlying theory of prompt engineering and situated the evaluation framework within a broader AI context.

Figure 1.

Experimental context, inspired by [30].

3.2. Experimental Design

All students used our custom chat application (powered by OpenAI’s ChatGPT, San Francisco, CA, USA) as a virtual assistant for automatic term extraction in the blockchain domain. Before the experiment, students received a brief orientation on using the application but were not explicitly trained in prompt engineering. The goal was to observe how students naturally interacted with GPT and how their prompts evolved as they attempted to obtain more accurate responses. The experiment employed a within-subjects design, in which related measurements were collected from the same students across three consecutive prompts. Each student’s initial prompt served as an internal baseline, rather than using separate control and treatment groups. This approach is common in prompt-engineering research, as shown in earlier studies (e.g., [31,32]).

The GPT-4o model was accessed through the application’s interface, allowing students to input prompts and receive responses. The model was configured with a temperature of 0 to make its outputs as deterministic as possible. A 942-word text on blockchain and a “gold” (reference) list of 50 manually extracted terms, verified by two blockchain experts, were provided for the task. The reference list served as the ground truth for evaluating GPT’s performance in response to students’ prompts. At the same time, we emphasize that the reference term list is not an indisputable ultimate truth. In terminology work, it can never be, because what qualifies as a term depends on perspective, purpose, and time. Our intention is to use the reference list as an operational gold standard whose inevitable imperfections have been minimized through double expert manual annotation. The reference list was used exclusively by the application to evaluate the model’s performance: GPT-4o had access only to the text, from which it generated its own term list, and never saw the reference list. To ensure a consistent output format, students were told that they did not need to specify how the extracted terms should be presented; the application implicitly appended a standardized service instruction to each prompt, directing GPT to output terms in a line-by-line format.
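The implicit service instruction and the temperature setting described above might be assembled roughly as in the following Python sketch. It only builds the request and sends nothing; the instruction wording and the names `SERVICE_INSTRUCTION`, `build_user_message`, and `build_request` are hypothetical, since the exact wording and code are not published. With the OpenAI Python library version 0.28, a dictionary of this shape could be passed to `openai.ChatCompletion.create(**request)`.

```python
# Hypothetical wording: the actual service instruction is not published.
SERVICE_INSTRUCTION = (
    "Return only the extracted terms, one term per line, "
    "without numbering or commentary."
)


def build_user_message(student_prompt: str, text: str) -> str:
    """Wrap a student's prompt with the source text and the format instruction."""
    return f"{student_prompt}\n\nText:\n{text}\n\n{SERVICE_INSTRUCTION}"


def build_request(history: list[dict], student_prompt: str, text: str) -> dict:
    """Assemble chat-completion arguments; nothing is sent to the API here."""
    messages = history + [
        {"role": "user", "content": build_user_message(student_prompt, text)}
    ]
    # Temperature 0 minimizes sampling randomness but does not strictly
    # guarantee identical replies across calls.
    return {"model": "gpt-4o", "messages": messages, "temperature": 0}
```

Passing the earlier messages as `history` is what lets Step 2 and Step 3 refine the model's previous answer rather than start from scratch.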

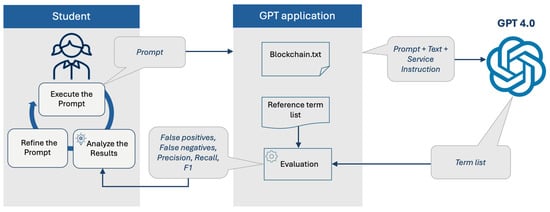

Figure 2 captures the entire three-step refinement loop. At each step, the student submits a prompt, which the application automatically wraps with the service instruction and the blockchain text before forwarding the bundle to GPT-4o. The model returns a line-by-line list of candidate terms. The back-end then compares this list with the reference list, calculating precision, recall, and F1-score. These metrics and the extracted term list, which highlights false positives and false negatives, are displayed immediately to the student, who then analyzes the outcome, refines the prompt, and begins the next step.

Figure 2.

Iterative prompt refinement workflow.
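The step in which the back-end turns the model's line-by-line reply into a term list could be implemented along the following lines. This is a minimal sketch under our own assumptions: it strips common list markers (numbers, dashes, bullets), skips blank lines, and drops case-insensitive duplicates; the application's actual parsing rules are not described here, and `parse_terms` is a hypothetical name.

```python
import re

# Matches common list prefixes such as "1.", "2)", "-", "*", or a bullet sign.
_PREFIX = re.compile(r"^\s*(?:\d+[.)]|[-*\u2022])\s*")


def parse_terms(reply: str) -> list[str]:
    """Turn a line-by-line model reply into an ordered, de-duplicated term list."""
    terms: list[str] = []
    seen: set[str] = set()
    for line in reply.splitlines():
        term = _PREFIX.sub("", line).strip()
        key = term.lower()
        # Keep the first spelling of each term and ignore empty lines.
        if term and key not in seen:
            seen.add(key)
            terms.append(term)
    return terms
```

For example, `parse_terms("1. Blockchain\n2) smart contract\n- Ledger\n\nblockchain")` keeps the three distinct terms in their original order and discards the repeated "blockchain".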

3.3. ChatGPT Experimental Application

The lightweight web application was developed in Python 3.11.12, with Gradio 5.29.1 for the frontend and GPT-4o, accessed via the OpenAI API (openai library version 0.28), as the backend. It allows students to interactively extract domain-specific terms from a fixed text and refine their prompts across three steps. Each participant was assigned a unique session in which all interactions, such as user prompts, assistant responses, and evaluation metrics, were stored in memory. The system maintains full conversation context by appending each new prompt and model reply to a session-specific message history. This ensures that prompts in Step 2 and Step 3 build directly on the model’s prior outputs, supporting multi-turn reasoning and cumulative refinement. Once the model responds, its output is parsed into a term list and compared with the reference term list to compute precision, recall, and F1-score. After Step 3, the application consolidates metrics, term lists, and prompt content from all stages into a single CSV file per participant.
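A per-participant session that accumulates the message history and, after Step 3, flattens all steps into one CSV row might be sketched as follows. The `Session` class, its fields, and the column naming scheme (`<variable>_step<n>`) are hypothetical illustrations of the behavior described above, not the application's actual code.

```python
import csv
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical in-memory session for one participant."""

    student_id: str
    messages: list[dict] = field(default_factory=list)  # full chat history
    steps: list[dict] = field(default_factory=list)  # one record per step

    def record_turn(self, prompt: str, reply: str, metrics: dict) -> None:
        # Appending both turns lets later requests carry the whole
        # conversation, so Step 2 and Step 3 build on earlier outputs.
        self.messages.append({"role": "user", "content": prompt})
        self.messages.append({"role": "assistant", "content": reply})
        self.steps.append({"prompt": prompt, "reply": reply, **metrics})

    def to_row(self) -> dict:
        """Flatten all steps into one row with step-suffixed column names."""
        row = {"student_id": self.student_id}
        for number, step in enumerate(self.steps, start=1):
            for key, value in step.items():
                row[f"{key}_step{number}"] = value
        return row

    def write_csv(self, path: str) -> None:
        """Write the flattened row, with a header line, to one CSV file."""
        row = self.to_row()
        with open(path, "w", newline="", encoding="utf-8") as handle:
            writer = csv.DictWriter(handle, fieldnames=list(row))
            writer.writeheader()
            writer.writerow(row)
```

Keeping one wide row per participant mirrors the per-participant CSV files; concatenating these rows yields a dataset with one record per student and a fixed set of variables per step.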

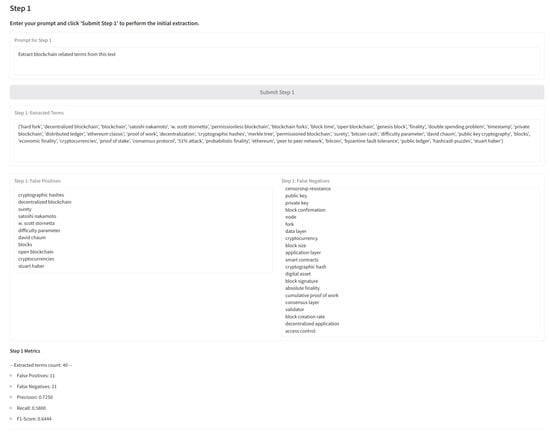

On the welcome page of the application, after starting the experiment, students were required to enter their name and ID and read the assigned text before proceeding to Step 1 of the task. Figure 3 and Figure 4 illustrate the students’ interaction with the application on the welcome page and at Step 1, respectively.

Figure 3.

Welcome page of the GPT-based term extraction application.

Figure 4.

Step 1 page of the GPT-based term extraction application.

Step 1. Students were asked to enter a first prompt to guide GPT in extracting terms from the text. Upon submitting their prompt by clicking “Submit Step 1”, students received four outputs: (1) a list of terms extracted by GPT from the text; (2) a list of false positives, i.e., words and phrases that were identified as terms but are absent from the reference term list; (3) a list of false negatives, i.e., words and phrases that were not identified as terms but are present in the reference term list; and (4) a set of performance metrics, including precision, recall, and F1-score. After reviewing these results, students proceeded to Step 2 of the task.
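The four outputs listed for each step can be derived from the extracted and reference lists with simple set operations, as in the sketch below. We assume case-insensitive, whitespace-normalized exact matching, which the paper does not specify; `evaluate_step` and `_normalize` are hypothetical names, and the metrics are scaled to the 0 to 100 range used when reporting F1-scores.

```python
def _normalize(term: str) -> str:
    """Lower-case and collapse whitespace so trivially different spellings match."""
    return " ".join(term.lower().split())


def evaluate_step(extracted: list[str], reference: list[str]) -> dict:
    """Return terms, false positives, false negatives, and metrics for one step."""
    ext = {_normalize(t): t for t in extracted}
    ref = {_normalize(t): t for t in reference}
    true_pos = ext.keys() & ref.keys()
    precision = len(true_pos) / len(ext) if ext else 0.0
    recall = len(true_pos) / len(ref) if ref else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "terms": list(extracted),
        # Extracted by GPT but absent from the reference list.
        "false_positives": sorted(ext[k] for k in ext.keys() - ref.keys()),
        # In the reference list but missed by GPT.
        "false_negatives": sorted(ref[k] for k in ref.keys() - ext.keys()),
        "precision": round(100 * precision, 2),
        "recall": round(100 * recall, 2),
        "f1": round(100 * f1, 2),
    }
```

Returning the original spellings in the false-positive and false-negative lists keeps the feedback shown to students readable even though matching is done on normalized keys.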

Step 2. Students were required to enter a second prompt to provide feedback to GPT, aiming to improve its ability to extract terms. The extraction was then repeated: the model extracted terms and the application displayed the updated results, including the extracted terms, false positives, false negatives, and performance metrics. After reviewing these results, students proceeded to Step 3 of the task.

Step 3. Students were required to submit a third prompt to provide further feedback to GPT. As in the previous steps, they received the model’s output, including the extracted terms, false positives, false negatives, and performance metrics. However, after this step, these results were considered final, and students could analyze them but not make further improvements. The completion of this step marked the conclusion of the experiment.

3.4. Data Collection

Upon completing the experiment, a dataset of 60 records was collected, each containing 24 variables corresponding to three sequential steps (8 variables per step). Table 1 provides the variable names along with their brief descriptions. Student identifiers and names were anonymized in the data. All students provided informed consent for their chat logs and performance to be used in research.

Table 1.

Variables explanation.

Figure 5 shows the distribution of F1-scores across the three steps. In Step 1, F1-scores are more varied, with a notable number of values below 50, suggesting that many initial prompts were simple and not very targeted. In Step 2, F1-scores shift towards higher values, with more instances clustering above 60, which indicates that performance improves as prompts are refined. In Step 3, most F1-scores are concentrated in the upper range (above 70), with fewer low scores. This suggests that, by the final step, most refinements pay off, while the remaining low scores show that the model is highly sensitive to prompt changes and can be pushed off course by poorly judged refinements.

Figure 5.

F1-scores distribution across Step 1, Step 2, and Step 3.

3.5. 6C Criteria for Prompt Evaluation

Recent studies have expanded the understanding of what makes prompts effective and how to systematically evaluate them. Various methods and tools for prompt assessment have been developed. For example, Zhu et al. introduced PromptBench, a benchmark for testing how robustly LLMs handle different prompt perturbations [33]. Kim et al. proposed EvalLM, a system that uses another LLM to assess prompts based on user-defined criteria [34]. Meanwhile, Zhang et al. introduced GLaPE, a method that evaluates prompts without relying on manually annotated labels [35].

Although these frameworks focus on model-centric evaluation, examining how reliably LLMs respond to varying prompts, educators still need clear, human-based guidelines for creating and evaluating prompts effectively. For instance, Lo’s CLEAR framework [36] proposed five criteria (Concise, Logical, Explicit, Adaptive, Reflective) to ensure coherent and context-rich formulation. Bsharat et al. collected 26 guiding principles for prompt design, ranging from conciseness and clarity to specifying the model’s role [37]. Tested on various models (from LLaMA 7B to GPT-4), these principles consistently improved response quality. The authors note that the effectiveness of individual principles varies across tasks and models. They highlight clarity and specificity as especially effective, while advanced techniques, such as chain-of-thought prompting or using a penalty phrase, can help in specific situations.

In this study, we propose a set of six criteria that teachers can use to evaluate prompts from three perspectives: clarity vs. complexity, coherence vs. creativity, and consistency vs. contextuality (see Table 2). Although each pair may appear to involve opposing concepts, they function as complementary dimensions, collectively representing a unity of opposites. Below, we articulate the theoretical rationale for selecting the 6C criteria, which answers our first research question (RQ1): What should be the criteria for evaluating student prompts?

Table 2.

6C Criteria for prompt evaluation.

Clarity and complexity. When reflecting on the criteria by which any text is evaluated, the foremost consideration is clarity, closely followed by complexity. Clarity, as a fundamental characteristic of text quality, pertains to how easily a reader can understand information. A clear text reduces ambiguity, and this aligns with Grice’s Maxim of Manner [38], which states that effective communication should be clear, concise, and orderly. However, studies in science education show that oversimplifying material in the interest of clarity can cause learning problems. While simple models and analogies help make complex topics approachable, they can also lead to incomplete or inaccurate understanding [39]. The consensus is that communication should maintain clarity without sacrificing necessary complexity.

Coherence and creativity. From a cognitive perspective, coherence and creativity engage distinct thinking systems [40,41,42]. Structured and coherent reasoning (System 2) can inhibit creativity, whereas loosening cognitive constraints fosters associative thinking (System 1). In learning, Cognitive Load Theory [43] posits that coherent, well-organized materials reduce extraneous cognitive load, facilitating comprehension. However, excessive structure may constrain creativity, which thrives on breaking patterns and exploring novel ideas. The more individuals rely on step-by-step, structured reasoning, the harder it becomes to think outside the box [44]. Moreover, fully guided instructional materials do not reduce cognitive load for experienced learners; rather, they may increase it. This phenomenon, known as the expertise reversal effect [45], suggests that, while structured guidance benefits novices, it can hinder experts by imposing unnecessary constraints. Sawalha et al. observed undergraduate students using ChatGPT to solve engineering problems with different prompt approaches [46]. The quality and accuracy of ChatGPT’s answers improved significantly when students rephrased questions or asked multiple follow-up questions rather than simply copying and pasting the problem statement. At the same time, there are known cases in which purely creative prompts reduce coherence or factual accuracy if not carefully constrained, as reported by Zamfirescu [47]. Ultimately, synergy emerges when creativity and coherence complement each other, balancing structured organization with the flexibility to generate novel insights.

Consistency and contextuality. Contextuality refers to the dependence of behavior or meaning on the surrounding context, whereas consistency implies stability or uniformity across different contexts. These two principles often stand in tension: what works well in one context might not generalize globally without contradictions. In fact, a formal perspective from quantum theory captures this duality succinctly: “Contextuality arises where we have a family of data which is locally consistent, but globally inconsistent” [48]. In AI interactions, this is illustrated when slightly altered prompts yield divergent or contradictory responses. Within a single query, a model may produce a coherent and stylistically adapted answer (local consistency), yet rephrasing the prompt or modifying the sequence of prompts can lead to incompatible results. As in quantum scenarios lacking “global hidden variables”, there is no single chain of inference spanning all prompts. Each context can reveal a distinct “local truth”, and reconciling these truths often proves infeasible. LLMs have made progress in generating text that is contextually relevant, but they might change their interpretation of a prompt when the context changes, which can threaten consistency. In fact, because an LLM is pretrained on vast, diverse data, it has absorbed many conflicting viewpoints; as a result, it may express inconsistent beliefs depending on which context or persona is invoked [49]. The tension arises because context can radically alter the interpretation and recall of knowledge, making consistency a non-trivial property. Productive synergy arises when local contexts feed into a global, evolving framework that maintains consistency without suppressing contextual richness.

The rubric used in this study employs a three-point rating system (0–2) to evaluate performance levels for each of the 6C criteria (see Table 3). A score of 0 indicates a low level of a criterion, 1 a medium level, and 2 a high level. This scale allows for clear differentiation between levels while minimizing subjectivity in scoring. A more granular scale (e.g., 0–5 or 0–10) could introduce ambiguity.

Table 3.

The rubric for assessing prompts based on the 6C Framework.

4. Results

4.1. Grades, F1-Scores and Impact of 6C Characteristics on GPT Performance

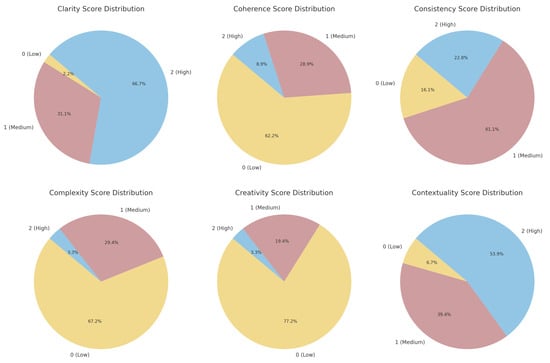

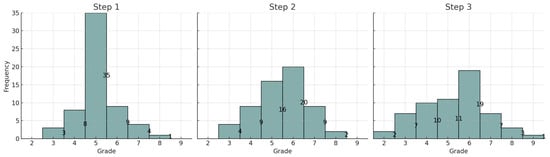

Using the proposed rubric, the 180 prompts created by 60 students across three steps were evaluated. The evaluation was conducted jointly by three teachers applying the 6C rubric, with scores assigned through group discussion and consensus. For reporting consistency, a designated lead evaluator compiled the final agreed-upon scores used in the analysis. After scores were assigned for each criterion, the lead evaluator summed them to obtain the overall prompt grade, which could thus range from 0 to 12. Figure 6 shows the 6C score distribution across Steps 1, 2, and 3, while Figure 7 illustrates the distribution of overall prompt grades across the three steps.

Figure 6.

Distribution of scores for the 6C criteria across Steps 1, 2, and 3.

Figure 7.

Overall grades distribution across Step 1, Step 2, and Step 3.
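The overall grade described above is a plain sum of the six 0 to 2 rubric scores. The sketch below makes that rule explicit and adds input validation; the function name `overall_grade` and the dictionary-based input are hypothetical conveniences, not part of the authors' procedure.

```python
CRITERIA = (
    "clarity",
    "complexity",
    "coherence",
    "creativity",
    "consistency",
    "contextuality",
)


def overall_grade(scores: dict[str, int]) -> int:
    """Sum the six 6C rubric scores (each 0, 1, or 2) into a grade from 0 to 12."""
    missing = set(CRITERIA).difference(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    for name in CRITERIA:
        if scores[name] not in (0, 1, 2):
            raise ValueError(f"{name} must be 0, 1, or 2, got {scores[name]}")
    return sum(scores[name] for name in CRITERIA)
```

Because every criterion carries the same weight, a grade of, say, 7 can arise from different score profiles, which is why the per-criterion distributions are examined alongside the overall grade.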

In Step 1, the grades are more evenly distributed: the highest grade is 8 and the lowest is 3, while the F1-score ranges from 44.44 to 70.71. By Step 2, a shift toward higher grades becomes noticeable. Although the highest and lowest grades remain the same (8 and 3, respectively), the highest F1-score increases to 76.47, and the lowest improves slightly to 46.94. In Step 3, the distribution as a whole does not improve further: the highest grade rises to 9, but the lowest drops to 2. Likewise, the highest F1-score reaches 94.95, while the lowest falls sharply to 14.93.

These results reveal both divergence in students’ ability to refine prompts and the model’s sensitivity to prompt variations over multiple iterations. The marked instability at the lower end of performance, especially in F1-scores, suggests that while some students effectively optimized their prompts, others inadvertently misled the model, causing considerable performance degradation. The widening gap between the highest and lowest F1-scores points to GPT’s increasing sensitivity to prompt refinements and underscores the double-edged nature of iterative prompt engineering.

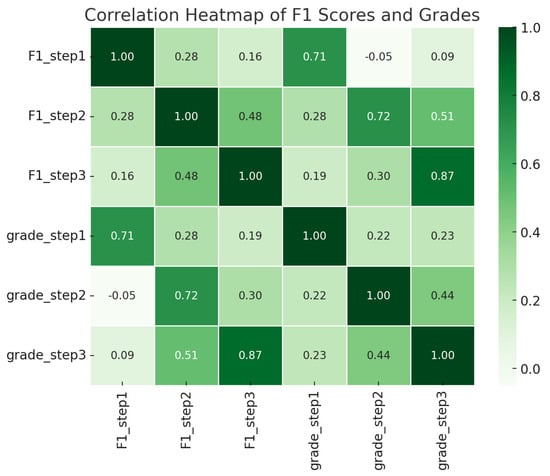

The correlation between teacher-assigned grades and F1-scores is high: 0.71 in Step 1, 0.72 in Step 2, and 0.87 in Step 3 (see Figure 8). The rising correlation indicates that the teacher’s rubric-based grades (6C criteria) align increasingly closely with GPT’s measured performance (F1-scores), with the largest gain between Steps 2 and 3. Even though GPT’s output quality does not necessarily improve monotonically across steps, the stronger alignment at Step 3 suggests that the aspects the teacher deems important (as reflected in the 6C rubric) become more closely tied to the F1-score of the terms extracted by GPT. Hence, these findings support a positive answer to RQ2: teacher-assigned evaluations track GPT’s performance metrics well.

Figure 8.

The correlation between teacher-assigned grades and F1-scores.
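The text does not name the correlation coefficient. Assuming it is Pearson’s r, computed separately for each step over the 60 (grade, F1-score) pairs, a minimal dependency-free sketch follows; Python 3.10+ also ships `statistics.correlation`, which computes the same quantity.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations (unnormalised covariance).
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations (unnormalised variances).
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)
```

With the study’s data, `pearson_r(grades_step3, f1_step3)` (hypothetical variable names) would correspond to the reported 0.87, provided the Pearson assumption holds.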

To assess the impact of prompt characteristics (clarity, complexity, coherence, creativity, consistency, and contextuality) on GPT’s performance (F1-score), we used ANOVA for the first step and ANCOVA for the second and third steps. At Step 1, clarity (p = 0.000026), creativity (p = 0.000050), complexity (p = 0.015531), and coherence (p = 0.049118) significantly affected the initial extraction outcomes. Consistency was marginally significant (p = 0.0988), while the effect of contextuality was absorbed by clarity and creativity. This suggests that the most effective initial prompts were clear, innovative, and logically structured, and that such prompts also tended to be contextually rich. At this step, the ANOVA model explains 61.14% of the variance in performance.

In Step 2, the previous performance (Step 1 F1-score) was used as a covariate and proved to be a robust predictor of success (p < 0.0001), highlighting the cumulative nature of learning. Among the 6C factors, complexity (p = 0.000012), coherence (p = 0.000079), consistency (p = 0.000086), clarity (p = 0.000806), and creativity (p = 0.0019) had significant positive effects. This suggests that structured, well-defined, and conceptually rich prompts played a critical role in improving performance at this intermediate step. Contextuality remained non-significant. At this step, the ANCOVA model explains 62.42% of the variance in performance.

In Step 3, the immediately preceding performance (Step 2 F1-score) was used as the covariate. Coherence (p = 9.25 × 10⁻⁷), consistency (p < 0.0001), complexity (p = 0.00053), creativity (p = 0.0037), and clarity (p = 0.0067) all had significant effects, while contextuality remained non-significant. Unlike in Step 2, the prior score did not significantly predict Step 3 performance, meaning that the outcome was driven primarily by clarity, complexity, coherence, creativity, and consistency rather than by earlier results. At this step, the ANCOVA model explains 78.35% of the variance in performance.
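The “variance explained” figures (61.14%, 62.42%, and 78.35%) are presumably the coefficient of determination R² of the fitted models. The sketch below fits an ordinary least-squares model with an intercept and returns R². It assumes the six criterion scores enter as numeric predictors and, for Steps 2 and 3, the previous step’s F1-score as a linear covariate. The authors may instead have coded the scores as categorical factors, and the sketch omits the F-tests behind the reported p-values.

```python
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented matrix; copies rows so the caller's data is not modified.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Choose the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is rank-deficient")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def ols_r_squared(rows: Sequence[Sequence[float]], y: Sequence[float]) -> float:
    """Fit y ~ 1 + predictors by OLS and return R^2 (share of variance explained).

    Each row holds one prompt's predictors, e.g. the six 6C scores plus,
    for an ANCOVA-style model, the previous step's F1-score.
    """
    # Prepend an intercept column of ones to every observation.
    x = [[1.0] + [float(v) for v in row] for row in rows]
    k = len(x[0])
    # Normal equations: (X^T X) beta = X^T y.
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    fitted = [sum(bi * v for bi, v in zip(beta, r)) for r in x]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for a constant response")
    return 1.0 - ss_res / ss_tot
```

Under this reading, the unexplained share discussed in Section 4.2 is simply 1 − R² for each step.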

The foregoing allows us to answer our second research question (RQ2): Is there a relationship between teacher-assigned grades, based on established criteria, and GPT performance? There is a statistically significant relationship between teacher-assigned scores based on the 6C framework and GPT performance (F1-score) across all three steps. Clarity, coherence, complexity, and creativity significantly predicted model output quality at every step, and consistency did so at Steps 2 and 3 (it was marginal at Step 1). Contextuality was never significant; at Step 1, its effect was absorbed by clarity and creativity.

4.2. The Sensitivity of GPT to Prompt Formulation

The preceding analysis established a statistically significant relationship between teacher-assigned 6C scores and GPT’s F1 performance, relying on correlation and ANOVA/ANCOVA. While these findings suggest that higher-rated prompts that satisfy the 6C criteria typically result in stronger GPT outputs, such coarse-grained statistical tools fail to capture the subtle semantic and structural nuances of prompts that influence model performance. Indeed, although rubric-based assessments correlate closely with GPT’s performance in Steps 1, 2, and 3 (0.71, 0.72, and 0.87, respectively), and ANOVA/ANCOVA explain a large share of the variance (61.14%, 62.42%, and 78.35%), a notable fraction of GPT’s performance remains unaccounted for (approximately 39%, 38%, and 22%).

This residual variability calls for a finer-grained analysis of how distinct prompt formulations influence GPT’s ability to extract terms. To this end, we first classified the prompts by complexity level according to the SOLO taxonomy [50] and then examined variations within each level. This ensured that, when assessing the impact on GPT’s term-extraction performance, only prompts of similar structural complexity were compared.

The SOLO taxonomy consists of five hierarchical levels, but for our classification, we focused on three key levels relevant to prompt formulation: pre-structural (0), unistructural (1), and multi-structural (2). Pre-structural prompts are minimal, offering little to no structure or specificity to guide GPT’s response. Unistructural prompts focus on a single aspect, providing basic structure but lacking depth. Multi-structural prompts incorporate multiple aspects but lack taxonomic or systematic depth. Table 4 presents examples of Step 1 prompts categorized by SOLO level.

Table 4.

Examples of Step 1 prompts categorized by SOLO levels (the spelling has been preserved).

At complexity level 0, the prompts appear quite similar at first glance: they typically contain a simple instruction to extract or list terms related to blockchain. However, the model’s performance on these prompts varies noticeably, with F1-scores ranging from 49.21% to 69.77%. For example, prompts 12 and 20 differ in only one linguistic nuance: “related to blockchain” versus “about blockchain domain”. Yet the model extracts 25 and 36 candidate terms, respectively. The phrase “related to blockchain” appears to lead the model to focus on terms directly associated with blockchain. In contrast, “about blockchain domain” points to the field as a whole and leads the model to retrieve a wider range of terms, including technical and adjacent concepts; the word “domain” also appears to elicit a richer and more varied domain terminology. This broader, domain-specific framing likely explains the larger candidate set for prompt 20 and, consequently, its higher recall, which raised its overall F1-score.

It is also instructive to compare prompts 21 and 27. Both explicitly ask the model to extract or write out blockchain-related terms from a given text. However, prompt 27 includes the word “all”, which substantially increases the number of extracted candidates (76, versus 37 for prompt 21). Despite this difference, their F1-scores are comparable: in prompt 21, the more targeted extraction yields higher precision, which compensates for lower recall, whereas the exhaustive approach of prompt 27 increases recall but reduces precision.
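To make this precision/recall trade-off concrete, the sketch below scores a candidate list against a reference term list. It assumes exact matching after lowercasing and trimming; the study’s own matching criteria may differ, and the toy term lists are hypothetical.

```python
from typing import Iterable, Tuple


def evaluate_extraction(candidates: Iterable[str],
                        reference: Iterable[str]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) in percent for a set of extracted terms."""
    # Assumed matching rule: exact string match after lowercasing and trimming.
    cand = {t.strip().lower() for t in candidates}
    ref = {t.strip().lower() for t in reference}
    true_pos = len(cand & ref)
    precision = 100.0 * true_pos / len(cand) if cand else 0.0
    recall = 100.0 * true_pos / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical toy data: a targeted list versus an exhaustive ("all") list.
REFERENCE = ["blockchain", "smart contract", "consensus", "hash", "ledger"]
TARGETED = ["Blockchain", "smart contract", "hash"]
EXHAUSTIVE = TARGETED + ["ledger", "consensus", "network", "data", "user"]

if __name__ == "__main__":
    print(evaluate_extraction(TARGETED, REFERENCE))    # (100.0, 60.0, 75.0)
    print(evaluate_extraction(EXHAUSTIVE, REFERENCE))  # precision 62.5, recall 100.0, F1 about 76.92
```

In the toy case, the targeted list is perfectly precise but misses terms, while the exhaustive list finds every term at the cost of false positives, and the two F1-scores end up close, mirroring prompts 21 and 27.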

At complexity level 1, prompts 1, 6, and 17 also confirm the importance of the word “all”. Prompt 1 requests the extraction of “key” terms, while prompts 6 and 17 ask for “all” terms. As a result, the high precision and low recall of prompt 1 offset each other in the F1-score, just as the low precision and high recall of prompts 6 and 17 do. Comparing prompts 6 and 17, however, reveals a notable difference: prompt 6 is more detailed and structured, which improves the model’s precision. Consequently, despite having the same recall, prompt 6 achieves a higher F1-score.

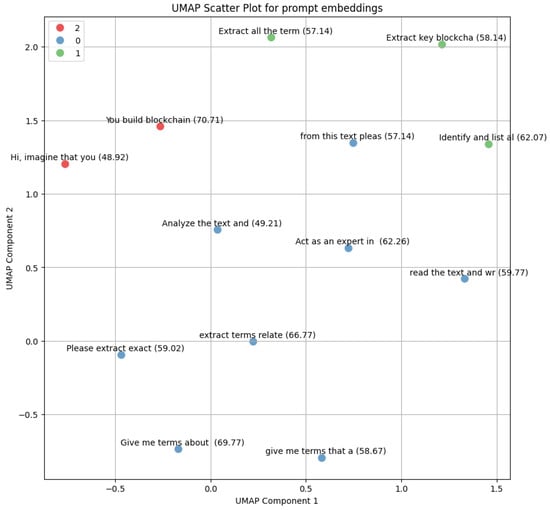

At complexity level 2, prompts 32 and 54 illustrate how task framing affects performance. Prompt 32 positions the model as a data annotator, requiring it to assess each word and phrase for domain relevance. This directive favors recall (68%) at the cost of precision (38.2%), yielding a lower F1-score (48.92%); the annotator role appears to steer the model toward inclusion rather than selectivity. In contrast, prompt 54 frames the task as ontology construction, which implicitly shifts the model’s focus from broad extraction to structured selection. Precision rises sharply to 71.43%, while recall remains roughly stable (70%), leading to a much higher F1-score (70.71%). Figure 9 shows the prompt embeddings projected onto a two-dimensional plane with UMAP. Even after this dimensionality reduction, their arrangement largely corresponds to their respective SOLO taxonomy classes. Notably, there is no evident correlation between the performance of closely related prompts, reinforcing the idea that subtle linguistic variations can substantially affect GPT’s performance.
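As a check on these figures, the F1-score is the harmonic mean of precision P and recall R, and substituting the reported values for prompts 32 and 54 reproduces the stated F1-scores:

$$
F_1 = \frac{2PR}{P + R}
$$

$$
F_1^{(32)} = \frac{2 \times 38.2 \times 68}{38.2 + 68} = \frac{5195.2}{106.2} \approx 48.92, \qquad
F_1^{(54)} = \frac{2 \times 71.43 \times 70}{71.43 + 70} = \frac{10000.2}{141.43} \approx 70.71
$$

Because recall moves only from 68 to 70 while precision nearly doubles, almost all of the gain for prompt 54 comes from precision.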

Figure 9.

UMAP scatterplot of the embeddings for the analyzed prompts.

Therefore, we can answer our third research question (RQ3): How does varying prompt formulation affect GPT’s performance? Depending on prompt formulation, GPT exhibits a distinct bias toward either precision or recall. Changes that seem minor at first glance (such as adding a single word like “all”, “domain”, or “only”) can shift the results toward higher recall or, conversely, higher precision. This finding underscores the importance of carefully crafting prompts when designing LLM-based term extraction systems, so as to strike an optimal balance between minimizing false negatives and avoiding false positives. Translated into an educational context, the answer to RQ3 implies that students should be taught that LLMs do not “read minds”; rather, they respond to specific words, punctuation, and other textual features.

4.3. Patterns for Successful Prompts

To further investigate the interaction between the 6C criteria and GPT performance, we conducted an association rule analysis. This analysis aimed to uncover hidden dependencies among the criteria and their relationship with the final F1-scores across different steps. We applied a structured market-basket approach in which each criterion was treated as an item in a transaction: a criterion was added to a prompt’s basket if it received the highest rating (i.e., 2), and F1 was added when its value exceeded 70%. The analysis was performed across all three steps. We computed support, confidence, and lift to identify strong associations between criteria, revealing key patterns for successful prompts (see Table 5).
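Support, confidence, and lift follow their standard definitions: support(X) is the share of baskets containing X, confidence(X → Y) = support(X ∪ Y) / support(X), and lift(X → Y) = confidence(X → Y) / support(Y), with lift above 1 indicating a positive association. Below is a plain-Python sketch of the basket construction described above and of the three metrics. The item label `"F1>70"` and the toy prompts are hypothetical, and the authors may have used a dedicated association-rule library instead.

```python
from typing import Dict, FrozenSet, Iterable, Sequence

CRITERIA = ("clarity", "complexity", "coherence",
            "creativity", "consistency", "contextuality")


def to_transaction(scores: Dict[str, int], f1: float) -> FrozenSet[str]:
    """Basket = criteria rated 2, plus 'F1>70' when the F1-score exceeds 70%."""
    items = {c for c in CRITERIA if scores.get(c) == 2}
    if f1 > 70.0:
        items.add("F1>70")
    return frozenset(items)


def support(transactions: Sequence[FrozenSet[str]], itemset: Iterable[str]) -> float:
    """Fraction of baskets that contain every item in itemset."""
    if not transactions:
        raise ValueError("no transactions")
    wanted = frozenset(itemset)
    return sum(1 for t in transactions if wanted <= t) / len(transactions)


def confidence(transactions: Sequence[FrozenSet[str]],
               antecedent: Iterable[str], consequent: Iterable[str]) -> float:
    """Estimated P(consequent | antecedent)."""
    antecedent, consequent = set(antecedent), set(consequent)
    s_a = support(transactions, antecedent)
    return support(transactions, antecedent | consequent) / s_a if s_a else 0.0


def lift(transactions: Sequence[FrozenSet[str]],
         antecedent: Iterable[str], consequent: Iterable[str]) -> float:
    """Confidence relative to the consequent's base rate (>1 = positive association)."""
    antecedent, consequent = set(antecedent), set(consequent)
    s_c = support(transactions, consequent)
    return confidence(transactions, antecedent, consequent) / s_c if s_c else 0.0
```

For example, if two of four hypothetical prompts rate clarity and coherence at 2 and both exceed 70% F1, the rule {clarity, coherence} → {F1>70} has confidence 1.0 and lift 2.0.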

Table 5.

Key patterns for successful prompts.

Thus, we can answer the fourth research question (RQ4): Are there clear patterns indicating that certain prompt strategies lead to better GPT performance? Yes; several strategies stand out. Students should begin with a prompt that is as clear and logically structured as possible (clarity and coherence) and then gradually increase its complexity while maintaining consistency. This structured approach markedly increases the likelihood of obtaining high-quality responses from GPT. A further key pattern emerges when contextuality, consistency, coherence, and clarity are all rated high: their combined presence strongly predicts higher F1-scores.

5. Discussion

5.1. Practical Recommendations for Prompt Engineering

The results of our experiment offer insights into how students’ prompt engineering impacts LLM performance in an educational context. Specifically, we observed distinct patterns across iterations that reveal significant implications for educational practice involving LLMs. Firstly, prompt clarity emerged consistently as a decisive factor influencing GPT’s accuracy in term extraction tasks. Prompts rated highly for clarity by teachers generally resulted in significantly better GPT performance. Notably, at all steps, students who maintained clear and precise prompts achieved stable or improved F1-scores. Conversely, attempts to increase domain-specific complexity without clear linguistic guidance often resulted in poorer performance. This reinforces previous observations by Leung [3] and Bozkurt [21] that effective prompts must balance clarity and complexity, supporting learners in crafting instructions that are understandable yet sufficiently detailed.

Secondly, complexity and coherence had an increasingly significant influence as students progressed through iterative refinement. At Steps 2 and 3, prompts exhibiting structured complexity—integrating multiple relevant aspects of the blockchain domain logically—yielded markedly higher GPT accuracy. This supports findings by White et al. [16] and Leung [3] that structured, multi-step prompts tend to improve LLM outputs. Notably, however, when complexity was introduced without a coherent structure, performance declined sharply, underscoring that complexity alone, absent logical coherence, can confuse rather than clarify GPT’s task interpretation.

Thirdly, prompts emphasizing creativity showed variable effects. While prompts inviting creative, open-ended responses occasionally boosted recall, they also frequently led to lower precision, resulting in moderate overall performance. This finding aligns with prior observations [35,46] that creative prompts, while useful in exploratory contexts, require careful constraints to maintain accuracy in technical tasks, such as automatic term extraction.

Fourthly, consistency and contextuality demonstrated nuanced roles. Consistency was increasingly crucial across iterations, significantly enhancing GPT’s reliability by reinforcing a stable thematic focus. In contrast, contextuality, though valuable in theory, had a limited measurable impact on performance. This might suggest that GPT’s preexisting knowledge of blockchain terminology already provided sufficient context, reducing the incremental value of additional context provided by student prompts.

Fifthly, performance variability highlighted GPT’s sensitivity to subtle linguistic nuances. Minor linguistic differences (e.g., “related to blockchain” versus “about blockchain domain”) dramatically influenced GPT’s outputs, underscoring that prompt engineering requires careful linguistic calibration. Educators should thus emphasize not just technical content knowledge but also nuanced linguistic skills when training students in effective AI interactions.

Finally, drawing on insights from the entire analytical pipeline—from ANOVA to association-rule mining—we distilled actionable recommendations that now underpin the lecture component of our flipped-learning module (see Table 6).

Table 6.

From theoretical constructs to applied techniques: 6C-based framework for prompt optimization.

5.2. Limitations

The main limitation of this study is that ATE is a closed-set task evaluated against a fixed reference term list. Such a task emphasizes clarity, consistency, and contextuality while limiting the scope for creativity, coherence, and complexity. In addition, the experiment included step-by-step feedback: after each iteration, students could see the model’s false negatives and false positives. While this transparency is beneficial, it poses a risk of overfitting, because a student may simply add missing terms and remove unnecessary ones without improving the logic of the prompt itself. Consequently, although the metrics may improve, this improvement may partly reflect memorization of the term list rather than an understanding of how LLMs work. This limits the transferability of our findings to open-ended generative tasks.

In summary, the results highlight the critical educational importance of structured, clear, and coherent prompts in AI-assisted learning. They also point to an essential pedagogical insight: effective interaction with GPT requires students to develop nuanced prompt engineering skills that carefully balance clarity, complexity, coherence, and creativity. Consequently, these skills should be explicitly integrated into curricula as essential competencies for the effective use of generative AI in education.

6. Conclusions

This study has empirically confirmed the significant impact of students’ prompt engineering strategies on the performance of GPT-4 in domain-specific automatic term extraction tasks. By conducting a three-step iterative experiment involving 60 undergraduate participants, we demonstrated that well-crafted prompts, particularly those characterized by clarity, coherence, and balanced complexity, significantly enhanced GPT’s ability to extract relevant domain-specific terms.

As large language models become more widely adopted across various fields, the ability to write clear and flexible prompts is becoming increasingly essential. Future research should examine the extent to which the findings of this study transfer across domains and to users with varying levels of technical expertise. It is also important to investigate how prompt quality affects performance in other natural language processing tasks, such as named entity recognition or keyword extraction. This would allow an assessment of whether the 6C criteria (clarity, complexity, coherence, creativity, consistency, and contextuality) remain robust indicators of prompt effectiveness in different task settings.

It is also important to test the 6C framework on open-ended, generative tasks, such as answering complex questions, engaging in problem-solving dialogues, or producing context-specific content. These tasks typically have no single correct answer and require flexible thinking, careful reasoning, and creative responses, all areas where prompt quality plays a critical role. Such testing could reveal whether the 6C criteria remain valid, whether certain criteria become more important, or whether the framework needs to be updated for more complex interactions.

Subsequent studies could also examine whether iterative prompt refinement leads not only to better short-term model performance but also to improved subject understanding and an enhanced ability to interact with AI tools. If so, this would show that prompt engineering is not just a technical skill but also a means of supporting deeper learning, critical thinking, and self-directed learning.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/electronics14112098/s1. The supplementary materials provide the study’s experimental dataset and the decision document from the Research Ethics Committee of Astana IT University. File S1: English ethical committee. Table S1: Datasets result.

Author Contributions

Conceptualization, A.N.; Formal analysis, A.N., A.A. and A.M.; Funding acquisition, M.M.; Methodology, A.N.; Software, A.A.; Supervision, M.M.; Visualization, A.N., A.A. and A.M.; Writing—original draft, A.N.; Writing—Review and Editing, A.N., A.A. and A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Higher Education of the Republic of Kazakhstan, grant funding project No. AP19679514 “A study on conceptualization of blockchain domain using text mining and formal concept analysis: focusing on teaching methodology”.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Research Ethics Committee of Astana IT University.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article and the Supplementary Materials. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kotsis, K.T. ChatGPT as teacher assistant for physics teaching. EIKI J. Eff. Teach. Methods 2024, 2. [Google Scholar] [CrossRef]

- Ghimire, A.; Edwards, J. Coding with ai: How are tools like chatgpt being used by students in foundational programming courses. In Proceedings of the International Conference on Artificial Intelligence in Education, Recife, Brazil, 8–12 July 2024; pp. 259–267. [Google Scholar]

- Leung, C.H. Promoting optimal learning with ChatGPT: A comprehensive exploration of prompt engineering in education. Asian J. Contemp. Educ. 2024, 8, 104–114. [Google Scholar] [CrossRef]

- Diyab, A.; Frost, R.M.; Fedoruk, B.D.; Diyab, A. Engineered Prompts in ChatGPT for Educational Assessment in Software Engineering and Computer Science. Educ. Sci. 2025, 15, 156. [Google Scholar] [CrossRef]

- Zamfirescu-Pereira, J.D.; Wong, R.Y.; Hartmann, B.; Yang, Q. Why Johnny can’t prompt: How non-AI experts try (and fail) to design LLM prompts. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–21. [Google Scholar]

- Knoth, N.; Tolzin, A.; Janson, A.; Leimeister, J.M. AI literacy and its implications for prompt engineering strategies. Comput. Educ. Artif. Intell. 2024, 6, 100225. [Google Scholar] [CrossRef]

- Ming, G.K.; Mansor, M. Exploring the impact of chat-GPT on teacher professional development: Opportunities, challenges, and implications. Asian J. Res. Educ. Soc. Sci. 2023, 5, 54–67. [Google Scholar]

- Steele, J.L. To GPT or not GPT? Empowering our students to learn with AI. Comput. Educ. Artif. Intell. 2023, 5, 100160. [Google Scholar] [CrossRef]

- Kamalov, F.; Santandreu Calonge, D.; Gurrib, I. New era of artificial intelligence in education: Towards a sustainable multifaceted revolution. Sustainability 2023, 15, 12451. [Google Scholar] [CrossRef]

- Yu, H.; Guo, Y. Generative artificial intelligence empowers educational reform: Current status, issues, and prospects. Front. Educ. 2023, 8, 1183162. [Google Scholar] [CrossRef]

- Almasre, M. Development and Evaluation of a Custom GPT for the Assessment of Students’ Designs in a Typography Course. Educ. Sci. 2024, 14, 148. [Google Scholar] [CrossRef]

- Altamimi, A.B. Effectiveness of ChatGPT in Essay Autograding. In Proceedings of the 2023 International Conference on Computing, Electronics & Communications Engineering (iCCECE), Swansea, UK, 14–16 August 2023; pp. 102–106. [Google Scholar]

- Lema, K. Artificial General Intelligence (AGI) for Medical Education and Training. 2023. Available online: https://africarxiv.ubuntunet.net/server/api/core/bitstreams/690a5e1d-f74d-4f52-95d4-81daeb3d3250/content (accessed on 14 May 2025).

- Abramski, K.; Citraro, S.; Lombardi, L.; Rossetti, G.; Stella, M. Cognitive Network Science Reveals Bias in GPT-3, GPT-3.5 Turbo, and GPT-4 Mirroring Math Anxiety in High-School Students. Big Data Cogn. Comput. 2023, 7, 124. [Google Scholar] [CrossRef]

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274. [Google Scholar] [CrossRef]

- White, J.; Fu, Q.; Hays, S.; Sandborn, M.; Olea, C.; Gilbert, H.; Elnashar, A.; Spencer-Smith, J.; Schmidt, D.C. A prompt pattern catalog to enhance prompt engineering with chatgpt. arXiv 2023, arXiv:2302.11382. [Google Scholar]

- Carl, K.; Dignam, C.; Kochan, M.; Alston, C.; Green, D. Discovering prompt engineering: A qualitative study of nonexpert teachers’ interactions with ChatGPT. Issues Inf. Syst. 2024, 25, 205–220. [Google Scholar]

- Chen, Z.; Wang, J.; Xia, M.; Shigyo, K.; Liu, D.; Zhang, R.; Qu, H. StuGPTViz: A Visual Analytics Approach to Understand Student-ChatGPT Interactions. IEEE Trans. Vis. Comput. Graph. 2024, 31, 908–918. [Google Scholar] [CrossRef] [PubMed]

- Scholl, A.; Kiesler, N. How Novice Programmers Use and Experience ChatGPT when Solving Programming Exercises in an Introductory Course. arXiv 2024, arXiv:2407.20792. [Google Scholar]

- Sun, D.; Boudouaia, A.; Yang, J.; Xu, J. Investigating students’ programming behaviors, interaction qualities and perceptions through prompt-based learning in ChatGPT. Humanit. Soc. Sci. Commun. 2024, 11, 1447. [Google Scholar] [CrossRef]

- Bozkurt, A. Tell me your prompts and I will make them true: The alchemy of prompt engineering and generative AI. Open Prax. 2024, 16, 111–118. [Google Scholar] [CrossRef]

- Cain, W. Prompting change: Exploring prompt engineering in large language model AI and its potential to transform education. TechTrends 2024, 68, 47–57. [Google Scholar] [CrossRef]

- Oppenlaender, J.; Linder, R.; Silvennoinen, J. Prompting AI art: An investigation into the creative skill of prompt engineering. Int. J. Hum.–Comput. Interact. 2024; 1–23. [Google Scholar] [CrossRef]

- Federiakin, D.; Molerov, D.; Zlatkin-Troitschanskaia, O.; Maur, A. Prompt engineering as a new 21st century skill. Front. Educ. 2024, 9, 1366434. [Google Scholar] [CrossRef]

- Venerito, V.; Lalwani, D.; Del Vescovo, S.; Iannone, F.; Gupta, L. Prompt engineering: The next big skill in rheumatology research. Int. J. Rheum. Dis. 2024, 27, e15157. [Google Scholar] [CrossRef]

- Park, J.; Choo, S. Generative AI prompt engineering for educators: Practical strategies. J. Spec. Educ. Technol. 2024, 01626434241298954. [Google Scholar] [CrossRef]

- Ma, Q.; Peng, W.; Shen, H.; Koedinger, K.; Wu, T. What Should We Engineer in Prompts? Training Humans in Requirement-Driven LLM Use. arXiv 2024, arXiv:2409.08775. [Google Scholar] [CrossRef]

- Lee, D.; Palmer, E. Prompt engineering in higher education: A systematic review to help inform curricula. Int. J. Educ. Technol. High. Educ. 2025, 22, 7. [Google Scholar] [CrossRef]

- Masters, K.; MacNeil, H.; Benjamin, J.; Carver, T.; Nemethy, K.; Valanci-Aroesty, S.; Taylor, D.C.M.; Thoma, B.; Thesen, T. Artificial Intelligence in Health Professions Education Assessment: AMEE Guide No. 178. Med. Teach. 2025, 1–15. [Google Scholar] [CrossRef]

- University of Miami Academic Technologies. Flipped Learning. Available online: https://academictechnologies.it.miami.edu/explore-technologies/technology-summaries/flipped-learning/ (accessed on 10 May 2025).

- Güner, H.; Er, E. AI in the classroom: Exploring students’ interaction with ChatGPT in programming learning. Educ. Inf. Technol. 2025, 1–27. [Google Scholar] [CrossRef]

- Lee, U.; Jung, H.; Jeon, Y.; Sohn, Y.; Hwang, W.; Moon, J.; Kim, H. Few-shot is enough: Exploring ChatGPT prompt engineering method for automatic question generation in english education. Educ. Inf. Technol. 2024, 29, 11483–11515. [Google Scholar] [CrossRef]

- Zhu, K.; Zhao, Q.; Chen, H.; Wang, J.; Xie, X. Promptbench: A unified library for evaluation of large language models. J. Mach. Learn. Res. 2024, 25, 1–22. [Google Scholar]

- Kim, T.S.; Lee, Y.; Shin, J.; Kim, Y.H.; Kim, J. Evallm: Interactive evaluation of large language model prompts on user-defined criteria. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–21. [Google Scholar]

- Zhang, X.; Zhang, Z.; Zhao, H. GLaPE: Gold Label-agnostic Prompt Evaluation for Large Language Models. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 2027–2039. [Google Scholar]

- Lo, L.S. The CLEAR path: A framework for enhancing information literacy through prompt engineering. J. Acad. Librariansh. 2023, 49, 102720. [Google Scholar] [CrossRef]

- Bsharat, S.M.; Myrzakhan, A.; Shen, Z. Principled instructions are all you need for questioning llama-1/2, gpt-3.5/4. arXiv 2023, arXiv:2312.16171, 3. [Google Scholar]

- Logic and Conversation; The MIT Press: Cambridge, MA, USA, 2002. [CrossRef]

- Guerra-Reyes, F.; Guerra-Dávila, E.; Naranjo-Toro, M.; Basantes-Andrade, A.; Guevara-Betancourt, S. Misconceptions in the learning of natural sciences: A systematic review. Educ. Sci. 2024, 14, 497. [Google Scholar] [CrossRef]

- Sloman, S.A. The empirical case for two systems of reasoning. Psychol. Bull. 1996, 119, 3. [Google Scholar] [CrossRef]

- Stanovich, K.E. The Robot’s Rebellion: Finding Meaning in the Age of Darwin; University of Chicago Press: Chicago, IL, USA, 2005. [Google Scholar]

- Gronchi, G.; Giovannelli, F. Dual process theory of thought and default mode network: A possible neural foundation of fast thinking. Front. Psychol. 2018, 9, 1237. [Google Scholar] [CrossRef] [PubMed]

- Sweller, J. Cognitive load during problem solving: Effects on learning. Cogn. Sci. 1988, 12, 257–285. [Google Scholar] [CrossRef]

- Kuang, C.; Chen, J.; Chen, J.; Shi, Y.; Huang, H.; Jiao, B.; Lin, Q.; Rao, Y.; Liu, W.; Zhu, Y.; et al. Uncovering neural distinctions and commodities between two creativity subsets: A meta-analysis of fMRI studies in divergent thinking and insight using activation likelihood estimation. Hum. Brain Mapp. 2022, 43, 4864–4885. [Google Scholar] [CrossRef] [PubMed]

- Sweller, J.; Ayres, P.L.; Kalyuga, S.; Chandler, P.A. The expertise reversal effect. Educ. Psychol. 2003, 38, 23–31. [Google Scholar]

- Sawalha, G.; Taj, I.; Shoufan, A. Analyzing student prompts and their effect on ChatGPT’s performance. Cogent Educ. 2024, 11, 2397200. [Google Scholar] [CrossRef]

- Zheng, M.; Pei, J.; Logeswaran, L.; Lee, M.; Jurgens, D. When “A Helpful Assistant” Is Not Really Helpful: Personas in System Prompts Do Not Improve Performances of Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; pp. 15126–15154. [Google Scholar]

- Abramsky, S. Contextuality: At the Borders of Paradox. In Categories for the Working Philosopher; Oxford University Press: Oxford, UK, 2017; Volume 262. [Google Scholar]

- Bartsch, H.; Jorgensen, O.; Rosati, D.; Hoelscher-Obermaier, J.; Pfau, J. Self-consistency of large language models under ambiguity. arXiv 2023, arXiv:2310.13439. [Google Scholar]

- Biggs, J.B.; Collis, K.F. Evaluating the Quality of Learning: The SOLO Taxonomy (Structure of the Observed Learning Outcome); Academic Press: Cambridge, MA, USA, 2014. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).