Abstract

Uncertainty is inevitable in real-world systems. If it is not effectively addressed, uncertainty can degrade the performance of model predictive control (MPC). This paper proposes a stochastic dual model predictive control (SDMPC) method for linear systems with parameter uncertainty and measurement noise. The method not only actively explores uncertainty while optimizing control but also introduces probabilistic output constraints to expand the set of feasible solutions. Specifically, Kalman filtering is employed to construct a real-time parameter estimator, and future output errors are incorporated into the nominal cost function as exploration signals to balance exploration and exploitation. Simulation results on a solar collector field show that SDMPC can effectively track the outlet temperature by varying the inlet flow under changing environmental conditions and chance constraints. The cumulative performance index of SDMPC is 125.3, compared with 316.4 for conventional MPC, validating its effectiveness.

1. Introduction

In the context of carbon neutrality, parabolic trough solar field (PTSF) systems have attracted considerable attention both domestically and internationally due to their simple structure, low cost, and high commercial maturity [1]. In practice, the solar collector field is affected by external disturbances such as solar radiation fluctuations, ambient temperature variations, and measurement noise, while internal uncertainties caused by equipment aging lead to significant parameter variations [2]. These uncertainties cause outlet temperature fluctuations, which must be mitigated by regulating the heat transfer oil flow rate. Because the oil flow rate affects the outlet temperature with a time delay, inadequate prediction and control of the system dynamics may drive the temperature beyond the safe operating range [3]. Therefore, uncertainties and time delay pose significant challenges to stable and efficient temperature control of PTSF systems.

In recent years, model predictive control (MPC) has demonstrated excellent performance in the temperature regulation of PTSF systems [4,5]. This is largely attributed to its inherent predictive capability, which acts as an implicit feedforward mechanism and effectively counteracts large thermal inertia. However, the model-based optimization relies heavily on the accuracy of the system model [6]. The uncertainties that pervade real systems degrade prediction accuracy and thus reduce the stability and reliability of the controller. Conventional MPC often lacks mechanisms for proactively identifying and adapting to unknown dynamics or parameter variations, which makes robust control difficult to achieve.

To better handle system uncertainties, several advanced MPC-based strategies have been developed. When uncertainties are bounded, robust model predictive control (RMPC) ensures system robustness by considering worst-case scenarios but often leads to overly conservative control [7,8]. Its performance is heavily dependent on the accuracy of the initial model, making it unsuitable for scenarios with large disturbances or significant parameter variations. Stochastic model predictive control (SMPC) incorporates probability distributions to characterize disturbances and parameter uncertainties [9,10]. Adaptive MPC updates the system model online by identifying model parameters [11]. However, under significant uncertainty, these approaches often overlook the potential of actively exploring unknown dynamics during the control process, which may result in degraded performance. To address these limitations in highly uncertain environments, researchers have begun incorporating dual control principles into MPC to proactively learn unknown system dynamics and improve online model identification accuracy.

The dual control problem was first formulated and named by Feldbaum in 1961 [12]. He noted that applying strong excitation to a system reveals richer modal information and thereby improves knowledge of the system dynamics, whereas mild excitation reduces overshoot and stabilizes the output more quickly. These two effects of the control signal, exploration and exploitation, are inherently conflicting [13], but balancing them carefully can lead to optimal control performance. Later research has shown that incorporating exploration under uncertainty can improve control outcomes [14]. MPC excels at receding-horizon optimization, constraint handling, and real-time implementation. Dual model predictive control (DMPC) integrates exploratory mechanisms into the MPC framework: by combining MPC's predictive optimization with the active learning of dual control, it embeds exploratory signals naturally into the control process. On the one hand, cautious inputs reduce the output error; on the other hand, informative inputs probe the unknown dynamics and reduce system uncertainty. As a result, DMPC can provide more robust and efficient control of stochastic systems.

Early studies mainly focused on validating the feasibility and effectiveness of DMPC algorithms [15]. For instance, La proposed an online experiment design method to evaluate control performance, but without experimental validation [16]. Mesbah categorized DMPC into explicit and implicit dual approaches [17]. Implicit dual methods rely on dynamic programming for numerical solutions [18], which is computationally intensive. For example, Zacekova proposed an algorithm that achieves the dual features of model predictive control by maximizing the smallest eigenvalue of the information matrix [19]. Explicit dual control strategies introduce excitation signals explicitly to achieve the dual effect [20]. For instance, Houska proposed a self-reflective MPC strategy in which the future state estimation variance is incorporated into the objective function [21]. Feng further optimized the weighting between the nominal cost and an additional exploration term [22]. Bujarbaruah integrated set-membership filtering to enhance robustness [23], and Heirung incorporated the trace of the estimated covariance into the cost function [24]. However, current methods still struggle to handle parameter uncertainty effectively, and bridging theory and practice for many complex real-world systems [25,26] remains challenging.

This paper proposes a stochastic dual model predictive control (SDMPC) method for linear systems with parameter uncertainty. Kalman filtering is integrated for online parameter updates, and a prediction error term related to the estimation accuracy is added to the objective function. Output chance constraints are also considered, yielding a stochastic optimal control problem. The resulting optimal inputs are applied cautiously to the system while actively exploring parameter uncertainty. A simplified simulation model of a solar collector field temperature control system is constructed to validate the proposed method. Simulation results demonstrate that SDMPC can actively explore parameter variations and improve control performance.

In summary, the following points encapsulate this paper’s primary contributions:

We propose an SDMPC approach that integrates active learning to reduce system uncertainty. This method alleviates the dependence of traditional MPC on model accuracy and enhances its ability to handle complex constraints by introducing probabilistic output constraints. The approach combines the dual control learning component with the constraint handling capability.

Building on a deterministic equivalent framework [24], this paper incorporates future prediction errors as exploratory signals to balance exploration and exploitation, so that probing behavior arises naturally. This improves parameter estimation and control performance while expanding the set of feasible control solutions.

We validate the proposed method through simulations of a solar collector field temperature control system. The system is subject to constraints on the thermal oil flow rate and outlet temperature, to external disturbances from solar radiation, ambient temperature, and measurement noise, and to internal disturbances such as parameter changes caused by structural aging. The simulations show that SDMPC provides robust control performance and reliable temperature tracking, demonstrating its effectiveness.

The remainder of this paper is organized as follows. Section 2 presents the system model and the research problem. Section 3 details the proposed SDMPC approach. Section 4 models the solar collector field temperature control system and presents simulation experiments conducted in MATLAB. Finally, Section 5 draws conclusions and outlines future work.

2. Problem Statement

This paper considers a class of linear discrete stochastic systems.

where t is the discrete time and and represent the system output and control input, respectively. The sets and represent system parameters, with . Some parameters are unknown. is additive observation noise, assumed to be a zero-mean Gaussian sequence with variance r. The structure of system (1) is commonly referred to as an autoregressive model with exogenous inputs (ARX), which describes a linear dependency of the current output on past outputs and external inputs. To describe the parameter uncertainty explicitly, the stochastic system can be reformulated as:

where is a system output measurement, determined by unknown parameter vector , the deterministic regression vector , and the external noise . The vectors are defined as:

The information is defined as the collection of all recorded output measurements and past decisions:

The finite-horizon control cost function over a prediction horizon of length N, denoted by , is defined as follows:

where N is the length of the prediction horizon and k indexes the sampling steps within the horizon, with . At the current time t, represents the predicted output, is the input, and is the reference trajectory. The weights and are typically chosen empirically. Assuming that past information is available at time t, the solution of the problem is the input sequence , that is, the control inputs for the subsequent N steps. denotes the conditional expectation given the known information . Note that a finite horizon N is used because the objective function may become unbounded over an infinite horizon.
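To make the step from the summation form (1) to the regression form (2) concrete, the sketch below assumes a hypothetical ARX(2, 1) structure y_t = a_1 y_{t-1} + a_2 y_{t-2} + b_1 u_{t-1} + e_t, with θ = [a_1, a_2, b_1] and φ_t = [y_{t-1}, y_{t-2}, u_{t-1}]. The model orders, sign convention, and coefficient values are illustrative assumptions, since the equations of Section 2 are not reproduced in this text; the check only confirms that both forms generate the same output sequence.

```python
import random

def regressor(y_hist, u_hist, na, nb):
    """Return phi_t = [y_{t-1}, ..., y_{t-na}, u_{t-1}, ..., u_{t-nb}].

    Both histories are ordered oldest -> newest and end at time t-1.
    """
    past_y = [y_hist[-i] for i in range(1, na + 1)]
    past_u = [u_hist[-i] for i in range(1, nb + 1)]
    return past_y + past_u

def noise_free_output(theta, phi):
    """Deterministic part phi_t^T theta of y_t = phi_t^T theta + e_t."""
    return sum(p * th for p, th in zip(phi, theta))

# Hypothetical ARX(2, 1) coefficients (stable poles at 0.7 and 0.5)
a, b = [1.2, -0.35], [0.5]
theta = a + b                      # theta = [a_1, a_2, b_1]
r = 0.01                           # observation-noise variance
rng = random.Random(1)

y_hist, u_hist = [0.0, 0.0], [0.0]
max_gap = 0.0
for t in range(50):
    e = rng.gauss(0.0, r ** 0.5)
    # Summation (ARX) form
    y_sum = a[0] * y_hist[-1] + a[1] * y_hist[-2] + b[0] * u_hist[-1] + e
    # Linear-regression form
    y_reg = noise_free_output(theta, regressor(y_hist, u_hist, na=2, nb=1)) + e
    max_gap = max(max_gap, abs(y_sum - y_reg))
    y_hist.append(y_sum)
    u_hist.append(rng.uniform(-1.0, 1.0))   # random excitation for the next step

print(f"largest difference between the two forms: {max_gap:.1e}")
```

Stacking past outputs and inputs into φ_t is what lets the unknown coefficients appear linearly, which is the property the Kalman-filter estimator of Section 3.1 relies on.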

For real systems subject to constraints, input limitations are represented using simple box constraints:

where and denote the lower and upper bounds of the input, respectively, typically determined by physical hardware limitations or design specifications.

For the stochastic system (2), the probabilistic characteristics of the system uncertainties must be taken into account, since the observations are also random. Accordingly, the output chance constraints can be expressed as follows:

where denotes the probability that the output satisfies the constraint, conditioned on the available information . The and represent the lower and upper bounds of the output, respectively. Parameters define the tolerable probability of violating the respective constraints.

This paper focuses on minimizing the objective function (4) for stochastic systems with parameter uncertainty and external disturbances, subject simultaneously to the output chance constraints (6) and (7) and the input constraint (5). The problem is thus formulated as a stochastic optimal control problem over a finite prediction horizon N:

Constraints (8c) and (8d) are highly nonlinear, which increases the complexity of the optimization problem. A key step in solving problem (8a) is propagating the mean and variance of the stochastic output through the system dynamics in (8b); to simplify problem (8a), a deterministic propagation form of the stochastic output must therefore be derived. Note that the inclusion of in (8b) indicates the presence of uncertainty but does not imply that the disturbance sequence is used directly for prediction.
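Why the mean and variance suffice can be seen from a standard identity. Writing, for illustration only, $\hat y_{t+k\mid t} = \mathbb{E}[y_{t+k}\mid \mathcal{I}_t]$, $\sigma^2_{t+k\mid t} = \operatorname{Var}(y_{t+k}\mid \mathcal{I}_t)$, and $y^{\mathrm{ref}}_{t+k}$ for the reference (assumed notation, because the symbols of (4) and (8) are not reproduced in this text), each output term of the cost splits into a tracking part and a variance part:

```latex
\mathbb{E}\!\left[\left(y_{t+k}-y^{\mathrm{ref}}_{t+k}\right)^2 \mid \mathcal{I}_t\right]
  = \left(\hat y_{t+k\mid t}-y^{\mathrm{ref}}_{t+k}\right)^2
  + 2\left(\hat y_{t+k\mid t}-y^{\mathrm{ref}}_{t+k}\right)
    \underbrace{\mathbb{E}\!\left[y_{t+k}-\hat y_{t+k\mid t}\mid \mathcal{I}_t\right]}_{=\,0}
  + \sigma^2_{t+k\mid t}
  = \left(\hat y_{t+k\mid t}-y^{\mathrm{ref}}_{t+k}\right)^2 + \sigma^2_{t+k\mid t}.
```

Hence, once $\hat y_{t+k\mid t}$ and $\sigma^2_{t+k\mid t}$ can be propagated deterministically, the expected cost, and likewise a Gaussian chance constraint, can be evaluated without sampling.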

3. Stochastic Dual Model Predictive Control

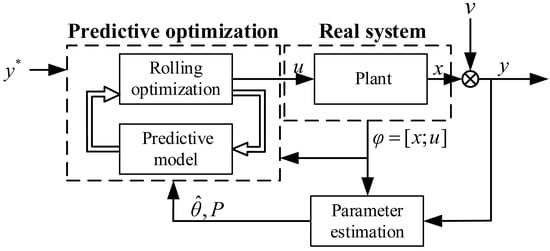

This section provides a detailed description of the proposed SDMPC algorithm. First, the evolution of parameter estimates updated at each time step is introduced. Then, the predicted output error is embedded in the objective function. Finally, the chance constraints are evaluated. The overall framework diagram of the SDMPC strategy is illustrated in Figure 1:

Figure 1.

Schematic diagram of SDMPC framework.

3.1. Parameter Estimation

For the system (2) with external noise and unknown parameters, the Kalman filter propagation form is employed for parameter estimation. Assume that the coefficients follow a Gaussian conditional distribution given the current information , with mean and covariance . The parameter estimates can be expressed as . Define the parameter estimation error as and the parameter covariance as .

Lemma 1.

For system (2), the conditional mean and predicted covariance can be propagated forward as follows [27]:

where , , and are given. is known as the injection gain. r is the observation noise covariance.
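The recursions of Lemma 1 are not reproduced in this text. For a constant parameter vector with no process noise, the standard Kalman-filter measurement update (equivalently, recursive least squares) has the form K = Pφ/(φᵀPφ + r), θ̂ ← θ̂ + K(y − φᵀθ̂), P ← P − KφᵀP; the sketch below assumes that form, and its gain K plays the role the lemma assigns to the injection gain only if Lemma 1 takes this standard form. The first-order plant, its parameters, and the vague prior are illustrative assumptions.

```python
import numpy as np

def kf_update(theta_hat, P, phi, y, r):
    """Kalman-filter measurement update for y = phi^T theta + e, e ~ N(0, r).

    The parameter vector is treated as constant (no process noise), so the
    update coincides with recursive least squares.
    """
    s = phi @ P @ phi + r                 # innovation (output prediction) variance
    K = P @ phi / s                       # gain vector
    innovation = y - phi @ theta_hat      # one-step prediction error
    theta_new = theta_hat + K * innovation
    P_new = P - np.outer(K, phi @ P)      # P - K phi^T P
    return theta_new, 0.5 * (P_new + P_new.T)   # re-symmetrize against round-off

# Identify hypothetical parameters of y_t = a*y_{t-1} + b*u_{t-1} + e_t
rng = np.random.default_rng(0)
theta_true = np.array([0.9, 0.5])         # [a, b], unknown to the estimator
r = 0.01
theta_hat, P = np.zeros(2), 10.0 * np.eye(2)   # vague prior
y_prev = 0.0
for t in range(300):
    u_prev = rng.uniform(-1.0, 1.0)       # exciting input
    phi = np.array([y_prev, u_prev])
    y = phi @ theta_true + rng.normal(0.0, np.sqrt(r))
    theta_hat, P = kf_update(theta_hat, P, phi, y, r)
    y_prev = y

print("estimate:", theta_hat.round(3), " trace(P):", round(float(np.trace(P)), 6))
```

The shrinking trace of P is the quantity that the exploration term of Section 3.2 depends on: as it goes to zero, there is little left to learn.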

In system (2), the regression vector contains deterministic inputs and outputs, while the parameter vector is unknown. Lemma 1 is applied to estimate the parameter and its covariance in real time. This yields a time-propagated prediction of the output, which is incorporated into the optimization problem as a constraint. The conditional output predictor is defined as follows:

where is the estimate of the future output made at the current time t, which is used to optimize the control at time .

3.2. Objective Function and Constraints

Estimation accuracy has a significant effect on controller performance. Directly equating the future output with its prediction neglects the uncertainty arising from estimation errors, which can degrade control performance. To address this issue, an incentive mechanism is introduced to reward the exploratory behavior of control decisions. The future output variance is defined as the expected squared error between the true and predicted outputs:

where is an incentive term that is added to the objective function. Uncertainty manifests itself in the system output, which provides a basis for parameter estimation at the next time step. When , the corresponding control action actively explores the uncertainty. Based on Lemma 1 and (12), is defined as follows:

Corollary 1.

For system (2), given and , the future output variance is as follows:

Proof of Corollary 1.

From the definition of the true system output, it follows that:

where the expected observation noise is . The parameter expectation can be expressed as , which can be derived from:

According to (13), the future output expectation error can be expressed as:

By combining (15), Corollary 1 can be directly obtained. □
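Under the Gaussian parameter model of Section 3.1, the prediction error y − φᵀθ̂ = φᵀ(θ − θ̂) + e has variance φᵀPφ + r when the estimation error and the noise are independent. The exact expression of Corollary 1 is not reproduced in this text, so the Monte Carlo sketch below only checks this standard identity; the values of θ̂, P, φ, and r are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
theta_hat = np.array([0.85, 0.45])                # current estimate (illustrative)
P = np.array([[0.020, 0.004], [0.004, 0.010]])    # parameter covariance (illustrative)
phi = np.array([1.5, -0.8])                       # regression vector of a candidate input
r = 0.01                                          # observation-noise variance

# Analytic variance of the prediction error: phi^T P phi + r
var_analytic = phi @ P @ phi + r

# Monte Carlo: draw theta ~ N(theta_hat, P) and e ~ N(0, r), then compare y with phi^T theta_hat
n = 200_000
theta_samples = rng.multivariate_normal(theta_hat, P, size=n)
e = rng.normal(0.0, np.sqrt(r), size=n)
y = theta_samples @ phi + e
err = y - phi @ theta_hat
var_mc = np.mean(err ** 2)

print(f"analytic {var_analytic:.5f}   Monte Carlo {var_mc:.5f}")
```

The φᵀPφ part depends on the chosen input through φ, which is why an input that excites poorly known directions of P raises the predicted output variance.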

As the uncertainty is learned, the prediction error decreases significantly; then converges to zero, and the learning process terminates automatically. Consequently, Corollary 1 enables the reformulation of , as summarized in the following theorem.

Theorem 1.

When the shrinks to zero as learning ends, (18) reduces to (4), and the reward term vanishes as the uncertainty dissipates.
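To illustrate Theorem 1, the sketch below evaluates a hypothetical augmented cost J_aug = Σ[q(ŷ − y_ref)² + λu²] − w Σ φᵀPφ, in which the exploration term rewards parameter-induced output variance. The sign, the weight w, and the overall form are assumptions made for illustration, since (18) is not reproduced in this text; the point is only that the exploration term disappears when P = 0, so the augmented cost coincides with the nominal one.

```python
import numpy as np

def nominal_cost(y_pred, ref, u, q=1.0, lam=0.1):
    """Certainty-equivalent cost: sum of q*(y_pred - ref)^2 + lam*u^2."""
    return float(np.sum(q * (y_pred - ref) ** 2) + np.sum(lam * u ** 2))

def augmented_cost(y_pred, ref, u, phis, P, q=1.0, lam=0.1, w=0.5):
    """Hypothetical dual cost: nominal cost minus w * sum_k phi_k^T P phi_k.

    Each row of phis is the regression vector of one prediction step. The
    exploration reward is the parameter-induced output variance, so it
    disappears when the parameter covariance P shrinks to zero.
    """
    explore = np.einsum("ki,ij,kj->k", phis, P, phis)   # phi_k^T P phi_k per step
    return nominal_cost(y_pred, ref, u, q, lam) - w * float(np.sum(explore))

# Illustrative three-step horizon in deviation variables
y_pred = np.array([0.5, 0.8, 0.95])
ref = np.ones(3)
u = np.array([0.4, 0.3, 0.2])
phis = np.array([[0.0, 0.4], [0.5, 0.3], [0.8, 0.2]])
P_learning = np.diag([0.05, 0.02])        # parameters still uncertain
P_learned = np.zeros((2, 2))              # uncertainty fully resolved

print(nominal_cost(y_pred, ref, u))                          # nominal cost
print(augmented_cost(y_pred, ref, u, phis, P_learning))      # lower: exploration rewarded
print(augmented_cost(y_pred, ref, u, phis, P_learned))       # equals the nominal cost
```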

The nonlinear constraints (8c) and (8d) in problem (8a) significantly increase the difficulty of solving the optimization problem. These chance constraints are soft constraints: they may be violated under certain conditions, but the probability that they hold must not fall below a prescribed confidence level. This helps to expand the set of control solutions. In this paper, the nonlinear constraints are simplified with the help of Theorem 1 and Lemma 1: SDMPC uses the mean and variance of the predicted outputs to approximate the output constraints. Given the current information , the output measurement follows a Gaussian distribution with mean and variance . Similarly, when in the forecasting process, the future conditional distribution of is also Gaussian, with mean and variance given in (12) and (14).

It is also noted in [28] that, when computed with the Kalman filter, the covariance updates (9) and (11) do not depend on the measurements. The predicted output covariance can therefore be computed offline rather than online. The probabilistic output constraints are then given the following deterministic equivalent form:

where and represent the probabilities that the constraints hold, and denotes the standard normal cumulative distribution function (CDF). Parameters and can be determined offline from the inverse of the CDF. Under the Gaussian assumption, replacing the original constraints with these deterministic ones during the online solution ensures that the chance constraints (8c) and (8d) are satisfied.
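For a Gaussian predicted output with mean ŷ and standard deviation σ, the standard deterministic equivalent of P(y ≤ y_max) ≥ 1 − ε is ŷ + Φ⁻¹(1 − ε)σ ≤ y_max, and symmetrically ŷ − Φ⁻¹(1 − ε)σ ≥ y_min for the lower bound. The sketch below assumes this standard form, since (19) and (20) are not reproduced in this text, and checks it by sampling; the bounds, σ, and ε are illustrative and are not the values used in Section 4.

```python
import random
from statistics import NormalDist

def satisfies_chance_constraints(y_hat, sigma, y_min, y_max, eps_low, eps_up):
    """Deterministic equivalent of two individual Gaussian chance constraints."""
    z_low = NormalDist().inv_cdf(1.0 - eps_low)   # quantiles can be computed offline
    z_up = NormalDist().inv_cdf(1.0 - eps_up)
    return (y_hat - z_low * sigma >= y_min) and (y_hat + z_up * sigma <= y_max)

def violation_rate(y_hat, sigma, y_max, n=100_000, seed=0):
    """Monte Carlo estimate of P(y > y_max) for y ~ N(y_hat, sigma^2)."""
    rng = random.Random(seed)
    return sum(rng.gauss(y_hat, sigma) > y_max for _ in range(n)) / n

# Illustrative numbers in deviation units
y_max, sigma, eps = 10.0, 2.0, 0.05
z = NormalDist().inv_cdf(1.0 - eps)          # about 1.645
y_hat_boundary = y_max - z * sigma           # largest admissible predicted mean

print(satisfies_chance_constraints(y_hat_boundary - 0.1, sigma, -10.0, y_max, eps, eps))
print(satisfies_chance_constraints(y_hat_boundary + 0.1, sigma, -10.0, y_max, eps, eps))
print(f"violation rate at the boundary: {violation_rate(y_hat_boundary, sigma, y_max):.3f}")
```

The back-off zσ is what shrinks the usable output range as the predicted variance grows, and it relaxes again as the parameters are learned.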

3.3. Stochastic Dual Model Predictive Control Algorithm

In summary, Lemma 1 provides the recursions for the estimates and covariances (9)–(11), and Equation (12) gives the output mean. The future output error expectation (14) follows from Corollary 1, and Theorem 1 defines the new cost function (18). Expressions (19) and (20) provide deterministic equivalents of the output chance constraints. The stochastic optimal control problem (8a) is thus reformulated as problem (P′):

where , , , and are given.

Problem (P′) is a quadratically constrained quadratic programming (QCQP) problem because of its quadratic objective function and constraint set. Its solution is the optimal control input sequence over the N-step prediction horizon. In this paper, the barrier interior-point method is used to solve it. The method approaches the optimal solution through the interior of the feasible region, which makes it well suited to large-scale optimization problems and provides feasibility preservation and computational efficiency under multiple constraints. Many advanced nonlinear programming solvers, such as GloMIQO [29] and BARON [30], are also available. The steps of SDMPC are summarized in Algorithm 1:

| Algorithm 1: SDMPC |
|---|
| Input: the hyperstate . |
| 1: Set . |
| 2: Collect the information , and measure and . |
| 3: Estimate the parameters by Kalman filtering using (9), (21c), and (21d), and update the hyperstate. |
| 4: Compute the future estimated output , the expected error , and the chance constraints using (21b) and (21e)–(21g). |
| 5: Solve problem (P′) with the barrier interior-point method to obtain the control . |
| 6: Apply the control to system (1). |
| 7: Set , and go back to . |
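The loop of Algorithm 1 can be sketched in heavily simplified form under these assumptions: a scalar ARX(1,1) plant y_{t+1} = a y_t + b u_t + e_{t+1}, a one-step horizon (N = 1), no output chance constraints, and a hypothetical exploration weight w that rewards the parameter-induced output variance φᵀPφ. With N = 1 the cost is a scalar quadratic in u, so the box-constrained optimum is found by comparing the clipped stationary point with the two bounds, in place of the interior-point solver of step 5. All numbers are illustrative, and this is not the authors' implementation.

```python
import numpy as np

def kf_update(theta_hat, P, phi, y, r):
    """Kalman-filter parameter update (constant parameters), as in step 3."""
    K = P @ phi / (phi @ P @ phi + r)
    theta_hat = theta_hat + K * (y - phi @ theta_hat)
    P = P - np.outer(K, phi @ P)
    return theta_hat, 0.5 * (P + P.T)

def dual_input(theta_hat, P, y, ref, u_min, u_max, lam=0.01, w=0.005):
    """Steps 4-5 for N = 1: minimize a hypothetical dual cost over the input box.

    J(u) = (theta_hat^T phi - ref)^2 + lam*u^2 - w * phi^T P phi,  phi = [y, u].
    J is a scalar quadratic in u, so its minimum over [u_min, u_max] lies at
    a bound or at the (clipped) stationary point.
    """
    a_hat, b_hat = theta_hat

    def cost(u):
        phi = np.array([y, u])
        return (phi @ theta_hat - ref) ** 2 + lam * u**2 - w * (phi @ P @ phi)

    candidates = [u_min, u_max]
    curvature = b_hat**2 + lam - w * P[1, 1]          # coefficient of u^2 in J
    if curvature != 0.0:
        u_stat = (b_hat * (ref - a_hat * y) + w * P[0, 1] * y) / curvature
        candidates.append(float(np.clip(u_stat, u_min, u_max)))
    return min(candidates, key=cost)

rng = np.random.default_rng(0)
theta_true = np.array([0.9, 0.5])          # plant parameters, unknown to the controller
r, ref = 0.01, 1.0                         # noise variance, setpoint (deviation units)
u_min, u_max = -2.0, 2.0                   # input box constraint
theta_hat, P = np.array([0.5, 0.2]), np.eye(2)   # rough initial hyperstate
y, ys, us = 0.0, [], []
for t in range(300):
    u = dual_input(theta_hat, P, y, ref, u_min, u_max)         # steps 4-5
    phi = np.array([y, u])
    y_next = phi @ theta_true + rng.normal(0.0, np.sqrt(r))   # step 6: apply the input
    theta_hat, P = kf_update(theta_hat, P, phi, y_next, r)    # steps 2-3: measure, update
    y = y_next                                                # step 7: advance time
    ys.append(y)
    us.append(u)

print("final estimate:", theta_hat.round(3))
print("mean output, last 100 steps:", round(float(np.mean(ys[-100:])), 3))
```

Evaluating the bounds as candidates keeps the step correct even if w is large enough to make J concave in u, in which case the minimizer sits on a bound.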

Algorithm 1 describes the SDMPC strategy used to solve (P′). SDMPC achieves output tracking in the presence of system uncertainty and probabilistic output constraints. For efficient and reliable simulation, the fmincon function in MATLAB R2023b is used to solve problem (P′).
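The barrier interior-point idea can be illustrated on a simpler problem than (P′): a convex quadratic objective with box input constraints. The sketch below is a textbook log-barrier method with damped Newton steps, similar in spirit to fmincon's 'interior-point' algorithm option but not a reimplementation of it; H, g, the bounds, and the barrier schedule (t0, mu, tol) are illustrative assumptions.

```python
import numpy as np

def barrier_qp_box(H, g, lo, hi, t0=1.0, mu=10.0, tol=1e-8, max_newton=50):
    """Log-barrier interior-point method for
           minimize 0.5 u^T H u + g^T u   subject to  lo < u < hi   (H positive definite).

    For each barrier parameter t, damped Newton steps minimize
        F_t(u) = t*(0.5 u^T H u + g^T u) - sum(log(u - lo)) - sum(log(hi - u)),
    then t is multiplied by mu until the duality-gap bound 2n/t drops below tol.
    """
    n = len(g)
    u = 0.5 * (lo + hi)                     # strictly feasible start (box center)
    t = t0

    def F(v, t):
        return t * (0.5 * v @ H @ v + g @ v) - np.sum(np.log(v - lo)) - np.sum(np.log(hi - v))

    while 2 * n / t > tol:
        for _ in range(max_newton):
            d_lo, d_hi = u - lo, hi - u
            grad = t * (H @ u + g) - 1.0 / d_lo + 1.0 / d_hi
            hess = t * H + np.diag(1.0 / d_lo**2 + 1.0 / d_hi**2)
            step = -np.linalg.solve(hess, grad)
            decrement2 = -grad @ step            # squared Newton decrement
            if decrement2 / 2 <= 1e-10:
                break
            s = 1.0
            while np.any(u + s * step <= lo) or np.any(u + s * step >= hi):
                s *= 0.5                         # stay strictly inside the box
            while F(u + s * step, t) > F(u, t) - 0.25 * s * decrement2:
                s *= 0.5                         # Armijo backtracking
            u = u + s * step
        t *= mu                                  # tighten the barrier
    return u

H = np.eye(2)
g = np.array([-2.0, -0.5])                       # unconstrained minimizer is [2.0, 0.5]
u_opt = barrier_qp_box(H, g, lo=np.zeros(2), hi=np.ones(2))
print(u_opt.round(6))                            # first entry is pushed to the bound 1.0
```

Every iterate stays strictly inside the box, which is the feasibility-preservation property mentioned above; the price is that active bounds are only approached to within about 1/t.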

4. Simulation Experiment

To evaluate the control performance, the proposed SDMPC is applied to the temperature control system of a parabolic trough solar collector field. A conventional MPC method and a proportional-integral-derivative (PID) controller are used for comparison. Subsequently, the disturbance-rejection performance of SDMPC is verified in simulation experiments with parameter variations and temperature setpoint changes. These uncertainties are intended to replicate the inherent variability in ambient temperature and solar radiation.

4.1. Modeling of Trough Solar Collector Fields

Parabolic trough solar fields (PTSFs) are spatially distributed systems that collect and store solar radiation for power generation. Precise outlet temperature control is crucial for ensuring operational safety and energy conversion efficiency. However, environmental variations introduce multiple disturbances, complicating unbiased temperature regulation. In practical PTSF operations, disturbances fall into two categories. External factors include sensor noise and weather fluctuations. Internal variations involve changes in heat transfer coefficients and the effective area of the paraboloid.

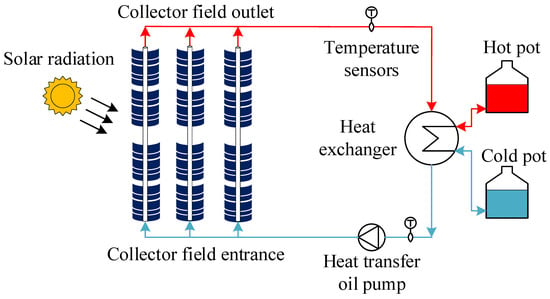

Figure 2 illustrates the structure of a PTSF system. The thermal energy collection device consists of several parallel absorber tubes and parabolic reflectors. The tubes absorb solar radiation, and the circulating oil transfers the collected heat to the heat exchanger.

Figure 2.

System architecture diagram of PTSF.

Assuming that the heat transfer oil flow is distributed equally among the tubes, the modeling focuses on the dynamic behavior of a single tube column. Based on energy conservation and the actual heat exchange process, a simulation model is constructed following [31]. The system is represented by a set of ordinary differential equations (ODEs):

where (22) and (23) represent the energy balances of the absorber tube and the heat transfer oil, respectively. The subscripts f and m denote the heat-absorbing pipe and the heat transfer oil, respectively. The outlet temperature is mainly influenced by solar radiation, ambient temperature, inlet temperature, and oil flow rate; the parameter values are listed in Table 1. Of these inputs, only the oil flow rate can be adjusted directly for temperature control. Assuming efficient heat transfer, . The model is then simplified to capture its key dynamic relationship with the outlet temperature:

where is the control input and is the output value of the collector field. The physical parameter is .

Table 1.

Variables and parameters of PTSF system.

4.2. Model Linearization

The dynamic process is assumed to be smooth and continuously differentiable. To handle the system's nonlinearity, the model is linearized and discretized around a steady-state equilibrium point, corresponding to , , , and , and is obtained by calculating . A simplified difference equation is then obtained by the linearization method in reference [33]:

where the sampling interval is and denotes the deviation of a variable from the operating point. The parameters are , , , , and . To match the model structure used by the proposed method, Equation (25) is rewritten in ARX form as follows:

where the output is and the input is , representing the changes in outlet temperature and oil flow rate, respectively. is the observation noise, which arises from sensor inaccuracies and ambient disturbances; in control research it is commonly modeled as Gaussian white noise for mathematical tractability. Let be the external inputs with ; each is a measurable physical quantity that evolves naturally. This study adopts a single-input assumption to avoid the coupling effects of multi-input systems; the approach could be generalized to multiple inputs and outputs. Future work will focus on decoupling methods that enable general multi-input multi-output (MIMO) implementations.
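The linearize-then-discretize step can be illustrated on a hypothetical lumped model, dT/dt = −(q/V)(T − T_in) + k_I·I − k_L(T − T_a). This is NOT the paper's Eq. (24), and every parameter value below is invented. The sketch linearizes by central differences around an equilibrium, applies a zero-order-hold discretization (also an assumption; the method of [33] may differ), and compares the resulting ARX(1,1) model with a fine Euler simulation of the nonlinear model after a small flow step.

```python
import math

# Hypothetical lumped outlet-temperature model (NOT the paper's Eq. (24)):
#   dT/dt = f(T, q) = -(q / V) * (T - T_in) + k_I * I_sun - k_L * (T - T_a)
V, T_in, T_a, k_I, k_L, I_sun = 10.0, 300.0, 25.0, 0.05, 0.01, 900.0

def f(T, q):
    return -(q / V) * (T - T_in) + k_I * I_sun - k_L * (T - T_a)

# Operating point: choose q0 so that T0 = 390 is an equilibrium (f = 0)
T0 = 390.0
q0 = V * (k_I * I_sun - k_L * (T0 - T_a)) / (T0 - T_in)

# Linearization by central differences (analytic: A = -q0/V - k_L, B = -(T0 - T_in)/V)
h = 1e-4
A = (f(T0 + h, q0) - f(T0 - h, q0)) / (2 * h)
B = (f(T0, q0 + h) - f(T0, q0 - h)) / (2 * h)

# Zero-order-hold discretization with sampling interval Ts -> ARX(1,1) coefficients
Ts = 0.5
a_d = math.exp(A * Ts)
b_d = (a_d - 1.0) / A * B     # dT_{k+1} = a_d * dT_k + b_d * dq_k

# Compare the ARX model with a fine Euler simulation of the nonlinear model
dq, n_samples, dt = 0.05, 40, 1e-3
dT_lin, T = 0.0, T0
for _ in range(n_samples):
    dT_lin = a_d * dT_lin + b_d * dq
    for _ in range(round(Ts / dt)):
        T += dt * f(T, q0 + dq)
dT_nl = T - T0
print(f"a_d={a_d:.4f}, b_d={b_d:.4f}, linear dT={dT_lin:.4f}, nonlinear dT={dT_nl:.4f}")
```

The small mismatch between the two responses comes from the bilinear q·T term, which is why the linearized model is only trusted near the operating point.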

For PTSF systems, the heat transfer oil flow rate is strictly constrained within , , whereas the outlet temperature constraints can be relaxed as chance constraints. This study aims to regulate the oil flow to stabilize (or track) the outlet temperature while rejecting disturbances and satisfying the operational constraints.

4.3. Simulation and Results

The simplified model (26) of the solar collector field can be written in form (2) from Section 3, where the denotes the increment in outlet temperature. The known regression vector is defined as . Here, represents measurable but uncontrollable external inputs, and is the control input, i.e., the change in oil flow rate. The system parameter vector is determined directly by the physical parameters listed in Table 1. Note that, because the parameter b varies negligibly in measurements, it is treated as a constant in the control-oriented model.

The prediction and control horizons are set to and , respectively. The objective function weights are chosen as , and . These coefficients are chosen based on experience. The noise is set to . The simulation starts at and ends at . The initial values of identification parameters are , and the covariance matrix is , without any a priori information. The desired reference temperature is , and the initial output is . The initial flow rate is set to . The constraints are defined as , , and .

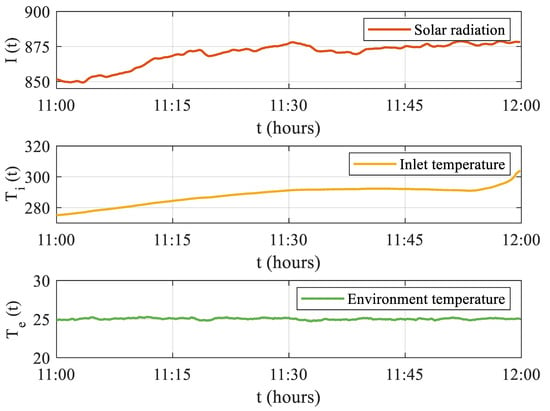

The simulation data reproduce possible variations in solar radiation, inlet temperature, and ambient temperature between 11:00 and 12:00, as shown in Figure 3. The total number of sampling steps is , of which the first 800 are presented.

Figure 3.

Evolution of collector field external measurable excitations.

4.3.1. Validation of Control Effectiveness

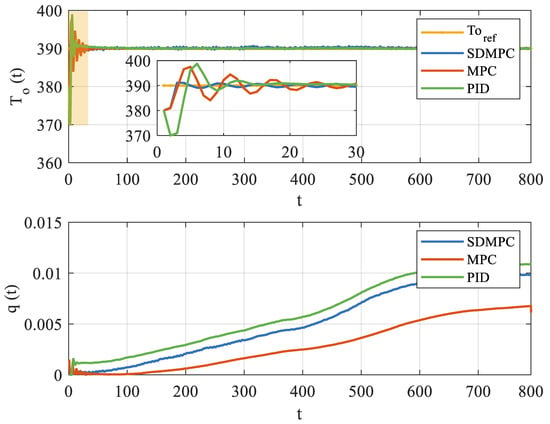

The simulation shows that SDMPC satisfies the flow rate and outlet temperature constraints while stabilizing the outlet temperature.

The upper part of Figure 4 compares the outlet temperature control performance of the proposed SDMPC (blue curve), traditional MPC (red curve), and the PID controller (green curve). All three methods successfully drive the outlet temperature from its initial value of 380 °C to the 390 °C reference. As highlighted in the zoomed-in subplot, SDMPC responds faster and converges to the reference temperature (yellow curve) earlier. Meanwhile, the PID controller exhibits more pronounced oscillations during the initial transient, and the baseline MPC also shows some oscillatory behavior. The lower part of Figure 4 shows the heat transfer oil flow rate, for which the control strategies follow similar trends. These results show that the proposed SDMPC tracks the outlet temperature effectively and outperforms the baseline methods during stabilization.

Figure 4.

Control performance of outlet temperature under SDMPC, MPC, and PID.

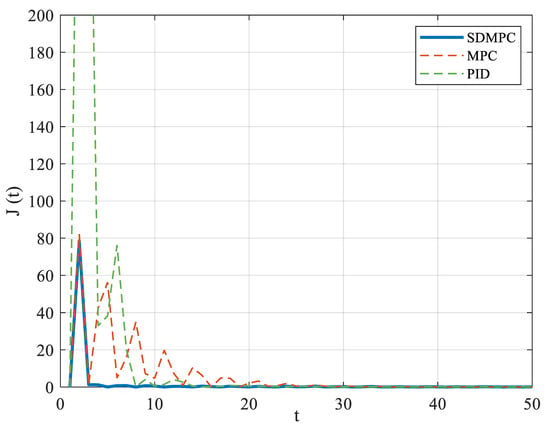

In addition, the performance index comparison in Figure 5 shows that the step cost of SDMPC (blue curve) remains consistently closer to zero than those of PID (green dashed curve) and basic MPC (red dashed curve), which directly demonstrates the superior stabilization performance of the proposed SDMPC. To further validate this result, a Monte Carlo simulation with 500 repeated trials was conducted to obtain statistics, reduce the influence of randomness, and improve the reliability of the performance evaluation. The averaged performance indices are reported in Table 2. Together, Figure 4 and Figure 5 show that SDMPC converges faster to the desired temperature with a small overshoot, demonstrating its stability and tracking capability.
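The Monte Carlo evaluation averages a cumulative performance index over repeated runs. The step cost behind Table 2 is not spelled out in this text, so the sketch below assumes a hypothetical quadratic step cost (y_t − y_ref)² + λu²_{t-1} and uses a toy closed loop (an exact-model one-step controller on an ARX(1,1) plant) as a stand-in for a full SDMPC simulation; it only shows the bookkeeping of 500 seeded trials.

```python
import numpy as np

def cumulative_index(ys, us, ref, lam=0.1):
    """Sum of hypothetical step costs (y_t - ref)^2 + lam * u_{t-1}^2."""
    ys, us = np.asarray(ys), np.asarray(us)
    return float(np.sum((ys - ref) ** 2) + lam * np.sum(us ** 2))

def toy_run(seed, T=50, a=0.9, b=0.5, ref=1.0, r=0.01):
    """Hypothetical closed loop: exact-model one-step controller on an ARX(1,1) plant."""
    rng = np.random.default_rng(seed)
    y, ys, us = 0.0, [], []
    for _ in range(T):
        u = float(np.clip((ref - a * y) / b, -5.0, 5.0))
        y = a * y + b * u + rng.normal(0.0, np.sqrt(r))
        ys.append(y)
        us.append(u)
    return cumulative_index(ys, us, ref)

scores = np.array([toy_run(seed) for seed in range(500)])   # 500 Monte Carlo trials
print(f"mean index {scores.mean():.3f}, std {scores.std(ddof=1):.3f}")
```

Seeding each trial separately makes the comparison reproducible, and the same seeds can be reused across controllers so that they face identical noise realizations.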

Figure 5.

Performance index for the first 50 time steps.

Table 2.

Quantitative comparison of SDMPC, MPC, and PID.

4.3.2. Robustness Evaluation Under Disturbances

This subsection analyzes the dynamic response of the proposed SDMPC method under unknown perturbations. For comparison, a certainty-equivalent model predictive control (CEMPC) method without an exploration mechanism is also implemented. It differs from SDMPC only in that it disregards the possible presence of parameter uncertainty.

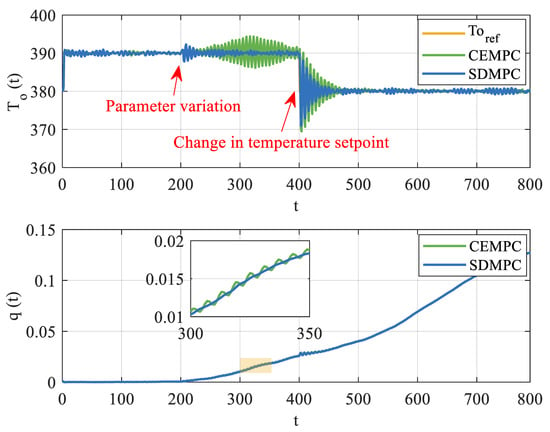

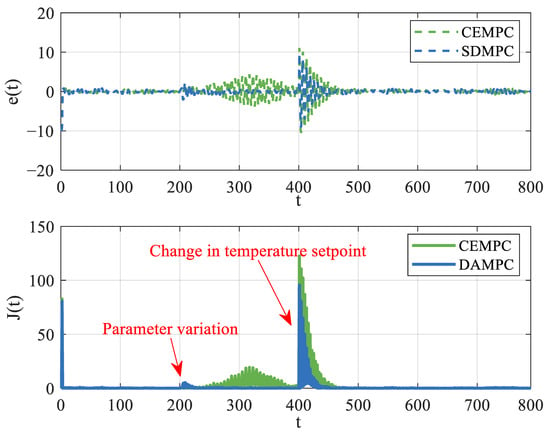

In this experiment, parameter variations and outlet temperature setpoint changes are introduced. Two disturbances are applied to the system at time instances s and s, respectively. For , the system parameters are defined as . At , the parameters abruptly switch to . Following this, at , the outlet temperature setpoint is adjusted from 390 to 380 °C.

Figure 6 illustrates the responses of the two methods under parameter variation and a reference setpoint change; the red arrows highlight the two changes. The solid blue curve represents SDMPC, the solid green curve represents CEMPC, and the yellow solid line denotes the desired reference temperature. As shown in the upper part of Figure 6, the sudden parameter variation at t = 200 s causes fluctuations in . Compared with SDMPC, CEMPC produces larger output deviations, primarily because of its limited ability to adapt to parameter changes. As the lower zoomed-in panel shows, the flow rate under CEMPC (green curve) exhibits larger oscillations during the transition. The subsequent change in the reference temperature then causes a more substantial and longer-lasting fluctuation in the tracking response. Figures 7 and 8, which show the evolution of the parameter estimates and their covariance, explain this behavior in detail.

Figure 6.

Comparison of output temperature tracking and input trajectories under SDMPC and CEMPC.

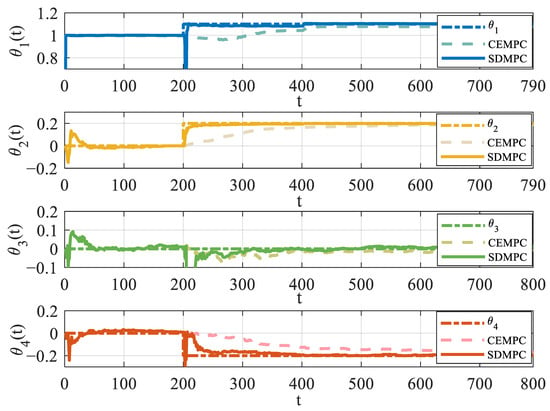

Figure 7.

Evolution of parameter estimates under SDMPC and CEMPC, illustrating different learning behaviors.

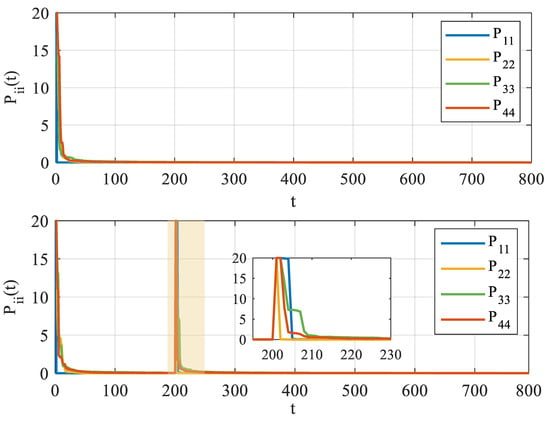

Figure 8.

The evolution of the diagonal elements of the covariance matrix over time.

In Figure 7, the dark dashed lines represent the true values, the bold solid lines represent SDMPC, and the lighter dashed lines correspond to CEMPC; different colors denote different parameter elements. Taking (blue) as an example, at time s the SDMPC estimate (blue solid line) quickly converges to the true value (dark blue dashed line) through the real-time Kalman filter update. In contrast, the CEMPC estimate (light blue line) fails to identify the true parameter even after 100 steps. When the setpoint changes at s, SDMPC has already acquired sufficient knowledge for outlet temperature tracking, and the increase in output error triggers learning again. The yellow, green, and red curves exhibit the same characteristics.

Figure 8 illustrates the variance (the diagonal elements of ). The upper subplot, for CEMPC, shows that parameter learning occurs only at the initial time ( s), with no further adaptation thereafter. In contrast, the lower subplot, for SDMPC, shows two peaks, at s and s. The second peak in indicates that SDMPC introduces an exploratory signal into the control inputs, accelerating parameter learning. This observation is consistent with Figure 7 and illustrates the exploration and adjustment process of SDMPC under the two perturbations.

Figure 9 shows the outlet temperature error and the control performance index. Both disturbances induce significant fluctuations in the output error. Between 200 s and 400 s, the error of SDMPC (blue dotted line) is markedly smaller than that of CEMPC (green dotted line). Correspondingly, the performance index (lower part of Figure 9) shows two increases, caused by incorrect system parameters and large output errors. In conclusion, SDMPC activates its exploration mechanism when perturbations occur and copes with parameter changes better than CEMPC. By mitigating parametric drift and cumulative errors, this advantage is important for the long-term stability of solar collector control systems.

Figure 9.

Output temperature tracking errors and stepwise performance indices under SDMPC and CEMPC.

5. Conclusions

This paper proposed an SDMPC strategy to improve MPC performance under uncertainty. By integrating stochastic dual control, the approach exploits information about system uncertainty to compute control inputs that actively reduce its future impact. Simulation experiments on a solar collector field show that SDMPC accomplishes the output tracking task despite variations in solar radiation, ambient temperature, and the setpoint, with improved parameter estimation accuracy and control performance. Future work will consider time-varying parameters to further improve adaptivity and control quality, and will attempt to generalize the approach to multi-input systems and explore its potential applications.

Author Contributions

Methodology and idea proposal, X.Z. and C.C.; simulation and validation, X.Z. and D.W.; writing—original draft preparation, D.W.; results analysis, D.W.; manuscript polishing, C.C.; writing—review and editing, X.S. and S.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Research and Development Program of Shaanxi (Grant No. 2024GX-ZDCYL-03-06), Young Talent Nurturing Program of Shaanxi Provincial Science and Technology Association (Grant No. 20240109), Scientific research project of Shaanxi Provincial Educational Committee (Grant No. 23JK0486), National Natural Science Foundation of China (Grant No. 62303367), National Key R&D Program of China (Grant No. 2023YFC2809904), and the Youth Innovation Team of Shaanxi Universities (Grant No. 2023997).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Islam, M.T.; Huda, N.; Abdullah, A.B. A comprehensive review of state-of-the-art concentrating solar power (CSP) technologies: Current status and research trends. Renew. Sustain. Energy Rev. 2018, 91, 987–1018.

- Su, S.; Ma, P.; Xue, Q. Optimal scheduling of extreme operating conditions in islanded microgrid based on model predictive control. Electronics 2025, 14, 206.

- Xin, X.; Zhang, Z.; Zhou, Y. A comprehensive review of predictive control strategies in heating, ventilation, and air-conditioning (HVAC): Model-free VS model. J. Build. Eng. 2024, 94, 110013.

- Pipino, H.A.; Morato, M.M.; Bernardi, E. Nonlinear temperature regulation of solar collectors with a fast adaptive polytopic LPV MPC formulation. Solar Energy 2020, 209, 214–225.

- Pataro, I.M.L.; Gil, J.D.; da Costa, M.V.A. A stochastic nonlinear predictive controller for solar collector fields under solar irradiance forecast uncertainties. IEEE Trans. Control. Syst. Technol. 2023, 32, 99–111.

- Schwenzer, M.; Ay, M.; Bergs, T. Review on model predictive control: An engineering perspective. Int. J. Adv. Manuf. Technol. 2021, 117, 1327–1349.

- Zhao, Z.; Guo, J.; Luo, X. Distributed robust model predictive control-based energy management strategy for islanded multi-microgrids considering uncertainty. IEEE Trans. Smart Grid 2022, 13, 2107–2120.

- Liang, S.; Xiao, B.; Wang, C. A Single-Tube Robust Model Predictive Control Method Based on ϵ-Approximation. Electronics 2023, 12, 2074.

- Sehr, M.A.; Bitmead, R.R. Stochastic model predictive control: Output-feedback, duality and guaranteed performance. arXiv 2017, arXiv:1706.00733.

- Brudigam, T.; Olbrich, M.; Wollherr, D. Stochastic model predictive control with a safety guarantee for automated driving. IEEE Trans. Intell. Veh. 2021, 8, 22–36.

- Minniti, M.V.; Grandia, R.; Farshidian, F. Adaptive CLF-MPC with application to quadrupedal robots. IEEE Robot. Autom. Lett. 2021, 7, 565–572.

- Feldbaum, A.A. Dual control theory. I. Avtom. Telemekhanika 1960, 21, 1240–1249.

- Heirung, T.A.N.; Paulson, J.A.; O’Leary, J. Stochastic model predictive control—how does it work? Comput. Chem. Eng. 2018, 114, 158–170.

- Heirung, T.A.N.; Santos, T.L.M.; Mesbah, A. Model predictive control with active learning for stochastic systems with structural model uncertainty: Online model discrimination. Comput. Chem. Eng. 2019, 128, 128–140.

- Larsson, C.A.; Rojas, C.R.; Bombois, X.; Hjalmarsson, H. Experimental evaluation of model predictive control with excitation (MPC-X) on an industrial depropanizer. J. Process. Control. 2015, 31, 1–16.

- La, H.C.; Potschka, A.; Schloder, J.P. Dual control and online optimal experimental design. SIAM J. Sci. Comput. 2017, 39, 640–657.

- Mesbah, A. Stochastic model predictive control with active uncertainty learning: A survey on dual control. Annu. Rev. Control. 2018, 45, 107–117.

- Arcari, E.; Hewing, L.; Zeilinger, M.N. An approximate dynamic programming approach for dual stochastic model predictive control. IFAC-PapersOnLine 2020, 53, 8105–8111.

- Zacekova, E.; Privara, S.; Pcolka, M. Persistent excitation condition within the dual control framework. J. Process. Control. 2013, 23, 1270–1280.

- Heirung, T.A.N.; Foss, B.; Ydstie, B.E. MPC-based dual control with online experiment design. J. Process. Control. 2015, 32, 64–76.

- Houska, B.; Telen, D.; Logist, F. Self-reflective model predictive control. SIAM J. Control. Optim. 2017, 55, 2959–2980.

- Feng, X.; Houska, B. Real-time algorithm for self-reflective model predictive control. J. Process. Control. 2018, 65, 68–77.

- Bujarbaruah, M.; Zhang, X.; Tanaskovic, M. Adaptive MPC under time varying uncertainty: Robust and stochastic. arXiv 2021, arXiv:1909.1347.

- Heirung, T.A.N.; Ydstie, B.E.; Foss, B. Dual adaptive model predictive control. Automatica 2017, 80, 340–348.

- Sigounis, A.M.; Vallianos, C.; Athienitis, A. Model predictive control of air-based building integrated PV/T systems for optimal HVAC integration. Renew. Energy 2023, 212, 655–668.

- Tighirt, A.; Aatabe, M.; El Guezar, F. A new stochastic controller for efficient power extraction from small-scale wind energy conversion systems under random load consumption. Energies 2024, 17, 4927.

- Ljung, L. System identification. In Signal Analysis and Prediction; Procházka, A., Uhlíř, J., Rayner, P.W.J., Kingsbury, N.G., Benedetto, J.J., Eds.; Applied and Numerical Harmonic Analysis; Birkhäuser Boston: Boston, MA, USA, 1998; pp. 163–173.

- Calafiore, G.C.; Dabbene, F.; Tempo, R. Research on probabilistic methods for control system design. Automatica 2011, 47, 1279–1293.

- Tawarmalani, M.; Sahinidis, N.V. Global optimization of mixed-integer nonlinear programs: A theoretical and computational study. Math. Program. 2004, 99, 563–591.

- Misener, R.; Floudas, C.A. GloMIQO: Global mixed-integer quadratic optimizer. J. Glob. Optim. 2013, 57, 3–50.

- Johansen, T.A.; Storaa, C. Energy-based control of a distributed solar collector field. Automatica 2002, 38, 1191–1199.

- Gao, X.H. Optimal Model Predictive Disturbance Rejection Control Method and Application in Parabolic Trough Solar Fields. Ph.D. Thesis, Southeast University, Nanjing, China, 2022.

- Pasamontes, M.; Álvarez, J.D.; Guzmán, J.L. A switching control strategy applied to a solar collector field. Control. Eng. Pract. 2011, 19, 135–145.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).