Trust by Design: An Ethical Framework for Collaborative Intelligence Systems in Industry 5.0

Abstract

1. Introduction

- (1) What are the unique ethical challenges of human-centric collaborative intelligence in Industry 5.0?

- (2) How can systems be designed to inherently foster trust between humans and machines?

- (3) What governance and validation mechanisms ensure ongoing ethical compliance?

- (“Industry 5.0” OR “Operator 5.0” OR “Society 5.0”) AND (“human-robot collaboration” OR “human-machine collaboration” OR “human-AI collaboration” OR “collaborative intelligence” OR “human-centred” OR “human-centric”)

- (“trust” OR “ethics” OR “sustainability” OR “resilience”) AND (“artificial intelligence” OR “robotics” OR “automation”) AND (“human factors” OR “collaboration” OR “teaming”)

- (“value sensitive design” OR “ethics by design” OR “privacy by design”) AND (“technology” OR “artificial intelligence” OR “industry”)

- (“collaborative robots”) AND (“acceptance” OR “trust” OR “implementation”)

2. The Emergence of Industry 5.0: Beyond Automation

2.1. Pillars of Industry 5.0

- Human-centricity: Fundamental human needs and well-being are prioritized in design and production processes. Rather than replacing humans, technology is used to empower workers, improve safety, and tailor production to individual needs. For example, even with increasing automation, human insight is valued for handling uncertainties and ensuring flexibility on the factory floor. This pillar is a commitment to uphold human dignity and foster meaningful work while countering fears of alienation by automation.

- Sustainability: Industry 5.0 emphasizes environmentally sustainable and circular production systems. This includes achieving carbon-neutral operations, prioritizing the recycling and reuse of resources, and minimizing waste. The objective is to align industrial growth with ecological responsibility, going beyond Industry 4.0 by including societal and environmental value [15]. In practice, AI-optimized processes should not only save costs but also reduce energy consumption and emissions, contributing to global sustainability goals.

- Resilience: Industry 5.0 seeks to develop the capacity to withstand and adapt to crises, whether pandemics, economic disruptions, or supply chain shocks. It prioritizes robust design and contingency planning so that critical infrastructure and supply lines continue to function under stress [16]. Technologies from Industry 4.0 (such as IoT sensors and predictive analytics) are leveraged with a new focus on risk mitigation and agility. The COVID-19 pandemic and other recent crises highlighted the need for such resilience and informed the Industry 5.0 agenda.

2.2. Relevance of Collaborative Intelligence

3. Ethical Considerations in Collaborative Intelligence

3.1. Ethical Challenges to Advance Collaborative Intelligence

- Transparency: Collaborative AI systems often operate as “black boxes” that are difficult for humans to understand. Lack of transparency in how an AI makes decisions or how a robot’s actions are determined can breed mistrust and confusion. Ethically, there is a demand for explainability—humans should be able to get clear, intelligible reasons for an AI system’s recommendations or actions [32,33]. Transparency is also critical for informed consent: workers should know what data is being collected and how algorithms are using it. Without transparency, power imbalances emerge where only the system (or its vendors) “know” why certain decisions are made, leaving users in the dark. Ensuring transparency (through user dashboards, visualizations, or explainable AI techniques) can build understanding and calibrated trust, as users can verify and make sense of the system’s behavior [34].

- Privacy: Collaborative intelligence systems frequently rely on large amounts of data, including personal and sensitive data about workers gathered from wearable devices and smart tools, which are embodiments of the Internet of Things (IoT). These data can later be used to train AI models that estimate workers’ performance, health indicators, or movements, converging towards what can be referred to as the Artificial Intelligence of Things (AIoT) [35]. This raises concerns about data privacy and surveillance. If not properly governed, such data collection can infringe on workers’ privacy rights and create a climate of surveillance that erodes trust and autonomy [36]. Ethical use of collaborative systems demands strict adherence to privacy principles: data should be collected only for legitimate, agreed-upon purposes and with consent wherever possible; it should be anonymized or minimized to protect identities; and robust cybersecurity must protect it from breaches. Privacy considerations extend beyond the workplace: as collaborative robots interact in shared human environments, video or sensor data could inadvertently capture bystanders or sensitive information, necessitating careful privacy-by-design measures.

- Autonomy and human agency: A core ethical tension in human-machine collaboration is balancing machine autonomy with human control. On one hand, the AI or robot needs a degree of autonomy to be useful (e.g., a cobot adjusting its movements in real-time or an AI filtering relevant information). On the other hand, if the machine’s autonomy encroaches on human decision-making without oversight, it can diminish human agency and accountability. Who is in charge? Ethically, humans should retain meaningful control over the overall task and have the ability to overrule or adjust the machine’s actions according to the six possible paradigms of Human-Machine Interaction: Humans in the Loop (HITL), Humans on the Loop (HOTL), Humans out of the Loop (HOOTL), Humans alongside the Loop (HATL), Humans-in-command (HIC), and Coactive Systems [37]. Maintaining human agency is not just about operational control but also psychological empowerment [38]—workers should feel they are active participants, not passive servants to an AI’s instructions.

- Accountability: With shared human–AI decision-making, it can become unclear who is accountable when something goes wrong. Is it the worker using the AI, the AI’s developer, the employer deploying it, or the machine itself (which, lacking personhood, cannot bear responsibility in a moral or legal sense)? This diffusion of responsibility is a serious ethical and legal challenge. Collaborative systems should be designed such that accountability is traceable and assignable [39], for instance by keeping logs of AI decisions, providing tools for audit, and defining roles so that humans have specific oversight duties. If an AI system recommends a faulty course of action, there should be mechanisms to investigate whether the human followed blindly or whether the AI provided misleading information. Ethically, companies and technology providers need to share accountability by ensuring proper training, setting reasonable expectations for human intervention, and responding to incidents with transparency. In the absence of clear accountability, trust in the system will erode: people will be reluctant to use systems if they fear being scapegoated for the system’s errors or, conversely, if they worry that no one will be held responsible if the system harms them.

- Fairness and non-discrimination: These are of particular ethical concern in collaborative intelligence. AI systems embedded in industrial settings might make decisions about task assignments, evaluations of work quality, or even hiring and promotion (in advanced scenarios). If these algorithms carry biases, they could unfairly disadvantage certain groups [40]. For example, an AI scheduling system might inadvertently assign more repetitive or risky tasks to certain workers based on biased data, or a decision support tool might underrate the contributions of older workers if it is not designed carefully [41]. Ensuring fairness requires careful design and continual monitoring of algorithms to detect disparate impacts. It also intersects with diversity and inclusion: a human-centric Industry 5.0 must accommodate diverse needs and avoid one-size-fits-all automation that ignores, for instance, workers with disabilities or different skill profiles. Engaging a diverse range of stakeholders in system design can help pre-empt bias and foster equity.

- Safety and reliability: In collaborative environments, physical and psychological safety is paramount. Ethically, robots and AI should be rigorously tested to be fail-safe, meaning any malfunction should default to a safe state that minimizes risk to humans. ISO and industry safety standards (such as ISO 10218-1:2025, ISO 10218-2:2025, and ISO/TS 15066:2016 for robot safety and collaborative robots [42,43,44]) provide guidelines for physical robot collaboration limits (such as force and speed limits when near humans). However, beyond physical safety, there are psychosocial safety concerns: research has found that the introduction of cobots can cause stress, job insecurity, and role ambiguity for workers if not handled properly [45]. These manifest as psychosocial hazards that can affect mental health. Ethical deployment requires addressing such safety holistically by providing training to build confidence, ensuring the technology truly reduces (and does not add to) cognitive workload, and maintaining a work environment where humans feel safe working with and alongside robots. Reliability of AI is equally crucial; frequent errors or unpredictable behavior quickly destroy trust. Thus, an ethical system must not promise more than it can deliver; transparency about the system’s limits and uncertainties is better than a misleading aura of infallibility.

3.2. Stakeholder Perspectives on Ethical Challenges

- Workers (human operators): Front-line workers are directly affected by collaborative systems, with job security a primary concern. Collaborative robots and AI can provoke fears of displacement or role downgrading; studies show workers often view cobots as threats, particularly when collaboration seems minimal and replacement plausible [46]. Resistance stems from perceived threats to autonomy, skill obsolescence, and safety, so organizations must offer transparency, training, and dialogue to ease AI anxiety [47]. Such fears can breed stress, erode trust, and raise worries about safety (e.g., “Will the robot strike me?”), agency (“Do I still control my work?”), and privacy (“Is constant monitoring invasive?”). Ethically, workers expect respectful treatment and prioritization of their well-being. If a collaborative system demonstrably reduces drudgery or injury risk, and management clearly communicates its benefits, workers are likelier to accept it. Involving workers in design and rollout through participatory design, training sessions, and feedback loops is widely recommended to address their concerns [48].

- Employers (organizations/managers): Employers seek productivity gains, quality improvements, and flexibility from collaborative intelligence. They are stakeholders in ensuring ROI on these technologies. However, they also carry responsibilities for worker safety, legal compliance, and maintaining a motivated workforce. Ethically, employers must balance profit motives with the duty of care for employees. They may worry about liability—if an AI causes a bad decision, the company could be responsible. Thus, they have an interest in clear accountability frameworks and reliable system performance. Change management is another concern: how to implement collaborative systems without disrupting operations or sparking labor disputes. From a trust perspective, employers need to build organizational trust—workers must trust that management is introducing AI/robots to assist rather than surveil or replace them. Research suggests that engaging employees early and transparently can smooth the transition and reduce psychosocial risks. Employers also must consider skill development—they should provide training so employees can effectively collaborate with AI, which in turn can improve acceptance and outcomes [21]. Forward-looking employers see collaborative intelligence as augmenting their human talent, not depreciating it.

- Technology providers (engineers and vendors): Those who design and supply collaborative AI/robot systems have a stakeholder interest in the successful and ethical use of their products. Their reputation and market success may depend on users trusting their technology. Providers face the challenge of translating ethical principles into design features—for example, building explainability, user-friendly interfaces, and safety mechanisms. Many tech companies are now adopting “responsible AI” charters, recognizing that neglecting ethics can lead to user backlash or regulatory action [49]. Providers might worry about intellectual property vs. transparency—how much of their algorithm’s inner workings to reveal. Ethically, they have a responsibility to ensure their systems are not biased or dangerous, which requires thorough testing and perhaps adhering to standards or certifications. There is also the issue of support and updates: a collaborative system may evolve with software updates or new data; providers should continuously monitor for ethical or safety issues post-deployment (sometimes in collaboration with the client). In essence, technology providers must practice Ethics by Design and often need to educate and support their clients in deploying technology in line with ethical best practices.

- Society and regulators: Society has a direct stake in Industry 5.0’s trajectory, which will shape employment, inequality, and well-being. Many hope it delivers meaningful jobs and sustainable practices, not just greater output [1]. The public cares whether collaborative intelligence augments workers (upskilling and safer roles) or merely eliminates positions and increases surveillance. This raises justice issues: ensuring productivity gains translate into better conditions or work-life balance, not solely corporate profits. Regulators and policymakers (see Section 7) are crafting guidelines stressing human oversight, non-discrimination, and privacy; for example, the EU’s Trustworthy AI framework calls for human agency, robustness, privacy, transparency, diversity, and accountability [50,51]. If collaborative systems violate fundamental rights or societal values, there could be regulatory penalties or public pushback (for instance, strong unions might oppose dangerous or dehumanizing tech). Society also includes consumers: in some settings (such as healthcare or customer service), the end-users of collaborative intelligence outputs are the public, who will trust a company more if they know its AI is ethically governed. Overall, societal stakeholders demand that Industry 5.0’s trajectory align with the public interest, creating inclusive, safe, and human-centered progress rather than exacerbating social harms.

3.3. Alignment and Limits of Current Frameworks Towards Industry 5.0

4. Proposed Ethical Framework: Trust by Design

4.1. Foundational Principles of Trust by Design

- Human agency and empowerment: The system must augment rather than replace human intelligence, preserving human control where it matters. Collaborative AI should be designed to enhance human capabilities (cognitive or physical) and support human decision-making while ensuring that users can override or steer the AI’s actions when necessary. This principle upholds the value of autonomy—the human operator remains an active agent in the loop. For example, an AI decision aid might present options and recommendations but let the human confirm or adjust the final decision, thus acting as a supportive colleague, not an infallible oracle. All design choices (from default settings to emergency stop buttons) should reinforce that the human is ultimately in command.

- Transparency and explainability: The system should function as a “glass box” to the extent feasible, providing clear explanations or insights into its operations [32]. This includes making the AI’s decision logic interpretable and the robot’s intent foreseeable (through visual signals or predictable motions in the case of physical cobots). When users understand why the AI produced a certain output or what the robot is about to do, they can develop informed trust [7]. Trust by Design calls for integrating explainability features (e.g., justification dialogues, user queries to the AI) and ensuring the UI communicates uncertainty or confidence levels of the AI. Even if the underlying algorithms are complex (such as deep learning), the system should translate that complexity into user-relevant terms (such as highlighting which factors most influenced a recommendation). Transparency extends to data practices—users should know what data is being collected and how it is used (akin to a privacy notice embedded in the interface).

- Privacy and data governance: From the outset, systems should adhere to “privacy by design” [58,59,60]—collecting minimal data, securing it rigorously, and using it in ethically and legally appropriate ways. In Trust by Design, any personal or sensitive data (e.g., worker biometrics, productivity metrics) is handled with confidentiality and respect for user consent. Technical measures such as encryption, access controls, and on-device processing (to avoid unnecessary data transmission) are employed to protect privacy. Additionally, the framework mandates transparency to users about data usage and provides options to opt out or control certain data sharing where possible. By safeguarding privacy, the system demonstrates respect for the user, which is fundamental for trust.

- Fairness and inclusivity: The framework embeds checks to ensure the system’s decisions or actions do not systematically disadvantage any individual or group without justification. This involves using bias mitigation techniques during model training (for AI components) and diverse user testing to see how the system performs across different scenarios and users. The values of equality and justice require that, for instance, a decision support AI should apply the same standards to everyone and be audited for bias. Research has shown that AI-enabled recruitment tools can perpetuate biases, leading to discriminatory hiring practices based on gender, race, or other characteristics [61]. Such patterns should be detected and corrected to ensure fair treatment of all candidates. Inclusivity also means designing the human interface with accessibility in mind (for different physical abilities, language skills, etc.), ensuring all workers can effectively collaborate with the system.

- Safety and reliability: Borrowing from the principle of technical robustness in trustworthy AI [51], Trust by Design prioritizes safety measures at all levels. Physical safety is addressed by compliant robot design, safe stop mechanisms, and strict testing against scenarios of potential collision or misuse. The system should be fail-safe and fail-transparent—if errors occur, the system defaults to a safe state and informs the user. Reliability entails thorough validation so that the system behaves predictably within its defined operating conditions. This principle builds trust by minimizing the occurrence of unexpected or dangerous behavior. Furthermore, psychosocial safety is included: features or policies are in place to mitigate stress (for example, the system might be designed to adapt to the user’s pace rather than enforcing an uncomfortable speed). Alarms or notifications are tuned to avoid causing alarm fatigue or distraction. Overall, the system’s robust performance and safety track record form the bedrock of user confidence.

- Accountability and auditability: The design should allow for tracing decisions and actions back to their source. This means maintaining logs of AI recommendations, robot actions, and human overrides in a secure but reviewable manner. In case of an incident or ethical dilemma, these records enable an audit to understand what happened and why. More proactively, the system can include self-checks or ethical governors—for instance, an AI could have constraints that prevent it from recommending actions violating certain rules (much like how a thermostat would not go beyond certain limits). Accountability is also organizational: roles are defined so that there is always a human responsible for monitoring the system’s outputs (e.g., a shift supervisor who reviews all critical AI suggestions). This clarity prevents diffusion of responsibility and assures users that the system is under accountable oversight.

- User involvement and training: While more of a process principle than a design element, Trust by Design emphasizes co-design with end-users and comprehensive training as part of system development. By involving workers and domain experts in the design phase (through feedback sessions, pilots, etc.), designers can capture contextual ethical issues and trust concerns early on. The framework treats user education as part of the design: intuitive tutorials, simulations, and continuous learning resources are built into the rollout so that users gain competence and confidence in interacting with the AI/robot. A system is trustworthy not only because of its internal qualities but because users feel competent in using it; thus, designing the learning curve and support materials is an ethical imperative here.
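The accountability and auditability principle above (reviewable logs of AI recommendations, robot actions, and human overrides, plus an "ethical governor" that enforces hard limits) can be illustrated with a minimal sketch. It is not the framework's prescribed implementation: the names `AuditLog` and `governed_speed`, the hash-chaining approach, and the speed limit value are all assumptions introduced for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical limit for illustration only; not taken from any standard.
MAX_SPEED_MM_S = 250.0
GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    """Deterministic SHA-256 over the JSON form of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only log; each entry stores the hash of the previous entry."""
    entries: list = field(default_factory=list)

    def record(self, actor: str, action: str, detail: dict) -> dict:
        body = {
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain was broken."""
        prev = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


def governed_speed(recommended_mm_s: float, log: AuditLog) -> float:
    """Clamp an AI-recommended speed to the hard limit and log the outcome."""
    allowed = min(recommended_mm_s, MAX_SPEED_MM_S)
    action = "clamped" if allowed < recommended_mm_s else "passed"
    log.record("governor", action,
               {"recommended": recommended_mm_s, "allowed": allowed})
    return allowed
```

Chaining each entry to the previous hash is one possible way to make later edits detectable during an audit; an organization could equally rely on write-once storage or an external logging service.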

4.2. Embedding Trust in the Lifecycle of AI Systems

- Design and development stage: During design, user research and risk analysis inform features that directly address trust issues (e.g., adding an explanation panel after finding in user studies that operators mistrust opaque AI outputs). Simulation and modeling are used to foresee interaction patterns, for instance by simulating scenarios where the AI is wrong and ensuring the system handles them gracefully (alerting the user, offering a fallback). At this stage, ethical risk assessment is carried out to identify where things could go ethically wrong and to mitigate those risks upfront. Engineers incorporate redundant safety mechanisms so that if one component fails, another catches it (increasing trust in the system’s reliability). Agile development methods can integrate ethics through “ethical user stories”, e.g., “As a worker, I want to know why the scheduling AI gave me more shifts than my colleague, so that I feel the process is fair”. This user story would lead to implementing an explanation or adjustment feature.

- Testing and validation stage: The system is evaluated not only for functionality but also for ethical compliance and trust factors. This might involve user testing sessions specifically to gauge trust: do users feel comfortable after using the system? Can they correctly recount what the AI or robot did and why? Any confusion or discomfort is a red flag to address. Measures such as the Trust Scale [65] (a survey instrument from human factors research) can quantify user trust levels during trials. Additionally, safety tests under various edge cases show whether the system meets the safety principle. If during testing a scenario reveals, say, an ambiguous robot motion that startles workers, designers refine the motion planning to be more transparent (maybe slowing down and using a signal when humans approach). The system may also undergo an ethical audit by an internal or external committee to verify that privacy controls work, data bias is absent, and so on. By iterating at this stage, the final product that goes live is already tuned for trustworthiness.

- Deployment stage: Initial deployment is performed in a pilot or phased manner to build trust gradually. Trust by Design encourages introducing the system with a human-led orientation: explaining to the team the goals of the system and how it works (in lay terms), and addressing questions openly. Early positive experiences are crucial, so the system might start by assisting in low-stakes tasks and, as users gain confidence, move to more critical functions. Mentorship models can be employed (a tech champion on the factory floor helps peers learn the system, building peer trust). Moreover, the system itself can have built-in tutorials or AI-guided onboarding; for example, a collaborative robot might initially operate in a slower “training mode” around new users, essentially earning trust by demonstrating consistently safe behavior, and only later ramp up to full speed.

- Operation and maintenance stage: Trust is maintained through ongoing support and system transparency. The framework suggests continuous monitoring of system performance and user feedback. Dashboards for supervisors could show system health and any anomalies (transparency at the management level ensures they trust it and will advocate its use to workers). If the AI encounters a situation outside its training (novel input), it can either abstain or seek human confirmation, rather than act unpredictably—this humility in AI behavior (knowing when to defer to humans) significantly boosts trust. Regular training refreshers or update notes keep users in the loop on any changes, so they never feel the system is drifting beyond their understanding. From a technical side, predictive maintenance for hardware and retraining of AI models ensure the system remains reliable and up-to-date, preventing degradation of trust due to aging components or stale data.

- Feedback and evolution stage: A trustworthy system welcomes user feedback and adapts. Trust by Design embeds feedback channels—perhaps a feature for users to flag if an AI suggestion did not seem right or if they felt uncomfortable at any point. This feedback is reviewed by the development team or ethics committee to identify new ethical issues or needed improvements. In effect, the ethical framework itself evolves: maybe real-world use uncovers a scenario not anticipated (e.g., workers developing an overreliance on the AI for trivial decisions). The organization can then tweak procedures or the system (such as adding periodic “are you sure?” prompts or rotating tasks to keep skills sharp) to correct this. This responsiveness shows users that their trust in the system and the organization is reciprocated—the company is committed to continuous ethical improvement, not just a one-off deployment.
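The operation-stage behavior described above, where the AI abstains or seeks human confirmation when it meets a situation outside its training, can be sketched as a simple deferral rule. This is an illustrative sketch only: the `decide` function, the `novelty` score (assumed to come from some separate out-of-distribution detector), and both thresholds are hypothetical and would need to be set and validated per deployment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    action: Optional[str]  # None means the system abstains entirely
    needs_human: bool      # True means a person must confirm or take over
    reason: str            # Shown to the user to keep the behavior transparent


def decide(label: str, confidence: float, novelty: float,
           min_confidence: float = 0.8, max_novelty: float = 0.5) -> Decision:
    """Defer to the human when the input is novel or the model is unsure.

    confidence: model's score for `label`, assumed to lie in [0, 1].
    novelty: hypothetical out-of-distribution score in [0, 1].
    """
    if novelty > max_novelty:
        # Outside validated conditions: abstain rather than guess.
        return Decision(None, True, "input unlike training data; abstaining")
    if confidence < min_confidence:
        # Plausible but uncertain: propose, then wait for confirmation.
        return Decision(label, True, "low confidence; confirm before acting")
    return Decision(label, False, "within validated operating range")
```

For example, `decide("replace_bearing", confidence=0.65, novelty=0.1)` would return the proposed action with `needs_human=True`, so the operator confirms before anything happens.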

4.3. Navigating Trade-Offs and Proportionality

- Transparency vs. privacy—explaining decisions without over-exposing personal data.

- Human agency vs. safety and reliability—letting people override the system without undermining safeguards.

- Accountability vs. privacy—keeping audit trails while respecting individual confidentiality.

- Fairness and inclusivity vs. safety and reliability—tailoring for diverse users without weakening predictable behavior.

- Autonomy vs. fairness—giving supervisors discretion without reintroducing bias.

- (a) Identify stakeholders and values in tension: Clearly identify all stakeholders affected by the conflict and explicitly state the ethical principles or values that appear to be competing.

- (b) Conduct relative impact analysis: Evaluate the potential impact and consequences of favoring each conflicting principle by applying tools such as a least-intrusion test, risk-benefit matrices, or ethical impact assessments.

- (c) Mitigate and document chosen safeguards: Develop and select specific technical or procedural safeguards designed to harmonize the conflicting ethical requirements, seeking the least intrusive yet effective solutions. Document thoroughly the rationale, justifications, and chosen mitigations to ensure accountability, transparency, and auditability.
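Steps (a) to (c) above can be captured in a small record structure so that each trade-off decision is documented in a consistent, auditable form. The sketch below is one possible reading of the least-intrusion test: among safeguards whose residual risk is acceptable, pick the least intrusive. The class names, the 1 to 5 scoring scales, and the risk threshold are assumptions for illustration, not part of the framework.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Safeguard:
    name: str
    intrusiveness: int  # 1 (low) to 5 (high), scored by the review team
    residual_risk: int  # 1 (low) to 5 (high) once the safeguard is applied


@dataclass
class TradeOffRecord:
    stakeholders: List[str]              # step (a)
    values_in_tension: Tuple[str, str]   # step (a)
    options: List[Safeguard]             # step (b): scored alternatives
    max_residual_risk: int = 2           # hypothetical acceptance threshold
    chosen: Optional[Safeguard] = None   # step (c)
    rationale: str = ""                  # step (c)

    def select_least_intrusive(self) -> Safeguard:
        """Least-intrusion test: lowest intrusiveness among acceptable options."""
        acceptable = [o for o in self.options
                      if o.residual_risk <= self.max_residual_risk]
        if not acceptable:
            raise ValueError("no option meets the residual-risk threshold")
        self.chosen = min(acceptable,
                          key=lambda o: (o.intrusiveness, o.residual_risk))
        self.rationale = (
            f"Chose '{self.chosen.name}': least intrusive of "
            f"{len(acceptable)} option(s) with residual risk <= "
            f"{self.max_residual_risk}."
        )
        return self.chosen
```

A transparency vs. privacy conflict might, for instance, compare showing full sensor history, showing only aggregated influencing factors, and giving no explanation; the record then preserves which option was chosen and why.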

5. Framework Implementation

5.1. Integration into Design and Development Processes

5.2. Ethical Risk Assessment Methodologies

5.3. Governance Structures and Responsibility Allocation

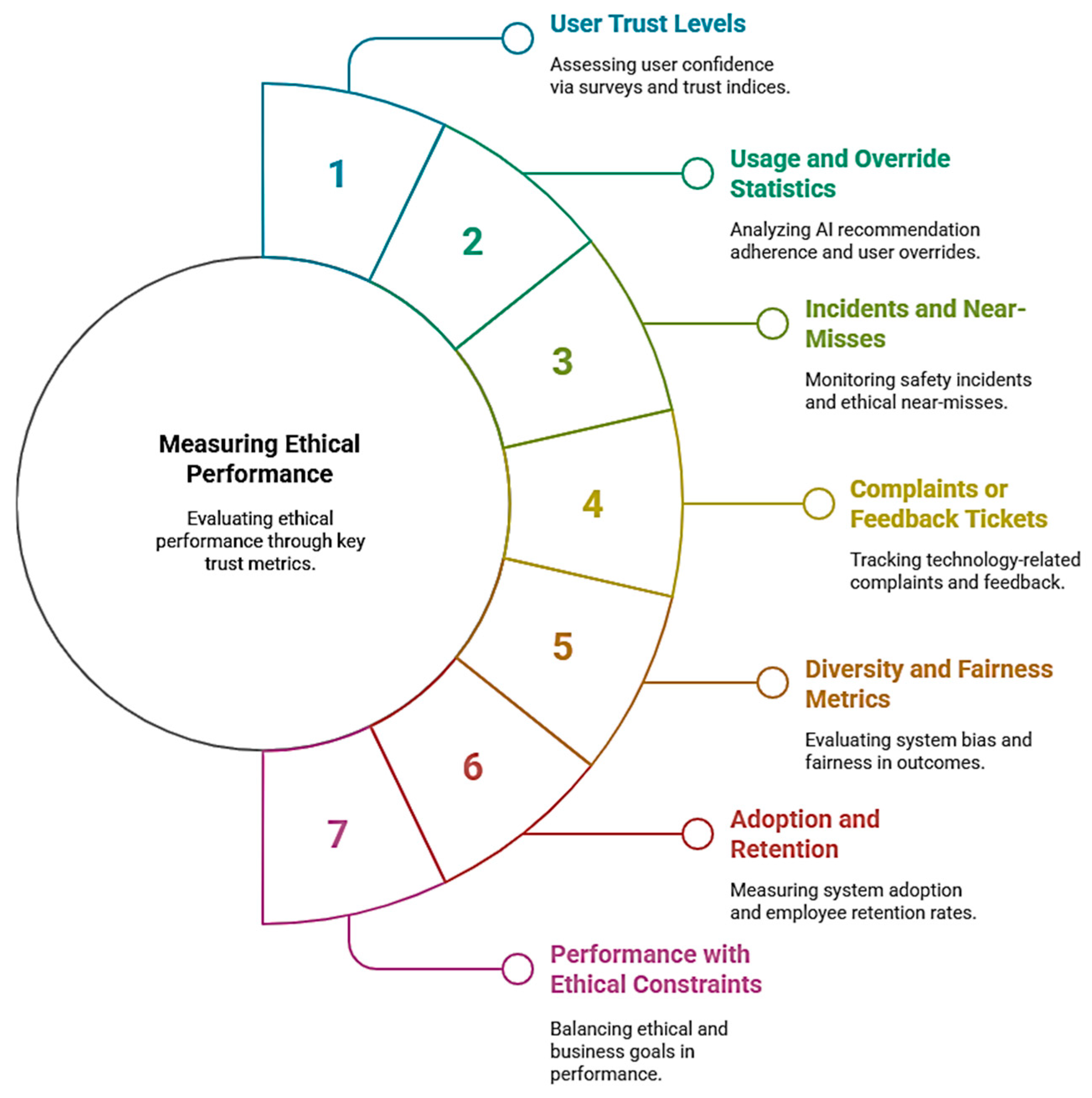

5.4. Metrics for Measuring Ethical Performance

- User trust levels: Measured via periodic surveys or interviews. Questions can gauge confidence in the system, perceived transparency, perceived impact on job satisfaction, etc. For instance, a trust index might be compiled from statements such as “I can predict how the robot will behave” or “The AI’s recommendations are generally sensible” rated by users. High trust scores (with healthy calibration—not overtrust) indicate success.

- Usage and override statistics: How often do users follow the AI recommendations vs. override them? How frequently do they resort to manual control of a cobot? If overrides are extremely high, it might indicate a lack of trust or usefulness. If overrides are zero but there were some AI errors that went unchecked, it could indicate over-trust or complacency. Balanced behavior where users appropriately rely on the system most of the time but occasionally correct it when needed would show well-calibrated trust.

- Incidents and near misses: Track any safety incidents or ethical issues (like a time the AI made a biased suggestion that was caught). Even if no actual harm occurred, near-miss reporting is invaluable. A log of “the AI almost caused X, but a human caught it” or “a worker felt uncomfortable with Y scenario” helps identify weak points. The goal is to see these numbers trend down as the system and training improve. An increasing trend would signal a need for intervention.

- Complaints or feedback tickets: If the company has a channel for employees to express concerns about technology, the number and nature of complaints related to the collaborative system serve as a metric. For example, if privacy complaints drop to zero after an update that clarified data use, the update can be considered effective.

- Diversity and fairness metrics: Analyze system outcomes for potential bias. For example, if it is an AI allocating shifts or maintenance tasks, measure distribution across employees to see if any group is overburdened. If it is a quality control AI flagging human work, ensure no particular worker’s outputs are flagged disproportionately without explanation. Fairness metrics could include statistical parity indices or disparate impact ratios drawn from the AI ethics literature, applied to the specific context.

- Adoption and retention: Indirectly, trust is reflected in continued usage. Metrics such as how many tasks are successfully handled by human-AI teams versus reverted to manual processes can indicate acceptance. In training contexts, how quickly new employees learn the system can reflect its intuitiveness (a proxy for good design). Even employee retention or attrition rates in teams using the new system versus those that do not could be insightful: ideally, the introduction of collaborative intelligence does not drive people to quit and perhaps even improves retention if it makes jobs easier or more engaging.

- Performance with ethical constraints: If the system uses multi-objective optimization including ethical factors, measure how well it is balancing them. For example, a scheduling system might have a target of no worker getting more than X hours of strenuous work. The metric would be the percentage of schedules adhering to that. Meeting ethical targets while achieving business goals demonstrates the framework’s success.
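Several of the metrics above reduce to simple ratios. The sketch below shows three of them under stated assumptions: an override rate from usage logs, a disparate impact ratio computed here as the lowest group rate divided by the highest group rate, and the share of schedules that respect a cap on strenuous hours. The function names and input shapes are hypothetical, and acceptable thresholds would have to be set for the specific context.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def override_rate(followed: List[bool]) -> float:
    """Share of AI recommendations that users overrode (followed == False)."""
    if not followed:
        raise ValueError("no recommendations recorded")
    return sum(1 for f in followed if not f) / len(followed)


def disparate_impact_ratio(outcomes: Iterable[Tuple[str, bool]]) -> float:
    """Lowest group outcome rate divided by the highest.

    outcomes: (group, received_outcome) pairs, e.g. whether a worker was
    assigned a strenuous task. A value of 1.0 means identical rates.
    """
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for group, received in outcomes:
        totals[group] += 1
        hits[group] += int(received)
    if not totals:
        raise ValueError("no outcomes recorded")
    rates = [hits[g] / totals[g] for g in totals]
    highest = max(rates)
    # If no group received the outcome at all, the rates are equal.
    return 1.0 if highest == 0 else min(rates) / highest


def schedule_adherence(schedules: List[Dict[str, float]],
                       max_hours: float) -> float:
    """Share of schedules in which no worker exceeds max_hours."""
    if not schedules:
        raise ValueError("no schedules recorded")
    compliant = sum(1 for s in schedules
                    if all(h <= max_hours for h in s.values()))
    return compliant / len(schedules)
```

An override rate near zero or near one is a prompt for investigation rather than a verdict: as noted above, the first may signal complacency and the second a lack of trust or usefulness.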

6. Application Scenarios

6.1. Scenario 1: Collaborative Robots in Manufacturing

6.2. Scenario 2: AI Decision Support in Industrial Operations

6.3. Scenario 3: Human Augmentation Technologies in Industrial Settings

7. Regulatory and Policy Implications

7.1. Comparative Regulatory Approaches in Europe and the United States

7.2. Industry Standards and Best Practices

- ISO and IEEE standards are notable here. IEEE’s 7000-series ethics standards (for example, IEEE 7001-2021 Transparency of Autonomous Systems and IEEE 7007-2021 Ontological Standard for Ethically Driven Robotics and Automation Systems) give engineers concrete, testable requirements for embedding ethical attributes [80,81]. ISO has published an AI management system standard for AI governance, ISO/IEC 42001:2023 Artificial Intelligence: Management System [82]. Companies can voluntarily adopt these to demonstrate their commitment to best practices. We recommend that industries adopt a certification approach; for example, a “Trustworthy AI” or “Collaborative System Safety” certification from a recognized body would signal to stakeholders (including insurers, clients, and employees) that the system meets a high ethical standard.

- Best practices sharing: Organizations such as the Robotics Industries Association (RIA) in the US or the International Federation of Robotics (IFR) often publish technical reports and case studies, for instance guidance on implementing cobots safely or lessons learned from human-AI teamwork. Policymakers can encourage industry consortia to develop open guidelines, analogous to how the automotive industry shares safety test protocols. In the context of Industry 5.0, best practices might include how to involve employees in technology deployments or how to run an effective pilot. Companies should not have to reinvent the wheel ethically; documenting and sharing what works (such as effective training methods or interface designs that improved trust) can accelerate widespread adoption of trust-centric design.

- Another best practice is aligning corporate governance with these ideals: e.g., companies could incorporate ethical AI use into their ESG (Environmental, Social, Governance) reporting. Some companies already report diversity and safety metrics; adding AI ethics metrics (such as the number of AI systems assessed for bias or whether an ethics committee is in place) could become part of social responsibility indices. This would pressure companies to follow frameworks such as Trust by Design in order to meet investor and public expectations.

8. Future Research Directions

8.1. Emerging Ethical Challenges

8.2. Technological Developments Impacting the Framework

8.3. Cross-Disciplinary Research Opportunities

8.4. Framework Evolution and Adaptation

9. Limitations

- (a) Role-based access controls on audit trails, so that only designated safety or ethics officers (not every manager) can view sensitive logs.

- (b) Clear data use policies that prohibit performance monitoring or punitive use of trust metrics, with enforceable penalties for violation.

- (c) Periodic “red-team” reviews by independent stakeholder representatives to ensure that data and controls remain aligned with user empowerment rather than managerial oversight.
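Safeguard (a) could be enforced in software along the following lines. This is a minimal Python sketch: the role names, the `AuditEntry` fields, and the rule that non-designated roles see only non-sensitive entries are assumptions made for illustration, not a description of any particular deployment.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    OPERATOR = "operator"
    LINE_MANAGER = "line_manager"
    SAFETY_OFFICER = "safety_officer"
    ETHICS_OFFICER = "ethics_officer"


# Assumption: only designated safety or ethics officers may read sensitive logs.
SENSITIVE_LOG_READERS = frozenset({Role.SAFETY_OFFICER, Role.ETHICS_OFFICER})


@dataclass(frozen=True)
class AuditEntry:
    entry_id: int
    message: str
    sensitive: bool  # True for entries that could identify or profile a worker


def visible_entries(role: Role, entries: list) -> list:
    """Return the audit entries that the given role is allowed to read."""
    if role in SENSITIVE_LOG_READERS:
        return list(entries)
    # Every other role, managers included, gets the non-sensitive subset only.
    return [entry for entry in entries if not entry.sensitive]


if __name__ == "__main__":
    trail = [
        AuditEntry(1, "Cobot cell 3 entered protective stop", sensitive=False),
        AuditEntry(2, "Operator overrode AI recommendation", sensitive=True),
    ]
    for role in (Role.LINE_MANAGER, Role.ETHICS_OFFICER):
        print(role.value, [e.entry_id for e in visible_entries(role, trail)])
```

Filtering at read time keeps the full trail intact for accountability while limiting who can use it, which matches the intent of separating empowerment from managerial oversight.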

10. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Trust by Design Implementation Checklist

- Initial Setup and Planning

- ☐

- Establish a cross-functional team including engineers, HR, safety officers, and ethics/legal experts

- ☐

- Define ethical goals and requirements alongside functional requirements

- ☐

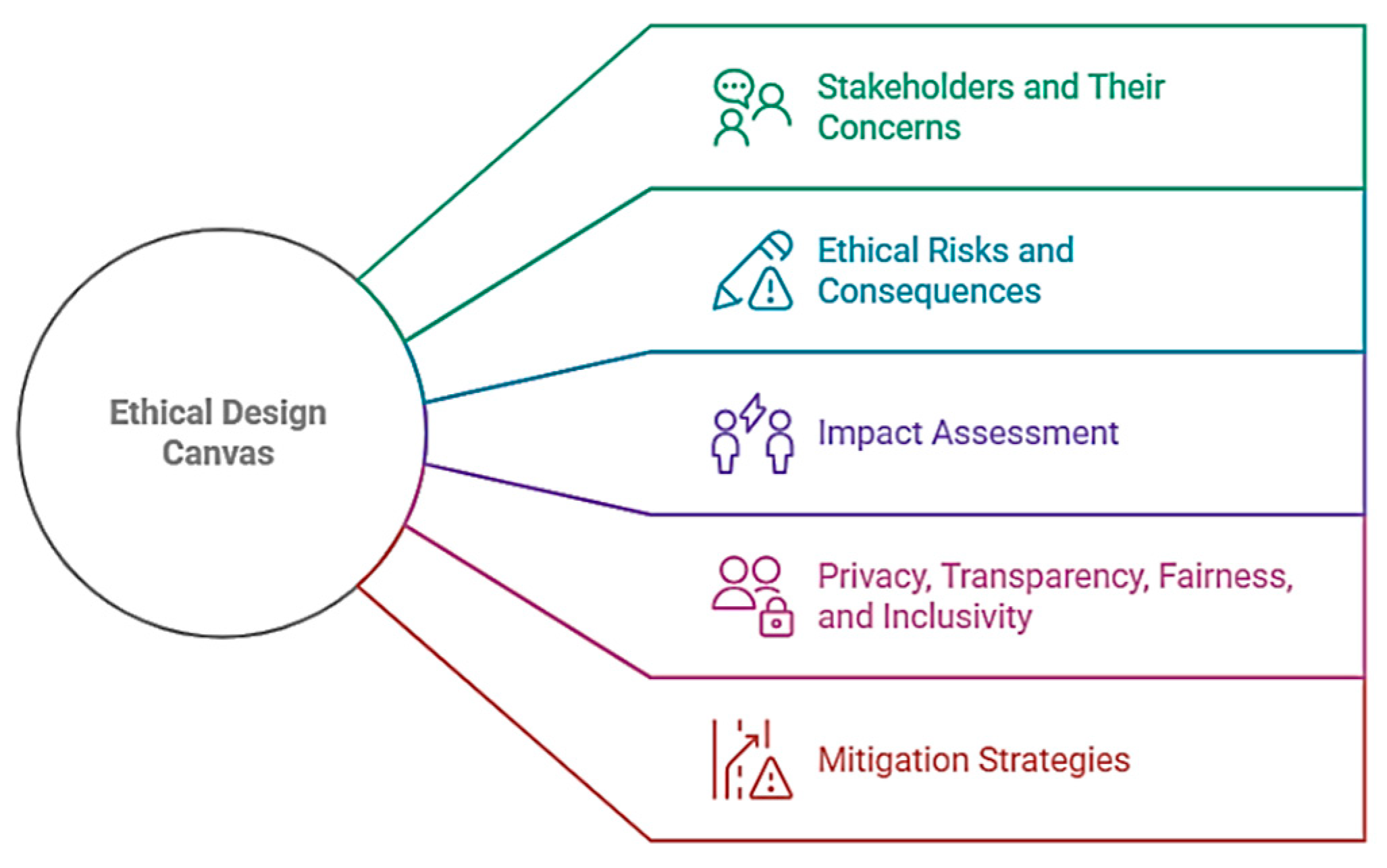

- Create an Ethical Design Canvas mapping stakeholders, potential harms, and mitigation strategies

- ☐

- Develop user journey maps that include emotional and trust-related states

- ☐

- Establish project-specific Trust by Design metrics and KPIs

- Governance and Responsibility

- ☐ Designate an ethics officer or form an AI ethics committee

- ☐ Define clear roles and responsibilities for ethical oversight

- ☐ Create a multi-stakeholder governance approach involving different organizational levels

- ☐ Establish procedures for ethical review at key project milestones

- ☐ Set up regular governance meetings (quarterly/annually) to review ethical performance

- Design and Development Stage

- ☐ Incorporate ethical user stories in requirements documentation

- ☐ Perform simulations and modeling to foresee interaction patterns

- ☐ Design redundant safety mechanisms

- ☐ Integrate ethics checkpoints in development cycles (Agile/DevOps)

- ☐ Create explanation panels or features for AI outputs

- ☐ Design features that address each core principle:

  - ☐ Human agency (override functions and confirmation requests)

  - ☐ Transparency (explanations and confidence levels)

  - ☐ Privacy (data minimization, encryption, and access controls)

  - ☐ Fairness (bias mitigation techniques)

  - ☐ Safety (fail-safe mechanisms and predictable behavior)

  - ☐ Accountability (decision logs and audit trails)

  - ☐ User involvement (tutorials and training resources)

- Ethical Risk Assessment

- ☐ Conduct comprehensive ethical risk assessment or “Ethical FMEA”

- ☐ Identify potential ethical and trust failure modes

- ☐ Analyze each risk for likelihood and impact

- ☐ Develop mitigations for each identified risk

- ☐ Document limitations for risks that cannot be fully resolved

- ☐ Align with existing safety risk assessments

- Testing and Validation Stage

- ☐ Conduct user testing specifically to gauge trust levels

- ☐ Use trust scales or surveys to quantify user trust

- ☐ Perform safety tests under various edge cases

- ☐ Conduct an ethical audit (internal or external)

- ☐ Test with diverse users to ensure inclusivity

- ☐ Verify compliance with relevant standards (e.g., IEEE 7000-2021)

- ☐ Iterate design based on trust-related feedback

- Deployment Stage

- ☐ Plan pilot or phased deployment approach

- ☐ Prepare human-led orientation explaining the system’s goals and operations

- ☐ Create mentorship models (tech champions for peer training)

- ☐ Design built-in tutorials or AI-guided onboarding

- ☐ Start with low-stakes tasks before progressing to critical functions

- ☐ Communicate clearly about data usage and privacy controls

- Operation and Maintenance Stage

- ☐ Implement continuous monitoring of system performance and user feedback

- ☐ Create dashboards showing system health and anomalies

- ☐ Program AI to defer to humans in novel situations

- ☐ Schedule regular training refreshers and update communications

- ☐ Implement predictive maintenance and AI model retraining

- ☐ Monitor for signs of overreliance or skill atrophy

- Feedback and Evolution Stage

- ☐ Establish accessible feedback channels for users

- ☐ Create a process for reviewing feedback by the development team or ethics committee

- ☐ Schedule periodic reassessment of ethical risks, especially when scaling

- ☐ Document any new scenarios or issues that emerge in real-world use

- ☐ Update the system based on feedback and evolving ethical considerations

- Measurement and Reporting

- ☐ Track user trust levels through surveys or interviews

- ☐ Monitor usage and override statistics

- ☐ Log incidents and near misses

- ☐ Track complaints or feedback tickets

- ☐ Analyze diversity and fairness metrics

- ☐ Measure adoption and retention rates

- ☐ Evaluate performance against ethical constraints

- ☐ Create transparent reporting dashboards

- ☐ Establish response protocols for negative metrics
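The Ethical Risk Assessment items in this checklist (identify failure modes, analyze likelihood and impact, develop mitigations) can be sketched as a small risk register. The Python example below is only one possible reading: the 1 to 5 scales, the likelihood × impact score, and the threshold are assumptions for illustration, and a classical FMEA would typically also include a detection rating.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EthicalRisk:
    """One ethical or trust failure mode (hypothetical register entry)."""
    failure_mode: str
    likelihood: int       # assumed scale: 1 (rare) to 5 (frequent)
    impact: int           # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str = ""  # empty string means no mitigation documented yet

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        """Simple priority score: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def prioritize(risks: list) -> list:
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


def needs_mitigation(risks: list, threshold: int) -> list:
    """High-scoring risks that still lack a documented mitigation."""
    return [r for r in prioritize(risks) if r.score >= threshold and not r.mitigation]


if __name__ == "__main__":
    register = [
        EthicalRisk("Opaque task reassignment", 2, 3),
        EthicalRisk("Operator over-reliance", 4, 5, "Periodic manual drills"),
        EthicalRisk("Biased shift allocation", 3, 4),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {risk.failure_mode}")
    print("Open items:", [r.failure_mode for r in needs_mitigation(register, 10)])
```

Risks that remain above the threshold after mitigation would be candidates for the “document limitations” item rather than being dropped from the register.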

References

- Yitmen, I.; Almusaed, A.; Alizadehsalehi, S. Investigating the causal relationships among enablers of the construction 5.0 paradigm: Integration of operator 5.0 and society 5.0 with human-centricity, sustainability, and resilience. Sustainability 2023, 15, 9105.

- Trstenjak, M.; Benešova, A.; Opetuk, T.; Cajner, H. Human Factors and Ergonomics in Industry 5.0—A Systematic Literature Review. Appl. Sci. 2025, 15, 2123.

- Bhatt, A.; Bae, J. Collaborative Intelligence to catalyze the digital transformation of healthcare. NPJ Digit. Med. 2023, 6, 177.

- Alves, M.; Seringa, J.; Silvestre, T.; Magalhães, T. Use of artificial intelligence tools in supporting decision-making in hospital management. BMC Health Serv. Res. 2024, 24, 1282.

- Ammeling, J.; Aubreville, M.; Fritz, A.; Kießig, A.; Krügel, S.; Uhl, M. An interdisciplinary perspective on AI-supported decision making in medicine. Technol. Soc. 2025, 81, 102791.

- Przegalinska, A.; Triantoro, T.; Kovbasiuk, A.; Ciechanowski, L.; Freeman, R.B.; Sowa, K. Collaborative AI in the workplace: Enhancing organizational performance through resource-based and task-technology fit perspectives. Int. J. Inf. Manag. 2025, 81, 102853.

- Loizaga, E.; Bastida, L.; Sillaurren, S.; Moya, A.; Toledo, N. Modelling and Measuring Trust in Human–Robot Collaboration. Appl. Sci. 2024, 14, 1919.

- Lykov, D.; Razumowsky, A. Industry 5.0 and Human Capital. In Proceedings of the International Scientific and Practical Conference “Environmental Risks and Safety in Mechanical Engineering”, Rostov-on-Don, Russia, 1–3 March 2023; p. 2023.

- Riar, M.; Weber, M.; Ebert, J.; Morschheuser, B. Can Gamification Foster Trust-Building in Human-Robot Collaboration? An Experiment in Virtual Reality. Inf. Syst. Front. 2025, 1–26.

- Iqbal, M.; Lee, C.K.; Ren, J.Z. Industry 5.0: From Manufacturing Industry to Sustainable Society. In Proceedings of the 2022 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Kuala Lumpur, Malaysia, 7–10 December 2022; pp. 1416–1421.

- Shahruddin, S.; Sonet, U.N.; Azmi, A.; Zainordin, N. Traversing the complexity of digital construction and beyond through soft skills: Experiences of Malaysian architects. Eng. Constr. Archit. Manag. 2024.

- Damaševičius, R.; Vasiljevas, M.; Narbutaitė, L.; Blažauskas, T. Exploring the impact of collaborative robots on human–machine cooperation in the era of Industry 5.0. In Modern Technologies and Tools Supporting the Development of Industry 5.0; CRC Press: Boca Raton, FL, USA, 2024.

- Martini, B.; Bellisario, D.; Coletti, P. Human-Centered and Sustainable Artificial Intelligence in Industry 5.0: Challenges and Perspectives. Sustainability 2024, 16, 5448.

- Fraga-Lamas, P.; Fernández-Caramés, T.M.; Cruz, A.M.; Lopes, S.I. An Overview of Blockchain for Industry 5.0: Towards Human-Centric, Sustainable and Resilient Applications. IEEE Access 2024, 12, 116162–116201.

- Rame, R.; Purwanto, P.; Sudarno, S. Industry 5.0 and sustainability: An overview of emerging trends and challenges for a green future. Innov. Green Dev. 2024, 3, 100173.

- Brückner, A.; Wölke, M.; Hein-Pensel, F.; Schero, E.; Winkler, H.; Jabs, I. Assessing industry 5.0 readiness—Prototype of a holistic digital index to evaluate sustainability, resilience and human-centered factors. Int. J. Inf. Manag. Data Insights 2025, 5, 100329.

- Chew, Y.C.; Mohamed Zainal, S.R. A Sustainable Collaborative Talent Management Through Collaborative Intelligence Mindset Theory: A Systematic Review. Sage Open 2024, 14, 1–22.

- Rijwani, T.; Kumari, S.; Srinivas, R.; Abhishek, K.; Iyer, G.; Vara, H.; Gupta, M. Industry 5.0: A review of emerging trends and transformative technologies in the next industrial revolution. Int. J. Interact. Des. Manuf. 2025, 19, 667–679.

- Langås, E.F.; Zafar, M.H.; Sanfilippo, F. Exploring the synergy of human-robot teaming, digital twins, and machine learning in industry 5.0: A step towards sustainable manufacturing. J. Intell. Manuf. 2025, 1–24.

- Abdel-Basset, M.; Mohamed, R.; Chang, V. A Multi-criteria decision-making Framework to evaluate the impact of industry 5.0 technologies: Case Study, lessons learned, challenges and future directions. Inf. Syst. Front. 2024, 1–31.

- Hassan, M.; Zardari, S.; Farooq, M.; Alansari, M.; Nagro, S. Systematic Analysis of Risks in Industry 5.0 Architecture. Appl. Sci. 2024, 14, 1466.

- Karadayi-Usta, S. An Interpretive Structural Analysis for Industry 4.0 Adoption Challenges. IEEE Trans. Eng. Manag. 2020, 67, 973–978.

- Hsu, C.-H.; Li, Z.-H.; Zhuo, H.-J.; Zhang, T.-Y. Enabling Industry 5.0-Driven Circular Economy Transformation: A Strategic Roadmap. Sustainability 2024, 16, 9954.

- Carayannis, E.G.; Kafka, K.I.; Kostis, P.C.; Valvi, T. Robust, Resilient and Remunerative (R3) SMEs Ecosystems in the Quintuple Helix Context: Industry 5.0, Society 5.0 and AI Modalities Challenges and Opportunities for Theory, Policy and Practice. In The Economic Impact of Small and Medium-Sized Enterprises; Springer Nature: Berlin/Heidelberg, Germany, 2024; pp. 173–191.

- Santos, B.; Costa, R.L.; Santos, L. Cybersecurity in Industry 5.0: Open Challenges and Future Directions. In Proceedings of the 21st Annual International Conference on Privacy, Security and Trust (PST), Sydney, Australia, 28–30 August 2024; pp. 1–6.

- Chaudhuri, A.; Behera, R.K.; Bala, P.K. Factors impacting cybersecurity transformation: An Industry 5.0 perspective. Comput. Secur. 2025, 150, 104267.

- Ghobakhloo, M.; Mahdiraji, H.A.; Iranmanesh, M.; Jafari-Sadeghi, V. From Industry 4.0 digital manufacturing to Industry 5.0 digital society: A roadmap toward human-centric, sustainable, and resilient production. Inf. Syst. Front. 2024, 1–33.

- Chrifi-Alaoui, C.; Bouhaddou, I.; Benabdellah, A.C.; Zekhnini, K. Industry 5.0 for Sustainable Supply Chains: A Fuzzy AHP Approach for Evaluating the adoption Barriers. Procedia Comput. Sci. 2025, 253, 2645–2654.

- Campagna, G.; Lagomarsino, M.; Lorenzini, M.; Chrysostomou, D.; Rehm, M.; Ajoudani, A. Promoting Trust in Industrial Human-Robot Collaboration Through Preference-Based Optimization. IEEE Robot. Autom. Lett. 2024, 9, 9255–9262.

- Chen, N.; Liu, X.; Hu, X. Effects of robots’ character and information disclosure on human–robot trust and the mediating role of social presence. Int. J. Soc. Robot. 2024, 16, 811–825.

- Thurzo, A. How is AI Transforming Medical Research, Education and Practice? Bratisl. Med. J. 2025, 126, 243–248.

- Textor, C.; Zhang, R.; Lopez, J.; Schelble, B.G.; McNeese, N.J.; Freeman, G.; Visser, E.J. Exploring the Relationship Between Ethics and Trust in Human–Artificial Intelligence Teaming: A Mixed Methods Approach. J. Cogn. Eng. Decis. Mak. 2022, 16, 252–281.

- IBM. What Is AI Ethics? Available online: https://www.ibm.com/think/topics/ai-ethics (accessed on 15 January 2025).

- Giovine, C.; Roberts, R.; Pometti, M.; Bankhwal, M. Building AI Trust: The Key Role of Explainability. 2024. Available online: https://www.mckinsey.com/capabilities/quantumblack/our-insights/building-ai-trust-the-key-role-of-explainability#/ (accessed on 20 February 2025).

- Hadzovic, S.; Mrdovic, S.; Radonjic, M. A Path Towards an Internet of Things and Artificial Intelligence Regulatory Framework. IEEE Commun. Mag. 2023, 61, 90–96.

- Kolesnikov, M.; Lossi, L.; Alberti, E.; Atmojo, U.D.; Vyatkin, V. Addressing Privacy and Security Challenges at the Industry 5.0 Human-Intensive and Highly Automated Factory Floor. In Proceedings of the IECON 2024 50th Annual Conference of the IEEE Industrial Electronics Society, Chicago, IL, USA, 3–6 November 2024; pp. 1–6.

- Malatji, M. Evaluating Human-Machine Interaction Paradigms for Effective Human-Artificial Intelligence Collaboration in Cybersecurity. In Proceedings of the 2024 International Conference on Intelligent Cybernetics Technology & Applications (ICICyTA), Bali, Indonesia, 17–19 December 2024; pp. 1268–1272.

- Usmani, U.A.; Happonen, A.; Watada, J. Human-Centered Artificial Intelligence: Designing for User Empowerment and Ethical Considerations. In Proceedings of the 2023 5th International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Istanbul, Turkey, 8–10 June 2023; pp. 1–7.

- Samarawickrama, M. The Irreducibility of Consciousness in Human Intelligence: Implications for AI, Legal Accountability, and the Human-in-the-Loop Approach. In Proceedings of the 2024 IEEE Conference on Engineering Informatics (ICEI), Melbourne, Australia, 20–28 November 2024; pp. 1–7.

- D’souza, C.; Tapas, P. Diversity 5.0 framework: Managing innovation in Industry 5.0 through diversity and inclusion. Eur. J. Innov. Manag. 2024.

- Aydin, E.; Rahman, M.; Bulut, C.; Biloslavo, R. Technological Advancements and Organizational Discrimination: The Dual Impact of Industry 5.0 on Migrant Workers. Adm. Sci. 2024, 14, 240.

- ISO/TS 15066:2016; Robots and Robotic Devices—Collaborative robots. ISO: Geneva, Switzerland, 2016.

- ISO 10218-1:2025; Robotics—Safety Requirements Part 1: Industrial Robots. ISO: Geneva, Switzerland, 2025.

- ISO 10218-2:2025; Robotics—Safety Requirements Part 2: Industrial Robot Applications and Robot Cells. ISO: Geneva, Switzerland, 2025.

- Makeshkumar, M.; Sasi Kumar, M.; Anburaj, J.; Ramesh Babu, S.; Vembarasan, E.; Sanjiv, R. Role of Cobots and Industrial Robots in Industry 5.0. In Intelligent Robots and Cobots: Industry 5.0 Applications; Wiley Online Library: Hoboken, NJ, USA, 2025; pp. 43–63.

- Liao, S.; Lin, L.; Chen, Q. Research on the acceptance of collaborative robots for the industry 5.0 era–The mediating effect of perceived competence and the moderating effect of robot use self-efficacy. Int. J. Ind. Ergon. 2023, 95, 103455.

- Golgeci, I.; Ritala, P.; Arslan, A.; McKenna, B.; Ali, I. Confronting and alleviating AI resistance in the workplace: An integrative review and a process framework. Hum. Resour. Manag. Rev. 2025, 35, 101075.

- Jacob, F.; Grosse, E.H.; Morana, S.; König, C.J. Picking with a robot colleague: A systematic literature review and evaluation of technology acceptance in human–robot collaborative warehouses. Comput. Ind. Eng. 2023, 180, 109262.

- Lawton, R.; Boswell, S.; Crockett, K. The GM AI Foundry: A Model for Upskilling SME’s in Responsible AI. In Proceedings of the 2023 IEEE Symposium Series on Computational Intelligence (SSCI), Mexico City, Mexico, 5–8 December 2023; pp. 1781–1787.

- OECD. OECD Principles on Artificial Intelligence; OECD Legal Instruments: 2019. Available online: https://www.oecd.org/en/topics/sub-issues/ai-principles.html (accessed on 10 December 2024).

- Directorate-General for Communications Networks, Content and Technology. The Assessment List for Trustworthy Artificial Intelligence (ALTAI) for Self-Assessment; European Commission: Brussels, Belgium; Luxembourg, 2020.

- Bohr, B. How to Turn Ethical Values Into System Requirements: Lessons Learned from Adopting a New IEEE Standard in the Business World. IEEE Softw. 2025, 42, 9–16.

- Brey, P.; Dainow, B. Ethics by design for artificial intelligence. AI Ethics 2024, 4, 1265–1277.

- Callari, T.C.; Segate, R.V.; Hubbard, E.M.; Daly, A.; Lohse, N. An ethical framework for human-robot collaboration for the future people-centric manufacturing: A collaborative endeavour with European subject-matter experts in ethics. Technol. Soc. 2024, 78, 102680.

- Palumbo, G.; Carneiro, D.; Alves, V. Objective metrics for ethical AI: A systematic literature review. Int. J. Data Sci. Anal. 2024, 1–21.

- Thurzo, A. Provable AI Ethics and Explainability in Medical and Educational AI Agents: Trustworthy Ethical Firewall. Electronics 2025, 14, 1294.

- Nurock, V.; Chatila, R.; Parizeau, M.H. What Does “Ethical by Design” Mean? In Reflections on Artificial Intelligence for Humanity; Braunschweig, M.I.B., Ghallab, M., Eds.; Springer Nature: Berlin/Heidelberg, Germany, 2021.

- Drev, M.; Delak, B. Conceptual Model of Privacy by Design. J. Comput. Inf. Syst. 2021, 62, 888–895.

- Andrade, V.C.; Gomes, R.D.; Reinehr, S.; Freitas, C.O.; Malucelli, A. Privacy by design and software engineering: A systematic literature review. In Proceedings of the XXI Brazilian Symposium on Software Quality, Curitiba, Brazil, 7–10 November 2022; pp. 1–10.

- Kassem, J.A.; Müller, T.; Esterhuyse, C.A.; Kebede, M.G.; Osseyran, A.; Grosso, P. The EPI framework: A data privacy by design framework to support healthcare use cases. Future Gener. Comput. Syst. 2025, 165, 107550.

- Chen, Z. Ethics and discrimination in artificial intelligence-enabled recruitment practices. Humanit. Soc. Sci. Commun. 2023, 10, 567.

- Friedman, B.; Kahn, P.; Borning, A.; Huldtgren, A. Value Sensitive Design and Information Systems. In Early Engagement and New Technologies: Opening up the Laboratory; Doorn, N., Schuurbiers, D., Poel, I., Gorman, M., Eds.; Philosophy of Engineering and Technology; Springer: Berlin/Heidelberg, Germany, 2013.

- Tolmeijer, S.; Christen, M.; Kandul, S.; Kneer, M.; Bernstein, A. Capable but amoral? Comparing AI and human expert collaboration in ethical decision making. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 30 April–5 May 2022; pp. 1–17.

- Frigo, G.; Marthaler, F.; Albers, A.; Ott, S.; Hillerbrand, R. Training responsible engineers. Phronesis and the role of virtues in teaching engineering ethics. Australas. J. Eng. Educ. 2021, 26, 25–37.

- Yagoda, R.; Gillan, D. You Want Me to Trust a ROBOT? The Development of a Human–Robot Interaction Trust Scale. Int. J. Soc. Robot. 2012, 4, 235–248.

- Merritt, S.M.; Ilgen, D.R. Not All Trust Is Created Equal: Dispositional and History-Based Trust in Human-Automation Interactions. Hum. Factors 2008, 50, 194–210.

- Reijers, W.; Koidl, K.; Lewis, D.; Pandit, H.; Gordijn, B. Discussing Ethical Impacts in Research and Innovation: The Ethics Canvas. In This Changes Everything—ICT and Climate Change: What Can We Do? Kreps, D., Ess, C., Leenen, L., Kimppa, K., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 537, pp. 299–313.

- Endmann, A.; Keßner, D. User Journey Mapping—A Method in User Experience Design. I-Com 2016, 15, 105–110.

- Berx, N.; Adriaensen, A.; Decré, W.; Pintelon, L. Assessing System-Wide Safety Readiness for Successful Human–Robot Collaboration Adoption. Safety 2022, 8, 48.

- Ong, J.C.; Chang, S.Y.; William, W.; Butte, A.J.; Shah, N.H.; Chew, L.S.; Ting, D.S. Ethical and regulatory challenges of large language models in medicine. Lancet Digit. Health 2024, 6, e428–e432.

- UNESCO. Ethical Impact Assessment: A Tool of the Recommendation on the Ethics of Artificial Intelligence; UNESCO: Paris, France, 2023.

- Cancela-Outeda, C. The EU’s AI act: A framework for collaborative governance. Internet Things 2024, 27, 101291.

- Rozenblit, L.; Price, A.; Solomonides, A.; Joseph, A.L.; Srivastava, G.; Labkoff, S.; Quintana, Y. Towards a Multi-Stakeholder process for developing responsible AI governance in consumer health. Int. J. Med. Inf. 2025, 195, 105713.

- Triandis, H.C.; Gelfand, M.J. Converging measurement of horizontal and vertical individualism and collectivism. J. Pers. Soc. Psychol. 1998, 74, 118–128.

- Pote, T.R.; Asbeck, N.V.; Asbeck, A.T. The ethics of mandatory exoskeleton use in commercial and industrial settings. IEEE Trans. Technol. Soc. 2023, 4, 302–313.

- Directorate-General for Research and Innovation. Industry 5.0, a transformative vision for Europe. ESIR Policy Brief 2021.

- European Parliament, European Union Commission. Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence (Artificial Intelligence Act). Off. J. Eur. Union 2024, 1–144. Available online: http://data.europa.eu/eli/reg/2024/1689/oj (accessed on 15 December 2024).

- NIST. Artificial Intelligence Risk Management; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2023.

- Office of Science and Technology Policy. Blueprint for an AI Bill of Rights. The White House. 2022. Available online: https://bidenwhitehouse.archives.gov/ostp/ai-bill-of-rights/ (accessed on 15 November 2024).

- IEEE 7001-2021; IEEE Standard for Transparency of Autonomous Systems. IEEE: New York City, NY, USA, 2024.

- IEEE 7007-2021; IEEE Ontological Standard for Ethically Driven Robotics and Automation Systems. IEEE: New York City, NY, USA, 2021.

- ISO/IEC 42001:2023; ISO/IEC Information Technology—Artificial Intelligence—Management System. ISO: Geneva, Switzerland, 2023.

- Crootof, R.; Kaminski, M.; Price, W.N. Humans in the Loop. Vanderbilt Law Rev. 2023, 76, 429.

- Ramírez-Moreno, M.; Carrillo-Tijerina, P.; Candela-Leal, M.; Alanis-Espinosa, M.; Tudón-Martínez, J.; Roman-Flores, A.; Lozoya-Santos, J. Evaluation of a Fast Test Based on Biometric Signals to Assess Mental Fatigue at the Workplace—A Pilot Study. Int. J. Environ. Res. Public Health 2021, 18, 11891.

- Moshawrab, M.; Adda, M.; Bouzouane, A.; Ibrahim, H.; Raad, A. Smart Wearables for the Detection of Occupational Physical Fatigue: A Literature Review. Sensors 2022, 22, 7472.

| Characteristic | Technology | Humans | Ethics |
|---|---|---|---|
| Focus | Augmenting human abilities | Algorithmic explainability and interpretability | Ethical, responsible systems |
| Goal | Enhance performance | Ensure effective human oversight | Embed ethical considerations |
| Key Aspect | Supportive, non-substitutive design | Maintain user confidence and control | Transparent and accountable governance |

| Paper | Approach | Domain Focus | Core Objective | Proposed Methodology | Ethics and Trust Focus | Industry 5.0 Alignment |
|---|---|---|---|---|---|---|
| Bohr (2025) [52] | Case study narrative of adopting IEEE 7000 ethics-by-design standard. | Ethics-by-design in software engineering. | Share lessons learned in translating ethical values into system requirements. | Risk-based integration of IEEE 7000 | Bridges high-level values to concrete requirements; emphasizes traceability and stakeholder engagement. | Governance framework for ethics-by-design in I5.0 development |
| Brey and Dainow (2024) [53] | Conceptual development of the EbD-AI ethics-by-design approach. | Ethically guided AI system design. | Present and compare a full EbD-AI framework adopted by EU Horizon ethics review. | Six-stage procedure: Values → stakeholder Review → monitoring | Comprehensive integration of seven ethics requirements; trust via upfront ethics embedding and review. | Practical methodology for embedding ethics in I5.0 AI lifecycles |
| Callari et al. (2024) [54] | Delphi-based co-creation of an ethical H-R collaboration framework. | Ethics in human–robot collaboration for people-centric manufacturing. | Co-design, with experts, a holistic ethical framework at shop floor, organizational, and societal levels. | Three-round Delphi with ethics experts | Central governance for ethics awareness, responsibility, and accountability to foster trust. | Human-centric pillar; governance for responsible robotics integration |
| Fraga-Lamas et al. [14] | Analytical review of blockchain’s role in Industry 5.0. | Blockchain for human-centric, sustainable, and resilient applications. | Provide a detailed guide on how blockchain can underpin I5.0’s pillars and what design factors to consider. | Taxonomy by I5.0 pillar + design guidelines | Positions blockchain as a trust anchor (immutability, decentralization); ethical focus on worker empowerment and data sovereignty. | Supports all three I5.0 pillars via trustworthy data sharing |
| Ghobakhloo et al. (2024) [27] | Content synthesis + HF-ISM roadmap modeling. | Roadmap from I4.0 digital manufacturing to I5.0 digital society. | Clarify drivers behind Industry 5.0’s emergence and sequence I4.0 sustainability functions to enable I5.0 goals. | I4.0 sustainability synthesis + HF-ISM for function interdependencies | Emphasizes governance, socio-environmental sustainability and trust via stakeholder-driven digitalization. | Integrates economic, social, and environmental pillars; resilience roadmap |
| Langås et al. (2025) [19] | Integrative review and conceptual mapping of HRT, digital twins, and ML synergy. | Sustainable manufacturing through human–robot teaming and digital twins. | Examine how combining HRT, DT, and ML can enable safe, efficient, and sustainable human-centric production. | HRI → HRC → pHRC → HRT mapping with DT/ML enablers | Implicit ethical concern for worker safety and well-being; limited explicit treatment of fairness. | Aligns digital-physical integration with human-centric and sustainability pillars |
| Martini et al. (2024) [13] | Position paper on HCAI in I5.0 and circular economy. | Human-centered AI and circular economy in additive manufacturing. | Identify major challenges and prospective areas for human-centered AI in I5.0. | Mapping HCAI onto AM workflows + policy analysis | Emphasizes ethics, transparency and regulation for HCAI; trust via participatory design. | Human-centric and sustainability pillars; circular economy enabler |
| Palumbo et al. (2024) [55] | SLR of objective metrics for ethical AI aligned with EU Trustworthy AI. | AI ethics metrics per seven EU principles. | Identify and categorize objective metrics to assess AI Ethics. | SLR protocol mapping metrics to 7 ethics principles | Deep focus on metrics for fairness, transparency, accountability; trust via measurable compliance. | Provides measurable KPIs for embedding ethics in I5.0 systems |
| Przegalińska et al. (2025) [6] | Experimental evaluation of generative AI as a collaborative assistant. | Human–AI collaboration in workplace tasks. | Explore how generative AI tools optimize organizational task performance across complexity and creativity. | RBV + TTF task typology + live generative-AI experiments | Supports trust by showing AI’s positive sentiment and clarity; ethics of augmentation, not replacement. | Human-AI teaming; hybrid intelligence; organizational performance |
| Riar et al. (2025) [9] | Experimental comparison of three design interventions (non-gamified, gameful, playful) in VR. | Human–robot collaboration (HRC) trust-building via gamification. | Investigate how gameful versus playful design influences cognitive and affective trust in collaborative robots. | The three-arm VR experiment manipulates gamification archetypes and measures trust outcomes. | Directly targets affective trust and specific antecedents; ethics in ensuring positive emotional connection. | Emphasizes user experience in cobots; human-centric interaction |
| Santos et al. (2024) [25] | Analytical review of cyberattack surfaces and countermeasures; critical analysis of existing frameworks. | Cybersecurity within Industry 5.0. | Identify new threats posed by I5.0 enabling technologies and evaluate current industrial implementation frameworks to secure the transition from I4.0 to I5.0. | Threat matrix + I4.0 framework gap analysis | Emphasizes safeguarding human-centric values, privacy and mental health by robust cybersecurity; builds trust through resilience. | Highlights resilience and human-centricity via robust cybersecurity |
| Textor et al. (2022) [32] | Mixed-methods exploration of ethics in human–AI teams. | Ethics and trust dynamics in human–AI teaming. | Uncover how ethical considerations shape, and are shaped by, trust in collaborative AI settings. | Interviews + surveys | Core focus on the co-dependence of ethics and trust; transparency and accountability emerge as key. | Underpins ethical governance in human-AI collaboration |
| Thurzo (2025) [56] | Architectural design of a provable-ethics “ethical firewall”. | Provable ethics and explainability in high-stakes AI (medical/educational). | Embed mathematically verifiable ethical constraints into AI decision cores. | Formal logic + blockchain + Bayesian escalation | Ethics and trust engineered into AI core; decisions provably aligned with human values. | Ensures real-time transparency and accountability in high-stakes I5.0 AI |
| Trstenjak et al. (2025) [2] | SLR of human factors and ergonomics in Industry 5.0 work environments. | Identify characteristics, dimensions, and principles enabling/hindering human-centric work designs. | PRISMA SLR of WoS (983 records → 119); thematic grouping into nine ergonomics domains. | PRISMA WoS review into nine domains | Addresses I5.0’s human-centric pillar by detailing ergonomic requirements for collaborative work. | Human-centric socio-technical design; sustainability; resilience |

| Lifecycle Stage | Dial | High-Reliability Industries | Light-Manufacturing/Creative Sectors | Collectivist Cultures | Individualist Cultures |
|---|---|---|---|---|---|
| Design and Development | Certification rigor | Mandatory, formal certification | Optional, guideline-based | Approval by shared committee | Individual sign-off with oversight |
| | Stakeholder involvement | Broad, cross-functional review boards | Lean, agile prototyping workshops | Group-focused co-design sessions | Empowered experts driving design |
| Testing and Validation | Audit frequency | Quarterly independent audits | Ad hoc peer reviews | Rotating team reviews | One-on-one expert debriefs |
| | Testing depth | Comprehensive in-situ trials | Minimal viable testing | Consensus-based pilot groups | Self-directed sandbox testing |
| Deployment | Override mandate | Mandatory human-override layers | Optional “on-demand” override buttons | Shared decision-making boards | Personal override controls |
| | Onboarding training | Formal certification courses | Informal workshops and demos | Group training sessions | Self-paced e-learning modules |
| Operation and Maintenance | Monitoring intensity | Continuous real-time monitoring | Periodic spot checks | Team monitoring rosters | Personal dashboards and alerts |
| | Transparency level | Detailed logs and dashboards | High-level summaries | Collective reporting sessions | Individual notifications |
| Feedback and Evolution | Feedback loop formalization | Scheduled, structured review cycles | Open, rolling feedback channels | Committee-driven retrospectives | Direct feedback to system owners |
| | Adjustment cycle | Fixed quarterly updates | Continuous integration/deployment | Collective roadmap planning | Individual-driven feature requests |
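
The dial table can be read as a small configuration matrix. The following is a minimal sketch, not part of the framework itself, of how three of its rows might be encoded and queried. The identifiers `SECTORS`, `CULTURES`, `DIALS`, and `dial_profile` are hypothetical, and pairing one sector column with one culture column into a single deployment profile is an assumption made here for illustration only.

```python
# Illustrative encoding of three rows of the dial table.
# All identifiers below are hypothetical and chosen for this sketch.
SECTORS = ("high_reliability", "light_manufacturing_creative")
CULTURES = ("collectivist", "individualist")

# (lifecycle stage, dial) -> setting per context column, copied from the table.
# The remaining rows of the table would follow the same pattern.
DIALS = {
    ("Design and Development", "Certification rigor"): {
        "high_reliability": "Mandatory, formal certification",
        "light_manufacturing_creative": "Optional, guideline-based",
        "collectivist": "Approval by shared committee",
        "individualist": "Individual sign-off with oversight",
    },
    ("Deployment", "Override mandate"): {
        "high_reliability": "Mandatory human-override layers",
        "light_manufacturing_creative": 'Optional "on-demand" override buttons',
        "collectivist": "Shared decision-making boards",
        "individualist": "Personal override controls",
    },
    ("Operation and Maintenance", "Monitoring intensity"): {
        "high_reliability": "Continuous real-time monitoring",
        "light_manufacturing_creative": "Periodic spot checks",
        "collectivist": "Team monitoring rosters",
        "individualist": "Personal dashboards and alerts",
    },
}


def dial_profile(sector: str, culture: str) -> dict:
    """Return the sector and culture setting for every encoded dial.

    Assumption: a deployment is described by one sector column and one
    culture column of the table; the table itself does not prescribe this.
    """
    if sector not in SECTORS:
        raise ValueError(f"unknown sector: {sector!r}")
    if culture not in CULTURES:
        raise ValueError(f"unknown culture: {culture!r}")
    return {
        key: {"sector": row[sector], "culture": row[culture]}
        for key, row in DIALS.items()
    }


profile = dial_profile("high_reliability", "collectivist")
for (stage, dial), setting in profile.items():
    print(f"{stage} / {dial}: {setting['sector']} + {setting['culture']}")
```

Keeping the settings as plain strings keyed by stage and dial mirrors the table directly, so a reviewer can check the encoding against the table row by row.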

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Merchán-Cruz, E.A.; Gabelaia, I.; Savrasovs, M.; Hansen, M.F.; Soe, S.; Rodriguez-Cañizo, R.G.; Aragón-Camarasa, G. Trust by Design: An Ethical Framework for Collaborative Intelligence Systems in Industry 5.0. Electronics 2025, 14, 1952. https://doi.org/10.3390/electronics14101952
