Abstract

The neural moving horizon estimator (NMHE) is a relatively new and powerful state estimator that combines the strengths of neural networks (NNs) and model-based state estimation techniques. Various approaches exist for constructing NMHEs, each with its unique advantages and limitations. However, a comprehensive literature review that consolidates existing knowledge, outlines design guidelines, and highlights future research directions is currently lacking. To address this gap, this systematic review screened 1164 records and ultimately included 22 primary studies, following the preferred reporting items for systematic reviews and meta-analyses (PRISMA) protocol. This paper (1) explains the fundamental principles of NMHEs, (2) explores three major NMHE architectures, (3) analyzes the types of NNs used, such as multi-layer perceptrons (MLPs), long short-term memory networks (LSTMs), radial basis function networks (RBFs), and fuzzy neural networks, (4) reviews real-time implementability—including reported execution times ranging from 1.6 μs to 11.28 s on different computing hardware—and (5) identifies common limitations and future research directions. The findings show that NMHEs can be realized in three principal ways: model learning, cost function learning, and approximating the real-time optimization in moving horizon estimation. Cost function learning offers flexibility in capturing task-specific estimation goals, while model learning and optimization approximation approaches tend to improve estimation accuracy and computational speed, respectively.

1. Introduction

State estimation is a fundamental problem in control engineering, crucial for ensuring the monitoring and control of dynamic systems. Achieving accurate and robust state estimation can be a formidable challenge due to sensor noise, incomplete or indirect measurements, nonlinearities, model uncertainties, and external disturbances [1].

Conventional state estimators, such as Kalman filters [2], particle filters [3], and moving horizon estimators (MHEs) [4], rely on a model of the system; hence the term model-based. These methods, while grounded in strong theoretical foundations and capable of providing precise estimates when accurate models are available, often struggle with handling model inaccuracies and computational complexity, particularly in nonlinear and time-varying systems. In contrast, NN-based state estimation techniques offer greater flexibility and adaptability by learning directly from data. These methods can effectively manage complex and nonlinear systems without requiring explicit mathematical models. Still, their performance heavily depends on the quality and quantity of the training data, and they often lack transparency.

Recognizing the limitations of both model-based and NN-based approaches, there has been growing interest in hybrid methods that aim to combine the strengths of both [5]. By integrating the predictive accuracy and theoretical robustness of model-based techniques with the flexibility and adaptability of NN-based methods, hybrid approaches can provide more robust and accurate state estimation solutions, addressing many of the challenges inherent in traditional state estimation methods.

While several prior reviews have addressed the integration of NNs with classical estimators such as Kalman filters [6,7], these works do not focus on moving horizon estimation (MHE). MHE represents a fundamentally different class of estimators, relying on online constrained optimization over a finite window [8]. This distinction enables MHE to better handle constraints, model nonlinearity, and time-varying behavior, making its integration with NNs uniquely challenging and worthy of a dedicated review.

MHE is closely related to model predictive control (MPC), where future system behavior is predicted based on the current state estimate and a control sequence is optimized while accounting for system constraints [9]. Although MHE may not be as widely known as MPC, its similarities to MPC offer certain advantages for state estimation.

Both MHE and MPC share a core reliance on real-time optimization to make estimates or control decisions based on models, measurements, and constraints over a moving window in time, enabling them to effectively handle nonlinear dynamics, disturbances, and system limitations. The roots of this paradigm trace back to pioneering work in optimal control and estimation, including the development of the linear quadratic regulator [10] and Kalman filter [11], which laid the foundation for modern optimization-based techniques. Over time, advances in numerical solvers and computing power have propelled methods like MHE [12] and MPC [13] from theoretical constructs to practical tools used in safety-critical and high-performance applications.

Building on this optimization-based foundation, MHE has demonstrated remarkable effectiveness in handling nonlinear and time-varying dynamics, especially in the presence of input and state constraints, measurement noise, and sensor faults [14,15]. Its ability to incorporate system models and constraints directly into the estimation process makes it particularly suited for applications where reliability and performance are critical. Consequently, MHE has been successfully applied in domains such as process control [16], robotics [17], self-driving vehicles [18], and aerospace systems [19], showcasing its versatility and impact across diverse, high-demand environments.

However, MHE’s performance heavily relies on the accuracy of the system model [20]. Integrating NNs with MHE can help mitigate this issue. NNs learn system dynamics from data, reducing dependence on precise mathematical models and improving robustness to model inaccuracies [21]. They can also assist in the adaptive tuning of MHE parameters, optimizing performance over time. By leveraging the pattern recognition and approximation capabilities of NNs, the integration can lead to more accurate and reliable state estimation, addressing many of the inherent limitations of classical MHE [22].

There have been different approaches to integrating NNs with MHE, each presenting certain advantages. However, a comprehensive resource discussing the current neural MHE (NMHE) designs is lacking. This motivated us to systematically review the existing literature, providing readers with a thorough grasp of the capabilities, constraints, and possible applications of NMHE. It is worth noting that other machine learning algorithms, such as decision trees and Gaussian process regression, have also been combined with MHE [23,24]. However, it is the NNs that are the most commonly used learning models with the most advantages in the context of MHE, and our focus is specifically on them. That said, in this systematic review paper, we aim to answer the following questions:

- What are the different approaches to designing NMHEs?

- What are the different structures of NNs used in NMHEs?

- How do different techniques compare in terms of state estimation accuracy and computational efficiency?

The answers to the above questions clarify the pros and cons of existing approaches, generate recommendations for the design and implementation of NMHEs for a given application, and identify the existing gaps and future research directions.

It should be noted that this systematic review focuses specifically on how NNs are incorporated into MHE formulations. A detailed discussion of NN training procedures and common challenges—such as overfitting or training instability—is beyond the scope of this review. These topics are extensively covered in the existing literature [25,26,27,28,29] and are generally applicable to training NNs in the context of NMHE.

2. Overview of NMHE

Before we start with the systematic review, we present an overview of MHE and its relation to NN methods. This clarifies the terminology and concepts, setting the stage for our discussions in the subsequent sections.

2.1. MHE Formulation

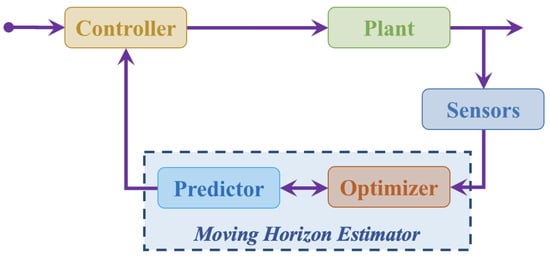

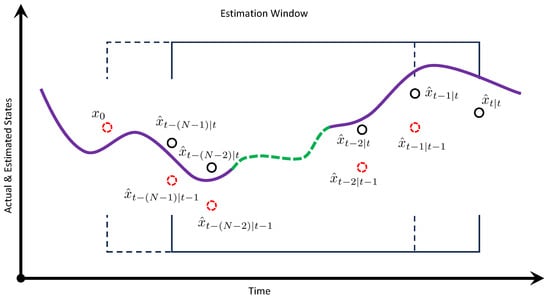

Figure 1 displays a basic architecture of an MHE implemented in a closed-loop control system. The main components of an MHE are a predictor and an optimizer. The predictor forecasts future states of the system based on the current state estimate and control input. The optimizer refines the state estimates by solving a constrained optimization problem [4], typically using real-time solvers such as sequential quadratic programming (SQP) available in open-source toolkits like ACADOS [30] or potentially through emerging machine learning-based approaches [31,32]. Both the predictor and the optimizer use a sequence of previous measurements within a moving window. Figure 2 illustrates how the window shifts over time, incorporating past measurements and predictions to update and refine state estimations iteratively. This iteration enables the MHE algorithm to continuously adjust its predictions based on new information, resulting in improved accuracy and reliability in estimating the underlying states of the system.

Figure 1.

Block diagram of MHE within closed-loop control system.

Figure 2.

Illustration of moving horizon in MHE.
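The window shift illustrated in Figure 2 can be sketched with a fixed-length buffer. The following is a minimal Python sketch, assuming a hypothetical `HorizonBuffer` helper and an arbitrary horizon length; it only shows how the oldest sample drops out when a new one arrives and is not tied to any particular MHE toolkit.

```python
from collections import deque


class HorizonBuffer:
    """Fixed-length buffer of the most recent (y, u) pairs (hypothetical helper)."""

    def __init__(self, horizon):
        # N + 1 samples cover the time steps k-N, ..., k
        self.samples = deque(maxlen=horizon + 1)

    def push(self, y, u):
        # Appending to a full deque discards the oldest sample,
        # which is the "shift" of the moving horizon.
        self.samples.append((y, u))

    def is_full(self):
        return len(self.samples) == self.samples.maxlen

    def measurements(self):
        return [y for y, _ in self.samples]


buffer = HorizonBuffer(horizon=3)
for k in range(6):
    buffer.push(y=float(k), u=0.0)

window = buffer.measurements()  # measurements currently inside the horizon
```

With a horizon of 3, the buffer keeps four samples, so after six pushes only the last four measurements remain available to the predictor and the optimizer.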

To establish the mathematical formulation of an MHE, consider a discrete-time nonlinear system described by the following state-space equations:

$$
\begin{aligned}
x_{k+1} &= f(x_k, u_k) + w_k, \\
y_k &= h(x_k) + v_k,
\end{aligned}
\tag{1}
$$

where $x$ is the state vector, $u$ is the control input, $y$ is the measurement vector, $w$ is the process noise, $v$ is the measurement noise, $f$ is the state transition function, and $h$ is the measurement function. The MHE aims to estimate the state sequence $\{x_{k-N}, \ldots, x_k\}$ over a moving horizon of length $N$, given the measurements $\{y_{k-N}, \ldots, y_k\}$ and control inputs $\{u_{k-N}, \ldots, u_{k-1}\}$.

According to seminal studies [12,33], a typical cost function used in the optimizer takes the following form:

$$
J = \left\| x_{k-N} - \hat{x}_{k-N} \right\|_P^2 + \sum_{i=k-N}^{k} \left\| y_i - \hat{y}_i \right\|_R^2 + \sum_{i=k-N}^{k-1} \left\| w_i \right\|_Q^2,
\tag{2}
$$

where $P$, $R$, and $Q$ are weighting matrices, $x_{k-N}$ is the initial state at the beginning of the estimation horizon, $\hat{x}_{k-N}$ is the estimated initial state, $y_i$ is the measurement at time step $i$, $\hat{y}_i$ is the estimated output, and $w_i$ represents the process noise at time step $i$.

Let us explore the terms within (2) in detail. The term $\| x_{k-N} - \hat{x}_{k-N} \|_P^2$ represents the arrival cost, while the two others constitute the running cost. The arrival cost quantifies the penalty for the difference between the actual state $x_{k-N}$ at the start of the estimation horizon and the estimated initial state $\hat{x}_{k-N}$. This incorporates a summary of historical running cost before the current estimation horizon.

With respect to the running cost terms, $\| y_i - \hat{y}_i \|_R^2$ penalizes the difference between the actual measurements $y_i$ and the predicted outputs $\hat{y}_i$ based on the estimated states $\hat{x}_i$. By having this term in $J$, the optimizer drives the estimated states to match the observed outputs as closely as possible.

Finally, the term $\| w_i \|_Q^2$ penalizes the magnitude of the process noise $w_i$, which represents the discrepancy between the predicted state transition and the actual system dynamics. It promotes smoother state trajectories by discouraging large deviations attributed to process noise, important in handling uncertainties in the system model.

Note that if parameter estimation is desired, one can add to the cost function the weighted squared norms of terms involving the parameter to be estimated.

With the cost function established, the MHE problem formulation is

$$
\min_{\hat{x}_{k-N},\; \{w_i\}_{i=k-N}^{k-1}} \; J
\tag{3}
$$

subject to

$$
\begin{aligned}
\hat{x}_{i+1} &= f(\hat{x}_i, u_i) + w_i, & i &= k-N, \ldots, k-1, \\
\hat{y}_i &= h(\hat{x}_i), & i &= k-N, \ldots, k, \\
\hat{x}_i &\in \mathbb{X}, \quad w_i \in \mathbb{W},
\end{aligned}
$$

where $\mathbb{X}$ and $\mathbb{W}$ denote the admissible sets of states and process noise, respectively.
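As a minimal sketch of how the cost in (2) is evaluated along the dynamics constraint in (3), the Python snippet below treats a scalar system. The function name `mhe_cost`, the scalar weights, the toy system, and the use of a reference value `x0_ref` in the arrival cost are illustrative assumptions and are not taken from any of the reviewed studies.

```python
def mhe_cost(x0, w, y, u, x0_ref, P, R, Q, f, h):
    """Evaluate the MHE cost for a scalar system (illustrative sketch).

    x0     : candidate state at the start of the horizon
    w      : candidate process-noise sequence (length N)
    y, u   : measurements (length N + 1) and control inputs (length N)
    x0_ref : reference initial state used in the arrival cost (assumed given)
    P, R, Q: scalar weights, assumed positive
    f, h   : state-transition and measurement functions
    """
    cost = P * (x0 - x0_ref) ** 2        # arrival cost
    x = x0
    for i in range(len(w)):
        cost += R * (y[i] - h(x)) ** 2   # output mismatch at step i
        cost += Q * w[i] ** 2            # process-noise penalty
        x = f(x, u[i]) + w[i]            # propagate through the dynamics
    cost += R * (y[-1] - h(x)) ** 2      # output mismatch at the final step
    return cost


# Hypothetical toy system: x_{k+1} = 0.9 x_k + u_k, y_k = x_k
def f(x, u):
    return 0.9 * x + u


def h(x):
    return x


u = [1.0, 1.0]
y = [1.0, 1.9, 2.71]  # noise-free outputs generated from an initial state of 1.0

exact = mhe_cost(1.0, [0.0, 0.0], y, u, x0_ref=1.0, P=1.0, R=1.0, Q=1.0, f=f, h=h)
biased = mhe_cost(0.5, [0.0, 0.0], y, u, x0_ref=1.0, P=1.0, R=1.0, Q=1.0, f=f, h=h)
```

The candidate that reproduces the data yields a cost of essentially zero, whereas the biased candidate is penalized by both the arrival cost and the output mismatch. An optimizer would search over `x0` and `w` to minimize this value, subject to any bounds on the states and noise.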

2.2. Integration of NNs with MHE

One limitation of MHE is its reliance on an accurate system model, which can be challenging to obtain or may not adequately capture all system dynamics. Recent advances in NNs have proven them to be powerful tools for learning complex relationships from data. Therefore, integrating NNs with MHE can significantly enhance MHE’s capabilities.

To elaborate, NNs excel at capturing complex nonlinear relationships between input and output variables, making them well suited for modeling dynamic systems within an MHE formulation. Their adaptability allows NNs to continuously learn from data, enabling robust state estimation even in the case of time-varying system dynamics. Additionally, NNs can automatically extract relevant features from raw sensor data. This eliminates the need for manual feature engineering, especially in control systems with multiple sensors where raw sensor data are high-dimensional and complex. Moreover, NNs are well suited for parallel computing, facilitating efficient computation necessary for real-time applications.

However, NNs also present certain limitations in MHE applications. One notable challenge is the requirement for large amounts of training data, which may be difficult to obtain and can lead to reduced performance in cases of limited or noisy data. Furthermore, the inherent complexity of NNs can hinder their interpretability, making it challenging to understand and validate the underlying estimation process. Overfitting is another concern, where the model may memorize noise or spurious patterns in the training data, compromising its generalization performance on unseen data. Additionally, deploying NMHE may require significant computational resources, posing constraints in resource-constrained environments or real-time applications.

Despite these limitations, the advantages of integrating NNs with MHE hold great promise for addressing complex state estimation problems. Understanding and mitigating the limitations can help researchers harness the full potential of NMHE. This motivates a systematic review to study the existing approaches, generate design guidelines for future NMHE implementations, and inform existing gaps and future research directions.

3. Review Methodology

To ensure a comprehensive review of the literature and enhance the rigor of our analysis, we followed the preferred reporting items for systematic reviews and meta-analyses (PRISMA) 2020 guidelines [34]. This section presents the details of our review methodology.

3.1. Search Strategy and Criteria

Our search terms covered two key concepts: NNs and MHE. Table 1 presents the controlled and uncontrolled terms for our search. We searched three databases (IEEE Xplore, Scopus, and Google Scholar) for all studies published or accepted before 30 April 2025.

Table 1.

Search terms used within the databases.

We devised and applied the following inclusion and exclusion criteria to ensure a comprehensive and focused review of the literature on NMHE.

- Inclusion Criteria:

  - Studies that directly involve NNs as a fundamental component of their data-driven MHE methodology.

  - Peer-reviewed journal articles, conference papers, theses, and dissertations to ensure academic rigor.

  - Research conducted within the last 15 years.

  - Publications in the English language.

- Exclusion Criteria:

  - Studies that do not focus on NMHE.

  - Duplicate publications or multiple versions of the same study.

  - Studies with limited relevance to the topic, specifically those that, upon full-text review, (i) did not incorporate an NN as an integral component of the estimation algorithm (e.g., NNs mentioned only in the introduction or future work) and/or (ii) did not employ an MHE formulation (e.g., lacked a finite-horizon optimization structure or moving window).

  - Studies published before the specified time frame to ensure the focus on recent research.

  - Studies that mention NNs but do not use them as a central component of their data-driven MHE approach.

  - Publications in languages other than English, unless they provide English translations or summaries.

Note that the 15-year window (2009–2025) was chosen based on pilot searches. Work prior to 2009 predominantly addressed conventional MHE without incorporating NNs.

Additionally, this review is limited to English-language, peer-reviewed publications. As with any systematic review, this introduces the possibility of language, publication, and database coverage bias, which may have resulted in the omission of relevant studies.

3.2. Study Selection

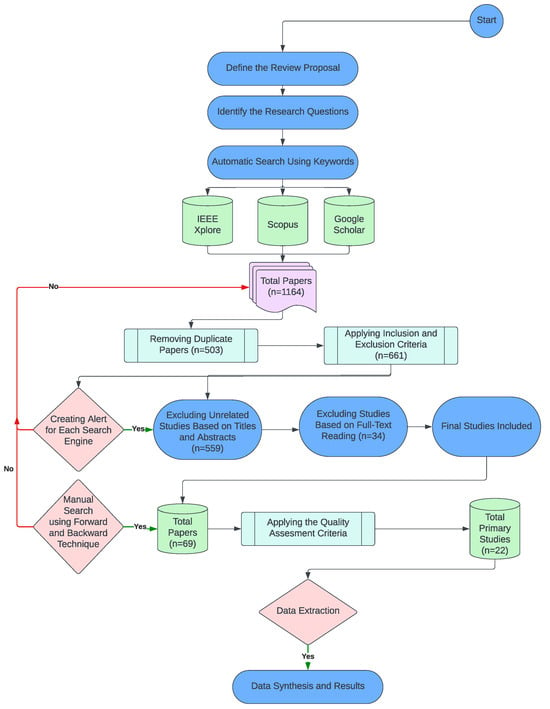

The search from the three databases yielded 1164 studies. We merged the search results, removing 503 duplicates. We screened the studies to ensure relevance to NMHE. This entailed an initial screening where two reviewers independently screened the titles and abstracts of the studies. When conflicts arose, a third reviewer resolved them. We repeated this procedure for the full-text screening, resulting in 69 studies. After quality assessment, 22 studies remained for data extraction. The quality assessment focused on the relevance of the studies. Once the full-text screening was completed, team members who had not participated in either the initial or full-text screening assessed whether the studies addressed our research questions and the objectives of this literature review. Figure 3 details the step-by-step implementation of the search strategy.

Figure 3.

Overview of review methodology.
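The counts reported for the selection process imply a few intermediate figures that are not stated explicitly in the text. The short Python sketch below derives them by simple subtraction; the variable names are illustrative, and the derived values follow only from the four reported numbers.

```python
records_identified = 1164  # total records from the three databases
duplicates_removed = 503   # duplicates removed after merging
after_full_text = 69       # studies remaining after both screening stages
included = 22              # studies remaining after quality assessment

# Records that entered title/abstract screening
records_screened = records_identified - duplicates_removed

# Records excluded across title/abstract and full-text screening combined
excluded_in_screening = records_screened - after_full_text

# Studies excluded at the quality assessment stage
excluded_in_quality = after_full_text - included

print(records_screened, excluded_in_screening, excluded_in_quality)
```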

3.3. Data Extraction

Our systematic literature review used a detailed set of data extraction questions to provide a robust framework for assessing and synthesizing research findings from various studies. These questions covered key topics such as NN structures, algorithm effectiveness, experiment designs, computational efficiency of algorithms, comparative analysis with previous approaches, and conclusions.

Two reviewers independently extracted data from each study, ensuring a thorough and unbiased assessment. When conflicts arose, a third reviewer resolved them to maintain consistency and accuracy. By methodically summarizing data, we highlighted common themes, advantages, and disadvantages of different approaches and other crucial insights. Ultimately, this systematic data extraction process provided a strong foundation for drawing robust conclusions and making evidence-based decisions.

The following sections present the results of this systematic review and the lessons learned from it.

4. Descriptive Statistics

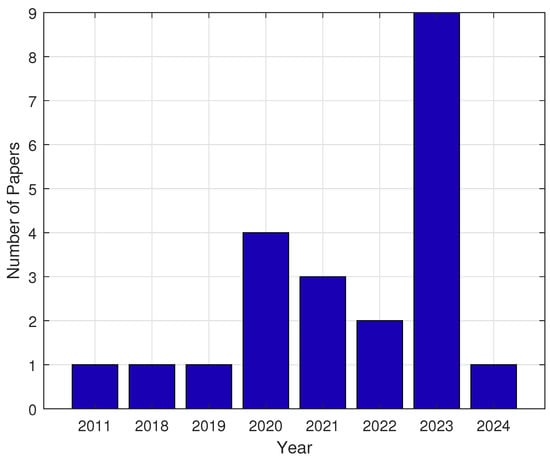

Figure 4 presents the distribution of studies by the year of publication. While the earliest work is from 2011, there is a clear upward trend in recent years, with a peak of nine studies in 2023. Note that there was only one study in 2024 up until 5 March 2024, and more studies will likely appear throughout the remainder of the year. Overall, the increase from earlier years highlights the growing attention to and research efforts in NMHE.

Figure 4.

Distribution of studies by year of publication.

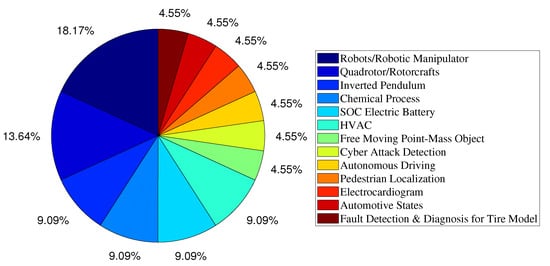

Figure 5 illustrates a pie chart depicting the distribution of studies by their application areas. The chart reveals a broad range of applications, from systems with slower dynamics like heating, ventilation, and air conditioning (HVAC) to faster dynamics like rotorcraft. Notably, about 40% of the studies focus on rotorcraft, inverted pendulums, and robotics, all of which have highly nonlinear dynamics and pose significant state estimation and control challenges. Additionally, many studies involve processes where an accurate system model is difficult to obtain, such as rotorcraft due to the unmodeled aerodynamic effects of rotors and pedestrian localization due to behavioral variability, environmental factors, and limited sensory information. These observations underscore the particular value and effectiveness of NMHE in complex problems.

Figure 5.

Distribution of studies by their application areas.

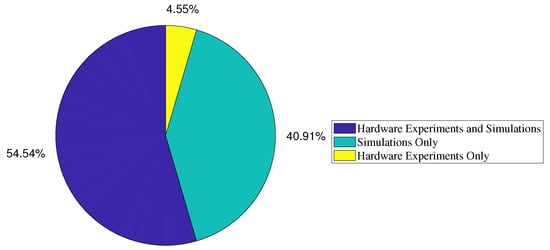

Hardware experimentation with NMHE can be challenging due to the complex structure of NNs and the need for nonlinear optimization solvers. As such, we analyzed the distribution of studies that extended beyond simulation to include hardware experiments. Figure 6 illustrates the results. Notably, 12 of the 22 studies (54.5%) implemented NMHE in real time and conducted hardware experiments. This observation demonstrates that with advancements in software tools and computing hardware, it has become feasible to implement NMHE in real-world scenarios.

Figure 6.

The distribution of studies based on the method of NN-based MHE implementation.

5. Different NMHE Approaches

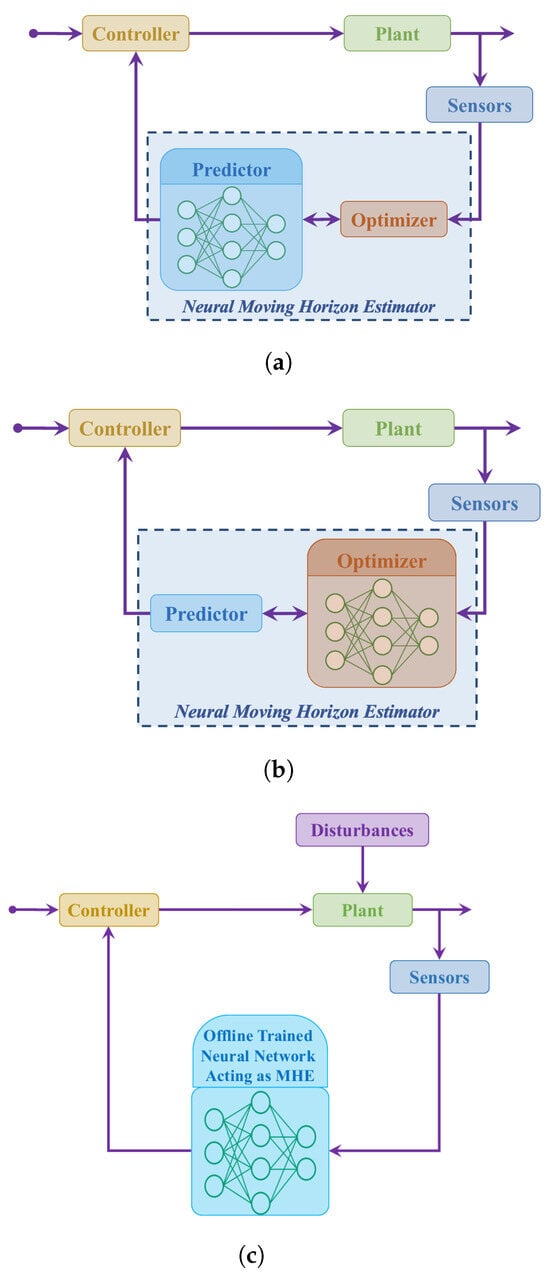

Table A1 provides an overview of all studies included in this systematic review. Based on the methodologies presented in these studies, we categorize existing NMHE work into three groups, as follows:

- The first group adopts the standard MHE formulation (3) but leverages NNs to create a more accurate model of the system, thereby enhancing state estimation accuracy.

- The second group employs NNs to modify the cost function (2), providing auto-tuning capabilities that make the estimator adaptive to varying conditions.

- The third group uses NNs to approximate the standard MHE and implements the NN in place of the MHE for state estimation. This approach eliminates the need to solve the nonlinear constrained optimization problem inherent in MHE, leading to significant speedups, though it results in slightly lower state estimation accuracy since the NN is an approximation of the MHE.

Figure 7 illustrates the architectures of these different approaches to NMHE. The rest of this section provides further details for each category.

Figure 7.

Different NMHE architectures in the existing studies. (a) Category I: using NNs for more accurate models. (b) Category II: using NNs to modify the cost function. (c) Category III: using NNs for approximating a regular MHE.

5.1. Category I: Using NNs for More Accurate Models

This category of studies builds upon the seminal work [21]. This study established theoretical results indicating that if the system model is represented by universal nonlinear approximators such as NNs, the MHE state estimates can converge to the actual state values, under certain conditions. The model is identified offline by training an NN on historical input–output data to approximate a nonlinear autoregressive moving average model with exogenous inputs (NARMAX model). Feedforward NNs such as multi-layer perceptrons (MLPs) [21] and recurrent NNs such as long short-term memory (LSTM) networks [35] are commonly used for such tasks.
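To illustrate how historical input–output data can be arranged for offline model identification, the following Python sketch builds lagged regressors. It is a simplified, NARX-style arrangement: the moving-average noise terms of a full NARMAX model are omitted, and the function name `build_narx_dataset`, the lag orders, and the data values are hypothetical.

```python
def build_narx_dataset(y, u, n_y, n_u):
    """Turn input-output records into (regressor, target) pairs.

    Each regressor stacks the last n_y outputs and the last n_u inputs;
    the target is the next output. The lag orders are user choices.
    """
    start = max(n_y, n_u)
    features, targets = [], []
    for k in range(start, len(y)):
        past_y = [y[k - j] for j in range(1, n_y + 1)]  # y[k-1], ..., y[k-n_y]
        past_u = [u[k - j] for j in range(1, n_u + 1)]  # u[k-1], ..., u[k-n_u]
        features.append(past_y + past_u)
        targets.append(y[k])
    return features, targets


# Hypothetical historical records
y_hist = [0.0, 0.5, 0.9, 1.2, 1.4]
u_hist = [1.0, 1.0, 1.0, 0.5, 0.5]

X, t = build_narx_dataset(y_hist, u_hist, n_y=2, n_u=1)
```

Each row of `X` would then serve as an NN input, with the matching entry of `t` as the training target.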

A large body of literature has embraced the above theoretical findings, developing NMHE approaches for a broad spectrum of applications. Comparative analyses across these studies consistently demonstrate the superior estimation accuracy of NMHE over traditional methods, irrespective of system complexity and computational demands. Notable examples include [35,36,37,38,39,40,41,42].

The improved estimation accuracy provided by NMHE reduces the need for multiple and expensive sensors, enabling the development of more cost-effective and compact systems. This advantage is particularly evident in [43], which employs NMHE for estimating several parameters in brewery wastewater treatment, thereby eliminating the need for expensive sensors to measure each parameter.

Another key advantage of NMHE is the ability to explicitly account for system constraints while using an NN for accurate system model representation. This capability is highlighted in [44], where NMHE is applied for parameter estimation in gray-box system identification. Accounting for the known physical limitations of systems proved highly effective in both offline and online parameter estimation.

NMHE excels in fault estimation and compensation. Concerning actuator faults, the study [45] demonstrates the application of NMHE in estimating the current status of car tires to facilitate fault-tolerant chassis control. Regarding sensor faults, the study [46] explores the use of NMHE in controlling complex process networks where cyberattacks can compromise the integrity of monitoring systems. Due to the vast size and complexity of these networks, such attacks may go undetected, compromising system safety. However, NMHE yields outstanding results in identifying these issues, recovering system states, and ensuring that control systems remain resilient to sensor faults and cyberattacks.

5.2. Category II: Using NNs to Modify the Cost Function

The MHE’s performance depends heavily on the choice of the weighting matrices P, Q, and R in (2). Due to varying operating points and external disturbances, the optimal choice of these matrices is non-stationary, and finding appropriate values requires extensive data, making the MHE calibration process laborious.

Several papers have addressed this challenge. For example, the study [47] replaces the cost function J in (3) with an NN. This NN is trained online using a reinforcement learning algorithm, allowing J to adapt over time to new operating points and external disturbances. The reinforcement learning algorithm used is deterministic policy gradient (DPG), and the reward function used is the negative of the MHE cost function.

Another approach, named differential MHE [48], updates the weighting matrices via gradient descent, with the gradient calculated recursively using a Kalman filter. In [49], differential MHE is extended to NeuroMHE, where an NN represents the weighting matrices. The comparative analysis presented in [49] demonstrates NeuroMHE’s efficient training, fast adaptation, and improved estimation over the state-of-the-art estimators.

NeuroMHE is further refined in [50] with a trust-region policy optimization method replacing gradient descent for tuning the weighting matrices. This method offers linear computational complexity with respect to the MHE horizon and requires minimal data and training time. In a case study on disturbance estimation for a quadrotor, the trust-region NeuroMHE achieved highly efficient training in under 5 minutes using only 100 data points, outperforming its gradient descent counterpart by up to 68.1% in disturbance estimation accuracy while utilizing only 1.4% of the network parameters used by the gradient descent method.
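The idea of letting an NN supply the weighting matrices can be sketched as follows. This minimal Python example assumes a diagonal parameterization with a softplus output so that every weight stays positive; that choice, the layer sizes, the random initial weights, and the names `weight_network` and `make_layer` are illustrative assumptions and do not reproduce the method of [49] or [50].

```python
import math
import random


def softplus(z):
    # Numerically stable log(1 + exp(z)); maps any real number to (0, inf).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def make_layer(n_in, n_out, rng):
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(weights, biases, x):
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, biases)]


def weight_network(features, params):
    """Map features to positive diagonal entries of P, Q, and R (hypothetical layout)."""
    (W1, b1), (W2, b2) = params
    hidden = [math.tanh(z) for z in dense(W1, b1, features)]
    diag = [softplus(z) for z in dense(W2, b2, hidden)]
    # Assumed sizes: 2 states, 2 process-noise channels, 1 measurement
    return {"P": diag[0:2], "Q": diag[2:4], "R": diag[4:5]}


rng = random.Random(0)
params = (make_layer(3, 8, rng), make_layer(8, 5, rng))
weights = weight_network([0.2, -1.0, 0.5], params)
```

In an adaptive scheme, the network parameters would be updated by a training algorithm such as gradient descent or a trust-region method so that the resulting weights improve estimation accuracy.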

5.3. Category III: Using NNs for Approximating Regular MHE

When the system model is nonlinear, MHE-based state estimation requires solving a constrained nonlinear optimization problem in real time, which is challenging for resource-constrained systems. Several studies [51,52,53,54] have shown that an NN can closely resemble the behavior of an MHE and replace it in the control system. In this setup, the NN approximates the MHE, creating a mapping between inputs and estimated states. Because the NN only approximates the MHE, its estimates may be less accurate than those of the MHE itself. However, the parallel architecture of the NN aligns well with the multi-core architecture of modern processors, making it significantly faster than the MHE, which must solve a constrained nonlinear optimization problem at every time step. Consequently, substantial speedups can be achieved at the expense of a slight reduction in state estimation accuracy.
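The approximation idea can be sketched as supervised imitation: an estimator is run offline to label measurement windows, and a fast model is fitted to reproduce those labels. In the Python sketch below, both parts are deliberate simplifications and assumptions: `teacher_estimate` is a stand-in for a full MHE solve (here just the window mean), and the student is a linear model rather than an NN, so the example shows only the training pattern.

```python
import random


def teacher_estimate(window):
    # Stand-in for an MHE solve (assumption for illustration only):
    # the "estimate" is simply the mean of the measurements in the window.
    return sum(window) / len(window)


def train_student(n_inputs, steps=2000, lr=0.1, seed=0):
    """Fit a linear student to imitate the teacher by stochastic gradient descent."""
    rng = random.Random(seed)
    w = [0.0] * n_inputs
    for _ in range(steps):
        window = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        target = teacher_estimate(window)  # label produced offline by the teacher
        pred = sum(wi * xi for wi, xi in zip(w, window))
        err = pred - target
        # The gradient of 0.5 * err**2 with respect to w_i is err * x_i
        w = [wi - lr * err * xi for wi, xi in zip(w, window)]
    return w


student = train_student(n_inputs=3)

test_window = [0.3, -0.2, 0.8]
approx = sum(wi * xi for wi, xi in zip(student, test_window))
exact = teacher_estimate(test_window)
```

At run time, only the inexpensive forward evaluation of the student is needed, which is the source of the speedup; the price is that the student can be no better than its training data and model capacity allow.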

Interestingly, in a case study on the state of charge (SOC) estimation of lithium-ion batteries [54], such an implementation of NMHE resulted in more accurate state estimation compared to the extended Kalman filter (EKF) while being over 98% faster (0.1145 ms running time vs. 6.9789 ms). This demonstrates the significant potential of this class of NMHE approaches to deliver MHE-like state estimation accuracy with a fraction of the computational load of simple state estimators like EKF.
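The reported figure of over 98% follows directly from the two running times. The short Python check below reproduces the arithmetic; the variable names are illustrative.

```python
t_nmhe_ms = 0.1145  # reported NMHE running time
t_ekf_ms = 6.9789   # reported EKF running time

# Relative reduction in running time
reduction = (t_ekf_ms - t_nmhe_ms) / t_ekf_ms

# Equivalent speedup factor
speedup = t_ekf_ms / t_nmhe_ms

print(f"{100 * reduction:.2f}% shorter running time, about {speedup:.0f}x faster")
```

The running time is reduced by roughly 98.4%, which corresponds to a speedup of about 61 times.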

Such improvements in computational time open new doors to achieving MHE-like state estimation accuracy for complex systems that were previously challenging to address with the regular MHE. For example, the study [52] develops an NMHE for an industrial batch polymerization reactor, a highly nonlinear system with 10 state variables, where solving a regular MHE can be difficult. Furthermore, the study [52] capitalizes on the computational efficiency of NNs to implement advanced control techniques like MPC alongside NMHE in a single control loop. Implementing two nonlinear constrained optimization problems in the same control loop in real time may sound improbable or require substantial computational hardware, but with NN-based approximations, this becomes feasible on commodity hardware.

Studies [51,53] explore the implementation of NMHE on field-programmable gate arrays (FPGAs), achieving an impressive 500 kHz frame rate for state estimation of an inverted pendulum while maintaining MHE-like state estimation accuracy. This demonstrates the potential of this class of NMHE approaches to handle high-speed, real-time state estimation tasks effectively, faster and more accurately than common nonlinear state estimators like EKF, as mentioned above.

6. NN Architectures Used in NMHE

Unfortunately, not all studies provide the details of the NNs used in their NMHE developments. However, those that do reveal a variety of architectures, each customized to meet the unique needs of specific applications. This section delves into the details of the structure of NNs used in these studies to gain deeper insights, beneficial for future NMHE designs. We use the same categorization of studies presented in Section 5 here.

6.1. Category I: Using NNs for More Accurate Models

This category of studies commonly uses multi-layer perceptron (MLP) networks to model system dynamics. Examples include [37,40,44,46].

The study [40], focusing on HVAC system state estimation, uses an MLP network with one hidden layer and three neurons. The study [44] employs an MLP network with one hidden layer and six neurons for gray-box system identification of a two-degrees-of-freedom (2-DOF) robotic manipulator. The study [37] investigates three case studies: (1) state estimation for a cartpole system using partial measurements, (2) localization for a ground robot, and (3) state estimation for a quadrotor. For the case study (1), an MLP network with two layers and eight hidden neurons is trained over 250 epochs. For the case study (2), the same architecture is used but trained over 10,000 epochs. For the case study (3), an MLP network with two layers and 16 hidden neurons is trained over 150 epochs. The study [46] employs an MLP with two hidden layers for state estimation of complex network processes in the presence of cyberattacks. The first hidden layer consists of 30 nodes, while the second has 24 nodes. The input layer comprises tracking errors, while the output layer provides information on the presence or type of cyberattack.
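To give a sense of how small these networks are, the following Python sketch implements a forward pass through an MLP with one hidden layer of three neurons, the hidden-layer size reported in [40]. The tanh activation, the input and output dimensions, and all weight values are hypothetical choices for illustration only.

```python
import math


def mlp_forward(x, W1, b1, W2, b2):
    """One-hidden-layer MLP with tanh hidden units and a linear output layer."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return [sum(w * hj for w, hj in zip(row, hidden)) + b for row, b in zip(W2, b2)]


def count_parameters(layer_sizes):
    # Each layer contributes n_in * n_out weights plus n_out biases.
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical model: 2 inputs (state, control) -> 3 hidden neurons -> 1 output (next state)
W1 = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
b1 = [0.0, 0.1, -0.1]
W2 = [[1.0, -0.5, 0.25]]
b2 = [0.05]

x_next = mlp_forward([0.2, 1.0], W1, b1, W2, b2)
n_params = count_parameters([2, 3, 1])
```

Under these assumed dimensions, the network has only 13 trainable parameters, so evaluating it inside the MHE predictor adds little computational cost.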

In addition to MLP networks, long short-term memory (LSTM) and fuzzy networks have appeared in a few studies. The study [35], focusing on reliable state estimation of sideslip and attitude angles of cars, employs an LSTM network to model the errors of the inertial navigation system. This information is then used in an MHE to estimate the car sideslip angle. The network contains 20 hidden layers and 150 neurons. The study [39], focusing on parameter identification and SOC estimation of lithium-ion batteries, utilizes an autoregressive LSTM network with one hidden layer and 128 neurons. The study [38], focusing on car velocity and chassis control, implements an adaptive neuro-fuzzy inference system (ANFIS). The rule base of the network comprises 32 rules. Each input to the network is fuzzified using generalized Gaussian bell membership functions. The study [36], focusing on external force/torque estimation in bilateral telerobotics, uses a type-2 fuzzy neural network (T2FNN). For a case study of 3-DOF master and 6-DOF slave manipulators, a T2FNN with 15 rules proved effective.

Overall, we did not find clear justifications in the existing studies for why one type of network is favored over another. However, we have made the following observations:

- MLP networks have simple yet effective structures. Among the studies that have employed MLP networks, one hidden layer seems to be sufficient for most cases. The number of neurons depends on the system’s complexity, ranging from 3 neurons for a simple system like HVAC to 30 neurons for a complex network process. Additional estimation tasks, such as identifying the type of cyberattacks, appear to require more hidden layers.

- LSTM networks, capable of capturing temporal dependencies, excel in dynamical system modeling. However, there were only two studies that employed LSTM, featuring a much more complex structure compared to the aforementioned MLP networks. Unfortunately, there is no direct comparison of LSTM and MLP networks in the context of NMHE, making it difficult to draw definitive conclusions. However, given the known advantages of LSTM networks and the outstanding state estimation results obtained with them in [35], LSTMs are worth considering, especially for high-performance applications.

- The results obtained with fuzzy networks are noteworthy. Compared to MLP networks, the design of fuzzy networks is a more involved process as they require a degree of domain knowledge to set up the membership functions and the rule base. However, this initial setup has the potential to reduce the amount of time required for training. Considering that MLP training reached up to 10,000 epochs in the aforementioned studies, the potential of fuzzy networks to reduce training times makes them an attractive option.

6.2. Category II: Using NNs to Modify the Cost Function

In [47], an NN is used to approximate the cost function. The convexity of the cost function is often desired because it guarantees that any local minimum is also a global minimum, simplifying the optimization process. It also facilitates the use of robust and efficient convex optimization solvers. Consequently, the study [47] employs an input convex neural network (ICNN) to approximate the cost function. This ICNN consists of two hidden layers, each containing 26 neurons. This configuration is applied for state estimation in a building heat control system.
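The convexity argument can be illustrated with a small sketch of an input convex network. It assumes the common ICNN construction (non-negative weights on the hidden-to-hidden path, unconstrained pass-through weights from the input, and convex non-decreasing ReLU activations); the weights are random placeholders, not the trained network of [47], and only the layer sizes (two hidden layers of 26 neurons) follow the text.

```python
import random


def relu(v):
    return [max(0.0, t) for t in v]


def affine(W, x, b):
    """Return W @ x + b for a list-of-rows matrix W."""
    return [sum(w * t for w, t in zip(row, x)) + bi for row, bi in zip(W, b)]


def rand_matrix(rows, cols, rng, nonneg=False):
    """Random weights; nonneg=True enforces the ICNN sign constraint."""
    lo = 0.0 if nonneg else -1.0
    return [[rng.uniform(lo, 1.0) for _ in range(cols)] for _ in range(rows)]


class ICNN:
    """Scalar-output network that is convex in its input x."""

    def __init__(self, n_in, n_hidden=26, seed=0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        self.Wx0 = rand_matrix(n_hidden, n_in, rng)
        self.b0 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
        self.Wz1 = rand_matrix(n_hidden, n_hidden, rng, nonneg=True)
        self.Wx1 = rand_matrix(n_hidden, n_in, rng)
        self.b1 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
        self.Wz2 = rand_matrix(1, n_hidden, rng, nonneg=True)
        self.Wx2 = rand_matrix(1, n_in, rng)
        self.b2 = [rng.uniform(-1.0, 1.0)]

    def __call__(self, x):
        z1 = relu(affine(self.Wx0, x, self.b0))
        # Non-negative combination of convex z1 plus an affine term in x.
        skip1 = affine(self.Wx1, x, [0.0] * self.n_hidden)
        z2 = relu([a + s for a, s in zip(affine(self.Wz1, z1, self.b1), skip1)])
        skip2 = affine(self.Wx2, x, [0.0])[0]
        return affine(self.Wz2, z2, self.b2)[0] + skip2


def midpoint_convex(f, n_in, trials=200, seed=1, tol=1e-6):
    """Numerically check f((x + y) / 2) <= (f(x) + f(y)) / 2 on random pairs."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-3.0, 3.0) for _ in range(n_in)]
        y = [rng.uniform(-3.0, 3.0) for _ in range(n_in)]
        mid = [(p + q) / 2.0 for p, q in zip(x, y)]
        if f(mid) > 0.5 * (f(x) + f(y)) + tol:
            return False
    return True


cost = ICNN(n_in=4)
print("midpoint convexity holds:", midpoint_convex(cost, n_in=4))
```

The sign constraint is what buys convexity: dropping `nonneg=True` would restore a generic MLP, for which the midpoint check is no longer guaranteed to pass.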

In contrast to [47], the remaining studies in this category [48,49,50] do not use NNs to approximate the entire cost function; instead, they use NNs to generate the weighting matrices of the cost function. Consequently, the convexity of NNs is no longer required, allowing for the use of conventional architectures such as MLP networks. For instance, in NeuroMHE [49], the MLP network consists of two hidden layers, each with 50 neurons. In [50], the MLP network is composed of two hidden layers, each with eight neurons. Although this NN is simpler than the one in [48], it performs better owing to a superior training algorithm.

The key observations in this category include the following:

- If an NN is used to approximate the entire cost function, the use of networks that guarantee convexity, e.g., ICNN, is desired.

- In the NeuroMHE setup, where an NN is used to approximate the weighting matrices, MLP networks are effective; however, the use of a trust-region optimization algorithm helps simplify the network structure while offering higher state estimation accuracy.
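As a rough illustration of the second observation, the sketch below maps unconstrained NN outputs to positive weights and uses them in a weighted quadratic cost term. The diagonal parameterization with a softplus map is an assumption made here for simplicity; it is not claimed to be the parameterization used in [48,49,50], and all function names are hypothetical.

```python
import math


def softplus(t):
    """Numerically stable log(1 + exp(t)); always positive."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


def weights_from_nn_output(raw, eps=1e-6):
    """Map raw NN outputs to strictly positive diagonal weights (assumed form)."""
    return [softplus(r) + eps for r in raw]


def weighted_sq_norm(residual, diag_w):
    """Quadratic cost term r^T W r for a diagonal weighting matrix W."""
    return sum(w * r * r for w, r in zip(diag_w, residual))


# Hypothetical raw outputs of the weighting network for a 3-dimensional residual.
raw_output = [-2.0, 0.0, 3.0]
diag_w = weights_from_nn_output(raw_output)
print("weights:", [round(w, 4) for w in diag_w])
print("cost term:", round(weighted_sq_norm([0.1, -0.2, 0.05], diag_w), 6))
```

Because the positivity is enforced by the output map rather than by the network structure, the network itself can be an ordinary MLP, which is consistent with the observation that convexity of the NN is not needed in this setting.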

6.3. Category III: Using NNs for Approximating Regular MHE

As explained in Section 5, this category of studies is distinct in the sense that it focuses on approximating the regular MHE to achieve faster running times, rather than improving the estimation accuracy.

The study [54] develops a regular MHE for the state-of-charge estimation of lithium-ion batteries, subsequently approximated using an MLP network with 20 neurons and 5 hidden layers. The NN closely mimics the MHE, achieving a coefficient of determination near 99%. Remarkably, the network operates 20 times faster than the regular MHE. The reported execution time for the network is 0.1145 ms, which is even faster than the reported EKF execution time of 6.9789 ms. This substantial speedup, coupled with only a 1% reduction in accuracy, underscores the potential of NN approximations of the MHE, particularly in resource-constrained environments.
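The timing figures above can be put side by side with a few lines of arithmetic. The MHE time below is not reported in the text; it is only what the stated factor of 20 would imply if taken at face value.

```python
nn_ms = 0.1145        # reported NN execution time
ekf_ms = 6.9789       # reported EKF execution time
mhe_speedup = 20      # reported NN speedup over the regular MHE

implied_mhe_ms = mhe_speedup * nn_ms   # inferred, not reported
ekf_ratio = ekf_ms / nn_ms             # how much slower the EKF is than the NN

print(f"implied MHE time: {implied_mhe_ms:.2f} ms")
print(f"EKF / NN time ratio: {ekf_ratio:.1f}")
```

Under these numbers the NN approximation is roughly 61 times faster than the reported EKF implementation.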

The approximating network enlarges as the complexity of the system increases. The study [52] explores a regular MHE for an industrial batch polymerization reactor, a significantly more complex system compared to the aforementioned lithium-ion battery case study. The study uses an MLP network with 109 inputs, 80 neurons per hidden layer, 6 hidden layers, and 9 outputs to approximate the MHE. The 109 inputs correspond to all past measurements required for MHE calculations, resulting in a highly complex network. As mentioned in Section 5, this study also investigates the integration of NMHE with MPC, approximating MPC with another NN. The NN mimicking MPC has 9 inputs, 25 neurons per hidden layer, 6 hidden layers, and 93 outputs, revealing that approximating the MHE necessitates much larger networks than MPC, likely due to the greater number of inputs needed for meaningful state estimation. Further, this study trains an NN to approximate the control system that includes both MHE and MPC, employing an MLP with 109 inputs, 120 neurons per hidden layer, 8 hidden layers, and 12 outputs.
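The size difference between these networks is easier to appreciate as trainable-parameter counts. The sketch below assumes plain fully connected layers with one bias per neuron, which the text does not state explicitly, so the totals should be read as estimates.

```python
def mlp_param_count(n_in, hidden_width, n_hidden_layers, n_out):
    """Weights plus biases of a fully connected MLP (assumed architecture)."""
    sizes = [n_in] + [hidden_width] * n_hidden_layers + [n_out]
    return sum((fan_in + 1) * fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


mhe_net = mlp_param_count(n_in=109, hidden_width=80, n_hidden_layers=6, n_out=9)
mpc_net = mlp_param_count(n_in=9, hidden_width=25, n_hidden_layers=6, n_out=93)
joint_net = mlp_param_count(n_in=109, hidden_width=120, n_hidden_layers=8, n_out=12)

print("MHE approximator:", mhe_net)        # 41929
print("MPC approximator:", mpc_net)        # 5918
print("MHE + MPC approximator:", joint_net)  # 116292
```

Under this assumption, the MHE approximator has about seven times as many parameters as the MPC approximator, and the joint network is nearly three times larger again.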

The studies [51,53] present a fast NMHE specifically designed for an inverted pendulum system. Initially, an MHE is crafted for the system, followed by the application of radial basis function (RBF) networks to approximate the developed MHE. The studies experiment with networks featuring a single hidden layer and a varying number of neurons (ranging from 10 to 16). They conclude that an RBF network with 14 neurons is sufficient to appropriately approximate the regular MHE. Implementing this network on FPGAs achieves an impressive 500 kHz frame rate. Comparative analysis with another embedded computing platform, i.e., the Raspberry Pi 3, reveals that the FPGA implementation is 31 times faster.
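A single-hidden-layer RBF network is small enough to write out in full. The sketch below assumes Gaussian basis functions and uses random placeholder centers, widths, and weights; the input dimension and all parameter values are hypothetical, and only the count of 14 hidden units follows the text. It also converts the reported 500 kHz rate into a time budget per estimate.

```python
import math
import random


def rbf_forward(x, centers, widths, weights, bias=0.0):
    """Output of a Gaussian RBF network: bias + sum_i w_i * exp(-||x - c_i||^2 / (2 s_i^2))."""
    out = bias
    for c, s, w in zip(centers, widths, weights):
        sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        out += w * math.exp(-sq_dist / (2.0 * s * s))
    return out


rng = random.Random(0)
n_units, n_in = 14, 4  # 14 hidden units as in the text; n_in is hypothetical
centers = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_units)]
widths = [rng.uniform(0.5, 1.5) for _ in range(n_units)]
weights = [rng.uniform(-1.0, 1.0) for _ in range(n_units)]

print("network output:", rbf_forward([0.1, -0.2, 0.3, 0.0], centers, widths, weights))

rate_hz = 500e3
period_us = 1e6 / rate_hz  # time available per estimate at 500 kHz
print(f"time per estimate at 500 kHz: {period_us:.1f} us")
```

Each estimate costs only 14 exponentials and a handful of multiply-accumulate operations, which helps explain why such a network maps well onto FPGA hardware.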

The main takeaways from this category are as follows:

- The MLP networks used in this category are considerably larger than the ones used in the previous categories, as the NN represents the entire state estimator, including the system model, and the optimizer. In the previous categories, the NN represented only the system model or a portion of the optimizer.

- The RBF network developed in [51,53] appears to be much smaller than the MLP networks used in [52,54]. While these two types of networks have not been compared directly in one case study, the simplicity of the RBF network makes it worth considering for future applications.

7. NMHE Running Time

Apart from studies that utilize NNs to approximate regular MHEs for faster implementations, most NMHE approaches remain computationally intensive. This is due to the simultaneous need to run NN models and nonlinear constrained optimization algorithms. Unfortunately, not all studies provide details of their NMHE running times and computing hardware. For those that do, Table 2 compiles the running times of NMHE implementations, along with the computing hardware and the length of the horizon, both of which significantly impact the computational load of the estimator.
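The source of this computational load can be seen even in a toy problem. The sketch below is a deliberately simplified scalar MHE: a fixed nonlinear function stands in for a trained NN predictor, there is no process noise or constraint, and a golden-section search replaces the nonlinear programming solver. All names and values are hypothetical. It counts how often the model is evaluated to produce a single estimate.

```python
import math
import random


def learned_model(x):
    """Stand-in for a trained NN one-step predictor (hypothetical)."""
    return 0.9 * x + 0.1 * math.tanh(x)


def simulate(x0, steps):
    """Roll the model forward to obtain `steps` states starting at x0."""
    xs = [x0]
    for _ in range(steps - 1):
        xs.append(learned_model(xs[-1]))
    return xs


def mhe_cost(x0, measurements, prior, prior_weight):
    """Arrival-cost term plus squared output residuals over the horizon."""
    predicted = simulate(x0, len(measurements))
    fit = sum((y - x) ** 2 for y, x in zip(measurements, predicted))
    return prior_weight * (x0 - prior) ** 2 + fit


def golden_section(cost, lo, hi, tol=1e-6):
    """Derivative-free 1-D minimization; returns (minimizer, cost evaluations)."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    evaluations = 0
    while hi - lo > tol:
        c = hi - inv_phi * (hi - lo)
        d = lo + inv_phi * (hi - lo)
        evaluations += 2
        if cost(c) < cost(d):
            hi = d
        else:
            lo = c
    return 0.5 * (lo + hi), evaluations


rng = random.Random(0)
horizon = 10
true_x0 = 1.5
# Measure the state directly with small bounded noise.
measurements = [x + rng.uniform(-0.02, 0.02) for x in simulate(true_x0, horizon)]

estimate, n_evals = golden_section(
    lambda x0: mhe_cost(x0, measurements, prior=1.4, prior_weight=0.1), -5.0, 5.0
)
model_calls = n_evals * (horizon - 1)
print(f"estimate of x0: {estimate:.3f} (true value {true_x0})")
print(f"cost evaluations: {n_evals}, model evaluations: {model_calls}")
```

Even this scalar case needs hundreds of model evaluations per estimate; with a multi-dimensional state, a real NN model, and constraints, the count grows accordingly, which is why the horizon length and the hardware both matter.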

Table 2.

NMHE running times in hardware experiments and relevant factors.

It is evident that, for the majority of studies, the NMHE running time is only a few milliseconds. However, this performance is typically achieved on general-purpose processors. While this may not pose an issue for applications like process control, where powerful computers may be available, it is impractical for mobile applications, especially in quadrotors. In such cases, embedded processors are necessary, and it remains unclear how fast the NMHE will perform on these platforms.

With recent advances in parallel computing and the use of fast optimization solvers like ACADOS [30], the real-time application of NMHE might be feasible. This is a topic that warrants further exploration in the near future.

One definitive conclusion regarding hardware implementations is that NN-based approximation techniques significantly speed up state estimations. They offer much more efficient computations compared to simple algorithms like extended Kalman filters while also bringing the benefits of MHEs to state estimation.

8. Future Directions

NMHE is still in its infancy and requires extensive research. This section presents potential future directions to advance NMHE.

One important direction involves training NN models. A critical challenge in leveraging NNs for NMHE is generating high-quality datasets. An emerging field in system modeling is physics-informed NNs (PINNs) [55], which can incorporate physical laws and constraints into the training process. This approach leads to more accurate and reliable models while generally requiring less data. Integrating PINNs with MHE holds the potential to reduce the need for large training datasets while simultaneously improving NMHE state estimation accuracy.

As discussed in Section 6, most NNs used in existing studies are MLP networks. However, recurrent NNs (RNNs), including LSTM networks, are known for their ability to detect time dependencies and model dynamical systems effectively. Surprisingly, we found only two studies that utilized RNNs [35,39]. Therefore, future research should further explore the applications of RNNs.

In addition to RNNs, convolutional NNs (CNNs) [56] and transformer networks [57] have also demonstrated exceptional performance in time series prediction and can be adapted for NMHE. Moreover, fuzzy networks and RBF networks have also shown impressive results in the existing studies and should not be excluded from future work.

There has been little work on stochastic formulations for NMHE. Given the presence of process and measurement noise, advanced stochastic optimization techniques, such as Monte Carlo methods and Bayesian approaches, can significantly improve the performance of NMHE in the presence of stochastic uncertainties.

To translate NMHE into practice, more work should focus on hardware implementations. As mentioned in Section 7, it is unclear how NMHE implementations perform on embedded processors. Focusing on efficient implementations of NNs in parallel processors and using numerically efficient solvers will optimize the computational efficiency of NMHE and enable real-world hardware experiments.

9. Conclusions

In this systematic review, we have explored the current state and future directions of NMHE in state estimation. There are three distinct categories of approaches, each offering significant advantages in state estimation accuracy or computational efficiency. We identified several key areas for future research to advance NMHE. Existing studies on NMHE have not fully leveraged the latest advances in NNs. The use of PINNs, RNNs, CNNs, and transformer networks in NMHE is underexplored. The use of such advanced NNs can potentially improve the estimation accuracy and computational efficiency of NMHE. In addition, exploring stochastic formulations can better account for process and measurement noise, enhancing the robustness of state estimation. Moreover, leveraging parallel computing and fast optimization solvers is critical to reducing the computing time of NMHE and implementing it in embedded hardware platforms for real-world applications. By addressing these research directions, NMHE can become a more robust, efficient, and versatile tool for state estimation, expanding its applicability across various industries.

Author Contributions

Conceptualization, S.M. and R.F.; Methodology, S.M.; Validation, S.M., J.C., S.S., B.R., M.I., Z.S., A.Y., H.K.S., R.B., L.E. and R.F.; Formal analysis, S.M., J.C. and R.F.; Investigation, S.M., J.C. and R.F.; Resources, S.M. and R.F.; Writing—original draft preparation, S.M.; Writing—review and editing, S.M. and R.F.; Visualization, S.M.; Supervision, R.F.; Project administration, R.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All inferences and data points are drawn from the published literature cited in the review, and full details are provided in the manuscript and its Appendix A.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Appendix A. Summary of Existing NMHE Studies

Table A1.

A list of NMHE studies included in this systematic review.

| Reference | Objective | Case Study |
|---|---|---|
| [21] | To use NNs as the predictor in MHE formulation, leveraging NNs for better state estimation accuracy | Free moving point-mass object |
| [36] | To estimate external force/torque information and disturbance rejection using NMHE | Bilateral teleoperation of robotic manipulators |
| [37] | To leverage NMHE for enhanced state estimation in different applications | State estimation for an inverted pendulum using partial measurements, localization for a ground robot, and state estimation for a quadrotor |
| [35] | To enhance the accuracy of car sideslip angle estimation using NMHE | Car chassis control |
| [38] | To leverage NMHE for high-performance state estimation | Autonomous racing of cars |
| [39] | Identify battery parameters and estimate SOC with high precision | Lithium-ion batteries |
| [40] | Estimate HVAC systems’ state variables using only building management system data | Building HVAC control for occupant comfort satisfaction and energy savings |
| [41] | For online estimation of pedestrian position, velocity, and acceleration | Pedestrian localization for car autonomous navigation |
| [42] | To remove motion artifacts from electrocardiogram signals | Electrocardiogram signal processing |
| [43] | Estimate state variables using basic measurements for process monitoring, eliminating the need for expensive sensors | Online monitoring of brewery wastewater anaerobic digester |
| [44] | Reduce modeling errors in gray-box system identification | 2-DOF robotic manipulator |
| [45] | Overcome the low accuracy of traditional tire models, enabling high-fidelity fault identification | Fault detection and diagnosis of car tires |
| [46] | Detect cyberattacks in complex process networks | The integrated process of benzene alkylation with ethylene to produce ethylbenzene |
| [58] | Identify terrain parameters using low-cost sensors | Mobile robot |
| [47] | Enable accurate state estimation in the absence of reliable system models | Climate control of smart building |
| [48] | Develop an auto-tuning state estimator for varying conditions | Quadrotor disturbance estimation |
| [49] | Develop an auto-tuning state estimator for varying conditions | Quadrotor disturbance estimation |
| [50] | Use trust-region policy optimization for an adaptable state estimator | Quadrotor disturbance estimation |
| [51] | Approximate MHE with NNs for fast state estimation | Inverted pendulum |
| [52] | Approximate MHE with NNs for fast state estimation, integrating it with MPC | Industrial batch polymerization reactor |
| [53] | Approximate MHE with NNs for fast state estimation | Inverted pendulum |
| [54] | Approximate MHE with NNs for fast state estimation | SOC estimation of lithium-ion batteries |

References

1. Simon, D. Optimal State Estimation: Kalman, H Infinity, and Nonlinear Approaches; John Wiley & Sons: Hoboken, NJ, USA, 2006.

2. Khodarahmi, M.; Maihami, V. A review on Kalman filter models. Arch. Comput. Methods Eng. 2023, 30, 727–747.

3. Doucet, A.; Johansen, A.M. A tutorial on particle filtering and smoothing: Fifteen years later. Handb. Nonlinear Filter. 2009, 12, 3.

4. Rawlings, J.B.; Allan, D.A. Moving Horizon Estimation. In Encyclopedia of Systems and Control; Springer: Cham, Switzerland, 2021; pp. 1352–1358.

5. Jin, X.B.; Robert Jeremiah, R.J.; Su, T.L.; Bai, Y.T.; Kong, J.L. The New Trend of State Estimation: From Model-Driven to Hybrid-Driven Methods. Sensors 2021, 21, 2085.

6. Feng, S.; Li, X.; Zhang, S.; Jian, Z.; Duan, H.; Wang, Z. A review: State estimation based on hybrid models of Kalman filter and neural network. Syst. Sci. Control Eng. 2023, 11, 2173682.

7. Bai, Y.; Yan, B.; Zhou, C.; Su, T.; Jin, X. State of art on state estimation: Kalman filter driven by machine learning. Annu. Rev. Control 2023, 56, 100909.

8. Rawlings, J. Moving Horizon Estimation; Springer: London, UK, 2013; pp. 1–7.

9. Schwenzer, M.; Ay, M.; Bergs, T.; Abel, D. Review on Model Predictive Control: An Engineering Perspective. Int. J. Adv. Manuf. Technol. 2021, 117, 1327–1349.

10. Anderson, B.D.; Moore, J.B. Optimal Control: Linear Quadratic Methods; Courier Corporation: North Chelmsford, MA, USA, 2007.

11. Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

12. Rao, C.V.; Rawlings, J.B.; Mayne, D.Q. Constrained state estimation for nonlinear discrete-time systems: Stability and moving horizon approximations. IEEE Trans. Autom. Control 2003, 48, 246–258.

13. Richalet, J.; Rault, A.; Testud, J.; Papon, J. Model predictive heuristic control. Autom. (J. IFAC) 1978, 14, 413–428.

14. Fagiano, L.; Novara, C. A combined Moving Horizon and Direct Virtual Sensor approach for constrained nonlinear estimation. Automatica 2013, 49, 193–199.

15. Wan, Y.; Keviczky, T. Real-Time Fault-Tolerant Moving Horizon Air Data Estimation for the Reconfigure Benchmark. IEEE Trans. Control Syst. Technol. 2018, 27, 997–1011.

16. Tenny, M.J.; Rawlings, J.B. Efficient Moving Horizon Estimation and Nonlinear Model Predictive Control. In Proceedings of the 2002 American Control Conference, Anchorage, AK, USA, 8–10 May 2002; Volume 6, pp. 4475–4480.

17. Bae, H.; Oh, J.H. Humanoid State Estimation Using a Moving Horizon Estimator. Adv. Robot. 2017, 31, 695–705.

18. Zanon, M.; Frasch, J.V.; Diehl, M. Nonlinear Moving Horizon Estimation for Combined State and Friction Coefficient Estimation in Autonomous Driving. In Proceedings of the 2013 European Control Conference (ECC), Zurich, Switzerland, 17–19 July 2013; pp. 4130–4135.

19. Vandersteen, J.; Diehl, M.; Aerts, C.; Swevers, J. Spacecraft Attitude Estimation and Sensor Calibration Using Moving Horizon Estimation. J. Guid. Control Dyn. 2013, 36, 734–742.

20. Kraus, T.; Ferreau, H.J.; Kayacan, E.; Ramon, H.; De Baerdemaeker, J.; Diehl, M.; Saeys, W. Moving Horizon Estimation and Nonlinear Model Predictive Control for Autonomous Agricultural Vehicles. Comput. Electron. Agric. 2013, 98, 25–33.

21. Alessandri, A.; Baglietto, M.; Battistelli, G.; Gaggero, M. Moving-horizon state estimation for nonlinear systems using neural networks. IEEE Trans. Neural Netw. 2011, 22, 768–780.

22. Nidhil Wilfred, K.J.; Sreeraj, S.; Vijay, B.; Bagyaveereswaran, V. System identification using artificial neural network. In Proceedings of the 2015 International Conference on Circuits, Power and Computing Technologies [ICCPCT-2015], Nagercoil, India, 19–20 March 2015; pp. 1–4.

23. Ecker, L.; Schöberl, M. Data-driven observer design for an inertia wheel pendulum with static friction. IFAC-PapersOnLine 2022, 55, 193–198.

24. Choo, W.; Kayacan, E. Data-Based MHE for Agile Quadrotor Flight. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 4307–4314.

25. Bishop, C.M.; Nasrabadi, N.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006; Volume 4.

26. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

27. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

28. Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding deep learning requires rethinking generalization. arXiv 2016, arXiv:1611.03530.

29. Bejani, M.M.; Ghatee, M. A systematic review on overfitting control in shallow and deep neural networks. Artif. Intell. Rev. 2021, 54, 6391–6438.

30. Verschueren, R.; Frison, G.; Kouzoupis, D.; Frey, J.; Duijkeren, N.v.; Zanelli, A.; Novoselnik, B.; Albin, T.; Quirynen, R.; Diehl, M. acados—A modular open-source framework for fast embedded optimal control. Math. Program. Comput. 2022, 14, 147–183.

31. Chen, R.T.; Rubanova, Y.; Bettencourt, J.; Duvenaud, D.K. Neural ordinary differential equations. Adv. Neural Inf. Process. Syst. 2018, 31. Available online: https://proceedings.neurips.cc/paper_files/paper/2018/file/69386f6bb1dfed68692a24c8686939b9-Paper.pdf (accessed on 24 March 2025).

32. Marino, R.; Buffoni, L.; Chicchi, L.; Giambagli, L.; Fanelli, D. Stable attractors for neural networks classification via ordinary differential equations (SA-nODE). Mach. Learn. Sci. Technol. 2024, 5, 035087.

33. Alessandri, A.; Baglietto, M.; Battistelli, G.; Zavala, V. Advances in Moving Horizon Estimation for Nonlinear Systems. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; pp. 5681–5688.

34. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Syst. Rev. 2021, 10, 89.

35. Song, R.; Fang, Y.; Huang, H. Reliable estimation of automotive states based on optimized neural networks and moving horizon estimator. IEEE/ASME Trans. Mechatron. 2023, 28, 3238–3249.

36. Sun, D.; Liao, Q.; Stoyanov, T.; Kiselev, A.; Loutfi, A. Bilateral telerobotic system using type-2 fuzzy neural network based moving horizon estimation force observer for enhancement of environmental force compliance and human perception. Automatica 2019, 106, 358–373.

37. Chee, K.Y.; Hsieh, M.A. LEARNEST: LEARNing Enhanced Model-based State ESTimation for Robots using Knowledge-based Neural Ordinary Differential Equations. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 11590–11596.

38. Alcala, E.; Sename, O.; Puig, V.; Quevedo, J. TS-MPC for autonomous vehicle using a learning approach. IFAC-PapersOnLine 2020, 53, 15110–15115.

39. Chen, Y.; Li, C.; Chen, S.; Ren, H.; Gao, Z. A combined robust approach based on auto-regressive long short-term memory network and moving horizon estimation for state-of-charge estimation of lithium-ion batteries. Int. J. Energy Res. 2021, 45, 12838–12853.

40. Mostafavi, S.; Doddi, H.; Kalyanam, K.; Schwartz, D. Nonlinear moving horizon estimation and model predictive control for buildings with unknown HVAC dynamics. IFAC-PapersOnLine 2022, 55, 71–76.

41. Mohammadbagher, E.; Bhatt, N.P.; Hashemi, E.; Fidan, B.; Khajepour, A. Real-time pedestrian localization and state estimation using moving horizon estimation. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–7.

42. Banerjee, S.; Singh, G.K. A New Moving Horizon Estimation Based Real-Time Motion Artifact Removal from Wavelet Subbands of ECG Signal Using Particle Filter. J. Signal Process. Syst. 2023, 95, 1021–1035.

43. Dewasme, L. Neural network-based software sensors for the estimation of key components in brewery wastewater anaerobic digester: An experimental validation. Water Sci. Technol. 2019, 80, 1975–1985.

44. Løwenstein, K.F.; Bernardini, D.; Fagiano, L.; Bemporad, A. Physics-informed online learning of gray-box models by moving horizon estimation. Eur. J. Control 2023, 74, 100861.

45. Zhang, B.; Lu, S.; Xie, W.; Xie, F. Fault Detection and Diagnosis Based on Interactive Multi-Model Moving Horizon Estimation and Neuro-Tire Model. IEEE/ASME Trans. Mechatron. 2024, 29, 3614–3625.

46. Sundberg, B.; Pourkargar, D.B. Cyberattack awareness and resiliency of integrated moving horizon estimation and model predictive control of complex process networks. In Proceedings of the 2023 American Control Conference (ACC), San Diego, CA, USA, 31 May–2 June 2023; pp. 3815–3820.

47. Esfahani, H.N.; Kordabad, A.B.; Cai, W.; Gros, S. Learning-based state estimation and control using MHE and MPC schemes with imperfect models. Eur. J. Control 2023, 73, 100880.

48. Wang, B.; Ma, Z.; Lai, S.; Zhao, L.; Lee, T.H. Differentiable moving horizon estimation for robust flight control. In Proceedings of the 2021 60th IEEE Conference on Decision and Control (CDC), Austin, TX, USA, 14–17 December 2021; pp. 3563–3568.

49. Wang, B.; Ma, Z.; Lai, S.; Zhao, L. Neural moving horizon estimation for robust flight control. IEEE Trans. Robot. 2023, 40, 639–659.

50. Wang, B.; Chen, X.; Zhao, L. Trust-Region Neural Moving Horizon Estimation for Robots. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 2059–2065.

51. Brunello, R.K.V. Nonlinear Moving-Horizon State Estimation for Hardware Implementation and a Model Predictive Control Application. Master’s Thesis, Universidade de Brasília, Brasília, Brazil, 2021.

52. Karg, B.; Lucia, S. Approximate moving horizon estimation and robust nonlinear model predictive control via deep learning. Comput. Chem. Eng. 2021, 148, 107266.

53. Brunello, R.K.V.; Sampaio, R.C.; Llanos, C.H.; dos Santos Coelho, L.; Ayala, H.V.H. Efficient Hardware Implementation of Nonlinear Moving-horizon State Estimation with Artificial Neural Networks. IFAC-PapersOnLine 2020, 53, 7813–7818.

54. Lopes, E.D.R.; Soudre, M.M.; Llanos, C.H.; Ayala, H.V.H. Nonlinear receding-horizon filter approximation with neural networks for fast state of charge estimation of lithium-ion batteries. J. Energy Storage 2023, 68, 107677.

55. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

56. Nech, A.; Kemelmacher-Shlizerman, I. Level playing field for million scale face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7044–7053.

57. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

58. Kayacan, E.; Zhang, Z.Z.; Chowdhary, G. Embedded High Precision Control and Corn Stand Counting Algorithms for an Ultra-Compact 3D Printed Field Robot. In Proceedings of the Robotics: Science and Systems, Pittsburgh, PA, USA, 26–30 June 2018; Volume 14, p. 9.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).