Abstract

Stance detection seeks to identify the public’s position on a specific topic, providing critical insights for applications such as recommendation systems and rumor detection, which are essential for maintaining a secure social media environment. As one of China’s most influential social media platforms, Weibo significantly shapes public discourse within its complex social network structure. Despite recent advancements in stance detection research on Weibo, many studies fail to adequately address the nuanced emotional features present in text, limiting detection accuracy and effectiveness, and potentially compromising online security. This paper proposes a stance detection approach based on multi-task learning that considers the influence of emotional features to tackle these challenges. Our method utilizes a RoBERTa pre-trained model in the shared layer to extract textual features for both stance detection and sentiment analysis. In the stance detection module, a BiLSTM model captures deeper temporal information, followed by three independent modules dedicated to extracting semantic features for specific stances. Concurrently, the sentiment analysis module employs a BiLSTM model to predict emotional polarity. The experimental results on the NLPCC2016-task4 dataset demonstrate that our approach outperforms existing methods, highlighting the effectiveness of integrating sentiment analysis with stance detection to enhance both accuracy and reliability, ultimately contributing to the security of social networks.

1. Introduction

In recent years, with the rapid growth and development of internet-based social networks, people are increasingly inclined to express their opinions on social media platforms. These platforms generate vast volumes of text data, often containing strong emotional tones and personal perspectives. As a result, social media has become a crucial resource for observing and analyzing public opinion. Beyond serving as a space for expression, social media also provides researchers with valuable data for understanding public discourse. In this context, stance detection has emerged as a vital research area within natural language processing (NLP).

Stance detection focuses on identifying the author’s attitude toward a specific topic or entity, such as support, opposition, or neutrality. For example, users may express distinct positions on political debates, social concerns, or public events based on their beliefs or experiences. From an application perspective, detecting user stances in social media comments helps businesses better understand consumer preferences and market trends, ultimately improving user satisfaction and enhancing market competitiveness. Furthermore, as social media becomes more integral to public discourse, it also presents unique security challenges. The manipulation of information, malicious content generation, and adversarial attacks can undermine the reliability of online platforms, raising concerns about the security and integrity of social networks. In light of these risks, stance detection plays a critical role in safeguarding social media security. It helps identify harmful or misleading content, enables more robust defences against misinformation and coordinated attacks that threaten online communities, helps governments and relevant authorities assess public opinion accurately during emergencies, and fosters a healthier online environment [1,2,3].

Sentiment analysis aims to identify emotional attitudes in text, while stance detection goes further by identifying the author’s support or opposition toward a specific target or issue. Although these two tasks are closely related in practical applications, traditional methods often treat them as independent tasks, failing to exploit their inherent connections. Recent advances in NLP have led to the development of state-of-the-art models, such as GPT (Generative Pre-trained Transformer) and other Transformer-based architectures. These models have demonstrated exceptional performance in various tasks, including sentiment analysis, text summarisation, and question-answering. However, while such models excel at generating contextual representations, they often lack the task-specific designs required for challenges like stance detection. Multi-task learning (MTL) [4] has emerged as an effective learning paradigm, demonstrating superior performance across various domains. By training related tasks simultaneously, MTL facilitates knowledge sharing and transfer, thereby improving model generalization and performance. In NLP, MTL-based approaches can perform sentiment analysis and stance detection concurrently. Through shared representation learning at the lower levels, information from one task can assist the predictions of the other. Nevertheless, effectively integrating emotional features with stance detection and designing efficient multi-task learning models remain critical challenges in current research.

In light of this, the present study explores how emotional features can be integrated into stance detection tasks. It proposes a stance detection method that incorporates emotional features through multi-task learning. By introducing the sentiment dimension into stance classification, this approach seeks to fully leverage the relationship between sentiment analysis and stance detection. The shared representation mechanism aims to improve the accuracy and robustness of stance detection. The experimental results on the publicly available NLPCC2016-task4 dataset demonstrate that the proposed multi-task learning-based method outperforms existing benchmark models. The main contributions of this paper are as follows:

- To address the issue of data scarcity in stance detection, this study applied back-translation data augmentation based on the NLPCC2016-task4 Chinese Weibo stance detection dataset, expanding the original 3000 training samples to 12,000. Subsequently, a hybrid network model was constructed for the stance detection task, combining RoBERTa and BiLSTM networks. The stance detection task was reframed from a traditional three-class classification problem into three binary classification problems. The results indicate that this approach effectively extracts stance features.

- Building on the hybrid network, this study incorporated sentiment analysis as an auxiliary task to support the stance detection task. The experimental results show that the multi-task learning stance detection method, which integrates emotional features, significantly improves the model’s stance detection performance. This is particularly evident when training on multiple-topic datasets, where integrating emotional features offers a notable advantage.

2. Related Work

2.1. Stance Detection Method

Stance detection based on machine learning is an important research area within natural language processing, aiming to identify and classify the stance of individuals or groups on specific topics or entities. In recent years, with the development of machine learning techniques, particularly the application of deep learning, significant progress has been made in stance detection research [5,6].

In the early stages of machine learning, stance detection primarily relied on carefully crafted features, including lexical features, syntactic features, and sentiment-based features. These features were used to train traditional machine learning models, such as support vector machines (SVMs), logistic regression (LR), and random forests (RFs) [7,8,9,10]. Dian et al. [11], based on statistic-based features and deep text features, applied methods such as SVMs, RFs, and gradient-boosted decision trees (GBDTs) for stance classification. They enhanced detection efficiency by combining classifiers based on different features, showing that both statistic-based features and deep text features complement each other in stance detection. The experimental results demonstrated improved performance in stance detection across various Weibo topics. Liu et al. [12] integrated RF, GBDT, and SVM classifiers, revealing that random forest performed well in identifying minority classes, while gradient boosting was better at recognizing majority classes. The complementary strengths of different classifiers in terms of precision and recall suggested that combining diverse machine learning techniques can enhance the overall performance of stance detection tasks. Sobhani et al. [13] provided a Twitter dataset that simultaneously detects stance and sentiment towards specific targets. By extracting character-level and word-level n-grams, emotional features, and word embeddings, and employing a linear SVM classifier, they achieved stance classification. Their findings highlighted the complex relationship between stance detection and sentiment analysis, and the experimental results confirmed the importance of incorporating emotional features in stance detection tasks.

Although traditional machine learning methods are effective in handling small datasets and scenarios with limited computational resources, they perform poorly in managing complex, unstructured text and exhibit weak generalization capabilities. Moreover, when large amounts of labelled data are unavailable, traditional approaches face challenges in adapting to new data and updating models. With the rise of deep learning, research on stance detection has shifted towards leveraging neural networks, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs). These models can automatically learn complex feature representations from data without the need for manually designed features. Variants such as long short-term memory (LSTM) and gated recurrent units (GRUs) are widely used in stance detection due to their advantages in processing sequential data [14,15]. To address the problem of existing models relying solely on target information while neglecting external context, Zhang et al. [16] proposed a commonsense-based adversarial learning framework. This framework uses an external commonsense graph encoder to learn unseen target information and designs a novel feature separation adversarial network to learn both target-agnostic and target-specific features, enhancing the model’s reasoning ability beyond seen targets. Umer et al. [17] developed a hybrid neural network architecture combining CNN and LSTM models. They applied two different dimensionality reduction techniques, principal component analysis (PCA) and chi-square tests, to reduce the dimensionality of feature vectors. The results showed that PCA achieved higher accuracy in the corresponding stance detection tasks.

With further advances in deep learning, pre-trained language models such as BERT, GPT, and RoBERTa have achieved remarkable success in stance detection. These models are pre-trained on large-scale corpora, allowing them to capture rich linguistic knowledge, and are then fine-tuned for stance detection tasks. This approach significantly improves stance detection performance, particularly in understanding contextual and implicit semantics [18,19]. Sun et al. [20] proposed a knowledge-enhanced BERT model for Weibo stance detection, where triples from a knowledge graph were injected into sentences as domain knowledge. The experimental results showed that this model significantly outperformed competitive baselines, indicating that the integration of external knowledge can effectively boost stance detection performance. To balance performance and efficiency, Li et al. [21] proposed a knowledge distillation model, BERTtoCNN, combining the classical distillation loss with a similarity-preserving loss. The model trains a “student” CNN from a larger “teacher” BERT, so that inputs producing similar (or dissimilar) activations in the teacher network also produce similar (or dissimilar) activations in the student network. The results indicated that this model also significantly outperformed baseline methods.

Deep learning and pre-trained model-based approaches for stance detection have complex network structures that automatically extract features from text data and understand semantics. They excel in handling long texts and complex language structures, offering outstanding performance and generalization capabilities. Therefore, this paper proposes a hybrid model combining the RoBERTa pre-trained model with a BiLSTM deep learning model. By extracting more comprehensive semantic information and deeper sequential information, this approach more accurately captures the interaction between the content of comments and the target topics, effectively enhancing the performance of the hybrid network in stance detection tasks.

2.2. Multi-Task Learning

Multi-task learning aims to improve model performance by simultaneously learning multiple related tasks. When applied correctly, MTL can not only enhance the performance of models on individual tasks but also improve the model’s generalizability and efficiency. Recognizing the high financial intensity and frequent risks in construction projects, Pham et al. [22] proposed a multi-task model to proactively identify and mitigate potential risks in contracts. The model simultaneously addresses three tasks: risk identification, risk allocation, and risk response. Their experimental results showed that the proposed multi-task model outperformed single-task models in risk prediction and decision-making. Similarly, inspired by multi-task learning, Alturayeif et al. [23] integrated sentiment analysis and sarcasm detection to propose two multi-task learning models aimed at enhancing stance detection performance. Their multi-objective sequence MTL model, which employed a hierarchical weighting method, achieved state-of-the-art results, highlighting the potential of MTL in improving stance detection. This study also provided insights into the interaction between sentiment and stance, while accounting for the impact of sarcasm. Upadhyaya et al. [24] addressed the stance classification problem on climate change-related tweets by proposing a multi-task learning architecture that combines task-specific features and shared attention mechanisms. This approach merged the learning of common features for both the primary stance detection task and auxiliary tasks. The experimental results indicated that the proposed MTL model benefited from the auxiliary tasks, yielding better performance in stance detection compared to single-task models.

Multi-task learning presents an effective learning paradigm for text classification tasks by enabling models to share knowledge and features across related tasks. Considering that stance information in text is often intertwined with sentiment information, this paper adopts the idea of multi-task learning. It incorporates sentiment features as an auxiliary task to the primary stance detection task, thereby achieving a sentiment-integrated stance detection approach.

3. Method

Multi-task learning is a paradigm within machine learning that focuses on learning multiple related tasks simultaneously to improve performance across all tasks. The core idea is to leverage the commonalities between different tasks, allowing the model to share knowledge during the training process. This helps the model better understand subtle linguistic nuances and complex structures, resulting in improved generalization for each task.

3.1. Definition of Multi-Task Learning

In multi-task learning, the model is designed to handle a set of tasks $T = \{T_1, T_2, \ldots, T_n\}$ concurrently, where each task $T_i$ has its own dataset $D_i$. The goal is to minimize the total loss for all tasks, and the loss function can be formulated as follows:

$$L_{\text{total}} = \sum_{i=1}^{n} \lambda_i L_i$$

where $L_i$ is the loss function for Task $i$, and $\lambda_i$ is the weight associated with Task $i$, representing the importance of each task.
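The weighted-sum objective described above can be sketched in a few lines of Python. This is only an illustration: the function name, the loss values, and the task weights are assumptions chosen for the example and are not settings reported in this paper.

```python
from typing import Sequence


def total_loss(task_losses: Sequence[float], task_weights: Sequence[float]) -> float:
    """Combine per-task losses into one objective: sum over tasks of weight * loss."""
    if len(task_losses) != len(task_weights):
        raise ValueError("each task needs exactly one weight")
    return sum(w * l for w, l in zip(task_weights, task_losses))


# Hypothetical values: a stance loss of 0.9 with weight 1.0 and
# a sentiment loss of 0.4 with weight 0.5.
combined = total_loss([0.9, 0.4], [1.0, 0.5])
```

Giving the auxiliary task a smaller weight is one common way to keep the primary task dominant during training; whether that matches the weighting used here is not stated in the text.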

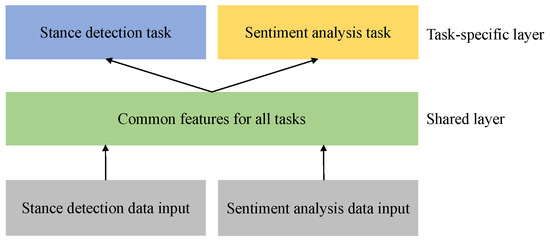

The MTL model designed in this paper consists of a shared layer and task-specific layers, aiming to perform both stance detection and sentiment analysis. The model structure is shown in Figure 1. In this architecture, the shared layer extracts general features from the input data for both stance detection and sentiment analysis, which are shared across all tasks. On the other hand, the task-specific layers are tailored for each task, extracting specific features from the shared general features for stance detection and sentiment analysis, respectively. In the same network, the task-specific layers do not share weights, allowing each task to extract task-specific information from the shared features. This design effectively utilizes the relationships between tasks, enhancing data efficiency and improving the model’s generalization ability by using a shared feature extractor. At the same time, the task-specific layers allow for customized outputs for each task, enabling the personalized processing of different tasks.

Figure 1.

Multi-task learning model structure.

3.2. Overall Framework

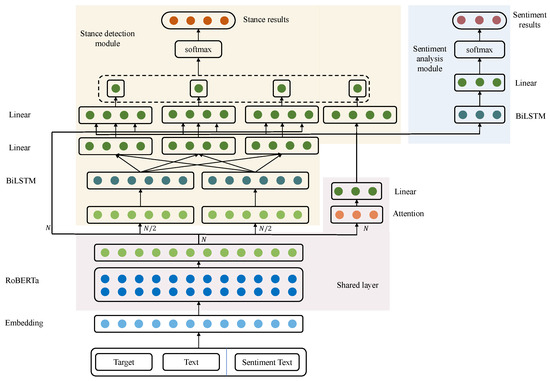

In deep learning-based methods for stance detection on Weibo, features corresponding to different stances often intertwine, complicating the feature processing. To address this, we propose a hybrid neural network model that combines RoBERTa and BiLSTM for stance detection. Furthermore, to examine the impact of emotional features on the stance detection task, an additional sentiment analysis module is incorporated into the hybrid model. This results in a multi-task learning model that integrates emotional features for stance detection, with the model framework shown in Figure 2. The multi-task learning model consists of three main components: a shared layer, a stance detection module, and a sentiment analysis module. To capture more comprehensive semantic information, text data, after initial pre-processing, is fed into the shared layer, where the RoBERTa pre-trained model is used to extract fundamental semantic features. These features, which combine stance and sentiment information, are then passed into both the stance detection and sentiment analysis modules for multi-task learning.

Figure 2.

The overall framework of the proposed multi-task learning model.

3.3. Shared Layer

3.3.1. RoBERTa

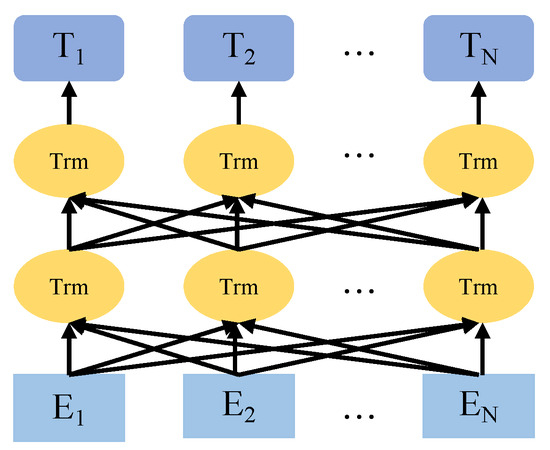

Transformers [25] are architectures based on the self-attention mechanism, allowing the model to compute the attention each element in a sequence pays to all other elements. This architecture is primarily used to solve sequence-to-sequence tasks. BERT [26], which was introduced by Google AI, is a pre-trained language model based on the Transformer architecture. Its key innovation lies in using deep bidirectional representations during pre-training, which are later fine-tuned for various downstream language processing tasks. This method provides a powerful solution for addressing complex natural language understanding challenges, with the model structure illustrated in Figure 3.

Figure 3.

BERT model structure.

RoBERTa [27] is an enhanced version of the BERT model developed by Facebook AI Research. Its improvements focus on the training process and data handling, including using a larger dataset, extending training time, and replacing static masking with dynamic masking. Through a series of optimizations and adjustments, RoBERTa significantly improves performance without altering the original BERT architecture, achieving new state-of-the-art results in multiple natural language processing tasks.

3.3.2. Attention Mechanism

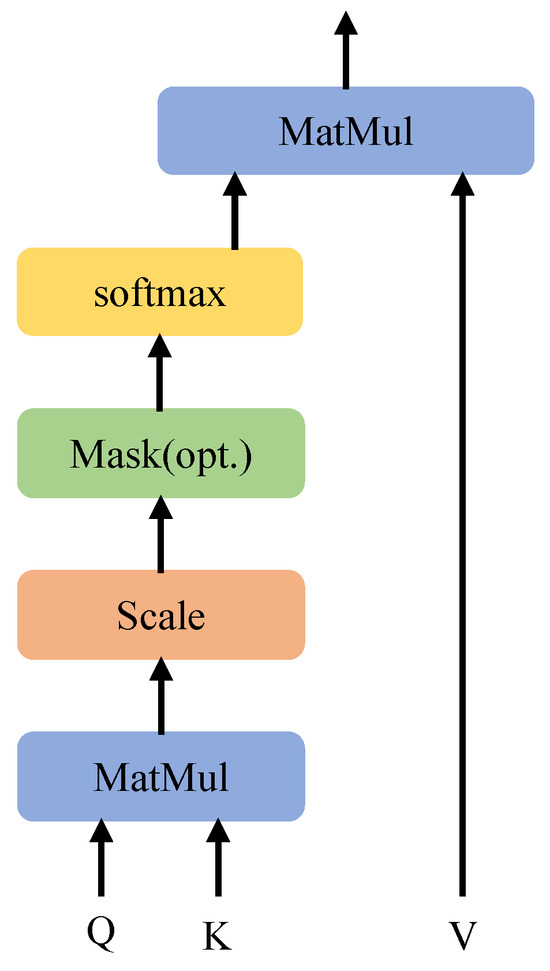

The attention mechanism [25] is a technique that simulates human attention in neural networks. It enables the model to focus on specific parts of the input sequence when processing each word, thus improving the model’s performance and interpretability. By introducing the attention mechanism, the decoder can focus on different parts of the input sequence when generating each word, assigning a weight to each part of the input sequence. This weight reflects the importance of different inputs at each step. The structure of the attention mechanism is shown in Figure 4.

Figure 4.

Attention mechanism structure.

Given an input sequence $x = (x_1, \ldots, x_n)$ and its corresponding encoder hidden states $h = (h_1, \ldots, h_n)$, the attention mechanism first computes, at each step $t$ of generating the output sequence, the similarity or alignment between the decoder’s hidden state $s_{t-1}$ (the most recent state available at step $t$) and each encoder hidden state $h_i$. The alignment score is calculated using the function $a(s_{t-1}, h_i)$, where $a$ represents a scoring function (e.g., dot-product or additive scoring). This results in attention weights $\alpha_{t,i}$, calculated using the formulas:

$$e_{t,i} = a(s_{t-1}, h_i)$$

$$\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{k=1}^{n} \exp(e_{t,k})}$$

where $e_{t,i}$ is the alignment score between the decoder hidden state $s_{t-1}$ and the encoder hidden state $h_i$, representing the extent to which they align. Common methods to compute $e_{t,i}$ include dot-product, scaled dot-product, or bilinear forms. The attention weight $\alpha_{t,i}$ is a normalized score that reflects the contribution of the $i$-th element of the input sequence to the decoding process at the current step.

The attention vector $c_t$ is a weighted sum of the encoder’s hidden states, where the weights are the attention scores calculated in the previous step. Then, the attention vector is combined with the decoder’s previous hidden state $s_{t-1}$ to generate the current hidden state $s_t$. The calculation formulas are as follows:

$$c_t = \sum_{i=1}^{n} \alpha_{t,i} h_i$$

$$s_t = f(s_{t-1}, y_{t-1}, c_t)$$

where $f$ is the decoder’s recurrent function, and $y_{t-1}$ is the previous output.
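The scoring, normalization, and weighted-sum steps of the attention mechanism can be illustrated with a small, dependency-free Python sketch. It assumes plain dot-product scoring, which is only one of the scoring functions mentioned above. The function names and the toy vectors are illustrative, and the decoder's recurrent update is left out.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Normalize scores into weights that sum to 1 (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def attend(decoder_state: Vector, encoder_states: List[Vector]) -> Tuple[Vector, Vector]:
    """Return (attention weights, context vector) for one decoding step."""
    # Alignment score between the decoder state and every encoder state.
    scores = [dot(decoder_state, h) for h in encoder_states]
    weights = softmax(scores)
    # Context vector: weighted sum of the encoder hidden states.
    dim = len(encoder_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, encoder_states)) for d in range(dim)]
    return weights, context


# Toy example: the decoder state aligns more strongly with the first encoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0]]
weights, context = attend([2.0, 0.0], encoder_states)
```

In this toy case the first encoder state receives the larger weight, so the context vector is pulled toward it.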

3.3.3. Module Task Objective

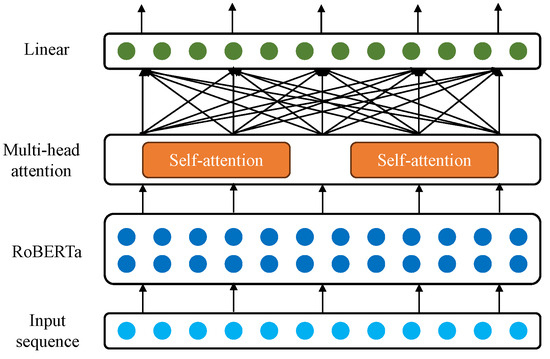

In the shared layer, the target and comment text are first pre-processed through operations like text cleaning (removing noise, such as punctuation and stopwords), tokenization (splitting text into smaller units, such as words or subwords), and adding special markers (inserting specific tokens to indicate sentence boundaries or important features, such as ‘[CLS]’ to indicate the beginning of a sentence and ‘[SEP]’ to mark the end). The pre-processed text is then passed through a RoBERTa layer to extract basic semantic information from the text. Following that, a multi-head attention mechanism and a fully connected layer are applied to capture relationships between the texts, enabling a generalized feature representation. The shared layer is designed to allow the model to capture sentiment information embedded within stance data and combine this with the semantic information of the text. Finally, the outputs are passed into the stance detection module and sentiment analysis module separately. The structure of the shared layer is shown in Figure 5.

Figure 5.

Shared layer structure.
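As a rough illustration of the special-marker step in the shared layer, the sketch below assembles one target/comment pair into a single token sequence. Several points are assumptions rather than details given in the text: the pair layout `[CLS] target [SEP] comment [SEP]`, the character-level tokenization, the `max_len` value of 128, and the example strings. Text cleaning is omitted apart from stripping spaces.

```python
from typing import List

CLS, SEP = "[CLS]", "[SEP]"


def build_input(target: str, comment: str, max_len: int = 128) -> List[str]:
    """Build an assumed '[CLS] target [SEP] comment [SEP]' token sequence."""
    # Assumption: one token per character, a simple stand-in for a real tokenizer.
    target_tokens = list(target.replace(" ", ""))
    comment_tokens = list(comment.replace(" ", ""))
    # Room left for the comment after the target and the three special tokens.
    budget = max_len - len(target_tokens) - 3
    if budget < 0:
        raise ValueError("max_len is too small for the target and special tokens")
    return [CLS] + target_tokens + [SEP] + comment_tokens[:budget] + [SEP]


# Made-up example pair, used only to show the resulting layout.
tokens = build_input("春节放鞭炮", "支持传统")
```

In practice the tokenizer shipped with the chosen RoBERTa checkpoint would take the place of the character split used here.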

3.4. Stance Detection Module

3.4.1. BiLSTM

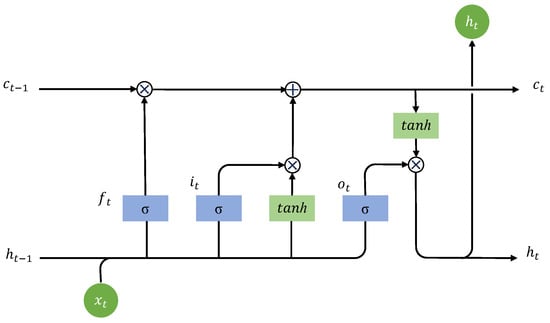

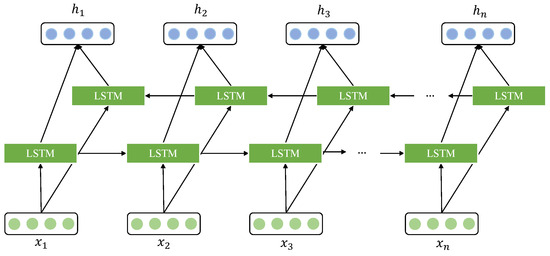

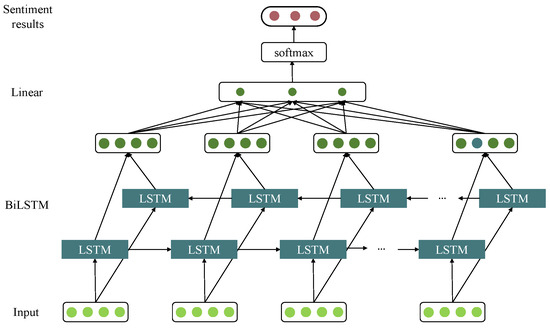

To address the issue of long-term dependencies, LSTM [28] enhances the RNN by introducing gating mechanisms that control the flow of information in and out of the network. The structure of the LSTM model is shown in Figure 6. To better capture contextual semantic information, BiLSTM [29] combines two LSTM layers: one processes the sequence in the forward direction, while the other processes it in the backward direction, and their outputs are concatenated, as shown in Figure 7.

Figure 6.

LSTM model structure.

Figure 7.

BiLSTM model structure.

LSTM maintains and transmits long-term information through several specific structures: the forget gate, input gate, and output gate. These gates help the network selectively retain, discard, or update information. The forget gate determines which information needs to be discarded from the cell state. It does this by examining the previous hidden state $h_{t-1}$ and the current input $x_t$, filtering each number in the cell state $C_{t-1}$ using a sigmoid function $\sigma$. The calculation formula is as follows:

$$f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)$$

where $W_f$ represents the weights of the forget gate, and $b_f$ represents the bias of the forget gate.

The input gate decides which new information to store in the cell state. It first determines the values to be updated, $i_t$, and then calculates a new candidate vector $\tilde{C}_t$ using the tanh function, which is added to the cell state. The formulas are:

$$i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)$$

$$\tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C)$$

After the information passes through the forget and input gates, the current cell state $C_t$ is updated using the following formula:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

The output gate determines which information is output. The calculation formulas are:

$$o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)$$

$$h_t = o_t \odot \tanh(C_t)$$
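The gate computations of an LSTM step, and the way a BiLSTM pairs a forward and a backward pass, can be traced with a minimal pure-Python sketch. It follows the standard LSTM formulation. The parameter names (`W_f`, `b_f`, and so on), the dictionary layout, and the all-zero demonstration weights are assumptions made for the example and do not come from the trained model.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W: List[Vector], b: Vector, v: Vector) -> Vector:
    """Compute W v + b, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector, p: Dict) -> Tuple[Vector, Vector]:
    v = h_prev + x  # concatenation of previous hidden state and current input
    f = [sigmoid(z) for z in affine(p["W_f"], p["b_f"], v)]          # forget gate
    i = [sigmoid(z) for z in affine(p["W_i"], p["b_i"], v)]          # input gate
    c_tilde = [math.tanh(z) for z in affine(p["W_c"], p["b_c"], v)]  # candidate state
    c = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]
    o = [sigmoid(z) for z in affine(p["W_o"], p["b_o"], v)]          # output gate
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def run_lstm(xs: List[Vector], p: Dict, hidden_size: int) -> List[Vector]:
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        outputs.append(h)
    return outputs


def bilstm(xs: List[Vector], fw: Dict, bw: Dict, hidden_size: int) -> List[Vector]:
    """Concatenate forward and backward hidden states at every position."""
    forward = run_lstm(xs, fw, hidden_size)
    backward = run_lstm(xs[::-1], bw, hidden_size)[::-1]
    return [f + b for f, b in zip(forward, backward)]


# Demonstration with all-zero parameters (hidden size 1, input size 1):
# every gate then equals 0.5 and the candidate state is 0.
zero_params = {k: [[0.0, 0.0]] for k in ("W_f", "W_i", "W_c", "W_o")}
zero_params.update({k: [0.0] for k in ("b_f", "b_i", "b_c", "b_o")})
h, c = lstm_step([1.0], [0.0], [2.0], zero_params)
```

With zero weights the forget gate halves the previous cell state, which makes the role of each term in the cell-state update easy to check by hand.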

3.4.2. Module Task Objective

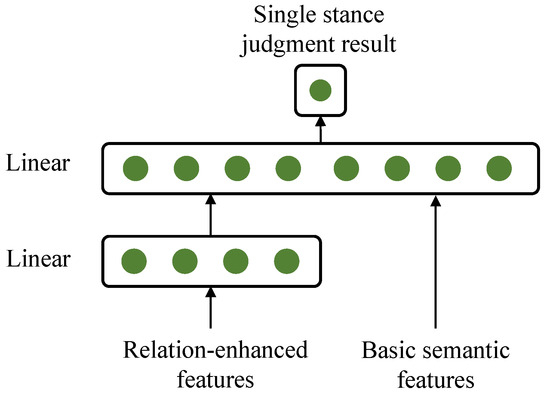

To further extract sequence features from the text and enhance the relational features between the comment text and the target topic, the semantic feature vectors extracted by the RoBERTa model are split into two equal-length parts. These are then fed into a BiLSTM model to extract deeper global sequential features. We design three modules corresponding to the three stance categories: support, opposition, and neutral. Each module uses a fully connected layer to integrate the relation-enhanced features and combines them with the base semantic features. These features are further integrated through a fully connected layer, reducing the feature length to 1. This transforms the stance detection task from a three-class classification problem into three binary classification problems, reducing the complexity of the problem. The structure for single-stance judgment is shown in Figure 8. Finally, the three feature vectors of length 1 are merged and processed by a softmax activation function to output the final stance classification result.

Figure 8.

Single-stance judgment module structure.
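The final merging step, in which the three length-1 outputs are combined and passed through softmax, can be sketched as follows. The scores are made-up numbers standing in for the outputs of the three single-stance modules, and the function and label names are illustrative. The additional fourth feature used when sentiment data is fed to the model is not covered by this sketch.

```python
import math
from typing import Dict, List, Tuple

STANCES = ["support", "oppose", "neutral"]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(head_scores: Dict[str, float]) -> Tuple[str, Dict[str, float]]:
    """Merge one scalar per stance module and pick the most probable stance."""
    scores = [head_scores[s] for s in STANCES]
    probs = softmax(scores)
    best = max(range(len(STANCES)), key=probs.__getitem__)
    return STANCES[best], dict(zip(STANCES, probs))


# Hypothetical module outputs for a single post.
label, probabilities = classify({"support": 2.0, "oppose": -1.0, "neutral": 0.5})
```

Each module only has to score its own stance, while the softmax over the three scores still yields a single three-way decision.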

Since the sentiment dataset does not contain labelled stance information, when sentiment data is input into the model, an additional feature is introduced in the stance detection task output to indicate whether the input data comes from the sentiment dataset. This feature does not require determining the stance or sentiment of the text but only identifies whether the input is from the stance dataset. To achieve this, the output of the shared layer is directly connected to a fully connected layer for feature integration and dimensionality reduction, resulting in a fourth feature for the stance detection module.

3.5. Sentiment Analysis Module

In the sentiment analysis module, a BiLSTM layer is first used to extract emotional features, followed by a fully connected layer for dimensionality reduction. The result is then passed through a sigmoid function, which for binary classification is equivalent to a two-class softmax, yielding the final sentiment analysis result. The structure of the sentiment analysis module is shown in Figure 9.

Figure 9.

Sentiment analysis module structure.
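The description of this module mentions both a sigmoid and a softmax. For two classes the two coincide: a sigmoid applied to a single score gives the same probability as a softmax over that score and a fixed score of zero. The short check below illustrates this identity. It is a numerical illustration only and says nothing about which formulation the implementation uses.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def softmax_positive(z_pos: float, z_neg: float) -> float:
    """Probability of the positive class under a two-class softmax."""
    m = max(z_pos, z_neg)
    e_pos, e_neg = math.exp(z_pos - m), math.exp(z_neg - m)
    return e_pos / (e_pos + e_neg)


# The two formulations agree for any score when the negative-class score is 0.
for z in (-3.0, 0.0, 1.5):
    assert abs(sigmoid(z) - softmax_positive(z, 0.0)) < 1e-12
```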

4. Experiment

4.1. Datasets

Our study integrates sentiment features into stance detection tasks by using both a stance detection dataset and a sentiment dataset. For stance detection, the NLPCC2016-task4 Chinese Weibo stance detection dataset is employed. This dataset consists of 4000 stance-labeled Weibo posts across five different topics. We provide these five topics with their respective numbers, as shown in Table 1.

Table 1.

The five topics of the stance detection dataset and their respective numbers. The following text uniformly uses these numbers to represent the topics.

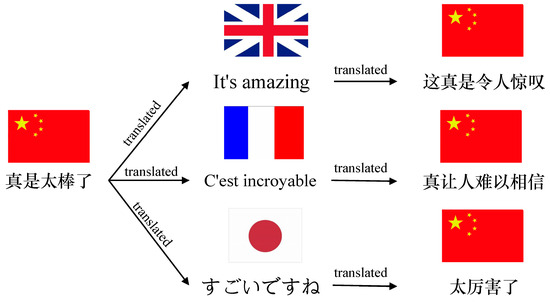

Each topic contains 800 posts, with 600 for training and 200 for testing. Each post includes a unique identifier (ID), target, content, and stance. Given the limited size of the original dataset, back-translation data augmentation was applied to the training data. The text field of the training dataset was translated with Tencent’s translation API from the original Chinese into English, French, and Japanese, and then back into Chinese, yielding three additional variants of each post. An example of the data augmentation method is shown in Figure 10. This process expanded the number of training samples per topic from 600 to 2400, significantly increasing the dataset’s size and diversity, while the number of test samples remained unchanged. A comparison of the data volume before and after data augmentation for each topic is shown in Table 2.

Figure 10.

Data augmentation method based on back-translation.

Table 2.

The data volume before and after data augmentation for each topic.
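The back-translation procedure for the training data can be sketched as below. The `translate` argument is a hypothetical callable standing in for a translation service. The sketch does not call Tencent's API, and the call signature, the language codes, and the stub used at the end are assumptions for illustration. Each augmented post keeps the target and stance of the post it was derived from.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical signature: (text, source_language, target_language) -> translated text
Translator = Callable[[str, str, str], str]

PIVOT_LANGUAGES = ("en", "fr", "ja")  # English, French, Japanese


def back_translate(text: str, translate: Translator,
                   pivots: Sequence[str] = PIVOT_LANGUAGES,
                   source: str = "zh") -> List[str]:
    """Translate into each pivot language and back, giving one variant per pivot."""
    variants = []
    for pivot in pivots:
        intermediate = translate(text, source, pivot)
        variants.append(translate(intermediate, pivot, source))
    return variants


def augment(samples: List[Dict[str, str]], translate: Translator) -> List[Dict[str, str]]:
    """Keep every original sample and add its back-translated variants."""
    augmented = []
    for sample in samples:
        augmented.append(sample)
        for variant in back_translate(sample["content"], translate):
            augmented.append({**sample, "content": variant})
    return augmented


def fake_translate(text: str, src: str, dst: str) -> str:
    """Stub translator that only tags the text, so the sketch runs offline."""
    return f"{text}|{dst}"


train = [{"id": str(i), "target": "topic", "content": f"post {i}", "stance": "support"}
         for i in range(600)]
augmented_train = augment(train, fake_translate)
```

With three pivot languages every post yields three variants, so 600 training posts become 2400, matching the per-topic figures in the text.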

For sentiment analysis, the weibo_senti_100k dataset is used, which consists of 119,988 sentiment-labeled Weibo posts, evenly split between 59,994 positive and 59,994 negative posts. In our experiments, we randomly selected N samples for sentiment training, comprising both positive- and negative-sentiment samples.

4.2. Experimental Settings

The configuration environment and the hyperparameter settings involved in the experiment are shown in Table 3.

Table 3.

Hardware, software, and hyperparameter settings.

4.3. Evaluation Metrics

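In text classification tasks, commonly used evaluation metrics include Precision and Recall [30]. These two metrics assess the model’s performance from the perspectives of accuracy and completeness, making them especially effective for evaluating models on imbalanced datasets. Therefore, we used these two evaluation metrics in the study, and their calculation formulas are as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

where $TP$ represents the number of correctly classified positive examples, $FP$ is the number of examples incorrectly classified as positive, and $FN$ is the number of examples incorrectly classified as negative.

The F-score [31] represents the weighted harmonic mean of Precision and Recall. When the weighting parameter $\beta$ is set to 1, Precision and Recall are equally important, and the F-score is referred to as the $F_1$-score. The formula for $F_1$ is:

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

By calculating the macro-averaged Precision, Recall, and $F_1$ for both labels, we obtain the corresponding averages:

$$P_{\text{avg}} = \frac{P_{\text{support}} + P_{\text{oppose}}}{2}$$

$$R_{\text{avg}} = \frac{R_{\text{support}} + R_{\text{oppose}}}{2}$$

$$F_{\text{avg}} = \frac{F_{\text{support}} + F_{\text{oppose}}}{2}$$

where $P_{\text{support}}$, $R_{\text{support}}$, and $F_{\text{support}}$, respectively, represent the Precision, Recall, and $F_1$ under the “support” label, while $P_{\text{oppose}}$, $R_{\text{oppose}}$, and $F_{\text{oppose}}$, respectively, represent the Precision, Recall, and $F_1$ under the “oppose” label.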
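The per-label metrics and their average over the “support” and “oppose” labels can be computed as in the following sketch. The label strings, the function names, and the five-post toy example are illustrative assumptions. Posts with any other label (such as neutral) still affect the counts, because a neutral post predicted as “support” is a false positive for that label.

```python
from typing import Dict, List, Tuple


def prf(gold: List[str], pred: List[str], label: str) -> Tuple[float, float, float]:
    """Precision, recall, and F1 for one label, treating it as the positive class."""
    tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
    fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_support_oppose(gold: List[str], pred: List[str]) -> Dict[str, float]:
    """Average each metric over the 'support' and 'oppose' labels only."""
    p_s, r_s, f_s = prf(gold, pred, "support")
    p_o, r_o, f_o = prf(gold, pred, "oppose")
    return {
        "precision": (p_s + p_o) / 2,
        "recall": (r_s + r_o) / 2,
        "f1": (f_s + f_o) / 2,
    }


# Toy example with five posts.
gold = ["support", "support", "oppose", "oppose", "neutral"]
pred = ["support", "oppose", "oppose", "oppose", "support"]
scores = macro_support_oppose(gold, pred)
```

In this toy case the “support” label scores 0.5 on all three metrics, the “oppose” label reaches an F1 of 0.8, and the averaged F1 is 0.65.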

4.4. Results

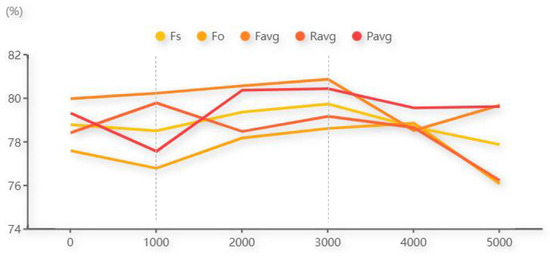

4.4.1. Effect of Different Emotional Data Volumes

To evaluate the model’s stance detection performance under varying amounts of sentiment data, we conduct six comparative experiments with 0, 1000, 2000, 3000, 4000, and 5000 sentiment samples. The results are presented in Table 4 and Figure 11.

Table 4.

The results of different emotional data volumes (%).

Figure 11.

The results of different emotional data volumes.

Combining the results from Table 4 and Figure 11, it can be observed that when the amount of sentiment data increases from 0 to 1000, the Precision, Recall, and F1 metrics all show a declining trend. This suggests that adding only a small amount of sentiment data is insufficient for the model to effectively learn the coupled information between stance and sentiment, and may instead introduce noise during the stance detection learning process, thereby weakening the model’s performance. In contrast, when the sentiment data volume increases from 1000 to 3000, the model’s stance detection performance begins to improve. This indicates that a moderate amount of sentiment data can effectively assist the model in capturing the correlation between stance and sentiment, enhancing its stance detection capabilities. However, as the sentiment data continues to increase from 3000 to 5000, the performance metrics start to decline overall. This may be due to the model focusing too much on the auxiliary sentiment analysis task, thereby neglecting the primary stance detection task. These experimental results demonstrate that, in multi-task learning-based stance detection, too little auxiliary data may introduce noise that interferes with the main task, while too much data may cause the model to deviate from the primary task. Therefore, it is crucial to determine the optimal amount of auxiliary task data through experiments so that the primary task’s performance is optimized within the multi-task learning framework.

4.4.2. Comparison with Different Network Models

To further verify the effectiveness of the multi-task learning model, the data from all five topics were trained together and compared against models such as TextCNN [32], FastText [33], BERT [26], and RoBERTa [27]. The results of these comparative experiments are shown in Table 5.

Table 5.

The results of joint training on all topics in different network models (%).

Table 5 shows that the multi-task learning model achieves the best performance on all five metrics. Incorporating the sentiment analysis task into the multi-task learning framework therefore improved stance detection on every evaluation metric. This suggests that when stance data from multiple topics are trained together, the proposed multi-task learning method, which integrates emotional features, extracts more accurate stance features and handles the stance detection problem better.

To further validate the effectiveness of the multi-task learning model, the data from the five topics were also trained separately, and the results were compared against the following models. This comparison highlights the evolution from traditional machine learning to deep learning, pre-trained models, and knowledge-enhanced methods in stance detection tasks.

- Dian [11]. This model explores multiple text features and uses supervised learning methods like SVM for stance classification.

- TAN (Target-specific Attention Neural Network) [34]. This model combines RNN, LSTM, and a target-specific attention mechanism to perform stance detection by extracting information related to the target, considering the role of target topics in stance analysis.

- ATA (Attention-Target-Attention) [35]. An improved version of the TAN model, it proposes a two-stage attention mechanism for stance classification, effectively integrating target topics with Weibo texts.

- BCC (BERT-Condition-CNN) [36]. This model uses BERT to obtain vector representations of target topics and Weibo texts, processes the relationship features between the target and text through a Condition layer, and finally uses CNN for feature extraction and stance detection.

- CBL (CNN-BiLSTM) [37]. This model adopts CNN and BiLSTM to extract local features and global semantics from text to perform stance detection on Weibo posts.

- BGA (GCN and BiLSTM) [38]. This model uses BiLSTM to obtain text features and constructs a Graph Convolutional Network (GCN) to capture syntactic relations and word dependencies. It calculates attention scores for target topics to analyze the stance tendencies of Weibo texts.

- BERT-SECA [39]. A sentiment-enhanced stance detection model based on convolutional attention, it focuses on the relevant features between text and target topics and integrates emotional features to enhance text representation.

- KE-BERT (Multi-type Knowledge-Enhanced and BERT) [40]. This model combines multiple types of commonsense knowledge to enhance semantic information, using improved BERT and convolutional attention mechanisms to encode and integrate commonsense knowledge for determining stance.

- Hybrid Network. This is the RoBERTa-BiLSTM hybrid model proposed in this paper. It first extracts basic semantic information between comment texts and target topics using RoBERTa, and then uses BiLSTM to enhance the relational features between comment texts and topics. The model’s three independent stance judgment modules evaluate support, opposition, and neutrality stances separately.

- MTL (Multi-task Learning). This is the multi-task learning model proposed in this paper, which builds upon the RoBERTa-BiLSTM hybrid network model by adding a sentiment analysis module. It integrates stance detection with sentiment analysis, performing multi-task learning with stance detection as the primary task and sentiment analysis as the auxiliary task.
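The primary/auxiliary arrangement in the MTL model can be illustrated with a joint training objective. This is a minimal sketch under stated assumptions: this section does not specify how the two task losses are combined, so a weighted sum is assumed here, and `aux_weight` is a hypothetical hyperparameter. For brevity the stance head is shown as a single three-way softmax rather than the three independent stance modules of the hybrid network.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Negative log-likelihood of the target class index."""
    return -math.log(softmax(logits)[target])


def joint_loss(stance_logits, stance_label, senti_logits, senti_label,
               aux_weight=0.5):
    """Assumed multi-task objective: primary loss plus weighted auxiliary loss.

    aux_weight = 0 reduces to stance-only training; larger values let the
    sentiment task pull harder on the shared encoder.
    """
    primary = cross_entropy(stance_logits, stance_label)
    auxiliary = cross_entropy(senti_logits, senti_label)
    return primary + aux_weight * auxiliary
```

Under this assumed formulation, the weight plays a role similar to the auxiliary data volume studied earlier: both control how strongly the sentiment task influences the shared representation.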

Tables 5 and 6 together support the following analysis. When multi-task learning is applied to joint training on all topics, the model’s stance detection ability is significantly enhanced. When each topic is trained individually, performance remains good, but the advantage is less pronounced than with all data combined. A likely reason is that the multi-task learning model has a complex network structure that requires a larger dataset to capture stance information accurately. Training on a single topic greatly reduces the amount of training data, which makes it harder for the model to capture precise stance features and the coupled features between stance and sentiment.

Table 6.

The - of individual training for each topic and the macro-averaged - for all topics in different network models (%).
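For readers who want to reproduce a per-topic score and its macro average, the sketch below shows one way to compute them. It rests on an assumption: the metric names are not legible in this caption, so the code uses the average of the F1 scores for the favor and against classes, as defined for SemEval-2016 Task 6. The label strings `"FAVOR"`, `"AGAINST"`, and `"NONE"` and all function names are hypothetical.

```python
def f1_for_label(gold, pred, label):
    """F1 score of a single class, computed from parallel label lists."""
    tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
    fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
    if tp == 0:
        return 0.0  # avoids division by zero when the class is never hit
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def f_avg(gold, pred):
    """Assumed metric: mean of the FAVOR and AGAINST F1 scores.

    The NONE class still matters indirectly, because mislabeling it
    produces false positives or false negatives for the other two classes.
    """
    return (f1_for_label(gold, pred, "FAVOR")
            + f1_for_label(gold, pred, "AGAINST")) / 2


def macro_over_topics(per_topic):
    """Average f_avg over topics; per_topic maps topic -> (gold, pred)."""
    scores = [f_avg(gold, pred) for gold, pred in per_topic.values()]
    return sum(scores) / len(scores)
```

Averaging per topic gives each topic equal weight regardless of its number of test examples, which is the usual meaning of a macro average.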

Overall, incorporating multi-task learning helps compensate for some of the shortcomings of the hybrid network. Specifically, the model’s stance detection performance improved significantly for topic 1, topic 2, and topic 5 after adding emotional features, with increases of 3.4%, 2.0%, and 1.3%, respectively.

For topic 3 and topic 4, adding emotional features had a negative effect. In some test cases, the stance detection results were correct before the emotional features were integrated, but after integrating the emotional features, errors occurred. Table 7 presents examples where the incorporation of emotional features led to incorrect stance detection results. Upon analyzing these instances, it was found that the misclassified text commonly contained sarcasm, particularly in discussions about topic 4. In addition to sarcasm, the unfriendly tone in some comments increased the difficulty of detecting stance after integrating emotional features, which impacted the model’s accuracy. This is because the sentiment expressed in these texts does not align with their actual stance, and sarcastic or unfriendly tones (e.g., the use of negative sentiment words, harsh language, or mocking expressions) often carry layered emotions and intentions. The model struggled to accurately determine the stance in these cases due to the ambiguous or complex emotional content.
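The cases described above, correct before integrating emotional features and incorrect afterwards, can be collected automatically for manual inspection. The following is a minimal sketch with hypothetical names (`find_regressions`, `count_by_gold`); it assumes parallel lists of texts, gold labels, and the predictions of the two models.

```python
from collections import Counter


def find_regressions(texts, gold, pred_before, pred_after):
    """Return examples the baseline got right and the new model got wrong."""
    regressions = []
    for text, g, before, after in zip(texts, gold, pred_before, pred_after):
        if before == g and after != g:
            regressions.append(
                {"text": text, "gold": g, "before": before, "after": after}
            )
    return regressions


def count_by_gold(regressions):
    """Count regressions per gold stance label to see which class suffers."""
    return Counter(item["gold"] for item in regressions)
```

Reading through the returned examples is how patterns such as sarcasm or a hostile tone would typically be identified; the code only narrows the set of texts to review.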

Table 7.

Some examples of incorrect detection results after integrating emotional features.

4.4.3. Quantitative Analysis

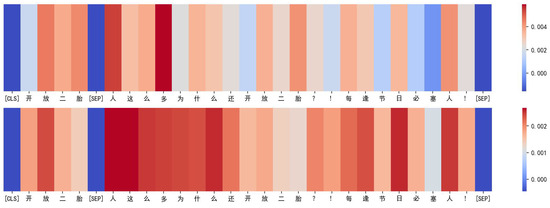

To further analyze the differences in stance detection before and after incorporating emotional features, the attention weights from the last layer of the RoBERTa model were extracted for comparison. The results are shown in Figure 12.

Figure 12.

The attention weights of the example in the hybrid network model and the multi-task learning model. The upper panel depicts the attention weights of the example in the hybrid network model, while the lower panel depicts the attention weights of the same example in the multi-task learning model. The example translated into English is “[CLS]Open second child[SEP] Why are so many people still opening second child?! Every festival is crowded! [SEP]”.

From Figure 12, it can be observed that after integrating emotional features and applying multi-task learning, the attention weight distribution in the RoBERTa layer changed for the same piece of text. Before adding sentiment information, the hybrid network mainly focused on the words “人” (“people”) and “多” (“many”), which led to the stance detection result of opposition. However, after incorporating emotional features, the model paid attention to a more comprehensive range of information, including sentiment-laden phrases such as “人为什么这么多” (“Why are there so many people”) and “每逢” (“whenever”), indicating that the proposed multi-task learning method effectively integrates stance and sentiment information. This allows the model to extract more comprehensive semantic information, thereby improving stance detection performance.
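A comparison of this kind can be reduced to ranking tokens by attention weight. The sketch below is illustrative only: the paper does not state how the last-layer weights were aggregated, so averaging over heads and reading the row of the `[CLS]` token (index 0) is an assumption, and the function names are hypothetical. The input is a nested list of shape heads × sequence × sequence, such as one example's last-layer attention converted to plain Python lists.

```python
def cls_row_mean(attn):
    """Average the [CLS] row (query index 0) over all attention heads.

    attn[h][i][j] is the weight that query token i puts on key token j
    in head h.
    """
    num_heads = len(attn)
    seq_len = len(attn[0][0])
    return [
        sum(attn[h][0][j] for h in range(num_heads)) / num_heads
        for j in range(seq_len)
    ]


def top_tokens(attn, tokens, k=3, skip=("[CLS]", "[SEP]")):
    """Return the k (token, weight) pairs with the highest weight.

    Special tokens are skipped; ties keep the original token order.
    Positions are tracked by index so repeated tokens are not merged.
    """
    weights = cls_row_mean(attn)
    ranked = sorted(
        (i for i in range(len(tokens)) if tokens[i] not in skip),
        key=lambda i: (-weights[i], i),
    )
    return [(tokens[i], weights[i]) for i in ranked[:k]]
```

Running `top_tokens` on the same sentence for both models and comparing the two rankings gives a compact, text-only counterpart to the heat maps.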

5. Conclusions

As social media continues to grow rapidly, user-generated content is increasing exponentially, containing rich personal opinions, emotions, and stances. Stance detection technology can automatically identify the emotional tone and stance within texts, providing valuable data support for areas such as public opinion monitoring, brand reputation management, and market research. It plays a crucial role in social network analysis. This paper proposes a multi-task learning stance detection method that integrates emotional features to achieve the joint learning of stance detection and sentiment analysis. The text is first passed through the RoBERTa pre-trained model to extract basic semantic features, which are then passed to an Attention-Linear layer to extract deeper fused features of stance and sentiment. These fused features are subsequently passed to both the stance detection and sentiment analysis modules. By incorporating sentiment analysis as an auxiliary task in multi-task learning, the model is guided to perform the stance detection task more effectively, and the experimental results verify the practical effectiveness of this approach.

The experimental results indicate that incorporating emotional features enhances stance detection performance in many cases, particularly for texts with clear emotional tones. Jointly modeling sentiment and stance provides richer contextual understanding, which can improve social network security by more accurately identifying harmful content such as hate speech or misinformation. However, the results also show that when each topic is trained separately on limited data, integrating emotional features through multi-task learning can degrade stance detection performance. This is particularly evident for texts containing sarcasm or strong emotional tones, where prediction errors may occur. To address this challenge, future work needs to consider the multi-dimensionality and complexity of sentiment more carefully and focus on developing more efficient multi-task learning models, especially for application environments with smaller data volumes.

Author Contributions

Conceptualization, Q.P. and F.L.; methodology, Q.P., F.H. and F.L.; validation, F.H. and F.L.; formal analysis, F.H. and F.L.; writing—original draft preparation, F.H. and F.L.; writing—review and editing, Q.P., J.W. and S.J.; supervision, Q.P.; project administration, Q.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Social Science Fund of China (Grant No. 20BGL251) and the National Natural Science Foundation of China (Grant No. 52105167).

Data Availability Statement

The NLPCC2016-task4 dataset used to support the findings of this study is openly available at http://tcci.ccf.org.cn/conference/2016/pages/page05_evadata.html (accessed on 10 January 2024). The weibo_senti_100k dataset used to support the findings of this study is openly available at https://github.com/SophonPlus/ChineseNlpCorpus (accessed on 2 February 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alturayeif, N.; Luqman, H.; Ahmed, M. A systematic review of machine learning techniques for stance detection and its applications. Neural Comput. Appl. 2023, 35, 5113–5144.

- Küçük, D.; Can, F. A tutorial on stance detection. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, Tempe, AZ, USA, 21–25 February 2022; pp. 1626–1628.

- AlDayel, A.; Magdy, W. Stance detection on social media: State of the art and trends. Inf. Process. Manag. 2021, 58, 102597.

- Zhang, Y.; Liu, J.; Zuo, X. Survey of multi-task learning. Chin. J. Comput. 2020, 43, 1340–1378.

- Cao, R.; Luo, X.; Xi, Y.; Qiao, Y. Stance detection for online public opinion awareness: An overview. Int. J. Intell. Syst. 2022, 37, 11944–11965.

- Ghosh, S.; Singhania, P.; Singh, S.; Rudra, K. Stance Detection in Web and Social Media: A Comparative Study. In Proceedings of the Tenth International Conference of the CLEF Association (CLEF2019), Lugano, Switzerland, 9–12 September 2019; pp. 75–87.

- Li, Y.; Sun, Y.; Jing, W. Survey of text stance detection. J. Comput. Res. Dev. 2021, 58, 2538–2557.

- Gómez-Suta, M.; Echeverry-Correa, J.; Soto-Mejía, J.A. Stance detection in tweets: A topic modeling approach supporting explainability. Expert Syst. Appl. 2023, 214, 119046.

- Nababan, A.H.; Mahendra, R.; Budi, I. Twitter stance detection towards Job Creation Bill. Procedia Comput. Sci. 2022, 197, 76–81.

- Al-Ghadir, A.I.; Azmi, A.M.; Hussain, A. A novel approach to stance detection in social media tweets by fusing ranked lists and sentiments. Inf. Fusion 2021, 67, 29–40.

- Dian, Y.; Jin, Q.; Wu, H. Stance detection in Chinese microblogs via fusing multiple text features. Comput. Eng. Appl. 2017, 53, 77–84.

- Liu, C.; Li, W.; Demarest, B.; Chen, Y.; Couture, S.; Dakota, D.; Haduong, N.; Kaufman, N.; Lamont, A.; Pancholi, M.; et al. IUCL at SemEval-2016 Task 6: An ensemble model for stance detection in Twitter (SemEval-2016). In Proceedings of the Tenth International Workshop on Semantic Evaluation, San Diego, CA, USA, 16–17 June 2016; pp. 394–400.

- Sobhani, P.; Mohammad, S.; Kiritchenko, S. Detecting stance in tweets and analyzing its interaction with sentiment. In Proceedings of the Fifth Joint Conference on Lexical and Computational Semantics, Berlin, Germany, 11–12 August 2016; pp. 159–169.

- Fu, Y.; Li, X.; Li, Y.; Wang, S.; Li, D.; Liao, J.; Zheng, J. Incorporate opinion-towards for stance detection. Knowl.-Based Syst. 2022, 246, 108657.

- Baly, R.; Mohtarami, M.; Glass, J.; Màrquez, L.; Moschitti, A.; Nakov, P. Integrating stance detection and fact checking in a unified corpus. arXiv 2018, arXiv:1804.08012.

- Zhang, H.; Li, Y.; Zhu, T.; Li, C. Commonsense-based adversarial learning framework for zero-shot stance detection. Neurocomputing 2024, 563, 126943.

- Umer, M.; Imtiaz, Z.; Ullah, S.; Mehmood, A.; Choi, G.S.; On, B. Fake news stance detection using deep learning architecture (CNN-LSTM). IEEE Access 2020, 8, 156695–156706.

- Karande, H.; Walambe, R.; Benjamin, V.; Kotecha, K.; Raghu, T. Stance detection with BERT embeddings for credibility analysis of information on social media. PeerJ Comput. Sci. 2021, 7, e467.

- Ng, L.H.X.; Carley, K.M. Is my stance the same as your stance? A cross validation study of stance detection datasets. Inf. Process. Manag. 2022, 59, 103070.

- Sun, Y.; Li, Y. Stance detection with knowledge enhanced BERT. In Proceedings of the First CAAI International Conference on Artificial Intelligence, Hangzhou, China, 5–6 June 2021; pp. 239–250.

- Li, Y.; Sun, Y.; Zhu, N. BERTtoCNN: Similarity-preserving enhanced knowledge distillation for stance detection. PLoS ONE 2021, 16, e0257130.

- Pham, H.T.; Han, S. Natural language processing with multitask classification for semantic prediction of risk-handling actions in construction contracts. J. Comput. Civ. Eng. 2023, 37, 04023027.

- Alturayeif, N.; Luqman, H.; Ahmed, M. Enhancing stance detection through sequential weighted multi-task learning. Soc. Netw. Anal. Min. 2023, 14, 7.

- Upadhyaya, A.; Fisichella, M.; Nejdl, W. A multi-task model for sentiment aided stance detection of climate change tweets. In Proceedings of the Seventeenth International AAAI Conference on Web and Social Media, Limassol, Cyprus, 5–8 June 2023; pp. 854–865.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the Thirty-First International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Devlin, J. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Liu, Y. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- Swets, J.A. Information retrieval systems. Science 1963, 141, 245–250.

- van Rijsbergen, C.J. Information Retrieval, 2nd ed.; Butterworths: London, UK, 1979.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. arXiv 2014, arXiv:1408.5882.

- Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of tricks for efficient text classification. arXiv 2016, arXiv:1607.01759.

- Du, J.; Xu, R.; He, Y.; Gui, L. Stance classification with target-specific neural attention networks. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence (IJCAI 2017), Melbourne, Australia, 19–25 August 2017; pp. 3988–3994.

- Yue, T.; Zhang, S.; Yang, L.; Lin, H.; Yu, K. Stance Detection Method Based on Two-Stage Attention Mechanism. J. Guangxi Norm. Univ. (Nat. Sci. Ed.) 2019, 37, 42–49.

- Wang, A.; Huang, K.; Lu, L. Stance detection in Chinese microblogs via BERT-Condition-CNN model. Comput. Syst. Appl. 2019, 28, 45–53.

- Zhang, C.; Hao, J.; Liu, X.; Sun, Y. Research on stance detection in Chinese micro-blog based on CNN-BiLSTM. Comput. Technol. Dev. 2020, 30, 154–159.

- Yang, S.; Li, Y.; Zhao, Q. Stance detection method of Chinese micro-blog based on GCN and BiLSTM. J. Chongqing Univ. Technol. (Nat. Sci.) 2020, 34, 167–173.

- Geng, Y.; Zhang, S.; Zhang, Y.; Lin, H.; Yang, L. Sentiment-enhanced Weibo stance detection based on convolutional attention. J. Shanxi Univ. (Nat. Sci. Ed.) 2022, 45, 302–312.

- Wang, T.; Yuan, J.; Qi, R.; Li, Y. Multi-type knowledge-enhanced microblog stance detection model. J. Guangxi Norm. Univ. (Nat. Sci. Ed.) 2024, 42, 79–90.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).