AGProto: Adaptive Graph ProtoNet towards Sample Adaption for Few-Shot Malware Classification

Abstract

1. Introduction

- Adaptive Prototyping via Graph Neural Networks: We introduce a novel approach where class prototypes dynamically adapt through Graph Neural Networks, enhancing representations and robustness by aligning prototypes closely with the features of input samples.

- Consistency Loss: We innovatively integrate the cosine similarity of predictions from various prototypes into the loss function, ensuring consistent predictions across different prototypes for a given query sample, thereby improving the model’s overall stability and accuracy.

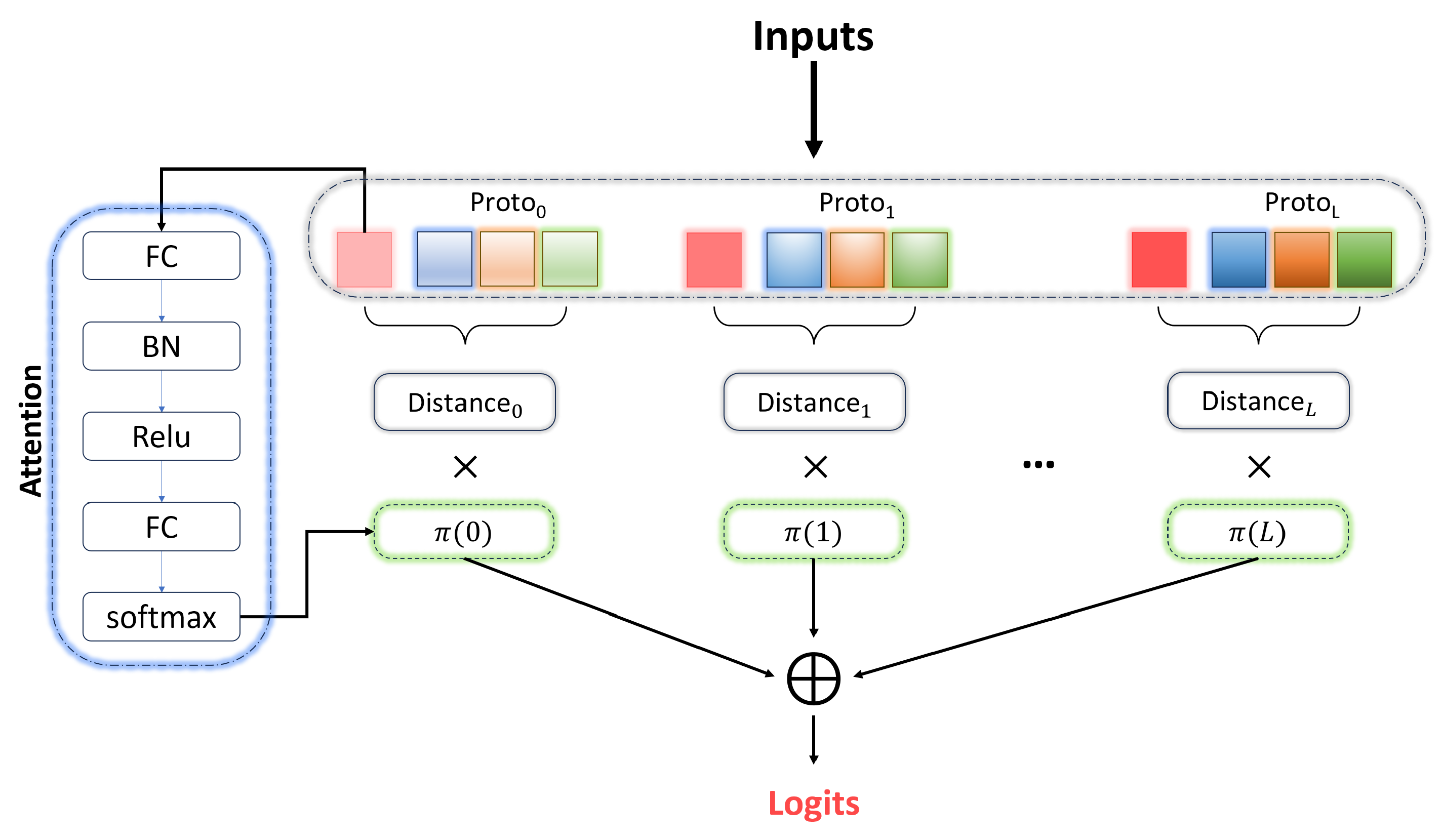

- Attention-Based Dynamic ProtoNet: We propose an innovative mechanism that generates multiple weighted prototypes per sample, utilizing an attention framework to dynamically adjust the influence of each prototype based on the sample’s unique characteristics, thereby enhancing the model’s precision and adaptability.
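As a rough illustration of the consistency idea in the contribution list above, the sketch below scores how much the class-probability vectors produced by different prototype sets disagree for one query. The function names, the "1 minus cosine similarity" form, and the averaging over all pairs are assumptions made for illustration; the exact loss used by AGProto is not reproduced here.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def consistency_loss(predictions):
    """Mean (1 - cosine similarity) over all pairs of prediction vectors.

    `predictions` holds one class-probability vector per prototype set,
    all for the same query sample (hypothetical layout).
    """
    pairs = list(combinations(predictions, 2))
    if not pairs:
        return 0.0
    return sum(1.0 - cosine(p, q) for p, q in pairs) / len(pairs)


# Identical predictions give a loss near zero; conflicting ones give a larger loss.
agree = [[0.7, 0.2, 0.1], [0.7, 0.2, 0.1]]
disagree = [[0.9, 0.05, 0.05], [0.05, 0.9, 0.05]]
print(consistency_loss(agree), consistency_loss(disagree))
```

In training, a term of this kind would typically be added to the classification loss with a weighting factor, which is also an assumption here.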

2. Related Work

2.1. Malware Image

2.2. Few-Shot Learning

2.3. GNN in Few-Shot Learning

3. Method

3.1. Problem Definition

3.2. Convert Malware to Image

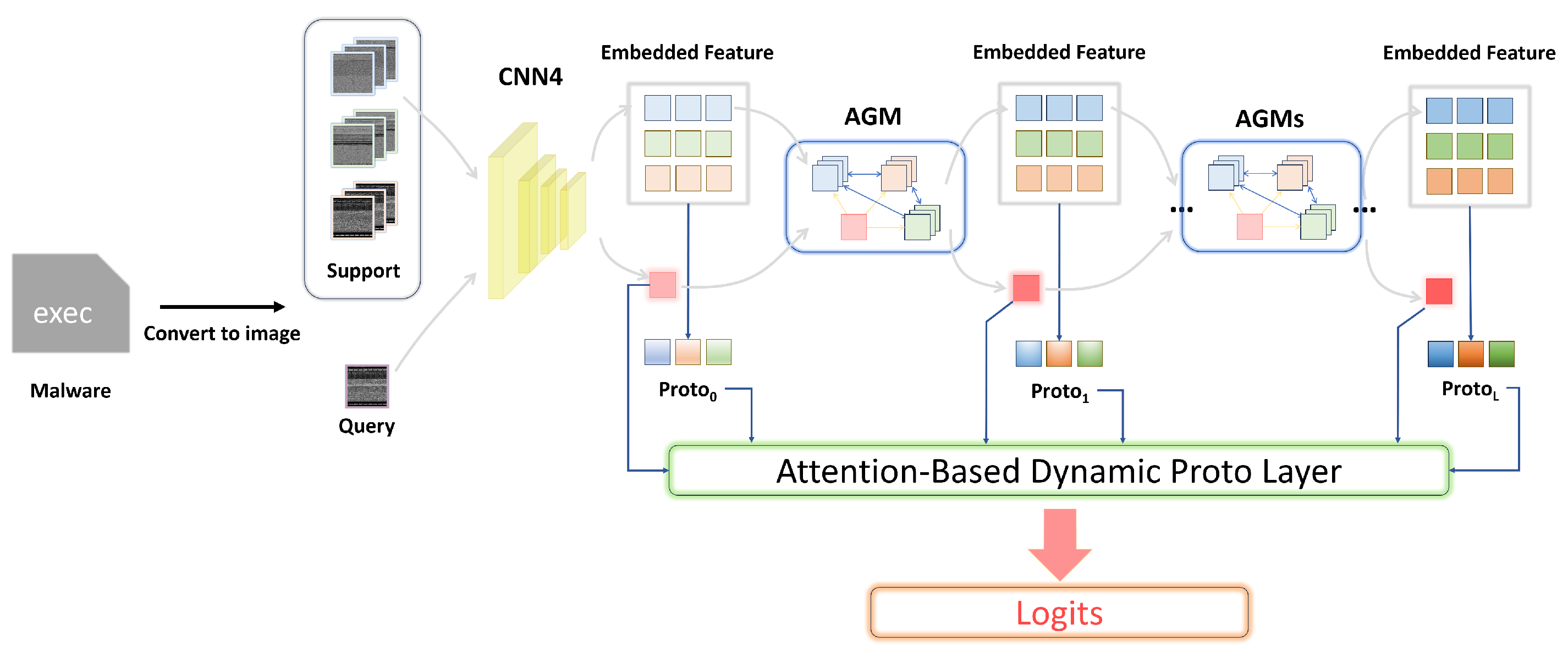

3.3. Proposed Framework

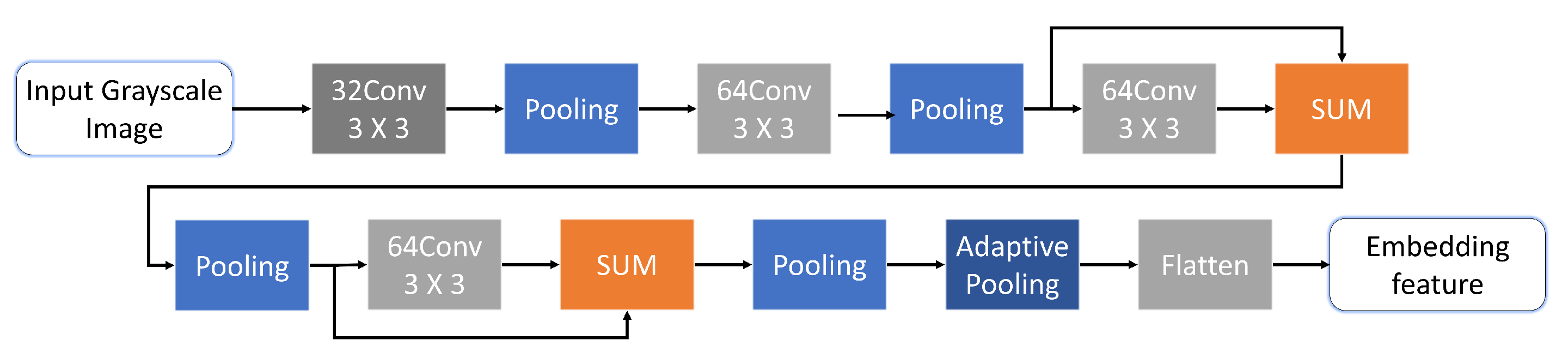

- Embedding Module: This module projects input samples from the support set and the query set into an initial feature space. The feature representation of each support-set sample is computed by an embedding function implemented with a CNN4 architecture, and query-set samples are processed in the same way.

- Adaptive Graph Modules: These modules map the samples from both the support and query sets into new feature spaces. Assuming there are n Adaptive Graph Modules in the framework, they are indexed by i, which ranges from 1 to n.

- Prototype Aggregation Layer: This layer is tasked with obtaining a prototype for each class within every feature space, based on the features of the support set. The prototypes serve as representative points for each class in the feature space.

- Attention-Based Dynamic Proto-Layer: This component adaptively calculates the weights of the prototypes in each feature space based on the features of the query and then aggregates these to output the final class probabilities.
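The last two components in the list above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes that a prototype is the mean support embedding of a class (the ProtoNet convention), that per-space class probabilities come from a softmax over negative squared Euclidean distances, and that the attention scores are supplied from outside (in the model they would be derived from the query features by a learned layer). All function and parameter names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_prototypes(support, labels, n_classes):
    """Mean support embedding per class (ProtoNet-style assumption)."""
    dim = len(support[0])
    sums = [[0.0] * dim for _ in range(n_classes)]
    counts = [0] * n_classes
    for vec, y in zip(support, labels):
        counts[y] += 1
        for d, x in enumerate(vec):
            sums[y][d] += x
    return [[s / counts[c] for s in sums[c]] for c in range(n_classes)]


def space_probs(query, prototypes):
    """Class probabilities in one feature space from distances to prototypes."""
    dists = [sum((q - p) ** 2 for q, p in zip(query, proto)) for proto in prototypes]
    return softmax([-d for d in dists])


def dynamic_proto_predict(queries, prototype_sets, attention_scores):
    """Attention-weighted mix of the per-space class probabilities.

    queries[i] and prototype_sets[i] belong to feature space i;
    attention_scores[i] is a hypothetical query-dependent score for space i.
    """
    weights = softmax(attention_scores)
    per_space = [space_probs(q, p) for q, p in zip(queries, prototype_sets)]
    n_classes = len(per_space[0])
    return [sum(w * probs[c] for w, probs in zip(weights, per_space))
            for c in range(n_classes)]


# Two feature spaces, two classes, two support samples per class.
protos_a = class_prototypes([[0, 0], [0, 2], [4, 4], [6, 4]], [0, 0, 1, 1], 2)
protos_b = class_prototypes([[1, 0], [3, 0], [0, 5], [0, 7]], [0, 0, 1, 1], 2)
probs = dynamic_proto_predict([[1, 1], [2, 1]], [protos_a, protos_b], [0.5, 1.5])
print(probs)
```

Because the weights sum to one and each per-space vector is a probability distribution, the mixed output is again a distribution over classes.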

Algorithm 1. AGProto for Few-Shot Malware Classification

3.3.1. Embedding Module

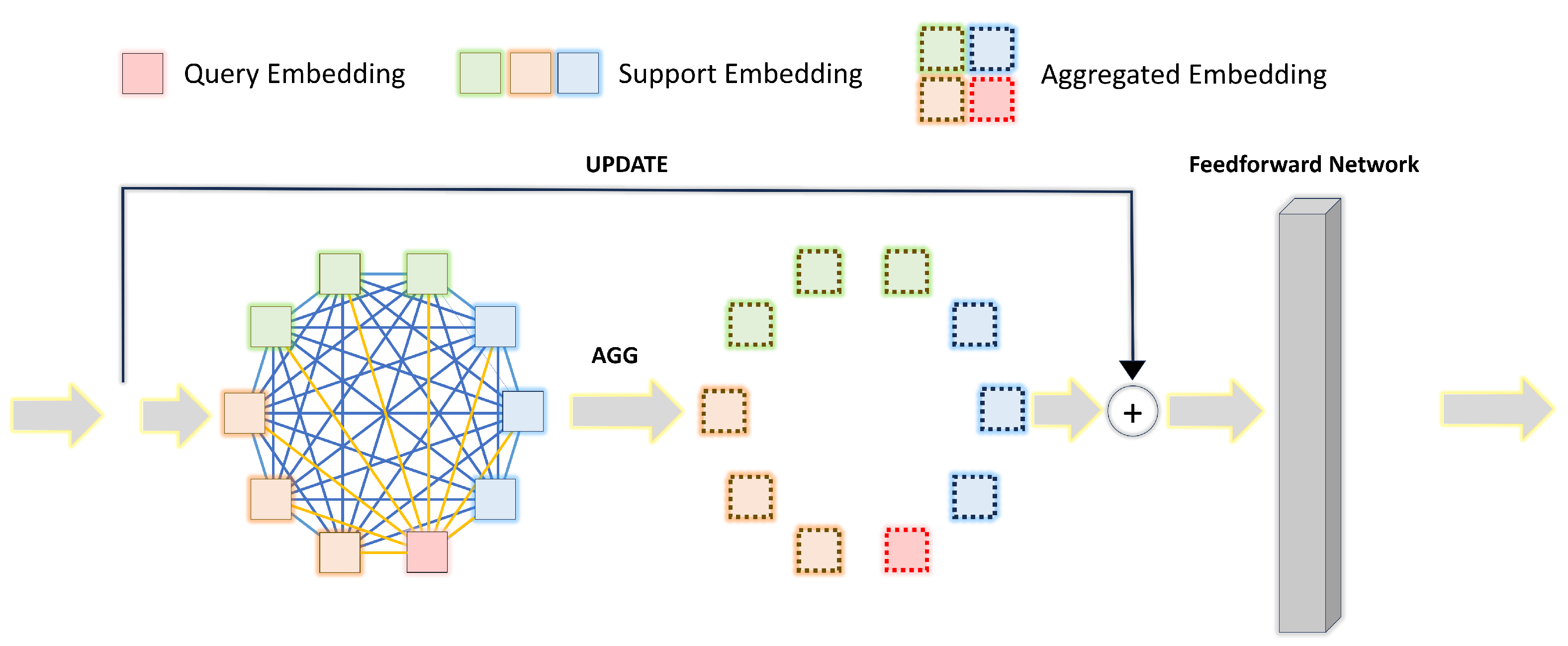

3.3.2. Adaptive Graph Module

- (1) Graph Construction

- (2) Message Passing
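The two steps named above follow the usual pattern of graph neural networks, which the sketch below illustrates in generic form. It is not the paper's formulation: the dense similarity graph, the softmax normalisation of edge weights, the absence of learned parameters, and the `mix` blending factor are all assumptions chosen to keep the example small.

```python
import math


def build_adjacency(nodes):
    """Step (1): dense, row-normalised adjacency from pairwise distances.

    Closer nodes get larger edge weights; self-loops get weight zero.
    Requires at least two nodes.
    """
    n = len(nodes)
    adj = []
    for i in range(n):
        # Similarity score = negative squared distance; skip the self-loop.
        scores = {
            j: -sum((a - b) ** 2 for a, b in zip(nodes[i], nodes[j]))
            for j in range(n) if j != i
        }
        top = max(scores.values())
        exps = {j: math.exp(s - top) for j, s in scores.items()}
        total = sum(exps.values())
        adj.append([exps.get(j, 0.0) / total for j in range(n)])
    return adj


def message_passing(nodes, adj, mix=0.5):
    """Step (2): blend each node with the weighted mean of its neighbours."""
    dim = len(nodes[0])
    updated = []
    for i, node in enumerate(nodes):
        neigh = [sum(adj[i][j] * nodes[j][d] for j in range(len(nodes)))
                 for d in range(dim)]
        updated.append([(1 - mix) * x + mix * nb for x, nb in zip(node, neigh)])
    return updated


# Support and query embeddings would be stacked into one node list.
nodes = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
adjacency = build_adjacency(nodes)
print(message_passing(nodes, adjacency))
```

After one step, nearby nodes pull each other closer while distant nodes have little influence, which is the intuition behind adapting sample features to their episode.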

3.3.3. Proto Aggregation Layer

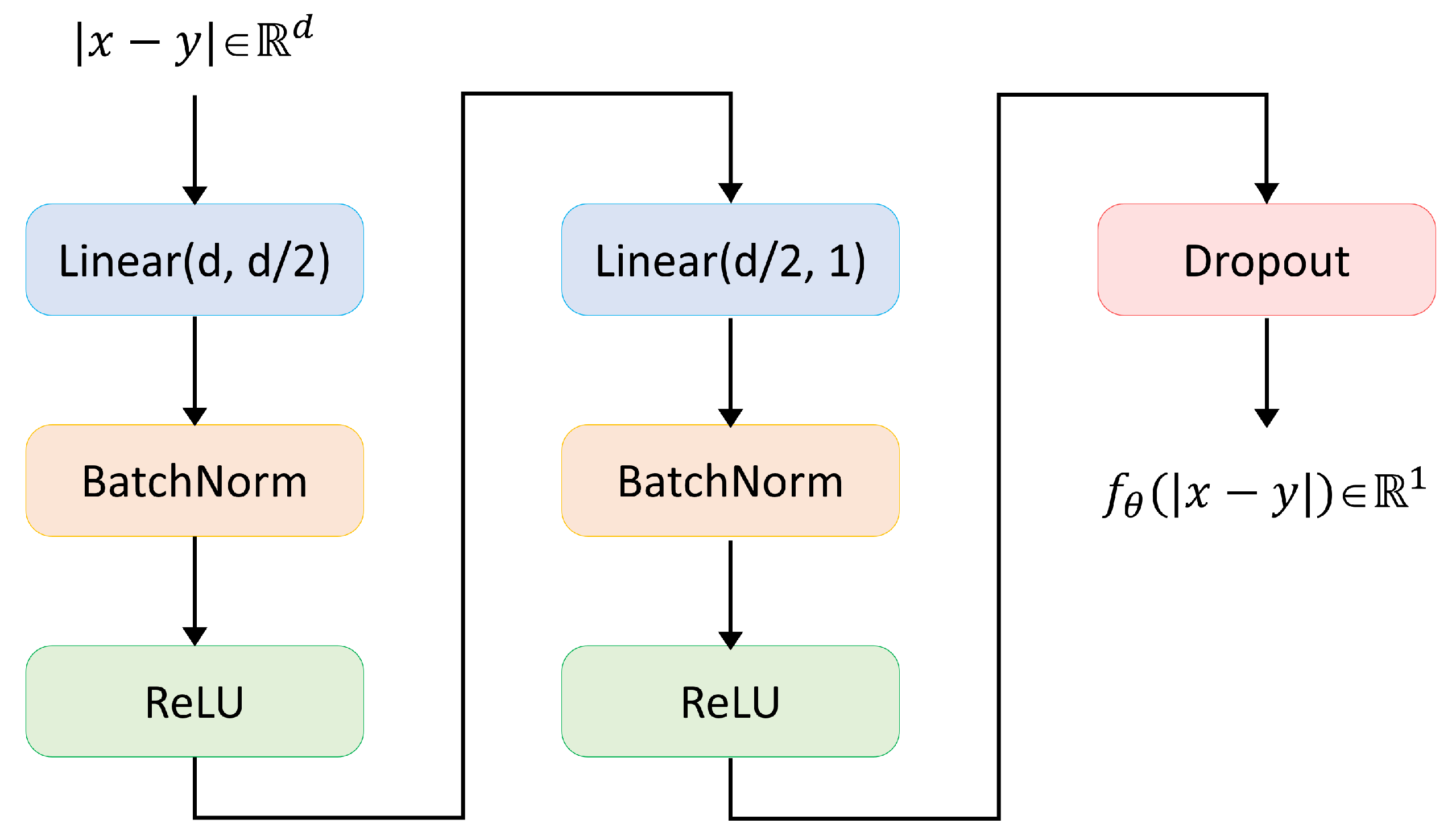

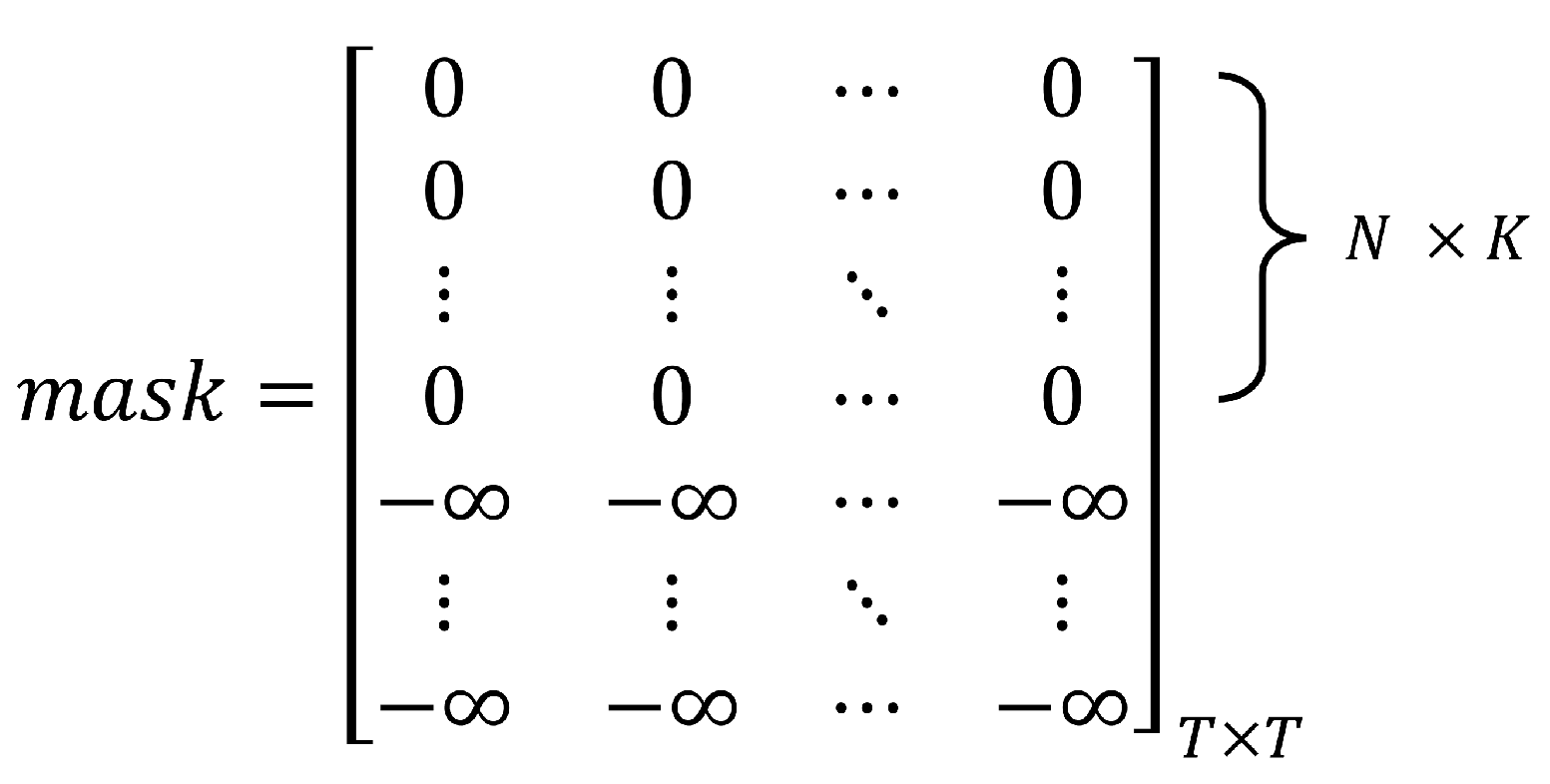

3.3.4. Attention-Based Dynamic Proto-Layer

3.4. Optimization

4. Results

4.1. Datasets

4.2. Baseline Models

- Classic Machine-Learning Methods
  - Gist+KNN [36]: A classic machine-learning method for few-shot malware classification that uses Gist descriptors to capture the spatial structure of an image as features for the K-Nearest Neighbors algorithm.
  - Pixel+KNN: This method directly uses the normalized pixel values of images as features for the K-Nearest Neighbors algorithm.

- Optimization-Based Methods
  - MAML [16]: Model-Agnostic Meta Learning is designed to adapt rapidly to new tasks with minimal data. It prepares the model with an initialization that is sensitive to changes in the task, allowing adaptation in just a few gradient updates.
  - GAP [19]: This approach enhances the inner-loop optimization in meta learning by using a Geometry-Adaptive Preconditioner.

- Metric-Based Methods
  - ProtoNet [20]: Prototypical networks learn a metric space in which classification can be performed by computing distances to prototype representations of each class.
  - RelationNet [21]: Employs a learnable relation module to compare query and few-shot support images.
  - ConvProtoNet [22]: This model enhances the standard ProtoNet by incorporating a convolutional induction module.
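To make the simplest baseline in the list above concrete, the following sketch classifies a query image by majority vote among its nearest support images, using normalized pixel values as features. The value k = 3 is an assumption suggested by the "KNN3" label in the results tables, and the Euclidean distance, the flattened-image layout, and the function names are illustrative choices.

```python
from collections import Counter


def normalize(pixels):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def knn_predict(query, support, labels, k=3):
    """Majority vote among the k nearest support samples (squared Euclidean)."""
    q = normalize(query)
    dists = []
    for pixels, label in zip(support, labels):
        s = normalize(pixels)
        dists.append((sum((a - b) ** 2 for a, b in zip(q, s)), label))
    dists.sort(key=lambda item: item[0])
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


# Toy episode: flattened 4-pixel "images" from two hypothetical families.
support = [
    [0, 0, 0, 0], [10, 5, 0, 0], [5, 10, 0, 5],
    [250, 255, 240, 255], [255, 250, 255, 245], [240, 240, 250, 250],
]
labels = ["a", "a", "a", "b", "b", "b"]
print(knn_predict([8, 8, 2, 2], support, labels))
```

A Gist+KNN variant would keep the same voting step and replace `normalize` with a Gist descriptor extractor.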

4.3. Experiment Setup

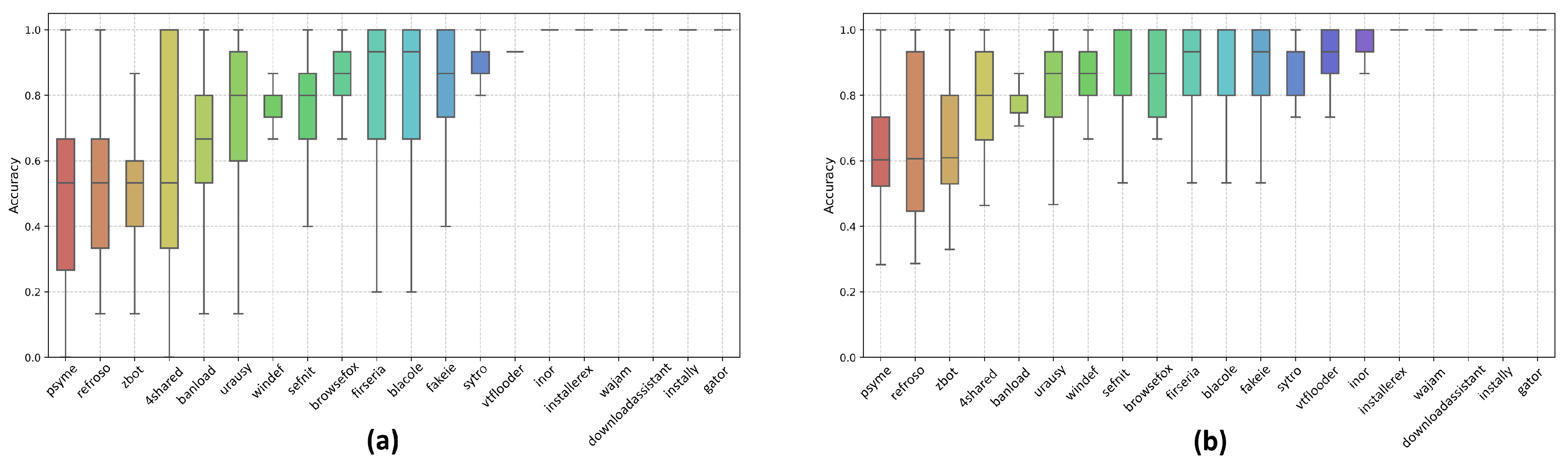

4.4. Experiment Results

4.5. Performance Analysis

5. Discussion

5.1. Why AGProto Works

5.2. Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kaspersky. Kaspersky Security Bulletin 2023. Available online: https://media.kasperskycontenthub.com/wp-content/uploads/sites/43/2023/11/28102415/KSB_statistics_2023_en.pdf (accessed on 7 January 2024).

2. Yan, S.; Ren, J.; Wang, W.; Sun, L.; Zhang, W.; Yu, Q. A Survey of Adversarial Attack and Defense Methods for Malware Classification in Cyber Security. IEEE Commun. Surv. Tutor. 2023, 25, 467–496.

3. Ucci, D.; Aniello, L.; Baldoni, R. Survey of machine learning techniques for malware analysis. Comput. Secur. 2019, 81, 123–147.

4. Gibert, D.; Mateu, C.; Planes, J. The rise of machine learning for detection and classification of malware: Research developments, trends and challenges. J. Netw. Comput. Appl. 2020, 153, 102526.

5. Liu, L.; Wang, B.S.; Yu, B.; Zhong, Q.X. Automatic malware classification and new malware detection using machine learning. Front. Inf. Technol. Electron. Eng. 2017, 18, 1336–1347.

6. Cakir, B.; Dogdu, E. Malware classification using deep learning methods. In Proceedings of the ACMSE 2018 Conference, Richmond, KY, USA, 29–31 March 2018; pp. 1–5.

7. Kalash, M.; Rochan, M.; Mohammed, N.; Bruce, N.D.; Wang, Y.; Iqbal, F. Malware classification with deep convolutional neural networks. In Proceedings of the 2018 9th IFIP International Conference on New Technologies, Mobility and Security (NTMS), Paris, France, 26–28 February 2018; pp. 1–5.

8. Pascanu, R.; Stokes, J.W.; Sanossian, H.; Marinescu, M.; Thomas, A. Malware classification with recurrent networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 1916–1920.

9. Li, F.; Fergus, R.; Perona, P. One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 594–611.

10. Nataraj, L.; Karthikeyan, S.; Jacob, G.; Manjunath, B.S. Malware images: Visualization and automatic classification. In Proceedings of the 8th International Symposium on Visualization for Cyber Security, Pittsburgh, PA, USA, 20 July 2011; pp. 1–7.

11. Vasan, D.; Alazab, M.; Wassan, S.; Safaei, B.; Zheng, Q. Image-Based malware classification using ensemble of CNN architectures (IMCEC). Comput. Secur. 2020, 92, 101748.

12. Kancherla, K.; Mukkamala, S. Image visualization based malware detection. In Proceedings of the 2013 IEEE Symposium on Computational Intelligence in Cyber Security (CICS), Singapore, 16–19 April 2013; pp. 40–44.

13. Venkatraman, S.; Alazab, M.; Vinayakumar, R. A hybrid deep learning image-based analysis for effective malware detection. J. Inf. Secur. Appl. 2019, 47, 377–389.

14. Vasan, D.; Alazab, M.; Wassan, S.; Naeem, H.; Safaei, B.; Zheng, Q. IMCFN: Image-based malware classification using fine-tuned convolutional neural network architecture. Comput. Netw. 2020, 171, 107138.

15. Cui, Z.; Zhao, Y.; Cao, Y.; Cai, X.; Zhang, W.; Chen, J. Malicious code detection under 5G HetNets based on a multi-objective RBM model. IEEE Netw. 2021, 35, 82–87.

16. Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

17. Nichol, A.; Achiam, J.; Schulman, J. On first-order meta-learning algorithms. arXiv 2018, arXiv:1803.02999.

18. Rajeswaran, A.; Finn, C.; Kakade, S.M.; Levine, S. Meta-Learning with Implicit Gradients. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

19. Kang, S.; Hwang, D.; Eo, M.; Kim, T.; Rhee, W. Meta-Learning with a Geometry-Adaptive Preconditioner. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16080–16090.

20. Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-Shot Learning. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

21. Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

22. Tang, Z.; Wang, P.; Wang, J. ConvProtoNet: Deep prototype induction towards better class representation for few-shot malware classification. Appl. Sci. 2020, 10, 2847.

23. Wang, P.; Tang, Z.; Wang, J. A novel few-shot malware classification approach for unknown family recognition with multi-prototype modeling. Comput. Secur. 2021, 106, 102273.

24. Garcia, V.; Bruna, J. Few-shot learning with graph neural networks. arXiv 2017, arXiv:1711.04043.

25. Gidaris, S.; Komodakis, N. Generating classification weights with gnn denoising autoencoders for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 21–30.

26. Zhou, F.; Cao, C.; Zhang, K.; Trajcevski, G.; Zhong, T.; Geng, J. Meta-gnn: On few-shot node classification in graph meta-learning. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 2357–2360.

27. Wang, N.; Luo, M.; Ding, K.; Zhang, L.; Li, J.; Zheng, Q. Graph few-shot learning with attribute matching. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Online, 19–23 October 2020; pp. 1545–1554.

28. Tang, S.; Chen, D.; Bai, L.; Liu, K.; Ge, Y.; Ouyang, W. Mutual crf-gnn for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2329–2339.

29. Yu, T.; He, S.; Song, Y.Z.; Xiang, T. Hybrid graph neural networks for few-shot learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 3179–3187.

30. Vinyals, O.; Blundell, C.; Lillicrap, T.; Wierstra, D. Matching Networks for One Shot Learning. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part IV 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 630–645.

32. Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

33. Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039.

34. VirusTotal. Virustotal-Free Online Virus, Malware and Url Scanner. Available online: https://www.virustotal.com/en (accessed on 7 January 2024).

35. Sebastián, M.; Rivera, R.; Kotzias, P.; Caballero, J. Avclass: A tool for massive malware labeling. In Proceedings of the Research in Attacks, Intrusions, and Defenses: 19th International Symposium, RAID 2016, Paris, France, 19–21 September 2016; Proceedings 19. Springer: Berlin/Heidelberg, Germany, 2016; pp. 230–253.

36. Yajamanam, S.; Selvin, V.R.S.; Di Troia, F.; Stamp, M. Deep Learning versus Gist Descriptors for Image-based Malware Classification. In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP 2018), Madeira, Portugal, 22–24 January 2018; pp. 553–561.

| File Size Range | Image Width |
|---|---|
| <10 kB | 32 |
| 10 kB–30 kB | 64 |
| 30 kB–60 kB | 128 |
| 60 kB–100 kB | 256 |
| 100 kB–200 kB | 384 |
| 200 kB–500 kB | 512 |
| 500 kB–1000 kB | 768 |
| >1000 kB | 1024 |
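The width lookup in the table above, which belongs to the malware-to-image conversion of Section 3.2, can be sketched as follows. Several details are assumptions rather than statements from the paper: 1 kB is taken as 1024 bytes, each range is treated as excluding its upper bound, each byte becomes one grayscale pixel, and the last row is zero-padded. The function names are hypothetical.

```python
import math

# (exclusive upper bound in kB, image width) pairs taken from the table above.
WIDTH_TABLE = [(10, 32), (30, 64), (60, 128), (100, 256),
               (200, 384), (500, 512), (1000, 768)]
MAX_WIDTH = 1024  # width used for files above the last bound


def image_width(size_bytes):
    """Pick the image width for a file of the given size."""
    size_kb = size_bytes / 1024  # assumption: 1 kB = 1024 bytes
    for upper_kb, width in WIDTH_TABLE:
        if size_kb < upper_kb:
            return width
    return MAX_WIDTH


def bytes_to_image(data):
    """Reshape raw bytes into rows of grayscale pixel values (0-255).

    The final row is padded with zeros (the padding policy is an assumption).
    """
    width = image_width(len(data))
    height = math.ceil(len(data) / width)
    padded = data + bytes(height * width - len(data))
    return [list(padded[r * width:(r + 1) * width]) for r in range(height)]


# A 100-byte input falls in the smallest range, giving a 4 x 32 image.
image = bytes_to_image(bytes(range(100)))
print(len(image), len(image[0]))
```

In practice the resulting image would usually be resized to a fixed resolution before being passed to the embedding network, which is outside the scope of this sketch.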

| Model/Task | 5-Way-5-Shot | 5-Way-10-Shot | 20-Way-5-Shot | 20-Way-10-Shot |
|---|---|---|---|---|
| Gist+KNN3 * | 69.07 ± 2.81 | 75.18 ± 2.53 | 55.51 ± 1.60 | 61.82 ± 1.58 |
| pixel KNN3 * | 63.60 ± 0.94 | 67.39 ± 0.96 | 42.49 ± 0.58 | 45.22 ± 0.42 |
| MAML | 83.11 ± 0.02 | 86.20 ± 0.02 | - | - |
| GAP | 83.19 ± 0.03 | 85.96 ± 0.03 | - | - |
| ProtoNet * | 79.57 ± 0.22 | 82.63 ± 0.20 | 63.31 ± 0.12 | 65.00 ± 0.11 |
| RelationNet * | 74.98 ± 0.23 | 77.28 ± 0.23 | 53.13 ± 0.12 | 57.48 ± 0.13 |
| ConvProtoNet * | 83.34 ± 0.13 | 86.63 ± 0.13 | 68.56 ± 0.08 | 71.38 ± 0.08 |
| Dynamic Conv | 82.59 ± 0.20 | 84.72 ± 0.19 | 69.27 ± 0.11 | 72.25 ± 0.12 |
| Ours | 86.08 ± 0.18 | 89.22 ± 0.16 | 72.09 ± 0.11 | 75.03 ± 0.10 |

| Model/Task | 5-Way-5-Shot | 5-Way-10-Shot | 20-Way-5-Shot | 20-Way-10-Shot |
|---|---|---|---|---|
| Gist+KNN3 | 74.30 ± 0.22 | 80.60 ± 0.19 | 61.12 ± 0.06 | 68.36 ± 0.05 |
| pixel KNN3 | 63.59 ± 0.27 | 69.28 ± 0.24 | 49.79 ± 0.08 | 55.57 ± 0.06 |
| MAML | 83.32 ± 0.02 | 85.06 ± 0.02 | - | - |
| GAP | 83.07 ± 0.02 | 86.20 ± 0.02 | - | - |
| ProtoNet | 81.78 ± 0.20 | 84.24 ± 0.17 | 67.60 ± 0.05 | 71.01 ± 0.06 |
| RelationNet | 78.25 ± 0.22 | 80.14 ± 0.19 | 65.29 ± 0.04 | 67.03 ± 0.04 |
| ConvProtoNet | 83.39 ± 0.19 | 85.87 ± 0.18 | 67.52 ± 0.06 | 71.52 ± 0.06 |
| Dynamic Conv | 83.40 ± 0.18 | 86.34 ± 0.17 | 68.43 ± 0.06 | 72.25 ± 0.06 |
| Ours | 86.15 ± 0.19 | 88.80 ± 0.17 | 71.13 ± 0.05 | 74.72 ± 0.05 |

| Model/Task | 5-Way-5-Shot | 5-Way-10-Shot | 20-Way-5-Shot | 20-Way-10-Shot |
|---|---|---|---|---|
| LargePE + AGProto | 82.13 ± 0.19 | 85.04 ± 0.18 | 66.34 ± 0.04 | 68.86 ± 0.05 |
| VirusShare + AGProto | 80.65 ± 0.25 | 83.10 ± 0.22 | 65.93 ± 0.13 | 68.12 ± 0.13 |

| Model | Parameters | Model Size | Training Speed | Inference Speed | Memory Usage |
|---|---|---|---|---|---|
| ProtoNet | 95.49 k | 390.3 KB | 38.21 it/s | 99.78 it/s | 2163 MB |
| RelationNet | 143.18 k | 601.59 KB | 37.34 it/s | 97.66 it/s | 2219 MB |
| ConvProtoNet | 106.4 k | 439.6 KB | 37.83 it/s | 98.57 it/s | 2345 MB |
| Dynamic Conv | 373.66 k | 1.50 MB | 28.64 it/s | 82.46 it/s | 2027 MB |
| AGProto (Ours) | 852.61 k | 6.65 MB | 27.37 it/s | 80.75 it/s | 2415 MB |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Lin, T.; Wu, H.; Wang, P. AGProto: Adaptive Graph ProtoNet towards Sample Adaption for Few-Shot Malware Classification. Electronics 2024, 13, 935. https://doi.org/10.3390/electronics13050935