Enhanced Crowd Dynamics Simulation with Deep Learning and Improved Social Force Model

Abstract

1. Introduction

- Introduction of a generic multi-agent simulation model that combines deep learning with the social force model. The model learns group-behavior features from real data while its motion remains constrained by social-force dynamics. Compared with the conventional social force model, our model achieves better simulation results across multiple evaluation metrics without requiring frequent parameter adjustment.

- Preservation of the key parameters of the HiDAC model [13] within the model architecture. By adjusting these parameters, our approach can flexibly simulate high-density crowds and a variety of crowd behaviors. This shows that integrating deep learning with traditional crowd-simulation models, through a carefully designed architecture, is feasible and preserves the strengths of the classical models.

- Introduction of a novel training mechanism that improves the generalization of the crowd simulation. Instead of learning directly from natural crowd-behavior data, the model is trained on modified natural crowd data, which yields simulations with lower collision rates and more generalizable crowd behaviors.
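
The first contribution pairs a learned component with social-force dynamics. This section does not give the formulation, so the following is only a minimal sketch under stated assumptions. It uses the exponential pairwise repulsion common in later social force variants, not the elliptical potential of the original model [12]. It treats the network output as a hypothetical residual acceleration `residual_fn` added to the social force, and its parameter values (`v0`, `tau`, `A`, `B`, `radius`) are illustrative rather than the paper's.

```python
import math
from typing import Callable, List, Tuple

Vec = Tuple[float, float]
# Hypothetical learned term: maps (positions, velocities, goals) to per-agent accelerations.
ResidualFn = Callable[[List[Vec], List[Vec], List[Vec]], List[Vec]]


def social_force_acc(pos: List[Vec], vel: List[Vec], goals: List[Vec],
                     v0: float = 1.3, tau: float = 0.5, A: float = 2.0,
                     B: float = 0.3, radius: float = 0.3) -> List[Vec]:
    """Per-unit-mass social force: goal-driving term plus exponential agent repulsion."""
    acc: List[Vec] = []
    for i, (p, v, g) in enumerate(zip(pos, vel, goals)):
        # Driving term: relax toward speed v0 along the unit vector to the goal within time tau.
        gx, gy = g[0] - p[0], g[1] - p[1]
        dist = math.hypot(gx, gy) or 1e-9
        ax = (v0 * gx / dist - v[0]) / tau
        ay = (v0 * gy / dist - v[1]) / tau
        # Repulsion A * exp((r_i + r_j - d_ij) / B), directed from agent j toward agent i.
        for j, q in enumerate(pos):
            if j == i:
                continue
            dx, dy = p[0] - q[0], p[1] - q[1]
            d = math.hypot(dx, dy) or 1e-9
            mag = A * math.exp((2 * radius - d) / B)
            ax += mag * dx / d
            ay += mag * dy / d
        acc.append((ax, ay))
    return acc


def hybrid_step(pos: List[Vec], vel: List[Vec], goals: List[Vec],
                residual_fn: ResidualFn, dt: float = 0.1) -> Tuple[List[Vec], List[Vec]]:
    """Semi-implicit Euler step driven by social force plus a (hypothetical) learned residual."""
    sfm = social_force_acc(pos, vel, goals)
    res = residual_fn(pos, vel, goals)
    # Update velocities first, then move agents with the new velocities.
    new_vel = [(v[0] + dt * (a[0] + r[0]), v[1] + dt * (a[1] + r[1]))
               for v, a, r in zip(vel, sfm, res)]
    new_pos = [(p[0] + dt * v[0], p[1] + dt * v[1]) for p, v in zip(pos, new_vel)]
    return new_pos, new_vel
```

Passing `lambda p, v, g: [(0.0, 0.0)] * len(p)` as `residual_fn` reduces the step to a plain social-force update, which gives a convenient baseline when comparing against a trained residual.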

2. Related Work

2.1. Rule-Based Crowd Simulation Methods

2.2. Application of Deep Learning Methods in Crowd Tasks

3. Method

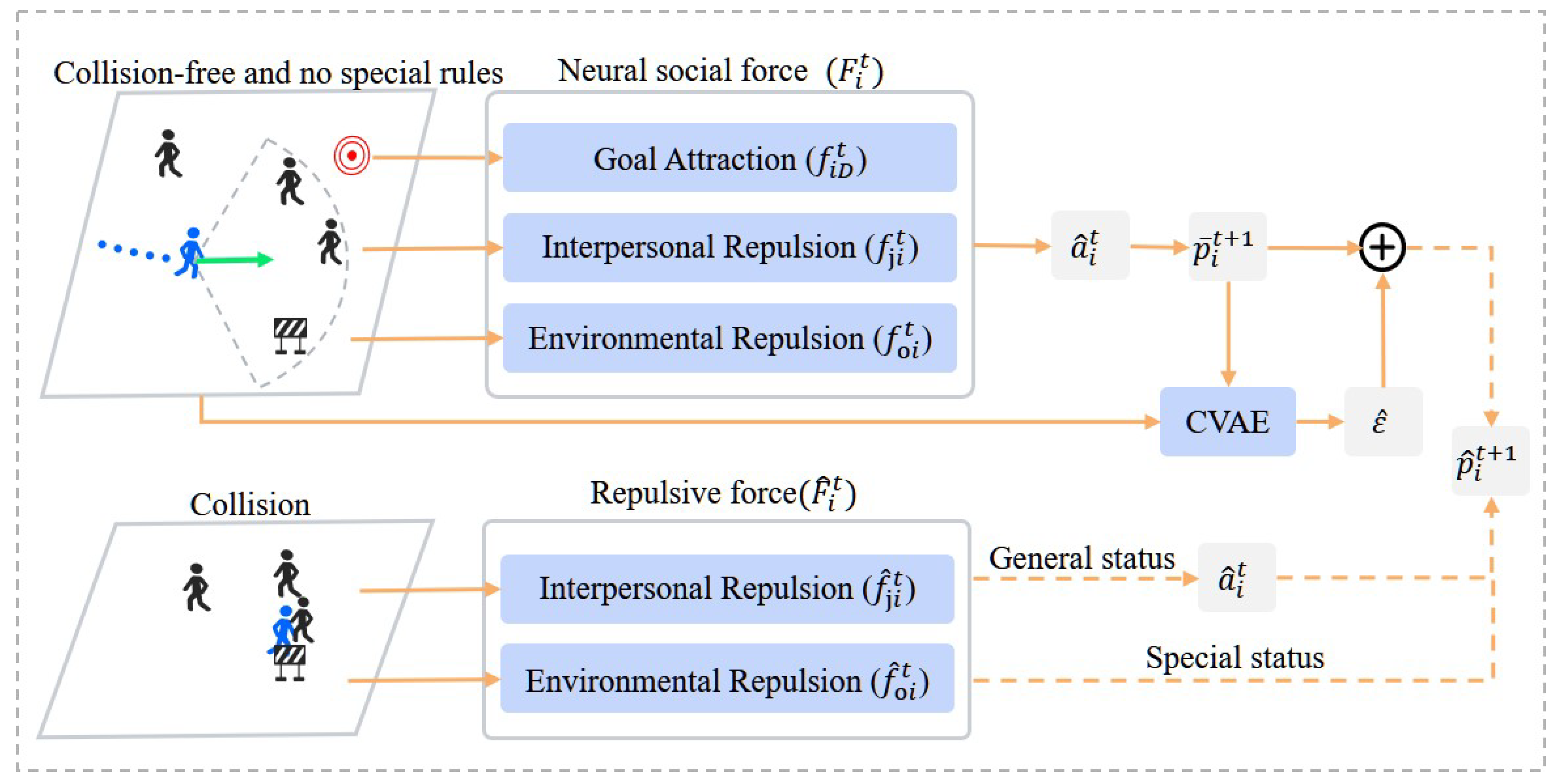

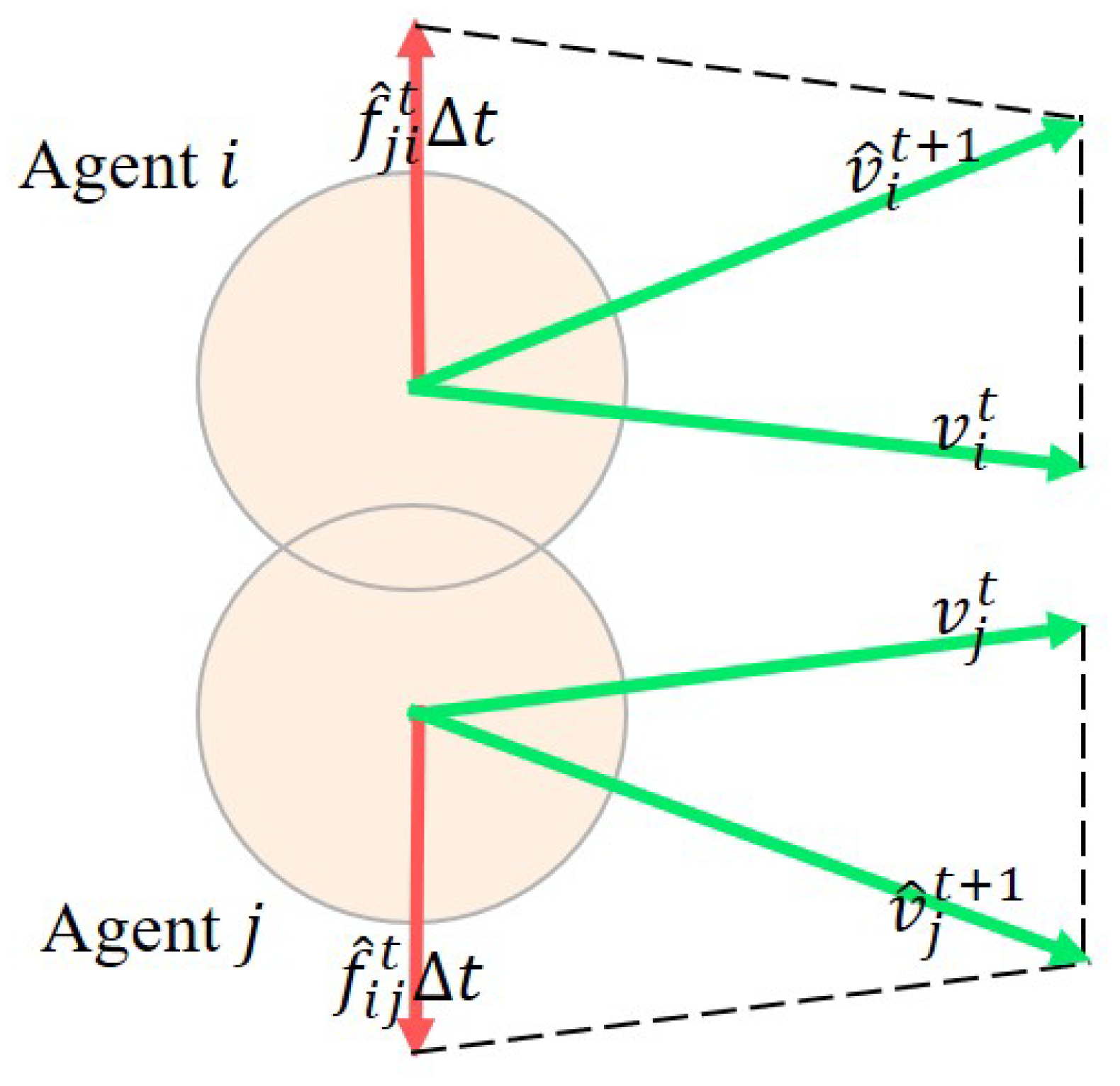

3.1. Physical Structure

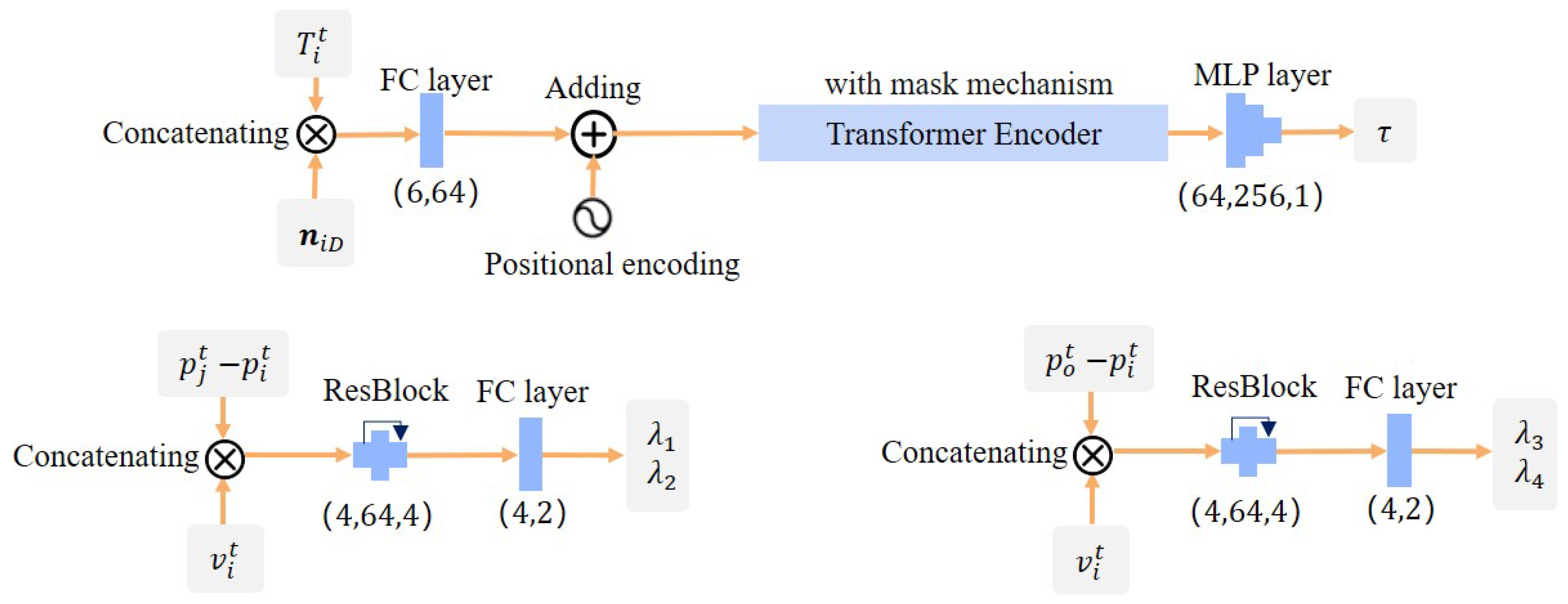

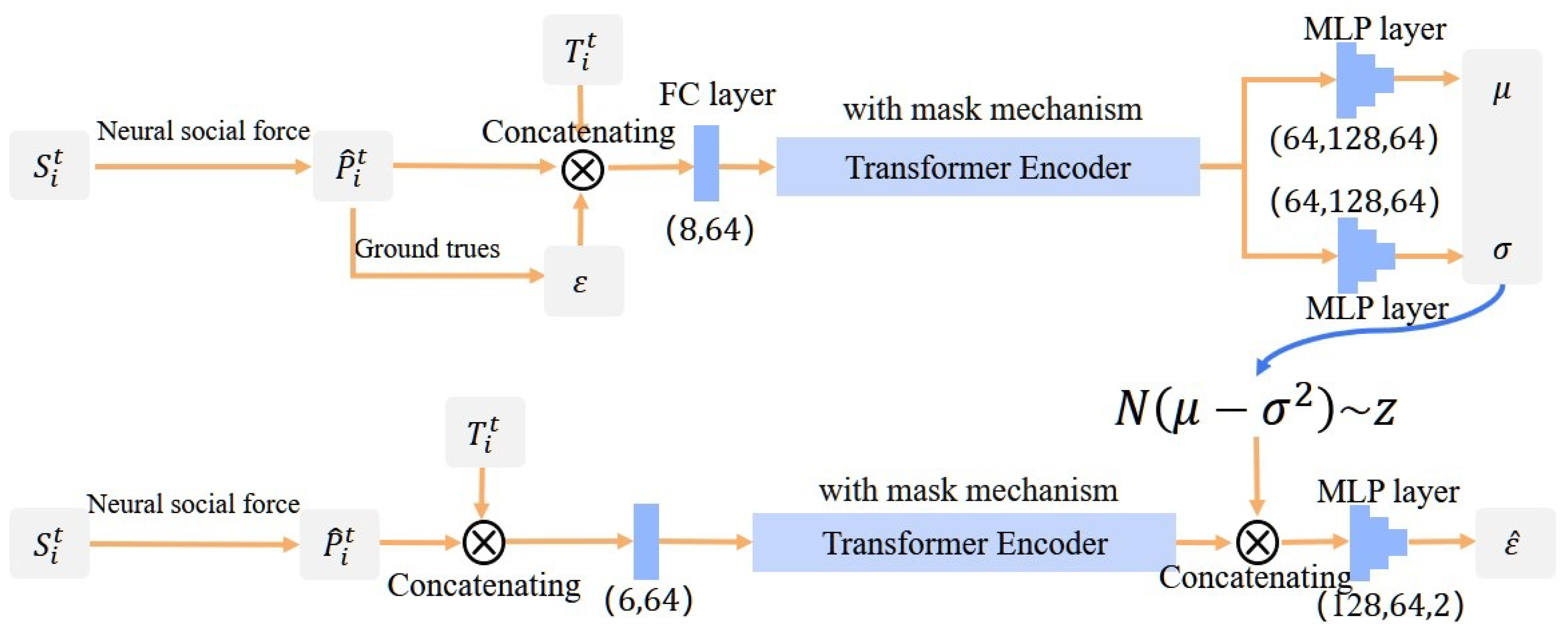

3.2. Neural Network Structure

3.3. Model Optimization Strategy

4. Experiments

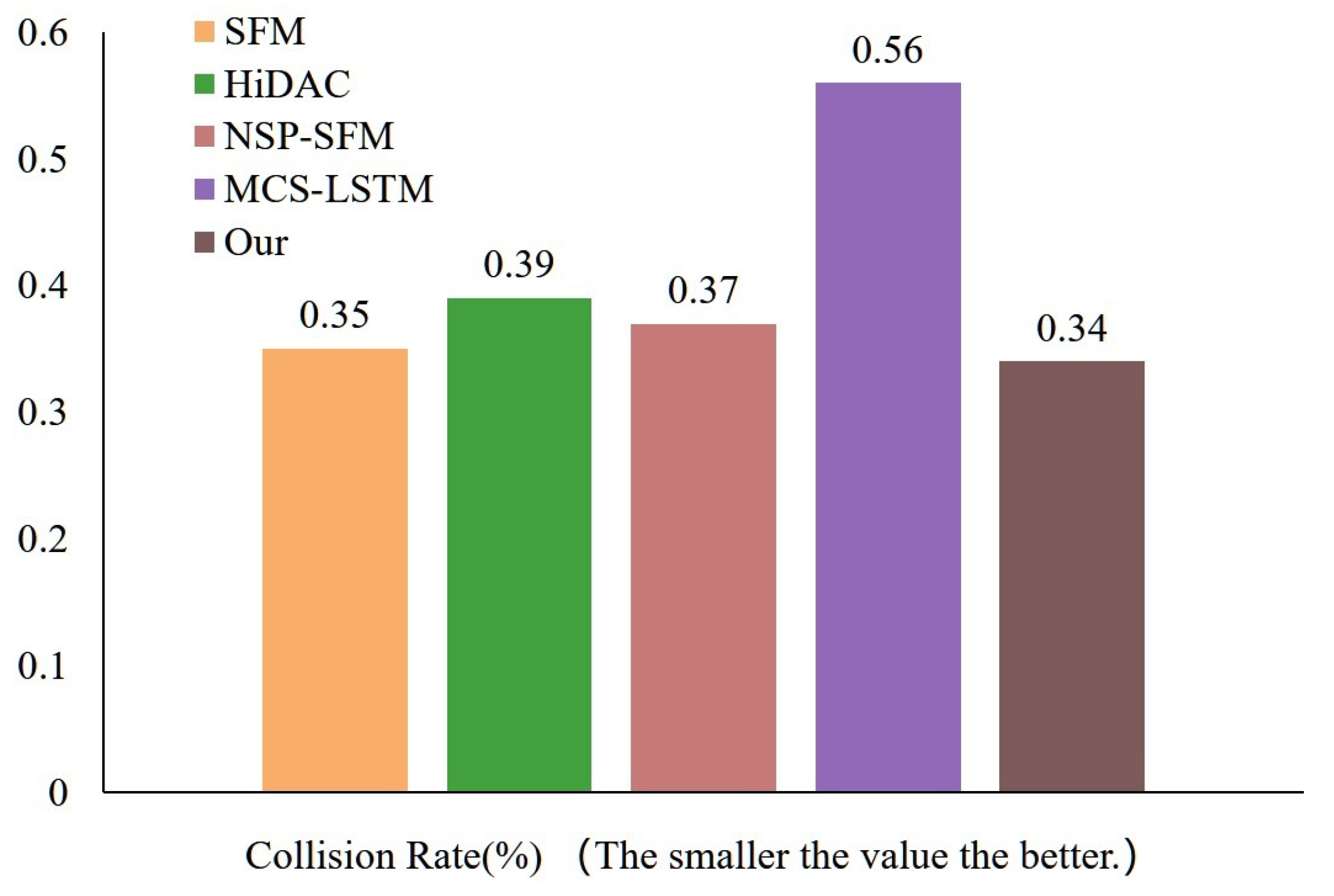

4.1. Performance Analysis

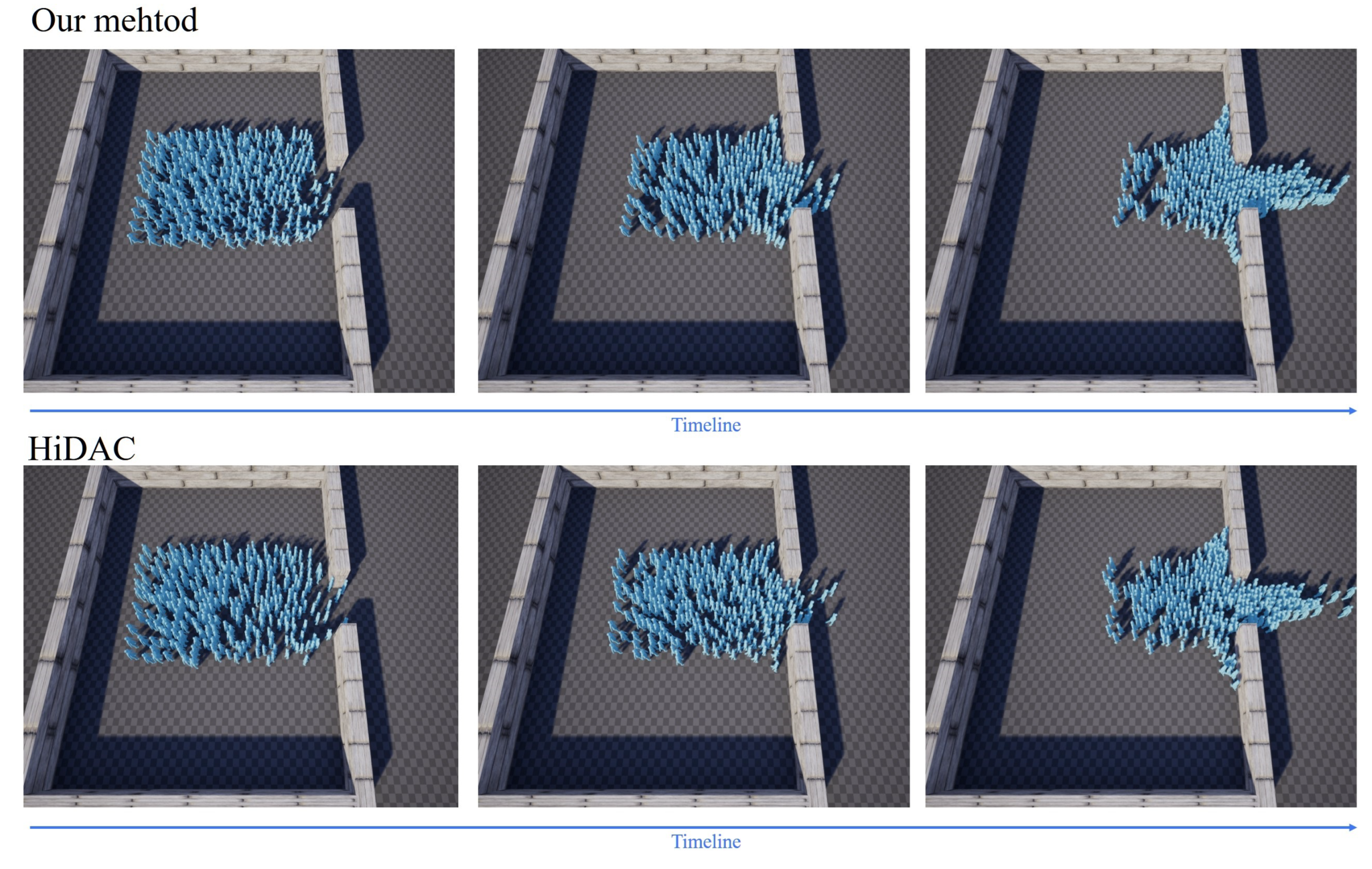

4.2. Trajectory Analysis

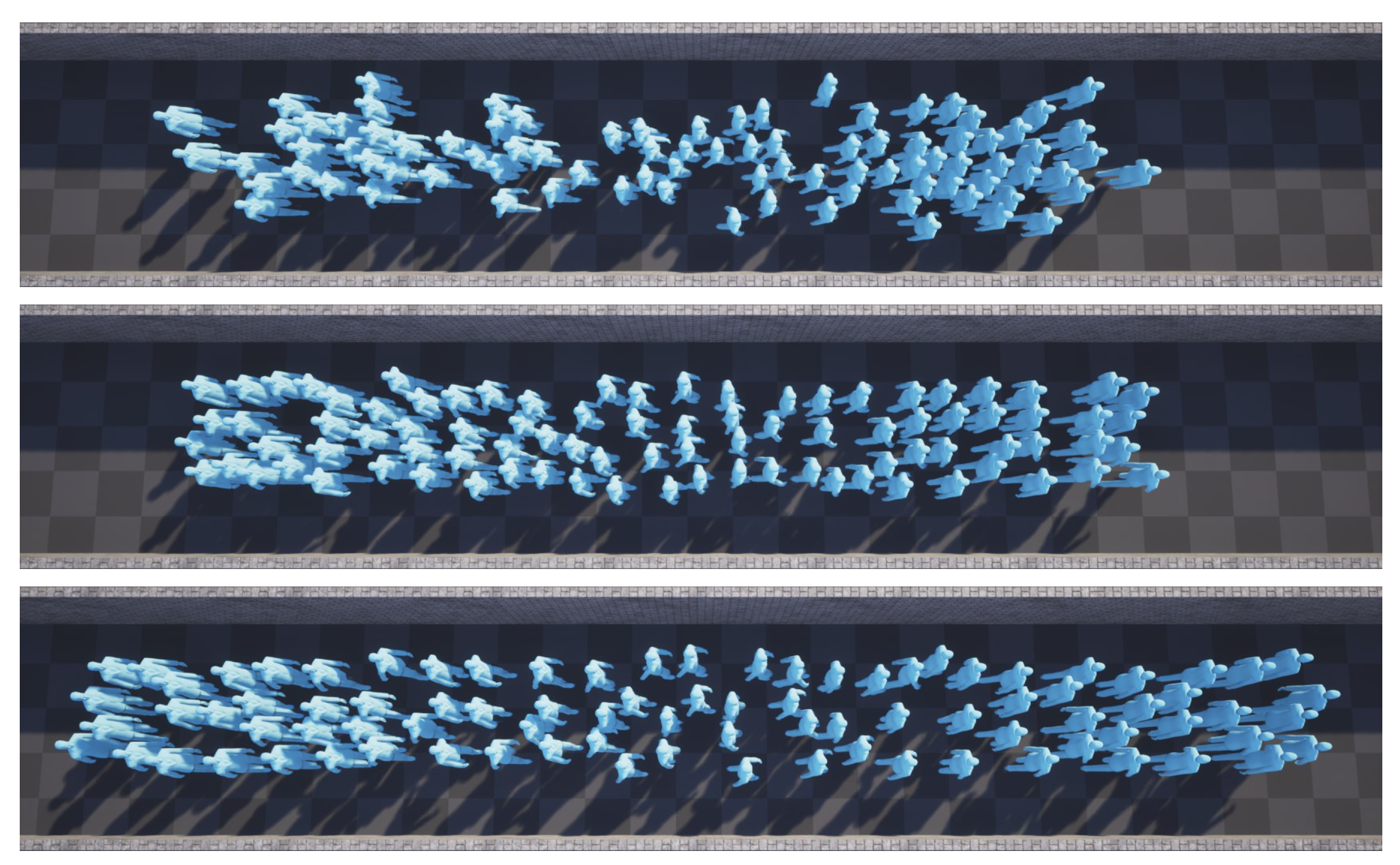

4.3. Simulation of Different Behaviors of Crowds

4.4. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Basori, A.H.; Malebary, S.J.; Firdausiah Mansur, A.B.; Tenriawaru, A.; Yusof, N.; Yunianta, A.; Barukab, O.M. Intelligent Socio-Emotional Control of Pedestrian Crowd behaviour inside Smart City. Procedia Comput. Sci. 2021, 182, 80–88.

2. Zhang, J.; Jin, D.; Li, Y. Mirage: An Efficient and Extensible City Simulation Framework (Systems Paper). In Proceedings of the SIGSPATIAL ’22: 30th International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 1–4 November 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1–4.

3. Zhang, J.; Zhu, J.; Dang, P.; Wu, J.; Zhou, Y.; Li, W.; Fu, L.; Guo, Y.; You, J. An improved social force model (ISFM)-based crowd evacuation simulation method in virtual reality with a subway fire as a case study. Int. J. Digit. Earth 2023, 16, 1186–1204.

4. Wu, W.; Li, J.; Yi, W.; Zheng, X. Modeling Crowd Evacuation via Behavioral Heterogeneity-Based Social Force Model. IEEE Trans. Intell. Transp. Syst. 2022, 23, 15476–15486.

5. Yao, Z.; Zhang, G.; Lu, D.; Liu, H. Data-driven crowd evacuation: A reinforcement learning method. Neurocomputing 2019, 366, 314–327.

6. Zhang, D.; Li, W.; Gong, J.; Zhang, G.; Liu, J.; Huang, L.; Liu, H.; Ma, H. Deep reinforcement learning and 3D physical environments applied to crowd evacuation in congested scenarios. Int. J. Digit. Earth 2023, 16, 691–714.

7. Deng, K.; Hu, X.; Li, M.; Chen, T. An extended social force model considering the psychological impact of the hazard source and its behavioural manifestation. Phys. A Stat. Mech. Its Appl. 2023, 627, 129127.

8. Haworth, B.; Usman, M.; Schaumann, D.; Chakraborty, N.; Berseth, G.; Faloutsos, P.; Kapadia, M. Gamification of Crowd-Driven Environment Design. IEEE Comput. Graph. Appl. 2021, 41, 107–117.

9. Nicolas, A.; Hassan, F.H. Social groups in pedestrian crowds: Review of their influence on the dynamics and their modelling. Transp. A Transp. Sci. 2023, 19, 1970651.

10. Lv, P.; Yu, Q.; Xu, B.; Li, C.; Zhou, B.; Xu, M. Emotional Contagion-Aware Deep Reinforcement Learning for Antagonistic Crowd Simulation. IEEE Trans. Affect. Comput. 2022, 1–15.

11. Berg, J.v.d.; Guy, S.J.; Lin, M.; Manocha, D. Reciprocal n-body collision avoidance. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2011; pp. 3–19.

12. Helbing, D.; Molnár, P. Social force model for pedestrian dynamics. Phys. Rev. E 1995, 51, 4282–4286.

13. Pelechano, N.; Allbeck, J.M.; Badler, N.I. Controlling Individual Agents in High-Density Crowd Simulation. In Proceedings of the SCA ’07: 2007 ACM SIGGRAPH/Eurographics Symposium on Computer Animation, San Diego, CA, USA, 2–4 August 2007; Eurographics Association: Goslar, Germany, 2007; pp. 99–108.

14. Lerner, A.; Chrysanthou, Y.; Lischinski, D. Crowds by Example. Comput. Graph. Forum 2007, 26, 655–664.

15. Charalambous, P.; Chrysanthou, Y. The PAG crowd: A graph based approach for efficient data-driven crowd simulation. Comput. Graph. Forum 2014, 33, 95–108.

16. Zhao, M.; Cai, W.; Turner, S.J. Clust: Simulating realistic crowd behaviour by mining pattern from crowd videos. Comput. Graph. Forum 2018, 37, 184–201.

17. Kim, S.; Bera, A.; Best, A.; Chabra, R.; Manocha, D. Interactive and adaptive data-driven crowd simulation. In Proceedings of the 2016 IEEE Virtual Reality (VR), Greenville, SC, USA, 19–23 March 2016; pp. 29–38.

18. Ren, J.; Xiang, W.; Xiao, Y.; Yang, R.; Manocha, D.; Jin, X. Heter-Sim: Heterogeneous Multi-Agent Systems Simulation by Interactive Data-Driven Optimization. IEEE Trans. Vis. Comput. Graph. 2021, 27, 1953–1966.

19. Zhao, Y.; Geraerts, R. Automatic Parameter Tuning via Reinforcement Learning for Crowd Simulation with Social Distancing. In Proceedings of the 2022 26th International Conference on Methods and Models in Automation and Robotics (MMAR), Międzyzdroje, Poland, 22–25 August 2022; pp. 87–92.

20. Yao, Z.; Zhang, G.; Lu, D.; Liu, H. Learning crowd behavior from real data: A residual network method for crowd simulation. Neurocomputing 2020, 404, 173–185.

21. Wei, X.; Lu, W.; Zhu, L.; Xing, W. Learning motion rules from real data: Neural network for crowd simulation. Neurocomputing 2018, 310, 125–134.

22. Song, X.; Han, D.; Sun, J.; Zhang, Z. A data-driven neural network approach to simulate pedestrian movement. Phys. A Stat. Mech. Its Appl. 2018, 509, 827–844.

23. Zhang, G.; Yu, Z.; Jin, D.; Li, Y. Physics-Infused Machine Learning for Crowd Simulation. In Proceedings of the KDD ’22: 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 14–18 August 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 2439–2449.

24. Amirian, J.; van Toll, W.; Hayet, J.B.; Pettré, J. Data-Driven Crowd Simulation with Generative Adversarial Networks. In Proceedings of the CASA ’19: 32nd International Conference on Computer Animation and Social Agents, Paris, France, 1–3 July 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 7–10.

25. Lin, X.; Liang, Y.; Zhang, Y.; Hu, Y.; Yin, B. IE-GAN: A data-driven crowd simulation method via generative adversarial networks. Multimed. Tools Appl. 2023, 1–34.

26. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2014; Volume 27.

27. Zhang, J.; Li, C.; Wang, C.; He, G. ORCANet: Differentiable multi-parameter learning for crowd simulation. Comput. Animat. Virtual Worlds 2023, 34, e2114.

28. Li, Y.; Mao, T.; Meng, R.; Yan, Q.; Wang, Z. DeepORCA: Realistic crowd simulation for varying scenes. Comput. Animat. Virtual Worlds 2022, 33, e2067.

29. Yang, S.; Li, T.; Gong, X.; Peng, B.; Hu, J. A review on crowd simulation and modeling. Graph. Model. 2020, 111, 101081.

30. Jiang, Y.Q.; Hu, Y.G.; Huang, X. Modeling pedestrian flow through a bottleneck based on a second-order continuum model. Phys. A Stat. Mech. Its Appl. 2022, 608, 128272.

31. Liang, H.; Du, J.; Wong, S. A Continuum model for pedestrian flow with explicit consideration of crowd force and panic effects. Transp. Res. Part B Methodol. 2021, 149, 100–117.

32. Narain, R.; Golas, A.; Curtis, S.; Lin, M.C. Aggregate Dynamics for Dense Crowd Simulation. In Proceedings of the SIGGRAPH Asia ’09: ACM SIGGRAPH Asia 2009 Papers, Yokohama, Japan, 16–19 December 2009; Association for Computing Machinery: New York, NY, USA, 2009; pp. 1–8.

33. Tsai, T.Y.; Wong, S.K.; Chou, Y.H.; Lin, G.W. Directing virtual crowds based on dynamic adjustment of navigation fields. Comput. Animat. Virtual Worlds 2018, 29, e1765.

34. Kim, S.; Guy, S.J.; Hillesland, K.; Zafar, B.; Gutub, A.A.A.; Manocha, D. Velocity-based modeling of physical interactions in dense crowds. Vis. Comput. 2015, 31, 541–555.

35. Hughes, R.; Ondřej, J.; Dingliana, J. DAVIS: Density-Adaptive Synthetic-Vision Based Steering for Virtual Crowds. In Proceedings of the MIG ’15: 8th ACM SIGGRAPH Conference on Motion in Games, Paris, France, 16–18 November 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 79–84.

36. Dutra, T.B.; Marques, R.; Cavalcante-Neto, J.; Vidal, C.A.; Pettré, J. Gradient-based steering for vision-based crowd simulation algorithms. Comput. Graph. Forum 2017, 36, 337–348.

37. Ma, Y.; Lee, E.W.M.; Yuen, R.K.K. An Artificial Intelligence-Based Approach for Simulating Pedestrian Movement. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3159–3170.

38. Salzmann, T.; Ivanovic, B.; Chakravarty, P.; Pavone, M. Trajectron++: Dynamically-Feasible Trajectory Forecasting with Heterogeneous Data. In Proceedings of the Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 683–700.

39. Song, X.; Chen, K.; Li, X.; Sun, J.; Hou, B.; Cui, Y.; Zhang, B.; Xiong, G.; Wang, Z. Pedestrian Trajectory Prediction Based on Deep Convolutional LSTM Network. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3285–3302.

40. Mohamed, A.; Qian, K.; Elhoseiny, M.; Claudel, C. Social-STGCNN: A Social Spatio-Temporal Graph Convolutional Neural Network for Human Trajectory Prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

41. Wang, Z.; Guo, J.; Hu, Z.; Zhang, H.; Zhang, J.; Pu, J. Lane Transformer: A High-Efficiency Trajectory Prediction Model. IEEE Open J. Intell. Transp. Syst. 2023, 4, 2–13.

42. Lv, Z.; Huang, X.; Cao, W. An improved GAN with transformers for pedestrian trajectory prediction models. Int. J. Intell. Syst. 2022, 37, 4417–4436.

43. Yuan, Y.; Weng, X.; Ou, Y.; Kitani, K. AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecasting. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Los Alamitos, CA, USA, 11–17 October 2021; pp. 9793–9803.

44. Giuliari, F.; Hasan, I.; Cristani, M.; Galasso, F. Transformer Networks for Trajectory Forecasting. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 10335–10342.

45. Agarwal, A.; Lalit, M.; Bansal, A.; Seeja, K. iSGAN: An Improved SGAN for Crowd Trajectory Prediction from Surveillance Videos. Procedia Comput. Sci. 2023, 218, 2319–2327.

46. Yue, J.; Manocha, D.; Wang, H. Human Trajectory Prediction via Neural Social Physics. In Proceedings of the Computer Vision—ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 376–394.

47. Yu, Y.; Xiang, W.; Jin, X. Multi-level crowd simulation using social LSTM. Comput. Animat. Virtual Worlds 2023, 34, e2180.

48. Sohn, K.; Lee, H.; Yan, X. Learning Structured Output Representation using Deep Conditional Generative Models. In Proceedings of the Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

49. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

50. Pellegrini, S.; Ess, A.; Schindler, K.; Van Gool, L. You’ll never walk alone: Modeling social behavior for multi-target tracking. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 261–268.

| Metric | SFM [12] | HiDAC [13] | NSP-SFM [46] | MCS-LSTM [47] | Our Model |
|---|---|---|---|---|---|
| Velocity Score | 0.64 | 0.64 | 0.67 | 0.62 | 0.69 |
| Minimum Distance Score | 0.54 | 0.53 | 0.64 | 0.62 | 0.64 |
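
The Minimum Distance Score above is not defined or normalized in this section. As a hedged illustration of the raw quantity such a score plausibly summarizes, the sketch below computes the minimum pairwise distance between agents in each frame. Assuming circular agents of radius `radius` (an illustrative 0.3, in the same units as the positions), it also reports the fraction of frames that contain at least one overlap. The function names and the collision criterion d < 2r are assumptions, not the paper's definitions.

```python
import math
from itertools import combinations
from typing import Sequence, Tuple

Point = Tuple[float, float]


def min_pairwise_distance(frame: Sequence[Point]) -> float:
    """Smallest Euclidean distance between any two agents in one frame (needs >= 2 agents)."""
    return min(math.dist(a, b) for a, b in combinations(frame, 2))


def collision_frame_rate(frames: Sequence[Sequence[Point]], radius: float = 0.3) -> float:
    """Fraction of frames in which at least one pair of agents overlaps (distance < 2 * radius)."""
    hits = sum(1 for frame in frames if min_pairwise_distance(frame) < 2 * radius)
    return hits / len(frames)


frames = [
    [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)],
    [(0.0, 0.0), (0.5, 0.0), (3.0, 3.0)],
]
print([min_pairwise_distance(f) for f in frames], collision_frame_rate(frames))  # [1.0, 0.5] 0.5
```

The pairwise scan is O(N²) per frame, which is adequate for dataset-sized crowds; a spatial grid or k-d tree would be the usual choice for much larger scenes.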

| Metric | SFM [12] | HiDAC [13] | NSP-SFM [46] | MCS-LSTM [47] | Our Model |
|---|---|---|---|---|---|
| SSIM | 0.83 | 0.82 | 0.88 | 0.85 | 0.90 |
| High | 0.24 | 0.23 | 0.41 | 0.53 | 0.68 |
| Medium | 0.28 | 0.24 | 0.61 | 0.11 | 0.73 |
| Low | 0.34 | 0.27 | 0.59 | 0.08 | 0.62 |
| Avg | 0.42 | 0.39 | 0.62 | 0.39 | 0.73 |
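
The Avg row above is not explained in this section. Its values are consistent with the arithmetic mean of the four rows above it (SSIM, High, Medium, Low), rounded to two decimals; for SFM, (0.83 + 0.24 + 0.28 + 0.34) / 4 = 0.4225 ≈ 0.42. The mean of High, Medium, and Low alone would give about 0.29 for SFM, so it does not match. The short check below reproduces this for every column. That Avg is defined this way is an inference from the numbers, not a statement from the paper.

```python
# Values copied from the table above; columns: SFM, HiDAC, NSP-SFM, MCS-LSTM, Our Model.
rows = {
    "SSIM":   [0.83, 0.82, 0.88, 0.85, 0.90],
    "High":   [0.24, 0.23, 0.41, 0.53, 0.68],
    "Medium": [0.28, 0.24, 0.61, 0.11, 0.73],
    "Low":    [0.34, 0.27, 0.59, 0.08, 0.62],
}
reported_avg = [0.42, 0.39, 0.62, 0.39, 0.73]


def column_means(table):
    """Arithmetic mean of each column across all rows of the table."""
    return [sum(col) / len(col) for col in zip(*table.values())]


means = column_means(rows)
# True if every recomputed mean matches the reported Avg to within two-decimal rounding.
consistent = all(abs(m - r) <= 0.005 for m, r in zip(means, reported_avg))
print([f"{m:.4f}" for m in means], consistent)
```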

| Metric | No Waiting Rule | Smaller Area | Larger Area |
|---|---|---|---|
| MNND | 0.53 | 0.58 | 0.77 |
| SDNND | 0.14 | 0.09 | 0.11 |
| CHA | 41.18 | 41.54 | 52.35 |
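
The metric abbreviations in the table above are not expanded in this section. Assuming MNND is the mean nearest-neighbour distance, SDNND is the standard deviation of nearest-neighbour distances, and CHA is the convex hull area of agent positions, a single frame could be scored as sketched below. The expansions, the function names, and the choice of population standard deviation are all assumptions. How the paper aggregates over frames is not stated here.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def nearest_neighbour_distances(pts: Sequence[Point]) -> List[float]:
    """Distance from each agent to its closest other agent (O(N^2), needs N >= 2)."""
    return [min(math.dist(p, q) for j, q in enumerate(pts) if j != i)
            for i, p in enumerate(pts)]


def convex_hull(pts: Sequence[Point]) -> List[Point]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(pts))
    if len(pts) <= 2:
        return list(pts)

    def cross(o: Point, a: Point, b: Point) -> float:
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower: List[Point] = []
    upper: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly: Sequence[Point]) -> float:
    """Shoelace formula; returns 0 for degenerate hulls."""
    n = len(poly)
    s = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
            for i in range(n))
    return abs(s) / 2.0


def formation_metrics(pts: Sequence[Point]) -> Dict[str, float]:
    """MNND, SDNND (population std), and convex hull area for one frame (assumed definitions)."""
    nnd = nearest_neighbour_distances(pts)
    return {"MNND": statistics.mean(nnd),
            "SDNND": statistics.pstdev(nnd),
            "CHA": polygon_area(convex_hull(pts))}
```

For a unit square of four agents with a fifth at its centre, every agent's nearest neighbour is √0.5 ≈ 0.707 away, so MNND ≈ 0.707, SDNND = 0, and CHA = 1.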

| Aspect | Our Model | HiDAC |
|---|---|---|
| Theoretical Basis | Integrates deep learning with social force theories for enhanced accuracy. | Based on traditional social force theories. |
| Parameter Calculation Method | Adaptively calibrated based on real-time analysis of crowd behavior data. | Set based on experience. |
| Simulation Realism | Data distribution closer to the real crowd. | General distribution. |
| Computational Efficiency | Aims for future enhancements to balance computational demand with simulation depth. | Efficient within its scope of complexity. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yan, D.; Ding, G.; Huang, K.; Bai, C.; He, L.; Zhang, L. Enhanced Crowd Dynamics Simulation with Deep Learning and Improved Social Force Model. Electronics 2024, 13, 934. https://doi.org/10.3390/electronics13050934

Yan D, Ding G, Huang K, Bai C, He L, Zhang L. Enhanced Crowd Dynamics Simulation with Deep Learning and Improved Social Force Model. Electronics. 2024; 13(5):934. https://doi.org/10.3390/electronics13050934

Chicago/Turabian Style: Yan, Dapeng, Gangyi Ding, Kexiang Huang, Chongzhi Bai, Lian He, and Longfei Zhang. 2024. "Enhanced Crowd Dynamics Simulation with Deep Learning and Improved Social Force Model" Electronics 13, no. 5: 934. https://doi.org/10.3390/electronics13050934

APA Style: Yan, D., Ding, G., Huang, K., Bai, C., He, L., & Zhang, L. (2024). Enhanced Crowd Dynamics Simulation with Deep Learning and Improved Social Force Model. Electronics, 13(5), 934. https://doi.org/10.3390/electronics13050934