Combining Machine Learning and Edge Computing: Opportunities, Challenges, Platforms, Frameworks, and Use Cases

Abstract

1. Introduction

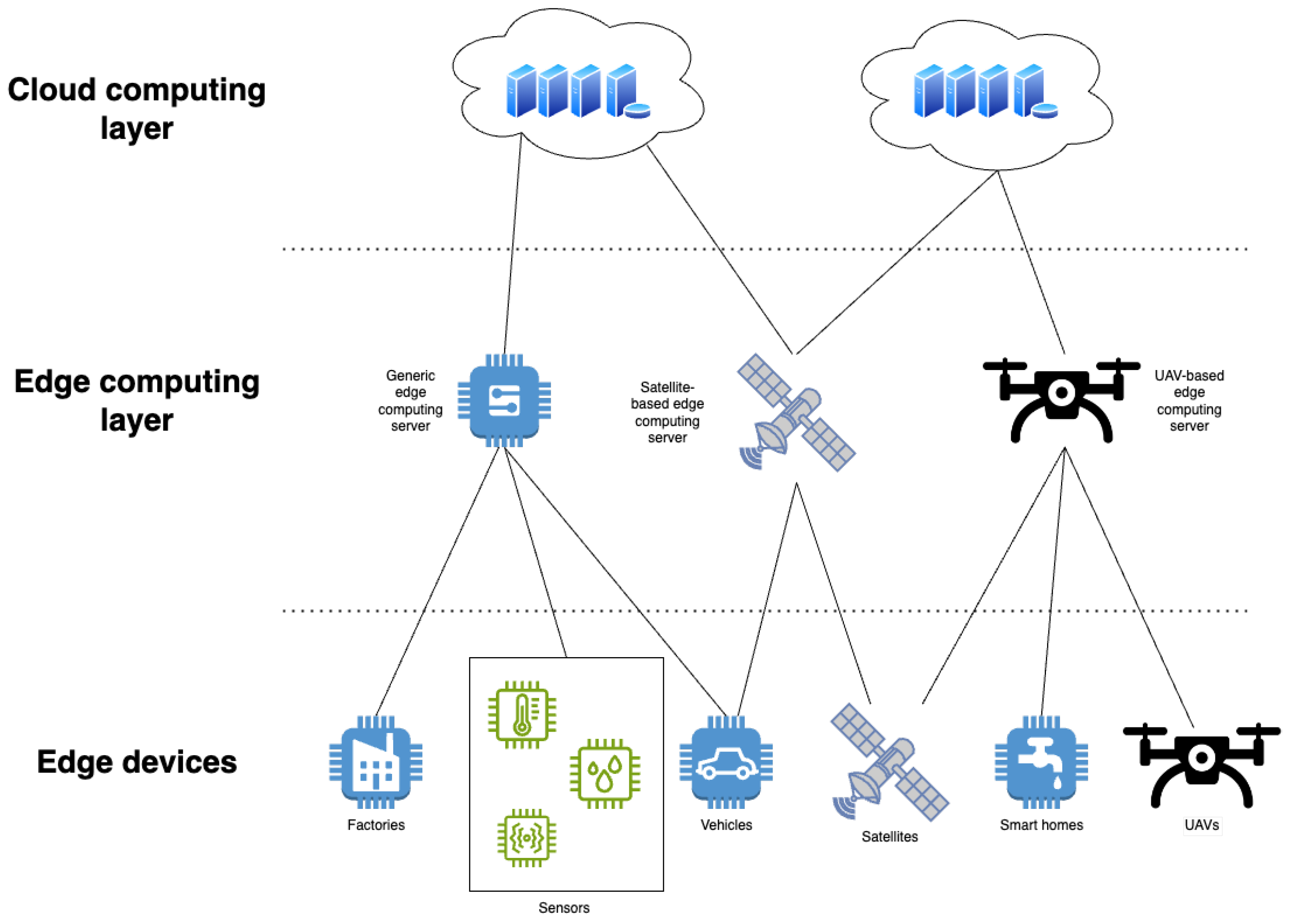

2. Challenges of Edge Computing

2.1. Constrained Devices and Computation Offloading

2.2. Security and Privacy

2.3. Energy Consumption

2.4. Device Fleet Management

3. Motivations for Combining Machine Learning and Edge Computing

3.1. More Powerful Devices Available at the Edge

3.2. Reducing Reliance on Centralized Services and Decreasing Latency

3.3. Improving Privacy of Personal Data

4. Edge Computing Platforms

4.1. Microsoft Azure IoT Edge

4.2. AWS IoT Greengrass

4.3. Balena

4.4. KubeEdge.AI

4.5. EdgeX Foundry

5. Edge Intelligence Frameworks and Libraries

5.1. TensorFlow Lite

5.2. edge-ml

5.3. TinyDL

5.4. PyTorch Mobile

5.5. CoreML

5.6. ML Kit for Firebase

5.7. Apache MXNet

5.8. Embedded Learning Library (ELL)

5.9. DeepThings

5.10. DeepIoT

6. Use Cases

6.1. Industrial Applications

6.2. Healthcare Applications

6.3. Smart Cities and Environmental Applications

6.4. Satellite–Terrestrial Integrated Networks

6.5. Autonomous and Intelligence-Assisted Vehicles

7. Trends and Future Developments in Edge Computing

8. Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AGV | Autonomous Guided Vehicles |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| API | Application Programming Interface |
| AWS | Amazon Web Services |
| BLE | Bluetooth Low Energy |
| CNN | Convolutional Neural Network |
| CPU | Central Processing Unit |
| DL | Deep Learning |
| DSP | Digital Signal Processing |
| ELL | Embedded Learning Library |
| GPU | Graphics Processing Unit |
| IIoT | Industrial Internet of Things |
| IoT | Internet of Things |
| LSTM | Long Short-Term Memory |
| MES | Manufacturing Execution System |
| ML | Machine Learning |
| MLP | Multi-Layer Perceptron |
| MQTT | Message Queuing Telemetry Transport |
| NLP | Natural Language Processing |
| NPU | Neural Processing Unit |
| OPC UA | OPC Unified Architecture |
| PdM | Predictive Maintenance |
| PLC | Programmable Logic Controller |
| PSO | Particle Swarm Optimization |
| RFID | Radio-Frequency Identification |
| RNN | Recurrent Neural Network |
| SCADA | Supervisory Control and Data Acquisition |
| SDK | Software Development Kit |
| SQL | Structured Query Language |
| STFT | Short-Time Fourier Transform |
| SVM | Support Vector Machine |
| TPU | Tensor Processing Unit |
| TSDB | Time-Series Database |
| UML | Unified Modeling Language |
| VPU | Vision Processing Unit |
| XML | Extensible Markup Language |
| XMPP | Extensible Messaging and Presence Protocol |

References

- Paul, A.; Pinjari, H.; Hong, W.H.; Seo, H.; Rho, S. Fog Computing-Based IoT for Health Monitoring System. J. Sens. 2018, 2018, 1386470. [Google Scholar] [CrossRef]

- Krasniqi, X.; Hajrizi, E. Use of IoT Technology to Drive the Automotive Industry from Connected to Full Autonomous Vehicles. IFAC-PapersOnLine 2016, 49, 269–274. [Google Scholar] [CrossRef]

- Renart, E.G.; Diaz-Montes, J.; Parashar, M. Data-Driven Stream Processing at the Edge. In Proceedings of the 2017 IEEE 1st International Conference on Fog and Edge Computing (ICFEC), Madrid, Spain, 14–15 May 2017; pp. 31–40. [Google Scholar] [CrossRef]

- Liu, X.; Nielsen, P. Air Quality Monitoring System and Benchmarking. In Big Data Analytics and Knowledge Discovery; Springer: Cham, Switzerland, 2017; pp. 459–470. [Google Scholar] [CrossRef]

- Fadhel, M.; Sekerinski, E.; Yao, S. A Comparison of Time Series Databases for Storing Water Quality Data. In Mobile Technologies and Applications for the Internet of Things; Springer: Cham, Switzerland, 2019; pp. 302–313. [Google Scholar] [CrossRef]

- Greco, L.; Ritrovato, P.; Xhafa, F. An edge-stream computing infrastructure for real-time analysis of wearable sensors data. Future Gener. Comput. Syst. 2019, 93, 515–528. [Google Scholar] [CrossRef]

- Singh, S. Optimize cloud computations using edge computing. In Proceedings of the 2017 International Conference on Big Data, IoT and Data Science (BID), Pune, India, 20–22 December 2017; pp. 49–53. [Google Scholar] [CrossRef]

- Khan, L.U.; Yaqoob, I.; Tran, N.H.; Kazmi, S.M.A.; Dang, T.N.; Hong, C.S. Edge-Computing-Enabled Smart Cities: A Comprehensive Survey. IEEE Internet Things J. 2020, 7, 10200–10232. [Google Scholar] [CrossRef]

- Dong, P.; Ning, Z.; Obaidat, M.S.; Jiang, X.; Guo, Y.; Hu, X.; Hu, B.; Sadoun, B. Edge Computing Based Healthcare Systems: Enabling Decentralized Health Monitoring in Internet of Medical Things. IEEE Netw. 2020, 34, 254–261. [Google Scholar] [CrossRef]

- Singh, A.; Chatterjee, K. Securing smart healthcare system with edge computing. Comput. Secur. 2021, 108, 102353. [Google Scholar] [CrossRef]

- Stankovski, S.; Ostojić, G.; Baranovski, I.; Babić, M.; Stanojević, M. The Impact of Edge Computing on Industrial Automation. In Proceedings of the 2020 19th International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 18–20 March 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Benecki, P.; Kostrzewa, D.; Grzesik, P.; Shubyn, B.; Mrozek, D. Forecasting of Energy Consumption for Anomaly Detection in Automated Guided Vehicles: Models and Feature Selection. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022. [Google Scholar]

- Le, N.; Ou, Y. Incorporating efficient radial basis function networks and significant amino acid pairs for predicting GTP binding sites in transport proteins. BMC Bioinform. 2016, 17 (Suppl. S19), 183–192. [Google Scholar] [CrossRef]

- Le, N.Q.K.; Nguyen, T.T.D.; Ou, Y.Y. Identifying the molecular functions of electron transport proteins using radial basis function networks and biochemical properties. J. Mol. Graph. Model. 2017, 73, 166–178. [Google Scholar] [CrossRef]

- Cupek, R.; Drewniak, M.; Fojcik, M.; Kyrkjebø, E.; Lin, J.C.W.; Mrozek, D.; Øvsthus, K.; Ziebinski, A. Autonomous Guided Vehicles for Smart Industries–The State-of-the-Art and Research Challenges. In Proceedings of the Computational Science–ICCS 2020, Amsterdam, The Netherlands, 3–5 June 2020; pp. 330–343. [Google Scholar]

- Grzesik, P.; Benecki, P.; Kostrzewa, D.; Shubyn, B.; Mrozek, D. On-Edge Aggregation Strategies over Industrial Data Produced by Autonomous Guided Vehicles. In Proceedings of the Computational Science–ICCS 2022, London, UK, 21–23 June 2022; Groen, D., de Mulatier, C., Paszynski, M., Krzhizhanovskaya, V.V., Dongarra, J.J., Sloot, P.M.A., Eds.; Springer: Cham, Switzerland, 2022; pp. 458–471. [Google Scholar]

- Steclik, T.; Cupek, R.; Drewniak, M. Automatic grouping of production data in Industry 4.0: The use case of internal logistics systems based on Automated Guided Vehicles. J. Comput. Sci. 2022, 62, 101693. [Google Scholar] [CrossRef]

- Wang, J.; Pan, J.; Esposito, F.; Calyam, P.; Yang, Z.; Mohapatra, P. Edge Cloud Offloading Algorithms: Issues, Methods, and Perspectives. ACM Comput. Surv. 2019, 52, 1–23. [Google Scholar] [CrossRef]

- Grzesik, P.; Mrozek, D. Accelerating Edge Metagenomic Analysis with Serverless-Based Cloud Offloading. In Proceedings of the Computational Science–ICCS 2022, London, UK, 21–23 June 2022; Groen, D., de Mulatier, C., Paszynski, M., Krzhizhanovskaya, V.V., Dongarra, J.J., Sloot, P.M.A., Eds.; Springer: Cham, Switzerland, 2022; pp. 481–492. [Google Scholar]

- Mach, P.; Becvar, Z. Mobile Edge Computing: A Survey on Architecture and Computation Offloading. IEEE Commun. Surv. Tutorials 2017, 19, 1628–1656. [Google Scholar] [CrossRef]

- Ribeiro, S.L.; Nakamura, E.T. Privacy Protection with Pseudonymization and Anonymization In a Health IoT System: Results from OCARIoT. In Proceedings of the 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering (BIBE), Athens, Greece, 28–30 October 2019; pp. 904–908. [Google Scholar] [CrossRef]

- Silveira, M.M.; Portela, A.L.; Menezes, R.A.; Souza, M.S.; Silva, D.S.; Mesquita, M.C.; Gomes, R.L. Data Protection based on Searchable Encryption and Anonymization Techniques. In Proceedings of the NOMS 2023–2023 IEEE/IFIP Network Operations and Management Symposium, Miami, FL, USA, 8–12 May 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Ma, R.; Feng, T.; Fang, J. Edge Computing Assisted an Efficient Privacy Protection Layered Data Aggregation Scheme for IIoT. Secur. Commun. Netw. 2021, 2021, 7776193. [Google Scholar] [CrossRef]

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–19. [Google Scholar] [CrossRef]

- Jiang, W.; Han, H.; Zhang, Y.; Mu, J. Federated split learning for sequential data in satellite–terrestrial integrated networks. Inf. Fusion 2024, 103, 102141. [Google Scholar] [CrossRef]

- Pasquini, D.; Francati, D.; Ateniese, G. Eluding Secure Aggregation in Federated Learning via Model Inconsistency. In Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security, Los Angeles, CA, USA, 7–11 November 2022; CCS’22. pp. 2429–2443. [Google Scholar] [CrossRef]

- Tao, Y.; Xu, P.; Jin, H. Secure Data Sharing and Search for Cloud-Edge-Collaborative Storage. IEEE Access 2020, 8, 15963–15972. [Google Scholar] [CrossRef]

- Zheng, K.; Ding, C.; Wang, J. A Secure Data-Sharing Scheme for Privacy-Preserving Supporting Node–Edge–Cloud Collaborative Computation. Electronics 2023, 12, 2737. [Google Scholar] [CrossRef]

- Roman, R.; Lopez, J.; Mambo, M. Mobile edge computing, Fog et al.: A survey and analysis of security threats and challenges. Future Gener. Comput. Syst. 2018, 78, 680–698. [Google Scholar] [CrossRef]

- Yahuza, M.; Idris, M.Y.I.B.; Wahab, A.W.B.A.; Ho, A.T.S.; Khan, S.; Musa, S.N.B.; Taha, A.Z.B. Systematic Review on Security and Privacy Requirements in Edge Computing: State of the Art and Future Research Opportunities. IEEE Access 2020, 8, 76541–76567. [Google Scholar] [CrossRef]

- Gowers, G.O.F.; Vince, O.; Charles, J.H.; Klarenberg, I.; Ellis, T.; Edwards, A. Entirely Off-Grid and Solar-Powered DNA Sequencing of Microbial Communities during an Ice Cap Traverse Expedition. Genes 2019, 10, 902. [Google Scholar] [CrossRef] [PubMed]

- Xu, J.; Chen, L.; Ren, S. Online Learning for Offloading and Autoscaling in Energy Harvesting Mobile Edge Computing. IEEE Trans. Cogn. Commun. Netw. 2017, 3, 361–373. [Google Scholar] [CrossRef]

- Mao, Y.; Zhang, J.; Letaief, K.B. Dynamic Computation Offloading for Mobile-Edge Computing With Energy Harvesting Devices. IEEE J. Sel. Areas Commun. 2016, 34, 3590–3605. [Google Scholar] [CrossRef]

- Ku, Y.J.; Chiang, P.H.; Dey, S. Quality of Service Optimization for Vehicular Edge Computing with Solar-Powered Road Side Units. In Proceedings of the 2018 27th International Conference on Computer Communication and Networks (ICCCN), Hangzhou, China, 30 July 2018–2 August 2018; pp. 1–10. [Google Scholar] [CrossRef]

- Li, B.; Fei, Z.; Shen, J.; Jiang, X.; Zhong, X. Dynamic Offloading for Energy Harvesting Mobile Edge Computing: Architecture, Case Studies, and Future Directions. IEEE Access 2019, 7, 79877–79886. [Google Scholar] [CrossRef]

- Zhou, H.; Jiang, K.; Liu, X.; Li, X.; Leung, V.C.M. Deep Reinforcement Learning for Energy-Efficient Computation Offloading in Mobile-Edge Computing. IEEE Internet Things J. 2022, 9, 1517–1530. [Google Scholar] [CrossRef]

- Donta, P.K.; Dustdar, S. Towards Intelligent Data Protocols for the Edge. In Proceedings of the 2023 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 2–8 July 2023. [Google Scholar] [CrossRef]

- Li, B.; He, Q.; Cui, G.; Xia, X.; Chen, F.; Jin, H.; Yang, Y. READ: Robustness-Oriented Edge Application Deployment in Edge Computing Environment. IEEE Trans. Serv. Comput. 2022, 15, 1746–1759. [Google Scholar] [CrossRef]

- Song, H.; Dautov, R.; Ferry, N.; Solberg, A.; Fleurey, F. Model-Based Fleet Deployment of Edge Computing Applications. In Proceedings of the 23rd ACM/IEEE International Conference on Model Driven Engineering Languages and Systems, Virtual Event, 16–23 October 2020; MODELS’20. pp. 132–142. [Google Scholar] [CrossRef]

- Wang, E.; Li, D.; Dong, B.; Zhou, H.; Zhu, M. Flat and hierarchical system deployment for edge computing systems. Future Gener. Comput. Syst. 2020, 105, 308–317. [Google Scholar] [CrossRef]

- Microsoft Azure IoT Edge Documentation. Available online: https://azure.microsoft.com/en-us/products/iot-edge/ (accessed on 24 November 2022).

- AWS IoT Greengrass Documentation. Available online: https://docs.aws.amazon.com/greengrass/index.html (accessed on 24 November 2022).

- Applying Federated Learning for ML at the Edge. Available online: https://aws.amazon.com/blogs/architecture/applying-federated-learning-for-ml-at-the-edge/ (accessed on 24 November 2022).

- Balena Documentation. Available online: https://www.balena.io/docs/learn/welcome/primer/ (accessed on 24 November 2022).

- Balena Labs Projects Repository. Available online: https://github.com/balena-labs-projects (accessed on 24 November 2022).

- KubeEdge Documentation. Available online: https://kubeedge.io/en/ (accessed on 24 November 2022).

- Wang, S.; Hu, Y.; Wu, J. KubeEdge.AI: AI Platform for Edge Devices. arXiv 2020, arXiv:2007.09227. [Google Scholar]

- EdgeX Foundry Documentation. Available online: https://www.edgexfoundry.org/why-edgex/ (accessed on 24 November 2022).

- TensorFlow Lite Documentation. Available online: https://www.tensorflow.org/lite (accessed on 24 November 2022).

- Röddiger, T.; King, T.; Lepold, P.; Münk, J.; Du, S.; Riedel, T.; Beigl, M. edge-ml.org-End-To-End Embedded Machine Learning. Available online: https://edge-ml.org/ (accessed on 24 November 2022).

- Darvish Rouhani, B.; Mirhoseini, A.; Koushanfar, F. TinyDL: Just-in-time deep learning solution for constrained embedded systems. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; pp. 1–4. [Google Scholar] [CrossRef]

- PyTorch Mobile Documentation. Available online: https://pytorch.org/mobile/home/ (accessed on 24 November 2022).

- CoreML Documentation. Available online: https://developer.apple.com/documentation/coreml (accessed on 24 November 2022).

- ML Kit for Firebase Documentation. Available online: https://firebase.google.com/docs/ml-kit (accessed on 24 November 2022).

- Chen, T.; Li, M.; Li, Y.; Lin, M.; Wang, N.; Wang, M.; Xiao, T.; Xu, B.; Zhang, C.; Zhang, Z. MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems. arXiv 2015, arXiv:1512.01274. [Google Scholar]

- Apache MXNet Documentation. Available online: https://mxnet.apache.org/versions/1.9.0/api (accessed on 24 November 2022).

- Microsoft Embedded Learning Library Documentation. Available online: https://microsoft.github.io/ELL/ (accessed on 24 November 2022).

- Zhao, Z.; Barijough, K.M.; Gerstlauer, A. DeepThings: Distributed Adaptive Deep Learning Inference on Resource-Constrained IoT Edge Clusters. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2018, 37, 2348–2359. [Google Scholar] [CrossRef]

- Reference Implementation in C of DeepThings Framework. Available online: https://github.com/zoranzhao/DeepThings (accessed on 24 November 2022).

- Yao, S.; Zhao, Y.; Zhang, A.; Su, L.; Abdelzaher, T. DeepIoT: Compressing Deep Neural Network Structures for Sensing Systems with a Compressor-Critic Framework. In Proceedings of the 15th ACM Conference on Embedded Network Sensor Systems, Delft, The Netherlands, 6–8 November 2017. SenSys’17. [Google Scholar] [CrossRef]

- Reference Implementation of DeepIoT Framework. Available online: https://github.com/yscacaca/DeepIoT (accessed on 24 November 2022).

- Hu, L.; Miao, Y.; Wu, G.; Hassan, M.M.; Humar, I. iRobot-Factory: An intelligent robot factory based on cognitive manufacturing and edge computing. Future Gener. Comput. Syst. 2019, 90, 569–577. [Google Scholar] [CrossRef]

- Boguslawski, B.; Boujonnier, M.; Bissuel-Beauvais, L.; Saghir, F.; Sharma, R. IIoT Edge Analytics: Deploying Machine Learning at the Wellhead to Identify Rod Pump Failure. In Proceedings of the SPE Middle East Artificial Lift Conference and Exhibition, Manama, Bahrain, 28–29 November 2018. [Google Scholar] [CrossRef]

- Matthews, S.J.; Leger, A.S. Energy-Efficient Analysis of Synchrophasor Data using the NVIDIA Jetson Nano. In Proceedings of the 2020 IEEE High Performance Extreme Computing Conference (HPEC), Waltham, MA, USA, 22–24 September 2020; pp. 1–7. [Google Scholar] [CrossRef]

- Dou, W.; Zhao, X.; Yin, X.; Wang, H.; Luo, Y.; Qi, L. Edge Computing-Enabled Deep Learning for Real-time Video Optimization in IIoT. IEEE Trans. Ind. Inform. 2021, 17, 2842–2851. [Google Scholar] [CrossRef]

- Zhang, P.; Sun, H.; Situ, J.; Jiang, C.; Xie, D. Federated Transfer Learning for IIoT Devices With Low Computing Power Based on Blockchain and Edge Computing. IEEE Access 2021, 9, 98630–98638. [Google Scholar] [CrossRef]

- Shubyn, B.; Mrozek, D.; Maksymyuk, T.; Sunderam, V.; Kostrzewa, D.; Grzesik, P.; Benecki, P. Federated Learning for Anomaly Detection in Industrial IoT-Enabled Production Environment Supported by Autonomous Guided Vehicles. In Proceedings of the Computational Science–ICCS 2022: 22nd International Conference, London, UK, 21–23 June 2022; Proceedings, Part IV. Springer: Cham, Switzerland, 2022; pp. 409–421. [Google Scholar] [CrossRef]

- Liu, Y.; Garg, S.; Nie, J.; Zhang, Y.; Xiong, Z.; Kang, J.; Hossain, M.S. Deep Anomaly Detection for Time-Series Data in Industrial IoT: A Communication-Efficient On-Device Federated Learning Approach. IEEE Internet Things J. 2021, 8, 6348–6358. [Google Scholar] [CrossRef]

- Zeng, L.; Li, E.; Zhou, Z.; Chen, X. Boomerang: On-Demand Cooperative Deep Neural Network Inference for Edge Intelligence on the Industrial Internet of Things. IEEE Netw. 2019, 33, 96–103. [Google Scholar] [CrossRef]

- Li, L.; Ota, K.; Dong, M. Deep Learning for Smart Industry: Efficient Manufacture Inspection System With Fog Computing. IEEE Trans. Ind. Inform. 2018, 14, 4665–4673. [Google Scholar] [CrossRef]

- Park, D.; Kim, S.; An, Y.; Jung, J.Y. LiReD: A Light-Weight Real-Time Fault Detection System for Edge Computing Using LSTM Recurrent Neural Networks. Sensors 2018, 18, 2110. [Google Scholar] [CrossRef] [PubMed]

- Mohan, P.; Paul, A.; Chirania, A. A Tiny CNN Architecture for Medical Face Mask Detection for Resource-Constrained Endpoints. In Innovations in Electrical and Electronic Engineering; Springer: Singapore, 2021; pp. 657–670. [Google Scholar] [CrossRef]

- Faleh, N.; Abdul Hassan, N.; Abed, A.; Abdalla, T. Face mask detection using deep learning on NVIDIA Jetson Nano. Int. J. Electr. Comput. Eng. 2022, 12, 5427–5434. [Google Scholar] [CrossRef]

- Qayyum, A.; Ahmad, K.; Ahsan, M.A.; Al-Fuqaha, A.; Qadir, J. Collaborative Federated Learning for Healthcare: Multi-Modal COVID-19 Diagnosis at the Edge. IEEE Open J. Comput. Soc. 2022, 3, 172–184. [Google Scholar] [CrossRef]

- Adhikari, M.; Munusamy, A. iCovidCare: Intelligent health monitoring framework for COVID-19 using ensemble random forest in edge networks. Internet Things 2021, 14, 100385. [Google Scholar] [CrossRef]

- Velichko, A. A Method for Medical Data Analysis Using the LogNNet for Clinical Decision Support Systems and Edge Computing in Healthcare. Sensors 2021, 21, 6209. [Google Scholar] [CrossRef]

- Yang, Z.; Liang, B.; Ji, W. An Intelligent End–Edge–Cloud Architecture for Visual IoT-Assisted Healthcare Systems. IEEE Internet Things J. 2021, 8, 16779–16786. [Google Scholar] [CrossRef]

- Mrozek, D.; Koczur, A.; Małysiak-Mrozek, B. Fall detection in older adults with mobile IoT devices and machine learning in the cloud and on the edge. Inf. Sci. 2020, 537, 132–147. [Google Scholar] [CrossRef]

- Ahmed, I.; Jeon, G.; Piccialli, F. A Deep-Learning-Based Smart Healthcare System for Patient’s Discomfort Detection at the Edge of Internet of Things. IEEE Internet Things J. 2021, 8, 10318–10326. [Google Scholar] [CrossRef]

- Liu, C.; Cao, Y.; Luo, Y.; Chen, G.; Vokkarane, V.; Yunsheng, M.; Chen, S.; Hou, P. A New Deep Learning-Based Food Recognition System for Dietary Assessment on An Edge Computing Service Infrastructure. IEEE Trans. Serv. Comput. 2018, 11, 249–261. [Google Scholar] [CrossRef]

- Xu, M.; Qian, F.; Zhu, M.; Huang, F.; Pushp, S.; Liu, X. DeepWear: Adaptive Local Offloading for On-Wearable Deep Learning. IEEE Trans. Mob. Comput. 2020, 19, 314–330. [Google Scholar] [CrossRef]

- Pramukantoro, E.S.; Gofuku, A. A real-time heartbeat monitoring using wearable device and machine learning. In Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies (LifeTech), Osaka, Japan, 7–9 March 2022; pp. 270–272. [Google Scholar] [CrossRef]

- Zanetti, R.; Arza, A.; Aminifar, A.; Atienza, D. Real-Time EEG-Based Cognitive Workload Monitoring on Wearable Devices. IEEE Trans. Biomed. Eng. 2022, 69, 265–277. [Google Scholar] [CrossRef]

- Puerta, G.; Le Mouël, F.; Carrillo, O. Machine Learning Models for Seizure Detection: Deployment Insights for e-Health IoT Platform. In Proceedings of the IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI’2021), Virtual, 27–30 July 2021. [Google Scholar]

- Coelho, Y.L.; Santos, F.d.A.S.d.; Frizera-Neto, A.; Bastos-Filho, T.F. A Lightweight Framework for Human Activity Recognition on Wearable Devices. IEEE Sens. J. 2021, 21, 24471–24481. [Google Scholar] [CrossRef]

- Arikumar, K.S.; Prathiba, S.B.; Alazab, M.; Gadekallu, T.R.; Pandya, S.; Khan, J.M.; Moorthy, R.S. FL-PMI: Federated Learning-Based Person Movement Identification through Wearable Devices in Smart Healthcare Systems. Sensors 2022, 22, 1377. [Google Scholar] [CrossRef] [PubMed]

- Zhang, X.; Lin, J.; Chen, Z.; Sun, F.; Zhu, X.; Fang, G. An Efficient Neural-Network-Based Microseismic Monitoring Platform for Hydraulic Fracture on an Edge Computing Architecture. Sensors 2018, 18, 1828. [Google Scholar] [CrossRef]

- Kumar, Y.; Udgata, S.K. Machine learning model for IoT-Edge device based Water Quality Monitoring. In Proceedings of the IEEE INFOCOM 2022-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), New York, NY, USA, 2–5 May 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Liu, Y.; Yang, C.; Jiang, L.; Xie, S.; Zhang, Y. Intelligent Edge Computing for IoT-Based Energy Management in Smart Cities. IEEE Netw. 2019, 33, 111–117. [Google Scholar] [CrossRef]

- Cicirelli, F.; Gentile, A.F.; Greco, E.; Guerrieri, A.; Spezzano, G.; Vinci, A. An Energy Management System at the Edge based on Reinforcement Learning. In Proceedings of the 2020 IEEE/ACM 24th International Symposium on Distributed Simulation and Real Time Applications (DS-RT), Prague, Czech Republic, 14–16 September 2020; pp. 1–8. [Google Scholar] [CrossRef]

- Ali, O.; Ishak, M.K. Bringing intelligence to IoT Edge: Machine Learning based Smart City Image Classification using Microsoft Azure IoT and Custom Vision. J. Phys. Conf. Ser. 2020, 1529, 042076. [Google Scholar] [CrossRef]

- Janjua, Z.H.; Vecchio, M.; Antonini, M.; Antonelli, F. IRESE: An intelligent rare-event detection system using unsupervised learning on the IoT edge. Eng. Appl. Artif. Intell. 2019, 84, 41–50. [Google Scholar] [CrossRef]

- Orfanidis, C.; Hassen, R.B.H.; Kwiek, A.; Fafoutis, X.; Jacobsson, M. A Discreet Wearable Long-Range Emergency System Based on Embedded Machine Learning. In Proceedings of the 2021 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops), Kassel, Germany, 22–26 March 2021; pp. 182–187. [Google Scholar] [CrossRef]

- Nikouei, S.Y.; Chen, Y.; Song, S.; Xu, R.; Choi, B.; Faughnan, T.R. Real-Time Human Detection as an Edge Service Enabled by a Lightweight CNN. arXiv 2018, arXiv:1805.00330. [Google Scholar]

- Pang, S.; Qiao, S.; Song, T.; Zhao, J.; Zheng, P. An Improved Convolutional Network Architecture Based on Residual Modeling for Person Re-Identification in Edge Computing. IEEE Access 2019, 7, 106748–106759. [Google Scholar] [CrossRef]

- Dhakal, A.; Ramakrishnan, K.K. Machine learning at the network edge for automated home intrusion monitoring. In Proceedings of the 2017 IEEE 25th International Conference on Network Protocols (ICNP), Toronto, ON, Canada, 10–13 October 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Fan, X.; Xiang, C.; Gong, L.; He, X.; Chen, C.; Huang, X. UrbanEdge: Deep Learning Empowered Edge Computing for Urban IoT Time Series Prediction. In Proceedings of the ACM Turing Celebration Conference-China, Chengdu, China, 17–19 May 2019; p. 19. [Google Scholar] [CrossRef]

- Sabbella, S.R. Fire and Smoke Detection for Smart Cities Using Deep Neural Networks and Edge Computing on Embedded Sensors. Ph.D. Thesis, Sapienza University of Rome, Rome, Italy, 2020. [Google Scholar] [CrossRef]

- Silva, M.C.; da Silva, J.C.F.; Delabrida, S.; Bianchi, A.G.C.; Ribeiro, S.P.; Silva, J.S.; Oliveira, R.A.R. Wearable Edge AI Applications for Ecological Environments. Sensors 2021, 21, 82. [Google Scholar] [CrossRef]

- Zhu, D.; Liu, H.; Li, T.; Sun, J.; Liang, J.; Zhang, H.; Geng, L.; Liu, Y. Deep Reinforcement Learning-based Task Offloading in Satellite-Terrestrial Edge Computing Networks. In Proceedings of the 2021 IEEE Wireless Communications and Networking Conference (WCNC), Nanjing, China, 29 March–1 April 2021; pp. 1–7. [Google Scholar] [CrossRef]

- Zhang, J.; Zhang, X.; Wang, P.; Liu, L.; Wang, Y. Double-edge intelligent integrated satellite terrestrial networks. China Commun. 2020, 17, 128–146. [Google Scholar] [CrossRef]

- de Prado, M.; Rusci, M.; Capotondi, A.; Donze, R.; Benini, L.; Pazos, N. Robustifying the Deployment of tinyML Models for Autonomous Mini-Vehicles. Sensors 2021, 21, 1339. [Google Scholar] [CrossRef] [PubMed]

- Kocić, J.; Jovičić, N.; Drndarević, V. An End-to-End Deep Neural Network for Autonomous Driving Designed for Embedded Automotive Platforms. Sensors 2019, 19, 2064. [Google Scholar] [CrossRef] [PubMed]

- Navarro, P.J.; Fernández, C.; Borraz, R.; Alonso, D. A Machine Learning Approach to Pedestrian Detection for Autonomous Vehicles Using High-Definition 3D Range Data. Sensors 2017, 17, 18. [Google Scholar] [CrossRef] [PubMed]

- Bibi, R.; Saeed, Y.; Zeb, A.; Ghazal, T.; Said, R.; Abbas, S.; Ahmad, M.; Khan, M. Edge AI-Based Automated Detection and Classification of Road Anomalies in VANET Using Deep Learning. Comput. Intell. Neurosci. 2021, 2021, 6262194. [Google Scholar] [CrossRef]

- Ferdowsi, A.; Challita, U.; Saad, W. Deep Learning for Reliable Mobile Edge Analytics in Intelligent Transportation Systems. IEEE Veh. Technol. Mag. 2019, 14, 62–70. [Google Scholar] [CrossRef]

- Hu, Y.; Liu, G.; Chen, Z.; Guo, J. Object Detection Algorithm for Wheeled Mobile Robot Based on an Improved YOLOv4. Appl. Sci. 2022, 12, 4769. [Google Scholar] [CrossRef]

- Febbo, R.; Flood, B.; Halloy, J.; Lau, P.; Wong, K.; Ayala, A. Autonomous Vehicle Control Using a Deep Neural Network and Jetson Nano. In Proceedings of the Practice and Experience in Advanced Research Computing, Portland, OR, USA, 26–30 July 2020; PEARC’20. pp. 333–338. [Google Scholar] [CrossRef]

- Palossi, D.; Loquercio, A.; Conti, F.; Flamand, E.; Scaramuzza, D.; Benini, L. A 64-mW DNN-Based Visual Navigation Engine for Autonomous Nano-Drones. IEEE Internet Things J. 2019, 6, 8357–8371. [Google Scholar] [CrossRef]

- Alsamhi, S.; Almalki, F.; Al-Dois, H.; Shvetsov, A.; Ansari, S.; Hawbani, A.; Gupta, D.S.; Lee, B. Multi-Drone Edge Intelligence and SAR Smart Wearable Devices for Emergency Communication. Wirel. Commun. Mob. Comput. 2021, 2021, 6710074. [Google Scholar] [CrossRef]

- Wang, J.; Feng, Z.; Chen, Z.; George, S.; Bala, M.; Pillai, P.; Yang, S.W.; Satyanarayanan, M. Bandwidth-Efficient Live Video Analytics for Drones Via Edge Computing. In Proceedings of the 2018 IEEE/ACM Symposium on Edge Computing (SEC), Seattle, WA, USA, 25–27 October 2018; pp. 159–173. [Google Scholar] [CrossRef]

- Tsakanikas, V.; Dagiuklas, T.; Iqbal, M.; Wang, X.; Mumtaz, S. An intelligent model for supporting edge migration for virtual function chains in next generation internet of things. Sci. Rep. 2023, 13, 1063. [Google Scholar] [CrossRef]

- Ju, Y.; Cao, Z.; Chen, Y.; Liu, L.; Pei, Q.; Mumtaz, S.; Dong, M.; Guizani, M. NOMA-Assisted Secure Offloading for Vehicular Edge Computing Networks With Asynchronous Deep Reinforcement Learning. IEEE Trans. Intell. Transp. Syst. 2023; in press. [Google Scholar] [CrossRef]

- Yan, J.; Zhang, M.; Jiang, Y.; Zheng, F.C.; Chang, Q.; Abualnaja, K.M.; Mumtaz, S.; You, X. Double Deep Q-Network based Joint Edge Caching and Content Recommendation with Inconsistent File Sizes in Fog-RANs. IEEE Trans. Veh. Technol. 2023, 1–14. [Google Scholar] [CrossRef]

- Liu, J.; Fan, Y.; Sun, R.; Liu, L.; Wu, C.; Mumtaz, S. Blockchain-Aided Privacy-Preserving Medical Data Sharing Scheme for E-Healthcare System. IEEE Internet Things J. 2023, 10, 21377–21388. [Google Scholar] [CrossRef]

- Guim, F.; Metsch, T.; Moustafa, H.; Verrall, T.; Carrera, D.; Cadenelli, N.; Chen, J.; Doria, D.; Ghadie, C.; Prats, R.G. Autonomous Lifecycle Management for Resource-Efficient Workload Orchestration for Green Edge Computing. IEEE Trans. Green Commun. Netw. 2022, 6, 571–582. [Google Scholar] [CrossRef]

- Hanzel, K.; Grzechca, D.; Ziebinski, A.; Chruszczyk, L.; Janus, A. Estimating the AGV load and a battery lifetime based on the current measurement and random forest application. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData), Sorrento, Italy, 15–18 December 2023; pp. 5057–5063. [Google Scholar] [CrossRef]

| Library | Supported Devices or Systems | Open Source | First Released | Edge Intelligence Features |
|---|---|---|---|---|
| TensorFlow Lite | iOS, Android, Linux-based SBC | Yes | 2019 | GPU/TPU acceleration; optimization of TensorFlow models for edge devices |
| edge-ml | Arduino Nicla Sense ME, Arduino Nano 33 BLE, ESP32, Android | Yes | 2020 | End-to-end ML workflows for edge devices; automatic detection of the optimal NN architecture |
| TinyDL | NVIDIA Jetson TK1 | Yes | 2017 | Optimization of deep learning models based on the hardware available on the edge device |
| PyTorch Mobile | iOS, Android, Linux-based SBC | Yes | 2019 | GPU/NPU acceleration (in beta); optimization of PyTorch models for edge devices |
| CoreML | iOS, watchOS, macOS | No | 2017 | GPU/NPU acceleration |
| ML Kit for Firebase | iOS, Android | No | 2018 | Unified SDK supporting multiple ML APIs |
| Apache MXNet | iOS (via conversion), Android, Linux-based SBC | Yes | 2015 | GPU/TPU acceleration; amalgamation support; model conversion (e.g., to CoreML) |
| ELL | Raspberry Pi, Arduino, micro:bit | Yes | 2017 | Support for the compilation-training-deployment workflow to the edge |
| DeepThings | Raspberry Pi | Yes | 2018 | Distributed inference on a cluster of edge devices |
| DeepIoT | Intel Edison | Yes | 2017 | Neural network compression for edge devices |
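Several libraries in the table list model optimization or compression for edge devices as a key feature. One widely used technique of this kind is post-training 8-bit quantization, which stores each weight as a signed byte plus a shared scale and zero point, cutting float32 storage roughly fourfold. The sketch below is a minimal, framework-independent illustration of affine int8 quantization in plain Python, under the assumption of per-tensor (not per-channel) parameters. It is not the API of TensorFlow Lite, PyTorch Mobile, or any other library listed, and the function names `quantize_int8` and `dequantize_int8` are hypothetical.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Affine per-tensor quantization of float weights to int8 (hypothetical helper)."""
    # Extend the range to include 0.0 so that zero is represented exactly.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # One integer step corresponds to `scale` in float units.
    scale = (w_max - w_min) / (QMAX - QMIN) if w_max > w_min else 1.0
    # Integer that represents the real value 0.0.
    zero_point = int(round(QMIN - w_min / scale))
    zero_point = max(QMIN, min(QMAX, zero_point))
    # Round each weight to the nearest step and clamp into the int8 range.
    q = [max(QMIN, min(QMAX, int(round(w / scale)) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [scale * (v - zero_point) for v in q]


if __name__ == "__main__":
    weights = [-0.5, 0.0, 0.25, 1.0]
    q, scale, zp = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, scale, zp, max_err)
```

The reconstruction error for values inside the observed range stays within half a quantization step (`scale / 2`). Production toolchains typically add refinements such as per-channel scales and calibration on representative input data, which this sketch omits.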

| Paper | Use Case | Devices/Platforms | Benefits |

|---|---|---|---|

| Hu et al. [62] | Intelligent robot factory | Robot with Android Edge server CentOS 7 Cloud Server Ubuntu 16.04 | Reduced delay in real-time monitoring Improved recognition accuracy Improved resource management |

| Boguslawski et al. [63] | Predictive maintenance of rod pumps | Microsoft IoT Edge Docker, Ubuntu Core | Ability to operate offline |

| Matthews et al. [64] | Synchrophasor data analysis | Jetson Nano | Improved performance Reduced energy consumption |

| Don et al. [65] | Video streaming | Edge server with Tesla V100 GPU | Improved detection of network quality Improved reliability of video stream |

| Zhang et al. [66] | IIoT authentication | Simulation | Improved data security and privacy |

| Shubyn et al. [67] | Predictive maintenance | Simulation | Improved prediction accuracy Improved data security |

| Liu et al. [68] | IIoT anomaly detection | Virtual edge server with Ubuntu 18.04 | Reduced communication overhead |

| Zeng et al. [69] | IIoT image recognition | Raspberry Pi 3; desktop PC edge server | Reduced latency; improved performance |

| Li et al. [70] | IIoT defect detection | N/A | Improved computing efficiency |

| Park et al. [71] | Predictive maintenance; real-time fault detection | Raspberry Pi 3 | Improved performance; reduced data analysis costs |

| Mohan et al. [72] | Face mask detection | ARM Cortex-M7 microcontroller | Ability to run on a microcontroller; over 99% accuracy |

| Faleh et al. [73] | Face mask detection | Jetson Nano | Ability to run on an edge device; over 99% accuracy |

| Qayuum et al. [74] | COVID-19 diagnostics | N/A | Reduced data transmission; reduced processing time; improved data privacy |

| Adhikari et al. [75] | COVID-19 health monitoring system | N/A | Improved accuracy; allows for immediate risk assessment |

| Velichko [76] | Disease risk assessment | Arduino Uno; Arduino Nano | Ability to run detection on low-power devices |

| Yang et al. [77] | Visual healthcare platform | N/A | Reduced amount of data sent; improved efficiency |

| Mrozek et al. [78] | Fall detection system | iPhone 8 | Reduced latency; reduced data transfer |

| Ahmed et al. [79] | Monitoring patients’ discomfort | N/A | Allows for noninvasive monitoring |

| Liu et al. [80] | Food recognition for dietary assessment | Android 6.0.1 device; edge server with CentOS 7 | Improved response time; reduced data transfer |

| Xu et al. [81] | Healthcare-related wearable devices | Nexus 6 with Android 7; LG Watch Urbane | Improved reaction time; reduced energy consumption |

| Pramukantoro et al. [82] | Real-time heartbeat monitoring | Polar H10; desktop PC edge server | Ability to perform classification at the edge |

| Zanetti et al. [83] | Cognitive workload monitoring | eGlass; ARM Cortex-M4 | Ability to perform classification at the edge |

| Puerta et al. [84] | Seizure detection | N/A | Low computational cost; high accuracy |

| Coelho et al. [85] | Human activity recognition | STM32F411VE | Ability to perform classification at the edge; energy efficiency |

| Arikumar et al. [86] | Person movement identification | N/A | Reduced computation cost; reduced memory usage; reduced data transmission |

| Zhang et al. [87] | Microseismic monitoring platform | Xilinx FPGA; Intel-based edge server | Reduced data transmission |

| Kumar et al. [88] | Water monitoring | AquaSense sensor; Arduino Uno | No need to communicate with central servers |

| Liu et al. [89] | Smart city energy management | N/A | Reduced cost; reduced latency |

| Cicirelli et al. [90] | Home energy management | Raspberry Pi | Reduced energy consumption |

| Ali et al. [91] | Real-time object detection | Raspberry Pi; Azure IoT Edge | Reduced latency; improved scalability |

| Janjua et al. [92] | Dangerous event detection in smart cities | Raspberry Pi | Reduced data transmission; reduced latency |

| Orfanidis et al. [93] | Long-range emergency system | ESP32 | Ability to run detection on an edge device |

| Nikouei et al. [94] | Real-time human detection in video streams | Raspberry Pi 3 | Reduced data transmission; reduced latency |

| Pang et al. [95] | Surveillance | N/A | Improved performance |

| Dhakal et al. [96] | Home intrusion monitoring | OpenNetVM | Ability to operate without a central server |

| Sabella [98] | Fire and smoke detection | Intel NCS2; Movidius NCS; Intel-based edge server | Improved response time |

| Silva et al. [99] | Leaf disease detection | Jetson Nano | Ability to operate without a centralized service |

| de Prado et al. [102] | Steering mini autonomous vehicles | STM32L4; GAP8; NXP K64F | Reduced reaction time; reduced data transmission; reduced energy consumption |

| Kocic et al. [103] | Steering autonomous vehicles | Desktop PC edge server | Ability to execute on edge devices |

| Navarro et al. [104] | Pedestrian detection | N/A | Improved performance |

| Bibi et al. [105] | Vehicular ad-hoc network for anomalies | Simulation | Improved road safety |

| Ferdowsi et al. [106] | Intelligent transportation system (ITS) | N/A | Reduced latency; improved reliability |

| Hu et al. [107] | Object detection for autonomous vehicles | Jetson TX2 | Improved detection performance |

| Febbo et al. [108] | Autonomous robots | Jetson Nano | Ability to operate without a centralized service |

| Palossi et al. [109] | Autonomous nano-drones | GAP8; COTS Crazyflie 2.0 | Ability to operate autonomously |

| Alsamhi et al. [110] | Data sharing between drones and wearables | Simulation | Optimized data transmission; reduced latency |

| Zhu et al. [100] | Task offloading in satellite-terrestrial computing networks | Simulation | Reduced runtime consumption |

| Jiang et al. [25] | Electricity theft detection | Simulation | Ability to take advantage of federated learning |

| Zhang et al. [101] | Task offloading; cache content delivery | Simulation | Reduced average delay of task processing |

| Wang et al. [111] | Drone surveillance | Intel Aero Drone; Jetson TX2 | Reduced data transmission |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Grzesik, P.; Mrozek, D. Combining Machine Learning and Edge Computing: Opportunities, Challenges, Platforms, Frameworks, and Use Cases. Electronics 2024, 13, 640. https://doi.org/10.3390/electronics13030640

Grzesik P, Mrozek D. Combining Machine Learning and Edge Computing: Opportunities, Challenges, Platforms, Frameworks, and Use Cases. Electronics. 2024; 13(3):640. https://doi.org/10.3390/electronics13030640

Chicago/Turabian Style: Grzesik, Piotr, and Dariusz Mrozek. 2024. "Combining Machine Learning and Edge Computing: Opportunities, Challenges, Platforms, Frameworks, and Use Cases" Electronics 13, no. 3: 640. https://doi.org/10.3390/electronics13030640

APA Style: Grzesik, P., & Mrozek, D. (2024). Combining Machine Learning and Edge Computing: Opportunities, Challenges, Platforms, Frameworks, and Use Cases. Electronics, 13(3), 640. https://doi.org/10.3390/electronics13030640