Mitigating Adversarial Attacks against IoT Profiling

Abstract

1. Introduction

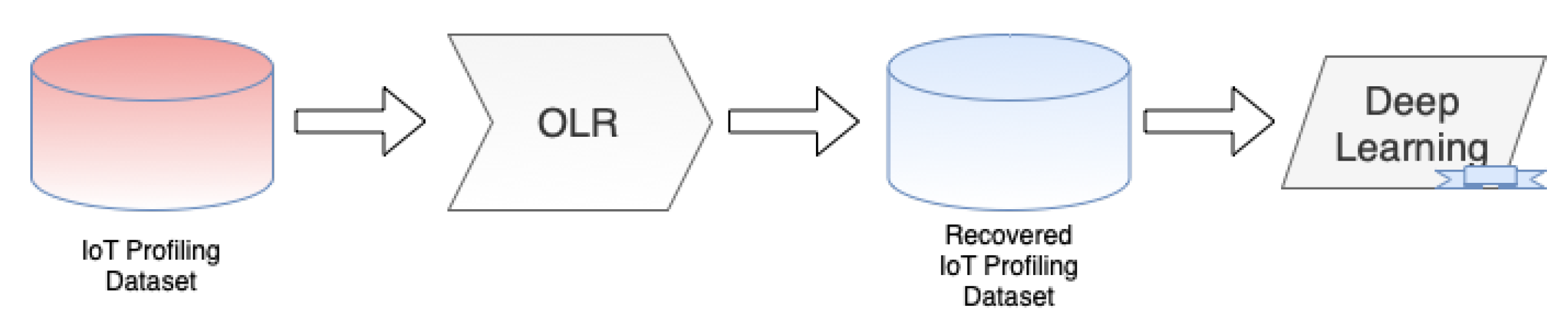

- A framework to mitigate the effects of label flipping attacks in Deep-Learning-based IoT profiling called Overlapping Label Recovery (OLR);

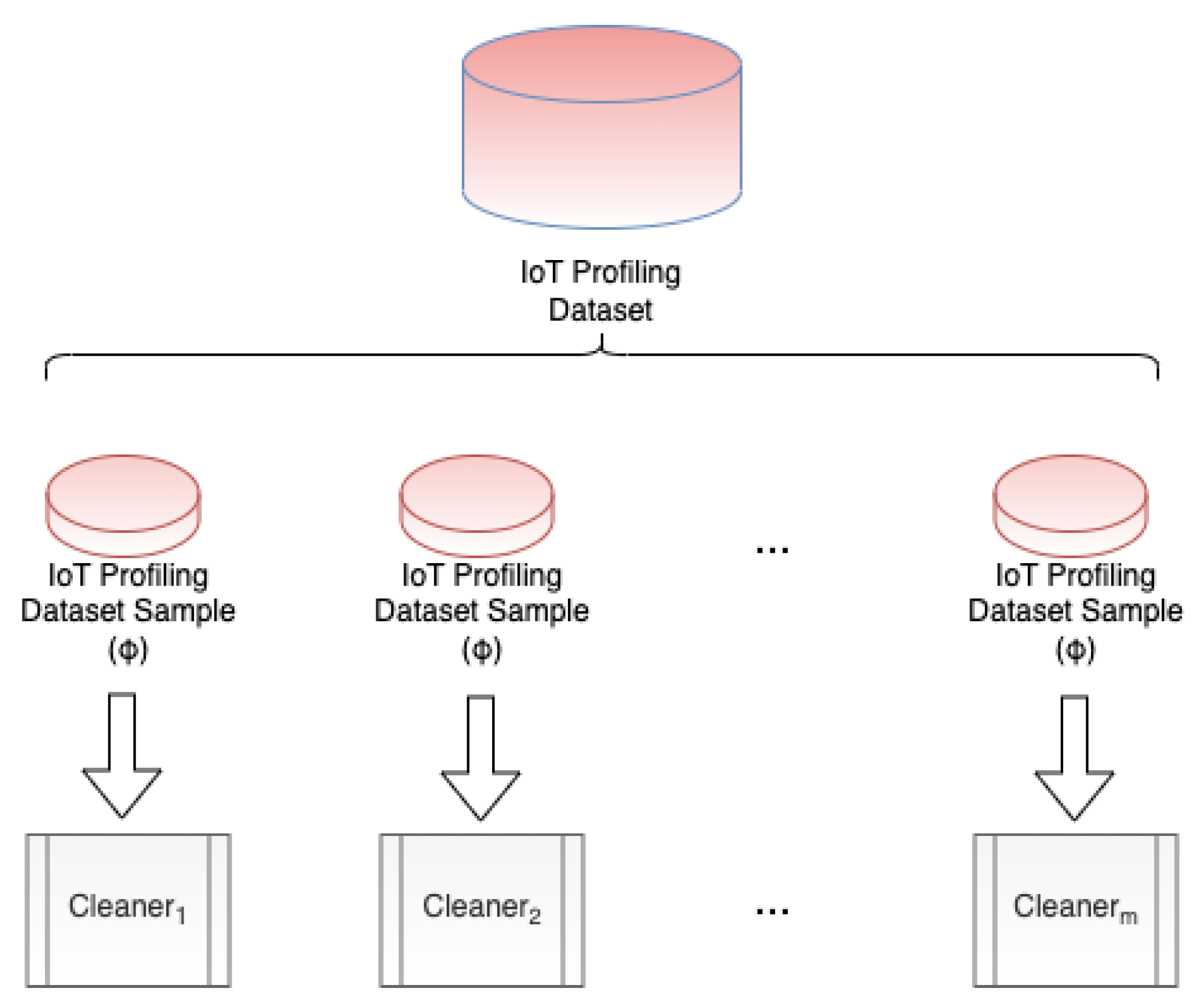

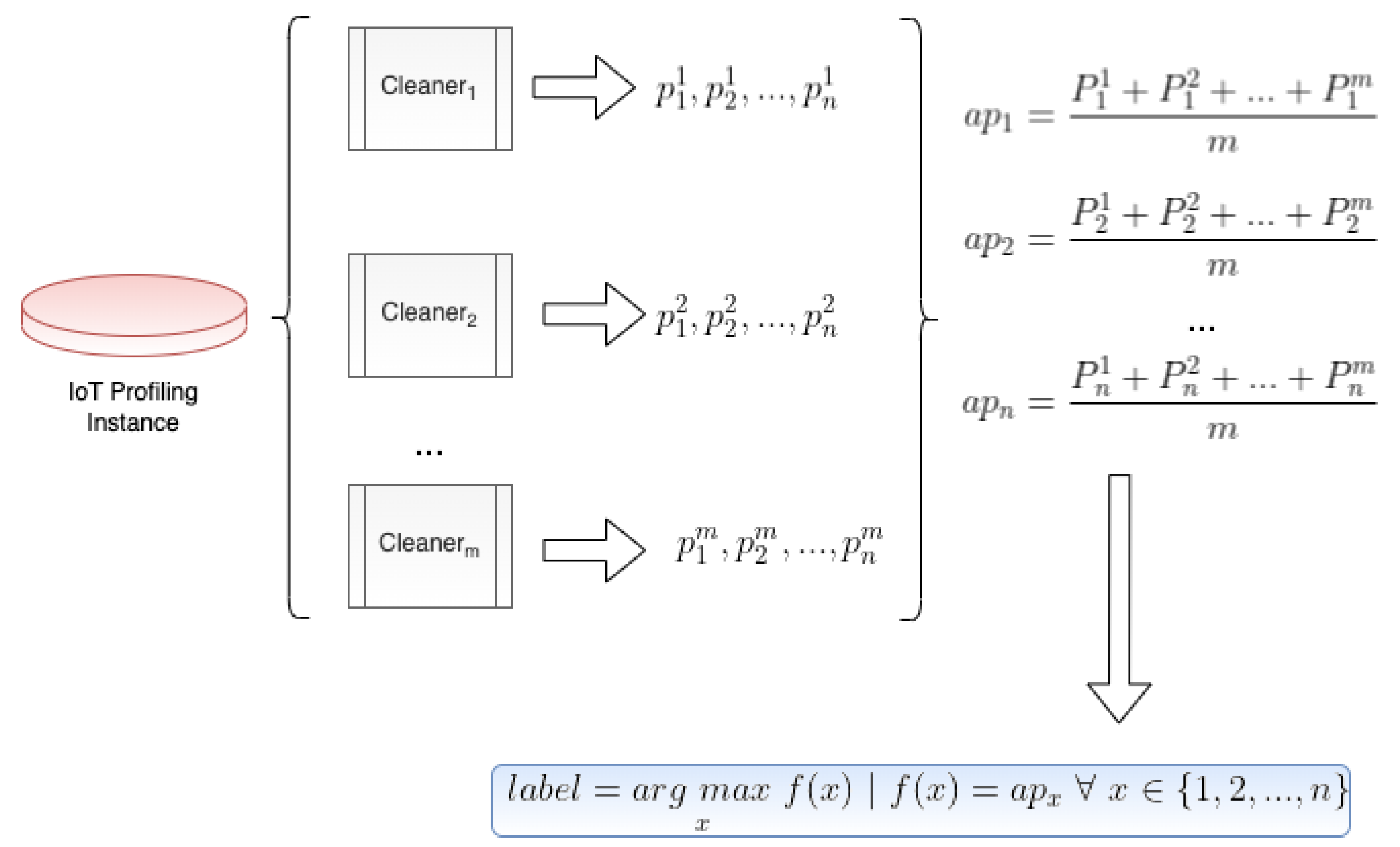

- A novel label recovery mechanism based on overlapping training and data sampling;

- An evaluation model based on the average area between performance curves considering label flipping and recovery procedure.
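The third contribution can be pictured with a short sketch. This excerpt does not give the paper's exact formula, so the code below is only one plausible reading: it measures the area between an "attacked" performance curve and a "recovered" performance curve with the trapezoidal rule, then divides by the x-range to obtain an average gap. The function names, the choice of flip rate as the x-axis, and all numbers are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def area_between_curves(x: Sequence[float],
                        baseline: Sequence[float],
                        recovered: Sequence[float]) -> float:
    """Signed trapezoidal area of (recovered - baseline) over x.

    Positive values mean the recovered curve lies above the baseline.
    """
    if not (len(x) == len(baseline) == len(recovered)):
        raise ValueError("all sequences must have the same length")
    if len(x) < 2:
        raise ValueError("need at least two points")
    area = 0.0
    for i in range(1, len(x)):
        width = x[i] - x[i - 1]
        gap_left = recovered[i - 1] - baseline[i - 1]
        gap_right = recovered[i] - baseline[i]
        # Area of one trapezoid between neighbouring sample points.
        area += 0.5 * (gap_left + gap_right) * width
    return area


def average_area_between_curves(x: Sequence[float],
                                baseline: Sequence[float],
                                recovered: Sequence[float]) -> float:
    """Area normalised by the x-range, i.e. the mean gap between curves."""
    return area_between_curves(x, baseline, recovered) / (x[-1] - x[0])


# Hypothetical values for illustration only (not results from the paper).
flip_rates = [0.0, 0.1, 0.2, 0.3, 0.4]         # fraction of flipped labels
acc_flipped = [0.90, 0.80, 0.70, 0.60, 0.50]    # accuracy under attack
acc_recovered = [0.90, 0.85, 0.80, 0.75, 0.70]  # accuracy after recovery

if __name__ == "__main__":
    print(average_area_between_curves(flip_rates, acc_flipped, acc_recovered))
```

Normalising by the x-range makes the score comparable across experiments that sweep different ranges of flip rates; whether the paper normalises in this way is an assumption.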

2. Related Works

2.1. IoT Profiling

2.2. Label Flipping Mitigation

3. Background

3.1. IoT Profiling & Label Flipping

3.2. Deep Learning (DL) & Random Forest (RF)

4. Overlapping Label Recovery for IoT Profiling

Algorithm 1. Overlapping Label Recovery (OLR) for IoT Profiling

5. Evaluation Method

6. Experiments

6.1. Experiment I

6.2. Experiment II

6.3. Experiment III

6.4. Evaluation

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Neto, E.C.P.; Dadkhah, S.; Sadeghi, S.; Molyneaux, H. Mitigating Adversarial Attacks against IoT Profiling. Electronics 2024, 13, 2646. https://doi.org/10.3390/electronics13132646
