Semi-Supervised Object Detection with Multi-Scale Regularization and Bounding Box Re-Prediction

Abstract

1. Introduction

- (1)

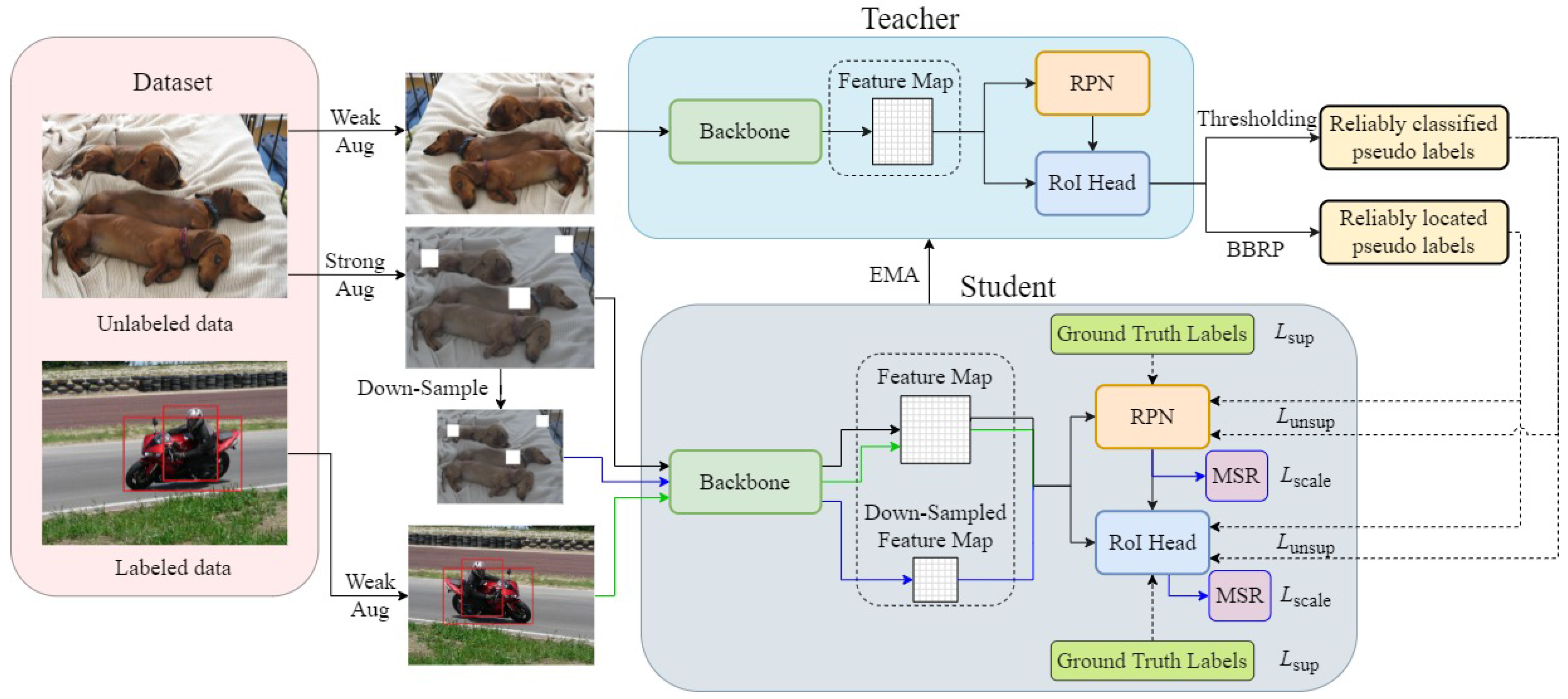

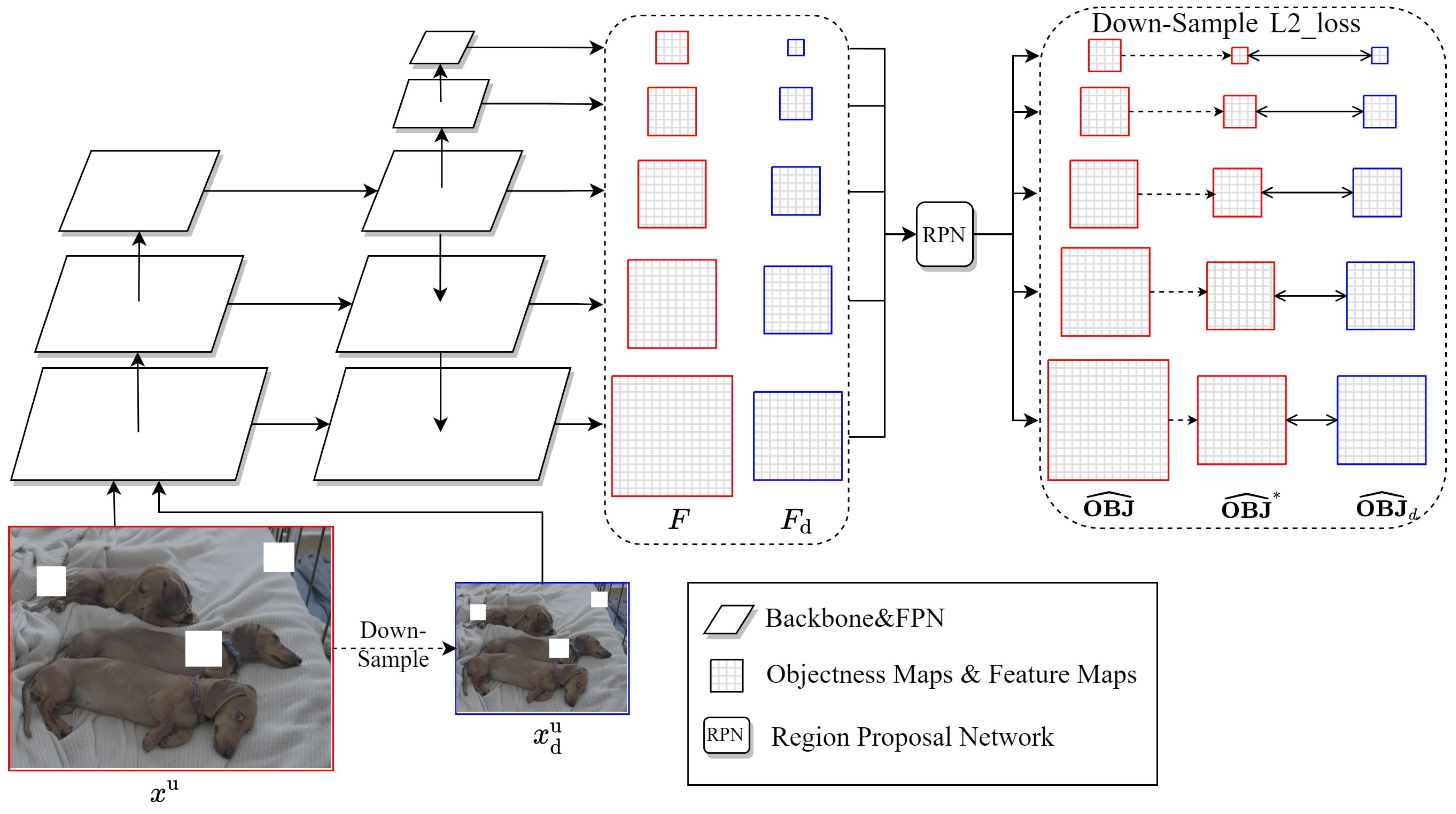

- A novel multi-scale regularization (MSR) strategy is proposed to constrain the Faster R-CNN and to generate the same prediction results for both the input images and their corresponding down-sampled ones, thus achieving better detection accuracy with the student model.

- (2)

- A novel bounding box re-prediction (BBRP) strategy is presented to re-predict the object’s bounding box, obtaining reliably located pseudo-labels of unlabeled data and thus improving the localization capability of the student model.

2. Materials and Methods

2.1. Overview of Our Proposed Method

2.2. Multi-Scale Regularization

| Algorithm 1: Multi-scale regularization. |

2.3. Bounding Box Re-Prediction

| Algorithm 2: Bounding box re-prediction. |

|

2.4. Loss Function

2.5. Datasets

| Dataset | Train | Val | Test | Total | Categories |

|---|---|---|---|---|---|

| VOC2007 | 2501 | 2510 | 4952 | 9963 | 20 |

| VOC2012 | 5717 | 5832 | - | 11,549 | 20 |

| COCO2017 | 118,287 | 5000 | - | 123,287 | 80 |

3. Experiments

3.1. Settings and Details

3.1.1. Evaluation Metrics

3.1.2. Implementation Details

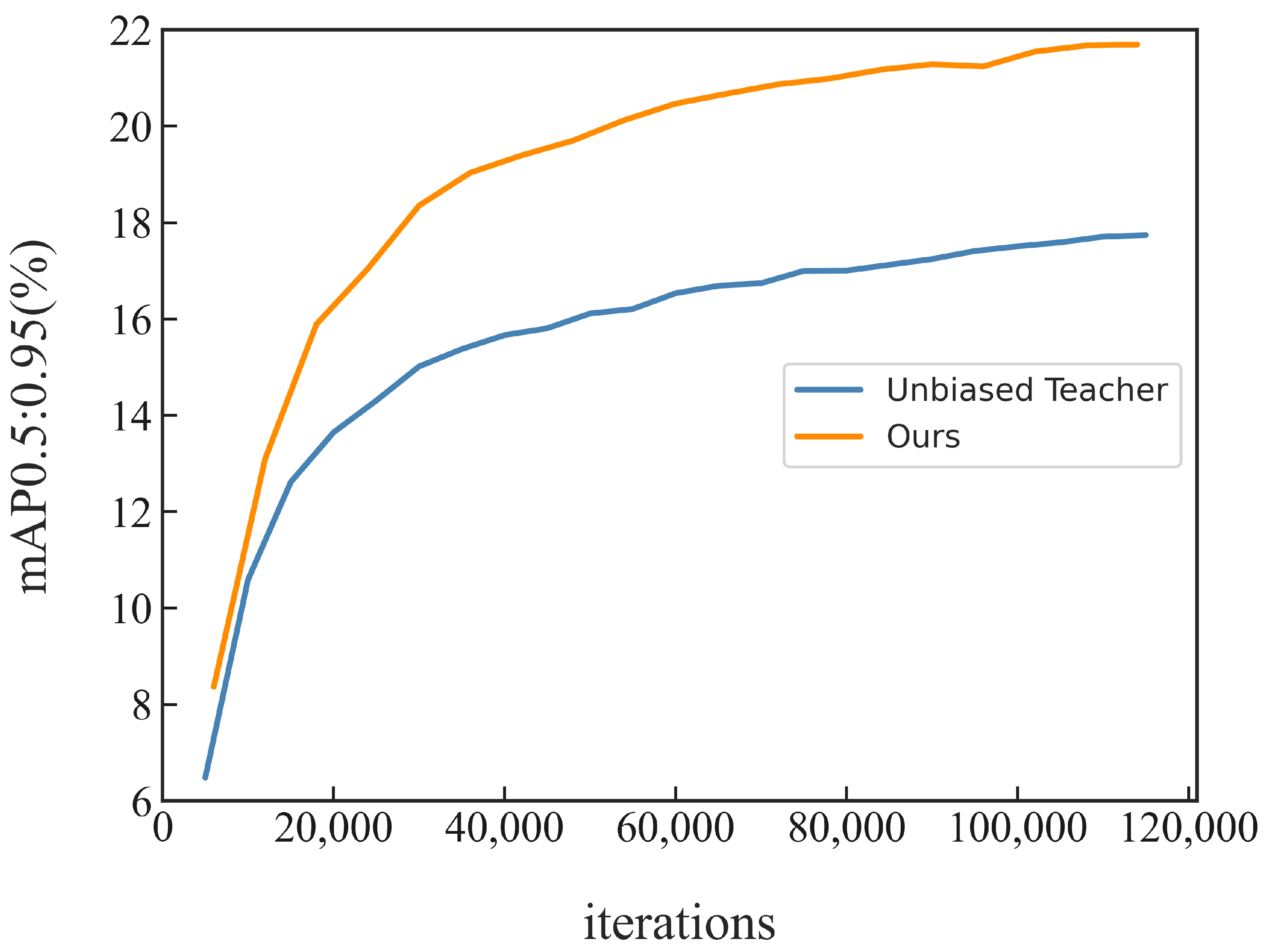

3.2. Comparison with State-of-the-Art Methods

3.3. Ablation Study

3.4. Visualization

4. Discussion

- (1)

- Training efficiency. Our method introduces additional computational costs, which decreases the training efficiency to some extent.

- (2)

- Domain adaptation. When the model begins semi-supervised learning, the training of the model becomes unstable due to the distribution gap between the labeled data and unlabeled data. We will try domain adaptation to alleviate this issue.

- (3)

- Label assignment. Our method obtains reliably classified pseudo-labels using the thresholding method, which is a simple label assignment strategy. In the future, we will try other strategies to improve the performance of our method.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Repbulic of Korea, 27 October–2 November 2019; pp. 9626–9635. [Google Scholar] [CrossRef]

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787. [Google Scholar] [CrossRef]

- Wang, C.; Bochkovskiy, A.; Liao, H.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar] [CrossRef]

- Laine, S.; Aila, T. Temporal Ensembling for Semi-Supervised Learning. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017. [Google Scholar]

- Athiwaratkun, B.; Finzi, M.; Izmailov, P.; Wilson, A.G. There Are Many Consistent Explanations of Unlabeled Data: Why You Should Average. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019. [Google Scholar]

- Xie, Q.; Luong, M.; Hovy, E.H.; Le, Q.V. Self-Training With Noisy Student Improves ImageNet Classification. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 10684–10695. [Google Scholar] [CrossRef]

- Qiao, S.; Shen, W.; Zhang, Z.; Wang, B.; Yuille, A.L. Deep Co-Training for Semi-Supervised Image Recognition. In Proceedings of the Computer Vision-ECCV 2018-15th European Conference, Munich, Germany, 8–14 September 2018; pp. 142–159. [Google Scholar] [CrossRef]

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017. [Google Scholar]

- Luo, Y.; Zhu, J.; Li, M.; Ren, Y.; Zhang, B. Smooth Neighbors on Teacher Graphs for Semi-Supervised Learning. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8896–8905. [Google Scholar] [CrossRef]

- Maaløe, L.; Sønderby, C.K.; Sønderby, S.K.; Winther, O. Auxiliary Deep Generative Models. In Proceedings of the Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, New York City, NY, USA, 19–24 June 2016; pp. 1445–1453. [Google Scholar]

- Springenberg, J.T. Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, 2–4 May 2016. [Google Scholar]

- Zhang, J.; Wang, X.; Zhang, D.; Lee, D.J. Semi-Supervised Group Emotion Recognition Based on Contrastive Learning. Electronics 2022, 11, 3990. [Google Scholar] [CrossRef]

- Jeong, J.; Lee, S.; Kim, J.; Kwak, N. Consistency-based Semi-supervised Learning for Object detection. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; pp. 10758–10767. [Google Scholar]

- Zhou, H.; Ge, Z.; Liu, S.; Mao, W.; Li, Z.; Yu, H.; Sun, J. Dense Teacher: Dense Pseudo-Labels for Semi-supervised Object Detection. In Proceedings of the Computer Vision-ECCV 2022-17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 35–50. [Google Scholar] [CrossRef]

- Guo, Q.; Mu, Y.; Chen, J.; Wang, T.; Yu, Y.; Luo, P. Scale-Equivalent Distillation for Semi-Supervised Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 14502–14511. [Google Scholar] [CrossRef]

- Li, G.; Li, X.; Wang, Y.; Wu, Y.; Liang, D.; Zhang, S. PseCo: Pseudo Labeling and Consistency Training for Semi-Supervised Object Detection. In Proceedings of the Computer Vision-ECCV 2022-17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 457–472. [Google Scholar] [CrossRef]

- Miyato, T.; Maeda, S.; Koyama, M.; Ishii, S. Virtual Adversarial Training: A Regularization Method for Supervised and Semi-Supervised Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1979–1993. [Google Scholar] [CrossRef] [PubMed]

- Sohn, K.; Zhang, Z.; Li, C.; Zhang, H.; Lee, C.; Pfister, T. A Simple Semi-Supervised Learning Framework for Object Detection. arXiv 2020, arXiv:2005.04757. [Google Scholar]

- Zhou, Q.; Yu, C.; Wang, Z.; Qian, Q.; Li, H. Instant-Teaching: An End-to-End Semi-Supervised Object Detection Framework. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Virtual, 19–25 June 2021; pp. 4081–4090. [Google Scholar] [CrossRef]

- Tang, Y.; Chen, W.; Luo, Y.; Zhang, Y. Humble Teachers Teach Better Students for Semi-Supervised Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Virtual, 19–25 June 2021; pp. 3132–3141. [Google Scholar] [CrossRef]

- Li, S.; Liu, J.; Shen, W.; Sun, J.; Tan, C. Robust Teacher: Self-correcting pseudo-label-guided semi-supervised learning for object detection. Comput. Vis. Image Underst. 2023, 235, 103788. [Google Scholar] [CrossRef]

- Liu, Y.; Ma, C.; He, Z.; Kuo, C.; Chen, K.; Zhang, P.; Wu, B.; Kira, Z.; Vajda, P. Unbiased Teacher for Semi-Supervised Object Detection. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual, 3–7 May 2021. [Google Scholar]

- Lin, T.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327. [Google Scholar] [CrossRef] [PubMed]

- Xu, M.; Zhang, Z.; Hu, H.; Wang, J.; Wang, L.; Wei, F.; Bai, X.; Liu, Z. End-to-End Semi-Supervised Object Detection with Soft Teacher. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 3040–3049. [Google Scholar] [CrossRef]

- Feng, Z.; Wang, F. Semi-Supervised Object Detection Algorithm Based on Localization Confidence Weighting. Comput. Eng. Appl. 2023. accepted. [Google Scholar]

- Kim, J.; Jang, J.; Seo, S.; Jeong, J.; Na, J.; Kwak, N. MUM: Mix Image Tiles and UnMix Feature Tiles for Semi-Supervised Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 14492–14501. [Google Scholar] [CrossRef]

- Cai, X.; Luo, F.; Qi, W.; Liu, H. A Semi-Supervised Object Detection Algorithm Based on teacher–student Models with Strong-Weak Heads. Electronics 2022, 11, 3849. [Google Scholar] [CrossRef]

- Liu, Y.; Ma, C.; Kira, Z. Unbiased Teacher v2: Semi-supervised Object Detection for Anchor-free and Anchor-based Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 9809–9818. [Google Scholar] [CrossRef]

- Chen, B.; Li, P.; Chen, X.; Wang, B.; Zhang, L.; Hua, X. Dense Learning based Semi-Supervised Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 4805–4814. [Google Scholar] [CrossRef]

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017. [Google Scholar]

- Lin, T.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar] [CrossRef]

- Lin, T.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision-ECCV 2014-13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755. [Google Scholar] [CrossRef]

- Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.M.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef]

- Pham, V.; Pham, C.; Dang, T. Road Damage Detection and Classification with Detectron2 and Faster R-CNN. In Proceedings of the 2020 IEEE International Conference on Big Data (IEEE BigData 2020), Atlanta, GA, USA, 10–13 December 2020; pp. 5592–5601. [Google Scholar] [CrossRef]

- Devries, T.; Taylor, G.W. Improved Regularization of Convolutional Neural Networks with Cutout. arXiv 2017, arXiv:1708.04552. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

| mAP50:95 | ||

|---|---|---|

| 0.2 | 0.98 | 21.90 |

| 0.95 | 21.95 | |

| 0.9 | 21.82 | |

| 0.8 | 21.34 | |

| 0.7 | 20.67 | |

| 0.3 | 0.98 | 22.21 |

| 0.95 | 22.46 | |

| 0.9 | 22.13 | |

| 0.8 | 21.65 | |

| 0.7 | 20.89 | |

| 0.4 | 0.98 | 21.70 |

| 0.95 | 21.65 | |

| 0.9 | 21.51 | |

| 0.8 | 21.19 | |

| 0.7 | 20.38 |

| mAP50:95 | ||

|---|---|---|

| 1.0 | 1.0 | 21.12 |

| 2.0 | 21.02 | |

| 3.0 | 20.78 | |

| 2.0 | 1.0 | 22.46 |

| 2.0 | 22.32 | |

| 3.0 | 21.95 | |

| 3.0 | 1.0 | Cannot Converge |

| 2.0 | Cannot Converge | |

| 3.0 | Cannot Converge |

| mAP50:95 | ||||

|---|---|---|---|---|

| Method | 1% | 2% | 5% | 10% |

| Supervised | 9.05 | 12.70 | 18.47 | 23.86 |

| CSD [17] | 10.51 | 13.93 | 18.63 | 22.46 |

| STAC [22] | 13.97 | 18.25 | 24.38 | 28.64 |

| Instant-Teaching [23] | 18.05 | 22.45 | 26.75 | 30.40 |

| Humble Teacher [24] | 16.96 | 21.72 | 27.70 | 31.61 |

| Robust Teacher [25] | 17.91 | 21.88 | 25.81 | 28.81 |

| Unbiased Teacher [26] | 18.14 | 22.23 | 26.65 | 29.13 |

| MUM [30] | 21.88 | 24.84 | 28.52 | 31.87 |

| Our Method | 22.46 | 26.35 | 29.73 | 32.89 |

| Method | mAP50 | mAP50:95 |

|---|---|---|

| Supervised | 76.70 | 43.60 |

| CSD [17] | 74.70 | - |

| STAC [22] | 77.45 | 44.64 |

| Instant-Teaching [23] | 79.20 | 50.00 |

| Humble Teacher [24] | 80.94 | 53.04 |

| Robust Teacher [25] | 80.24 | 53.47 |

| Unbiased Teacher [26] | 79.30 | 53.50 |

| MUM [30] | 80.04 | 52.31 |

| Our Method | 81.29 | 55.26 |

| Aug | MSR (RPN) | MSR (RoI Head) | BBRP | mAP50 | mAP75 | mAP50:95 |

|---|---|---|---|---|---|---|

| 33.50 | 16.65 | 17.71 | ||||

| ✓ | 35.62 | 18.44 | 19.18 | |||

| ✓ | ✓ | 36.18 | 19.24 | 19.86 | ||

| ✓ | ✓ | ✓ | 37.02 | 20.02 | 20.74 | |

| ✓ | ✓ | ✓ | ✓ | 37.21 | 21.44 | 22.46 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shao, Y.; Lv, C.; Zhang, R.; Yin, H.; Che, M.; Yang, G.; Jiang, Q. Semi-Supervised Object Detection with Multi-Scale Regularization and Bounding Box Re-Prediction. Electronics 2024, 13, 221. https://doi.org/10.3390/electronics13010221

Shao Y, Lv C, Zhang R, Yin H, Che M, Yang G, Jiang Q. Semi-Supervised Object Detection with Multi-Scale Regularization and Bounding Box Re-Prediction. Electronics. 2024; 13(1):221. https://doi.org/10.3390/electronics13010221

Chicago/Turabian StyleShao, Yeqin, Chang Lv, Ruowei Zhang, He Yin, Meiqin Che, Guoqing Yang, and Quan Jiang. 2024. "Semi-Supervised Object Detection with Multi-Scale Regularization and Bounding Box Re-Prediction" Electronics 13, no. 1: 221. https://doi.org/10.3390/electronics13010221

APA StyleShao, Y., Lv, C., Zhang, R., Yin, H., Che, M., Yang, G., & Jiang, Q. (2024). Semi-Supervised Object Detection with Multi-Scale Regularization and Bounding Box Re-Prediction. Electronics, 13(1), 221. https://doi.org/10.3390/electronics13010221