DAAM-YOLOV5: A Helmet Detection Algorithm Combined with Dynamic Anchor Box and Attention Mechanism

Abstract

1. Introduction

- The improved Mosaic data enhancement method randomly crops, scales, and arranges up to nine (instead of four) images and applies suitable adjustments to lighting and rotation angle, producing training samples with varied brightness, rotation angles, and target sizes. This increases the diversity of the dataset and improves the algorithm's recognition ability under complex weather, lighting, camera shake, and other conditions.

- A new dynamic anchor box mechanism, Dynamic Anchor Feature Selection with K-means++ (K-DAFS), is proposed. Building on the dynamic anchor box [1], it uses the K-means++ [2] clustering algorithm to adjust the sizes of the training anchor boxes automatically. This accelerates the convergence of the anchor boxes, resolves the mismatch between the prior (anchor) information and the ground truth, and increases detection speed.

- A new YOLOv5 model with an attention mechanism is proposed. The attention mechanism increases the weight of head information and suppresses background information. ROI pooling is applied in the spatial attention module to tune the model's sensitivity to object edge information, which improves the recognition rate for occluded targets.

- The GIoU used in the original model is replaced with CIoU. When one of the prediction box and the ground-truth box contains the other, GIoU_Loss degenerates to the IoU loss, which causes slow convergence or non-convergence; CIoU avoids this problem.
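The degeneration described in the last item can be checked numerically. In this minimal Python sketch (boxes as (x1, y1, x2, y2); the helper names are illustrative, not taken from the paper's code), `giou` uses the usual definition IoU − |C \ (A ∪ B)| / |C|, where C is the smallest box enclosing both, and `ciou` uses the standard CIoU formula IoU − ρ²/c² − αv; the corresponding losses are 1 − GIoU and 1 − CIoU.

```python
import math


def _iou_union_enclosing(a, b):
    """IoU of boxes a and b, their union area, and the smallest box enclosing both."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    enclosing = (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))
    return inter / union, union, enclosing


def giou(pred, gt):
    """Generalized IoU: IoU minus the share of the enclosing box not covered by the union."""
    iou, union, c = _iou_union_enclosing(pred, gt)
    c_area = (c[2] - c[0]) * (c[3] - c[1])
    return iou - (c_area - union) / c_area


def ciou(pred, gt, eps=1e-9):
    """Complete IoU: IoU minus a center-distance penalty and an aspect-ratio penalty."""
    iou, _, c = _iou_union_enclosing(pred, gt)
    # Squared distance between the box centers, normalised by the enclosing box's squared diagonal.
    rho2 = ((pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2) ** 2 + \
           ((pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2) ** 2
    c2 = (c[2] - c[0]) ** 2 + (c[3] - c[1]) ** 2
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    w_p, h_p = pred[2] - pred[0], pred[3] - pred[1]
    w_g, h_g = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(w_g / h_g) - math.atan(w_p / h_p)) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return iou - rho2 / c2 - alpha * v


gt = (0, 0, 10, 10)
centered = (3, 3, 7, 7)    # inside gt, same center as gt
off_center = (1, 1, 5, 5)  # inside gt, shifted toward a corner

for name, box in [("centered", centered), ("off_center", off_center)]:
    print(name, round(giou(box, gt), 4), round(ciou(box, gt), 4))
```

Both candidate boxes lie inside the ground truth, so the enclosing box equals the ground-truth box, the GIoU penalty vanishes, and both boxes get GIoU = IoU = 0.16 regardless of where they sit. CIoU still separates them (0.16 for the centered box, 0.12 for the off-center one) through its center-distance term, which is what keeps the loss informative in the containment case.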

2. Related Works

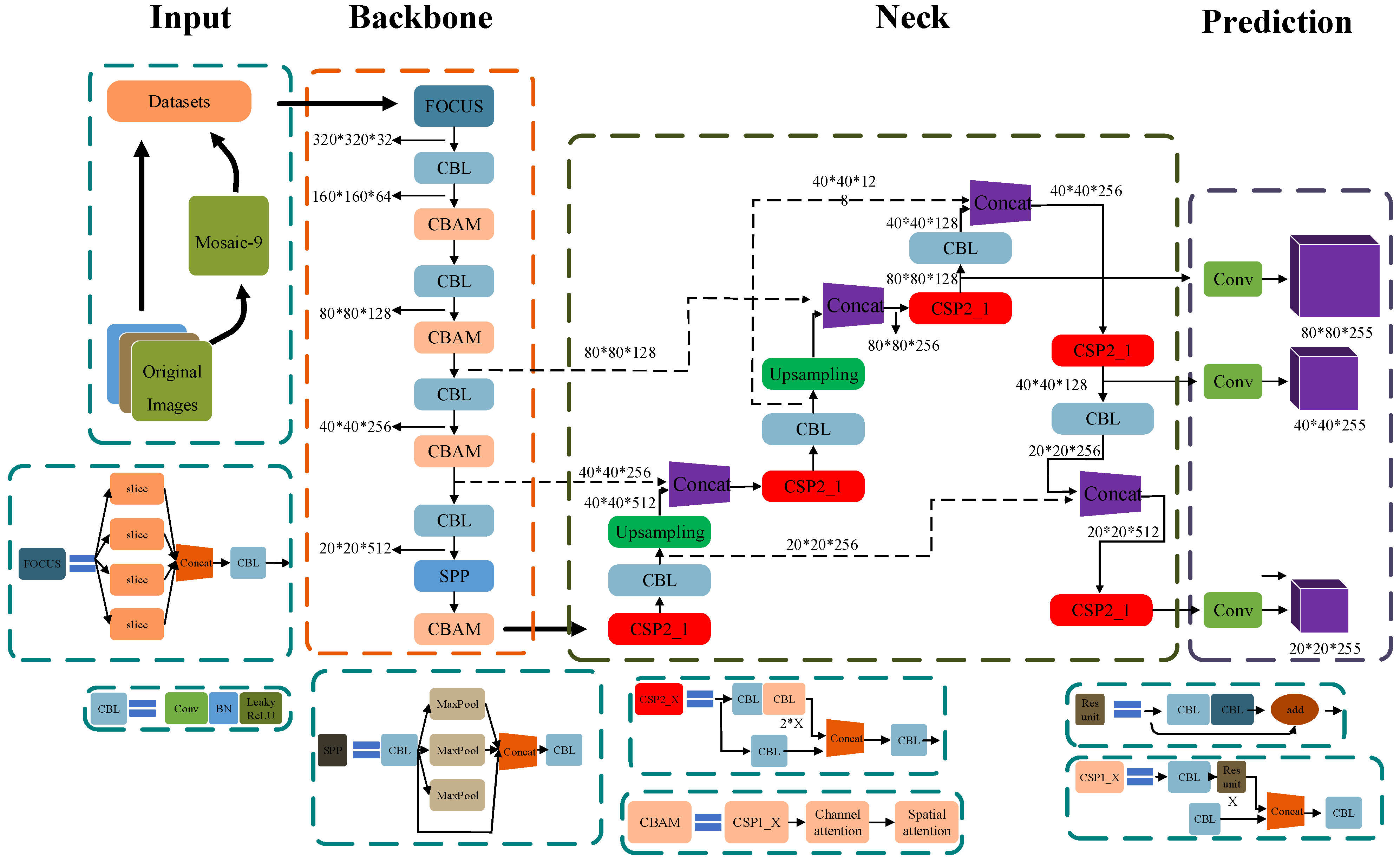

3. DAAM-YOLOv5

3.1. Network Structure

- In the Input stage, Mosaic-4 data enhancement is replaced with Mosaic-9;

- A CBAM [5] attention mechanism is added to the Backbone;

- A K-DAFS dynamic anchor box is added during the training phase and recognition phase;

- In the Prediction part, CIoU is used to replace the original GIoU to compensate for the inherent defects of GIoU.
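Mosaic-9 is described only at a high level, so the following Python sketch shows one possible way to compute the geometry of a nine-image mosaic. It assumes a square canvas (640 px in the example), a 3×3 grid whose column widths and row heights are drawn at random, each image stretched to fill its cell, and a random per-tile brightness factor standing in for the lighting adjustment. The function names and parameters are hypothetical; cropping, rotation, and the actual pixel processing are omitted, so this is not the authors' implementation.

```python
import random


def _random_splits(length, rng, jitter=0.5):
    """Cut `length` pixels into three segments with random relative sizes."""
    weights = [rng.uniform(1 - jitter, 1 + jitter) for _ in range(3)]
    total = sum(weights)
    b1 = round(length * weights[0] / total)
    b2 = round(length * (weights[0] + weights[1]) / total)
    return [0, b1, b2, length]


def mosaic9(images, canvas=640, seed=None):
    """Place nine images on a canvas x canvas grid of 3x3 randomly sized cells.

    `images` is a list of nine dicts {"size": (w, h), "boxes": [(x1, y1, x2, y2), ...]}.
    Returns the tile placements and every box mapped into canvas coordinates.
    Only geometry is computed; no pixels are resampled.
    """
    if len(images) != 9:
        raise ValueError("Mosaic-9 needs exactly nine images")
    rng = random.Random(seed)
    xs, ys = _random_splits(canvas, rng), _random_splits(canvas, rng)
    order = rng.sample(range(9), 9)  # random arrangement of the nine images
    tiles, boxes = [], []
    for cell, idx in enumerate(order):
        row, col = divmod(cell, 3)
        x1, y1, x2, y2 = xs[col], ys[row], xs[col + 1], ys[row + 1]
        w, h = images[idx]["size"]
        sx, sy = (x2 - x1) / w, (y2 - y1) / h  # stretch the image to fill its cell
        tiles.append({
            "image": idx,
            "cell": (x1, y1, x2, y2),
            "brightness": rng.uniform(0.6, 1.4),  # per-tile lighting gain to apply to pixels
        })
        for bx1, by1, bx2, by2 in images[idx]["boxes"]:
            boxes.append((idx, x1 + bx1 * sx, y1 + by1 * sy, x1 + bx2 * sx, y1 + by2 * sy))
    return tiles, boxes


imgs = [{"size": (320, 240), "boxes": [(10, 10, 50, 60)]} for _ in range(9)]
tiles, boxes = mosaic9(imgs, canvas=640, seed=0)
print(len(tiles), len(boxes))  # 9 9
```

Because the nine cells partition the canvas exactly, every label box stays inside the mosaic and no canvas area is left empty; a fuller version would also crop each image and jitter its rotation before mapping the boxes.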

3.2. Improvements over the Original

3.2.1. Mosaic-9 Data Enhancement

3.2.2. K-DAFS Dynamic Anchor Box

- The set B of prediction boxes is obtained from the feedback between the upper and lower levels of the BFF, and the center point of one prediction box in B is randomly selected as the initial center point.

- The Euclidean distances between the initial center point and the four anchor points of the ground-truth box are calculated, and the farthest of these points is taken as the new center point.

- The minimum Euclidean distance E between the new center point and the anchor points of all prediction boxes in B is calculated; the anchor box j corresponding to E is the optimal anchor box.
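The selection steps above are stated in prose only, so the Python sketch below is just one literal reading of them, under assumptions the paper does not confirm: boxes are (x1, y1, x2, y2) tuples, the "four anchor points" of the ground-truth box are its corners, the "anchor point" of each prediction box is its center, and the set B is passed in directly (the BFF feedback that produces it is not modelled). All function names are hypothetical.

```python
import math
import random


def center(box):
    """Center point of an (x1, y1, x2, y2) box."""
    return ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)


def corners(box):
    """Assumed 'four anchor points' of a box: its corner points."""
    x1, y1, x2, y2 = box
    return [(x1, y1), (x2, y1), (x1, y2), (x2, y2)]


def select_optimal_anchor(B, gt_box, rng):
    """Hypothetical literal reading of the K-DAFS selection steps; returns (j, E)."""
    # Step 1: the center of a randomly chosen prediction box in B is the initial center point.
    c0 = center(rng.choice(B))
    # Step 2: the ground-truth corner farthest from c0 becomes the new center point.
    c1 = max(corners(gt_box), key=lambda p: math.dist(p, c0))
    # Step 3: E is the smallest distance from c1 to a prediction box's anchor point
    # (assumed here to be its center); the box j attaining E is taken as optimal.
    distances = [math.dist(center(box), c1) for box in B]
    j = min(range(len(B)), key=distances.__getitem__)
    return j, distances[j]


B = [(0, 0, 2, 2), (8, 8, 10, 10), (4, 4, 6, 6)]
gt = (0, 0, 10, 10)
j, E = select_optimal_anchor(B, gt, random.Random(0))
print(j, round(E, 3))
```

In this toy set, whichever box is drawn in step 1, the selected prediction box has its center √2 px from the chosen ground-truth corner; which corner is chosen, and therefore j, depends on the random draw.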

3.2.3. Attention Mechanism

4. Experimental Results and Analysis

4.1. Experimental Datasets

4.2. Experimental Environments

4.3. Experimental Hyperparameters and Evaluation Criteria

4.4. Training Results

4.5. Detection Results of DAAM-YOLOv5 in Various Cases

4.6. Comparison of Detection Effects

4.6.1. Comparison between the YOLOv5 Algorithm and DAAM-YOLOv5 Algorithm

4.6.2. Ablation Experiment Comparison

- Adding CBAM alone raises the mAP of the model by 2.36 percentage points. Compared with the original YOLOv5, however, detection speed falls by 17 frames/s and recall by 2.81 percentage points. CBAM therefore improves mAP at the cost of somewhat lower detection speed and a higher missed-detection rate.

- Comparing the second and third rows shows that the mAP of the SE attention mechanism is 0.41 percentage points lower than that of the CBAM attention mechanism, but SE is faster by 9 frames/s.

- Comparing the first and fourth rows shows that Mosaic-9 data enhancement increases mAP by 1.62 percentage points while reducing detection speed by 11 frames/s. It also markedly improves accuracy and recall, by 4.35 and 8.92 percentage points, respectively.

- The fifth row shows that adding the K-DAFS dynamic anchor box slightly lowers mAP, by 0.96 percentage points, but clearly improves detection speed and recall, by 23 frames/s and 10.97 percentage points, respectively. The K-DAFS module therefore substantially increases detection speed while reducing the missed-detection rate.

- With the CBAM attention mechanism, Mosaic-9 data augmentation, and K-DAFS all integrated, the model is slower only than the K-DAFS model, yet it achieves the highest mAP. Compared with the original YOLOv5, mAP, detection speed, and recall improve by 4.32 percentage points, 9 frames/s, and 6.85 percentage points, respectively.
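The comparisons above are simple differences between rows of the ablation table. This Python sketch recomputes them from the table values so each quoted figure can be traced; the dictionary layout and the helper name `delta_vs_baseline` are illustrative choices, and ratio metrics are reported as absolute differences in percentage points.

```python
# Values copied from the ablation table.
rows = {
    "YOLOv5":          {"accuracy": 0.8549, "recall": 0.7536, "map50": 0.9204, "fps": 135},
    "YOLOv5+CBAM":     {"accuracy": 0.8841, "recall": 0.7255, "map50": 0.9440, "fps": 118},
    "YOLOv5+SE":       {"accuracy": 0.8658, "recall": 0.7320, "map50": 0.9399, "fps": 127},
    "YOLOv5+Mosaic-9": {"accuracy": 0.8984, "recall": 0.8428, "map50": 0.9366, "fps": 124},
    "YOLOv5+K-DAFS":   {"accuracy": 0.8837, "recall": 0.8633, "map50": 0.9108, "fps": 158},
    "Ours":            {"accuracy": 0.8708, "recall": 0.8221, "map50": 0.9636, "fps": 144},
}


def delta_vs_baseline(name, baseline="YOLOv5"):
    """Change of `name` relative to `baseline`: ratios in percentage points, speed in frames/s."""
    b, r = rows[baseline], rows[name]
    return {
        "accuracy_pp": round((r["accuracy"] - b["accuracy"]) * 100, 2),
        "recall_pp": round((r["recall"] - b["recall"]) * 100, 2),
        "map50_pp": round((r["map50"] - b["map50"]) * 100, 2),
        "fps": r["fps"] - b["fps"],
    }


for name in rows:
    if name != "YOLOv5":
        print(name, delta_vs_baseline(name))
```

Passing `baseline="YOLOv5+CBAM"` for the SE row gives the SE-versus-CBAM comparison: an mAP@0.5 gap of −0.41 percentage points and a speed gain of 9 frames/s. Note that SE's precision (0.8699) is actually higher than CBAM's (0.8631); the 0.41-point gap is in mAP.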

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| BFF | Bidirectional Feature Fusion |
| ROI | Region of Interest |
| mAP | Mean Average Precision |
| DAFS | Dynamic Anchor Feature Selection |
| IoU | Intersection over Union |
| CIoU | Complete Intersection over Union |
| GIoU | Generalized Intersection over Union |
| SAM | Stereo Attention Module |
| CAM | Class Activation Mapping |
| CBAM | Convolutional Block Attention Module |
| SE | Squeeze-and-Excitation |
| R-CNN | Region-based Convolutional Neural Network |
| R-FCN | Region-based Fully Convolutional Network |
| SSD | Single-shot MultiBox Detector |
| R-SSD | Rainbow Single-shot MultiBox Detector |
| DSSD | Deconvolutional Single-shot MultiBox Detector |
| FSSD | Feature Fusion Single-shot MultiBox Detector |
| NMS | Non-maximum Suppression |
| CSP1_X | Cross Stage Partial_1 |
| ARM | Anchor Refinement Module |
| SGD | Stochastic Gradient Descent |
| GFLOPs | Giga Floating-point Operations |

References

- [1] Li, S.; Yang, L.; Huang, J.; Hua, X.S.; Zhang, L. Dynamic anchor feature selection for single-shot object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6609–6618.

- [2] Arthur, D.; Vassilvitskii, S. k-Means++: The Advantages of Careful Seeding; Stanford University: Stanford, CA, USA, 2006.

- [3] Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- [4] Ying, X.; Wang, Y.; Wang, L.; Sheng, W.; An, W.; Guo, Y. A stereo attention module for stereo image super-resolution. IEEE Signal Process. Lett. 2020, 27, 496–500.

- [5] Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- [6] Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- [7] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- [8] Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- [9] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

- [10] He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- [11] Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. Adv. Neural Inf. Process. Syst. 2016, 29, 379–387.

- [12] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [13] Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- [14] Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- [15] Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- [16] Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer International Publishing: Amsterdam, The Netherlands, 2016; pp. 21–37.

- [17] Jeong, J.; Park, H.; Kwak, N. Enhancement of SSD by concatenating feature maps for object detection. arXiv 2017, arXiv:1705.09587.

- [18] Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- [19] Li, Z.; Zhou, F. FSSD: Feature fusion single shot multibox detector. arXiv 2017, arXiv:1712.00960.

- [20] Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- [21] Han, K.; Zeng, X. Deep Learning-Based Workers Safety Helmet Wearing Detection on Construction Sites Using Multi-Scale Features. IEEE Access 2022, 10, 718–729.

- [22] Li, M.; Zhang, W.; Hu, B.; Kang, J.; Wang, Y.; Lu, S. Automatic Assessment of Depression and Anxiety through Encoding Pupil-wave from HCI in VR Scenes. ACM Trans. Multimed. Comput. Commun. Appl. 2022.

- [23] Yan, J.; Zhao, L.; Diao, W.; Wang, H.; Sun, X. AF-EMS Detector: Improve the Multi-Scale Detection Performance of the Anchor-Free Detector. Remote Sens. 2021, 13, 160.

- [24] Tan, S.; Lu, G.; Jiang, Z.; Huang, L. Improved YOLOv5 network model and application in safety helmet detection. In Proceedings of the 2021 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Nagoya, Japan, 4–6 March 2021; pp. 330–333.

- [25] Li, J.; Liu, H.; Wang, T.; Jiang, M.; Wang, S.; Li, K.; Zhao, X. Safety helmet wearing detection based on image processing and machine learning. In Proceedings of the 2017 Ninth International Conference on Advanced Computational Intelligence (ICACI), Doha, Qatar, 4–6 February 2017; pp. 201–205.

- [26] Qing, T.; Yuan, H.; Fei, D.; Yao, N. Research on Head and Shoulders Detection Algorithm in Complex Scene Based on YOLOv5. In Proceedings of the 2022 IEEE Asia-Pacific Conference on Image Processing, Electronics and Computers (IPEC), Dalian, China, 14–16 April 2022; pp. 368–371.

- [27] Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

- [28] Nan, X.; Lu, W.; Chongliu, J.; Yuemou, J.; Xiaoxia, M. Simulation of Occluded Pedestrian Detection Based on Improved YOLO. J. Syst. Simul. 2023, 35, 286.

- [29] Dai, B.; Nie, Y.; Cui, W.; Liu, R.; Zheng, Z. Real-time Safety Helmet Detection System based on Improved SSD. In Proceedings of the 2nd International Conference on Artificial Intelligence and Advanced Manufacture, Manchester, UK, 15–17 October 2020; pp. 95–99.

- [30] Orhan, A.E.; Pitkow, X. Skip connections eliminate singularities. arXiv 2017, arXiv:1701.09175.

- [31] Tibshirani, R.; Walther, G.; Hastie, T. Estimating the number of clusters in a data set via the gap statistic. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2001, 63, 411–423.

- [32] Zhou, X.; Lan, X.; Zhang, H.; Tian, Z.; Zhang, Y.; Zheng, N. Fully convolutional grasp detection network with oriented anchor box. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7223–7230.

- [33] Sloss, A.; Symes, D.; Wright, C. ARM System Developer’s Guide: Designing and Optimizing System Software; Elsevier: Amsterdam, The Netherlands, 2004.

- [34] Zagoruyko, S.; Komodakis, N. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. arXiv 2016, arXiv:1612.03928.

- [35] Song, S.; Chaudhuri, K.; Sarwate, A.D. Stochastic gradient descent with differentially private updates. In Proceedings of the 2013 IEEE Global Conference on Signal and Information Processing, Austin, TX, USA, 3–5 December 2013; pp. 245–248.

| Configuration Name | Version / Parameters |
|---|---|
| Operating system | Windows 10 |
| Graphics (GPU) | 4 × Tesla P4 (8 GB each) |
| Processor (CPU) | 16-core Intel(R) Xeon(R) Gold 5120 CPU @ 2.2 GHz |
| Framework | PyTorch 1.10.2 |
| GPU acceleration environment | CUDA 10.2 |

| Parameter | Value |
|---|---|
| Learning rate | 0.01 |
| Weight decay | 0.00002 |
| Batch size | 32 |
| IoU threshold | 0.35 |
| Momentum factor | 0.937 |
| Epochs | 300 |

| Algorithm (mAP@0.5) | Epoch 10 | Epoch 20 | Epoch 50 | Epoch 150 | Epoch 234 | Epoch 248 | Epoch 299 |
|---|---|---|---|---|---|---|---|
| YOLOv3 | 0.78805 | 0.82702 | 0.84067 | 0.89059 | 0.89519 | 0.89512 | 0.89322 |
| YOLOv5 | 0.76171 | 0.80696 | 0.87373 | 0.92099 | 0.92049 | 0.92041 | 0.91988 |
| YOLOX | 0.83723 | 0.92661 | 0.93067 | 0.95289 | 0.95618 | 0.95675 | 0.95464 |
| Ours | 0.88596 | 0.90738 | 0.95428 | 0.96609 | 0.96234 | 0.96363 | 0.96344 |

| Algorithm | Accuracy | Precision | Recall | Parameters (M) | mAP@0.5 | GFLOPs | Recognition Speed (frames/s) |
|---|---|---|---|---|---|---|---|
| YOLOv5 | 0.8549 | 0.8465 | 0.7536 | 7.2 | 0.9204 (+0) | 16.1 (+0) | 135 (+0) |
| YOLOv5+CBAM | 0.8841 | 0.8631 | 0.7255 | 8.0 | 0.9440 (+0.0236) | 14.9 (−1.2) | 118 (−17) |
| YOLOv5+SE | 0.8658 | 0.8699 | 0.7320 | 8.1 | 0.9399 (+0.0195) | 15.5 (−0.6) | 127 (−8) |
| YOLOv5+Mosaic-9 | 0.8984 | 0.8223 | 0.8428 | 7.5 | 0.9366 (+0.0162) | 16.4 (+0.3) | 124 (−11) |
| YOLOv5+K-DAFS | 0.8837 | 0.8037 | 0.8633 | 8.5 | 0.9108 (−0.0096) | 16.8 (+0.7) | 158 (+23) |
| Ours | 0.8708 | 0.8471 | 0.8221 | 10.3 | 0.9636 (+0.0432) | 16.5 (+0.4) | 144 (+9) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tai, W.; Wang, Z.; Li, W.; Cheng, J.; Hong, X. DAAM-YOLOV5: A Helmet Detection Algorithm Combined with Dynamic Anchor Box and Attention Mechanism. Electronics 2023, 12, 2094. https://doi.org/10.3390/electronics12092094