Abstract

Many machine-learning-enabled approaches towards anomaly detection depend on the availability of vast training data. Our data are formed from power readings of cycles from domestic appliances, such as dishwashers or washing machines, and contain no known examples of anomalous behaviour. Moreover, we are limited to the machine's voltage, amperage, and wattage readings, drawn from a retrofitted power outlet in 60-s samples. No rich sensor data or previous insights are available as a training basis, which limits our ability to leverage existing work. We design a system to monitor the behaviour of electrical appliances. This system requires special consideration because different power cycles from the same machine can exhibit different behaviours. It accounts for this by clustering unseen cycle patterns into siloed training datasets with corresponding learned parameters. The cycles are then passed in real time to an autoencoder ensemble for reconstruction-based anomaly detection, which uses the reconstruction error to flag anomalous points in time. On a real-world machine dataset injected with stochastic, proportionate anomalies, the system correctly identifies and trains appropriate cycle clusters of data streams.

1. Introduction

Anomaly detection is a rich problem set in the fields of both machine learning and time-series analysis. Its goal is to uncover unexpected deviations of a time series from normal working conditions. It has proven to be a pervasive solution across multiple real-world applications and domains, including medicine [1], engineering [2], and finance [3]. Many of these fields employ this technology to closely monitor critical concerns of their applications.

Although several complex machine learning models have recently been shown to work well in time-series anomaly detection [4,5,6], many solutions are built for fixed and well-known domains, e.g., the analysis of a particular machine or medical condition. The objective of this paper is to design a reliable machine-learning-based monitoring environment for electrical appliances with varying power cycle patterns. Part of the challenge stems from the lack of the rich sensor readings commonly leveraged in applications such as predictive maintenance.

Supervised training requires labelled data, which are laborious to compile manually [7], even before considering the vast number of observations common in time-series datasets. These challenges shape our requirements: the system must accept unlabelled, shifting datasets. Specifically, it must be capable of recognising the different behaviours of wash cycles and of predicting, from a subset of similar cycles, when an appliance exhibits unpermitted, anomalous behaviour.

The novelty in this paper comes from the marriage of an ensemble of autoencoder neural networks, to observe the power consumption of an electrical appliance over long periods, with an incremental clustering space that can hotswap the autoencoders’ learned parameters whilst correctly identifying and locating any anomalies. The objective is to anticipate any potential failure of the appliance and thus allow the operators to take remedial action. Our data originate from a retrofitted power outlet that records the amperage, wattage, and voltage of a laundry machine when active. Our working dataset is formed by discrete readings in 60-s samples from several machines, automatically separated into cycles (i.e., periods of continuous power activity).

This paper first presents a novel adaptation of partitioning around medoids (PAM) clustering. It operates on an evolving dataset, unlike traditional clustering algorithms that rely on full access to the data and/or predefined cluster counts. Once a cycle is clustered and matched to a learned parameter set, it is forwarded to an ensemble of autoencoders for reconstruction. The ensemble swaps in the learned parameters of the cluster matched to the cycle. The key attraction of this approach is its ability to account for operation cycles that differ in nature. This helps prevent the system from falsely classifying the normal behaviour of one cycle as the anomalous behaviour of another. Broken into stages, our system's process for a wash cycle is as follows:

- A complete wash cycle is received with individual readings for the voltage, amperage, and wattage sampled every 60 s;

- The wash cycle input is reduced to a fixed-length vector, where it is accepted into a cluster space using an incremental adaptation of K-medoid clustering;

- The learned parameters of the clustered cycle are installed into the autoencoder ensemble. An unseen cycle (i.e., a new cluster) receives a new parameter set;

- The cluster associated with the wash cycle maintains its own set of learned parameters for an autoencoder ensemble, into which the original cycle is then input;

- The autoencoder ensemble reconstructs the input to the best of its ability, with each parameter set assumed to be trained on samples with no anomalous behaviour;

- Deviation between the original cycle and the reconstruction is measured, where large reconstruction errors indicate abnormal behaviour, which are returned as points in time.

This paper is organised as follows: Section 2 presents the necessary theory and literature upon which this system is built. Section 3 then reviews some existing work attempting to solve problems of a similar nature to ours. However, none can fully address our unique challenges listed above. The architecture and rationale behind the system proposed in this paper are then detailed in Section 4, covering data preprocessing, a novel clustering mechanism, the reconstruction stage, and the anomaly-detection criteria. The performance and results of the system are given in Section 5, with the final conclusions given in Section 6.

2. Background

Machine learning strives to enable machines to approximate patterns and correlations between data points and their labels. It uses predictions on past data points as experience to improve accuracy on the next data point, with minimal human intervention in between. In the case of unlabelled data, the objective is to derive and utilise the underlying structure of the data, a task known as unsupervised learning. One of the most common uses of unsupervised learning is clustering, which organises a dataset into clusters of similar, coherent examples [8].

For completeness, we first briefly introduce artificial neural networks, in order to understand the mechanisms behind autoencoders in reconstruction-based approaches towards anomaly detection relative to our online learning requirements.

2.1. Artificial Neural Network

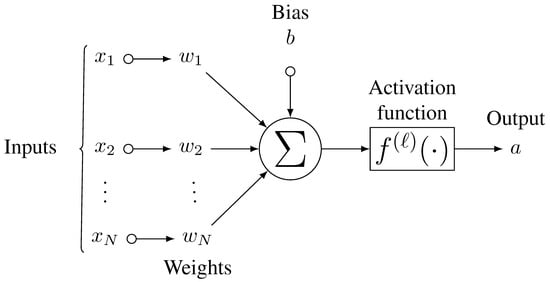

Neural networks, loosely inspired by the neural architecture of the human brain, are implemented as a network of interconnected functional elements, each with multiple inputs mapping to one output. Figure 1 illustrates a neuron in its most basic form: a series of inputs $x_1, \dots, x_n$, each with a corresponding weight $w_i$. This neuron is a computational unit that combines its weighted inputs with a separately trainable bias term $b$ [9]. It passes the result through an activation function $\varphi$, producing the non-linear output $a = \varphi\left(\sum_{i=1}^{n} w_i x_i + b\right)$.

Figure 1.

A classic neuron isolated from part of a larger network. Inputs can be given as either from the input data at layer 0, or activations of the previous layer for all subsequent layers.

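Consider layer ℓ of an L-layered neural network with neurons $u_1^{(\ell)}, u_2^{(\ell)}, \dots, u_{N_\ell}^{(\ell)}$, each with some differentiable activation function $\varphi$. The inputs to layer ℓ are the activations $a^{(\ell-1)}$ of the preceding layer ℓ − 1. Each neuron is weighted by a weight matrix $W^{(\ell)}$, whose elements are $w_{ij}^{(\ell)}$ for $i = 1, \dots, N_\ell$ and $j = 1, \dots, N_{\ell-1}$. The net input to $u_i^{(\ell)}$ is given by

$$z_i^{(\ell)} = \sum_{j=1}^{N_{\ell-1}} w_{ij}^{(\ell)} a_j^{(\ell-1)} + b_i^{(\ell)}. \tag{1}$$

Where ℓ = 1, $a_j^{(0)}$ is simply the j-th element of the input data itself. Where ℓ = L, the net activation $a^{(L)}$ is the final output y for the network. The activation of neuron $u_i^{(\ell)}$ is given by

$$a_i^{(\ell)} = \varphi\left(z_i^{(\ell)}\right). \tag{2}$$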

The result is received as an input by one or more neurons of the next layer. A neural network is formed by dense connections between many neurons such as the one in Figure 1, where the output of one layer serves as input to another. Layer 0 is considered the input layer and is sized according to the shape of the input data, which it takes as its initial activations at each execution. Likewise, layer L is considered the output layer, and its final activations are used as the network's prediction and in its evaluation. Any layers in between are referred to as hidden layers, where the learning takes place.
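The following NumPy sketch propagates one input vector through a small dense network, making the net-input and activation equations above concrete. The sigmoid activation, the layer widths, and the function names are illustrative choices, not details of our implementation.

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    """Logistic activation: one common choice of differentiable phi."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x: np.ndarray, weights: list, biases: list) -> list:
    """Propagate input x through a dense network.

    weights[l] has shape (N_l, N_{l-1}) and biases[l] has shape (N_l,), so each
    layer computes z = W @ a_prev + b (Eq. 1) followed by a = phi(z) (Eq. 2).
    Returns the activations of every layer, with the input itself as layer 0.
    """
    activations = [x]                      # a^(0) is the raw input
    for W, b in zip(weights, biases):
        z = W @ activations[-1] + b        # net input, Eq. (1)
        activations.append(sigmoid(z))     # activation, Eq. (2)
    return activations

rng = np.random.default_rng(0)
sizes = [3, 4, 2]                          # input, hidden, and output widths
weights = [rng.normal(size=(sizes[l + 1], sizes[l])) for l in range(len(sizes) - 1)]
biases = [np.zeros(sizes[l + 1]) for l in range(len(sizes) - 1)]
acts = forward(np.array([0.5, -1.0, 2.0]), weights, biases)
print(acts[-1].shape)  # (2,)
```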

Training and label vectors $x_t$ and $y_t$ are presented individually for processing through the network. With t as the time step of the training process, input vector $x_t$ propagates through the network according to the previous equations, eventually outputting $\hat{y}_t$. In our application, we derive our label vectors from the next reading that the model is tasked with predicting.

Following a complete forward propagation through the network, a loss function measures the deviation between the model's prediction $\hat{y}_t$ and the ground truth $y_t$, i.e., the target. As with activation functions, several loss functions have been developed, and each produces a different measure of loss. One example is the root-mean-square error (RMSE):

$$\mathrm{RMSE}(y_t, \hat{y}_t) = \sqrt{\frac{1}{N_L}\sum_{i=1}^{N_L}\left(y_{t,i} - \hat{y}_{t,i}\right)^2}. \tag{3}$$

Using an optimisation algorithm, such as gradient descent, the weights and biases of each layer ℓ are individually updated according to their degree of responsibility for the overall loss. This responsibility is the gradient of the loss with respect to each parameter, calculated using the backpropagation algorithm. Readers interested in backpropagation and the training process of artificial neural networks are directed to [10,11] and the references therein. The gradient is multiplied by a learning rate for the network: a shared hyperparameter that governs the step size taken in converging towards, ideally, the global minimum. The weight and bias updates can be driven by independent learning rates, though a single rate is shared in this implementation.
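The loss and update rule can be sketched for the simplest case of a single linear layer, $\hat{y} = Wx + b$, where backpropagation reduces to one application of the chain rule. This is a hypothetical minimal example with one shared learning rate. The function names and values are illustrative.

```python
import numpy as np

def rmse(y: np.ndarray, y_hat: np.ndarray) -> float:
    """Root-mean-square error between target y and prediction y_hat, Eq. (3)."""
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))

def gradient_step(W, b, x, y, lr=0.1):
    """One gradient-descent update of a linear layer y_hat = W @ x + b on the RMSE.

    dRMSE/dy_hat = (y_hat - y) / (N * RMSE); the chain rule then gives
    dRMSE/dW = outer(g, x) and dRMSE/db = g. One learning rate scales both.
    Returns the updated (W, b) and the loss measured before the update.
    """
    y_hat = W @ x + b
    loss = rmse(y, y_hat)
    if loss == 0.0:                        # perfect fit: nothing to update
        return W, b, loss
    g = (y_hat - y) / (y.size * loss)      # gradient of the loss w.r.t. y_hat
    return W - lr * np.outer(g, x), b - lr * g, loss

# Fit a single (x, y) pair and record the loss at each step.
x, y = np.array([1.0, 2.0]), np.array([3.0, -1.0])
W, b = np.zeros((2, 2)), np.zeros(2)
losses = []
for _ in range(20):
    W, b, loss = gradient_step(W, b, x, y)
    losses.append(loss)
```

The RMSE gradient with respect to $\hat{y}$ has a fixed magnitude. As a result, in this example the loss falls by a constant amount per step and then oscillates near zero rather than settling exactly.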

2.2. Autoencoder Neural Networks

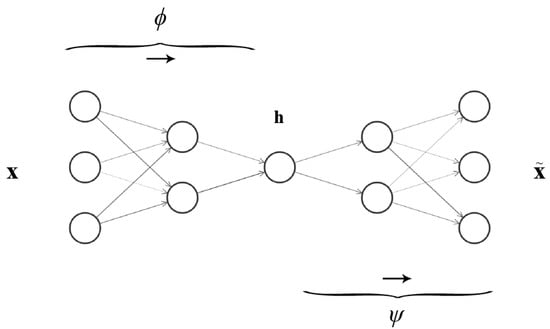

As with other machine learning models, autoencoders are a form of neural network that strive to minimise the discrepancy between input and expected output. Their defining characteristic is that their outputs are trained to approximate their inputs as closely as possible. This is achieved by encoding the input across narrowing layers down to a latent space, often lower in dimension, and then decoding the original input from this space at the output layer. An autoencoder can be thought of as two back-to-back neural networks. The first compresses the input as representatively as possible, and the second receives this compressed representation and tries to reconstruct the original input. Figure 2 illustrates this architecture, effectively showing two neural networks conjoined by the latent space.

Figure 2.

Example of an autoencoder neural network, illustrating the gradual compression from the encoder $f_\theta$, to latent space $z$, to the decoder $g_{\theta'}$.

Training an autoencoder considers the entire architecture as one network and forces this network design to derive a compressed underlying identity function from the latent space—making this an unsupervised model as the data are unlabelled. The size of the latent space, i.e., how much the input should be compressed without losing its representation, is configurable, and we express it in a range of 0–100% of the original input. Optionally, the latent space can be extracted from the network before continuing to the decoding stage, where the original input is reconstructed, making it ideal for discriminative tasks such as anomaly detection.

This architecture comprises two trainable functions, each with its own training parameters: the encoder $f_\theta$ and the decoder $g_{\theta'}$. Here θ encapsulates the encoder's weights and biases, and θ′ (differentiated for clarity) encapsulates those of the decoder. Latent space $z = f_\theta(x)$ is the compressed representation of the input, from which the decoder tries to reconstruct the input as $\hat{x} = g_{\theta'}(z)$. The encoder and decoder operate as two networks in sequence, each using the same standard forward propagation as the deep neural networks detailed previously. The decoding stage accepts the latent space z as input, which then undergoes a similar feedforward propagation with its own separately trainable set of weights and biases.

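As described in Section 2.1, outputs are passed through activation functions. The respective parameters θ and θ′ of both components of the network are trained through the shared optimisation problem

$$\theta^{*}, {\theta'}^{*} = \arg\min_{\theta,\,\theta'} \sum_{t} \mathcal{L}\left(x_t, \left(g_{\theta'} \circ f_{\theta}\right)(x_t)\right), \tag{4}$$

where $\mathcal{L}$ is the loss function (RMSE (3) in our implementation) and ∘ denotes the result of the encoder stage piped directly as an argument to the decoder stage.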
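Equation (4) can be sketched in NumPy for a single-hidden-layer autoencoder with a sigmoid encoder $f_\theta$ and a linear decoder $g_{\theta'}$, trained one sample at a time. The class name and the activation choices are assumptions made for illustration. The sketch also trains on the squared error, whose per-sample minimiser coincides with that of the RMSE but whose gradient is simpler.

```python
import numpy as np

class TinyAutoencoder:
    """x -> z = f_theta(x) (sigmoid) -> x_hat = g_theta'(z) (linear); illustrative only."""

    def __init__(self, n_in: int, latent_ratio: float = 0.8, lr: float = 0.05, seed: int = 0):
        rng = np.random.default_rng(seed)
        n_latent = max(1, int(round(n_in * latent_ratio)))      # latent size as a % of input
        self.W_enc = rng.normal(scale=0.5, size=(n_latent, n_in))  # theta
        self.b_enc = np.zeros(n_latent)
        self.W_dec = rng.normal(scale=0.5, size=(n_in, n_latent))  # theta'
        self.b_dec = np.zeros(n_in)
        self.lr = lr

    def encode(self, x):
        return 1.0 / (1.0 + np.exp(-(self.W_enc @ x + self.b_enc)))  # f_theta

    def decode(self, z):
        return self.W_dec @ z + self.b_dec                            # g_theta'

    def reconstruct(self, x):
        return self.decode(self.encode(x))                            # (g o f)(x)

    def train_step(self, x) -> float:
        """One gradient step on 0.5 * ||x_hat - x||^2; returns the RMSE before the step."""
        z = self.encode(x)
        err = self.decode(z) - x
        # Backpropagate through the linear decoder, then the sigmoid encoder.
        delta = (self.W_dec.T @ err) * z * (1.0 - z)   # sigmoid'(u) = z * (1 - z)
        self.W_dec -= self.lr * np.outer(err, z)
        self.b_dec -= self.lr * err
        self.W_enc -= self.lr * np.outer(delta, x)
        self.b_enc -= self.lr * delta
        return float(np.sqrt(np.mean(err ** 2)))

# Train on samples drawn from a simple one-dimensional pattern.
data = np.array([[s, 2 * s, -s, 0.5 * s] for s in np.linspace(-1.0, 1.0, 20)])
ae = TinyAutoencoder(n_in=4)
epoch_losses = [np.mean([ae.train_step(x) for x in data]) for _ in range(200)]
```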

Autoencoders are popularly incorporated as part of a wider machine learning system. They are commonly used as a dimensionality-reduction technique when working with a complex dataset, or in a generative application to enrich small or sparse datasets based on an underlying trend (achieved with another variant, known as a variational autoencoder). We favour the autoencoder architecture for its reconstruction characteristic: autoencoders that generalise well on normal data have been shown to struggle to reproduce data unseen during training [12]. By carrying the reconstruction error forward, we can determine whether, where, and by what margin the autoencoder struggled to reconstruct its input, and thus flag anomalies.

3. Related Work

Consumer-focused fault detection is largely unexplored, particularly in the context of a monitoring device fitted retrospectively. Considered at a higher level, however, the problem draws on three fields that each offer a greater wealth of research: time-series clustering, time-series forecasting, and anomaly detection within highly constrained environments.

Forecasting is one of the largest applications of neural networks to sequential/time-series data. A network's ability to generalise even the most complex relationships and patterns in data makes it an attractive alternative to traditional, statistical univariate approaches (e.g., ETS, and ARIMA with its variants SARIMA, ARIMAX, etc.). However, vanilla neural networks often struggle beyond single-step forecasting, as investigated by [13]. They found the architecture of the network, the data preprocessing stages, and the abundance of samples required for training all to be considerable barriers to model performance. Some work attempts to increase model performance through intelligent feature engineering. For example, [14] leverage sparse filtering to learn features from bearing data to identify faults, though our dataset does not comprise mechanical features.

The work of [15] adopts a similar approach to this stage of the project, using the minimum message length clustering algorithm by [16] to first cluster a time-series dataset before training each cluster on a counterpart long short-term memory (LSTM) recurrent neural network: a powerful deep learning model architecture for temporal/sequential data. Although the results of this system were competent, the forecasting stage can only be undertaken when the entire dataset is well-clustered. LSTMs have proven immensely successful in time-series forecasting, given their ability to factor past readings and experience when making future predictions. An example of this is the fully connected, convolutional LSTM by [17], which extends the traditional operations inside the LSTM cell into the convolutional processes. Ensemble architectures for time-series forecasting are another choice of model design, acting on the combined results of several forecasting models as opposed to a single forecasting system. In [18], they implement this concept between multiple LSTM networks, using a weighted system at the output.

However, complex networks require vast amounts of data in specific formats and are significantly more expensive to (re)train computationally, because this requires unrolling the entire LSTM network. This is not ideal given that we see and train on inputs incrementally. Ref. [19] likewise adopts an ensemble approach, this time in a network intrusion detection system powered by an ensemble of autoencoders, a mature theory in anomaly detection. Unlike others, this system is designed to work online under similar hardware constraints. However, it requires additional training to derive a feature mapping from one continuous data stream. Further, the autoencoders are not retrained once executed. Despite the model training online, this still leaves them susceptible to model decay, though mechanisms to combat this are explored.

4. Proposed System

This section presents the stages of our proposed system. The process begins with a complete cycle, submitted as voltage, wattage, and current readings at 60-s intervals. We do not consider or derive any features from timestamps or from idle periods between cycles. We prototype the system in Python 3.*, with support from standard scientific computing libraries (NumPy, Pandas).

The readings of a cycle are first detoured through a clustering process, which identifies the cycle and installs a matching learned parameter set into the autoencoders for reconstruction. This stage first builds a representation of the cycle, the process for which is elaborated in Section 4.1. If a matching cluster exists, the cycle will be evaluated against, or contribute to, the learned behaviour of that cluster from an autoencoder ensemble; if not, a new cluster will be created along with a counterpart parameter set, consisting of the following:

- Weights, initialised randomly when first instantiated;

- Biases, initialised as zeroes;

- Variables recording the minimum and maximum of the data observed during the parameter set's training phases, used for normalisation.

The learned parameters of the autoencoder ensemble, described in Section 2.2, are hot-swapped with those of the cluster matching the wash cycle. The cycle's readings at each sample of the power profile are then fed individually to the ensemble, which outputs an approximate reconstruction of the signal. Following a complete forward pass, the reconstruction is compared against the original. The premise is that anomalous cycles will thwart the autoencoders' ability to accurately reconstruct the input.

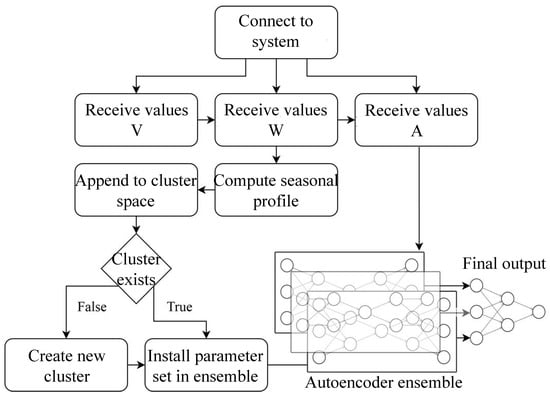

Figure 3 illustrates the process of data ingestion through to model execution. Readings of the voltage, wattage, and amperage from the cycle are received. The wattage is used to first compute a fixed-length representation of the variable-length wash cycle for clustering, which in turn provides a corresponding parameter set for the autoencoders trained on previous matching cycles.

Figure 3.

Flowchart of the proposed system. We detour the wattage readings through the clustering process to install the matching parameter set in the autoencoders. All values are eventually submitted raw to the autoencoder ensemble.

We measure the reconstruction against a set of user-defined severities, given as degrees of deviation from a threshold calculated using light statistical techniques on the errors made by the autoencoder’s reconstruction, described in Section 4.4.

4.1. Incremental Clustering

The first stage when given a wash cycle is to assign it a cluster of similar cycles—or form a new cluster if the cycle is sufficiently distinct. Different wash cycles will have different power-consumption patterns, and different washing machines will have different power-consumption patterns on the same wash cycle. One key challenge in our objective is to account for this in an unsupervised fashion. Our solution is to combine similar wash cycles into their own evolving parameter sets, swappable in and out of the autoencoder ensemble for more accurate learning.

How similarity is measured and defined is an especially important decision to make for this system. The algorithm must be sufficiently robust to account for sometimes serious anomalies in a signature that would have a place in an existing cluster if normal. By acting too inflexibly on an anomalous wash cycle, it may be cast into its own cluster; likewise, being too flexible may miscategorise an anomalous cycle as a regular cycle of a completely different cluster.

Associated with each cluster is a parameter bank (weights, biases, training records, etc.) on which the autoencoder trains and executes.

4.1.1. Preprocessing

Although later stages of the system are capable of processing in real time, we first require a matching cluster of similar cycles against which to evaluate. Each cluster maintains its own learned parameter set for use in the autoencoder ensemble. We receive our data for this experiment as an array of distinct wash cycles, containing the timestamp, wattage, amperage, and voltage for each minute the cycle is in progress. Cycles in this dataset range from 70 to 240+ min in length, and their readings are indexed from 0 relative to the start of each cycle. Each observation is first scaled to a common metric using zero-mean normalisation (z-score). This helps prevent a feature whose range differs from another's from becoming dominantly influential during training. More importantly, it limits the impact of noise and accelerates convergence during training.

A time-series representation is computed next and given as input to the clustering stage. Opting for a representation is favourable because it can account for disturbance in the series while still drawing out the essential characteristics of the data [20]. Our system uses the mean seasonal profile algorithm, adapted from [21]. It converts a time series of arbitrary length into a vector whose length equals a set seasonality.

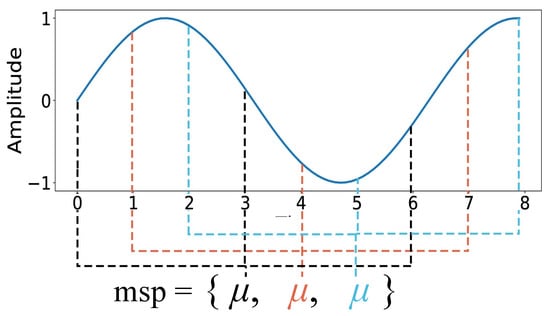

The process compresses the z-scored vector to a fixed size using an aggregate function (in this case, the mean). Figure 4 shows the algorithm applied to an arbitrary series of variable length. With seasonality n, the element at position i of the output (for i = 0, …, n − 1) is the mean of the input elements at positions i, i + n, i + 2n, and so on to the end of the series. The representation doubles as a form of dimensionality reduction, which benefits memory requirements and computational complexity.
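This preprocessing can be sketched as follows, assuming a univariate wattage series per cycle. The cycle is z-scored and then reduced to its mean seasonal profile. The function names are hypothetical. The handling of constant series and of cycles shorter than the seasonality reflects our own choices for the sketch.

```python
import numpy as np

def zscore(series: np.ndarray) -> np.ndarray:
    """Zero-mean, unit-variance scaling; a constant series maps to all zeros."""
    series = np.asarray(series, dtype=float)
    std = series.std()
    if std == 0:
        return np.zeros_like(series)
    return (series - series.mean()) / std

def mean_seasonal_profile(series: np.ndarray, n: int) -> np.ndarray:
    """Fixed-length representation: output[i] = mean(series[i], series[i + n], ...)."""
    series = np.asarray(series, dtype=float)
    if len(series) < n:
        raise ValueError("series must contain at least n readings")
    return np.array([series[i::n].mean() for i in range(n)])

# Seasonality 3 on a length-7 series: means of [0, 3, 6], [1, 4], [2, 5] -> 3.0, 2.5, 3.5
profile = mean_seasonal_profile(np.arange(7), 3)

# A cycle of any length maps to a vector of length 20 (the seasonality used in Section 5).
cycle_watts = np.random.default_rng(0).normal(loc=500.0, scale=50.0, size=150)
representation = mean_seasonal_profile(zscore(cycle_watts), 20)
```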

Figure 4.

The mean seasonal profile of a time series. Each of the three colours marks the points that contribute to the mean value of a given index. Using n = 3, the mean of the points of each colour, stepping from 0 through n until the end of the series, is calculated into an n-length vector.

The mean seasonal profile approach is a model-based algorithm that assumes the processed data originate from the same basic source [21]. We can safely make this assumption given the nature and origin of our data.

4.1.2. Existing Techniques

Many existing techniques assume a complete dataset or a predefined cluster count before clustering. Common offline clustering techniques based on a fixed K cannot guarantee reproducible results when repeated, which disrupts the later stages of this system: with these techniques, clusters may be lost or rearranged in the future. One offline technique is partitioning around medoids (PAM), an adaptation of K-means clustering developed by [22]. PAM selects points from the data as medoids, whereas K-means clustering generates artificial points in the cluster space as centroids [23]. PAM suits sequential data because of its flexibility in how closeness between objects is defined, i.e., the choice of distance measure [24].

4.1.3. Process

We modify the process of PAM, which typically begins by building a set S of k objects as the initial medoids in the cluster space. The medoids can be selected randomly or conditionally. In parallel, another object D records and tracks the distances between the medoids in S and the members of their clusters. Each addition to the cluster space requires that D be recalculated to include the new distances after the data are assigned to the nearest cluster. The next phase clones the cluster space, selects an alternative set S of k candidate medoids, and computes their distances D. If this version offers a sufficient gain in affinity over the existing cluster space, it replaces the existing one. This process can be repeated any number of times with each addition.

The system described in this section implements an adaptation of PAM in which the predefined K is replaced with a tolerance parameter to selectively partition around medoids (S-PAM). New data are instead submitted incrementally to the cluster space, and the distance of each new point to its closest medoid is evaluated against the tolerance. As with the original PAM clustering, distance is flexible in how it is measured, and the tolerance can be matched to the sensitivity of the expected data.

We apply the as our measure of difference between two points, given as

where and are two data points that are compared. Both are z-scored outputs of mean-seasonal profile vectors, previously described in Section 4.1.1. When a wash cycle is appended to the cluster space, its distance to the nearest medoid is evaluated against the predefined tolerance parameter. If the wash cycle is within the tolerance, it is appended to that medoid's cluster. If it exceeds the tolerance, or if the cluster space is empty, the data point is designated as a new medoid. D and S are then both recomputed, as in traditional PAM implementations, to include the new data.
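The tolerance-based assignment rule can be sketched as follows. This is a minimal sketch under assumptions of our own. Euclidean distance stands in for the distance measure defined above. The recomputation of S is limited to a PAM-style swap inside the receiving cluster, keeping the member with the smallest total distance to the other members. The class and method names are hypothetical.

```python
from typing import List
import numpy as np

class SPAM:
    """Incremental, tolerance-based partitioning around medoids (sketch)."""

    def __init__(self, tolerance: float):
        self.tolerance = tolerance
        self.medoids: List[np.ndarray] = []        # S: one medoid per cluster
        self.members: List[List[np.ndarray]] = []  # points assigned to each cluster

    @staticmethod
    def distance(p: np.ndarray, q: np.ndarray) -> float:
        # Assumption: Euclidean distance stands in for the measure defined in the text.
        return float(np.linalg.norm(p - q))

    def add(self, point) -> int:
        """Assign a profile vector to a cluster and return its (stable) cluster ID."""
        point = np.asarray(point, dtype=float)
        if self.medoids:
            dists = [self.distance(point, m) for m in self.medoids]
            cid = int(np.argmin(dists))
            if dists[cid] <= self.tolerance:
                self.members[cid].append(point)
                self._reselect_medoid(cid)
                return cid
        # Empty cluster space, or too far from every medoid: the point is a new medoid.
        self.medoids.append(point)
        self.members.append([point])
        return len(self.medoids) - 1

    def _reselect_medoid(self, cid: int) -> None:
        """PAM-style swap within one cluster: keep the member with least total distance."""
        pts = self.members[cid]
        costs = [sum(self.distance(p, q) for q in pts) for p in pts]
        self.medoids[cid] = pts[int(np.argmin(costs))]

spam = SPAM(tolerance=1.0)
ids = [spam.add(p) for p in ([0, 0], [0.5, 0], [5, 5], [5.2, 5.1], [0.1, 0.2])]
```

Cluster IDs are only ever appended, never renumbered. Each ID can therefore safely key a parameter set, which avoids the reproducibility problem of re-running an offline K-based method.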

4.1.4. Operational Complexity

PAM has a runtime complexity of , where n represents non-medoid data points and k the count of medoids in the cluster space, as data points must be distanced k times to compute the lowest change in cost. With our online adaptation, the runtime complexity becomes per iteration, where k medoids are first traversed to find the nearest medoid, then each data point is iterated to again find its nearest medoid before evaluating the gain in fit were it placed in each candidate medoid.

4.2. Parameter Hotswapping

The system maintains a set of learned parameters for each cluster, which are installed for a given wash cycle. The clustering process described above in Section 4.1 outputs an identifier (ID) for a corresponding cluster. Control then flows to a manager to fetch the parameters and data stream counterparts for the given cluster. In a newly formed cluster, these will be fresh, randomly instantiated learned parameters.

Each cluster begins its corresponding parameter set under a fixed grace period, during which all data submitted are reserved as training data for the autoencoders. A parameter set still in its training phase, i.e., within the grace period, will not make any prediction.
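The manager can be sketched as a mapping from cluster IDs to parameter banks. It creates fresh parameters (random weights, zero biases) for an unseen cluster and reports whether a bank is still within its grace period. The class and method names are hypothetical. The default grace period of 320 observations mirrors the value used in Section 5.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional
import numpy as np

@dataclass
class ParameterBank:
    """Learned parameters and training record for one cluster."""
    weights: List[np.ndarray]
    biases: List[np.ndarray]
    observations_seen: int = 0

class ParameterManager:
    def __init__(self, layer_sizes: List[int], grace_period: int = 320, seed: int = 0):
        self.layer_sizes = layer_sizes
        self.grace_period = grace_period
        self.rng = np.random.default_rng(seed)
        self.banks: Dict[int, ParameterBank] = {}
        self.active_id: Optional[int] = None

    def _fresh_bank(self) -> ParameterBank:
        """Random weights and zero biases for a newly formed cluster."""
        pairs = list(zip(self.layer_sizes[:-1], self.layer_sizes[1:]))
        return ParameterBank(
            weights=[self.rng.normal(scale=0.1, size=(n_out, n_in)) for n_in, n_out in pairs],
            biases=[np.zeros(n_out) for _, n_out in pairs],
        )

    def swap_in(self, cluster_id: int) -> ParameterBank:
        """Make cluster_id active, creating a bank if the cluster is unseen."""
        if cluster_id not in self.banks:
            self.banks[cluster_id] = self._fresh_bank()
        self.active_id = cluster_id
        return self.banks[cluster_id]

    def in_grace_period(self, cluster_id: int) -> bool:
        """True while the bank is still collecting training data (no predictions)."""
        return self.banks[cluster_id].observations_seen < self.grace_period

    def record_training(self, cluster_id: int, n_observations: int) -> None:
        self.banks[cluster_id].observations_seen += n_observations
```

If the autoencoders update the bank's arrays in place, swapping clusters amounts to changing which bank is active, and no parameters need to be copied.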

4.3. Autoencoder-Enabled Reconstruction

With the parameter set of the cluster to which the cycle belongs installed, each multivariate reading is submitted to the autoencoder ensemble incrementally. If the parameter set has completed its grace period and can therefore execute the autoencoder, the output is recorded in a vector.

Autoencoders trained well on non-anomalous data will approximate the target with relatively low error but struggle to reconstruct an anomalous signal to the same accuracy [25]. This is the underlying theory of this stage of the detection system. Each autoencoder is trained in silo on at least one feature of the stream, which is compressed down to a latent representation in an intermediate layer, the ratio of which is supplied by the user in a configuration. Features are repeatable across multiple autoencoders, i.e., the i-th feature of a multivariate series observation may input to ≥1 autoencoders.

Each observation is passed raw into the system one at a time. It is normalised using min-max scaling against a record of the smallest and largest values of each feature observed during the training stages to date.
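This per-feature record can be sketched as follows, assuming a target range of [0, 1] and that the record is updated only while a parameter set is training. The class name is hypothetical. Values outside the recorded range map outside [0, 1]; this sketch leaves them unclipped.

```python
import numpy as np

class MinMaxRecord:
    """Running per-feature minimum/maximum used for min-max scaling (sketch)."""

    def __init__(self, n_features: int):
        self.min = np.full(n_features, np.inf)
        self.max = np.full(n_features, -np.inf)

    def update(self, x) -> None:
        """Widen the recorded range; intended to be called during training phases only."""
        x = np.asarray(x, dtype=float)
        self.min = np.minimum(self.min, x)
        self.max = np.maximum(self.max, x)

    def scale(self, x) -> np.ndarray:
        """Map x to [0, 1] relative to the recorded range (assumed target range)."""
        x = np.asarray(x, dtype=float)
        span = self.max - self.min
        safe_span = np.where(span > 0, span, 1.0)  # constant features: avoid dividing by 0
        return (x - self.min) / safe_span

record = MinMaxRecord(n_features=2)
record.update([0.0, 10.0])
record.update([4.0, 20.0])
scaled = record.scale([2.0, 15.0])
```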

The output stage of this design is another autoencoder, trained on the collective outputs of all preceding autoencoders within the ensemble. Our system assumes that a wash cycle submitted for training on a new or developing cluster is not anomalous. Following the grace period, the system executes the autoencoders on all future data received. It returns the reconstructed value and the loss measured by the root-mean-square error (RMSE) (3). In training, the RMSE drives the classical backpropagation supervised training technique.
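The ensemble structure can be sketched under several assumptions of our own. Each member autoencoder receives a (possibly overlapping) subset of features. The output autoencoder reconstructs the concatenated member outputs. The returned RMSE compares that final reconstruction against the corresponding input features. The classes, the sigmoid-encoder/linear-decoder layers, and the squared-error gradient are illustrative, not a description of the exact implementation.

```python
import numpy as np

class AE:
    """Minimal sigmoid-encoder / linear-decoder autoencoder (illustrative)."""

    def __init__(self, n_in, ratio=0.8, lr=0.05, rng=None):
        rng = rng if rng is not None else np.random.default_rng(0)
        k = max(1, round(n_in * ratio))               # latent size as a ratio of input
        self.We, self.be = rng.normal(scale=0.5, size=(k, n_in)), np.zeros(k)
        self.Wd, self.bd = rng.normal(scale=0.5, size=(n_in, k)), np.zeros(n_in)
        self.lr = lr

    def forward(self, x):
        z = 1.0 / (1.0 + np.exp(-(self.We @ x + self.be)))
        return z, self.Wd @ z + self.bd

    def step(self, x):
        """Train on x (target = input); returns the reconstruction before the update."""
        z, x_hat = self.forward(x)
        err = x_hat - x
        delta = (self.Wd.T @ err) * z * (1.0 - z)
        self.Wd -= self.lr * np.outer(err, z)
        self.bd -= self.lr * err
        self.We -= self.lr * np.outer(delta, x)
        self.be -= self.lr * delta
        return x_hat

class Ensemble:
    """Member autoencoders on feature subsets, plus an output autoencoder."""

    def __init__(self, feature_groups, ratio=0.8, lr=0.05, seed=0):
        rng = np.random.default_rng(seed)
        self.groups = [list(g) for g in feature_groups]
        self.members = [AE(len(g), ratio, lr, rng) for g in self.groups]
        self.output = AE(sum(len(g) for g in self.groups), ratio, lr, rng)

    def process(self, x, train):
        """Reconstruct one observation; returns (reconstruction, RMSE)."""
        x = np.asarray(x, dtype=float)
        hidden = np.concatenate([
            m.step(x[g]) if train else m.forward(x[g])[1]
            for m, g in zip(self.members, self.groups)
        ])
        recon = self.output.step(hidden) if train else self.output.forward(hidden)[1]
        target = np.concatenate([x[g] for g in self.groups])
        return recon, float(np.sqrt(np.mean((recon - target) ** 2)))

# Feature 1 is shared by both members; train on one normal observation.
ens = Ensemble([[0, 1], [1, 2]])
x0 = np.array([0.2, 0.5, 0.8])
errs = [ens.process(x0, train=True)[1] for _ in range(300)]
```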

Because clusters form at different rates, each cluster transitions into execution once it has received sufficient training. Swapping to a cluster still in training automatically restores the autoencoders to training mode.

4.4. Anomaly Grading

The final stage of the system is where anomalies are identified and flagged. Here, we receive the output of the autoencoder ensemble for each observation in the wash cycle and compare it to the original. Treating the raw scores at face value as indications of anomalies produces excessive false positives, which diminishes the reliability of the system. This stage therefore prunes and grades the scores into definitively ranked anomalies.

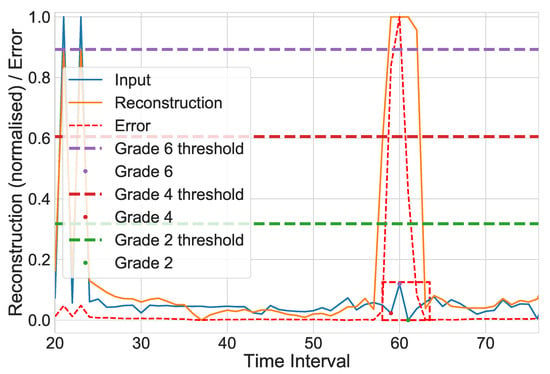

We begin by calculating the absolute error between the input and the reconstructed output at each time step into a separate vector, e. Then, iterating through a set of user-defined severity grades (e.g., ), we calculate a threshold for each grade g, given by . Any absolute error score in e that exceeds the threshold for the current grade is recorded in a dictionary. Its keys are the grades, if any, and its values are the sets of intervals considered anomalous at each grade. Figure 5 illustrates this process, showing the relative thresholds for each severity grade. A cycle with no identified anomalies can be resubmitted to train the cluster's parameter set at the user's discretion.
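The grading step can be sketched as follows. The threshold formula used here, mean(e) + g·std(e), is an assumption made for this illustration as one example of a light statistical technique. It may differ from the threshold actually used. The grade values and the function name are also illustrative. For a multivariate cycle, e could first be reduced per time step, for example by averaging across features.

```python
import numpy as np

def grade_anomalies(x, x_hat, grades=(1.0, 2.0, 3.0)):
    """Map each severity grade to the intervals whose absolute error exceeds it.

    Assumed threshold: mean(e) + g * std(e). Returns {grade: [(start, end), ...]}
    with inclusive time-step indices; grades without any exceedance are omitted.
    """
    e = np.abs(np.asarray(x, dtype=float) - np.asarray(x_hat, dtype=float))
    mu, sigma = e.mean(), e.std()
    result = {}
    for g in grades:
        flagged = np.flatnonzero(e > mu + g * sigma)
        if flagged.size == 0:
            continue
        # Split flagged indices wherever consecutive time steps are not adjacent.
        runs = np.split(flagged, np.flatnonzero(np.diff(flagged) > 1) + 1)
        result[g] = [(int(r[0]), int(r[-1])) for r in runs]
    return result

# A 20-step cycle whose reconstruction deviates strongly at steps 10-12.
x = np.zeros(20)
x_hat = np.zeros(20)
x_hat[10:13] = 5.0
report = grade_anomalies(x, x_hat)
```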

Figure 5.

Calculated thresholds for grades , dashed horizontally in their respective colours, for a wash cycle against an artificial anomaly, outlined in red.

This grading mechanism does not consider transposition in its decision, which allows for reconstruction to focus on the overall energy pattern as opposed to exact matches. We are particularly interested in noise and significant deviation from the ensemble’s reconstruction in our detection criteria.

5. Evaluation

Real-world power consumption data were used throughout the design, development, and evaluation of this system. Our evaluation dataset is a set of 62 individual wash cycles from one washing machine, collected organically over a 6-month period. Each 60-s reading of an in-progress cycle contains the timestamp, wattage, amperage, and voltage. The system receives each wash cycle incrementally, not as a batch. In total, we have 2324 readings, with each wash cycle running 41 min on average. Our final data population counts, shown in Table 1, include the injected anomalies.

Table 1.

Total number of readings in the dataset, which will be submitted incrementally to the system.

Each cycle is submitted raw and in full to the clustering stage. Once the autoencoders have been swapped to the parameters of the appropriate cluster, the cycle is reconstructed reading by reading. The results in this section are produced on a dishwasher power graph of 75 uniquely identified cycles across 314,464 one-minute timesteps. The majority of these timesteps are standby power; idle readings are not submitted to the system.

We set our tolerance for clustering wash cycles to and found a seasonality of size 20 to be effective in calculating a representative mean seasonal profile, which is the representation used in the cluster space. The autoencoders are assembled into a three-layer mapping, with a grace period of 320 observations for each cluster. This averages around two complete wash cycles per cluster before execution begins. The autoencoders are permanently configured to compress the input down to a latent representation of 80% and use a learning rate of .

5.1. Data Setup

Our dataset contains no clear labels of anomalous examples on which to evaluate. We therefore artificially inject anomalies at a rate proportional to the rest of the signal, with a configurable variable that adjusts their intensity to resemble the anticipated behaviour of their real-world domain. The system is trained to expect disturbances in power consumption characterised by prominent distortions. Our testing framework therefore includes the novel ability to inject stochastic noise into a segment of a wash cycle.

When a dataset is highly imbalanced and true anomalous examples are scarce, white noise injection is a familiar compensatory tactic in anomaly detection. For example, the work in [26] explores the condition monitoring of UAV motors by injecting white Gaussian noise into the dataset as a training target, at a configurable signal-to-noise (S/N) ratio. Noise in training is also used in [27], where the authors assess the robustness of deep learning models trained under synthetic noise in order to develop an unsupervised anomaly-detection system, again with a configurable S/N ratio, ranged proportion, and evaluation strategy. Most closely related, the work in [28] explores datasets of a similar nature, injecting local fluctuations as formulaic anomalous periods into multivariate time-series datasets. That work develops a new algorithm that extracts feature vectors for training existing machine-learning-enabled anomaly detection frameworks.

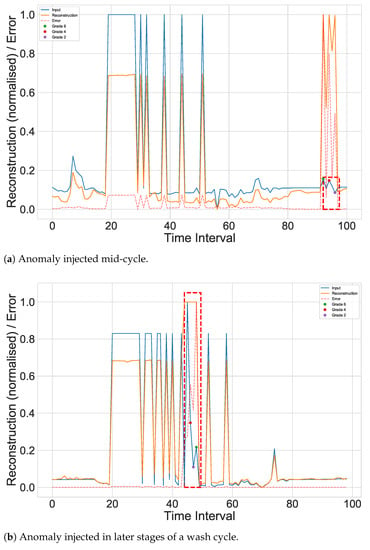

Our approach is similar to these works. We first define the probability that a wash cycle contains an anomaly, set at 40%. If a cycle is selected, noise is injected across a segment of its readings, and each axis of the multivariate observation is distorted proportionately. We use additive white Gaussian noise as our noise model and an S/N ratio of dB. The wash cycle is then modified in place, and the injection is recorded in an external data store inaccessible to the system, marking the timestep in the cluster and the break point of the anomaly; this record forms the cycle’s label in the evaluation process. The “input” line in the graphs of Figure 6 shows the original power-consumption pattern, with the anomalous region outlined in red. Two wash cycles with anomalies at different points are shown to demonstrate the nature of the disturbances the system is tasked with identifying.
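The injection procedure can be sketched as follows. This is a minimal illustration, assuming that the noise power for each axis is derived from that axis’s mean signal power over the affected segment, so that every axis is distorted at the same S/N ratio via $P_\text{noise} = P_\text{signal} / 10^{\text{SNR}_\text{dB}/10}$. The segment length and S/N value are required arguments because their values are not stated in this section. `inject_awgn` is a hypothetical name, and unlike the described framework it returns a modified copy rather than editing the cycle in place.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Reading = List[float]  # one multivariate observation (one value per axis)


def inject_awgn(
    cycle: Sequence[Sequence[float]],
    snr_db: float,
    segment_len: int,
    p_anomaly: float = 0.4,
    rng: Optional[random.Random] = None,
) -> Tuple[List[Reading], Optional[Tuple[int, int]]]:
    """With probability p_anomaly, add white Gaussian noise to one segment.

    Returns a modified copy of the cycle and the injected [start, end)
    range, or an unmodified copy and None if no anomaly was injected.
    """
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    rng = rng or random.Random()
    out = [list(reading) for reading in cycle]
    if rng.random() >= p_anomaly or len(out) < segment_len:
        return out, None
    start = rng.randrange(0, len(out) - segment_len + 1)
    end = start + segment_len
    for axis in range(len(out[0])):
        # Mean signal power of this axis over the segment.
        power = sum(out[t][axis] ** 2 for t in range(start, end)) / segment_len
        # SNR_dB = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10^(SNR_dB / 10)
        noise_std = math.sqrt(power / 10 ** (snr_db / 10))
        for t in range(start, end):
            out[t][axis] += rng.gauss(0.0, noise_std)
    return out, (start, end)
```

The returned `(start, end)` pair plays the role of the externally stored label; passing a seeded `random.Random` makes an injection run reproducible.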

Figure 6.

Rendered graphs of wash cycles from two separate machines, each injected with an anomaly at a different point, shown in watts. The autoencoder should struggle to reconstruct the anomalous region marked in red, which the grading mechanism should then recognise.

5.2. Evaluation Strategy

It should be noted that a 40% injection probability does not necessarily mean that 40% of our preprocessed data become anomalous, since only a segment of each selected cycle is distorted. With the injected anomalies serving as an ad hoc source of truth, measuring detection performance essentially reduces to binary classification. There are two evaluation strategies, depending on the context of the anomalies and how they are characterised. First, the presence of any single anomalous point may be sufficient. In this point-based approach, detection is considered a success if any one point within the injected range, from the injection point to the end of the anomaly, is flagged as anomalous.

Alternatively, a system may require a series of anomalous points to be identified together before any action is taken. This approach, referred to as collective anomaly detection, treats the anomalous range as a single anomaly: any point within the range that is not flagged counts as a false negative and lowers the detection score.

Using the wash cycles graphed in Figure 6 as an example, the strict (collective) evaluation approach requires every data point within the known anomalous region (marked in red) to be flagged, at any grade, for detection to be considered completely successful, whereas the point-based approach accepts any anomaly identified within the region as an overall success.
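The two strategies can be expressed as small scoring functions over a per-reading list of flags and the recorded range of the injected anomaly. The sketch below is illustrative: the function names are hypothetical, the range is assumed to be half-open `[start, end)`, and a flag raised at any grade is treated simply as `True`.

```python
from typing import Dict, Sequence, Tuple


def point_based_success(flags: Sequence[bool], anomaly: Tuple[int, int]) -> bool:
    """Point-based: success if any reading in the injected [start, end) range is flagged."""
    start, end = anomaly
    return any(flags[start:end])


def collective_counts(flags: Sequence[bool], anomaly: Tuple[int, int]) -> Dict[str, int]:
    """Per-reading confusion counts; unflagged readings inside the range are false negatives."""
    start, end = anomaly
    counts = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
    for t, flagged in enumerate(flags):
        if start <= t < end:
            counts["TP" if flagged else "FN"] += 1
        else:
            counts["FP" if flagged else "TN"] += 1
    return counts


def collective_success(flags: Sequence[bool], anomaly: Tuple[int, int]) -> bool:
    """Collective: completely successful only if every reading in the range is flagged."""
    return collective_counts(flags, anomaly)["FN"] == 0
```

For a cycle in which only one of three anomalous readings is flagged, the point-based check succeeds while the collective check records two false negatives and fails.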

This evaluation runs both strategies under two versions of the system: the original as proposed in this paper, and another that bypasses the clustering stage, i.e., one in which all of the wash cycles are fed to the same autoencoder parameter set. Each version is executed in isolation on the same dataset, meaning that it develops its parameters separately from the other version. Note that the clustered version reserves some of the data as training data for each identified cluster, whereas the unclustered version trains its single cluster only once.

5.3. Results

Figure 6 illustrates an excerpt of the post-processed output for distinct cycles from different white-goods appliances, each injected with an anomaly at a random point in its cycle, marked in red. Depending on the evaluation strategy, the system should identify any or all points within this region of the input (in watts, in the case of Figure 6) as anomalous. The output shows that the cycles differ in length, demonstrating that the system is not confined to the rigid data shaping commonly found in classic machine learning approaches. In both examples, a sharp increase during an otherwise steadier period of the cycle sufficiently disrupts the autoencoder’s reconstruction, as reflected in the running red error line. Table 2 records the performance of the system during the experiment, showing the evaluation metrics used in both the point-based and collective evaluation strategies. The grading mechanism identifies each of these points well. Because the problem we address is bespoke, it has proven challenging to source or synthesise a comparable public dataset with which to evaluate more complex networks while still making good use of our incremental clustering stage.
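The link between reconstruction error and flagged points can be sketched as below. We assume the per-reading error is the root-mean-square error (RMSE) between a reading and its reconstruction, and we replace the system’s grades of deviation with a single hypothetical `threshold`; the actual grading mechanism is not specified in this section, and both function names are ours.

```python
import math
from typing import List, Sequence


def reconstruction_rmse(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Root-mean-square error between one reading and its reconstruction."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x))


def flag_readings(
    inputs: Sequence[Sequence[float]],
    reconstructions: Sequence[Sequence[float]],
    threshold: float,
) -> List[bool]:
    """Flag each reading whose reconstruction error exceeds `threshold`."""
    return [
        reconstruction_rmse(x, x_hat) > threshold
        for x, x_hat in zip(inputs, reconstructions)
    ]
```

The sequence of `reconstruction_rmse` values corresponds to a running error line, and the boolean output can be fed directly to point-based or collective scoring.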

Table 2.

Results of the simulation experiment run on all versions (where P = positive, N = negative).

When transferring the proposed method between different working conditions or different electrical appliances, the user has the option to adjust the training grace period, the cluster threshold tolerance, or the grades of deviation within the system, as well as the traditional hyperparameters of the autoencoder ensemble, should the need arise. In our experiments, including the one above, we observe similar performance across different machines with no adjustment to these parameters. As part of our future directions, we would be interested in obtaining a similar dataset from, e.g., a dishwasher on which to rerun this experiment.
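For reference, the tunable parameters named in this section can be gathered into one configuration object. This is a hypothetical sketch: `MonitorConfig` is our name, the defaults are the values reported earlier in this section, and the cluster tolerance and learning rate have no defaults because their values are not recoverable here. We also assume that “a latent representation of 80%” means a latent size of 80% of the input dimension; the grades of deviation are omitted because their form is not described.

```python
from dataclasses import dataclass


@dataclass
class MonitorConfig:
    """Hypothetical bundle of the tunable parameters named in the text."""

    cluster_tolerance: float      # value not recoverable from this section
    learning_rate: float          # value not recoverable from this section
    seasonality: int = 20         # season length for the mean seasonal profile
    grace_period: int = 320       # training observations per cluster before execution
    n_layers: int = 3             # layers in the autoencoder mapping
    latent_ratio: float = 0.8     # assumed: latent size relative to input size

    def latent_size(self, n_inputs: int) -> int:
        """Latent dimension under the 80%-of-input assumption (at least 1)."""
        return max(1, round(self.latent_ratio * n_inputs))
```

Keeping these values in one object makes it straightforward to record exactly which settings were used when moving the system to another appliance.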

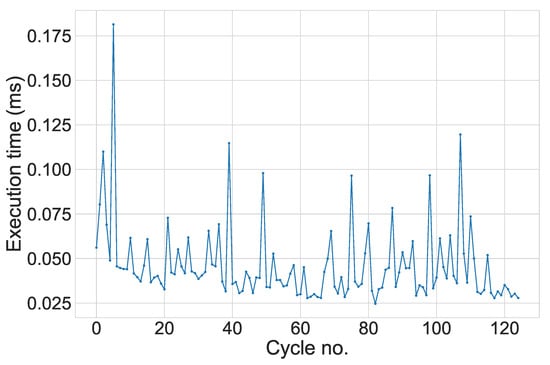

5.4. Runtime Performance

Runtime performance is another important consideration for the system. To evaluate it, we non-obtrusively monitored the system during execution using wrapper blocks that time two core stages of the workflow: the clustering of a cycle, and the overall processing through the rest of the system (including autoencoder reconstruction). The results are shown in the timing performance graph in Figure 7.
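The wrapper blocks used for timing could take the form of a context manager like the one below. This is a sketch under our own assumptions: the stage names and the module-level `timings` dictionary are hypothetical, and `time.perf_counter` is used because it is a monotonic, high-resolution clock suited to measuring short intervals.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Dict, Iterator, List

# Elapsed wall-clock seconds per stage, one entry per timed call.
timings: Dict[str, List[float]] = defaultdict(list)


@contextmanager
def timed(stage: str) -> Iterator[None]:
    """Record the wall-clock duration of the wrapped block under `stage`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the wrapped block raises, so no cycle goes untimed.
        timings[stage].append(time.perf_counter() - start)
```

For example, wrapping the cluster-placement call in `with timed("clustering"):` and the remaining work in `with timed("processing"):` appends one duration per cycle to each list, giving per-cycle series that can be plotted as the system evolves.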

Figure 7.

Overall execution time for each wash cycle as the system evolves incrementally.

We observe that most of the system scales well as input continues, although a slight upward trend appears as the system evolves. This trend is caused by the clustering stage, an expected outcome given its runtime complexity, described previously in Section 4. As wash cycles continue to populate the cluster space, new cycles understandably take longer to place. One remedy we are interested in exploring is a mechanism for selectively thinning densely populated clusters. If performance is noticeably affected, we could identify the largest cluster(s) in the feature space and delete some of their members, freeing space with limited impact on the cluster’s significance and strength. The knowledge gained from these cycles would not be lost from the autoencoders’ parameter set; only their presence in the cluster space would be deleted.
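A possible form of the thinning mechanism, which is future work rather than part of the evaluated system, is sketched below. Assuming clusters are stored as lists of member profiles keyed by cluster id, it shrinks the largest cluster in place by keeping the members closest to its mean centroid. `thin_largest_cluster` and `keep_fraction` are hypothetical; note that discarding the most peripheral members can shift the centroid, so random subsampling is an alternative worth comparing.

```python
import math
from typing import Dict, List


def thin_largest_cluster(
    members: Dict[int, List[List[float]]], keep_fraction: float = 0.5
) -> int:
    """Hypothetical thinning: shrink the largest cluster in place.

    Keeps the members closest to the cluster's mean centroid, discarding the
    most peripheral ones first. Returns the id of the thinned cluster.
    """
    cid = max(members, key=lambda k: len(members[k]))
    rows = members[cid]
    centroid = [sum(col) / len(rows) for col in zip(*rows)]
    n_keep = max(1, math.ceil(keep_fraction * len(rows)))
    rows.sort(key=lambda r: math.dist(r, centroid))
    del rows[n_keep:]
    return cid
```

Only the stored profiles are removed; any autoencoder parameters already trained on those cycles are left untouched.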

6. Conclusions

This work describes the design and implementation of an approach toward the identification of unlabelled, undefined anomalies within cyclic time-series data (in our case, wash cycles). The need to support the inherent heterogeneity of different washing machines, wash cycles, and their varying power-consumption patterns rules out classical training approaches, even on a rich offline dataset, because the system must begin training from the first reading with few to no prior assumptions.

The system presented offers generous flexibility in terms of the appliances it can monitor through incremental clustering. The ability to hot-swap a cycle’s data stream and parameters to the autoencoder ensemble in real time allows radically varied data to be processed, and the system is easily optimised for the general nature of any data with minor high-level parameter tweaks, as shown in our evaluation. Evaluated under both point-based and collective anomaly detection, the system demonstrates competent performance despite being shown only a single pass of incoming data.

In terms of future work, attention should be paid to the time complexity of the incremental clustering algorithm. Although the algorithm is lightweight and stores cycles only in condensed form in the cluster space, it may eventually become too intensive to process on edge hardware. A preliminary idea is to develop a thinning mechanism that prunes high-intensity clusters to reduce the dataset size whilst preserving the identity and strength of each cluster. Additionally, we would be interested in exploring the performance gains from multithreading the autoencoder ensemble across multiple cores. On the machine learning side, we would be interested in adding a classifier at the end of this pipeline to classify anomalies in contexts where their types are known.

Author Contributions

Conceptualization, A.C.C. and S.A.R.Z.; methodology, A.C.C. and S.A.R.Z.; software, A.C.C.; validation, A.C.C. and D.M.; formal analysis, A.C.C. and D.M.; investigation, A.C.C.; resources, A.C.C. and S.A.R.Z.; data curation, A.C.C.; writing—original draft preparation, A.C.C.; writing—review and editing, A.C.C., S.A.R.Z. and D.M.; visualization, A.C.C.; supervision, S.A.R.Z. and D.M.; and project administration, S.A.R.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data are not publicly available due to obligatory restrictions placed on the research and its outcomes.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.


References

1. Du, S.; Li, T.; Yang, Y.; Horng, S.J. Multivariate time-series forecasting via attention-based encoder–decoder framework. Neurocomputing 2020, 388, 269–279.

2. Hsieh, R.J.; Chou, J.; Ho, C.H. Unsupervised online anomaly detection on multivariate sensing time-series data for smart manufacturing. In Proceedings of the 2019 IEEE 12th Conference on Service-Oriented Computing and Applications (SOCA), Kaohsiung, Taiwan, 18–21 November 2019; pp. 90–97.

3. Weber, M.; Domeniconi, G.; Chen, J.; Weidele, D.K.I.; Bellei, C.; Robinson, T.; Leiserson, C.E. Anti-money laundering in bitcoin: Experimenting with graph convolutional networks for financial forensics. arXiv 2019, arXiv:1908.02591.

4. Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; Soderstrom, T. Detecting spacecraft anomalies using LSTMs and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 387–395.

5. Canizo, M.; Triguero, I.; Conde, A.; Onieva, E. Multi-head CNN–RNN for multi-time-series anomaly detection: An industrial case study. Neurocomputing 2019, 363, 246–260.

6. Garcia, G.R.; Michau, G.; Ducoffe, M.; Gupta, J.S.; Fink, O. Time Series to Images: Monitoring the Condition of Industrial Assets with Deep Learning Image Processing Algorithms. arXiv 2020, arXiv:2005.07031.

7. Smith, A.; Lee, T.Y.; Poursabzi-Sangdeh, F.; Boyd-Graber, J.; Elmqvist, N.; Findlater, L. Evaluating visual representations for topic understanding and their effects on manually generated topic labels. Trans. Assoc. Comput. Linguist. 2017, 5, 1–16.

8. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

9. Vieira, A.; Ribeiro, B. Introduction to Deep Learning Business Applications for Developers; Apress: New York, NY, USA, 2018.

10. Werbos, P.J. Backpropagation through time: What it does and how to do it. Proc. IEEE 1990, 78, 1550–1560.

11. Chauvin, Y.; Rumelhart, D.E. (Eds.) Backpropagation: Theory, Architectures, and Applications; Psychology Press: London, UK, 1995.

12. Renström, N.; Bangalore, P.; Highcock, E. System-wide anomaly detection in wind turbines using deep autoencoders. Renew. Energy 2020, 157, 647–659.

13. Yan, W. Toward automatic time-series forecasting using neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1028–1039.

14. Lei, Y.; Jia, F.; Lin, J.; Xing, S.; Ding, S.X. An intelligent fault diagnosis method using unsupervised feature learning towards mechanical big data. IEEE Trans. Ind. Electron. 2016, 63, 3137–3147.

15. Bandara, K.; Bergmeir, C.; Smyl, S. Forecasting across time-series databases using recurrent neural networks on groups of similar series: A clustering approach. Expert Syst. Appl. 2020, 140, 112896.

16. Wallace, C.S.; Dowe, D.L. MML clustering of multi-state, Poisson, von Mises circular and Gaussian distributions. Stat. Comput. 2000, 10, 73–83.

17. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810.

18. Choi, Y.J.; Lee, B. Combining LSTM network ensemble via adaptive weighting for improved time-series forecasting. Math. Probl. Eng. 2018, 2018, 2470171.

19. Mirsky, Y.; Doitshman, T.; Elovici, Y.; Shabtai, A. Kitsune: An ensemble of autoencoders for online network intrusion detection. arXiv 2018, arXiv:1802.09089.

20. Laurinec, P.; Lucká, M. Clustering-based forecasting method for individual consumers electricity load using time-series representations. Open Comput. Sci. 2018, 8, 38–50.

21. Laurinec, P. TSrepr R package: Time-series representations. J. Open Source Softw. 2018, 3, 577.

22. Kaufman, L.; Rousseeuw, P.J. Finding Groups in Data: An Introduction to Cluster Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2009; Volume 344.

23. Shah, S.; Singh, M. Comparison of a time efficient modified K-mean algorithm with K-mean and K-medoid algorithm. In Proceedings of the 2012 International Conference on Communication Systems and Network Technologies, Rajkot, India, 11–13 May 2012; pp. 435–437.

24. Van der Laan, M.; Pollard, K.; Bryan, J. A new partitioning around medoids algorithm. J. Stat. Comput. Simul. 2003, 73, 575–584.

25. Pimentel, M.A.; Clifton, D.A.; Clifton, L.; Tarassenko, L. A review of novelty detection. Signal Process. 2014, 99, 215–249.

26. Pourpanah, F.; Zhang, B.; Ma, R.; Hao, Q. Anomaly detection and condition monitoring of UAV motors and propellers. In Proceedings of the 2018 IEEE Sensors, New Delhi, India, 28–31 October 2018; pp. 1–4.

27. Jiang, X.; Liu, J.; Wang, J.; Nie, Q.; Wu, K.; Liu, Y.; Wang, C.; Zheng, F. SoftPatch: Unsupervised Anomaly Detection with Noisy Data. Adv. Neural Inf. Process. Syst. 2022, 35, 15433–15445.

28. Matsue, K.; Sugiyama, M. Unsupervised Tensor based Feature Extraction and Outlier Detection for Multivariate Time Series. In Proceedings of the 2021 IEEE 8th International Conference on Data Science and Advanced Analytics (DSAA), Porto, Portugal, 6–9 October 2021; pp. 1–12.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).