Abstract

Unmanned aerial vehicles (UAVs) are gaining application value in many fields because of their low cost, small size, high mobility and other advantages. In traditional cellular networks, UAVs can act as aerial mobile base stations that promptly collect information from edge users. UAVs therefore provide a promising communication tool for edge computing. However, because of their limited battery capacity, UAVs may not be able to collect all of the information. Path planning allows the UAV to collect as much data as possible within a limited flight distance, which makes UAV path planning an important research topic. In addition, because of the particular characteristics of air-to-ground communication, the flying altitude of the UAV has a crucial impact on the channel quality between the UAV and the user. Deep reinforcement learning (DRL) is a mature and important machine learning approach that can be deployed in unknown environments. Applying DRL to data collection in UAV-assisted cellular networks allows UAVs to find a jointly optimized path-planning and altitude scheme, which ensures that they collect more information under limited energy consumption, saves human and material resources as much as possible, and ultimately achieves higher application value. In this work, we transform the UAV path planning problem into a Markov decision process (MDP). By applying the proximal policy optimization (PPO) algorithm, our proposed algorithm realizes adaptive path planning for the UAV. Simulations are conducted to verify the performance of the proposed scheme compared with the conventional scheme.

1. Introduction

Since the advent of the first unmanned aerial vehicle (UAV) in the 1920s, UAVs have gained strong application value across many fields thanks to their low cost, small size, mobility, and ability to easily carry cameras and a variety of sensors. In areas without sufficient ground infrastructure, such as sparsely populated forest areas or regions with harsh natural conditions, data usually need to be collected regularly to prevent emergencies such as fires. In these areas, UAVs can be a good substitute for human labor. In addition, when natural disasters such as earthquakes and floods cause extensive damage to ground base stations, UAVs can serve as mobile base stations to support search and rescue. Therefore, UAVs are very promising for data collection as an effective tool to assist traditional cellular-network-based communication [1,2,3,4,5].

Data collection is the most basic task in the Internet of Things (IoT). At present, data from IoT terminal devices can be received and sent through wired transmission (fiber, network cable), wireless transmission (Bluetooth, Wi-Fi), mobile communication networks (4G, 5G), and other methods. As the IoT continues to expand, the number of terminal devices is increasing dramatically, and traditional data collection and transmission methods can no longer fully meet today’s application requirements. Using UAVs for data collection is more flexible and suits IoT scenarios in which ground access is difficult. In recent years, with the rapid development of UAV technology, the UAV has become an important mobile collector for IoT data collection. The advantages of UAV-assisted IoT data collection are as follows: (1) simple deployment and strong functional scalability. A UAV can carry communication equipment and act as a mobile base station or relay node, which greatly expands the scope of data collection, reduces data transmission delay, and increases bandwidth. It is easy to program and can intelligently perform path planning, node search, decision-making, obstacle avoidance, etc., by interacting with environmental information; (2) strong mobility, which effectively extends the network lifetime. For large-scale monitoring areas with difficult ground access, a UAV can quickly collect the data, reducing both the energy consumption of relay nodes and the radio interference caused by hidden-terminal and collision problems in multi-hop routing. This avoids network paralysis, prolongs the network lifetime, and reduces planning costs; (3) high survival rate and low training and maintenance costs.
The widespread use of UAVs is inseparable from the continuous efforts of researchers, and UAVs are developing in more economical and practical directions.

However, many problems remain in the deployment of UAVs. Because of their size, the battery capacity of UAVs is very limited [6,7,8]. Special attention must therefore be paid to enabling the UAV to collect data within a limited flight distance. In addition, because the flight altitude affects the communication link between the UAV and the user [9,10,11], the trajectory and flight altitude of the UAV need to be jointly optimized to improve its energy efficiency as much as possible. To solve this problem, a large number of works have studied data collection mechanisms for UAV-assisted cellular networks. The work in [12] aimed to maximize the amount of data collected by the UAV by controlling its flight speed. The work in [13] jointly optimized the data collection scheduling, the trajectory of the UAV, the data collection time and the transmission power of ground nodes to maximize the minimum amount of data collected by the UAV. The work in [14] proposed an algorithm to optimize UAV-based data acquisition with dynamic clustering in large-scale outdoor IoT scenarios. The work in [15] considered two data collection problems, i.e., full or partial data collection from IoT devices by the UAV, and found a closed tour as the UAV trajectory to maximize the data collected within the tour. The work in [16] jointly optimized the travel path of the UAV and the transmission power of the sensors during data upload, subject to the sensors’ power and energy constraints.

As networks grow larger, traditional algorithms become increasingly difficult to apply to the data collection problem [17,18,19,20,21]. Therefore, many researchers in related fields have turned to reinforcement learning. The work in [22] adopted the Q-learning algorithm by formulating the speed control of a UAV as a Markov decision process (MDP), taking the energy status and location into account to optimize the speed control of the UAV. The work in [23] reduced the overall packet loss by optimizing the UAV cruise velocity and the ground sensors selected for data collection through a proposed DRL algorithm. The work in [24] extended the single-agent deep option learning (SADOL) algorithm to a multi-agent deep option learning (MADOL) algorithm to minimize the flight time of rechargeable UAVs. The work in [25] optimized the UAV trajectory and power allocation to maximize the fairness of throughput among all sensor nodes while minimizing energy consumption.

Proximal policy optimization (PPO) is a popular reinforcement learning algorithm that has been widely adopted because of its strong performance on a variety of tasks. Its main advantages are as follows:

- Stability and robustness: PPO constrains the size of each policy update (for example, by clipping the probability ratio between the new and old policies), which prevents catastrophically large updates that could destabilize training in complex environments.

- Sample efficiency: because PPO reuses each batch of collected experience for several epochs of minibatch updates, it typically needs fewer environment interactions than vanilla policy-gradient methods. This matters in real-world scenarios, where obtaining large amounts of data can be time-consuming and expensive.

- Scalability: PPO can be used to train large neural networks on distributed computing systems, which makes it well suited to complex tasks such as playing video games or controlling robots.

Overall, PPO is regarded as a strong reinforcement learning algorithm because of its stability, sample efficiency, and scalability. It has become one of the most popular algorithms in the field and is widely used by researchers and practitioners to solve a variety of complex tasks.

Several applications of reinforcement learning in wireless networks have been proposed in existing works. However, most of them consider scenarios with relatively few states or actions. In addition, the altitude of the UAV has a significant impact on UAV-to-ground communication. Therefore, unlike existing works, we consider a more practical scenario of PPO-based data collection by UAVs. We take the trajectory of the UAV, its hovering altitude, wireless charging, etc., into account when building the model. Moreover, the energy efficiency is maximized by jointly optimizing the path and flight altitude of the UAV, and we verify the performance of the proposed algorithm through extensive simulations. The contributions of this work are as follows:

- A realistic scenario for recharging at the network edge is proposed, in which the UAV can hover near a charging point to recharge while collecting data.

- In order to improve the system energy efficiency, we propose a trajectory and altitude joint optimization algorithm based on PPO. The path and altitude of the UAV are adjusted through PPO to optimize the energy efficiency of the system.

2. System Model

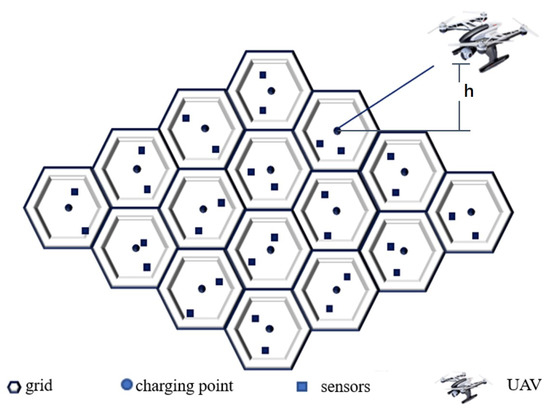

Since the exploration area is large, we divide it into N grids. The center point of each grid serves as a charging point for the UAV, and each grid contains J sensors. The radius of each grid is denoted as a constant , as shown in Figure 1. The UAV only moves between charging points and hovers over them to collect data from the sensors. To simplify the problem, we assume that each sensor has already collected its data and that the packaged data have a length of Z, so the upload time can be expressed as a fixed time slot, denoted as a constant . Sensors whose data have not yet been uploaded broadcast a signal, and a sensor stops broadcasting once its data are uploaded. The wireless channels of the sensors are mutually orthogonal.

Figure 1.

System model.

The radius of the UAV communication range is a fixed value, denoted as a constant R, and its flight altitude is denoted as h; then, the actual ground coverage of the UAV is , which is larger than the range of a single grid, denoted as . To simplify the problem, we set . Considering the practicality of the model, there is a minimum elevation angle between the UAV and the sensor; if the elevation angle is smaller than , the UAV does not serve the sensor, where . Therefore, the flying altitude of the UAV affects the number of sensors it serves. At the same time, since the signal fades with increasing distance, serving more sensors comes at the cost of lower service quality.
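To make the altitude trade-off concrete, the following Python sketch checks whether a sensor can be served. Because the exact expressions are not reproduced here, it assumes that the communication range is a sphere of radius R centered on the UAV, so the ground coverage radius is sqrt(R² − h²), and that the elevation angle to a sensor at horizontal distance d is arctan(h/d). The function names and the parameter `theta_min_deg` are hypothetical.

```python
import math


def ground_coverage_radius(R: float, h: float) -> float:
    """Radius of the ground disc inside a spherical communication range of
    radius R when the UAV hovers at altitude h (assumed geometry)."""
    if h >= R:
        return 0.0  # the range sphere does not reach the ground
    return math.sqrt(R * R - h * h)


def elevation_angle_deg(h: float, horizontal_dist: float) -> float:
    """Elevation angle, in degrees, from a ground sensor up to the UAV."""
    return math.degrees(math.atan2(h, horizontal_dist))


def is_served(h: float, horizontal_dist: float, R: float, theta_min_deg: float) -> bool:
    """A sensor is served only if it lies inside the ground coverage disc
    and its elevation angle is at least theta_min_deg."""
    inside = horizontal_dist <= ground_coverage_radius(R, h)
    return inside and elevation_angle_deg(h, horizontal_dist) >= theta_min_deg


# R = 500 m, minimum elevation 30 degrees, sensor 300 m away horizontally.
for h in (100.0, 200.0, 450.0):
    print(h, is_served(h, 300.0, 500.0, 30.0))
# 100.0 False  (elevation angle too small)
# 200.0 True
# 450.0 False  (coverage radius shrinks below 300 m)
```

A sensor at horizontal distance d passes the elevation test only when h ≥ d·tan(θ_min), while the coverage radius sqrt(R² − h²) shrinks as h grows, so the set of servable sensors depends on altitude in both directions. This is why altitude is treated as a decision variable.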

Let denote the location of the charging point in grid n, and denote the location of the user j in grid n, where and denote the distance between the user j in grid n and the charging point in grid n in x and y dimensions, respectively. The location of the base station and the hovering location of the UAV over grid n are denoted as , and , respectively. The path loss is

where f is the carrier frequency. In this model, we set the transmission power to a constant P and define the wireless channel gain between the UAV hovering above grid n and sensor j as

Then, the channel throughput can be calculated by Shannon’s formula as follows:

where B is the channel bandwidth, is the Gaussian white noise power, and represents the minimum SNR. If the elevation angle is less than the minimum elevation angle or the SNR is less than the minimum SNR, the UAV does not serve the sensor; that is, the allocated bandwidth, and hence the throughput, is zero.
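The link model can be sketched as follows. Only the carrier frequency f is named in the path-loss description, so the sketch assumes the free-space path loss 20·log10(4π·d·f/c) dB over the 3-D UAV-to-sensor distance d; the paper’s exact path-loss and channel-gain expressions may differ. It then applies Shannon’s formula with a linear SNR equal to P·g divided by the noise power, returning zero throughput for a sensor that fails the elevation-angle or minimum-SNR check. All function and argument names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 3e8  # m/s (approximate)


def distance_3d(uav_xyz, sensor_xy):
    """Distance between a UAV at (x, y, h) and a ground sensor at (x, y)."""
    dx = uav_xyz[0] - sensor_xy[0]
    dy = uav_xyz[1] - sensor_xy[1]
    return math.sqrt(dx * dx + dy * dy + uav_xyz[2] ** 2)


def free_space_path_loss_db(distance_m, carrier_hz):
    """Assumed free-space model: 20*log10(4*pi*d*f/c) in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * carrier_hz / SPEED_OF_LIGHT)


def channel_gain(distance_m, carrier_hz):
    """Linear power gain corresponding to the path loss in dB."""
    return 10 ** (-free_space_path_loss_db(distance_m, carrier_hz) / 10)


def throughput_bps(bandwidth_hz, tx_power_w, gain, noise_w, snr_min, elevation_ok=True):
    """Shannon throughput B*log2(1 + SNR); zero when the sensor is not served."""
    snr = tx_power_w * gain / noise_w  # linear SNR, not dB
    if not elevation_ok or snr < snr_min:
        return 0.0
    return bandwidth_hz * math.log2(1 + snr)


d = distance_3d((0.0, 0.0, 120.0), (30.0, 40.0))  # 130 m slant distance
g = channel_gain(d, 2.4e9)
print(d, throughput_bps(1e6, 0.1, g, 1e-13, snr_min=10.0))
```

Doubling the distance adds 20·log10(2) ≈ 6 dB of loss in this model, which is the distance-driven drop in service quality that the system model trades against coverage.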

Assume that the UAV collects data from U sensors. Let denote the set of grids served by the UAV and the order in which the sensors in these grids are served, where , , and is the base station. We take the UAV flying from grid m to above grid n as an example to study its energy efficiency. Since a UAV consumes different amounts of power depending on whether it is hovering or flying, we denote its power consumption in these two motion states by and , respectively. Therefore, the energy consumption of the UAV can be obtained as follows:

where V is the flying speed of the UAV. Thus, the energy efficiency formula of the UAV with can be expressed as

Traditional schemes often consider only the cruising time of the UAV, but improving its energy efficiency is also an important system objective. This design therefore takes the UAV’s energy efficiency as the optimization goal and, on that basis, applies reinforcement learning to this model. The optimization problem is denoted as
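The energy model combines flight energy (flying power times flight time, where flight time is distance divided by V) with hovering energy (hovering power times hovering time), and energy efficiency is the ratio of collected data to total energy. The sketch below assumes that each leg is a straight 3-D line between consecutive hovering positions; the names `p_fly` and `p_hover` are hypothetical stand-ins for the two power-consumption symbols, and the function names are likewise illustrative.

```python
import math


def tour_energy(waypoints, hover_times, speed, p_fly, p_hover):
    """Total energy (J) for a tour of hovering positions.

    waypoints: hovering positions (x, y, h) in metres, in visiting order
    hover_times: seconds spent hovering at each waypoint
    speed: flying speed V in m/s
    p_fly, p_hover: flying and hovering power in W
    """
    # Each leg is assumed to be a straight line flown at constant speed V.
    fly_distance = sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))
    return p_fly * fly_distance / speed + p_hover * sum(hover_times)


def energy_efficiency(bits_collected, energy_j):
    """Collected data per unit energy, in bits per joule."""
    return bits_collected / energy_j


tour = [(0.0, 0.0, 100.0), (300.0, 400.0, 100.0)]  # one 500 m leg
e = tour_energy(tour, hover_times=[0.0, 20.0], speed=10.0, p_fly=100.0, p_hover=50.0)
print(e, energy_efficiency(6e6, e))  # 6000.0 1000.0
```

Maximizing this ratio, rather than throughput alone, favors short flight routes relative to the amount of data collected on each hover.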

3. PPO-Based Data Collection Algorithm

First, we model the UAV data collection process as a Markov decision process (MDP). We take the service state of the UAV as the state space , the change in the service state of the UAV as the action space , the energy efficiency of the UAV as the reward r, and the state transition probability of the UAV as the transition function . Then, the MDP can be expressed as :

: The state space describes the UAV hovering over a charging point and serving the sensors. There are states in this model; that is, each state has three dimensions: the UAV hovering position, the sensor served, and the hovering altitude.

: The action space represents the set of changes in the location of the UAV or in its service object. It includes the following three cases: (1) the UAV position is unchanged and the service object changes; (2) the altitude of the UAV changes and the service object remains unchanged; (3) the UAV position changes. For any state, there are states to choose from .

r: The reward represents the energy efficiency of the UAV when it selects an action and reaches the next state. Denote the t-th state as and the corresponding action as . The reward obtained by taking the action is denoted as , which is determined by (5).

: The transition function represents the probability of UAV state transition. Denote the probability of the UAV transitioning from state to as . Due to the Markov property of MDP, . Thus, the state transition matrix between the two steps can be obtained as .
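The state and action definitions above can be illustrated with a small Python sketch that encodes a state as a (grid, sensor, altitude) index triple and enumerates the successor states reachable under the three action types. Two details are not specified in the text and are assumptions here: in case (1) the altitude is kept, and after moving to a new grid in case (3) any (sensor, altitude) pair there may be selected. All names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class State:
    grid: int      # charging point (grid) the UAV hovers over
    sensor: int    # sensor currently being served in that grid
    altitude: int  # index into the discrete set of hovering altitudes


def all_states(n_grids, n_sensors, n_altitudes):
    """Every (grid, sensor, altitude) combination."""
    return [State(g, j, k)
            for g, j, k in product(range(n_grids), range(n_sensors), range(n_altitudes))]


def successors(s, n_grids, n_sensors, n_altitudes):
    """States reachable from s under the three action types."""
    nxt = []
    # (1) same position, different sensor (altitude kept, assumed)
    nxt += [State(s.grid, j, s.altitude) for j in range(n_sensors) if j != s.sensor]
    # (2) same sensor, different altitude
    nxt += [State(s.grid, s.sensor, k) for k in range(n_altitudes) if k != s.altitude]
    # (3) move to another grid; any sensor and altitude there (assumed)
    nxt += [State(g, j, k)
            for g in range(n_grids) if g != s.grid
            for j in range(n_sensors)
            for k in range(n_altitudes)]
    return nxt


print(len(all_states(4, 3, 2)), len(successors(State(0, 0, 0), 4, 3, 2)))  # 24 21
```

Even this toy setting yields 21 successors per state, which illustrates why a tabular method scales poorly as the numbers of grids, sensors, and altitude levels grow.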

PPO is a widely used reinforcement learning algorithm known for its strong robustness. It combines the policy gradient method with an actor–critic architecture. A typical actor–critic architecture contains two networks: an actor and a critic. The actor network interacts with the environment by selecting actions, and the resulting feedback from the environment is stored in an experience pool. The critic network then evaluates whether the action selected by the actor network is advantageous by estimating the advantage function with parameters , denoted by

where is the agent’s policy, which is determined by the actor network with parameters . denotes the expected total reward of taking the selected action in the current state under the current policy, and represents the expected reward averaged over all actions under the current policy in that state, as estimated by the critic network. The actor network and the critic network update their parameters and , respectively, by gradient-based optimization to jointly optimize the policy . However, the standard actor–critic architecture is overly sensitive to its parameters, in particular to the size of each policy update. To address this, PPO splits the actor into an actor network and an old actor network, and introduces a parameter to constrain the update range of in the actor network. Specifically, the update formulas of PPO’s old actor network, actor network, and critic network can be expressed, respectively, as

The implementation of the PPO algorithm in the data collection is presented in Algorithm 1.

| Algorithm 1 PPO-based Data Collection Algorithm. | |
|---|---|
| Input: locations of users , location of the base station , Z, , system bandwidth B, and transmit power of the UAV P. | |
| Output: system energy efficiency , system delay | |
| 1: | Initialize the environment and the state of the UAV. |
| 2: | Initialize the parameters . |
| 3: | Clear the experience buffer. |
| 4: | for each episode do |
| 5: | Interact with the environment for T time steps and store each four-tuple in the experience buffer. |
| 6: | Calculate the advantage function according to . |
| 7: | for for do |
| 8: | Update the parameters of the actor and critic networks according to (9) and (10), respectively. |
| 9: | end for |
| 10: | |
| 11: | Clear the experience buffer. |
| 12: | end for |
| 13: | return |
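Steps 6 and 8 of Algorithm 1 can be sketched in plain Python. Because the advantage and update expressions are not reproduced here, the sketch assumes two common choices: advantages computed as bootstrapped discounted returns minus the critic’s value estimates, and the clipped surrogate objective of PPO-Clip, in which the clipping parameter `eps` bounds how far the ratio between the actor and old-actor policies can move. The discount factor `gamma`, `eps`, and all function names are hypothetical; a real implementation would compute these losses on neural-network outputs and minimize them with an optimizer.

```python
import math


def discounted_returns(rewards, last_value, gamma=0.9):
    """G_t = r_t + gamma * G_{t+1}, bootstrapped from the critic's V(s_T)."""
    returns, running = [], last_value
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def advantages(rewards, values, last_value, gamma=0.9):
    """Step 6: A_t = G_t - V(s_t) for each step stored in the buffer."""
    return [g - v for g, v in zip(discounted_returns(rewards, last_value, gamma), values)]


def ppo_clip_loss(logp_new, logp_old, advs, eps=0.2):
    """Step 8 (actor): negative clipped surrogate, averaged over the batch.

    logp_new / logp_old are log pi(a|s) under the actor and old actor networks;
    eps bounds the policy ratio to [1 - eps, 1 + eps].
    """
    total = 0.0
    for ln, lo, a in zip(logp_new, logp_old, advs):
        ratio = math.exp(ln - lo)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * a, clipped * a)  # pessimistic of the two terms
    return -total / len(advs)  # minimise the negative objective


def critic_loss(values, returns):
    """Step 8 (critic): mean-squared error between V(s_t) and G_t."""
    return sum((g - v) ** 2 for v, g in zip(values, returns)) / len(values)


rets = discounted_returns([1.0, 1.0], last_value=0.0, gamma=0.5)
print(rets, advantages([1.0, 1.0], [1.0, 1.0], 0.0, gamma=0.5))  # [1.5, 1.0] [0.5, 0.0]
print(critic_loss([1.0, 1.0], rets))  # 0.125
print(ppo_clip_loss([math.log(1.5)] * 2, [0.0, 0.0], [1.0, -1.0]))
```

In the last example, the ratio of 1.5 is capped at 1.2 for the positive advantage, whereas for the negative advantage the unclipped term is smaller and is kept, so the objective stays a pessimistic bound and large policy jumps gain nothing.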

4. Simulation Results

Unless otherwise specified, the simulation parameters are listed in Table 1.

Table 1.

Simulation parameters.

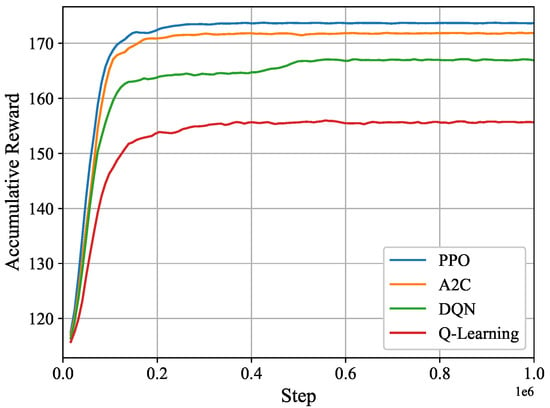

We compare the proposed algorithm with other conventional algorithms, namely the Q-learning-based data collection algorithm [26], the Deep Q-Network (DQN)-based data collection algorithm, and the advantage actor–critic (A2C)-based data collection algorithm, as shown in Figure 2. The Q-learning-based data gathering algorithm performs worst because it relies on a traditional Q-table: with a large number of states and actions, the Q-table that must be built becomes very large. DQN [27] is an improved version of Q-learning that uses a neural network to approximate the Q-values in place of a Q-table. DQN also uses two effective strategies, namely experience replay and fixed Q-targets, to break the correlation between experiences and help convergence. The experience buffer stores past transitions, so the neural network can use batch learning: it randomly draws multiple transitions from the buffer and learns its parameters by backpropagation, which breaks the correlation between consecutive transitions. Fixed Q-targets introduce two neural networks with the same architecture, called eval-net and target-net. The parameters of target-net are updated only periodically, by copying those of eval-net, which further reduces the correlation between the targets and the current estimates. A2C performs better than Q-learning and DQN because it introduces the actor–critic framework; it is a synchronous, deterministic variant of the asynchronous advantage actor–critic (A3C) [28]. However, none of them performs as well as the PPO algorithm, which demonstrates the superior performance of our proposed scheme.
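The experience-replay mechanism described above can be sketched as a fixed-capacity buffer that stores transitions and returns uniformly random minibatches, which breaks the temporal correlation between consecutive samples. This is a generic illustration with hypothetical class and method names, not the DQN baseline used in the simulations.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state) transitions."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first

    def push(self, state, action, reward, next_state):
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size):
        """Uniformly random minibatch, drawn without replacement."""
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


buf = ReplayBuffer(capacity=3)
for t in range(5):
    buf.push(t, 0, 1.0, t + 1)
print(len(buf), sorted(s for s, *_ in buf.sample(3)))  # 3 [2, 3, 4]
```

Because the buffer keeps only the most recent transitions, the minibatches mix recent experience in random order instead of feeding the network one trajectory step at a time.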

Figure 2.

The results of RL algorithms.

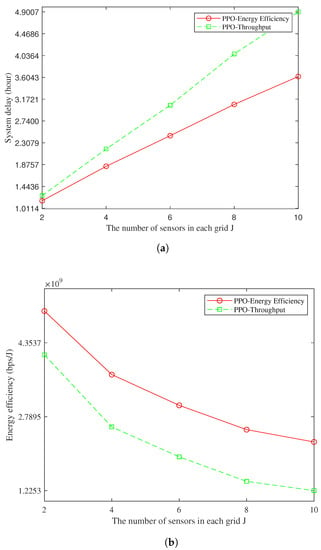

As a comparison algorithm, we introduce a traditional data gathering algorithm that maximizes throughput. It also uses PPO, but with as the reward; that is, the reward of the UAV can be expressed as. We denote our proposed algorithm as PPO-Energy Efficiency and the comparison algorithm as PPO-Through. From Figure 3a, the delay of both algorithms increases monotonically with the number of sensors, because the hovering time of the UAV grows with the amount of data collected. However, the delay of PPO-Energy Efficiency is always lower than that of PPO-Through as the number of sensors increases. This shows that, in order to reduce energy consumption, the UAV tends to choose shorter flight routes, which greatly reduces the system delay. Therefore, PPO-Energy Efficiency consistently outperforms PPO-Through in terms of timeliness. In addition, the delay of PPO-Energy Efficiency grows increasingly slowly as the number of sensors increases, while the delay of PPO-Through keeps increasing linearly, which indicates that the larger the amount of data, the more pronounced the delay advantage of PPO-Energy Efficiency. From Figure 3b, the energy efficiency of both algorithms decreases as the number of sensors increases. A decrease in energy efficiency means that the ratio of collected data to system energy consumption decreases, i.e., the amount of collected data grows more slowly than the energy consumption. In addition, the energy efficiency of PPO-Energy Efficiency is always higher than that of PPO-Through as the number of sensors increases, showing that the former is more energy-efficient.
In summary, increasing the number of sensors increases the amount of data collected, but this improvement comes at the cost of lower energy efficiency, so the sensor density should be chosen carefully.

Figure 3.

System performance with the number of sensors in each grid. (a) System delay with the number of sensors in each grid; and (b) Energy efficiency with the number of sensors in each grid.

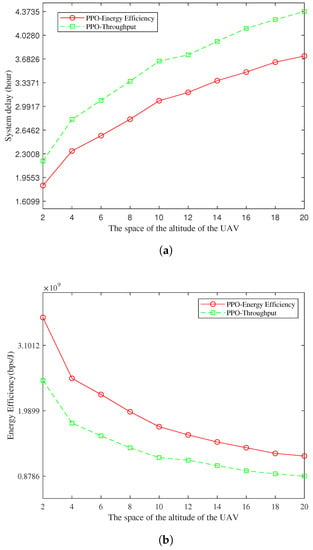

According to Figure 4a, the system delay increases as the altitude space of the UAV is enlarged, since an increase in the amount of information that the UAV can receive inevitably leads to a longer system delay. According to Figure 4b, the energy efficiency of the system decreases as the altitude space of the UAV is enlarged, for the same reason that the system energy efficiency decreases in Figure 3b. It can be concluded that enlarging the hovering-altitude space of the UAV has a similar effect to increasing the sensor density. The two differ, however: as the altitude space is enlarged, the additional amount of data collected gradually diminishes, whereas increasing the sensor density guarantees a linear increase in the amount of data collected. Moreover, enlarging the hovering-altitude space has a more significant effect on the delay and energy efficiency of the two PPO-based algorithms. However, it does not require additional sensors, so it is more economical.

Figure 4.

System performance with the space of the altitude of the UAV. (a) System delay with the space of the altitude of the UAV; and (b) Energy efficiency with the space of the altitude of the UAV.

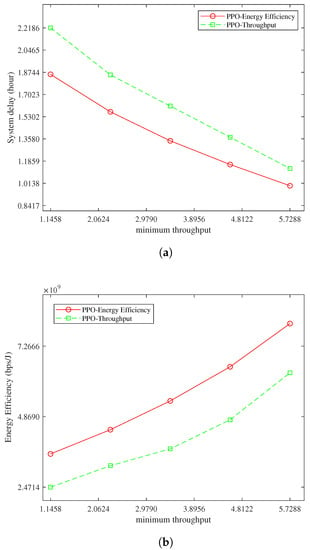

According to Figure 5a, the system delay decreases as the minimum throughput increases, because fewer sensors can be detected, which reduces both the flight delay and the hovering delay of the UAV. In addition, the delay gap between PPO-Energy Efficiency and PPO-Through narrows, because fewer sensors satisfy the SNR requirement, which leaves PPO-Energy Efficiency less flexibility to reduce delay through energy-saving UAV movements. According to Figure 5b, the system energy efficiency increases with the minimum throughput: because fewer sensors can be detected, the time the UAV spends moving between states is reduced, so the energy efficiency increases.

Figure 5.

System performance with minimum throughput: (a) System delay with minimum throughput; and (b) Energy efficiency with minimum throughput.

5. Conclusions

We consider the problem of data collection at the edge of the network, where UAVs serve as aerial base stations that provide edge computing to the network. We use deep reinforcement learning as a joint trajectory and altitude planning method to ensure that the UAVs collect more information under limited energy consumption, save as much manpower and material resources as possible, and ultimately achieve higher application value. After modeling the specific scenario, we use the PPO algorithm to guide the actions of the UAV. We perform simulations for two different optimization objectives and compare their performance. In the simulations, we examine the influence of sensor density, the UAV hovering-altitude space, and the minimum throughput on the performance of the algorithm, and find that the algorithm with energy efficiency as the optimization objective outperforms the traditional algorithm with throughput as the optimization objective in terms of both delay and energy efficiency. In addition, the system performance can be improved by appropriately increasing the sensor density, increasing the selectable flight altitudes of the UAV, and switching to a UAV with more powerful data collection capability.

Author Contributions

T.C., F.D. and H.Y. discussed and designed the forgery detection method. Y.W. designed and implemented the detection algorithm, B.W. tested and analyzed the experimental results, and Y.W. thoroughly reviewed and improved the paper. All authors have discussed and contributed to the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used to support the findings of this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wei, Z.; Zhu, M.; Zhang, N.; Wang, L.; Zou, Y.; Meng, Z.; Wu, H.; Feng, Z. UAV-Assisted Data Collection for Internet of Things: A Survey. IEEE Internet Things J. 2022, 9, 15460–15483.

- Diels, L.; Vlaminck, M.; Wit, B.D.; Philips, W.; Luong, H. On the optimal mounting angle for a spinning LiDAR on a UAV. IEEE Sens. J. 2022, 22, 21240–21247.

- Yu, J.; Guo, J.; Zhang, X.; Zhou, C.; Xie, T.; Han, X. A Novel Tent-Levy Fireworks Algorithm for the UAV Task Allocation Problem Under Uncertain Environment. IEEE Access 2022, 10, 102373–102385.

- Bansal, G.; Chamola, V.; Sikdar, B.; Yu, F.R. UAV SECaaS: Game-Theoretic Formulation for Security as a Service in UAV Swarms. IEEE Syst. J. 2021, 16, 6209–6218.

- Burhanuddin, L.A.b.; Liu, X.; Deng, Y.; Challita, U.; Zahemszky, A. QoE Optimization for Live Video Streaming in UAV-to-UAV Communications via Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2022, 71, 5358–5370.

- Pan, Y.; Chen, Q.; Zhang, N.; Li, Z.; Zhu, T.; Han, Q. Extending Delivery Range and Decelerating Battery Aging of Logistics UAVs using Public Buses. IEEE Trans. Mob. Comput. 2022.

- Wang, K.; Zhang, X.; Duan, L.; Tie, J. Multi-UAV Cooperative Trajectory for Servicing Dynamic Demands and Charging Battery. IEEE Trans. Mob. Comput. 2021, 22, 1599–1614.

- Caposciutti, G.; Bandini, G.; Marracci, M.; Buffi, A.; Tellini, B. Capacity Fade and Aging Effect on Lithium Battery Cells: A Real Case Vibration Test With UAV. IEEE J. Miniaturization Air Space Syst. 2021, 2, 76–83.

- Xu, C.; Liao, X.; Tan, J.; Ye, H.; Lu, H. Recent Research Progress of Unmanned Aerial Vehicle Regulation Policies and Technologies in Urban Low Altitude. IEEE Access 2020, 8, 74175–74194.

- Youn, W.; Choi, H.; Cho, A.; Kim, S.; Rhudy, M.B. Accelerometer Fault-Tolerant Model-Aided State Estimation for High-Altitude Long-Endurance UAV. IEEE Trans. Instrum. Meas. 2020, 69, 8539–8553.

- Li, Y.; Zhang, H.; Long, K.; Nallanathan, A. Exploring Sum Rate Maximization in UAV-based Multi-IRS Networks: IRS Association, UAV Altitude, and Phase Shift Design. IEEE Trans. Commun. 2022, 70, 7764–7774.

- Li, X.; Tan, J.; Liu, A.; Vijayakumar, P.; Kumar, N.; Alazab, M. A Novel UAV-Enabled Data Collection Scheme for Intelligent Transportation System Through UAV Speed Control. IEEE Trans. Intell. Transp. Syst. 2021, 22, 2100–2110.

- Wang, K.; Tang, Z.; Liu, P.; Cong, Y.; Wang, X.; Kong, D.; Li, Y. UAV-Based and Energy-Constrained Data Collection System with Trajectory, Time, and Collection Scheduling Optimization. In Proceedings of the 2021 IEEE/CIC International Conference on Communications in China (ICCC), Xiamen, China, 28–30 July 2021; pp. 893–898.

- Cao, H.; Yao, H.; Cheng, H.; Lian, S. A Solution for Data collection of Large-Scale Outdoor Internet of Things Based on UAV and Dynamic Clustering. In Proceedings of the 2020 IEEE 9th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 11–13 December 2020; Volume 9, pp. 2133–2136.

- Li, Y.; Liang, W.; Xu, W.; Xu, Z.; Jia, X.; Xu, Y.; Kan, H. Data Collection Maximization in IoT-Sensor Networks Via an Energy-Constrained UAV. IEEE Trans. Mob. Comput. 2021, 22, 159–174.

- Wang, Y.; Chen, M.; Pan, C.; Wang, K.; Pan, Y. Joint Optimization of UAV Trajectory and Sensor Uploading Powers for UAV-Assisted Data Collection in Wireless Sensor Networks. IEEE Internet Things J. 2022, 9, 11214–11226.

- Zhang, R.; Wang, M.; Cai, L.X.; Shen, X. Learning to Be Proactive: Self-Regulation of UAV Based Networks With UAV and User Dynamics. IEEE Trans. Wirel. Commun. 2021, 20, 4406–4419.

- Liu, R.; Qu, Z.; Huang, G.; Dong, M.; Wang, T.; Zhang, S.; Liu, A. DRL-UTPS: DRL-based Trajectory Planning for Unmanned Aerial Vehicles for Data Collection in Dynamic IoT Network. IEEE Trans. Intell. Veh. 2022, 1–14.

- Deng, D.; Wang, C.; Wang, W. Joint Air-to-Ground Scheduling in UAV-Aided Vehicular Communication: A DRL Approach With Partial Observations. IEEE Commun. Lett. 2022, 26, 1628–1632.

- Kaifang, W.; Bo, L.; Xiaoguang, G.; Zijian, H.; Zhipeng, Y. A learning-based flexible autonomous motion control method for UAV in dynamic unknown environments. J. Syst. Eng. Electron. 2021, 32, 1490–1508.

- Seid, A.M.; Boateng, G.O.; Mareri, B.; Sun, G.; Jiang, W. Multi-Agent DRL for Task Offloading and Resource Allocation in Multi-UAV Enabled IoT Edge Network. IEEE Trans. Netw. Serv. Manag. 2021, 18, 4531–4547.

- Chu, N.H.; Hoang, D.T.; Nguyen, D.N.; Huynh, N.V.; Dutkiewicz, E. Fast or Slow: An Autonomous Speed Control Approach for UAV-assisted IoT Data Collection Networks. In Proceedings of the 2021 IEEE Wireless Communications and Networking Conference (WCNC), Nanjing, China, 29 March–1 April 2021; pp. 1–6.

- Kurunathan, H.; Li, K.; Ni, W.; Tovar, E.; Dressler, F. Deep Reinforcement Learning for Persistent Cruise Control in UAV-aided Data Collection. In Proceedings of the 2021 IEEE 46th Conference on Local Computer Networks (LCN), Edmonton, AB, Canada, 4–7 October 2021; pp. 347–350.

- Zhang, Y.; Mou, Z.; Gao, F.; Xing, L.; Jiang, J.; Han, Z. Hierarchical Deep Reinforcement Learning for Backscattering Data Collection With Multiple UAVs. IEEE Internet Things J. 2021, 8, 3786–3800.

- Wang, Y.; Gao, Z.; Zhang, J.; Cao, X.; Zheng, D.; Gao, Y.; Ng, D.W.K.; Renzo, M.D. Trajectory Design for UAV-Based Internet of Things Data Collection: A Deep Reinforcement Learning Approach. IEEE Internet Things J. 2022, 9, 3899–3912.

- Clifton, J.; Laber, E.B. Q-Learning: Theory and Applications. Soc. Sci. Res. Netw. 2020, 7, 279–301.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M.A. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.P.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. In Proceedings of the ICML 2016, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1928–1937.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).