Millimeter-Wave Image Deblurring via Cycle-Consistent Adversarial Network

Abstract

1. Introduction

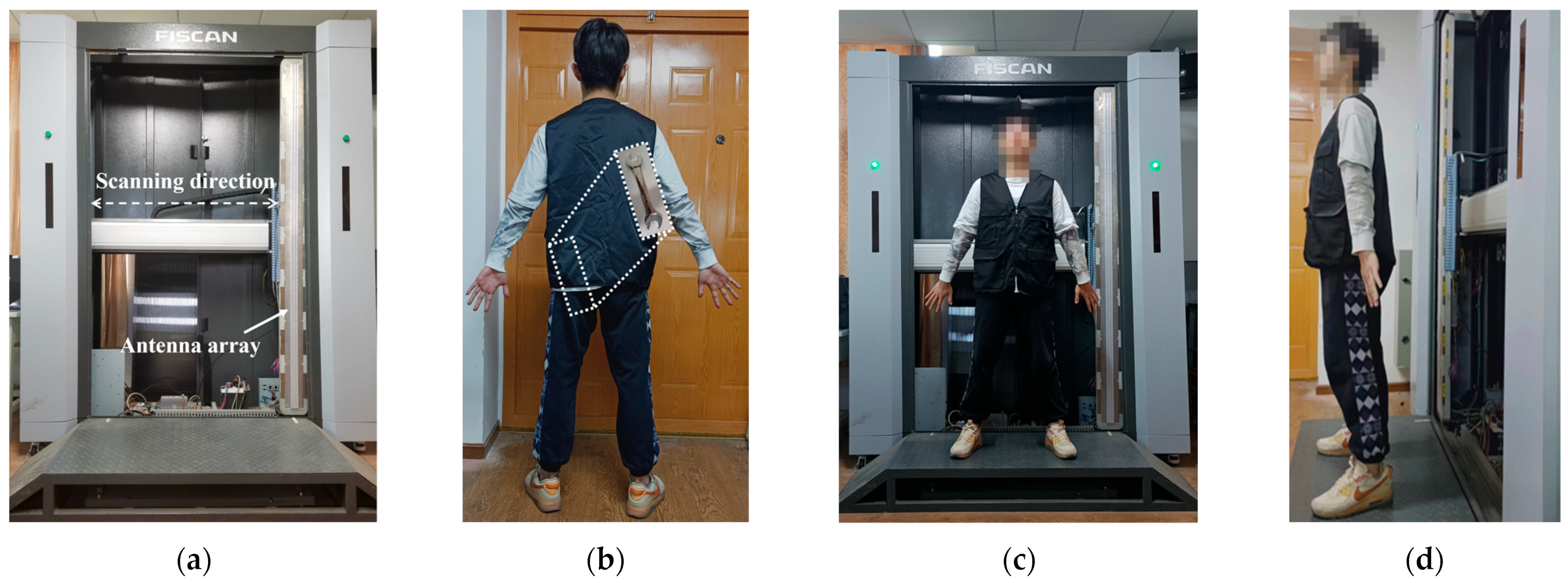

- (a) To the best of our knowledge, this is the first work to address the blurring of millimeter-wave (MMW) images caused by shaking of the human body. We propose an end-to-end deblurring architecture based on Cycle GAN.

- (b) An MSE loss and an identity loss are incorporated into Cycle GAN to better adapt the model to the MMW deblurring task.
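Contribution (b) can be illustrated with a minimal sketch of a Cycle GAN generator objective extended with identity and MSE terms. This is not the authors' implementation. The least-squares adversarial form, the use of a paired sharp reference for the MSE term, the weights `lam_cyc`, `lam_id`, and `lam_mse`, and all function names are assumptions made for illustration only.

```python
import numpy as np

def l1(a, b):
    """Mean absolute error between two arrays."""
    return float(np.mean(np.abs(a - b)))

def mse(a, b):
    """Mean squared error between two arrays (or an array and a scalar)."""
    return float(np.mean((a - b) ** 2))

def generator_objective(G, F, D_sharp, D_blur, blurred, sharp,
                        lam_cyc=10.0, lam_id=5.0, lam_mse=1.0):
    """Hypothetical generator loss: adversarial + cycle + identity + MSE.

    G maps blurred -> sharp, F maps sharp -> blurred.
    D_sharp and D_blur are discriminators returning scores in [0, 1].
    The weights are placeholders, not values from the paper.
    """
    fake_sharp = G(blurred)
    fake_blur = F(sharp)

    # Least-squares adversarial term: generators push D scores toward 1.
    adv = mse(D_sharp(fake_sharp), 1.0) + mse(D_blur(fake_blur), 1.0)

    # Cycle consistency: translating there and back should recover the input.
    cyc = l1(F(fake_sharp), blurred) + l1(G(fake_blur), sharp)

    # Identity: a generator fed an image from its target domain
    # should leave it unchanged.
    idt = l1(G(sharp), sharp) + l1(F(blurred), blurred)

    # Pixel-wise MSE against a paired sharp reference (assumed available).
    pix = mse(fake_sharp, sharp)

    total = adv + lam_cyc * cyc + lam_id * idt + lam_mse * pix
    return {"adversarial": adv, "cycle": cyc, "identity": idt,
            "mse": pix, "total": total}

if __name__ == "__main__":
    # Toy check with stand-in "networks": G zeroes its input, F adds 1.
    blurred = np.ones((4, 4))
    sharp = np.zeros((4, 4))
    always_real = lambda x: np.ones(1)
    terms = generator_objective(lambda x: x * 0.0, lambda x: x + 1.0,
                                always_real, always_real, blurred, sharp)
    print(terms)
```

In this toy setup only the identity term is non-zero, because `F` shifts an already blurred input. This is the kind of unnecessary change the identity loss is meant to penalize.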

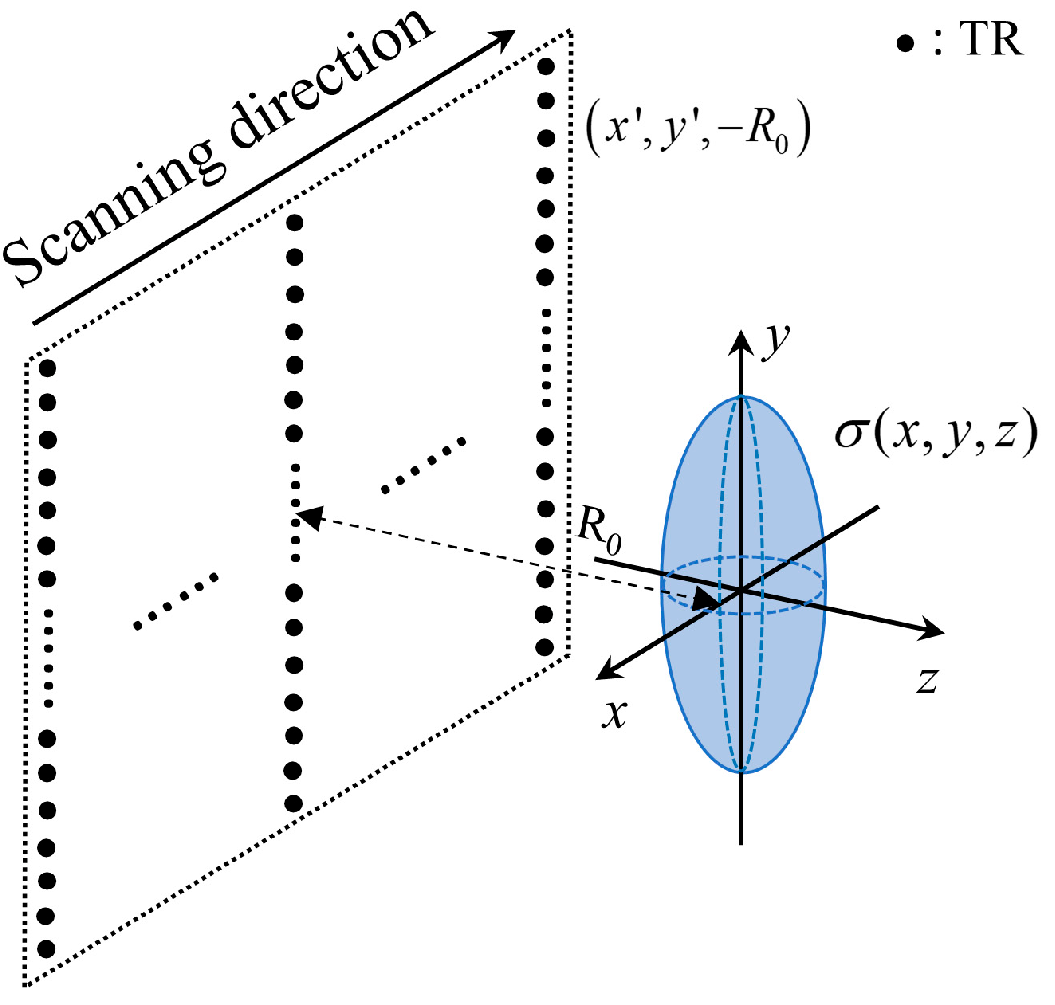

2. Formulation

2.1. Millimeter-Wave Imaging

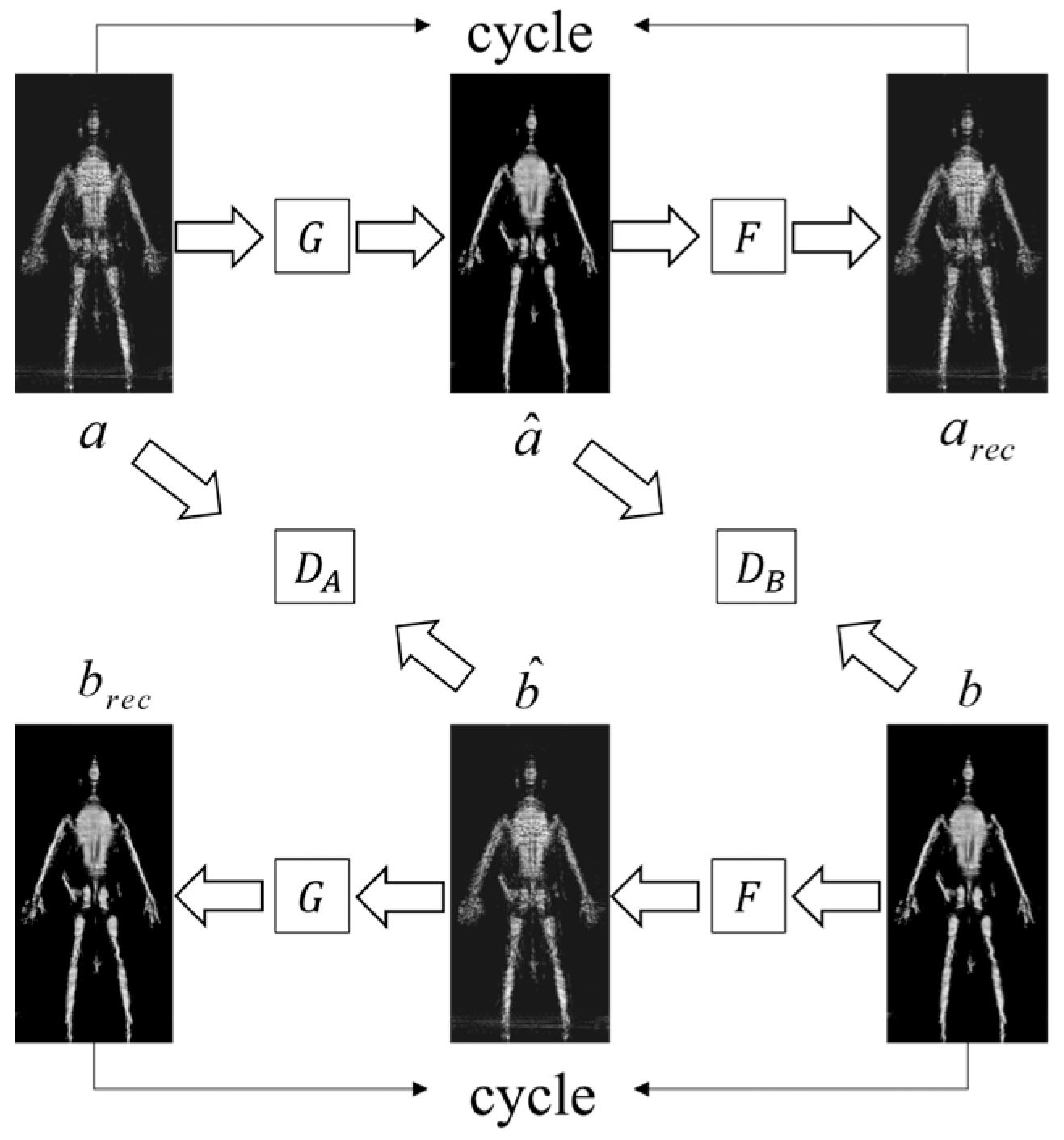

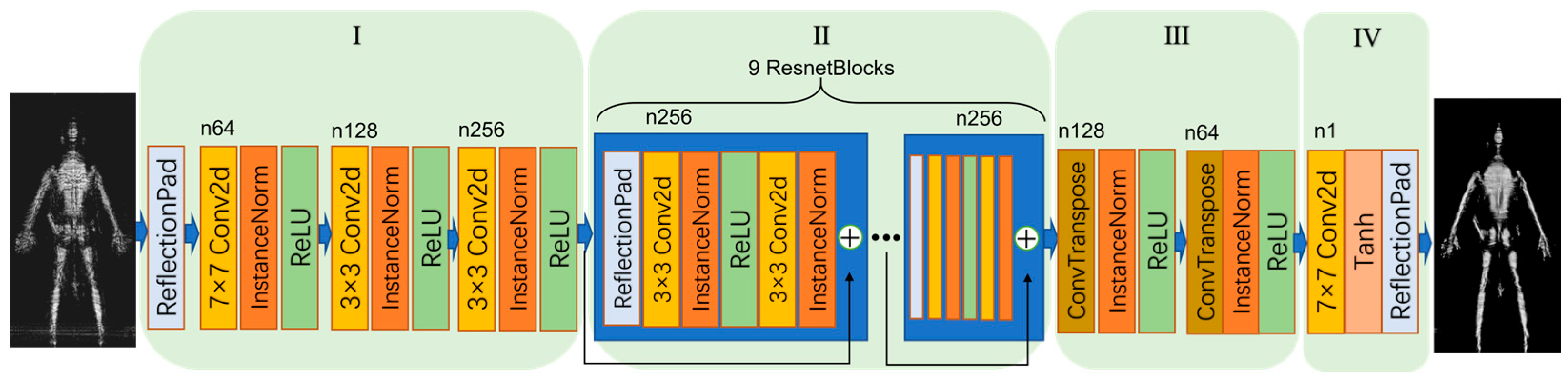

2.2. Cycle GAN Methodology for MMW Image Deblurring

3. Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


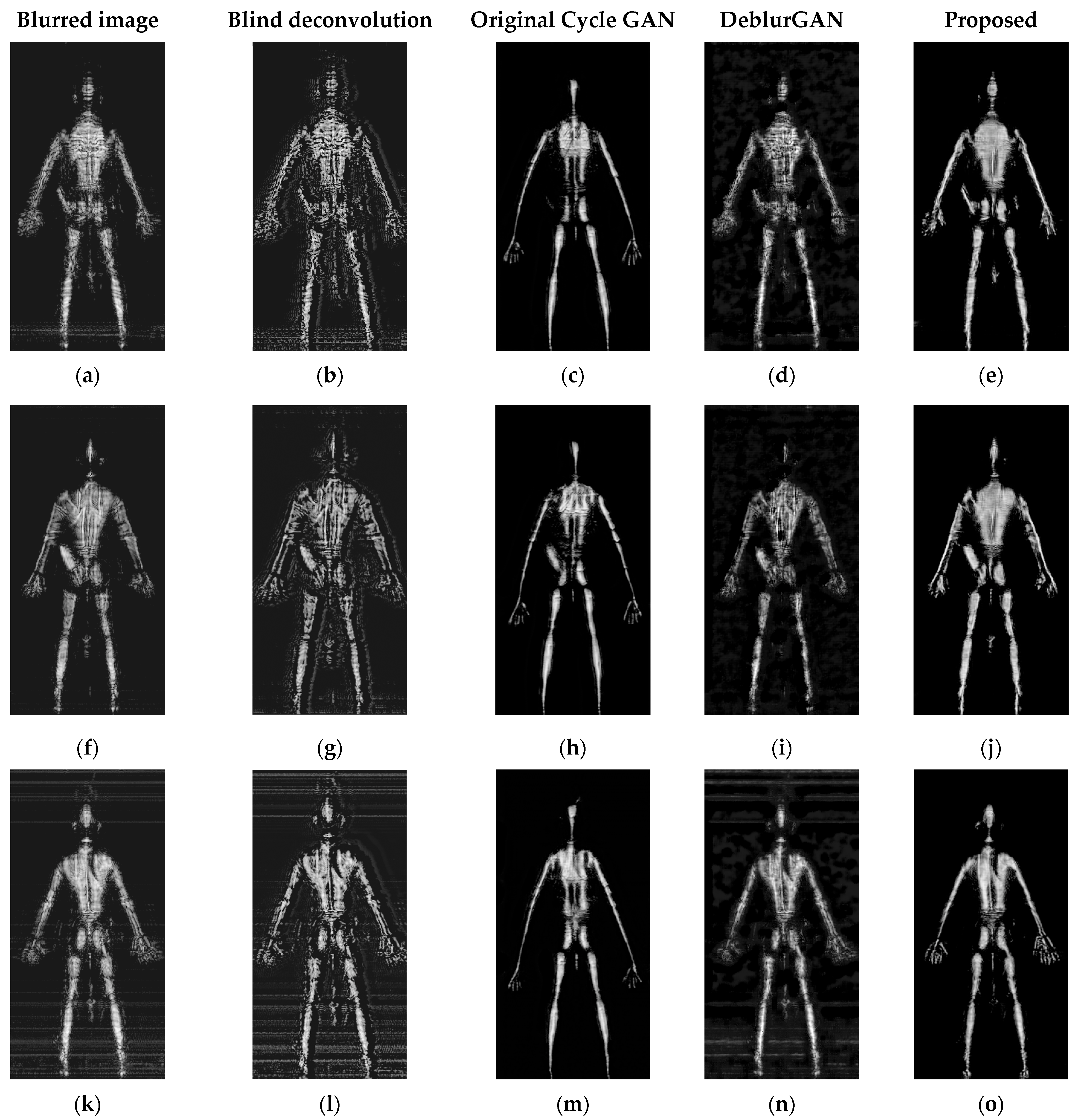

| Objects | Blurred Image | Blind Deconvolution | Original Cycle GAN | DeblurGAN | Proposed |
|---|---|---|---|---|---|
| Wrench (a–e) | 0.7633 | 0.9805 | 0.6090 | 0.9943 | 0.6704 |
| None (f–j) | 0.9675 | 0.9824 | 0.6150 | 0.9972 | 0.6374 |
| Knife (k–o) | 0.6914 | 0.9627 | 0.6145 | 0.9801 | 0.6623 |

| Module | Computations (FLOPs) |
|---|---|
| Ⅰ | 27,099,402,788 |
| Ⅱ | 87,257,097,216 |
| Ⅲ | 5,140,119,552 |
| Ⅳ | 423,620,060 |
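As a quick arithmetic check on the per-module counts, the short script below sums them and converts the result to tera-FLOPs. It assumes that modules Ⅰ to Ⅳ together make up the proposed network and that "T" in the methods table means 10^12 FLOPs. Neither assumption is stated in the tables themselves.

```python
# Per-module FLOP counts copied from the module table (I-IV stand for Ⅰ-Ⅳ).
module_flops = {
    "I": 27_099_402_788,
    "II": 87_257_097_216,
    "III": 5_140_119_552,
    "IV": 423_620_060,
}

total_flops = sum(module_flops.values())
total_tflops = total_flops / 1e12  # assumes 1 T = 10**12 FLOPs

# Fraction of the total contributed by each module.
shares = {name: count / total_flops for name, count in module_flops.items()}
dominant = max(shares, key=shares.get)

print(f"total: {total_flops:,} FLOPs = {total_tflops:.2f} T")
for name, share in shares.items():
    print(f"module {name}: {share:.1%}")
print(f"dominant module: {dominant}")
```

Under these assumptions the sum is 119,920,239,616 FLOPs, about 0.12 T, which is consistent with the value reported for the proposed method. Module Ⅱ accounts for most of the computation.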

| Methods | Computations (FLOPs) |
|---|---|
| Blind deconvolution | 0.006 T |
| Original Cycle GAN | 0.13 T |
| DeblurGAN | 0.13 T |
| Proposed | 0.12 T |

| | Blind Deconvolution | Original Cycle GAN | DeblurGAN | Proposed |
|---|---|---|---|---|
| Time | 2.367 s | 0.338 s | 0.322 s | 0.299 s |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, H.; Wang, S.; Jing, H.; Li, S.; Zhao, G.; Sun, H. Millimeter-Wave Image Deblurring via Cycle-Consistent Adversarial Network. Electronics 2023, 12, 741. https://doi.org/10.3390/electronics12030741