Stacked Siamese Generative Adversarial Nets: A Novel Way to Enlarge Image Dataset

Abstract

1. Introduction

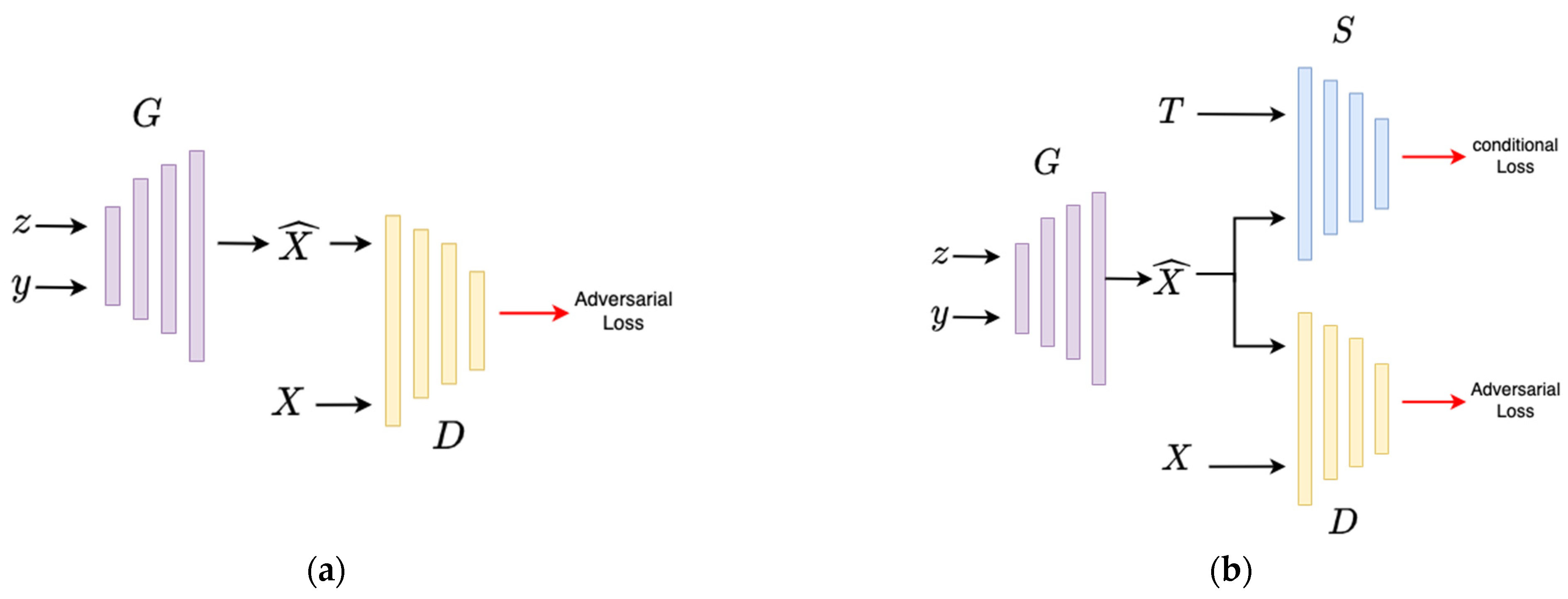

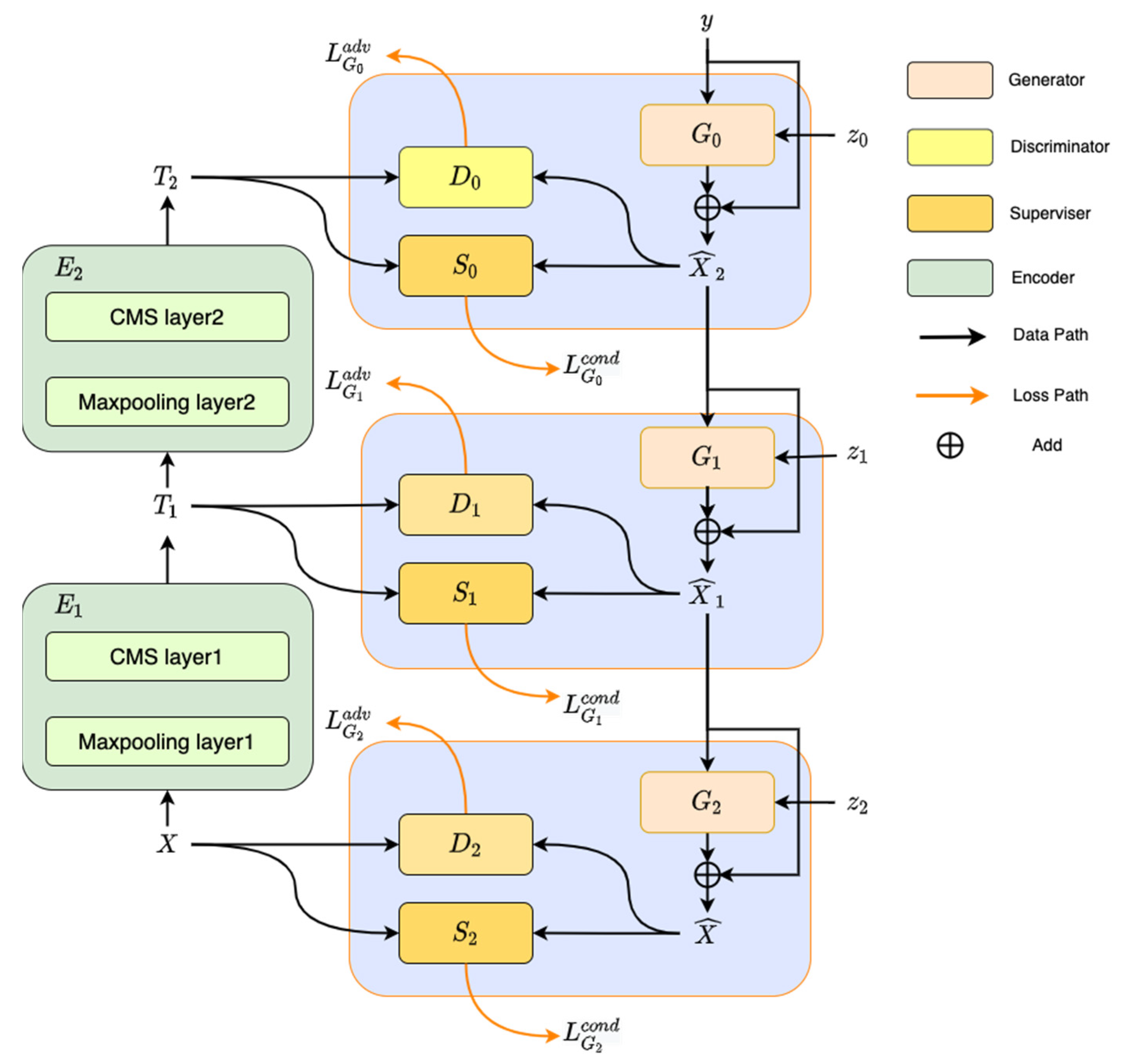

- The Siamese GAN (SGAN) imposes an additional constraint on the generator by adding an auxiliary loss to its objective. As a result, the generator becomes a conditional model that can be effectively guided by the conditional information.
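The idea of guiding a generator with an auxiliary loss can be illustrated with a minimal sketch: the generator's objective becomes an adversarial term plus a weighted conditional term. The exact loss forms are not given in this section, so the following choices are assumptions made for illustration only: a non-saturating adversarial term, a mean-squared-error conditional term between a feature extracted from the generated sample and its conditioning feature, and a hypothetical weight `lam`. The function names are likewise hypothetical.

```python
import math
from typing import Sequence


def adversarial_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: -mean(log D(G(z)))."""
    eps = 1e-12  # avoids log(0) when the discriminator output is exactly 0
    return -sum(math.log(max(p, eps)) for p in d_fake) / len(d_fake)


def conditional_loss(gen_feat: Sequence[float], cond_feat: Sequence[float]) -> float:
    """Assumed auxiliary loss: mean squared error between the feature extracted
    from a generated sample and the conditioning feature it should match."""
    if len(gen_feat) != len(cond_feat):
        raise ValueError("feature vectors must have the same length")
    return sum((g - c) ** 2 for g, c in zip(gen_feat, cond_feat)) / len(cond_feat)


def generator_loss(d_fake: Sequence[float], gen_feat: Sequence[float],
                   cond_feat: Sequence[float], lam: float = 1.0) -> float:
    """Hypothetical SGAN-style generator objective: adversarial + lam * conditional."""
    return adversarial_loss(d_fake) + lam * conditional_loss(gen_feat, cond_feat)


if __name__ == "__main__":
    # A fully fooled discriminator (D = 1) contributes no adversarial loss,
    # so only the weighted feature mismatch remains.
    print(generator_loss([1.0, 1.0], [0.0, 2.0], [0.0, 0.0], lam=0.5))  # prints 1.0
```

Because the conditional term is added to the generator's loss, minimizing it pushes generated samples toward the supplied condition while the adversarial term still pushes them toward the data distribution; `lam` trades the two off.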

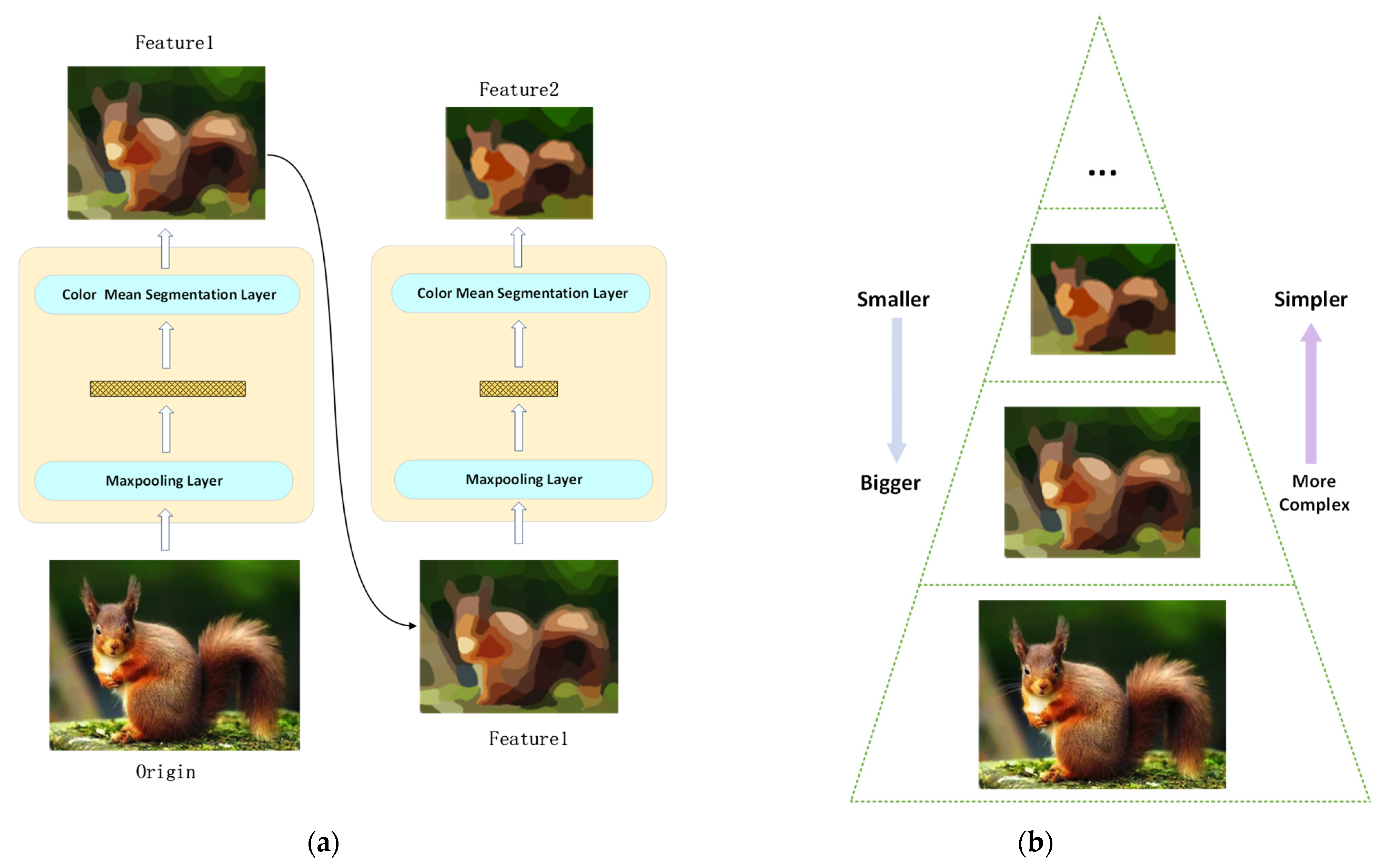

- We propose a feature extraction method based on a clustering algorithm, named Color Mean Segmentation (CMS), which reduces the complexity of image data while preserving its main structure and key characteristics. We use CMS to construct the CMS-Encoder, which extracts abstract features from images. Compared with convolutional neural network (CNN) methods, the CMS-Encoder has three advantages: (1) since CMS uses only the information in the input image, it does not need to be trained; (2) the features extracted by the CMS-Encoder are visually highly interpretable; and (3) the abstraction level of the output can be controlled simply by adjusting the number of superpixels.

- We propose a new training method for generating samples. Several SGANs are stacked together to build a Stacked SGAN (SSGAN), which learns the distribution of the original dataset by learning features progressively.
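The "mean" half of Color Mean Segmentation, which turns a segmentation into a superpixel-level image, can be sketched in pure Python. This sketch assumes the image is a nested list of RGB tuples and that a label map (one superpixel index per pixel) has already been produced by the segmentation step; the function name `color_mean` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

RGB = Tuple[float, float, float]


def color_mean(image: List[List[RGB]], labels: List[List[int]]) -> List[List[RGB]]:
    """Replace every pixel with the mean RGB color of its superpixel."""
    sums: Dict[int, List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0])
    counts: Dict[int, int] = defaultdict(int)
    # First pass: accumulate per-superpixel color sums and pixel counts.
    for px_row, lb_row in zip(image, labels):
        for (r, g, b), k in zip(px_row, lb_row):
            acc = sums[k]
            acc[0] += r
            acc[1] += g
            acc[2] += b
            counts[k] += 1
    means = {k: (s[0] / counts[k], s[1] / counts[k], s[2] / counts[k])
             for k, s in sums.items()}
    # Second pass: paint each pixel with the mean color of its superpixel.
    return [[means[k] for k in lb_row] for lb_row in labels]


if __name__ == "__main__":
    img = [[(0, 0, 0), (2, 4, 6)],
           [(10, 10, 10), (20, 20, 20)]]
    lab = [[0, 0],
           [1, 1]]
    print(color_mean(img, lab))
    # [[(1.0, 2.0, 3.0), (1.0, 2.0, 3.0)], [(15.0, 15.0, 15.0), (15.0, 15.0, 15.0)]]
```

The output has at most as many distinct colors as there are superpixels, which is how this step reduces complexity while keeping the layout of the regions intact.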

2. Related Work

2.1. Generative Adversarial Networks

2.2. Simple Linear Iterative Clustering

2.3. Siamese Network

3. Stacked Siamese Generative Adversarial Network

3.1. Siamese GAN

3.2. CMS-Encoder

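| Algorithm 1 Color Mean Segmentation |
|---|
| /* Segmentation */ |
| Initialize cluster centers $C_k$ on a regular grid; set $l(i) = -1$ and $d(i) = \infty$ for each pixel $i$. |
| repeat |
| for each cluster center $C_k$ do |
| for each pixel $i$ in the local search region around $C_k$ do |
| Compute the distance $D$ between $C_k$ and pixel $i$. |
| if $D < d(i)$ then |
| Set $d(i) = D$ and $l(i) = k$. |
| end if |
| end for |
| end for |
| Compute new cluster centers. |
| Compute residual error $E$. |
| until $E \le$ threshold |
| /* Mean */ |
| for each superpixel $k$ do |
| for each pixel $i$ with $l(i) = k$ do |
| Set the color of pixel $i$ to the mean color of superpixel $k$. |
| end for |
| end for |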
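The segmentation half of Algorithm 1 follows the SLIC loop of assignment, center update, and residual check. The following is a simplified pure-Python sketch under our own assumptions: it clusters a grayscale image on intensity and position instead of the CIELAB color used by SLIC, uses the SLIC-style combined distance with a compactness weight `m`, and stops when the total center movement (the residual error $E$) falls below `tol`. The function name `slic_gray` and the parameters `m`, `max_iter`, and `tol` are hypothetical.

```python
import math
from typing import List


def slic_gray(img: List[List[float]], k: int, m: float = 10.0,
              max_iter: int = 10, tol: float = 1e-3) -> List[List[int]]:
    """Simplified SLIC on a grayscale image; returns one cluster label per pixel."""
    h, w = len(img), len(img[0])
    s = max(1, int(math.sqrt(h * w / k)))  # grid interval S = sqrt(N / K)
    y0, x0 = min(s // 2, h - 1), min(s // 2, w - 1)
    # Initialization: centers [intensity, y, x] sampled on a regular grid.
    centers = [[float(img[y][x]), float(y), float(x)]
               for y in range(y0, h, s) for x in range(x0, w, s)]
    labels = [[-1] * w for _ in range(h)]
    for _ in range(max_iter):
        dist = [[math.inf] * w for _ in range(h)]
        # Assignment: each center searches only a local window of about 2S x 2S;
        # a pixel outside every window keeps its label from the previous pass.
        for idx, (c, cy, cx) in enumerate(centers):
            for y in range(max(0, int(cy) - s), min(h, int(cy) + s + 1)):
                for x in range(max(0, int(cx) - s), min(w, int(cx) + s + 1)):
                    dc = img[y][x] - c                    # intensity distance
                    ds = math.hypot(y - cy, x - cx)       # spatial distance
                    d = math.sqrt(dc * dc + (ds / s) ** 2 * m * m)
                    if d < dist[y][x]:
                        dist[y][x] = d
                        labels[y][x] = idx
        # Update: move each center to the mean intensity and position of its pixels.
        acc = [[0.0, 0.0, 0.0, 0] for _ in centers]
        for y in range(h):
            for x in range(w):
                a = acc[labels[y][x]]
                a[0] += img[y][x]
                a[1] += y
                a[2] += x
                a[3] += 1
        residual = 0.0  # residual error E: total movement of the centers
        for idx, (sc, sy, sx, n) in enumerate(acc):
            if n == 0:
                continue  # an empty cluster keeps its previous center
            new = [sc / n, sy / n, sx / n]
            residual += abs(new[1] - centers[idx][1]) + abs(new[2] - centers[idx][2])
            centers[idx] = new
        if residual <= tol:
            break
    return labels


if __name__ == "__main__":
    # Toy image: dark left half, bright right half.
    toy = [[0, 0, 100, 100] for _ in range(4)]
    for row in slic_gray(toy, k=4):
        print(row)
```

The returned label map is the $l(i)$ that the /* Mean */ half of Algorithm 1 consumes: averaging the colors inside each label yields the superpixel-level feature. A larger `m` favors compact, grid-like superpixels; a smaller one lets them follow intensity edges more closely.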

- (1) No training required. The CMS-Encoder removes the dependence on a training process and a training dataset, both of which are critical for a CNN model. Since the CMS algorithm is based on a clustering algorithm, all of its computations rely only on the input image itself. Therefore, it needs no careful training design, and there is no need to worry about a dataset for it to learn from.

- (2) Highly interpretable. The CNN is well known for its ability to extract image features, but it is also known as a black-box model: when a CNN is used as an end-to-end model, its poor interpretability makes it hard to understand what happens inside it. In contrast, the features produced by our CMS-Encoder can be visualized, and what they represent can be readily observed from a human perspective.

- (3) Controllable. The CMS layer, the core of the CMS-Encoder, is based on the SLIC algorithm, so the number of superpixels to be segmented can be controlled. As mentioned above, the output feature can be seen as a superpixel-level image, and the number of superpixels directly determines its degree of abstraction: fewer superpixels yield a coarser, more abstract feature. Hence, the abstraction level of the feature can be controlled simply by changing the number of superpixels.
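Because the CMS layer builds on SLIC, the effect of the superpixel count can be made concrete with SLIC's grid interval: for an image with $N$ pixels and $K$ requested superpixels, the initial cluster centers are spaced $S = \sqrt{N/K}$ apart, so each superpixel covers roughly $S^2 = N/K$ pixels. The 28 × 28 image size below is only an illustrative choice.

```latex
\begin{aligned}
S &= \sqrt{N / K}, \qquad N = 28 \times 28 = 784,\\
K = 49:\quad S &= \sqrt{784 / 49} = \sqrt{16} = 4 \;\Rightarrow\; S^2 = 16 \text{ pixels per superpixel},\\
K = 16:\quad S &= \sqrt{784 / 16} = \sqrt{49} = 7 \;\Rightarrow\; S^2 = 49 \text{ pixels per superpixel}.
\end{aligned}
```

Reducing $K$ from 49 to 16 roughly triples the area each superpixel averages over, so the resulting feature keeps only coarser structure and is therefore more abstract.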

3.3. Stacked Siamese GAN

4. Result Analysis

4.1. Introduction to the Dataset

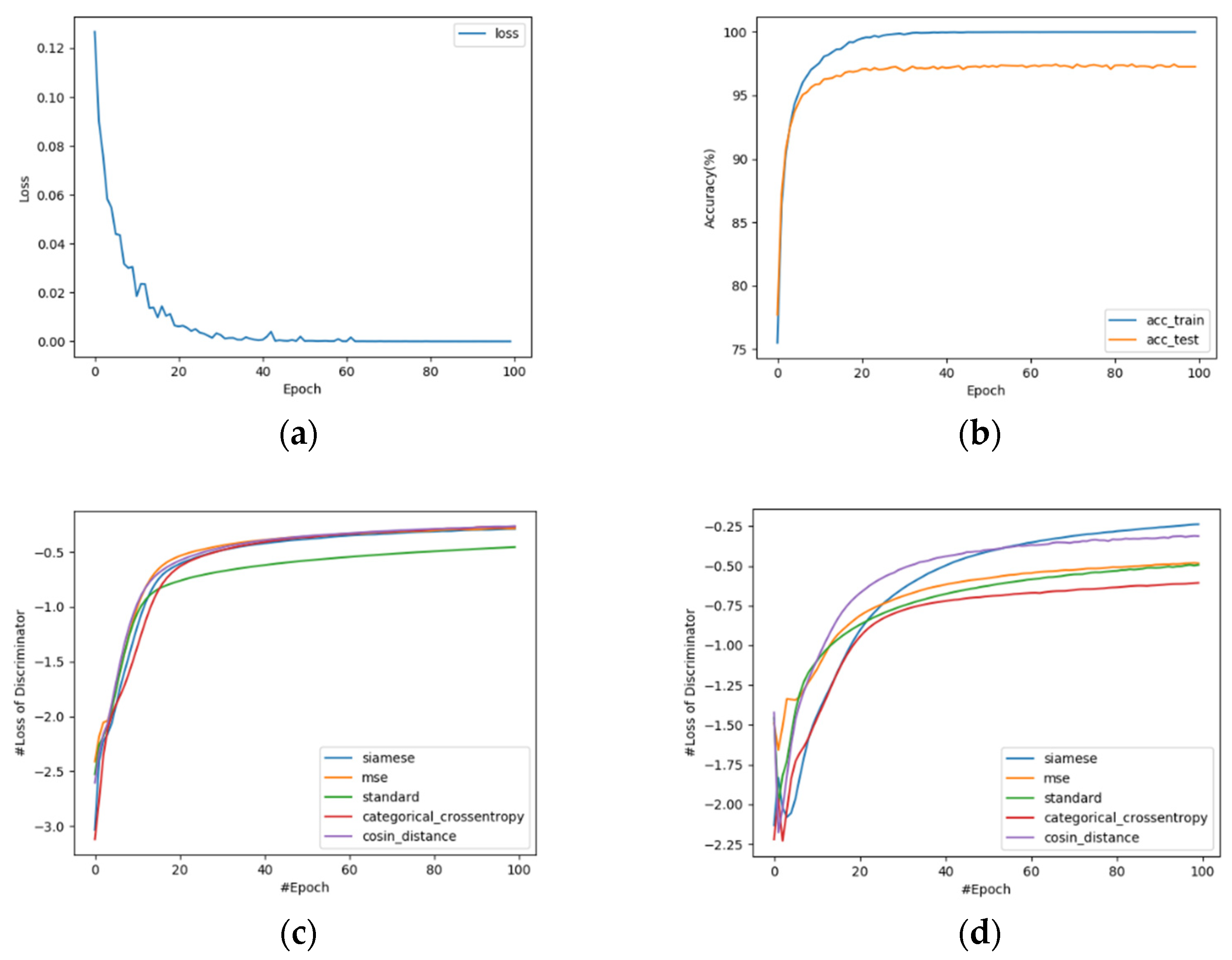

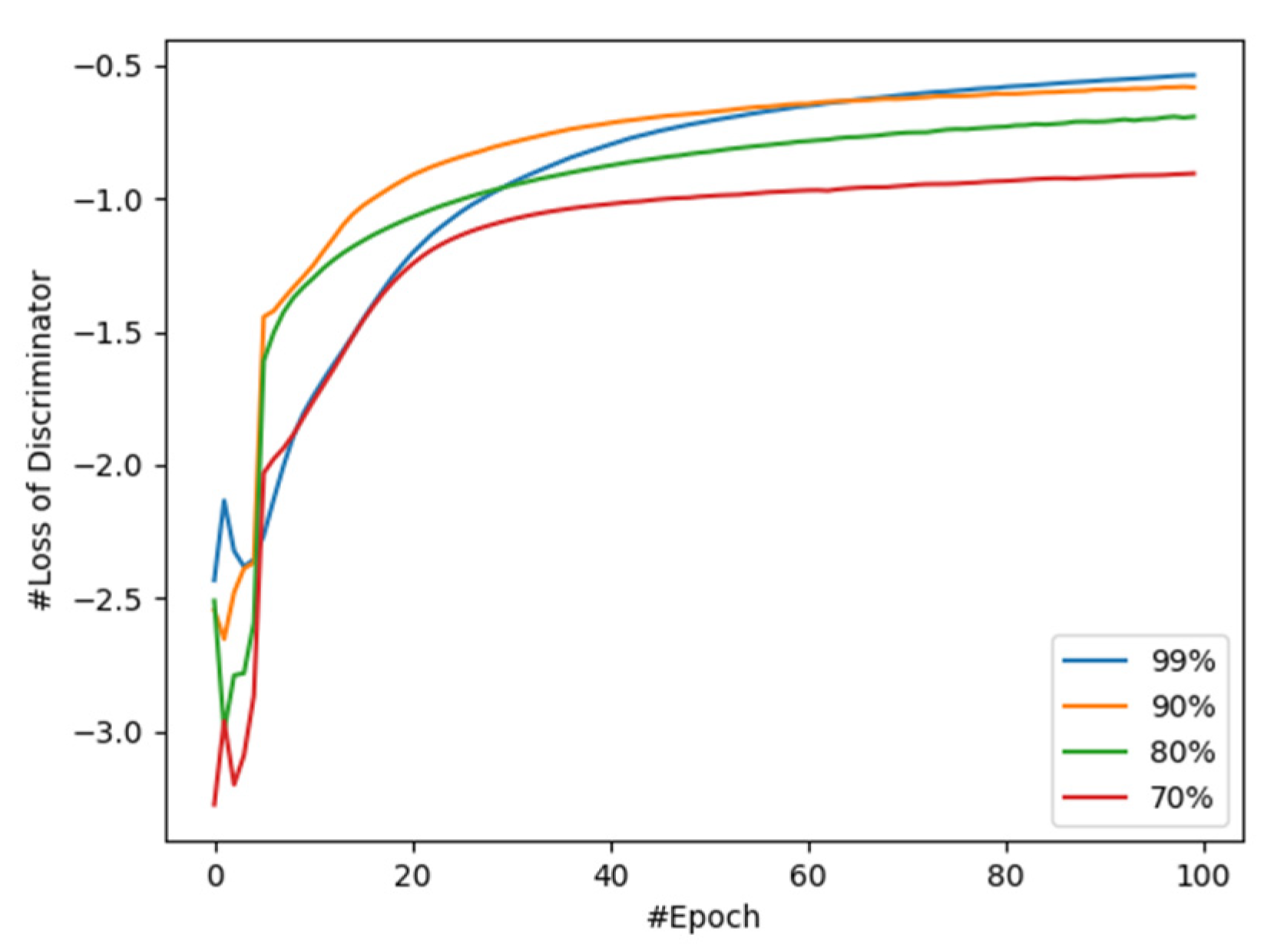

4.2. Guiding Effect of Siamese GAN

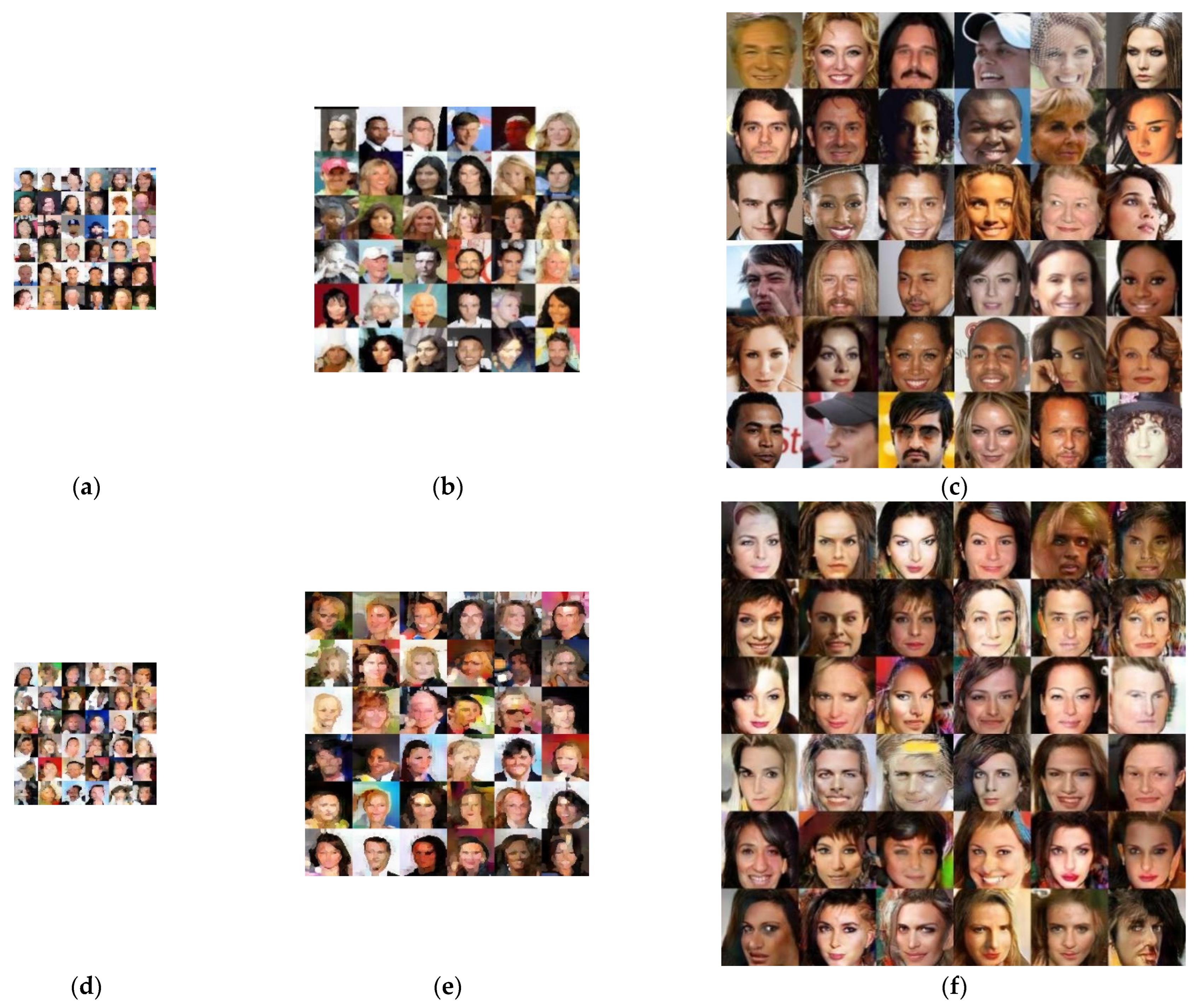

4.3. Generated Samples with Stacked Siamese GAN

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Symbol | Meaning |
|---|---|
| | Generator |
| | Discriminator |
| | Encoder |
| | Supervisor |
| | Training sample |
| | Generated sample |
| | Label |
| | Extracted feature |
| | Generated feature |
| | Adversarial loss |
| | Conditional loss |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, S.; Han, R.; Yared, R. Stacked Siamese Generative Adversarial Nets: A Novel Way to Enlarge Image Dataset. Electronics 2023, 12, 654. https://doi.org/10.3390/electronics12030654

Liu S, Han R, Yared R. Stacked Siamese Generative Adversarial Nets: A Novel Way to Enlarge Image Dataset. Electronics. 2023; 12(3):654. https://doi.org/10.3390/electronics12030654

Chicago/Turabian Style: Liu, Shanlin, Ren Han, and Rami Yared. 2023. "Stacked Siamese Generative Adversarial Nets: A Novel Way to Enlarge Image Dataset" Electronics 12, no. 3: 654. https://doi.org/10.3390/electronics12030654

APA Style: Liu, S., Han, R., & Yared, R. (2023). Stacked Siamese Generative Adversarial Nets: A Novel Way to Enlarge Image Dataset. Electronics, 12(3), 654. https://doi.org/10.3390/electronics12030654