Multi-Vehicle Navigation Using Cooperative Localization

Abstract

1. Introduction

1.1. Motivation

1.2. Related Works

1.3. Main Contributions and Novelties

- This work represents the first application of cooperative localization in nonlinear closed-loop control of networks of 2D and 3D vehicles.

- This work represents the first experimental validation of cooperative localization in closed-loop control of 2D and 3D vehicle networks.

- This work provides new guidance for optimal utilization of cooperative localization in state estimation for closed-loop control.

2. Single-Vehicle Model

2.1. 3D Vehicle Pose

2.2. 3D Vehicle Velocity

2.3. 3D Vehicle State Equations

2.4. Ground Vehicle Model

3. Nonlinear Control Design

3.1. Roll and Pitch Moments

3.2. Thrust Force

3.3. Yaw Control

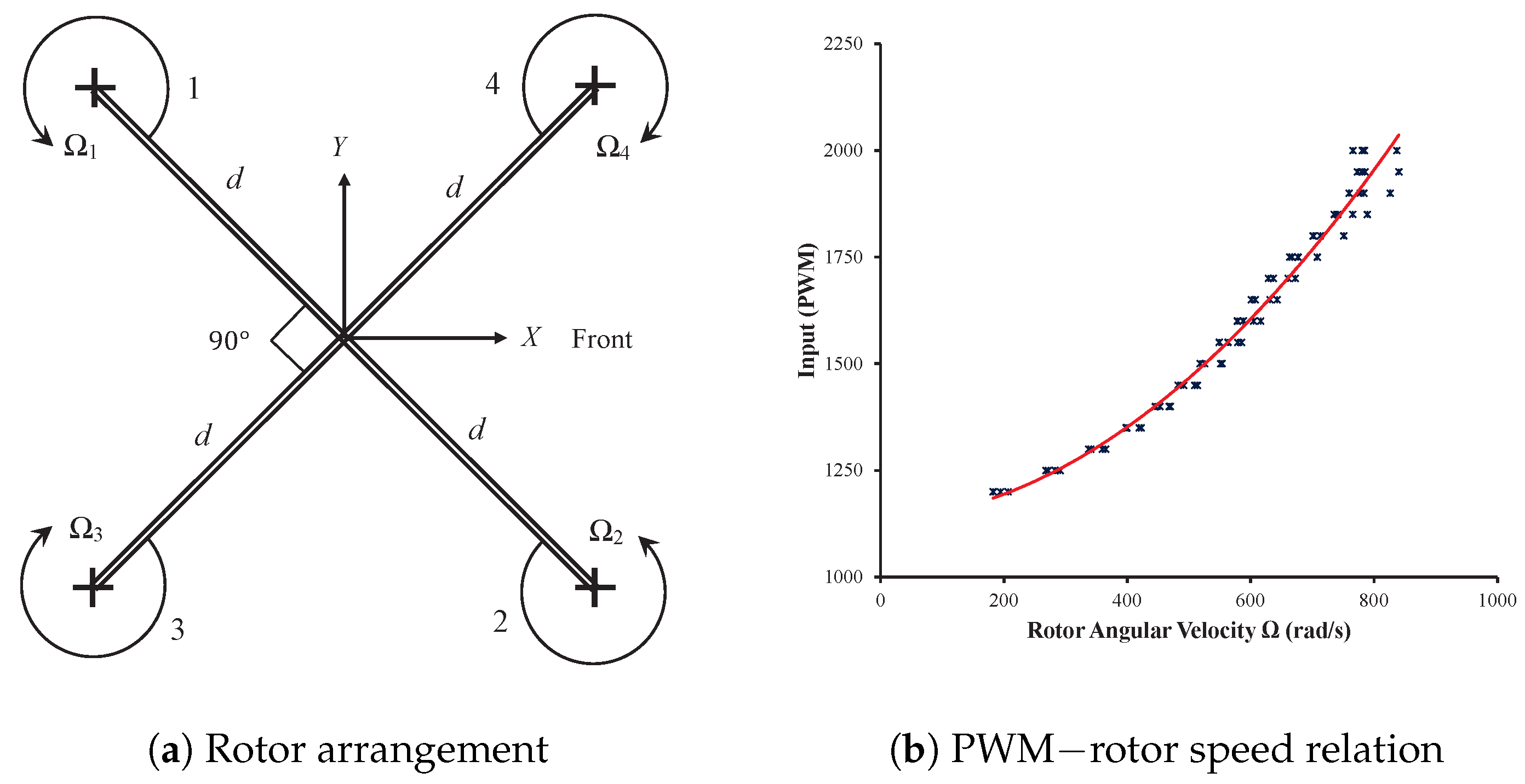

3.4. Relation between Control and Rotor Inputs

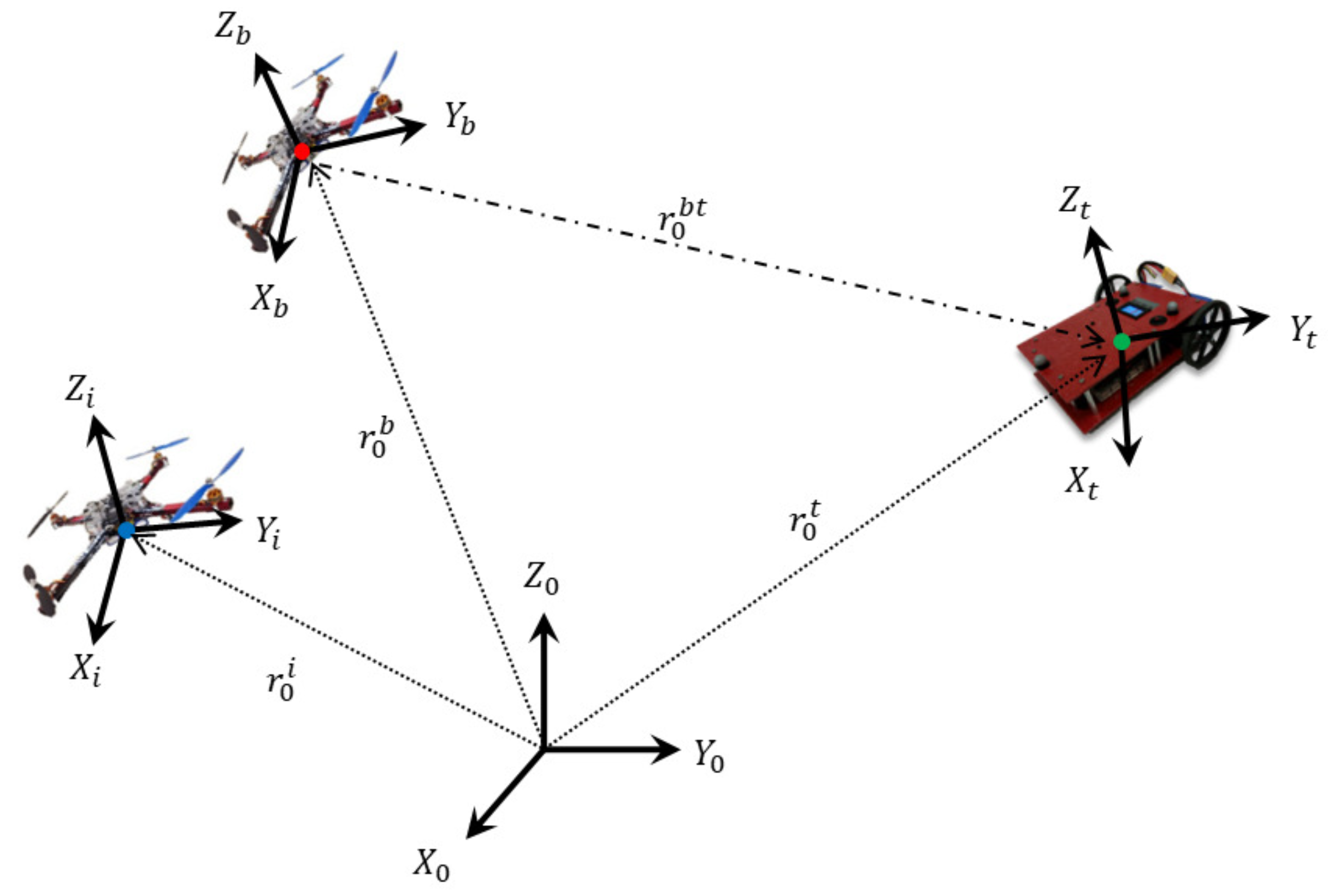

4. Multi-Vehicle System Model

5. Cooperative Localization

6. Results

6.1. Experimental Setup

6.2. Validation Results

6.2.1. Results with Distance-Based Measurements

6.2.2. Double-Caravan Measurement Formation

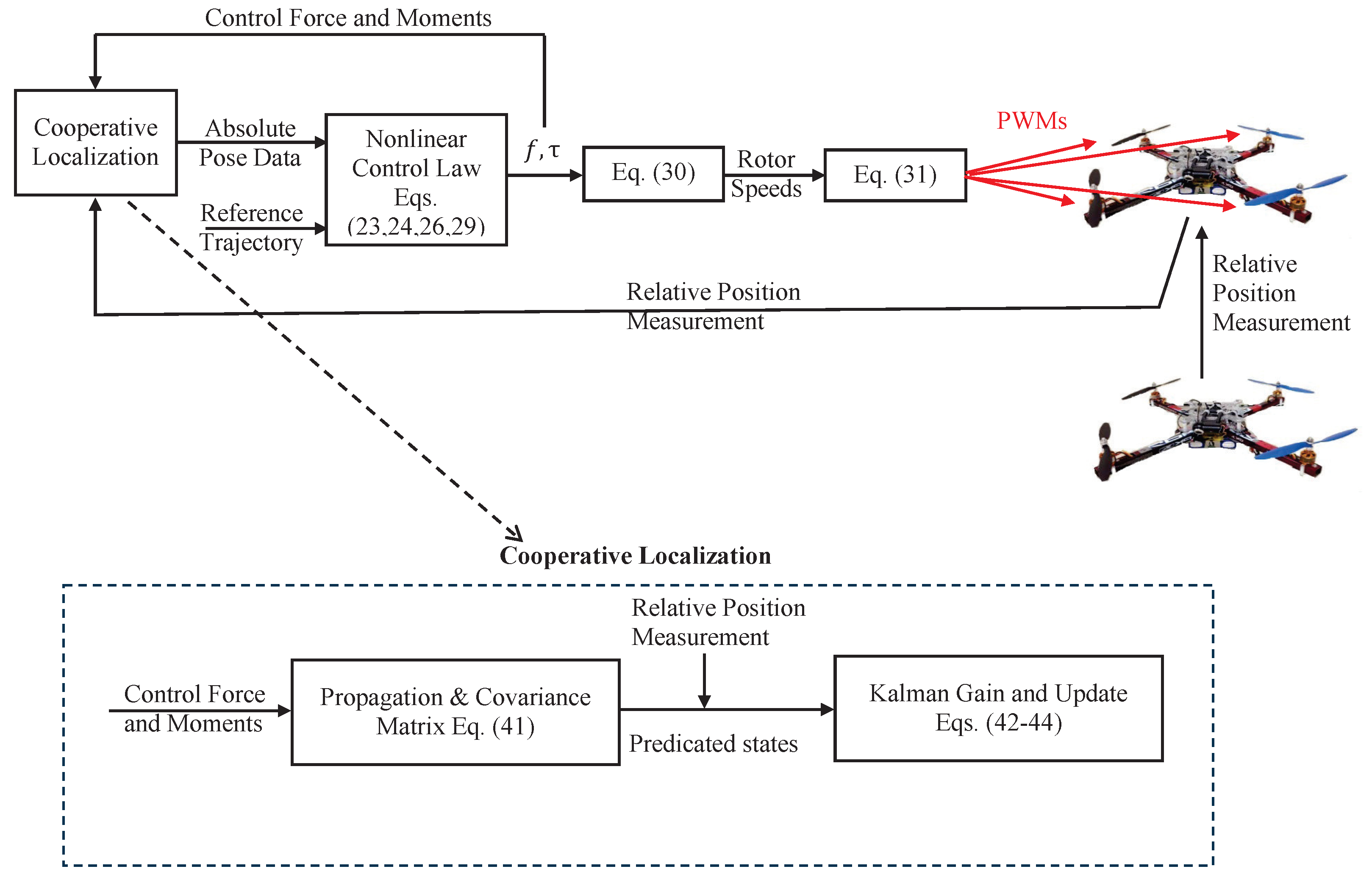

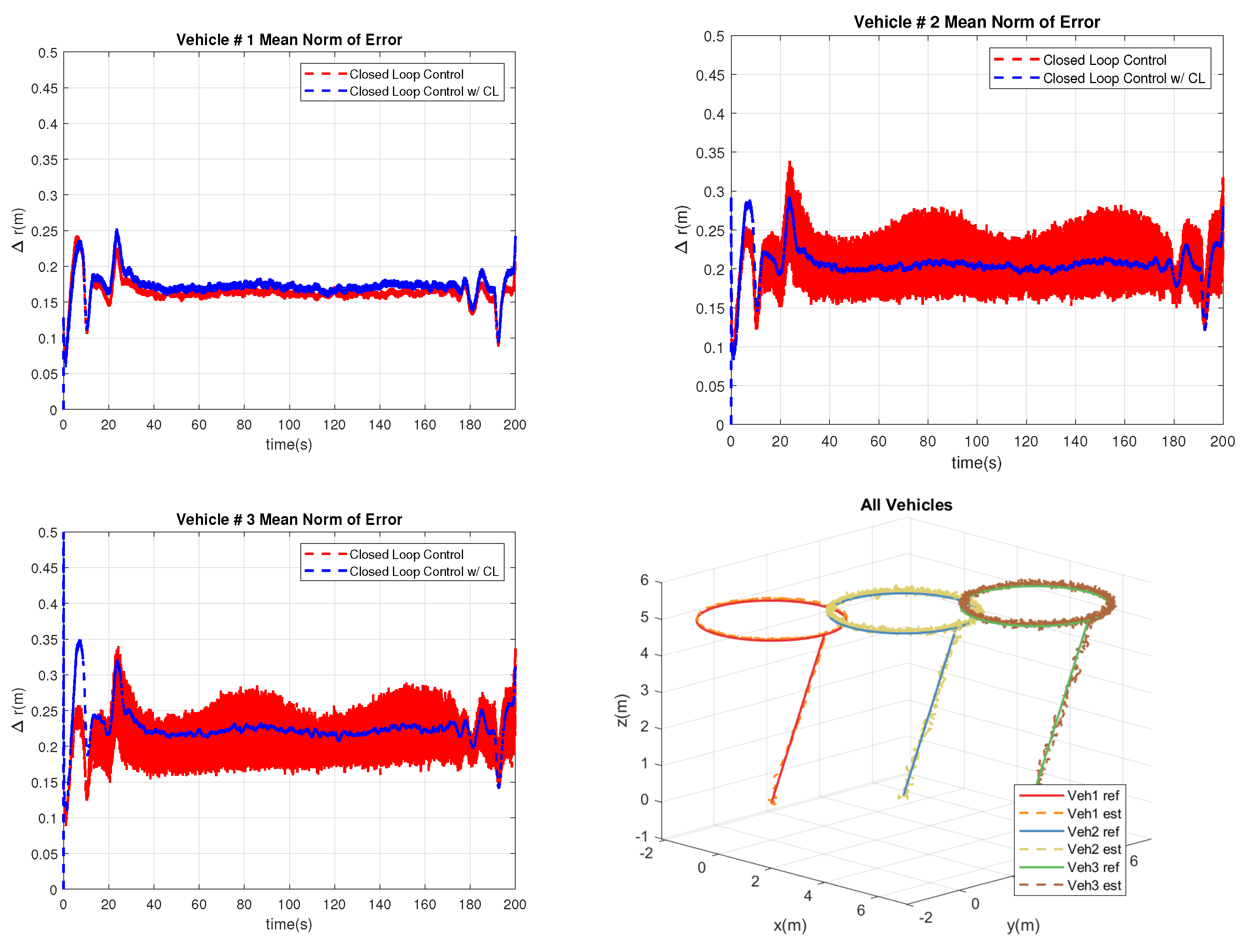

6.3. Closed-Loop Control Results

6.3.1. Control of Three-Vehicle Network

6.3.2. Control of Double-Caravan Formation

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CL | Cooperative Localization |
| 2D | Two-Dimensional |
| 3D | Three-Dimensional |
| EKF | Extended Kalman Filter |
| UKF | Unscented Kalman Filter |
| GPS | Global Positioning System |
| SLAM | Simultaneous Localization and Mapping |

| Case | Simultaneous Measurements |
|---|---|
| Case 1 | 1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8, 1 → 5 |
| Case 2 | 1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8, 1 → 5, 4 → 4 (continuous) |
| Case 3 | 1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8, 1 → 5, 4 → 4 (intermittent) |
| Case 4 | 1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8, 4 → 8 |
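The measurement sets listed for each case can be read as edges of a graph over the vehicles. The following is a minimal sketch, not the authors' implementation, that parses such a list and groups vehicles into connected sets. It assumes that each entry `i → j` denotes a relative measurement between vehicles `i` and `j`, that an entry such as `4 → 4` is a measurement involving only vehicle 4 (so it links no pair of vehicles), and that the network has eight vehicles, as the indices suggest. The function names `parse_measurements` and `connected_groups` are hypothetical.

```python
from collections import defaultdict


def parse_measurements(spec: str) -> list[tuple[int, int]]:
    """Parse a string such as '1 → 2, 4 → 4 (continuous)' into index pairs."""
    edges = []
    for item in spec.split(","):
        item = item.split("(")[0]  # drop annotations such as "(continuous)"
        src, dst = item.split("→")
        edges.append((int(src), int(dst)))
    return edges


def connected_groups(edges: list[tuple[int, int]], n_vehicles: int) -> list[list[int]]:
    """Group vehicles 1..n_vehicles that are linked by measurements.

    Direction is ignored (assumption): a measurement between i and j is
    treated as coupling both vehicles. Self-entries (i == j) link nothing.
    """
    neighbors = defaultdict(set)
    for i, j in edges:
        if i != j:
            neighbors[i].add(j)
            neighbors[j].add(i)

    unvisited = set(range(1, n_vehicles + 1))
    groups = []
    while unvisited:
        stack = [min(unvisited)]
        group = set()
        while stack:  # depth-first traversal of one connected group
            v = stack.pop()
            if v in group:
                continue
            group.add(v)
            stack.extend(neighbors[v] - group)
        unvisited -= group
        groups.append(sorted(group))
    return groups


# Case 1 from the table, and the same set without the 1 → 5 cross-link.
CASE_1 = "1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8, 1 → 5"
NO_CROSS_LINK = "1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8"

case_1_groups = connected_groups(parse_measurements(CASE_1), 8)
split_groups = connected_groups(parse_measurements(NO_CROSS_LINK), 8)
```

Under these assumptions, `case_1_groups` contains a single group with all eight vehicles, while `split_groups` contains the two chains 1 to 4 and 5 to 8. In this reading, the single cross-link (1 → 5 or 4 → 8) is what joins the two caravans into one measurement network.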

| Case | Simultaneous Measurements |
|---|---|
| Case 1 | 1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8, 8 → 4, 4 → 4 (continuous) |
| Case 2 | 1 → 2, 2 → 3, 3 → 4, 5 → 6, 6 → 7, 7 → 8, 8 → 4, 4 → 4 (intermittent) |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oliveros, J.C.; Ashrafiuon, H. Multi-Vehicle Navigation Using Cooperative Localization. Electronics 2023, 12, 4945. https://doi.org/10.3390/electronics12244945