Abstract

Quality assurance (QA) plays a crucial role in manufacturing by ensuring that products meet their specifications. However, manual QA processes are costly and time-consuming, which makes artificial intelligence (AI) an attractive solution for automation and expert support. In particular, convolutional neural networks (CNNs) have attracted considerable interest in visual inspection. In addition to AI methods, explainable artificial intelligence (XAI) systems, which achieve transparency and interpretability by providing insights into the decision-making process of the AI, are promising methods for performing quality inspections in manufacturing processes. In this study, we conducted a systematic literature review (SLR) to explore AI and XAI approaches for visual QA (VQA) in manufacturing. Our objective was to assess the current state of the art and identify research gaps in this context. Our findings revealed that AI-based systems predominantly focused on visual quality control (VQC) for defect detection. Research addressing other VQA practices, such as process optimization, predictive maintenance, or root cause analysis, is rarer. Papers that utilize XAI methods are the least represented. In conclusion, this survey emphasizes the importance and potential of AI and XAI in VQA across various industries. By integrating XAI, organizations can enhance model transparency, interpretability, and trust in AI systems. Overall, leveraging AI and XAI improves VQA practices and decision-making in industries.

1. Introduction

In the present globalized economic era, competition in the business world requires the management teams of organizations and businesses to continually pursue quality in order to outpace each other [1]. Monitoring and assessing the manufacturing process is important for manufacturers to detect potential failures that may lead to the degradation of the manufacturing machinery or to downgraded products, and thus to assure constant product quality [2]. To ensure that a product is defect-free before leaving the factory, some form of quality assurance (QA) practice is necessary. Quality control (QC) is a subset of QA and is mostly used to assure product quality. Artificial intelligence (AI) can help manufacturers perform QA more accurately and cost-effectively by automating QC [3]. Moreover, as the manufacturing industry seeks innovative QA approaches, paradigms such as Zero Defect Manufacturing (ZDM) are gaining traction. ZDM represents a transformative approach aimed at eliminating defects throughout the production process, thereby aligning with the industry’s broader pursuit of superior quality and efficiency [4]. AI applications have made progress in the automatic recognition of patterns in data by using machine learning (ML) and deep learning (DL) methods. These AI-based systems not only streamline QC processes but also have the potential to integrate seamlessly with evolving QA practices aimed at achieving the core strategies of ZDM. Such integration enables manufacturers to perform QA more effectively by harnessing AI’s capabilities for precise defect detection, process optimization, predictive maintenance, and root cause analysis, all while reducing costs and enhancing overall product quality.
Data-driven decision making may yield meaningful productivity gains in industrial sectors; however, for the stable deployment of AI-based systems and their acceptance by experts and regulators, decisions and results must be comprehensible, interpretable, and transparent; in other words, they must be “Explainable” [5].

In recent years, explainable artificial intelligence (XAI) has emerged as a promising solution to these challenges by providing transparency and interpretability to AI systems. The goal of XAI is to help researchers, developers, domain experts, and users better understand the inner workings of ML models while preserving their high performance and accuracy. XAI methods seek to reveal what the AI-based system learned during training and how decisions are made for specific or new instances during prediction [6,7]. This can help to understand why predictions went wrong or why an ML model achieves a specific result, and how to leverage the result further. The survey paper of Ahmed et al. [5] conducted a comparative study of AI and XAI in Industry 4.0 and discussed studies on using AI and XAI to assure quality. However, that study focuses mainly on QC, which is a subset of QA.

In this work, we systematically reviewed the state-of-the-art literature related to AI and XAI for visual quality assurance (VQA) in manufacturing to investigate the AI and XAI approaches and methods applied to different VQA practices and to explore to what extent XAI has already been adopted in this field. To the best of our knowledge, this is the first comprehensive survey of the application of AI and XAI methods to VQA in manufacturing that encompasses a wide spectrum of practices, including visual quality control (VQC), process optimization, predictive maintenance, and root cause analysis. Prior research has already examined the use of AI for QA [5] and even explored local explanations [8]; however, these studies have only encompassed QC or predictive maintenance. Our survey distinguishes itself in two crucial aspects. Firstly, it takes a broader perspective by spanning various industrial sectors. Unlike many existing surveys that are confined to a specific industry, our analysis aims to better understand the use of AI and XAI across industrial sectors, thereby providing a more comprehensive view of the landscape. Secondly, we focus on VQA practices, which are pivotal in industries where visual inspection plays a crucial role. While VQC is a common theme in existing surveys, we extended our scope to VQA as a whole. In this survey, we meticulously examined the existing literature on VQA, with a specific emphasis on AI and XAI techniques. Moreover, we provide a detailed analysis of the ratio of XAI to AI methods in this context, including how many studies applied XAI to their AI systems and a breakdown of the types of XAI approaches used, which sheds light on trends in this rapidly evolving field. Our goal is to identify research gaps and future research directions across diverse industrial domains, as well as to emphasize the benefits of AI and XAI.
In Section 2, we briefly introduce the types of ML and the performance metrics used to evaluate AI and XAI systems for VQA. In Section 3, we outline the difference between QA and QC, since these two terms are often used interchangeably, and define VQA. In Section 4, we describe the AI and XAI approaches that are used to meet VQA practices and explain how transparency, interpretability, and trust can be enhanced in AI systems applied to VQA. In Section 5, we define the research questions considered in this literature review and describe the search process. In Section 6, we present the results of the literature review, explain the role of AI and XAI for VQA, and answer the research questions from Section 5. In Section 7, we discuss the results and findings, identify the potential benefits, challenges, and limitations of AI and XAI for VQA in manufacturing, and outline the research gap. Finally, Section 8 gives a short summary of this paper.

2. Machine Learning

2.1. Types of Machine Learning

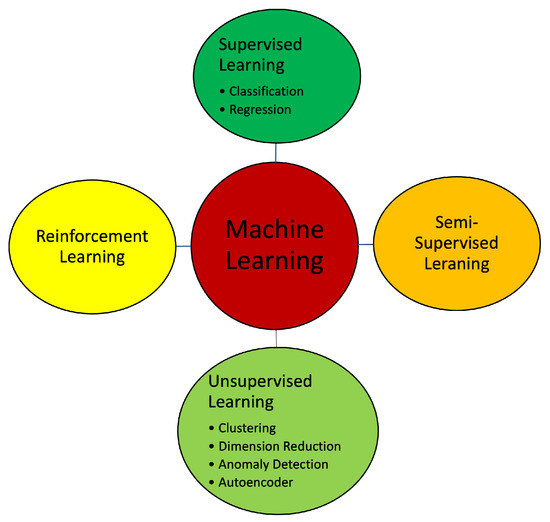

Machine learning (ML) is the study of learning algorithms. Learning refers to a situation in which the performance of a computer program on a specific task, as measured by a performance metric, improves with experience. A computer program that has been trained is called a model. Thus, ML models are computational algorithms and statistical techniques designed to enable computers to learn and make predictions or decisions without being explicitly programmed. These models are based on the principles of data-driven learning and pattern recognition. ML can be divided into four types, as illustrated in Figure 1: supervised learning, unsupervised learning, semisupervised learning, and reinforcement learning. These types are briefly explained below [9]:

Figure 1.

Types of machine learning.

- Supervised Learning:

In supervised learning, the model learns to make predictions or classifications through training on labeled data. The input data (features) are paired with the corresponding output labels:

- Classification: In this task, the algorithm learns to predict discrete class labels for input data, e.g., classifying a manufactured product into defective or nondefective.

- Regression: Here, the algorithm learns to predict continuous numerical values, for instance, the energy consumption of a production line.

- Unsupervised Learning:

Unsupervised learning is a machine learning approach where a model learns patterns, structures, or relationships in data without explicit guidance from labeled examples. It aims to discover inherent patterns or clusters in the data, thus enabling tasks such as clustering, dimensionality reduction, and anomaly detection. Unlike supervised learning, unsupervised learning does not have known target labels, thereby making it suitable for exploring and understanding unlabeled data:

- Clustering: The algorithm groups similar data points together based on patterns or similarities in the input data. For example, this includes grouping machines with similar performance characteristics.

- Dimensionality Reduction: In this task, the algorithm reduces the number of input features while retaining important information. It aids in visualizing and understanding high-dimensional data and can be used as a preprocessing step for other machine learning tasks.

- Anomaly Detection (AD): Here, the algorithm learns the normal patterns in the data and identifies any data points that deviate significantly from those patterns, which often indicate anomalies or outliers. This method can be used, for example, to monitor signals of the process machinery to identify wear through abnormal behavior in the signal.

- Autoencoder (AE): An AE is a type of neural network that learns to encode and decode data; it is typically used for unsupervised learning and dimensionality reduction. It consists of an encoder network that compresses the input data into a lower-dimensional representation (latent space) and a decoder network that reconstructs the original data from the latent space. The goal of an AE is to minimize the reconstruction error, thereby forcing the model to learn a compressed representation that captures the most important features of the input data [10].

- Semisupervised Learning:

This learning paradigm utilizes both labeled and unlabeled data during the training process. It leverages a small amount of labeled data along with a larger amount of unlabeled data to improve the performance of the model. It is useful when labeling data is expensive or time-consuming.

- Reinforcement Learning:

This type of learning involves an agent interacting with an environment. The agent learns to take actions in the environment to maximize a reward signal. Through a trial-and-error process, the agent learns to make optimal decisions in different states of the environment to achieve long-term goals. Reinforcement learning is mainly used to solve multistep decision-making problems such as video games, robotics, and visual navigation.
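To make the autoencoder-based anomaly detection described under unsupervised learning more concrete, the following minimal Python sketch scores samples by their reconstruction error. It is an illustration under stated assumptions, not a method taken from the reviewed papers: instead of a trained neural AE, it uses a linear autoencoder, whose optimal solution under squared error is the projection onto the leading principal components, so PCA via `numpy.linalg.svd` stands in for training. The synthetic data, the latent size `k=2`, and the 99th-percentile threshold are hypothetical choices.

```python
import numpy as np

def fit_linear_autoencoder(X_normal, k):
    """'Train' a linear AE on normal samples: the mean plus the top-k principal directions.

    For a linear encoder/decoder with squared reconstruction error, the optimal
    k-dimensional code spans the leading principal subspace, so SVD replaces training.
    """
    mean = X_normal.mean(axis=0)
    _, _, vt = np.linalg.svd(X_normal - mean, full_matrices=False)
    return mean, vt[:k]                       # rows of vt[:k] form the encoder basis

def reconstruction_error(X, mean, basis):
    """Per-sample mean squared error between inputs and their reconstructions."""
    z = (X - mean) @ basis.T                  # encode into the k-dim latent space
    X_hat = z @ basis + mean                  # decode back to the input space
    return np.mean((X - X_hat) ** 2, axis=1)

rng = np.random.default_rng(0)
# Hypothetical "defect-free" data: 10 features driven by 2 latent factors plus small noise.
mixing = rng.normal(size=(2, 10))
X_normal = rng.normal(size=(200, 2)) @ mixing + 0.01 * rng.normal(size=(200, 10))

mean, basis = fit_linear_autoencoder(X_normal, k=2)
# Hypothetical threshold: 99th percentile of the reconstruction error on normal data.
threshold = np.percentile(reconstruction_error(X_normal, mean, basis), 99)

x_new = rng.normal(size=(1, 2)) @ mixing              # follows the normal structure
x_anomalous = x_new + 3.0 * rng.normal(size=(1, 10))  # deviates from it
for name, x in [("normal", x_new), ("anomalous", x_anomalous)]:
    err = reconstruction_error(x, mean, basis)[0]
    print(name, "flagged:", err > threshold)
```

A nonlinear AE trained on defect-free images would replace `fit_linear_autoencoder`, while the scoring step, comparing the reconstruction error with a threshold calibrated on normal data, would stay the same.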

2.2. Performance Metrics for VQA

In the context of VQA in manufacturing, the selection and application of appropriate performance metrics are critical for assessing the effectiveness of AI and XAI systems. Our thorough review of 143 selected papers in this domain revealed several key performance metrics that are vital for evaluating the success and reliability of AI and XAI solutions.

2.2.1. Evaluation of Artificial Intelligence Models

In this section, we introduce the most important performance metrics used in the reviewed literature to assess AI models applied to VQA in manufacturing. Table 1 gives an overview of these metrics, together with their mathematical definitions and evaluation focuses.

Table 1.

Model performance metrics in AI for VQA.
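Table 1 itself is not reproduced in this text. As a hedged illustration, assuming it includes the standard confusion-matrix metrics for binary defect classification (accuracy, precision, recall, and F1-score), these can be computed as in the sketch below. Treating "defective" as the positive class is an assumption, and the inspection labels are made up.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN, TN for a binary task (positive = defective, by assumption)."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1-score; undefined ratios are reported as 0.0."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical inspection results: 1 = defective, 0 = nondefective.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

In this example, a model that labels every product as nondefective would still reach an accuracy of 0.7 while having a recall of 0, which illustrates why precision- and recall-based metrics matter when defects are rare.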

Moreover, as VQA often involves complex decisions based on variable thresholds, the area under the precision–recall curve (PR-AUC) is crucial for understanding the trade-offs between precision and recall at different decision boundaries. This metric is often used when class imbalance is a concern [15].
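PR-AUC is frequently computed as average precision (AP), the step-wise sum over decision thresholds of (R_n - R_(n-1)) * P_n, where P_n and R_n are the precision and recall at the n-th threshold. This is the convention of scikit-learn's `average_precision_score`; trapezoidal interpolation of the PR curve is an alternative that gives slightly different values. The dependency-free sketch below follows the step-wise definition; the labels and scores are hypothetical, and at least one positive sample is assumed.

```python
def pr_curve(y_true, scores):
    """Precision and recall at each distinct score threshold (positive if score >= t)."""
    n_pos = sum(y_true)
    if n_pos == 0:
        raise ValueError("PR curve is undefined without positive samples")
    ranked = sorted(zip(scores, y_true), key=lambda p: p[0], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Tied scores share one threshold, so consume them together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_pos)
    return precisions, recalls

def average_precision(y_true, scores):
    """Step-wise PR-AUC: sum over thresholds of (R_n - R_{n-1}) * P_n."""
    precisions, recalls = pr_curve(y_true, scores)
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

# Hypothetical defect labels (1 = defective) and model scores.
y_true = [1, 0, 1, 1, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.2]
print(round(average_precision(y_true, scores), 4))  # 0.8056
```

Here AP = 1/3 + 2/9 + 1/4 = 29/36, approximately 0.806; a ranking that places every defective sample above every nondefective one yields 1.0.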

Speed is a further important metric for VQA, because many manufacturing processes require real-time quality assessment to identify defects or deviations from desired product specifications as products move through the production line. Speed is essential to ensure that quality issues are detected as soon as they occur, thereby allowing for immediate corrective action and preventing the production of faulty goods.
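Speed requirements are usually checked by timing inference per sample. The sketch below measures per-image latency with `time.perf_counter` after a few warm-up calls and compares the slowest observed inference with a cycle-time budget. `dummy_infer`, the image list, and the 0.5 s budget are hypothetical placeholders for a real model and a real line requirement.

```python
import statistics
import time

def measure_latency(infer, samples, warmup=5):
    """Per-sample inference latency in seconds, excluding a few warm-up calls."""
    for s in samples[:warmup]:
        infer(s)                       # warm-up: caches, lazy initialization
    latencies = []
    for s in samples:
        start = time.perf_counter()
        infer(s)
        latencies.append(time.perf_counter() - start)
    return latencies

def meets_cycle_time(latencies, cycle_time_s):
    """True if even the slowest observed inference fits the (hypothetical) cycle time."""
    return max(latencies) <= cycle_time_s

def dummy_infer(image):
    """Hypothetical stand-in for a model's predict call on one image."""
    return sum(image) > 10

images = [[i % 7] * 100 for i in range(50)]
lat = measure_latency(dummy_infer, images)
print(f"median latency: {statistics.median(lat) * 1e3:.3f} ms")
print(f"throughput: {len(lat) / sum(lat):.0f} images/s")
print("meets 0.5 s cycle time:", meets_cycle_time(lat, cycle_time_s=0.5))
```

Checking the worst observed latency rather than only the mean is a deliberate choice here, since a single slow prediction can be enough to miss a part on a moving line.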

2.2.2. Evaluation of Explainable Artificial Intelligence Methods

In this section, we introduce the metrics used in the reviewed literature to assess XAI methods. Each XAI method should ideally satisfy the following desirable characteristics [16]:

- Identity: The principle of identity states that identical objects should receive identical explanations. This estimates the level of intrinsic nondeterminism in the method.

- Separability: Nonidentical objects cannot have identical explanations. If a feature is not actually needed for the prediction, then two samples that differ only in that feature will have the same prediction. In this scenario, the explanation method could provide the same explanation, even though the samples are different. For the sake of simplicity, this proxy is based on the assumption that every feature has a minimum level of importance, positive or negative, in the predictions.

- Stability: Similar objects must have similar explanations. This is built on the idea that an explanation method should only return similar explanations for slightly different objects.

- Selectivity: The elimination of relevant variables must negatively affect the prediction. To compute the selectivity, the features are ordered from the most to the least relevant. One by one, the features are removed (for example, by setting them to zero), and the resulting residual errors are used to compute the area under the curve (AUC).

- Coherence: It computes the difference between the prediction error over the original signal and the prediction error of a new signal where the nonimportant features are removed.

- Completeness: It evaluates the explanation error as a percentage of the respective prediction error.

- Congruence: The standard deviation of the coherence provides the congruence proxy. This metric helps to capture the variability of the coherence.

- Acumen: This is a new proxy first proposed by the authors of [16], based on the idea that a feature deemed important by the XAI method should become one of the least important after it is perturbed. This proxy aims to detect whether the XAI method depends on the position of the feature. It is computed by comparing the ranking position of each important feature after perturbing it.
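The selectivity procedure described above can be sketched for a vector of features. This is a hedged illustration, not the reference implementation of [16]: the model is a hypothetical linear scorer, the relevance scores stand in for the output of any XAI method, removal sets a feature to zero (as in the example given for selectivity), the residual error is assumed to be the absolute change of the prediction, and the AUC is computed with the trapezoidal rule over the fraction of removed features.

```python
def linear_model(w, b):
    """Hypothetical model: f(x) = w . x + b."""
    return lambda x: sum(wi * xi for wi, xi in zip(w, x)) + b

def selectivity_auc(x, relevance, model, baseline=0.0):
    """Remove features from most to least relevant and integrate the residual error.

    Residual error after k removals = |f(x_k) - f(x)| (an assumed definition);
    the area uses the trapezoidal rule over the removed fraction k/n in [0, 1].
    """
    order = sorted(range(len(x)), key=lambda i: abs(relevance[i]), reverse=True)
    y0 = model(x)
    x_cur = list(x)
    errors = [0.0]                            # nothing removed yet
    for i in order:
        x_cur[i] = baseline                   # "remove" feature i, e.g., set it to zero
        errors.append(abs(model(x_cur) - y0))
    n = len(order)
    return sum((errors[k] + errors[k + 1]) / 2 for k in range(n)) / n

w, b = [3.0, 2.0, 0.5, 0.0], 1.0              # hypothetical model parameters
x = [1.0, 1.0, 1.0, 1.0]
f = linear_model(w, b)

exact = [wi * xi for wi, xi in zip(w, x)]     # gradient x input, exact for a linear model
reversed_ranking = [0.0, 1.0, 2.0, 3.0]       # deliberately poor explanation
print(selectivity_auc(x, exact, f))             # 4.0625
print(selectivity_auc(x, reversed_ranking, f))  # 1.4375
```

Under this formulation, a ranking that puts genuinely relevant features first makes the error rise early, so the exact attributions score higher than the reversed ranking. For images, the features would typically be pixels or superpixels, and the removal baseline is itself a design choice.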

3. Visual Quality Assurance

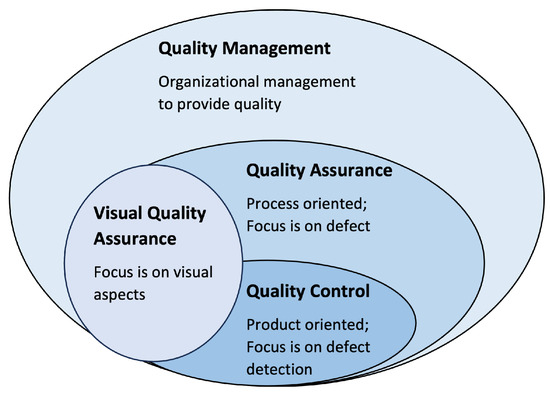

According to the American Society for Quality [17], QA and QC are two terms that are often used interchangeably. Both are associated with quality management (QM), and some QA and QC activities are interrelated; however, there are distinct differences between these two concepts. QA activities typically cover almost all of the QM system, while QC is a subset of QA. Figure 2 depicts the relationship between QM, QA, QC, and VQA.

Figure 2.

Relationship between quality management, quality assurance, quality control, and visual quality assurance.

3.1. Quality Control

In the previous century, there was a strong emphasis on quality control (QC) concepts and associated processes, which focused particularly on finished products. For defective products, the total costs of all the inputs, such as raw materials, labor, administrative costs, energy, special tooling, finishing, processing, machinery and equipment maintenance, and transportation, may be wholly or partially irrecoverable. Thus, the occurrence of defects affects the targeted business profits and, at the same time, hinders an organization’s ability to compete effectively in the global marketplace, which is becoming increasingly competitive. Moreover, QC does not assure the buyer, end-user, or consumer of the quality of the product after sale. Instead, the responsibility for monitoring product quality during use is transferred to the end-users [1].

Definition 1

(Quality Control [1]). QC refers to the systematic process of inspecting, testing, and evaluating products or services to ensure that they meet predetermined quality standards. It involves identifying and rectifying any defects or deviations from the desired specifications, thereby maintaining consistency and ensuring that the final output meets customer expectations.

In general, QC is part of QM and focuses on fulfilling quality requirements. While QA relates to how a process is performed or how a product is made, QC is more oriented toward the inspection aspect of QM.

3.2. Quality Assurance

Product quality was revolutionized toward the end of the last century by emphasizing the QA of all the inputs (tangible and intangible) and processing activities, including design, development, suppliers, production, manufacturing, assembly, documentation, inventory, maintenance, and even after-sales services. Therefore, the QA concept focuses attention on every stage of the work (i.e., a process approach) and on all the processes for realizing the product [1].

Definition 2

(Quality Assurance [1]). QA is a planned and systematic approach implemented by organizations to ensure that products, services, or processes consistently meet or exceed established quality standards. It involves the creation of processes, guidelines, and policies to maintain and enhance product or service excellence throughout all stages of development and delivery. The primary goal of quality assurance is to prevent defects, identify areas for improvement, and promote a culture of continuous quality enhancement.

The confidence provided by QA is directed, on the one hand, at the manufacturer’s management and, on the other hand, at customers, government agencies, regulators, certifiers, and third parties.

3.3. Visual Quality Assurance

In this work, we focused on VQA. VQA is a subset of QA that focuses on its visual aspects. This includes image or image-like data, or the combination of visual data with other sources, to assure quality. In [1], various QA practices are described. However, not all of these practices apply to VQA, since VQA is limited to the visual aspects. During this extensive literature review, we extracted the following practices for VQA in manufacturing using AI:

- Visual Quality Control (VQC): This entails measuring and monitoring the quality of in-process products. Embedding VQC measures at various processing levels and stages of production provides more comprehensive quality coverage. VQC can therefore be regarded as a key element of VQA. VQA, in turn, is process-oriented and thus provides additional control of processes such as assembly, processing, and manufacturing.

- Process Optimization (PO): This entails measuring, monitoring, and regulating production process parameters that impact the performance of processes and the quality of outcomes. It involves setting acceptable ranges for these parameters and taking corrective action when they deviate from the defined thresholds.

- Predictive Maintenance (PM): This entails regulating and regularly calibrating the measurement equipment, as well as maintaining the components and machinery to prevent or predict quality losses.

- Root Cause Analysis (RCA): RCA involves analyzing data, identifying root causes of defects, and implementing corrective and preventive actions. The focus is on eliminating future defects and continuously raising the quality bar to achieve better results.
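The threshold logic of the process optimization practice can be illustrated with a short sketch that flags process parameters outside their acceptable ranges and reports the signed deviation, which could then trigger corrective action. The parameter names, limits, and readings are hypothetical. Deriving limits as the mean plus or minus three standard deviations of in-control history is one common statistical process control convention, shown as an option rather than as the method used in the reviewed papers.

```python
import statistics
from dataclasses import dataclass

@dataclass
class ParameterRange:
    low: float
    high: float

def limits_from_history(values, k=3.0):
    """Shewhart-style limits: mean +/- k * sample standard deviation of in-control data."""
    m, s = statistics.mean(values), statistics.stdev(values)
    return ParameterRange(m - k * s, m + k * s)

def check_parameters(readings, ranges):
    """Return {parameter: signed deviation} for readings outside their acceptable range."""
    deviations = {}
    for name, value in readings.items():
        r = ranges[name]
        if value < r.low:
            deviations[name] = value - r.low    # negative: below the range
        elif value > r.high:
            deviations[name] = value - r.high   # positive: above the range
    return deviations

# Hypothetical welding-station parameters and acceptable ranges.
ranges = {
    "current_A": ParameterRange(180.0, 220.0),
    "speed_mm_s": limits_from_history([9.8, 10.1, 10.0, 9.9, 10.2]),
}
readings = {"current_A": 231.0, "speed_mm_s": 10.0}
print(check_parameters(readings, ranges))  # {'current_A': 11.0}
```

In a VQA setting, some readings might themselves be estimated from images; the range check stays the same regardless of where the values come from.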

Based on the definition of QA in Definition 2, we derive a definition of VQA that focuses only on the visual aspects.

Definition 3

(Visual Quality Assurance). Visual quality assurance is a planned and systematic approach implemented by organizations to ensure that the visual aspects of products, services, or processes consistently meet or exceed established quality standards. It involves the creation of processes, guidelines, and policies to maintain and enhance product or service excellence with respect to visual aspects throughout all stages of development and delivery. The primary goal of visual quality assurance is to prevent defects, identify areas for improvement, and promote a culture of continuous quality enhancement. This may encompass aspects such as aesthetics and overall visual consistency across manufactured products.

In Section 3.1 and Section 3.2, we established a foundational understanding of the concepts central to this review. Firstly, we provided the definitions of QA and QC based on existing literature. Building upon these definitions, we introduced the concept of VQA, which is a specialized subset of QA with a keen focus on visual aspects. Importantly, it should be noted that the papers explored in this review primarily pertain to VQA. As VQA is an integral component of QA, any literature reviewed in the former inherently contributes to the broader understanding of the latter. When a paper addresses VQC, it naturally fulfills the requirements of QC.

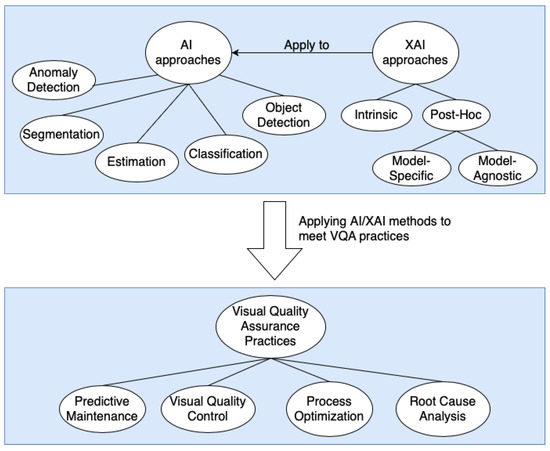

4. AI and XAI for Visual Quality Assurance

In this section, the potential of AI and XAI approaches for the VQA process is discussed and outlined. Figure 3 shows the main AI and XAI approaches used in the reviewed literature to address the VQA practices.

Figure 3.

Using AI and XAI approaches to meet VQA practices.

4.1. Artificial Intelligence Facilitates Visual Quality Assurance

AI is a new type of technological science that investigates and develops theories, methods, technologies, and application systems to simulate, improve, and upgrade human intelligence. It has been created to enable machines to reason like human beings and to endow them with intelligence. AI systems aim to possess characteristics such as problem-solving, pattern recognition, and decision-making capabilities. By leveraging techniques such as ML and computer vision, AI seeks to create intelligent machines that can process and interpret vast amounts of data, recognize patterns, adapt to changing circumstances, and perform complex tasks [9,18]. ML is already applied to solve real problems in different domains, such as medicine [19], handwriting recognition [20], and manufacturing [21]. The following AI approaches (see Figure 3) are typically used for VQA:

- Classification (Class): In this approach, the algorithm learns to predict discrete class labels for input data and thus can facilitate VQA by categorizing data, products, or processes [9]. It can automate VQC and ensure that only defect-free products are delivered. Classification can help in identifying areas for improvement by categorizing defects, reducing waste, and saving energy by identifying defects in early process stages [22]. By inspecting images of machine spare parts, wear can be identified and the spare parts can be replaced to prevent the quality losses of the manufactured products [23].

- Estimation (Est): Estimation refers to the process of predicting or approximating an unknown or future value based on available data. It involves making an inference or calculation to estimate a parameter or outcome [9]. By analyzing historical data, the most suitable processing parameters [24], the remaining error-free time [25], or the defect length can be predicted [14].

- Object Detection (OD): Object detection is a computer vision task that involves identifying and localizing objects within images or videos. It combines object classification (assigning labels to objects) and object localization (drawing bounding boxes around objects). Object detection algorithms can accurately detect and locate multiple objects or flaws in products [26]. Thus, object detection is a more comprehensive approach than classification, because detected or predicted flaws can additionally be localized.

- Segmentation (Seg): Segmentation is a computer vision task that involves dividing images or videos into distinct regions based on visual characteristics. Similar to object detection, it can be used to localize defects or anomalies within products or processes, thereby ensuring accurate inspections and prompt corrective actions [27].

- Anomaly Detection (AD): In anomaly detection, the algorithm learns the normal patterns in the data and identifies any data points that deviate significantly from those patterns, thus often indicating anomalies or outliers [28]. This method can be used, for example, to monitor image or image-like data in manufacturing and take corrective actions promptly if potential issues or anomalies are identified [10]. In VQA, it can be used to detect rare or abnormal occurrences in images, thus helping organizations to make data-driven decisions to improve processes.
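Both object detection and segmentation localize defects, and their predictions are commonly scored against ground truth with intersection over union (IoU). The sketch below computes IoU for axis-aligned bounding boxes and for binary masks; the `(x_min, y_min, x_max, y_max)` box convention, the nested-list mask format, and the toy inputs are assumptions for illustration. In benchmarks such as PASCAL VOC, a detection is counted as correct when its IoU with a ground-truth box exceeds 0.5.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))   # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))   # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def mask_iou(m1, m2):
    """IoU of two equal-sized binary masks given as nested lists of 0/1."""
    inter = union = 0
    for r1, r2 in zip(m1, m2):
        for p1, p2 in zip(r1, r2):
            inter += 1 if (p1 and p2) else 0
            union += 1 if (p1 or p2) else 0
    return inter / union if union else 0.0

# Hypothetical predicted vs. ground-truth defect box and mask.
print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))        # 25 / 175
print(mask_iou([[1, 1, 0], [0, 1, 0]],
               [[1, 0, 0], [0, 1, 1]]))               # 0.5
```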

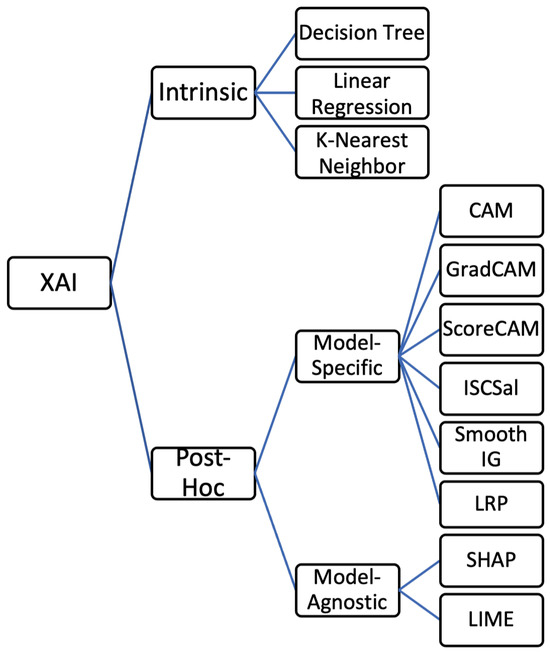

4.2. Explainable Artificial Intelligence Facilitates Quality Assurance

In conventional AI systems, the learning process cannot be interpreted by end-users and appears as an opaque black box. XAI, on the other hand, specifically emphasizes the need for transparency and interpretability in AI systems. By explaining the relationships between input variables and quality outcomes, it helps identify the underlying causes of quality losses. It aims to address the black-box nature of many AI models, whose decision-making processes are often difficult to understand or explain. XAI focuses on developing techniques and methods that enable humans to understand and interpret the results and inner workings of AI models. Understanding a model’s decision-making mechanism is important for three main reasons. First, it makes it possible to further refine and improve the model’s analysis. Second, it becomes easier to explain to nondata scientists how the model uses the data to make decisions. Third, explainability can help data scientists avoid negative or unforeseen consequences of their models. Together, these three factors give us more confidence in model development and deployment [29]. Figure 4 shows a simple taxonomy that categorizes the XAI methods found in this literature review.

Figure 4.

XAI taxonomy for the methods found in the literature review.

- Intrinsic Explanation: The intrinsic explanation techniques enable the extraction of decision rules directly from the model’s architecture. ML algorithms like linear regression (LR), logistic regression (LogR), decision trees (DTs), and rule-based models are frequently employed to create intrinsic models [30].

- Post Hoc Explanation: Post hoc explanation techniques are designed to uncover the relationships between feature values and predictions after a model has been trained. They are needed because, in contrast to intrinsic models, models such as deep neural networks are often less interpretable, as they do not readily yield explicit decision rules from their structural components. When seeking ways to approximate a model’s behavior for decision understanding, we can categorize methods into model-specific and model-agnostic approaches [30].

- Model-Specific Explanation (Mod-Sp): Model-specific explanation techniques in XAI are tailored to particular contexts and conditions, thus leveraging the unique characteristics of the underlying algorithm or the specific architecture of an AI model. Reverse engineering approaches are applied to probe the internals of the algorithms. For example, class activation mapping (CAM) or gradient-weighted CAM (GradCAM) methods offer visual explanations specifically designed for CNN models. These CAM-based methods generate localization maps from convolutional layers, thereby revealing the crucial image regions that contribute to predicting a particular concept [30].

- Model-Agnostic Explanation (Mod-Ag): Model-agnostic explanation techniques focus on the relations between feature values and prediction results. These methods do not depend on or make assumptions about the specific ML model or algorithm being explained. These methods are designed to provide interpretability and explanations for a wide range of ML models, thus making them versatile and applicable across various domains [30].
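As a hedged sketch of the model-specific CAM family mentioned above: CAM weights the K feature maps A_k of the last convolutional layer with the classifier weights w_k^c of class c, giving the map sum_k w_k^c A_k, while Grad-CAM replaces these weights with the spatially averaged gradients of the class score y^c with respect to A_k and applies a ReLU. To stay self-contained, the example uses random activations, hypothetical class weights, and a global-average-pooling plus linear head whose gradients are known analytically. In practice, activations and gradients would come from a trained CNN through a deep learning framework's automatic differentiation, and the map would be upsampled to the input resolution.

```python
import numpy as np

def cam(feature_maps, class_weights):
    """CAM for class c: sum_k w_k^c * A_k, with feature_maps of shape (K, H, W)."""
    return np.tensordot(class_weights, feature_maps, axes=1)

def grad_cam(feature_maps, gradients):
    """Grad-CAM: alpha_k = spatial mean of dy^c/dA_k; map = ReLU(sum_k alpha_k * A_k)."""
    alphas = gradients.mean(axis=(1, 2))
    return np.maximum(np.tensordot(alphas, feature_maps, axes=1), 0.0)

def normalize(heatmap):
    """Rescale to [0, 1] for overlaying on the input image (constant maps become zeros)."""
    lo, hi = heatmap.min(), heatmap.max()
    return (heatmap - lo) / (hi - lo) if hi > lo else np.zeros_like(heatmap)

rng = np.random.default_rng(0)
K, H, W = 4, 7, 7
A = rng.random((K, H, W))                  # hypothetical last-layer activations
w_c = np.array([1.5, -0.5, 0.0, 2.0])      # hypothetical weights of the "defect" class

# GAP + linear head: y_c = sum_k w_c[k] * mean(A[k]), hence dy_c/dA[k, i, j] = w_c[k] / (H * W).
grads = np.broadcast_to((w_c / (H * W))[:, None, None], (K, H, W))

cam_map = cam(A, w_c)
gc_map = grad_cam(A, grads)
print(np.allclose(gc_map, np.maximum(cam_map, 0.0) / (H * W)))  # True
heat = normalize(gc_map)                   # ready to upsample and overlay
```

For this head, Grad-CAM reduces to CAM up to the positive factor 1/(H*W) and the ReLU, which reflects the fact that Grad-CAM generalizes CAM to architectures without a global-average-pooling classifier.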

4.3. Explainability of Visual Quality Assurance Processes

The application of XAI approaches to VQA processes can enhance transparency, interpretability, and trust in AI systems applied to VQA.

- Transparency in Visual Quality Assurance Practices: XAI provides transparency by offering clear insights into the decision-making processes of AI models used for VQA. Transparency is essential for assessing the quality of a model’s decision [31]. For example, ref. [32] used the GradCAM method to visualize the image regions on which a CNN model focuses most when making a decision, thereby providing a human-readable explanation of the CNN model’s decision-making process.

- Interpretability of Visual Quality Assurance Practices: Interpretability refers to the ability to understand the underlying workings of an AI model. It involves comprehending how AI models make their decisions. Interpretability can be achieved through techniques that explain the internals of an AI model in a manner that humans can comprehend. These techniques are known as intrinsic methods [31]. In [33], intrinsically interpretable tree-based models were used to assure quality. Interpretability helps users comprehend how various factors impact product quality and enables the identification of the most influential parameters in a manufacturing process, thus allowing for better optimization and control.

- Trust in Visual Quality Assurance Decisions: When the decision-making process of a model is thoroughly understood, the model becomes transparent, and transparency promotes trust in the model [31]. Such trust enables closer collaboration between humans and AI models, which enhances the efficiency and accuracy of VQA processes and thereby leads to more reliable results.
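Transparency of this kind does not necessarily require access to model internals. A minimal model-agnostic alternative to gradient-based maps is occlusion sensitivity: a patch is slid over the image, and the drop in the model's score when a region is masked indicates how much that region contributed to the decision. In this sketch, `predict` is any black-box callable; `toy_defect_score`, the synthetic image, the patch size, the stride, and the zero fill value are hypothetical choices.

```python
import numpy as np

def occlusion_sensitivity(predict, image, patch=4, stride=4, fill=0.0):
    """Model-agnostic heatmap: average score drop when each region is occluded.

    `predict` is any black-box callable mapping an (H, W) array to a scalar score.
    """
    h, w = image.shape
    base = predict(image)
    heat = np.zeros((h, w))
    counts = np.zeros((h, w))
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            drop = base - predict(occluded)          # large drop = important region
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    # Average over overlapping patches; pixels never covered stay at zero.
    return np.divide(heat, counts, out=np.zeros_like(heat), where=counts > 0)

def toy_defect_score(img):
    """Hypothetical stand-in for a classifier's defect probability."""
    return float(img[8:12, 8:12].mean())

img = np.zeros((16, 16))
img[8:12, 8:12] = 1.0                       # synthetic bright "defect"
heat = occlusion_sensitivity(toy_defect_score, img)
row, col = np.unravel_index(heat.argmax(), heat.shape)
print(int(row), int(col))                   # 8 8
```

The resulting heatmap is 1.0 on the synthetic defect and 0.0 elsewhere. Because only forward calls are used, the same code applies to any classifier, at the cost of one model evaluation per patch position.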

5. Systematic Literature Review

In this section, we define the research questions (RQs) and describe the search process of the systematic literature review (SLR) [34]. For the search process, we describe the electronic databases we used and the search string applied in our research. This section also contains the inclusion and exclusion criteria for the literature selection.

5.1. Research Questions

We defined the following research questions for this SLR to identify the sectors in which AI and XAI are already widely applied and those in which more research is required. To assure quality, multiple practices have to be considered. We want to explore which practices are already empowered by AI and XAI and, additionally, extract and summarize the AI and XAI methods that were used. Answering these RQs should provide a clear overview of the benefits of AI and XAI for VQA in manufacturing and of the research gap in this context. The research questions are as follows:

- RQ1: Which industry sectors are using AI and XAI approaches to provide VQA in manufacturing?

- RQ2: Which VQA practices in manufacturing are covered using AI and XAI approaches?

- RQ3: Which AI and XAI methods are used for VQA in manufacturing?

- RQ4: To what degree has XAI been adopted for VQA in manufacturing?

5.2. Search Process

For our research, we selected five digital online databases: IEEE Xplore, Science Direct, ACM Digital Library, MDPI, and Scopus. In the last decade, AI technologies have developed rapidly and have been introduced in many industrial sectors. Although they have given promising results, they are often not interpretable and are therefore not accepted for integration into real processes because of a lack of trust. For this reason, the concept of XAI has received significant attention from industry in recent years. Therefore, our SLR covers literature published between 2015 and 2023. To capture most of the literature that applies AI and XAI methods for VQA in the manufacturing area, we determined the following search string:

This search string was applied only to the abstracts to extract the literature that corresponded to our research questions. Searching within the full text returned many studies that were not related to VQA or manufacturing at all, because these terms were often mentioned only in the introduction or related work section. We read all of the returned studies and filtered them further, since the results still contained literature outside the context of VQA in manufacturing. Moreover, other survey papers were excluded. Table 2 lists the inclusion and exclusion criteria of this search. The last query was carried out on 20 August 2023.

Table 2.

Inclusion and exclusion criteria of the SLR.

The results of the search in the databases IEEE Xplore, Science Direct, ACM Digital Library, MDPI, and Scopus are presented in Section 6.1.

6. Results of the Literature Review

In this section, we first present the outcomes of the conducted SLR process. Following that, we provide a comprehensive explanation of the significance of AI and XAI for VQA, thus drawing insights from the literature we have collected. Lastly, we share our research findings in response to the specific research questions addressed in this study.

6.1. Conducted SLR

Table 3 summarizes the number of studies found during the search process after querying the databases with the search string defined in Section 5.2. In total, 260 papers were found in the first four databases: 117 came from IEEE Xplore, 74 from Science Direct, only 4 from ACM Digital Library, and 65 from MDPI. We also searched Scopus, which indexes other databases such as Science Direct, and found 80 papers there that were not already included in the results from the databases mentioned before. We examined each individual paper and excluded those that met the exclusion criteria defined in Table 2. Finally, 143 papers were analyzed and summarized, and their information was extracted regarding VQA practices, AI and XAI approaches, the methods used, and the industry sector they were applied to. We present the results in Table 4.
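The counts reported in this section can be cross-checked with a short tally. The numbers are taken from the text of this section; the dictionary and variable names are only illustrative. The 80 additional Scopus papers bring the total to the 340 papers mentioned in Section 7, of which 143 (about 42%) were retained.

```python
# Per-database hit counts reported in Section 6.1 (last query on 20 August 2023).
hits = {
    "IEEE Xplore": 117,
    "Science Direct": 74,
    "ACM Digital Library": 4,
    "MDPI": 65,
}
scopus_additional = 80  # Scopus hits not already returned by the four databases above
selected = 143          # papers kept after applying the exclusion criteria (Table 2)

first_four = sum(hits.values())
total = first_four + scopus_additional
print(first_four, total, selected)          # 260 340 143
print(f"retained: {selected / total:.1%}")  # retained: 42.1%
```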

Table 3.

Number of found and selected studies from the SLR on 20 August 2023.

6.2. Role of AI and XAI in VQA

In the following, the role of AI and XAI in VQA is presented. Table 4 lists the papers found in the SLR; in this section, we summarize a subset of them to provide more insights drawn from the literature. Based on the reviewed literature, we describe how AI and XAI methods can be used to meet the VQA practices (see Section 3.3).

6.2.1. Visual Quality Control

The comparative study in [3] has already explained how AI-based visual inspection can empower VQC activities. With AI, automated visual product inspections can be realized more accurately and cost-effectively to monitor products or processes for faults, contamination, and other anomalies. AI-based visual inspection offers the following advantages:

- It is not biased by the operator’s viewpoint;

- It can be adjusted to product changes without the need for programming;

- It does not tire quickly;

- It is fast;

- It can see a wide spectrum of colors;

- It can operate in potentially dangerous settings;

- Its operators have fewer cognitive burdens.

Most of the literature found in this survey dealt with VQC in the manufacturing process to detect defects. Most approaches used DL methods, such as pretrained CNNs, which automatically extract features from images, so that only the classifier has to be replaced to classify the quality of the products shown in these images. For example, [35] used a pretrained VGG16 model and a pretrained Xception model to identify defects. Afterwards, an XAI method called GradCAM was applied to visualize the areas that contributed most to the prediction. The study [36] built a VGG-like CNN model with 17 layers that achieved a higher testing accuracy than other models, such as SVM. To gain insights into the interpretability of this CNN model, the authors employed CAM, which generated a heatmap highlighting the regions that significantly influence the model’s decision-making process. While GradCAM and CAM are model-specific XAI approaches, the study [37] used a model-agnostic XAI approach, SHAP, to provide interpretability to a CNN model by quantifying the contribution of individual pixels to the outcome. These XAI methods have several advantages. On the one hand, the data scientist who develops the AI model can evaluate the model’s behavior more easily and create a more robust and reliable model. On the other hand, the model becomes more transparent: it provides explanations, justifications, and insights into the factors and features that influenced the outcome. This helps QA professionals and auditors interpret how the AI model arrived at its decisions and identify potential errors or biases in the AI model. Furthermore, they can place more trust in the model, since XAI addresses its black box characteristic. The model-specific methods GradCAM and CAM highlight the regions that contribute most to the prediction, whereas the model-agnostic method SHAP quantifies the contribution of each individual pixel to the outcome. Thus, flaws in the model can be revealed, and stakeholders can verify the model’s prediction when quality issues are detected.
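To make the heatmap idea concrete, the following sketch shows the core Grad-CAM computation as defined by Selvaraju et al.: each feature map A^k of the last convolutional layer is weighted by the spatial average of the gradient of the class score with respect to that map, the weighted maps are summed, and a ReLU keeps only positive evidence. We assume the activations and gradients have already been extracted from a CNN framework (for example, via hooks). The function name `grad_cam` is hypothetical, plain Python lists are used only to keep the example self-contained, upsampling to the input resolution is omitted, and this is not the code used in [35].

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Combine K feature maps A^k (each H x W) with the gradients of the class
    score y^c with respect to them into an H x W Grad-CAM heatmap in [0, 1]."""
    if len(activations) != len(gradients):
        raise ValueError("expected one gradient map per feature map")
    height, width = len(activations[0]), len(activations[0][0])
    heatmap = [[0.0] * width for _ in range(height)]
    for fmap, grad in zip(activations, gradients):
        # alpha_k: spatially averaged gradient = importance of feature map k for class c
        alpha = sum(sum(row) for row in grad) / (height * width)
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += alpha * fmap[i][j]
    # ReLU: keep only regions with a positive influence on the class score
    heatmap = [[v if v > 0.0 else 0.0 for v in row] for row in heatmap]
    # Scale to [0, 1] so the map can be overlaid on the (upsampled) input image
    peak = max(max(row) for row in heatmap)
    if peak > 0.0:
        heatmap = [[v / peak for v in row] for row in heatmap]
    return heatmap


# Toy example: map 0 has a positive average gradient, map 1 a negative one.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```

CAM, as used in [36], follows the same weighted-sum pattern but takes the weights from the final fully connected layer after global average pooling instead of from gradients.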

El-Geiheini et al. [38] addressed the textile industry sector, using DL to estimate yarn parameters (tenacity and elongation in %). In the preprocessing step, data vectors were manually extracted from the yarn images and used as input for a multilayer perceptron (MLP) network that estimated tenacity and elongation.

The machine vision system proposed in [22] was implemented in a learning factory to monitor workpieces for defects. This study also offers a solution for reducing the energy and resource losses caused by the scrapping and rework of defective workpieces. When a defect is detected, the system redirects the workpiece, thereby preventing the production of additional defective items. This not only saves the energy that would have been used for rework, but also eliminates the need to transport the workpiece from the distribution station to the sorting station, thereby further reducing energy consumption. The implementation of this system resulted in significant improvements in energy efficiency and a marked reduction in the overall carbon footprint: depending on the placement of the machine vision system within the production flow, energy efficiency increased by 18.37%, while the total carbon footprint was reduced by 78.83%.

In summary, the literature surveyed in this study predominantly focuses on the utilization of DL methods, specifically pretrained CNNs, for VQC in manufacturing processes. VQC can help reduce energy consumption and waste by identifying visual defects in the early stages of the manufacturing process. CNNs offer the advantage of automatic feature extraction from images, thus eliminating the need for manual computer vision tasks. However, it is worth noting that CNNs can be resource-intensive. Nonetheless, this issue can be mitigated by utilizing pretrained CNN models. To enhance transparency, interpretability, and trust in black box CNN models, XAI methods like GradCAM, CAM, SHAP, and others can be employed.

6.2.2. Process Optimization

AI can help optimize the process parameters to improve the quality of manufactured products [24]. When there are multiple process parameters involved, the complexity of the outcome prediction increases, because more relationships and interactions among the variables have to be considered. Here, ML methods can be used to solve the complex task. A possible solution is to use process parameters as input parameters and predict the corresponding output or the desired quality. Using XAI helps to identify features that are most important for the quality. Varying these features or parameters has the greatest impact on quality.
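One model-agnostic way to identify which process parameters matter most is permutation feature importance: shuffle one input column, re-evaluate the trained model, and record how much the error grows. The sketch below assumes a regressor that maps a parameter vector to a quality value; the model, the data, and the parameter names are hypothetical, and this is not the method of any study cited here (for example, [39] relied on the intrinsic importance of a random forest).

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], float]


def mse(model: Model, X: List[Row], y: List[float]) -> float:
    """Mean squared error of the model's predictions."""
    return sum((model(x) - t) ** 2 for x, t in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[Row], y: List[float],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Average increase in MSE when a single input column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for col in range(len(X[0])):
        increase = 0.0
        for _ in range(n_repeats):
            # Shuffle one column to break its link to the target; keep the others intact
            column = [row[col] for row in X]
            rng.shuffle(column)
            X_perm = [list(row) for row in X]
            for row, value in zip(X_perm, column):
                row[col] = value
            increase += mse(model, X_perm, y) - baseline
        importances.append(increase / n_repeats)
    return importances


# Hypothetical example: quality depends on parameter 0 (e.g., laser power),
# while parameter 1 (e.g., an unrelated setting) has no effect.
data_rng = random.Random(1)
X = [[data_rng.uniform(0, 1), data_rng.uniform(0, 1)] for _ in range(200)]
y = [3.0 * power for power, _ in X]


def quality_model(x: Row) -> float:
    """Stand-in for a trained regressor (here simply the true relationship)."""
    return 3.0 * x[0]


scores = permutation_importance(quality_model, X, y)
print(scores)  # parameter 0 gets a clearly positive score, parameter 1 gets 0.0
```

The parameter with the largest score is the one whose variation most affects the predicted quality, which makes it the natural first candidate for optimization.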

The study in [39] focused on monitoring multilayer optical tomography images to predict local porosity in additive manufacturing. Random forest (RF) was utilized for porosity prediction, providing interpretability, and identifying the most important features (layers) contributing to the prediction. The proposed model not only predicted porosity, but also indicated the optimal processing window for achieving near-zero porosity.

Another study [40] employed a fusion of two neural networks to optimize process parameters in a milling process. Surface images and mean average roughness data were collected and aligned with specific cutting conditions. By selecting the appropriate cutting tools and parameters, the model aimed to improve surface quality, reduce production time, and minimize energy consumption. The use of multimodality allowed the system to function even with limited data quality, and the multimodal fusion model outperformed the unimodal model in predicting the cutting parameters.

In the context of additive manufacturing, another study [41] focused on regulating process parameters during the printing process. A camera monitored filament width using a CNN-based DL algorithm. When the filament width exceeded a defined threshold, the nozzle travel speed was adjusted to correct the filament width.
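The threshold-based regulation described above can be sketched as a simple proportional rule. Everything here is an assumption for illustration: the target width, tolerance, gain, and speed limits are hypothetical placeholders, the width is assumed to be already measured from the camera image, and [41] may have used a different adjustment rule.

```python
def adjust_travel_speed(width_mm: float, speed_mm_s: float,
                        target_mm: float = 0.8, tolerance_mm: float = 0.05,
                        gain: float = 0.5, min_speed: float = 5.0,
                        max_speed: float = 60.0) -> float:
    """Return the nozzle travel speed for the next control step.

    width_mm is the filament width measured from the camera image (e.g., by a CNN).
    All default values are hypothetical placeholders, not values from [41].
    """
    error = width_mm - target_mm
    if abs(error) <= tolerance_mm:
        return speed_mm_s  # width within the allowed band: keep the current speed
    # At a constant extrusion rate, moving faster deposits less material per mm,
    # so a too-wide filament calls for a higher speed and a too-narrow one for a lower speed.
    new_speed = speed_mm_s * (1.0 + gain * error / target_mm)
    return min(max(new_speed, min_speed), max_speed)


print(round(adjust_travel_speed(1.0, 20.0), 2))  # 22.5 (too wide -> faster)
print(adjust_travel_speed(0.82, 20.0))           # 20.0 (within tolerance)
```

Clamping the speed to a fixed range keeps a single noisy width measurement from driving the printer into an unsafe setting.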

Overall, these studies highlight the application of ML and DL techniques for monitoring, optimizing, and regulating manufacturing process parameters. The incorporation of interpretability, multimodality, and adaptive control mechanisms demonstrates the potential for improving quality. However, optimizing process parameters solely based on visual data may not be sufficient in certain scenarios. While visual data can provide valuable insights into the visual aspects of a process, other factors and variables may need to be considered for effective regulation. For the optimization of process parameters, it is therefore essential to take multiple sources of data into account, including but not limited to visual data. Depending on the specific manufacturing process, additional data sources such as sensor data (e.g., temperature, pressure), historical process data, or real-time feedback from the production line may be necessary. By integrating multiple data sources, a more holistic understanding of the process can be achieved, thereby allowing for a more accurate and robust regulation of process parameters. This comprehensive approach enables the consideration of factors beyond visual defects, such as material properties, environmental conditions, and overall process stability.

6.2.3. Predictive Maintenance

In PM, information is gathered in real time to monitor the status of devices or machines. The aim is to discover patterns that can assist in predicting and eventually anticipating malfunctions [5]. Machinery has to be maintained in time to ensure a consistent quality of the manufactured products. However, maintenance incurs additional costs. Thus, a machine should ideally be maintained just before a malfunction occurs or before its wear causes insufficient product quality. Several AI approaches help to achieve PM. XAI can provide interpretable insights into the prediction of anomalies that lead to quality deviations. This helps manufacturers detect potential problems at an early stage, thereby enabling timely intervention and corrective actions. Ref. [16] used regression CNN models on time-series data for PM and then applied different XAI methods to compare their performance. Among the methods LIME, SHAP, LRP, GradCAM, and Image-Specific Class Saliency (ISCSal), GradCAM achieved the best performance.

The study in [25] used a CNN autoencoder to denoise and label images of printed build parts from the laser powder bed fusion process. In addition, the authors provided a CNN model that learned from these labeled images and was able to classify new images as succeeded, warning, or failed. Moreover, a statistical Cox proportional hazards (CPH) model was trained to forecast the hazard imposed at a certain stage. To train this model, the embeddings calculated by the autoencoder were used and labeled with the time until the first five consecutive errors were detected.
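For reference, the standard Cox proportional hazards model relates the hazard at time $t$ to a covariate vector through a baseline hazard and a log-linear term. In the setting of [25], the covariate vector $\mathbf{x}$ would be the autoencoder embedding of a build; the notation below is ours and sketches the standard model rather than reproducing the authors' exact formulation:

$$
h(t \mid \mathbf{x}) = h_0(t)\,\exp\left(\boldsymbol{\beta}^{\top}\mathbf{x}\right),
\qquad
\frac{h(t \mid \mathbf{x}_1)}{h(t \mid \mathbf{x}_2)} = \exp\left(\boldsymbol{\beta}^{\top}(\mathbf{x}_1 - \mathbf{x}_2)\right)
$$

Here $h_0(t)$ is the unspecified baseline hazard and $\boldsymbol{\beta}$ the fitted coefficient vector. Because the hazard ratio on the right does not depend on $t$, two builds can be ranked by their relative risk $\exp(\boldsymbol{\beta}^{\top}\mathbf{x})$ without estimating $h_0(t)$, which makes the model a convenient way to flag builds that are likely to fail early.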

Another study [23] explored the use of DL neural networks, including CNNs, to classify images of semiconductors as good or bad. Additionally, they proposed predicting the condition of test contactor pins for spare parts, thus aiming to detect wear and determine if replacements were necessary.

These studies demonstrate the potential of DL approaches, particularly CNNs, in image analysis for quality assessment. By leveraging these techniques, organizations can improve defect detection, classification, and forecasting in manufacturing processes, thereby enabling proactive measures to maintain quality standards and optimize resource allocation.

6.2.4. Root Cause Analysis

Applying VQA in an organization enables continual quality improvement through the VQA practices. A further approach to improving quality is the identification of root causes when a malfunction or anomaly has been detected, which aims to understand the underlying reasons behind it. By uncovering the root cause, organizations can address and eliminate the problem, thereby improving product quality and reducing future malfunctions identified during VQC. XAI methods can be employed to identify the root causes of anomalies: by understanding which features or factors contribute most to the detection of anomalies, organizations can gain a deeper understanding of the root causes and take appropriate corrective actions. By incorporating XAI methods into VQC, organizations can not only identify and resolve specific issues, but also enhance overall product quality and consistency. This proactive approach helps to minimize the occurrence of future malfunctions, thus resulting in improved customer satisfaction, increased operational efficiency, and reduced costs associated with quality issues.

The study in [39] used RF with multilayer optical tomography images to predict local porosity in additive manufacturing. Since RF is a transparent model, it is used to analyze the impacts of different process parameters on the local porosity and to identify the causes for defects that occur from improper process parameters. Defects in one layer can also be eliminated by proper parameters in the following layers.

The study in [33] used the tree-based models classification and regression tree (CART), RF, and XGBoost to predict the quality of the static mechanical properties of printed parts. Since these models are intrinsically explainable, their feature importance is used to identify the influencing parameters.
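The intrinsic feature importance of tree-based models is commonly computed as the mean decrease in impurity (MDI): every split adds its sample-weighted impurity reduction to the feature it splits on, and the totals are normalized. The sketch below grows a small CART-style tree with Gini impurity and accumulates these values, which is the same kind of quantity scikit-learn reports as `feature_importances_` for a single tree. It is plain Python for self-containment; the data and feature meanings are hypothetical, and [33] does not necessarily use this exact variant.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Row = Sequence[float]


def gini(labels: Sequence[int]) -> float:
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(X: List[Row], y: List[int]) -> Tuple[int, float, float]:
    """Exhaustive CART-style search for the (feature, threshold) with the largest Gini decrease."""
    n = len(y)
    parent = gini(y)
    best = (-1, 0.0, 0.0)  # (feature index, threshold, impurity decrease)
    for f in range(len(X[0])):
        for thr in sorted({row[f] for row in X}):
            left = [t for row, t in zip(X, y) if row[f] <= thr]
            right = [t for row, t in zip(X, y) if row[f] > thr]
            if not left or not right:
                continue
            child = (len(left) * gini(left) + len(right) * gini(right)) / n
            if parent - child > best[2]:
                best = (f, thr, parent - child)
    return best


def mdi_importance(X: List[Row], y: List[int], max_depth: int = 3) -> List[float]:
    """Grow a small tree and sum each feature's sample-weighted impurity decrease."""
    n_total = len(y)
    importances = [0.0] * len(X[0])

    def grow(rows: List[int], depth: int) -> None:
        labels = [y[i] for i in rows]
        if depth == max_depth or gini(labels) == 0.0:
            return  # stop at maximum depth or at a pure leaf
        f, thr, decrease = best_split([X[i] for i in rows], labels)
        if f < 0:
            return  # no split reduces impurity
        importances[f] += len(rows) / n_total * decrease
        grow([i for i in rows if X[i][f] <= thr], depth + 1)
        grow([i for i in rows if X[i][f] > thr], depth + 1)

    grow(list(range(n_total)), 0)
    total = sum(importances)
    return [v / total for v in importances] if total > 0 else importances


# Hypothetical print data: [layer height in mm, build-plate position index];
# a part is labeled defective (1) when the layer height is too large.
X = [[0.1, 5], [0.2, 3], [0.3, 5], [0.4, 3]]
y = [0, 0, 1, 1]
print(mdi_importance(X, y))  # [1.0, 0.0]
```

A random forest averages these per-tree values over all trees, so the same reading applies: the parameters with the highest scores are the first candidates when searching for the root cause of a defect.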

In summary, identifying the root causes of defects through intrinsic explainable models allows organizations to address underlying issues, improve product quality, and reduce the number of malfunctions detected during VQC. By focusing on RCA, organizations can achieve greater consistency and reliability in their products, thereby leading to enhanced customer experiences and business success.

Table 4.

Extracted information from the selected results of the SLR.

| Study | Industry Sector | VQA Practices | AI/XAI Approaches | AI Method | XAI Method |
|---|---|---|---|---|---|
| [22,42,43,44,45,46,47,48,49,50] | Component Inspection | VQC | Class. | CNN | |
| [27,51] | Component Inspection | VQC | Seg. | CNN | |
| [52,53,54,55,56,57,58,59,60,61] | Component Inspection | VQC | OD | CNN | |
| [62,63] | Component Inspection | VQC | Est. | CNN | |
| [64] | Component Inspection | VQC | Class. | RF, DT, NB, SVM, KNN, AdaBoost | |
| [37] | Component Inspection | VQC | Class., Model-Ag. | CNN | SHAP |
| [36] | Component Inspection | VQC | Class., Model-Sp. | CNN | CAM |
| [65] | Component Inspection | VQC, PM | Est. | CNN | |
| [66] | Component Inspection | VQC | AD | CNN | |
| [67] | Component Inspection | VQC | OD, Est. | CNN | |
| [26] | Component Inspection | VQC, PO | OD, Est. | CNN | |
| [68] | Component Inspection | VQC | Class. | KNN, DT, RF, SVM, NB | |
| [69] | Component Inspection | VQC | Seg. | MLP | |
| [41] | AM | PO | Seg., Est. | CNN | |
| [70,71] | AM | VQC | Class. | RF, CART | |
| [72] | AM | VQC | Class., Model-Sp. | CNN, SVM | Smooth IG |
| [32,35] | AM | VQC | Class., Model-Sp. | CNN | GradCAM |
| [25] | AM | VQC, PM | Class., Est. | CNN, CPH | |
| [73] | AM | VQC | Est. | CNN | |
| [74] | AM | VQC | Class. | BPNN | |
| [33] | AM | VQC, RCA | Class., Intrinsic | CART, RF, XGBoost | Interpretable model |
| [75,76,77,78,79,80] | AM | VQC | Class. | CNN | |
| [39] | AM | VQC, PO, RCA | Est., Intrinsic | Multi-Otsu, RF, DT | Interpretable model |
| [81] | AM | VQC | Seg. | CNN | |
| [14] | AM | VQC | Est. | LR, GR, SVM | |
| [82,83,84,85,86] | Electronics | VQC | Class. | CNN | |
| [10,87] | Electronics | VQC | AD | CNN | |
| [23] | Electronics | PM | Class. | DLNN | |
| [88] | Electronics | VQC | Class. | CNN, LSTM | |
| [28] | Electronics | VQC | AD | osPCA, OnlinePCA, ABOD and LOF | |
| [89] | Electronics | PM | Est. | GAF, CNN | |
| [90] | Electronics | VQC | Est. | LogR, RF, SVM | |
| [91,92,93] | Electronics | VQC | Class. | SVM, k-means, GMM, KNN, RF | |
| [94] | Electronics | VQC | Seg. | CNN | |
| [95,96,97,98,99] | Electronics | VQC | OD | CNN | |
| [13,100] | Electronics | VQC | Est. | CNN | |
| [101] | Electronics | VQC | OD | CNN | |
| [102,103,104] | Machinery | VQC, PM | Class. | CNN | |
| [105] | Machinery | VQC | OD | CNN | |
| [106,107] | Machinery | VQC | Class. | CNN | |
| [108] | Machinery | VQC | Seg., OD | Otsu, CNN | |
| [109] | Machinery | PM | Est. | CNN, LSTM | |
| [16] | Machinery | PM | Est., Model-Ag. | CNN | LIME, SHAP |
| [16] | Machinery | PM | Est., Model-Sp. | CNN | LRP, GradCAM, ISCSal |
| [110] | Machinery | VQC, PM | AD | CNN | |
| [40] | Machinery | PO | Est. | CNN, LSTM | |
| [111] | Forming | VQC | Est. | CNN, RNN | |
| [112] | Forming | VQC | Class. | SVD, RF | |
| [113,114] | Forming | VQC | Class. | CNN | |
| [59] | Forming | VQC, PO | AD, Est. | CNN | |
| [115] | Forming | PO | Est. | CNN | |
| [116,117,118] | Textile | VQC | Class. | CNN | |
| [119] | Textile | VQC | OD, Class. | CNN | |
| [38] | Textile | VQC | Est. | MLP | |
| [120] | Textile | VQC | Seg. | CNN | |
| [121] | Textile | VQC | Class. | KNN | |
| [122] | Packaging | VQC | OD, Seg., Est. | CNN | |
| [123,124] | Packaging | VQC | Class. | CNN | |
| [125] | Packaging | VQC | AD | CNN | |
| [126,127,128] | Packaging | VQC | OD | CNN | |
| [27,129] | Packaging | VQC | Seg. | CNN | |
| [67] | Packaging | VQC | OD, Est. | CNN | |
| [130,131,132] | Food | VQC | Class. | CNN | |
| [130,132,133,134,135,136,137,138] | Food | VQC | Class. | KNN, SVM, DT, CART, RF, Fuzzy, PLS-DA, LDA, QDA, NB, AdaBoost | |
| [139] | Food | VQC | Seg. | Otsu, MBSAS | |
| [140,141] | Food | VQC | Class. | ANN | |
| [142] | Food | VQC | Est. | SVM, RF, GBM, M5, Cubist, LR | |
| [143,144,145,146] | Wood | VQC | OD | CNN | |
| [147] | Wood | VQC | Seg., Est. | CNN | |
| [148,149] | Other | VQC | Class. | XGBoost, SVM, KNN, LR, RF | |
| [62,148] | Other | VQC | Class. | ANN | |
| [150,151] | Other | VQC | Class. | CNN | |
| [152] | Other | VQC | Class. | CNN, SVM, RF, DT, NB | |
| [153] | Other | VQC | Class., Model-Sp. | CNN | GradCAM, ScoreCAM |
| [154] | Other | VQC | OD, Class. | CNN | |
| [155] | Other | VQC | Seg., Class. | Clustering, LogR, NB, CART, LDA | |
| [156] | Other | VQC | OD, AD | CNN | |
| [157] | Other | VQC | AD | CNN | |

6.3. Answers to the Research Questions

6.3.1. RQ1: Which Industry Sectors Are Using AI and XAI Approaches to Provide VQA in Manufacturing?

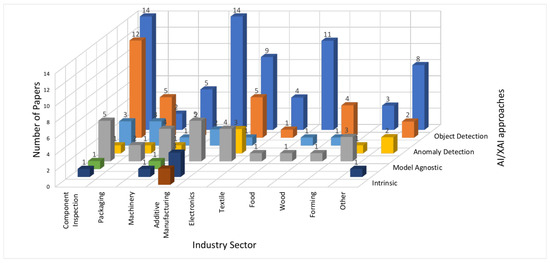

Figure 5 clusters the selected studies regarding industry sectors and AI/XAI approaches. It shows that most studies are available for the industry sector “Component Inspection”, followed by “Electronics” and “Additive Manufacturing”. These sectors are the most studied because their production volumes are very high, so automating the processes or detecting defects in early stages can substantially reduce costs and save resources.

Figure 5.

Clustering the selected studies regarding industry sectors and AI/XAI approaches.

6.3.2. RQ2: Which VQA Practices in Manufacturing Are Covered Using AI and XAI Approaches?

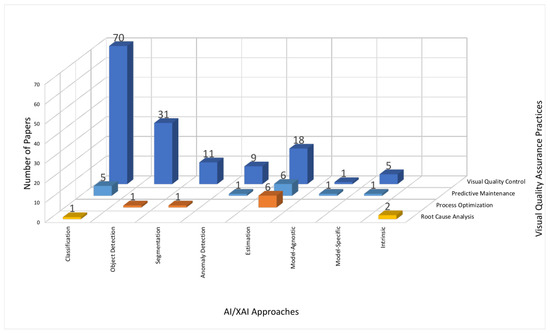

Most studies perform VQC to assure quality (see Figure 6). Among the VQA practices covered in this review, detecting defects using visual data is the easiest task. To perform PM or process optimization, additional data modalities are often used, because these practices are more difficult to perform using visual data alone. RCA is mostly performed using XAI approaches, specifically intrinsic models: because XAI provides transparency to AI models, the feature importance can be analyzed, and the root causes of defects can be revealed.

Figure 6.

Clustering the selected studies regarding AI/XAI approaches and VQA practices.

Furthermore, Figure 6 reveals that most works use classification methods for VQA, because product images can easily be fed into a CNN, which automatically extracts relevant features and classifies the images accordingly. Many studies additionally performed object detection or segmentation to highlight the defective regions of the products. Other works used estimation methods in two ways: either to estimate product characteristics or process parameters and, based on the outcomes, classify the product as defective or nondefective or adapt the process parameters; or to predict the remaining defect-free time. Like anomaly detection, the latter approach helps to prevent the occurrence of defects by enabling PM.

6.3.3. RQ3: Which AI and XAI Methods Are Used for VQA in Manufacturing?

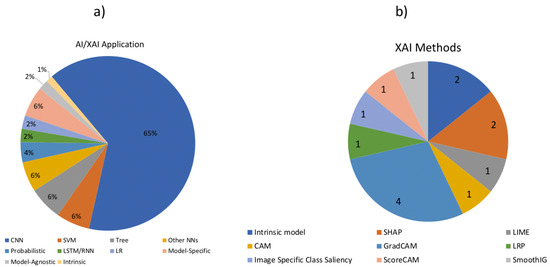

Figure 7a shows the AI and XAI methods that were used to perform VQA. Since this review focused on visual data, CNNs were the most commonly used method, accounting for 63% of the applications. SVM, tree-based models (such as decision tree, random forest, XGBoost, and CART), and other types of neural networks followed at 6%. When these methods are applied, features are typically extracted from the images beforehand and used as input. Probabilistic methods, such as naive Bayes, as well as logistic or Gaussian regression, accounted for 4%, and linear regression as well as time-series DL methods, such as LSTM and RNN, accounted for 2%.

Figure 7.

(a) Ratio of AI and XAI application used in the studies found in the SLR. (b) Number of XAI methods found in the SLR.

Figure 7b shows the XAI methods that were used. The model-specific GradCAM was used most frequently and was applied to image data in four studies. The other model-specific methods (ScoreCAM, CAM, Image-Specific Class Saliency, Smooth IG, and LRP) were each applied in only one study. The model-agnostic method SHAP was used in two studies, and LIME was used in one study. Intrinsic models were applied in two studies.

6.3.4. RQ4: To What Degree Has XAI Been Adopted for VQA in Manufacturing?

XAI approaches are used far less than AI approaches. The SLR revealed 10 studies that used XAI. Six studies applied XAI for VQC, and two studies applied XAI for PM to evaluate the prediction or assess the model. Two studies performed RCA using intrinsic models: they used interpretable models to detect defects in the product and, owing to the intrinsic explainability of these models, could identify the root cause.

Only 8% of the studies applied XAI to their AI system: 5% applied model-specific methods, 2% applied model-agnostic methods, and only 1% applied intrinsic models (see Figure 7).

Most papers used deep neural networks for VQA. These models achieve high accuracy; however, they lack transparency due to their complexity. In total, 138 papers used black box models and 28 papers used white box models, such as LR, tree-based models, or probabilistic models; because some papers used both types, these counts sum to more than the 143 analyzed papers.

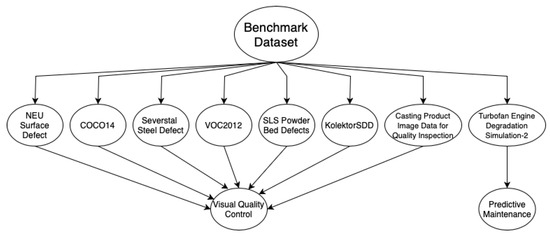

6.4. Benchmark Datasets

In our literature review, we looked for benchmark datasets that have been utilized in the context of VQA practices in manufacturing, particularly in conjunction with AI and XAI methods. The datasets found are shown in Figure 8, together with the VQA practices that were conducted with them. Most datasets were used to conduct VQC, and only one dataset was used for PM.

Figure 8.

Benchmark datasets applied to VQA practices.

In the following, we describe the individual datasets and mention the studies that used these datasets.

- NEU Surface Defect Dataset: This dataset is commonly used as a benchmark in computer vision and ML, particularly for image-based QA and defect detection on steel surfaces. It includes 1800 grayscale images that represent six types of defects: rolled-in scale, patches, crazing, pitted surface, inclusion, and scratches. With 300 samples per defect class, it allows for robust training and evaluation of ML models. The studies [32,36,42,66,77] applied AI and XAI methods, such as CNN, CAM, and GradCAM, to this dataset.

- Severstal Steel Defect Dataset: The Severstal dataset is another publicly available dataset used for training and evaluating ML models, particularly for detecting defects in steel sheets. It is valuable in the field of computer vision, especially for semantic segmentation tasks, and it was used by [54,57] for VQA using CNNs. It covers various types of defects commonly found on steel surfaces, such as pitted surfaces, crazing, scratches, and patches, and each type of defect is associated with a specific class label.

- COCO14 Dataset and VOC2012 Dataset: These two widely used computer vision datasets focus on object detection, image segmentation, and captioning tasks and are used by various ML models, particularly DL models, for image analysis [59,60]. Each consists of a large collection of images that contain a variety of objects.

- SLS Powder Bed Defects Dataset: The authors of [35] applied GradCAM to the SLS Powder Bed Defects dataset contributed by Erik Westphal and Hermann Seitz. This real manufacturing dataset contains images of the powder bed surface of a selective laser sintering system, which were used to monitor and document the quality of the printing process. It contains 8514 images of normal and defective powder bed surfaces.

- KolektorSDD Dataset: The KolektorSDD dataset is a collection of images featuring surface cracks on plastic electronic commutators, which are used in various electronic devices. This dataset comprises 50 commutator samples, and each commutator has approximately eight different surfaces, thus resulting in a total of 399 images [105].

- Casting Product Image Data for Quality Inspection: This dataset focuses on casting manufacturing products, specifically submersible pump impellers. Casting defects are common in this industry and manual inspection is time-consuming and error-prone. To address this, the dataset contains 7348 grayscale images of casting products, which are divided into “Defective” or “Ok”. Consequently, DL methods, such as CNN, can be applied for automatic VQC [113].

- Turbofan Engine Degradation Simulation-2: The study [16] worked on time-series data, using the gearbox, fast-charging battery, and turbofan datasets from the full dataset named Turbofan Engine Degradation Simulation-2. The authors applied a CNN as a black box model to predict the remaining useful life and compared five XAI methods (LIME, SHAP, LRP, GradCAM, and ISCSal). This large dataset is relevant to the VQA context because it focuses on predicting component degradation and scheduling maintenance, both of which are relevant to manufacturing. Moreover, the authors provided the source code to reproduce the experiments.

6.5. Comparison to Other Survey Papers

We compared our survey paper with other similar survey papers found during the SLR regarding scope, results, and direction to identify the research gap that our paper fills. We excluded survey papers that did not include content about AI explainability or interpretability (31 survey papers were excluded). Only three survey papers were about XAI. The comparison of these papers with our paper is presented in Table 5.

Table 5.

Comparison of other survey papers with our survey paper regarding scope, results, and direction.

7. Discussion

In this study, we conducted a literature review to explore the role of AI and XAI in VQA for various industry sectors. Our search process yielded a total of 340 papers, of which 143 were selected for analysis based on the defined selection criteria. The selected papers covered a wide range of industries, including component inspection, additive manufacturing, electronics, packaging, machinery, forming, textile, wood, food, and others.

Component inspection, additive manufacturing, and electronic product manufacturing are among the leading industry sectors that have witnessed significant applications of AI and XAI methods. These sectors share common characteristics, such as high levels of automation, large production volumes, and complex products. As a result, AI methods have found extensive use in addressing the challenges and maximizing the potential of these industries.

The majority of the literature reviewed focused on VQC practices in manufacturing processes. AI methods, particularly pretrained CNNs, were commonly employed for object detection and classification. These methods offer the advantage of automatic feature extraction from images, thereby eliminating the need for manual computer vision tasks. Implementing visual inspection in the manufacturing process is comparatively simple and fast, since data acquisition can be carried out by integrating a camera instead of several other sensors. Several studies utilized pretrained CNN models, such as VGG-16, Xception, and ResNet, to identify defects and classify product quality. Other studies utilized CNN models for segmentation tasks, e.g., to segment the parts that contain the defect or to detect anomalies. The integration of XAI methods, such as CAM, GradCAM, ScoreCAM, SmoothIG, and ISCSal, provided model transparency and interpretability by highlighting the regions of an image that contributed most to the prediction. This enabled the explanation of the model behavior and enhanced trustworthiness. Additionally, tree-based intrinsic models were used to analyze feature importance; owing to their transparency, the root causes of defects could be identified.

Understanding how these complex pretrained CNN models arrive at specific predictions can be challenging, and techniques such as CAM, GradCAM, ScoreCAM, SmoothIG, and ISCSal are employed to shed light on their decision-making processes. The need for these techniques underscores the complexity and black box nature of DL models, which is a key challenge in XAI. These methods are model-specific and tailored to CNNs. This highlights a further challenge in XAI: different types of models require different techniques. Interpretability methods that work for one model might not be applicable to others, and the lack of a one-size-fits-all solution complicates the field. Moreover, these XAI methods highlight the image regions that are important for a prediction, but they do not provide a comprehensive explanation of the model’s decision process. The challenge lies in developing methods that provide deeper insights into these complex models.

Applying intrinsically interpretable models to VQA can provide transparency and an understanding of feature importance that can help identify the root causes of defects. However, the visual data used in VQA are inherently high-dimensional due to their pixel-based representation, which makes it challenging to develop intrinsic XAI methods: such methods may struggle to provide meaningful insights, as they need to navigate a vast feature space. Furthermore, visual features often interact in nonlinear ways that can be essential for assessing quality, and intrinsic XAI methods designed primarily for linear relationships may fail to explain these nonlinear patterns effectively.
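The interaction problem can be illustrated with a deliberately small toy example; the data are an assumption for illustration, not taken from the reviewed studies. (For scale, a single 224 × 224 RGB image already has 224 · 224 · 3 = 150,528 input values.) Below, a defect label depends only on the interaction of two binary visual features (an XOR pattern). An ordinary least-squares linear model assigns both features a coefficient of zero, even though the label is fully determined by them, whereas adding the product term lets the same linear model represent the label exactly.

```python
import numpy as np

# Two binary "visual features" (hypothetical) and a defect label that depends
# only on their interaction: defect iff exactly one feature is present (XOR).
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0], dtype=float)


def linear_coefficients(features: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Least-squares fit of target = b0 + sum_j b_j * feature_j; returns [b0, b1, ...]."""
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return coef


# Purely linear model: both feature coefficients come out as zero.
linear = linear_coefficients(X, y)
# Adding the product term x1 * x2 makes the XOR pattern exactly representable.
with_interaction = linear_coefficients(np.column_stack([X, X[:, 0] * X[:, 1]]), y)
```

Up to floating-point round-off, the fitted coefficients are [0.5, 0, 0] without the interaction term and [0, 1, 1, -2] with it, so reading feature importance off the purely linear model would wrongly suggest that neither feature matters.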

AI-driven techniques have also found application in VQA beyond VQC, encompassing other practices such as process optimization, PM, and RCA. Fewer studies were found for these practices than for VQC, because our literature search focused on the visual aspect and because these tasks require more effort. These practices are also easier to realize with other data modalities than with images alone.

To regulate process parameters, ML algorithms were utilized to predict process outcomes and optimize manufacturing processes. RF models were also used to predict defects, and their interpretability was exploited to optimize process parameters and thereby improve quality. These approaches demonstrated the potential of AI and XAI in monitoring, optimizing, and regulating manufacturing process parameters. Interpretability, multimodality, and adaptive control mechanisms were integrated to enhance the understanding and control of complex processes.
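The interpretability-driven use of tree-based models can be sketched as follows. To stay dependency-free, this sketch replaces the random forest with a single decision stump (a depth-1 tree) per process parameter and reports the normalized Gini impurity decrease of its best split; a random forest instead averages such impurity decreases over all splits of many trees. The function names, the synthetic process data, and the 0.7 defect threshold are hypothetical.

```python
import numpy as np


def gini(labels: np.ndarray) -> float:
    """Gini impurity of a binary label vector (0 = ok, 1 = defect)."""
    if labels.size == 0:
        return 0.0
    p = labels.mean()
    return 2.0 * p * (1.0 - p)


def best_split_gain(x: np.ndarray, y: np.ndarray) -> float:
    """Largest weighted Gini decrease achievable by one threshold on feature x."""
    parent = gini(y)
    best = 0.0
    for t in np.unique(x)[:-1]:  # candidate thresholds at observed values
        left, right = y[x <= t], y[x > t]
        child = (left.size * gini(left) + right.size * gini(right)) / y.size
        best = max(best, parent - child)
    return best


def stump_importances(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Impurity-based importance of each column, normalised to sum to 1."""
    gains = np.array([best_split_gain(X[:, j], y) for j in range(X.shape[1])])
    total = gains.sum()
    return gains / total if total > 0 else gains


# Usage on synthetic data: columns stand for hypothetical process parameters
# (e.g. temperature, speed, pressure) scaled to [0, 1].
rng = np.random.default_rng(0)
params = rng.uniform(size=(200, 3))
defect = (params[:, 0] > 0.7).astype(int)  # defects driven by parameter 0 only
importances = stump_importances(params, defect)
```

In this toy setting, the first parameter receives most of the importance, which would point an engineer toward it as the likely root cause and the setting to adjust.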

It is worth noting that the surveyed literature predominantly utilized black box DL methods, particularly pretrained CNN models, for VQA in manufacturing. While these methods offer automatic feature extraction and have achieved high accuracy, they can be resource-intensive, although the use of pretrained models mitigates this issue to some extent. Some works applied more transparent white box models, such as LR, tree-based, or probabilistic models, which require fewer resources and are easier to understand; however, they tend to achieve lower accuracy, and, in contrast to CNNs, their features have to be extracted manually from the images. Implementing interpretable ML models may therefore reduce model effectiveness.
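The manual feature extraction required by white box models can be illustrated with a few hand-crafted descriptors computed from a grayscale image scaled to [0, 1]. The feature set, the function name, and the 0.2 darkness threshold are hypothetical choices for illustration; in practice, the resulting feature vectors would be passed to an interpretable model such as LogR or a decision tree, whose coefficients or splits then refer to these named features.

```python
import numpy as np


def handcrafted_features(img: np.ndarray) -> np.ndarray:
    """Return [mean intensity, intensity std, dark-pixel ratio, mean gradient magnitude]."""
    img = img.astype(float)
    # Finite-difference gradients along rows (gy) and columns (gx).
    gy, gx = np.gradient(img)
    grad_mag = np.hypot(gx, gy)  # edge strength, high around scratches or cracks
    dark_ratio = (img < 0.2).mean()  # hypothetical threshold for "dark" defect pixels
    return np.array([img.mean(), img.std(), dark_ratio, grad_mag.mean()])


# Usage: a uniform part surface versus the same surface with a dark scratch.
clean = np.full((32, 32), 0.8)
scratched = clean.copy()
scratched[10:14, 5:25] = 0.0
f_clean = handcrafted_features(clean)
f_scratched = handcrafted_features(scratched)
```

Unlike CNN features, each column here has a name a quality engineer can reason about; the cost is that someone has to decide in advance which descriptors capture the relevant defects.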

Moreover, the surveyed studies showed the importance of considering multiple data sources for effective VQA. Vision data alone may not be sufficient in certain scenarios, and additional data sources, such as sensor data, may need to be incorporated. A holistic approach that integrates multiple data sources can provide a more comprehensive understanding of the manufacturing process and enable accurate and robust regulation of process parameters and resources.
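One common way to combine modalities is feature-level (early) fusion, sketched below under the assumption that every inspected part has one row of image features and one row of sensor readings. Each column is standardized so that features measured in different units (e.g., pixel intensities versus temperatures) are on a comparable scale, and the blocks are then concatenated. The function names and example values are hypothetical; late fusion, which combines the predictions of per-modality models, is an alternative not shown here.

```python
import numpy as np


def zscore(block: np.ndarray) -> np.ndarray:
    """Standardise each column to zero mean and unit variance; constant columns become zero."""
    mean = block.mean(axis=0)
    std = block.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant columns
    return (block - mean) / std


def early_fusion(image_features: np.ndarray, sensor_features: np.ndarray) -> np.ndarray:
    """Feature-level fusion: standardise both modalities, then concatenate per sample."""
    if image_features.shape[0] != sensor_features.shape[0]:
        raise ValueError("both modalities need one row per inspected part")
    return np.hstack([zscore(image_features), zscore(sensor_features)])


# Usage: two image features and one hypothetical temperature reading per part.
img_feats = np.array([[0.8, 0.1], [0.6, 0.3], [0.7, 0.2]])
sensor_feats = np.array([[210.0], [230.0], [220.0]])
fused = early_fusion(img_feats, sensor_feats)
```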

This survey shows that the adoption of XAI methods in this domain is limited. One reason could be that many practitioners are not aware of the benefits and potential applications of XAI methods. XAI is a relatively new field, and its concepts and methodologies may not be widely understood or promoted in certain domains. Implementing XAI methods can also be complex and resource-intensive, requiring additional effort and expertise to integrate them into existing systems and workflows. In some cases, XAI methods may provide interpretability at the cost of predictive performance. Organizations that prioritize accuracy and performance metrics may therefore be hesitant to adopt XAI techniques if they perceive a trade-off between interpretability and model effectiveness.

8. Conclusions

In conclusion, the literature review highlighted the significant role of AI and XAI in VQA across various industry sectors. The findings revealed that primary component inspection, additive manufacturing, and electronic product manufacturing are among the leading industry sectors in applying AI and XAI methods, although the application of XAI in these fields is still limited. These industries, which are characterized by high automation levels and complex products, have benefited from AI methods to address challenges and optimize their processes. AI methods, particularly pretrained CNN models, were employed for VQC, process optimization, and PM. The integration of XAI methods enhanced model transparency and interpretability and enabled RCA by investigating the contribution of features to the prediction. Moreover, XAI increased trust in the prediction models.

The reviewed studies predominantly utilized DL methods, particularly pretrained CNN models. These models are resource-intensive, and integrating other AI models, such as decision-tree-based ML models, could enhance resource efficiency and model understandability. However, the trade-off between interpretability and model effectiveness should be carefully considered in such cases. Moreover, the importance of considering multiple data sources for effective VQA was emphasized. While visual data played a significant role, incorporating sensor data and historical process data can provide a more comprehensive understanding of the manufacturing process and enable accurate process optimization.

Despite the potential benefits, the adoption of XAI methods in the reviewed domain appears limited. This could be attributed to a lack of awareness, complexity and resource requirements, concerns regarding interpretability-performance trade-offs, and cultural and organizational factors. To promote the wider adoption of XAI, efforts should focus on education and awareness, research and development, guidelines and standards, user-friendly tools and frameworks, and collaboration and knowledge sharing.

Overall, this survey highlights the significant role of AI and the untapped potential of XAI in VQA across various industry sectors. By addressing the existing challenges, organizations can enhance their VQA practices, improve transparency and interpretability, and foster trust in AI systems.

Author Contributions

Conceptualization, R.H. and C.R.; methodology, R.H.; investigation, R.H.; writing – original draft preparation, R.H.; writing – review and editing, C.R.; visualization, R.H.; supervision, C.R.; project administration, R.H.; funding acquisition, C.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation), under grant DFG-GZ: RE 2881/6-1, and the French Agence Nationale de la Recherche (ANR), under grant ANR-22-CE92-0007.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| SLR | Systematic Literature Review |
| RQ | Research Question |
| AI | Artificial Intelligence |
| XAI | Explainable Artificial Intelligence |
| ML | Machine Learning |
| DL | Deep Learning |
| QM | Quality Management |
| QA | Quality Assurance |
| QC | Quality Control |
| VQA | Visual Quality Assurance |
| VQC | Visual Quality Control |
| PO | Process Optimization |
| PM | Predictive Maintenance |
| RCA | Root Cause Analysis |
| Class | Classification |
| Est | Estimation |
| OD | Object Detection |
| Seg | Segmentation |
| AD | Anomaly Detection |
| Mod-Ag | Model-Agnostic |
| Mod-Sp | Model-Specific |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| RNN | Recurrent Neural Network |
| MLP | Multilayer Perceptron |
| BPNN | Back Propagation Neural Network |
| DLNN | Deep Learning Neural Network |
| ANN | Artificial Neural Network |
| RF | Random Forest |
| DT | Decision Tree |
| CART | Classification And Regression Tree |
| AdaBoost | Adaptive Boosting |
| XGBoost | eXtreme Gradient Boosting |
| SVM | Support Vector Machine |
| LR | Linear Regression |
| LogR | Logistic Regression |
| GR | Gaussian Regression |
| GMM | Gaussian Mixture Model |
| KNN | K-Nearest Neighbor |
| LDA | Linear Discriminant Analysis |
| QDA | Quadratic Discriminant Analysis |
| PLS-DA | Partial Least Squares Discriminant Analysis |
| CPH | Cox Proportional Hazards |
| MBSAS | Modified Basic Sequential Algorithmic Scheme |
| PCA | Principal Component Analysis |
| ABOD | Angle-Based Outlier Detection |
| LOF | Local Outlier Factor |
| GAF | Gramian Angular Field |
| SVD | Singular Value Decomposition |
| GBM | Generalized Boosting Regression Model |
| SHAP | SHapley Additive exPlanations |
| CAM | Class Activation Mapping |
| GradCAM | Gradient-Weighted Class Activation Mapping |
| ScoreCAM | Score Class Activation Mapping |
| SmoothIG | Smooth Integrated Gradients |
| ISCSal | Image-Specific Class Saliency |
| LIME | Local Interpretable Model-Agnostic Explanations |
| tp | True Positive |
| tn | True Negative |
| fp | False Positive |
| fn | False Negative |
| acc | Accuracy |
| p | Precision |
| r | Recall |
| PR-AUC | Area under the Precision–Recall Curve |
| F1 | F Measure |
| IoU | Intersection over Union |
| MAE | Mean Absolute Error |
| MSE | Mean Squared Error |
| RMSE | Root Mean Squared Error |

References

- Ibidapo, T.A. From Industry 4.0 to Quality 4.0: An Innovative TQM Guide for Sustainable Digital Age Businesses; Springer: Cham, Switzerland, 2022.

- Jakubowski, J.; Stanisz, P.; Bobek, S.; Nalepa, G.J. Explainable anomaly detection for Hot-rolling industrial process. In Proceedings of the 2021 IEEE 8th International Conference on Data Science and Advanced Analytics (DSAA), Porto, Portugal, 6–9 October 2021; pp. 1–10.

- Arora, A.; Gupta, R. A Comparative Study on Application of Artificial Intelligence for Quality Assurance in Manufacturing. In Proceedings of the 2022 4th International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 21–23 September 2022; pp. 1200–1206.

- Psarommatis, F.; Sousa, J.; Mendonça, J.P.; Kiritsis, D. Zero-defect manufacturing the approach for higher manufacturing sustainability in the era of industry 4.0: A position paper. Int. J. Prod. Res. 2022, 60, 73–91.

- Ahmed, I.; Jeon, G.; Piccialli, F. From Artificial Intelligence to Explainable Artificial Intelligence in Industry 4.0: A Survey on What, How, and Where. IEEE Trans. Ind. Inform. 2022, 18, 5031–5042.

- Tabassum, S.; Parvin, N.; Hossain, N.; Tasnim, A.; Rahman, R.; Hossain, M.I. IoT Network Attack Detection Using XAI and Reliability Analysis. In Proceedings of the 2022 25th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 17–19 December 2022; pp. 176–181.

- Machlev, R.; Heistrene, L.; Perl, M.; Levy, K.; Belikov, J.; Mannor, S.; Levron, Y. Explainable Artificial Intelligence (XAI) techniques for energy and power systems: Review, challenges and opportunities. Energy AI 2022, 9, 100169.

- Le, T.T.H.; Prihatno, A.T.; Oktian, Y.E.; Kang, H.; Kim, H. Exploring Local Explanation of Practical Industrial AI Applications: A Systematic Literature Review. Appl. Sci. 2023, 13, 5809.

- Huawei Technologies Co., Ltd. Artificial Intelligence Technology; Springer: Singapore, 2023.

- Maggipinto, M.; Beghi, A.; Susto, G.A. A Deep Convolutional Autoencoder-Based Approach for Anomaly Detection with Industrial, Non-Images, 2-Dimensional Data: A Semiconductor Manufacturing Case Study. IEEE Trans. Autom. Sci. Eng. 2022, 19, 1477–1490.

- Hossin, M.; Sulaiman, M.N. A Review on Evaluation Metrics for Data Classification Evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 1–11.