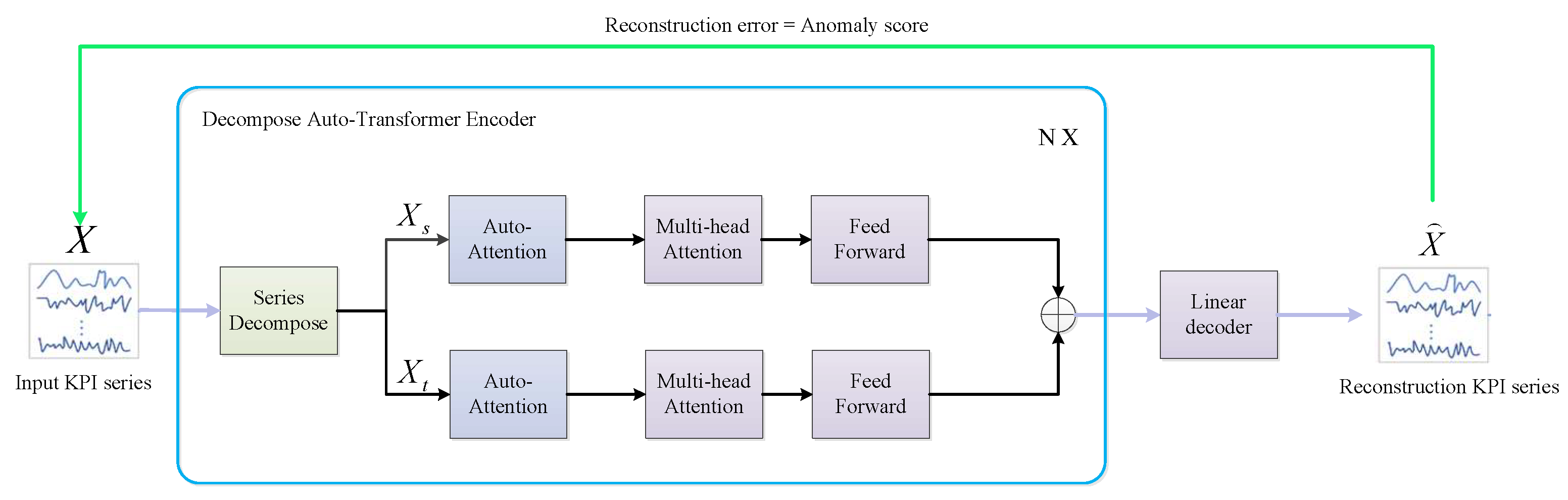

Decompose Auto-Transformer Time Series Anomaly Detection for Network Management †

Abstract

1. Introduction

- (1)

- To empower the deep anomaly detection model with imminent decomposition capability, we propose a decomposition auto-attention network as a decomposition architecture;

- (2)

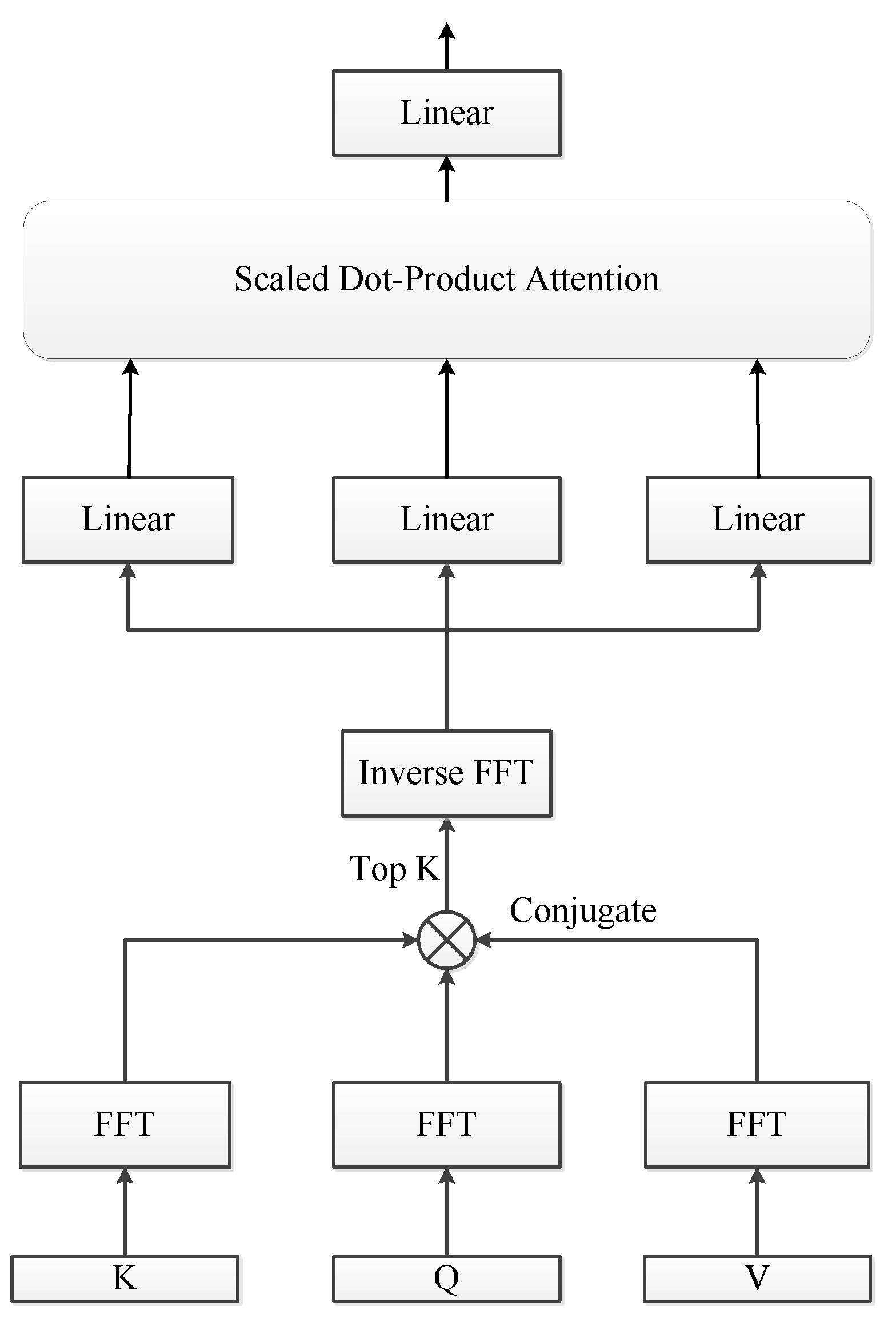

- To extract the dominating periodic patterns in the frequency domain, we propose an auto-attention module to discover the period-based dependencies of time series;

- (3)

- Extensive experimental results on multiple public datasets show that the proposed method achieves state-of-the-art performance.
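
The two building blocks named in contributions (1) and (2) can be illustrated with a minimal NumPy sketch. It assumes a moving-average series decomposition in the style of Autoformer [4] and uses the FFT amplitude spectrum to pick dominant periods. The function names `series_decompose` and `dominant_periods`, the kernel size, and the toy signal are illustrative assumptions; this is not the authors' auto-attention module, only the underlying idea of separating trend from seasonality and locating periods in the frequency domain.

```python
import numpy as np


def series_decompose(x: np.ndarray, kernel_size: int = 25):
    """Split a 1-D series into (seasonal, trend) using a centered moving average.

    The series is padded by repeating its end values so the trend has the
    same length as the input (an assumed, Autoformer-style convention).
    """
    pad_left = (kernel_size - 1) // 2
    pad_right = kernel_size - 1 - pad_left
    padded = np.concatenate(
        [np.full(pad_left, x[0]), x, np.full(pad_right, x[-1])]
    )
    kernel = np.ones(kernel_size) / kernel_size
    trend = np.convolve(padded, kernel, mode="valid")
    seasonal = x - trend
    return seasonal, trend


def dominant_periods(x: np.ndarray, k: int = 1) -> list:
    """Return the k periods (in samples) with the largest FFT amplitude."""
    n = len(x)
    amplitude = np.abs(np.fft.rfft(x - x.mean()))
    # Skip bin 0 (the mean); bin i corresponds to a period of n / i samples.
    top_bins = np.argsort(amplitude[1:])[::-1][:k] + 1
    return [int(round(n / int(i))) for i in top_bins]


# Toy signal (hypothetical): a 24-sample cycle on top of a slow linear trend.
t = np.arange(240)
x = np.sin(2 * np.pi * t / 24) + 0.01 * t

seasonal, trend = series_decompose(x, kernel_size=25)
periods = dominant_periods(seasonal, k=1)
print(periods)
```

Here the moving average absorbs the slow drift, and the remaining seasonal part exposes the 24-sample cycle as the strongest frequency component.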

2. Related Work

3. Problem Formulation

4. Decompose Auto-Transformer

5. Experiments

5.1. Datasets and Model Details

- (1) SMD (Server Machine Dataset) [12] was collected from a large Internet company; each observation is equally spaced by 1 min;

- (2) SMAP (Soil Moisture Active Passive satellite) [24] has 562,798 time points, of which the training set size is 135,182 and the testing set size is 427,616;

- (3) MSL (Mars Science Laboratory rover) [24] has 132,046 time points, of which the training set size is 58,317 and the testing set size is 73,729;

- (4) SWaT (Secure Water Treatment) [25] was collected from a real-world water treatment plant and has 51 dimensions;

- (5) PSM (Pooled Server Metrics) [26] was collected from multiple application server nodes at eBay and has 26 dimensions.

5.2. Baselines

- (1) LSTM-VAE [3]: The model utilizes both VAE and LSTM for anomaly detection;

- (2) OmniAnomaly [12]: The model is a stochastic recurrent neural network that combines a Gated Recurrent Unit (GRU) with a VAE;

- (3) MTAD-GAT [14]: The model considers each univariate time series as an individual feature and includes two graph attention layers in parallel;

- (4) USAD [17]: The model uses adversarial training, and its architecture allows it to isolate anomalies;

- (5) TransFram [21]: The model uses the transformer as the base architecture;

- (6) AnomalyTran [22]: The model combines the transformer with a Gaussian prior association, which makes it easier to distinguish rare anomalies;

- (7) TranAD [27]: The model uses an adversarial training procedure to amplify the reconstruction error, because simple transformer-based networks often miss small abnormal deviations.

5.3. Performance Comparison

5.4. Ablation Study

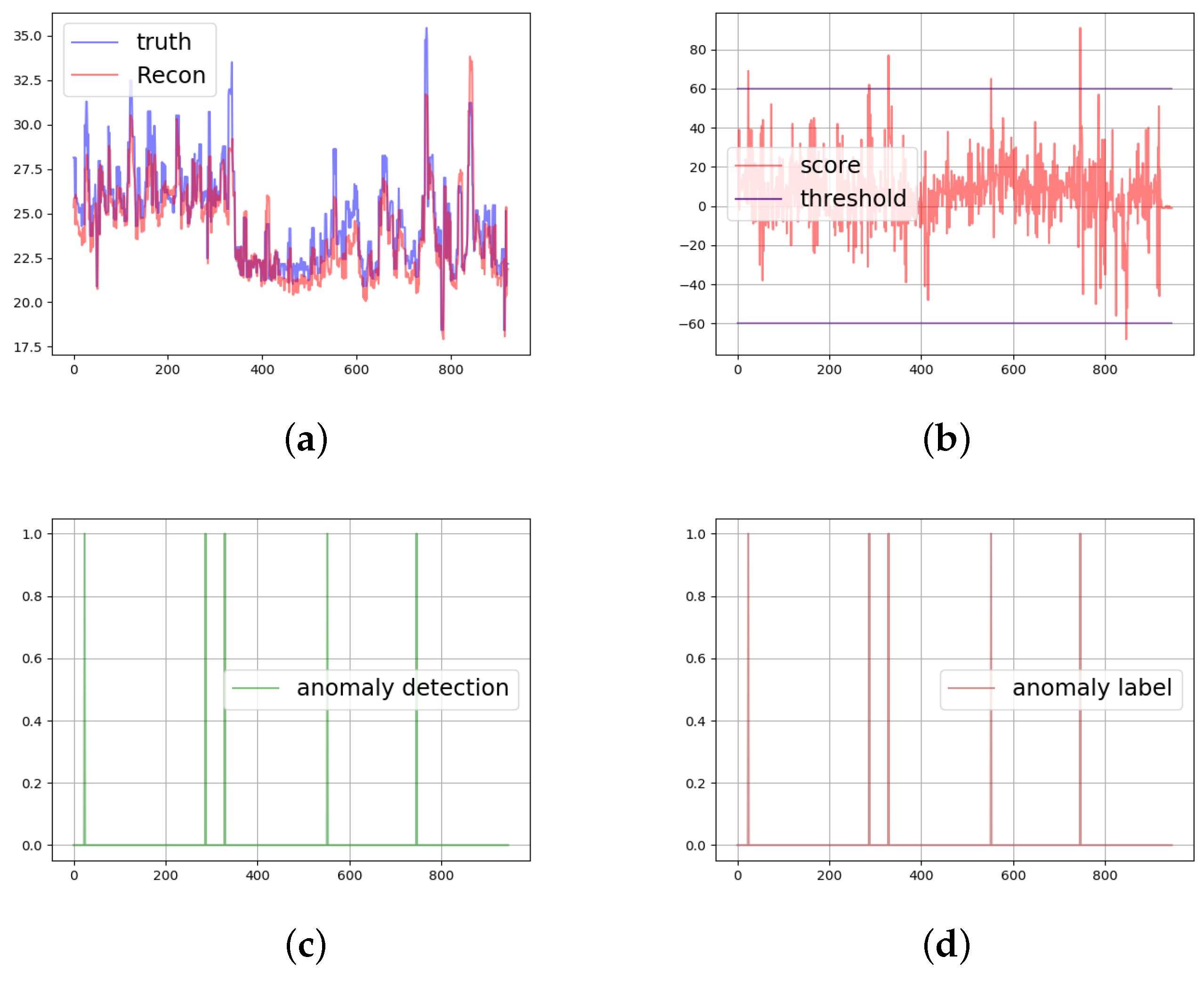

5.5. Case Study

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Simon, D.; Duque, A.; Lohfink, A.P.; Garth, C.; Schotten, H.D. Security in process: Detecting attacks in industrial process data. arXiv 2019, arXiv:1909.
2. Wang, S.Y. Ensemble2: Anomaly Detection via EVT-Ensemble Framework for Seasonal KPIs in Communication Network. arXiv 2022, arXiv:2205.14305.
3. Hoshi, Y.; Park, D.; Kemp, C.C. A multimodal anomaly detector for robot-assisted feeding using an lstm-based variational autoencoder. IEEE Robot. Autom. Lett. 2018, 3, 1544–1551.
4. Wang, J.; Wu, H.; Xu, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. Adv. Neural Inf. Process. Syst. 2021, 34, 22419–22430.
5. Shaukat, K.; Alam, T.M.; Luo, S.; Shabbir, S.; Hameed, I.A.; Li, J.; Abbas, S.K.; Javed, U. A review of time-series anomaly detection techniques: A step to future perspectives. In Proceedings of the Future of Information and Communication Conference, Virtual, 29–30 April 2021; pp. 865–877.
6. Yi, J.; Park, C.; Yoon, S.; Choi, K. Deep learning for anomaly detection in time-series data: Review, analysis, and guidelines. IEEE Access 2021, 9, 120043–120065.
7. Sgueglia, A.; Di Sorbo, A.; Visaggio, C.A.; Canfora, G. A systematic literature review of iot time series anomaly detection solutions. Future Gener. Comput. Syst. 2022, 134, 170–186.
8. Wolf, T.; Shanbhag, S. Accurate anomaly detection through parallelism. IEEE Netw. 2009, 23, 22–28.
9. Lemes, M.; Proena, E.; Pena, H.M.; de Assis, M.V.O. Anomaly detection using forecasting methods arima and hwds. In Proceedings of the International Conference of the Chilean Computer Science Society (SCCC), Temuco, Chile, 11–15 November 2013; pp. 63–66.
10. Flint, I.; Laptev, N.; Amizadeh, S. Generic and scalable framework for automated time-series anomaly detection. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, NSW, Australia, 10–13 August 2015; pp. 1939–1947.
11. Wang, J.; Jing, Y.; Qi, Q.; Feng, T.; Liao, J. Alsr: An adaptive label screening and relearning approach for interval-oriented anomaly detection. Expert Syst. Appl. 2019, 136, 94–104.
12. Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; Pei, D. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. Proc. ACM SIGKDD Int. Conf. Knowl. Discovery Data Mining 2019, 3, 2828–2837.
13. Wu, B.; Liu, Y.; Lang, B.; Huang, L. Dgcnn: Disordered graph convolutional neural network based on the gaussian mixture model. Neurocomputing 2018, 321, 346–356.
14. Zhao, H.; Wang, Y.; Duan, J.; Huang, C.; Cao, D.; Tong, Y.; Xu, B.; Bai, J.; Tong, J.; Zhang, Q. Multivariate time-series anomaly detection via graph attention network. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 841–850.
15. Deng, A.; Hooi, B. Graph neural network-based anomaly detection in multivariate time series. Proc. AAAI Conf. Artif. Intell. 2021, 35, 4027–4035.
16. Bashar, M.A.; Nayak, R. Time series anomaly detection with generative adversarial networks. In Proceedings of the IEEE Symposium Series on Computational Intelligence (SSCI), Canberra, Australia, 1–4 December 2020; pp. 1778–1785.
17. Audibert, J.; Michiardi, P.; Guyard, F.; Marti, S.; Zuluaga, M.A. Usad: Unsupervised anomaly detection on multivariate time series. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual, 6–10 July 2020; pp. 3395–3404.
18. Xu, H.; Chen, W.; Zhao, N.; Li, Z.; Bu, J.; Li, Z.; Liu, Y.; Zhao, Y.; Pei, D.; Feng, Y.; et al. Unsupervised anomaly detection via variational auto-encoder for seasonal kpis in web applications. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 187–196.
19. Li, Z.; Chen, W.; Pei, D. Robust and unsupervised kpi anomaly detection based on conditional variational autoencoder. In Proceedings of the 2018 IEEE 37th International Performance Computing and Communications Conference (IPCCC), Orlando, FL, USA, 17–19 November 2018.
20. Yan, H.; Li, Z.; Li, W.; Wang, C.; Wu, M.; Zhang, C. Contnet: Why not use convolution and transformer at the same time? arXiv 2021, arXiv:2104.13497.
21. Zerveas, G.; Jayaraman, S.; Patel, D.; Bhamidipaty, A.; Eickhoff, C. A transformer-based framework for multivariate time series representation learning. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual, 14–18 August 2021; pp. 2114–2124.
22. Xu, J.; Wu, H.; Wang, J.; Long, M. Anomaly transformer: Time series anomaly detection with association discrepancy. arXiv 2021, arXiv:2110.02642.
23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30–45.
24. Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; Soderstrom, T. Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, London, UK, 19–23 August 2018; pp. 387–395.
25. Mathur, A.P.; Tippenhauer, N.O. Swat: A water treatment testbed for research and training on ics security. In Proceedings of the 2016 International Workshop on Cyber-physical Systems for Smart Water Networks (CySWater), Vienna, Austria, 11 April 2016; pp. 31–36.
26. Abdulaal, A.; Liu, Z.; Lancewicki, T. Practical approach to asynchronous multivariate time series anomaly detection and localization. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual, 14–18 August 2021; pp. 2485–2494.
27. Tuli, S.; Casale, G.; Jennings, N.R. Tranad: Deep transformer networks for anomaly detection in multivariate time series data. arXiv 2022, arXiv:2201.07284.

| Dataset | Train | Test | Dimensions | Anomalies (%) |
|---|---|---|---|---|
| SMD | 708,405 | 708,420 | 38 | 4.16 |
| SMAP | 135,183 | 427,617 | 25 | 13.13 |
| MSL | 58,317 | 73,729 | 55 | 10.72 |
| SWaT | 475,200 | 449,919 | 51 | 12.14 |
| PSM | 132,481 | 87,841 | 25 | 27.76 |

| Method | SMD P | SMD R | SMD F1 | SMAP P | SMAP R | SMAP F1 | MSL P | MSL R | MSL F1 | SWaT P | SWaT R | SWaT F1 | PSM P | PSM R | PSM F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LSTM-VAE [3] | 77.63 | 89.12 | 82.98 | 88.65 | 72.18 | 79.57 | 92.32 | 78.61 | 84.92 | 81.78 | 78.55 | 80.13 | 90.16 | 74.48 | 81.57 |
| OmniAnomaly [12] | 83.34 | 94.49 | 88.57 | 74.16 | 97.76 | 84.34 | 88.67 | 91.17 | 89.89 | 86.33 | 76.94 | 81.36 | 91.61 | 71.36 | 80.23 |
| MTAD-GAT [14] | 88.28 | 84.92 | 86.57 | 89.06 | 91.23 | 90.13 | 87.54 | 94.40 | 90.84 | 92.46 | 75.12 | 82.89 | 95.28 | 75.65 | 84.34 |
| USAD [17] | 93.14 | 96.17 | 93.82 | 76.97 | 98.31 | 81.86 | 88.10 | 97.86 | 91.09 | 98.70 | 74.02 | 84.60 | 74.42 | 99.01 | 84.97 |
| TransFram [21] | 91.60 | 86.44 | 88.94 | 85.36 | 87.48 | 86.41 | 97.21 | 90.33 | 93.64 | 92.47 | 75.88 | 83.36 | 88.14 | 86.99 | 87.56 |
| AnomalyTran [22] | 88.42 | 96.90 | 92.47 | 92.16 | 89.79 | 88.96 | 95.83 | 92.66 | 94.22 | 86.38 | 92.14 | 88.17 | 94.75 | 88.59 | 90.56 |
| TranAD [27] | 92.62 | 99.74 | 96.05 | 80.43 | 99.99 | 89.15 | 90.38 | 99.99 | 94.94 | 97.60 | 69.97 | 81.51 | 88.15 | 84.91 | 89.72 |
| DATN | 93.50 | 94.37 | 93.92 | 96.25 | 86.45 | 91.15 | 96.75 | 93.26 | 94.97 | 95.38 | 85.32 | 90.12 | 97.39 | 87.49 | 92.25 |
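
For readers unfamiliar with the P, R and F1 columns, the sketch below shows how F1 relates to precision and recall, assuming the usual harmonic-mean definition (the table itself does not state how F1 was computed, and not every reported F1 necessarily matches the harmonic mean of the reported P and R). The function name `f1_score` is an illustrative choice.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# DATN on MSL, using the P and R values reported in the table above.
datn_msl_f1 = round(f1_score(96.75, 93.26), 2)
print(datn_msl_f1)  # 94.97, matching the reported F1 for this entry
```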

| Series Decompose | Auto-Attention | SMD F1 (%) | SMAP F1 (%) | MSL F1 (%) | SWaT F1 (%) | PSM F1 (%) | Avg F1 (%) |
|---|---|---|---|---|---|---|---|
| × | × | 86.21 | 85.23 | 83.13 | 82.21 | 84.22 | 84.20 |
| × | ✓ | 89.23 | 88.14 | 87.19 | 86.36 | 89.43 | 88.08 |
| ✓ | × | 92.38 | 90.38 | 92.75 | 89.17 | 92.15 | 91.41 |
| ✓ | ✓ | 93.95 | 91.13 | 94.99 | 90.05 | 92.15 | 92.45 |
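
To make the ablation easier to read, the following sketch computes the gain in average F1 of each configuration over the variant with neither component. The numbers are copied from the Avg column above; the dictionary layout and variable names are illustrative assumptions.

```python
# Keys are (series_decompose, auto_attention); values are the reported Avg F1 (%).
ablation_avg_f1 = {
    (False, False): 84.20,
    (False, True): 88.08,
    (True, False): 91.41,
    (True, True): 92.45,
}

baseline = ablation_avg_f1[(False, False)]

# Gain in percentage points relative to the variant with both components disabled.
gains = {
    config: round(avg - baseline, 2)
    for config, avg in ablation_avg_f1.items()
}

for (decompose, attention), gain in gains.items():
    print(f"decompose={decompose}, auto_attention={attention}: +{gain:.2f}")
```

On these reported averages, series decomposition alone contributes the larger share of the improvement (+7.21 points versus +3.88 for auto-attention alone), and the two together reach +8.25.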


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, B.; Fang, C.; Yao, Z.; Tu, Y.; Chen, Y. Decompose Auto-Transformer Time Series Anomaly Detection for Network Management. Electronics 2023, 12, 354. https://doi.org/10.3390/electronics12020354
